Along the auditory pathway from auditory nerve to midbrain to cortex, individual neurons adapt progressively to sound statistics, enabling the discernment of foreground sounds, such as speech, over background noise.

Abstract

Identifying behaviorally relevant sounds in the presence of background noise is one of the most important and poorly understood challenges faced by the auditory system. An elegant solution to this problem would be for the auditory system to represent sounds in a noise-invariant fashion. Since a major effect of background noise is to alter the statistics of the sounds reaching the ear, noise-invariant representations could be promoted by neurons adapting to stimulus statistics. Here we investigated the extent of neuronal adaptation to the mean and contrast of auditory stimulation as one ascends the auditory pathway. We measured these forms of adaptation by presenting complex synthetic and natural sounds, recording neuronal responses in the inferior colliculus and primary fields of the auditory cortex of anaesthetized ferrets, and comparing these responses with a sophisticated model of the auditory nerve. We find that the strength of both forms of adaptation increases as one ascends the auditory pathway. To investigate whether this adaptation to stimulus statistics contributes to the construction of noise-invariant sound representations, we also presented complex, natural sounds embedded in stationary noise, and used a decoding approach to assess the noise tolerance of the neuronal population code. We find that the code for complex sounds in the periphery is affected more by the addition of noise than the cortical code. We also find that noise tolerance is correlated with adaptation to stimulus statistics, so that populations that show the strongest adaptation to stimulus statistics are also the most noise-tolerant. This suggests that the increase in adaptation to sound statistics from auditory nerve to midbrain to cortex is an important stage in the construction of noise-invariant sound representations in the higher auditory brain.

Author Summary

We rarely hear sounds (such as someone talking) in isolation, but rather against a background of noise. When mixtures of sounds and background noise reach the ears, peripheral auditory neurons represent the whole sound mixture. Previous evidence suggests, however, that the higher auditory brain represents just the sounds of interest, and is less affected by the presence of background noise. The neural mechanisms underlying this transformation are poorly understood. Here, we investigate these mechanisms by studying the representation of sound by populations of neurons at three stages along the auditory pathway; we simulate the auditory nerve and record from neurons in the midbrain and primary auditory cortex of anesthetized ferrets. We find that the transformation from noise-sensitive representations of sound to noise-tolerant processing takes place gradually along the pathway from auditory nerve to midbrain to cortex. Our results suggest that this results from neurons adapting to the statistics of heard sounds.

Introduction

Because our auditory world usually contains many competing sources, behaviorally important sounds are often obscured by background noise. To accurately recognize these sounds, the auditory brain must therefore represent them in a way that is robust to noise. Previous work has suggested that the auditory system does build such sound representations. In the auditory periphery, sounds are represented in terms of their physical structure, including any noise [1]–[3], while data from human imaging studies suggest that, in higher areas of auditory cortex (AC), relevant sounds are represented in a more context-independent, categorical manner [4]–[8]. However, we know very little about the neural computations that might generate noise invariance or where exactly along the auditory pathway this is achieved.

We do, on the other hand, know that the firing patterns of individual auditory neurons change with acoustic context. Numerous experiments have varied the statistics of sound stimulation, such as sounds' overall intensity, modulation depth, or contrast, or the presence of background noise. In response to these manipulations, auditory neurons from the periphery to primary cortex have been observed to change their gain [9]–[12], temporal receptive field shape (i.e., modulation transfer function, MTF) [9],[11],[13]–[17], spectral receptive field shape [18],[19], and output nonlinearities [20],[21], or they undergo more complex changes in response patterns [22],[23]. These changes have been explored or explained in terms of signal detection theory [11], efficient coding [17],[20],[24], or maintaining sensitivity to ecologically relevant stimuli [21],[23]. Such forms of adaptation—not to the repetition of a fixed stimulus, but to the statistics of ongoing stimulation—offer a plausible neural mechanism for the construction of noise-invariant representations. A population of neurons that adapts to the constant statistics of a background noise could become desensitized to that noise, while still accurately representing simultaneously presented, modulated foreground sounds.

Here, we investigated whether adaptation to stimulus statistics in the auditory system enables the brain to build noise-invariant representations of sounds. To do this, we carried out three experiments. First, we measured neural responses to complex sounds embedded in stationary noise, by recording from single units and small multi-unit clusters in the auditory midbrain and cortex and by simulating responses in the auditory periphery. We find that as one progresses through the auditory pathway, neural responses become progressively more independent of the level of background noise. Second, we measured how the coding of individual neurons in these auditory centers is affected by the changes in stimulus statistics induced by adding background noise. We find that there is a progressive increase through the auditory pathway in the strength of adaptation to the altered stimulus statistics. Third, we considered how the noise-dependent responses of individual units combine to produce population codes. Population representations are usually addressed only indirectly, for example, by summing up results from individual units (though see [25],[26]), but here we investigated these directly, by asking how well the original, “clean” sounds could be decoded from the population responses to noise-tainted stimuli. We find a progressive increase in the noise tolerance of population representations of sound. Moreover, neuron-level changes in the strength of adaptation and population-level changes in the noise tolerance of decoding are well correlated both within and across auditory centers. This suggests that adaptation to stimulus statistics may indeed be a neural mechanism that drives the construction of noise-tolerant representations of sound.

Results

We recorded neural responses in the central nucleus of the inferior colliculus (IC) and the primary fields of the AC in ferrets, while presenting a set of natural sounds in high and low signal-to-noise ratio (SNR) conditions (referred to as “clean” and “noisy” below). We compared these recorded neural responses against a sophisticated model of sound representation in the auditory nerve (AN) [27]. The simulated auditory nerve (sAN) model captures the functional components of the auditory periphery from the middle ear to the AN, including the adaptation that occurs at synapses between inner hair cells and AN fibers.

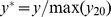

We presented four audio segments (two speech, two environmental), to which spectrally matched noise had been added. In the “clean” condition, the SNR was 20 dB; in the “noisy” conditions, SNRs were 10 dB, 0 dB, or −10 dB (Figure 1). Fifty different noise tokens were used, so that responses reflected the average properties of the noise. We refer to the sounds in the clean condition as being the signal, and the sounds in the noisy conditions as being the signal plus noise. The noise we used was stationary—that is, its statistics did not change over time; it also had a flat modulation spectrum and no cross-band correlation. Such noises are exemplified by the sounds of rain, vacuum cleaners, jet engines, and radio static [17],[28]. We used this subclass of noise as such sounds are almost always ecologically irrelevant, and their statistics differ from those of relevant signals; the signal/noise distinction was therefore as unambiguous as possible. Very little sound signal was detectable to our ears in the noisiest condition, which lies close to the threshold of human and animal speech recognition abilities during active listening [25],[29]–[31].

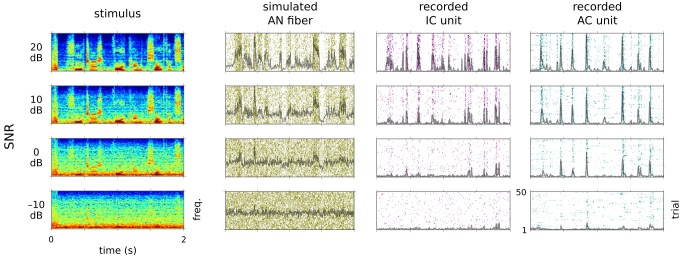

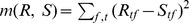

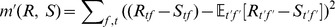

Figure 1. Single unit responses to clean and noisy sounds.

Left column, the spectrogram of a segment of speech under four noise conditions, with the noise level increasing (i.e., the SNR decreasing) from top to bottom. Second to fourth columns, example rasters showing the responses of sAN responses and of responses recorded in the IC and AC, over 50 stimulus presentations. Gray lines, average PSTH.

For each auditory center (sAN, IC, AC), we measured how the neural coding of sounds changed as background noise was introduced. We found that, as we progressed from sAN to IC to AC, the distribution of neural responses became progressively more tolerant (i.e., less sensitive) to the level of background noise. This was evident at the gross level, as the distribution of sAN firing rates for each unit,  , changed considerably as a function of the background noise level, while IC firing rates changed less, and AC even less so (Figure 2A–B). More notably, when we conditioned these response distributions on each 5 ms stimulus time bin, the response distributions

, changed considerably as a function of the background noise level, while IC firing rates changed less, and AC even less so (Figure 2A–B). More notably, when we conditioned these response distributions on each 5 ms stimulus time bin, the response distributions  became more statistically independent of the background noise level from sAN to IC to AC (Figure 2C). This demonstrates that neural responses to complex sounds become less sensitive to background noise level as one ascends the auditory pathway.

became more statistically independent of the background noise level from sAN to IC to AC (Figure 2C). This demonstrates that neural responses to complex sounds become less sensitive to background noise level as one ascends the auditory pathway.

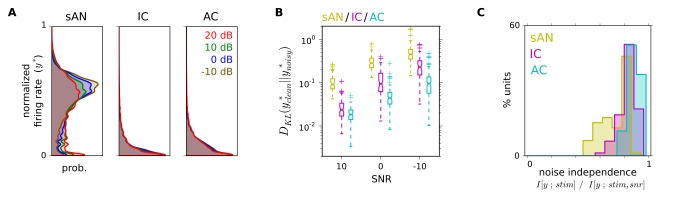

Figure 2. Along the auditory pathway, neurons' response distributions become increasingly independent of the level of background noise.

(A) Average distribution of normalized firing rates by location/SNR. For each unit,  , where

, where  is the firing rate. This shows that the average response distribution within the population changes less with noise in higher auditory centers. (B) Kullback–Leibler divergence between individual units' normalized firing-rate distributions evoked from clean sounds and evoked from noisy sounds. Smaller values indicate that firing rate distributions were similar. This shows that individual neurons' response distributions change less with noise in higher auditory centers. (C) Statistical independence of stimulus-conditioned response distributions

is the firing rate. This shows that the average response distribution within the population changes less with noise in higher auditory centers. (B) Kullback–Leibler divergence between individual units' normalized firing-rate distributions evoked from clean sounds and evoked from noisy sounds. Smaller values indicate that firing rate distributions were similar. This shows that individual neurons' response distributions change less with noise in higher auditory centers. (C) Statistical independence of stimulus-conditioned response distributions  to the background noise level (see Materials and Methods for details of metric). Lower values indicate that response distributions were highly dependent on the stimulus SNR; a value of 1 indicates that response distributions were completely independent of the stimulus SNR. Median values of 0.80/0.84/0.88 for sAN/IC/AC (

to the background noise level (see Materials and Methods for details of metric). Lower values indicate that response distributions were highly dependent on the stimulus SNR; a value of 1 indicates that response distributions were completely independent of the stimulus SNR. Median values of 0.80/0.84/0.88 for sAN/IC/AC ( , pairwise rank-sums tests).

, pairwise rank-sums tests).

Adaptive Coding

What underlies this shift in coding, such that the responses of neurons in higher auditory centers are overall more tolerant to noise? To understand this, we considered three ways in which noise affects signals within auditory neurons' receptive fields (Figure 3A).

Figure 3. Effect of background noise on incoming signals within neurons' receptive fields.

(A) Left, sound intensity within a cortical neuron's receptive field for clean (20 dB) and noisy (0 dB) stimulation (see Figure S1B). Right, distribution of the sounds' within-channel intensities. (B) Signals in (A) after adaptation to signal statistics.

First, noise is an energy mask: when components of the original signal have intensities (within the receptive field) lower than that of the noise, they are obscured. Second, although the statistics of noise might not change over time, the noise itself is a time-varying stimulus, and auditory neurons may respond to noise transients [32],[33]. Because neurons in higher auditory centers progressively filter out faster temporal modulations [1], the energy of noise transients within neurons' linear receptive fields decreases from AN to IC to AC. However, simulations demonstrate that this alone cannot account for the observed differences in noise independence (Figure S1).

Finally, adding noise affects the statistics of the stimulus within the receptive field in two ways: it increases the baseline intensity, and it reduces the effective size of the peaks in intensity above the baseline—that is, it lowers the contrast. These effects can be roughly summarized as changing the mean ( ) and standard deviation (

) and standard deviation ( ) of the stimulus intensity distribution (which is, incidentally, non-Gaussian [24],[34],[35]).

) of the stimulus intensity distribution (which is, incidentally, non-Gaussian [24],[34],[35]).

If auditory neurons faithfully encoded stimuli within their receptive fields—irrespective of the stimulus statistics—then the response distributions would change their  and

and  along with the stimulus distribution. However, if neurons adapted to the statistics—for example, by normalizing their responses relative to the local

along with the stimulus distribution. However, if neurons adapted to the statistics—for example, by normalizing their responses relative to the local  and

and  —then the response distributions would change less with the addition of noise (Figure 3B). Indeed, as shown above, the response distributions of sAN units changed considerably when noise was introduced, while those of IC units changed less, and cortex even less so. The increased noise tolerance in higher auditory centers may therefore result from a progressive increase in the strength of adaptation to stimulus statistics along the auditory pathway.

—then the response distributions would change less with the addition of noise (Figure 3B). Indeed, as shown above, the response distributions of sAN units changed considerably when noise was introduced, while those of IC units changed less, and cortex even less so. The increased noise tolerance in higher auditory centers may therefore result from a progressive increase in the strength of adaptation to stimulus statistics along the auditory pathway.

- and

- and  -Adaptation Grow Stronger Along the Auditory Pathway

-Adaptation Grow Stronger Along the Auditory Pathway

Given our reasoning above, we predicted that neuronal adaptation to  and

and  would increase along the auditory pathway. Previous experiments have shown that

would increase along the auditory pathway. Previous experiments have shown that  -adaptation increases from AN to IC [20],[36] and that there is strong

-adaptation increases from AN to IC [20],[36] and that there is strong  -adaptation in AC [10],[12]; however, the overall changes in

-adaptation in AC [10],[12]; however, the overall changes in  - and

- and  -adaptation across the auditory pathway are unknown.

-adaptation across the auditory pathway are unknown.

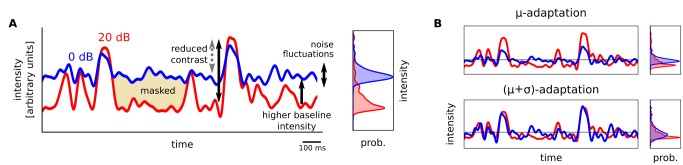

We first tested the hypothesis that  -adaptation increases along the auditory pathway. Taking the neural responses to natural sounds, we quantified the degree to which introducing background noise changed the neural responses during the “baseline” periods of sound stimulation, such as when there was little stimulus energy within neurons' receptive fields to drive spiking. Rather than attempt to estimate neurons' receptive fields, we instead measured the relevant responses operationally. We defined a reference firing rate for each unit,

-adaptation increases along the auditory pathway. Taking the neural responses to natural sounds, we quantified the degree to which introducing background noise changed the neural responses during the “baseline” periods of sound stimulation, such as when there was little stimulus energy within neurons' receptive fields to drive spiking. Rather than attempt to estimate neurons' receptive fields, we instead measured the relevant responses operationally. We defined a reference firing rate for each unit,  , at the 33rd percentile of that unit's firing rate distribution during clean sound stimulation. We then calculated how often the firing rate exceeded

, at the 33rd percentile of that unit's firing rate distribution during clean sound stimulation. We then calculated how often the firing rate exceeded  under different noise conditions (Figure 4A). The motivation for this measure is that, when

under different noise conditions (Figure 4A). The motivation for this measure is that, when  -adaptation is weak, responses are sensitive to the baseline intensity of the stimulus, so adding noise should drive this value up. If

-adaptation is weak, responses are sensitive to the baseline intensity of the stimulus, so adding noise should drive this value up. If  -adaptation is strong, such that the neuron adapts out the increased baseline intensity of the stimulus, then the firing rate should exceed

-adaptation is strong, such that the neuron adapts out the increased baseline intensity of the stimulus, then the firing rate should exceed  about as often in the noisy conditions as in the clean condition. We refer to these two possibilities as being of low, or high, baseline invariance (BI), respectively.

about as often in the noisy conditions as in the clean condition. We refer to these two possibilities as being of low, or high, baseline invariance (BI), respectively.

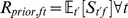

Figure 4. Increasing adaptation to stimulus baseline along the auditory pathway.

(A) Calculation of BI, a measure of  -adaptation, for an example sAN fiber. CDF, cumulative distribution of firing rates.

-adaptation, for an example sAN fiber. CDF, cumulative distribution of firing rates.  , the 33rd percentile of the CDF under clean sound stimulation —that is, the firing rate with the cumulative probability

, the 33rd percentile of the CDF under clean sound stimulation —that is, the firing rate with the cumulative probability  . BI indicates how little

. BI indicates how little  changes with SNR, as

changes with SNR, as  . (B) Units' BI in each location.

. (B) Units' BI in each location.

Introducing noise caused sAN fibers to change their firing relative to  the most, and AC units the least (Figure 4B; median BI of 87/96/98% for sAN/IC/AC;

the most, and AC units the least (Figure 4B; median BI of 87/96/98% for sAN/IC/AC;  ). Similar results were obtained with

). Similar results were obtained with  placed at other percentiles between 10% and 50%. This confirms that

placed at other percentiles between 10% and 50%. This confirms that  -adaptation increases along the auditory pathway.

-adaptation increases along the auditory pathway.

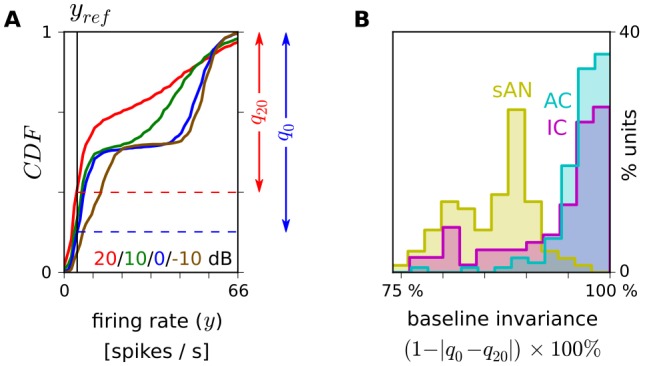

We next tested the hypothesis that  -adaptation increases along the auditory pathway, by comparing how changes in contrast affect the gain of neurons at each location [10],[12]. We analyzed units' responses to dynamic random chord (DRC) sequences of differing contrasts (Figure 5A). DRCs comprise a sequence of chords, composed of tones whose levels are drawn from particular distributions. This allows efficient estimation of the spectrotemporal receptive fields (STRFs) of auditory neurons [37]–[39]. Varying the width of the level distributions allows parametric control over stimulus contrast. As in previous studies [10],[12], we modeled neuronal responses using the linear–nonlinear (LN) framework [40],[41], assuming that each neuron had a fixed (i.e., contrast-independent) STRF and a variable (contrast-sensitive) output nonlinearity. Contrast-dependent changes in coding are thus revealed through changes to output nonlinearities [10],[12].

-adaptation increases along the auditory pathway, by comparing how changes in contrast affect the gain of neurons at each location [10],[12]. We analyzed units' responses to dynamic random chord (DRC) sequences of differing contrasts (Figure 5A). DRCs comprise a sequence of chords, composed of tones whose levels are drawn from particular distributions. This allows efficient estimation of the spectrotemporal receptive fields (STRFs) of auditory neurons [37]–[39]. Varying the width of the level distributions allows parametric control over stimulus contrast. As in previous studies [10],[12], we modeled neuronal responses using the linear–nonlinear (LN) framework [40],[41], assuming that each neuron had a fixed (i.e., contrast-independent) STRF and a variable (contrast-sensitive) output nonlinearity. Contrast-dependent changes in coding are thus revealed through changes to output nonlinearities [10],[12].

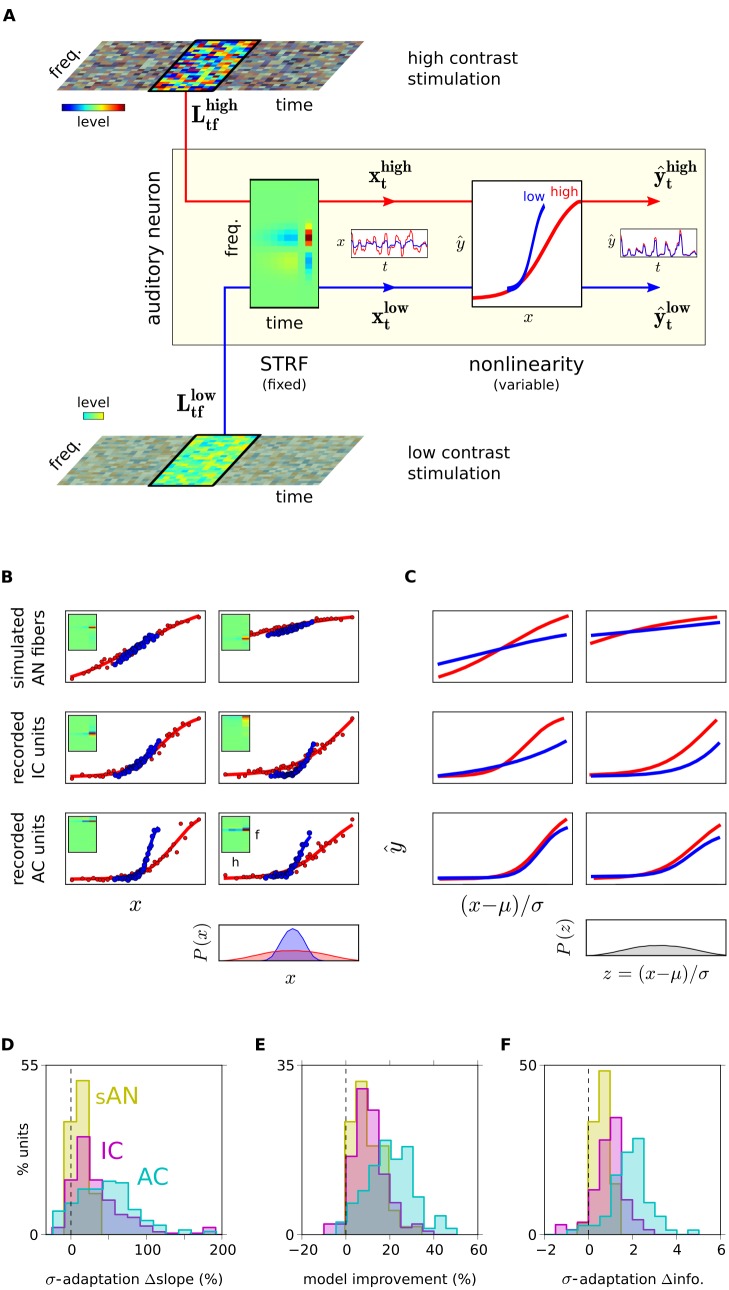

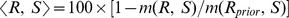

Figure 5. Increasing adaptation to stimulus contrast along the auditory pathway.

(A) Schematic of adaptive-LN model. Top/bottom, DRC stimuli. DRCs are filtered through a STRF, then passed through an output nonlinearity, yielding the firing rate ( ). Output nonlinearities change with stimulus contrast. Insets, example time series. (B) Example units, nonlinearities during low (blue) and high (red) contrast DRCs. Insets, STRFs. Bottom, distributions of STRF-filtered DRCs under low/high contrast. (C) Nonlinearities in (B), replotted in normalized coordinates. (D) Contrast-dependent changes to the slope of units' nonlinearities. (E) Percentage of residual signal power explained by gain kernel model above an LN model [12]. (F) Log increase in Fisher information in units' encoding of low contrast stimuli, resulting from adaptation to this distribution. Zero, no adaptation. Larger positive values, greater adaptation.

). Output nonlinearities change with stimulus contrast. Insets, example time series. (B) Example units, nonlinearities during low (blue) and high (red) contrast DRCs. Insets, STRFs. Bottom, distributions of STRF-filtered DRCs under low/high contrast. (C) Nonlinearities in (B), replotted in normalized coordinates. (D) Contrast-dependent changes to the slope of units' nonlinearities. (E) Percentage of residual signal power explained by gain kernel model above an LN model [12]. (F) Log increase in Fisher information in units' encoding of low contrast stimuli, resulting from adaptation to this distribution. Zero, no adaptation. Larger positive values, greater adaptation.

Changing contrast had little effect on sAN coding, but caused small gain changes for IC units, and large gain changes for cortical units (Figure 5B; further examples in Figure S2). Higher in the auditory pathway, contrast-dependent gain changes were stronger (sAN/IC/AC medians: 11/27/44%;  ; Figure 5D), occurred on slower timescales (time constants

; Figure 5D), occurred on slower timescales (time constants  negligible/35/117 ms for sAN/IC/AC;

negligible/35/117 ms for sAN/IC/AC;  ; Figure S3), and were more important to adaptive-LN model predictive power (median improvement over LN model for sAN/IC/AC: 8/10/20%; not significant for sAN vs. IC,

; Figure S3), and were more important to adaptive-LN model predictive power (median improvement over LN model for sAN/IC/AC: 8/10/20%; not significant for sAN vs. IC,  otherwise; Figure 5E) [12]. We confirmed this with a Fisher information analysis: by comparing how much Fisher information a unit typically carried in its firing rate about a low contrast stimulus when it was adapted to low contrast with the amount it typically carried about the same stimulus when it was adapted to high contrast, we found that contrast-adaptive changes in coding were more profound higher up in the auditory pathway (Figure 5F; median

otherwise; Figure 5E) [12]. We confirmed this with a Fisher information analysis: by comparing how much Fisher information a unit typically carried in its firing rate about a low contrast stimulus when it was adapted to low contrast with the amount it typically carried about the same stimulus when it was adapted to high contrast, we found that contrast-adaptive changes in coding were more profound higher up in the auditory pathway (Figure 5F; median  of 0.6/1.0/2.0 for sAN/IC/AC;

of 0.6/1.0/2.0 for sAN/IC/AC;  ). Thus there is an increase in

). Thus there is an increase in  -adaptation along the auditory pathway.

-adaptation along the auditory pathway.

Population Representations of Sound

Given that  - and

- and  -adaptation increase along the auditory pathway, how does this affect the representation of complex sounds by populations of auditory neurons? To answer this, we used a stimulus reconstruction method [42]–[45] that quantified how accurately the spectrogram of a presented sound could be reconstructed from the neuronal responses of each population.

-adaptation increase along the auditory pathway, how does this affect the representation of complex sounds by populations of auditory neurons? To answer this, we used a stimulus reconstruction method [42]–[45] that quantified how accurately the spectrogram of a presented sound could be reconstructed from the neuronal responses of each population.

The reconstruction was done as follows. We first trained a spectrogram decoder on the population's responses to clean sounds (Figure 6). This decoder was based on a dictionary approach (see Materials and Methods section “Population Decoding”). We then tested the decoder on a novel set of responses to clean sounds and measured how close the reconstructed spectrograms,  , were to the original sound spectrograms,

, were to the original sound spectrograms,  , using a similarity metric,

, using a similarity metric,  . These measurements quantify the degree to which the spectrogram of the clean sounds was encoded in the population responses.

. These measurements quantify the degree to which the spectrogram of the clean sounds was encoded in the population responses.

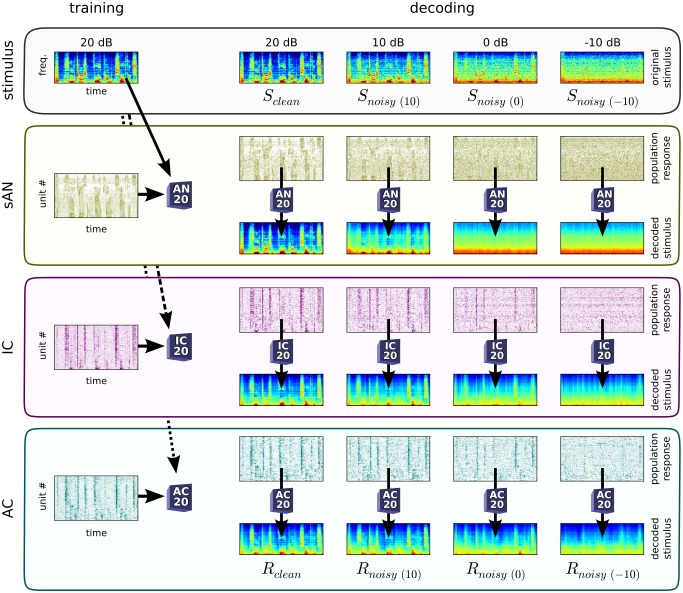

Figure 6. Decoding the population representations of clean and noisy sounds.

Schematic of the decoding of neural responses. For each auditory center, a decoder was trained to reconstruct the clean sound spectrogram from the population responses to the clean sounds. We then measured the performance of these decoders when reconstructing spectrograms from the responses to both clean and noisy sounds. Top row, spectrogram of a 2(20 dB SNR) and noisy (10/0/−10 dB SNR) conditions. Left column, decoder training from responses to clean sounds. Population responses are shown as neurograms: each row depicts the time-varying firing rate of a single unit in the population; rows are organized by CF. Right, reconstructed spectrograms ( ) from population responses to noisy sounds, using the same decoders as trained on the left. The similarity between the reconstructed spectrogram

) from population responses to noisy sounds, using the same decoders as trained on the left. The similarity between the reconstructed spectrogram  and the presented spectrogram

and the presented spectrogram  is measured by

is measured by  ; likewise, the similarity between

; likewise, the similarity between  and the original, clean spectrogram

and the original, clean spectrogram  is measured by

is measured by  . The tendencies for the sAN decoder to produce

. The tendencies for the sAN decoder to produce  -like spectrograms, and the IC and AC decoders to produce

-like spectrograms, and the IC and AC decoders to produce  -like spectrograms, are most visible for the 0 dB and −10 dB conditions.

-like spectrograms, are most visible for the 0 dB and −10 dB conditions.

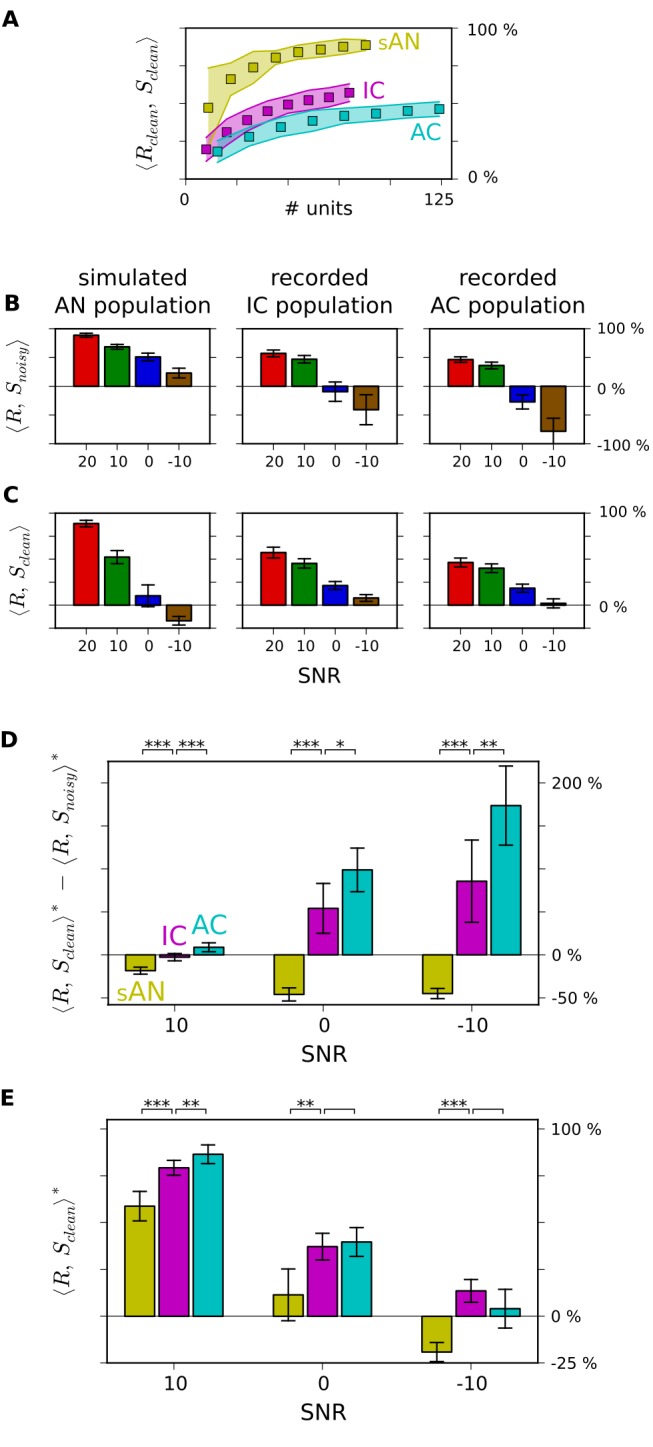

For all three auditory centers, reconstruction accuracy increased with population size (Figure 7A). The best reconstructions were available from sAN responses; reconstructions from IC and AC were less accurate. This is likely to be due to several factors. In particular, the synthetic sAN population provided more uniform coverage of the frequency spectrum (Figure S4), and contained less trial-to-trial variability than the recorded data. Also, both IC and AC are well known to have greater low-pass modulation filtering [1], which should reduce the overall fidelity of the spectrogram encoding at these higher auditory centers.

Figure 7. Population representations of natural sounds become more noise-tolerant along the auditory pathway.

(A) Similarity between decoded responses to the clean sounds ( ), and the clean sounds' spectrograms (

), and the clean sounds' spectrograms ( ). Abscissa, sampled population size. Colored areas, bootstrapped 95% confidence intervals. (B–C) Similarity between decoded responses to the noisy sounds (

). Abscissa, sampled population size. Colored areas, bootstrapped 95% confidence intervals. (B–C) Similarity between decoded responses to the noisy sounds ( ), and the spectrograms of the presented, noisy sounds (B), or the spectrograms of the original, clean sounds (C). Reconstructions are from the full populations in each location. Red bars are the same in (B) and (C), denoting

), and the spectrograms of the presented, noisy sounds (B), or the spectrograms of the original, clean sounds (C). Reconstructions are from the full populations in each location. Red bars are the same in (B) and (C), denoting  (i.e., the rightmost points for each curve in A). Error bars, bootstrapped 95% confidence intervals. (D) Index of whether decoded responses were more similar to the presented, noisy sound (negative values), or the original, clean sound (positive values). Similarities denoted by asterisks (

(i.e., the rightmost points for each curve in A). Error bars, bootstrapped 95% confidence intervals. (D) Index of whether decoded responses were more similar to the presented, noisy sound (negative values), or the original, clean sound (positive values). Similarities denoted by asterisks ( ) are normalized to the maximum score for each location,

) are normalized to the maximum score for each location,  . Error bars, 95% confidence intervals. Pairwise comparison statistics (bootstrapped):

. Error bars, 95% confidence intervals. Pairwise comparison statistics (bootstrapped):  (***),

(***),  (**),

(**),  (*). (E) Decoder accuracy in recovering the clean sound's identity from noisy responses, relative to accuracy in doing so from clean responses.

(*). (E) Decoder accuracy in recovering the clean sound's identity from noisy responses, relative to accuracy in doing so from clean responses.

What Is Being Encoded by Neural Populations?

Our interest was not in the absolute performance of these decoders, but rather in how the stimulus representations changed with the addition of background noise. We began by asking, what are sAN, IC, and AC encoding in their population responses? This is a difficult question to address since the dimensionality of a population response is very high. We therefore recast this problem as follows. We considered a scenario where the higher brain has learned to recognize sounds in the absence of noise, based on the respective encodings in sAN, IC, and AC. We then asked what would happen if the brain then tries to extract sound features from responses to the noisy sounds, if it is assumed that neural populations encode sound features in exactly the same way as when noise was absent.

We considered two hypotheses for what might happen. First, when the brain attempts to reconstruct stimulus features from the noisy sounds, it might accurately recover the whole sound mixture, containing the superimposed signal and noise. Alternatively, the reconstructed stimulus might include the signal alone, and not the noise. We denote these two possibilities as “mixture”-like and “signal only”–like representations. These are two ends of a spectrum: the sAN, IC, and AC populations may show different degrees of “mixture”-like and “signal only”–like coding.

To test these hypotheses, we used the same decoders (which had already been trained on the clean stimuli) to reconstruct the stimulus spectrograms from the responses of the three populations to the noisy sounds. We quantified how the accuracy of the reconstructed spectrograms ( ) changed across noise levels, by measuring the similarity of

) changed across noise levels, by measuring the similarity of  both to the presented, noisy spectrograms (

both to the presented, noisy spectrograms ( ; Figure 7B) and to the spectrogram of the original, clean sound (

; Figure 7B) and to the spectrogram of the original, clean sound ( ; Figure 7C). To be able to compare these values across different populations, we normalized these measurements, by dividing them by that population's value of

; Figure 7C). To be able to compare these values across different populations, we normalized these measurements, by dividing them by that population's value of  (the absolute performance of the decoder on the clean sound responses). We denote the normalized values as

(the absolute performance of the decoder on the clean sound responses). We denote the normalized values as  and

and  , respectively.

, respectively.

The rationale for these measurements was as follows. If the reconstructed spectrogram contains both the signal and the noise, then  should be more similar to the spectrogram of the noisy, presented sound,

should be more similar to the spectrogram of the noisy, presented sound,  , than it is to the spectrogram of the original, clean sound,

, than it is to the spectrogram of the original, clean sound,  , which contains the signal alone. Thus,

, which contains the signal alone. Thus,  would be less than 0. On the other hand, if the reconstructed spectrogram contains the signal, but not the noise, then

would be less than 0. On the other hand, if the reconstructed spectrogram contains the signal, but not the noise, then  should be more similar to

should be more similar to  than to

than to  , and so

, and so  would be greater than 0.

would be greater than 0.

For the sAN responses, we found that  . This indicates that, using a fixed decoder, both the signal and the noise are extracted from the sAN responses. In other words, the noise directly impinges on the encoding of the signal in the sAN responses. The reverse was true for AC, where

. This indicates that, using a fixed decoder, both the signal and the noise are extracted from the sAN responses. In other words, the noise directly impinges on the encoding of the signal in the sAN responses. The reverse was true for AC, where  . This indicates that, using a fixed decoder, the signal can be extracted from the AC responses, without recovering much of the noise. The IC responses lay between these two extrema (Figure 7D).

. This indicates that, using a fixed decoder, the signal can be extracted from the AC responses, without recovering much of the noise. The IC responses lay between these two extrema (Figure 7D).

It is important to emphasize here that this does not imply that noise features are altogether discarded by the level of the cortex, and not represented at all. The decoders here were specifically trained to extract the clean signal; these results therefore highlight how much or how little the encoding of the original signal is affected by the addition of background noise. As we used new noise tokens on each presentation, it was not possible to train decoders to extract the noise in the mixture from the response (rather than the clean sound), nor to accurately determine the extent to which transient noise features can be recovered from population responses. We therefore treat the noise here as a nuisance variable—that is, as a distractor from the encoding of the ecologically more relevant components of the sound signal.

In sum, while population representations in the periphery are more “mixture”-like, insofar as stationary noises are encoded in a similar way as complex sounds, there is a shift towards more “signal only”–like population representations in midbrain and then cortex, wherein stationary noise is not encoded together with the foreground sound.

Noise-Tolerant Population Representations of Sound

We next asked a related but different question: If we start with a population representation of the clean sound, how tolerant is this representation to the addition of background noise? Unlike the question above, this requires us to take into account that the addition of noise degrades any reconstruction (Figure 7B–C).

To measure noise tolerance, we reasoned as follows. The decoder estimates a relationship between the population response and the clean sound spectrogram (i.e., the signal). If a population representation is noise-tolerant, such that the response does not change considerably when background noise is added, then  should be as accurately recovered from responses to the noisy sounds as it is from the clean sounds (i.e.,

should be as accurately recovered from responses to the noisy sounds as it is from the clean sounds (i.e.,  should be high). Conversely, if the population representation is noise-intolerant, such that the response changes considerably when background noise is added, then

should be high). Conversely, if the population representation is noise-intolerant, such that the response changes considerably when background noise is added, then  should be more poorly recovered from responses to the noisy sounds than from responses to the clean sounds (i.e.,

should be more poorly recovered from responses to the noisy sounds than from responses to the clean sounds (i.e.,  should be low). We found that for moderate noise levels, the value of

should be low). We found that for moderate noise levels, the value of  was highest for the AC, and lowest for the sAN (Figure 7E). This suggests that cortex maintains a more consistent representation of the signal as noise is added.

was highest for the AC, and lowest for the sAN (Figure 7E). This suggests that cortex maintains a more consistent representation of the signal as noise is added.

Thus, the population representations of sound change through the auditory pathway. In the periphery, neural populations that encode the signal also encode the noise in a similar way, responding to features of the mixed input. By the level of the cortex, however, neural populations represent the signal in a more noise-tolerant fashion, by responding to the sound features that are common between clean and noisy conditions.

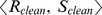

Adaptive Coding Partially Accounts for Noise-Tolerant Populations

Earlier, we demonstrated that adaptation to stimulus statistics increases along the auditory pathway. We therefore asked whether this could account for how background noise affects population representations of complex sounds along the auditory pathway.

To develop this hypothesis, we simulated populations of model auditory neurons with variable degrees of adaptation to sound statistics (Figure S5). These simulations confirmed that increasing  -adaptation and

-adaptation and  -adaptation could account for the decoder results shown in Figure 7D–E. In particular, the simulations made two specific predictions. The first is that the increase in

-adaptation could account for the decoder results shown in Figure 7D–E. In particular, the simulations made two specific predictions. The first is that the increase in  -adaptation along the auditory pathway may be responsible for the shift from encoding

-adaptation along the auditory pathway may be responsible for the shift from encoding  (in sAN) to

(in sAN) to  (in AC), as observed in Figure 7D. This is because

(in AC), as observed in Figure 7D. This is because  -adaptation would remove the strong differences in response baselines between the representations of clean and noisy sounds (Figure 3B, top). The second prediction is that the increase in

-adaptation would remove the strong differences in response baselines between the representations of clean and noisy sounds (Figure 3B, top). The second prediction is that the increase in  -adaptation along the auditory pathway could be responsible for the increased tolerance of

-adaptation along the auditory pathway could be responsible for the increased tolerance of  decoding to the addition of noise, as observed in Figure 7E. This is because

decoding to the addition of noise, as observed in Figure 7E. This is because  -adaptation rescales the representation of the stimulus, such that the peaks in intensity are relatively independent of the noise level (Figure 3B, bottom).

-adaptation rescales the representation of the stimulus, such that the peaks in intensity are relatively independent of the noise level (Figure 3B, bottom).

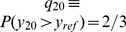

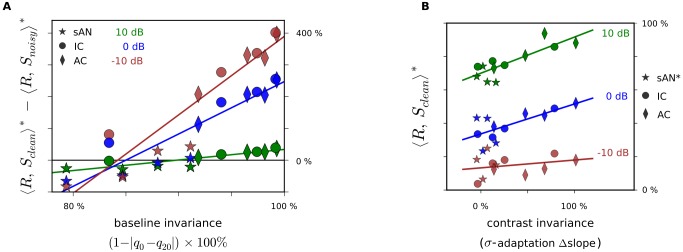

To test the first prediction—that  -adaptation drives populations to represent

-adaptation drives populations to represent  rather than

rather than  —we subdivided each neuronal population into four groups according to the neurons' baseline invariance (BI; our measure of

—we subdivided each neuronal population into four groups according to the neurons' baseline invariance (BI; our measure of  -adaptation). For example, in IC, the 20 neurons with lowest BI formed a subpopulation with mean BI of 83%, and the 20 neurons with highest BI formed a subpopulation with mean BI of 99%. We then decoded responses from each of the 12 subpopulations. We found that the subpopulations with larger BI yielded more

-adaptation). For example, in IC, the 20 neurons with lowest BI formed a subpopulation with mean BI of 83%, and the 20 neurons with highest BI formed a subpopulation with mean BI of 99%. We then decoded responses from each of the 12 subpopulations. We found that the subpopulations with larger BI yielded more  -like spectrograms upon decoding (Figure 8A). That is, neurons with stronger adaptation to baseline sound intensity showed more “signal only”–like coding than “mixture”-like coding. This factor largely explained the differences in

-like spectrograms upon decoding (Figure 8A). That is, neurons with stronger adaptation to baseline sound intensity showed more “signal only”–like coding than “mixture”-like coding. This factor largely explained the differences in  between each level of the pathway (Table S1A).

between each level of the pathway (Table S1A).

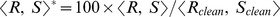

Figure 8. Higher  - and

- and  -adaptation explain the increased noise-tolerance of population representations.

-adaptation explain the increased noise-tolerance of population representations.

(A) Relationship between decoder performance and BI (measure of  -adaptation). Each point represents a subpopulation (one quarter) of the units from each of the sAN/IC/AC populations, subdivided according to units' BI (values in Figure 4B). Abscissa, mean BI in the subpopulation. Ordinate, performance of the subpopulation decoder. Lines, linear fit per SNR. (B) Relationship between decoder performance and CI (measure of

-adaptation). Each point represents a subpopulation (one quarter) of the units from each of the sAN/IC/AC populations, subdivided according to units' BI (values in Figure 4B). Abscissa, mean BI in the subpopulation. Ordinate, performance of the subpopulation decoder. Lines, linear fit per SNR. (B) Relationship between decoder performance and CI (measure of  -adaptation), similar to (A). Here, each point represents a subpopulation (one quarter) of the units from each of the sAN/IC/AC populations, subdivided according to the amount of units' contrast adaptation (values in Figure 5D). sAN values of

-adaptation), similar to (A). Here, each point represents a subpopulation (one quarter) of the units from each of the sAN/IC/AC populations, subdivided according to the amount of units' contrast adaptation (values in Figure 5D). sAN values of  were adjusted for low BI (see Figure S6).

were adjusted for low BI (see Figure S6).

To test the second prediction—that  -adaptation drives populations to encode

-adaptation drives populations to encode  in a more noise-tolerant fashion—we again subdivided each population into four groups, by sorting units by their contrast-dependent gain changes—that is, the extent of their contrast invariance (our measure of

in a more noise-tolerant fashion—we again subdivided each population into four groups, by sorting units by their contrast-dependent gain changes—that is, the extent of their contrast invariance (our measure of  -adaptation). Those subpopulations with stronger contrast-dependent gain control yielded

-adaptation). Those subpopulations with stronger contrast-dependent gain control yielded  -representations that degraded less with the addition of noise. This factor largely explained the differences in

-representations that degraded less with the addition of noise. This factor largely explained the differences in  across auditory centers (Figure 8B, Table S1B). Together, these results support the notion that adaptation to stimulus statistics is an important mechanism that drives populations of auditory neurons to represent sounds a noise-tolerant way.

across auditory centers (Figure 8B, Table S1B). Together, these results support the notion that adaptation to stimulus statistics is an important mechanism that drives populations of auditory neurons to represent sounds a noise-tolerant way.

Discussion

Our data show that, as one progresses along the auditory pathway from the AN to IC to AC, neurons show increasing adaptation to the mean ( , Figure 4) and contrast (

, Figure 4) and contrast ( , Figure 5) of sounds. This adaptation to stimulus statistics is relevant to hearing in noisy environments, because an important effect of background noise is to change these sound statistics. By adapting to such changes, populations of neurons could, in principle, produce a relatively noise-invariant code for nonstationary sounds (Figure 3). Consistent with this hypothesis, we found that population representations of natural sounds in higher auditory centers show stronger tolerance to the addition of stationary background noise (Figure 7), and that this noise tolerance could largely be explained by increases in

, Figure 5) of sounds. This adaptation to stimulus statistics is relevant to hearing in noisy environments, because an important effect of background noise is to change these sound statistics. By adapting to such changes, populations of neurons could, in principle, produce a relatively noise-invariant code for nonstationary sounds (Figure 3). Consistent with this hypothesis, we found that population representations of natural sounds in higher auditory centers show stronger tolerance to the addition of stationary background noise (Figure 7), and that this noise tolerance could largely be explained by increases in  - and

- and  -adaptation (Figure 8). This suggests that the increase in adaptation to stimulus statistics along the auditory pathway makes an important contribution to the construction of noise-invariant representations of sound.

-adaptation (Figure 8). This suggests that the increase in adaptation to stimulus statistics along the auditory pathway makes an important contribution to the construction of noise-invariant representations of sound.

Towards Normalized Representations

The effect of  - and

- and  -adaptation can be understood by representing the structure of a sound as a time-varying function,

-adaptation can be understood by representing the structure of a sound as a time-varying function,  . The brain does not have direct access to

. The brain does not have direct access to  ; instead, when the sound is produced at a particular amplitude (

; instead, when the sound is produced at a particular amplitude ( ) and is heard against a background of other sounds (

) and is heard against a background of other sounds ( ), the signal that the ear actually receives is the sound mixture

), the signal that the ear actually receives is the sound mixture  . To identify a sound, the brain must recover the sound structure,

. To identify a sound, the brain must recover the sound structure,  , without being confused by the often irrelevant variables

, without being confused by the often irrelevant variables  and

and  .

.

Experiments with synthetic DRC stimuli show a shift in coding away from a raw signal (resembling  ) in the periphery toward a more normalized signal (resembling

) in the periphery toward a more normalized signal (resembling  ) in the cortex. When the contrast of DRCs is manipulated, we find that sAN responses to DRCs are reasonably well described by an LN model without gain changes. Their firing rate is a function of

) in the cortex. When the contrast of DRCs is manipulated, we find that sAN responses to DRCs are reasonably well described by an LN model without gain changes. Their firing rate is a function of  —that is, the DRC filtered through that neuron's STRF (Figure 5B). This suggests that the AN, as a whole, provides a relatively veridical representation of sound mixtures reaching the ear. In comparison, many cortical units, and some IC units, adapt to changes in DRC contrast by changing their gain. These units' firing rates are not a function of

—that is, the DRC filtered through that neuron's STRF (Figure 5B). This suggests that the AN, as a whole, provides a relatively veridical representation of sound mixtures reaching the ear. In comparison, many cortical units, and some IC units, adapt to changes in DRC contrast by changing their gain. These units' firing rates are not a function of  (as in the sAN); they are often better described as a function of a normalized variable,

(as in the sAN); they are often better described as a function of a normalized variable,  , in which the stimulus contrast (

, in which the stimulus contrast ( ) has been divided out (Figure 5C). Even though AC neurons do not show complete contrast-invariance for these stimuli (the median AC gain change was 44%; perfect

) has been divided out (Figure 5C). Even though AC neurons do not show complete contrast-invariance for these stimuli (the median AC gain change was 44%; perfect  -encoding would be 100% gain change; Figure 5D), AC neurons' responses depend less on stimulus contrast than those in IC or sAN. A similar shift in coding is evident when considering small changes in the mean level of a DRC. Whereas each sAN fiber provides a relatively fixed representation of

-encoding would be 100% gain change; Figure 5D), AC neurons' responses depend less on stimulus contrast than those in IC or sAN. A similar shift in coding is evident when considering small changes in the mean level of a DRC. Whereas each sAN fiber provides a relatively fixed representation of  , IC and AC units adjust their baseline firing rates so that they effectively subtract out the stimulus mean (Figures S7 and S8). The effect of adaptation to stimulus statistics is thus that cortex (and, to a lesser degree, IC) provides a sound representation that is closer to the underlying sound,

, IC and AC units adjust their baseline firing rates so that they effectively subtract out the stimulus mean (Figures S7 and S8). The effect of adaptation to stimulus statistics is thus that cortex (and, to a lesser degree, IC) provides a sound representation that is closer to the underlying sound,  , than to the sound mixture reaching the ear,

, than to the sound mixture reaching the ear,  .

.

Functional Mechanisms for Building Noise-Invariant Representations

It is likely that adaptation to stimulus statistics is one of several changes in neural coding that contributes towards the construction of noise-invariant representations of sounds. Related findings were obtained by Lesica and Grothe [17], who studied changes in MTFs of IC neurons under noisy stimulation. Just as our investigation of  - and

- and  -adaptations was initially motivated by considering how the statistics of within-receptive field signals would change under clean and noisy sound stimulation (Figure 3), so Lesica and Grothe began by investigating the difference in the amplitude modulation spectra between foreground vocalizations and background noises. They observed that vocalizations contain more power in slow (

-adaptations was initially motivated by considering how the statistics of within-receptive field signals would change under clean and noisy sound stimulation (Figure 3), so Lesica and Grothe began by investigating the difference in the amplitude modulation spectra between foreground vocalizations and background noises. They observed that vocalizations contain more power in slow ( Hz) amplitude modulations than background noises. When the authors presented vocalizations to gerbils and recorded from neurons in the IC, they found that single units' MTFs shifted from being bandpass to more lowpass, suggesting that IC neurons redirect their coding capacity to modulation bands of higher SNR under noisy conditions.

Hz) amplitude modulations than background noises. When the authors presented vocalizations to gerbils and recorded from neurons in the IC, they found that single units' MTFs shifted from being bandpass to more lowpass, suggesting that IC neurons redirect their coding capacity to modulation bands of higher SNR under noisy conditions.

Similar results were recently obtained by Ding and Simon [8], who measured the aggregate activity in human AC via magnetoencephalography, as subjects listened to speech in spectrally matched noise. They found that as background noise is added to speech, the entrainment of aggregate cortical activity to slow temporal modulations (<4 Hz) in the speech signal remains high, while entrainment to faster (4–8 Hz) modulations degrades with noise. Since the gross envelope of the original speech can be decoded from aggregate responses to the clean and noisy stimuli, noise induces a change in response gain as well as changes to MTFs.

The relationship between our observations of increasing  -adaptation from periphery to cortex, and these previous findings of changing MTFs in IC neurons and aggregate cortical activity, may depend on the modulation specificity of the gain changes. For instance, a nonspecific increase in neural response gain would manifest as an overall upwards shift in the MTF. Conversely, an upwards shift within a small region of the MTF corresponds to a modulation-band–specific increase in gain. One possibility is that during complex sound stimulation, auditory neurons determine their gain independently for different modulation “channels” (such as described in modulation filterbank models [28],[46]), as a function of the signal statistics within each channel. This might have different effects on MTFs depending on the modulation spectrum of the background noise. In indirect support of this possibility, the extent to which the coding of different cells is affected by a given background noise appears to depend on each cell's modulation tuning [47]. An alternative possibility is that auditory neurons might always become more modulation lowpass in the presence of background noise, regardless of the noise's actual modulation statistics. This might reflect a set of priors about what is signal and what is noise in an incoming sound mixture. Our set of unique sounds and background noises was too small to test these two hypotheses (or even to measure MTFs). Nevertheless, if auditory neurons additionally demonstrate modulation-specific gain in response to noise, it is likely that this effect grows stronger from periphery to cortex.

-adaptation from periphery to cortex, and these previous findings of changing MTFs in IC neurons and aggregate cortical activity, may depend on the modulation specificity of the gain changes. For instance, a nonspecific increase in neural response gain would manifest as an overall upwards shift in the MTF. Conversely, an upwards shift within a small region of the MTF corresponds to a modulation-band–specific increase in gain. One possibility is that during complex sound stimulation, auditory neurons determine their gain independently for different modulation “channels” (such as described in modulation filterbank models [28],[46]), as a function of the signal statistics within each channel. This might have different effects on MTFs depending on the modulation spectrum of the background noise. In indirect support of this possibility, the extent to which the coding of different cells is affected by a given background noise appears to depend on each cell's modulation tuning [47]. An alternative possibility is that auditory neurons might always become more modulation lowpass in the presence of background noise, regardless of the noise's actual modulation statistics. This might reflect a set of priors about what is signal and what is noise in an incoming sound mixture. Our set of unique sounds and background noises was too small to test these two hypotheses (or even to measure MTFs). Nevertheless, if auditory neurons additionally demonstrate modulation-specific gain in response to noise, it is likely that this effect grows stronger from periphery to cortex.

These data also provide some insight as to how our results might extend to more complex classes of background noise. Here, we have characterized coding changes induced by adding stationary noise with flat modulation spectra and no cross-band correlations. Many background sounds have more complex (often 1/f-like) modulation spectra [28],[35]; a greater proportion of their modulation energy lies within the common passband of midbrain and cortical auditory neurons. Since our simulations suggest that greater modulation tuning plays only a small part in enabling tolerance to noise with flat modulation spectra, it should be less important still for enabling tolerance to noise with 1/f-like modulation spectra. We therefore expect that the adaptive coding we and others describe is crucial for more general classes of background noise. Beyond this, some background sound textures also contain correlations across carrier or modulation channels [28], while others are nonstationary, changing their statistics over time. An understanding of how these noise features differentially affect signal encodings along the auditory pathway would require further experiments utilizing a broader set of background noises.

An alternative hypothesis for how the brain builds noise-invariant representations of sound is that the very nature of these representations may be changing along the auditory pathway, from an emphasis on encoding predominantly spectrotemporal information in the periphery to encoding information about the presence of higher level auditory features in cortex. This, for instance, is a position recently argued for by Chechik and Nelken [48], based on their investigation of the responses of cat cortical neurons to the components of natural birdsong. Emerging data from the avian brain support this idea: the avian analogue of AC appears to shift its encoding toward sparse representations of song elements, which can be encoded in a noise-robust manner [49]. Our results relate to this hypothesis by emphasizing that, to the extent that the mammalian midbrain and cortex do encode spectrotemporal information about ongoing sounds, they do so in progressively more normalized coordinates. This captures at least some (but likely not all) of the proposed representational shifts from periphery to cortex.

Finally, bottom-up mechanisms are undoubtedly just a part of a broader infrastructure for selecting and enhancing representations of particular sounds heard within complex acoustic scenes. In our experiments, we chose stimuli for which the assignment of the tags “signal” and “noise” (or “foreground”/“background,” or “relevant”/“irrelevant”) to components of the mixture is reasonably justified by the different statistical structures of natural and background sounds [17],[28],[35],[50]. On the other hand, there are also many real-world situations for which such assignment is ambiguous, and depends on task-specific demands. Listening to a single talker against a background of many is one notable instance. Yet human imaging studies reveal that in such circumstances, the neural representation of attended talkers is selectively enhanced relative to that of unattended talkers, even at low SNRs [7],[26],[51]. While noise tolerance appears to grow even stronger between core and belt AC [7],[8], this is likely to be attention-dependent [7],[8],[52]–[54]. Understanding how we create noise-tolerant representations of sound within more complex mixtures is thus interwoven with questions of how we segment these scenes, how we tag the components as “signal” and “noise,” and how we direct our attention accordingly.

In sum, our results provide a clear picture of a bottom-up process that contributes to the emergence of noise-invariant representations of natural sounds in the auditory brain. As neurons' adaptation to stimulus statistics gradually grows stronger along the auditory pathway, populations of these neurons progressively shift from encoding low-level physical attributes of incoming sounds towards more mean-, contrast-, and noise-independent information about stimulus identity. The result is a major computational step towards the context-invariant, categorical sound representations that are seen in higher areas of AC.

Materials and Methods

Animals and Physiology

All animal procedures were approved by the local ethical review committee and performed under license from the UK Home Office.

Extra-cellular recordings were performed in medetomidine/ketamine-anesthetized ferrets. Previous work has shown that this does not affect the contrast adaptation properties of cortical neurons [10]. Full surgical procedures for cortical recordings (primary auditory cortex and anterior auditory field), spike-sorting routines, unit selection criteria, and sound presentation methods (diotic, earphones, 48828 kHz sample rate) are provided in ref. [12]. Surgery for IC recordings were performed as in ref. [55]. Recordings were made bilaterally in both locations.

The AN was simulated using the complete model of Zilany et al. [27]. We generated spiking responses from 100 fibers at a 100 kHz sample rate, with the same distribution of center frequencies (CFs) and spontaneous rates (SRs) as in that paper (see section “AN Model” below); n = 85 fibers were used based on reliably evoked responses to the natural stimuli [10],[12].

Stimuli

Four natural sound segments were presented (forest sounds, rain, female speech, male speech sped up by 50%), with a combined duration of 16 s, to 5 animals (IC, 2 animals, n = 80 units; AC, 3 animals, n = 124 units). For each sound, noise tokens were synthesized with the same power spectrum and duration, and mixed with the original source. The amplitudes of the source and noise were scaled so that the SNR was 20 dB for the clean condition, and 10/0/−10 dB for the noisy conditions, with a fixed root-mean-square (RMS) level of 80 dB SPL. The “clean” condition was therefore high-SNR, but not entirely noise-free; this was necessary to keep its (log)-spectrogram bounded from below at reasonable values. Fifty unique noise tokens were generated for each sound and each SNR. All sounds included 5 ms cosine ramps at onset and offset. The set of stimuli were presented in random order, interleaved with ∼7 min of DRC stimulation. DRCs were constructed from tones spaced at 1/6-octave intervals from 500 Hz to 22.6 kHz; these changed in level synchronously every 25 ms. Tone levels were drawn from uniform distributions with a mean  dB SPL, and halfwidths of

dB SPL, and halfwidths of  dB. Responses to these DRCs informed the analysis in Figure 8B.

dB. Responses to these DRCs informed the analysis in Figure 8B.

The analysis in Figure 5A–F was from DRCs presented to a further 6 animals (IC, 3 animals, n = 136 units; AC, 3 animals, n = 76 units); these procedures were as described in ref. [12]. Here, tones were 1/4-octave spaced, and tone-level distributions had  dB SPL and

dB SPL and  dB. Approximately 30–60 min of DRCs were presented during each penetration. Stimuli in Figures S7 and S8 were presented to 2 animals (IC) and 4 animals (AC).

dB. Approximately 30–60 min of DRCs were presented during each penetration. Stimuli in Figures S7 and S8 were presented to 2 animals (IC) and 4 animals (AC).

AN Model

We simulated the AN using the phenomenological model of Zilany et al. [27]. We chose the Zilany model because it captures many physiological features of the AN responses to simple and complex sounds, including middle-ear filtering, cochlear compression, and two-tone suppression. It does not explicitly model the action of the olivocochlear bundle, such as the medial olivocochlear reflex, which modulates cochlear gain during periods of high-amplitude stimulation [56] and may therefore improve the audibility of transient sounds, such as tones or vowels, in noise [57],[58]. However, it does capture the adaptation of AN responses to the mean level of a sound as experimentally measured in the cat AN [36],[59].

We used the full AN model as provided in the authors' code, including the exact (rather than approximate) implementation of power law adaptation. We simulated 100 AN fibers, using the same distribution of CFs and SRs that the authors used in that paper, based on previous physiological data [60]. Of the 100 fibers, 16 were low SR, 23 were medium SR, and 61 were high SR. For each SR, fibers had log-spaced CFs between 250 Hz and 20 kHz.

We ran three controls on this model. First, we tested whether there was a difference in the results from low, medium, or high SR fibers, and found little to no difference between the metrics presented in the main text. Second, Zilany et al. present both an exact and an approximate implementation of power law adaptation; we therefore simulated both and found that the two implementations produced very similar results.

Finally, the adaptation built into the model allows past stimulation history to affect current responses. We therefore tested whether the decoder results changed as we increased the length of preceding stimulation. To do this, we simulated the stimulus presentation sequences used during physiological recordings, where natural sounds were played back-to-back (with a 100 ms silence between sounds). The stimuli were presented in pseudorandom order, as in physiology experiments. As the time and memory complexity of the sAN simulation algorithm grows exponentially with stimulus length, the longest sequences we were able to present in reasonable time were four sounds (i.e., 16 s) in duration. Next, we selected the responses to either the first, the second, the third, or the fourth sound in each sequence. The first set of responses were generated with 0 s of preceding stimulation; these were discarded to avoid unstable initial behavior. We considered each of the remaining sets of responses: the second set, with an average of 4 s of preceding stimulation; the third, with an average of 8 s; and the fourth, with an average of 12 s. Using this schema, we simulated three entire sAN populations and calculated the relevant decoder metrics for each. There was very little difference between the values of the metrics in Figure 7D–E when the amount of preceding stimulation was varied between 4 and 12 s. We were therefore confident that the simulated adaptation had reached a steady state. Data in the main text are from the fourth set of responses; these are simulated with an adaptation “memory” of 12 s of natural stimulation.

KL Divergence Calculation

To measure how the distributions of units' responses changed with the addition of noise (Figure 2B), we performed the following analysis for each unit. We began with the trial-averaged, time-varying firing rates evoked over the stimulus ensemble for each SNR ( , where

, where  is SNR and

is SNR and  is time), at a 5 ms resolution. We scaled these firing rates relative to the maximum firing rate produced by that unit in the 20 dB SNR condition:

is time), at a 5 ms resolution. We scaled these firing rates relative to the maximum firing rate produced by that unit in the 20 dB SNR condition:  . We then approximated the distributions

. We then approximated the distributions  for each SNR

for each SNR  , by binning

, by binning  at a resolution (bin size) of 0.01, and using a maximum

at a resolution (bin size) of 0.01, and using a maximum  of 2 (enforced for consistency; no

of 2 (enforced for consistency; no  ever exceeded this value). The counts in each bin were augmented by a value of 0.5 (generally about 2%–10% of the observed count; equivalent to using a weak Dirichlet prior with a uniform base measure

ever exceeded this value). The counts in each bin were augmented by a value of 0.5 (generally about 2%–10% of the observed count; equivalent to using a weak Dirichlet prior with a uniform base measure  ); this ensured that the results remained finite. We then normalized the counts to have unitary sum. Finally, we computed the Kullback–Leibler divergence between

); this ensured that the results remained finite. We then normalized the counts to have unitary sum. Finally, we computed the Kullback–Leibler divergence between  and

and  , with values shown in Figure 2B.

, with values shown in Figure 2B.

Noise Independence Calculation

To assess how the stimulus-conditioned responses depended on the level of background noise, we calculated a mutual information (MI)-based measure for each unit (Figure 2C). For each background-noise condition ( ), we labeled the stimulus in each time bin with an index,

), we labeled the stimulus in each time bin with an index,  , using the same

, using the same  indices across SNRs. We then calculated the (bias-corrected) MI between the unit's evoked response distributions,

indices across SNRs. We then calculated the (bias-corrected) MI between the unit's evoked response distributions,  , and the

, and the  index,

index,  , and the MI between

, and the MI between  and both the

and both the  index and the

index and the  ,

,  . Bias-corrections were performed by shuffling labels [61]. The ratio between these respective quantities measures the proportion of the response entropy that can be reduced by knowing the

. Bias-corrections were performed by shuffling labels [61]. The ratio between these respective quantities measures the proportion of the response entropy that can be reduced by knowing the  index, as compared with knowing both the

index, as compared with knowing both the  index and the

index and the  . If the responses are statistically independent of the noise, then

. If the responses are statistically independent of the noise, then  should equal

should equal  , as knowing the

, as knowing the  adds no further information. Consequently, a value of 1 means that the response distribution contains information about the underlying sound stimulus but not the level of background noise; lower values mean that the information about the underlying sound stimulus is more SNR-dependent.

adds no further information. Consequently, a value of 1 means that the response distribution contains information about the underlying sound stimulus but not the level of background noise; lower values mean that the information about the underlying sound stimulus is more SNR-dependent.

Estimating Contrast-Dependent Gain Changes

To measure how the slope of units' nonlinearities changed as the contrast of the DRC stimuli changed (Figures 5D and 8B), we used the following process. As described in the section “Stimuli” above, units in Figure 5D were stimulated with DRCs used in a previous study [12]. We considered only data from the two uniform contrast conditions in that study—that is, DRC segments where all tone levels were drawn from a distribution with  dB (i.e.,

dB (i.e.,  dB), or where all tone levels were drawn from a distribution with

dB), or where all tone levels were drawn from a distribution with  dB (

dB ( dB). We fitted the following nonlinearity to this dataset:

dB). We fitted the following nonlinearity to this dataset:

| (1) |

| (2) |

| (3) |

The reported values of  are given as percentages; this is the ratio:

are given as percentages; this is the ratio:

| (4) |

Thus 0% indicates no slope changes, and 100% indicates perfect compensation for stimulus contrast. It is also possible under this metric that  can exceed 100%: this indicates that the unit's gain change was even stronger than was necessary to compensate for the changes in contrast.

can exceed 100%: this indicates that the unit's gain change was even stronger than was necessary to compensate for the changes in contrast.

The units in Figure 8B were stimulated with a different set of DRCs. These had tone-level distributions with half-widths drawn from  dB (and

dB (and  as above). We fitted the same contrast-dependent nonlinearity as above (Equations 1–3). Here, since a broader range of contrasts was used, the reported values of

as above). We fitted the same contrast-dependent nonlinearity as above (Equations 1–3). Here, since a broader range of contrasts was used, the reported values of  are given as:

are given as:

| (5) |

There were no significant differences between the measures in Equations 4 and 5.

Estimating Contrast-Dependent Changes in Coding ( )

)

As the contrast of DRC stimuli changed, units' output nonlinearities predominantly changed their gain (as in Figure 5B). Some units' output nonlinearities also showed other adaptive shifts (examples in Figure S2). To quantify the overall effect of contrast-dependent changes to output nonlinearities, we constructed a measure of how these adaptive shifts change the amount of information a unit's firing rate carries about the ongoing stimulus (Figure 5F).

As above (see “Estimating Contrast-Dependent Gain Changes”; Figures 5D and 8B), we limited our analysis for each unit to data from the two uniform contrast conditions. For each unit, we fitted individual output nonlinearities for the two conditions (these are the blue and red curves shown in Figure 5B and Figure S2A); we denote these two curves as  and

and  , respectively:

, respectively:

| (6) |

| (7) |

where  is the STRF-filtered DRC for that unit. Unlike in the previous section, these two nonlinearities were not constrained to have the same values of

is the STRF-filtered DRC for that unit. Unlike in the previous section, these two nonlinearities were not constrained to have the same values of  and

and  .

.

For sigmoidal  , and Poisson spiking, the Fisher information conveyed by the unit about

, and Poisson spiking, the Fisher information conveyed by the unit about  is:

is:

| (8) |

Where  .

.

Using these equations, we estimated the expected  over the low contrast distribution of stimuli for both

over the low contrast distribution of stimuli for both  and

and  . We generated

. We generated  samples of

samples of  values from the low contrast distribution (by filtering a long, low contrast DRC through the STRF) and calculated the expectations

values from the low contrast distribution (by filtering a long, low contrast DRC through the STRF) and calculated the expectations  and

and  over these samples. Finally, we defined:

over these samples. Finally, we defined:

| (9) |

where the logarithm removes the dependency on the maximum firing rate. Thus, this measure estimates how much more Fisher information a unit carries about low contrast stimuli when it is adapted to low contrast stimulation, compared with when it is adapted to high contrast stimulation.

Population Decoding

Log-amplitude spectrograms of natural sounds were computed with 256 frequency bins (0.1–24 kHz) and downsampled to 5 ms time resolution. Neuronal responses were binned at 5 ms resolution to match the resolution of the spectrograms. Responses to 40 randomly selected repeats of the clean sound were set aside as a training set for the decoder.

We decoded the stimulus spectrogram from population responses using a dictionary approach. We made the following assumptions: (1) the responses of pairs of units, or of a given unit at two different times, were conditionally independent given the stimulus; (2) the expected firing rate of unit  in time bin

in time bin  was a function of the recent history of stimulation—that is, of the spectrogram segment

was a function of the recent history of stimulation—that is, of the spectrogram segment  (where

(where  is the full sound spectrogram,

is the full sound spectrogram,  is frequency, and

is frequency, and  is a history index, covering 20 bins—i.e., 100 ms); and (3) the observed firing rate of unit

is a history index, covering 20 bins—i.e., 100 ms); and (3) the observed firing rate of unit  at time

at time  ,

,  , was the result of an inhomogeneous Poisson process, with

, was the result of an inhomogeneous Poisson process, with  for some function

for some function  . Rather than attempting to parameterize

. Rather than attempting to parameterize  , we obtained maximum a posteriori estimates of