Abstract

Active dendritic branchlets enable the propagation of dendritic spikes, whose computational functions remain an open question. Here we propose a concrete function for the active channels in large dendritic trees. Modelling the input-output response of large active dendritic arbors subjected to complex spatio-temporal inputs and exhibiting non-stereotyped dendritic spikes, we find that the dendritic arbor can undergo a continuous phase transition from a quiescent to an active state, thereby exhibiting spontaneous and self-sustained localized activity as suggested by experiments. Analogously to the critical brain hypothesis, which states that neuronal networks self-organize near criticality to take advantage of its specific properties, here we propose that neurons with large dendritic arbors optimize their capacity to distinguish incoming stimuli at the critical state. We suggest that “computation at the edge of a phase transition” is more compatible with the view that dendritic arbors perform an analog rather than a digital dendritic computation.

Critical systems are organized in a fractal-like pattern spanning numerous temporal and spatial scales. The brain has recently been included amongst the many physical and biological systems exhibiting traces of criticality1,2. In the past decade, several phenomena suggestive of critical states have been observed in different systems: multielectrode data from cortical slices in vitro3,4,5, anesthetized6, awake7 and behaving animals8; human electrocorticography9, electroencephalography10, magnetoencephalography10,11, as well as functional magnetic resonance imaging11,12 recordings. Together, these experiments provide evidence that criticality is pervasive across a wide range of spatio-temporal brain scales.

The critical brain hypothesis offers an appealing solution to a long-lasting conundrum of neuroscience: how can localized information (sometimes from very specific regions) propagate in the brain without a spatial/temporal exponential decay or saturation1,13? Advantages of neuronal systems poised around a critical state include optimization of the dynamic range of neuronal networks14, as confirmed experimentally15, as well as transmission and storage of information3,4,16,17. However, a fundamental assumption of the critical brain hypothesis has so far not been examined: is the single neuron the minimal dynamic unit in neuronal networks, like a spin site in the Ising model for ferromagnets (the prototypical system for phase transitions)? Or can critical phenomena associated with phase transitions already occur at the single neuron level? Adopting a statistical physics approach, we explore the behavior of dendritic branchlets at the sub-cellular level as forming a network of functional dynamical units.

Here we treat neurons with their extensive dendritic trees as excitable media (see Fig. 1a). This task is far from trivial because of the complexity18,19, the diversity of neurons20, and the absence of information about key elements governing the dynamics, such as how the zoo of ionic channels is distributed along the dendrites21,22,23,24. In fact, there is an entire research area concerned with biophysically detailed neuronal modeling24,25,26,27 and dendritic computation28.

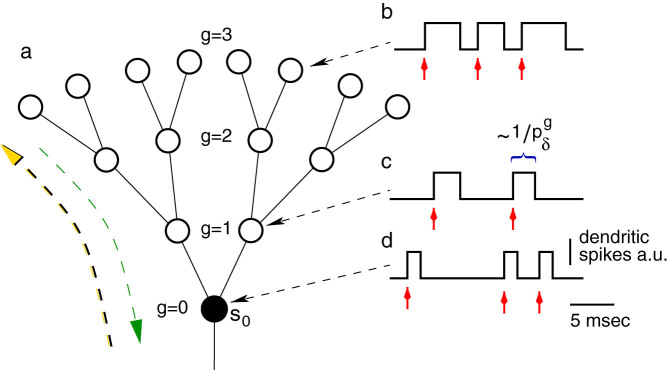

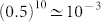

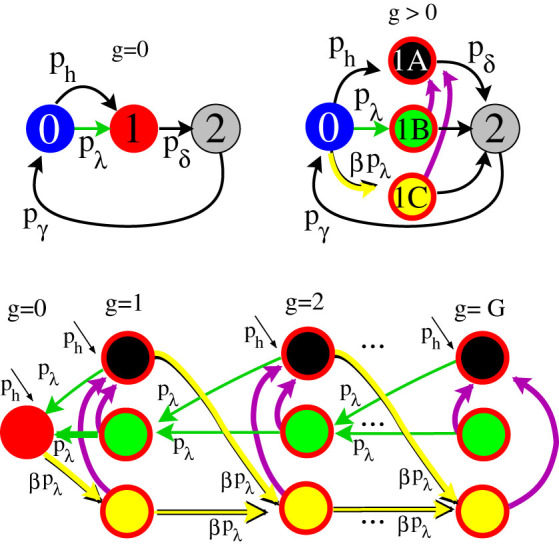

Figure 1. Model of an active dendritic tree with non-stereotyped dendritic spikes.

(a), Excitable elements (circles) connected (bars) in a Cayley tree topology with G = 3 layers and coordination number z = 3 (one mother and k = 2 daughter branches). Large green (yellow) arrow illustrates forward (backward) propagation. (b)–(d), Biologically motivated dendritic spike with duration depending on the distance from the soma as a net effect of variable density of ionic channels. Red arrows indicate that the site is being stimulated (by an external input and/or by mother or daughter branches), and the accompanying symbol represents the average period of dendritic spikes at layer g.

For our modeling purposes, we invoke the statistical physics principle that emergent phenomena seem to depend on only a few characteristics, such as symmetries, dimensionality, network topology and type of coupling. Hence the basic dynamical units (atoms, spins) need not be modeled in detail29. Our cellular automaton approach to dendritic computation unveils the emergence of critical phenomena at the single neuron level from a large number of sub-cellular interacting units (dendritic compartments).

We find that the dendritic arbor can fire spontaneously when we take into account the fact that dendritic spikes are non-stereotyped, that is, with variable durations30. In this case, a smooth continuous phase transition appears between rest and self-sustained dendritic activity. At the interface between these states, there is a critical regime, which optimizes the dynamic range of the input-output response function of the neuron's dendritic arbor. The presence of the phase transition can increase the maximum neuronal signal compression capacity by up to ~ 100 times. In our critical-neuron model, the minimal dynamic units are dendritic branchlets (or perhaps patches of dendritic spines), so that even a single neuron can have a highly nonlinear input-output response function. This capability of a single neuron to compress stimulus intensity varying over several orders of magnitude in a decade of output firing rate could be the basis of psychophysical power laws31. In addition, such refinement in the basic dynamical unit corresponds to a modeling improvement of spatial resolution of a few orders of magnitude.

Results

The overwhelming majority of models of criticality in neuronal networks, if not all, neglect the characteristic tree shape of neurons. Typically, the collective behavior studied is generated by oversimplified point-like neurons with no spatial structure4,14,32,33,34,35,36. Dropping this strong assumption unveils a new sublevel of neuronal dynamics where concepts from statistical physics can be applied. In previous work we introduced a simple model of active dendrites37. In the model, nonlinear excitable waves of activity, not accounted for by passive dendrites and linear cable theory, propagate and annihilate upon collisions, giving rise to large signal compression abilities. Weak inputs are highly amplified whereas strong inputs are not subjected to early saturation. The dynamic range, which quantifies how many decades of stimulus intensity can be distinguished by the excitable medium, attains large values. This property has proven robust against several model variants37,38.

In fact, excitable media with a tree structure performed better than other network topologies39,40. Moreover, the system dynamics in a tree topology allows analytical treatment due to the absence of loops. The model was quantitatively understood by solving the master equation under an excitable-wave (EW) mean-field approximation38. However, phase transitions are not observed in such dendritic arbors when the dynamics of the excitable elements (active dendritic branchlets) is deterministic. In this paper, we study a different dendritic arbor with non-stereotyped dendritic spikes that exhibit probabilistic spike duration30, as well as non-homogeneous spike duration41, introduced by a layer-dependent function. Such biologically motivated improvements in the model give rise to a new dynamical regime consisting of a self-sustained state with spontaneous activity that emerges through a non-equilibrium phase transition.

Physiological experiments show that dendritic spikes depend on several voltage-gated ionic channels. Due to the non-homogeneous density of channels along the proximal-distal axis, dendritic spikes can present distinct dynamical features in different regions of the dendritic tree. Dominated by the slow calcium-dependent potentials27,41,42 or N-methyl-D-aspartate (NMDA) potentials30, the dendritic spikes/plateaus have variable duration (see for example Ref. 30 and references therein), and the duration of active periods can become effectively longer at more distal sites41, as illustrated in Figs. 1b through d. These responses account for a variety of active behaviors including dendritic spikes with longer and more variable duration than sodium-dependent somatic spikes30.

In our previous models37,38, other parameters have been studied, but the time-length of the spikes that we introduce here proves to be a crucial factor. Such variability in the duration of the spike has been shown to shape the network dynamics43, and to enhance the computational capability of neuronal networks44. In the present model, the variable duration of dendritic spikes plays an important role in dendritic computation because it is capable of controlling the emergence of a phase transition. Evidence for both sides of this continuous non-equilibrium phase transition has been reported experimentally23,41. Several different patterns of activity observed experimentally are candidates for the active phase of the putative phase transition we propose: plateaus30,45, single spikes42,46,47, bursts48,49, and oscillations50,51.

Model

As illustrated in Fig. 1a, we model the dendritic arbor as connected probabilistic cellular automata with a Cayley tree topology of active branchlets capable of transmitting dendritic spikes. The states update in parallel with discrete time steps of δt = 1 ms. Exploring the idea of a dendritic branchlet as a fundamental functional unit of the nervous system (see Ref. 52 and references therein), we consider dendritic branchlets as excitable units. Each excitable unit (the i-th dendritic branchlet) has three possible discrete states si(t) ∈ {0, 1, 2}. A quiescent site (si(t) = 0) may become active in the next time step (si(t + 1) = 1) either by external driving or by propagation of an excitable wave from an active neighbor with probability pλ. External input arrives at quiescent sites with probability ph = 1 − exp(−hδt) per time step, where h stands for the rate of independent Poisson processes and may vary in a range of several orders of magnitude. An active site (si(t) = 1) becomes refractory (si(t + 1) = 2) with probability $p_\delta^{g}$ at each time step, where g indicates the layer that the site belongs to (see Fig. 1c). To close the cycle, refractory sites become quiescent with probability pγ (we fix pγ = 1/2 throughout this paper).
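The update rules above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' simulation code: it assumes a binary tree (k = 2) stored in heap order with the proximal site at index 0 and layers numbered g = 0, …, G, and it assumes that each active neighbor tries independently to excite a quiescent site. The function names `make_layers`, `step` and `simulate` are hypothetical.

```python
import numpy as np

def make_layers(G):
    """Layer index g of every site of a binary tree stored in heap order.

    Assumption: site 0 is the proximal site (layer 0) and site i has
    daughters 2i+1 and 2i+2, so the tree has 2**(G+1) - 1 sites.
    """
    n_sites = 2 ** (G + 1) - 1
    return np.array([(i + 1).bit_length() - 1 for i in range(n_sites)])

def step(s, layers, p_lambda, p_delta, p_gamma, p_h, rng):
    """One parallel update of all sites; returns the new state array."""
    n = s.size
    active = (s == 1).astype(int)

    # Count the active neighbours (mother and daughters) of every site.
    n_act = np.zeros(n, dtype=int)
    child = np.arange(1, n)
    mother = (child - 1) // 2
    n_act[child] += active[mother]            # contribution of the mother
    np.add.at(n_act, mother, active[child])   # contribution of the daughters

    # A quiescent site fires if the external input or any active
    # neighbour succeeds (independent attempts, an assumption).
    p_fire = 1.0 - (1.0 - p_h) * (1.0 - p_lambda) ** n_act

    u = rng.random(n)
    new = s.copy()
    new[(s == 0) & (u < p_fire)] = 1             # quiescent -> active
    new[(s == 1) & (u < p_delta[layers])] = 2    # active -> refractory
    new[(s == 2) & (u < p_gamma)] = 0            # refractory -> quiescent
    return new

def simulate(G, p_lambda, p_delta, h, T=1000, p_gamma=0.5, dt=1.0, seed=0):
    """Run T steps from a random initial state.

    p_delta may be a scalar (homogeneous model) or an array of length
    G + 1 (one value per layer). Returns the final state and the time
    series of the proximal site.
    """
    rng = np.random.default_rng(seed)
    layers = make_layers(G)
    p_delta = np.broadcast_to(np.asarray(p_delta, dtype=float), (G + 1,))
    p_h = 1.0 - np.exp(-h * dt)
    s = rng.integers(0, 3, size=layers.size)
    s0 = np.empty(T, dtype=int)
    for t in range(T):
        s = step(s, layers, p_lambda, p_delta, p_gamma, p_h, rng)
        s0[t] = s[0]
    return s, s0
```

Calling `simulate(G=5, p_lambda=0.9, p_delta=1.0, h=0.0, T=60)` illustrates the deterministic-duration limit: with no external input, every wave follows a non-backtracking path on the tree, so no site remains active after roughly 2G steps.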

The probabilistic nature of our model takes into account the fact that the density of ion channels in a given spatial region is not always large enough to allow their mean activation (or inactivation, or deactivation etc.) to be described by a continuous variable, and hence fluctuations around the mean are not negligible. Such non-deterministic and irregular factors have already been proven to play a crucial role in shaping the neuronal excitability53,54, the propagation55 and the duration of dendritic spikes30. Despite the fact that subthreshold dendritic activity can influence the integration of synaptic signals51, we assume a large attenuation of subthreshold activity to an extent that a boost of activity is required for activity propagation. It is noteworthy that our previous model37, which features this very same assumption, has been recently validated by detailed morphologically reconstructed multi-compartmental cell models with active dendrites but also including passive propagation of subthreshold activity56.

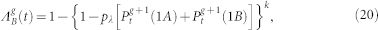

The model generalizes our previous work37,38 by introducing a probabilistic spike duration, which is motivated by experiments (see Ref. 30 for a recent review paper). In what follows, we first consider a simple homogeneous model with spike duration controlled by a uniform probability $p_\delta^{g} = p_\delta$ for all layers g (our previous model37 is thus recovered when pδ = 1). For $p_\delta \to 0$ the spike duration becomes unreasonably long. Thus, our attention is mostly concentrated in the regime between the extremes (0 < pδ < 1). Whereas the stereotyped sodium-dependent somatic spikes typically last 1 ms, the duration of dendritic activity exhibits large variability depending on the type of neuron and ion channels involved, and falls within the range of 1–10 milliseconds (or even longer30). This is captured in our model by the fact that our time step is δt = 1 ms and the time spent in the active state is about $1/p_\delta$ time steps: for pδ = 1, the duration of our model dendritic spike is 1 ms, and for pδ = 0.1 the average duration would be 10 ms. We start by analysing this model with homogeneous spatial distribution of spike duration, which has some advantages: it is clear, intuitive, and allows analytical insights about its phase transition, which is controlled by pλ and pδ.
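The quoted mean durations can be checked with a short calculation. As a sketch, assume that an active site leaves the active state independently at each time step with probability pδ, so that the spike duration d (in time steps; the symbol d is introduced here only for illustration) is geometrically distributed:

$$P(d = n) = p_\delta\,(1 - p_\delta)^{\,n-1}, \quad n = 1, 2, \dots, \qquad \langle d \rangle = \sum_{n \ge 1} n\, p_\delta\,(1 - p_\delta)^{\,n-1} = \frac{1}{p_\delta}.$$

With δt = 1 ms this gives ⟨d⟩ = 1 ms for pδ = 1 and ⟨d⟩ = 10 ms for pδ = 0.1, in agreement with the values stated above.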

Next, we study a more realistic scenario based on the relevant physiological evidence previously described: the model assumes that the spike duration depends on the distance from the proximal site, as previously reported in the Purkinje cell41. Owing to the absence of a clear functional dependence in the literature, for simplicity, we consider a linear dependence $p_\delta^{g} = 1 - \alpha g/G$, where G = 10 (unless otherwise stated) stands for the tree size, and the parameter α (0 ≤ α ≤ 1) controls the inhomogeneity.
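To make the layer dependence concrete, the following sketch tabulates a linear profile and the corresponding mean spike durations. It assumes the linear form $p_\delta^{g} = 1 - \alpha g/G$ with layers numbered g = 0, …, G, and a geometrically distributed duration with mean δt / $p_\delta^{g}$. The function names are hypothetical.

```python
import numpy as np

def p_delta_profile(G, alpha):
    """Per-layer probability of leaving the active state.

    Assumed linear form: p_delta[g] = 1 - alpha * g / G for g = 0..G.
    """
    g = np.arange(G + 1)
    return 1.0 - alpha * g / G

def mean_duration_ms(p_delta, dt_ms=1.0):
    """Mean spike duration in ms for a geometric duration with exit probability p_delta."""
    return dt_ms / np.asarray(p_delta, dtype=float)

profile = p_delta_profile(G=10, alpha=0.5)
durations = mean_duration_ms(profile)
# Proximal layer (g = 0): p_delta = 1, i.e. 1 ms spikes.
# Most distal layer (g = G): p_delta = 0.5, i.e. 2 ms spikes on average.
```

Under this assumed form, α = 0 recovers deterministic 1 ms spikes in every layer, while larger α lengthens spikes progressively towards the distal sites.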

To characterize the dynamical regimes of our system, we must define our order parameter29. The main order parameter of interest here is the stationary firing rate of the proximal site s0 (see Fig. 1a):

$$F = \frac{1}{T} \sum_{t=1}^{T} \delta_{s_0(t),\,1}, \qquad (1)$$

where T is an averaging time window and δi,j is the Kronecker delta (by definition, δi,j = 1 if i = j, and zero otherwise). The proximal dendrite firing rate F is biologically interesting because it provides the main input to the neuronal soma and can be thought of as proportional to the neuronal firing rate. Notice that s0 and hence F are functions of the external input with intensity h. One of our main foci will be on the response function F(h).
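In practice, the order parameter is simply the fraction of time steps in which the proximal site is in the active state. A minimal sketch, assuming the states of the proximal site have been recorded as a sequence of integers in {0, 1, 2} (the function name is hypothetical):

```python
import numpy as np

def firing_rate(s0_series):
    """Fraction of time steps in which the proximal site is active (state 1)."""
    s0_series = np.asarray(s0_series)
    # (s0_series == 1) plays the role of the Kronecker delta.
    return float(np.mean(s0_series == 1))

# Three active steps out of eight recorded steps.
example = firing_rate([0, 1, 2, 0, 1, 1, 2, 0])  # 0.375
```

The result is a rate per time step; dividing by δt = 1 ms converts it to spikes per millisecond.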

Phase diagram without external input

It is instructive to analyze the complex spatio-temporal dynamics of an extensive dendritic tree in the simplest scenario, when average spike durations are homogeneous (α = 0) and the system is subjected to no external driving (h = 0). The absence of stimulus is a somewhat artificial condition, but very informative because it crisply uncovers the critical region. Starting with the states of nodes randomly distributed according to a uniform distribution among the three possible states, we follow the dynamics for a sufficiently long time (T ~ $10^{4}$ time steps) until a stationary state is reached. If the tree reaches an active and stable level, in which the activity in the dendritic tree does not vanish and the firing rate of the proximal site is nonzero, the system is said to be supercritical. On the contrary, if the activity of the system vanishes rapidly, the system is subcritical. For large systems, the fate of the system depends weakly on the initial condition and is mainly determined by control parameters pλ (which controls the coupling between branchlets) and pδ (which controls the average spike duration). The critical regime occurs at the border between the sub- and supercritical regimes, where the activity level vanishes slowly and without a characteristic time scale14.

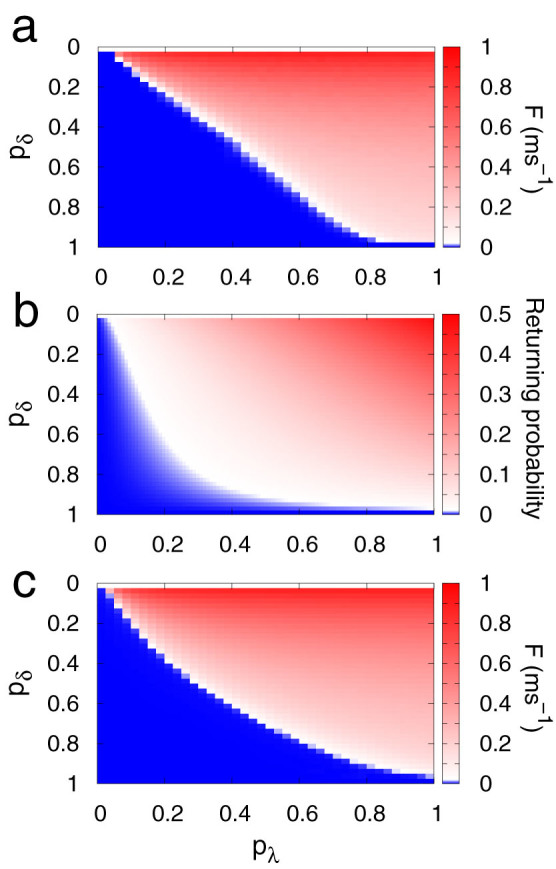

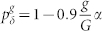

As represented in Fig. 2a, we numerically find the critical curve of the parameter plane (pλ, pδ) in a finite tree with G = 10. The critical curve corresponds to the border between the absorbing state with F(h = 0) = 0 (blue) and the active state with F(h = 0) > 0 (red). Naturally, a true phase transition occurs only for infinite systems, but for moderately large systems we already observe activity that persists for simulation times much longer than any relevant biological time scale. It is important to emphasize that no active phase exists for dendritic spikes with a deterministic duration, pδ = 1. Only when they are non-stereotyped (pδ < 1) does self-sustained activity become possible.

Figure 2. Continuous phase transition in active dendritic trees with non-stereotyped dendritic spikes.

(a), Average firing rate as a function of pλ (which governs the coupling among branchlets) and pδ (which governs the average spike duration) in numerical simulations for tree size G = 10. (b), Returning probability (see Methods for details) as a function of pλ and pδ. (c), Same as panel (a) but for the generalized excitable-wave mean-field approximation (see Methods for details).

To qualitatively understand why the activity dies out in a specific part of the parameter space of a finite tree, we define the returning probability R. It corresponds to the probability that an active site A stimulates a given quiescent neighbor B and receives back the stimulus at later times after performing a complete cycle (i.e., after going through the refractory (sA = 2) and the quiescent (sA = 0) states). A necessary (but not sufficient) condition for a network without loops to exhibit a stable self-sustained state (and consequently a phase transition) is that R ≠ 0. A nonzero returning probability is necessary for the persistence of the self-sustained state because otherwise the activity ceases after a few time steps due to collisions of the excitable waves with the boundaries (layer G) and with one another. The Methods section illustrates the fastest example of a successful process of returning activity, which requires at least three time steps, and extends the calculation for arbitrarily long two-site processes. Figure 2b shows R(pλ, pδ), in which a transition similar to that of Fig. 2a is observed. A comparison between them confirms that R ≠ 0 is a necessary but not sufficient condition for an active phase to emerge.
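The fastest returning process can be counted explicitly. As a sketch (not a formula quoted from the Methods), consider only the two sites A and B, with h = 0 and no other neighbors involved, and write $R_3$ (a label introduced here for illustration) for the probability of the three-step sequence: A active and B quiescent at time t; B excited by A while A becomes refractory at t + 1; B still active while A becomes quiescent at t + 2; A excited by B at t + 3.

$$R_3 = \underbrace{p_\lambda}_{A \to B}\;\underbrace{p_\delta}_{A:\,1 \to 2}\;\underbrace{(1 - p_\delta)}_{B \text{ stays active}}\;\underbrace{p_\gamma}_{A:\,2 \to 0}\;\underbrace{p_\lambda}_{B \to A} = p_\lambda^{2}\, p_\delta\,(1 - p_\delta)\, p_\gamma .$$

Under these assumptions the factor (1 − pδ) makes the contribution vanish for pδ = 1: a spike of deterministic duration has ended before the site that triggered it is quiescent again.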

Another analytical approach to shed light on the results is to employ one of the many mean-field approximations available in the statistical physics literature29. We have previously developed an excitable-wave (EW) mean-field approximation that focuses on the wave propagation direction, making it particularly suitable for excitable waves on a tree38. In the Methods section we describe a generalized version of the approximation to account for non-stereotyped dendritic spikes. As Fig. 2c shows, the phase transition observed in the simulations can be qualitatively described by the generalized EW (GEW) mean-field approximation.

Neuronal response to external input

From here on, we focus on the more relevant scenario in which a neuron has to somehow cope with information arriving stochastically at its thousands of synapses. We make the simplest assumption that, at each branchlet, the average excess of incoming excitatory post-synaptic potentials (EPSPs), as compared to inhibitory post-synaptic potentials (IPSPs), can be modeled by an independent Poisson process with rate h. We study the response function F(h) (averaged over T = $10^{4}$ time steps and five realizations) and its dependence on model parameters.

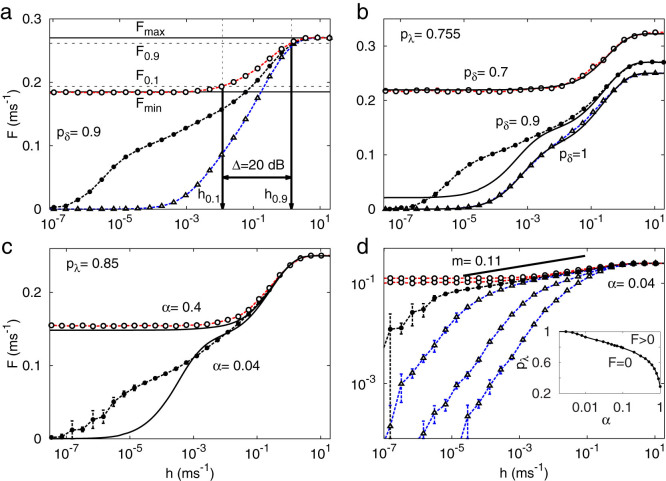

Figure 3a depicts three response functions F(h; pλ) for a fixed pδ, exemplifying one response function of each kind: subcritical (triangles, blue), critical (closed circles, black) and supercritical (open circles, red). Alternatively, for a fixed pλ, the critical line can also be crossed by varying pδ, as depicted by Fig. 3b. In this case, the maximum firing rate displays its dependence on pδ: $F_{\max} = (1 + 3p_\delta)^{-1}$.
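One way to see where this expression can come from is to consider the cycle of a single site under saturating input. As a sketch, assume that a quiescent site is re-excited at the very next time step, that the active state lasts $1/p_\delta$ steps on average, and that the refractory state lasts $1/p_\gamma = 2$ steps on average. The long-run fraction of time spent in the active state is then

$$F_{\max} = \frac{1/p_\delta}{1/p_\delta + 1/p_\gamma + 1} = \frac{1/p_\delta}{1/p_\delta + 3} = \frac{1}{1 + 3p_\delta}, \qquad p_\gamma = \tfrac{1}{2}.$$

For example, pδ = 1 gives $F_{\max}$ = 1/4 and pδ = 0.5 gives $F_{\max}$ = 0.4.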

Figure 3. Response functions and phase diagram.

(a), Response curves F(h) for pλ = 0.5, 0.755, 1 (from right to left). Horizontal lines and vertical arrows show the relevant parameters for calculating the dynamic range Δ (see Eq. (2)). (b), Response curves dependence on pδ for homogeneous distribution $p_\delta^{g} = p_\delta$. Symbols connected by dashed lines represent simulation results whereas continuous lines represent the generalized excitable-wave (GEW) mean-field approximation. (c) and (d), Response curves dependence on g, i.e., $p_\delta^{g} = 1 - \alpha g/G$. (d), Family of response curves for pλ = 0.4, 0.6, 0.8, 0.85, 0.9, 1 (from right to left). Solid line represents a power law with exponent m = 0.11, which serves as a guide to the eye for the simulation results for the critical value pλ = 0.85. Inset: phase diagram. Tree size is G = 10 for all panels.

For some classes of dendrites, it can be more realistic to employ our heterogeneous model, with α > 0. We consider α varying in a logarithmic range of several decades because the phase transition already occurs for $\alpha \ll 1$. In this instance, the phase transition is controlled by both pλ and α, giving rise to a critical curve in parameter space, as depicted in the inset of Fig. 3d. Analogously to the homogeneous case, for fixed pλ the transition depends on α, as depicted in Fig. 3c. For both homogeneous and heterogeneous models (solid lines of Figs. 3b and c), the GEW mean-field approximation captures particularly well the behavior of both sub- and supercritical curves but fails to capture the strong amplification of the critical curves under weak external driving. As shown in the family of curves for fixed α displayed in Fig. 3d, the critical curve has a very small exponent ($m \simeq 0.11$), which cannot be correctly described by mean-field approximations. This exponent m is similar to a Stevens psychophysical response exponent31 observed at the single-neuron scale37, as had been previously noticed at the level of a neuronal ensemble14,57,58.

Excitable networks have a recognized ability to compress several decades of input rate in a single decade of output rate14,36,37,38,39,40,57. This information processing capacity emerges exclusively from local interactions between the excitable elements. A large dynamic range is robust, being also obtained for models with increasing levels of biophysical realism, such as networks of FitzHugh-Nagumo and Hodgkin-Huxley elements57,59, as well as more sophisticated models based on detailed anatomical information of the retina56,60.

The definition of dynamic range is illustrated in Fig. 3a (see Ref. 14). We start by neglecting the regions of the response curves which are close to the detection threshold Fmin or the saturation level Fmax. According to a conventional definition, only the interval between F0.1 and F0.9 (arbitrarily defined within 10% of the plateau levels) properly codes the input. Those firing rates correspond to external driving with rates h0.1 and h0.9. The dynamic range is the range (measured in decibels) between h0.1 and h0.9, i.e.,

$$\Delta = 10 \log_{10}\!\left(\frac{h_{0.9}}{h_{0.1}}\right). \qquad (2)$$
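The dynamic range can be estimated from a sampled response curve as follows. This is a minimal sketch under stated assumptions: the curve is monotonically increasing, the first and last samples are taken as estimates of Fmin and Fmax, the levels are defined as $F_x = F_{\min} + x\,(F_{\max} - F_{\min})$, and the corresponding inputs are found by linear interpolation in log10(h). The function name and the test curve are hypothetical.

```python
import numpy as np

def dynamic_range(h, F, low=0.1, high=0.9):
    """Dynamic range in dB of a monotonically increasing response curve F(h)."""
    h = np.asarray(h, dtype=float)
    F = np.asarray(F, dtype=float)

    # Plateau levels estimated from the ends of the sampled curve (assumption).
    F_min, F_max = F[0], F[-1]
    F_low = F_min + low * (F_max - F_min)
    F_high = F_min + high * (F_max - F_min)

    # Invert F(h) by interpolating log10(h) as a function of F.
    log_h = np.log10(h)
    log_h_low = np.interp(F_low, F, log_h)
    log_h_high = np.interp(F_high, F, log_h)

    # 10 * log10(h_high / h_low), written as a difference of logarithms.
    return 10.0 * (log_h_high - log_h_low)

# Illustrative saturating curve F = h / (1 + h), for which
# h_0.1 = 1/9 and h_0.9 = 9, so the dynamic range is 10 * log10(81) dB.
h = np.logspace(-6, 6, 1201)
delta = dynamic_range(h, h / (1.0 + h))
```

The test curve is a simple saturating function with no amplification of weak inputs, so it yields about 19 dB; curves that rise more slowly with h span more decades of input between the two levels and therefore give larger values.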

The phase transition is an important feature for signal compression. Comparing the different regimes, as depicted for instance in Fig. 3a, a simple reasoning allows one to understand why the critical curve optimizes the compressing capacity14. The subcritical curves cannot amplify small external stimuli, since the activity from incoming pulses vanishes exponentially fast in space and time. At the other extreme, for supercritical curves, almost any pulse initiates the self-sustained network activity for arbitrarily long time periods, masking the response to weak stimuli. However, when the network has the correct critical parameters, the spontaneous network activity is composed of neuronal avalanches launched by spontaneous fluctuations of the system. In the presence of an external field h, such avalanches add and superpose, creating the response function F(h) (analogous to magnetization for non-zero fields in magnetic systems). In this case, the network dynamic range is optimized.

Since phase transitions are properly defined only for infinite systems, we have investigated the effects of system size in our model. It turns out that, even for moderately large systems (e.g. G = 10, as in Figs. 2 and 3), self-sustained activity survives for a period of time which is much longer than any relevant characteristic time for neuronal processing (T ~ $10^{4}$ time steps ~ 10 seconds), which is the operational marker that we have employed for the transition. Its occurrence is associated with the peaks in dynamic range shown in Fig. 4a.

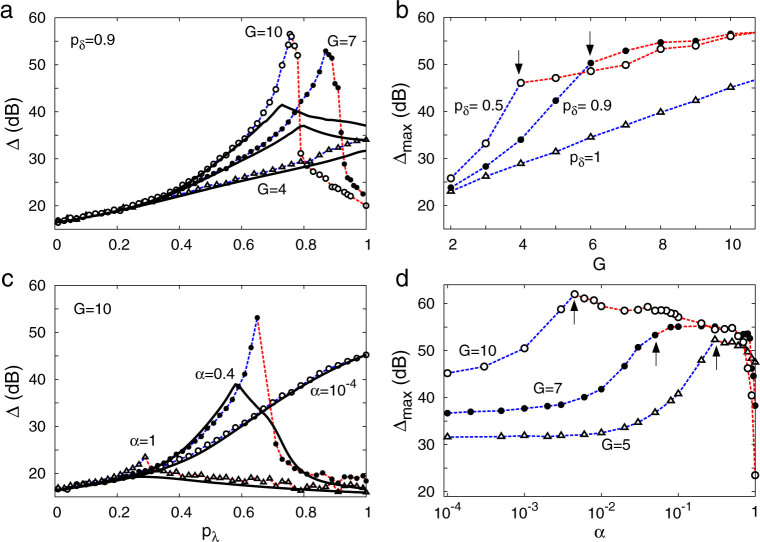

Figure 4. Dynamic range Δ.

(a) and (b), for homogeneous distribution of pδ. (c) and (d), for layer-dependent distributions: $p_\delta^{g} = 1 - \alpha g/G$. (b) and (d) depict Δmax, which is the maximum of the Δ(pλ) curve. In each curve, phase transitions occur only at and to the right of the arrows. Symbols connected by dashed lines represent simulation results whereas continuous lines represent the generalized excitable-wave (GEW) mean-field approximation.

In small trees, activity dies out rapidly due to wave collisions with the boundaries, preventing self-sustained activity. Taking for example the case of G = 4 in Fig. 4a, the maximum Δmax (of the Δ vs. pλ curve) occurs at pλ = 1, meaning that finite-size effects mask the (infinite-size) phase transition. Larger trees, in contrast, can exhibit Δmax = Δ(pλc) for weaker coupling, revealing the presence of a phase transition at pλc(G) < 1. The larger the tree, the smaller pλc(G) (Fig. 4a) and the larger Δmax (Fig. 4b).

As presented in Fig. 2, pδ = 1 prevents an active state. However, for pδ < 1 a phase transition to an active state occurs in large enough trees. The black arrows in Fig. 4b represent the minimum tree size that gives rise to our operationally defined transition for pδ = 0.5 (G = 4), and pδ = 0.9 (G = 6).

The optimization of the dynamic range at the critical value (Fig. 4c), and the growth of the maximum dynamic range with the tree size (Fig. 4d) are also observed in the model with non-uniform spike duration. In this more realistic model version, the phase transition vanishes for α ~ 0, as depicted in the inset of Fig. 3d, since in such case the spike duration is deterministic across the tree. Increasing α from zero, Δmax(α) grows up to a maximum around the region where the phase transition appears (represented by arrows in Fig. 4d). As shown in Fig. 4c, larger values of α lead to a phase transition with smaller critical coupling pλc. On the other hand, as depicted in Fig. 4d, the Δmax(α) curves show plateaus with heights and widths that both increase with tree size. This suggests that large active dendritic trees with non-uniform spike duration lead to a large dynamic range, a result whose robustness is attested by the width of the plateaus. In particular, the difference in dynamic range for an optimized α compared with the α = 0 case is remarkable, attaining about 20 dB for G = 5 (Fig. 4d).

Discussion

We have shown that the idea of a critical network of excitable elements being able to optimally process incoming stimuli can be applied at the subcellular (dendritic) scale. Instead of a network of excitable point-like neurons connected by synapses, here we have shown that electrically connected excitable dendritic branchlets can cause a dendritic arbor of a single neuron to undergo a phase transition. The key ingredient for this scenario is stochasticity in the duration of dendritic spikes.

Several experiments indicate that a dendritic spike at a distal site is usually not strong enough to trigger a somatic action potential55. This suggests that real dendrites ought not typically show deterministic signal propagation (that is, pλ < 1). In the present model, taking for instance a dendrite with tree size G = 10 and probability of activity propagation pλ = 0.5, the probability for a most distal dendritic spike to propagate all the way to the proximal site and potentially generate a spike is very small: $p_\lambda^{G} = 0.5^{10} \approx 10^{-3}$. Thus, the model is in accordance with experimental wisdom. Remarkably, whenever the dendritic parameters lie near the silent/active phase transition, our results show that non-reliable dendritic spike propagation is compatible with an optimal dynamic range.

We have examined the implications of variable dendritic spikes (non-homogeneous and non-deterministic) for dendritic computation. We have shown that a necessary condition for a phase transition to occur in active dendrites is that the returning probability R be nonzero, which in turn can only occur with variable dendritic duration (pδ < 1). In an attempt to incorporate more details on dendritic spikes, we have also modeled different spatial functions of average spike duration, and showed that our main results are robust in that parameter space.

While the variable-duration criterion must be satisfied to give rise to a critical neuron, it does not play such a crucial role in giving rise to a critical neuronal network43. The key difference between the two spatial scales is the topology: due to inhibitory signaling mechanisms during growth61, a dendritic tree contains no loops, whereas in neuronal networks they abound. The possibility of criticality at both scales naturally raises the question: if neurons can be critical, why should we need critical networks? We propose that the answer depends on the type of neuronal computation and the scale at which it occurs. In the cases where the processing is distributed among a large number of neurons with small dendritic trees, it is plausible to look at point-like neurons as the basic units of a larger system which, via balanced synapses, can tune itself to a collective critical regime. By contrast, single-neuron criticality could play a computational role in cases where neurons have extended dendritic trees and therefore must cope with large variations of synaptic input (such as olfactory mitral cells or cerebellar Purkinje cells, for instance). Clearly, in principle nothing prevents the mechanisms at both scales from acting together.

The input-output response function of a critical neuron amplifies small-intensity inputs while preventing early saturation when subjected to large-intensity inputs. The response function follows a slowly increasing power law, F ~ h^m, in which m is a rather small exponent, as shown in Fig. 3d. Such a slowly increasing function could easily be confounded with a logarithmic (Weber-Fechner psychophysical) law31,57. These results strengthen previous suggestions that a system poised at a critical point is a natural candidate to explain how Stevens' power-law exponents m < 1 emerge14.
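To illustrate why a power law with a small exponent is hard to tell apart from a logarithm, the following sketch fits a straight line in log10(h) to F = h^m over four decades of input and reports the quality of the fit. The exponent m = 0.1, the input range, and the function name `log_fit_r2` are hypothetical choices made for illustration only; they are not values taken from the model.

```python
import math

def log_fit_r2(m, h_min=1e-4, h_max=1.0, n=50):
    """R^2 of the least-squares fit F = a + b*log10(h) to the power law F = h**m."""
    # Sample the input h evenly on a logarithmic axis.
    x_lo, x_hi = math.log10(h_min), math.log10(h_max)
    xs = [x_lo + i * (x_hi - x_lo) / (n - 1) for i in range(n)]
    ys = [(10.0 ** x) ** m for x in xs]
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    syy = sum((y - y_mean) ** 2 for y in ys)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    # For a straight-line fit, R^2 equals the squared correlation coefficient.
    return sxy ** 2 / (sxx * syy)

if __name__ == "__main__":
    for m in (0.1, 0.5, 1.0):
        print(f"m = {m}: R^2 of logarithmic fit = {log_fit_r2(m):.3f}")
```

Under these assumptions, the logarithmic fit is nearly perfect for the small exponent and degrades as m grows, which is the sense in which the two laws can be confounded.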

Our simple model yields similar results to those obtained with detailed compartmental modeling of morphologically reconstructed dendrites: the maximum dynamic range a dendritic arbor can attain grows with the tree size56. This supports the previous proposal that neurons with large dendritic arbors might have grown to attain such impressive size and complexity in order to enhance their capacity to distinguish the amount of external driving37,38.
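As a concrete illustration of the quantity discussed here, the sketch below computes a dynamic range from a response curve, assuming the definition commonly used for excitable media (e.g. ref. 14): Δ = 10 log10(h0.9/h0.1), where h0.1 and h0.9 are the inputs that produce 10% and 90% of the response interval. The saturating response `toy_response` is a hypothetical stand-in, not the response of the dendritic model, and all function names are illustrative.

```python
import math

def input_for_response(response, target, h_lo=1e-12, h_hi=1e12, iters=200):
    """Input h at which a monotonically increasing response reaches `target` (bisection in log10 h)."""
    lo, hi = math.log10(h_lo), math.log10(h_hi)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if response(10.0 ** mid) < target:
            lo = mid
        else:
            hi = mid
    return 10.0 ** (0.5 * (lo + hi))

def dynamic_range(response, f_min, f_max):
    """Dynamic range in dB: 10*log10(h_0.9 / h_0.1)."""
    f10 = f_min + 0.1 * (f_max - f_min)
    f90 = f_min + 0.9 * (f_max - f_min)
    h10 = input_for_response(response, f10)
    h90 = input_for_response(response, f90)
    return 10.0 * math.log10(h90 / h10)

def toy_response(m):
    """Hypothetical saturating response that behaves as h**m for small h."""
    return lambda h: h ** m / (1.0 + h ** m)

if __name__ == "__main__":
    for m in (1.0, 0.5, 0.25):
        print(f"m = {m}: dynamic range = {dynamic_range(toy_response(m), 0.0, 1.0):.2f} dB")
```

For this toy curve the dynamic range scales as 1/m, so a smaller low-input exponent yields a wider range of distinguishable stimulus intensities.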

Owing to our irresistible tendency to compare brain processes with whichever technology happens to be dominant at the time, the term dendritic computation is typically associated with symbolic, digital-like information processing21. However, dendritic trees are living tissues that change all the time, growing and retracting branchlets, spines and synapses. For example, 30% of spine surface retracts in hippocampal neurons over the rat estrous cycle62. This, we believe, is hardly compatible with a fixed circuitry implementing, say, logic gates. In contrast, our framework suggests that dendritic arbors perform a robust analog computation, which is resilient to disturbances of tree properties. For instance, pruning half of the tree corresponds to changing from G to G − 1, which amounts to a small decrease in the dynamic range.
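The correspondence between halving the tree and removing one generation can be checked with a short count. The sketch assumes a tree in which every site has k daughters from generation 0 down to generation G (k = 2 for a binary tree); the function name `tree_size` is illustrative.

```python
def tree_size(G, k=2):
    """Number of branchlets in a tree with generations 0..G and k daughters per site."""
    return sum(k ** g for g in range(G + 1))

if __name__ == "__main__":
    for G in (5, 10, 15):
        ratio = tree_size(G - 1) / tree_size(G)
        print(f"G = {G}: N(G) = {tree_size(G)}, N(G - 1)/N(G) = {ratio:.4f}")
```

Under this assumption the ratio N(G − 1)/N(G) approaches 1/2 for a binary tree, so losing half of the branchlets is equivalent to losing a single generation.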

In contrast to physical systems, biological systems can approach the critical state through homeostatic mechanisms33,63. This self-organized tuning is essential for processing information over a large range of stimulus intensities. We believe that critical neurons do indeed exist, but experimental limitations or lack of a clear theoretical framework possibly prevented spatio-temporal neuronal criticality from having been reported in the literature to date. It is important to emphasize, however, that our model focusses on a regime dominated by the active propagation of activity (dendritic spikes). Whether or not a phase transition occurs in a regime where active as well as passive mechanisms coexist remains untested and should be investigated in future models and experiments.

Experimental confirmation of single-neuron criticality requires spatial and temporal recording resolutions at the edge of currently available techniques. Several lines of evidence already suggest critical phenomena at the neuron level. Long-range correlations in inter-spike intervals, which may originate from a critical neuron, have been reported in vivo in human neurons from hippocampus64 and amygdala65, as well as in neurons from leech ganglia and rat hippocampus66. Furthermore, neglecting the extensive spatial features of neurons, criticality in neuronal excitability has recently been proposed35.

Signs of a self-sustained state could be associated with patterns of dendritic activation: spikes, plateaus, bursts, and oscillations41. Evidently, dendritic trees can also be on the other side of the transition, showing a silent or rest state. Our model predicts a continuous phase transition so that criticality lies between such subcritical (silent) and supercritical (self-sustained active) regimes. We speculate that adaptation and homeostatic mechanisms, similar to those discussed in Refs. 33, 63, could finely tune a self-organized critical state (or more precisely, a self-organized quasi-critical state63). Such homeostatic mechanisms, conceivably present at the dendritic level, will be presented in a forthcoming paper.

On the computational side, our cellular automaton model can be generalized so that each branchlet is modeled by a detailed biophysical compartment with a plethora of ion channels56, coupled with (noisy) axial resistances. However, as in the proverbial tree that prevents us from seeing the forest, we must be aware that detailed biophysical modeling may keep us from detecting phase transitions if the model has few compartments. Our statistical physics model, with simple excitable units connected in a large dendritic network, enables us to see the forest.

Methods

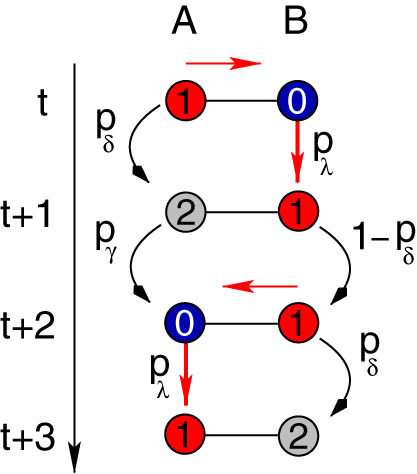

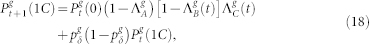

Returning probability calculation

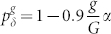

The returning probability R > 0 is a necessary condition for a phase transition. For the phase transition between quiescent and self-sustained states to occur, the system must be able to sustain activity for arbitrarily long times. In a finite network without loops, such as a Cayley tree, this condition is only satisfied if the activity can go back and forth between two neighboring sites, say A and B, as illustrated in Fig. 5. Otherwise, in the absence of external driving, the system must go to the rest state (regardless of the initial condition) after a maximum of 2G + 1 time steps.
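The 2G + 1 bound can be illustrated with a minimal simulation of the three-state automaton (0 quiescent, 1 active, 2 refractory) on a tree without external drive. This is a sketch under stated assumptions, not the simulation code used for the figures: updates are synchronous, the coupling p_lambda is taken to be the same in both directions, the initial condition is a single active site in the last generation, and all function names are hypothetical.

```python
import random

def build_tree(G, k=2):
    """Adjacency lists of a rooted tree with generations 0..G and k daughters per site."""
    neighbors = [[]]                  # site 0 is the root (generation 0)
    frontier = [0]
    for _generation in range(G):
        new_frontier = []
        for mother in frontier:
            for _branch in range(k):
                daughter = len(neighbors)
                neighbors.append([mother])
                neighbors[mother].append(daughter)
                new_frontier.append(daughter)
        frontier = new_frontier
    return neighbors

def step(state, neighbors, p_lambda, p_delta, p_gamma, rng):
    """One synchronous update of all sites."""
    new_state = list(state)
    for site, s in enumerate(state):
        if s == 1:                    # active -> refractory with probability p_delta
            if rng.random() < p_delta:
                new_state[site] = 2
        elif s == 2:                  # refractory -> quiescent with probability p_gamma
            if rng.random() < p_gamma:
                new_state[site] = 0
        else:                         # quiescent: each active neighbor excites with p_lambda
            for nb in neighbors[site]:
                if state[nb] == 1 and rng.random() < p_lambda:
                    new_state[site] = 1
                    break
    return new_state

def extinction_time(G, p_lambda, p_delta, p_gamma, k=2, max_steps=10_000, seed=0):
    """First time step at which no site is active, starting from one active distal site."""
    rng = random.Random(seed)
    neighbors = build_tree(G, k)
    state = [0] * len(neighbors)
    state[-1] = 1                     # the last site created belongs to generation G
    for t in range(1, max_steps + 1):
        state = step(state, neighbors, p_lambda, p_delta, p_gamma, rng)
        if 1 not in state:
            return t
    return None                       # activity still present after max_steps

if __name__ == "__main__":
    for G in (2, 4, 6):
        t = extinction_time(G, p_lambda=1.0, p_delta=1.0, p_gamma=1.0)
        print(f"G = {G}: activity extinct at step {t} (bound 2G + 1 = {2 * G + 1})")
```

With p_delta = 1 every spike lasts exactly one time step, so the wave can only move away from its origin and dies once it has crossed the longest path of the tree. Setting p_delta < 1 in the same sketch allows activity to bounce between neighbors and outlast the bound.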

Figure 5. Example of returning activity.

An active site A stimulates its neighbor B (red arrow) and receives back the activity three time steps later. This process involves a series of intermediate steps. Site A must pass through the refractory (sA(t + 1) = 2) and quiescent (sA(t + 2) = 0) states before becoming active (sA(t + 3) = 1) again. Moreover, site B must be kept in the active state (sB(t + 1) = 1) for at least one time step (sB(t + 2) = 1) in order to be able to excite back the susceptible A site (sA(t + 2) = 0). Therefore a nonzero persistence probability (1 − pδ > 0) is fundamental for a nonzero returning probability (R > 0).

It is possible to calculate the returning probability between two neighboring sites that obey the cyclic cellular automaton rules. The example of Fig. 5 can be computed directly. Considering the initial condition at time t, sA(t) = 1 and sB(t) = 0, there is a unique possible path for the excitable wave to go back and forth in three time steps. Notice that, by construction, site A must complete the cycle (i.e., it must go through states sA = 2 and sA = 0), since we have excluded the trivial solution in which site A simply remains active from t to t + 3. The final configuration sA(t + 3) = 1 and sB(t + 3) = 2 is therefore reached with probability pδpγpλ · pλ(1 − pδ)pδ, where pδpγpλ is the probability that site A goes through the whole cycle, and pλ(1 − pδ)pδ is the probability that site B receives the input, remains active, and finally becomes refractory.
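The probability of the three-step path of Fig. 5 is simply the product of the two factors named in the text. A minimal sketch, with an illustrative function name:

```python
def three_step_return_probability(p_delta, p_gamma, p_lambda):
    """Probability of the shortest back-and-forth path between sites A and B (Fig. 5)."""
    # Site A: active -> refractory (p_delta), refractory -> quiescent (p_gamma),
    # then excited again by site B (p_lambda).
    prob_site_a = p_delta * p_gamma * p_lambda
    # Site B: excited by A (p_lambda), stays active one extra step (1 - p_delta),
    # then becomes refractory (p_delta).
    prob_site_b = p_lambda * (1.0 - p_delta) * p_delta
    return prob_site_a * prob_site_b

if __name__ == "__main__":
    print(three_step_return_probability(0.5, 0.5, 0.5))   # 0.015625
    print(three_step_return_probability(1.0, 0.5, 0.5))   # 0.0: no persistence, no return
```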

This example is the simplest one. In general, however, there are infinitely many possibilities that must be included in a full calculation of R. The configuration sA = 2 and sB = 1, which was depicted at time t + 1 in Fig. 5, could also have been found at later times (t + 2, t + 3 and so on), leading to the identical final configuration sA = 1 and sB = 2. The calculation of the returning probability which accounts for all the possible intermediate steps, considering that site B has just been activated (sB(t + 1) = 1), involves three sums of infinite geometric series, S1, S2 and S3. Site A can spend arbitrarily long periods active (∝ S1), refractory (∝ S2), or quiescent (∝ S3) and nevertheless receive back the excitable wave, given that the pivot site B remains active in the meantime. Finally, without loss of generality, we can ignore the final state of site B to find the returning probability after infinitely many time steps:

|

where

|

|

|
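The sum over all waiting times can be sketched numerically. The code below assumes synchronous updates in which, at every time step, an active site turns refractory with probability pδ, a refractory site turns quiescent with probability pγ, and a quiescent site A is excited by an active site B with probability pλ. It conditions on site B having just been activated and ignores the final state of B. The closed form is derived here under these assumptions, as a product of three geometric series, and is not copied from Eqs. (3) to (6); the function names are illustrative.

```python
def returning_probability(p_delta, p_gamma, p_lambda):
    """Closed form from three geometric series (assumed update rules, see lead-in)."""
    if not 0.0 < p_delta <= 1.0:
        raise ValueError("p_delta must lie in (0, 1]")
    stay = 1.0 - p_delta                          # pivot site B remains active one more step
    s1 = 1.0 / (1.0 - stay * stay)                # A lingers active while B stays active
    s2 = 1.0 / (1.0 - stay * (1.0 - p_gamma))     # A lingers refractory while B stays active
    s3 = 1.0 / (1.0 - stay * (1.0 - p_lambda))    # A lingers quiescent while B stays active
    return p_delta * p_gamma * p_lambda * stay * s1 * s2 * s3

def returning_probability_iterated(p_delta, p_gamma, p_lambda, steps=2000):
    """Same quantity, obtained by propagating the distribution of site A step by step."""
    stay = 1.0 - p_delta
    # State of A at the moment B has just been activated.
    active, refractory, quiescent = 1.0 - p_delta, p_delta, 0.0
    returned = 0.0
    for _ in range(steps):
        returned += quiescent * p_lambda          # B (still active) re-excites A
        active, refractory, quiescent = (
            stay * (1.0 - p_delta) * active,
            stay * (p_delta * active + (1.0 - p_gamma) * refractory),
            stay * (p_gamma * refractory + (1.0 - p_lambda) * quiescent),
        )
    return returned

if __name__ == "__main__":
    args = (0.3, 0.6, 0.8)
    print(returning_probability(*args), returning_probability_iterated(*args))
```

Both routes agree, and the result vanishes for pδ = 1, consistent with the requirement that a nonzero persistence probability is needed for R > 0.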

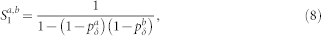

Equations (3)–(6) were used in Fig. 2b. However, this result can also be extended to account for the heterogeneous case with pδ belonging to different layers (a and b). The returning probability Ra,b turns out to be

|

where

|

|

|

Generalized excitable-wave mean-field approximation

This approximation generalizes the recently proposed excitable-wave (EW) mean-field calculation38. Here, the scope of the approximation is enlarged to account for non-stereotyped dendritic spikes with probabilistic spike duration (pδ ≤ 1) and non-homogeneous spatial distributions (pδ(g)). This is a single-site mean-field approximation, but it keeps track of the excitable-wave direction of propagation. Remarkably, the system response is better captured by this approximation than by the traditional two-site mean-field approximation38.

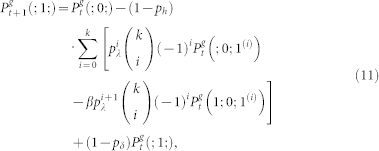

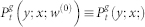

First we explain the notation and then recall the system master equations. Assuming that at time t a site at generation g is in state x; its mother site at generation g − 1 is in state y; and i (j) of its daughter branches at generation g + 1 are in state z (w) etc., the joint probability of this configuration is written as $P_t^g(y; x; z^{(i)}, w^{(j)}, \ldots)$. We also employ the usual normalization conditions. Thus, the master equations for arbitrary coordination number z = k + 1 and layers 0 < g < G are given by38:

|

|

|

where a term with two state arguments denotes a two-site joint probability. For simplicity, we also drop redundant separators (;), so that $P_t^g(x)$ stands for $P_t^g(;x;)$, which is the probability of finding at time t a site at generation g in state x (regardless of its neighbors).

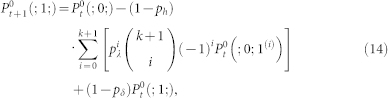

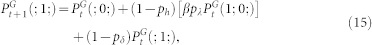

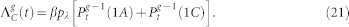

Equations controlling the active state for sites belonging to the first (g = 0) and last (g = G) layer can be obtained from straightforward modifications of Eq. (11), yielding:

|

|

while equations controlling the refractory (12) and quiescent (13) states remain unchanged. The full description of the dynamics requires higher-order terms (infinitely many in the limit G → ∞), but, as we show below, Eqs. (11)–(13) are enough for the GEW approximation.

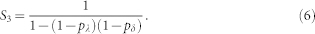

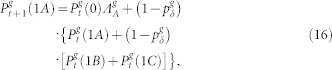

The rationale for the EW approximation (restricted to pδ = 1) is simple38: in an excitable tree, activity is decomposed into forward- and backward-propagating excitable waves. We separate (for g > 0) the active state (1) into three different active states: (1A), (1B), and (1C), as represented in Fig. 6. The density of state (1A) (black) corresponds to active sites which received an external input and thus generate excitable waves propagating both forwards and backwards. The density of state (1B) (green) corresponds to active sites which received only forward-propagating input. Finally, the density of state (1C) (yellow) corresponds to active sites which received only backward-propagating input.

Figure 6. Generalized excitable-wave mean-field approximation.

Top-left panel illustrates the dynamics of each node and of layer g = 0 under the GEW mean-field approximation. Top-right panel illustrates the dynamics of further layers under the GEW mean-field approximation. Bottom panel shows the excitable-waves direction of propagation. Notice that in the absence of purple arrows the flux vanishes in the state (1C) of the last layer. The purple arrows represent transitions that occur at each time step with probability (1 − pδ)^2 (see text for details). This generalization allows a qualitative description of the system for pδ < 1.

For a correct description of the general case, pδ ≤ 1, we need to introduce loops into the topology, as represented by the purple arrows of Fig. 6. First, we assume that with probability 1 − pδ an active site remains active for the next time step. Second, among those sites that remained active, we consider that a fraction 1 − pδ of them can jump to state (1A), and therefore contribute with both forward- and backward-propagating excitable waves. This last step mimics the action of the returning activity, since state (1A) is a required intermediate step to complete a back-and-forth movement of the excitable wave under the GEW mean-field approximation.
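The two assumptions above fix how the density of an active state is split at each time step. The sketch below tabulates the three outcomes; it assumes that active sites which neither turn refractory nor jump to (1A) stay in their current active state, which is an interpretation of the text, and the function name is illustrative.

```python
def active_site_fates(p_delta):
    """Per-step probabilities for an active site under the two GEW assumptions."""
    stays_active = 1.0 - p_delta
    return {
        "becomes_refractory": p_delta,
        # Assumed: the remaining active sites keep their current active state.
        "stays_in_same_active_state": stays_active * p_delta,
        # Purple arrows of Fig. 6: probability (1 - p_delta)^2.
        "jumps_to_1A": stays_active * stays_active,
    }

if __name__ == "__main__":
    fates = active_site_fates(0.5)
    print(fates, sum(fates.values()))
```

For pδ = 1 the jump probability vanishes and the original EW picture without loops is recovered.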

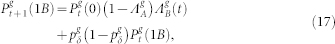

Following these ideas, and applying the usual mean-field approximations38, one can write the equations for the g > 0 layers as

|

|

|

where the excitation probabilities are given by

|

|

|

Equations (12) and (13) remain unchanged, with the density of the active state (1) given by the sum of the densities of states (1A), (1B), and (1C). The dynamics of the most distal layer g = G is obtained by fixing  . The proximal element (g = 0) has a simpler dynamics since it does not receive backpropagating waves, so its activity is simply governed by

|

with

|

Taking into account the normalization conditions, the dimensionality of the map resulting from the GEW approximation is the same as for the EW approximation38: 4(G − 1) + 5. Numerical solutions of this map with β = 1 lead to the solid curves in Figs. 3 and 4.

Author Contributions

Conceived and designed the simulations: L.L.G., O.K. and M.C. Performed the simulations and analyzed results: L.L.G. Contributed to analysis: O.K. and M.C. Wrote the paper: L.L.G., O.K. and M.C.

Acknowledgments

We are thankful to James A. Roberts for a careful reading of the manuscript. The authors acknowledge financial support from Brazilian agency CNPq. LLG and MC have also been supported by FACEPE, CAPES and special programs PRONEX. MC has received financial support from PRONEM, and OK and MC have been supported by CNAIPS-USP. This research was also supported by a grant from MEC (Spain) and FEDER under project FIS2007-60327 (FISICOS) to LLG.

References

- Chialvo D. R. Emergent complex neural dynamics. Nat Phys 6, 744–750 (2010).

- Sornette D. Critical Phenomena in Natural Sciences (Springer, Berlin, 2000).

- Beggs J. M. & Plenz D. Neuronal avalanches in neocortical circuits. J Neurosci 23, 11167–11177 (2003).

- Beggs J. M. & Plenz D. Neuronal avalanches are diverse and precise activity patterns that are stable for many hours in cortical slice cultures. J Neurosci 24, 5216–5229 (2004).

- Friedman N. et al. Universal critical dynamics in high resolution neuronal avalanche data. Phys Rev Lett 108, 208102 (2012).

- Gireesh E. D. & Plenz D. Neuronal avalanches organize as nested theta- and beta/gamma-oscillations during development of cortical layer 2/3. P Natl Acad Sci USA 105, 7576–7581 (2008).

- Petermann T. et al. Spontaneous cortical activity in awake monkeys composed of neuronal avalanches. P Natl Acad Sci USA 106, 15921–15926 (2009).

- Ribeiro T. L. et al. Spike avalanches exhibit universal dynamics across the sleep-wake cycle. PLoS ONE 5, e14129 (2010).

- Miller K. J., Sorensen L. B., Ojemann J. G. & Den Nijs M. Power-law scaling in the brain surface electric potential. PLoS Comput Biol 5, e1000609 (2009).

- Linkenkaer-Hansen K., Nikouline V. V., Palva J. M. & Ilmoniemi R. J. Long-range temporal correlations and scaling behavior in human brain oscillations. J Neurosci 21, 1370–7 (2001).

- Kitzbichler M. G., Smith M. L., Christensen S. R. & Bullmore E. Broadband criticality of human brain network synchronization. PLoS Comput Biol 5, e1000314 (2009).

- Eguiluz V. M., Chialvo D. R., Cecchi G. A., Baliki M. & Apkarian A. V. Scale-free brain functional networks. Phys Rev Lett 94, 018102 (2005).

- Sporns O. Networks of the Brain (MIT Press, 2010).

- Kinouchi O. & Copelli M. Optimal dynamical range of excitable networks at criticality. Nat Phys 2, 348–351 (2006).

- Shew W., Yang H., Petermann T., Roy R. & Plenz D. Neuronal avalanches imply maximum dynamic range in cortical networks at criticality. J Neurosci 29, 15595–15600 (2009).

- Haldeman C. & Beggs J. M. Critical branching captures activity in living neural networks and maximizes the number of metastable states. Phys Rev Lett 94, 058101 (2005).

- Shew W. L., Yang H., Yu S., Roy R. & Plenz D. Information capacity and transmission are maximized in balanced cortical networks with neuronal avalanches. J Neurosci 31, 55–63 (2011).

- Wen Q., Stepanyants A., Elston G. N., Grosberg A. Y. & Chklovskii D. B. Maximization of the connectivity repertoire as a statistical principle governing the shapes of dendritic arbors. P Natl Acad Sci USA 106, 12536–12541 (2009).

- Zomorrodi R., Ferecskó A. S., Kovács K., Kröger H. & Timofeev I. Analysis of morphological features of thalamocortical neurons from the ventroposterolateral nucleus of the cat. J Comp Neurol 518, 3541–3556 (2010).

- Snider J., Pillai A. & Stevens C. F. A universal property of axonal and dendritic arbors. Neuron 66, 45–56 (2010).

- Koch C. Biophysics of Computation (Oxford University Press, New York, 1999).

- Reyes A. Influence of dendritic conductances on the input-output properties of neurons. Annu Rev Neurosci 24, 653–675 (2001).

- Johnston D. & Narayanan R. Active dendrites: colorful wings of the mysterious butterflies. Trends Neurosci 31, 309–316 (2008).

- Coop A. D., Cornelis H. & Santamaria F. Dendritic excitability modulates dendritic information processing in a purkinje cell model. Front Comput Neurosci 4, 10 (2010).

- Rall W. Theoretical significance of dendritic trees for neuronal input-output relations. In Reiss, R. F. (ed.) Neural Theory and Modeling (Stanford Univ. Press, Stanford, CA, 1964).

- Carnevale N. T. & Hines M. L. The NEURON Book (Cambridge University Press, 2009).

- Stuart, G., Spruston, N. & Häusser, M. (eds.) Dendrites (Oxford University Press, New York, 1999).

- London M. & Häusser M. Dendritic computation. Annu Rev Neurosci 28, 503–532 (2005).

- Marro J. & Dickman R. Nonequilibrium Phase Transitions in Lattice Models (Cambridge University Press, Cambridge, 1999).

- Antic S. D., Zhou W.-L., Moore A. R., Short S. M. & Ikonomu K. D. The decade of the dendritic NMDA spike. J Neurosci Res 88, 2991–3001 (2010).

- Stevens S. S. Psychophysics: Introduction to its perceptual, neural, and social prospects (John Wiley and Sons, 1975).

- Bak P. & Chialvo D. R. Adaptive learning by extremal dynamics and negative feedback. Phys Rev E 63, 031912 (2001).

- Levina A., Herrmann J. M. & Geisel T. Dynamical synapses causing self-organized criticality in neural networks. Nat Phys 3, 857–860 (2007).

- Rubinov M., Sporns O., Thivierge J.-P. & Breakspear M. Neurobiologically realistic determinants of self-organized criticality in networks of spiking neurons. PLoS Comput Biol 7, e1002038 (2011).

- Gal A. & Marom S. Self-organized criticality in single neuron excitability. arXiv preprint arXiv:1210.7414 (2012).

- Gollo L. L., Mirasso C. & Eguíluz V. M. Signal integration enhances the dynamic range in neuronal systems. Phys Rev E 85, 040902 (2012).

- Gollo L. L., Kinouchi O. & Copelli M. Active dendrites enhance neuronal dynamic range. PLoS Comput Biol 5, e1000402 (2009).

- Gollo L. L., Kinouchi O. & Copelli M. Statistical physics approach to dendritic computation: The excitable-wave mean-field approximation. Phys Rev E 85, 011911 (2012).

- Assis V. R. V. & Copelli M. Dynamic range of hypercubic stochastic excitable media. Phys Rev E 77, 011923 (2008).

- Larremore D., Shew W. & Restrepo J. Predicting Criticality and Dynamic Range in Complex Networks: Effects of Topology. Phys Rev Lett 106, 058101 (2011).

- Llinás R. & Sugimori M. Electrophysiological properties of in vitro purkinje cell dendrites in mammalian cerebellar slices. J Physiol 305, 197–213 (1980).

- Schiller J., Schiller Y., Stuart G. & Sakmann B. Calcium action potentials restricted to distal apical dendrites of rat neocortical pyramidal neurons. J Physiol 505, 605–616 (1997).

- Manchanda K., Yadav A. C. & Ramaswamy R. Scaling behavior in probabilistic neuronal cellular automata. Phys Rev E 87, 012704 (2013).

- Villacorta-Atienza J. A. & Makarov V. A. Wave-processing of long-scale information by neuronal chains. PLoS ONE 8, e57440 (2013).

- Suzuki T., Kodama S., Hoshino C., Izumi T. & Miyakawa H. A plateau potential mediated by the activation of extrasynaptic NMDA receptors in rat hippocampal CA1 pyramidal neurons. Eur J Neurosci 28, 521–534 (2008).

- Davie J. T., Clark B. A. & Häusser M. The origin of the complex spike in cerebellar purkinje cells. J Neurosci 28, 7599–7609 (2008).

- Major G., Polsky A., Denk W., Schiller J. & Tank D. W. Spatiotemporally graded NMDA spike/plateau potentials in basal dendrites of neocortical pyramidal neurons. J Neurophysiol 99, 2584–2601 (2008).

- Wong R. K. & Prince D. A. Dendritic mechanisms underlying penicillin-induced epileptiform activity. Science 204, 1228–1231 (1979).

- Wong R. K. & Stewart M. Different firing patterns generated in dendrites and somata of CA1 pyramidal neurones in guinea-pig hippocampus. J Physiol 457, 675–687 (1992).

- Kamondi A., Acsády L., Wang X. J. & Buzsáki G. Theta oscillations in somata and dendrites of hippocampal pyramidal cells in vivo: activity-dependent phase-precession of action potentials. Hippocampus 8, 244–261 (1998).

- Remme M. W. H., Lengyel M. & Gutkin B. S. The role of ongoing dendritic oscillations in single-neuron dynamics. PLoS Comput Biol 5, e1000493 (2009).

- Branco T. & Häusser M. The single dendritic branch as a fundamental functional unit in the nervous system. Curr Opin Neurobiol 20, 494–502 (2010).

- Carelli P. V., Reyes M. B., Sartorelli J. C. & Pinto R. D. Whole cell stochastic model reproduces the irregularities found in the membrane potential of bursting neurons. J Neurophysiol 94, 1169–1179 (2005).

- Cannon R. C., O'Donnell C. & Nolan M. F. Stochastic ion channel gating in dendritic neurons: morphology dependence and probabilistic synaptic activation of dendritic spikes. PLoS Comput Biol 6, e1000886 (2010).

- Jarsky T., Roxin A., Kath W. L. & Spruston N. Conditional dendritic spike propagation following distal synaptic activation of hippocampal CA1 pyramidal neurons. Nat Neurosci 8, 1667–1676 (2005).

- Publio R., Ceballos C. C. & Roque A. C. Dynamic range of vertebrate retina ganglion cells: Importance of active dendrites and coupling by electrical synapses. PLoS ONE 7, e48517 (2012).

- Copelli M., Roque A. C., Oliveira R. F. & Kinouchi O. Physics of Psychophysics: Stevens and Weber-Fechner laws are transfer functions of excitable media. Phys Rev E 65, 060901 (2002).

- Furtado L. S. & Copelli M. Response of electrically coupled spiking neurons: a cellular automaton approach. Phys Rev E 73, 011907 (2006).

- Ribeiro T. L. & Copelli M. Deterministic excitable media under Poisson drive: Power law responses, spiral waves and dynamic range. Phys Rev E 77, 051911 (2008).

- Publio R., Oliveira R. F. & Roque A. C. A computational study on the role of gap junctions and rod Ih conductance in the enhancement of the dynamic range of the retina. PLoS ONE 4, e6970 (2009).

- Jan Y.-N. & Jan L. Y. Branching out: mechanisms of dendritic arborization. Nat Rev Neurosci 11, 316–328 (2010).

- Woolley C. S., Gould E., Frankfurt M. & McEwen B. S. Naturally occurring fluctuation in dendritic spine density on adult hippocampal pyramidal neurons. J Neurosci 10, 4035–4039 (1990).

- Bonachela J. A., De Franciscis S., Torres J. J. & Muñoz M. A. Self-organization without conservation: Are neuronal avalanches generically critical? J Stat Mech-Theory E 2010, P02015 (2010).

- Parish L. M. et al. Long-range temporal correlations in epileptogenic and non-epileptogenic human hippocampus. Neuroscience 125, 1069–76 (2004).

- Bhattacharya J., Edwards J., Mamelak A. N. & Schuman E. M. Long-range temporal correlations in the spontaneous spiking of neurons in the hippocampal-amygdala complex of humans. Neuroscience 131, 547–555 (2005).

- Mazzoni A. et al. On the dynamics of the spontaneous activity in neuronal networks. PLoS ONE 2, e439 (2007).