Abstract

A fundamental principle in memory research is that memory is a function of the similarity between encoding and retrieval operations. Consistent with this principle, many neurobiological models of declarative memory assume that memory traces are stored in cortical regions, and the hippocampus facilitates the reactivation of these traces during retrieval. The present investigation tested the novel prediction that encoding–retrieval similarity can be observed and related to memory at the level of individual items. Multivariate representational similarity analysis was applied to functional magnetic resonance imaging data collected during encoding and retrieval of emotional and neutral scenes. Memory success tracked fluctuations in encoding–retrieval similarity across frontal and posterior cortices. Importantly, memory effects in posterior regions reflected increased similarity between item-specific representations during successful recognition. Mediation analyses revealed that the hippocampus mediated the link between cortical similarity and memory success, providing crucial evidence for hippocampal–cortical interactions during retrieval. Finally, because emotional arousal is known to modulate both perceptual and memory processes, similarity effects were compared for emotional and neutral scenes. Emotional arousal was associated with enhanced similarity between encoding and retrieval patterns. These findings speak to the promise of pattern similarity measures for evaluating memory representations and hippocampal–cortical interactions.

Keywords: emotional memory, episodic memory, functional neuroimaging, multivariate pattern analysis

Introduction

Memory retrieval involves the reactivation of neural states similar to those experienced during initial encoding. The strength of these memories is thought to vary as a function of encoding–retrieval match (Tulving and Thomson 1973), with stronger memories being associated with greater correspondence. This relationship has been formalized in models linking recognition memory success to the quantitative similarity of event features sampled during encoding and retrieval (Bower 1972), as well as in the principle of transfer-appropriate processing, which proposes that memory will be enhanced when the cognitive operations engaged during encoding are related to those supporting memory discrimination at retrieval (Morris et al. 1977). Encoding–retrieval similarity also bears special relevance to neurocomputational models that posit a role for the hippocampus in guiding a replay of prior learning events across the neocortex (Alvarez and Squire 1994; McClelland et al. 1995; Nadel et al. 2000; Sutherland and McNaughton 2000; Norman and O'Reilly 2003). In these models, the hippocampus binds cortical representations associated with the initial learning experience, and then facilitates their reactivation during the time of retrieval.

Prior attempts to measure encoding–retrieval match have focused on identifying differences in hemodynamic responses to retrieval sets that vary only in their encoding history, such as prior association with a task, context, or stimulus (reviewed by Danker and Anderson 2010). For example, when retrieval cues are held constant, words studied with visual images elicit greater activity in the visual cortex at retrieval than words studied with sounds (Nyberg et al. 2000; Wheeler et al. 2000), consistent with the reactivation of their associates. However, memory often involves discriminating between old and new stimuli of the same kind. Within the context of recognition memory, transfer-appropriate processing will be most useful when it involves the recapitulation of cognitive and perceptual processes that are uniquely linked to individual items and differentiate them from lures (Morris et al. 1977). Because previous studies have compared sets of items with a shared encoding history, their measures of processing overlap have overlooked those operations that are idiosyncratic to each individual stimulus. The current experiment makes the important advance of measuring the neural similarity between encoding and recognition of individual scenes (e.g. an image of a mountain lake vs. other scene images). By linking similarity to memory performance, we aim to identify regions in which neural pattern similarity to encoding is associated with retrieval success, likely arising from their participation in operations whose recapitulation benefits memory. These operations may be limited to perceptual processes supporting scene and object recognition, evident along occipitotemporal pathways, or may include higher-order processes reliant on frontal and parietal cortices.

Item-specific estimates also open new opportunities for linking encoding–retrieval overlap to the function of the hippocampus. One possibility is that the hippocampus mediates the link between neural similarity and behavioral expressions of memory. The relation between the hippocampus and cortical reactivation is central to neurocomputational models of memory, which predict enhanced hippocampal–neocortical coupling during successful memory retrieval (Sutherland and McNaughton 2000; Wiltgen et al. 2004; O'Neill et al. 2010). Although these ideas have been supported by neurophysiological data (Pennartz et al. 2004; Ji and Wilson 2007), it has been a challenge to test this hypothesis in humans. To this end, the present approach makes it possible to analyze, on a trial-to-trial basis, how the hippocampus mediates the relationship between encoding–retrieval pattern similarity and memory retrieval.

Finally, the neural similarity between encoding and retrieval should be sensitive to experimental manipulations that modulate perception and hippocampal memory function. There is extensive evidence that emotional arousal increases the strength and vividness of declarative memories, thought to arise from its influence on encoding and consolidation processes (LaBar and Cabeza 2006; Kensinger 2009). Although the neural effects of emotion on encoding (Murty et al. 2010) and retrieval (Buchanan 2007) phases have been separately characterized, they have seldom been directly compared (but see Hofstetter et al. 2012). Emotional stimuli elicit superior perceptual processing during encoding (Dolan and Vuilleumier 2003), which may result in perceptually rich memory traces that can be effectively recaptured during item recognition. Furthermore, arousal-related noradrenergic and glucocorticoid responses modulate memory consolidation processes (McGaugh 2004; LaBar and Cabeza 2006), which are thought to rely on hippocampal–cortical interactions similar to those supporting memory reactivation during retrieval (Sutherland and McNaughton 2000; Dupret et al. 2010; O'Neill et al. 2010; Carr et al. 2011). One possibility is that the influence of emotion on consolidation is paralleled by changes in encoding–retrieval similarity during retrieval. We test the novel hypothesis that emotion, through its influence on perceptual encoding and/or hippocampal–cortical interactions, may be associated with increased pattern similarity during retrieval.

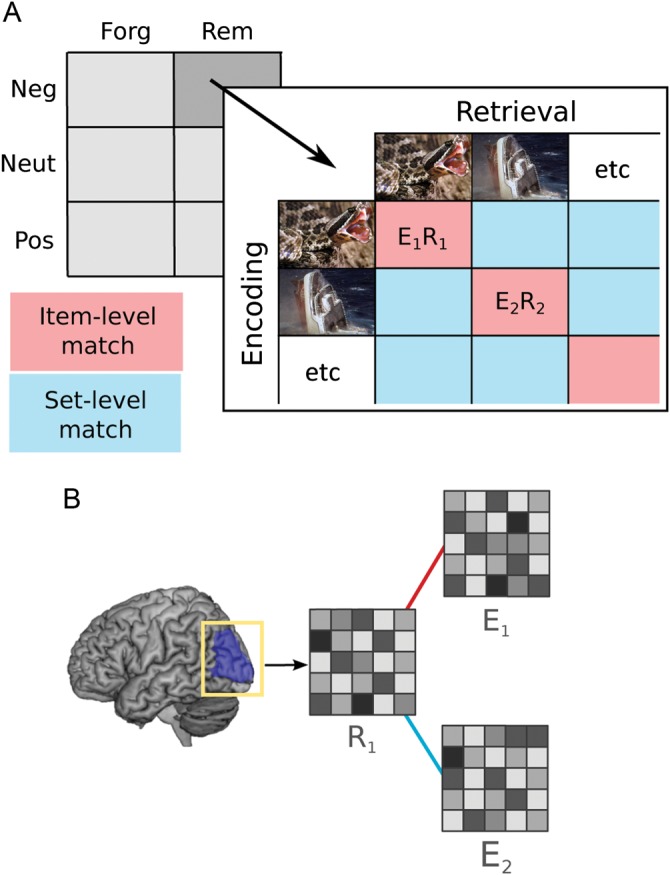

Here, we use event-related functional magnetic resonance imaging (fMRI), in combination with multivariate pattern similarity analysis, to evaluate the neural similarity between individual scenes at encoding and retrieval. Across several regions of interest (ROIs), we calculated the neural pattern similarity between encoding and retrieval trials, matching individual trials to their identical counterparts (“item-level pairs”) or to other stimuli drawn from the same emotional valence, encoding condition, and memory status (“set-level pairs”; Fig. 1). To assess evidence for item-specific fluctuations in neural similarity, we specifically sought regions in which the difference between remembered and forgotten items was larger for item-level pairs than for set-level pairs. Given the recognition design employed for retrieval, we anticipated that evidence for item-specific similarity would be particularly pronounced in regions typically associated with visual processing. These techniques yielded estimates of the neural pattern similarity for each individual trial, newly enabling a direct test of the hypothesis that the hippocampus mediates the relationship between cortical similarity and behavioral expressions of memory. Finally, we tested the novel prediction that emotion enhances cortical similarity by comparing these effects for emotionally arousing versus neutral scenes.

Figure 1.

An overview of the experimental and analysis design. Participants viewed emotionally negative, positive, and neutral images during scene encoding and recognition memory tasks; each image was classified as remembered or forgotten. Beta estimates were computed for each individual trial at both encoding and recognition (A). For the full complement of encoding–retrieval pairs, including item-level pairs (same picture) and set-level pairs (same valence, task and memory status, different pictures), beta patterns were extracted from 31 separate anatomical ROIs and the similarity between patterns was computed (B). E, encoding; R, retrieval; rem, remembered; forg, forgotten; neg, negative; neut, neutral; pos, positive.

Materials and Methods

Participants

Twenty-one participants completed the experiment. Two participants were excluded from analysis: One due to excessive motion and one due to image artifacts affecting the retrieval scans. This resulted in 19 participants (9 female), ranging in age from 18 to 29 (M = 23.3, standard deviation [SD] = 3.1). Participants were healthy, right-handed, native English speakers, with no disclosed history of neurological or psychiatric episodes. Participants gave written informed consent for a protocol approved by the Duke University Institutional Review Board.

Experimental Design

Participants were scanned during separate memory encoding and recognition sessions, set 2 days apart. During the first session, they viewed 420 complex visual scenes for 2 s each. Following each scene, they made an emotional arousal rating on a 4-point scale and answered a question related to the semantic or perceptual features of the image. During the second session, participants saw all of the old scenes randomly intermixed with 210 new scenes for 3 s each. For each trial, they rated whether the image was old or new on a 5-point scale, with response options for “definitely new,” “probably new,” “probably old,” “definitely old,” and “recollected.” The fifth response option referred to those instances in which they were able to recall a specific detail from when they had seen that image before. For all analyses, memory success was assessed by collapsing the fourth and fifth responses, defining those items as “remembered,” and comparing them with the other responses, referred to here as “forgotten.” This division ensured that sufficient numbers of trials (i.e. >15) were included as remembered and forgotten. In both sessions, trials were separated by a jittered fixation interval, exponentially distributed with a mean of 2 s.
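The collapsing of the 5-point scale into remembered and forgotten bins can be sketched as follows. The integer codes 1 to 5 for the response options and the helper names are hypothetical; only the grouping (responses 4 and 5 counted as remembered) and the >15-trial criterion come from the text.

```python
import numpy as np

# Hypothetical integer coding of the recognition scale:
# 1 "definitely new", 2 "probably new", 3 "probably old",
# 4 "definitely old", 5 "recollected"
REMEMBERED_CODES = (4, 5)   # collapsed into "remembered"
MIN_TRIALS = 15             # each bin should contain more than 15 trials

def binarize_memory(responses):
    """Map 5-point responses for old items to remembered (True) / forgotten (False)."""
    responses = np.asarray(responses)
    if not np.all(np.isin(responses, [1, 2, 3, 4, 5])):
        raise ValueError("responses must be coded 1-5")
    return np.isin(responses, REMEMBERED_CODES)

def has_enough_trials(remembered, min_trials=MIN_TRIALS):
    """True when both the remembered and forgotten bins exceed `min_trials`."""
    remembered = np.asarray(remembered, dtype=bool)
    n_rem = int(remembered.sum())
    n_forg = remembered.size - n_rem
    return n_rem > min_trials and n_forg > min_trials

print(binarize_memory([1, 3, 4, 5, 2]).tolist())  # [False, False, True, True, False]
```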

The stimuli consisted of a heterogeneous set of complex visual scenes drawn from the International Affective Picture System (Lang et al. 2001) as well as in-house sources. They included 210 emotionally negative images (low in valence and high in arousal, based on normative ratings), 210 emotionally positive images (high in valence and high in arousal), and 210 neutral images (midlevel in valence and low in arousal). The influence of arousal was assessed by comparing both negative and positive images to neutral. Additional details about the encoding design and the stimulus set are described in Ritchey et al. (2011), which reports subsequent memory analyses of the encoding data alone.

fMRI Acquisition and Pre-Processing

Images were collected using a 4T GE scanner, with separate sessions for encoding and retrieval. Stimuli were presented using liquid crystal display goggles, and behavioral responses were recorded using a 4-button fiber optic response box. Scanner noise was reduced with earplugs and head motion was minimized using foam pads and a headband. Anatomical scanning started with a T2-weighted sagittal localizer series. The anterior (AC) and posterior commissures (PC) were identified in the midsagittal slice, and 34 contiguous oblique slices were prescribed parallel to the AC–PC plane. Functional images were acquired using an inverse spiral sequence with a 2-s repetition time, a 31-ms echo time, a 24-cm field of view, a 64 × 64 matrix, and a 60° flip angle. Slice thickness was 3.8 mm, resulting in 3.75 × 3.75 × 3.8 mm voxels.
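As a consistency check, the in-plane voxel dimension follows from the field of view divided by the matrix size, assuming a 64 × 64 in-plane matrix:

```latex
\text{in-plane voxel size} = \frac{\text{FOV}}{\text{matrix size}} = \frac{240\ \text{mm}}{64} = 3.75\ \text{mm}
```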

Preprocessing and data analyses were performed using SPM5 software implemented in Matlab (www.fil.ion.ucl.ac.uk/spm). After discarding the first 6 volumes, the functional images were slice-timing corrected, motion corrected, and spatially normalized to the Montreal Neurological Institute template. Functional data from the retrieval session were aligned with data from the encoding session, and normalization parameters were derived from the first functional image of the encoding session. Data were spatially smoothed with an 8-mm isotropic Gaussian kernel for univariate analyses and left unsmoothed for multivariate pattern analyses.

fMRI Analysis

General Linear Model

All multivariate pattern analyses were based on a general linear model that estimated individual trials. General linear models with regressors for each individual trial were estimated separately for encoding and retrieval, yielding one model with a beta image corresponding to each encoding trial and another model with a beta image corresponding to each retrieval trial. The postimage encoding ratings were also modeled and combined into a single regressor; thus, all analyses reflect the picture presentation time only. Regressors indexing head motion were also included in the model. The validity of modeling individual trials has been previously demonstrated (Rissman et al. 2004), and the comparability of these models to more traditional methods was likewise confirmed within this dataset.
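The idea of a single-trial model (one regressor per trial, each yielding its own beta pattern) can be illustrated with a minimal NumPy sketch. It assumes a double-gamma HRF with SPM-like shape parameters (peak near 6 s, undershoot near 16 s, ratio 1/6), a 16× upsampled time grid, and ordinary least squares; it is not SPM5's implementation, and all function names are hypothetical.

```python
import math
import numpy as np

def double_gamma_hrf(dt, duration=32.0):
    """Double-gamma HRF sampled every `dt` seconds (assumed SPM-like shape)."""
    t = np.arange(0.0, duration, dt)

    def gamma_pdf(x, shape):
        # Gamma density with unit scale.
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)

    hrf = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return hrf / hrf.sum()

def single_trial_design(onsets, durations, n_scans, tr, nuisance=None):
    """One HRF-convolved boxcar per trial, optional nuisance columns, then an intercept."""
    upsample = 16
    dt = tr / upsample
    n_hi = n_scans * upsample
    hrf_hi = double_gamma_hrf(dt)
    cols = []
    for onset, dur in zip(onsets, durations):
        box = np.zeros(n_hi)
        box[int(round(onset / dt)):int(round((onset + dur) / dt))] = 1.0
        conv = np.convolve(box, hrf_hi)[:n_hi]
        cols.append(conv[::upsample])  # back to one sample per scan
    X = np.column_stack(cols)
    if nuisance is not None:  # e.g. head-motion regressors, shape (n_scans, k)
        X = np.column_stack([X, nuisance])
    return np.column_stack([X, np.ones(n_scans)])

def estimate_trial_betas(Y, X, n_trials):
    """OLS fit of Y (scans x voxels); returns one beta pattern per trial (trials x voxels)."""
    betas, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return betas[:n_trials]
```

Each row of the returned array plays the role of one trial's beta image restricted to an ROI's voxels.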

Additional general linear models were estimated for univariate analysis of the retrieval phase, which were used to define functional ROIs for the mediation analyses (described below). The models were similar to the one described above, but collapsed the individual trials into separate regressors for each emotion type, as in standard analysis approaches. These models also included parametric regressors indexing the 5-point recognition memory response for old items, as well as a single regressor for all new items. Contrasts corresponding to the effects of memory success, emotion, and the emotion by memory interaction were generated for each individual. Univariate analysis procedures and results are described in greater detail in Supplementary Materials.

Multivariate Encoding–Retrieval Similarity Analysis

A series of 31 bilateral anatomical ROIs were generated from the Automated Anatomical Labeling (AAL) atlas (Tzourio-Mazoyer et al. 2002) implemented in WFU PickAtlas (Maldjian et al. 2003). We expected that encoding–retrieval similarity effects could arise not only from the reactivation of perceptual processes, likely to be observed in occipital and temporal cortices, but also from higher-order cognitive processes involving the lateral frontal and parietal cortices. Thus, the ROIs included all regions within the occipital, temporal, lateral prefrontal, and parietal cortices (Table 1). Regions within the medial temporal lobe were also included due to their known roles in memory and emotional processing. Two of these regions (olfactory cortex and Heschl's gyrus) were chosen as primary sensory regions that are unlikely to be responsive to experimental factors, and thus serve as conceptual controls. Bilateral ROIs were chosen to limit the number of comparisons while observing effects across a broad set of regions, and reported effects were corrected for multiple comparisons.

Table 1.

All regions tested with ANOVA results for the effects of match and memory

| Region | Voxels | Mean r | Match F(1,18) | Match P | Memory F(1,18) | Memory P | Match × memory F(1,18) | Match × memory P |
|---|---|---|---|---|---|---|---|---|
| Occipital | | | | | | | | |
| Calcarine | 635 | 0.23 | 176.09 | 0.000* | 1.99 | 0.088 | 8.74 | 0.004 |
| Cuneus | 436 | 0.12 | 52.46 | 0.000* | 3.05 | 0.049 | 2.62 | 0.062 |
| Lingual | 609 | 0.17 | 222.77 | 0.000* | 3.86 | 0.032 | 12.18 | 0.001* |
| Inf occipital | 283 | 0.19 | 55.93 | 0.000* | 11.69 | 0.002* | 6.56 | 0.010 |
| Mid occipital | 780 | 0.18 | 131.33 | 0.000* | 16.95 | 0.000* | 15.29 | 0.001* |
| Sup occipital | 428 | 0.16 | 124.92 | 0.000* | 21.78 | 0.000* | 4.55 | 0.023 |
| Temporal | | | | | | | | |
| Fusiform | 699 | 0.21 | 89.36 | 0.000* | 1.83 | 0.002 | 0.46 | 0.254 |
| Inf temporal | 1019 | 0.05 | 119.06 | 0.000* | 24.66 | 0.000* | 0.01 | 0.468 |
| Mid temporal | 1396 | 0.06 | 38.36 | 0.000* | 26.92 | 0.000* | 16.64 | 0.000* |
| Mid temporal pole | 287 | 0.01 | 0.26 | 0.309 | 2.15 | 0.080 | 0.02 | 0.448 |
| Sup temporal pole | 407 | 0.01 | 0.69 | 0.209 | 0.55 | 0.234 | 0.00 | 0.493 |
| Sup temporal | 803 | 0.02 | 0.22 | 0.323 | 14.12 | 0.001* | 2.87 | 0.054 |
| Frontal | | | | | | | | |
| Inf frontal opercularis | 382 | 10 | 39.50 | 0.000* | 27.64 | 0.000* | 2.61 | 0.062 |
| Inf frontal orbitalis | 526 | 0.03 | 1.26 | 0.138 | 7.30 | 0.007 | 0.00 | 0.499 |
| Inf frontal triangularis | 691 | 0.08 | 26.38 | 0.000* | 45.36 | 0.000* | 0.47 | 0.251 |
| Mid frontal | 1472 | 0.04 | 32.05 | 0.000* | 18.42 | 0.000* | 2.47 | 0.067 |
| Mid frontal orbital | 267 | 0.01 | 0.43 | 0.260 | 0.15 | 0.352 | 2.79 | 0.056 |
| Sup frontal | 1204 | 0.02 | 31.29 | 0.000* | 2.26 | 0.075 | 0.15 | 0.353 |
| Sup medial frontal | 758 | 0.02 | 17.61 | 0.000* | 4.76 | 0.021 | 0.63 | 0.218 |
| Sup frontal orbital | 294 | 0.01 | 6.04 | 0.012 | 1.40 | 0.126 | 0.26 | 0.308 |
| Insula | 549 | 0.02 | 0.16 | 0.348 | 3.74 | 0.034 | 0.04 | 0.418 |
| Parietal | | | | | | | | |
| Angular | 409 | 0.06 | 23.63 | 0.000* | 9.68 | 0.003 | 6.91 | 0.009 |
| Inf parietal | 565 | 0.06 | 42.86 | 0.000* | 28.00 | 0.000* | 0.11 | 0.371 |
| Sup parietal | 651 | 0.08 | 1.19 | 0.145 | 1.23 | 0.141 | 0.09 | 0.382 |
| Precuneus | 1014 | 0.05 | 50.28 | 0.000* | 22.54 | 0.000* | 8.90 | 0.004 |
| Supramarginal | 434 | 0.03 | 17.80 | 0.001* | 49.54 | 0.000* | 34.24 | 0.000* |
| MTL | | | | | | | | |
| Amygdala | 74 | 0.01 | 1.59 | 0.111 | 0.59 | 0.227 | 0.44 | 0.258 |
| Hippocampus | 273 | 0.02 | 0.00 | 0.473 | 2.35 | 0.072 | 0.02 | 0.441 |
| Parahippocampal gyrus | 304 | 0.04 | 21.79 | 0.000* | 3.72 | 0.000* | 7.00 | 0.008 |
| Primary sensory controls | | | | | | | | |
| Heschl | 63 | 0.01 | 1.33 | 0.132 | 2.33 | 0.072 | 1.60 | 0.111 |
| Olfactory | 68 | 0.01 | 0.01 | 0.471 | 0.17 | 0.342 | 0.01 | 0.472 |

Note: Mean r refers to the Pearson's correlation coefficient between encoding and retrieval patterns for remembered items, averaged across the group. The main effects and interactions involving emotion are presented separately in Table 4.

*Denotes regions significant at the Bonferroni-corrected threshold (P < 0.0016).

MTL, medial temporal lobe; Inf, inferior; Mid, middle; Sup, superior.

The full pattern of voxel data from a given ROI was extracted from each beta image corresponding to each trial, yielding a vector for each trial Ei at encoding and each trial Rj at retrieval (Fig. 1). Each vector was normalized with respect to the mean activity and SD within its trial, thus isolating the relative pattern of activity for each trial and ROI. Euclidean distance was computed for all possible pair-wise combinations of encoding and retrieval target trials, resulting in a single distance value for each EiRj pair. This distance value measures the degree of dissimilarity between activity patterns at encoding and those at retrieval: literally, the numerical distance between the patterns plotted in n-dimensional space, where n refers to the number of included voxels (Kriegeskorte 2008). An alternative metric for pattern similarity is Pearson's correlation coefficient, which is highly anti-correlated with Euclidean distance; not surprisingly, analyses using this measure replicate the findings reported here. For ease of understanding, figures report the inverse of Euclidean distance as a similarity metric; regression analyses likewise flip the sign of the distance z-scores to reflect similarity.
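Because each pattern is z-scored within its trial, squared Euclidean distance and Pearson's r are linked by an exact monotonic relation, d² = 2n(1 − r) when the population SD is used, where n is the number of voxels; this is why the two metrics yield the same results. The sketch below assumes patterns stored as NumPy arrays of shape (trials, voxels), a hypothetical layout, and computes the full encoding–retrieval distance matrix.

```python
import numpy as np

def zscore_rows(patterns):
    """Normalize each trial's voxel pattern to mean 0 and SD 1 (population SD)."""
    patterns = np.asarray(patterns, dtype=float)
    mu = patterns.mean(axis=1, keepdims=True)
    sd = patterns.std(axis=1, keepdims=True)
    return (patterns - mu) / sd

def encoding_retrieval_distances(enc, ret):
    """Euclidean distance for every (E_i, R_j) pair; returns shape (n_enc, n_ret)."""
    E, R = zscore_rows(enc), zscore_rows(ret)
    sq = (E ** 2).sum(axis=1)[:, None] + (R ** 2).sum(axis=1)[None, :] - 2.0 * E @ R.T
    return np.sqrt(np.maximum(sq, 0.0))  # clip tiny negative rounding errors

# Similarity for plotting or regression can then be taken as the negative distance.
```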

Pair-wise distances were summarized according to whether or not the encoding–retrieval pair corresponded to an item-level match between encoding and retrieval (i.e. trial Ei refers to the same picture as trial Rj). In comparison, set-level pairs were matched on the basis of emotion type, encoding task, and memory status, but excluded these item-level pairs. These experimental factors were controlled in the set-level pairs to mitigate the influence of task engagement or success. Any differences observed between the item- and set-level pair distances can be attributed to the reactivation of memories or processes specific to the individual stimulus. On the other hand, any memory effects observed across both the item- and set-level pair distances may reflect processes general to encoding and recognizing scene stimuli.
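Sorting the distance matrix into item-level and set-level pairs might look like the following sketch. It assumes one condition label per trial (for example a string combining emotion, encoding task, and memory status) and picture identifiers shared across sessions; both the data layout and the function name are hypothetical.

```python
import numpy as np

def item_and_set_means(D, enc_ids, ret_ids, enc_cond, ret_cond):
    """
    D         : (n_enc, n_ret) encoding-retrieval distance matrix
    *_ids     : picture identifier for each encoding / retrieval trial
    *_cond    : condition label for each trial (same emotion, task, memory status)
    Returns {condition: (item_level_mean, set_level_mean)}.
    """
    D = np.asarray(D, dtype=float)
    same_item = np.asarray(enc_ids)[:, None] == np.asarray(ret_ids)[None, :]
    out = {}
    for cond in sorted(set(ret_cond)):
        enc_in = np.array([c == cond for c in enc_cond])
        ret_in = np.array([c == cond for c in ret_cond])
        block = enc_in[:, None] & ret_in[None, :]   # both trials in this condition
        item = D[block & same_item]                  # same picture
        set_ = D[block & ~same_item]                 # different pictures, same condition
        if item.size and set_.size:
            out[cond] = (item.mean(), set_.mean())
    return out
```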

To assess neural similarity, mean distances for the item- and set-level match pairs were entered into a 3-way repeated-measures analysis of variance (ANOVA) with match level (item level, set level), memory status (remembered, forgotten), and emotion (negative, neutral, positive) as factors. We first considered the effects of match and memory irrespective of emotion. Reported results were significant at a 1-tailed family-wise error rate of P < 0.05, with a Bonferroni correction for each of the 31 tested ROIs (effective P < 0.0016). For regions showing a significant match by memory interaction, the spatial specificity of these findings was interrogated further by bisecting the anatomical ROIs along the horizontal and coronal planes, separately for each hemisphere. The resulting 8 subregions per ROI were analyzed identically to the methods described above and corrected for multiple comparisons.
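Because match and memory each have two levels and are within-subject factors, each of their effects in the ANOVA has 1 df, so F(1, n − 1) equals the square of a one-sample t on per-participant contrast scores averaged over emotion. The sketch below illustrates that equivalence, the Bonferroni thresholds quoted in the text (0.05/31, 0.05/19, 0.05/8), and one possible way to split an ROI into 8 subregions. Cutting each hemisphere at its median y (coronal plane) and median z (horizontal plane) coordinate is an assumption, as the text does not say where the cuts were placed; function names are hypothetical.

```python
import numpy as np

def bonferroni_alpha(n_tests, alpha=0.05):
    """Per-test threshold controlling family-wise error at `alpha`."""
    return alpha / n_tests

def within_subject_F(cell_means):
    """
    cell_means: (n_subjects, 2, 2) array indexed [subject, match, memory],
    with match = (item, set) and memory = (remembered, forgotten),
    already averaged over emotion. Returns F(1, n - 1) for each effect.
    """
    m = np.asarray(cell_means, dtype=float)
    contrasts = {
        "match": (m[:, 0, 0] + m[:, 0, 1]) - (m[:, 1, 0] + m[:, 1, 1]),
        "memory": (m[:, 0, 0] + m[:, 1, 0]) - (m[:, 0, 1] + m[:, 1, 1]),
        "match_x_memory": (m[:, 0, 0] - m[:, 0, 1]) - (m[:, 1, 0] - m[:, 1, 1]),
    }
    F = {}
    for name, c in contrasts.items():
        t = c.mean() / (c.std(ddof=1) / np.sqrt(c.size))
        F[name] = t ** 2  # F(1, n-1) = t(n-1)^2 for a 1-df within-subject effect
    return F

def split_roi_into_subregions(coords):
    """
    coords: (n_voxels, 3) MNI x, y, z coordinates of one anatomical ROI.
    Splits by hemisphere (x < 0 is left), then at the median y and median z
    within each hemisphere. Returns {label: boolean voxel mask}.
    """
    coords = np.asarray(coords, dtype=float)
    masks = {}
    for hemi, in_hemi in (("left", coords[:, 0] < 0), ("right", coords[:, 0] >= 0)):
        if not in_hemi.any():
            continue
        y_mid = np.median(coords[in_hemi, 1])
        z_mid = np.median(coords[in_hemi, 2])
        for ap, in_ap in (("posterior", coords[:, 1] < y_mid), ("anterior", coords[:, 1] >= y_mid)):
            for dv, in_dv in (("ventral", coords[:, 2] < z_mid), ("dorsal", coords[:, 2] >= z_mid)):
                masks[f"{hemi}-{ap}-{dv}"] = in_hemi & in_ap & in_dv
    return masks
```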

To evaluate whether item-level similarity varied by whether participants gave a recollected or definitely old response, mean distances for the item-level pairs were entered into a 2-way repeated-measures ANOVA with memory response (recollected, definitely old) and emotion type (negative, neutral, positive) as factors. Finally, although the pattern vectors were standardized prior to distance computation, it remained a concern that overall activation in the ROIs could be driving some of these memory-related distance effects. To rule out this possibility, we conducted a logistic regression using trial-to-trial measures of mean ROI activation during encoding, mean ROI activation during retrieval, and pattern similarity to predict binary memory outcomes, within each participant and for each of the memory-sensitive regions. The beta coefficients were tested with 1-sample t-tests to determine whether they significantly differed from zero across the group. A significant result would indicate that mean activation estimates and encoding–retrieval similarity make separable contributions to memory. All subsequent memory-related analyses were restricted to regions for which encoding–retrieval similarity remained a significant predictor of memory in this regression. Both sets of tests were corrected for multiple comparisons across 31 ROIs, P < 0.0016.
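The within-participant logistic regression and the group-level test on its coefficients can be sketched as below. The Newton-Raphson fitter (with a tiny ridge term for numerical stability), the z-scoring of predictors, and all function names are assumptions for illustration; in practice a statistics package would normally be used.

```python
import numpy as np

def fit_logistic(X, y, n_iter=50, ridge=1e-6):
    """Logistic regression by Newton-Raphson; X must include an intercept column."""
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ w))
        W = p * (1.0 - p)
        H = X.T @ (X * W[:, None]) + ridge * np.eye(X.shape[1])
        w += np.linalg.solve(H, X.T @ (y - p))
    return w

def zscore(v):
    v = np.asarray(v, dtype=float)
    return (v - v.mean()) / v.std()

def participant_betas(enc_act, ret_act, similarity, remembered):
    """Betas for [encoding activation, retrieval activation, similarity] predicting memory."""
    X = np.column_stack([np.ones(len(remembered)),
                         zscore(enc_act), zscore(ret_act), zscore(similarity)])
    return fit_logistic(X, np.asarray(remembered, dtype=float))[1:]

def one_sample_t(values):
    """Group t statistic (df = n - 1) testing whether the mean beta differs from zero."""
    values = np.asarray(values, dtype=float)
    return values.mean() / (values.std(ddof=1) / np.sqrt(values.size))
```

A similarity beta that remains reliably non-zero across participants, with both activation terms in the model, is the pattern the text describes as separable contributions to memory.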

Functional Connectivity

Another goal was to test the hypothesis that the link between cortical pattern similarity and memory is mediated by hippocampal activity at retrieval. To address this hypothesis, multilevel mediation analysis was conducted via the Multilevel Mediation and Moderation toolbox (Wager et al. 2009; Atlas et al. 2010), using encoding–retrieval similarity (from the item-level pairs) as the independent variable, 5-point memory responses as the dependent variable, and hippocampal activity at retrieval as the mediating variable. Each of these trial-wise vectors was normalized within participants prior to analysis, and trials identified as outliers (>3 SDs from the mean) were excluded. Hippocampal functional ROIs included voxels within the AAL anatomical ROI that showed parametric modulation by memory strength for old items, defined separately for each individual at the liberal threshold of P < 0.05 to ensure the inclusion of all memory-sensitive voxels. Voxels were limited to the left hippocampus, where stronger univariate retrieval success effects were observed. Two participants were excluded from this analysis because they had no above-threshold responses in the left hippocampus. Models were estimated via bootstrapping for each participant, and participants were then treated as random effects in a group model that tested the significance of each path. Significant mediation was identified by the product of path a (similarity to hippocampal activity) and path b (hippocampal activity to memory, controlling for similarity), that is, the indirect a × b effect. The mediation model was specified separately for each of the 8 neocortical ROIs showing significant match and memory effects, and statistical tests were thresholded at Bonferroni-corrected P < 0.05, 1-tailed (effective P < 0.0063). Because neural directionality cannot be ascertained from trial-wise estimates, the reverse mediation was also computed for completeness, with hippocampal activity as the independent variable and encoding–retrieval similarity as the mediating variable.
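For intuition about the a, b, and a × b terms, the following is a simplified single-level sketch for one participant, with a percentile bootstrap of the indirect effect. It is not the Multilevel Mediation and Moderation toolbox: that toolbox fits a multilevel model, whereas a rough summary-statistics analogue would collect each participant's a × b and test it across participants. Function names and bootstrap settings are hypothetical.

```python
import numpy as np

def prepare(*vectors, sd_cut=3.0):
    """z-score each trial-wise vector and drop trials beyond `sd_cut` SDs on any of them."""
    Z = [(v - v.mean()) / v.std() for v in (np.asarray(u, dtype=float) for u in vectors)]
    keep = np.all(np.abs(np.vstack(Z)) <= sd_cut, axis=0)
    return [z[keep] for z in Z]

def ols(X, y):
    """Least-squares coefficients for y ~ X (X includes an intercept column)."""
    return np.linalg.lstsq(X, y, rcond=None)[0]

def mediation_paths(x, m, y):
    """a: x -> m; b: m -> y controlling for x; c_prime: direct x -> y; ab: indirect effect."""
    ones = np.ones_like(x)
    a = ols(np.column_stack([ones, x]), m)[1]
    _, c_prime, b = ols(np.column_stack([ones, x, m]), y)
    return a, b, c_prime, a * b

def bootstrap_ab(x, m, y, n_boot=2000, seed=0):
    """Percentile bootstrap (95%) of the indirect effect a*b, resampling trials."""
    rng = np.random.default_rng(seed)
    n = len(x)
    draws = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, n)
        draws[i] = mediation_paths(x[idx], m[idx], y[idx])[3]
    return np.percentile(draws, [2.5, 97.5])
```

Here x would be item-level similarity, m hippocampal retrieval activity, and y the 5-point memory response; swapping x and m gives the reverse model described above.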

Finally, for ROIs showing evidence of hippocampal mediation, the correlation of pattern distance and hippocampal activity during retrieval was computed separately for remembered and forgotten items, excluding trials identified as outliers. This enabled the direct test of whether pattern similarity covaried with hippocampal activity within successfully remembered or forgotten items only, and whether this correlation was stronger for remembered than forgotten items, using 1-sample and paired t-tests, respectively, on z-transformed r-values. This technique is similar to functional connectivity analyses based on beta series (Rissman et al. 2004; Daselaar et al. 2006), but relates activity to pattern distance rather than activity to activity.
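The within-condition correlation analysis can be sketched as follows. Similarity is taken as the negative of distance so that a positive r means greater similarity with greater activity (an assumed sign convention), and r values are Fisher z-transformed with arctanh before the group-level one-sample and paired t statistics. Function names are hypothetical.

```python
import numpy as np

def fisher_z(r):
    """Fisher r-to-z transform, clipped to avoid infinities at |r| = 1."""
    return np.arctanh(np.clip(r, -0.999999, 0.999999))

def within_condition_r(distance, activity, remembered):
    """Correlate similarity (negative distance) with ROI activity within each memory bin."""
    sim = -np.asarray(distance, dtype=float)
    act = np.asarray(activity, dtype=float)
    rem = np.asarray(remembered, dtype=bool)
    r_rem = np.corrcoef(sim[rem], act[rem])[0, 1]
    r_forg = np.corrcoef(sim[~rem], act[~rem])[0, 1]
    return r_rem, r_forg

def paired_t(a, b):
    """Paired t statistic (df = n - 1) for a - b."""
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return d.mean() / (d.std(ddof=1) / np.sqrt(d.size))

def group_tests(r_rem_per_subject, r_forg_per_subject):
    """One-sample t on z(r_remembered) and paired t for z(r_remembered) vs z(r_forgotten)."""
    z_rem, z_forg = fisher_z(np.asarray(r_rem_per_subject)), fisher_z(np.asarray(r_forg_per_subject))
    t_one = z_rem.mean() / (z_rem.std(ddof=1) / np.sqrt(z_rem.size))
    return t_one, paired_t(z_rem, z_forg)
```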

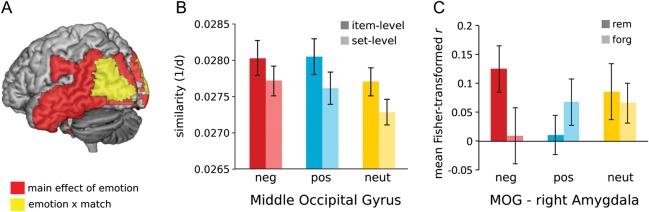

Emotion Effects

For regions showing significant modulation by match level, the influence of emotion was assessed in the 3-way repeated-measures ANOVA with match level (item level, set level), memory status (remembered, forgotten), and emotion (negative, neutral, positive) as factors. Reported results were significant at a 1-tailed family-wise error rate of P < 0.05, with a Bonferroni correction for each of the 19 tested ROIs (effective P < 0.0026). The region showing the maximal main effect of emotion and match by emotion interaction (middle occipital gyrus) was interrogated further using functional connectivity methods similar to those described above to assess its relationship with amygdala activity during retrieval. Amygdala functional ROIs included voxels within the AAL anatomical ROI (limited to the right amygdala, where stronger univariate emotion effects were observed) that showed modulation by emotion or the interaction of emotion and memory for old items, defined separately for each individual at the liberal threshold of P < 0.05. Both the mediation tests and memory-modulated correlation tests were implemented similarly to those described above, but with amygdala filling in the role of the hippocampus.

Results

Behavioral Data

Overall memory performance was very good: Old items were remembered 53.7% (SD = 19.2) of the time, whereas new items were incorrectly endorsed as old only 3.3% (SD = 2.4) of the time, on average (Supplementary Table 2). These rates resulted in an average d′ score of 2.04 (SD = 0.48), which is well above chance, t(18) = 18.40, P < 0.001. Memory performance was significantly modulated by the emotional content of the images, as measured by d′ (mean ± SD: negative, 2.33 ± 0.52; positive, 1.91 ± 0.52; neutral, 1.92 ± 0.52). Negative pictures were recognized with greater accuracy than both neutral, t(18) = 4.18, P = 0.001, and positive, t(18) = 5.69, P < 0.001, pictures.
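The sensitivity index is the standard signal-detection quantity d′ = z(hit rate) − z(false-alarm rate). A minimal sketch using Python's standard-library `statistics.NormalDist` follows; the function name is hypothetical, and the text does not state how (or whether) rates of exactly 0 or 1 were corrected, so the sketch simply rejects them.

```python
from statistics import NormalDist

def d_prime(hit_rate, false_alarm_rate):
    """Signal-detection sensitivity: z(hit rate) - z(false-alarm rate)."""
    for rate in (hit_rate, false_alarm_rate):
        if not 0.0 < rate < 1.0:
            raise ValueError("rates must lie strictly between 0 and 1")
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(false_alarm_rate)

# Group-mean rates from the text: 53.7% hits, 3.3% false alarms.
print(round(d_prime(0.537, 0.033), 2))  # 1.93
```

Because d′ is nonlinear in the rates, d′ computed from group-averaged rates (about 1.93) generally differs from the average of individual participants' d′ scores.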

Evidence for Item-Level Encoding–Retrieval Similarity

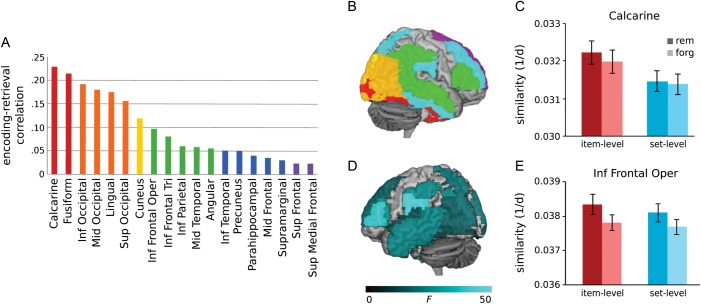

Pattern similarity between encoding and retrieval was measured by calculating the pair-wise distance between each retrieval trial and each encoding trial. Distances were sorted with respect to whether the paired trials corresponded to the same pictures (item-level pairs) or different pictures of the same type (set-level pairs). As expected, many of the 31 tested ROIs showed a main effect of match (Fig. 2A,B), exhibiting greater encoding–retrieval similarity for item-level pairs than for set-level pairs, P < 0.0016 (Table 1). Because the same images were presented at encoding and recognition, this finding demonstrates that these pattern similarity measures are sensitive to the visual and conceptual differences that distinguish individual images, consistent with prior evidence that individual scenes can be decoded using voxel information from the visual cortex (Kay et al. 2008). Not surprisingly, item-specific encoding–retrieval similarity was highest in regions devoted to visual perception; for example, the mean correlation between encoding and retrieval patterns in the calcarine ROI was 0.23 (subject range: 0.10–0.34) for remembered items (Fig. 2C).

Figure 2.

Effects of item match and memory on encoding–retrieval pattern similarity. Among regions displaying greater encoding–retrieval similarity for item- than set-level pairs, the correlation between encoding and retrieval patterns was strongest in regions devoted to visual processing (A and B). Color-coding reflects the strength of this correlation, averaged across remembered trials. For example, the calcarine ROI showed a main effect of match, in that item-level pairs were more similar than set-level pairs, regardless of memory (C). Many regions were sensitive to the main effect of memory (D), in which greater encoding–retrieval similarity was associated with better memory, regardless of whether it was an item-level or set-level match. This main effect is represented in the inferior frontal gyrus opercularis ROI (E). Error bars denote standard error of the mean. Rem, remembered; forg, forgotten; d, distance; inf, inferior; mid, middle; sup, superior.

Several regions, including inferior frontal gyrus, precuneus, inferior parietal, occipital gyri, and inferior and middle temporal gyri, were characterized by a main effect of memory success such that encoding–retrieval similarity was enhanced during successful recognition regardless of match level, P < 0.0016 (Fig. 2D,E). This similarity may reflect the re-engagement of processes that are generally beneficial to both scene encoding and retrieval (e.g. heightened attention), compatible with meta-analytic data marking frontal and parietal regions as consistent predictors of memory success at both phases (Spaniol et al. 2009).

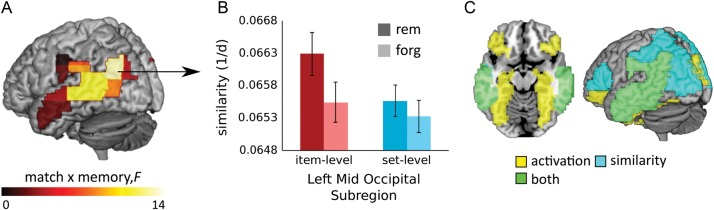

In the critical test of memory-related pattern similarity, significant interactions between match and memory status were identified in middle occipital gyrus, middle temporal gyrus, and supramarginal gyrus, P < 0.0016 (Table 1). Marginal effects were also noted in the precuneus (P = 0.004). In each of these regions, memory success was associated with greater encoding–retrieval similarity, particularly when the retrieval trial was compared with its identical encoding trial. This interaction supports the idea that similar item-specific processes are engaged during both encoding and recognition, and that this processing overlap is associated with successful memory discrimination. To further interrogate spatial localization, each left or right ROI showing a significant interaction was split into quadrants. Analysis of these subregions revealed that interaction effects were concentrated within the left anterior-dorsal subregion of middle occipital gyrus and the bilateral posterior-dorsal subregions of middle temporal gyrus (Fig. 3A,B; Supplementary Table 3). These findings are consistent with our prediction that processes supported by occipitotemporal regions, such as visual scene or object processing, are engaged during both encoding and retrieval, and that the memory benefits of this overlap can be observed at the level of the individual item. There were no significant increases in neural similarity for trials accurately endorsed with the recollected versus definitely old response, although the middle occipital gyrus and hippocampus exhibited trends in that direction (Supplementary Materials).

Figure 3.

The influence of item-specific similarity on memory. Encoding–retrieval similarity at the level of individual items predicted memory success above and beyond set-level similarity in the middle occipital, middle temporal, and supramarginal gyri, as evidenced by the interaction of memory and match. The spatial localization of these effects was ascertained by splitting these ROIs into subregions (A), among which the maximal interaction was observed in the left anterior-dorsal subregion of middle occipital gyrus (B). Finally, mean ROI retrieval activation and encoding–retrieval similarity independently predicted across-trial variation in memory success, demonstrating a lack of redundancy among these measures (C). Error bars denote standard error of the mean. D, distance; rem, remembered; forg, forgotten; mid, middle.

Across-trial logistic regression analysis confirmed that, for nearly all regions identified as memory sensitive, encoding–retrieval pattern similarity significantly predicted memory performance even when mean activation estimates from both encoding and retrieval were included in the model (Fig. 3C; Table 2). These results also clarify that, although we did not observe pattern similarity effects in the hippocampus, mean retrieval activity in this region predicted memory success; the amygdala showed a similar trend (P = 0.002). Thus, we can be confident that these regions are sensitive to memory in the present design; however, standard spatial resolution parameters may be too coarse to detect consistent patterns in these structures (cf. Bonnici et al. 2011). The dissociability of these effects suggests that similarity between trial-specific patterns during encoding and retrieval is associated with enhanced memory in a manner that goes beyond the magnitude of the hemodynamic response.
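The two-stage logic behind Table 2 (a logistic regression fitted across trials within each subject, followed by a group test on the per-subject betas) can be sketched as follows. This is a minimal illustration under stated assumptions: predictors are standardized within subject, the model is fitted by plain Newton-Raphson without regularization, and the group test is a one-sample t test against zero. The function names and the simulated data are hypothetical; the study's exact fitting procedure is not described in this section.

```python
import numpy as np


def fit_logistic(X, y, n_iter=25):
    """Maximum-likelihood logistic regression by Newton-Raphson.

    Returns [intercept, slope_1, ..., slope_k]. No regularization, so it
    assumes the outcomes are not perfectly separable.
    """
    X1 = np.column_stack([np.ones(len(y)), X])
    beta = np.zeros(X1.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X1 @ beta))
        w = p * (1.0 - p)                  # Bernoulli variance per trial
        grad = X1.T @ (y - p)              # score vector
        info = X1.T @ (X1 * w[:, None])    # Fisher information
        beta = beta + np.linalg.solve(info, grad)
    return beta


def group_logistic(subjects):
    """Within-subject logistic regression, then a one-sample t test across subjects.

    subjects: list of (X, y); X = (n_trials, n_predictors), y = 1 remembered / 0 forgotten.
    Returns the mean slope per predictor and its t statistic (n_subjects - 1 df).
    """
    slopes = []
    for X, y in subjects:
        Xz = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize within subject
        slopes.append(fit_logistic(Xz, y)[1:])     # drop the intercept
    B = np.array(slopes)
    n = B.shape[0]
    mean_beta = B.mean(axis=0)
    t = mean_beta / (B.std(axis=0, ddof=1) / np.sqrt(n))
    return mean_beta, t


# Simulated example: 19 subjects (t with 18 df, as in Table 2), 120 trials each.
# Hypothetical predictor columns: encoding activation, retrieval activation, E-R similarity.
rng = np.random.default_rng(0)
subjects = []
for _ in range(19):
    X = rng.standard_normal((120, 3))
    logit = 0.5 * X[:, 1] + 0.5 * X[:, 2]  # only retrieval activation and similarity matter
    y = (rng.random(120) < 1.0 / (1.0 + np.exp(-logit))).astype(float)
    subjects.append((X, y))

mean_beta, t = group_logistic(subjects)
print(np.round(mean_beta, 2), np.round(t, 1))
```

Because all three predictors enter one model, each slope reflects that predictor's contribution with the others held constant, which is what allows similarity and mean activation to be judged as non-redundant.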

Table 2.

Within-subjects logistic regression of memory performance on ROI activity and encoding–retrieval similarity

| Region | Encoding activation, mean beta | t(18) | P | Retrieval activation, mean beta | t(18) | P | Encoding–retrieval similarity, mean beta | t(18) | P |
|---|---|---|---|---|---|---|---|---|---|
| Occipital | | | | | | | | | |
| Calcarine | −0.02 | −0.49 | 0.315 | 0.06 | 2.56 | 0.010 | 0.04 | 1.19 | 0.124 |
| Cuneus | −0.15 | −4.49 | 0.000 | 0.14 | 4.15 | 0.000* | 0.05 | 1.94 | 0.034 |
| Lingual | 0.04 | 1.09 | 0.145 | 0.02 | 0.72 | 0.240 | 0.06 | 2.20 | 0.021 |
| Inf occipital | 0.12 | 2.82 | 0.006 | 0.08 | 2.69 | 0.008 | 0.13 | 3.29 | 0.002 |
| Mid occipital | 0.02 | 0.62 | 0.273 | 0.07 | 2.15 | 0.023 | 0.15 | 4.23 | 0.000* |
| Sup occipital | −0.01 | −0.24 | 0.408 | 0.05 | 1.31 | 0.103 | 0.12 | 4.53 | 0.000* |
| Temporal | | | | | | | | | |
| Fusiform | 0.12 | 2.64 | 0.008 | 0.12 | 4.01 | 0.000* | 0.04 | 0.89 | 0.192 |
| Inf temporal | 0.07 | 2.19 | 0.021 | 0.13 | 5.06 | 0.000* | 0.13 | 4.56 | 0.000* |
| Mid temporal | −0.02 | −0.57 | 0.287 | 0.13 | 4.27 | 0.000* | 0.15 | 4.96 | 0.000* |
| Mid temporal pole | 0.04 | 1.62 | 0.062 | −0.01 | −0.23 | 0.410 | 0.01 | 0.18 | 0.43 |
| Sup temporal pole | 0.04 | 1.65 | 0.058 | −0.01 | −0.66 | 0.257 | 0.01 | 0.51 | 0.308 |
| Sup temporal | −0.13 | −3.33 | 0.002 | 0.05 | 1.97 | 0.032 | 0.06 | 3.35 | 0.002 |
| Frontal | | | | | | | | | |
| Inf frontal opercularis | 0.00 | 0.17 | 0.433 | 0.11 | 2.63 | 0.008 | 0.14 | 5.57 | 0.000* |
| Inf frontal orbitalis | 0.02 | 0.68 | 0.252 | 0.17 | 4.77 | 0.000* | 0.05 | 1.69 | 0.054 |
| Inf frontal triangularis | 0.02 | 0.73 | 0.236 | 0.13 | 3.14 | 0.003 | 0.14 | 4.69 | 0.000* |
| Mid frontal | −0.14 | −3.92 | 0.001 | 0.01 | 0.46 | 0.326 | 0.11 | 3.36 | 0.002 |
| Mid frontal orbital | −0.13 | −3.92 | 0.001 | 0.09 | 2.85 | 0.005 | −0.03 | −0.92 | 0.185 |
| Sup frontal | −0.07 | −2.46 | 0.012 | 0.01 | 0.32 | 0.375 | 0.03 | 0.84 | 0.205 |
| Sup medial frontal | 0.03 | 0.97 | 0.174 | 0.15 | 4.76 | 0.000* | 0.04 | 1.40 | 0.089 |
| Sup frontal orbital | −0.05 | −2.11 | 0.025 | 0.08 | 3.00 | 0.004 | 0.02 | 0.87 | 0.197 |
| Insula | −0.07 | −2.26 | 0.018 | 0.07 | 3.43 | 0.002 | 0.03 | 1.28 | 0.108 |
| Parietal | | | | | | | | | |
| Angular | −0.12 | −4.04 | 0.000 | 0.13 | 4.69 | 0.000* | 0.12 | 3.57 | 0.001* |
| Inf parietal | −0.12 | −3.66 | 0.001 | 0.04 | 0.94 | 0.181 | 0.15 | 4.81 | 0.000* |
| Sup parietal | −0.01 | −0.25 | 0.404 | −0.03 | −0.76 | 0.229 | 0.11 | 3.84 | 0.001* |
| Precuneus | −0.09 | −2.27 | 0.018 | 0.04 | 1.91 | 0.036 | 0.10 | 3.31 | 0.002 |
| Supramarginal | −0.06 | −1.62 | 0.061 | 0.12 | 4.06 | 0.000* | 0.16 | 8.19 | 0.000* |
| MTL | | | | | | | | | |
| Amygdala | 0.07 | 2.44 | 0.016 | 0.08 | 3.28 | 0.002 | 0.01 | 0.41 | 0.344 |
| Hippocampus | 0.07 | 2.29 | 0.017 | 0.12 | 6.07 | 0.000* | 0.00 | −0.06 | 0.476 |
| Parahippocampal gyrus | 0.07 | 2.29 | 0.017 | 0.12 | 4.37 | 0.000* | −0.01 | −0.28 | 0.390 |

Note: Mean beta refers to the logistic regression beta coefficient, averaged across the group.

*Denotes effects significant at the Bonferroni-corrected threshold.

MTL, medial temporal lobe; Inf, inferior; Mid, middle; Sup, superior.

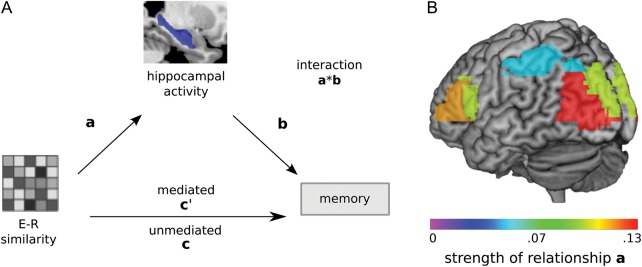

Role of the Hippocampus in Mediating Cortical Similarity

It was hypothesized that if encoding–retrieval pattern similarity reflects the reactivation of the memory trace, then its relationship with memory performance should be mediated by hippocampal activity at retrieval (Fig. 4A). Indeed, hippocampal activity was a significant statistical mediator of the relationship between encoding–retrieval similarity and memory, P < 0.0063, for occipital, inferior frontal, and inferior parietal cortices (Fig. 4B; Table 3). After accounting for the influence of the hippocampus, the relationship between encoding–retrieval similarity and memory remained significant for each of these regions, providing evidence for partial mediation. There was also evidence that encoding–retrieval similarity in the occipital ROIs mediates the relationship between hippocampal activity and memory. These findings speak to the dynamic influences of hippocampal activity and cortical pattern similarity at retrieval on each other and on memory. Finally, for inferior frontal and occipital cortices, the correlation between pattern similarity and hippocampal activity at retrieval was significant across trials even when restricted to remembered items only, P < 0.0063. Correlations did not differ between remembered and forgotten items, P > 0.05. Taken together, these results indicate that hippocampal activity is associated with greater fidelity between neocortical representations at encoding and retrieval, and that this relationship may facilitate successful memory performance.
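The path coefficients reported in Table 3 (a, b, c′, c, and a × b) follow the standard product-of-coefficients mediation scheme. The sketch below computes them for a single subject, assuming a linear regression for the X → M path and logistic regressions for the binary memory outcome, with X and M standardized. The function names and the simulated trials are hypothetical, and the sketch omits group-level inference and any bootstrap test of a × b; the reference list cites Atlas et al. (2010), whose multilevel framework may be relevant, but the study's exact procedure is not stated in this section.

```python
import numpy as np


def logistic_slopes(X, y, n_iter=25):
    """Newton-Raphson logistic regression; returns slopes only (intercept dropped)."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta = np.zeros(X1.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X1 @ beta))
        info = X1.T @ (X1 * (p * (1.0 - p))[:, None])
        beta = beta + np.linalg.solve(info, X1.T @ (y - p))
    return beta[1:]


def mediation_paths(x, m, y):
    """Product-of-coefficients mediation for one subject: X -> M -> Y, binary Y.

    x : per-trial predictor (e.g. E-R similarity in a cortical ROI)
    m : per-trial mediator (e.g. hippocampal activity at retrieval)
    y : per-trial outcome, 1 = remembered, 0 = forgotten
    """
    x = (x - x.mean()) / x.std()
    m = (m - m.mean()) / m.std()
    a = np.polyfit(x, m, 1)[0]                                # X -> M (linear slope)
    c_prime, b = logistic_slopes(np.column_stack([x, m]), y)  # Y ~ X + M
    c = logistic_slopes(x[:, None], y)[0]                     # Y ~ X (total effect)
    return {"a": a, "b": b, "c_prime": c_prime, "c": c, "ab": a * b}


# Simulated trials in which X influences Y partly through M (partial mediation).
rng = np.random.default_rng(0)
n = 2000
x = rng.standard_normal(n)
m = 0.5 * x + rng.standard_normal(n)
y = (rng.random(n) < 1.0 / (1.0 + np.exp(-(0.3 * x + 0.8 * m)))).astype(float)
paths = mediation_paths(x, m, y)
print({k: round(float(v), 3) for k, v in paths.items()})
```

Partial mediation shows up as a positive a × b together with a direct effect c′ that shrinks relative to c but stays above zero. With a logistic outcome, c is only approximately c′ + a × b, because logistic coefficients are rescaled when a predictor is added to the model.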

Figure 4.

Hippocampal mediation of the link between cortical similarity and memory expression. Mediation analysis tested the hypothesis that the hippocampus mediates the relationship between encoding–retrieval cortical similarity and memory success (A). Among regions showing memory-modulated encoding–retrieval similarity effects, the influence of encoding–retrieval similarity in inferior frontal, inferior parietal, and occipital ROIs on memory was significantly mediated by the hippocampus (B). Most of these regions were marked by a significant correlation between encoding–retrieval cortical similarity and hippocampal activation at retrieval, across remembered items; color-coding reflects the strength of this relationship. E–R, encoding–retrieval.

Table 3.

Mediation analysis linking encoding–retrieval similarity to memory

Columns a through a × b report mean beta coefficients; the final two columns report the mean X–M correlation for remembered (Rem) and forgotten (Forg) trials.

| Model and region | X → M (a) | M → Y (b) | Direct X → Y, controlling for M (c′) | Total X → Y (c) | Mediation term (a × b) | X–M correlation, Rem | X–M correlation, Forg |
|---|---|---|---|---|---|---|---|
| E–R similarity (X) to memory (Y), mediated by left HC activity at retrieval (M) | | | | | | | |
| Mid occipital | 0.104* | 0.115* | 0.091* | 0.102* | 0.009* | 0.132* | 0.069* |
| Sup occipital | 0.089* | 0.119* | 0.064* | 0.073* | 0.008* | 0.100* | 0.084* |
| Inf temporal | 0.049 | 0.120* | 0.054* | 0.058* | 0.004 | 0.051 | 0.050 |
| Mid temporal | 0.022 | 0.122* | 0.073* | 0.074* | 0.002 | 0.031 | 0.005 |
| Inf frontal opercularis | 0.087* | 0.117* | 0.061* | 0.070* | 0.007* | 0.099* | 0.065* |
| Inf frontal triangularis | 0.090* | 0.114* | 0.077* | 0.086* | 0.007* | 0.111* | 0.064* |
| Inf parietal | 0.040* | 0.122* | 0.082* | 0.085* | 0.004* | 0.047 | 0.011 |
| Supramarginal | −0.005 | 0.123* | 0.070* | 0.069* | −0.010 | 0.003 | −0.033 |
| Left HC activity at retrieval (X) to memory (Y), mediated by E–R similarity (M) | | | | | | | |
| Mid occipital | 0.110* | 0.091* | 0.115* | 0.124* | 0.005* | — | — |
| Sup occipital | 0.093* | 0.064* | 0.119* | 0.124* | 0.003* | — | — |
| Inf temporal | 0.051 | 0.054* | 0.120* | 0.123* | 0.000 | — | — |
| Mid temporal | 0.023 | 0.073* | 0.122* | 0.123* | 0.000 | — | — |
| Inf frontal opercularis | 0.091* | 0.061* | 0.117* | 0.124* | 0.003 | — | — |
| Inf frontal triangularis | 0.095* | 0.077* | 0.114* | 0.123* | 0.005 | — | — |
| Inf parietal | 0.040* | 0.082* | 0.122* | 0.123* | 0.001 | — | — |
| Supramarginal | −0.005 | 0.070* | 0.123* | 0.123* | 0.000 | — | — |
| E–R similarity (X) to memory (Y), mediated by right amygdala activity at retrieval (M) | | | | | | | |
| Mid occipital | 0.044 | 0.036* | 0.103* | 0.103* | 0.000 | 0.070 | 0.046 |
| Right amygdala activity at retrieval (X) to memory (Y), mediated by E–R similarity (M) | | | | | | | |
| Mid occipital | 0.046 | 0.103* | 0.036* | 0.039* | 0.002 | — | — |

*Denotes effects significant at the Bonferroni-corrected threshold.

Inf, inferior; Mid, middle; Sup, superior; rem, remembered; forg, forgotten; E–R, encoding–retrieval; HC, hippocampus.

Emotional Modulation of Cortical Similarity

Emotion globally increased the similarity of encoding and retrieval patterns (main effect of emotion, P < 0.0026) in many regions, including the occipital, inferior and middle temporal, and supramarginal gyri (Table 4): encoding–retrieval similarity was greater for negative and positive than for neutral items, consistent with an influence of arousal. Emotion also affected the difference in similarity between item- and set-level match pairs in middle occipital gyrus (interaction of emotion and match, F(2,36) = 6.31, P = 0.004; Fig. 5A,B). The relationship between memory and encoding–retrieval similarity was consistent across emotion types. The middle occipital region was further explored via functional connectivity and mediation analyses linking amygdala activity at retrieval to encoding–retrieval similarity. The correlation between amygdala activity and encoding–retrieval similarity in middle occipital gyrus showed a significant emotion by memory interaction, F(2,32) = 4.10, P = 0.026 (Fig. 5C). This interaction reflected stronger correlations for remembered than forgotten trials among negative items, t(16) = 2.07, P = 0.027, 1-tailed, but not among positive or neutral items, Ps > 0.05. Mediation analysis showed that amygdala activity did not mediate the relationship between encoding–retrieval similarity and memory, or vice versa (Table 3), suggesting that the hippocampus remains the primary link between cortical similarity and memory. Altogether, these findings suggest that emotional arousal is associated with heightened encoding–retrieval similarity, particularly in posterior cortical regions, and that the recapitulation of negative information tends to engage the amygdala during successful retrieval.
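The two F statistics quoted in the paragraph above both have 2 numerator degrees of freedom, so their P values can be checked by hand: for F(2, d2), the upper-tail probability has the closed form P(F > f) = (1 + 2f/d2)^(−d2/2). The sketch below applies that identity; the function name is illustrative, and it recomputes only conventional ANOVA P values from the reported statistics.

```python
def f_pvalue_df1_2(f, df2):
    """Upper-tail P value of an F(2, df2) statistic.

    With 2 numerator degrees of freedom the F survival function reduces to
    (1 + 2f/df2) ** (-df2/2), so no special functions are needed.
    """
    if f < 0 or df2 <= 0:
        raise ValueError("f must be >= 0 and df2 > 0")
    return (1.0 + 2.0 * f / df2) ** (-df2 / 2.0)


print(round(f_pvalue_df1_2(6.31, 36), 3))  # emotion x match, middle occipital gyrus: 0.004
print(round(f_pvalue_df1_2(4.10, 32), 3))  # emotion x memory, amygdala-MOG correlation: 0.026
```

For other numerator degrees of freedom no such shortcut exists; `scipy.stats.f.sf(f, dfn, dfd)` gives the general result and agrees with this formula when `dfn = 2`.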

Table 4.

Regions showing emotional modulation of encoding–retrieval similarity

| Effect (match × memory × emotion ANOVA) | Region | F(2,36) | P |
|---|---|---|---|
| Main effect of emotion | Mid occipital | 19.45 | 0.000* |
| | Inf temporal | 17.61 | 0.000* |
| | Inf occipital | 16.46 | 0.000* |
| | Angular | 15.38 | 0.000* |
| | Inf parietal | 12.43 | 0.000* |
| | Supramarginal | 9.05 | 0.000* |
| | Mid temporal | 8.29 | 0.001* |
| | Sup occipital | 6.51 | 0.002* |
| | Sup temporal | 5.11 | 0.006 |
| | Sup parietal | 4.95 | 0.006 |
| | Inf frontal opercularis | 4.92 | 0.006 |
| | Fusiform | 4.26 | 0.011 |
| | Sup frontal | 4.13 | 0.012 |
| | Inf frontal triangularis | 3.88 | 0.015 |
| Emotion × match interaction | Mid occipital | 6.31 | 0.002* |
| | Fusiform | 3.41 | 0.022 |
| Emotion × memory interaction | Inf frontal opercularis | 3.85 | 0.015 |
| Emotion × match × memory interaction | Fusiform | 3.52 | 0.020 |

Note: Regions showing marginal effects (P < 0.025) are also shown. The main effects and interactions not involving emotion are presented separately in Table 1.

*Denotes effects significant at the Bonferroni-corrected threshold.

Inf, inferior; Mid, middle; Sup, superior.

Figure 5.

Influence of emotion on encoding–retrieval similarity and amygdala responses during retrieval. Emotion significantly enhanced overall encoding–retrieval similarity effects in a number of posterior regions and specifically enhanced item-level effects within the middle occipital gyrus (A). The middle occipital gyrus showed greater encoding–retrieval similarity for negative and positive relative to neutral trials (B). Encoding–retrieval similarity in middle occipital gyrus was additionally correlated across trials with retrieval activity in the amygdala; this correlation was modulated by memory success for negative but not positive or neutral trials (C). Error bars denote standard error of the mean. Neg, negative; pos, positive; neut, neutral; MOG, middle occipital gyrus.

Discussion

These results provide novel evidence that successful memory is associated with a closer match between encoding and retrieval at the level of individual items. Furthermore, hippocampal involvement during retrieval partially mediates this relationship on a trial-to-trial basis, lending empirical support to theories positing dynamic interactions between the hippocampus and neocortex during retrieval. These findings generalize to memories associated with emotional arousal, which magnifies the similarity between encoding and retrieval patterns.

Neural Evidence for Encoding–Retrieval Similarity

Prior reports have highlighted the reactivation of set-level information from the initial learning episode during memory retrieval, marked by spatial overlap between task-related hemodynamic responses at each phase (reviewed by Rugg et al. 2008; Danker and Anderson 2010). Multivariate pattern analysis methods have advanced these findings by demonstrating that neural network classifiers, trained to associate experimental conditions with hemodynamic patterns from encoding, can generate predictions about which type of information is reactivated at retrieval (reviewed by Rissman and Wagner 2012), including patterns associated with the memorandum's category (Polyn et al. 2005), its encoding task (Johnson et al. 2009), or its paired associate (Kuhl et al. 2011). Importantly, despite these methodological improvements, evidence thus far has been limited to broad set-level distinctions tied to the encoding manipulation. Overlaps between encoding and retrieval will most benefit discrimination between old and new items when they carry information specific to individual stimuli.

The present study advances this line of research by providing evidence that the similarity between encoding and retrieval operations can be tracked at the level of individual items. For some regions, particularly in occipitotemporal cortices, encoding–retrieval similarity is most affected by memory success when trials are matched at the level of individual items. This interaction provides direct support for the principle of encoding–retrieval match, in that increased similarity is associated with superior memory performance. Item similarity may reflect cognitive and perceptual operations particular to the individual stimulus that occur during both encoding and retrieval, or the reactivation of processes or information associated with initial encoding. Both forms of information may serve to induce brain states at retrieval that more closely resemble those of their encoding counterparts. Unlike the measures used in previous reports, item-level similarity is not constrained by category or task and is therefore well suited to capturing encoding–retrieval match for each individual participant and trial. In many regions spanning frontal, parietal, and occipital cortices, encoding–retrieval similarity predicted memory success when mean activation did not, suggesting that in these regions, pattern effects may be more informative than univariate estimates of activation.

Recent investigations using pattern similarity analysis have related variation across encoding trials to memory success, exploiting the ability of similarity measures to tap into the representational structure underlying neural activity (Kriegeskorte 2008). Jenkins and Ranganath (2010) used pattern distance to link contextual shifts during encoding to successful memory formation. Another set of experiments demonstrated that increased similarity between encoding repetitions, calculated separately for each individual stimulus, predicts memory success, suggesting that memories benefit from consistency across learning trials (Xue et al. 2010). In contrast, the present study focuses on the similarity between the processing of the same stimuli at 2 separate phases of memory, namely, the overlap between encoding and explicit recognition processes. Because a different task is required at each phase, the similarity between encoding and retrieval trials likely arises either from perceptual attributes, which are common to remembered and forgotten trials, or from information that aids or arises from mnemonic recovery, which differs between remembered and forgotten trials. Thus, any differences between remembered and forgotten items in the item-level pairs should reflect item-specific processes that benefit memory or the reactivation of encoding-related information during the recognition period.

Hippocampal–Cortical Interactions During Successful Memory Retrieval

Because pattern similarity measures yield item-specific estimates of encoding–retrieval overlap, they offer new opportunities to investigate the role of the hippocampus in supporting neocortical memory representations. Novel analyses relating trial-by-trial fluctuations in cortical pattern similarity to hippocampal retrieval responses revealed that the hippocampus partially mediates the link between encoding–retrieval similarity and retrieval success across a number of neocortical ROIs. Many theories of memory have posited dynamic interactions between the hippocampus and neocortex during episodic retrieval (Alvarez and Squire 1994; McClelland et al. 1995; Sutherland and McNaughton 2000; Norman and O'Reilly 2003), such that hippocampal activation may be triggered by the overlap between encoding and retrieval representations or may itself promote neocortical pattern completion processes. The mediation models tested here suggest some combination of both mechanisms. However, evidence for the hippocampal mediation account tended to be stronger and distributed across more regions, possibly stemming from our use of a recognition design. Interestingly, in our mediation models, hippocampal activation did not fully account for the link between encoding–retrieval similarity and memory, implying that both hippocampal and neocortical representations are related to memory retrieval (McClelland et al. 1995; Wiltgen et al. 2004).

Implications of Emotional Modulation of Cortical Pattern Similarity

Emotion is known to modulate factors relevant to encoding–retrieval similarity, such as perceptual encoding (Dolan and Vuilleumier 2003) and memory consolidation (McGaugh 2004), in addition to enhancing hippocampal responses during both encoding (Murty et al. 2010) and retrieval (Buchanan 2007). However, its influence on encoding–retrieval match has never before been investigated. Here, we present novel evidence that, across all trials, emotion heightens the similarity between encoding and retrieval patterns in several regions in the occipital, temporal, and parietal cortices. The relationship between encoding–retrieval similarity and memory was consistent across levels of emotion, suggesting that memory-related pattern similarity is affected by emotion in an additive rather than interactive way. One possible explanation for these findings is that emotion might generally increase the likelihood or strength of hippocampal–cortical interactions that contribute to both consolidation and retrieval (Carr et al. 2011). Another possible explanation is that emotion might facilitate perceptual processing during both encoding and retrieval, thus resulting in heightened similarity between the encoding and retrieval of emotional items, particularly among regions devoted to visual perception. Consistent with the latter interpretation, the amygdala appears to modulate emotional scene processing in extrastriate regions including middle occipital gyrus but not in early visual areas like the calcarine sulcus (Sabatinelli et al. 2009).

Functional connectivity analyses revealed that neural similarity in the occipital cortex correlated with amygdala activity during successfully retrieved negative trials. Negative memories are thought to be particularly sensitive to perceptual information (reviewed by Kensinger 2009), and enhanced memory for negative visual details has been tied to the amygdala and its functional connectivity with late visual regions (Kensinger et al. 2007). Increased reliance on perceptual information, along with observed memory advantages for negative relative to positive stimuli, may account for these valence-specific effects. Unlike the hippocampus, however, the amygdala did not mediate the link between similarity and memory, suggesting that amygdala responses during rapid scene recognition may be driven by the recovery and processing of item-specific details. These observations stand in contrast to results obtained in a more effortful cued recall paradigm, in which amygdala activation appeared to play a more active role in the successful recovery of autobiographical memories (Daselaar et al. 2008). In the present study, the hippocampus remained the primary route by which encoding–retrieval similarity predicted memory performance, for both emotional and neutral trials.

Future Directions

The present study used a recognition task during the retrieval phase, which has the benefit of matching perceptual information and thus encouraging maximal processing overlap between encoding and retrieval. Through this approach, we demonstrated that encoding–retrieval similarity varies with memory success and is related to hippocampal activation, consistent with the positive influence of encoding–retrieval overlap or transfer-appropriate processing. However, it is worth noting that considerable task differences and the collection of data across multiple sessions may have limited the sensitivity of the current design to event similarities. Although we are encouraged by the results reported here, future experiments using closely matched tasks might be better suited to uncovering subtle changes in pattern similarity, for example, as a function of stimulus type. Furthermore, the use of a recognition design limits our ability to ascertain the direction of hippocampal–cortical interactions, in that processing overlaps in cortical regions might trigger hippocampal activation or vice versa. Another approach would be to measure cognitive and neural responses reinstated in the absence of supporting information, as in a cued- or free-recall design. This control would ensure that the similarity between encoding and retrieval patterns would be driven by information arising from hippocampal memory responses, rather than being inflated by perceptual similarities. This test would be an important step toward determining whether hippocampal pattern completion processes can drive cortical similarities between encoding and retrieval.

Conclusions

In conclusion, these data provide novel evidence that episodic memory success tracks item-specific fluctuations in encoding–retrieval match, as measured by the patterns of hemodynamic activity across the neocortex. Importantly, this relationship is mediated by the hippocampus, providing critical evidence for hippocampal–neocortical interactions during the reactivation of memories at retrieval. We also report new evidence linking emotion to enhancements in encoding–retrieval similarity, a mechanism that may bridge understanding of how emotion impacts encoding, consolidation, and retrieval processes. Altogether, these findings speak to the promise of pattern similarity measures for evaluating the integrity of episodic memory traces and for measuring communication between the medial temporal lobes and distributed neocortical representations.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/.

Funding

This work was supported by National Institutes of Health grants #NS41328 (R.C. and K.S.L.) and #AG19731 (R.C.). M.R. was supported by National Research Service Award #F31MH085384.


Notes

We thank Alison Adcock, Vishnu Murty, Charan Ranganath, and Christina L. Williams for helpful suggestions. Conflict of Interest: None declared.

References

- Alvarez P, Squire LR. Memory consolidation and the medial temporal lobe: a simple network model. Proc Natl Acad Sci. 1994;91:7041–7045. doi: 10.1073/pnas.91.15.7041. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Atlas LY, Bolger N, Lindquist MA, Wager TD. Brain mediators of predictive cue effects on perceived pain. J Neurosci. 2010;30:12964–12977. doi: 10.1523/JNEUROSCI.0057-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bonnici HM, Kumaran D, Chadwick MJ, Weiskopf N, Hassabis D, Maguire EA. Decoding representations of scenes in the medial temporal lobes. Hippocampus. 2011;22:1143–1153. doi: 10.1002/hipo.20960. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bower GH. Stimulus-sampling theory of encoding variability. In: Melton AW, Martin E, editors. Coding processes in human memory. Washington (DC): V.H. Winston & Sons; 1972. pp. 85–123. [Google Scholar]

- Buchanan T. Retrieval of emotional memories. Psychol Bull. 2007;133:761–779. doi: 10.1037/0033-2909.133.5.761. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carr MF, Jadhav SP, Frank LM. Hippocampal replay in the awake state: a potential substrate for memory consolidation and retrieval. Nat Neurosci. 2011;14:147–153. doi: 10.1038/nn.2732. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Danker JF, Anderson JR. The ghosts of brain states past: remembering reactivates the brain regions engaged during encoding. Psychol Bull. 2010;136:87–102. doi: 10.1037/a0017937. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Daselaar SM, Fleck MS, Cabeza R. Triple dissociation in the medial temporal lobes: recollection, familiarity, and novelty. J Neurophysiol. 2006;96:1902–1911. doi: 10.1152/jn.01029.2005. [DOI] [PubMed] [Google Scholar]

- Daselaar SM, Rice HJ, Greenberg DL, Cabeza R, LaBar KS, Rubin DC. The spatiotemporal dynamics of autobiographical memory: neural correlates of recall, emotional intensity, and reliving. Cereb Cortex. 2008;18:217–229. doi: 10.1093/cercor/bhm048. [DOI] [PubMed] [Google Scholar]

- Dolan RJ, Vuilleumier P. Amygdala automaticity in emotional processing. Ann N Y Acad Sci. 2003;985:348–355. doi: 10.1111/j.1749-6632.2003.tb07093.x. [DOI] [PubMed] [Google Scholar]

- Dupret D, O'Neill J, Pleydell-Bouverie B, Csicsvari J. The reorganization and reactivation of hippocampal maps predict spatial memory performance. Nat Neurosci. 2010;13:995–1002. doi: 10.1038/nn.2599. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hofstetter C, Achaibou A, Vuilleumier P. Reactivation of visual cortex during memory retrieval: content specificity and emotional modulation. NeuroImage. 2012;60:1734–1745. doi: 10.1016/j.neuroimage.2012.01.110. [DOI] [PubMed] [Google Scholar]

- Jenkins LJ, Ranganath C. Prefrontal and medial temporal lobe activity at encoding predicts temporal context memory. J Neurosci. 2010;30:15558–15565. doi: 10.1523/JNEUROSCI.1337-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ji D, Wilson MA. Coordinated memory replay in the visual cortex and hippocampus during sleep. Nat Neurosci. 2007;10:100–107. doi: 10.1038/nn1825. [DOI] [PubMed] [Google Scholar]

- Johnson J, McDuff S, Rugg M, Norman K. Recollection, familiarity, and cortical reinstatement: a multivoxel pattern analysis. Neuron. 2009;63:697–708. doi: 10.1016/j.neuron.2009.08.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kay KN, Naselaris T, Prenger RJ, Gallant JL. Identifying natural images from human brain activity. Nature. 2008;452:352–355. doi: 10.1038/nature06713. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kensinger EA. Remembering the details: effects of emotion. Emotion Rev. 2009;1:99–113. doi: 10.1177/1754073908100432. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kensinger EA, Garoff-Eaton RJ, Schacter DL. How negative emotion enhances the visual specificity of a memory. J Cogn Neurosci. 2007;19:1872–1887. doi: 10.1162/jocn.2007.19.11.1872. [DOI] [PubMed] [Google Scholar]

- Kriegeskorte N. Representational similarity analysis – connecting the branches of systems neuroscience. Front Syst Neurosci. 2008;2:1–28. doi: 10.3389/neuro.06.004.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kuhl B, Rissman J, Chun MM, Wagner AD. Fidelity of neural reactivation reveals competition between memories. Proc Natl Acad Sci. 2011;108:5903–5908. doi: 10.1073/pnas.1016939108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- LaBar KS, Cabeza R. Cognitive neuroscience of emotional memory. Nat Rev Neurosci. 2006;7:54–64. doi: 10.1038/nrn1825. [DOI] [PubMed] [Google Scholar]

- Lang PJ, Bradley MM, Cuthbert BN. Technical report A-5. Gainesville (FL): The Center for Research in Psychophysiology, University of Florida; 2001. International affective picture system (IAPS): instruction manual and affective ratings. [Google Scholar]

- Maldjian JA, Laurienti PJ, Kraft RA, Burdette JH. An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. NeuroImage. 2003;19:1233–1239. doi: 10.1016/s1053-8119(03)00169-1. [DOI] [PubMed] [Google Scholar]

- McClelland JL, McNaughton BL, O'Reilly RC. Why there are complementary learning systems in the hippocampus and neocortex: insights from the successes and failures of connectionist models of learning and memory. Psychol Rev. 1995;102:419–457. doi: 10.1037/0033-295X.102.3.419. [DOI] [PubMed] [Google Scholar]

- McGaugh JL. The amygdala modulates the consolidation of memories of emotionally arousing experiences. Ann Rev Neurosci. 2004;27:1–28. doi: 10.1146/annurev.neuro.27.070203.144157. [DOI] [PubMed] [Google Scholar]

- Morris CD, Bransford JD, Franks JJ. Levels of processing versus transfer appropriate processing. J Verbal Learning Verbal Behav. 1977;16:519–533. [Google Scholar]

- Murty V, Ritchey M, Adcock RA, LaBar KS. fMRI studies of successful emotional memory encoding: a quantitative meta-analysis. Neuropsychologia. 2010;48:3459–3469. doi: 10.1016/j.neuropsychologia.2010.07.030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nadel L, Samsonovich A, Ryan L, Moscovitch M. Multiple trace theory of human memory: computational, neuroimaging, and neuropsychological results. Hippocampus. 2000;10:352–368. doi: 10.1002/1098-1063(2000)10:4<352::AID-HIPO2>3.0.CO;2-D. [DOI] [PubMed] [Google Scholar]

- Norman KA, O'Reilly RC. Modeling hippocampal and neocortical contributions to recognition memory: a complementary-learning-systems approach. Psychol Rev. 2003;110:611–646. doi: 10.1037/0033-295X.110.4.611. [DOI] [PubMed] [Google Scholar]

- Nyberg L, Habib R, McIntosh AR, Tulving E. Reactivation of encoding-related brain activity during memory retrieval. Proc Natl Acad Sci. 2000;97:11120–11124. doi: 10.1073/pnas.97.20.11120. [DOI] [PMC free article] [PubMed] [Google Scholar]

- O'Neill J, Pleydell-Bouverie B, Dupret D, Csicsvari J. Play it again: reactivation of waking experience and memory. Trends Neurosci. 2010;33:220–229. doi: 10.1016/j.tins.2010.01.006.

- Pennartz CMA, Lee E, Verheul J, Lipa P, Barnes CA, McNaughton BL. The ventral striatum in off-line processing: ensemble reactivation during sleep and modulation by hippocampal ripples. J Neurosci. 2004;24:6446–6456. doi: 10.1523/JNEUROSCI.0575-04.2004.

- Polyn SM, Natu VS, Cohen JD, Norman KA. Category-specific cortical activity precedes retrieval during memory search. Science. 2005;310:1963–1966. doi: 10.1126/science.1117645.

- Rissman J, Gazzaley A, D'Esposito M. Measuring functional connectivity during distinct stages of a cognitive task. NeuroImage. 2004;23:752–763. doi: 10.1016/j.neuroimage.2004.06.035.

- Rissman J, Wagner AD. Distributed representations in memory: insights from functional brain imaging. Ann Rev Psychol. 2012;63:101–128. doi: 10.1146/annurev-psych-120710-100344.

- Ritchey M, LaBar KS, Cabeza R. Level of processing modulates the neural correlates of emotional memory formation. J Cogn Neurosci. 2011;23:757–771. doi: 10.1162/jocn.2010.21487.

- Rugg MD, Johnson JD, Park H, Uncapher MR. Encoding-retrieval overlap in human episodic memory: a functional neuroimaging perspective. Progress in Brain Research. 2008;169:339–352. doi: 10.1016/S0079-6123(07)00021-0.

- Sabatinelli D, Lang PJ, Bradley MM, Costa VD, Keil A. The timing of emotional discrimination in human amygdala and ventral visual cortex. J Neurosci. 2009;29:14864–14868. doi: 10.1523/JNEUROSCI.3278-09.2009.

- Spaniol J, Davidson PS, Kim AS, Han H, Moscovitch M, Grady CL. Event-related fMRI studies of episodic encoding and retrieval: meta-analyses using activation likelihood estimation. Neuropsychologia. 2009;47:1765–1779. doi: 10.1016/j.neuropsychologia.2009.02.028.

- Sutherland GR, McNaughton B. Memory trace reactivation in hippocampal and neocortical neuronal ensembles. Curr Opin Neurobiol. 2000;10:180–186. doi: 10.1016/s0959-4388(00)00079-9.

- Tulving E, Thomson DM. Encoding specificity and retrieval processes in episodic memory. Psychol Rev. 1973;80:352–373.

- Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. NeuroImage. 2002;15:273–289. doi: 10.1006/nimg.2001.0978.

- Wager TD, Waugh CE, Lindquist M, Noll DC, Fredrickson BL, Taylor SF. Brain mediators of cardiovascular responses to social threat: Part I: reciprocal dorsal and ventral sub-regions of the medial prefrontal cortex and heart-rate reactivity. NeuroImage. 2009;47:821–835. doi: 10.1016/j.neuroimage.2009.05.043.

- Wheeler ME, Petersen SE, Buckner RL. Memory's echo: vivid remembering reactivates sensory-specific cortex. Proc Natl Acad Sci. 2000;97:11125–11129. doi: 10.1073/pnas.97.20.11125.

- Wiltgen BJ, Brown RAM, Talton LE, Silva AJ. New circuits for old memories: the role of the neocortex in consolidation. Neuron. 2004;44:101–108. doi: 10.1016/j.neuron.2004.09.015.

- Xue G, Dong Q, Chen C, Lu Z, Mumford JA, Poldrack RA. Greater neural pattern similarity across repetitions is associated with better memory. Science. 2010;330:97–101. doi: 10.1126/science.1193125.
