Abstract

Experimental data from neuroscience suggest that a substantial amount of knowledge is stored in the brain in the form of probability distributions over network states and trajectories of network states. We provide a theoretical foundation for this hypothesis by showing that even very detailed models for cortical microcircuits, with data-based diverse nonlinear neurons and synapses, have a stationary distribution of network states and trajectories of network states to which they converge exponentially fast from any initial state. We demonstrate that this convergence holds in spite of the non-reversibility of the stochastic dynamics of cortical microcircuits. We further show that, in the presence of background network oscillations, separate stationary distributions emerge for different phases of the oscillation, in accordance with experimentally reported phase-specific codes. We complement these theoretical results by computer simulations that investigate resulting computation times for typical probabilistic inference tasks on these internally stored distributions, such as marginalization or marginal maximum-a-posteriori estimation. Furthermore, we show that the inherent stochastic dynamics of generic cortical microcircuits enables them to quickly generate approximate solutions to difficult constraint satisfaction problems, where stored knowledge and current inputs jointly constrain possible solutions. This provides a powerful new computing paradigm for networks of spiking neurons, that also throws new light on how networks of neurons in the brain could carry out complex computational tasks such as prediction, imagination, memory recall and problem solving.

Author Summary

The brain not only has the capability to process sensory input, but can also produce predictions and imaginations, and solve problems that combine learned knowledge with information about a new scenario. But although these more complex information processing capabilities lie at the heart of human intelligence, we still do not know how they are organized and implemented in the brain. Numerous studies in cognitive science and neuroscience conclude that many of these processes involve probabilistic inference. This suggests that neuronal circuits in the brain process information in the form of probability distributions, but we are missing insight into how complex distributions could be represented and stored in large and diverse networks of neurons in the brain. We prove in this article that realistic cortical microcircuit models can store complex probabilistic knowledge by embodying probability distributions in their inherent stochastic dynamics, yielding a knowledge representation in which typical probabilistic inference problems such as marginalization become straightforward readout tasks. We show that in cortical microcircuit models such computations can be performed satisfactorily within a few 100 ms. Furthermore, we demonstrate how internally stored distributions can be programmed in a simple manner to endow a neural circuit with powerful problem solving capabilities.

Introduction

The question whether brain computations are inherently deterministic or inherently stochastic is obviously of fundamental importance. Numerous experimental data highlight inherently stochastic aspects of neurons, synapses and networks of neurons on virtually all spatial and temporal scales that have been examined [1]–[5]. A clearly visible stochastic feature of brain activity is the trial-to-trial variability of neuronal responses, which also appears on virtually every spatial and temporal scale that has been examined [2]. This variability has often been interpreted as a side effect of an implementation of inherently deterministic computing paradigms with noisy elements, and attempts have been made to show that the observed noise can be eliminated through spatial or temporal averaging. However, more recent experimental methods, which make it possible to record simultaneously from many neurons (or from many voxels in fMRI), have shown that the underlying probability distributions of network states during spontaneous activity are highly structured and multimodal, with distinct modes that resemble those encountered during active processing. This has been shown through recordings with voltage-sensitive dyes starting with [6], [7], multi-electrode arrays [8], and fMRI [9], [10]. It was also shown that the intrinsic trial-to-trial variability of brain systems is intimately related to the observed trial-to-trial variability in behavior (see e.g. [11]). Furthermore, in [12] it was shown that during navigation in a complex environment where two spatial frames of reference were simultaneously relevant, the firing of neurons in area CA1 represented both frames in alternation, so that coactive neurons tended to relate to a common frame of reference. In addition it has been shown that in a situation where sensory stimuli are ambiguous, large brain networks switch stochastically between alternative interpretations or percepts, see [13]–[15]. 
Furthermore, an increase in the volatility of network states has been shown to accompany episodes of behavioral uncertainty [16]. All these experimental data point to inherently stochastic aspects in the organization of brain computations, and more specifically to an important computational role of spontaneously varying network states of smaller and larger networks of neurons in the brain. However, one should realize that the approach to stochastic computation that we examine in this article does not postulate that all brain activity is stochastic or unreliable, since reliable neural responses can be represented by probabilities close to 1.

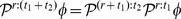

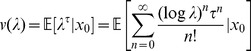

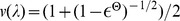

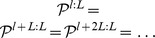

The goal of this article is to provide a theoretical foundation for understanding stochastic computations in networks of neurons in the brain, in particular also for the generation of structured spontaneous activity. To this end, we prove here that even biologically realistic models $\mathcal{C}$ for networks of neurons in the brain have, for a suitable definition of network state, a unique stationary distribution $p_C$ of network states. Previous work had focused in this context on neuronal models with linear sub-threshold dynamics [17], [18] and constant external input (e.g. constant input firing rates). However, we show here that this holds even for quite realistic models that reflect, for example, data on nonlinear dendritic integration (dendritic spikes), synapses with data-based short term dynamics (i.e., individual mixtures of depression and facilitation), and different types of neurons on specific laminae. We also show that these results are not restricted to the case of constant external input, but rather can be extended to periodically changing input, and to input generated by arbitrary ergodic stochastic processes.

Our theoretical results imply that virtually any data-based model $\mathcal{C}$ for networks of neurons featuring realistic neuronal noise sources (e.g. stochastic synaptic vesicle release) implements a Markov process through its stochastic dynamics. This can be interpreted, in spite of its non-reversibility, as a form of sampling from a unique stationary distribution $p_C$. One interpretation of $p_C$, which is in principle consistent with our findings, is that it represents the posterior distribution of a Bayesian inference operation [19]–[22], in which the current input (evidence) is combined with prior knowledge encoded in network parameters such as synaptic weights or intrinsic excitabilities of neurons (see [23]–[26] for an introduction to the “Bayesian brain”). This interpretation of neural dynamics as sampling from a posterior distribution is intriguing, as it implies that various results of probabilistic inference could then be easily obtained by a simple readout mechanism: For example, posterior marginal probabilities can be estimated (approximately) by observing the number of spikes of specific neurons within some time window (see related data from parietal cortex [27]). Furthermore, an approximate maximum a posteriori (MAP) inference can be carried out by observing which network states occur more often, and/or are more persistent.
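The two readouts described above can be sketched in a few lines. This is a minimal illustration under simplifying assumptions, not the readout used in the simulations of this article: network states are assumed to be given as binary tuples (one entry per neuron, one tuple per time bin), and the function names and the sample data are hypothetical.

```python
from collections import Counter

def marginal_firing_probabilities(samples):
    """Estimate, for each neuron, the fraction of sampled states in which it is active."""
    n_neurons = len(samples[0])
    return [sum(state[k] for state in samples) / len(samples)
            for k in range(n_neurons)]

def most_frequent_state(samples):
    """Approximate MAP readout: return the network state that occurs most often."""
    return Counter(samples).most_common(1)[0][0]

# Hypothetical binary network states of three neurons, one tuple per time bin.
samples = [(1, 0, 1), (1, 0, 1), (0, 0, 1), (1, 1, 0), (1, 0, 1), (0, 0, 1)]

marginals = marginal_firing_probabilities(samples)
map_state = most_frequent_state(samples)
```

Both readouts only count occurrences in the observed activity; neither requires explicit knowledge of the underlying distribution.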

A crucial issue which arises is whether reliable readouts from $p_C$ in realistic cortical microcircuit models can be obtained quickly enough to support, e.g., fast decision making in downstream areas. This critically depends on the speed of convergence of the distribution of network states (or distribution of trajectories of network states) from typical initial network states to the stationary distribution. Since the initial network state of a cortical microcircuit $\mathcal{C}$ depends on past activity, it may often be already quite “close” to the stationary distribution when a new input arrives (since past inputs are likely related to the new input). But it is also reasonable to assume that the initial state of the network is frequently unrelated to the stationary distribution $p_C$, for example after drastic input changes. In this case the time required for readouts depends on the expected convergence speed to $p_C$ from more or less arbitrary initial states. We show that one can prove exponential upper bounds for this convergence speed. But even that does not guarantee fast convergence for a concrete system, because of constant factors in the theoretical upper bound. Therefore we complement this theoretical analysis of the convergence speed by extensive computer simulations for cortical microcircuit models.

The notion of a cortical microcircuit arose from the observation that “it seems likely that there is a basically uniform microcircuit pattern throughout the neocortex upon which certain specializations unique to this or that cortical area are superimposed” [28]. This notion is not precisely defined, but rather a term of convenience: It refers to network models that are sufficiently large to contain examples of the main types of experimentally observed neurons on specific laminae, and the main types of experimentally observed synaptic connections between different types of neurons on different laminae, ideally in statistically representative numbers [29]. Computer simulations of cortical microcircuit models are practically constrained both by a lack of sufficiently many consistent data from a single preparation and a single cortical area, and by the available computer time. In the computer simulations for this article we have focused on a relatively simple standard model for a cortical microcircuit in the somatosensory cortex [30] that has already been examined in some variations in previous studies from various perspectives [31]–[34].

We show that for this standard model of a cortical microcircuit marginal probabilities for single random variables (neurons) can be estimated through sampling even for fairly large instances with 5000 neurons within a few 100 ms of simulated biological time, hence well within the range of experimentally observed computation times of biological organisms. The same holds for probabilities of network states for small sub-networks. Furthermore, we show that at least for sizes up to 5000 neurons these “computation times” are virtually independent of the size of the microcircuit model.

We also address the question to what extent our theoretical framework can be applied in the context of periodic input, for example in the presence of background theta oscillations [35]. In contrast to the stationary input case, we show that the presence of periodic input leads to the emergence of unique phase-specific stationary distributions, i.e., a separate unique stationary distribution for each phase of the periodic input. We discuss basic implications of this result and relate our findings to experimental data on theta-paced path sequences [35], [36] and bi-stable activity [37] in hippocampus.
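The idea of phase-specific stationary distributions can be illustrated with a toy example. The sketch below is not the spiking network model analyzed in this article: it replaces the network by a two-state Markov chain in discrete time whose transition matrix alternates between two hypothetical matrices `P_A` and `P_B`, standing in for two phases of a periodic input. All names and numerical values are assumptions chosen for illustration.

```python
def step(dist, P):
    """Propagate a probability row vector through one transition matrix."""
    n = len(dist)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]

# Hypothetical transition matrices for the two phases of a periodic input.
P_A = [[0.9, 0.1],
       [0.2, 0.8]]
P_B = [[0.3, 0.7],
       [0.6, 0.4]]

def phase_distributions(initial, cycles=100):
    """Return the state distributions at phase 0 and phase 1 of the last cycle."""
    dist = list(initial)
    for _ in range(cycles):
        phase0 = dist                 # distribution at the start of the cycle
        phase1 = step(phase0, P_A)    # distribution after the first half-cycle
        dist = step(phase1, P_B)      # distribution at the start of the next cycle
    return phase0, phase1

# Two different initial states lead to the same pair of phase-specific distributions.
from_state_0 = phase_distributions([1.0, 0.0])
from_state_1 = phase_distributions([0.0, 1.0])
```

In this toy chain there is no single stationary distribution that holds at all times. Instead, each phase has its own limiting distribution, which does not depend on the initial state.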

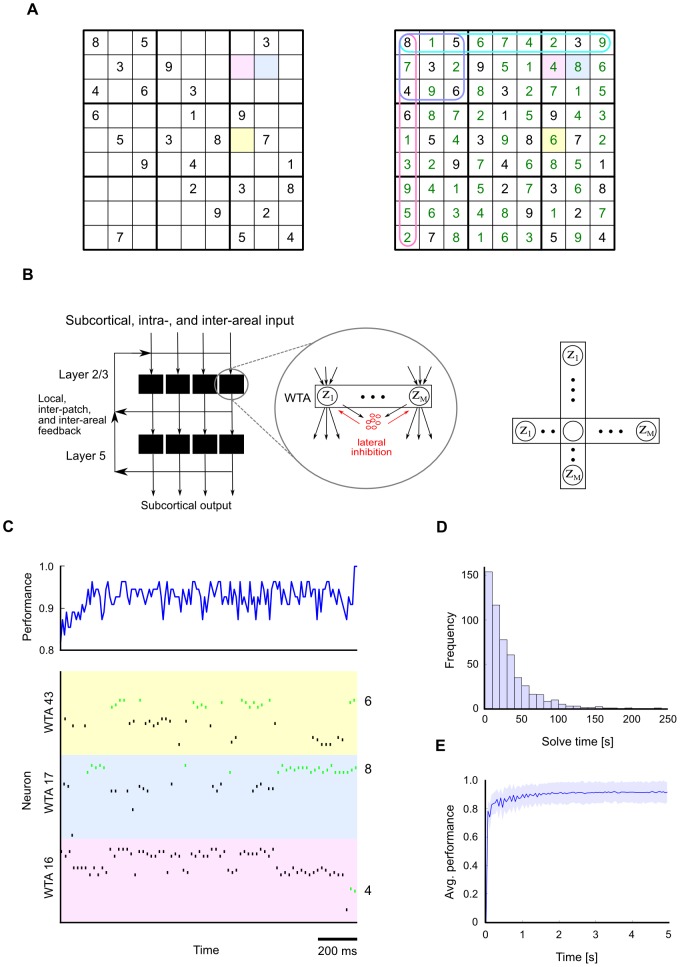

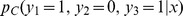

Finally, our theoretically founded framework for stochastic computations in networks of spiking neurons also sheds new light on the question of how complex constraint satisfaction problems could be solved by cortical microcircuits [38], [39]. We demonstrate this in a toy example for the popular puzzle game Sudoku. We show that the constraints of this problem can be easily encoded by synaptic connections between excitatory and inhibitory neurons in such a way that the stationary distribution $p_C$ assigns particularly high probability to those network states which encode correct (or good approximate) solutions to the problem. The resulting network dynamics can also be understood as parallel stochastic search with anytime computing properties: Early network states provide very fast heuristic solutions, while later network states are distributed according to the stationary distribution $p_C$, therefore visiting with highest probability those solutions which violate only a few or zero constraints.

In order to make the results of this article accessible to non-theoreticians we present in the subsequent Results section our main findings in a less technical formulation that emphasizes relationships to experimental data. Rigorous mathematical definitions and proofs can be found in the Methods section, which has been structured in the same way as the Results section in order to facilitate simultaneous access on different levels of detail.

Results

Network states and distributions of network states

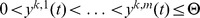

A simple notion of network state at time $t$ simply indicates which neurons in the network fired within some short time window before $t$. For example, in [20] a window size of 2 ms was selected. However, the full network state could not be analyzed there experimentally, only its projection onto 16 electrodes in area V1 from which recordings were made. An important methodological innovation of [20] was to analyze under various conditions the probability distribution of the recorded fragments of network states, i.e., of the resulting bit vectors of length 16 (with a “1” at position $i$ if a spike was recorded during the preceding 2 ms at electrode $i$). In particular, it was shown that during development the distribution over these $2^{16}$ network states during spontaneous activity in darkness approximates the distribution recorded during natural vision. Apart from its functional interpretation, this result also raises the even more fundamental question of how a network of neurons in the brain can represent and generate a complex distribution of network states. This question is addressed here in the context of data-based models $\mathcal{C}$ for cortical microcircuits. We consider notions of network states $\mathbf{y}$ similar to [20] (see the simple state $\mathbf{y}_S(t)$ in Figure 1C) and provide a rigorous proof that under some mild assumptions any such model $\mathcal{C}$ represents and generates, for different external inputs $\mathbf{x}$, associated different internal distributions $p_C(\mathbf{y} \mid \mathbf{x})$ of network states $\mathbf{y}$. More precisely, we will show that for any specific input $\mathbf{x}$ there exists a unique stationary distribution $p_C(\mathbf{y} \mid \mathbf{x})$ of network states $\mathbf{y}$ to which the network converges exponentially fast from any initial state.

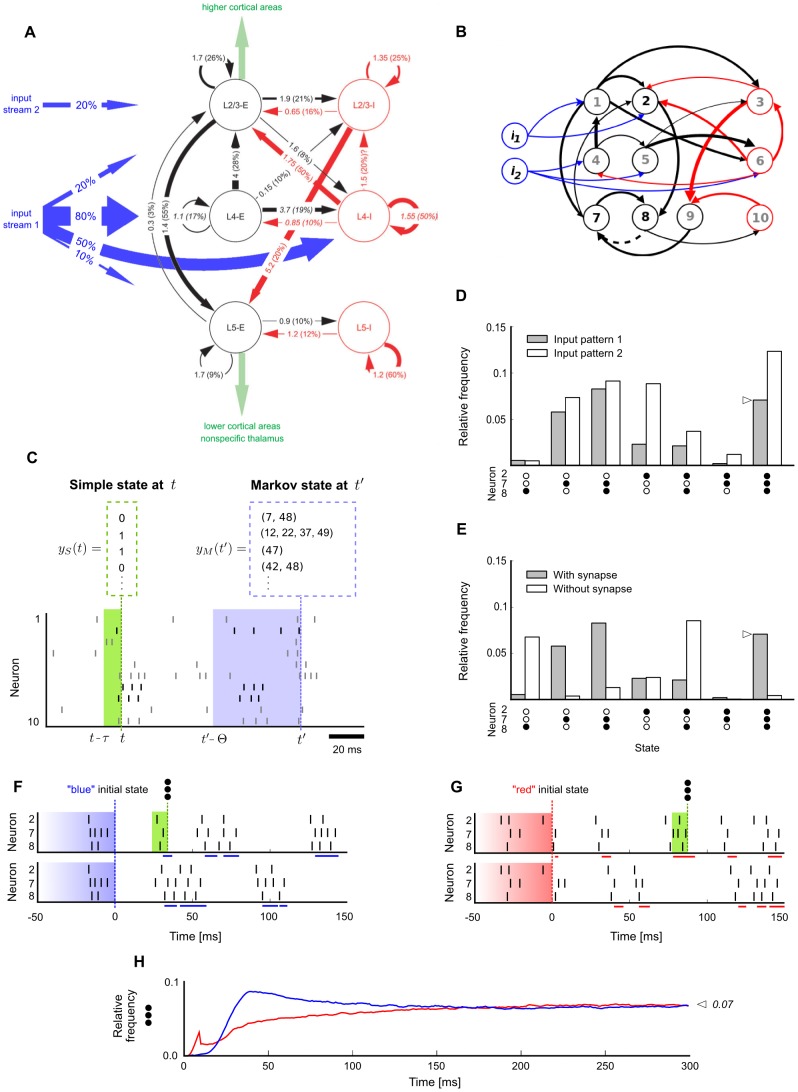

Figure 1. Network states and stationary distributions of network states in a cortical microcircuit model.

A. Data-based cortical microcircuit template from Cereb. Cortex (2007) 17: 149-162 [30];  reprinted by permission of the authors and Oxford University Press. B. A small instantiation of this model consisting of 10 network neurons

reprinted by permission of the authors and Oxford University Press. B. A small instantiation of this model consisting of 10 network neurons  and 2 additional input neurons

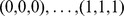

and 2 additional input neurons  . Neurons are colored by type (blue:input, black:excitatory, red:inhibitory). Line width represents synaptic efficacy. The synapse from neuron 8 to 7 is removed for the simulation described in E. C. Notions of network state considered in this article. Markov states are defined by the exact timing of all recent spikes within some time window

. Neurons are colored by type (blue:input, black:excitatory, red:inhibitory). Line width represents synaptic efficacy. The synapse from neuron 8 to 7 is removed for the simulation described in E. C. Notions of network state considered in this article. Markov states are defined by the exact timing of all recent spikes within some time window  , shown here for

, shown here for  . Simple states only record which neurons fired recently (0 = no spike, 1 = at least one spike within a short window

. Simple states only record which neurons fired recently (0 = no spike, 1 = at least one spike within a short window  , with

, with  throughout this figure). D. Empirically measured stationary distribution of simple network states. Shown is the marginal distribution

throughout this figure). D. Empirically measured stationary distribution of simple network states. Shown is the marginal distribution  for a subset of three neurons 2,7,8 (their spikes are shown in C in black), under two different input conditions (input pattern 1:

for a subset of three neurons 2,7,8 (their spikes are shown in C in black), under two different input conditions (input pattern 1:  firing at

firing at  and

and  at

at  , input pattern 2:

, input pattern 2:  at

at  and

and  at

at  ). The distribution for each input condition was obtained by measuring the relative time spent in each of the simple states (0,0,0), …, (1,1,1) in a single long trial (

). The distribution for each input condition was obtained by measuring the relative time spent in each of the simple states (0,0,0), …, (1,1,1) in a single long trial ( ). The zero state (0,0,0) is not shown. E. Effect of removing one synapse, from neuron 8 to neuron 7, on the stationary distribution of network states (input pattern 1 was presented). F. Illustration of trial-to-trial variability in the small cortical microcircuit (input pattern 1). Two trials starting from identical initial network states

). The zero state (0,0,0) is not shown. E. Effect of removing one synapse, from neuron 8 to neuron 7, on the stationary distribution of network states (input pattern 1 was presented). F. Illustration of trial-to-trial variability in the small cortical microcircuit (input pattern 1). Two trials starting from identical initial network states  are shown. Blue bars at the bottom of each trial mark periods where the subnetwork of neurons 2,7,8 was in simple state (1,1,1) at this time

are shown. Blue bars at the bottom of each trial mark periods where the subnetwork of neurons 2,7,8 was in simple state (1,1,1) at this time  . Note that the “blue” initial Markov state is shown only partially: it is actually longer and comprises all neurons in the network (as in panel C, but with

. Note that the “blue” initial Markov state is shown only partially: it is actually longer and comprises all neurons in the network (as in panel C, but with  ). G. Two trials starting from a different (“red”) initial network state. Red bars denote periods of state (1,1,1) for “red” trials. H. Convergence to the stationary distribution

). G. Two trials starting from a different (“red”) initial network state. Red bars denote periods of state (1,1,1) for “red” trials. H. Convergence to the stationary distribution  in this small cortical microcircuit is fast and independent of the initial state: This is illustrated for the relative frequency of simple state (1,1,1) within the first

in this small cortical microcircuit is fast and independent of the initial state: This is illustrated for the relative frequency of simple state (1,1,1) within the first  after input onset. The blue/red line shows the relative frequency of simple state (1,1,1) at each time

after input onset. The blue/red line shows the relative frequency of simple state (1,1,1) at each time  estimated from many (

estimated from many ( ) “blue”/“red” trials. The relative frequency of simple state (1,1,1) rapidly converges to its stationary value denoted by the symbol

) “blue”/“red” trials. The relative frequency of simple state (1,1,1) rapidly converges to its stationary value denoted by the symbol  (marked also in panels D and E). The relative frequency converges to the same value regardless of the initial state (blue/red).

(marked also in panels D and E). The relative frequency converges to the same value regardless of the initial state (blue/red).

This result can be derived within the theory of Markov processes on general state spaces, an extension of the more familiar theory of Markov chains on finite state spaces to continuous time and infinitely many network states. Another important difference to typical Markov chains (e.g. the dynamics of Gibbs sampling in Boltzmann machines) is that the Markov processes describing the stochastic dynamics of cortical microcircuit models are non-reversible. This is a well-known difference between simple neural network models and networks of spiking neurons in the brain, where a spike of a neuron causes postsynaptic potentials in other neurons - but not vice versa. In addition, experimental results show that brain networks tend to have a non-reversible dynamics also on longer time scales (e.g., stereotypical trajectories of network states [40]–[43]).
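That non-reversibility does not prevent fast convergence can already be seen in a finite toy example. The following sketch is an illustration only and far simpler than the Markov processes on general state spaces considered here: it uses a hypothetical three-state chain that cycles preferentially in one direction, so detailed balance is violated, yet the distance to the stationary distribution shrinks geometrically. The matrix `P` and all names are assumptions.

```python
def step(dist, P):
    """Propagate a probability row vector through one transition matrix."""
    n = len(dist)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]

def total_variation(p, q):
    """Total variation distance between two distributions on a finite set."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

# Hypothetical chain that prefers the cycle 0 -> 1 -> 2 -> 0.
P = [[0.1, 0.8, 0.1],
     [0.1, 0.1, 0.8],
     [0.8, 0.1, 0.1]]

# P is doubly stochastic, so the uniform distribution is stationary.
stationary = [1 / 3, 1 / 3, 1 / 3]

# Detailed balance would require pi_i * P_ij == pi_j * P_ji for all pairs i, j.
is_reversible = all(
    abs(stationary[i] * P[i][j] - stationary[j] * P[j][i]) < 1e-12
    for i in range(3) for j in range(3)
)

# Track the distance to the stationary distribution from a fixed initial state.
dist = [1.0, 0.0, 0.0]
tv_trace = []
for _ in range(50):
    tv_trace.append(total_variation(dist, stationary))
    dist = step(dist, P)
```

For this particular matrix the non-trivial eigenvalues have modulus 0.7, so `tv_trace` decays roughly like $0.7^t$ even though `is_reversible` is `False`.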

In order to prove results on the existence of stationary distributions $p_C(\mathbf{y})$ of network states $\mathbf{y}$, one first needs to consider a more complex notion of network state $\mathbf{y}_M(t)$ at time $t$, which records the history of all spikes in the network $\mathcal{C}$ since time $t - \Theta$ (see Figure 1C). The window length $\Theta$ has to be chosen sufficiently large so that the influence of spikes before time $t - \Theta$ on the dynamics of the network after time $t$ can be neglected. This more complex notion of network state then fulfills the Markov property, such that the future network evolution depends on the past only through the current Markov state. The existence of a window length $\Theta$ with the Markov property is a basic assumption of the subsequent theoretical results. For standard models of networks of spiking neurons a value of $\Theta$ around 100 ms already provides a good approximation of the Markov property, since this is a typical time during which a post-synaptic potential has a non-negligible effect at the soma of a post-synaptic neuron. For more complex models of networks of spiking neurons a larger value of $\Theta$ in the range of seconds is more adequate, in order to accommodate dendritic spikes or the activation of slow synaptic receptors that may last 100 ms or longer, and the short term dynamics of synapses with time constants of several hundred milliseconds. Fortunately, once the existence of a stationary distribution is proved for such a more complex notion of network state, it also holds for any simpler notion of network state (even if these simpler network states do not fulfill the Markov property) that results when one ignores details of the more complex network states. For example, for a short window length $\tau$ one can ignore all spikes before time $t - \tau$, the exact firing times within the window from $t - \tau$ to $t$, and whether a neuron fired one or several spikes. In this way one arrives back at the simple notion of network state from [20].
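The relation between the two notions of network state can be made concrete with a small sketch. It is a simplified illustration, not the code used for the simulations in this article: spike trains are assumed to be given as lists of spike times in milliseconds, windows are taken as half-open intervals, and the function names, window lengths and spike times are hypothetical.

```python
from collections import Counter

def markov_state(spike_times, t, theta):
    """Spike times of every neuron within (t - theta, t], measured relative to t."""
    return tuple(tuple(s - t for s in train if t - theta < s <= t)
                 for train in spike_times)

def simple_state(spike_times, t, tau):
    """Binary state: 1 if the neuron fired at least once within (t - tau, t], else 0."""
    return tuple(int(any(t - tau < s <= t for s in train))
                 for train in spike_times)

def empirical_distribution(spike_times, sample_times, tau):
    """Relative frequency of each simple state over a grid of sample times."""
    counts = Counter(simple_state(spike_times, t, tau) for t in sample_times)
    total = sum(counts.values())
    return {state: n / total for state, n in counts.items()}

# Hypothetical spike trains of three neurons (times in ms).
spike_times = [[12, 95], [40], []]

m_state = markov_state(spike_times, t=100, theta=50)
s_state = simple_state(spike_times, t=100, tau=10)
distribution = empirical_distribution(spike_times, range(0, 101, 10), tau=10)
```

The simple state discards exact spike timing and spike counts, which is why it can be computed from the Markov state but not the other way around.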

Theorem 1 (Exponentially fast convergence to a stationary distribution)

Let $\mathcal{C}$ be an arbitrary model for a network of spiking neurons with stochastic synaptic release or some other mechanism for stochastic firing. $\mathcal{C}$ may consist of complex multi-compartment neuron models with nonlinear dendritic integration (including dendritic spikes) and heterogeneous synapses with differential short term dynamics. We assume that this network $\mathcal{C}$ receives external inputs from a set of input neurons $i = 1, \dots, K$ which fire according to Poisson processes at different rates $x_i(t)$. The vector $\mathbf{x}(t)$ of input rates can be either constant over time ($\mathbf{x}(t) \equiv \mathbf{x}$), or generated by any external Markov process that converges exponentially fast to a stationary distribution.

Then there exists a stationary distribution $p_C(\mathbf{y})$ of network states $\mathbf{y}$, to which the stochastic dynamics of $\mathcal{C}$ converges from any initial state of the network exponentially fast. Accordingly, the distribution of subnetwork states $\hat{\mathbf{y}}$ of any subset of neurons converges exponentially fast to the marginal distribution $p_C(\hat{\mathbf{y}})$ of this subnetwork.

Note that Theorem 1 states that the network embodies not only the joint distribution $p_{\mathcal{C}}(\mathbf{y})$ over all neurons, but simultaneously all marginal distributions $p_{\mathcal{C}}(\hat{\mathbf{y}})$ over all possible subsets of neurons. This property follows naturally from the fact that $p_{\mathcal{C}}(\mathbf{y})$ is represented in a sample-based manner [25]. As a consequence, if one is interested in estimating the marginal distribution of some subset of neurons rather than the full joint distribution, it suffices to observe the activity of the particular subnetwork of interest (while ignoring the remaining network). This is remarkable insofar as the exact computation of marginal probabilities is in general known to be quite difficult (even NP-complete [44]).

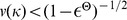

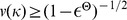

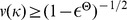

Theorem 1 requires that neurons fire stochastically. More precisely, a basic assumption required for Theorem 1 is that the network behaves sufficiently stochastically at any point in time, in the sense that the probability that a neuron fires in an interval $[t, t + \Delta t]$ must be smaller than $1$ for any $t$. This is indeed fulfilled by any stochastic neuron model as long as instantaneous firing rates remain bounded. It is also fulfilled by any deterministic neuron model if synaptic transmission is modeled via stochastic vesicle release with bounded release rates. Another assumption is that long-term plasticity and other long-term memory effects have a negligible impact on the network dynamics on the shorter timescales which are the focus of this article (milliseconds to a few seconds). Precise mathematical definitions of all assumptions and notions involved in Theorem 1, as well as proofs, can be found in Methods (see Lemmas 2 and 3).

An illustration for Theorem 1 is given in Figure 1. We use as our running example for a cortical microcircuit model $\mathcal{C}$ the model of [30] shown in Figure 1A, which consists of three populations of excitatory and three populations of inhibitory neurons on specific laminae. Average strength of synaptic connections (measured as mean amplitude of postsynaptic potentials at the soma in mV, and indicated by the numbers at the arrows in Figure 1A) as well as the connection probability (indicated in parentheses at each arrow as  in Figure 1A) are based in this model on intracellular recordings from 998 pairs of identified neurons from the Thomson Lab [45]. The thickness of arrows in Figure 1A reflects the products of those two numbers for each connection. The nonlinear short-term dynamics of each type of synaptic connection was modeled according to data from the Markram Lab [46], [47]. Neuronal integration and spike generation were modeled by a conductance-based leaky-integrate-and-fire model, with a stochastic spiking mechanism based on [48]. See Methods for details.

In a cortical microcircuit, the external input $\mathbf{x}$ consists of inputs from higher cortical areas that primarily target neurons in superficial layers, and bottom-up inputs that arrive primarily in layer 4, but also in other layers (details tend to depend on the cortical area and the species). We model two input streams in a qualitative manner as in [30]. Background synaptic input is also modeled according to [30].

Figure 1B shows a small instantiation of this microcircuit template consisting of 10 neurons (we had to manually tune a few connections in this circuit to facilitate visual clarity of subsequent panels). The impact of different external inputs $\mathbf{x}$ and of a single synaptic connection from neuron 8 to neuron 7 on the stationary distribution is shown in Figure 1D and E, respectively (shown is the marginal distribution $p_{\mathcal{C}}(y_2, y_7, y_8)$ of a subset of three neurons 2, 7 and 8). This illustrates that the structure and dynamics of a circuit $\mathcal{C}$ are intimately linked to properties of its stationary distribution $p_{\mathcal{C}}(\mathbf{y})$. In fact, we argue that the stationary distribution $p_{\mathcal{C}}(\mathbf{y})$ (more precisely: the stationary distribution $p_{\mathcal{C}}(\mathbf{y} \mid \mathbf{x})$ for all relevant external inputs $\mathbf{x}$) can be viewed as a mathematical model for the most salient aspects of stochastic computations in a circuit $\mathcal{C}$.

The influence of the initial network state on the first  ms of network response is shown in Figure 1F and G for representative trials starting from two different initial Markov states (blue/red, two trials shown for each). Variability among trials arises from the inherent stochasticity of neurons and the presence of background synaptic input. Figure 1H is a concrete illustration of Theorem 1: it shows that the relative frequency of a specific network state (1,1,1) in the subset of three neurons 2, 7 and 8 converges quickly to its stationary value. Furthermore, it converges to this (same) value regardless of the initial network state (blue/red).
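
The kind of convergence illustrated in Figure 1H can be reproduced in miniature with a toy Markov chain. The sketch below is only an illustration under stated assumptions: the 3-state transition matrix `P` is hypothetical and is not the microcircuit model. It was chosen to be non-reversible (it prefers the cyclic order 0, 1, 2, 0), and the code tracks the total variation distance between the state distribution and the stationary distribution when starting from two different initial states.

```python
# Toy illustration only: a hypothetical 3-state, non-reversible Markov chain.
# It is NOT the cortical microcircuit model; it merely mimics the statement of Theorem 1.
P = [
    [0.1, 0.8, 0.1],
    [0.1, 0.1, 0.8],
    [0.8, 0.1, 0.1],
]

# Every column of P sums to 1 (P is doubly stochastic), so the uniform distribution is stationary.
STATIONARY = [1 / 3, 1 / 3, 1 / 3]


def step(dist, transition):
    """Propagate a distribution by one step: new[j] = sum_i dist[i] * transition[i][j]."""
    n = len(transition)
    return [sum(dist[i] * transition[i][j] for i in range(n)) for j in range(n)]


def total_variation(p, q):
    """Total variation distance between two distributions on the same finite state set."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def tv_trace(initial, transition, stationary, n_steps):
    """Distance to the stationary distribution after 0, 1, ..., n_steps - 1 steps."""
    dist, trace = list(initial), []
    for _ in range(n_steps):
        trace.append(total_variation(dist, stationary))
        dist = step(dist, transition)
    return trace


# Two different initial states, analogous to the blue/red curves in Figure 1H.
trace_a = tv_trace([1.0, 0.0, 0.0], P, STATIONARY, 20)
trace_b = tv_trace([0.0, 0.0, 1.0], P, STATIONARY, 20)

for n in (0, 5, 10, 19):
    print(n, trace_a[n], trace_b[n])
```

Both traces shrink towards zero at a geometric rate, although the chain violates detailed balance (`P[0][1]` differs from `P[1][0]` under a uniform stationary distribution). This mirrors, in a much simpler setting, the claim that convergence does not require reversible dynamics.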

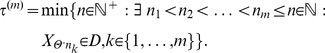

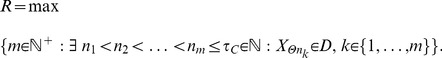

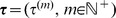

Stationary distributions of trajectories of network states

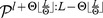

Theorem 1 also applies to networks which generate stereotypical trajectories of network activity [41]. For such networks it may be of interest to consider not only the distribution of network states in a short window (e.g. simple states with  , or  ), but also the distribution of longer trajectories produced by the network. Indeed, since Theorem 1 holds for Markov states with any fixed window length $\Theta$, it also holds for values of $\Theta$ that are in the range of experimentally observed trajectories of network states [41], [49], [50]. Hence, a generic neural circuit $\mathcal{C}$ automatically has a unique stationary distribution over trajectories of (simple) network states for any fixed trajectory length. Note that this implies that a neural circuit $\mathcal{C}$ simultaneously has stationary distributions of trajectories of (simple) network states of various lengths, up to arbitrarily large lengths, and a stationary distribution of simple network states. This is not surprising once one notes that the existence of a stationary distribution over simple network states does not imply that subsequent simple network states are independent draws from this distribution. Hence the circuit $\mathcal{C}$ may very well produce stereotypical trajectories of simple network states. This feature becomes even more prominent if the underlying dynamics (the Markov process) of the neural circuit is non-reversible on several time scales.
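
The coexistence of a stationary distribution over single states and a stationary distribution over trajectories can be made concrete with a small sketch. Everything in it is an assumption made for illustration: the 3-state chain `P` is hypothetical (not the microcircuit model), and a "trajectory" is simply a window of three consecutive states. For a Markov chain, the stationary probability of a trajectory is the stationary probability of its first state times the transition probabilities along the path, which the code compares with sliding-window counts from one long simulated run.

```python
import random
from collections import Counter

# Hypothetical toy chain with a preferred cyclic order 0 -> 1 -> 2 -> 0 (illustration only).
P = [
    [0.1, 0.8, 0.1],
    [0.1, 0.1, 0.8],
    [0.8, 0.1, 0.1],
]
PI = [1 / 3, 1 / 3, 1 / 3]  # stationary distribution over single states (P is doubly stochastic)


def trajectory_probability(traj):
    """Stationary probability of a trajectory: PI[s0] * prod_k P[s_k][s_{k+1}]."""
    prob = PI[traj[0]]
    for a, b in zip(traj, traj[1:]):
        prob *= P[a][b]
    return prob


def empirical_trajectory_frequencies(n_steps, length, seed=0):
    """Simulate one long run and count all sliding windows of the given length."""
    rng = random.Random(seed)
    state, states = 0, []
    for _ in range(n_steps):
        states.append(state)
        state = rng.choices(range(3), weights=P[state])[0]
    windows = Counter(tuple(states[i:i + length]) for i in range(n_steps - length + 1))
    total = sum(windows.values())
    return {traj: count / total for traj, count in windows.items()}


freq = empirical_trajectory_frequencies(200_000, length=3)
exact = trajectory_probability((0, 1, 2))   # 1/3 * 0.8 * 0.8
independent = PI[0] * PI[1] * PI[2]         # value expected for independent draws
print(freq[(0, 1, 2)], exact, independent)
```

In this toy chain the stereotypical trajectory (0, 1, 2) is several times more frequent than independent draws from the single-state distribution would suggest, although the single-state distribution itself is uniform.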

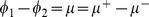

Extracting knowledge from internally stored distributions of network states

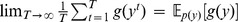

We address two basic types of knowledge extraction from a stationary distribution $p_{\mathcal{C}}(\mathbf{y})$ of a network $\mathcal{C}$: the computation of marginal probabilities and maximum a posteriori (MAP) assignments. Both computations constitute basic inference problems commonly appearing in real-world applications [51], which are in general difficult to solve as they involve large sums, integrals, or maximization steps over a state space which grows exponentially in the number of random variables. However, [21], [25] already noted that the estimation of marginal probabilities would become straightforward if distributions were represented in the brain in a sample-based manner (such that each network state at time $t$ represents one sample from the distribution). Theorem 1 provides a theoretical foundation for how such a representation could emerge in realistic data-based microcircuit models on the implementation level: once the network $\mathcal{C}$ has converged to its stationary distribution, the network state at any time $t$ represents a sample from $p_{\mathcal{C}}(\mathbf{y})$ (although subsequent samples are generally not independent). Simultaneously, the subnetwork state $\hat{\mathbf{y}}(t)$ of any subset of neurons represents a sample from the marginal distribution $p_{\mathcal{C}}(\hat{\mathbf{y}})$. This is particularly relevant if one interprets $p_{\mathcal{C}}(\mathbf{y})$ in a given cortical microcircuit $\mathcal{C}$ as the posterior distribution of an implicit generative model, as suggested for example by [20] or [21], [22].

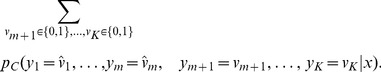

In order to place the estimation of marginals into a biologically relevant context, assume that a particular component $y_1$ of the network state $\mathbf{y} = (y_1, \dots, y_N)$ has a behavioral relevance. This variable $y_1$, represented by some neuron $\nu_1$, could represent for example the perception of a particular visual object (if neuron $\nu_1$ is located in inferior temporal cortex [52]), or the intention to make a saccade into a specific part of the visual field (if neuron $\nu_1$ is located in area LIP [53]). Then the computation of the marginal

$$p_{\mathcal{C}}(y_1 \mid \mathbf{x}) = \sum_{y_2, \dots, y_N} p_{\mathcal{C}}(y_1, y_2, \dots, y_N \mid \mathbf{x}) \tag{1}$$

would be of behavioral significance. Note that this computation integrates information from the internally stored knowledge $p_{\mathcal{C}}$ with evidence about a current situation $\mathbf{x}$. In general this computation is demanding as it involves a sum with exponentially many terms in the network size $N$.

But according to Theorem 1, the correct marginal distribution $p_{\mathcal{C}}(y_1 \mid \mathbf{x})$ is automatically embodied by the activity of neuron $\nu_1$. Hence the marginal probability $p_{\mathcal{C}}(y_1 = 1 \mid \mathbf{x})$ can be estimated by simply observing what fraction of time the neuron spends in the state $y_1 = 1$, while ignoring the activity of the remaining network [21]. In principle, a downstream neuron could gather this information by integrating the spike output of $\nu_1$ over time.

Marginal probabilities of subpopulations, for example $p_{\mathcal{C}}(y_1 = 1, y_2 = 0, y_3 = 1 \mid \mathbf{x})$, can be estimated in a similar manner by keeping track of how much time the subnetwork spends in the state (1,0,1), while ignoring the activity of the remaining neurons. A downstream network could gather this information, for example, by integrating over the output of a readout neuron which is tuned to detect the desired target pattern (1,0,1).
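
The readout by "fraction of time" can be sketched in a few lines. The following is a minimal illustration under explicit assumptions: a tiny hypothetical binary network with made-up biases `b` and couplings `W`, and a random-scan Gibbs sampler standing in for the stochastic network dynamics. It is not the spiking microcircuit model. The sample-based estimate is compared with the exact marginal obtained by brute-force summation over all states, which is only feasible here because the network has just 5 units.

```python
import itertools
import math
import random

N = 5
b = [-0.5, 0.2, 0.0, -0.3, 0.4]                               # hypothetical biases
W = {(0, 1): 0.8, (1, 2): -0.6, (2, 3): 0.5, (0, 4): -0.7}    # hypothetical couplings


def log_weight(y):
    """Unnormalized log-probability of a binary state y."""
    total = sum(b[i] * y[i] for i in range(N))
    total += sum(w * y[i] * y[j] for (i, j), w in W.items())
    return total


def exact_marginal(pattern):
    """Brute force over all 2^N states: probability that y[i] == v for every (i, v) in pattern."""
    z = hit = 0.0
    for y in itertools.product((0, 1), repeat=N):
        weight = math.exp(log_weight(y))
        z += weight
        if all(y[i] == v for i, v in pattern.items()):
            hit += weight
    return hit / z


def gibbs_samples(n_steps, rng):
    """Random-scan Gibbs sampler: a simple stand-in for the stochastic network dynamics."""
    y = [0] * N
    for _ in range(n_steps):
        i = rng.randrange(N)
        y[i] = 1
        log_on = log_weight(y)
        y[i] = 0
        log_off = log_weight(y)
        p_on = 1.0 / (1.0 + math.exp(log_off - log_on))
        y[i] = 1 if rng.random() < p_on else 0
        yield tuple(y)


def fraction_of_time(samples, pattern):
    """Fraction of observed states that match the pattern, ignoring all other units."""
    hits = sum(all(y[i] == v for i, v in pattern.items()) for y in samples)
    return hits / len(samples)


samples = list(gibbs_samples(200_000, random.Random(1)))
single = {0: 1}                  # first unit is "on"
triple = {0: 1, 1: 0, 2: 1}      # pattern (1,0,1) on the first three units
est_single, exact_single = fraction_of_time(samples, single), exact_marginal(single)
est_triple, exact_triple = fraction_of_time(samples, triple), exact_marginal(triple)
print(est_single, exact_single)
print(est_triple, exact_triple)
```

The estimator never touches the units outside the pattern, whereas the brute-force reference has to enumerate all joint states. Because successive samples are correlated, the accuracy for a given number of samples is lower than it would be for independent draws.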

Notably, the estimation of marginals sketched above is guaranteed by ergodic theory to converge to the correct probability as observation time increases (Theorem 1 ensures that the network is an ergodic Markov process, see Methods). In particular, this holds true even for networks with prominent sequential dynamics featuring, for example, stereotypical trajectories. Note, however, that the observation time required to obtain an accurate estimate may be longer when trajectories are present, since subsequent samples gathered from such a network will likely exhibit stronger dependencies than in networks lacking sequential activity patterns. In a practical readout implementation where recent events might be weighted preferentially, this could result in noisier estimates.

Approximate maximum a posteriori (MAP) assignments to small subsets of variables $y_1, \dots, y_m$ can also be obtained in a quite straightforward manner. For given external inputs $\mathbf{x}$, the marginal MAP assignment to the subset of variables $y_1, \dots, y_m$ (with some $m$ smaller than the network size $N$) is defined as the set of values $y_1^*, \dots, y_m^*$ that maximize

$$p_{\mathcal{C}}(y_1, \dots, y_m \mid \mathbf{x}) = \sum_{y_{m+1}, \dots, y_N} p_{\mathcal{C}}(y_1, \dots, y_N \mid \mathbf{x}) \tag{2}$$

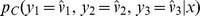

A sample-based approximation of this operation can be implemented by keeping track of which network states in the subnetwork $y_1, \dots, y_m$ occur most often. This could, for example, be realized by a readout network in a two stage process: first the marginal probabilities $p_{\mathcal{C}}(y_1, y_2, y_3 \mid \mathbf{x})$ of all $2^3 = 8$ subnetwork states $(y_1, y_2, y_3)$ of a three-neuron subnetwork are estimated (by 8 readout neurons dedicated to that purpose), followed by the selection of the neuron with maximal probability. The selection of the maximum could be achieved in a neural network, for example, through competitive inhibition. Such competitive inhibition would ideally lead to a winner-take-all function such that the neuron with the strongest stimulation (representing the variable assignment with the largest probability) dominates and suppresses all other readout neurons.
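
The two-stage readout described above (estimate the frequency of every subnetwork state, then select the most frequent one) can be sketched abstractly as counting followed by an argmax. This is an illustrative sketch only: the function name `marginal_map` and the tiny hand-written sample list are hypothetical, and the argmax merely stands in for the winner-take-all circuit.

```python
from collections import Counter


def marginal_map(samples, indices):
    """Sample-based marginal MAP estimate for the units listed in `indices`.

    Stage 1: count how often each subnetwork state occurs (one counter per state,
             playing the role of the dedicated readout neurons).
    Stage 2: pick the most frequent state (playing the role of winner-take-all).
    """
    if not samples:
        raise ValueError("need at least one sample")
    counts = Counter(tuple(y[i] for i in indices) for y in samples)
    best_state, best_count = counts.most_common(1)[0]
    return best_state, best_count / len(samples), counts


# Hand-written network states of a hypothetical 4-unit network (illustration only).
samples = [
    (1, 0, 1, 0),
    (1, 0, 1, 1),
    (0, 0, 1, 0),
    (1, 0, 1, 0),
    (1, 1, 0, 1),
]
state, frequency, counts = marginal_map(samples, indices=(0, 1, 2))
print(state, frequency)  # (1, 0, 1) 0.6
```

Only subnetwork states that actually occur receive a counter here, so the memory cost grows with the number of observed states rather than with all $2^m$ possible ones. This is a convenience of the software sketch, not a claim about the neural implementation.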

Estimates of the required computation time

Whereas many types of computations (for example probabilistic inference via the junction tree algorithm [51]) require a certain computation time, probabilistic inference via sampling from an embodied distribution $p_{\mathcal{C}}(\mathbf{y})$ belongs to the class of anytime computing methods, where rough estimates of the result of a computation become almost immediately available, and are automatically improved when there is more time for a decision. A main component of the convergence time to a reliable result arises from the time which the distribution of network states needs to become independent of its initial state $\mathbf{y}_0$. It is well known that both network states of neurons in the cortex [54] and quick decisions of an organism are influenced for a short time by this initial state $\mathbf{y}_0$ (and this temporary dependence on the initial state $\mathbf{y}_0$ may in fact have some behavioral advantage, since $\mathbf{y}_0$ may contain information about preceding network inputs, expectations, etc.). But it has remained unknown what range of convergence speeds for inference from $p_{\mathcal{C}}(\mathbf{y})$ is produced by common models for cortical microcircuits $\mathcal{C}$.

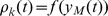

We address this question by analyzing the convergence speed of stochastic computations in the cortical microcircuit model of [30]. A typical network response of an instance of the cortical microcircuit model comprising 560 neurons as in [30] is shown in Figure 2A. We first checked how fast marginal probabilities for single neurons converge to stationary values from different initial network Markov states. We applied the same analysis as in Figure 1H to the simple state ( ) of a single representative neuron from layer 5. Figure 2B shows quite fast convergence of the “on”-state probability of the neuron to its stationary value from two different initial states. Note that this straightforward method of checking convergence is rather inefficient, as it requires the repetition of a large number of trials for each initial state. In addition it is not suitable for analyzing convergence to marginals for subpopulations of neurons (see Figure 2G).

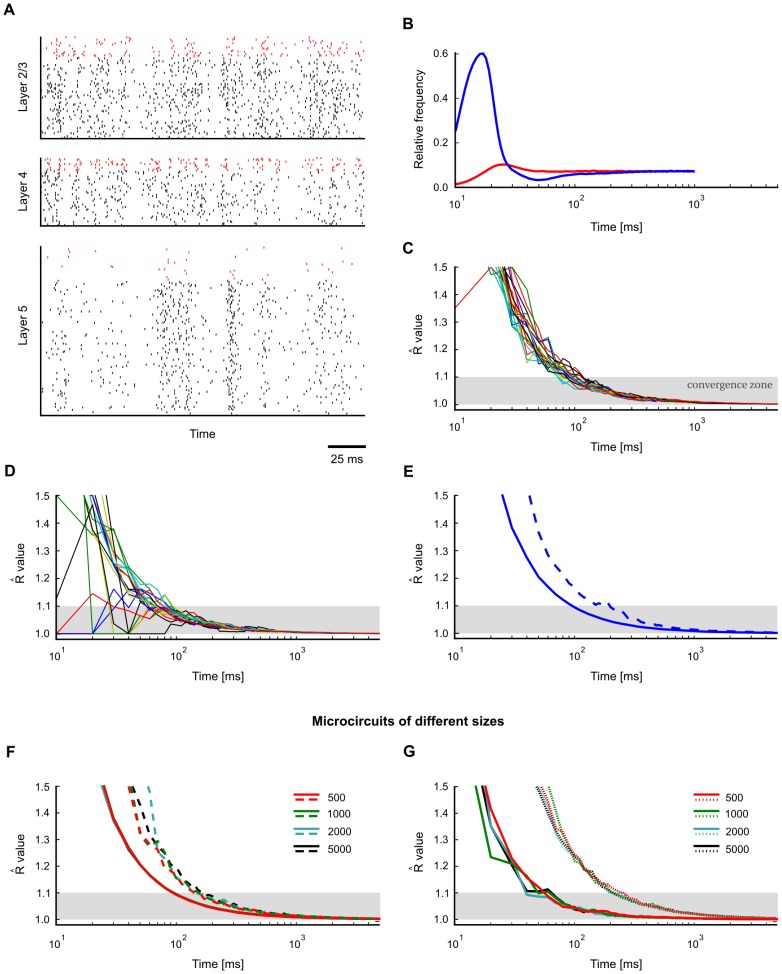

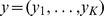

Figure 2. Fast convergence of marginals of single neurons and more complex quantities in a cortical microcircuit model.

A. Typical spike response of the microcircuit model based on [30] comprising 560 stochastic point neurons. Spikes of inhibitory neurons are indicated in red. B. Fast convergence of a marginal for a representative layer 5 neuron (frequency of “on”-state, with  ) to its stationary value, shown for two different initial Markov states (blue/red). Statistics were obtained for each initial state from  trials. C. Gelman-Rubin convergence diagnostic was applied to the marginals of all single neurons (simple states,  ). In all neurons the Gelman-Rubin value $\hat{R}$ drops to a value close to 1 within a few  , suggesting generally fast convergence of single neuron marginals (shown are 20 randomly chosen neurons; see panel E for a summary of all neurons). The shaded area below 1.1 indicates a range where one commonly assumes that convergence has taken place. D. Convergence speed of pairwise spike coincidences (simple states (1,1) of two neurons, 20 randomly chosen pairs of neurons) is comparable to marginal convergence. E. Summary of marginal convergence analysis for single neurons in C: Mean (solid) and worst (dashed line) marginal convergence of all 560 neurons. Mean/worst convergence is reached after a few  . F. Convergence analysis was applied to networks of different sizes (500–5000 neurons). Mean and worst marginal convergence of single neurons are hardly affected by network size. G. Convergence properties of populations of neurons. Dotted: multivariate Gelman-Rubin analysis was applied to a subpopulation of 30 neurons (5 neurons were chosen randomly from each pool). Solid: convergence of a “random readout” neuron which receives spike inputs from 500 randomly chosen neurons in the microcircuit. It turns out that the convergence speed of such a generic readout neuron is even slightly faster than for neurons within the microcircuit (compare with panel E). A remarkable finding is that in all these cases the network size does not affect convergence speed.

Various more efficient convergence diagnostics have been proposed in the context of discrete-time Markov Chain Monte Carlo theory [55]–[58]. In the following, we have adopted the Gelman and Rubin diagnostic, one of the standard methods in applications of MCMC sampling [55]. The Gelman-Rubin convergence diagnostic is based on the comparison of many runs of a Markov chain when started from different randomly drawn initial states. In particular, one compares the typical variance of state distributions during the time interval  within a single run (within-variance) to the variance during the interval  between different runs (between-variance). When the ratio $\hat{R}$ of between- and within-variance approaches 1 this is indicative of convergence. A comparison of panels B and C of Figure 2 shows that in the case of marginals for single neurons this interpretation fits very well to the empirically observed convergence speed for two different initial conditions. Various values between 1.02 [58] and 1.2 [57], [59], [60] have been proposed in the literature as thresholds below which the ratio $\hat{R}$ signals that convergence has taken place. The shaded region in Figure 2C–G corresponds to $\hat{R}$ values below a threshold of 1.1. An obvious advantage of the Gelman-Rubin diagnostic, compared with a straightforward empirical evaluation of convergence properties as in Figure 2B, is its substantially greater computational efficiency and the larger number of initial states that it takes into account. For the case of multivariate marginals (see Figure 2G), a straightforward empirical evaluation of convergence is not even feasible, since relative frequencies of $2^{30}$ states would have to be analyzed.
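
For readers unfamiliar with the diagnostic, the following sketch computes the Gelman-Rubin value for scalar chains in its common textbook form (potential scale reduction factor). It is an assumption that this form matches the variant used for Figure 2 in every detail; the function name `gelman_rubin` and the toy chains are hypothetical and serve only to show how within-run and between-run variance enter the statistic.

```python
import math
import statistics


def gelman_rubin(chains):
    """Textbook potential scale reduction factor R-hat for scalar chains.

    `chains` is a list of m >= 2 runs, each a sequence of n >= 2 scalar observations
    (for example the 0/1 simple state of one neuron in each of m independent runs).
    """
    chains = [[float(x) for x in chain] for chain in chains]
    m, n = len(chains), len(chains[0])
    if m < 2 or n < 2 or any(len(chain) != n for chain in chains):
        raise ValueError("need at least 2 chains of equal length >= 2")

    chain_means = [statistics.mean(chain) for chain in chains]
    within = statistics.mean([statistics.variance(chain) for chain in chains])  # W
    between = n * statistics.variance(chain_means)                              # B
    if within == 0.0:
        raise ValueError("within-chain variance is zero; R-hat is undefined")

    pooled = (n - 1) / n * within + between / n  # pooled estimate of the variance
    return math.sqrt(pooled / within)


# Two runs that visit both states equally often: no disagreement between runs.
mixed = gelman_rubin([[0, 1, 0, 1], [1, 0, 1, 0]])
# Two runs that still reflect their different initial states: runs disagree.
stuck = gelman_rubin([[0, 0, 0, 1], [1, 1, 1, 0]])
print(mixed, stuck)
```

With such very short toy chains the value can fall slightly below 1. In practice one applies the statistic to long runs and checks whether it has dropped below a chosen threshold such as 1.1.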

Using the Gelman-Rubin diagnostic, we estimated convergence speed for marginals of single neurons (see Figure 2C, mean/worst in Figure 2E), and for the product of the simple states of two neurons (i.e., pairwise spike coincidences) in Figure 2D. We found that in all cases the Gelman-Rubin value drops close to 1 within just a few  . More precisely, for a typical threshold of $\hat{R} = 1.1$ convergence times are slightly below  in Figure 2C–E. A very conservative threshold of $\hat{R} = 1.02$ yields convergence times close to  .

The above simulations were performed in a circuit of 560 neurons, but eventually one is interested in the properties of much larger circuits. Hence, a crucial question is how the convergence properties scale with the network size. To this end, we compared convergence in the cortical microcircuit model of [30] for four different sizes (500, 1000, 2000 and 5000 neurons). To ensure that overall activity characteristics are maintained across different sizes, we adopted the approach of [30] and scaled recurrent postsynaptic potential (PSP) amplitudes in inverse proportion to network size. A comparison of mean (solid line) and worst (dashed line) marginal convergence for networks of different sizes is shown in Figure 2F. Notably, we find that the network size has virtually no effect on convergence speed. This suggests that, at least within the scope of the laminar microcircuit model of [30], even very large cortical networks may support fast extraction of knowledge (in particular marginals) from their stationary distributions.
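Scaling recurrent PSP amplitudes in inverse proportion to network size can be sketched as follows. The reference amplitude and reference size are hypothetical values chosen only for illustration, and the sketch assumes that the number of recurrent inputs per neuron grows linearly with network size (fixed connection probability), which is what keeps the summed recurrent drive constant.

```python
def scaled_psp_amplitude(reference_amplitude, reference_size, network_size):
    """Scale a recurrent PSP amplitude in inverse proportion to network size."""
    if network_size <= 0 or reference_size <= 0:
        raise ValueError("network sizes must be positive")
    return reference_amplitude * reference_size / network_size


# Hypothetical reference values, not taken from the model of [30].
reference_amplitude_mv = 1.0
reference_size = 500
for size in (500, 1000, 2000, 5000):
    amplitude = scaled_psp_amplitude(reference_amplitude_mv, reference_size, size)
    # size * amplitude is the same for every size under the stated assumption.
    print(size, amplitude, size * amplitude)
```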

In order to estimate the required computation time associated with the estimation of marginal probabilities and MAP solutions on small subpopulations, one needs to know how fast the marginal probabilities of vector-valued states of subnetworks become independent from the initial state of the network. To estimate convergence speed in small subnetworks, we applied a multivariate version of the Gelman-Rubin method to vector-valued simple states of subnetworks (Figure 2G, dotted lines, evaluated for varying circuit sizes from 500 to 5000 neurons). We find that multivariate convergence of state frequencies for a population of  neurons is only slightly slower than for univariate marginals. To complement this analysis, we also investigated convergence properties of a “random readout” neuron which integrates inputs from many neurons in a subnetwork. It is interesting to note that the convergence speed of such a readout neuron, which receives randomized connections from a randomly chosen subset of 500 neurons, is comparable to that of single marginals (Figure 2F, solid lines), and in fact slightly faster.
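One common multivariate generalization of the Gelman-Rubin statistic replaces the ratio of between-run to within-run variance by the largest eigenvalue of the corresponding covariance ratio. The sketch below is a minimal illustration under stated assumptions: the binary state vector of the subnetwork is used directly as the feature vector, a pseudo-inverse is used so that silent neurons do not break the computation, and the value is reported on the variance scale. The function name and toy data are hypothetical, and the exact variant used for Figure 2G may differ.

```python
import numpy as np


def multivariate_gelman_rubin(chains):
    """Multivariate potential scale reduction factor (variance scale).

    chains has shape (m, n, d): m independent runs, n samples per run,
    and a d-dimensional feature vector per sample.
    """
    chains = np.asarray(chains, dtype=float)
    m, n, d = chains.shape
    run_means = chains.mean(axis=1)                      # shape (m, d)
    centered = chains - run_means[:, None, :]
    # W: within-run covariance, pooled over all runs.
    within = np.einsum("mni,mnj->ij", centered, centered) / (m * (n - 1))
    # B/n: covariance of the run means across runs.
    between = np.atleast_2d(np.cov(run_means, rowvar=False, ddof=1))
    # Largest eigenvalue of W^+ (B/n); the pseudo-inverse tolerates silent neurons.
    largest = np.linalg.eigvals(np.linalg.pinv(within) @ between).real.max()
    return float((n - 1) / n + (m + 1) / m * largest)


rng = np.random.default_rng(0)
# Toy data (hypothetical): 10 runs, 1000 samples, subnetwork of 3 neurons.
mixed_3 = rng.random((10, 1000, 3)) < 0.2
rates_3 = np.repeat([0.1, 0.6], 5)[:, None, None]
unmixed_3 = rng.random((10, 1000, 3)) < rates_3

print(multivariate_gelman_rubin(mixed_3), multivariate_gelman_rubin(unmixed_3))
```

Because the largest eigenvalue bounds the variance ratio of every linear combination of the features, this statistic is never smaller than the corresponding univariate value for any single neuron in the subnetwork, which is consistent with multivariate convergence being somewhat slower.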

Impact of different dynamic regimes on the convergence time

An interesting research question is which dynamic or structural properties of a cortical microcircuit model have a strong impact on its convergence speed to the stationary distribution. Unfortunately, a comprehensive treatment of this question is beyond the scope of this paper, since virtually any aspect of circuit dynamics could be investigated in this context. Even if one focuses on a single aspect, the impact of one circuit feature is likely to depend on the presence of other features (and probably also on the properties of the input). Nonetheless, to lay a foundation for further investigation, first empirical results are given in Figure 3.

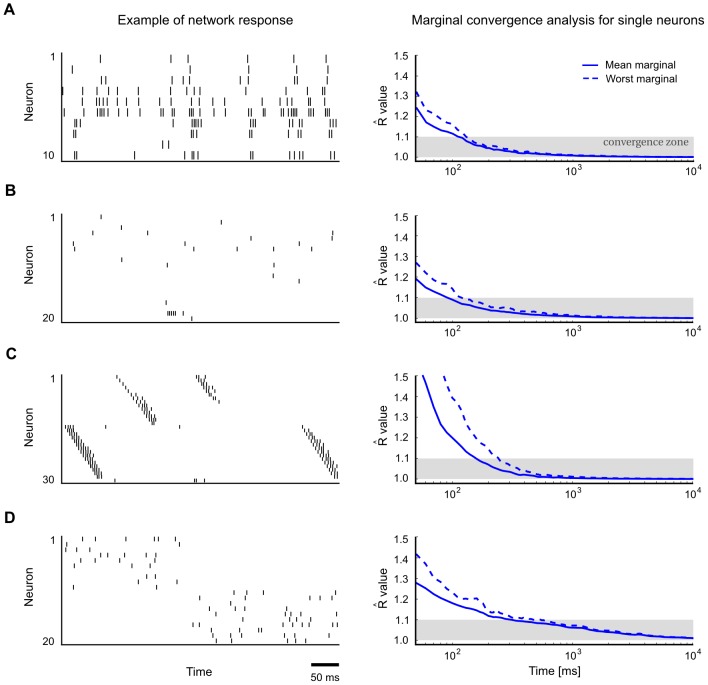

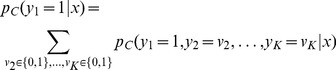

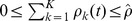

Figure 3. Impact of network architecture and network dynamics on convergence speed.

Convergence properties for single neurons (as in Figure 2C) in different network architectures were assessed using univariate Gelman-Rubin analysis. Typical network activity is shown on the left, convergence speed on the right (solid: mean marginal, dashed: worst marginal). A. Small cortical column model from Figure 1 (input neurons not shown). B. Network with sparse activity (20 neurons). C. Network with stereotypical trajectories (50 neurons, inhibitory neurons not shown). Despite strongly irreversible dynamics, convergence is only slightly slower. D. Network with bistable dynamics (two competing populations, each comprising 10 neurons). Convergence is slower in this circuit due to low-frequency switching dynamics between two attractors.

As a reference point, Figure 3A shows a typical activity pattern and convergence speed of single marginals in the small cortical microcircuit model from Figure 1. To test whether the overall activity of a network has an obvious impact on convergence speed, we constructed a small network of 20 neurons (10 excitatory, 10 inhibitory) and tuned connection weights to achieve sparse overall activity (Figure 3B). A comparison of panels A and B suggests that overall network activity has no significant impact on convergence speed. To test whether the presence of stereotypical trajectories of network states (similar to [41]) has a noticeable influence on convergence, we constructed a small network exhibiting strong sequential activity patterns (see Figure 3C). We find that convergence speed is hardly affected, except for the first  (see Figure 3C). Within the scope of this first empirical investigation, we were only able to produce a significant slow-down of the convergence speed by building a network that alternated between two attractors (Figure 3D).

Distributions of network states in the presence of periodic network input

In Theorem 1 we already addressed one important case where the network receives dynamic external inputs: the case when external input is generated by some Markov process. But many networks of neurons in the brain are also subject to more or less pronounced periodic inputs (“brain rhythms” [61]–[63]), and it is known that these interact with knowledge represented in distributions of network states in specific ways. For instance, it was shown in [35] that the phase of the firing of place cells in the hippocampus of rats relative to an underlying theta-rhythm is related to the expected time when the corresponding location will be reached. Inhibitory neurons in hippocampus have also been reported to fire preferentially at specific phases of the theta cycle (see e.g. Figure S5 in [12]). Moreover, it was shown that different items that are held in working memory are preferentially encoded by neurons that fire at different phases of an underlying gamma-oscillation in the monkey prefrontal cortex [64] (see [65] for further evidence that such oscillations are behaviorally relevant). Phase coding was also reported in superior temporal sulcus during category representation [66]. The following result provides a theoretical foundation for such phase-specific encoding of knowledge within a framework of stochastic computation in networks of spiking neurons.

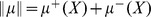

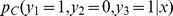

Theorem 2 (Phase-specific distributions of network states)

Consider an arbitrary model for a network of stochastic spiking neurons as in Theorem 1. Assume now that the vector of input rates has, in addition to fixed components, also some components that are periodic with a common period (such that each input neuron emits a Poisson spike train with a periodically varying firing rate). Then the distribution of network states converges, for every phase within the period, exponentially fast to a unique stationary distribution of network states at this phase of the periodic network input.

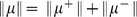

Hence, a circuit can potentially store in each clearly separable phase of an (externally) imposed oscillation a different, phase-specific stationary distribution. Below we will address basic implications of this result in the context of two experimentally observed phenomena: stereotypical trajectories of network states and bi-stable (or multi-stable) network activity.

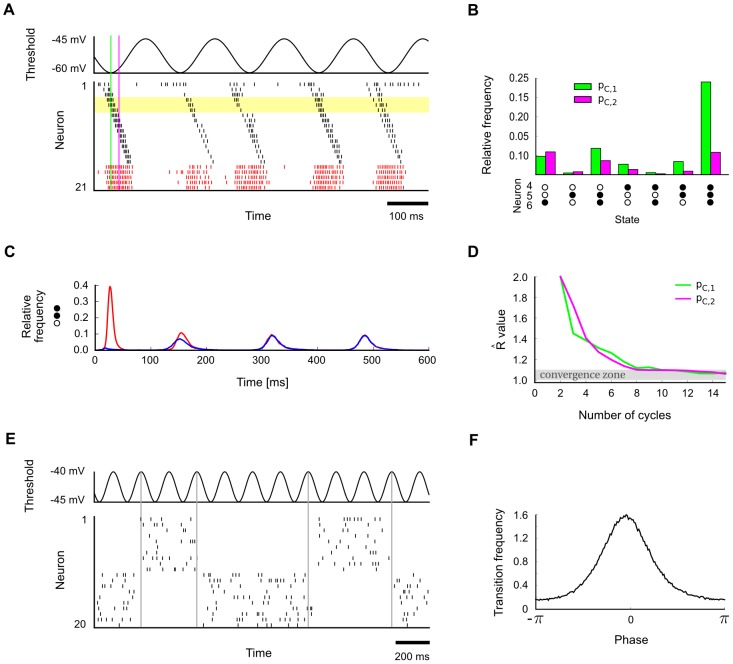

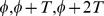

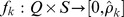

Figure 4A–D demonstrates the emergence of phase-specific distributions in a small circuit (the same as in Figure 3C but with only one chain) with a built-in stereotypical trajectory similar to a spatial path sequence generated by hippocampal place cell assemblies [35], [36]. Figure 4A shows a typical spike pattern in response to rhythmic background stimulation (spikes from inhibitory neurons in red). The background oscillation was implemented here for simplicity via direct rhythmic modulation of the spiking threshold of all neurons. Note that the trajectory is initiated particularly often at a specific phase of the rhythm (when neuronal thresholds are lowest), as in experimental data [35], [36]. As a result, different phases within a cycle of the rhythm become automatically associated with distinct segments of the trajectory. One can measure and visualize this effect by comparing the frequency of network states which occur at two different phases, i.e., by comparing the stationary distributions for these two phases. Figure 4B shows a comparison of phase-specific marginal distributions on a small subnetwork of 3 neurons, demonstrating that phase-specific stationary distributions may indeed vary considerably across different phases. Convergence to the phase-specific stationary distributions can be understood as the convergence of the probability of any given state to a periodic limit cycle as a function of the phase (illustrated in Figure 4C). An application of the multivariate Gelman-Rubin diagnostic suggests that this convergence takes place within a few cycles of the theta oscillation (Figure 4D).
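Comparing the frequency of network states at two phases amounts to sampling the state once per cycle at a fixed offset and counting how often each state occurs. The Python sketch below illustrates this procedure on toy data. The function names, the discretization of time into grid steps, the cycle length of 30 steps, and the firing probabilities are all assumptions introduced for illustration and do not correspond to the circuit of Figure 4.

```python
from collections import Counter

import numpy as np


def phase_specific_distribution(states, phase, period):
    """Relative frequencies of network states at one phase of a periodic input.

    states: (T, k) binary array of simple states on a regular time grid.
    phase, period: measured in grid steps, with 0 <= phase < period.
    """
    states = np.asarray(states, dtype=int)
    # Keep only the states at times phase, phase + period, phase + 2 * period, ...
    samples = states[phase::period]
    counts = Counter(map(tuple, samples.tolist()))
    return {state: count / len(samples) for state, count in counts.items()}


def total_variation(p, q):
    """Total variation distance between two distributions stored as dicts."""
    return 0.5 * sum(abs(p.get(s, 0.0) - q.get(s, 0.0)) for s in set(p) | set(q))


# Toy data (hypothetical): 3 neurons, each active during its own third of a
# 30-step cycle with probability 0.8, silent otherwise.
rng = np.random.default_rng(1)
period, cycles = 30, 400
t = np.arange(period * cycles) % period
preferred = (t[:, None] // 10) == np.arange(3)[None, :]
states = (preferred & (rng.random(preferred.shape) < 0.8)).astype(int)

early = phase_specific_distribution(states, phase=5, period=period)
late = phase_specific_distribution(states, phase=25, period=period)
print(total_variation(early, late))
```

A large total variation distance between the two empirical distributions indicates that the two phases are associated with clearly different sets of network states.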

Figure 4. Emergence of phase-specific stationary distributions of network states in the presence of periodic network input.

A. A network with a built-in stereotypical trajectory is stimulated with a  background oscillation. The oscillation (top) is imposed on the neuronal thresholds of all neurons. The trajectories produced by the network (bottom) become automatically synchronized to the background rhythm. The yellow shading marks the three neurons for which the analysis in panels B and C was carried out. The two indicated time points (green and purple lines) mark the two phases for which the phase-specific stationary distributions are considered in panels B and D (  and  into the cycle, with phase-specific distributions  and  , respectively). B. The empirically measured distributions of network states are observed to differ significantly at two different phases of the oscillation (phases marked in panel A). Shown is for each phase the phase-specific marginal distribution over 3 neurons (4, 5 and 6), using simple states with  . The zero state (0,0,0) is not shown. The empirical distribution for each phase was obtained from a single long run, by taking into account the network states at times  , etc., with cycle length