Abstract

Objectives. We assessed the timeliness, accuracy, and cost of a new electronic disease surveillance system at the local health department level. We describe practices associated with lower cost and better surveillance timeliness and accuracy.

Methods. Interviews conducted May through August 2010 with local health department (LHD) staff at a simple random sample of 30 of 100 North Carolina counties provided information on surveillance practices and costs; we used surveillance system data to calculate timeliness and accuracy. We identified LHDs with best timeliness and accuracy and used these categories to compare surveillance practices and costs.

Results. Local health departments in the top tertiles for surveillance timeliness and accuracy had a lower cost per case reported than LHDs with lower timeliness and accuracy ($71 and $124 per case reported, respectively; P = .03). Best surveillance practices fell into 2 domains: efficient use of the electronic surveillance system and use of surveillance data for local evaluation and program management.

Conclusions. Timely and accurate surveillance can be achieved in the setting of restricted funding experienced by many LHDs. Adopting best surveillance practices may improve both efficiency and public health outcomes.

Communicable disease reporting is central to public health surveillance, providing data to detect outbreaks and to describe disease trends.1 Over the past 10 years, communicable disease surveillance has transitioned from traditional paper-based disease reports to electronic reporting.2 All states have converted parts or all of their disease reporting to electronic systems, and most states now use an electronic system to enter and transmit case information at local and state public health agencies.3

The transition to electronic reporting has resulted in corresponding modifications to surveillance practice, including changes in who enters and accesses communicable disease case data and how these data are entered at local and state health department levels.4–6 These and other changes have been described at the state level,3,7,8 but less information is available describing changes at the local level. Furthermore, there is little documented information on the cost or cost-effectiveness of electronic communicable disease surveillance systems at any level. Because funds for local public health are scarce and must be prioritized on the basis of costs and benefits, information about the costs of electronic disease surveillance is needed.

In 2008, North Carolina implemented the North Carolina Electronic Disease Surveillance System (NC EDSS). The goal of this study was to describe the resources dedicated to communicable disease surveillance with NC EDSS at the local health department (LHD) level. We examined the cases reported before and after NC EDSS implementation and calculated the personnel costs of communicable disease reporting with NC EDSS. Finally, we assigned LHDs composite scores on the basis of the accuracy and timeliness of case reports and compared costs and surveillance practices between LHDs with better and worse timeliness and accuracy.

METHODS

We created a simple random sample of 30 of 100 North Carolina counties (representing 30 of 85 LHDs) to identify LHD staff for participation. We based the sampling strategy on discussions with local experts. Because little was known about associations between LHD characteristics and surveillance outcomes, and therefore about which stratification factors would be relevant, we chose a simple random sample. We invited 2 staff members from each LHD (60 staff in total) to respond to a survey administered in face-to-face interviews. The first was the LHD’s designated NC EDSS lead, who had supervisory responsibility for NC EDSS. The second was a communicable disease (CD) staff member with responsibility for reporting cases in NC EDSS, randomly selected from a list generated by the NC EDSS lead.

Survey Instrument

We developed separate structured questionnaires for NC EDSS leads and communicable disease staff members. The NC EDSS lead questionnaire focused on LHD organizational practices and staffing capacities. The questionnaire asked the lead to list the staff currently using NC EDSS and the proportion of a full-time equivalent (FTE) position that each staff member spent using NC EDSS; we verified names with a user list generated by the NC EDSS system.

In addition, the questionnaire asked NC EDSS leads and CD staff members about surveillance practices, including how individual case entry was performed, who performed surveillance tasks and how they were performed (review of new case reports, communication about cases, and extraction of data), and daily surveillance and NC EDSS workflows (how work is shared among staff; who is responsible for entering, reviewing, and extracting data; and how surveillance data are used). Finally, the questionnaire asked staff members to complete a log recording the amount of time spent on daily tasks, including NC EDSS, for 5 consecutive workdays.

Data Analysis

We conducted in-person interviews between May and August 2010 and recorded and transcribed the responses. We entered data into a Microsoft Access database and exported the data to SAS version 9.2 (SAS Institute, Cary, NC) for analysis. To adjust for potential confounding by population size, we also performed analyses with LHDs stratified as small (population served < 55 654), medium (population served 55 655–107 427), and large (population served > 107 427) by using the North Carolina 50th and 75th county population percentiles (2009 estimates).9
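The size stratification can be expressed as a small classification rule. The sketch below hard-codes the cutoffs reported above; the function names are hypothetical. One assumption is flagged in the code: as written, the text leaves a population of exactly 55 654 unassigned, so the sketch places it in the small group. A helper for re-deriving the 50th and 75th percentiles is included, with the caveat that percentile definitions differ between software packages (SAS offers several), so recomputed cutoffs may not match the published ones exactly.

```python
import statistics

# Cutoffs reported in the text (North Carolina 2009 county population estimates).
SMALL_MAX = 55_654    # approx. 50th percentile of county populations
MEDIUM_MAX = 107_427  # approx. 75th percentile of county populations


def size_group(population: int) -> str:
    """Classify an LHD as small, medium, or large by population served.

    Assumption: the text defines small as < 55 654 and medium as
    55 655-107 427, which leaves exactly 55 654 unassigned; it is
    treated as small here.
    """
    if population <= SMALL_MAX:
        return "small"
    if population <= MEDIUM_MAX:
        return "medium"
    return "large"


def percentile_cutoffs(county_populations: list[int]) -> tuple[float, float]:
    """Return the (50th, 75th) percentiles of county populations.

    Hypothetical helper. statistics.quantiles uses its default
    'exclusive' method, which may differ from the percentile
    definition used in the original SAS analysis.
    """
    _q1, q2, q3 = statistics.quantiles(county_populations, n=4)
    return q2, q3


# Populations taken from the text: smallest county, medium-group mean, largest county.
for pop in (8_888, 81_010, 923_944):
    print(pop, size_group(pop))
```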

We calculated the cost of staff time by using the best available salary data for each job title. Average salaries for administrative assistants and CD nurses were available by county.10 We used average nursing supervisor salaries from the North Carolina Division of Public Health to approximate salaries for nursing supervisors. These 3 staff categories made up most of the NC EDSS users; we applied their salaries to the few other laboratory and clinical staff users as appropriate. We added benefit costs11 (30%) to the estimated salaries. To calculate local personnel costs for the study period, we multiplied the FTEs reported for each LHD by the appropriate annual salaries and divided the result by 3, because the 4-month study period represents one third of a year. We calculated cost per case as the total local personnel cost divided by the total number of cases reported during the study period, and cost per capita per year as the total personnel cost multiplied by 3 (to represent a full year) divided by the population served by the LHD. For the 18 LHDs that did not report adding staff after implementation of NC EDSS, we also compared the estimated personnel cost per case report in 2007 and 2010; for this comparison, we assumed that the number of FTEs devoted to case reporting was the same in 2007 as the number working with NC EDSS in 2010.
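The cost arithmetic above can be sketched as follows. Only the 30% benefit rate, the division by 3 for the 4-month period, and the multiplication by 3 to annualize come from the text; the function names and the example salaries, FTEs, case count, and population are hypothetical and chosen only to illustrate the calculation.

```python
# Benefit rate added to salaries, as stated in the text.
BENEFIT_RATE = 0.30


def period_personnel_cost(staff: list[tuple[float, float]]) -> float:
    """Personnel cost for the 4-month study period.

    Each entry is (FTE spent using NC EDSS, annual salary in dollars).
    Annual cost = FTE x salary x (1 + benefit rate); dividing by 3
    converts it to the 4-month period (one third of a year).
    """
    annual_cost = sum(fte * salary * (1 + BENEFIT_RATE) for fte, salary in staff)
    return annual_cost / 3


def cost_per_case(period_cost: float, cases_reported: int) -> float:
    """Local personnel cost divided by cases reported in the same period."""
    return period_cost / cases_reported


def cost_per_capita_per_year(period_cost: float, population_served: int) -> float:
    """Annualize the 4-month cost (x 3), then divide by population served."""
    return period_cost * 3 / population_served


# Hypothetical LHD: one CD nurse at 0.9 FTE and one assistant at 0.3 FTE.
staff = [(0.9, 50_000.0), (0.3, 35_000.0)]
period_cost = period_personnel_cost(staff)
print(round(period_cost, 2))                                     # 24050.0
print(round(cost_per_case(period_cost, 200), 2))                 # 120.25
print(round(cost_per_capita_per_year(period_cost, 100_000), 4))  # 0.7215
```

Under the stated assumption of unchanged FTEs, the 2007 comparison reuses the same period cost and divides it by the 2007 case count instead.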

We compared communicable disease data from May 1 through August 31 for 2007 and 2010 to assess the number, accuracy, and timeliness of case reports. We assessed data on vaccine-preventable, sexually transmitted, and other notifiable communicable diseases; syphilis and HIV were not integrated into NC EDSS in 2010, so we excluded them from the study. Data were not available for active tuberculosis reports, so timeliness and accuracy calculations excluded tuberculosis. Reporting of latent tuberculosis infection is not required by the state and is performed in some, but not all, LHDs, so we excluded these cases from all analyses. Some reportable disease cases (e.g., pertussis) can require complex follow-up and contact tracing, whereas others (e.g., chlamydia) require less from LHD staff. To assess how the burden of complex cases was distributed, we calculated the proportion of each LHD’s cases that were chlamydia cases.

Electronic laboratory reporting (ELR) from the North Carolina State Laboratory of Public Health and LabCorp was implemented concurrently with NC EDSS. Electronic laboratory reporting decreases the time required for case processing in LHDs.12 To assess how the benefit of ELR was distributed, we calculated the proportion of cases reported by ELR for each LHD.

We measured accuracy as the proportion of case reports returned to the LHD for corrections or missing data; a lower proportion indicates greater accuracy. We calculated timeliness as the proportion of cases reported to the state within 30 days of receipt by the LHD (30 days is the requested time frame for case report submission). Timeliness could be assessed only for completed case reports. Although case reports without a completion date are not captured in the timeliness calculation, they are an important indicator of untimely case handling. To assess incomplete case reports, we therefore calculated the proportion of case reports that were created but not further processed for 45 or more days.
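The three outcome measures can be sketched over a list of case records. The record layout is hypothetical (NC EDSS field names are not given in the text), and two further assumptions are labeled in the code: the date the LHD received a report stands in for its creation date, and the accuracy measure uses all case reports as the denominator.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class CaseReport:
    # Hypothetical record layout; field names are illustrative only.
    received: date                  # date the LHD received (and, assumed, created) the report
    submitted: Optional[date]       # date submitted to the state; None if not completed
    returned_for_correction: bool   # returned to the LHD for corrections or missing data
    processed_after_creation: bool  # any processing step after the report was created


def surveillance_metrics(reports: list[CaseReport], as_of: date) -> dict[str, float]:
    completed = [r for r in reports if r.submitted is not None]
    # Timeliness: share of completed reports submitted within 30 days of receipt.
    timely = sum((r.submitted - r.received).days <= 30 for r in completed)
    # Accuracy measure: share of all reports returned for corrections (lower is better).
    returned = sum(r.returned_for_correction for r in reports)
    # Incomplete: created but not further processed for 45 or more days as of `as_of`.
    unprocessed = sum(
        not r.processed_after_creation and (as_of - r.received).days >= 45
        for r in reports
    )
    return {
        "timeliness": timely / len(completed) if completed else float("nan"),
        "returned_for_correction": returned / len(reports),
        "unprocessed_45_days": unprocessed / len(reports),
    }


reports = [
    CaseReport(date(2010, 5, 3), date(2010, 5, 20), False, True),  # submitted in 17 days
    CaseReport(date(2010, 5, 3), date(2010, 6, 28), True, True),   # 56 days, returned
    CaseReport(date(2010, 6, 1), None, False, False),              # untouched for 91 days
    CaseReport(date(2010, 8, 25), None, False, False),             # only 6 days old
]
print(surveillance_metrics(reports, as_of=date(2010, 8, 31)))
# {'timeliness': 0.5, 'returned_for_correction': 0.25, 'unprocessed_45_days': 0.25}
```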

To summarize surveillance outcomes, we created a composite score for each LHD, awarding 1 point for each measure on which the LHD was in the best third of the LHDs surveyed: timeliness (> 79% of completed case reports submitted to the state in < 30 days), accuracy (< 17% returned for corrections or missing data), and case reports created but not further processed (< 1% of total case reports > 45 days old and not further processed). We classified LHDs with 2 or 3 points as having best surveillance outcomes and those with 0 or 1 points as “not best.” We used the t test and the Pearson exact χ2 test, as appropriate, to assess statistical differences between these groups.
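The scoring rule above can be sketched as follows. The three thresholds are the sample-specific best-third cutoffs reported in the text; the function names are hypothetical. The `best_third_cutoff` helper is an illustrative assumption showing how such cutoffs might be re-derived in a different sample with `statistics.quantiles`; the text does not state which percentile definition was used.

```python
import statistics

# Best-third thresholds reported in the text for this sample.
TIMELINESS_MIN = 0.79   # > 79% of completed reports submitted in < 30 days
RETURNED_MAX = 0.17     # < 17% returned for corrections or missing data
UNPROCESSED_MAX = 0.01  # < 1% of reports > 45 days old and not further processed


def composite_score(timeliness: float, returned: float, unprocessed: float) -> int:
    """Award 1 point for each measure on which the LHD is in the best third."""
    return (
        int(timeliness > TIMELINESS_MIN)
        + int(returned < RETURNED_MAX)
        + int(unprocessed < UNPROCESSED_MAX)
    )


def outcome_group(score: int) -> str:
    """2 or 3 points = best; 0 or 1 point = not best."""
    return "best" if score >= 2 else "not best"


def best_third_cutoff(values: list[float], higher_is_better: bool) -> float:
    """Hypothetical helper: tertile boundary marking the best third of a sample.

    statistics.quantiles(n=3) returns the two tertile cut points; the upper
    one bounds the best third when higher values are better, the lower one
    when lower values are better.
    """
    lower, upper = statistics.quantiles(values, n=3)
    return upper if higher_is_better else lower


print(outcome_group(composite_score(0.85, 0.10, 0.02)))   # best (2 points)
print(outcome_group(composite_score(0.70, 0.20, 0.005)))  # not best (1 point)
```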

RESULTS

Surveys were completed for 28 of 30 LHDs invited to participate, for a 93% response rate. Communicable disease nurses from 21 of 28 (75%) LHDs returned weeklong activity logs. One LHD reported an extremely high number of FTEs per case report, which was not consistent with other data from that LHD; therefore, we removed this outlier from analysis for a final sample of 27 LHDs. County populations associated with each LHD ranged from 8888 to 923 944 persons; there were 9 small, 8 medium, and 10 large LHDs in the sample. There were no significant differences in population between sample LHDs and all North Carolina LHDs (Table 1).

TABLE 1—

Sample Local Health Department Population Compared With North Carolina Total Population: May–August 2010

| Characteristic | Sample (n = 27), Mean ±SD | All North Carolina LHDs, Mean ±SD |
| --- | --- | --- |
| LHD population | 132 899 ±181 273 | 95 725 ±142 523 |
| Cases reported | 368 ±530 | 253 ±450 |
| Proportion of cases reported by ELR, % | 53 ±19 | 54 ±17 |
| Proportion of chlamydia cases, % | 53 ±11 | 53 ±12 |
| Characteristic by LHD size group^a | | |
| Population | | |
| Small | 32 873 ±15 175 | 27 489 ±13 897 |
| Medium | 81 010 ±20 792 | 74 395 ±16 409 |
| Large | 298 986 ±250 867 | 253 507 ±216 838 |
| Cases reported | | |
| Small | 68 ±64 | 55 ±54 |
| Medium | 212 ±96 | 179 ±95 |
| Large | 839 ±715 | 722 ±709 |
| Proportion of cases reported by ELR, % | | |
| Small | 60 ±18 | 57 ±17 |
| Medium | 56 ±14 | 59 ±11 |
| Large | 39 ±20 | 42 ±18 |
| Proportion of chlamydia cases, % | | |
| Small | 52 ±15 | 52 ±15 |
| Medium | 50 ±9 | 54 ±8 |
| Large | 55 ±13 | 54 ±9 |

Note. ELR = electronic laboratory reporting; LHD = local health department.

^a Small (n = 9): population served < 55 654; medium (n = 8): population served 55 655–107 427; and large (n = 10): population served > 107 427.

From May to August 2007, 8701 cases of communicable disease were reported to these LHDs via paper cards; during the same period in 2010, 10 868 cases were reported electronically via NC EDSS, an increase of 2167 (25%) cases. In 2010, 57% of all case reports submitted were for chlamydia. Thirty-eight percent of all cases reported were reported by ELR. There were no significant differences in the total number of cases, the proportion of cases submitted by ELR, and the proportion of case reports that were for chlamydia between the sample LHDs and all North Carolina LHDs (Table 1). However, the proportion of cases reported by ELR was lower among large LHDs (Table 1; P = .003).

The implementation of NC EDSS increased the number of data elements entered by LHD staff; some data entry previously performed by the state became the responsibility of the LHD, and data elements were added for some reportable diseases. When asked to report changes to case management arising from the implementation of NC EDSS, 9 of 27 (33%) NC EDSS leads reported that they had added staff, had increased time spent by existing staff on CD surveillance, or both.

Local Personnel Costs

The average LHD dedicated 1.2 FTEs, or 48 hours per week (range = 0.1–6 FTEs), to using NC EDSS, and most of this time (0.9 FTEs, or 36 hours per week per LHD) was contributed by CD nurses. The majority of individual staff members using NC EDSS (88 individuals; 65%) spent less than 8 hours per week using the system. Most employees, in large, medium, and small LHDs, had other duties in addition to using NC EDSS, such as patient care, case and contact investigation and interviews, laboratory duties, and administrative duties. The following findings describe those FTEs dedicated to NC EDSS use.

The number of case reports processed per FTE per month varied widely (range = 16–154 cases per FTE per month; Figure A, available as a supplement to the online version of this article at http://www.ajph.org). The average number of case reports processed per FTE was smaller in small LHDs (50 cases per FTE per month) than in medium or large LHDs (72 and 89, respectively; between-group differences P = .09). Local personnel costs per case ranged from $27 to $373 (Figure B, available as a supplement to the online version of this article at http://www.ajph.org), and the average cost per case report was higher for small LHDs ($162) than medium ($91) or large LHDs ($74; between-group differences P = .05). The average local personnel cost per capita per year for electronic disease surveillance activities was $0.70.

In the 18 LHDs where NC EDSS leads did not report adding staff or staff time in response to NC EDSS implementation, the average number of cases per FTE per month was lower in 2007 than in 2010 (52 vs 63 cases per FTE per month). The average personnel cost per case report was higher in 2007 ($163) than in 2010 ($119), although this difference was not statistically significant (P = .29).

Surveillance Outcomes and Practices

We classified 8 LHDs as having best surveillance outcomes (as indicated by 2 or 3 points on the composite score); these were 3 of 9 small LHDs, 3 of 8 medium LHDs, and 2 of 10 large LHDs. Neither the mean proportion of chlamydia cases nor the proportion of cases that were reported by ELR differed significantly between “best” and “not-best” LHDs (Table 2).

TABLE 2—

Local Health Department Characteristics by Most Timely and Accurate (Best) and Less Timely and Accurate (Not-Best) Surveillance Outcomes: 27 North Carolina Local Health Departments, May–August 2010

| Characteristic | Best, No. (%) or Mean ±SD (n = 8) | Not-Best, No. (%) or Mean ±SD (n = 19) |
| --- | --- | --- |
| LHD size^a | | |
| Small | 3 (33) | 6 (67) |
| Medium | 3 (37) | 5 (63) |
| Large | 2 (20) | 8 (80) |
| Proportion of cases reported by ELR, % | 55 ±16 | 48 ±20 |
| Small LHDs | 56 ±17 | 61 ±20 |
| Medium LHDs | 64 ±8 | 50 ±14 |
| Large LHDs | 41 ±19 | 38 ±20 |
| Proportion of chlamydia cases, % | 54 ±9 | 52 ±12 |
| Small LHDs | 54 ±9 | 51 ±12 |
| Medium LHDs | 51 ±2 | 50 ±12 |
| Large LHDs | 58 ±18 | 54 ±12 |

Note. ELR = electronic laboratory reporting; LHD = local health department.

^a Small (n = 9): population served < 55 654; medium (n = 8): population served 55 655–107 427; and large (n = 10): population served > 107 427.

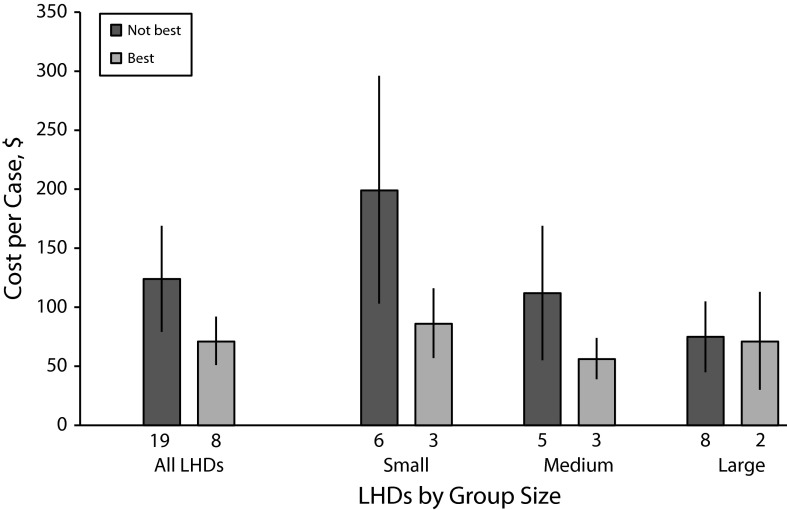

Local health departments with best surveillance outcomes had lower average personnel cost per case ($71) than LHDs in the “not best” group ($124; P = .03; Figure 1). Average cost per capita per year was also lower for LHDs with best surveillance outcomes ($0.59 vs $0.75), although this difference was not significant (P = .42). Among LHDs with best surveillance outcomes, the cost per case report did not differ by LHD size; among LHDs in the “not best” group, the cost per case was lower in larger LHDs (Figure 1), but this difference was not significant (P = .27).

FIGURE 1—

Average personnel cost per case report by surveillance outcome and local health department size group: 27 North Carolina local health departments, May–August 2010.

Note. LHD = local health department. Whiskers indicate 95% confidence intervals. LHDs stratified as small (population served < 55 654), medium (population served 55 655–107 427), and large (population served > 107 427) by using the North Carolina 50th and 75th county population percentiles (2009 estimates).9

Surveillance practices reported more frequently in LHDs with best surveillance outcomes are presented in Table 3. These practices included using the NC EDSS “wizard” for data entry rather than looking at every case data entry screen and manually identifying the required case report form questions, daily review of LHD lists of cases awaiting processing in NC EDSS, entry of cases by public health nurses only, and use of additional risk information gathered for certain communicable diseases. The interviewed CD nurses’ confidence in their computer abilities was higher in LHDs with best surveillance outcomes. However, none of these differences were statistically significant. Lead and CD nurse years of experience and lead nurse confidence in their computer abilities were not higher in LHDs with best surveillance outcomes. None of the practices listed in Table 3 were associated with a difference in the proportion of cases reported by ELR.

TABLE 3—

Surveillance Practices Performed by Local Health Departments With Most Timely and Accurate (Best) and Less Timely and Accurate (Not-Best) Surveillance Outcomes: 27 North Carolina Local Health Departments, May–August 2010

| Surveillance Practices | No. (%) Reporting Practice (n = 27) | No. (%) Reporting Practice Among Best LHDs (n = 8) | No. (%) Reporting Practice Among Not-Best LHDs (n = 19) |
| --- | --- | --- | --- |
| LHD staff | | | |
| Use risk information for local purposes | 9 (33) | 4 (80)^a | 5 (31) |
| Use surveillance data for evaluation | 11 (41) | 5 (63) | 6 (32) |
| Use NC EDSS to communicate with state public health agency | 23 (85) | 8 (100) | 15 (79) |
| Use NC EDSS data in annual reports | 19 (70) | 7 (88) | 12 (63) |
| Link cases to other cases in NC EDSS | 17 (63) | 6 (75) | 11 (58) |
| Use templates and letters provided in NC EDSS | 11 (42) | 4 (50) | 7 (39) |
| CD nurse | | | |
| Uses “wizard” to enter data in NC EDSS | 21 (78) | 8 (100) | 13 (68) |
| Reports he or she can check NC EDSS task lists every day | 15 (56) | 6 (75) | 9 (47) |
| Is “very confident” or “confident” of computer abilities | 22 (81) | 8 (100) | 14 (74) |
| NC EDSS case entry by public health nurses only (no administrative or laboratory staff) | 16 (59) | 6 (75) | 10 (53) |

Note. CD = communicable disease; LHD = local health department; NC EDSS = North Carolina Electronic Disease Surveillance System.

^a Three “don’t know” responses.

DISCUSSION

By 2010, following implementation of the electronic reportable disease surveillance system in North Carolina in 2007–2008, both the amount of data entered per case and the total number of cases reported had increased. Our findings suggest that the transitions to electronic case reporting by LHDs and to ELR have translated into a lower personnel cost per case report processed. The average personnel costs per case report and per capita were lower in LHDs with the best surveillance timeliness and accuracy, although only the difference in cost per case was statistically significant. This suggests that implementing best practices can improve case reporting efficiency even in smaller LHDs, and that such practices can be identified and applied in all LHDs to increase workforce efficiency and lower personnel costs.

The implementation of NC EDSS and ELR in 2007–2008 occurred in tandem with other changes in reportable disease case management in North Carolina. First, the number of data elements entered was increased. Second, a requirement that LHD staff confirm correct treatment of reported cases of chlamydia and gonorrhea infection was implemented. Reports of these infections constituted the majority of reportable disease case reports (in 2010, 79% of total cases), and the time required to confirm treatment of all reported chlamydia and gonorrhea cases is considerable. Third, LHDs were invited to enter all reported cases into NC EDSS, rather than only those cases meeting the case definition; some LHDs began using NC EDSS to record all locally reported suspected cases before confirmation of case status. The study findings suggest that NC EDSS implementation has led to greater efficiency in case processing, allowing LHDs to process more cases, to enter more data per case, and to confirm treatment on a majority of cases, without increased resources for this work.

Costs for surveillance as a separate activity are rarely calculated or documented by public health agencies. The National Association of County and City Health Officials (NACCHO) national profile survey13 provides information on the number of “epidemiologists” employed by LHDs; however, some LHD staff in North Carolina reported anecdotally that they do not consider staff performing surveillance duties (generally, CD nurses) to be “epidemiologists.” Therefore, we did not use NACCHO survey results to assess surveillance capacity in North Carolina LHDs. In this study, the average cost of $0.70 per capita per year for electronic disease surveillance was approximately 2% of the average total expenditure per capita for all public health activities ($42) calculated for LHDs in 42 US states in 2005.14 The personnel cost per case report varied widely among the LHDs included in the study, reflecting different organizational structures and practices and suggesting potential opportunities for improvement.

Some of the findings presented suggest a relationship between surveillance outcomes and LHD size. The proportion of cases reported by ELR was lower among large LHDs, as was the cost per case reported; fewer large LHDs were in the top third for timeliness and accuracy. Because processing of ELR cases is faster,12 the reduced timeliness seen in large LHDs may be linked to the lower proportion of cases reported by ELR. Practices and organizational structures differ in large LHDs.15–17 One study found that larger LHD size was associated with better performance of the essential public health services, which include surveillance (e.g., essential service 2: diagnose and investigate health problems).17 This finding was broad (e.g., service 2 includes emergency response as well as surveillance activities) but showed service delivery increasing in LHDs serving up to 500 000 persons and leveling off or decreasing with further increases in size. Our findings add specificity to this earlier work, suggesting that although large LHDs may experience economies of scale (e.g., report more cases per FTE), this does not necessarily benefit surveillance timeliness and accuracy.

This study compared surveillance practices between the best third of LHDs in the sample (as defined by our survey data) and the remaining two thirds. The practices identified therefore reflect current best practice as seen in our sample rather than best practices defined by an externally set standard. This approach has the advantage of identifying practices that are known to be feasible in LHDs. Practices reported more frequently by LHDs with best surveillance outcomes and lower cost per case may be worth adopting more broadly. These practices fall into 2 categories: practices that result in more efficient use of NC EDSS (such as using the “wizard” and checking task lists every day) and practices that incorporate surveillance data into local activities (such as using surveillance data for program evaluation and annual reports). Although our findings cannot demonstrate that these practices are directly responsible for more timely, accurate, or lower-cost surveillance, the practices are logical and should be recommended to all LHDs.

Limitations

These findings should be read with the following limitations in mind. The simple random sample may have underrepresented very large LHDs (which are a relatively small proportion of all LHDs in North Carolina), and a stratified sample might have allowed us to detect significant differences in practices and outcomes in very large LHDs. Cost estimates were based solely on salaries and benefits and did not include nonpersonnel costs such as local infrastructure, resources and supplies, and training. Furthermore, we calculated FTE time only for direct use of NC EDSS, which did not capture epidemiological case investigation activities performed away from the system interface. Including this time would increase the estimated personnel cost per case report.

Because we had no consensus on how to define them a priori, we defined our best and not-best categories on the basis of timeliness and accuracy seen in our survey sample; these definitions may not be generalizable to the remainder of NC counties. However, analyses of timeliness for all LHDs (data not shown) show no significant difference in timeliness between sample LHDs and the other LHDs.

We collected data for this study during the summer. There are seasonal differences in the number of case reports for some diseases; however, most of the cases reported in this data set (79%) were chlamydia and gonorrhea, which show little seasonal variation. Seasonal changes in the composition of the case report burden are likely to be similar across LHDs, so any bias resulting from seasonality would likely be nondifferential.

Finally, our findings suggest that some LHDs are reporting more cases with equivalent staff time following NC EDSS implementation. However, without administrative data on the number of FTEs used for surveillance duties before and after NC EDSS implementation, no definitive conclusions can be drawn. These findings should also be considered in the context of North Carolina public health governance, which may affect surveillance: most North Carolina LHDs are undergoing or have completed the state-level accreditation process that began in 2004.18–20

Conclusions

By 2007, all states were in the process of implementing some form of statewide EDSS.3 Implementation of these systems has been completed in many states and has produced efficiencies in reporting,21 but it has also required adjustments in state and local health department case reporting practices. Hopkins has noted traditional attributes of surveillance systems (e.g., timeliness and positive and negative predictive value) that should be considered in designing electronic surveillance systems,22 but little information is available on how best to structure electronic systems to improve efficiency and cost-effectiveness in case reporting. In the meantime, sharing useful LHD practices may be a simple way to identify cost efficiencies in electronic reportable disease surveillance.

Acknowledgments

This research was supported by the Centers for Disease Control and Prevention (CDC; grant 1P01 TP 000296).

The authors would like to thank North Carolina Division of Public Health and local health department staff members for their time and insights. We are also grateful to Del Williams, Rob Pace, and Jean-Marie Maillard at the North Carolina Division of Public Health for valuable discussion of these results.

This research was carried out by the North Carolina Preparedness and Emergency Response Research Center, which is part of the UNC Center for Public Health Preparedness at the University of North Carolina at Chapel Hill’s Gillings School of Global Public Health.

Note. The contents are solely the responsibility of the authors and do not necessarily represent the official views of CDC. Additional information can be found at http://cphp.sph.unc.edu/ncperrc.

Human Participant Protection

The survey was approved as exempt from review by the institutional review board of the University of North Carolina at Chapel Hill.

References

- 1. Thacker SB, Berkelman RL. Public health surveillance in the United States. Epidemiol Rev. 1988;10:164–190. doi:10.1093/oxfordjournals.epirev.a036021.

- 2. Centers for Disease Control and Prevention. State electronic disease surveillance systems—United States, 2007 and 2010. MMWR Morb Mortal Wkly Rep. 2011;60(41):1421–1423.

- 3. Boulton ML, Hadler J, Beck AJ, Ferland L, Lichtveld M. Assessment of epidemiology capacity in state health departments, 2004–2009. Public Health Rep. 2011;126(1):84–93. doi:10.1177/003335491112600112.

- 4. Octania-Poole S, Tan C, Bednarczyk M, Troutman E, Verma A. HIMSS Nicholas E. Davies Award Application. 2008.

- 5. Mettenbrink C. Business intelligence: harnessing the power of information. Oral presentation at: Council of State and Territorial Epidemiologists Annual Conference; June 5, 2012; Omaha, NE.

- 6. Tassini K, Shteye P, Mehta V, Dey AK, Voorhees R, Wagner M. Comprehensive evaluation of the infectious disease bio-surveillance system in a county public health department. Oral presentation at: Council of State and Territorial Epidemiologists Annual Conference; June 14, 2011; Pittsburgh, PA.

- 7. Centers for Disease Control and Prevention. Assessment of epidemiology capacity in state health departments—United States, 2009. MMWR Morb Mortal Wkly Rep. 2009;58(49):1373–1377.

- 8. Centers for Disease Control and Prevention. Status of state electronic disease surveillance systems—United States, 2007. MMWR Morb Mortal Wkly Rep. 2009;58(29):804–807.

- 9. North Carolina Office of State Budget and Management. 2009 certified county population estimates. Available at: http://www.osbm.state.nc.us/ncosbm/facts_and_figures/socioeconomic_data/population_estimates/demog/countytotals_2000_2009.html. Accessed March 15, 2012.

- 10. University of North Carolina, Chapel Hill, School of Government. County salaries in North Carolina. Available at: http://www.sog.unc.edu/node/518. Accessed January 25, 2012.

- 11. US Bureau of Labor Statistics. Economic news release: employer costs for employee compensation. Washington, DC: US Department of Labor; 2011. USDL-11-1305.

- 12. Samoff E, Fangman MT, Fleischauer AT, Waller AE, MacDonald PDM. ELR decreases the time required to process cases at state and local public health agencies. Oral presentation at: Council of State and Territorial Epidemiologists Annual Conference; June 5, 2012; Omaha, NE.

- 13. 2010 National Profile of Local Health Departments. Washington, DC: National Association of County and City Health Officials; 2011.

- 14. Erwin PC, Greene SB, Mays GP, Ricketts TC, Davis MV. The association of changes in local health department resources with changes in state-level health outcomes. Am J Public Health. 2011;101(4):609–615. doi:10.2105/AJPH.2009.177451.

- 15. Erwin PC. The performance of local health departments: a review of the literature. J Public Health Manag Pract. 2008;14(2):E9–E18. doi:10.1097/01.PHH.0000311903.34067.89.

- 16. Vest JR, Menachemi N, Ford EW. Governance’s role in local health departments’ information system and technology usage. J Public Health Manag Pract. 2012;18(2):160–168. doi:10.1097/PHH.0b013e318226c9ef.

- 17. Mays GP, McHugh MC, Shim K, et al. Institutional and economic determinants of public health system performance. Am J Public Health. 2006;96(3):523–531. doi:10.2105/AJPH.2005.064253.

- 18. The North Carolina Division of Public Health and The North Carolina Institute for Public Health. North Carolina local health department accreditation. Available at: http://nciph.sph.unc.edu/accred. Accessed January 20, 2012.

- 19. Public Health Accreditation Board. Standards and Measures. Version 1.0. Available at: http://www.phaboard.org/wp-content/uploads/PHAB-Standards-and-Measures-Version-1_0.pdf. Accessed January 20, 2012.

- 20. Samoff E, MacDonald PDM, Fangman MT, Waller AE. Local surveillance practice evaluation in North Carolina and value of new national accreditation measures. J Public Health Manag Pract. 2013;19(2):146–152. doi:10.1097/PHH.0b013e318252ee21.

- 21. Hamilton JJ, Goodin K, Hopkins RS. Automated application of case classification logic to improve reportable disease surveillance. Oral presentation at: Public Health Information Network Conference; August 30–September 3, 2009; Atlanta, GA. Available at: https://cdc.confex.com/cdc/phin2009/webprogram/Paper21169.html. Accessed April 13, 2012.

- 22. Hopkins RS. Design and operation of state and local infectious disease surveillance systems. J Public Health Manag Pract. 2005;11(3):184–190. doi:10.1097/00124784-200505000-00002.