Abstract

Given recent interest in syllabic rates (~2-5 Hz) for speech processing, we review the perception of “fluctuation” range (~1-10 Hz) modulations during listening to speech and technical auditory stimuli (AM and FM tones and noises, and ripple sounds). We find evidence that the temporal modulation transfer function (TMTF) of human auditory perception is not simply low-pass in nature, but rather exhibits a peak in sensitivity in the syllabic range (~2-5 Hz). We also address human and animal neurophysiological evidence, and argue that this bandpass tuning arises at the thalamocortical level and is more associated with non-primary regions than primary regions of cortex. The bandpass rather than low-pass TMTF has implications for modeling auditory central physiology and speech processing: this implicates temporal contrast rather than simple temporal integration, with contrast enhancement for dynamic stimuli in the fluctuation range.

1. INTRODUCTION

A theme of this special issue is the role of vocalizations as stimuli in auditory neuroscience. Vocalizations can be considered as part of a larger class of communication signals used by other species and man-made devices, which by necessity exhibit modulations. As Picinbono (1997) states: “Let us remember that a purely monochromatic signal such as a cos (ωt+ φ) cannot transmit any information. For this purpose, a modulation is required, …” Likewise, unmodulated noise cannot transmit any information, so we can expect on a priori grounds a link between AM/FM (amplitude/frequency modulation) studies and speech studies (Rosen, 1992). In fact, the same auditory regions involved in speech processing are strongly activated by AM/FM sounds. For example, the non-primary cortical areas most activated for AM/FM processing in the syllabic (~2-5 Hz) range are also implicated in pathways for intelligible speech (Scott et al., 2006, Hall, 2012) (Section 4). Thus, we have chosen as our contribution to “Communication Sounds in the Brain” a new consideration of AM/FM processing with relevance to speech. A premise of this review is that careful study of AM/FM results will lead to insights for speech processing.

Recent reviews (Joris et al., 2004, Malone and Schreiner, 2010) cover well the periodicity pitch range surrounding voice fundamental frequency (F0, ~50-500 Hz), and there is a well-established speech processing literature on extracting F0. The roughness range (~25-125 Hz) has also been studied extensively and treated well in recent reviews. However, the slower ranges of AM/FM (to be termed the ‘fluctuation’ range, ~1-10 Hz) are traditionally understudied. We will also find that the neural systems most strongly implicated in fluctuation perception – the ‘belt’ and ‘parabelt’ regions of the CNS – are far less studied than ‘core’ regions (as commented by Goldstein and Knight, 1980, Hall, 2005). In parallel, the slower aspects of speech – syllabic time scales, prosody, stress, intonation, emotional aspects, etc. – are understudied relative to the spectrotemporally-detailed aspects for phonetic purposes. In further parallel, algorithmic approaches to speech processing have only rarely (and more recently) focused on longer time scales. Given the recent interest in syllabic time scales (~2-5 Hz) for speech perception, human neurophysiology, and computer speech processing (Hall, 2005, Greenberg, 2006, Ghitza and Greenberg, 2009, Giraud and Poeppel, 2012, Obleser et al., 2012, Peelle and Davis, 2012), we have chosen to review these time scales in more basic studies of auditory perception and physiology. This is not a comprehensive review of AM/FM sounds, but rather a focus on the fluctuation (~1-10 Hz) range and the corresponding time scales of speech. Before embarking on our review, we offer our thoughts on the theme for this special issue.

1.1. What are the roles of speech and modulated sounds in auditory neuroscience?

In the exploratory phase of empirical data gathering, speech is a useful stimulus because, amongst other things, it elicits robust activations throughout the auditory nervous system. These yield overall observations directly concerning the stimulus set of interest, which our eventual models must explain. However, the empirical observations available to us – a variety of auditory stations in various species under various anesthetics, using various particular synthetic or natural speech sounds for a given study – do not allow us to easily perceive the essential patterns to be included in the model building exercise. Even a complete catalogue of each auditory station responding to each possible phoneme or speech sound would likely remain inadequate. On the other hand, technical stimuli (AM/FM) can be arrayed systematically according to a single parameter (modulation frequency) and related directly to communication theory and signals/systems theory. This obviously accelerates the model building exercise during the difficult early phases, when even the overall layout and essential features of the models are still in question. However, we find that speech is an essential stimulus again in the final stages of model building – the final selection of model structure and specification of model parameters. Since speech is taken to be the stimulus set of interest, the final least-squares or other fit should be determined by the use of speech stimuli whenever possible. We note in this context that speech is usually ‘sufficiently exciting’, which is a mathematical requirement in system identification (Ljung, 1999), and essentially means that speech is sufficiently rich in spectrotemporal features to cover the signal space of interest. In some contexts, where the modeler has already chosen a certain model structure (for example, the spectro-temporal receptive field, STRF), one can usefully skip straight to the use of speech as a stimulus for the final least-squares fit of model parameters. But as we seek more realistic models of CNS function, with new aspects to exploit for speech processing applications, we may require ongoing use of technical stimuli.

We are at a point in history where we have good models of the auditory periphery for most speech processing purposes. By a ‘good’ model is meant one with broad explanatory power, accurate predictions for arbitrary inputs, and as few parameters as possible (the principle of parsimony). Adequate models of the cochlear nucleus appear to be arriving or nearly on the horizon, but this still places us some distance from a complete computational model of the auditory CNS. Before arriving at a full physiological model, we can hope to arrive at simpler models which are considerably abstracted from actual physiological details (yet including as much physiological insight as possible). In order to approach the modeling problem for auditory CNS, certain simplifications are useful or necessary at the early stages. First, we can ignore binaural/spatial aspects in a first model for speech processing purposes (other than the multispeaker situation, where binaural cues are essential, Cherry, 1953, Bregman, 1990, Schimmel et al., 2008). However, more severe simplifications appear to be required in order to relate psychophysics, human neuroscience, animal neurophysiology, and computer speech processing together in a comprehensible way.

We suggest that studies of modulated (AM/FM) sounds may serve as an intermediate stage, before final model specifications, in the long-term goals of speech neurophysiology and modeling. Extensive bodies of work are already available concerning AM/FM sounds in communication theory, signals and systems theory, human psychophysics, and animal neurophysiology. For a given modulation type, there is a systematic space of signals controlled by a single parameter (modulation frequency), allowing unambiguous mapping across research domains into a single orderly framework. While this is still not a sufficiently complicated space to understand all aspects of speech, it is a long way in the right direction compared to clicks and tones, the traditional technical stimuli. The fact that many workers have adopted modulation filter banks (Kay and Matthews, 1972, Dau et al., 1997) or related approaches (Greenberg and Kingsbury, 1997), i.e. adding to the spectral and temporal dimensions a modulation dimension (Atlas and Shamma, 2003, Singh and Theunissen, 2003), indicates the utility of having a stimulus set which can be systematically ordered along the modulation frequency axis (as opposed to various random stimuli).

AM/FM sounds also have the advantage of lacking spectral structure. We noted that severe simplification is often required at early model building stages, such as ignoring binaural/spatial processing. We can also ignore spectral-domain pitch processing for AM/FM sounds below the range where periodicity pitch is elicited (below ~50 Hz), where the resulting spectral structure is not resolvable by the ear. Thus, we can ignore two-tone interaction, lateral inhibition, and other complexities of cross-spectral processing. Results discussed below (Sections 2, 4) indicate that spectro-temporal processing is to a first approximation separable, such that spectral and temporal processing studied separately can be recombined to predict spectro-temporal results. Before auditory CNS models become available for arbitrary signals, preliminary models to account for temporal stimuli are likely to appear. Since speech can be understood by temporal cues alone (Shannon et al., 1995), this further suggests that study of temporal processing in isolation from complex spectral structure may serve as a first approximation for preliminary models. However, as we argued above, these models should then be tested for parameter specification by use of natural speech signals when possible.

Finally, there is abundant evidence that the same basic auditory percepts experienced during listening to modulated sounds (‘fluctuation’, ‘roughness’, ‘periodicity pitch’) are also experienced when the same modulation frequencies are present in the speech signal. Voice fundamental frequency (F0), from glottal pulse rate, elicits the same basic pitch sensation as periodic clicks or AM/FM stimuli of the same frequency. Glottal shimmer (AM) and jitter (FM) result in roughness range (~25-125 Hz) modulations, and correspondingly elicit a perception of roughness in the voice (Wendhal, 1966b, Wendhal, 1966a, Coleman, 1971). However, tremolo (AM) and vibrato (FM) in the voice occur below the roughness range (~2-20 Hz, usually ~7 Hz), and generally sound pleasing and form part of musical technique (Seashore, 1936, Potter et al., 1947). Thus, perception of AM/FM sounds directly predicts perception of vocalizations, in so far as the same modulation rates are present. In Section 2 we consider these basic percepts for AM/FM sounds, where it should be kept in mind that these are the same basic percepts experienced during listening to vocalization stimuli.

2. BASIC AUDITORY PERCEPTS FOR AM AND FM SOUNDS

Before narrowing our focus to the fluctuation range (~1-10 Hz), we set up the context of the full range of AM/FM percepts.

2.1. AM tones

The most basic modulated sounds are “beats”, which consist of two sinusoidal tones at frequencies f1 and f2 added together. The resulting sound exhibits amplitude modulations at the frequency fm = |f1 - f2|. Beats were already understood by the time of Helmholtz (1863, see Wever, 1929 for history), who introduced the term ‘roughness’ for two violin notes beating at fm = ~30 Hz. A second means of generating AM tones is to multiply a pure tone by an envelope of frequency fm. The perception of these two types of AM sound is essentially identical and they are summarized together (Figs. 1 and 2). To our knowledge, only one author has used magnitude scaling over the full range of AM (from fluctuation through roughness to periodicity pitch), and thus obtained a self-consistent and fairly comprehensive data set (Fastl, 1977b, Fastl and Stoll, 1979, Fastl, 1982, Fastl, 1983, Fastl and Zwicker, 2007). In Fig. 1, we summarize Fastl’s data for AM tones, as obtained carefully from figures in his text (Fastl and Zwicker, 2007). Fig. 2 includes a variety of relevant data collected over the decades for comparison, and for delineating existence vs. non-existence regions.
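The relation fm = |f1 - f2| for beats follows from the sum-to-product identity for sines. The following is a minimal numerical sketch of that identity, assuming two equal-amplitude tones; the function names and the example frequencies (1000 and 1004 Hz) are illustrative choices, not values from the cited studies.

```python
import math

def beat_sum(f1, f2, t):
    """Two equal-amplitude tones at f1 and f2 (Hz) added together."""
    return math.sin(2 * math.pi * f1 * t) + math.sin(2 * math.pi * f2 * t)

def beat_product(f1, f2, t):
    """The same waveform written as a carrier (mean frequency) times a slow envelope."""
    carrier = math.sin(math.pi * (f1 + f2) * t)
    envelope = 2.0 * math.cos(math.pi * (f1 - f2) * t)
    return carrier * envelope

def beat_rate(f1, f2):
    """Rate (Hz) at which the envelope magnitude |2 cos(pi (f1 - f2) t)| repeats."""
    return abs(f1 - f2)

# Illustrative values only: compare the two forms over one second at 8 kHz sampling.
worst = max(abs(beat_sum(1000, 1004, k / 8000) - beat_product(1000, 1004, k / 8000))
            for k in range(8000))
print(worst < 1e-9, beat_rate(1000, 1004))
```

The envelope term oscillates at half the difference frequency, but its magnitude (which is what is heard as a loudness fluctuation) repeats at the full difference |f1 - f2|.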

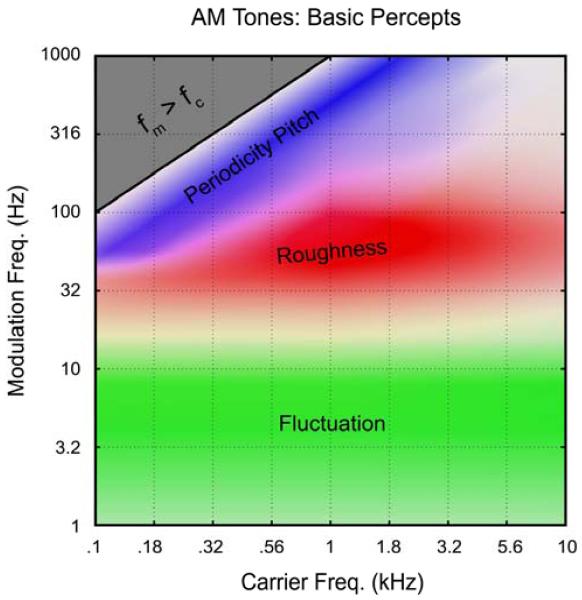

Figure 1.

The three basic perceptual qualities experienced during listening to AM tones, based quantitatively on the magnitude scaling data of Fastl (1983; Fastl and Zwicker 2007). All results were obtained at a comfortable listening level (e.g., 70 phon), with 100% AM modulation depth. Note that the periodicity pitch and roughness ranges overlap (purple color). The peak of pitch strength is always experienced when fm = fc/2, because in this case the lower sideband is positioned at precisely the fundamental frequency. Roughness is defined relative to a standard of fc = 1 kHz and fm = 70 Hz. Similar overall results are obtained for AM noise and FM tones, except that pitch strength is much weaker for AM noise, and roughness is much stronger for FM tones. Note that the identical percepts are elicited by speech stimuli in so far as the spectrogram exhibits modulations in the appropriate ranges. For speech, ‘periodicity pitch’ is ‘voice fundamental’, and ‘fluctuation’ is sometimes called ‘rhythm’.

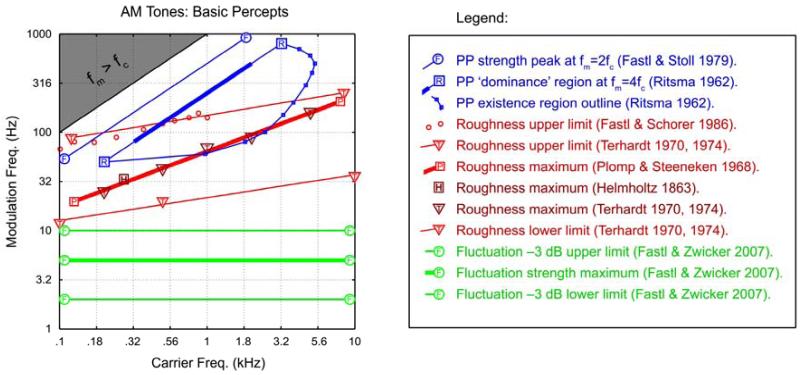

Figure 2.

The three basic percepts during listening to AM tones, according to various authors. The thin lines can be taken to indicate boundaries of existence regions and the thick lines can be taken to indicate a maximal or dominant region for the percept. Fluctuation (green): The thick green line indicates the peak of fluctuation strength at 5 Hz, and thin lines indicate approximately the -3 dB points. Roughness (red): The upper/lower limits of roughness (thin red lines) are obtained from Terhardt (1970, 1974). The small red circles are from a re-examination of the upper limit of roughness by Fastl and Schorer (1986). The data of Plomp and Steeneken (1968) on maximal roughness were well-fit by a straight line (in log-log coordinates), given by the thick red line. The dark-red triangles give the maximal roughness according to Terhardt (1974), in close agreement. Interestingly, the original point of maximum roughness (German: ‘Rauhigkeit’) as given by Helmholtz (1863) for two beating violin tones (dark-red square), is also in close agreement with Plomp and Steeneken (1968). Periodicity Pitch (blue): The thick blue line is the classic ‘dominance region’ of Ritsma (1962), drawn according to his rule that the periodicity pitch percept is dominated by frequencies near the 4th harmonic, fc = 4 fm. This also matches the rule for pitch dominance with ripple noise (at 4/τ) (Bilsen and Ritsma, 1967, Yost et al., 1978, Yost, 1982). The small blue circles and blue arc delineate the existence region of periodicity pitch from Ritsma (1962), each point obtained as the average over his 3 subjects. Along this line, a 100% modulated tone just evokes a sensation of periodicity pitch (note that he did not include fm = fc/2 on conceptual grounds, arguing that it did not qualify as a ‘residue pitch’ given that the fundamental frequency is present). The upper blue line (Fastl and Stoll, 1979, Fastl and Zwicker, 2007) is drawn at fm = fc/2.

Note that the region between fluctuation and roughness is not adequately covered in Fig. 1 (fm = ~16 Hz). The percept around 16 Hz does not seem prototypical of fluctuation or roughness as currently defined; we suggest the use of “intermittence” (Wever, 1929) or “flutter” (Nourski and Brugge, 2011) for this range. Prototypes for the 4-category scheme could be 1-kHz tones modulated at 4, 16, 64, and 250 Hz. This would yield logarithmic spacing on the fm scale; for example, modulation filter banks typically employ logarithmic spacing above the fluctuation range (Dau et al., 1997).
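As a quick check of the logarithmic spacing mentioned above, the sketch below measures the step between successive prototype rates in octaves. The helper names are hypothetical; the only values taken from the text are the four prototype rates.

```python
import math

# Prototype modulation rates suggested in the text (Hz).
PROTOTYPES_HZ = [4, 16, 64, 250]

def octave_steps(rates_hz):
    """Spacing between successive rates, in octaves (log2 of the ratio)."""
    return [math.log2(hi / lo) for lo, hi in zip(rates_hz, rates_hz[1:])]

def geometric_series(start_hz, ratio, count):
    """Exactly log-spaced rates: start, start*ratio, start*ratio**2, ..."""
    return [start_hz * ratio ** k for k in range(count)]

steps = octave_steps(PROTOTYPES_HZ)   # two octaves per step, last step slightly less
exact = geometric_series(4, 4, 4)     # the exact series ends at 256 Hz rather than 250 Hz
print(steps, exact)
```

The last prototype (250 Hz) is a rounded value; an exactly two-octave spacing would place it at 256 Hz.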

2.2. Other modulated sounds

The preceding section directly concerned AM tones (including beats), but the results apply directly to FM tones and AM noise. FM and AM tones with modulation rates in the periodicity pitch range are perceptually indistinguishable – both exhibit strong sidebands separated by a sufficient degree that they are resolvable by the ear. Thus, the blue regions in Figs. 1 and 2 can be considered identically applicable to AM and FM tones, where a pitch sensation is evoked by the spectral (harmonic) structure.
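For the AM case, the sideband structure can be made explicit with a short sketch. It assumes a sinusoidally modulated tone of the form (1 + m cos(2π fm t)) cos(2π fc t), which has a carrier line and two sidebands at fc ± fm; FM tones have a richer sideband series that is not modeled here. The function names are illustrative. The example also checks the remark in the Fig. 1 caption that, for fm = fc/2, the lower sideband falls exactly on the fundamental.

```python
import math

def sam_tone(fc, fm, depth, t):
    """Sinusoidally amplitude-modulated tone: (1 + m cos(2 pi fm t)) cos(2 pi fc t)."""
    return (1.0 + depth * math.cos(2 * math.pi * fm * t)) * math.cos(2 * math.pi * fc * t)

def sam_components(fc, fm, depth=1.0):
    """(frequency_hz, amplitude) of the lower sideband, carrier, and upper sideband."""
    return [(fc - fm, depth / 2), (fc, 1.0), (fc + fm, depth / 2)]

def sum_of_components(components, t):
    """Rebuild the waveform from its spectral lines."""
    return sum(a * math.cos(2 * math.pi * f * t) for f, a in components)

def all_harmonics_of(components, f0):
    """True if every spectral line sits at an integer multiple of f0."""
    return all(f % f0 == 0 for f, _ in components)

# fc = 1 kHz with fm = fc/2: lines at 500, 1000 and 1500 Hz (harmonics 1-3 of 500 Hz).
parts = sam_components(1000, 500)
print(parts, all_harmonics_of(parts, 500))
```

With fm well below fc/2 (for example fm = 4 Hz at fc = 1 kHz), the three lines lie within a few hertz of one another, which is why no resolvable spectral structure is available in the fluctuation range.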

For the roughness range, FM tones have been studied specifically by Terhardt and Kemp (1982). Both authors emphasize the close similarity between the roughness results for AM and FM. The major difference is that FM tones elicit a greater roughness percept (up to 6 times greater: Fastl and Zwicker, 2007), but this does not appear to involve any shift in the existence region or region of maximal roughness. Thus, the red regions in Figs. 1 and 2 can be considered equally applicable to AM and FM tones.

For the fluctuation range, FM tones have only been studied quantitatively by Fastl (1983, Fastl and Zwicker, 2007) to our knowledge. His results were obtained for AM and FM in the same subjects and the comparisons again indicate no appreciable difference between AM and FM. Obviously, we can clearly distinguish AM and FM perceptually, but their fluctuation strengths have a similar tuning as a function of fm, peaking near ~5 Hz. Thus, the green regions in Figs. 1 and 2 apply to both AM and FM tones.

For AM broadband noise, the results are similar to those for AM tones within the frequency range from fc = ~0.4 - 4 kHz (wherein the most detailed auditory processing occurs, and which is most critical for speech intelligibility). That is, the frequencies near ~1 kHz are most strongly weighted in determining the outcome for broadband noise. Thus, broadband noise with AM near ~4 Hz gives a strong fluctuation percept (Fastl, 1982), and AM noise near 70 Hz (or ripple noise with delays near τ = 1/70 sec) gives a strong roughness percept (Patterson et al., 1978, Bilsen and Wieman, 1980). The major difference between AM tones and AM noise is a strong reduction in the periodicity pitch strength for AM noise. Although melodies can be recognized using only AM noise (Burns and Viemeister, 1976, Burns and Viemeister, 1981), where the long term spectrum remains white, only a faint, “whispy” pitch-like sensation is evoked. However, this does not appear to involve any overall shift of the existence or dominance regions. For example, we noted in Fig. 2 that the dominance region of Ritsma (1962) for AM tones is confirmed with cosine noise (Bilsen and Ritsma, 1967, Yost et al., 1978, Yost, 1982).

Overall, the basic percepts illustrated in Figs. 1 and 2 are similar for all technical stimuli – beats, AM tones, FM tones, AM noise, and ripple noise – thus increasing their utility as summaries of many basic psychoacoustic results. As already mentioned, speech stimuli also elicit the same basic percepts in so far as they exhibit modulations in the ranges indicated in Figs. 1 and 2.

3. FOCUS ON THE FLUCTUATION RANGE

3.1. AM detectability

Having established the overall percepts for AM and FM sounds, we focus in this section on the fluctuation range (~1-10 Hz). Specifically, we will survey a body of evidence in support of the central claim of this review – that human auditory perception exhibits a tuning to modulations occurring within the fluctuation range, peaking broadly at ~2-5 Hz. This is similar to the typical syllabic rate of speech, to be discussed in Section 3.3.

To preview this claim, we make another plot in the form of Figs. 1 and 2, except now instead of depicting the strengths of the percepts (Fig. 1) or the approximate existence regions of the percepts (Fig. 2), we plot the detectability of AM as a function of carrier frequency (fc) and modulation frequency (fm). While this does not allow insight into the perceptual experience of the listener, it has the advantage of requiring no verbal labels or subjective categories. Instead, the listener merely needs to indicate whether modulation has or has not been detected in some sound; for a pair of sounds, the listener indicates which one was modulated vs. unmodulated. To date, the most comprehensive data of this type remains that of Zwicker (1952), who studied four subjects at several different loudness levels (Ls) and fcs. For each combination of L and fc, a wide range of fms were tested from 1 Hz to several kHz. Fig. 3 shows the outcome for L = 60 phon (a comfortable listening level in the range of conversational speech); note in the plot that a peak represents maximal detectability. Notice that there is a broad trough (difficult to detect AM) in the middle of the roughness range, rising on either side to two broad peaks. The first peak occurs in the periodicity pitch range, and depends on spectral processing (i.e., the sidebands become detectable as separate pitches or as part of a harmonic pattern), but this is beyond the present scope. The second peak occurs at 4 Hz for each carrier frequency tested (tests were at fm = 1, 2, 4, 8, … Hz). Importantly, the sensitivity declines at 2 Hz and further at 1 Hz. Had Zwicker tested lower, he likely would have found the detectability of AM to decline even more drastically, as seen below (Section 3.2).

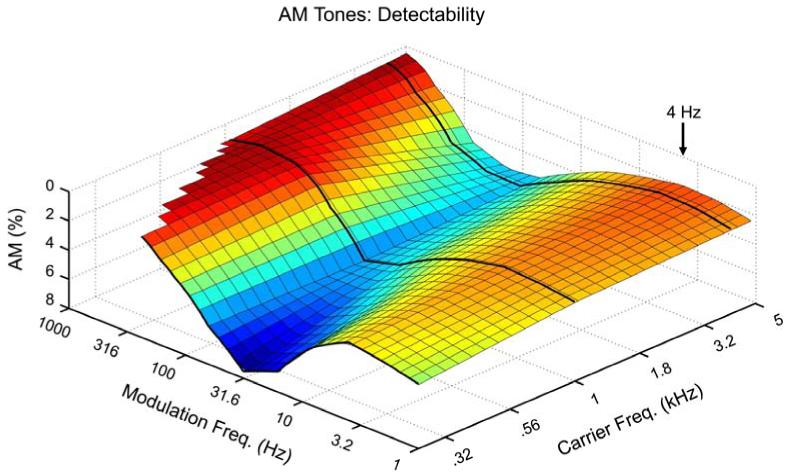

Figure 3.

Detectability of AM tones as a function of fc and fm according to data of Zwicker (1952), which is the most complete to date. Three fcs were tested (0.25, 1 and 4 kHz), as indicated by the black curves, over a wide range of fms. Further details are given in the text. The major result for present purposes is the peak in sensitivity centered at fm = 4 Hz. Note also the overall increase in sensitivity in going toward the basal region of the cochlea (higher fcs), which plays an overall stronger role in temporal envelope processing.

Zwicker’s finding at ~4 Hz (also, Zwicker and Feldtkeller, 1967) is not widely appreciated as an established fact about human AM processing. The major reasons are to be discussed in Section 3.3 (emphasis on the roughness and periodicity pitch ranges, and the claims of a low-pass TMTF). Given that evidence in favor of a (broad) band-pass tuning in the range ~2-5 Hz has obvious implications for syllabic rate speech perception (Section 3.3), we next present a comprehensive, but deliberately succinct, survey of the psychoacoustic evidence for this central claim.

3.2. The evidence

Claim: Human auditory perception of modulated sounds – AM tones, FM tones, AM noise, and ripple sounds – exhibits a (broad) band-pass tuning of sensitivity in the range ~2-5 Hz, and is not simply a low-pass response.

Evidence

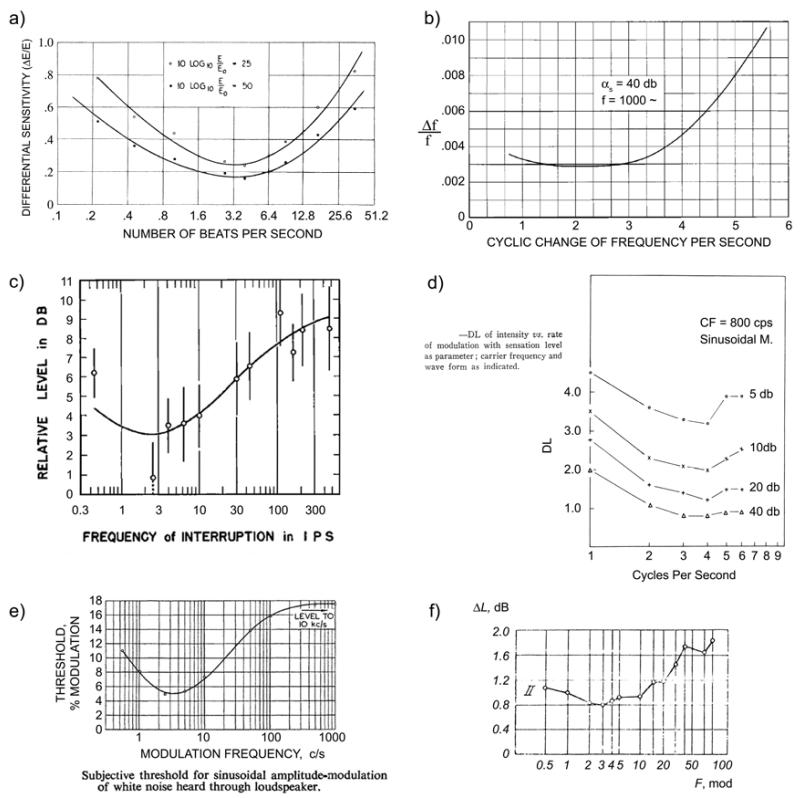

In perhaps the first modern psychoacoustic experiment with electronic equipment, Riesz (1928) set out to determine the sensitivity of the ear to small differences in intensity. Rather than creating abrupt increments of intensity, the method of beating tones was used to create smooth intensity modulations. Riesz first tested a range of fcs and fms in 3 observers to find the region of best sensitivity: “All observers showed practically the same results at all frequencies and intensities. A representative curve… is shown in Fig. [4a] (the particular frequency used here was 1000 cycles per second). It is characterized by a broad minimum in the neighborhood of 3 cycles of intensity fluctuation per second.” See Fig. 4(a).

Shower and Biddulph (1931) set out to determine the sensitivity of the ear to small differences in frequency. Like Riesz (1928), they avoided abrupt transitions by using sinusoidal modulation: “Since it is impossible to vary the frequency of a system without scattering energy into frequency regions other than that being used, a method of variation in which this scattering would be a minimum was sought.” Different rates of sinusoidal FM were tested to determine the rate of maximum sensitivity to subtle variations of the tone frequency: “The results of these observations are shown in Fig. 4. The curve shows a broad minimum from 2 to 3 variations per second.” See Fig. 4(b). We note that tasks requiring acute pitch sensitivity tend to reveal the slower end of the sensitivity range (~2-3 Hz), so the fact that Riesz’s minimum for AM was at ~3-4 Hz may be significant.

Pollack (1951) studied interrupted white noise and found “a broad minimum in the region of 4 i.p.s. (interruptions per second)”. This is not a standard AM detectability experiment (e.g., it used loudness judgments), but it has been cited for the earliest premonitions of the systems analysis viewpoint (van Zanten, 1980). It is also interesting that Pollack discussed his results in terms of the then-current ‘alpha-scanning’ hypothesis of brain rhythms, since the equivalent vision experiment with interrupted white light gives a peak near the alpha range (Bartley, 1939). This was also related to growing interest in “excitability cycles” (Clare and Bishop, 1952, Chang, 1960), and thus premonitory of current writings on the role of cortical theta oscillations in auditory/speech processing.

Zwicker (1952) tested a wide range of sinusoidal AM (SAM) tones. These were produced by multiplying a tone by a sinusoidal envelope, whereas beats are produced by summing two nearby tones. However, the results are very similar throughout the fm vs. fc plane (Section 2), so we refer to both as “AM tones” or “SAM tones”. As covered in Section 3.1 (Fig. 3), Zwicker found peak AM sensitivity at 4 Hz.

Tonndorf et al. (1955) used SAM tones to test the difference limen for intensity (DL, synonymous for our purposes with the just-noticeable difference). This is very similar to Riesz (1928), but measures were obtained in 19 subjects and focused on the AM range fm = 1-6 Hz. They found (see their Fig. 5 in Fig. 4d): “As seen in Figure 5, the variation with modulation frequency was similar for all sensation levels, reaching its smallest value at 4 cps, although the difference between 3 and 4 cps was rather small. In a similar manner, the between-subject variation reached a minimum at 4 cps, …”

Stott and Axon (1955) provided an important expansion of the above results to broadband noise and to FM. They tested 8 subjects with tones from fc = 0.05-10 kHz, for both AM and FM, as well as SAM broadband noise. They made an important methodological comment (Section 3.4) that just presenting sounds and asking the subject if they notice the presence of modulation is not an optimal method: “…aural fatigue and auditory imagery were serious factors in these conditions. …Greater consistency resulted if the pure tone was presented first and the modulation gradually increased until the subject indicated that he was aware of the change.” For AM tones, they found: “There is enhanced perception of modulation frequencies around 3 or 4c/s, but below 0.5c/s perception becomes more difficult as memory is called into play.” But for FM tones, the maximal sensitivity was found around 2-3 Hz, confirming Shower and Biddulph (1931). They were the first to test SAM noise, and found: “As with pure tone, the most sensitive discrimination is found in the region of 3-4c/s, where the threshold is 5%.” See Fig. 4(e).

Dubrovskii and Tumarkina (1967) studied SAM broadband noise and the subject indicated “the time at which he recognized the presence of modulation of the signal.” They found (Fig. 4f) that: “The curves attain a minimum in the range of modulation frequencies 1.5-5 cps.” This study is noteworthy for being the first to suggest a model for the low-pass aspect of the curve (the decreasing sensitivity above 5 Hz): “For low modulation frequencies (on the order of 2-5 cps) the ear manages to keep up with the variations in noise level. With an increase in modulation frequency, the variations in the level become too rapid for the stimulus rise and fall processes in the auditory system to be able to keep pace with the level changes. In this case…the difference between the minimum and maximum excitation diminishes.” That is, the output of the integration (excitation in the CNS) should exhibit less amplitude modulation than the input signal, for faster AM rates. They show a simple RC integration circuit as a model.
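The effect of an RC integrator on modulation depth can be sketched with the standard first-order low-pass magnitude response. This is only an illustration of the general idea: the cutoff frequency used below (10 Hz) is a hypothetical value chosen for the example, not a parameter reported by Dubrovskii and Tumarkina, and the function names are ours.

```python
import math

def rc_gain(fm_hz, cutoff_hz):
    """Magnitude response of a first-order RC low-pass at modulation rate fm_hz.

    cutoff_hz = 1 / (2 pi R C) is the -3 dB point of the circuit.
    """
    return 1.0 / math.sqrt(1.0 + (fm_hz / cutoff_hz) ** 2)

def output_depth(input_depth, fm_hz, cutoff_hz):
    """Modulation depth left after the envelope passes through the integrator.

    The mean level (DC) passes with gain 1, so the depth scales by rc_gain.
    """
    return input_depth * rc_gain(fm_hz, cutoff_hz)

# Hypothetical 10 Hz cutoff (an assumption): depth remaining for a fully modulated input.
CUTOFF_HZ = 10.0
remaining = {fm: output_depth(1.0, fm, CUTOFF_HZ) for fm in (1, 4, 16, 64)}
print(remaining)
```

Such a model is monotonically low-pass: it accounts for the loss of sensitivity at higher rates, but by itself it predicts no decline in sensitivity below ~2 Hz.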

Zwicker and Feldtkeller (1967) report extensive measurements with AM and FM tones, AM white-noise, and AM and FM bandpass noise, including the data of Zwicker (1952). They also provide a clear introduction to AM and FM in general, so this is a good starting point for an introductory reader (English translation, Zwicker and Feldtkeller, 1998). They found for AM tones, AM white-noise, and AM bandpass-noise that: “The highest sensitivity is at a modulation frequency of 4 Hz.” For FM bandpass noise: “As for tones, the ear is most sensitive to modulation frequencies between 2 and 5 Hz.” And for FM tones: “All the curves have a broad minimum in the range of 2 to 5 Hz.” We show their results for AM white noise in Fig. 4(e), because these exhibit an important difference compared to the results for AM tones (Fig. 3). Although the region of maximal sensitivity in the fluctuation range is essentially unchanged, the sensitivity to periodicity-pitch range AM is not present for white noise as it is for tones. This is further confirmation that the sensitivity to periodicity-pitch range AM is based primarily on spectral processing, since this cue is not available for AM noise as it is for AM tones.

Figure 4.

Historical demonstrations of maximum sensitivity to modulations in the range ~2-5 Hz. See text for details. a) Riesz (1928), beats (SAM tones). b) Shower and Biddulph (1931), FM tones. c) Pollack (1951), interrupted white noise. d) Tonndorf et al. (1955), SAM tones. e) Stott and Axon (1955), SAM white noise. f) Dubrovskii and Tumarkina (1967), SAM white noise.

Figure 5.

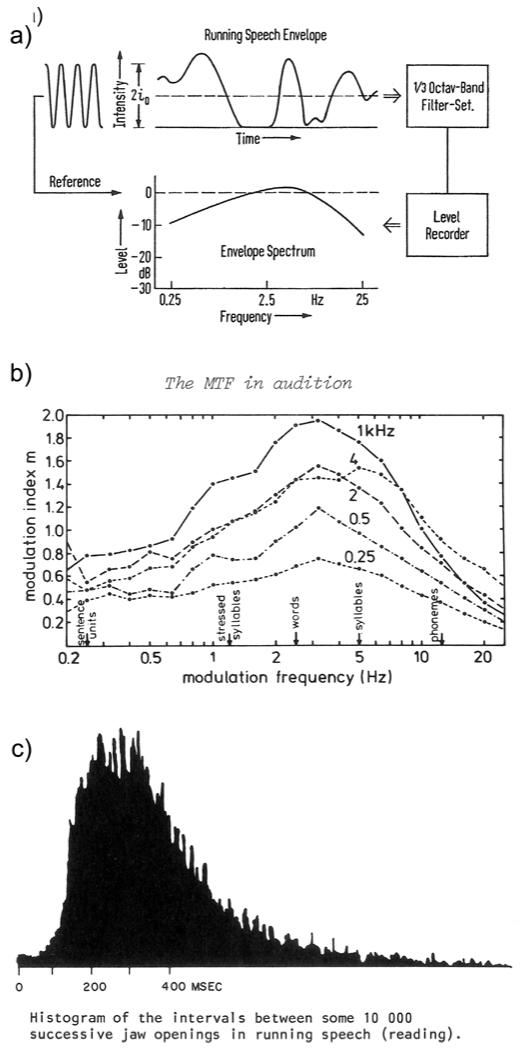

The envelope spectrum of natural speech production. a) Houtgast and Steeneken (1973): “The fluctuations of running speech as represented by the envelope spectrum.” b) Plomp et al. (1984): Average envelope spectrum for 1-min discourses from 10 male speakers. c) Ohala (1975): Jaw opening intervals during continuous speech. The majority of intervals occur in the range ~200-500 ms (i.e., ~2-5 Hz) and almost all intervals (other than small motion noise discussed by the author) occur in the range ~100-1000 ms (~1-10 Hz).

This concludes the classic evidence for the claim of ~2-5 Hz tuning (noting that this is not a sharp peak, and that frequencies below 1-2 Hz must be tested to clearly see the full bandpass nature). An important summary point is that the same general finding applies to all technical stimuli tested (AM and FM tones and narrow-band noise, and AM broad-band noise). We have omitted a few references of lesser historical value (such as abstracts), but some of these can be found in the review of Kay (1982). Further evidence is found in studies of spectro-temporal modulation transfer functions (Section 3.5), but first we must introduce the temporal modulation transfer function (TMTF).

3.3. TMTF and relevance to speech

An important concept required for further evidence on the ~2-5 Hz tuning, and its relevance to speech, is the temporal modulation transfer function (TMTF). The TMTF was first introduced into hearing research by Møller (1972a, 1972b), who studied the responses of single-units in the cochlear nucleus to AM and FM stimuli (Section 4). The concept of the TMTF is quite simple: take an input signal and an output signal, related by a system (black box); but instead of relating the raw input/output signals, we instead attempt to relate the envelopes of the input/output signals. It is that simple – extract the envelopes of the input and output, and compute a transfer function. For Møller’s TMTF, the input was the envelope of the stimulus (AM tones or noises) and the output was the time-varying firing-rate of the single-unit (like the envelope, the firing-rate is a non-negative quantity, and so behaves like an envelope for computing a TMTF).
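The TMTF recipe described above (compare the input and output envelopes at each modulation rate) can be sketched as follows. This is a toy illustration under stated assumptions: the "black box" is a hypothetical leaky integrator acting directly on the envelope, standing in for whatever system is under study (for Møller, a single unit's firing rate), and all function names and parameter values are ours.

```python
import math

def modulation_index(envelope, fm_hz, fs_hz):
    """Depth of the fm_hz component of an envelope, relative to its mean level."""
    n = len(envelope)
    mean = sum(envelope) / n
    re = sum(e * math.cos(2 * math.pi * fm_hz * k / fs_hz) for k, e in enumerate(envelope))
    im = sum(e * math.sin(2 * math.pi * fm_hz * k / fs_hz) for k, e in enumerate(envelope))
    return 2.0 * math.hypot(re, im) / (n * mean)

def leaky_integrator(alpha):
    """Hypothetical black box: first-order smoothing of the envelope (an assumption)."""
    def run(env):
        y, out = env[0], []
        for v in env:
            y += alpha * (v - y)
            out.append(y)
        return out
    return run

def tmtf_point(system, fm_hz, fs_hz=1000, seconds=2, depth=0.5):
    """One TMTF value: output modulation index divided by input modulation index."""
    n = int(fs_hz * seconds)
    env_in = [1.0 + depth * math.cos(2 * math.pi * fm_hz * k / fs_hz) for k in range(2 * n)]
    env_out = system(env_in)
    # Analyze only the second half so start-up transients do not bias the estimate.
    return modulation_index(env_out[n:], fm_hz, fs_hz) / modulation_index(env_in[n:], fm_hz, fs_hz)

smooth = leaky_integrator(0.05)
gains = {fm: tmtf_point(smooth, fm) for fm in (1, 2, 4, 8, 16, 32)}
print(gains)
```

This toy system yields a purely low-pass TMTF; a system with the bandpass behavior argued for in this review would instead show a gain that falls off on both sides of the ~2-5 Hz range.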

Independently, Houtgast and Steeneken (1973) introduced the TMTF in the context of room acoustics. Typical rooms result in a low-pass smoothing of the envelope of acoustic signals, with important implications for speech processing. For example, this smoothing most strongly reduces AM in the periodicity pitch range (~50-500 Hz), but does not affect the spectral pattern (harmonic structure), so it makes sense that we perceive voice fundamental primarily by spectral rather than temporal processing. As part of this work, Houtgast and Steeneken (1973, Houtgast et al., 1980) computed the long-term envelope spectrum of speech. That is, they extracted the (overall) intensity envelope of the speech waveform, and computed its spectrum. They found that the modulation spectrum of speech exhibits a broad peak in the range ~2-5 Hz (Fig. 5). In case there is any doubt that this abstract measure of the acoustic envelope represents the syllabic rate of speech production, we include the concrete measurements of Fig. 5(c) (Ohala, 1975) where: “The subject (…) read technical prose for about 1 1/2 hours; jaw movement was tracked optically… There is a large single peak around 250 ms, which may be the modal syllable rate or the preferred frequency of the mandible.”
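As a rough sketch of the envelope-spectrum computation (extract the intensity envelope, then take its spectrum), the following Python uses a synthetic stand-in for speech: a 200-Hz carrier with a 4-Hz envelope. The signal, the 20-ms smoothing window, and the function names are assumptions made for illustration; they are not the analysis parameters of Houtgast and Steeneken.

```python
import math

def intensity_envelope(x, fs, win_s=0.02):
    """Square the waveform, then smooth with a moving average of win_s seconds."""
    w = max(1, int(round(win_s * fs)))
    sq = [v * v for v in x]
    env, acc = [], 0.0
    for i, v in enumerate(sq):
        acc += v
        if i >= w:
            acc -= sq[i - w]
        env.append(acc / min(i + 1, w))
    return env

def envelope_spectrum(env, fs, freqs):
    """Modulation index (component amplitude / mean) of env at each frequency."""
    n = len(env)
    mean = sum(env) / n
    spectrum = {}
    for f in freqs:
        re = sum((e - mean) * math.cos(2 * math.pi * f * k / fs) for k, e in enumerate(env))
        im = sum((e - mean) * math.sin(2 * math.pi * f * k / fs) for k, e in enumerate(env))
        spectrum[f] = 2.0 * math.hypot(re, im) / (n * mean)
    return spectrum

# Synthetic stand-in for speech (an assumption): 200-Hz carrier, 4-Hz envelope.
fs, dur = 2000, 4.0
wave = [(1.0 + 0.8 * math.sin(2 * math.pi * 4 * k / fs))
        * math.sin(2 * math.pi * 200 * k / fs) for k in range(int(fs * dur))]
spec = envelope_spectrum(intensity_envelope(wave, fs), fs, range(1, 17))
peak_hz = max(spec, key=spec.get)  # modulation frequency with the largest index
```

Because intensity is the square of amplitude, the synthetic example also shows a smaller component at 8 Hz; with real speech one would average such spectra over long recordings.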

In an important continuation of the TMTF work, Drullman, Festen and Plomp (1994b, 1994a) studied the manipulation of the speech envelope spectrum in terms of its consequences for speech perception and intelligibility. Specifically, Drullman et al. either low-pass filtered (1994b) or high-pass filtered (1994a) the Hilbert envelope of speech, within each of the sub-bands separately, and then reconstructed the speech using the filtered envelope and the original ‘fine structure’. Intelligibility was degraded primarily by removing AM in the fluctuation range (~1-10 Hz, peak in the range ~2-5 Hz), with some additional degradation for consonants at ~8-16 Hz. Although there are certain technical difficulties in using the Hilbert envelope directly in this application (e.g., see Clark and Atlas, 2009), their results have been overall confirmed and are a major historical impetus behind the current interest in the ~delta/theta bands for speech processing. A related historical impetus from the same era was the finding of Shannon et al. (1995) that speech devoid of spectral structure (temporal envelope cues only) can remain intelligible.

3.4. The claim of a low-pass TMTF

Given the extensive body of evidence for ~2-5 Hz modulation sensitivity in psychoacoustical studies and the obvious relevance to speech, it might seem impossible that a psychoacoustician of the 1970s or 1980s would overlook this evidence, and instead see a purely low-pass response for AM detectability. Yet the majority of workers appeared to turn to the low-pass view in the late 1970s, and we are still partly in the era of vaguely accepting the existence of a low-pass TMTF. We argue here that, in fact, little or no evidence in favor of a low-pass TMTF was produced. Once the low-pass view became prevalent, and interest began to rise for the 40-Hz AM range, the low-pass view became confirmed for a trivial reason – many studies on AM processing did not use fms lower than 5-10 Hz, and so could not possibly have detected the band-pass nature of the tuning centered at ~2-5 Hz. In order to see how the era of low-pass TMTF came about, we must enter briefly into the auditory model which these workers were attempting to confirm.

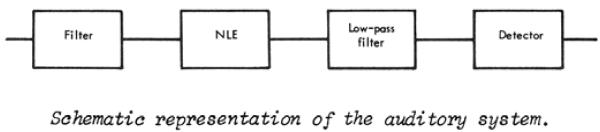

Licklider (1959) introduced the following basic model of the peripheral auditory system: the acoustic stimulus is subjected to band-pass filtering (according to the cochlea), and then half-wave rectified and smoothed (according to the conversion from hair-cell to auditory-nerve response). This basic model, including a pre-emphasis stage (according to the middle ear), was given again by Flanagan (1961). Both Licklider and Flanagan were highly influential in auditory and speech theory, and this basic model has since been used innumerable times, with various choices for the filters. Now, the half-wave rectified and smoothed stimulus is ‘the envelope’ according to the model auditory system (even though it intermixes ‘fine structure’ according to Hilbert transform theory), and so the final stage of smoothing should result in a low-pass response of the auditory PNS with respect to AM processing. It is easy to see how this highly influential model leads to the expectation of a low-pass TMTF. If the smoothing time-constant were, say, 10 ms, then modulations occurring within this effective duration, i.e. fm > 100 Hz, would be eliminated or reduced by the smoothing. Another way of stating this is that our temporal acuity (Green, 1973) is limited by the smoothing action of the hair-cell/synapse. Green’s student, Viemeister, would later become one of the leading authors on auditory temporal processing, still highly cited today. Viemeister’s work, along with two other early authors on the TMTF in psychoacoustics (Rodenburg, 1977, van Zanten, 1980), forms the primary historical origin of the notion (still assumed, implicitly or otherwise, by many current authors), that human perception of AM sounds is basically a low-pass process. We now take a closer look at these three early TMTF authors, and show that in fact they produced little or no evidence for a strictly low-pass TMTF in AM processing.
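To see why this model predicts a low-pass TMTF, the rectify-and-smooth stages can be sketched as follows. This is a minimal illustration under stated assumptions: the cochlear band-pass stage is omitted (a SAM tone with a 1-kHz carrier is assumed to fall within one channel), the smoother is a first-order RC filter with the 10-ms time constant used as the example above, and the function names are invented. For such a filter the gain at modulation frequency fm is 1/sqrt(1 + (2π·fm·τ)²), which is about 0.16 at fm = 100 Hz.

```python
import math

def half_wave_rectify(x):
    return [v if v > 0.0 else 0.0 for v in x]

def rc_smooth(x, fs, tau):
    """Leaky integrator (first-order RC low-pass) with time constant tau in seconds."""
    a = math.exp(-1.0 / (fs * tau))
    state, y = 0.0, []
    for v in x:
        state = a * state + (1.0 - a) * v
        y.append(state)
    return y

def modulation_index(x, fs, fm):
    """Amplitude of the fm component of x divided by the mean of x."""
    n = len(x)
    mean = sum(x) / n
    re = sum(v * math.cos(2 * math.pi * fm * k / fs) for k, v in enumerate(x))
    im = sum(v * math.sin(2 * math.pi * fm * k / fs) for k, v in enumerate(x))
    return 2.0 * math.hypot(re, im) / (n * mean)

def model_output_index(fm, depth=0.5, fc=1000.0, fs=8000, tau=0.010):
    """Modulation index at fm after rectification and smoothing of a SAM tone."""
    n = int(fs * 1.5)
    sam = [(1.0 + depth * math.sin(2 * math.pi * fm * k / fs))
           * math.sin(2 * math.pi * fc * k / fs) for k in range(n)]
    smoothed = rc_smooth(half_wave_rectify(sam), fs, tau)
    return modulation_index(smoothed[int(fs * 0.5):], fs, fm)  # skip onset transient

def rc_gain(fm, tau=0.010):
    """Analytic gain of an RC low-pass at modulation frequency fm."""
    return 1.0 / math.sqrt(1.0 + (2 * math.pi * fm * tau) ** 2)

# Output modulation relative to the stimulus depth (0.5) at a slow and a fast rate.
relative = {fm: model_output_index(fm) / 0.5 for fm in (4, 100)}
```

In this sketch the 4-Hz modulation passes almost unchanged while the 100-Hz modulation is strongly reduced, and nothing in the model attenuates modulations below the peak at ~2-5 Hz.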

The TMTF was first introduced into psychoacoustics by Rodenburg (1972 thesis, 1977), who studied the threshold for detecting modulated vs. unmodulated white noise (2 interval forced-choice, 2IFC). Recall from Section 3.2 that there is no AM sensitivity in the periodicity-pitch range for white noise, so the sensitivity for AM noise declines monotonically through the roughness range and into the periodicity-pitch range. Thus, starting at the peak at ~2-5 Hz and higher, we expect a purely low-pass appearance for AM white noise. This is exactly what Rodenburg (1977) found, and this is particularly expected given that the great majority of his data was collected in the range fm = 5-1000 Hz. His Figure 2 shows two isolated data points for AM sensitivity in the range 2-4 Hz, and these actually do exhibit a decline in sensitivity relative to the 5-10 Hz range. Within the range of variability displayed, the bandpass functions of Section 3.2 would probably fit his data equally well. Since Rodenburg assumed that the AM threshold was determined by the low-pass filter in his model (Fig. 6), he fit a simple RC-filter characteristic to his data (note that an RC filter is a low-pass smoothing filter and also called a ‘leaky integrator’).

The next student of the TMTF in psychoacoustics was Viemeister (1973 abstract, 1977, 1979, Bacon and Viemeister, 1985, Viemeister and Plack, 1993). Like Rodenburg, Viemeister (1977) was driven by the Licklider-Flanagan model: “According to this scheme a single frequency channel consists of a bandpass, “critical band” filter followed by a nonlinearity, typically half-wave rectification, followed in turn by a lowpass filter. In the context of this descriptive model the present problem is to measure the transfer function for the lowpass filter…” Like Rodenburg, Viemeister (1977) used SAM white noise in a 2IFC experiment (the subject indicates which interval contains the modulation), and fit the data with an RC filter characteristic. However, the data shows a subtle decline in sensitivity going from fm = 4 Hz to 3 Hz, and again from 3 Hz to 2 Hz. Within the range of variability displayed, a bandpass characteristic would fit the data equally well. Moreover, Viemeister discloses a potentially serious methodological flaw with the 2IFC procedure: the modulator is gated at sine phase, such that at the very onset of the modulated interval the intensity is at its lowest point. This provides a simple onset detection cue which will be stronger the slower the modulation given that subjects are sensitive to stimulus rise time (unfortunately, Rodenburg and van Zanten do not specify the onset phase for their stimuli). Also recall the methods comment of Stott and Axon (1955): the 2IFC procedure is expected to fatigue the subjects and give results with greater variability than the method used in most of the classic studies of Section 3.2 (where the sound was turned on and then the modulation depth varied until just detectable).

Van Zanten (1980) also used the model of Fig. 6, and assumed that the TMTF was a measure of “the transfer function of the leaky integrator”. Van Zanten used methods similar to those of Rodenburg and Viemeister, and, as in their data, a subtle increase in AM detection threshold is sometimes seen at the lowest AM frequency tested (2 Hz), possibly consistent with the bandpass model, particularly given the variability displayed in the data for 3 subjects. However, he also concluded in favor of a low-pass characteristic.

Fastl (1977a) presented an extensive study of temporal masking: an AM noise is presented as a masker, and the task is to detect a brief probe tone presented within the noise. If the probe tone is presented at the peak of the noise, there is an increased detection threshold compared to if the probe tone is presented in the trough of the noise. Fastl did not obtain measures below an AM rate of 5 Hz, but it should be obvious that the detection of the probe can only continue to improve with lower frequency maskers (because the probe tone will be surrounded by longer and longer intervals of near silence). Since the detectability of AM is not tested here (just the detectability of a probe tone as a function of local-SNR), we do not consider this a measure of “the TMTF”. Nonetheless, both Rodenburg (1977) and Viemeister (1977) used this experiment as a second measure of “the TMTF”. As expected, they both found a low-pass function. Examination of their actual data shows a peak at 4-5 Hz AM, and not at 2 Hz, their lowest frequency tested, which is surprisingly compatible with the band-pass view.

Figure 6.

Schematic model of the auditory system from Rodenburg (1977): “We assume that the auditory system can be described by a model consisting of a critical band filter, a nonlinear element (rectifier), a low pass filter and a detector. The modulation threshold is determined by a low-pass filter and a detector.”

Thus, we find that the early students of the TMTF were driven by the Licklider-Flanagan model of the auditory periphery (Fig. 6), and essentially viewed the TMTF as an exercise in finding the integrating time constant of the low-pass filter element (including CNS contributions). These studies were highly influential and initiated what we might term ‘the low-pass era’ for auditory temporal processing. Other influential authors of that era studied AM detectability only for frequencies above 5 Hz (Terhardt, 1974) or even 20 Hz (Patterson et al., 1978), and the discovery of the 40-Hz EEG response by Galambos and Makeig (1981) decisively shifted interest away from the lowest modulation frequencies. However, we may be entering a new era, partly instigated by interest in syllabic-rate speech processing (Introduction), where occasional acceptance of the classic bandpass characteristic is indicated. Another sign of a return to the bandpass interpretation comes from recent studies of the STMTF.

3.5. The spectro-temporal modulation transfer function (STMTF)

A generalization of the TMTF is the spectro-temporal modulation transfer function (STMTF). Recall that the TMTF is defined by computing the envelope spectrum of input and output, and computing the transfer characteristic. Computation of the envelope spectrum is essentially a 1-D Fourier transformation of the envelope (followed by smoothing). In a psychoacoustic context (Section 3.4), “the TMTF” is obtained by using AM detection thresholds as the output variable. Computation of the STMTF is essentially a 2-D Fourier transformation of the spectrogram (followed by smoothing), and using psychoacoustic detection thresholds as an output measure. The sounds used in this case are ‘ripple sounds’, which vary on a continuum from purely temporal modulation (AM sounds) to purely spectral modulation (essentially harmonic stacks as in periodicity pitch). We do not cover all ripple-sound studies here, just the two most important historical works where the psychoacoustic methods were introduced (van Zanten and Senten, 1983, Chi et al., 1999), and one recent study of high interest for speech perception (Elliott and Theunissen, 2009).

The STMTF was introduced into psychoacoustics by van Zanten and Senten (1983), using two subjects (themselves), 2IFC, and a fixed spectral modulation frequency of 200 Hz (i.e., this is similar to a periodicity pitch sound with fundamental of 200 Hz). They found a peak sensitivity at a temporal modulation of ~1 Hz, declining monotonically above and below (i.e., a bandpass characteristic, although the reason for the peak at ~1 Hz is not clear). Since they only tested one spectral modulation frequency, this is not actually a study of the STMTF, and more recent studies are to be considered a major improvement.

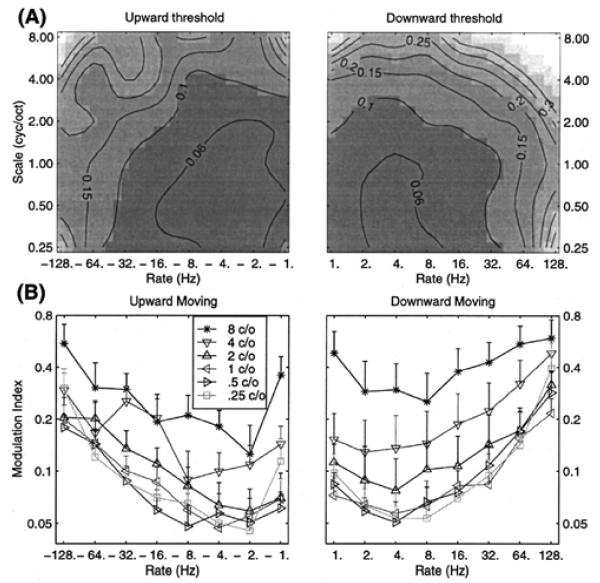

Chi et al. (1999) were the first to study the full STMTF for ripple sounds, using 4 subjects, 2IFC, and a range of spectral modulation frequencies. The task was to choose the interval containing the modulated vs. unmodulated sound. Given the historical importance, their main results are shown in Fig. 7. Notice the clear bandpass characteristic in the vicinity of ~2-5 Hz for all spectral modulation frequencies, and for upward and downward oriented ripples. Importantly, they demonstrated that the spectral and temporal results are separable. That is, the matrix of numbers plotted in part (A) of their figure, can be decomposed by singular value decomposition, and the first component alone explains over 85% of the variance. This has several implications, one of which is that the temporal and spectral MTFs can be studied separately, and then simply multiplied together to form the 2-D STMTF to a first approximation. Thus, the many results cited above for purely temporal studies remain essentially valid in the spectro-temporal framework. It is therefore not surprising that the ~2-5 Hz bandpass characteristic was found here as it was in classic temporal studies of AM tones and noise. This result is also consistent with the fact that the same bandpass characteristic has been found for all technical stimuli tested (AM and FM tones, AM white noise, etc.).
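The separability test can be sketched as follows: estimate the first singular value of the threshold matrix and ask what fraction of the total sum of squares it captures. This is a minimal illustration, assuming that “variance explained” means the squared first singular value divided by the sum of all squared matrix entries (the exact normalization used by Chi et al. is not restated here). The two profiles are made-up numbers, not their data, and the power-iteration routine stands in for a library SVD.

```python
import math

def first_component_fraction(matrix, iters=200):
    """Fraction of the total sum of squares captured by the first singular component.

    Uses power iteration on A^T A; assumes the uniform starting vector is not
    orthogonal to the leading singular vector (true for all-positive matrices).
    """
    n_rows, n_cols = len(matrix), len(matrix[0])
    v = [1.0 / math.sqrt(n_cols)] * n_cols
    for _ in range(iters):
        u = [sum(matrix[i][j] * v[j] for j in range(n_cols)) for i in range(n_rows)]
        w = [sum(matrix[i][j] * u[i] for i in range(n_rows)) for j in range(n_cols)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    u = [sum(matrix[i][j] * v[j] for j in range(n_cols)) for i in range(n_rows)]
    sigma1_sq = sum(x * x for x in u)
    total = sum(x * x for row in matrix for x in row)
    return sigma1_sq / total

# Hypothetical sensitivity profiles (illustrative numbers only).
temporal = [0.5, 0.9, 1.0, 0.7, 0.3]   # e.g., across temporal modulation rates
spectral = [1.0, 0.8, 0.5, 0.2]        # e.g., across spectral modulation densities

# A perfectly separable STMTF is the outer product of the two profiles.
separable = [[s * t for t in temporal] for s in spectral]
frac_separable = first_component_fraction(separable)

# A matrix with no shared profile across rows is far from separable.
frac_diagonal = first_component_fraction([[1.0, 0.0], [0.0, 1.0]])
```

A fraction near 1 means that a single product of one temporal and one spectral profile reproduces the matrix; the value of over 85% reported above lies between the two extremes computed here.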

Elliott and Theunissen (2009) used manipulations of the spectro-temporal modulations of speech to study the effects of different regions on speech intelligibility. This is an important update to the studies of Drullman et al. (1994a, 1994b), who manipulated the temporal envelope only. With notch filters (see their Figs. 5-6), the results show the strongest degradation of speech intelligibility in the range ~2-7 Hz. For low-pass filtering, the range was shifted somewhat higher (note that certain consonants require modulation frequencies in the range ~8-16 Hz for intelligibility, but these are isolated bursts/onsets of fast AM, not repetitive modulation). In any case, their results definitely do not support a purely low-pass view, since the temporal modulations below ~1 Hz made little contribution to intelligibility.

Figure 7.

The spectro-temporal modulation transfer function (STMTF) of Chi et al. (1999). In (A), the abscissa gives the temporal modulations (AM) in Hz, and the ordinate the spectral modulations (cyc/oct). Upward vs. downward ripple sounds (this concerns the orientation of the ripples in the spectrogram) are shown on the left vs. right of the figure, respectively. In (B), the temporal results are shown for various spectral frequencies, and overall exhibit a strong bandpass characteristic, centered at ~2-5 Hz.

Overall, the results concerning spectro-temporal modulations confirm the classic bandpass results for AM sensitivity, and for relevance to speech. We note again that the bandpass characteristic is broadly peaked in the range ~2-5 Hz, and not a sharp peak declining rapidly to 0 on either side. Measurements must be made well below fm = 2 Hz in order to clearly see the high-pass portion of the curve. It is surprising to us how consistently the frequency range of ~2-5 Hz is found. There has been some confusion recently as to which AM frequency range to cite for “theta” interest in speech (see commentary by Obleser et al., 2012). Although various psychoacoustic studies show (broad) peaks at 2-5 Hz, 2-3 Hz, 3-5 Hz, or 1-7 Hz, we believe that the best summary range (using integers) is decisively “~2-5 Hz”. For brain wave research, this overlaps the “delta” (~1-4 Hz) and “theta” (~4-7 Hz) ranges, so in those contexts one might refer to “delta/theta” tuning. All of these are part of the “fluctuation” range (~1-10 Hz) as currently defined (Fig. 1). The full fluctuation range encompasses the delta, theta, and alpha (~7-14 Hz) bands of the EEG.

We now take the ~2-5 Hz peak in modulation sensitivity as an empirical finding which requires explanation, and turn next to neurophysiological studies.

4. HUMAN NEUROPHYSIOLOGY

Human auditory EEG, MEG, PET, and fMRI studies of modulated sounds focus overwhelmingly on the periodicity pitch and roughness ranges. Scalp EEG and MEG studies in the 1980s and 1990s, later followed by fMRI studies, focused on the responses to 40-Hz repetitive or AM stimuli. This was driven initially by clinical and basic research interest (Galambos et al., 1981, Sheer, 1989), and then by the post-Singer (1992) interest in synchrony and 40-Hz. Overwhelmingly, these studies only included AM rates down to 5-20 Hz. The focus in human neuroscience on the faster AM rates is part of the historical reason that the viewpoint became dominant in the 1980s and 1990s that the TMTF is simply low-pass in nature (as would be observed trivially if the lowest AM rate tested is 5-20 Hz). Nonetheless, we identify a handful of fMRI studies providing evidence for the ~2-5 Hz bandpass characteristic.

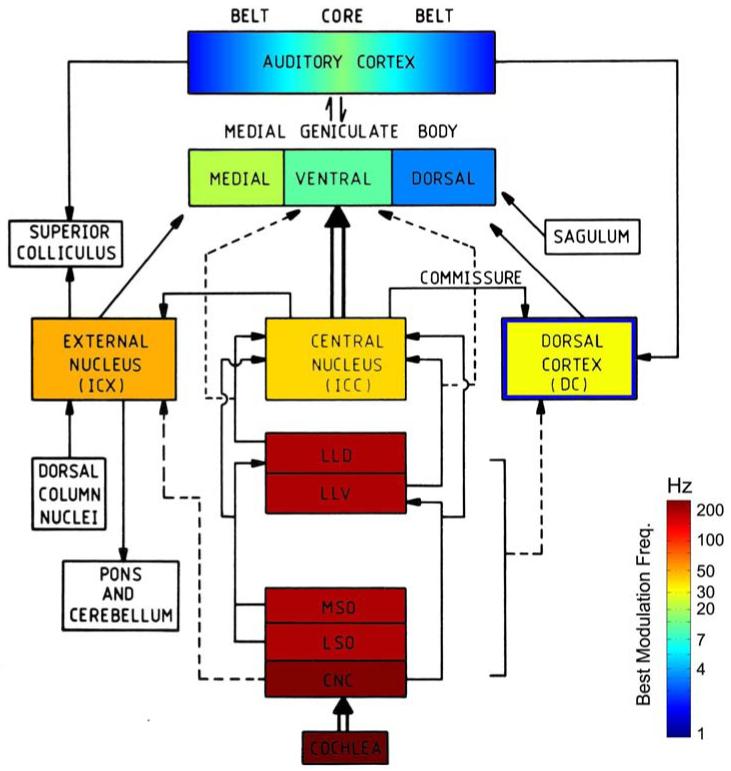

The fMRI evidence is discussed first, because it is more straightforward in its interpretation. But even here there is one brief caveat of interpretation: If a given voxel is found to exhibit peak activation for some fm, say 5 Hz, this does not mean that all neurons within the voxel have best modulation frequencies (BMFs) peaked at 5 Hz. It means that some weighted average over the neurons within the voxel yields a peak at 5 Hz. Also note that the BMFs do not necessarily reflect local cortical processing, but may be inherited from lower CNS processing. We will find that BMFs in the range ~2-5 Hz are unlikely to be inherited from lower brainstem centers, but this does not exclude the thalamus. Despite these caveats, we know that the psychophysical outcome depends ultimately on the population-level cortical activity, and fMRI yields roughly a measure of population-level firing rate, so evidence from this method should be useful with respect to psychoacoustics.

4.1. Human fMRI

Given the sluggish response of blood flow to cortical activation, fMRI is not used to measure cycle-by-cycle responses to modulated sounds (Harms and Melcher (2002) estimate ~0.1 Hz as the upper limit for fMRI). Typically, a given modulation rate (fm) is presented for multiple seconds and the total blood-flow response is measured. Conceptually, this is a simple means of obtaining a tuning to modulation rate for a given brain region: present various fms and measure the total activation as a function of fm. A number of studies of this type have been published, and a smaller number touch upon the ~2-5 Hz region of interest here.

As introduction to the fMRI evidence, we examine the now-classic study of Giraud et al. (2000), which is also noteworthy for discussing syllable-rate fm (~2-5 Hz). They used SAM white noise at rates of 4, 8, 16, …, 256 Hz, and several basic facts about temporal processing are established here. A common set of brain regions was found to respond to modulated > unmodulated noise: these included the known subcortical auditory stations, Heschl’s gyrus (HG), superior temporal gyrus (STG) and sulcus (STS), and supramarginal gyrus (SMG). It was noted by Scott et al. (2006) that essentially the same regions which respond more strongly to intelligible speech vs. speech-envelope-modulated noise also respond more strongly to modulated vs. unmodulated sounds. That is, the STG/STS regions (homologous to monkey parabelt regions which respond to species-specific vocalizations) are activated by speech > AM noise > noise > silence. This shows the general relevance of AM sounds for speech perception regions.

Giraud et al. (2000) also confirm the general principle from animal neurophysiology (Section 4.2) that the best modulation frequencies (BMFs, i.e., the fms which elicit the strongest response) decrease with progress along the auditory pathway from cochlear nucleus (CN) to cortex. The CN responds to ~periodicity-pitch range AM, the inferior colliculus (IC) to ~roughness range, and the cortex to ~fluctuation range AM. The majority of AM-sensitive cortex showed the greatest activation to fm = 4 Hz, their lowest frequency tested. They also found transient responses to fm > 16 Hz, which were significant in a more restricted region (in or near the HG).

With these general points in mind, we look at the handful of fMRI studies which used low-frequency AM rates and provide evidence that the ~2-5 Hz psychophysical tuning is reflected in cortical activation:

The first auditory fMRI studies were published by Binder et al. (1994a, 1994b). Binder et al. (1994b) used a presentation rate of 3 Hz in order to elicit strong activation, as did other early studies. Binder et al. (1994a) studied the effect of repetition rates, using syllables presented at 0.17 to 2.5 Hz. According to the band-pass perspective, this should reveal the high-pass portion of the curve (i.e., below the peak at ~2-5 Hz). Their results are consistent with this, showing monotonically increasing activation from 0.17 to 2.5 Hz in the superior temporal auditory regions. Frith and Friston (1996, using PET) studied tone repetition rates from 0 to 1.5 Hz, and also found a high-pass characteristic in superior temporal cortices. Rinne et al. (2005, fMRI) studied repetition rates of harmonic tones (periodicity-pitch stimuli) in superior temporal cortex. They found 0.5 < 1 < 1.5 < 2.5 < 4 Hz or 0.5 < 1 < 1.5 < 2.5 = 4 Hz, depending on the state of (intermodal) attention. This is consistent with a high-pass characteristic below the peak region of ~2-5 Hz.

A number of fMRI studies (e.g., Giraud et al., 2000, Seifritz et al., 2003) looked at AM sounds or repetition rates in the roughness range (sometimes for interest in 40 Hz), with the lowest rate tested in the range 4-20 Hz. These studies found a low-pass characteristic, as expected for the range above the peak at ~2-5 Hz.

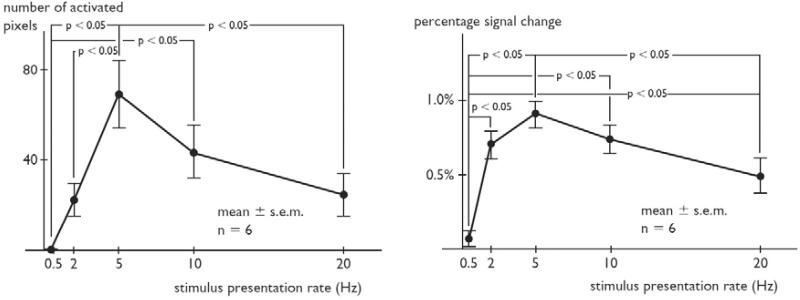

Tanaka et al. (2000) presented a 1-kHz tone (30-ms duration) at rates of 0.5, 2, 5, 10, and 20 Hz (each rate in separate 30-s blocks): “On the whole, the number of activated pixels increased up to a rate of 5 Hz and then decreased.” This bandpass characteristic was statistically significant and is evident in their Fig. 4 (number of pixels activated) and Fig. 5 (percent signal change). This increase in activation strength and extent at 5 Hz is consistent with the results of Giraud et al. (2000) at 4 Hz.

Harms and Melcher (2002) studied the IC, MGB, HG, and STG with white noise bursts at 1, 2, 10, 20 and 35/s (each rate in separate 30-s blocks). The peak activations were found at IC: 35/s; MGB: 20/s; HG: 10/s; and STG: 2/s. The decreasing rate preference with progress along the auditory pathway is consistent with Giraud et al. (2000) and with animal evidence (Joris et al., 2004, Malone and Schreiner, 2010). Keep in mind for this and other studies that the exact peak is only with respect to the coarse spacing between rates tested (so the peak at 2 Hz here is relative to 1 and 10 Hz). A nice methodological feature in this study was controls for intensity and for total intensity in a block, and such intensity effects were not found to drive the response (the cortex is sensitive to AM, but insensitive to overall amplitude).

Langers et al. (2003) studied ripple sounds in a 2IFC task (similar to Chi et al. 1999, Section 3.5) including temporal modulation frequencies of 2, 8, and 32 Hz. There was greater activation extent and level, particularly postero-lateral to HG, with 2 > 8 > 32 Hz. Given the coarse spacing, this is consistent with either a low-pass or band-pass characteristic, but this study is noteworthy here because they confirmed the separability result of Chi et al. (1999). That is, not only is psychoacoustic sensitivity separable into spectral and temporal modulation transfer functions, but also apparently the cortical activation patterns. On the other hand, Schönwiesner and Zatorre (2009) report a lower degree of separability for ripple sounds using fMRI. Their temporal MTFs exhibited peaks between 2.8 and 3.7 Hz depending on ROI.

Thus, the overall psychoacoustic findings are basically confirmed here, such as the decreased sensitivity in the roughness range (Fig. 3) and increased sensitivity in the fluctuation range. We identify only 2 studies (Tanaka et al., 2000, Harms and Melcher, 2002) which included an adequate range of repetition rates to disclose the bandpass characteristic near ~2-5 Hz, and both studies essentially confirm this characteristic. Studies of slower repetition rates (below 2.5 Hz) for syllables (Binder et al., 1994a), simple tones (Frith and Friston, 1996), and complex tones (Rinne et al., 2005) are compatible with the high-pass portion of the curve, and a number of studies using faster AM or repetition rates are compatible with the low-pass portion of the curve (e.g., Giraud et al., 2000, Langers et al., 2003, Seifritz et al., 2003).

The processing of modulations in the fluctuation range and the sensitivity centered at ~2-5 Hz are more strongly associated with non-primary auditory cortex – regions surrounding HG, and particularly STG and planum temporale (PT) regions lying posterior and lateral to HG. Thus, modulations near ~2-5 Hz elicit not only greater levels of activation, but also a greater extent of activation given the larger size of non-primary vs. primary cortex. Boemio et al. (2005) also emphasized the role of belt/parabelt regions in “temporal structure” processing (see Fig. 11 for introduction to ‘core’ vs. ‘belt’ and ‘parabelt’). Note that HG participates in the ~2-5 Hz finding, but it also expresses tuning to higher modulation rates in the ‘flutter’ range (~16 Hz). Hall (2005), who studied 5 Hz AM and FM stimuli (Hall et al., 2002, Hart et al., 2003), reached similar conclusions: “The results from the fMRI studies in humans converge on the importance of non-primary auditory cortex, including the lateral portion of HG (field ALA), but particularly subdivisions of PT (fields LA and STA), in the analysis of these slow-rate temporal patterns in sound.” These regions involved in fluctuation-range AM/FM overlap heavily the regions involved in speech processing (Giraud et al., 2000, Scott et al., 2006), confirming again the relevance of modulated sounds to speech.

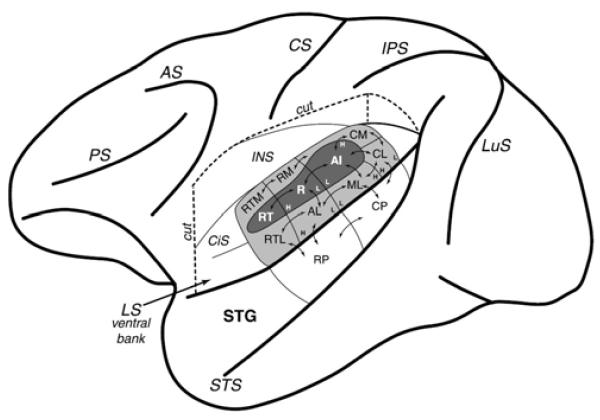

Figure 11.

Macaque cortex: core, belt, and parabelt regions from Hackett et al. (2001) (who also studied humans). ‘Core’ regions (AI, R, RT) are in dark gray within the lateral sulcus (LS, i.e. the ‘Sylvian fissure’). ‘Belt’ regions (CM, RM, RTM, RTL, AL, ML, CL) are in light gray. ‘Parabelt’ regions (RP, CP) occupy the major exposed surface of the superior temporal gyrus (STG). Note that human anatomical organization is suggested to be similar (Hackett et al., 2001, Sweet et al., 2005, Fullerton and Pandya, 2007, Brugge et al., 2008, Baumann et al., 2013).

4.2. Scalp EEG and MEG

Compared to the relatively straightforward interpretation of fMRI studies, the interpretation of scalp EEG and MEG studies is extraordinarily difficult. First, one must clearly distinguish between spontaneous brain rhythms and stimulus-driven rhythms. Second, the biophysical and physiological origins of the signal are poorly understood, and, even if understood, the ability to localize the source of the signal is blurred by the skull. Any given EEG electrode effectively averages over primary and secondary auditory cortices, and non-auditory cortices. Third, there is a distinction between ‘evoked’ and ‘induced’ activity, and for the steady-state response (SSR) or AM situation we must decide on how to treat the event-related potentials (ERPs). The tuning to 40 Hz for the SSR, for example, is driven by the simple fact that the middle-latency auditory evoked potentials (MAEPs) have their major peaks (Po-Na-Pa-Nb) separated by ~12.5 ms. That is, with 40-Hz AM or repetitive stimulation, the successive peaks overlap so as to reinforce each other. Now, this does not mean that the 40-Hz result says nothing about the time-scales of cortical processing, because it is probably not pure coincidence that the cortical ERPs exhibit this particular time separation. But it does make the interpretation of auditory SSRs more difficult, and particularly for slower AM rates where the long-latency auditory evoked potentials (LAEPs) will begin to overlap. This is probably one of the reasons why slower AM rates are rarely reported in the scalp EEG and MEG literature.
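The superposition argument for the 40-Hz SSR can be illustrated with a toy calculation. In this sketch the transient response is modeled as a damped 40-Hz oscillation, so that successive peaks of opposite polarity fall 12.5 ms apart; the waveform, its 25-ms decay constant, and the function names are assumptions for illustration, not a fit to recorded MAEPs. The response is repeated at several stimulation rates and the steady-state amplitude at the stimulation rate is measured.

```python
import math

def transient_response(fs, dur_s=0.3, f0=40.0, tau=0.025):
    """Toy evoked response: damped f0-Hz oscillation (a stand-in for the MAEP)."""
    return [math.exp(-k / (fs * tau)) * math.sin(2 * math.pi * f0 * k / fs)
            for k in range(int(fs * dur_s))]

def steady_state_amplitude(rate_hz, fs=2400):
    """Amplitude at the stimulation rate when the toy response repeats at rate_hz."""
    period = int(round(fs / rate_hz))  # samples between stimuli (exact for the rates below)
    resp = transient_response(fs)
    one_period = [0.0] * period
    for k, v in enumerate(resp):       # overlap-add the tails of all earlier responses
        one_period[k % period] += v
    re = sum(v * math.cos(2 * math.pi * n / period) for n, v in enumerate(one_period))
    im = sum(v * math.sin(2 * math.pi * n / period) for n, v in enumerate(one_period))
    return 2.0 * math.hypot(re, im) / period

amplitudes = {rate: steady_state_amplitude(rate) for rate in (10, 20, 40, 60, 80)}
best_rate = max(amplitudes, key=amplitudes.get)  # rate with the largest steady-state response
```

With these toy parameters the largest steady-state amplitude occurs at 40 Hz, purely because overlapping transients reinforce each other at that rate, which is the interpretive difficulty raised above.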

Another issue of interpretation, relevant also to fMRI, concerns the distinction between the rate TMTF (rTMTF) and the synchrony or vector-strength TMTF (vTMTF). In the rTMTF, the amount of modulation in the stimulus at some fm is correlated with the total increase in firing rate in the neural response. In the vTMTF, the amount of stimulus modulation at fm is correlated with the amount of modulation in the neural response at that same fm. Clearly, the fMRI response is driven by the rTMTF, since the BOLD signal follows the total firing rate over the recent past of several seconds. In contrast, the raw EEG signal should follow the vTMTF, since oscillations of the EEG essentially follow the oscillations of pyramidal cell dipole strength and polarity (with an additional LTI system representing the extracellular transfer to the electrode). This is in line with the long-held view that the EEG is driven by synchrony of synaptic potentials. The word “synchrony” here should not invoke any great mystery – this statement means nothing more than: the modulation at frequency fm of the number of apical vs. basal synaptic potentials results in the appearance (with some LTI phase-shift and gain) of the frequency fm in the raw EEG signal. This is standard dipole theory of EEG, but is beyond the present scope.
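The difference between the two measures can be made concrete with a short sketch. Here the rate measure is simply spikes per second, and the synchrony measure is the standard vector strength (the length of the mean resultant of spike phases at fm). The two spike trains are artificial examples constructed for illustration, and the function names are not taken from any cited study.

```python
import math

def mean_rate(spike_times, duration_s):
    """Rate measure: spikes per second over the stimulus duration."""
    return len(spike_times) / duration_s

def vector_strength(spike_times, fm):
    """Synchrony measure: 1 = all spikes at one phase of fm, 0 = no phase preference."""
    n = len(spike_times)
    c = sum(math.cos(2 * math.pi * fm * t) for t in spike_times)
    s = sum(math.sin(2 * math.pi * fm * t) for t in spike_times)
    return math.hypot(c, s) / n

fm, duration_s = 4.0, 5.0

# Artificial train 1: one spike per modulation cycle, always at the same phase.
locked = [k / fm for k in range(20)]

# Artificial train 2: same number of spikes, but the phase advances by 1/20 cycle
# per spike, so the 20 spikes cover all phases of the modulation evenly.
spread = [k * 1.05 / fm for k in range(20)]

rates = (mean_rate(locked, duration_s), mean_rate(spread, duration_s))
strengths = (vector_strength(locked, fm), vector_strength(spread, fm))
```

Both trains have the same mean rate (4 spikes/s), so a rate-based measure cannot tell them apart, whereas the vector strength is 1 for the first train and essentially 0 for the second.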

Thus, the interpretation of EEG results is not straightforward, and would require a separate full-length review alone. We only note that the work of Picton, one of the leading experts on auditory SSRs, shows results consistent with the predicted band-pass characteristic (Picton et al., 1987) and with clear relevance to the speech envelope (Aiken and Picton, 2008). However, there is inter-subject (and inter-electrode) variability and one can find confirmation of probably any perspective in the total EEG/MEG/ECoG literature. There are a large number of studies, some of them excellent and clear-headed in their interpretations, focusing on AM rates above the fluctuation range, usually on or around the famous 40-Hz (Galambos et al., 1981, Sheer, 1989, Picton et al., 2003), but these are not relevant to the present focus on the fluctuation range. Overall, we are not able to draw any strong conclusions from the scalp EEG/MEG literature for fluctuation range AM/FM.

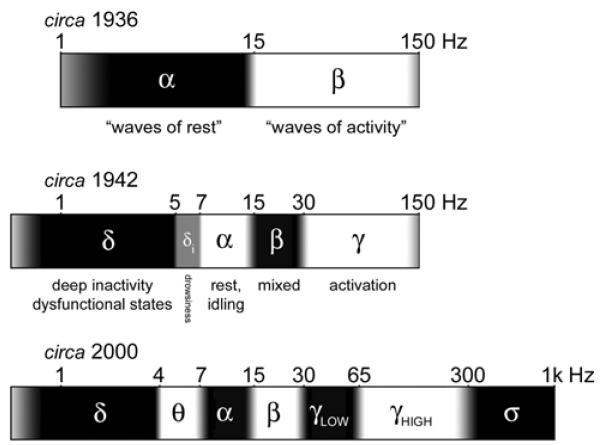

In order to complete our task of usefully bringing together basic information about the fluctuation (~1-10 Hz) range, we include Figure 9. Since there has been critique recently concerning the inconsistent use of different Greek-letter frequency bands (δ, θ, α, β, γ), this figure reviews the historical introduction and current conventions for these symbols, although the gray transitions indicate an acceptable sloppiness for the boundaries. Note that these are merely useful labels, although the ranges do correspond to some degree to distinct categories of spontaneous brain rhythms; critics since Grass and Gibbs (1938) have emphasized that the EEG spectrum is to be thought of as a continuum. This is understood by all workers in the field and there is nothing wrong with using these labels as quick reference, so long as the usage is clear.

Figure 9.

History of the EEG spectrum. All boundaries are approximate (indicated by gray transitional areas), due to different usages by different authors, but best-fit integers for the boundaries are indicated. The frequency scale is logarithmic. Top (circa 1936): Two frequency bands, α and β, were distinguished by Berger (1930), with the division at ~15-20 Hz. The lower/upper boundaries of α/β were not specified (frequencies outside the range ~2-150 Hz were not studied then). Ectors (1936), who systematically mapped sensory and motor cortices in awake rabbits, aptly referred to these ranges as “waves of rest” and “waves of activity”. Middle (circa 1942): δ (~0.5-5 Hz) was introduced by Walter (1936) for slow rhythms from dysfunctional tissue in the vicinity of tumors, and was quickly adopted for slow waves during deep sleep (Davis et al., 1937). γ (above ~30 Hz) was introduced by Jasper and Andrews (1938), although it had been discovered and systematically used for brain activation mapping by Ectors (1936). The “intermediate δ” band (~5-7 Hz) was introduced by Jung (1941) as a sign of drowsiness, and is included here as the precursor to today’s θ band (~4-7 Hz), also well-known to correlate with drowsiness. Bottom (circa 2000): The contemporary EEG spectrum includes the θ band (Walter and Dovey, 1944) and division of the γ band into low (~30-60 Hz) and high (~65-300 Hz) regions (Crone et al., 1998). A set of phenomena above ~300 Hz are generated by summed multi-unit spiking, labeled here the σ band (after Curio, 2000). Note that the recent labels (high-γ, σ) are not universally accepted, and note that high/low divisions have been proposed for other bands also.

We note that the classic view that slow rhythms (δ, θ, α) represent cortical inactivity or ‘idling’, whereas fast rhythms (γ) represent cortical activation (Ectors, 1935, Pfurtscheller, 1999, Crone et al., 2001), has been abundantly confirmed by blood-flow and metabolic measures (Darrow and Graf, 1945, Logothetis et al., 2001, Mukamel et al., 2005). Thus, future work on the role of “θ” in speech EEG/MEG should be careful to distinguish spontaneous θ, which is generally a sign of drowsiness and idling, from stimulus-driven θ. For example, Scheeringa et al. (2009) recently demonstrated with simultaneous EEG/fMRI that certain θ increases, thought to be due to cognitive activity, were in fact just increases in cortical idling in non-engaged parts of the cortex. Thus, researchers using scalp EEG/MEG to study stimulus-related and top-down θ influences during speech must proceed with particular caution in methods and interpretation. This is not to discourage work in this area, and we believe that important missing evidence will be provided by EEG/MEG (or perhaps LFP/ECoG) studies. For example, Kenmochi and Eggermont (1997) report a correlation between a cortical neuron’s BMF and the frequency of spontaneous oscillation in the LFP of cat auditory cortex. They also report correlations between click-following rate and the amplitudes of local idling rhythms: the slower regions of cortex (in terms of click-following rate) exhibit larger spontaneous rhythms. It has been known since the LFP/ECoG literature of the 1940s that non-primary regions exhibit larger spontaneous rhythms than primary regions, which is in line with their slower BMFs during auditory stimulation (Section 5). Thus, the LFP/ECoG spontaneous rhythms may reflect the temporal dynamics observed during stimulation.

5. ANIMAL NEUROPHYSIOLOGY

Based on the human fMRI evidence, two expectations for (unanesthetized) primate cortex are: 1. Primary regions exhibit some ~2-5 Hz AM/FM tuning, but also tuning up to ~20 Hz or more, with the peak at ~5-10 Hz. 2. Non-primary regions, particularly those lying lateral to HG, are generally slower and appear to more strongly express the ~2-5 Hz peak AM/FM tuning.

Several excellent reviews exist for general AM/FM results in animal neurophysiology (Kay, 1982, Langner, 1992, Joris et al., 2004, Wang et al., 2008, Malone and Schreiner, 2010), and our main purpose here is to understand results from humans, so we do not give a comprehensive review. However, we can still provide a useful focus on the fluctuation range (~1-10 Hz), and attempt to determine the neural correlates of the observed psychophysical tuning to AM/FM with broad peak at ~2-5 Hz. The fMRI results predict a bandpass tuning in the population-level firing rate with a broad peak at ~2-5 Hz in certain non-primary auditory cortices. Since the prediction from human fMRI focuses on (lateral) non-primary regions, understanding of the evidence requires a brief introduction to core vs. belt regions.

5.1. Basic orientation: core vs. belt

This basic distinction for auditory cortex emerged in the 1940s in anatomical and physiological studies of the cat (Fig. 10), and reviews by the key early workers can still be used for basic orientation (Rose and Woolsey, 1958, Ades, 1959, Woolsey, 1961). The early physiological workers used evoked potentials (ECoG), which resulted in blurred spatial resolution compared to more modern maps (e.g., Fig. 11) based on multi- or single-unit spike rates (Merzenich and Brugge, 1973, Imig et al., 1977, Aitkin et al., 1986). A tour de force history is given by Jones (2010). The ‘core’ vs. ‘belt’ distinction is also extended to subcortical structures (Andersen et al., 1980, Calford and Aitkin, 1983, Aitkin, 1986), which is essential to our hypotheses concerning the origins of the ~2-5 Hz tuning, so it will be illustrated below (Fig. 14).

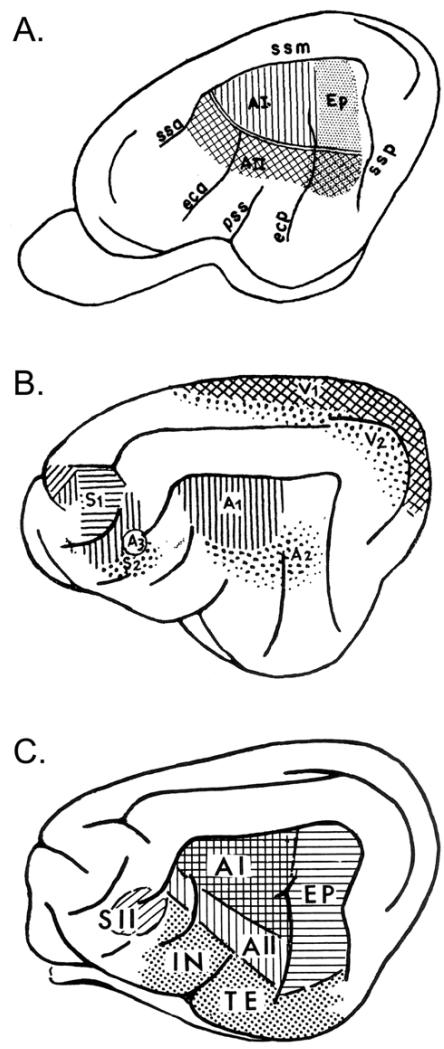

Figure 10.

Cat cortex: quick orientation and terminology. A. The basic sensory-responsive cortices from Bremer (1952). ‘A3’ is a small auditory-responsive zone within S2 (multimodal). B. Core (AI) and belt (AII, Ep) regions from Rose and Woolsey (1949), using anatomy and evoked-potential mapping (‘Ep’ = posterior ectosylvian area; ‘ss’ = suprasylvian sulcus). C. Summary of auditory-responsive regions from Ades (1959) including core (AI), belt (AII, EP), and parabelt/association (‘IN’ = insular region; ‘TE’ = temporal area) regions. Note that these regions, based on ECoG evoked-potentials, are expanded (spatially blurred) compared to current maps based on single- or multi-unit mapping.

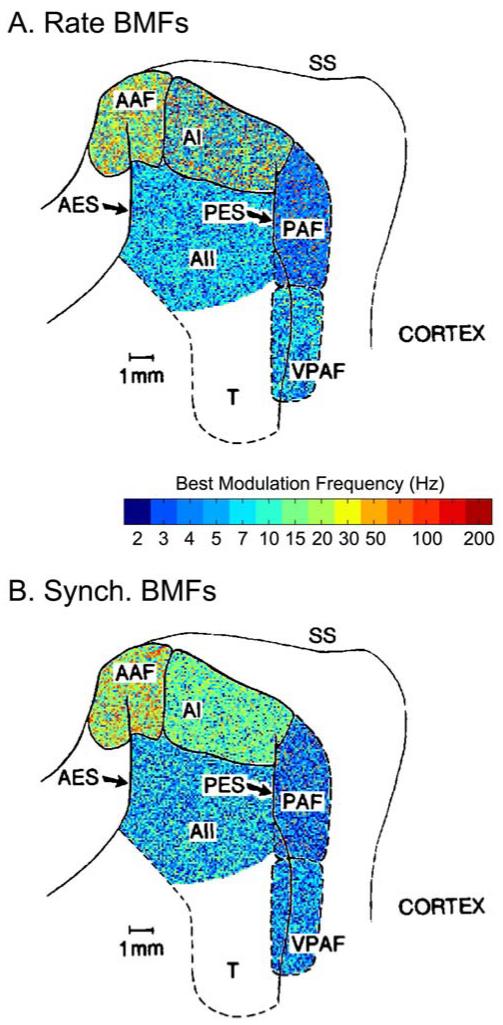

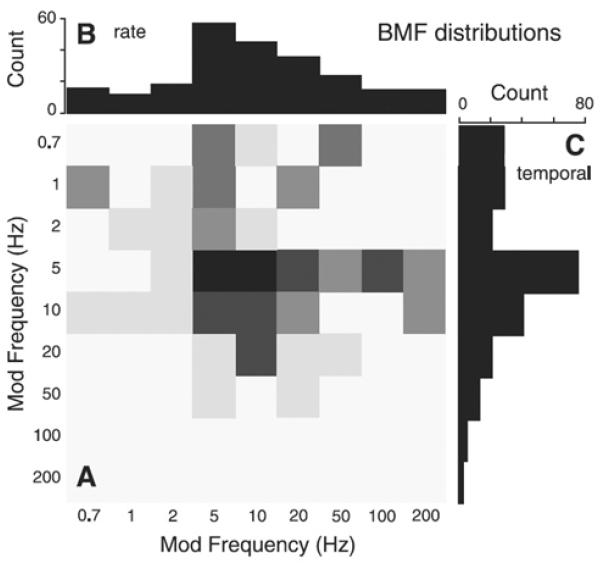

Figure 14.

Best modulation frequencies (BMFs) for AM tones as a function of anatomical region in the cat (Schreiner and Urbas, 1988). The underlying map is adapted from Andersen et al. (1980), whose terminology is employed by Schreiner and Urbas. Rate (A) and synchrony (B) BMFs were obtained for 172 single-units using 14 AM rates from 2.2 to 200 Hz (see colorbar, note that “2” really means “< 2.2” since no lower AM rates were tested). Each of the five cortical regions was colored according to the proportion of BMFs observed at each rate (positions within a given field are assigned randomly). This quickly summarizes the results for the main conclusions: AAF exhibits the fastest BMFs (up to 100 Hz, but still typically near ~20 Hz) followed by AI. The ‘belt’ regions AII, PAF, and VPAF exhibit the slowest BMFs, overwhelmingly in the fluctuation range (~1-10 Hz). PAF (receiving heavy input from MGBd) is the slowest, with a clear preference for ~2-5 Hz AM.

Comparing cats and monkeys for homologous regions is not always straightforward, but at least AAF and CM appear established as near-homologues. There has been a huge transformation of the neocortex (expansion of association areas, greater number and depth of sulci) going from carnivores to primates. The peri-Sylvian auditory regions appear rotated by nearly 180°, hence an anterior field matching to a caudo-medial field. AAF/CM is the best studied auditory field outside of the core, and is in some ways more similar to core than to belt areas (Imaizumi et al., 2005). It tunes to higher modulation frequencies than even AI, and so AAF/CM is to be excluded from certain summary statements about ‘belt’ regions, such as their typically slower characteristics. As a final point of general orientation (Rauschecker and Tian, 2000), caudal belt areas (CL, and sometimes CM) are implicated in an auditory ‘where’ pathway (towards parietal lobe regions for spatial processing), whereas lateral belt regions begin a ‘what’ pathway (towards temporal lobe regions for processing of natural sounds and species-specific vocalizations). The evidence for functional specialization does not appear unequivocal to us, and we mention this only as a point of general orientation: In these terms, the fMRI evidence predicts slower (~2-5 Hz) tuning in belt and parabelt regions of the ‘what’ (lateral) pathway.

For humans (Hackett et al., 2001, Sweet et al., 2005, Fullerton and Pandya, 2007, Brugge et al., 2008, Baumann et al., 2013): the core is localized to HG, the belt to surrounding regions of the supratemporal plane (STP) and lateral HG, and the parabelt to further surrounding regions, including most of the exposed surface of the superior temporal gyrus (STG). Lateral belt regions may just emerge from the Sylvian fissure onto the exposed STG. The fMRI activations for fluctuation-range AM/FM were found most strongly in regions lateral to HG, which are belt and parabelt regions. HG displayed some ~2-5 Hz tuning, in addition to faster tunings (up to ~20-32 Hz), so ‘core’ regions of animal cortex should exhibit a subset of cells with this characteristic.

5.2. Single-unit studies of core cortex (AI)

Single-unit studies of auditory cortex began in the 1950s (Erulkar et al., 1956), but the early studies were concerned overwhelmingly with methodological issues and basic response properties to clicks and tones (latency, intensity relations, tonotopy, etc.). Katsuki et al. (1960) briefly mention “remarkable” responses to beating tones in unanesthetized monkeys, but no specifics are given. Some early animal ECoG studies (Goldstein et al., 1959) used repetitive click stimuli with focus on periodicity pitch, and later studies also focused on these rapid repetition rates, but these are not directly relevant to the present focus. Single-unit work of the 1970s ± a decade focused heavily on subdivision of cortical fields, tonotopy, and other response properties of AI. Thus, despite a long history of work on AI, we find only a small number of studies for fluctuation range AM/FM:

Whitfield and Evans (Whitfield, 1957, Whitfield and Evans, 1965, Evans, 1968) studied AI of unanesthetized cats using FM stimuli, including sinusoidal FM in the fluctuation range. Whitfield (1957) found that the ECoG (surface potential) from AI could be driven at the same rate as the FM for rates between 2 and 18 Hz. Whitfield and Evans (1965) found that most single-units responded to FM tones more consistently than to static tones. Testing a range of FM rates, they found: “Rates as low as 1 cycle/sec. were still effective in a few units in evoking periodic firing consistently related to some point on the modulation waveform. For most units, however, rates below 2-3 cycles/sec. and above 15 cycles/sec. tended to be less effective in evoking the consistent responses…” Evans (1968) discusses this result in terms of the emphasis in cortex on dynamic vs. static stimuli.

Fastl et al. (1986) searched for the neural correlates of fluctuation strength in AI of unanesthetized squirrel monkeys. SAM tones from 0.5 to 32 Hz AM were tested at various modulation depths, and the correspondence with human psychophysics was seen as “promising”. Specifically, AI neurons generally exhibited bandpass characteristics with best modulation frequencies (BMFs) below 32 Hz, often in the upper fluctuation range (~5-10 Hz).

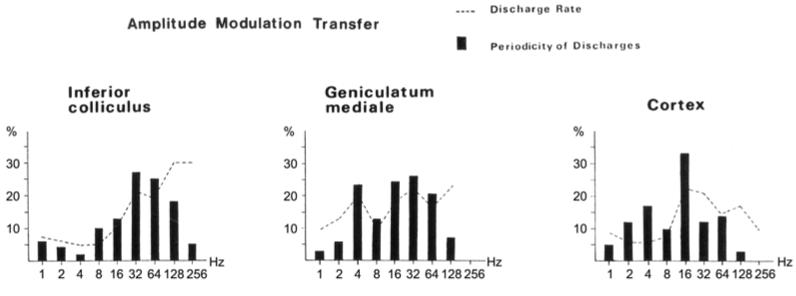

Müller-Preuss et al. (1988) studied IC, MGB, and auditory cortex in unanesthetized squirrel monkeys using SAM noise and tones. AM rates from 1-256 Hz were tested for ~450 units total. They confirm Fastl et al. (1986) in that: “the most impressive result is that most of the units are sensitive within a particular band of AM-frequencies. There are only a few units which display a low pass characteristic or have complex response patterns (i.e. multiple peaked).” Their full data for IC and MGB will be discussed in Section 5.4, but here we note the appearance of a peak near ~4 Hz (for vTMTFs) in the thalamocortical data compared to IC (Fig. 12). However, the full report of the cortical data (Bieser and Müller-Preuss, 1996), with more extensive measurements, shows a broad peak at ~8 Hz for core regions, not two peaks at 4 and 16 Hz. The majority of core BMFs were in the range 1-32 Hz, so the results are overall consistent with core results in other primates (although squirrel monkeys appear to exhibit overall faster AM tuning preferences than Old World primates, Brian Malone, personal communication).

Eggermont (1993, 1994) studied AM noise and AM/FM tones in lightly anesthetized cat (light ketamine, and he provides some evidence that the anesthesia does not drive the results, so they are included). In AI of the adult cat, synchrony-BMFs for AM noise peaked in the range 8-12 Hz, whereas for AM/FM tones they peaked mostly in the range 4-7 Hz (full range up to 32 Hz or more). Eggermont heavily studied click trains, which give results most similar to AM noise (both are broadband stimuli), but we do not cover this here. See also Eggermont for comparison of click trains to other AM/FM stimuli, where he points out that AI neurons prefer stimulus categories with rapid onsets (clicks, gamma tones, etc.).

Liang et al. (2002) studied AI in awake marmosets, using SAM and SFM tones. Modulation frequencies as low as 1-4 Hz were used, and most single-units exhibited a band-pass preference. The majority of rate and synchrony BMFs were in the range 4-32 Hz, although rate BMFs in particular can be found as high as 128-256 Hz. They emphasize the similarity in their results for AM and FM stimuli, although we note slightly lower BMFs for SFM (see similar comment in Section 3). Bendor and Wang (2008) showed that, within the core cortical regions of awake marmoset monkeys, R and RT have longer latencies and slower AM tuning than AI. They proposed a caudal-to-rostral gradient of increasing temporal integration, with the implication of a hierarchical progression.

Malone et al. (2007) studied SAM tones in core regions (AI, R) of unanesthetized macaques, and found the majority of BMFs in the range ~4-32 Hz (peak at ~5-10 Hz for their fms tested). They emphasize the lack of correlation between rate and temporal BMFs, but the peaks/ranges are similar for rate and temporal BMFs (Fig. 13), so the population-level result is essentially similar.

Yin et al. (2011) studied AI in awake macaques using SAM noise (the lowest rates tested were 5 and 10 Hz). The great majority of rate and synchrony BMFs were in the range 5-30 Hz, with the peak at 5-10 Hz.
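The single-unit summaries above rest on a few simple operations: reading off the BMF as the tested fm that gives the largest response, describing the shape of the tuning curve (band-pass vs. low-pass), and tallying BMFs across the population. A minimal Python sketch of these steps follows. The half-of-peak criterion, the function names, and the example numbers are assumptions for illustration only; the studies cited above each use their own criteria and stimulus sets.

```python
def best_modulation_frequency(fms_hz, responses):
    """Return the tested fm (Hz) giving the largest response, i.e., the BMF.

    `responses` can be firing rates (rate BMF) or vector strengths
    (synchrony BMF), one value per tested fm.
    """
    idx = max(range(len(responses)), key=lambda i: responses[i])
    return fms_hz[idx]


def classify_tmtf(responses, drop=0.5):
    """Label the shape of a TMTF sampled at ascending fm values.

    Assumed criterion (illustrative only): a side counts as 'rolled off'
    if any response on that side of the peak is below drop * peak.
    """
    peak = max(responses)
    idx = responses.index(peak)
    low_side = any(r < drop * peak for r in responses[:idx])
    high_side = any(r < drop * peak for r in responses[idx + 1:])
    if low_side and high_side:
        return "band-pass"
    if high_side:
        return "low-pass"
    if low_side:
        return "high-pass"
    return "all-pass"


def fraction_in_range(bmfs_hz, lo_hz=1.0, hi_hz=10.0):
    """Fraction of units whose BMF lies in a range (default: fluctuation range)."""
    if not bmfs_hz:
        return 0.0
    return sum(lo_hz <= b <= hi_hz for b in bmfs_hz) / len(bmfs_hz)


# Made-up tuning curves (spikes/s) at octave-spaced AM rates.
fms_hz = [0.5, 1, 2, 4, 8, 16, 32]
unit_a = [2, 5, 9, 12, 10, 6, 3]     # peaked tuning
unit_b = [10, 10, 9, 8, 5, 2, 1]     # no roll-off at low rates

for name, unit in (("unit_a", unit_a), ("unit_b", unit_b)):
    print(name, best_modulation_frequency(fms_hz, unit), "Hz", classify_tmtf(unit))

# Made-up population of BMFs (Hz).
print(fraction_in_range([2, 4, 8, 16, 32]))
```

Note that the BMF and the shape label both depend on the set of fm values tested: a unit probed only at 5 Hz and above cannot be assigned a BMF of ~2-5 Hz, and a low-side roll-off cannot be detected below the lowest rate tested. This should be kept in mind when comparing the ranges reported across the studies above.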

Figure 12.