Abstract

BACKGROUND

Value-based purchasing programs will use administrative data to compare hospitals by rates of hospital-acquired pressure ulcers (HAPUs) for public reporting and financial penalties. Validation of administrative data for these purposes, however, is lacking.

OBJECTIVE

To assess the validity of the administrative data used to generate HAPU rates to compare hospitals for public reporting and financial penalty, by comparing hospital performance as assessed by HAPU rates generated from administrative and surveillance data.

DESIGN

Retrospective analysis of 2 million all-payer administrative records for 448 California hospitals and quarterly hospital-wide surveillance data for 213 hospitals from the California Nursing Outcomes and Prevalence Study (as publicly reported on CalHospitalCompare).

SETTING

196 acute-care hospitals with ≥6 months of available administrative and surveillance data.

PATIENTS

Non-obstetric adults discharged in 2009.

MEASUREMENTS

Hospital-specific HAPU rates were computed as the percentage of discharged adults (from administrative data) or examined adults (from surveillance data) with ≥1 HAPU stage II and above (HAPU2+). Categorization of hospital performance using administrative data was compared to the grade assigned using the surveillance data.

RESULTS

By administrative data, the mean (CI) hospital-specific HAPU2+ rate was 0.15% (0.13, 0.17); by surveillance data, the mean (CI) hospital-specific HAPU2+ rate was 2.0% (1.8, 2.2). Of the 49 hospitals with HAPU2+ rates from administrative data in the highest (worst) quartile, the surveillance dataset assigned these hospitals performance grades of “Superior” for 3 hospitals, “Above Average” for 14 hospitals, “Average” for 15 hospitals, and “Below Average” for 17 hospitals.

LIMITATIONS

Data are from 1 state, 1 year.

CONCLUSIONS

Hospital performance scores generated from HAPU2+ rates differed considerably between administrative data and surveillance data, suggesting administrative data may not be appropriate for comparing hospitals.

INTRODUCTION

Pressure ulcers (i.e., “bed sores”) are skin or tissue injuries over bony prominences, due to pressure and/or shear (1). Pressure ulcer severity is categorized by stages (Appendix Table 1) ranging from stage I (non-blanchable erythematous intact skin) to stage IV (with full-thickness tissue loss exposing bone, tendon or muscle) (1). Hospital-acquired pressure ulcers (HAPUs) are painful (2), common (1–2.5 million annually in the US (3, 4)), costly (3–6), often preventable (7) and potentially fatal complications (4). The cost to heal each pressure ulcer varies by ulcer severity and population studied, from hundreds of dollars (3) for earlier ulcer stages I–II to $5000–151,700 (4–6) for an advanced ulcer.

The principal policy initiatives used to motivate hospitals to prevent complications like HAPUs are “value-based purchasing” programs (8–11) such as the October 2008 Hospital-Acquired Conditions (HAC) Initiative (9, 10), which eliminated extra Medicare payments for treating certain complications. Yet, the HAC Initiative is more than a rule change reducing extra payments. Because it requires hospitals to identify each diagnosis as a “present-on-admission” or “hospital-acquired” condition, it changes the purpose of administrative data from generating claims for hospital payment to serving as a dataset for comparing hospitals by complication rates. Of note, although HAC diagnoses no longer generate additional payment, hospital coders following federal guidelines (12–14) are required to list all diagnoses in administrative data that affect patient care or length-of-stay. Each hospital’s HAC rates from administrative data have been publicly reported on the Centers for Medicare and Medicaid Services (CMS) website called Hospital Compare (15) since 2011. In October 2014, as mandated in the Affordable Care Act, the quartile of hospitals with the highest risk-adjusted HAC rates will be penalized with a 1% pay reduction for all Medicare admissions (8), based on a total HAC score including a HAPU measure generated from administrative data. The specific measure currently proposed for comparing hospitals by HAPU rates is the Agency for Healthcare Research and Quality (AHRQ) Patient Safety Indicator 3 (PSI 3) (11, 16), which applies extensive inclusion and exclusion criteria to administrative discharge data in order to generate hospital rates of potentially preventable hospital-acquired pressure ulcers.

Decisions regarding hospital comparisons, payment changes and public reporting have occurred with limited validation (14) of the administrative data that serve as the foundation for these HAC measures, including the AHRQ PSI measure. To model such a validation process, we took advantage of an existing statewide dataset of HAPU rates and performance scores generated from surveillance data obtained by comprehensive patient skin exams. We compared how a hospital’s report card regarding HAPU rates (as a better or worse performer) varied depending on whether it was generated from administrative data or surveillance data. Specifically, we assessed how hospitals in the quartile with the highest HAPU rates by administrative data (i.e., the worst rates, at risk for financial penalty) were scored by similar HAPU measures using surveillance data.

METHODS

Study Design

We conducted retrospective analyses of 1 year of all-payer administrative discharge records and quarterly hospital-wide pressure ulcer surveillance data for California hospitals in 2009 to assess the validity of HAPU rates and hospital performance scores generated from administrative data for comparing hospital performance. The University of Michigan’s human subjects review board approved this study.

Data Sources

For the administrative data, we used the Healthcare Cost and Utilization Project (HCUP) State Inpatient Dataset (17) for California 2009, sponsored by AHRQ, containing hospitalization-specific demographic, procedure and diagnosis codes (including location and stage-specific pressure ulcer codes (10, 12) detailed in Appendix Text 1 and Appendix Tables 2–4) generated by hospitals to submit payment requests (i.e., claims) for each discharge from 448 acute care non-federal California hospitals in 2009. HCUP data are translated into a uniform format to facilitate comparisons, protect patient identity, and undergo quality control procedures to confirm data are internally valid and consistent (18). The 2009 American Hospital Association Annual Survey Database provided hospital characteristics (19).

The surveillance dataset used was generated by the Collaborative Alliance for Nursing Outcomes (CALNOC) Pressure Ulcer Prevalence Study (20, 21). The 2009 dataset available for analysis reports hospital-level HAPU rates from patients with at least one HAPU stage II or above (without a present-on-admission pressure ulcer) for 213 California hospitals as publicly reported on CalHospitalCompare (22) by the California Hospital Assessment and Reporting Taskforce (CHART) (23). The patient-level and hospitalization-level data collected to generate this surveillance dataset were not available for study, and hospital rates for different stages of pressure ulcers (such as stages III or above) were not available for analysis. CALNOC surveillance data (20) are generated by a team (24) including bedside nurses and wound care specialists, guided by on-site representative(s) with expertise in CALNOC data collection (25). The team performs quarterly hospital-wide patient skin exams (clinical surveillance exams) and reviews the entire medical record (including nursing notes) to describe pressure ulcers by stage as defined by the National Pressure Ulcer Advisory Panel (1, 20) and whether ulcers were hospital-acquired. The submitted data undergo screening procedures for inconsistencies and internal validation checks (26); independent registered nurses certified in CHART data collection methods periodically audit each participating hospital (22). The CALNOC pressure ulcer measurement methodology and data registry are recognized by the National Quality Forum (25, 26); CALNOC is also a recognized registry for CMS and the Joint Commission (27).

Study Population

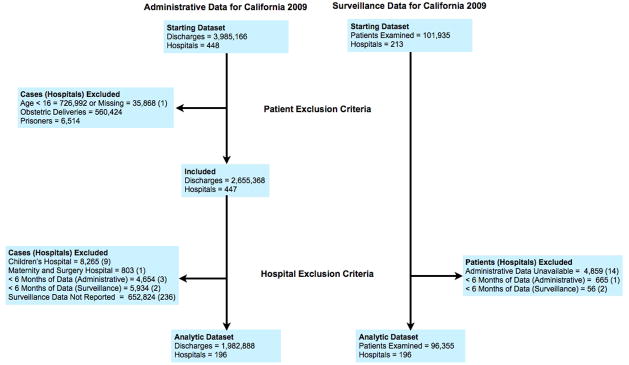

Figure 1 depicts the patient and hospital exclusion criteria used to construct the analytic datasets. Patient exclusions were applied to the administrative dataset to match those used to generate the available hospital-level surveillance dataset as closely as possible. The study population of adults excluded patients under age 16 and obstetrical hospitalizations in both administrative and surveillance data. Only acute-care medical-surgical hospitals with administrative and surveillance data available for at least 6 months (or 2 quarters) in 2009 were included. We performed analyses for an all-payer population, as what was initially a Medicare policy expanded to other payers (28, 29). Hospital-acquired pressure ulcers were counted for generating hospital-level HAPU rates if the patient had at least one hospital-acquired pressure ulcer of stage II or above and if the patient also had no other pressure ulcers present at the time of admission. No length-of-stay restrictions were applied while generating either the administrative or surveillance dataset. Some clinical exclusions applied in surveillance data collection (20) could not be applied to administrative data: medical instability, when turning for skin exam was contraindicated (e.g., uncontrolled pain, unstable fracture), dying patients for whom pressure ulcer prevention was no longer a goal, patients not in rooms at the time of exam, and exam refusal (although patient consent was not required).

Figure 1.

Study Flow Diagram

Comparing HAPU rates and performance scores assessed using administrative and surveillance data

HAPU cases were identified in administrative data as hospitalizations in which at least one of the 24 available secondary diagnosis fields contained an ICD-9-CM stage-specific pressure ulcer code listed as hospital-acquired (detailed in Appendix Text 1, Appendix Table 5). Hospital HAPU2+ rates from administrative data (“administrative HAPU2+”) were computed as the percentage of all adult hospitalizations having at least one HAPU stage II and above without any pressure ulcer diagnoses listed as present-on-admission (Appendix Table 5). For each hospital, this administrative HAPU2+ measure is a cumulative incidence rate (1) of HAPUs stage II and above, calculated over the entire length-of-stay for each hospitalization.
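The rate definition above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' Stata code. The record layout (`hospital_id`, and `ulcers` as a list of `(stage, present_on_admission)` pairs) is hypothetical; real HCUP records encode stage and present-on-admission status through ICD-9-CM codes and indicator fields described in the Appendix.

```python
from collections import defaultdict

def hapu2plus_rates(discharges):
    """Return {hospital_id: percent of discharges counted as HAPU2+}.

    Each discharge is a dict with hypothetical keys:
      hospital_id -- any hashable identifier
      ulcers      -- list of (stage, present_on_admission) tuples,
                     with stage given as an int from 1 to 4
    """
    totals = defaultdict(int)
    cases = defaultdict(int)
    for d in discharges:
        totals[d["hospital_id"]] += 1
        ulcers = d["ulcers"]
        any_poa = any(poa for _, poa in ulcers)
        acquired_stage2plus = any(stage >= 2 and not poa for stage, poa in ulcers)
        # Count the discharge only if it has a hospital-acquired ulcer of
        # stage II or above and no ulcer present on admission.
        if acquired_stage2plus and not any_poa:
            cases[d["hospital_id"]] += 1
    return {h: 100.0 * cases[h] / totals[h] for h in totals}

# Made-up records for two hypothetical hospitals.
sample = [
    {"hospital_id": "A", "ulcers": [(2, False)]},             # counted
    {"hospital_id": "A", "ulcers": [(3, False), (2, True)]},  # excluded: ulcer present on admission
    {"hospital_id": "A", "ulcers": [(1, False)]},             # stage I only, not counted
    {"hospital_id": "A", "ulcers": []},
    {"hospital_id": "B", "ulcers": []},
    {"hospital_id": "B", "ulcers": [(2, True)]},              # present on admission only
]
rates = hapu2plus_rates(sample)
print(rates)
```

In this toy input, hospital A has one qualifying discharge out of four and hospital B has none.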

Hospital rates of pressure ulcers stages II and above from the surveillance data (“surveillance HAPU2+”) were reported as a percentage of adult patients examined hospital-wide in 2009 with at least one HAPU stage II and above, without a present-on-admission pressure ulcer (Appendix Table 5). Due to the method of data collection, this surveillance measure is a point-prevalence HAPU2+ rate for each hospital. A 95% confidence interval (CI) was generated by CHART (23) for each hospital rate using Bayesian estimation (30) based on the number of patients in the hospital examined that year in the pressure ulcer prevalence study. The publicly reported hospital grades based on HAPU rates provided with the surveillance dataset from CHART were assigned by comparing the hospital’s rate and confidence intervals to the 10th, 50th, and 90th percentiles (detailed in Appendix Table 6 and the Appendix Figure).

We anticipated that each hospital’s HAPU2+ incidence rate from administrative data would be lower than its HAPU2+ rate from surveillance data, because point-prevalence assessments collect more data from patients with longer lengths of stay (31), which are both a potential risk factor for and a consequence of pressure ulcers, and less data from patients with short lengths of stay. However, because both our incidence and point-prevalence measures were specific to hospital-acquired pressure ulcers occurring in the time period of the hospitalization, we anticipated there would be a positive correlation between the measures for individual hospitals.
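The length-of-stay effect described above can be made concrete with a small calculation. The sketch below is an illustration under simplifying assumptions, not an analysis from the study: it assumes a steady-state census (so a stay is sampled on a survey day with probability proportional to its length) and that the ulcer is present for the entire stay, which overstates prevalence. The function name and the input values are hypothetical round numbers chosen for illustration.

```python
def expected_point_prevalence(incidence, los_cases, los_noncases):
    """Expected share of occupied beds held by case patients on a survey day.

    incidence    -- fraction of discharges that are cases (0 to 1)
    los_cases    -- mean length of stay for case discharges, in days
    los_noncases -- mean length of stay for all other discharges, in days
    """
    case_bed_days = incidence * los_cases
    noncase_bed_days = (1.0 - incidence) * los_noncases
    return case_bed_days / (case_bed_days + noncase_bed_days)

# Hypothetical inputs: 0.15% incidence, 25-day stays for cases, 5-day stays otherwise.
incidence = 0.0015
prevalence = expected_point_prevalence(incidence, 25.0, 5.0)
ratio = prevalence / incidence
print(f"prevalence = {100 * prevalence:.2f}%, ratio = {ratio:.1f}")
```

With these inputs the sketch gives a point prevalence of roughly 0.75%, about five times the incidence, which shows how oversampling of long stays alone can raise a point-prevalence rate above the corresponding incidence rate.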

Statistical Analyses

Hospital-level descriptive statistics and 95% CIs are reported. Pearson’s r was used to test the strength of association between hospital HAPU2+ rates from administrative and surveillance data. Hospitals were ranked and divided into quartiles according to their administrative HAPU2+ rate, from the best performers (quartile 1) to the worst performers (quartile 4). After aligning the administrative data quartiles of performance with the four surveillance data performance grades (“Superior”, “Above Average”, “Average” and “Below Average”), similarities and differences in performance were compared graphically. Analyses were conducted using Stata/MP 12.1 (Stata Corp., College Station, TX), by H.R. and J.M.
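The ranking and comparison steps can be sketched as follows. This is a hedged illustration in Python rather than the authors' Stata code. The function names and the sample data are hypothetical, and the tie-breaking rule (by hospital identifier) is an assumption, since the text does not say how hospitals with identical rates were ordered.

```python
from collections import Counter

def assign_quartiles(rates):
    """Map hospital -> quartile (1 = lowest rates, 4 = highest rates).

    Ties are broken by hospital identifier; this is an assumption, as the
    source does not describe how tied rates were handled.
    """
    ordered = sorted(rates, key=lambda h: (rates[h], h))
    n = len(ordered)
    return {h: (i * 4) // n + 1 for i, h in enumerate(ordered)}

def grades_in_quartile(quartiles, grades, quartile=4):
    """Tally surveillance grades among hospitals in one administrative quartile."""
    return Counter(
        grades[h] for h, q in quartiles.items() if q == quartile and h in grades
    )

# Made-up administrative HAPU2+ rates (%) and surveillance grades.
admin_rates = {"H1": 0.00, "H2": 0.05, "H3": 0.10, "H4": 0.12,
               "H5": 0.15, "H6": 0.20, "H7": 0.40, "H8": 0.74}
surveillance_grades = {"H1": "Average", "H2": "Below Average", "H3": "Superior",
                       "H4": "Average", "H5": "Average", "H6": "Above Average",
                       "H7": "Above Average", "H8": "Below Average"}

quartiles = assign_quartiles(admin_rates)
worst = grades_in_quartile(quartiles, surveillance_grades)
print(worst)
```

With 196 hospitals this split yields four groups of 49, consistent with the quartile size reported in the Results.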

Role of Funding Source

This study’s funder, AHRQ, was not involved in the design, result reporting or interpretation of this study, or in the decision to publish this manuscript.

RESULTS

Cohort Characteristics

In our analytic dataset (Figure 1), there were 1,982,888 adult discharges from 196 acute-care California hospitals in 2009 with ≥6 months of administrative and surveillance data available to study. Using patient characteristics available in the administrative data (Table), hospitalizations with a HAPU2+ involved older patients, longer lengths of stay, more listed diagnoses, more frequent admission for unscheduled surgery and higher in-hospital mortality than hospitalizations without pressure ulcer diagnoses. The 196 hospitals had a mean of 10,117 hospitalizations (range: 289–39,510), with a mean of 1.8% (CI: 1.4, 2.3) of hospitalizations for rehabilitation.

Table.

Hospital-level discharge characteristics by pressure ulcer status, according to administrative discharge data (N=196 Hospitals)

| Characteristic | All Discharges | Discharges without a pressure ulcer | Discharges with Hospital-Acquired Pressure Ulcer (administrative HAPU2+)* |
|---|---|---|---|
| Volume, n | | | |
| Mean (SD) | 10117 (6262) | 9795 (6106) | 15 (15) |
| Median (IQR) | 9377 (5729, 13359) | 9083 (5502, 12861) | 10.5 (4, 21) |
| Range | 289 to 39510 | 284 to 38259 | 0 to 74 |
| Age, y | | | |
| Mean (SD) | 62.3 (4.8) | 61.9 (4.8) | 69.6 (8.4) |
| Median (IQR) | 62.8 (60.8, 65.4) | 62.4 (60.4, 65.1) | 71.0 (64.7, 75.1) |
| Range | 47.0 to 78.2 | 46.8 to 78.1 | 33.0 to 87.8 |
| Female, % | | | |
| Mean (SD) | 54.2 (4.5) | 54.3 (4.5) | 48.7 (21.4) |
| Median (IQR) | 54.4 (52.2, 56.7) | 54.5 (52.2, 56.8) | 50.0 (35.5, 60.0) |
| Range | 36.3 to 78.0 | 36.4 to 78.2 | 0 to 100.0 |
| Length of Stay, d | | | |
| Mean (SD) | 5.0 (1.3) | 4.8 (1.2) | 24.9 (12.6) |
| Median (IQR) | 4.8 (4.2, 5.5) | 4.7 (4.0, 5.3) | 22.2 (17.5, 28.9) |
| Range | 1.9 to 13.3 | 1.9 to 13.0 | 2.0 to 93.1 |
| Diagnoses per Discharge, n | | | |
| Mean (SD) | 9.8 (1.7) | 9.5 (1.6) | 19.7 (2.8) |
| Median (IQR) | 9.8 (8.9, 10.8) | 9.4 (8.5, 10.6) | 20.0 (18.3, 21.6) |
| Range | 4.9 to 15.7 | 4.7 to 15.3 | 11.1 to 25.0 |
| Medicare Discharges, % | | | |
| Mean (SD) | 50.3 (10.9) | 49.5 (10.8) | 67.0 (22.5) |
| Median (IQR) | 52.2 (45.9, 57.6) | 51.1 (45.0, 56.7) | 66.7 (52.9, 80.0) |
| Range | 8.5 to 72.1 | 8.0 to 71.3 | 0 to 100.0 |
| Type of Hospitalization, % | | | |
| Medical | | | |
| Mean (SD) | 67.8 (10.7) | 67.4 (10.8) | 52.8 (25.0) |
| Median (IQR) | 68.3 (63.0, 74.8) | 67.7 (62.5, 74.4) | 50.0 (36.4, 67.4) |
| Range | 1.6 to 99.7 | 1.7 to 99.6 | 0 to 100.0 |
| Scheduled Surgical | | | |
| Mean (SD) | 17.1 (10.2) | 17.6 (10.3) | 8.4 (12.2) |
| Median (IQR) | 16.4 (10.5, 21.1) | 17.0 (10.8, 21.8) | 5.1 (0, 12.5) |
| Range | 0 to 95.1 | 0 to 95.0 | 0 to 100.0 |
| Unscheduled Surgical | | | |
| Mean (SD) | 15.0 (4.5) | 15.1 (4.5) | 38.9 (23.6) |
| Median (IQR) | 14.8 (12.1, 17.6) | 14.8 (12.0, 17.7) | 39.4 (23.1, 52.6) |
| Range | 0.3 to 32.7 | 0.4 to 33.1 | 0 to 100.0 |
| Died during Hospitalization, % | | | |
| Mean (SD) | 3.1 (0.8) | 2.8 (0.8) | 16.3 (18.0) |
| Median (IQR) | 3.1 (2.5, 3.5) | 2.8 (2.2, 3.2) | 14.3 (0, 22.7) |
| Range | 0 to 6.0 | 0 to 5.4 | 0 to 100.0 |
| Para/Hemi/Quadriplegia, % | | | |
| Mean (SD) | 4.0 (1.5) | 3.5 (1.4) | 10.8 (12.7) |
| Median (IQR) | 3.9 (3.1, 4.7) | 3.5 (2.6, 4.3) | 9.4 (0, 16.7) |
| Range | 0.8 to 11.6 | 0.7 to 11.0 | 0 to 100.0 |
| Spina Bifida/Anoxic Brain Damage, % | | | |
| Mean (SD) | 0.5 (0.3) | 0.4 (0.2) | 3.9 (8.9) |
| Median (IQR) | 0.4 (0.3, 0.6) | 0.4 (0.3, 0.5) | 0 (0, 5.3) |
| Range | 0 to 2.0 | 0 to 1.3 | 0 to 66.7 |
| Transfers In+, % | | | |
| Mean (SD) | 7.1 (5.1) | 6.8 (4.9) | 14.0 (18.2) |
| Median (IQR) | 6.0 (3.2, 9.8) | 5.8 (3.2, 9.2) | 9.3 (0, 20.3) |
| Range | 0.2 to 23.9 | 0.2 to 22.2 | 0 to 100.0 |

*10 hospitals had a HAPU2+ rate equal to zero; discharge characteristics are reported for the 186 hospitals with a non-zero rate.

+Includes patients admitted from another hospital or other health facility including long-term care facilities.

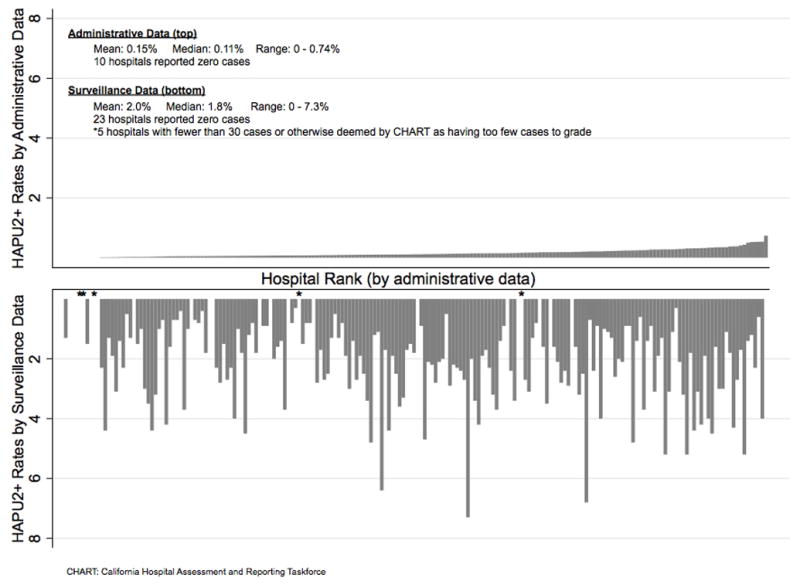

Hospital Rates of Hospital-Acquired Pressure Ulcers stages 2 and above

Administrative HAPU2+ rates had a mean of 0.15% (CI: 0.13, 0.17) of discharges with a range of 0–0.74% for an all-payer population, and HAPU2+ rates among Medicare patients were 0.20% (CI: 0.17, 0.22). Surveillance HAPU2+ rates had a higher mean hospital rate of 2.0% (CI: 1.8, 2.2) of patients examined with a range of 0–7.3%. Next we assessed how well each individual hospital’s HAPU2+ rates from administrative data correlated with the same hospital’s HAPU2+ rates from the surveillance data. First we graphed each hospital’s HAPU2+ rate according to administrative data (Figure 2, top graph) in rank order. Then, for the same hospitals and in the same order, we graphed each hospital’s HAPU2+ rate according to surveillance data (Figure 2, bottom graph). Quantitatively, the correlation between each hospital’s HAPU2+ rate from administrative data and from surveillance data was very weakly positive with Pearson’s r of 0.20 (CI: 0.06, 0.33).
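For readers who want to check the correlation interval, the sketch below computes Pearson's r and a 95% confidence interval with the Fisher z-transformation. The paper does not state which interval method was used, so the Fisher approach is an assumption, as is the use of all 196 hospitals as the sample size. Under those assumptions, r = 0.20 gives an interval that rounds to the reported (0.06, 0.33).

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def fisher_ci(r, n, z_crit=1.959964):
    """Approximate 95% CI for r via the Fisher z-transformation.

    Assumed method: the source does not say how its interval was computed.
    """
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)

# Reported correlation, with n assumed to be the 196 study hospitals.
low, high = fisher_ci(0.20, 196)
print(f"95% CI: ({low:.2f}, {high:.2f})")
```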

Figure 2.

Hospital rates of hospital-acquired pressure ulcers (stages II and above), by administrative data and surveillance data

CHART: California Hospital Assessment and Reporting Taskforce

Are worst performers by administrative data graded similarly by surveillance data?

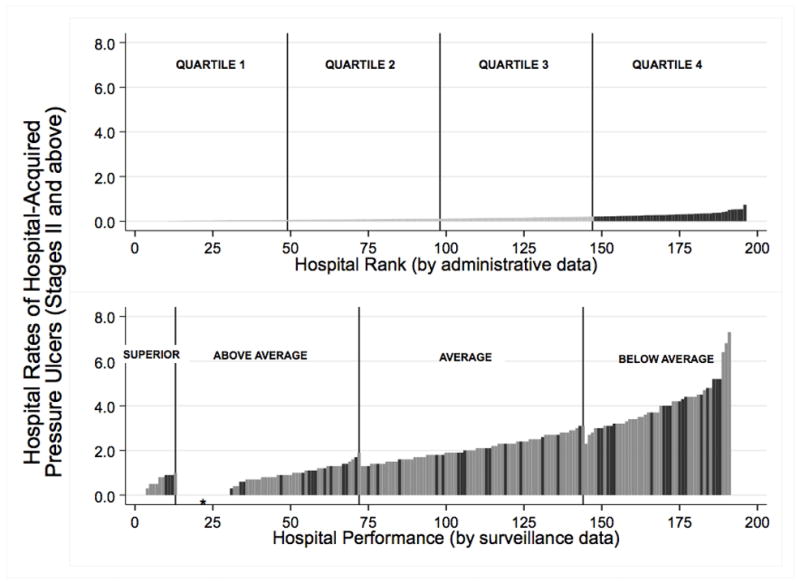

As illustrated in Figure 3 (top), to identify the hospitals interpreted as worst performers by administrative data, we ranked hospitals by their administrative HAPU2+ rates and divided the hospital rank list into 4 performance quartiles, ranging from quartile 1 (lower rates, “best performers”) to quartile 4 (highest rates, “worst performers”). Black bars in the top of Figure 3 highlight these 49 “worst performer” hospitals in quartile 4. Then, we assessed the grades assigned by the surveillance dataset to these “worst performer” hospitals by administrative data. As illustrated in Figure 3 (bottom), these “worst performer” hospitals by administrative data rates (highlighted by black bars) were assigned grades over the entire performance spectrum. Of the 49 hospitals with HAPU2+ rates from administrative data in the highest (worst) quartile, the surveillance dataset assigned these hospitals performance grades of “Superior” for 3 hospitals, “Above Average” for 14 hospitals, “Average” for 15 hospitals, and “Below Average” for 17 hospitals.
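The grade distribution among the 49 worst-quartile hospitals can be restated as shares with a few lines of arithmetic. The counts come from the text above; the variable names are illustrative only.

```python
# Surveillance grades of the 49 hospitals in the worst administrative quartile.
grade_counts = {
    "Superior": 3,
    "Above Average": 14,
    "Average": 15,
    "Below Average": 17,
}

total = sum(grade_counts.values())
shares = {grade: round(100 * count / total, 1) for grade, count in grade_counts.items()}
average_or_better = total - grade_counts["Below Average"]

for grade, pct in shares.items():
    print(f"{grade}: {pct}%")
print(f"Average or better: {average_or_better} of {total}")
```

The counts sum to 49, so 32 of these hospitals were graded “Average” or better by surveillance data, and about 35% were graded “Below Average”.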

Figure 3.

Relative performance of hospitals according to administrative data (top) and surveillance data (bottom)

(Top) Hospitals were ranked by their administrative HAPU2+ rates and divided into 4 performance quartiles, ranging from quartile 1 (lower rates, “best performers”) to quartile 4 (highest rates, “worst performers”). Black bars highlight the 49 “worst performer” hospitals in quartile 4. (Bottom) The grades assigned by the surveillance dataset to the same 49 “worst performer” hospitals by administrative data are shown by black bars again. These “worst performer” hospitals by administrative data rates were assigned grades over the entire performance spectrum, including several in the “Superior” and “Above Average” categories. Only 17 (35%) of 49 hospitals in the worst quartile by administrative data were graded as “Below Average” by surveillance data. Note that 5 hospitals did not receive a performance grade by the surveillance method because they had insufficient data. These 5 hospitals are excluded from the bottom graph. The surveillance rates may decrease from left to right as a result of the grading method, as explained in Appendix Table 6 and Appendix Figure.

*1 hospital was identified as being among the worst quartile by administrative data but had a zero rate by surveillance data.

DISCUSSION

The most important finding from this study is that hospital grades as worse or better performers regarding hospital-acquired pressure ulcers can be very different whether assessed by administrative data (as an incidence rate) or surveillance data (as a point-prevalence rate). There was very little correlation between each individual hospital’s HAPU2+ rates from administrative data and surveillance data. This raises concerns about the use of administrative data alone for comparing hospitals by HAPU rates for public reporting or financial penalty.

As expected from differences between incidence and prevalence measures, hospital incidence HAPU2+ rates from administrative data were lower than hospital point-prevalence HAPU2+ rates from surveillance data. However, we did not expect that the mean surveillance HAPU2+ rate (2.0%) would be more than 10-fold higher than the mean administrative HAPU2+ rate (0.15%). By literature review (1, 31–34), most studies reported incidence of HAPUs including all stages and point-prevalence of pressure ulcers including both present-on-admission and hospital-acquired ulcers, with point-prevalence rates 2–6 times higher than the incidence rates. Because our study’s point-prevalence surveillance measure included only HAPU2+ ulcers in patients who were not admitted with a present-on-admission ulcer, we expected a smaller difference between the incidence and point-prevalence measures. Reported rates of HAPU2+ incidence in acute care vary widely, from 0.4% to 12.9% (35), yet are higher than the incidence found in this study, suggesting under-reporting of HAPUs in administrative data (suspected as a consequence of administrative data collection requirements, described below). A 2009 study (33) reported a HAPU2+ point-prevalence rate of 3.1%, similar to the rates seen in this study using surveillance data.

This study illustrates the limitations of incidence rates from administrative data for measuring HAPUs, which stem from how administrative data are generated (as similarly demonstrated for catheter-associated urinary tract infection rates (14, 36, 37)). Hospital coders are required to collect diagnoses for claims only from documentation by “providers” (i.e., physicians, physician assistants, nurse practitioners); coders can obtain pressure ulcer stage from nurse and wound specialist notes, yet can only list the pressure ulcer as a diagnosis if it is supported by provider notes (12, 13). Physicians have limited training in assessing and documenting pressure ulcers, and physician documentation is likely focused on the diagnoses that led to or continue to justify hospitalization (rarely pressure ulcers). Even advanced ulcers are often not documented in administrative data as diagnoses (1, 38). Physicians also may not comment on pressure ulcers that do not require wound care beyond that provided by the bedside nurse (such as stage I–II ulcers or closed deep tissue injuries).

In contrast, CALNOC point-prevalence surveillance data are collected using a standardized method (20) requiring dedicated skin exams and medical record review by examiners trained to detect and stage all pressure ulcers. Although the CALNOC data collection is more resource and time-intensive than administrative data generation, a periodic evaluation such as performed in California may be reasonable for generating more valid data than administrative data for comparing hospital performance.

Limitations of this study include our inability to apply all clinical exclusion criteria for the surveillance dataset to administrative data. Yet, had we been able to apply all these exclusions (such as contraindications to turn, increasing pressure ulcer risk), this could have yielded even lower rates from the administrative data, resulting in an even greater difference in HAPU2+ rates generated from the two sources. Point-prevalence surveillance data collection is biased to capture more data from patients with prolonged length-of-stay (31), which could increase rates in surveillance data compared to administrative data. Only 1 year of post-policy data (2009) was evaluated; the current poor correlation between administrative and surveillance data may improve with time and limit these findings’ generalizability.

We acknowledge that gaming of diagnosis codes (e.g., not listing hospital-acquired pressure ulcers as diagnoses on discharge data to avoid public reporting, or incorrectly describing hospital-acquired pressure ulcers as present-on-admission) is a potentially important unintended response to value-based purchasing programs that use administrative data to assess, compare and penalize hospitals by complication rates. Yet, in the time period of this study (2009), although hospitals could not receive additional payment for hospital-acquired pressure ulcers, there was little incentive to game HAPU rates in administrative data: public reporting of HAPU rates from administrative data on Hospital Compare did not occur until 2011, and there were no financial penalties for hospital HAPU rates from administrative data. As these value-based programs evolve, assessing for systematic changes in hospital coding patterns in administrative data to reduce financial penalty or public reporting will be important to detect potential gaming. However, we suspect that the complex rules regarding documentation and coding of hospital-acquired pressure ulcers (12, 13) and poor documentation of pressure ulcers by providers are more likely than gaming to explain why HAPU rates were low in administrative data.

This study provides an overall assessment of the validity of the administrative discharge data used to generate measures of hospital-acquired pressure ulcers; it does not assess the appropriateness of the inclusion and exclusion criteria anticipated to be applied to administrative data to generate the recently proposed AHRQ PSI 3 measure for comparing hospitals by risk-adjusted rates of potentially preventable hospital-acquired pressure ulcers. However, AHRQ PSI 3 focuses on more severe pressure ulcers (stages III and above), which are much rarer than stage II HAPUs (as detailed in Appendix Table 3), and it excludes several patient groups with increased rates of HAPUs, such as patients with paralysis or transfers from outside hospitals (as summarized in the Table). The greater rarity of the HAPU events measured using AHRQ PSI 3 is therefore anticipated to influence the reliability of this measure as a quality indicator.

One potential alternative dataset to administrative data for comparing hospitals by HAPU rates is the Medicare Patient Safety Monitoring System (MPSMS) data (39), generated from a more extensive retrospective medical record review (including nursing notes) of a sample of Medicare fee-for-service patients to detect HACs including HAPUs. The MPSMS dataset identified a nationwide all-stage HAPU rate of 4.5% of Medicare patients developing ≥1 HAPU (40), compared to an all-stage HAPU rate of 0.32% for Medicare patients in our statewide administrative data. Yet, the MPSMS remains dependent on how well HAPUs are documented in the medical record.

In summary, different hospital performance scores in combination with very large differences between the HAPU rates by administrative and surveillance data suggest that using administrative data for comparing hospitals for public reporting and financial penalty regarding HAPUs may be inappropriate. Using administrative data has potential for unfairly penalizing hospitals because hospitals with higher HAPU rates may simply do a better job in documenting skin exams and pressure ulcers in provider notes used by coders to generate administrative data.

Acknowledgments

PRIMARY FUNDING SOURCE: AHRQ

The authors thank Andrew Hickner, MSI, for providing assistance with references and manuscript editing. The authors appreciate the insight provided by Gwendolyn Blackford, BS, RHIA, about processes used and regulations followed by hospital coders while assigning diagnosis codes.

The pressure ulcer surveillance data as reported on-line by CalHospitalCompare was obtained with application, permission, and data use instructions of the executive committee of the California Hospital Assessment and Reporting Taskforce (CHART).

The study was funded by grant 1K08-HS019767-01 (Dr. Meddings) from the Agency for Healthcare Research and Quality. The authors were also supported by the award 1R01-0HS018344-01A1 (Dr. McMahon). Dr. Meddings is also a recipient of the National Institutes of Health Clinical Loan Repayment Program for 2009 to 2013.

Footnotes

Prior Presentations: Presented by oral presentation at the 2012 Society of General Internal Medicine 34th Annual Meeting in Orlando, Florida, and by poster presentation at the AcademyHealth 2012 Annual Research Meeting in Orlando, Florida.

Disclosures/Funding: AHRQ

References

- 1. Pieper B, with the National Pressure Ulcer Advisory Panel (NPUAP). Pressure Ulcers: prevalence, incidence, and implications for the future. 2nd ed. Washington, DC: NPUAP; 2012.

- 2. Pieper B, Langemo D, Cuddigan J. Pressure ulcer pain: a systematic literature review and National Pressure Ulcer Advisory Panel white paper. Ostomy Wound Manage. 2009;55:16–31.

- 3. Beckrich K, Aronovitch SA. Hospital-acquired pressure ulcers: a comparison of costs in medical vs. surgical patients. Nurs Econ. 1999;17:263–71.

- 4. Berlowitz D, Lukas CV, Parker V, Niederhauser A, Silver J, Logan C, et al. Preventing Pressure Ulcers in Hospitals: A Toolkit for Improving Quality of Care. Rockville, MD: Agency for Healthcare Research and Quality; April 2011. AHRQ Publication No. 11-0053-EF. Accessed at http://www.ahrq.gov/research/ltc/pressureulcertoolkit/putoolkit.pdf on 22 January 2013.

- 5. Lyder CH, Preston J, Grady JN, Scinto J, Allman R, Bergstrom N, et al. Quality of care for hospitalized Medicare patients at risk for pressure ulcers. Arch Intern Med. 2001;161:1549–54. doi: 10.1001/archinte.161.12.1549.

- 6.Brem H, Maggi J, Nierman D, Rolnitzky L, Bell D, Rennert F, et al. High cost of stage IV pressure ulcers. Am J Surg. 2010;200:473–7. doi: 10.1016/j.amjsurg.2009.12.021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Black J, Edsberg LE, Baharestani MM, Langemo D, Goldberg M, McNichol L, et al. Pressure ulcers: avoidable or unavoidable? Results of the National Pressure Ulcer Advisory Panel Consensus Conference. Ostomy Wound Manage. 2011;57:24–37. [PubMed] [Google Scholar]

- 8. [Accessed at on 22 January 2013.];The Patient Protection and Affordable Care Act, Section 3008: Payment adjustment for conditions acquired in hospitals. Pub L No. 111–148, 124 Stat 122. http://www.gpo.gov/fdsys/pkg/PLAW-111pub148/pdf/PLAW-111publ148.pdf.

- 9.Centers for Medicare and Medicaid Services (CMS), HHS. Medicare program; changes to the hospital inpatient prospective payment systems and fiscal year 2008 rates. Fed Regist. 2007;72:47129–8175. [PubMed] [Google Scholar]

- 10.Centers for Medicare and Medicaid Services (CMS), HHS. Medicare program; changes to the hospital inpatient prospective payment systems and fiscal year 2009 rates. Fed Regist. 2008;73:48473–91. [PubMed] [Google Scholar]

- 11. Centers for Medicare and Medicaid Services (CMS), HHS. Medicare program; hospital inpatient prospective payment systems for acute care hospitals and the long term care hospital prospective payment system and proposed fiscal year 2014 rates. Fed Regist. 2013;78:27622–35.

- 12. Centers for Medicare & Medicaid Services (CMS), National Center for Health Statistics (NCHS). ICD-9-CM Official Guidelines for Coding and Reporting. Effective 1 October 2008 (Fiscal Year 2009). http://www.ama-assn.org/resources/doc/cpt/icd9cm_coding_guidelines_08_09_full.pdf. Accessed 29 January 2013.

- 13. Centers for Medicare & Medicaid Services (CMS), National Center for Health Statistics (NCHS). ICD-9-CM Official Guidelines for Coding and Reporting. Effective 1 October 2009 (Fiscal Year 2010). http://www.cdc.gov/nchs/data/icd9/icdguide09.pdf. Accessed 29 January 2013.

- 14. Snow C, Holtzman L, Waters H, McCall N, Halpern M, Newman L, et al. Accuracy of coding in the hospital-acquired conditions-present on admission program final report. Research Triangle Park, NC: RTI International; 2012. http://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/HospitalAcqCond/Downloads/Accuracy-of-coding-Final-Report.pdf. Accessed 20 May 2013.

- 15. Centers for Medicare & Medicaid Services (CMS), and the U.S. Department of Health & Human Services (HHS). Medicare’s Hospital Compare website measures for readmissions, complications and deaths, including hospital-acquired complications. http://www.medicare.gov/HospitalCompare/About/HOSInfo/RCD.aspx. Accessed 22 January 2013.

- 16. AHRQ Quality Indicators, Patient Safety Indicators #3, Technical Specifications, Pressure Ulcer Rate. http://www.qualityindicators.ahrq.gov/Downloads/Modules/PSI/V44/TechSpecs/PSI03_Pressure_Ulcer_Rate.pdf. Accessed 10 May 2013.

- 17. Healthcare Cost and Utilization Project (HCUP). Overview and Description of State Inpatient Databases (SID). Rockville, MD: Agency for Healthcare Research and Quality; Jan 2013. http://www.hcup-us.ahrq.gov/sidoverview.jsp. Accessed 22 January 2013.

- 18. Healthcare Cost and Utilization Project (HCUP). HCUP Quality Control Procedures. Rockville, MD: Agency for Healthcare Research and Quality; Sep 2008. http://www.hcup-us.ahrq.gov/db/quality.jsp. Accessed 22 January 2013.

- 19. American Hospital Association (AHA). Data Collection Methods. Health Forum, LLC; 2012. http://www.ahadataviewer.com/about/data. Accessed 22 January 2013.

- 20. Collaborative Alliance for Nursing Outcomes (CALNOC). Codebook Part II: Data Capture and Submission, Adult Acute Care Version: January 1, 2010. CalNOC Pressure Ulcer Prevalence Study Overview. 23–33. http://scientificplanningcommittee.wikispaces.com/file/view/Codebook_2010+Part+II+Site+v.1.pdf. Accessed 22 January 2013.

- 21. Stotts NA, Brown DS, Donaldson NE, Aydin C, Fridman M. Eliminating hospital-acquired pressure ulcers: within our reach. Adv Skin Wound Care. 2013;26:13–8. doi: 10.1097/01.ASW.0000425935.94874.41.

- 22. California HealthCare Foundation, the University of California at San Francisco Philip R. Lee Institute for Health Policy Studies, and the California Hospitals Assessment and Reporting Taskforce (CHART). CalHospitalCompare Patient Safety Measures, including Hospital-Acquired Pressure Ulcers. http://www.calhospitalcompare.org/about-us/about-the-ratings.aspx and http://www.calhospitalcompare.org/resources-and-tools/why-quality-matters/how-we-rate-quality-on-this-website.aspx. Accessed 29 January 2013.

- 23. California HealthCare Foundation. California Hospital Assessment and Reporting Taskforce (CHART) Overview. http://www.chcf.org/topics/hospitals/index.cfm?itemID=111065&subsection=chart. Accessed 22 January 2013.

- 24. Collaborative Alliance for Nursing Outcomes (CALNOC). Pressure Ulcers and their Assessment: A CALNOC Tutorial on Pressure Ulcers and their Assessment. https://http://www.secure-calnoc.org/TUTORIAL_AND_PUBLIC_FILES/Pressure_Ulcer_Tutorial/Introduction.htm. Accessed 22 January 2013.

- 25. Aydin C, Bolton L, Donaldson N, Brown D, Buffum M, Elashoff J, et al. Creating and analyzing a statewide nursing quality measurement database. J Nurs Scholarsh. 2004;36:371–8. doi: 10.1111/j.1547-5069.2004.04066.x.

- 26. Aydin CE, Bolton LB, Donaldson N, Brown SD, Mukerji A. Beyond nursing quality measurement: the nation’s first regional nursing virtual dashboard. In: Henriksen K, Battles JB, Keyes MA, Grady ML, editors. Advances in Patient Safety: New Directions and Alternative Approaches (Vol. 1: Assessment). Rockville, MD: Agency for Healthcare Research and Quality; 2008. http://www.ahrq.gov/downloads/pub/advances2/vol1/Advances-Aydin_2.pdf. Accessed 22 January 2013.

- 27. Collaborative Alliance for Nursing Outcomes (CALNOC). Frequently Asked Questions. http://www.calnoc.org/displaycommon.cfm?an=1&subarticlenbr=15. Accessed 22 January 2013.

- 28. Centers for Medicare and Medicaid Services (CMS), HHS. Medicaid program; payment adjustment for provider-preventable conditions including health care-acquired conditions. Fed Regist. 2011;76:32816–38.

- 29. Blue Cross and Blue Shield announces system-wide payment policy for “never events”. Washington, DC: Blue Cross Blue Shield Association; Press release: 26 February 2010.

- 30. Suess E, Trumbo B. Introduction to Probability Simulation and Gibbs Sampling with R. New York, NY: Springer Science+Business Media, LLC; 2010.

- 31. Stausberg J, Kroger K, Maier I, Schneider J, Neibel W. Pressure ulcers in secondary care: incidence, prevalence, and relevance. Adv Skin Wound Care. 2005;18:140–5. doi: 10.1097/00129334-200504000-00011.

- 32. Jenkins ML, O’Neal E. Pressure ulcer prevalence and incidence in acute care. Adv Skin Wound Care. 2010;23:556–9. doi: 10.1097/01.ASW.0000391184.43845.c1.

- 33. VanGilder C, Amlung S, Harrison P, Meyer S. Results of the 2008–2009 International Pressure Ulcer Prevalence Survey and a 3-year, acute care, unit specific analysis. Ostomy Wound Manage. 2009;55:39–45.

- 34. Whittington KT, Briones R. National prevalence and incidence study: 6 year sequential acute care data. Adv Skin Wound Care. 2004;17:490–4. doi: 10.1097/00129334-200411000-00016.

- 35. Cuddigan J, Berlowitz D, Ayello E. Pressure ulcers in America: prevalence, incidence, and implications for the future: an executive summary of the National Pressure Ulcer Advisory Panel monograph. Adv Skin Wound Care. 2001;14:208–15. doi: 10.1097/00129334-200107000-00015.

- 36. Meddings J, Saint S, McMahon LF Jr. Hospital-acquired catheter-associated urinary tract infection: documentation and coding issues may reduce financial impact of Medicare’s new payment policy. Infect Control Hosp Epidemiol. 2010;31:627–33. doi: 10.1086/652523.

- 37. Meddings JA, Reichert H, Rogers MA, Saint S, Stephansky J, McMahon LF. Effect of nonpayment for hospital-acquired, catheter-associated urinary tract infection: a statewide analysis. Ann Intern Med. 2012;157:305–12. doi: 10.7326/0003-4819-157-5-201209040-00003.

- 38. Berlowitz DR, Brand HK, Perkins C. Geriatric syndromes as outcome measures of hospital care: can administrative data be used? J Am Geriatr Soc. 1999;47:692–6. doi: 10.1111/j.1532-5415.1999.tb01591.x.

- 39. Qualidigm. Medicare Patient Safety Monitoring System (MPSMS) Annual Report of 2011 Data: Overview. Dec 2012. http://www.qualidigm.org/wp-content/uploads/2012/02/MPSMS-Overview-Final-Report.pdf. Accessed 22 January 2013.

- 40. Lyder CH, Wang Y, Metersky M, Curry M, Kliman R, Verzier NR, et al. Hospital-acquired pressure ulcers: results from the national Medicare Patient Safety Monitoring System study. J Am Geriatr Soc. 2012;60:1603–8. doi: 10.1111/j.1532-5415.2012.04106.x.
