Abstract

Tracking progress toward the goal of preparedness for public health emergencies requires a foundation in evidence derived both from scientific inquiry and from preparedness officials and professionals. This article proposes a conceptual model for this task from the perspective of the Centers for Disease Control and Prevention–funded Preparedness and Emergency Response Research Centers. The necessary data capture the areas of responsibility of not only preparedness professionals but also legislative and executive branch officials. These data should meet the criteria of geographic specificity, availability in standardized and reliable measures, parameterization as quantitative values or qualitative distinctions, and content validity. The technical challenges inherent in preparedness tracking are best resolved through consultation with the jurisdictions and communities whose preparedness is at issue.

Keywords: emergency preparedness, national health security

For the past decade, states, territories, and major metropolitan jurisdictions have received federal funding to improve public health preparedness (PHP) for emergencies and disasters. Tracking progress toward that goal has become a national policy priority. Under a cooperative agreement with the Centers for Disease Control and Prevention’s (CDC’s) Office of Public Health Preparedness and Response, the Association of State and Territorial Health Officials is developing a measure of health security and preparedness at the national and state levels. This National Health Security Preparedness Index (NHSPI) will become a summary measure that communicates the level of health security preparedness across state, metropolitan, territorial, and tribal jurisdictions receiving federal PHP funding.1

When fully designed and implemented, the NHSPI can become a tool both for measuring progress in preparedness and for maintaining accountability for federal preparedness funding. Among the challenges to achieving this potential is establishing a foundation in evidence derived both from scientific inquiry (including literature reviews and primary scholarship) and from vetting the NHSPI processes and implementation with officials and professionals.

The coauthors of this article represent the Preparedness and Emergency Response Research Centers, funded by the CDC and based at member schools of the Association of Schools of Public Health. All 9 Preparedness and Emergency Response Research Centers received an invitation to advise the NHSPI project under the terms of a CDC cooperative agreement with the Association of Schools of Public Health. Principal investigators and other faculty and staff members from 8 Preparedness and Emergency Response Research Centers participated by reviewing the relevant published literature2 and assessing the NHSPI’s scientific and practical challenges.3 Drawing from that work, the present authors focus this article on one particularly important issue: the availability and quality of data needed to measure preparedness for the NHSPI and the resulting technical challenges associated with index development.

Conceptual Framework for Measuring Governmental Preparedness

The attributes selected for NHSPI measurement should be within the control of those whose decisions and activities determine preparedness. Otherwise, the potentially positive impact of tracking preparedness across jurisdictions may be lost. Three domains, abbreviated by the acronym “LEO,” emphasize that the locus of control varies across many sectors of government. Legal and policy determinants (L) describe the powers, duties, and constraints on public health agencies as well as their organizational and governance structures, all of which are responsibilities of elected officials and agency regulators. This domain also includes performance standards, emergency plans, and response protocols, which are responsibilities of emergency public health professionals. Economic and resource determinants (E) include assets such as budgets, facilities, equipment, and personnel (in both numbers and qualifications). Legislators and chief executives determine budgets. Executive branch officials authorize property acquisitions, establish personnel categories and numbers, and authorize purchases. Emergency public health officials specify needs for personnel, facilities, equipment, and supplies. Operational determinants (O) describe the processes, work flows, and actions that constitute performance and determine its quality. Emergency public health professionals carry out programs, conduct exercises and drills, and coordinate interagency activities.

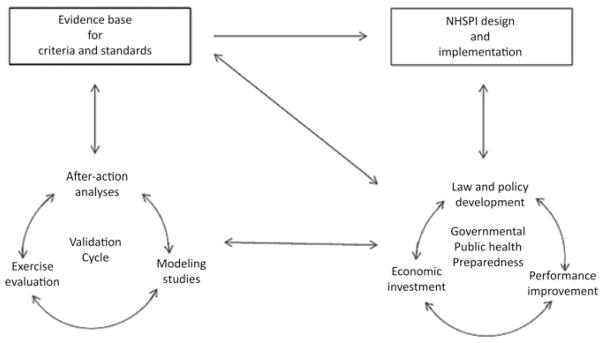

The Figure illustrates the LEO domains within a conceptual model. It shows how measuring governmental PHP (for example, through the NHSPI) can enhance preparedness through mutually reinforcing processes.

FIGURE 1. Conceptual Model for Measuring Governmental Preparedness.

Abbreviation: NHSPI, National Health Security Preparedness Index.

Governmental PHP represents the collective characteristics, activities, and attributes that determine the quality of response to public health emergencies. From the standpoint of accountability for federal investments, this preparedness depends on the LEO determinants: the laws and policies that define its roles and responsibilities, the economic resources available, and the quality of operational performance.

NHSPI design and implementation depend on interfaces with governmental public health agencies. The agencies not only provide information and data for the NHSPI but also direct its attention to their needs and priorities. As a system of measurement and accountability, the NHSPI can suggest how to improve laws and policies, how to better target public investments, and how to better ensure performance quality. Over time, observations of whether and how these efforts build preparedness may influence and refine the NHSPI design.

A validation cycle analyzes governmental PHP through data and information generated from public health emergency experience, evaluation exercises, and modeling studies. Each of these sources produces information and insight useful for the others: actual emergencies generate outcomes in terms of health and community recovery; evaluation studies of exercises and drills can help determine which metrics are associated with desired outcomes; and modeling studies test questions and problems that are not amenable to empirical research methods. The validation cycle builds an evidence base that improves the NHSPI design, informs governmental PHP, and feeds back into the validation cycle itself. This implies that NHSPI model design is not a one-time task but an ongoing one for as long as the index remains in use.

Examples of LEO Data

A review of governmental and research literature shows that PHP attributes corresponding to the LEO domains are commonly recognized. Nelson et al4 proposed 16 “key elements” of preparedness: 2 were legal or policy-related (plans for mass health care and liability barriers); 6 were economic (epidemiology systems, laboratory systems, supply chain resources, workers and volunteers, leaders, and financial systems); and 8 were operational (health risk assessment, assignment of roles and responsibilities, incident command, public engagement, countermeasures and mitigation strategies, testing operational capabilities, and performance management).

The CDC’s annual preparedness reports contain standardized data for preparedness determinants in all 3 domains.5 These include legal/policy data (status of continuity of operations plans), economic data (numbers of reference laboratories and of CHEMPACK nerve agent antidote containers), and operational data (receipt and investigation of urgent disease reports and year-round surveillance for seasonal influenza). Several reports also include a technical assistance review rating of the operational capability to distribute supplies and materiel from the Strategic National Stockpile.

The Trust for America’s Health series of annual “readiness” assessments uses criteria that include laws and legal authorizations; economic resources, including funding streams, laboratories, communication systems, and personnel; and many operational and performance capabilities.6

Numerous studies provide further examples. Barbisch and Koenig used the terms staff, structures, and stuff, with definitions that place them within the economic domain, and the term systems, which included both policies and procedures.7 Federal bioterrorism funding was found to be related to the preparedness level of local health departments, as assessed by a review of legal authorities and preparedness plans, whether an emergency preparedness coordinator was on staff, participation in drills, assessment of staff competencies, and staff preparedness training.8 Laws have been shown to define the scope of practice for public health professionals and volunteers during emergencies.9 During the 1918–1919 influenza pandemic, the use of legally authorized social distancing measures (such as school closures, isolation, quarantine, and public gathering bans) significantly influenced the variation in excess death rates among US cities.10

Data Requirements and Sources

The data for use in the NHSPI should meet 4 criteria: (1) geographic specificity for the states, metropolitan areas, and other localities of interest; (2) availability in measures that are standardized and reliable across jurisdictions and time periods; (3) standardization as quantitative values or qualitative distinctions; and (4) content validity relative to the determinants and outcomes of governmental PHP. Each of the LEO domains of preparedness determinants has existing data sets that meet these criteria to differing degrees.

Geographic specificity

The “data” for measuring legal and policy determinants of preparedness may exist as statutes, regulations, policies, plans, and even judicial decisions. Many of these sources are readily available and continuously updated in electronic format for all US jurisdictions.* Other legal and policy sources, including plans and protocols, may or may not be published, depending on the jurisdiction. Numerous economic data sets based on recent research describe the financing, facilities, equipment, and human resources available to states and localities. These include association surveys, governmental documents, and research reports. Reliable and geographically specific sources include surveys conducted by the National Association of County & City Health Officials and the Association of State and Territorial Health Officials, the Area Resource File, and the reports and Web sites of governmental agencies and private associations. Operational data describe the performance of such activities as timely communication among agencies and efficient distribution of mass prophylaxis. Such measures allow for ranking and comparison among states and metropolitan jurisdictions, as is done by the CDC’s annual preparedness reports5 and its National Public Health Performance Standards Program.11

Reliability

Reliability is a measure of consistency and accuracy in the acquisition of data. For the legal and policy domain, statutes, regulations, formal policies, and plans are official statements and are thus “reliable” in the sense of being consistent and accurate sources of data. The sources for economic data are rich but inconsistent across jurisdictions, choice of metrics, and points in time. For example, Trust for America’s Health reports over a 5-year period used at least 36 different measures of preparedness, including many in the economic domain, but only a few measures were repeated in more than 1 or 2 annual reports.6 Operational data sets for public health agencies exist in the form of self-reported after-action reports and of research studies, which often use nonstandardized measures for a few select locations. The best available operational data for 50 states and 4 metropolitan areas come from the CDC technical assistance review reports and PHP capability assessments.5 Beginning in 2011, the reported metrics have pertained mainly to PHP capabilities. These data are likely to be reliable because all CDC reviewers use the same measurement procedures, but reliability tests have not been published.
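Because reliability tests for the CDC reviews have not been published, the following is only a sketch of one standard way such a test could be run: two reviewers independently rate the same jurisdictions, and their agreement beyond chance is summarized with Cohen's kappa. The 1–5 rating scale, the function name `cohens_kappa`, and the ratings themselves are hypothetical and do not describe any CDC procedure.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who rated the same items on a categorical scale."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty lists of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given the same rating by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement implied by each rater's marginal rating frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_expected == 1.0:
        # Both raters used one identical category for every item.
        return 1.0
    return (p_observed - p_expected) / (1.0 - p_expected)


# Hypothetical 1-5 capability ratings of the same 8 jurisdictions by two reviewers.
reviewer_1 = [4, 3, 5, 2, 4, 3, 1, 4]
reviewer_2 = [4, 3, 4, 2, 4, 2, 1, 4]
print(round(cohens_kappa(reviewer_1, reviewer_2), 2))
```

Values near 1 indicate agreement well beyond chance. For ordinal ratings such as these, a weighted kappa that gives partial credit for near misses may be a better choice.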

Standardization

This criterion means that preparedness attributes should be measured in relative values that are comparable across jurisdictions. All of the language-based legal and policy sources are subject to interpretation, and interpretations are typically expressed not as numerical values but as categories. For example, Hodge et al12 classified school closure laws on the basis of which governmental departments had decision-making authority, and Potter et al13 compared such laws on the basis of whether statutes delegated centralized or local authority and whether plans specified closure and reopening criteria. Economic resources are measured and reported as continuous values or amounts, such as dollars, full-time equivalent employees, square footage of space, and quantity of supplies. Operational attributes may be measured either quantitatively (eg, elapsed time to perform a task) or qualitatively (eg, a Likert scale based on observer assessment).
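As a sketch of how categorical, continuous, and timed measures might be placed on a common scale, the following assumes min-max rescaling to the 0–1 range across jurisdictions and a hypothetical ordinal coding of school closure plan content. The jurisdictions, values, and coding scheme are invented for illustration; rescaling is only one of several options (z-scores or percentile ranks are common alternatives).

```python
def min_max_scale(values, higher_is_better=True):
    """Rescale raw values to 0-1 across jurisdictions (1 = best observed value)."""
    lo, hi = min(values.values()), max(values.values())
    if hi == lo:
        # No variation across jurisdictions: assign a neutral midpoint.
        return {j: 0.5 for j in values}
    scaled = {j: (v - lo) / (hi - lo) for j, v in values.items()}
    if not higher_is_better:
        # For measures such as elapsed time, smaller raw values are better.
        scaled = {j: 1.0 - s for j, s in scaled.items()}
    return scaled


# Hypothetical ordinal coding of a categorical legal/policy attribute.
PLAN_CODES = {
    "no closure criteria": 0.0,
    "closure criteria only": 0.5,
    "closure and reopening criteria": 1.0,
}

# Hypothetical raw measures for three jurisdictions.
staff_fte_per_100k = {"State A": 12.0, "State B": 8.0, "State C": 20.0}  # economic
hours_to_notify = {"State A": 2.0, "State B": 6.0, "State C": 4.0}       # operational
school_plan = {                                                           # legal/policy
    "State A": "closure criteria only",
    "State B": "closure and reopening criteria",
    "State C": "no closure criteria",
}

economic = min_max_scale(staff_fte_per_100k)
operational = min_max_scale(hours_to_notify, higher_is_better=False)
legal = {j: PLAN_CODES[category] for j, category in school_plan.items()}
for state in sorted(economic):
    print(state, legal[state], round(economic[state], 2), round(operational[state], 2))
```

The ordinal codes embed a judgment about which plan content is "better," so in practice such codings would themselves be candidates for review by the jurisdictions being measured.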

Validity

Validation is meant to ensure that a selected metric in fact measures the intended variable: in this case, governmental PHP. But because public health emergencies strike localities and populations in unique ways and at different times, observed outcomes may not be attributable to the preparedness attribute. For example, a count of influenza case fatalities might depend more on interpersonal contact patterns or availability of hospital ventilators than on how well a state health department distributed vaccines. Validation is therefore a particular challenge for the NHSPI.

Each LEO domain has unique characteristics that influence whether and how data might be validated. For legal and policy data—that is, statutes, regulations, policies, and protocols—validation studies are lacking, although a new focus of research on how laws affect health outcomes may yield useful methods and insights.14 In the economic domain, there is limited empirical evidence to support a causal correspondence between the amount of funding available to a public health agency and its effectiveness in implementing preparedness strategies.15 There appears to be only a modest correlation between a jurisdiction’s budget and its emergency planning performance.16 In the operational and performance domain, expert panel methods are typical,4 and empirically based validation is rare. For example, Potter et al17 documented that, among 116 outbreaks of 8 different infectious diseases reported over a 25-year period, none presented case incidence outcomes relative to the accepted chronological performance criteria for field epidemiology.

Across the domains, modeling studies can help address the validation challenge. Modeling methods include some that are mathematical and deterministic and others that are computational and stochastic. Mathematical algorithms can represent patterns discerned in previous events, such as transmission rates of influenza in past annual outbreaks. Computational simulations can test selected variables within a statistical range of possibilities, based on time, duration, place, severity, population vulnerability and behavior, and other factors. The Institute of Medicine recommended ways to strengthen modeling for infectious disease mitigation,18 and these may also apply to other types of emergency.
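The distinction between deterministic and stochastic approaches can be illustrated with a standard susceptible-infected-recovered (SIR) model. In this sketch, the deterministic version integrates the SIR equations with a simple Euler step and always returns the same final epidemic size, while a chain-binomial version draws random infections and recoveries each day, so repeated runs yield a range of outcomes. The parameter values are hypothetical, and the two versions are related but not identical models (one is continuous-time, the other discrete-time).

```python
import math
import random


def sir_deterministic(beta, gamma, n, i0, days, dt=0.1):
    """Euler integration of dS/dt = -beta*S*I/N, dI/dt = beta*S*I/N - gamma*I.

    Returns the final epidemic size (number ever infected) after `days`.
    """
    s, i = float(n - i0), float(i0)
    for _ in range(round(days / dt)):
        new_infections = beta * s * i / n * dt
        new_recoveries = gamma * i * dt
        s -= new_infections
        i += new_infections - new_recoveries
    return n - s


def sir_stochastic(beta, gamma, n, i0, days, rng):
    """Discrete-time chain-binomial SIR; each daily step draws random events.

    Returns the final epidemic size (number ever infected) after `days`.
    """
    s, i = n - i0, i0
    p_recover = 1.0 - math.exp(-gamma)
    for _ in range(days):
        # Each susceptible escapes infection today with probability exp(-beta*I/N).
        p_infect = 1.0 - math.exp(-beta * i / n)
        new_infections = sum(rng.random() < p_infect for _ in range(s))
        new_recoveries = sum(rng.random() < p_recover for _ in range(i))
        s -= new_infections
        i += new_infections - new_recoveries
    return n - s


# Hypothetical parameters: basic reproduction number beta/gamma = 3.
params = dict(beta=0.3, gamma=0.1, n=500, i0=5, days=200)
print("deterministic:", round(sir_deterministic(**params)))
rng = random.Random(2013)
sizes = [sir_stochastic(**params, rng=rng) for _ in range(50)]
print("stochastic: min", min(sizes), "max", max(sizes))
```

A single deterministic run summarizes a pattern; the spread of stochastic outcomes is what allows a simulation to test variables "within a statistical range of possibilities."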

Technical Challenges and Limitations

Because the LEO domains are interdependent, indicators should be selected so that measurements in one domain do not amplify or duplicate measurements in another. Legal powers may authorize or limit operations, as with due process requirements for ordering a quarantine or mandatory medical treatment. Policies control how tax revenues and grant funds may be spent on economic resources such as personnel and supplies. Economic resources determine how well operations are equipped. Preparedness professionals also compensate for deficits in a domain they cannot control through adjustments elsewhere: for example, if a jurisdiction lacks the economic resources to acquire a certain communication technology, its operational protocols may specify ways to make the best use of the available communication channels.
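One simple screen for this kind of duplication, offered here as an assumption rather than a method the NHSPI has adopted, is to compute correlations between candidate indicators from different LEO domains across the same jurisdictions and flag highly correlated pairs for expert review. Because the domains are interdependent, some correlation is expected, so a flag marks a candidate for discussion, not automatic removal. The indicator names, values, and the 0.9 threshold are hypothetical.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Root sums of squared deviations; their product normalizes the covariance.
    norm_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if norm_x == 0 or norm_y == 0:
        return 0.0  # correlation is undefined for a constant indicator; treat as unrelated
    return cov / (norm_x * norm_y)


def flag_cross_domain_overlap(indicators, threshold=0.9):
    """Return (name1, name2, r) for pairs from different LEO domains with |r| >= threshold.

    `indicators` maps name -> (domain, values), with values listed for the same
    jurisdictions in the same order.
    """
    flagged = []
    for (name1, (dom1, v1)), (name2, (dom2, v2)) in combinations(indicators.items(), 2):
        if dom1 == dom2:
            continue  # this screen only looks for overlap across domains
        r = pearson(v1, v2)
        if abs(r) >= threshold:
            flagged.append((name1, name2, round(r, 3)))
    return flagged


# Hypothetical indicator values for five jurisdictions.
indicators = {
    "preparedness_funding": ("E", [10, 20, 30, 40, 50]),
    "exercises_per_year": ("O", [2, 4, 6, 8, 10]),
    "has_coop_plan": ("L", [1, 0, 1, 0, 1]),
}
print(flag_cross_domain_overlap(indicators))
```

Here funding and exercise frequency move together perfectly, so counting both at full weight could amount to counting the same underlying resource twice.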

Furthermore, weighting preparedness measurements will involve difficult and, to some extent, subjective decisions, but weighting may be unavoidable. State, territorial, and local jurisdictions differ substantially in their preparedness attributes. In some meaningful part, differences in such measurable attributes as legislation, investment in facilities and equipment, and operational expertise arise from the frequency of hurricanes, wildfires, floods, or whatever other risks are locally or regionally prevalent. Weighting of measurements can account for such jurisdiction-specific risks, vulnerabilities, and priorities.
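The sensitivity of a composite index to weighting choices can be shown with a small weighted mean. In this hypothetical sketch, indicators tagged with a locally prevalent hazard have their base weight multiplied by an arbitrary factor of 2; the function name `composite_score`, the indicator names, scores, hazard tags, and multiplier are all invented for illustration.

```python
def composite_score(scores, base_weights, hazard_tags, local_hazards, risk_multiplier=2.0):
    """Weighted mean of 0-1 indicator scores for one jurisdiction.

    Indicators tagged with a hazard that is locally prevalent have their base
    weight multiplied by `risk_multiplier` (an arbitrary, hypothetical choice).
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for name, score in scores.items():
        weight = base_weights[name]
        if hazard_tags.get(name) in local_hazards:
            weight *= risk_multiplier
        weighted_sum += weight * score
        total_weight += weight
    return weighted_sum / total_weight


# Hypothetical indicator scores for one jurisdiction, all with base weight 1.
scores = {
    "hurricane_evacuation_plan": 0.9,
    "wildfire_smoke_protocol": 0.3,
    "lab_surge_capacity": 0.6,
}
base_weights = {name: 1.0 for name in scores}
hazard_tags = {"hurricane_evacuation_plan": "hurricane", "wildfire_smoke_protocol": "wildfire"}

scenarios = [("no hazard weighting", set()), ("hurricane-prone", {"hurricane"}), ("wildfire-prone", {"wildfire"})]
for label, hazards in scenarios:
    print(label, round(composite_score(scores, base_weights, hazard_tags, hazards), 3))
```

The same indicator scores yield different composite values depending on which hazards are treated as locally prevalent, which is one reason such weighting decisions call for consultation with the jurisdictions concerned.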

Other limitations of the proposed conceptual model are best resolved through consultation with the jurisdictions and communities whose preparedness is at issue. The model provides only for measuring preparedness of governmental public health agencies; it does not show the interplay of attributes of agencies with those of their communities and populations. The concept of “resiliency” captures the behaviors, social capital assets, and other factors that determine how well a community or population can recover from a public health emergency.19 The fact that a community’s overall resiliency places unique demands on its public health agency means that those responsible cannot exercise full control over an emergency response. A community’s vulnerable populations may have needs either invisible to planners or beyond their scope of duties and resources. Consider the lack of transportation for evacuating New Orleans’ lowest-income residents during Hurricane Katrina. Thus, improved ways to measure community resilience will necessarily include engagement of community representatives.

Conclusions

Measuring preparedness should direct attention to the appropriate centers of responsibility in a public health system. The proposed conceptual model incorporates evidence derived from research as well as practical experience and openness to quality improvement. The model uses attributes that include legal and policy factors, economic assets, and resource constraints, as well as operational capabilities and performance. Recognizing the wide distribution of authority and responsibility for preparedness among legislative and executive policy makers, executive agency officials, and emergency public health professionals, the model engages these stakeholders both to inform the selection and weighting of data and to address technical challenges in a balanced manner. Using an iterative approach to index design through cycles of validation, input from the jurisdictions subject to measurement, and periodic revision of indicators, the model takes advantage of cumulative experience that includes improved technical methods, observed policy changes, and rising performance standards.

Acknowledgments

Funding for the work presented in this article was provided by the Coordinating Office for Terrorism Preparedness and Emergency Response, Centers for Disease Control and Prevention (CDC). This article was supported by Cooperative Agreement no. 5U36CD300430 from the CDC. Its contents are solely the responsibility of the authors and do not necessarily reflect the official views of CDC. Additional funding was provided by the National Institute of General Medical Sciences MIDAS grant 1U54GM088491-01, which had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Footnotes

* An authoritative, continuously updated source for all federal, state, and local laws is LexisNexis, available by subscription.

The authors declare no conflicts of interest.

References

- 1. Steering Committee for the National Health Security Preparedness Index. Draft of March 8, 2012. Presented at: NHSPI Kickoff Meeting for the Model Design Workgroup; May 15, 2012; Arlington, VA.

- 2. Stoto MA, Piltch-Loeb R, Potter MA, et al. Annotated Bibliography of Research Resources for Measurement of Public Health Preparedness. Washington, DC: Association of Schools of Public Health; 2012. http://www.asph.org/UserFiles/Final%20Annotated%20Bibliography_No%20CDC%20logo.pdf. Accessed December 13, 2012.

- 3. Potter MA, Houck OC, Miner K, Shoaf K. The National Health Security Preparedness Index: Opportunity and Challenges. A White Paper by the CDC-Funded Preparedness and Emergency Response Research Centers. Prepared with sponsorship by the Office of Public Health Preparedness and Response, Centers for Disease Control and Prevention. Washington, DC: Association of Schools of Public Health; 2012. http://www.asph.org/UserFiles/NHSPI_White_Paper_11-26-12_FINAL-ALL.pdf. Accessed March 22, 2013.

- 4. Nelson C, Lurie N, Wasserman J, Zakowski S. Conceptualizing and defining public health emergency preparedness. Am J Public Health. 2007;97(suppl 1):S9–S11. doi:10.2105/AJPH.2007.114496.

- 5. Centers for Disease Control and Prevention, Coordinating Office of Terrorism Preparedness and Disaster Response. Public health preparedness reports. Published 2008–2012. http://www.cdc.gov/phpr/pubs-links/2008/index.htm. Accessed September 26, 2012.

- 6. Trust for America’s Health. Ready or not? Protecting the public’s health from diseases, disasters & bioterrorism. Published 2003–2011. http://healthyamericans.org/reports. Accessed September 25, 2012.

- 7. Barbisch DF, Koenig KL. Understanding surge capacity: essential elements. Acad Emerg Med. 2006;13:1098–1102. doi:10.1197/j.aem.2006.06.041.

- 8. Avery G, Zabriskie-Timmerman J. The impact of federal bioterrorism funding programs on local health department preparedness activities. Eval Health Prof. 2009;32(2):95–127. doi:10.1177/0163278709333151.

- 9. Hodge JG Jr, Mount JK, Reed JF. Scope of practice for public health professionals and volunteers. J Law Med Ethics. 2005;33(4 suppl):53–54.

- 10. Markel H, Lipman HB, Navarro JA, et al. Nonpharmaceutical interventions implemented by US cities during the 1918–1919 influenza pandemic. JAMA. 2007;298(6):644–654. doi:10.1001/jama.298.6.644.

- 11. Corso LC, Lenaway D, Beitsch LM, Landrum LB, Deutsch H. The National Public Health Performance Standards: driving quality improvement in public health systems. J Public Health Manag Pract. 2010;16(1):19–23. doi:10.1097/PHH.0b013e3181c02800.

- 12. Hodge J, Bhattacharya D, Gray J. Assessment of School Closure Law in Response to Pandemic Flu. The Centers for Law and the Public’s Health; 2008. http://www2a.cdc.gov/phlp/docs/Legal%20Preparedness%20for%20School%20Closures%20in%20Response%20to%20Pandemic%20Influenza.pdf. Accessed September 24, 2008.

- 13. Potter MA, Brown ST, Cooley PC, et al. School closure as an influenza mitigation strategy: how variations in legal authority and plan criteria can alter the impact. BMC Public Health. 2012;12:977. doi:10.1186/1471-2458-12-977.

- 14. Parker SG. Public Health Law Research: Making the Case for Laws That Improve Health. A Progress Report. Princeton, NJ: Robert Wood Johnson Foundation; 2012. http://www.rwjf.org/content/dam/farm/reports/program_results_reports/2012/rwjf73044. Accessed December 13, 2012.

- 15. Mays GP, McHugh MC, Shim K, et al. Institutional and economic determinants of public health system performance. Am J Public Health. 2006;96(3):523–531. doi:10.2105/AJPH.2005.064253.

- 16. Lindell MK, Whitney DH, Futch CJ, Clause CS. The local emergency planning committee: a better way to coordinate disaster planning. In: Sylves RT, Waugh WL Jr, eds. Disaster Management in the US and Canada: The Politics, Policymaking, Administration and Analysis of Emergency Management. Springfield, IL: Charles C Thomas Publishers; 1996:234–249.

- 17. Potter MA, Sweeney P, Iuliano AD, Allswede MP. The evidence base for performance standards in the detection & containment of infectious disease outbreaks. J Public Health Manag Pract. 2007;13(5):510–518. doi:10.1097/01.PHH.0000285205.40964.28.

- 18. Institute of Medicine, Committee on Modeling Community Containment for Pandemic Influenza, Board on Population Health and Public Health Practice. Modeling Community Containment for Pandemic Influenza: A Letter Report. Washington, DC: National Academies Press; 2006.

- 19. Aldrich DP. Building Resilience: Social Capital in Post-Disaster Recovery. Chicago, IL: University of Chicago Press; 2012.