Introduction

Inpatient consultations between generalists and specialty care providers are a setting in which poor information exchange between physicians can result in delayed diagnoses, inadequate follow-up, or duplication of services.1, 2 Unfortunately, subspecialty trainees typically do not receive formal instruction in how to provide an effective consultation.3 As a result, consultation notes vary in quality and often do not meet the needs of the referring provider or the patient.4

Enhancing the Quality of Consultation Notes

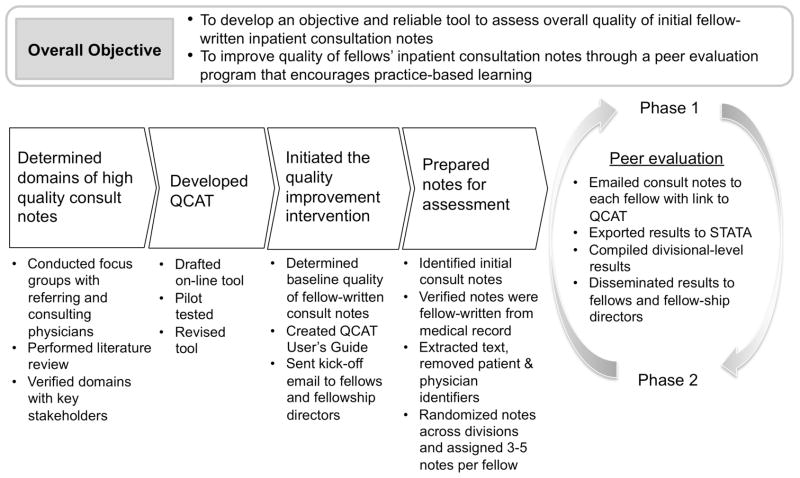

This initiative focused on developing a peer evaluation program that taught trainees the components of high-quality consultation notes and then engaged them in self-improvement through reflection on performance data and practice-based learning (Figure 1). The program comprised the development of a consultation assessment tool, followed by implementation of peer evaluation across our Department’s fellowship programs.

Figure 1.

Process map for “Improving Quality of Fellow-Written Consult Notes” project

Development of Quality of Consultation Measures

Development of consultation performance metrics began with a literature review, followed by focus groups with both referring physicians (attendings and housestaff) and consulting physicians (specialty fellows). The focus groups reviewed findings from the literature search, refined the list of potential metrics, and identified important domains not covered in the literature. Discussions focused specifically on the characteristics of effective consultation notes, their most important elements, and the practice of giving and receiving feedback on written consultations.

We identified five quality domains, consistent with prior literature5, 6: 1) Reason for consultation (specifying a consult question; explaining thought processes for conclusions); 2) Diagnostic plan (citing rationales for recommended labs or studies); 3) Therapeutic plan (listing medications with proper dose, route, schedule; discussing procedures and peri-procedural tasks); 4) Communication (documenting verbal discussions with providers; providing anticipatory guidance); and 5) Educational value (citing relevant articles; providing a well-developed differential diagnosis).

Development of Consultation Assessment Tool

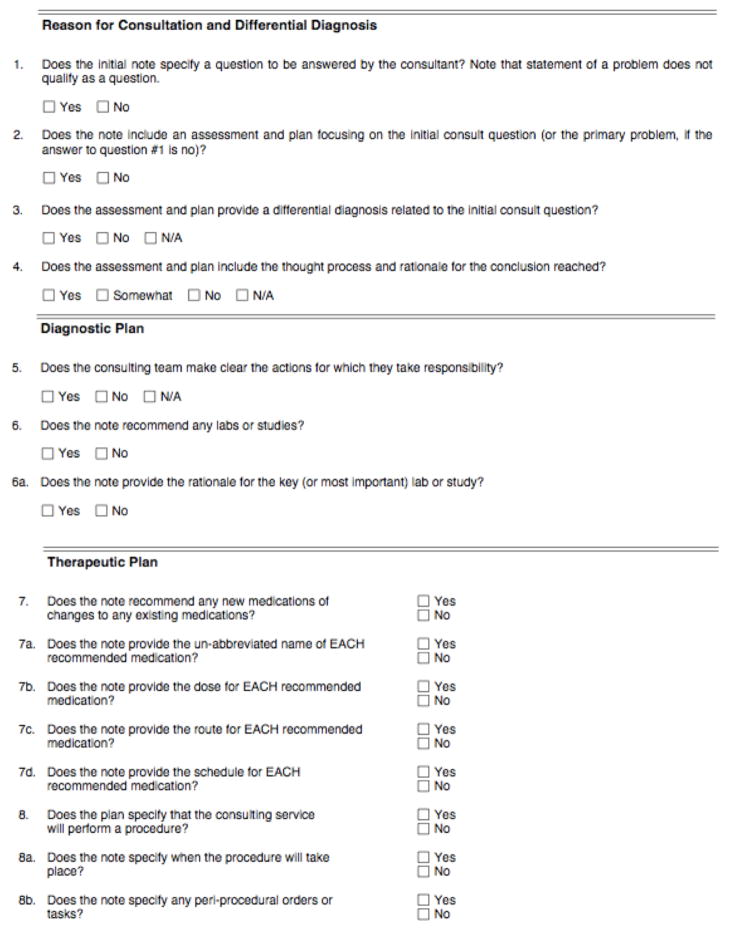

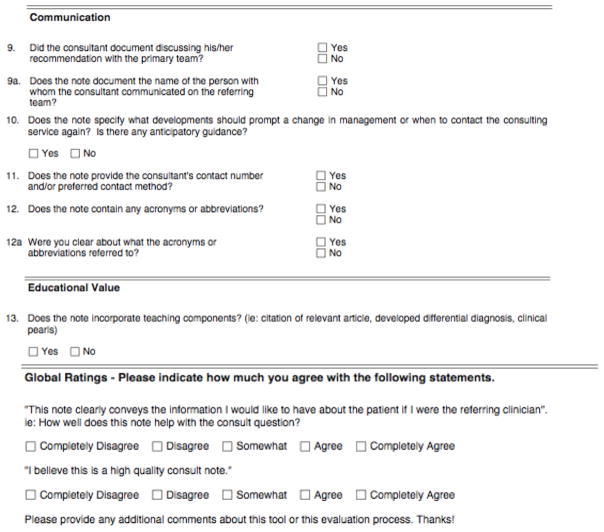

Using these domains, we developed an electronic Quality of Consultation Assessment Tool (QCAT) through an iterative process, refining it with input from key stakeholders (attending specialist physicians, fellowship directors, and Department physician leaders) to maximize objectivity and reproducibility. The final instrument, built with an online database technology7 for easy dissemination and use, comprised a 13-item checklist across the 5 equally weighted domains and two global measures of quality rated on 5-point Likert scales (Figure 2). A percentage score was calculated for each domain of each note. “Yes”, “Somewhat”, and “No” answers were awarded 5, 3, and 0 points, respectively. The numerator was the sum of these points, and the denominator was the total number of possible points after excluding items answered “Not Applicable”. Domain scores were then averaged to yield the total score for each consultation note.
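The scoring rule above can be made concrete with a short calculation. The sketch below is an illustration, not the QCAT implementation (which was built in an online database tool): it assumes each domain's answers arrive as a list of strings, that a domain whose items are all “Not Applicable” is skipped when averaging (the text does not say how such domains were handled), and it leaves out the two global Likert items, which are not part of the percentage score. The example note and its split of the 13 items across domains are hypothetical.

```python
from statistics import mean

# Point values described for QCAT checklist items; "Not Applicable"
# answers are dropped from both the numerator and the denominator.
POINTS = {"Yes": 5, "Somewhat": 3, "No": 0}
MAX_POINTS = POINTS["Yes"]


def domain_score(answers):
    """Percentage score for one domain of one note.

    Returns None when every item in the domain is "Not Applicable"
    (an assumption: the source does not say how such domains are handled).
    """
    scored = [a for a in answers if a != "Not Applicable"]
    if not scored:
        return None
    earned = sum(POINTS[a] for a in scored)
    possible = MAX_POINTS * len(scored)
    return 100 * earned / possible


def total_score(note):
    """Unweighted mean of the domain percentages for one note.

    `note` maps a domain name to the list of checklist answers in that domain.
    Domains with no applicable items are skipped (assumption).
    """
    scores = [domain_score(answers) for answers in note.values()]
    return mean(s for s in scores if s is not None)


# Hypothetical note; the split of the 13 items across domains is illustrative only.
example_note = {
    "Reason for consultation": ["Yes", "Somewhat"],          # 8/10  -> 80%
    "Diagnostic plan": ["Yes", "Not Applicable"],             # 5/5   -> 100%
    "Therapeutic plan": ["Somewhat", "No", "Yes"],            # 8/15  -> 53.3%
    "Communication": ["No", "No", "Somewhat"],                # 3/15  -> 20%
    "Educational value": ["Yes", "Somewhat", "No"],           # 8/15  -> 53.3%
}

print(round(total_score(example_note), 1))  # 61.3
```

Because each domain is reduced to a percentage before averaging, a domain with three items counts no more toward the total than a domain with two, which is what “equally weighted domains” implies.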

Figure 2.

Quality of Consultation Assessment Tool (QCAT)

Implementation of a peer evaluation program

Following several rounds of pilot testing, we established baseline quality scores by applying the QCAT to a random sample of inpatient fellow-written consultation notes. Notes were identified using billing data and confirmed via medical record review. Concurrently, we developed an electronic Users’ Guide to the QCAT that introduced the tool, explained how results would be tracked and disseminated, and provided the rationale for each QCAT quality measure. The Users’ Guide also provided sample consultation notes, with guidance on how to evaluate them critically and how they could be improved to achieve higher quality scores.

We launched the peer evaluation program with a kick-off email to our Department’s fellows and fellowship directors. This communication described the program, highlighted baseline quality data at the divisional level, and provided the QCAT Users’ Guide. Fellows were asked to use the QCAT to evaluate, blinded to authorship, 3–5 peer-written initial consultation notes that had been redacted to remove patient and provider identifiers.
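One way to distribute redacted notes so that each fellow reviews only peers’ work is sketched below. This is a hypothetical illustration, not the procedure the program used: it assumes a coordinator holds a list of (note ID, de-identified author code) pairs and draws a random subset of eligible notes for each reviewer. The function name, the pair format, and the author codes are all assumptions.

```python
import random


def assign_peer_notes(notes, fellows, per_fellow=3, seed=None):
    """Randomly assign each fellow `per_fellow` notes written by other fellows.

    `notes` is a list of (note_id, author_code) pairs. The codes are assumed to be
    study identifiers kept by the coordinator; reviewers see only the redacted note.
    """
    if not 3 <= per_fellow <= 5:
        raise ValueError("per_fellow should be between 3 and 5")
    rng = random.Random(seed)  # seeded so the assignment is reproducible
    assignments = {}
    for fellow in fellows:
        # Exclude the reviewer's own notes so every review is of a peer.
        eligible = [note_id for note_id, author in notes if author != fellow]
        if len(eligible) < per_fellow:
            raise ValueError(f"not enough peer-written notes for {fellow}")
        assignments[fellow] = rng.sample(eligible, per_fellow)
    return assignments


# Hypothetical redacted notes, keyed by de-identified author codes.
EXAMPLE_NOTES = [("n1", "F1"), ("n2", "F2"), ("n3", "F3"),
                 ("n4", "F1"), ("n5", "F2"), ("n6", "F3")]

plan = assign_peer_notes(EXAMPLE_NOTES, ["F1", "F2", "F3"], per_fellow=3, seed=7)
for fellow, note_ids in plan.items():
    print(fellow, note_ids)
```

In this sketch the same note may be drawn for more than one reviewer; a design that guarantees every note is reviewed a fixed number of times would need a balanced allocation instead of independent sampling.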

Results, consisting of domain and total scores, were compiled at the divisional level and distributed to fellows and fellowship directors (phase 1). Several months later, the peer evaluation and dissemination of results were repeated (phase 2).
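Compiling results at the divisional level amounts to grouping scored notes by division and averaging. The sketch below assumes each scored note is a dict with hypothetical keys for its division, its domain percentages, and its total; the division names and scores are invented for illustration and are not study data.

```python
from collections import defaultdict
from statistics import mean


def summarize_by_division(scored_notes):
    """Mean total and per-domain scores for each division.

    Each item in `scored_notes` is a dict with the hypothetical keys
    "division", "domains" (domain -> percentage, or None if not applicable)
    and "total" (the note's overall percentage).
    """
    by_division = defaultdict(list)
    for note in scored_notes:
        by_division[note["division"]].append(note)

    summary = {}
    for division, notes in sorted(by_division.items()):
        domain_names = sorted({d for n in notes for d in n["domains"]})
        domain_means = {}
        for d in domain_names:
            # Average only the notes where this domain had applicable items.
            values = [n["domains"][d] for n in notes
                      if n["domains"].get(d) is not None]
            domain_means[d] = mean(values) if values else None
        summary[division] = {
            "n_notes": len(notes),
            "total": mean(n["total"] for n in notes),
            "domains": domain_means,
        }
    return summary


# Hypothetical scored notes (two domains shown for brevity).
EXAMPLE_SCORES = [
    {"division": "Division A", "domains": {"Communication": 20.0, "Educational value": 60.0}, "total": 40.0},
    {"division": "Division A", "domains": {"Communication": 40.0, "Educational value": None}, "total": 40.0},
    {"division": "Division B", "domains": {"Communication": 0.0, "Educational value": 100.0}, "total": 50.0},
]

summary = summarize_by_division(EXAMPLE_SCORES)
for division, stats in summary.items():
    print(division, stats)
```

Averaging each domain only over notes where it applied keeps a “Not Applicable” domain from pulling a division’s mean toward zero; whether the program handled missing domains this way is not stated.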

Early Experiences with QCAT

Overall, the baseline quality of our fellow-written initial consultation notes was modest (mean total score 60%), with deficiencies in the “Communication” and “Educational value” domains (mean scores 29% and 52%, respectively). We observed no change in the quality of consultation notes between baseline, phase 1, and phase 2. Fellow participation was low (27%) in all time periods.

Participating fellows reported that the QCAT provided valuable information about the important components of consultation notes and that the program offered some opportunity for self-reflection, but not enough for meaningful change.

Enhancing the program to achieve success

After this pilot phase, we refined our program to increase fellow participation. First, we recruited Divisional QCAT champions. These fellows self-identified as interested in quality improvement and patient safety and were willing to act as ambassadors to their divisions. QCAT champions were responsible for increasing their colleagues’ participation and for presenting results and areas for improvement to their peers. Second, we hosted a kick-off event for QCAT champions to officially recognize their work and to provide a forum for brainstorming ways to increase their colleagues’ participation. This venue allowed for cross-fertilization of ideas and best practices across Divisions. Third, we asked fellows to evaluate fewer notes but at more regular intervals throughout the year, thereby providing feedback to fellows and fellowship directors on a monthly rather than quarterly basis.

Discussion

All fellowship programs strive to train subspecialists who can provide high-quality consultations, and writing initial consultation notes is a critical competency. Our consultation quality assessment tool, coupled with a peer evaluation program, provided a mechanism to actively teach fellows the components of high-quality consultation notes. Our initial experience suggested that the major barrier to the program’s effectiveness was the difficulty of engaging a diverse group of fellows across disparate clinical divisions (unlike residents, who reside in a single organizational unit). In addition, infrastructure is needed to give fellows timely, reliable, and actionable data that promote behavior change; specifically, infrastructure that permits collection of enough observations of each fellow’s notes to provide longitudinal feedback.

Nevertheless, our program has features that suggest potential for success. It applied the tenets of practice-based learning, satisfying a core ACGME training competency8 and encouraging fellows to engage in self-directed learning. We are optimistic that our efforts to combine educational interventions with tools that create a system for audit, feedback, and self-directed improvement will enable meaningful improvements in the future.

Acknowledgments

We would like to thank participating UCSF fellows and fellowship directors.

Funding sources

DST was partially funded by the UCSF KL2 Award number RR024130.

Footnotes

Conflict of interest

None of the authors have conflicts of interest to report.

Authorship roles

All authors had access to the data and a role in designing the study, analyzing the data, and writing the manuscript. All authors approve of the final submitted version.

References

- 1. Keely E, Myers K, Dojeiji S, Campbell C. Peer assessment of outpatient consultation letters--feasibility and satisfaction. BMC Med Educ. 2007;7:13. doi: 10.1186/1472-6920-7-13.

- 2. Boulware DR, Dekarske AS, Filice GA. Physician preferences for elements of effective consultations. J Gen Intern Med. 2010 Jan;25(1):25–30. doi: 10.1007/s11606-009-1142-2.

- 3. Goldman L, Lee T, Rudd P. Ten commandments for effective consultations. Arch Intern Med. 1983 Sep;143(9):1753–1755.

- 4. Tattersall MH, Butow PN, Brown JE, Thompson JF. Improving doctors’ letters. Med J Aust. 2002 Nov 4;177(9):516–520. doi: 10.5694/j.1326-5377.2002.tb04926.x.

- 5. Stille CJ, Mazor KM, Meterko V, Wasserman RC. Development and validation of a tool to improve paediatric referral/consultation communication. BMJ Qual Saf. 2011 Aug;20(8):692–697. doi: 10.1136/bmjqs.2010.045781.

- 6. Berta W, Barnsley J, Bloom J, et al. Enhancing continuity of information: essential components of a referral document. Can Fam Physician. 2008 Oct;54(10):1432–1433, 1433.e1–6.

- 7. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009 Apr;42(2):377–381. doi: 10.1016/j.jbi.2008.08.010.

- 8. Moskowitz EJ, Nash DB. Accreditation Council for Graduate Medical Education competencies: practice-based learning and systems-based practice. Am J Med Qual. 2007 Sep-Oct;22(5):351–382. doi: 10.1177/1062860607305381.