Abstract

The way we perceive an object depends both on feedforward, bottom-up processing of its physical stimulus properties and on top-down factors such as attention, context, expectation, and task relevance. Here we compared neural activity elicited by varying perceptions of the same physical image—a bistable moving image in which perception spontaneously alternates between dissociated fragments and a single, unified object. A time-frequency analysis of EEG changes associated with the perceptual switch from object to fragment and vice versa revealed a greater decrease in alpha (8–12 Hz) accompanying the switch to object percept than to fragment percept. Recordings of event-related potentials elicited by irrelevant probes superimposed on the moving image revealed an enhanced positivity between 184 and 212 ms when the probes were contained within the boundaries of the perceived unitary object. The topography of the positivity (P2) in this latency range elicited by probes during object perception was distinct from the topography elicited by probes during fragment perception, suggesting that the neural processing of probes differed as a function of perceptual state. Two source localization algorithms estimated the neural generator of this object-related difference to lie in the lateral occipital cortex, a region long associated with object perception. These data suggest that perceived objects attract attention, incorporate visual elements occurring within their boundaries into unified object representations, and enhance the visual processing of elements occurring within their boundaries. Importantly, the perceived object in this case emerged as a function of the fluctuating perceptual state of the viewer.

Keywords: object perception, object attention, bistable perception

Introduction

A fundamental function of the visual system is to integrate individual perceptual features into coherent and meaningful representations of objects. This seems effortless as we scan a given visual scene, but object perception is in fact computationally challenging (Edelman, 1997; Kietzmann, Lange, & Riedmiller, 2009). Objects in our cluttered visual environment do not always have distinct physical boundaries (e.g., they can be overlapping or occluded), and in order for such objects to be perceived, separate elements in the visual field must be combined into a unified whole (Ben-Av, Sagi, & Braun, 1992; Grossberg & Mingolla, 1985; Grossberg, Mingolla, & Ross, 1997; Palmer, Brooks, & Nelson, 2003). Whether an object is perceived depends on numerous external (i.e., “bottom-up”) factors, such as its physical properties and its location in visual space, but also on internal (“top-down”) factors, such as selective attention (Blair, Watson, Walshe, & Maj, 2009; Driver, Davis, Russell, Turatto, & Freeman, 2001; Freeman, Sagi, & Driver, 2001; Treisman & Kanwisher, 1998), task relevance (Egner & Hirsch, 2005), context (Bar, 2004; Federmeier & Kutas, 2001; Oliva & Torralba, 2007), and prior exposure (Summerfield & Egner, 2009).

Previous event-related potential (ERP) studies of the neural mechanisms underlying object processing have found modulations in the occipital N1 component (160–200 ms) associated with object-based attention (e.g., Drew, McCollough, Horowitz, & Vogel, 2009; He, Fan, Zhou, & Chen, 2004; Kasai, 2010; Kasai & Kondo, 2007; Kasai, Moriya, & Hirano, 2011; Kasai & Takeya, 2012; Martínez et al., 2006; Martínez, Ramanathan, Foxe, Javitt, & Hillyard, 2007; Martínez, Teder-Sälejärvi, & Hillyard, 2007; Senkowski, Röttger, Grimm, Foxe, & Herrmann, 2005). For example, He et al. (2004), Martínez et al. (2006), and Martínez et al. (2007) found an enhancement in the N1 elicited by attended versus unattended objects, equating for spatial attention effects. Martínez et al. (2006) and Martínez, Ramanathan, et al. (2007) localized these object-based effects to the lateral occipital complex (LOC), consistent with the abundant studies that have linked this region with processes of object perception and recognition (e.g., Appelbaum, Ales, Cottereau, & Norcia, 2010; Cichy, Chen, & Haynes, 2011; Grill-Spector, Kourtzi, & Kanwisher, 2001; Grill-Spector & Sayres, 2008; Malach et al., 1995; Pourtois, Schwartz, Spiridon, Martuzzi, & Vuilleumier, 2009; Shpaner, Murray, & Foxe, 2009). In addition to these N1 modulations associated with object perception, a recent study found an enhanced positivity around the same latency (∼150–200 ms) associated with the perceived figure in a figure-ground display, and also localized this positivity to the LOC (Pitts, Martínez, Brewer, & Hillyard, 2011).

Studies using illusory Kanizsa figures formed by aligned inducers also found an enhanced N1 amplitude elicited by the figures relative to their unaligned counterparts (e.g., Martínez, Ramanathan, et al., 2007; Martínez, Teder-Sälejärvi, & Hillyard, 2007; Senkowski et al., 2005). In a design using bilateral displays that required participants to attend to one hemifield for potential targets, Kasai and Kondo (2007), Kasai (2010), Kasai et al. (2011), and Kasai and Takeya (2012) found that the degree of lateral asymmetry of the N1 component over scalp sites contralateral versus ipsilateral to the attended hemifield depended on the degree of perceptual grouping of the objects in the two hemifields. For example, if a bar connected the objects in each hemifield, the lateralized N1 response was reduced, even if the connecting bar was occluded (Kasai & Takeya, 2012). These results suggest that attention automatically spreads throughout the boundaries of connected objects and provide evidence that object recognition processes modulate spatial selection in the visual cortex.

ERP studies examining object perception under partial viewing conditions have identified distinct neural mechanisms associated with perceptual closure (e.g., Doniger, 2002; Doniger et al., 2000, 2001; Sehatpour, Molholm, Javitt, & Foxe, 2006). When participants have been shown increasingly more details of a fragmented or partially occluded object, a negativity has been reported over bilateral occipitotemporal scalp regions at around 250–300 ms following stimulus onset, which is maximal at the point that there is just enough visual information for participants to recognize the object. This negativity is referred to as the closure negativity, or Ncl, and has also been localized to the LOC (Sehatpour et al., 2006).

Electrophysiological studies of animals (e.g., Fries, 2005; Singer, 1999; Singer & Gray, 1995) and of humans (Bertrand & Tallon-Baudry, 2000; Debener, Herrmann, Kranczioch, Gembris, & Engel, 2003; Gruber & Müller, 2005; Keil, Müller, & Ray, 1999; Rodriguez et al., 1999; Tallon-Baudry, Bertrand, Hénaff, Isnard, & Fischer, 2005; Zion-Golumbic & Bentin, 2007) have also demonstrated the importance of oscillatory synchrony in integrating spatially distributed neuronal activity associated with distinct object parts or features. Much of this work has focused on the gamma band (>30 Hz), especially with respect to perceptual integration (e.g., Bertrand & Tallon-Baudry, 2000). However, studies have also found electroencephalography (EEG) desynchronization in the alpha (8–12 Hz) and beta (13–30 Hz) ranges associated with the perception of real and illusory contours (Kinsey & Anderson, 2009) and object versus nonobject patterns (Maratos, Anderson, Hillebrand, Singh, & Barnes, 2007; Vanni, Revonsuo, & Hari, 1997).

Across methodologies, the prevalent designs for studying object perception have used well-defined Gestalt principles to separate objects, such as spatially separated rectangles (e.g., Egly, Driver, & Rafal, 1994; He et al., 2004; Martínez et al., 2006), intact versus fragmented rectangles (e.g., Martínez et al., 2007; Yeshurun, Kimchi, Sha'shoua, & Carmel, 2009), or illusory objects formed by aligned inducers (e.g., Martínez, Ramanathan, et al., 2007; Martínez, Teder-Sälejärvi, & Hillyard, 2007; Moore, Yantis, & Vaughan, 1998; Tallon-Baudry, Bertrand, Delpuech, & Pernier, 1996). However, in those studies, the objects were defined and differentiated by bottom-up stimulus information. Even in the case of illusory objects such as Kanizsa figures, in which low-level information is equated in the object and nonobject conditions, the objects themselves are defined by information in the image. When the inducers are aligned, the Gestalt cue of good continuation gives rise to the perception of an illusory object; when they are not aligned, the object is not perceived.

Given the importance of top-down factors in perceptual organization, it is important to isolate the mechanisms involved in situations where object perception may vary under conditions of identical low-level sensory information that produce “object” and “nonobject” perceptions. One way to study perceptual variations of physically identical stimuli is to use ambiguous, “bistable” images, which give rise to two alternate perceptual interpretations. Evidence suggests that the same perceptual mechanisms underlie the processing of bistable and “unambiguous” displays (Leopold & Logothetis, 1999; Long & Toppino, 2004; Peterson & Gibson, 1991; Slotnick & Yantis, 2005); accordingly, by analyzing the mechanisms underlying the perceptual alterations of bistable images, we can shed light on more general mechanisms of visual processing (Başar-Eroglu, Strüber, Stadler, Kruse, & Başar, 1993; İşoğlu-Alkaç & Başar-Eroğlu, 1998; Kornmeier, 2004; Kornmeier & Bach, 2005, 2006; Kornmeier, Ehm, Bigalke, & Bach, 2007; Pitts, Gavin, & Nerger, 2008; Pitts, Martínez, Brewer, & Hillyard, 2011).

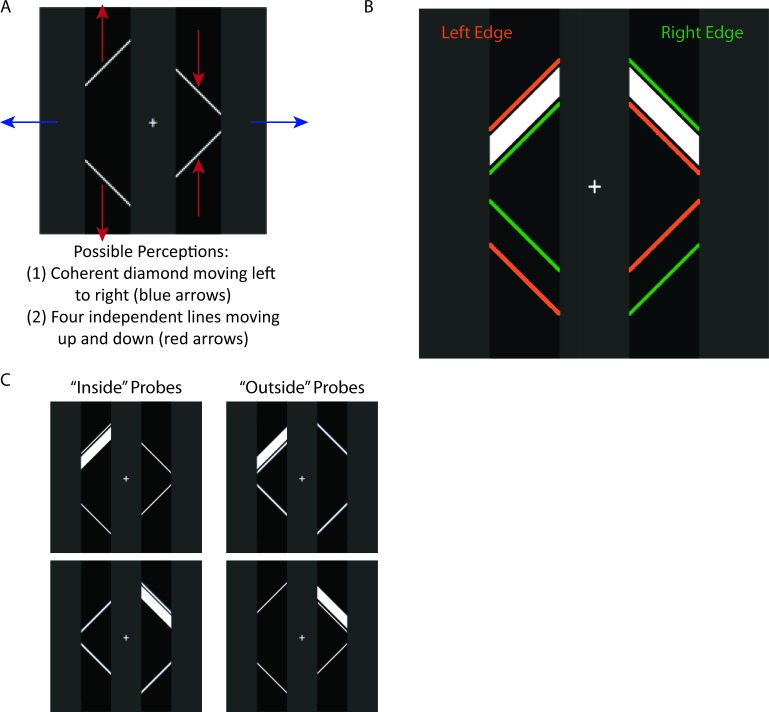

The bistable image shown in Figure 1 has recently been used to investigate perceptual integration in object perception (de-Wit & Kubilius, 2012; Fang, Kersten, & Murray, 2008; Murray & Kersten, 2002; Naber, Carlson, Verstraten, & Einhäuser, 2011). First described by Lorenceau and Shiffrar (1992), the bistable nature of this “ambiguous diamond” display is induced through motion. As the line segments move, participants see either four separate lines (“fragments”) moving up and down or one integrated object (an occluded diamond shape) moving left to right, and perception spontaneously alternates from one percept to the other. Critically, the visual input is identical in the two perceptual states, thereby making possible the comparison of perceptual processes during object perception versus fragment perception. In a behavioral study using this ambiguous diamond display, Naber et al. (2011) found that the subjective impression of an integrated object facilitated detection and discrimination of elements within the object's boundaries.

Figure 1.

Bistable moving visual image used in experiment (Lorenceau & Shiffrar, 1992). (A) When the image moves, the four white lines are either perceived as a single coherent diamond (object) moving left to right (behind the gray columns, which are perceived as occluders) or as four separate independent lines (fragments) moving up and down. (B) Schematic of shape and location of probes. Probes were derived from the region of overlap (minus one pixel on either side) between the diamond at its leftmost (orange fragments) and rightmost (green fragments) edge. (C) Example probe stimuli. Probes were flashed randomly in the upper left or upper right quadrants. During object perception, the probes appeared either “inside” or “outside” the object, depending on the location of the object.

In the current study we used electrophysiological recordings to investigate the cortical processes that differentiate the subjective perception of a coherent object from that of fragmented parts in the ambiguous diamond display. Participants indicated via button press their perceptual state as it alternated from object to fragment and vice versa. We examined how the subjective perception of a coherent object affects integrative visual processing by adding probes to the display and comparing neural activity to the probes as a function of perceptual state. Comparing the neural activity elicited by the probes during object relative to fragment perception revealed differences in sensory processing of elements that appeared within the perceived object. In addition, we observed oscillatory differences in the EEG associated with the perceptual switch from fragment to object and vice versa.

Method

Participants

Fifteen undergraduates (10 women; age range 18–32 years) from the University of California, San Diego, participated in the experiment for monetary compensation. All were right-handed and had normal or corrected-to-normal vision. All gave informed consent as approved by the committee for the protection of human subjects at the University of California, San Diego, and in accordance with the Declaration of Helsinki.

Stimuli

During testing participants maintained fixation on a central white fixation cross subtending 0.5° that remained on the video screen throughout the experiment. The basic display consisted of a white diamond on a black background that subtended 7.8° × 7.8° of visual angle when viewed from a distance of 80 cm. The diamond appeared behind three 2.5° × 11.8° gray occluding bars. One of the gray bars was centered at fixation, and the other two were centered 3.6° to the left and right of fixation, respectively. When in motion, the diamond gave rise to two possible perceptual states: (a) a single, coherent diamond moving left to right behind the three gray bars (“object”), or (b) four independent line segments moving up and down beside the three gray bars (“fragments”; see Figure 1A; Lorenceau & Shiffrar, 1992; Naber et al., 2011). To enhance the ambiguity of the display, the diamond had a sinusoidal movement pattern (Naber et al., 2011), and took 1.35 s to move from one side (left/right) to the other. Hence, the period of motion was 2.7 s (i.e., duration of one full cycle from left to right and back again). The peak amplitude was 2.3°/s, and the diamond paused at each side of the display for 100 ms.

The luminance of the occluding bars was set individually for each participant so that the alternative perceptual states occurred with approximately equal frequency. Higher luminance bars bias perception towards the diamond, whereas lower luminance bars bias perception towards the fragments (Naber et al., 2011). Participants also have predisposed biases, so the luminance was adjusted individually in an attempt to reduce any biases towards one state or the other.

Briefly flashed white parallelograms subtending 2.9° × 0.7° served as irrelevant probes, and appeared either in the upper left or upper right quadrant of the display. To equate the physical characteristics and spatial location of probes appearing inside versus outside of the diamond, the shape and location of each probe was formed by the region of overlap between the diamond at its leftmost and rightmost points (Figure 1B). The left probes were presented either in the region that was just within the diamond at its leftmost point or just outside the diamond at its rightmost point (Figure 1C), and vice versa for the right probes. Thus, depending on the location of the diamond, the probe could appear to be contained within the boundaries of the diamond shape (e.g., a left probe when the diamond was at its leftmost point), and the physically identical probe in the same spatial location could also appear to be outside of it (e.g., left probe when the diamond was at its rightmost point). This assured that the ERPs elicited by each probe could be attributed to its location with respect to the oriented fragments/diamond and not to differences in spatial location per se. There was one pixel (0.07°) separating the probe from the diamond's boundary at each location, so that the probes never spatially abutted or overlapped the diamond. The probes were presented at the instant the diamond was at its leftmost or rightmost point for a duration of 100 ms. Activity elicited by the probes during self-reported object perception was compared to activity elicited by the same probes, in the same physical locations, during fragment perception. Although the inside–outside distinction was not relevant during fragment perception, we refer to “inside probes” and “outside probes” to indicate their locations relative to the diamond. Note, however, that the absolute spatial locations of the inside and outside probes in the display were identical.

Procedure

Prior to the start of the experiment, the luminance of the occluding bars was adjusted individually for each participant to a level at which they reported oscillating between perceiving the object and perceiving fragments for equal duration for a period of 3 min. The participants were first presented with black occluding bars and asked to indicate their perceptual state; almost all participants reported seeing only fragments moving up and down. They were then presented with white occluding bars; almost all participants reported seeing only a diamond object moving left to right. They were then presented with occluding bars that were the shade of gray exactly in the middle (i.e., RGB values 127, 127, and 127) and continued to report their perceptual state as the shade of the bars changed every 3 min. Once they reported object and fragment states equally for a period of 3 min, the experiment was initiated and the final shade of gray was used throughout the entire experiment.

Participants viewed the ambiguous diamond display from a distance of 80 cm while maintaining central fixation and indicated their perceptual state (object, fragment) via button press. All participants used their dominant (right) hand to indicate object versus fragment perception; one button indicated a perceptual switch to “object” and a second button indicated a perceptual switch to “fragments.” A third button was pressed with the left hand to start the experiment, as well as to indicate an ambiguous perceptual state, or anything other than a concrete object or fragment percept. Akin to previous ERP studies of bistable images (e.g., Başar-Eroglu et al., 1993; Pitts et al., 2011), we asked participants to use their dominant hand to obtain as accurate an estimate as possible of the timing of their perceptual switches, since we relied on participants' self-report and response times are faster with the dominant than nondominant hand (Kerr, Mingay, & Elithorn, 1963).

While participants viewed the display and indicated their perceptual state, the irrelevant probes flashed for 100 ms randomly at the left and right locations (see Stimuli description), and participants were told to ignore them. Two fifths of the time the probes appeared to be within (but not touching) the boundaries of the diamond (i.e., one fifth were left inside probes and one fifth were right inside probes), and two fifths of the time they appeared outside the boundaries of the diamond (i.e., one fifth were left outside probes and one fifth were right outside probes). To reduce expectancies associated with the probe and to subtract neural activity associated with the motion of the diamond, one fifth of the time no probe appeared when the diamond reached its leftmost or rightmost point. Neural activity elicited in the no-probe trials was subtracted from activity elicited by the probes to get a pure measure of probe-related activity separate from any activity elicited by the moving lines or object. Since the motion was periodic, participants could predict when the probes were about to be flashed, and the existence of the no-probe trials may not have eliminated such expectancies or predictive mechanisms; importantly, however, probes occurred with equal probability during each perceptual state (object or fragment), and inside and outside probes also occurred with equal probability, so predictive mechanisms could not account for differences between perceptual states or for differences between states for one probe condition and not the other.
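The event proportions described above can be illustrated with a short simulation. This is only a sketch under stated assumptions: the text gives the overall proportions but not the randomization procedure, so independent sampling at each motion reversal is assumed here, and the function name `probe_event` and the seed are hypothetical. Because a probe counts as "inside" only when it is on the same side as the diamond, drawing inside, outside, and no-probe events with weights 2:2:1 at every reversal yields one fifth of trials in each of the five categories.

```python
import random
from collections import Counter


def probe_event(diamond_side, rng):
    """Draw the event shown when the diamond reaches `diamond_side` ('left' or 'right').

    Assumption: events are sampled independently at each reversal with
    weights 2:2:1 for inside, outside, and no-probe.
    """
    kind = rng.choices(["inside", "outside", "none"], weights=[2, 2, 1])[0]
    if kind == "none":
        return "no-probe"
    other_side = "right" if diamond_side == "left" else "left"
    # An inside probe is on the diamond's side; an outside probe is on the opposite side.
    probe_side = diamond_side if kind == "inside" else other_side
    return f"{probe_side} {kind}"


rng = random.Random(0)                  # hypothetical seed, for reproducibility only
reversals = ["left", "right"] * 5000    # the diamond alternates between its extreme positions
counts = Counter(probe_event(side, rng) for side in reversals)
proportions = {event: n / len(reversals) for event, n in counts.items()}
```

Each entry of `proportions` should come out close to 0.2, matching the one-fifth split across left inside, left outside, right inside, right outside, and no-probe trials.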

EEG recording

The EEG was recorded continuously using 64 Ag-AgCl pin-type active electrodes mounted on an elastic cap (Electro-Cap International, Eaton, OH) according to the extended 10–20 system, and from two additional electrodes placed at the right and left mastoids. The electrode impedances were kept below 5 kΩ. Scalp signals were amplified by a battery-powered amplifier (SA Instrumentation, Encinitas, CA) with a gain of 10,000 and band-pass filtered from 0.1 to 80 Hz. Eye movements and blinks were monitored by horizontal (attached to the external canthi) and vertical (attached to the infraorbital ridge of the right eye) electrooculogram (EOG) recordings. A right mastoid electrode served as the reference for all scalp channels and the vertical EOG (VEOG). Left and right horizontal EOG (HEOG) channels were recorded as a bipolar pair. Signals were digitized to disk at 250 Hz. Each recording session lasted 120–180 min, including setup time and cap and electrode preparation. Short breaks were given every 3 min to help alleviate participant fatigue.

ERP analysis

Trials were discarded if they contained an eye blink or an eye movement artifact (>200 μV), or if any channel exceeded 55 μV. On average, 15% of trials were rejected due to these artifacts. Averaged mastoid-referenced ERPs were calculated off-line as the difference between each scalp channel and an average of the left and right mastoid channels. To analyze neural activity to the probes, the ERPs time-locked to probe and no-probe events were averaged separately, baseline corrected from −100 to 0 ms, and low-pass filtered at 30 Hz. ERPs to the no-probe in each perceptual state (fragment, object) and diamond location (left, right) were subtracted from ERPs to the probe in the same state and location. For example, ERPs to the left no-probe during object perception were subtracted from the inside left probe during object perception, and ERPs to the left no-probe during fragment perception were subtracted from the inside left probe during fragment perception. Similarly, ERPs to the right no-probe during object perception were subtracted from the outside left probe (because the diamond was at its rightmost point when the left probes appeared outside of it) during object perception, and so on. This approach is similar to subtraction techniques that have been used both in previous ERP studies and in neuroimaging studies (see, for example, Luck, Fan, & Hillyard, 1993; Petersen, Fiez, & Maurizio, 1992). The underlying assumption is one of additivity (e.g., McCarthy & Donchin, 1981). In the current paradigm the assumption is that the ERP elicited by the diamond itself in a particular perceptual state will not change depending on the presence of the probe, so probe-related activity can be isolated by subtracting out the ERP elicited by the diamond.
Prior to artifact rejection, one fifth of the trials were no-probe trials, one fifth were trials in which a left inside probe occurred, one fifth were left outside trials, one fifth were right inside trials, and one fifth were right outside trials. Hence, a roughly equal number of no-probe trials were subtracted from the probe trials to derive probe-related activity.
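The subtraction logic can be sketched in a few lines of NumPy. This is an illustration rather than the analysis code used in the study: the array layout (trials × channels × samples), the 125-sample epoch, the function names, and the synthetic signals are all assumptions, and the 30-Hz low-pass filter is omitted for brevity. Under the additivity assumption, subtracting the no-probe ERP from the probe ERP for the same perceptual state and diamond location leaves only the probe-related response.

```python
import numpy as np

FS = 250            # sampling rate in Hz, as reported for the recordings
N_BASELINE = 25     # samples in the -100 to 0 ms baseline at 250 Hz


def baseline_correct(erp, n_baseline=N_BASELINE):
    """Subtract each channel's mean over the prestimulus interval; erp is (channels, samples)."""
    return erp - erp[:, :n_baseline].mean(axis=1, keepdims=True)


def probe_related(probe_trials, noprobe_trials):
    """Probe-minus-no-probe ERP; both inputs are (trials, channels, samples)."""
    probe_erp = baseline_correct(probe_trials.mean(axis=0))
    noprobe_erp = baseline_correct(noprobe_trials.mean(axis=0))
    return probe_erp - noprobe_erp


# Synthetic, noise-free demonstration (hypothetical signals).
n_samples = 125                                        # -100 to 396 ms at 250 Hz
t = np.arange(n_samples)
motion = 0.5 * np.sin(2 * np.pi * t / n_samples)       # activity driven by the moving display
bump = np.exp(-0.5 * ((t - 75) / 5.0) ** 2)            # probe response peaking 200 ms after onset
noprobe_trials = np.tile(motion, (20, 2, 1))           # 20 trials, 2 channels
probe_trials = np.tile(motion + bump, (20, 2, 1))
difference = probe_related(probe_trials, noprobe_trials)
```

In this idealized case `difference` recovers `bump` on both channels, because the motion-related activity is identical in the two trial types and cancels.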

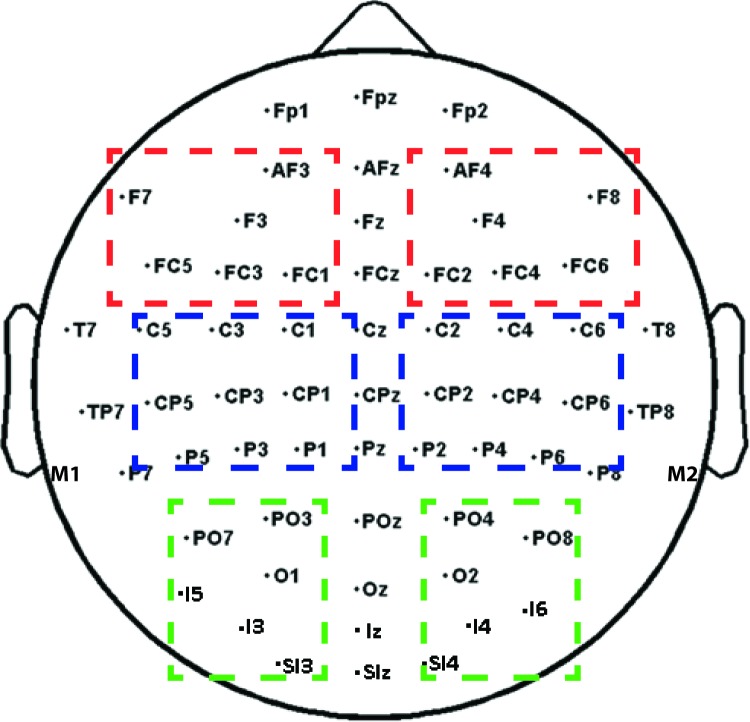

Prior to statistical analysis, ERPs to the left probe and right probe were collapsed into ipsilateral and contralateral locations on the scalp and averaged. To circumvent the multiple testing problem (Oken & Chiappa, 1986), we used an approach based on region of interest, in which we averaged ERPs across a number of electrodes to yield one value for the left hemisphere and one value for the right hemisphere. Based on previous object-related ERP modulations (e.g., Kasai, 2010; Kasai & Kondo, 2007; Kasai, Moriya, & Hirano, 2011; Kasai & Takeya, 2012; Martínez et al., 2007; Pitts et al., 2011), and also because we were comparing visual evoked ERPs elicited by the probes during each perceptual state, we focused our probe analysis on occipital electrodes (PO3/4, PO7/8, O1/2, I3/4, I5/6, SI3/4; see Figure 2, green boxes) and looked for differences in the ∼200-ms latency period. The precise time window was centered around the maximum amplitude of the component of interest in the grand average based on topographical distributions, which revealed a difference between perceptual states in the range of the P2 (184–212 ms) component over the occipital scalp. Perceptual state effects were quantified in terms of mean amplitudes within the P2 latency window and entered into perceptual state (object, fragment) × hemisphere (ipsilateral, contralateral) analysis of variance (ANOVA). Follow-up planned comparisons were conducted when appropriate to test the difference between perceptual states for contralateral versus ipsilateral probes.
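A minimal sketch of the two quantification steps described above, assuming each ERP is stored as a channels × samples array for one hemisphere's occipital cluster and that epochs begin 100 ms before probe onset. The function names and the example values are hypothetical. At a 250-Hz sampling rate, the 184–212 ms window spans eight samples.

```python
import numpy as np


def mean_amplitude(erp, t_start_ms, t_end_ms, fs=250, epoch_start_ms=-100):
    """Mean voltage over all ROI channels and all samples within [t_start_ms, t_end_ms]."""
    times = epoch_start_ms + np.arange(erp.shape[1]) * 1000.0 / fs
    in_window = (times >= t_start_ms) & (times <= t_end_ms)
    return erp[:, in_window].mean()


def collapse_hemispheres(left_probe, right_probe):
    """Average left- and right-probe values into ipsilateral and contralateral scores.

    Each argument is a dict with one mean amplitude per hemisphere ('LH', 'RH').
    """
    ipsi = (left_probe["LH"] + right_probe["RH"]) / 2
    contra = (left_probe["RH"] + right_probe["LH"]) / 2
    return ipsi, contra


# Hypothetical example: six channels, flat except for 2 microvolts in the P2 window.
erp = np.zeros((6, 125))
erp[:, 71:79] = 2.0                     # samples at 184, 188, ..., 212 ms
p2 = mean_amplitude(erp, 184, 212)

ipsi, contra = collapse_hemispheres({"LH": 1.0, "RH": 2.0}, {"LH": 4.0, "RH": 1.0})
```

The resulting ipsilateral and contralateral values for each perceptual state are what would then be entered into the perceptual state × hemisphere ANOVA.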

Figure 2.

Topography of electrodes used in the experiment. Dashed boxes show the electrodes averaged in the frontal (red), central-parietal (blue), and occipital (green) regions in the time-frequency analyses. Left hemisphere (LH) and right hemisphere (RH) electrodes were averaged separately to yield two values (one for the LH and one for the RH) in each region. Only electrodes in the occipital cluster (averaged separately for left and right hemispheres) were used in the probe ERP analysis.

To analyze ERPs associated with the perceptual switch, EEG epochs were time-locked to the participants' motor responses, low pass filtered at 30 Hz, and baseline corrected over the interval −1500 to −1200 ms prior to the response. For this analysis we focused on central-parietal electrodes (C3/4, CP1/2, CP3/4, P1/2, P3/4), based on previous ERP studies of perceptual switches (e.g., Başar-Eroglu et al., 1993; İşoğlu-Alkaç & Başar-Eroğlu, 1998). Mean amplitudes within specific latency windows prior to and following the button press were compared between perceptual states by a perceptual state (object, fragment) × hemisphere (ipsilateral, contralateral) ANOVA.

Source analyses

To model the neural generators of perceptual state effects, two different source localization algorithms were applied to the grand-averaged ERP difference waves (formed by subtracting the ERPs to identical probes during fragment perception from those during object perception). First, inverse dipole modeling was carried out using the Brain Electrical Source Analysis program (BESA; version 5). BESA iteratively adjusts the location and orientation of dipolar sources to minimize the residual variance between the calculated model and the observed ERP voltage topography (Scherg, 1990). We fit symmetrical pairs of dipoles that were mirror constrained in location but not in orientation during the interval of the P2 (184–212 ms).

The neural generators of these grand-averaged ERP difference waves were also modeled using a minimum-norm linear inverse solution approach that involves local autoregressive averaging (LAURA; Grave de Peralta Menendez, Murray, Michel, Martuzzi, & Gonzalez Andino, 2004). The LAURA solution space included 4,024 evenly spaced nodes (6-mm³ spacing), restricted to the gray matter of the Montreal Neurological Institute's (MNI) average brain. No a priori assumptions were made regarding the number or location of active sources. Time windows for estimating the sources of the component were the same as in the ERP statistical analyses. LAURA solutions were computed and transformed into a standardized coordinate system (Talairach & Tournoux, 1988) and exported into the AFNI software package (Cox, 1996) and projected onto structural (MNI) brain images for visualization.

Time-frequency analysis

To analyze induced oscillatory cortical activity associated with the perceptual switch, the single trial EEG signal on each channel was convolved with 6-cycle Morlet wavelets over a 4-s window beginning 2 s prior to the button press indicating a perceptual switch. Instantaneous power and phase were extracted at each time point (at 250-Hz sampling rate) over frequency scales from 0.7 to 60 Hz incremented logarithmically (Lakatos et al., 2005). The square roots of the power values (the sum of the squares of the real and imaginary Morlet components) were then averaged over single trials to yield the total averaged spectral amplitudes (in microvolts) for each condition (i.e., switch to fragment, switch to object). The averaged spectral amplitude was not baseline corrected because there was not a clear time reference for the perceptual switch (Ehm, Bach, & Kornmeier, 2011; İşoğlu-Alkaç, & Başar-Eroğlu, 1998; İşoğlu-Alkaç, & Strüber, 2006), and we were interested in differences surrounding switches to one perceptual state versus the other. We assumed that response time in denoting the perceptual switch would not differ systematically between conditions. We expected object-related differences in spectral amplitude between conditions to be limited to occipital electrodes, because we hypothesized that differences in neural activity between object and fragment perceptual states would involve visual areas. However, topographically widespread effects have been reported in alpha, beta, and gamma bands during bistable perception (e.g., Ehm et al., 2011; İşoğlu-Alkaç & Strüber, 2006; Kornmeier & Bach, 2012), so we performed statistical analyses on three left hemisphere and three right hemisphere clusters of electrodes covering the frontal (AF3/4, F3/4, F7/8, FC1/2, FC3/4, FC5/6), central-parietal (CP1/2, CP3/4, CP5/6, C1/2, C3/4, C5/6, P1/2, P3/4), and occipital (O1/2, PO3/4, PO7/8, I3/4, SI3/4, I5/6) scalp (Figure 2). 
Based on previous studies of bistable perception showing alpha and beta band power decreases as well as gamma band power increases starting around 500 ms prior to the button press denoting a perceptual switch (e.g., Ehm et al., 2011; İşoğlu-Alkaç & Strüber, 2006; Strüber & Hermann, 2002), we focused on the time window from 500 to 100 ms prior to the button press. ANOVAs with factors of percept (object, fragment), scalp region (frontal, central-parietal, occipital), and hemisphere (left, right) were carried out separately for the alpha (8–12 Hz), beta (16–30 Hz), and gamma (30–50 Hz) bands within this time window. Using an approach similar to that of previous studies (e.g., Strüber & Hermann, 2002), we treated the analyses in each frequency band separately, using Bonferroni correction where applicable locally within each analysis.
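The single-trial amplitude computation can be sketched as follows. This is an illustration under stated assumptions, not the analysis code used in the study: the wavelet is defined with a temporal standard deviation of n_cycles / (2πf), which is one common convention for an "n-cycle" Morlet wavelet; the normalization is chosen so that a unit-amplitude sinusoid at the wavelet frequency yields an amplitude near 1; and the function name and synthetic trials are hypothetical. Taking the magnitude on each trial before averaging preserves induced activity whose phase varies from trial to trial.

```python
import numpy as np


def morlet_amplitude(trials, freq, fs=250, n_cycles=6):
    """Trial-averaged amplitude at `freq` for each time point; trials is (n_trials, n_samples)."""
    sigma_t = n_cycles / (2 * np.pi * freq)            # assumed definition of an n-cycle wavelet
    half = int(4 * sigma_t * fs)                       # truncate the envelope at +/- 4 s.d.
    t = np.arange(-half, half + 1) / fs
    wavelet = np.exp(2j * np.pi * freq * t) * np.exp(-t**2 / (2 * sigma_t**2))
    wavelet /= np.abs(wavelet).sum() / 2               # unit sinusoid at `freq` -> amplitude ~1
    # Magnitude of the complex convolution is the square root of the
    # summed squares of its real and imaginary parts.
    amplitude = np.array(
        [np.abs(np.convolve(x, wavelet, mode="same")) for x in trials]
    )
    return amplitude.mean(axis=0)                      # average amplitude over single trials


# Synthetic example: 10-Hz activity with a random phase on every trial.
rng = np.random.default_rng(0)
fs = 250
time = np.arange(-2, 2, 1 / fs)                        # 4-s window around the button press
phases = rng.uniform(0, 2 * np.pi, size=5)
trials = np.array([np.cos(2 * np.pi * 10 * time + p) for p in phases])
alpha_amp = morlet_amplitude(trials, freq=10)
beta_amp = morlet_amplitude(trials, freq=20)
```

Away from the edges of the window, `alpha_amp` is close to 1 while `beta_amp` is close to 0, even though averaging the raw trials first would largely cancel the 10-Hz activity. Band values such as alpha (8–12 Hz) would be obtained by averaging such amplitudes across the wavelet frequencies that fall within the band.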

Results

Behavioral results

The mean switch rate was 8.1 switches/min. Overall, 47.8% of the total button presses indicated a switch to object perception, 49.9% indicated a switch to fragment perception, and 2.3% indicated a switch to an ambiguous percept. The average median durations of the object and fragment percepts were 5.1 and 6.8 s, respectively. Figure 3 depicts the frequency histograms for the object and fragment percept durations, showing that both have similar time courses. The data fit well with a gamma distribution, consistent with previous studies of bistable perception (Borsellino, Marco, Allazetta, Rinesi, & Bartolini, 1972; Fang et al., 2008) and binocular rivalry (Kovács, Papathomas, Yang, & Feher, 1996; Lehky, 1995). This was confirmed statistically with a χ² test, p > 0.50 and p > 0.75 for the object and fragment percepts, respectively.¹

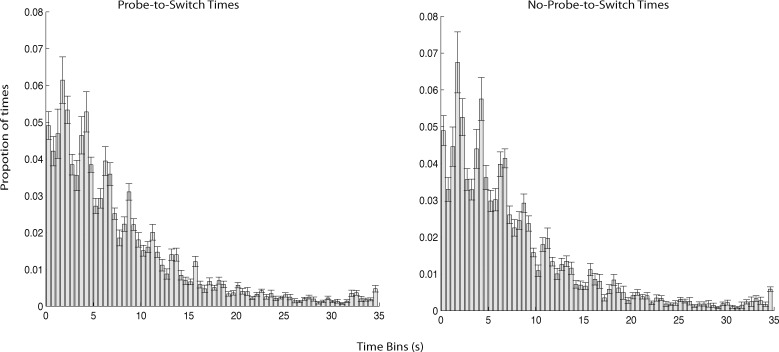

Figure 3.

Histograms of durations for the object (left) and fragment (right) percepts. Data are fitted using a gamma function (smooth black lines). The gamma function fitted to the object histogram has a shape parameter = 2.3 and a scale parameter = 3.0, and the gamma function fitted to the fragment histogram has a shape parameter = 2.1 and a scale parameter = 4.1.
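To make the shape and scale parameters concrete, the sketch below simulates durations from a gamma distribution with the object-percept parameters reported in the caption and recovers them from the sample. The fitting procedure used in the study is not described, so the method-of-moments estimator here is a stand-in chosen for simplicity, and the function name, sample size, and seed are hypothetical. For a gamma distribution the mean equals shape × scale and the variance equals shape × scale², which gives the two estimators directly.

```python
import random
import statistics


def gamma_moments(durations):
    """Method-of-moments estimates (shape, scale) for a gamma distribution."""
    mean = statistics.fmean(durations)
    var = statistics.variance(durations)
    return mean**2 / var, var / mean


rng = random.Random(1)                                  # hypothetical seed
simulated = [rng.gammavariate(2.3, 3.0) for _ in range(20000)]   # shape 2.3, scale 3.0
shape, scale = gamma_moments(simulated)
```

With this many simulated durations, `shape` and `scale` land close to 2.3 and 3.0. Real percept durations are far fewer per participant, so estimates from actual data would be noisier.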

The gamma distribution of perceptual durations suggests that the probe did not elicit perceptual switches in our paradigm (cf. Kanai, Moradi, Shimojo, & Verstraten, 2005), but one possibility is that perceptual switches occurred with a relatively constant delay with respect to probe onset. To rule out this possibility, we determined the time from each probe until the subsequent perceptual switch and plotted a histogram of the distribution of probe-to-switch times. We compared this with a similar histogram showing the time from each no-probe until the subsequent perceptual switch and found no difference between these two distributions (p > 0.90, χ² test). These data are shown in Figure 4. Note that this includes all probes and no-probes, though similar results were found when only considering the last probe or no-probe before each button press (see Figures 5B and 5C). We also compared the probe-to-switch times for switches to object versus switches to fragment and found no difference in these distributions (p > 0.75, χ² test), nor was there a difference between object and fragment distributions for no-probe-to-switch times (p > 0.90, χ² test). Hence, these data suggest that the probes did not influence the rate of perceptual switches but rather that participants were able to ignore the probes.
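The probe-to-switch measure can be sketched as follows, assuming event and button-press times are available as lists of seconds. The function names, the number of bins, and the example times are hypothetical; the 500-ms bin width follows the Figure 4 caption. The same functions apply unchanged to no-probe events, which is what makes the two histograms directly comparable.

```python
from bisect import bisect_right


def time_to_next_switch(event_times, switch_times):
    """Delay from each event to the first switch after it; events with no later switch are dropped."""
    switch_times = sorted(switch_times)
    delays = []
    for t in event_times:
        i = bisect_right(switch_times, t)       # index of the first switch later than t
        if i < len(switch_times):
            delays.append(switch_times[i] - t)
    return delays


def binned_probability(delays, bin_width=0.5, n_bins=20):
    """Proportion of delays falling in each bin of `bin_width` seconds."""
    counts = [0] * n_bins
    for d in delays:
        b = int(d // bin_width)
        if b < n_bins:
            counts[b] += 1
    total = len(delays) or 1                    # avoid division by zero for empty input
    return [c / total for c in counts]


# Hypothetical times in seconds.
probe_times = [1.0, 2.35, 3.7, 9.0]
switch_times = [3.0, 8.0]
delays = time_to_next_switch(probe_times, switch_times)
histogram = binned_probability(delays)
```

Here the probe at 9.0 s is dropped because no switch follows it, and the remaining three delays fall into separate bins with probability one third each.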

Figure 4.

Histograms of probe-to-switch and no-probe-to-switch times. The button press denoting a switch was used as an estimate of the switch time. Bar height corresponds to the probability that a perceptual switch occurred within a certain 500-ms bin.

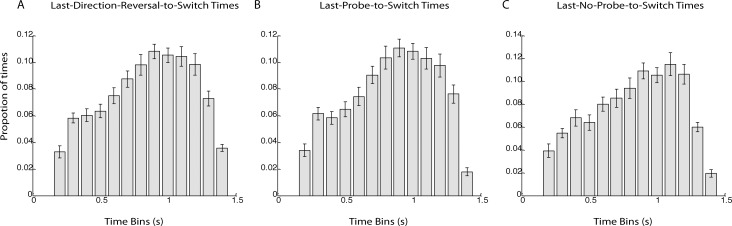

Figure 5.

(A) Histogram of the time from each button press denoting a switch to the last motion reversal of the stimulus. Bar height corresponds to the probability that the last motion reversal occurred within a certain 100-ms bin. The distribution is not uniform, suggesting the possibility that participants were, on average, more likely to experience a perceptual switch during a particular phase of the stimulus. Nonetheless, there was much individual variability (see Supplementary Figure 1), and while some participants did seem more likely to experience perceptual switches during a particular phase of the stimulus, others did not. (B) and (C) show histograms of the time from each button press denoting the switch to the last probe and no-probe, respectively. The similarity between the three distributions is evidence that the probes themselves did not trigger the perceptual switches. Instead, switches were linked to the cycle of the stimulus.

To determine whether participants were on average more likely to experience a perceptual switch during a particular phase of the stimulus, we also plotted a histogram of the time from each button press denoting a switch to the last motion reversal of the stimulus. These data are plotted in Figure 5A. This distribution was not uniform, F(12, 182) = 18.5, p < 0.0001, indicating that participants were not equally likely to experience perceptual switches at all phases of the moving stimulus. However, the same histograms plotted individually for each participant revealed variability across participants (see Supplementary Figure 1), suggesting that some participants were more likely than others to experience a perceptual switch at a particular phase of the moving stimulus. Among those participants whose switches were tied to a particular phase, the phase that triggered the switch also varied. Hence, given the variability across participants and the variability in reaction times, we cannot be sure which phase of the moving stimulus produced the highest frequency of switches. Comparison of the histograms of the time from each button press to the last motion reversal (5A), probe (5B), and no-probe (5C) shows that the three distributions are similar, which suggests that perceptual switches were linked to the phase of the moving stimulus and not to the probes. Indeed, a condition (probe, no-probe) × time bin (1–13) ANOVA did not find a significant interaction between condition and bin, F(12, 168) = 1.47, ns.

Probe-related ERP results

To examine whether neural processing of probes that occurred within the perceived object's boundaries differed from processing of physically identical probes not bound by the perceived object, ERPs to the inside and outside probes were compared during object and fragment perception (see Methods). Initial scrutiny of the ERPs to the inside probes showed a divergence between object and fragment percepts in the latency range of the P2, a positive deflection at posterior lateral electrode sites, between 184 and 212 ms, which was greater over contralateral than ipsilateral sites (Figure 6A). In contrast, the ERPs to the outside probes did not differ between object and fragment perceptual states. A statistical analysis confirmed these observations. The analysis was performed on the mean amplitude between 184 and 212 ms following probe onset, and the electrodes included in the analysis were PO3/4, PO7/8, O1/2, I3/4, I5/6, and SI3/4. The mean amplitudes were averaged across these sites to obtain a single amplitude value for the electrode cluster ipsilateral to the probe location and a single value for the contralateral electrode cluster. To compare physically identical stimulus conditions, the difference in mean amplitude for each probe (i.e., inside, outside) was tested by separate 2 × 2 ANOVAs (Pitts et al., 2011) with factors perceptual state (object, fragment) and electrode cluster (ipsilateral, contralateral). The analysis of the inside probes revealed a significant interaction between perceptual state and cluster, F(1, 14) = 6.15, p < 0.03. Follow-up planned comparisons revealed that for the contralateral electrode cluster, the 184- to 212-ms amplitude measure was significantly larger during object (1.5 μV) than fragment (1.0 μV) perception, t(14) = −2.18, p < 0.05, whereas there was no difference between object (1.2 μV) and fragment (0.9 μV) perception over the ipsilateral cluster, t(14) = −1.27, p = 0.23. The analysis of the outside probes revealed only a significant main effect of electrode cluster, F(1, 14) = 16.9, p < 0.005, reflecting greater amplitudes over the contralateral (1.2 μV) than ipsilateral (0.9 μV) cluster. Importantly, the perceptual state × cluster interaction did not approach statistical significance (F < 1).
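The mean-amplitude measure described above can be sketched as follows: each channel's ERP is averaged over the 184- to 212-ms window, and the channel means are then averaged within a cluster. The sampling rate, epoch start, and data layout used here are hypothetical placeholders (the recording parameters are given in the Methods, not in this section), and the helper names are illustrative.

```python
def window_mean(erp_uv, srate_hz, epoch_start_ms, win_start_ms, win_end_ms):
    """Mean of one channel's samples between win_start_ms and win_end_ms (inclusive)."""
    first = round((win_start_ms - epoch_start_ms) * srate_hz / 1000)
    last = round((win_end_ms - epoch_start_ms) * srate_hz / 1000)
    segment = erp_uv[first:last + 1]
    return sum(segment) / len(segment)

def cluster_mean(erps_uv, channels, srate_hz, epoch_start_ms, win_start_ms, win_end_ms):
    """Average the per-channel window means across the channels of one cluster."""
    means = [window_mean(erps_uv[ch], srate_hz, epoch_start_ms,
                         win_start_ms, win_end_ms) for ch in channels]
    return sum(means) / len(means)

# Hypothetical right-hemisphere cluster, which would be contralateral
# to a probe presented in the left visual field.
RIGHT_CLUSTER = ["PO4", "PO8", "O2", "I4", "I6", "SI4"]

# Placeholder data: flat 1.0 microvolt ERPs, 200 samples per channel.
# The 250-Hz sampling rate and -100-ms epoch start are assumptions.
erps = {ch: [1.0] * 200 for ch in RIGHT_CLUSTER}
p2_amplitude = cluster_mean(erps, RIGHT_CLUSTER, srate_hz=250,
                            epoch_start_ms=-100, win_start_ms=184, win_end_ms=212)
```

One such value per participant, perceptual state, and cluster would then enter the 2 × 2 ANOVA.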

Figure 6.

(A) ERPs time-locked to the onset of the probes shown for the occipital cluster (see text). ERPs elicited by the corresponding “no probe” have been subtracted from the ERPs elicited by each probe. For inside probes, there was an enhanced positivity between 184 and 212 ms during object relative to fragment perception (noted by arrow), whereas there was no difference between perceptual states for outside probes. Horizontal bipolar eye channels (HEOG) and vertical monopolar eye channel (VEOG) show no difference in eye movements between perceptual states. (B) The scalp distribution between 184 and 212 ms for probe ERPs elicited during object perception, fragment perception, and the difference (object minus fragment), shown separately for inside probes (left) and outside probes (right).

The scalp topography of the ERP in the object minus fragment difference wave elicited by inside probes over the P2 time window (184–212 ms) differed from that of the ERPs elicited by inside probes during fragment perception. We will refer to this difference wave component as the P2d. Whereas the P2d had maximum amplitude over the contralateral occipital scalp, the topography elicited by inside probes during fragment perception was more bilateral (Figure 6B). To statistically evaluate this difference, the amplitudes of a single row of occipital electrodes (PO7/PO8, O1/O2, and OZ) were normalized by subtracting the minimum amplitude in each condition from the amplitude at each electrode and dividing by the difference between the maximum and minimum amplitude in each condition (McCarthy & Wood, 1985). This procedure removes any difference in the overall amplitudes between conditions. The normalized amplitudes were compared via ANOVA with the factors percept condition (object, fragment) and electrode (1–5). This analysis yielded a statistically significant interaction between condition and electrode, F(4, 56) = 5.98, p < 0.005, reflecting the more contralateral distribution of the P2 elicited by the inside probes during periods of object perception versus fragment perception.
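The min-max scaling attributed to McCarthy and Wood (1985) can be sketched as (amplitude − minimum) / (maximum − minimum), applied separately within each condition so that every topography spans the range 0 to 1. The amplitude values below are made up purely for illustration and are not data from the study.

```python
def normalize_min_max(amplitudes):
    """Rescale one condition's electrode amplitudes to the range 0..1."""
    lowest, highest = min(amplitudes), max(amplitudes)
    if highest == lowest:
        raise ValueError("amplitudes are constant; the scaling is undefined")
    return [(a - lowest) / (highest - lowest) for a in amplitudes]

# Illustrative values only: two conditions with the same shape across
# five electrodes but a twofold difference in overall amplitude.
small = [1.0, 2.0, 3.0, 2.0, 1.0]
large = [2.0, 4.0, 6.0, 4.0, 2.0]

# After scaling, the two profiles coincide, so any remaining
# condition x electrode interaction reflects a difference in shape.
same_shape = normalize_min_max(small) == normalize_min_max(large)
```

The example shows why the procedure removes overall amplitude differences: a pure gain change between conditions disappears, while a shift in where the maximum lies would survive.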

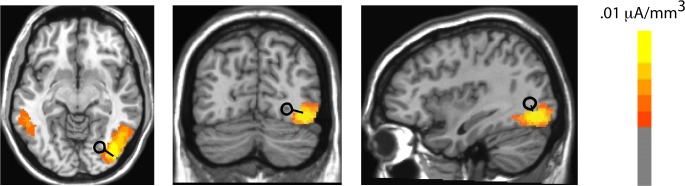

Source analyses results

A pair of mirror-symmetric dipoles was fit using BESA to the difference topography of the perceptual state effect (object minus fragment) found on the P2d elicited by the inside probes. A pair of dipoles situated in the middle fusiform gyrus, within the boundaries of Talairach coordinates previously reported for the LOC (e.g., Grill-Spector et al., 2001; Grill-Spector, Kushnir, & Edelman, 1999; Grill-Spector & Sayres, 2008; Malach et al., 1995), accounted for 90% of the variance in the scalp topography of the P2d over the time interval 184–212 ms. LAURA source estimations based on the grand-averaged P2d also revealed a strong source for this component contralateral to the location of the probe, near the location of the dipoles fit by BESA (within 11 mm). The Talairach coordinates and anatomical locations corresponding to the LAURA and BESA source estimations are displayed in Table 1 and Figure 7.

Table 1.

Talairach coordinates of BESA and LAURA source estimations for the P2 component (184–212 ms)

| Solution | x | y | z | Anatomical location |
| --- | --- | --- | --- | --- |
| BESA | 27 | −70 | 5 | Right middle occipital gyrus |
| LAURA | 38 | −70 | 9 | Right middle occipital gyrus |

Figure 7.

Grand-average LAURA source estimations (yellow and orange areas) and superimposed dipoles for the P2 object minus fragment difference (P2d) found for inside probes. Data are collapsed into ipsilateral (represented by the left hemisphere) and contralateral (represented by the right hemisphere) regions.

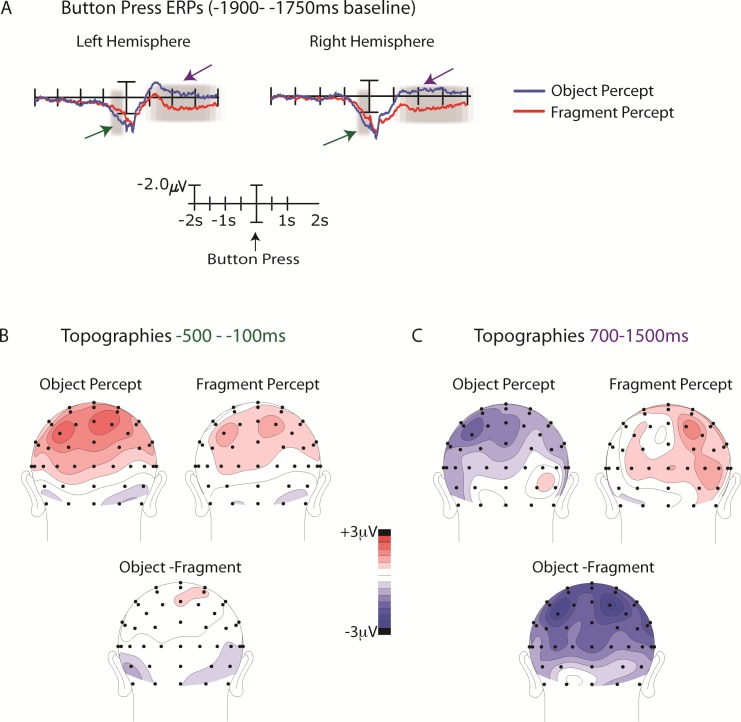

Switch-related ERP results

ERPs associated with the perceptual switch (i.e., time-locked to the response; see Methods) revealed a slow positivity starting around 500 ms prior to the response, which was greatest over central-parietal electrodes (Figures 8A and 8B). The mean amplitudes at central-parietal electrodes (C3/4, CP1/2, CP3/4, P1/2, P3/4) between 500 and 100 ms prior to the response were averaged to obtain a single amplitude value for the left and right hemispheres, respectively. A percept (object, fragment) × hemisphere (left, right) ANOVA revealed no significant effects. Importantly, there was no main effect of percept (F = 1.5), nor was there an interaction between percept and hemisphere (F < 1).

Figure 8.

(A) ERPs time-locked to the response shown for the central-parietal cluster (see text). The well-documented perceptual switching-related positivity, the LPC, onsets ∼500 ms prior to the response and peaks around the time of the response (green arrows). The LPC did not differ between perceptual switch types (object vs. fragment). Following the response, a slow negativity was seen for object relative to fragment perception (purple arrows). The highlighted areas encompass the latency windows for each of these components on which the statistical analyses were performed, showing no difference in the preresponse LPC between perceptual states, and a significantly greater negativity following the response denoting a switch to object relative to fragment perception. (B) The scalp topographies for the LPC latency window shown separately for switches to object perception, fragment perception, and the difference (object minus fragment). The LPC is greatest over central-parietal electrodes but does not differ between perceptual states. (C) The corresponding scalp topographies following the response. Following the response denoting a switch to object perception, an enhanced negativity is seen with a broad, central-parietal distribution.

In addition to the positivity seen prior to the response, the response-locked ERPs included a slow negativity beginning around 500 ms and persisting up to 1500 ms after the response. This negativity was widely distributed over bilateral central-parietal sites and was greater following switches to object than to fragment perception (Figure 8C). To statistically evaluate this difference, the mean amplitudes at central-parietal electrodes (C3/4, CP1/2, CP3/4, P1/2, P3/4) between 700 and 1500 ms following the response were averaged to obtain a single amplitude value for the left and right hemispheres, respectively. A percept (object, fragment) × hemisphere (left, right) ANOVA revealed a main effect of percept, F(1, 14) = 5.39, p < 0.05, indicating that the ERP following the perceptual switch to object was significantly more negative (−0.82 μV) than the ERP following the perceptual switch to fragment (1.11 μV).

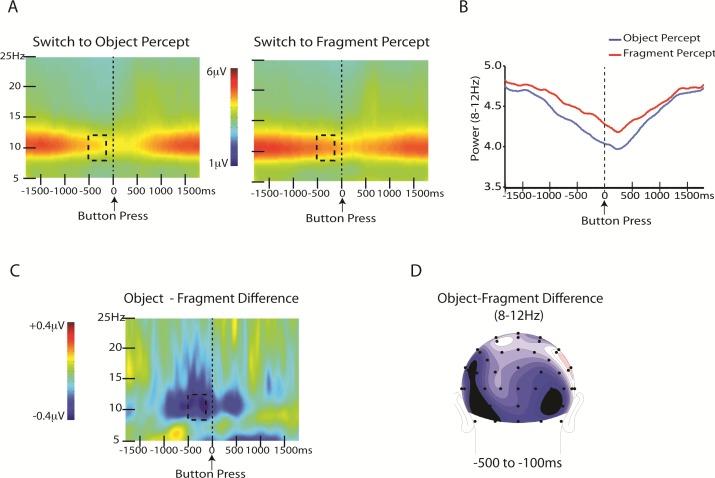

Time-frequency analysis results

To compare modulations of EEG activity associated with perceiving a coherent object versus disparate fragments, we compared spectral amplitudes in six clusters of electrodes covering the frontal, central-parietal, and occipital scalp (see Methods). For each region, the mean amplitudes were averaged across left and right hemisphere electrodes separately to obtain a single amplitude value for the left hemisphere cluster and a single value for the right hemisphere cluster. We performed a percept (object, fragment) × region (frontal, central-parietal, occipital) × hemisphere (left, right) ANOVA for the time window 500 to 100 ms preceding the button press, separately for alpha, beta, and gamma bands (see Methods). This analysis yielded significant differences between perceptual states only in the alpha band. The percept × region × hemisphere ANOVA of the alpha amplitudes revealed a significant interaction of percept × region, F(2, 28) = 9.4, p < 0.001. Planned comparisons revealed significantly lower alpha power during perceptual switches to object (3.5 μV) than to fragment (4.3 μV), t(14) = 2.7, p < 0.02 (Bonferroni corrected), over the occipital region (Figure 9), whereas there were no significant differences between perceptual states for the central-parietal or frontal regions, t(14) = 1 and t(14) < 1, respectively. The analysis of alpha band amplitudes also revealed a significant main effect of region, F(2, 28) = 17.3, p < 0.00002. Post hoc t-tests revealed that alpha amplitudes were lower over the central-parietal region (3.4 μV) than both the occipital (4.2 μV), t(14) = 6.7, p < 0.0001 (Bonferroni corrected), and frontal (4.0 μV), t(14) = 3.6, p < 0.003 (Bonferroni corrected) regions, whereas alpha amplitudes over the occipital and frontal regions did not differ from one another, t(14) = 1.6, ns. There was also a significant interaction of region × hemisphere, F(2, 28) = 4.3, p < 0.05, as alpha amplitudes over the central-parietal region were greater over the right (3.7 μV) than left (3.2 μV) hemisphere, t(14) = 3.03, p < 0.01 (Bonferroni corrected), but there was no difference between the hemispheres for either the occipital or the frontal region, t < 1 for both.

Figure 9.

Differences in alpha activity between the two perceptual states (object and fragment). (A) Time-frequency plots of EEG surrounding the perceptual switch shown separately for switches to object perception and fragment perception. Dashed boxes show the time window in which statistical analyses were performed. (B) Graph of power in the alpha band (8–12 Hz) for switches to object and fragment perception. (C) Difference between time-frequency plot of EEG surrounding the perceptual switch to object minus the perceptual switch to fragment. Dashed box shows the time window for which alpha power significantly differed between object and fragment states. (D) Scalp topography of the difference (object minus fragment) in the −100- to −500-ms time window.

For the beta band, the only significant effect in this analysis was a main effect of region, F(2, 28) = 28.4, p < 0.000001. Post hoc t-tests revealed that beta amplitudes were lower over the central-parietal region (2.3 μV) than both the occipital (3.0 μV), t(14) = 6.4, p < 0.00002 (Bonferroni corrected) and frontal (3.9 μV), t(14) = 6.8, p < 0.00001 (Bonferroni corrected) regions, and beta amplitudes over the frontal region were significantly greater than those over the occipital region, t(14) = 3.4, p < 0.005 (Bonferroni corrected). Similarly, the analysis of the gamma band amplitudes revealed only a main effect of region, F(2, 28) = 17.9, p < 0.0002. Post hoc t-tests revealed that gamma amplitudes were lower over the central-parietal region (1.6 μV) than both the occipital (2.6 μV), t(14) = 4.3, p < 0.001 (Bonferroni corrected) and frontal (3.4 μV), t(14) = 6.2, p < 0.00003 (Bonferroni corrected) regions, whereas gamma amplitudes over the occipital and frontal regions did not differ from one another, t(14) = 2.2, ns.

Discussion

Participants viewed an ambiguous diamond display and indicated via button press their perception of the bistable figure as it switched from fragments to object and vice versa. ERPs elicited by irrelevant probes occurring within the boundaries of the object showed an enhanced P2d (184–212 ms) during object perception relative to fragment perception. Comparison of the scalp topographies in this latency window suggested that the enhanced amplitude in the P2d elicited by inside probes during object perception had a different scalp topography than the P2 elicited by the same probes during fragment perception. The object-related P2d enhancement had a contralateral distribution, whereas the distribution of the P2 during fragment perception was more medial. The source of this object-related P2d effect was estimated to be in the LOC, based on its Talairach coordinates (e.g., Grill-Spector et al., 2001; Grill-Spector, Kushnir, & Edelman, 1999; Grill-Spector & Sayres, 2008; Malach et al., 1995). In contrast, ERPs elicited by the same probes outside the boundaries of the object did not differ between the two perceptual states. ERPs surrounding the perceptual switch also showed a dissociation between the perceptual states. Following the response indicating a switch to object, there was a slow negativity that was broadly distributed over the central-parietal scalp and persisted for 1500 ms following the response; this negativity was significantly reduced following the response indicating a switch to fragment. In contrast, ERPs preceding the response did not show a difference between perceptual states. However, a time-frequency analysis of the EEG surrounding the perceptual switch revealed greater alpha reduction associated with the perceptual switch from fragment to object than vice versa.

Unlike previous object-based modulations that have been found in the N1 latency range, the present probe data revealed a difference between object and fragment perception in the latency range of the P2 (184–212 ms). However, the object-related P2d observed here occurred at a similar latency to the previous object-based modulations of the N1, had a similar topography, and, like the previous modulations, localized to the LOC. Interestingly, a recent study using Rubin's bistable face/vase (Rubin, 1915/1958) also found a positive enhancement around the same latency as our P2d for probes occurring on the perceived figure (i.e., face/vase) that localized to the LOC (Pitts et al., 2011). Studies examining the perception of fragmented objects have also reported an even later negativity (Ncl) associated with perceptual closure, which has also been localized to the LOC (e.g., Sehatpour et al., 2006). Future research is needed to determine what functional or anatomical differences may exist between the previously reported object-related modulations in the latency ranges of the N1, P2d, and Ncl.

The object-based P2d enhancement in the current experiment is also consistent with behavioral studies of contour integration showing that targets are better detected within a closed boundary (Kovács & Julesz, 1993), and that regions inside a closed contour appear brighter than regions outside of the contour (Paradiso & Nakayama, 1991). In this vein, electrophysiological studies in animals have shown neurons in early visual areas to be sensitive to border ownership, showing preferences for stimulation on the side of the figure in a figure/ground display (e.g., Hung, Ramsden, & Roe, 2007; Lamme, 1995; Lamme, Zipser, & Spekreijse, 1998; Qui, Sugihara, & von der Heydt, 2007; Zhang & von der Heydt, 2010; Zipser, Lamme, & Schiller, 1996). In particular, Hung et al. (2007) demonstrated a border-to-surface shift in relative spike timing of V1 and V2 neurons in the cat visual cortex, suggesting that feedforward processing in V1 and V2 supports edge-to-surface propagation, perhaps underlying the perceptual brightness enhancement found behaviorally for areas within closed boundaries (e.g., Kovács & Julesz, 1993). Enhanced neural responses to elements on the inside of a figure relative to the outside of a figure have been shown in V1 and V2 for figures defined on the basis of orientation, color, and motion, and although these enhanced responses have been shown to be modulated by attention (e.g., Qui et al., 2007), they have been found irrespective of spatially directed attention (Marcus & Van Essen, 2002). The P2d enhancement we found for probes occurring within the bounded object may reflect a similar, figure-selective mechanism in the LOC.

Future research is needed to clarify the specific roles of cortical areas V1, V2, and LOC, and how they interact in figural enhancement. For example, Qui et al. (2007) recently found attentional modulations in V2 in response to figural elements that had a similar latency to the object-based effect found in the current study. Examining border-ownership cells in V2 of nonhuman primates, they found attentional modulations between ∼150 and 200 ms when presenting separated and overlapping figures, coincident with the latency of the P2d in the current study. An important question for future research is whether activity in LOC modulates figure-selective neurons in V1 and V2. Previous work has shown figure-related responses in V1 to be suppressed in anesthetized monkeys (Lamme et al., 1998), whereas the classical receptive field tuning properties were unaffected. This suggests that figural enhancement in V1 is influenced by top-down feedback from higher-level areas, perhaps including LOC. Consistent with this idea, the object-based enhancement in the current study was found irrespective of physical stimulus differences between the “object” and “fragment” percepts, suggesting that feedforward processing alone cannot account for the finding that elements within a perceived object are enhanced relative to the same elements appearing outside the boundaries of the object.

The object-based P2d effect in the current experiment was found over the contralateral hemisphere and localized to the contralateral LOC. This is consistent with studies demonstrating spatial selectivity in the LOC (Aggelopoulos & Rolls, 2005; DiCarlo & Maunsell, 2003; Larsson & Heeger, 2006; MacEvoy & Epstein, 2011; Martínez et al., 2006; Martínez, Ramanathan, et al., 2007; Martínez, Teder-Sälejärvi, & Hillyard, 2007; McKyton & Zohary, 2007; Niemeier, Goltz, Kuchinad, Tweed, & Vilis, 2005; Strother et al., 2011; Yoshor, Bosking, Ghose, & Maunsell, 2007), and points to the close interaction between the “space” and “object” systems in the brain (e.g., Faillenot, Decety, & Jeannerod, 1999; Kravitz & Behrmann, 2011; Merigan & Maunsell, 1993). The fact that the object-based P2d enhancement localized to the LOC adds to the growing body of literature that implicates this critical region in object perception, both in functional magnetic resonance imaging (fMRI; e.g., Grill-Spector et al., 1999, 2001; Grill-Spector & Sayres, 2008; Malach et al., 1995) and EEG/ERP (e.g., Martínez et al., 2007) experiments. Indeed, recent fMRI experiments using the ambiguous diamond display found that activity in the LOC increased and activity in V1 decreased during object perception versus fragment perception (Fang et al., 2008; Murray & Kersten, 2002). The results from the current experiment support and extend those findings, suggesting that increased activity in the LOC during object perception also results in additional processing of visual elements associated with the object (i.e., occurring within its boundaries). This may reflect a figural enhancement process that integrates the new elements with the existing object percept, as has been demonstrated for figure-selective neurons in early visual areas V1 and V2 (e.g., Hung et al., 2007; Lamme, 1995; Lamme et al., 1998; Qui et al., 2007; Zhang & von der Heydt, 2010; Zipser et al., 1996). 
In a more recent fMRI study, Caclin and colleagues (2012) manipulated contrast, motion, and shape separately in the ambiguous diamond display and compared bistable and unambiguous (i.e., externally changing) percepts of the object versus fragments. For both bistable and unambiguous displays, they found increased activity in ventral and occipital regions during perception of the bound object, and greater activity in motion-related dorsal areas during fragment perception. Further, they found that the different feature manipulations preferentially modulated regions sensitive to those features, with greater activity in dorsal areas (i.e., hMT+) than in ventral and occipital areas for the motion display and vice versa for the shape and contrast displays. In the present study we attempted to remove stimulus-related activity from that related to the probe by subtracting activity in the no-probe trials from activity in the probe trials. Specifically, we used this subtraction method to remove motion-related activity in order to focus on perceptual processing of the probes as a function of perceptual state (see Supplementary Figure 2 for data without this subtraction method). As such, we did not design our study to examine motion processing or form-motion binding per se. An interesting question for future research is whether the integration of different features (e.g., motion, color) differentially modulates the P2d.

That the ERPs following the response also showed a dissociation between perceptual states is further evidence that perceiving a coherent object engages different neural mechanisms than perceiving disparate fragments, even without any changes in the physical stimulus. The object-related broad negativity following the response had a central-parietal topography and persisted for at least 1500 ms after the response; it is therefore unlikely to reflect early perceptual processes. Instead, it is possible that this negativity reflects sustained attention to the position of the diamond object, given abundant research associating parietal areas with sustained attention and attentional control (e.g., Häger et al., 1998; Johannsen et al., 1997; Mennemeier et al., 1994; Sarter, Givens, & Bruno, 2001; Singh-Curry & Husain, 2009; Wolpert, Goodbody, & Husain, 1998). Additional research is needed to determine the neural process reflected in this negativity.

The analysis of mean alpha amplitudes surrounding the perceptual switch revealed significantly lower alpha power associated with the perceptual switch to object perception than to fragment perception. This object-related effect was limited to the occipital region, suggesting that it was related to perceptual processing and not to an overall decrease in vigilance (İşoğlu-Alkaç & Strüber, 2006; Klimesch, 1999). Importantly, a significant difference in alpha power during object relative to fragment perception was found in the interval 500–100 ms preceding the response, suggesting that the alpha reduction preceding the button press was associated with the perceptual switch itself. A previous study comparing response times to endogenously versus exogenously induced perceptual switches of the Necker cube found that response times varied across participants between 530 and 733 ms (Kornmeier & Bach, 2004), suggesting that the alpha reduction we found may not have preceded the switch but rather resulted from it.

Since we relied on participants' report of their perceptual switches, we cannot be certain of the exact point in time the switches in fact occurred. However, examination of the switch-related ERPs suggests that the divergence in alpha power between switches to object versus fragment occurred after the perceptual switch, because the difference in alpha power coincided with the onset of the “late positive component” (LPC), a switch-related ERP that is considered to reflect postperceptual processes and is likely to be equivalent to the P300 (Başar-Eroğlu et al., 1993; Britz, Landis, & Michel, 2009; İşoğlu-Alkaç & Başar-Eroğlu, 1998; Kornmeier, 2004; Kornmeier & Bach, 2004, 2005, 2006, 2012; Kornmeier, Ehm, Bigalke, & Bach, 2007; O'Donnell, Hendler, & Squires, 1988; Pitts, Nerger, & Davis, 2007; Pitts et al., 2008; Strüber, Basar-Eroglu, Hoff, & Stadler, 2000). The LPC has been reported both in studies using intermittent presentation and in studies using sustained presentation (i.e., in which the bistable image is continuously present and participants indicate their perceptual state as it switches; Başar-Eroğlu et al., 1993), as we did in the current experiment. Consistent with studies using sustained presentation, the LPC in the current experiment began ∼500 ms prior to the response denoting the perceptual switch and peaked around the time of the response. Previous reports of the LPC using the intermittent presentation method have found its onset to be ∼300 ms after stimulus onset (and the presumed perceptual switch) and its peak at ∼450 ms. The LPC (P300) has been considered to reflect a postperceptual, “context updating” process in visual short-term memory (Donchin & Coles, 1988). If we assume that the LPC reflects a postperceptual process, this is further evidence that the perceptual switch in the current experiment occurred more than 500 ms before the response (i.e., prior to the onset of the LPC).

Along similar lines, recent studies have reported decreased alpha power around the time of perceptual switch for other bistable images (Ehm et al., 2011; İşoğlu-Alkaç & Başar-Eroğlu, 1998; İşoğlu-Alkaç & Strüber, 2006; Strüber & Hermann, 2002). Those studies also found decreases in alpha power to coincide with the LPC, suggesting that the decrease in alpha was linked to the conscious recognition of the perceptual change. As in previous studies of bistable perception using a continuous presentation of the bistable display and relying on participants' report of their perceptual state, we did not use a baseline correction for the spectral amplitudes because there was no obvious time interval to use as a baseline (e.g., İşoğlu-Alkaç & Başar-Eroğlu, 1998; İşoğlu-Alkaç & Strüber, 2006). Given the wide variation in perceptual switch times within the experiment (Figure 2), as well as the variability of switch rates across participants, arbitrarily selecting a baseline interval in the spectral analysis would result in a loss of statistical power to detect differences between perceptual states. Had we selected a baseline interval well before the button press denoting the switch, the baseline interval might have reflected activity prior to the previous perceptual switch in some trials (i.e., in trials with shorter switch-rates). If, on the other hand, the baseline interval we chose was too close to the button press denoting the switch, it might have reflected activity related to the switch itself. Confirming this assumption, we applied an ad hoc baseline correction −1500 to −1200 ms before the button press denoting the switch and found a similar difference in alpha power prior to the button press denoting the switch, though the difference was less robust and did not reach statistical significance (see Supplementary Figure 3). It is of note that these problems with applying an ad hoc baseline are also relevant to the response-locked ERPs. 
In the case of the ERPs, a baseline must be applied to be able to compare voltage changes across conditions. There may be less noise in the ERP baseline because the temporal resolution is better in the ERPs relative to the time-frequency analysis, in which there is a tradeoff between temporal and frequency resolution, with temporal resolution worse at lower frequencies (Herrmann, Grigutsch, & Busch, 2005). However, since response times and switch rates vary across participants and even across trials within participants (Strüber et al., 2000; Strüber & Hermann, 2002), applying an ad hoc baseline to the ERPs introduces noise and hence the ERP results time locked to the response must be interpreted with caution. Nonetheless, without a baseline correction, comparisons between activity before and after the perceptual switch may depend on the duration of the previous perceptual episode or on the level of activity just before the time of the switch. The similarity of probe-to-switch times between object and fragment switches argues against this interpretation, but it remains a possibility. Previous studies have attempted to alleviate this baseline issue by using an intermittent presentation and time locking responses to the onset of the stimulus (e.g., Ehm et al., 2011; Kornmeier et al., 2007; Pitts et al., 2009), but this may be less feasible with the current bistable stimulus because it is induced through motion.
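For concreteness, an ad hoc baseline correction of the kind discussed above might look like the following sketch, in which the mean amplitude in a pre-response window (here 1500 to 1200 ms before the button press) is subtracted from the whole response-locked time course. A subtractive correction is assumed for illustration; the text does not state whether the correction was subtractive or divisive, and the function name and the sample values are hypothetical.

```python
def baseline_correct(amplitudes, times_ms, base_start_ms, base_end_ms):
    """Subtract the mean amplitude of the baseline window from every sample."""
    baseline = [a for a, t in zip(amplitudes, times_ms)
                if base_start_ms <= t <= base_end_ms]
    if not baseline:
        raise ValueError("no samples fall inside the baseline window")
    baseline_mean = sum(baseline) / len(baseline)
    return [a - baseline_mean for a in amplitudes]

# Made-up alpha amplitude time course, one sample every 100 ms,
# time-locked to the button press at 0 ms.
times = list(range(-1500, 1, 100))
alpha = [4.0] * 4 + [3.0] * 12  # illustrative values only

corrected = baseline_correct(alpha, times, -1500, -1200)
```

The sketch also makes the limitation visible: if the baseline window overlaps the previous perceptual episode on some trials, the subtracted mean mixes activity from two different perceptual states.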

In a magnetoencephalography (MEG) study, Strüber and Hermann (2002) compared endogenous switches in an ambiguous motion display with exogenous switches, in which they changed the physical characteristics of the image to induce a perceptual switch from one motion condition to the other. For the endogenous condition, they found a constant decrease in alpha power over a 1-s interval preceding the button press denoting the perceptual switch. In contrast, the exogenous condition exhibited a sharp decline starting ∼300 ms preceding the button press. The authors interpreted this result as evidence for a bottom-up explanation for perceptual switches (e.g., Hochberg, 1950; Kruse, Stadler, & Wehner, 1986; Long, Toppino, & Mondin, 1992; Toppino & Long, 1987), suggesting that they arise from neural satiation, in which the current percept gradually decays until a threshold that initiates the switch is reached. We also found a gradual decline in alpha power surrounding the perceptual switch to both object and fragment (see Figure 8B). However, the decline in alpha power diverged between the two perceptual states ∼500 ms preceding the response. While similar bottom-up factors may have caused the perceptual switch in both cases, the difference in alpha power between switches to object and switches to fragment likely reflects a post-switch difference in alpha reduction.

The greater alpha reduction associated with switches to object relative to fragment perception is consistent with evidence that the subjective perception of an object guides attention. Reductions in alpha amplitude in response to visual stimuli (event-related desynchronization [ERD]) have been proposed to reflect neuronal excitability and disinhibition (Klimesch, Sauseng, & Hanslmayr, 2007; Palva & Palva, 2011) and have been used in many studies as a direct index of attention (e.g., Banerjee, Snyder, Molholm, & Foxe, 2011; Bastiaansen, Böcker, Brunia, De Munck, & Spekreijse, 2001; Flevaris, Bentin, & Robertson, 2011; Foxe & Snyder, 2011; Thut, Nietzel, Brandt, & Pascual-Leone, 2006; Volberg, Kliegl, Hanslmayr, & Greenlee, 2009; Ward, 2003; Worden, Foxe, Wang, & Simpson, 2000). Given previous studies associating alpha ERD with attention (e.g., Thut et al., 2006), the present result suggests that the perception of the object attracted attention, even in the absence of any bottom-up stimulus changes or differences in task relevancy. Though the task itself (tracking changes in perception) engaged sustained attention, the greater reduction in alpha associated with switches to object perception than to fragment perception was limited to the time surrounding the switch itself, suggesting an additional, transient attentional process that was elicited by the perception of the object.

Abundant evidence has demonstrated a relationship between attentional mechanisms and perceptual organization (e.g., Scholl, 2001); perceptual organization not only constrains attention (Behrmann, Zemel, & Mozer, 1998; Davis & Driver, 1997; Driver et al., 2001; Driver, Baylis, & Parasuraman, 1998; Duncan, 1984; Moore et al., 1998; Watson & Kramer, 1999), but attentional selection can also influence perceptual organization (e.g., Vecera, Flevaris, & Filapek, 2004). That the perceptual switch to object was associated with alpha ERD is consistent with behavioral studies showing that the onset of a new visual object captures attention (Yantis, 1993; Yantis & Jonides, 1996). However, other studies have suggested that luminance and motion transients capture attention irrespective of whether or not they are associated with an object (Franconeri, Hollingworth, & Simons, 2005; Franconeri & Simons, 2003). The results from the present experiment bear on this issue, suggesting that the subjective experience of perceiving a new object can in fact capture attention without any luminance or motion changes. This is also consistent with recent behavioral evidence that the organization of task-irrelevant visual elements into an object automatically attracts attention (Kimchi, Yeshurun, & Cohen-Savransky, 2007; Yeshurun et al., 2009). Importantly, unlike in these previous studies, the perceptual object in this case was not derived from bottom-up stimulus information but emerged as a function of the perceptual state of the viewer.

A further possibility is that the reduction in alpha surrounding the perceptual switch might reflect one or more facets of perceptual integration required for the perception of the object, namely, contour integration, coherent motion integration, and form-motion integration, since the object in this case was derived through motion (Aissani, Cottereau, Dumas, Paradis, & Lorenceau, 2011; Benmussa, Aissani, Paradis, & Lorenceau, 2011; Caclin et al., 2012; Lorenceau & Alais, 2001). Though figural enhancement effects have been found in early visual areas for objects defined on the basis of numerous cues including orientation, motion, and color (Lamme, 1995; Lamme et al., 1998; Qui et al., 2007; Zhang & von der Heydt, 2010; Zipser et al., 1996), feature-specific neural regions are also recruited to process objects defined on the basis of multiple features (e.g., Caclin et al., 2012). The difference in alpha power between object and fragment conditions in the current study was widely distributed across occipital sites (Figure 9D), perhaps indicative of coordinated oscillatory activity in multiple neural regions recruited to perceive the coherent object, including motion-related (hMT+) and object-related (LOC) areas (e.g., Caclin et al., 2012). Indeed, previous research associating decreases in alpha power with enhanced attention has also demonstrated that attention-related effects across tasks in different modalities have distinct scalp topographies, suggesting that attention-related decreases in alpha are restricted to the task-relevant neural areas (e.g., Banerjee et al., 2011; Kelly, Lalor, Reilly, & Foxe, 2006; Worden et al., 2000).

It is noteworthy that we did not find oscillatory differences in the gamma range between object and fragment perceptual states. This is in contrast to numerous studies that have associated gamma oscillations with perceptual integration and object perception (e.g., Bertrand & Tallon-Baudry, 2000; Debener et al., 2003; Gruber & Müller, 2005; Keil et al., 1999; Rodriguez et al., 1999; Tallon-Baudry et al., 2005; Zion-Golumbic & Bentin, 2007). However, recent MEG studies of oscillatory activity associated with real and illusory contour perception (Kinsey & Anderson, 2009), object versus nonobject patterns (Maratos, Anderson, Hillebrand, Singh, & Barnes, 2007), and ambiguous motion perception (Strüber & Hermann, 2002) did not find differences in the gamma range either. Rather, a decrease in the 10- to 30-Hz range in those studies was associated with contour and object perception. Future research is needed to tease out the differences between studies of coherent object perception that do and do not yield effects in the gamma range. One possibility is that differences in task conditions such as task relevancy may underlie the discrepancies (Kinsey & Anderson, 2009). The results from the current experiment suggest that the subjective perception of an integrated object does not necessarily result in oscillatory activity in the gamma range.

Conclusions

In sum, the results from this experiment provide electrophysiological evidence that the subjective perception of a coherent object engages attentional mechanisms and triggers additional processing of elements occurring within a perceived object's boundaries. In particular, the object-related P2d elicited by probes inside the perceived object's boundaries provides evidence that neural processing of elements in the visual field is mediated by whether they are contained within the boundaries of an attended object or not (e.g., Kasai & Takeya, 2012; Martínez et al., 2006; Martínez, Ramanathan, et al., 2007; Martínez, Teder-Sälejärvi, & Hillyard, 2007). The present data extend previous observations by showing that perceptual objects that guide attention need not be defined by bottom-up Gestalt cues. Coherent object percepts can also be determined by the perceptual state of the viewer. That the scalp distribution differed between the object-related P2d enhancement and the P2 elicited during fragment perception suggests that elements associated with an object are processed differently from independent elements in the visual field and do not merely generate an enhancement of the same perceptual process. Instead, these data suggest that elements that fall within an object's boundaries trigger additional processing in area LOC, perhaps reflecting the integration of the new element with the existing object percept.

Acknowledgments

This work was supported by an NIMH grant (1P50MH86385), an NSF grant (BCS-1029084), and an NIMH institutional training grant (T32MH020002-13).

Commercial relationships: none.

Corresponding author: Anastasia V. Flevaris.

Email: ani.flevaris@gmail.com.

Address: Department of Psychology, University of Washington, Seattle, Washington.

Footnotes

The data also fit well with a lognormal distribution (p > 0.75 and p > 0.90 for the object and fragment percepts, respectively, χ² test). We chose to fit the data to a gamma distribution due to the convention in studies of binocular rivalry and bistable perception. The important point is that both perceptual states had similar time courses and both had a similar gamma or lognormal distribution, as shown previously in studies using the ambiguous diamond display (e.g., Fang et al., 2008).

Contributor Information

Anastasia V. Flevaris, Email: ani.flevaris@gmail.com.

Antigona Martínez, Email: antigona@ucsd.edu.

Steven A. Hillyard, Email: shillyard@ucsd.edu.

References

- Aggelopoulos N. C., Rolls E. T. (2005). Scene perception: Inferior temporal cortex neurons encode the positions of different objects in the scene. The European Journal of Neuroscience, 22(11), 2903–2916.

- Aissani C., Cottereau B., Dumas G., Paradis A.-L., Lorenceau J. (2011). Magnetoencephalographic signatures of visual form and motion binding. Brain Research, 1408, 27–40.

- Appelbaum L. G., Ales J. M., Cottereau B., Norcia A. M. (2010). Configural specificity of the lateral occipital cortex. Neuropsychologia, 48(11), 3323–3328.

- Banerjee S., Snyder A. C., Molholm S., Foxe J. J. (2011). Oscillatory alpha-band mechanisms and the deployment of spatial attention to anticipated auditory and visual target locations: Supramodal or sensory-specific control mechanisms? The Journal of Neuroscience, 31(27), 9923–9932.

- Bar M. (2004). Visual objects in context. Nature Reviews Neuroscience, 5(8), 617–629.

- Başar-Eroğlu C., Strüber D., Stadler M., Kruse P., Başar E. (1993). Multistable visual perception induces a slow positive EEG wave. The International Journal of Neuroscience, 73(1-2), 139–151.

- Bastiaansen M. C., Böcker K. B., Brunia C. H., De Munck J. C., Spekreijse H. (2001). Event-related desynchronization during anticipatory attention for an upcoming stimulus: A comparative EEG/MEG study. Clinical Neurophysiology, 112(2), 393–403.

- Behrmann M., Zemel R. S., Mozer M. C. (1998). Object-based attention and occlusion: Evidence from normal participants and a computational model. Journal of Experimental Psychology: Human Perception and Performance, 24(4), 1011–1036.

- Ben-Av M. B., Sagi D., Braun J. (1992). Visual attention and perceptual grouping. Perception & Psychophysics, 52(3), 277–294.

- Benmussa F., Aissani C., Paradis A.-L., Lorenceau J. (2011). Coupled dynamics of bistable distant motion displays. Journal of Vision, 11(8):14, 1–19, http://www.journalofvision.org/content/11/8/14, doi:10.1167/11.8.14.

- Bertrand O., Tallon-Baudry C. (2000). Oscillatory gamma activity in humans: A possible role for object representation. International Journal of Psychophysiology, 38(3), 211–223.

- Blair M. R., Watson M. R., Walshe R. C., Maj F. (2009). Extremely selective attention: Eye-tracking studies of the dynamic allocation of attention to stimulus features in categorization. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35(5), 1196–1206.

- Borsellino A., Marco A., Allazetta A., Rinesi S., Bartolini B. (1972). Reversal time distribution in the perception of visual ambiguous stimuli. Biological Cybernetics, 10(3), 139–144.

- Britz J., Landis T., Michel C. M. (2009). Right parietal brain activity precedes perceptual alternation of bistable stimuli. Cerebral Cortex, 19(1), 55–65.

- Caclin A., Paradis A.-L., Lamirel C., Thirion B., Artiges E., Poline J.-B., et al. (2012). Perceptual alternations between unbound moving contours and bound shape motion engage a ventral/dorsal interplay. Journal of Vision, 12(7):11, 1–24, http://www.journalofvision.org/content/12/7/11, doi:10.1167/12.7.11.

- Cichy R., Chen Y., Haynes J. (2011). Encoding the identity and location of objects in human LOC. Neuroimage, 54(3), 2297–2307.

- Cox R. (1996). AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research, 29(3), 162–173.

- Davis G., Driver J. (1997). Spreading of visual attention to modally versus amodally completed regions. Psychological Science, 8(4), 275–281.

- Debener S., Herrmann C. S., Kranczioch C., Gembris D., Engel A. K. (2003). Top-down attentional processing enhances auditory evoked gamma band activity. Neuroreport, 14(5), 683–686.