Abstract

Background

Prescribing errors are common. It has been suggested that the severity as well as the frequency of errors should be assessed when measuring prescribing error rates. This would provide more clinically relevant information, and allow more complete evaluation of the effectiveness of interventions designed to reduce errors.

Objective

The objective of this systematic review was to describe the tools used to assess prescribing error severity in studies reporting hospital prescribing error rates.

Data Sources

The following databases were searched: MEDLINE, EMBASE, International Pharmaceutical Abstracts, and CINAHL (January 1985–January 2013).

Study Selection

We included studies that reported the detection and rate of prescribing errors in prescriptions for adult and/or pediatric hospital inpatients, or elaborated on the properties of severity assessment tools used by these studies. Studies not published in English, or that evaluated errors for only one disease or drug class, one route of administration, or one type of prescribing error, were excluded, as were letters and conference abstracts. One reviewer screened all abstracts and reviewed the full texts of potentially relevant articles. A second reviewer independently assessed a random 10 % sample of abstracts and full-text articles to check the reliability of the screening process.

Appraisal

Tools were appraised for country and method of development, whether the tool assessed actual or potential harm, levels of severity assessed, and results of any validity and reliability studies.

Results

Fifty-seven percent of 107 studies measuring prescribing error rates included an assessment of severity. Forty tools were identified that assessed severity, only two of which had acceptable reliability and validity. In general, little information was given on the method of development or ease of use of the tools, although one tool required four reviewers and was thus potentially time consuming.

Limitations

The review was limited to studies written in English. One of the review authors was also the author of one of the tools, giving a potential source of bias.

Conclusion

A wide range of severity assessment tools are used in the literature. Developing a basis of comparison between tools would potentially be helpful in comparing findings across studies. There is a potential need to establish a less time-consuming method of measuring severity of prescribing error, with acceptable international reliability and validity.

Electronic supplementary material

The online version of this article (doi:10.1007/s40264-013-0092-0) contains supplementary material, which is available to authorized users.

Background

Prescribing errors are common in hospital inpatients. While errors are under-reported in clinical practice, research studies using methods other than spontaneous reporting have found much higher rates. In a recent systematic review of the prevalence, incidence, and nature of prescribing errors in hospital inpatients (including a wider range of methods for identifying errors), the median error rates in 65 eligible studies were 7 % of medication orders, 52 errors per 100 admissions, and 24 errors per 1,000 patient days [1]. Even errors that do not result in harm create additional work and can adversely affect patients’ confidence in their care.

Tools for measuring errors are needed to evaluate the effectiveness of interventions designed to reduce them. Medication error rates are often used to compare drug distribution systems [2–4] and to assess the effects of interventions. However, medication errors range from those with very serious consequences to those that have little or no impact on the patient. It has thus been suggested that the severity as well as the prevalence of errors should be taken into account [5, 6]. Assessing the severity of detected errors makes study findings more clinically relevant than reporting prevalence alone. In their systematic review of the prevalence, incidence, and nature of prescribing errors in hospital inpatients, Lewis et al. [1] noted that methods of classifying severity were disparate, but did not discuss the tools identified.

In this study, our objective was to describe and evaluate tools used to assess prescribing error severity in studies reporting prescribing error rates in the hospital setting.

Methods

Search Strategy

We carried out a systematic review to identify the tools that have been used to assess prescribing error severity in hospitals, and to investigate the validity and reliability of those tools. Lewis et al. [1] recently carried out a comprehensive review of studies of the prevalence of prescribing errors in hospitals up to the end of 2007. We used the results of their search but excluded conference abstracts and letters [7], as well as studies that did not assess error severity. SG then re-ran Lewis et al.’s search strategy to identify additional papers published between 2008 and January 2013 (inclusive). The following databases were searched: MEDLINE, EMBASE, International Pharmaceutical Abstracts, and CINAHL, using the keywords (error OR medication error OR near miss OR preventable adverse event) AND (prescription OR prescribe) AND (rate OR incidence OR prevalence OR epidemiology) AND (inpatient OR hospital OR hospitalization); see the Appendix for a full example. SG searched the reference lists of any relevant reviews identified and obtained the full text of any original studies that potentially met our inclusion criteria. Finally, SG hand-searched the reference lists of all included articles and searched our research team’s local database of medication error studies to identify any further studies informing the development, reliability, or validity of the severity assessment tools identified.

Inclusion and Exclusion Criteria

Inclusion Criteria

Peer-reviewed studies that reported on the detection and rate of prescribing errors in prescriptions for adult and/or pediatric hospital inpatients, or elaborated on the properties of severity tools used by these studies were included. All study designs and lengths of follow-up were included. If the same study was reported in multiple papers, all papers were included.

Exclusion Criteria

The exclusion criteria were based on those of Lewis et al. [1], with conference abstracts, letters, and studies not measuring severity also excluded [7]:

Studies not published in English.

Letters.

Conference abstracts.

Studies neither reporting the incidence of prescribing error separately from all types of medication error nor reporting the reliability and validity of tools that have been used to assess severity in these studies.

Studies of errors for only one disease or drug class or for one route of administration or one type of prescribing error.

Studies carried out in primary care or hospital outpatients.

Screening and Data Extraction for Electronic Search

All database search results were combined into a Reference Manager® 11 database. Duplicates were identified by an electronic search in Reference Manager® 11 followed by a manual search, and all duplicate papers were removed. SG then screened each title and abstract to decide whether the full paper should be retrieved or whether it clearly did not meet the inclusion criteria. BDF independently screened a random 10 % sample of abstracts to check the reliability of the screening (agreement level 91 %). All discrepancies were resolved through discussion. SG then reviewed all retrieved full papers to determine whether each met the inclusion criteria, and BDF independently reviewed a random 10 % sample of full papers to check reliability (agreement level 100 %).

SG then extracted data from the included articles regarding the tools used to assess prescribing error severity. A second researcher (BDF) checked the tables but did not carry out a duplicate data extraction. The following data were extracted directly into electronic tables: country and method of development, whether the tool assessed actual or potential harm, levels of severity assessed, and results of any validity and reliability studies. We extracted any data referring to the reliability and validity of the instruments rather than focusing on any particular type of reliability or validity. Authors were not contacted for further information. The extracted data were not amenable to meta-analysis, so a descriptive analysis was conducted. We did not formally assess the risk of bias.

Results and Discussion

Overview

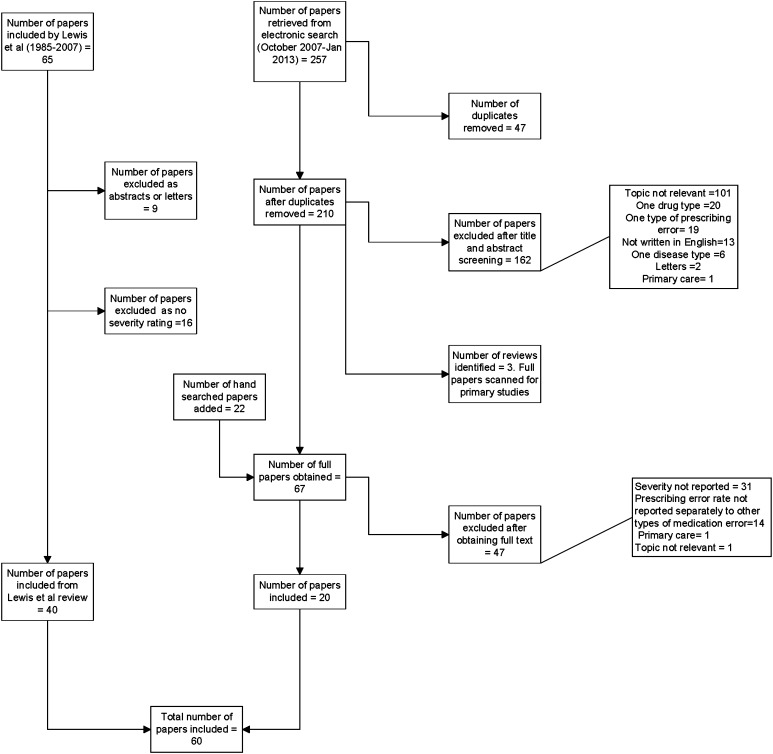

Forty (62 %) of the 65 papers originally identified by Lewis et al. [1] met our criteria. Our additional electronic search yielded 210 abstracts for screening, from which 67 full-text articles were obtained. Twenty of these papers met our inclusion criteria; the remainder were letters or conference abstracts, or did not assess severity (Fig. 1). Combined with the papers from Lewis et al. [1], a total of 60 papers were included [8–67], and 40 tools (including adaptations of other tools) were identified. Forty studies (67 %) used original or adapted versions of four tools [11, 16, 68, 69], but there were also 18 tools designed for individual studies. Notably, 46 (44 %) of 104 studies measuring the prevalence of prescribing errors in secondary care did not include any assessment of severity. The included tools and their properties are shown in Table 1 of the Electronic Supplementary Material (ESM).

Fig. 1. Flow chart of papers identified, screened, and evaluated

Methods of measuring severity were diverse, although most tools had some features in common. All tools were single-item classification systems for error severity with associated definitions. Most were ordinal Likert-type scales, but one tool was based on a visual analog scale [11]. Seven tools [24, 27, 48, 50–52, 68] combined a severity assessment scale with another type of assessment. For example, the NCC MERP (National Coordinating Council for Medication Error Reporting and Prevention) index [68] includes the category ‘not an error’, so the tool combines severity assessment with recording whether or not an error occurred. One study measured the predicted patient outcome of clinical pharmacists’ recommendations made in response to identified prescribing errors, rather than the clinical significance of the errors themselves [9].

Tool Development

Little information was given on the development of most tools (ESM Table 1). No information at all was given for 19 (47.5 %) [9–12, 16–23, 34–39, 41–47, 57–60, 63, 64, 66, 67] of the 40 tools. For the other tools, information was usually limited to statements that the tool was based on a previous one, the development of which was not described or referenced. However, the authors of two tools described the rationale or methodology by which they adapted these previous tools. Forrey et al. [48] collapsed the NCC MERP index from nine to six categories because the original distinctions were considered ambiguous or too similar. In a separate study [31], an expert panel survey was used to adapt Folli et al.’s tool [16]. Again, in neither case was the development of the original tool described or referenced.

While many tools were developed for medication errors in general, others were developed for studies of prescribing error specifically. Tools were developed in a range of countries (15 UK, 10 USA, 14 other, 1 not stated). Tools developed for use in one country may not be transferable to other countries, due to differences in healthcare systems.

Potential Versus Actual Harm

Thirty (75 %) tools were based on potential rather than actual harm. Interestingly, the NCC MERP index [68] was developed to assess actual harm but was subsequently used or adapted to assess potential harm in six studies [48, 50, 51, 54–56]. Tools based on actual patient outcomes have practical limitations. In prospective studies, a researcher who becomes aware of an error as it occurs may be ethically obliged to intervene, so any resulting harm cannot be observed; in retrospective studies, the delay between the occurrence and identification of errors may make clinical effects difficult to identify [11]. The main benefit of using potential outcomes is that severity can be judged even in the absence of actual patient harm; however, assessing potential outcomes is likely to be more subjective.

Severity Levels

Tools varied in the number and range of severity levels assessed, from two levels to a continuous scale. Most tools included levels ranging from potentially or actually lethal errors to minor/mild errors or no harm. However, some tools had ‘severe’ or ‘harmful’ as the highest level of severity, with no separate category for life-threatening errors. In addition, the lowest harm rating in Folli et al.’s tool [16] was ‘significant’. Some authors [24–27] expanded Folli et al.’s severity categories [16] to include minor errors. Adding a category could complicate the assessment for reviewers, but it allows a wider range of responses and therefore potentially increases the sensitivity of the method.

Reliability

A measure of reliability was established for 17 (43 %) tools (ESM Table 2) [9, 11, 15, 16, 24, 28, 31, 33, 34, 37–39, 48, 50, 63, 67, 68]. In all cases this was inter-rater reliability, which is particularly important where potential harm is assessed, because such judgments are more subjective. The Folli et al. [16] scale appeared to have higher inter-rater reliability when used to assess actual harm (κ = 0.67–0.89 [16]) than potential harm (κ = 0.32–0.37 [17]). However, this finding should be interpreted with caution, as these were two separate studies using different assessors. High inter-rater reliability (κ > 0.7) was found for five tools (ESM Table 2): Folli et al.’s tool as adapted by Abdel-Qader et al. [24], Folli et al.’s tool as adapted by Lesar et al. [28] in 1990, Kozer et al.’s 2002 tool [39], the NCC MERP index as adapted by Forrey et al. [48], and Wang et al.’s tool [67]. Notably, the NCC MERP index was more reliable when collapsed into six levels of severity than when all nine levels were used in the same studies [48, 50].

Dean and Barber [11] used generalizability theory to establish the reliability of their tool. They found that four reviewers were required to achieve an acceptable generalizability coefficient (>0.8), with the mean of their scores used as the index of severity. Subsequent studies using Dean and Barber’s tool [11] have used five reviewers, based on findings reported in a conference abstract that did not meet this review’s inclusion criteria. There was no information regarding reliability for 23 (57 %) tools [11, 25–27, 35, 40–42, 51–62, 64–66], and in seven cases (18 %) descriptive information was given but no statistical information was presented [9, 15, 31, 33, 34, 37, 63].
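The κ values reported for these tools are chance-corrected agreement statistics. As a rough illustration of how such a value is obtained, the Python sketch below computes unweighted Cohen’s κ for two raters who each assign one severity category to the same set of errors. The function name `cohens_kappa`, the three-level category labels, and the ratings are hypothetical and not taken from any included study; the κ variant used by each study is not stated here.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters' category labels on the same items."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)

    # Observed agreement: share of items both raters placed in the same category.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: for each category, multiply the two raters' marginal
    # proportions, then sum over categories.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if p_expected == 1:
        # Both raters used one identical category throughout: kappa is undefined.
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical severity ratings of ten prescribing errors by two reviewers.
reviewer_1 = ["minor", "minor", "moderate", "severe", "minor",
              "moderate", "moderate", "severe", "minor", "minor"]
reviewer_2 = ["minor", "moderate", "moderate", "severe", "minor",
              "moderate", "minor", "severe", "minor", "minor"]

print(round(cohens_kappa(reviewer_1, reviewer_2), 2))  # 0.68
```

With these hypothetical ratings, observed agreement is 0.80 and chance agreement is 0.38, giving κ = (0.80 − 0.38)/(1 − 0.38) ≈ 0.68. For ordinal severity scales, a weighted κ, which penalises large disagreements (e.g. ‘minor’ versus ‘severe’) more than adjacent ones, is often preferred; the unweighted form is shown only because it is the simplest.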

Validity

Validity was reported for only five (12.5 %) tools [11, 48, 61] (ESM Table 2). All explored construct or criterion validity by comparing raters’ judgments of potential harm with actual harm in situations where the outcome was known. Dean and Barber [11] found a clear relationship between potential harm as assessed using their scales and actual harm. Forrey et al. [48] found that, when potential harm assessments were compared with actual harm, the original NCC MERP index [68] had 74 % alignment and their adapted version had 81.0–83.9 % alignment. Ridley et al. [61] reported no relationship between potential harm and apparent actual harm.

Acceptability

Very little information was given on acceptability or ease of use of the tools. However, Dean and Barber’s tool [11] requires four reviewers to rate error severity in order to achieve acceptable reliability, which could potentially be viewed as time consuming and a disadvantage of that particular tool.
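The trade-off between reliability and reviewer time can be illustrated with the generalizability coefficient for a mean score. The Python sketch below assumes the simplest fully crossed design (every error rated by every reviewer), in which the relative coefficient for the mean of n reviewers is σ²(errors) / (σ²(errors) + σ²(residual) / n). Dean and Barber’s exact design and variance estimates are not reproduced here: the variance components are hypothetical values chosen only to show the calculation, and the function names `g_coefficient` and `reviewers_needed` are illustrative.

```python
def g_coefficient(var_errors, var_residual, n_reviewers):
    """Relative generalizability coefficient for the mean score of n reviewers
    in a fully crossed errors x reviewers design (assumed, for illustration)."""
    # Averaging over more reviewers divides the residual variance
    # (error-by-reviewer interaction plus random noise) by n_reviewers.
    return var_errors / (var_errors + var_residual / n_reviewers)


def reviewers_needed(var_errors, var_residual, threshold=0.8, max_reviewers=50):
    """Smallest number of reviewers whose mean score gives a coefficient above threshold."""
    for n in range(1, max_reviewers + 1):
        if g_coefficient(var_errors, var_residual, n) > threshold:
            return n
    return None  # threshold not reached within max_reviewers


# Hypothetical variance components (not taken from any included study).
VAR_ERRORS = 1.0    # true differences in severity between errors
VAR_RESIDUAL = 0.9  # reviewer disagreement and random noise

for n in range(1, 6):
    print(n, round(g_coefficient(VAR_ERRORS, VAR_RESIDUAL, n), 3))
print("reviewers needed:", reviewers_needed(VAR_ERRORS, VAR_RESIDUAL))
```

With these illustrative values, three reviewers give a coefficient of about 0.77 and four give about 0.82, so each additional reviewer adds rating time for a diminishing gain in reliability.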

Comparison with Studies Measuring Medication Administration Errors

Our findings are similar to those for studies of administration errors. In our review, 43 % of studies of prescribing error prevalence did not include assessment of severity. Of those that did, 67 % used previously established methods and 33 % used their own tools. In a review of studies of the prevalence of administration errors, Keers et al. [70] found that 44 % of 91 studies did not attempt to determine the clinical significance of identified administration errors and that whilst 82 % of these studies used previously published severity tools, 18 % used their own criteria.

Review Limitations

Our search strategy excluded studies not published in English and focused on the hospital setting. We based our work on a previous paper and an existing search strategy rather than developing our own; however, this strategy closely matched our needs. We acknowledge that one of the authors of this review, BDF, was the author of one of the tools [11], giving a potential source of bias. One of the databases we searched, our own local database of medication error studies, is not publicly available.

Recommendations

Researchers and clinicians may have different needs in relation to a tool for assessing the severity of medication errors. In general, however, an ideal tool should be specific to medication error severity, relatively easy and quick to use, reliable, and validated in different healthcare systems. Few studies presented information on ease of use or the time required. We identified only two tools with acceptable validity and reliability: the NCC MERP index as adapted by Forrey et al. [48], and Dean and Barber’s tool [11]. Their reliability cannot be compared directly, as different methods were used to assess it. In addition, information about their development and ease of use is limited, and Dean and Barber’s tool [11] may be more time consuming to use. Forrey et al.’s tool [48] combines error identification with severity assessment. At present, the most appropriate instrument will need to be selected according to its intended use. Forrey et al.’s tool may be more appropriate for clinical practice, as it is less time consuming to use. Dean and Barber’s tool may be better suited to research, as it has been tested on a larger sample and its continuous scale potentially permits more powerful statistical analysis in comparative studies. There is also scope for developing and testing a new tool that meets all of the criteria above. Given the wide range of tools used in the literature, researchers should also consider developing a basis of comparison between tools to help compare findings across studies.

Conclusion

When assessing the effects of interventions on prescribing error rates, the severity of errors should also be considered [5, 6]. When selecting a tool to assess prescribing error severity, its development, reliability, validity, and ease of use need to be taken into account. There is potentially a need to establish a less time-consuming method of measuring the severity of prescribing errors, with acceptable international reliability and validity. Given the wide range of tools used, developing a basis of comparison between tools would potentially help in comparing findings across studies.


Acknowledgments

This study had no specific source of funding. However, the Centre for Medication Safety and Service Quality is affiliated with the Centre for Patient Safety and Service Quality (CPSSQ) at Imperial College Healthcare NHS Trust, which is funded by the National Institute for Health Research (NIHR). This paper represents independent research supported by the Imperial NIHR Patient Safety Translational Research Centre. The views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR, or the Department of Health.

Conflict of interest

Sara Garfield, Matt Reynolds, Liesbeth Dermont, and Bryony Dean Franklin have no conflicts of interest that are directly relevant to the content of this review.

Appendix: Search Strategy EMBASE

1. medication error/ or prescribing error.mp.
2. near miss.mp.
3. adverse drug reaction/ or adverse event.mp.
4. error preventable.mp.
5. prescription/ or prescribe.mp. or prescription.mp.
6. incidence/ or incidence.mp.
7. prevalence.mp. or prevalence/
8. epidemiology/ or epidemiology.mp.
9. rate.mp.
10. hospitalization/ or hospitalization.mp.
11. hospital/
12. in patients.mp. or hospital patient/
13. 1 or 2 or 3 or 4
14. 6 or 7 or 8 or 9
15. 10 or 11 or 12
16. 5 and 13 and 14 and 15
17. 16 and 2008:2013 (sa year).

Footnotes

An erratum to this article is available at http://dx.doi.org/10.1007/s40264-014-0135-1.

References

- 1. Lewis PJ, Dornan T, Taylor D, et al. Prevalence, incidence and nature of prescribing errors in hospital inpatients: a systematic review. Drug Saf. 2009;32(5):379–389. doi: 10.2165/00002018-200932050-00002.

- 2. Dean BS, Allan EL, Barber ND, et al. Comparison of medication errors in an American and a British hospital. Am J Health Syst Pharm. 1995;52:2543–2549. doi: 10.1093/ajhp/52.22.2543.

- 3. Barker KN, Pearson RE, Hepler CD, et al. Effect of an automated bedside dispensing machine on medication errors. Am J Hosp Pharm. 1984;41:1352–1358.

- 4. Schnell BR. A study of unit-dose drug distribution in four Canadian hospitals. Can J Hosp Pharm. 1976;29:85–90.

- 5. Uzych L. Medication errors—beyond frequency. Am J Health Syst Pharm. 1996;53:1079. doi: 10.1093/ajhp/53.9.1079.

- 6. American Society of Hospital Pharmacists. ASHP guidelines on preventing medication errors in hospitals. Am J Hosp Pharm. 1993;50:305–314.

- 7. Franklin BD, McLeod M, Barber N. Comment on ‘prevalence, incidence and nature of prescribing errors in hospital inpatients: a systematic review’. Drug Saf. 2010;33(2):163–166. doi: 10.2165/11319080-000000000-00000.

- 8. Bohomol E, Ramos LH, D’Innocenzo M. Medication errors in an intensive care unit. J Adv Nurs. 2009;65(6):1259–1267. doi: 10.1111/j.1365-2648.2009.04979.x.

- 9. Dale A, Copeland R, Barton R. Prescribing errors on medical wards and the impact of clinical pharmacists. Int J Pharm Pract. 2003;11(1):19–24. doi: 10.1211/002235702829.

- 10. Dean B, Schachter M, Vincent C, et al. Prescribing errors in hospital inpatients: their incidence and clinical significance. Qual Saf Health Care. 2002;11(4):340–344. doi: 10.1136/qhc.11.4.340.

- 11. Dean BS, Barber ND. A validated, reliable method of scoring the severity of medication errors. Am J Health Syst Pharm. 1999;56(1):57–62. doi: 10.1093/ajhp/56.1.57.

- 12. Franklin BD, Reynolds M, Shebl NA, et al. Prescribing errors in hospital inpatients: a three-centre study of their prevalence, types and causes. Postgrad Med J. 2011;87(1033):739–745. doi: 10.1136/pgmj.2011.117879.

- 13. Shulman R, Singer M, Goldstone J, et al. Medication errors: a prospective cohort study of hand-written and computerised physician order entry in the intensive care unit. Crit Care. 2005;9(5):R516–R521. doi: 10.1186/cc3793.

- 14. Barber N, Franklin BD, Cornford T, et al. Safer, faster, better? Evaluating electronic prescribing: report to the Patient Safety Research Programme. Nov 2006. http://www.who.int/patientsafety/information_centre/reports/PS019_Barber_Final_report.pdf. Accessed 7 Aug 2013.

- 15. Dobrzanski S, Hammond I, Khan G, et al. The nature of hospital prescribing errors. Br J Clin Gov. 2002;7(3):187–193. doi: 10.1108/14664100210438271.

- 16. Folli HL, Poole RL, Benitz WE, et al. Medication error prevention by clinical pharmacists in two children’s hospitals. Pediatrics. 1987;79(5):718–722.

- 17. Bates DW, Leape LL, Petrycki S. Incidence and preventability of adverse drug events in hospitalized adults. J Gen Intern Med. 1993;8:289–294. doi: 10.1007/BF02600138.

- 18. Bates DW, Cullen DJ, Laird N, et al. Incidence of adverse drug events and potential adverse drug events: implications for prevention. JAMA. 1995;274(1):29–34. doi: 10.1001/jama.1995.03530010043033.

- 19. Forster AJ, Halil RB, Tierney MG. Pharmacist surveillance of adverse drug events. Am J Health Syst Pharm. 2004;61(14):1466–1472. doi: 10.1093/ajhp/61.14.1466.

- 20. Ho L, Brown GR, Millin B. Characterization of errors detected during central order review. Can J Hosp Pharm. 1992;45(5):193–197.

- 21. Neri ED, Gadelha PG, Maia SG, et al. Drug prescription errors in a Brazilian hospital. Rev Assoc Med Bras. 2011;57(3):301–308. doi: 10.1016/S0104-4230(11)70063-X.

- 22. Kaushal R, Bates DW, Landrigan C, et al. Medication errors and adverse drug events in pediatric inpatients. JAMA. 2001;285(16):2114–2120. doi: 10.1001/jama.285.16.2114.

- 23. Leape LL, Cullen DJ, Clapp MD, et al. Pharmacist participation on physician rounds and adverse drug events in the intensive care unit. JAMA. 1999;282(3):267–270. doi: 10.1001/jama.282.3.267.

- 24. Abdel-Qader DH, Harper L, Cantrill JA, et al. Pharmacists’ interventions in prescribing errors at hospital discharge: an observational study in the context of an electronic prescribing system in a UK teaching hospital. Drug Saf. 2010;33(11):1027–1044. doi: 10.2165/11538310-000000000-00000.

- 25. Blum KV, Abel SR, Urbanski CJ, et al. Medication error prevention by pharmacists. Am J Hosp Pharm. 1988;45(9):1902–1903.

- 26. Caruba T, Colombet I, Gillaizeau F, et al. Chronology of prescribing error during the hospital stay and prediction of pharmacist’s alerts overriding: a prospective analysis. BMC Health Serv Res. 2010;10:13. doi: 10.1186/1472-6963-10-13.

- 27. Fernandez-Llamazares CM, Calleja-Hernandez MA, Manrique-Rodriguez S, et al. Prescribing errors intercepted by clinical pharmacists in paediatrics and obstetrics in a tertiary hospital in Spain. Eur J Clin Pharmacol. 2012;68(9):1339–1345. doi: 10.1007/s00228-012-1257-y.

- 28. Lesar TS, Briceland LL, Delcoure K, et al. Medication prescribing errors in a teaching hospital. JAMA. 1990;263(17):2329–2334. doi: 10.1001/jama.1990.03440170051035.

- 29. Lesar TS, Briceland L, Stein DS. Factors related to errors in medication prescribing. JAMA. 1997;277(4):312–317. doi: 10.1001/jama.1997.03540280050033.

- 30. Lesar TS, Lomaestro BM, Pohl H. Medication-prescribing errors in a teaching hospital. A 9-year experience. Arch Intern Med. 1997;157(14):1569–1576. doi: 10.1001/archinte.1997.00440350075007.

- 31. Tully MP, Parker D, Buchan I, et al. Patient safety research programme: medication errors 2: pilot study. Report prepared for the Department of Health; 2006. http://www.birmingham.ac.uk/Documents/college-mds/haps/projects/cfhep/psrp/finalreports/PS020FinalReportCantril.pdf. Accessed 7 Aug 2013.

- 32. Tully MP, Buchan IE. Prescribing errors during hospital inpatient care: factors influencing identification by pharmacists. Pharm World Sci. 2009;31(6):682–688. doi: 10.1007/s11096-009-9332-x.

- 33. Webbe D, Dhillon S, Roberts CM. Improving junior doctor prescribing—the positive impact of a pharmacist intervention. Pharm J. 2007;278(7437):136–138.

- 34. Grasso BC, Genest R, Jordan CW, et al. Use of chart and record reviews to detect medication errors in a state psychiatric hospital. Psychiatr Serv. 2003;54(5):677–681. doi: 10.1176/appi.ps.54.5.677.

- 35. Hartwig SC, Denger SD, Schneider PJ. Severity-indexed, incident report-based medication error-reporting program. Am J Hosp Pharm. 1991;48:2611–2616.

- 36. Sangtawesin V, Kanjanapattanakul W, Srisan P, et al. Medication errors at Queen Sirikit National Institute of Child Health. J Med Assoc Thai. 2003;86(Suppl 3):S570–S575.

- 37. Haw C, Stubbs J. Prescribing errors at a psychiatric hospital. Pharm Pract. 2003;13(2):64–66.

- 38. King WJ, Paice N, Rangrej J, et al. The effect of computerized physician order entry on medication errors and adverse drug events in pediatric inpatients. Pediatrics. 2003;112(3):506–509. doi: 10.1542/peds.112.3.506.

- 39. Kozer E, Scolnik D, Macpherson A, et al. Variables associated with medication errors in pediatric emergency medicine. Pediatrics. 2002;110:737–742. doi: 10.1542/peds.110.4.737.

- 40. Lisby M, Nielsen LP, Mainz J. Errors in the medication process: frequency, type, and potential clinical consequences. Int J Qual Health Care. 2005;17(1):15–22. doi: 10.1093/intqhc/mzi015.

- 41. Lustig A. Medication error prevention by pharmacists—an Israeli solution. Pharm World Sci. 2000;22(1):21–25. doi: 10.1023/A:1008774206261.

- 42. Morrill GB, Barreuther C. Screening discharge prescriptions. Am J Hosp Pharm. 1988;45(9):1904–1905.

- 43. Cimino MA, Kirschbaum MS, Brodsky L, et al. Assessing medication prescribing errors in pediatric intensive care units. Pediatr Crit Care Med. 2004;5(2):124–132. doi: 10.1097/01.PCC.0000112371.26138.E8.

- 44. Colpaert K, Claus B, Somers A, et al. Impact of computerized physician order entry on medication prescription errors in the intensive care unit: a controlled cross-sectional trial. Crit Care. 2006;10(1):R21. doi: 10.1186/cc3983.

- 45. Dequito AB, Mol PG, van Doormaal JE, et al. Preventable and non-preventable adverse drug events in hospitalized patients: a prospective chart review in the Netherlands. Drug Saf. 2011;34(11):1089–1100. doi: 10.2165/11592030-000000000-00000.

- 46. Van Doormaal JE, van den Bemt PM, Mol PG, et al. Medication errors: the impact of prescribing and transcribing errors on preventable harm in hospitalised patients. Qual Saf Health Care. 2009;18(1):22–27. doi: 10.1136/qshc.2007.023812.

- 47. Wilson DG, McArtney RG, Newcombe RG, et al. Medication errors in paediatric practice: insights from a continuous quality improvement approach. Eur J Pediatr. 1998;157(9):769–774. doi: 10.1007/s004310050932.

- 48. Forrey RA, Pedersen CA, Schneider PJ. Interrater agreement with a standard scheme for classifying medication errors. Am J Health Syst Pharm. 2007;64:175–181. doi: 10.2146/ajhp060109.

- 49.Williams SD, Ashcroft DM. Medication errors: how reliable are the severity ratings reported to the national reporting and learning system? Int J Qual Health Care. 2009;21(5):316–320. doi: 10.1093/intqhc/mzp034. [DOI] [PubMed] [Google Scholar]

- 50.Bobb A, Gleason K, Husch M, Feinglass J, Yarnold PR, Noskin GA. The epidemiology of prescribing errors: the potential impact of computerized prescriber order entry. Arch Intern Med. 2004;164(7):785–792. doi: 10.1001/archinte.164.7.785. [DOI] [PubMed] [Google Scholar]

- 51.Lepaux DJ, Schmitt E, Dufay E. Fighting medication errors: results of a study and reflections on causes and ways for prevention. Int J Risk Saf Med. 2002;15(3-4):203–211. [Google Scholar]

- 52. Jiménez Muñoz AB, Muiño Miguez A, Rodriguez Pérez MP, et al. Medication error prevalence. Int J Health Care Qual Assur. 2010;23(3):328–338. doi: 10.1108/09526861011029389.

- 53. Jiménez Muñoz AB, Muiño Miguez A, Rodriguez Pérez MP, et al. Comparison of medication error rates and clinical effects in three medication prescription-dispensation systems. Int J Health Care Qual Assur. 2011;24(3):238–248. doi: 10.1108/09526861111116679.

- 54. Van den Bemt PMLA, Postma MJ, Van Roon EN, et al. Cost–benefit analysis of the detection of prescribing errors by hospital pharmacy staff. Drug Saf. 2002;25(2):135–143. doi: 10.2165/00002018-200225020-00006.

- 55. Shawahna R, Rahman NU, Ahmad M, et al. Electronic prescribing reduces prescribing error in public hospitals. J Clin Nurs. 2011;20:3233–3245. doi: 10.1111/j.1365-2702.2011.03714.x.

- 56. Van Gijssel-Wiersma DG, Van den Bemt PM, Walenbergh-van Veen MC. Influence of computerised medication charts on medication errors in a hospital. Drug Saf. 2005;28(12):1119–1129. doi: 10.2165/00002018-200528120-00006.

- 57. Conroy S. Association between licence status and medication errors. Arch Dis Child. 2011;96(3):305–306. doi: 10.1136/adc.2010.191940.

- 58. Rees S, Thomas P, Shetty A, et al. Drug history errors in the acute medical assessment unit quantified by use of the NPSA classification. Pharm J. 2007;279:469–471.

- 59. Parke J. Risk analysis of errors in prescribing, dispensing and administering medications within a district hospital. J Pharm Pract Res. 2006;36(1):21–24.

- 60. Pote S, Tiwari P, D’Cruz S. Medication prescribing errors in a public teaching hospital in India: a prospective study. Pharm Pract. 2007;5(1):17–20. doi: 10.4321/s1886-36552007000100003.

- 61. Ridley SA, Booth SA, Thompson CM, et al. Prescription errors in UK critical care units. Anaesthesia. 2004;59(12):1193–1200. doi: 10.1111/j.1365-2044.2004.03969.x.

- 62. Sagripanti M, Dean B, Barber N. An evaluation of the process-related medication risks for elective surgery patients from pre-operative assessment to discharge. Int J Pharm Pract. 2002;10(3):161–170. doi: 10.1111/j.2042-7174.2002.tb00604.x.

- 63. Stubbs J, Haw C, Taylor D. Prescription errors in psychiatry—a multi-centre study. J Psychopharmacol. 2006;20(4):553–561. doi: 10.1177/0269881106059808.

- 64. Terceros Y, Chahine-Chakhtoura C, Malinowski JE, et al. Impact of a pharmacy resident on hospital length of stay and drug-related costs. Ann Pharmacother. 2007;41(5):742–748. doi: 10.1345/aph.1H603.

- 65. Vila-de-Muga M, Colom-Ferrer L, Gonzalez-Herrero M, et al. Factors associated with medication errors in the pediatric emergency department. Pediatr Emerg Care. 2011;27(4):290–294. doi: 10.1097/PEC.0b013e31821313c2.

- 66. Vira T, Colquhoun M, Etchells E. Reconcilable differences: correcting medication errors at hospital admission and discharge. Qual Saf Health Care. 2006;15(2):122–126. doi: 10.1136/qshc.2005.015347.

- 67. Wang JK, Herzog NS, Kaushal R, Park C, Mochizuki C, Weingarten SR. Prevention of pediatric medication errors by hospital pharmacists and the potential benefit of computerized physician order entry. Pediatrics. 2007;119(1):e77–e85. doi: 10.1542/peds.2006-0034.

- 68. National Coordinating Council for Medication Error Reporting and Prevention; 2001. About medication errors. http://www.nccmerp.org/pdf/indexBW2001-06-12.pdf. Accessed 14 Aug 2013.

- 69. National Patient Safety Agency. Fourth report from the Patient Safety Observatory. PSO/4 Safety in doses: medication safety incidents in the NHS. NPSA; 2007.

- 70. Keers RN, Williams SD, Cooke J, et al. Prevalence and nature of medication administration errors in health care settings: a systematic review of direct observational evidence. Ann Pharmacother. 2013;47:237–256. doi: 10.1345/aph.1R147.
