Abstract

To maintain a stable representation of the visual environment as we move, the brain must update the locations of targets in space using extra-retinal signals. Humans can accurately update after intervening active whole-body translations. But can they also update for passive translations (i.e., without efference copy signals of an outgoing motor command)? We asked six head-fixed subjects to remember the location of a briefly flashed target (five possible targets were located at depths of 23, 33, 43, 63 and 150 cm in front of the cyclopean eye) as they were moved 10 cm left, right, up, down, forward or backward, while fixating a head-fixed target at 53 cm. After the movement, the subjects made a saccade to the remembered location of the flash with a combination of version and vergence eye movements. We computed an updating ratio, where 0 indicates no updating and 1 indicates perfect updating. For lateral and vertical whole-body motion, where updating performance is judged by the size of the version movement, the updating ratios were similar for leftward and rightward translations, averaging 0.84±0.28 (mean±SD), compared with 0.51±0.33 for downward and 1.05±0.50 for upward translations. For forward/backward movements, where updating performance is judged by the size of the vergence movement, the average updating ratio was 1.12±0.45. Updating ratios tended to be larger for far targets than for near targets, although both intra- and inter-subject variability was smallest for near targets. Thus, in addition to the efference copies that accompany self-generated movements, extra-retinal signals involving otolith and proprioceptive cues can also be used for spatial constancy.

INTRODUCTION

How do we maintain the percept of a seemingly stable world around us? While we take individual snapshots of the world through our eyes, these images become unstable once we redirect gaze (i.e., change the line of sight) to a new location. Yet we do not perceive such instability because an active process – known as visuospatial updating – occurs that takes our intervening movements into account. Spatial updating results in spatial constancy and allows us to interact with the environment in meaningful and accurate ways. But while our knowledge of the neural substrates that underlie visuospatial updating is still in its infancy (Duhamel et al. 1992; Goldberg and Bruce 1990; Sommer and Wurtz 2002, 2006), there is much we do know about the extraretinal signals necessary for spatial updating to occur.

For example, both humans and non-human primates can update for rotations associated with actively generated changes in eye, head and body position. This has been shown with variants of the double-step saccade paradigm in which a peripheral target is briefly flashed and must be later foveated after intervening movements of the eyes (Hallett and Lightstone 1976; Herter and Guitton 1998; McKenzie and Lisberger 1986; Ohtsuka 1994; Schlag et al. 1990; Zivotofsky et al. 1996) and head/body (Medendorp et al. 2002). These findings, based on self-generated movements, have led many to speculate that efference copy signals of the outgoing motor command are critical for spatial updating.

The necessity of such efference copies has been investigated by studies that examined the efficacy of spatial updating during passive rotations of the eyes and head/body. For the former, studies that changed eye position by stimulating the superior colliculus and burst neurons in the paramedian pontine reticular formation have shown that signals from these brainstem areas can be used to maintain spatial constancy (Sparks and Mays 1983). For the latter, experiments with passive whole-body rotations have shown that humans can take intervening yaw (Klier et al. 2006) and roll (Klier et al. 2005, 2006) rotations into account, although there were substantial individual differences, and updating for roll was, on average, better than updating for yaw.

In the real world, our bodies not only rotate, but translate in space either actively (i.e., when we walk or run) or passively (i.e., when we sit in a car or ride on a moving sidewalk). But updating for translation is more complicated than updating for rotations for two reasons: First, rotations only require the amplitude and direction of the intervening movement to be taken into account, whereas translations additionally require the consequences of motion parallax to be accounted for. Since objects that are located closer to our eyes move larger distances across our retinas than farther objects, greater changes in version are required for near targets and smaller changes in version are required for far targets (given the same translational amplitude). Second, while rotations do not change the distance of an object from the observer, translations do (especially those along the forward/backward axis). Thus the brain must also generate changes in vergence angles after intervening translational movements.
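The parallax argument can be made concrete with a little geometry. The Python sketch below is illustrative only, assuming an idealized cyclopean eye, a target initially straight ahead at depth d, and a pure lateral translation of 10 cm, so that the required horizontal version is atan(10/d). The function name `version_after_lateral_shift` is hypothetical; this is not the authors' analysis code.

```python
import math

def version_after_lateral_shift(depth_cm: float, shift_cm: float = 10.0) -> float:
    """Horizontal version (deg) needed to refixate a target that was straight
    ahead at depth_cm after the cyclopean eye is translated sideways by shift_cm.
    Idealized geometry (assumption): no head rotation, eye at the cyclopean point."""
    return math.degrees(math.atan2(shift_cm, depth_cm))

# Target depths used in this study, in cm from the cyclopean eye.
depths = [23, 33, 43, 63, 150]
angles = {d: version_after_lateral_shift(d) for d in depths}

for d, a in angles.items():
    print(f"{d:>4} cm target: {a:5.2f} deg of version after a 10 cm shift")
```

For these depths the same 10 cm translation calls for roughly 23.5° of version for the 23 cm target but only about 3.8° for the 150 cm target, an approximately six-fold difference.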

Previous studies have evaluated the ability of humans to estimate the amplitude of passive, linear self-motion, a process known as path integration (Mittelstaedt and Mittelstaedt 1980). Several studies have shown that humans can accurately judge the distance traveled toward a target (Berthoz et al. 1995; Glasauer and Brandt 2007; Israel et al. 1993, 1997; Siegler et al. 2000). However, while path integration could provide a signal used for accurate spatial updating, these studies did not dissociate the visual location of the target from the motor command necessary to foveate it. Notably, accurate path integration does not necessarily imply accurate updating, because updating requires an additional computational step (i.e., updating the retinal error used to generate the motor command).

A single human study has demonstrated that subjects can take actively generated translations into account for lateral (i.e., side-to-side) visuospatial updating (Medendorp et al. 2003). Recently, a number of animal studies have shown that monkeys can also update after lateral (Li et al. 2005) and fore-aft (Li and Angelaki 2005) translations. In addition, labyrinthectomized animals lose their ability to update in both the lateral and forward/backward directions, but interestingly, deficits in the forward/backward dimension are more pronounced and show less recovery (Wei et al. 2006). Together, these results point to a significant role of the vestibular system, especially the otolith organs, in translational spatial updating.

To determine whether translational spatial updating is simply a product of overtraining or learning in non-human primates, we designed the current experiment to quantify human updating capabilities for passive translation. For the first time, we analyze updating along all three axes: forward/backward, rightward/leftward and upward/downward. We found that subjects performed well after forward/backward, lateral and upward translations, but consistently undershot the target after downward translations. Preliminary results of this work have appeared in abstract form (Klier et al. 2007a).

METHODS

Subjects

Six subjects (4 female and 2 male), aged 28 to 48 years, participated in the experiment. All but one (EK) were naïve to the purpose of the experiment, all had normal or corrected-to-normal vision, and none had any known neuromuscular or neurological deficits. All subjects gave informed consent, and the experimental protocol and eye movement recording procedures were approved by the Ethics Committee of the Canton of Zurich, Switzerland.

Measuring three-dimensional eye position

Three-dimensional positions of both eyes were measured using the magnetic search coil technique and three-dimensional Skalar search coils (Skalar Instruments, Delft, The Netherlands). The magnetic field system consisted of three mutually orthogonal magnetic fields, generated by a cubic frame with a side length of 0.5 m, operating at frequencies of 80, 96 and 120 Hz. Three-dimensional eye position was calibrated using an algorithm that simultaneously determined the orientation of the coil on the eye and the offset voltages from nine target fixations in close-to-primary and secondary gaze positions (Klier et al. 2005). These targets were projected by a projector (LC-XG210, EIKI Deutschland GmbH, Germany) onto a tangent screen (150 cm × 190 cm) located 2 m in front of the subjects.

Experimental Equipment

Subjects sat on a six-degree-of-freedom movable platform (E-Cue 624–1800, Simulator Systems B.V., The Netherlands) capable of linear translations in three dimensions: lateral (right/left), vertical (up/down) and forward/backward. A wooden chair with a foam head-rest and aviation harness was located in the center of the platform. During each experiment, each subject’s head was fixed to the chair by a personalized thermoplastic mask that was molded to the face and bolted to the chair around the subject’s head. In addition, the subject’s body was fixed to the chair with seatbelts around the pelvis and torso, and with evacuation pillows that filled the spaces between the subject and the sides of the chair.

The space-fixed visual targets consisted of five red LEDs located 23, 33, 43, 63 and 150 cm from the subjects’ cyclopean eye (figure 1A). These distances were chosen so that the targets spanned the range of vergence angles that human subjects are capable of achieving (figure 1B). The space-fixed targets were mounted on a homemade, U-shaped plexiglass frame with four thin wires bridging its two long arms (resembling railroad tracks). Four of the space-fixed targets were located at the centers of the four spanning wires, while the farthest target (150 cm) was placed on the end of another plexiglass rod that extended away from the subject from the middle of the short arm of the frame. Because these targets had to be space-fixed, the entire plexiglass apparatus was suspended from the ceiling with a wooden pole, and the height of the five targets was adjusted to each subject’s eye level. One LED, which served as the head-fixed fixation target, was mounted on a vertical metal rod that was clamped to the motion platform at a distance of 53 cm from the subjects’ eyes (figure 1A, boxed “F”). Thus this LED moved with the platform and with the subject. To prevent the fixation LED from running into the space-fixed targets at 63 cm and 43 cm when the chair moved 10 cm forward and backward, respectively, it was placed approximately 2 mm below eye level. This offset did not affect updating measurements during lateral translations, where performance is measured by changes in horizontal version, or during forward/backward translations, where performance is measured by changes in vergence angle. Moreover, this small offset was also negligible during vertical translations, where subjects had to update for amplitudes of 100 mm.

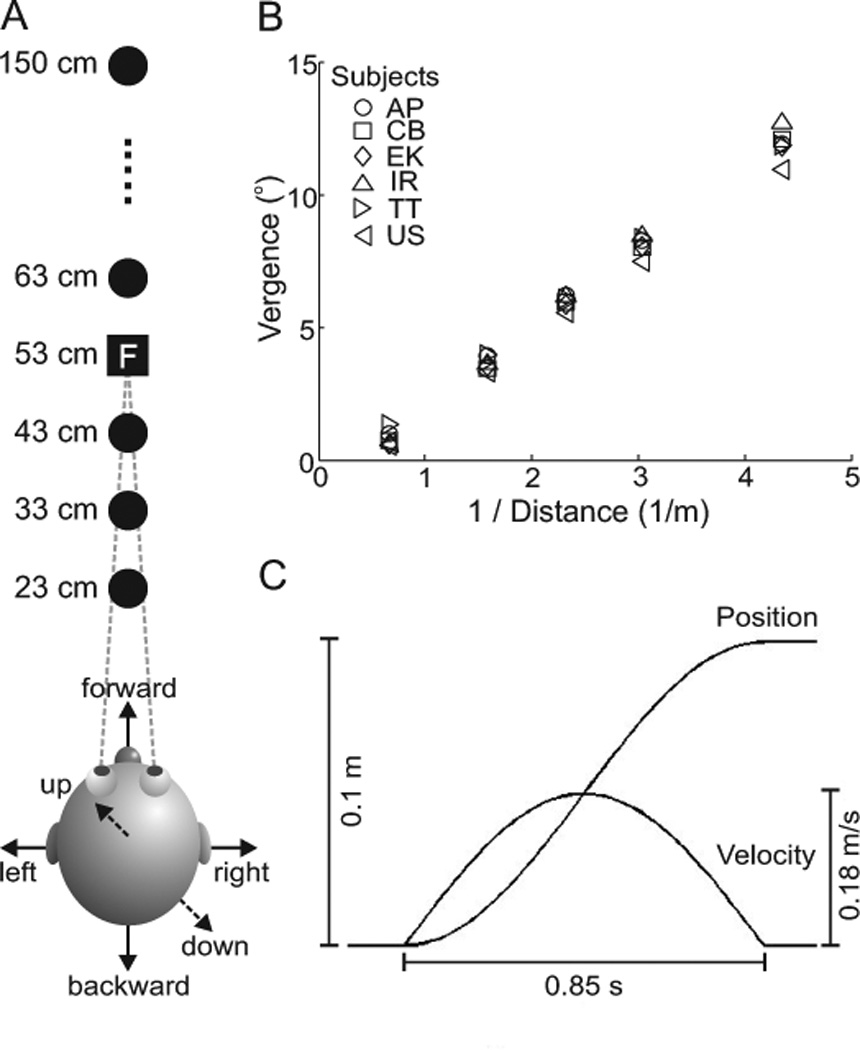

Figure 1. Experimental set-up and vergence angles.

A. Top view of the subject and LED targets. The five space-fixed targets (black circles) were located 23 cm, 33 cm, 43 cm, 63 cm and 150 cm from the subject’s cyclopean eye. The head-fixed fixation LED (black square with letter “F”) was located 53 cm from the subject. Arrows indicate the six possible movement directions. B. Vergence angles required to fixate the five targets, plotted as a function of 1/distance. Different symbols indicate the individual subjects’ performances during control fixations. Subjects’ vergence increased as target distance decreased and spanned nearly the entire range of possible vergence angles. C. Chair motion dynamics. Each translation had an amplitude of 10 cm, lasted 0.85 s and followed a cosine velocity profile with a peak velocity of 0.18 m/s.
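The stated amplitude, duration and peak velocity can be cross-checked with a short calculation. The Python sketch below assumes that the "cosine velocity profile" is a single half-cycle, v(t) = v_peak · sin(πt/T) for 0 ≤ t ≤ T, which starts and ends at rest; the text does not give the exact waveform, so this reading is an assumption.

```python
import math

# Assumed profile: v(t) = v_peak * sin(pi * t / T), 0 <= t <= T (half-cycle).
AMPLITUDE_M = 0.10   # translation amplitude (10 cm)
DURATION_S = 0.85    # movement duration

# Integrating v(t) over [0, T] gives 2 * v_peak * T / pi, which must equal the amplitude.
peak_velocity = math.pi * AMPLITUDE_M / (2 * DURATION_S)

# Numerical check: integrate the assumed velocity profile with the midpoint rule.
n = 10_000
dt = DURATION_S / n
distance = sum(
    peak_velocity * math.sin(math.pi * (i + 0.5) * dt / DURATION_S) * dt
    for i in range(n)
)

print(f"peak velocity ~ {peak_velocity:.3f} m/s, distance ~ {distance:.4f} m")
```

Under this assumption the peak velocity is about 0.185 m/s, consistent with the stated 0.18 m/s. A full raised-cosine profile, v(t) = (D/T)(1 − cos(2πt/T)), would instead peak at 2D/T ≈ 0.24 m/s, so the half-cycle reading is the one that matches the numbers in the caption.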

Finally, a light source consisting of 19 white LEDs, arranged in concentric circles on a disc, was mounted on top of the chair and was illuminated before, during and after the flash of each space-fixed target, so that subjects could accurately localize the space-fixed target. This ambient light was always turned off before the chair began to move, so spatial updating always took place in darkness, apart from the head-fixed fixation LED.

Experimental Procedure

Calibration

Each experiment began and ended with a calibration task. Using the projector and screen described above, nine targets were back-projected one at a time in a counterclockwise sequence, and the subjects fixated each target for three seconds. The first target was located directly in front of the subjects, while the other eight were in the cardinal directions at amplitudes of 10° and 14°.

Fixation Trials

To determine the appropriate version and vergence angles of the two eyes for each of the five space-fixed targets in depth, we illuminated each target and had subjects fixate it for three seconds. This was done with the subjects at center (where the five space-fixed targets were lined up directly in front of the cyclopean eye, figure 1A), and with the subjects statically positioned 10 cm to the right of, left of, below and above this center position.

Stationary Trials

The accuracy with which subjects acquired these space-fixed targets with a memory saccade was measured in stationary trials, in which subjects either remained at center (figure 2A) or were moved 10 cm rightward, leftward, downward or upward (figure 2B, rightward movement). Once this position was attained, the ambient light was turned on, followed 0.5 s later by the head-fixed LED. The subject fixated the head-fixed LED and, 1.5 s after it was turned on, one of the space-fixed targets was flashed for 100 ms. The subjects had to maintain fixation on the head-fixed LED while remembering the location of the flashed space-fixed target. After the space-fixed target was extinguished, a delay period of 1.5 s followed, after which the head-fixed LED was extinguished. This cued the subjects to make a memory saccade to the remembered location of the flashed space-fixed target and to hold that final position for 2 s, until an auditory tone instructed them to relax and prepare for the next trial. The final eye position was held for 2 s so that the entire vergence movement could be completed. Note that the space-fixed targets were not turned back on at the end of each trial; subjects therefore received no visual feedback and could not learn the task from feedback.
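The stationary-trial sequence can be summarized as an event timeline. The Python sketch below is a hypothetical reconstruction from the durations stated above, with times measured from ambient-light onset after the platform has reached its position; the `Event` type and the event labels are illustrative and do not describe the authors' control software.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Event:
    time_s: float  # seconds after ambient-light onset
    label: str

def stationary_trial_timeline() -> list[Event]:
    """Hypothetical stationary-trial event sequence built from the stated durations."""
    t = 0.0
    events = [Event(t, "ambient light on")]
    t += 0.5   # fixation LED follows the ambient light by 0.5 s
    events.append(Event(t, "head-fixed fixation LED on"))
    t += 1.5   # flash occurs 1.5 s after fixation LED onset
    events.append(Event(t, "space-fixed target flash on"))
    t += 0.1   # 100 ms flash
    events.append(Event(t, "flash off; memory delay begins"))
    t += 1.5   # 1.5 s delay period
    events.append(Event(t, "fixation LED off; cue for memory saccade"))
    t += 2.0   # saccade plus 2 s hold
    events.append(Event(t, "auditory tone; trial ends"))
    return events

for e in stationary_trial_timeline():
    print(f"{e.time_s:4.1f} s  {e.label}")
```

With these durations, the tone sounds 5.6 s after ambient-light onset.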

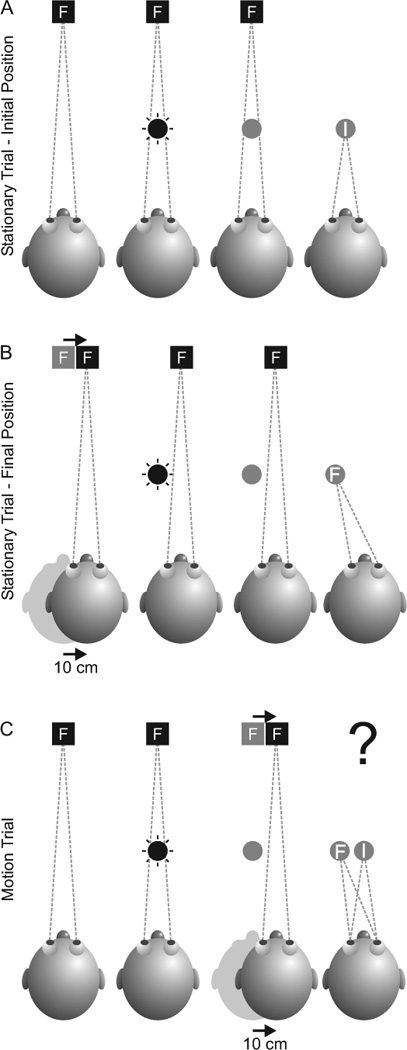

Figure 2. Experimental protocol and rationale.

The experiment consisted of stationary trials that did not require spatial updating and motion trials that did require spatial updating. During an initial stationary trial (A), the subject would fixate the fixation target (black square with letter “F”) and one of the space-fixed targets, located directly in front of the subject, would briefly flash for 100 ms (black circle). After a memory interval, the offset of the fixation target cued the subject to make a saccade to the remembered location of the flash. An accurate eye movement is indicated by the letter “I” in the gray circle. The same sequence was carried out during a final stationary trial (B), except the subject was first displaced by 10 cm to one of the final positions (10 cm right is illustrated here). Here, an accurate eye movement is indicated by the letter “F” in the gray circle. Finally, in the motion trials (C), subjects would begin the trial in the initial position. They would fixate the fixation target and note the location of the flashed space-fixed target. During the memory period, they would be moved 10 cm to the final position (same movement here as in B). The rationale of this study was to see if eye movements to the remembered target location in the motion trials would more closely resemble the eye movements observed in the initial position (I) or the final position (F) of the stationary trials. Eye movements corresponding to I would indicate that no updating occurred, while eye movements corresponding to F would indicate that updating did occur.

Motion Trials (figure 2C)

The motion trial was the critical updating paradigm. It was similar to the stationary trial, except that the subjects always started at the center position and were moved during the trial, between the presentation of the flashed space-fixed target and the memory saccade to its remembered location. This served to dissociate the sensory, retinal image of the space-fixed target from the motor command necessary to accurately foveate it. As in the stationary trials, the ambient light and the head-fixed fixation LED were turned on and the subject had to fixate the fixation LED. After 1.5 s, one of the space-fixed targets was flashed for 100 ms and the subjects had to note its location. After the space-fixed target was extinguished, the subject was translated 10 cm in one of six directions: rightward, leftward, downward, upward, forward or backward (a reconstructed motion profile is illustrated in figure 1C). The head-fixed LED remained illuminated throughout the movement, and subjects had to maintain fixation on it during and after the movement (the movement and post-movement fixation together lasted 1.5 s). The fixation LED was then extinguished and the subjects made a memory saccade to the remembered, space-fixed location of the previously flashed target. The memory saccade and subsequent fixation lasted 2 s, after which an auditory tone instructed the subjects to relax. Again, no visual feedback was provided at the end of the trial, and the subject was moved back to the center position between trials. All stationary and motion trials (n=49) were randomly interleaved and divided into two sets, with 24 trials in the first set (set A) and 25 trials in the second set (set B), so that subjects could take a break after each set. On each experimental day, the two sets were run three times (i.e., A, B, A, B, A, B), and each subject was tested on two separate days, resulting in a total of six repetitions (i.e., 6 A’s and 6 B’s) for each stationary and motion trial.
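As a consistency check on the trial counts, the Python sketch below enumerates one set of conditions that yields the stated total of 49. It assumes that stationary trials covered all five targets at the center and at each of the four displaced positions, and that lateral and vertical motion trials also used all five targets; the text does not list the conditions explicitly, and the assignment of conditions to sets A and B is not modeled.

```python
from itertools import product

TARGETS_CM = [23, 33, 43, 63, 150]
STATIC_POSITIONS = ["center", "right", "left", "down", "up"]
LATERAL_VERTICAL = ["rightward", "leftward", "downward", "upward"]

# Stationary trials (assumption): every target at center and at each displaced position.
stationary = [("stationary", pos, d) for pos, d in product(STATIC_POSITIONS, TARGETS_CM)]

# Motion trials (assumption): every target for the four lateral/vertical translations,
# plus the four forward/backward conditions listed in the text.
motion = [("motion", move, d) for move, d in product(LATERAL_VERTICAL, TARGETS_CM)]
motion += [("motion", "forward", 33), ("motion", "forward", 43),
           ("motion", "backward", 23), ("motion", "backward", 33)]

conditions = stationary + motion
SET_A_SIZE, SET_B_SIZE = 24, 25
RUNS_PER_DAY, DAYS = 3, 2

# Each condition belongs to one set, and each set is run three times per day.
repetitions = RUNS_PER_DAY * DAYS
trials_per_subject = len(conditions) * repetitions

print(len(stationary), len(motion), len(conditions), repetitions, trials_per_subject)
```

Under these assumptions there are 25 stationary and 24 motion conditions, and each subject completed 294 trials in total.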

Importantly, in the motion trials, subjects were always moved from the center, initial position (figures 1A and 2A) to any one of six final positions (e.g., figure 2B and C). Corresponding stationary trials were specifically selected such that there was one stationary trial for the initial position and one stationary trial for the final position for each motion trial. Thus the main goal was to determine if the subjects’ updated movements in the motion trials corresponded better with the memory movements in the initial stationary trials (i.e., no updating) or with the memory movements in the final stationary trials (i.e., perfect updating) (figure 2C). Note that it was not necessary to collect final stationary trials for the forward/backward motion trials. This is because the three nearest space-fixed targets (at 23, 33 and 43 cm) were each spaced 10 cm apart from one another and thus could serve as final stationary trials for one another. For example, when the 33 cm space-fixed target was flashed and the subject was moved forward, the stationary trial of the 23 cm space-fixed target functioned as the final stationary trial. Similarly, when the 33 cm space-fixed target was flashed and the subject was moved backward, the stationary trial of the 43 cm space-fixed target functioned as the final stationary trial.

There were a total of four forward/backward motion trials: two with forward motion (33 cm target flash with 10 cm forward motion and 43 cm target flash with 10 cm forward motion) and two with backward motion (23 cm target flash with 10 cm backward motion and 33 cm target flash with 10 cm backward motion). Only three of the five space-fixed targets were used for forward/backward motion (23, 33 and 43 cm) because the necessary changes in vergence for the two farthest targets (63 and 150 cm) would have been very small, ranging from 0.14° for a 10 cm backward movement when the target at 150 cm is flashed to 1.03° for a 10 cm forward movement when the target at 63 cm is flashed (these vergence angles were computed using an interocular distance of 6 cm). Updating ability would have been too difficult to measure accurately for such small vergence changes. For the same reason, the target at 43 cm was only associated with forward motion (requiring a 2.41° vergence change) and not with backward motion, which would require only a 1.50° vergence change. Finally, the closest target at 23 cm was only associated with backward motion, because forward motion would require a final vergence angle of 25.99°, which is too large for humans to make.
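The vergence values quoted above are consistent with the symmetric vergence angle for a target straight ahead, 2·atan((IOD/2)/d), with an interocular distance (IOD) of 6 cm. The Python sketch below assumes that formula, which the text does not state explicitly; the helper names `vergence_deg` and `vergence_change` are hypothetical.

```python
import math

IOD_CM = 6.0  # interocular distance used in the text

def vergence_deg(distance_cm: float, iod_cm: float = IOD_CM) -> float:
    """Symmetric vergence angle (deg) for a target straight ahead of the cyclopean eye."""
    return math.degrees(2.0 * math.atan((iod_cm / 2.0) / distance_cm))

def vergence_change(flash_cm: float, translation_cm: float) -> float:
    """Vergence change (deg) after moving toward (+) or away from (-) the flashed target."""
    return vergence_deg(flash_cm - translation_cm) - vergence_deg(flash_cm)

# The four forward/backward conditions. The final distance identifies the center
# stationary trial that serves as the "final" stationary trial (e.g. 33 cm + forward -> 23 cm).
for flash, move in [(33, 10), (43, 10), (23, -10), (33, -10)]:
    final = flash - move
    print(f"flash {flash} cm, move {move:+d} cm -> final {final} cm, "
          f"delta vergence {vergence_change(flash, move):+.2f} deg")
```

For example, `vergence_change(63, 10)` comes out at about 1.03° and `vergence_deg(13)` at about 25.99°, matching the values given in the text.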

Data Analysis

Raw data from each subject’s left and right eyes, sampled at 833 Hz, were first converted into rotation vectors, which indicate the horizontal, vertical and torsional positions of the eye. Eye velocity was then obtained by differentiating eye position (dE/dt, where E is three-dimensional eye position). Finally, angular eye velocity (Ω) was computed from these two quantities as

$$\Omega = \frac{2\left(\frac{dE}{dt} + E \times \frac{dE}{dt}\right)}{1 + |E|^{2}}$$

where × denotes the vector cross product. Version eye position was computed as the average of right and left eye position, (R + L)/2, whereas the vergence angle was computed as the difference between right and left eye position, R − L. All saccade trajectories to the remembered target locations were selected automatically using the following criteria. The start of a saccade was defined as the point at which the square root of the sum of squares of the horizontal, vertical and torsional angular velocities exceeded 10°/s for both eyes. The end of a saccade was defined as the point at which the same value fell below 10°/s for both eyes. The end of the memory period was taken as the point 250 ms before the auditory tone. Two post-saccadic time points, saccade end and memory end, were measured because vergence responses often take longer than saccadic version responses to reach completion (Westheimer 1954; Yarbus 1967). These data, together with the actual locations of the targets in space, allowed us to plot saccade trajectories and endpoints and to conduct statistical analyses.
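The processing steps above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' pipeline: rotation vectors are assumed to be stored as an (N, 3) array in the usual tan(θ/2)-scaled form, so the formula yields angular velocity in rad/s, which is converted to °/s; dE/dt is estimated by central differences (`np.gradient`); and all function names are hypothetical.

```python
import numpy as np

FS_HZ = 833.0          # sampling rate stated in the text
THRESH_DEG_S = 10.0    # saccade onset/offset threshold

def angular_velocity(rot_vec: np.ndarray, fs: float = FS_HZ) -> np.ndarray:
    """Angular velocity (deg/s) from an (N, 3) array of rotation vectors E.

    Omega = 2 (dE/dt + E x dE/dt) / (1 + |E|^2), with dE/dt from central differences.
    """
    dE = np.gradient(rot_vec, 1.0 / fs, axis=0)
    num = 2.0 * (dE + np.cross(rot_vec, dE))
    den = 1.0 + np.sum(rot_vec**2, axis=1, keepdims=True)
    return np.degrees(num / den)

def version_vergence(right: np.ndarray, left: np.ndarray):
    """Version = (R + L) / 2 and vergence = R - L, computed component-wise."""
    return (right + left) / 2.0, right - left

def detect_saccade(omega_r: np.ndarray, omega_l: np.ndarray, thresh: float = THRESH_DEG_S):
    """Return (start, end) sample indices of the first saccade, or None.

    Start: first sample where the 3D speed exceeds `thresh` in both eyes.
    End: first later sample where the speed is below `thresh` in both eyes.
    """
    speed_r = np.linalg.norm(omega_r, axis=1)
    speed_l = np.linalg.norm(omega_l, axis=1)
    above = (speed_r > thresh) & (speed_l > thresh)
    if not above.any():
        return None
    start = int(np.argmax(above))
    below = (speed_r < thresh) & (speed_l < thresh)
    after = np.nonzero(below[start:])[0]
    end = start + int(after[0]) if after.size else len(speed_r) - 1
    return start, end

def memory_end_index(tone_index: int, fs: float = FS_HZ) -> int:
    """Sample index 250 ms before the auditory tone (end of the memory period)."""
    return tone_index - int(round(0.25 * fs))
```

For a pure horizontal rotation, E and dE/dt are parallel, so the cross-product term vanishes and Ω reduces to the rate of change of the rotation angle, which makes a convenient sanity check on synthetic data.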

Updating Ratios

To quantify how well subjects took their intervening movements into account when computing the updated eye movement to the remembered target location, we computed updating ratios for both version and vergence. Perfect updating would be indicated by motion-trial version and vergence angles equal to the final stationary-trial version and vergence angles (figure 2C, rightmost panel, circled “F”). In contrast, no updating would be indicated by motion-trial version and vergence angles equal to the initial stationary-trial version and vergence angles (figure 2C, rightmost panel, circled “I”). Thus the following updating ratio was computed separately for version and vergence values:

$$\text{updating ratio} = \frac{V_{\mathrm{motion}} - MV_{\mathrm{stat.initial}}}{MV_{\mathrm{stat.final}} - MV_{\mathrm{stat.initial}}}$$

where $V_{\mathrm{motion}}$ is the version or vergence angle for an individual motion trial, $MV_{\mathrm{stat.initial}}$ is the mean version or vergence angle from the initial stationary trials, and $MV_{\mathrm{stat.final}}$ is the mean version or vergence angle from the final stationary trials. A value of 1 indicates perfect updating (i.e., the subjects’ eyes were directed to the space-fixed location of the LED), whereas a value of 0 indicates no updating (i.e., the subjects’ eyes were directed to the head-fixed location of the LED). For horizontal and vertical translations, the version updating ratio determines updating ability, as version is the main variable that must change if updating occurs. The initial and final vergence angles are nearly identical in these trials, so the corresponding vergence updating ratio is uninformative. Conversely, for forward/backward translations, the vergence updating ratio is key, since the main change in eye position after forward/backward motion is a vergence movement. Here, the initial and final version angles are too similar to yield meaningful ratios (this will be demonstrated in figure 5). Updating ratios were computed for each subject separately for the two experimental days, and these two values were then averaged to obtain the final updating ratio. This was done to control for varying levels of arousal, motivation and fatigue that sometimes led to differences in version and (especially) vergence for individual targets across the two experimental days.
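A minimal Python sketch of the updating ratio and of the two-day averaging described above. It assumes that each day's value is the mean of the per-trial ratios for that day; the text does not say how trials were pooled within a day, and the function names and input layout are hypothetical.

```python
from statistics import mean

def updating_ratio(v_motion: float, mean_initial: float, mean_final: float) -> float:
    """(V_motion - MV_initial) / (MV_final - MV_initial): 0 = no updating, 1 = perfect."""
    denom = mean_final - mean_initial
    if denom == 0:
        raise ValueError("initial and final stationary angles are identical")
    return (v_motion - mean_initial) / denom

def subject_ratio(days) -> float:
    """Average of per-day mean updating ratios for one subject and one condition.

    `days` holds one tuple per experimental day:
    (motion_values, initial_stationary_values, final_stationary_values).
    """
    per_day = []
    for motion_vals, initial_vals, final_vals in days:
        mi, mf = mean(initial_vals), mean(final_vals)  # stationary means for that day
        per_day.append(mean(updating_ratio(v, mi, mf) for v in motion_vals))
    return mean(per_day)
```

A denominator close to zero, as for vergence in lateral trials, makes the ratio ill-conditioned, which is why only one of the two ratios is interpreted for each translation axis.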

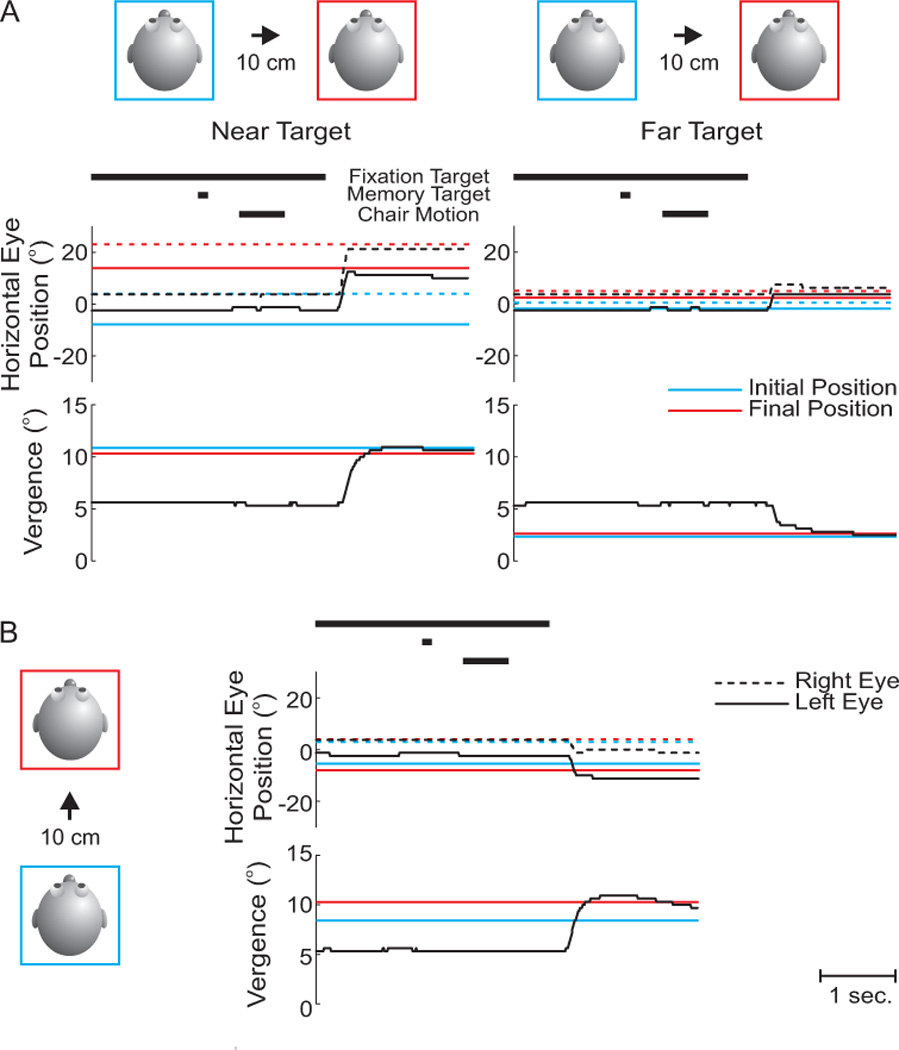

Figure 5. Motion trial examples.

A. Eye movement traces as a function of time are shown for a lateral motion trial that translated the subject from center to 10 cm to the right of center, after the nearest target (23 cm) (left column) and farthest target (150 cm) (right column) were flashed. The black bars at the top of the figure indicate when the fixation and memory targets were turned on and off and when the chair was translated. Traces are plotted from the onset of the fixation target to the onset of the auditory tone that signaled the end of the trial. Horizontal eye position (upper traces) for the right (dashed line) and left (solid line) eye, as well as vergence eye position (lower traces) are shown. In addition, average memory version and vergence eye positions are indicated for the corresponding initial (blue traces) and final (red traces) stationary trials (from figure 4 – right column). B. Similar traces for a forward motion trial that translated the subject from center to 10 cm forward of center.

RESULTS

The locations of the space-fixed targets (figure 1A) were chosen to cover nearly the entire range of human version and vergence angles. While it is obvious that subjects can make a wide variety of version eye movements, we first wanted to confirm that they could comfortably span the range of vergence angles presented to them. Figure 1B shows the actual vergence angles of the six subjects (different symbols), derived from the fixation trials, indicating that they could indeed cover the entire range of vergence angles tested. Importantly, vergence angles differed clearly across the targets in depth for all subjects.

We first analyzed the subjects’ performances during the stationary trials in order to obtain a measure of their performance in memory trials when no updating was required. The stationary trials were conducted in both the initial (figure 2A) and final (figure 2B) positions of the motion trials (figure 2C). An example of a final stationary trial for a rightward 10 cm translation is shown in figure 3 for both the nearest target (23 cm) that was in front of the fixation point (53 cm) and the farthest target (150 cm) that was behind the fixation point (53 cm). For the near target (left column), the required memory saccade was composed of a large, conjugate, horizontal movement to the left (upper traces) and a large increase in vergence (lower trace). In contrast, for the far target (right column), the memory saccade consisted of a much smaller leftward version movement (upper traces) and a decrease in vergence (lower trace).

Figure 3. Stationary trial examples.

Eye movement traces as a function of time are shown for a stationary trial at 10 cm to the right of center, when the nearest target (23 cm) (left column) and farthest target (150 cm) (right column) were flashed. The black bars at the top of the figure indicate when the fixation and memory targets were turned on and off. Traces are plotted from the onset of the fixation target to the onset of the auditory tone that ended the trial. Horizontal eye position (upper traces) for the right (dashed line) and left (solid line) eye, as well as vergence eye position (lower traces) are shown. Leftward movements are positive.

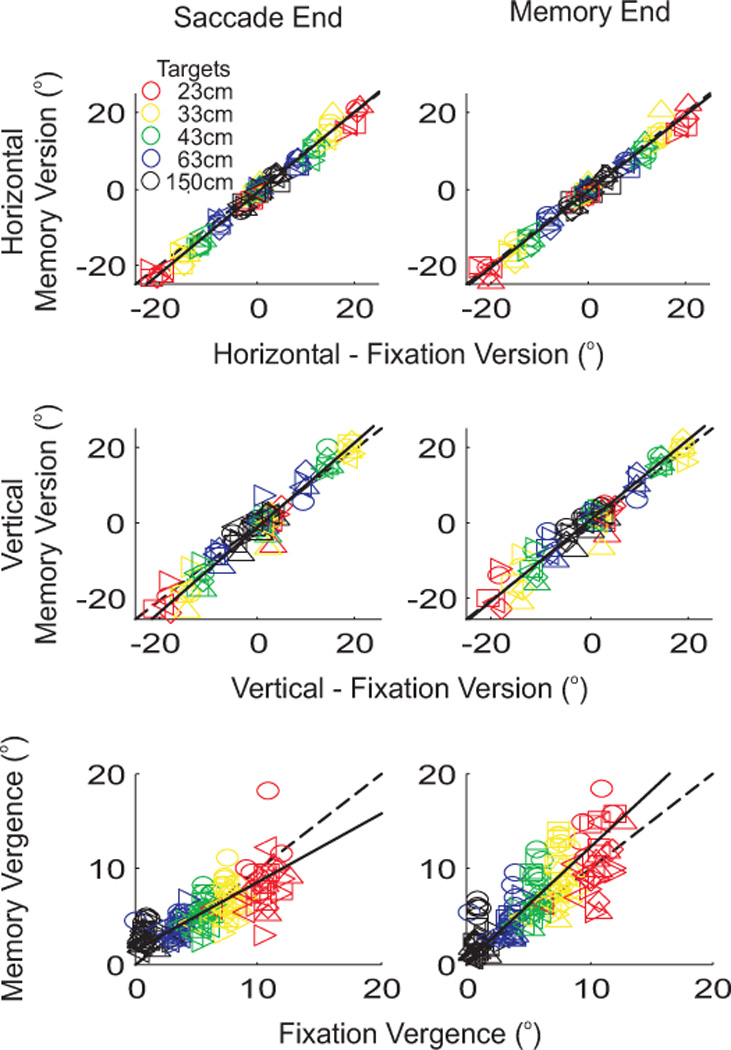

Figure 4 compares the changes in version and vergence in these stationary trials (memory saccade) with the same measures from the fixation trials (when the subjects looked at each target while it was illuminated). In each panel, each of the six subjects is indicated by a different symbol (see inset in figure 1B) and each of the five space-fixed targets is indicated by a different color (see inset). A slope of 1 is shown by the dashed line and the average slope across all subjects by a solid line. Data are shown separately for horizontal (top row) and vertical (middle row) components of version, as well as for vergence (bottom row), across all trials. The comparison between stationary memory trials and fixation trials is plotted for two time points: (1) the end of the first saccade (left column; defined as the time at which saccade velocity decreased to 10°/s) and (2) the end of the memory period (right column; 250 ms before the auditory tone). For version, there was no difference between stationary memory trials and fixation trials at either the end of the saccade or the end of the memory period, and thus the average slope across subjects was never different from a slope of 1 (t-tests, p > 0.05). The average slopes [95% confidence intervals] for horizontal version were 1.06 [1.03, 1.10] at saccade end and 1.02 [0.97, 1.06] at memory end; for vertical version, slopes were 1.12 [1.07, 1.18] at saccade end and 1.05 [0.99, 1.10] at memory end. In contrast, for vergence, there was a significant difference between the average slope at saccade end (0.71 [0.59, 0.88]) and the average slope at memory end (1.20 [1.06, 1.34]). Because the slope at memory end was larger than the slope at saccade end, vergence movements were evidently not complete at the end of the saccade, but required additional time to reach their final angle.
Thus, for the following analyses, which focus on the subjects’ performances during the motion updating trials, the stationary trials’ version and vergence values from the end of the memory period will be used as controls. Data for individual subjects’ slopes can be found in table 1.
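The slopes and 95% confidence intervals in table 1 come from linear regressions of stationary memory-trial angles on control fixation angles. The sketch below shows one way to compute such values with SciPy's `scipy.stats.linregress`, assuming ordinary least squares with an intercept and a t-based interval with n − 2 degrees of freedom; the text does not specify whether the fits included an intercept, and `slope_with_ci` is a hypothetical helper.

```python
import numpy as np
from scipy import stats

def slope_with_ci(fixation, memory, alpha: float = 0.05):
    """OLS slope of memory-trial angles on fixation-trial angles, with CI half-width and R^2.

    The CI is slope +/- half_width, using a two-sided t critical value with n - 2 df.
    """
    x = np.asarray(fixation, dtype=float)
    y = np.asarray(memory, dtype=float)
    res = stats.linregress(x, y)
    t_crit = stats.t.ppf(1.0 - alpha / 2.0, df=x.size - 2)
    return res.slope, t_crit * res.stderr, res.rvalue**2
```

A slope of 1 with a narrow interval indicates that memory saccades reproduced the fixation angles; a slope below 1, as for vergence at saccade end, indicates systematically incomplete movements.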

Figure 4. Comparison of stationary memory and control fixation trials.

Horizontal version (top row), vertical version (middle row) and vergence (bottom row) during the stationary memory trials are compared to control fixations when the space-fixed targets were turned on. Each subject is indicated by a different symbol (see inset in figure 1B), and each target is indicated by a different color (see inset in upper-left panel). Leftward and downward movements are positive. Two time points in the stationary memory trials were compared: the end of the saccade as determined by a velocity criterion (left column), and the end of the memory period, defined as 250 ms before the auditory cue (right column).

Table 1.

Linear regression parameters of stationary memory trials versus control fixations.

| Subject | Version or vergence | Saccade end or memory end | Slope ± 95% CI | R² |
|---|---|---|---|---|
| AP | Horizontal version | Saccade end | 1.11 ± 0.05 | 1.00 |
| AP | Horizontal version | Memory end | 0.95 ± 0.05 | 1.00 |
| AP | Vertical version | Saccade end | 1.07 ± 0.13 | 0.96 |
| AP | Vertical version | Memory end | 0.88 ± 0.10 | 0.97 |
| AP | Vergence | Saccade end | 0.80 ± 0.21 | 0.74 |
| AP | Vergence | Memory end | 0.96 ± 0.17 | 0.85 |
| CB | Horizontal version | Saccade end | 1.03 ± 0.06 | 1.00 |
| CB | Horizontal version | Memory end | 0.92 ± 0.05 | 1.00 |
| CB | Vertical version | Saccade end | 1.08 ± 0.07 | 1.00 |
| CB | Vertical version | Memory end | 0.98 ± 0.08 | 0.98 |
| CB | Vergence | Saccade end | 0.57 ± 0.13 | 0.79 |
| CB | Vergence | Memory end | 1.26 ± 0.21 | 0.88 |
| EK | Horizontal version | Saccade end | 1.13 ± 0.05 | 1.00 |
| EK | Horizontal version | Memory end | 1.07 ± 0.06 | 1.00 |
| EK | Vertical version | Saccade end | 1.21 ± 0.08 | 1.00 |
| EK | Vertical version | Memory end | 1.20 ± 0.09 | 0.98 |
| EK | Vergence | Saccade end | 0.55 ± 0.11 | 0.81 |
| EK | Vergence | Memory end | 0.71 ± 0.12 | 0.87 |
| IR | Horizontal version | Saccade end | 1.14 ± 0.08 | 0.99 |
| IR | Horizontal version | Memory end | 1.19 ± 0.08 | 1.00 |
| IR | Vertical version | Saccade end | 1.25 ± 0.11 | 0.98 |
| IR | Vertical version | Memory end | 1.21 ± 0.12 | 0.97 |
| IR | Vergence | Saccade end | 0.63 ± 0.13 | 0.81 |
| IR | Vergence | Memory end | 0.99 ± 0.20 | 0.82 |
| TT | Horizontal version | Saccade end | 0.94 ± 0.06 | 1.00 |
| TT | Horizontal version | Memory end | 0.93 ± 0.06 | 1.00 |
| TT | Vertical version | Saccade end | 0.91 ± 0.10 | 0.97 |
| TT | Vertical version | Memory end | 0.80 ± 0.07 | 0.98 |
| TT | Vergence | Saccade end | 0.37 ± 0.17 | 0.46 |
| TT | Vergence | Memory end | 0.58 ± 0.13 | 0.78 |
| US | Horizontal version | Saccade end | 0.96 ± 0.05 | 1.00 |
| US | Horizontal version | Memory end | 0.96 ± 0.05 | 1.00 |
| US | Vertical version | Saccade end | 1.04 ± 0.08 | 0.98 |
| US | Vertical version | Memory end | 1.03 ± 0.09 | 0.98 |
| US | Vergence | Saccade end | 0.67 ± 0.16 | 0.78 |
| US | Vergence | Memory end | 1.08 ± 0.14 | 0.92 |

Examples of two motion trials are given in figure 5A and B for 10 cm rightward and forward translations, respectively. In A (rightward motion trial), the closer target (left) required a large leftward saccade and an increase in vergence, whereas the farther target (right) required a smaller leftward movement and a decrease in vergence. The subject’s performance in this motion trial (black traces) can be compared directly with the corresponding average memory version/vergence in the initial stationary trials (blue traces) and the final stationary trials (red traces). After the eye movement, the subject’s right (black dashed) and left (black solid) eye traces lie closer to the red traces, indicating that the memory version in the motion trial was more closely aligned with that of the final stationary trials. In contrast, memory vergence is much less informative in lateral translation trials, because the initial and final stationary vergence angles are nearly identical (note how the red and blue traces nearly overlap). Indeed, for any lateral or vertical translation the main updating component should occur in the version domain, whereas vergence angles change very little.

The opposite pattern holds for forward/backward movements. Figure 5B illustrates a 10 cm forward motion trial in which a target at 33 cm was flashed. Here, the differences in version between the initial and final stationary trials are negligible (note the close proximity of the red and blue traces) because the targets were aligned with the subject’s cyclopean eye and thus also with the motion axis. However, because the subject was moved forward and was therefore closer to the previously flashed target, they had to make an eye movement with increased vergence to reach the remembered location of the space-fixed target. This is evident in the higher vergence angle of the final stationary trial (red trace) compared with the initial stationary trial (blue trace). After the eye movement, the subject’s actual vergence (black trace) was closer to that of the final stationary trial (red trace).
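The geometry behind these two examples can be checked with a short calculation. The sketch below is a minimal illustration under stated assumptions, not the analysis used in this study: it assumes an interocular distance of 6.5 cm (a typical adult value, not given in this section), places each space-fixed target on the midline at its nominal depth from the cyclopean eye, ignores vertical and torsional eye position, and uses hypothetical function names (`eye_angles`, `after_translation`).

```python
import math

# Assumption: a typical adult interocular distance (cm); the value for the
# subjects in this study is not given in this section.
IOD_CM = 6.5


def eye_angles(target_x, target_z, iod=IOD_CM):
    """Return (version_deg, vergence_deg) for a target at (x, z) in cm.

    Coordinates are relative to the cyclopean eye: x is positive to the
    right and z points straight ahead. Version is the mean horizontal angle
    of the two eyes, signed so that leftward is positive (as in figure 4);
    vergence is the angle between the two lines of sight.
    """
    half = iod / 2.0
    left = math.atan2(target_x + half, target_z)   # left eye sits at x = -half
    right = math.atan2(target_x - half, target_z)  # right eye sits at x = +half
    version = -math.degrees((left + right) / 2.0)
    vergence = math.degrees(left - right)
    return version, vergence


def after_translation(depth_cm, dx_cm=0.0, dz_cm=0.0):
    """Angles to a midline space-fixed target at depth_cm after the body
    translates by dx_cm (rightward positive) and dz_cm (forward positive)."""
    return eye_angles(-dx_cm, depth_cm - dz_cm)


if __name__ == "__main__":
    _, fix_vergence = eye_angles(0.0, 53.0)  # head-fixed fixation target
    for depth in (23, 33, 43, 63, 150):
        version, vergence = after_translation(depth, dx_cm=10.0)
        print(f"{depth:>3} cm, 10 cm rightward: version {version:5.1f} deg, "
              f"vergence re fixation {vergence - fix_vergence:+5.1f} deg")
    before = after_translation(33)[1]
    after = after_translation(33, dz_cm=10.0)[1]
    print(f" 33 cm, 10 cm forward: vergence {before:.1f} -> {after:.1f} deg")
```

Under these assumptions, a 10 cm rightward translation calls for a large leftward version and a vergence increase (relative to the 53 cm fixation target) for the 23 cm target, but a smaller leftward version and a vergence decrease for the 150 cm target, while a 10 cm forward translation leaves version at zero and increases vergence, matching the patterns described for figure 5A and B.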

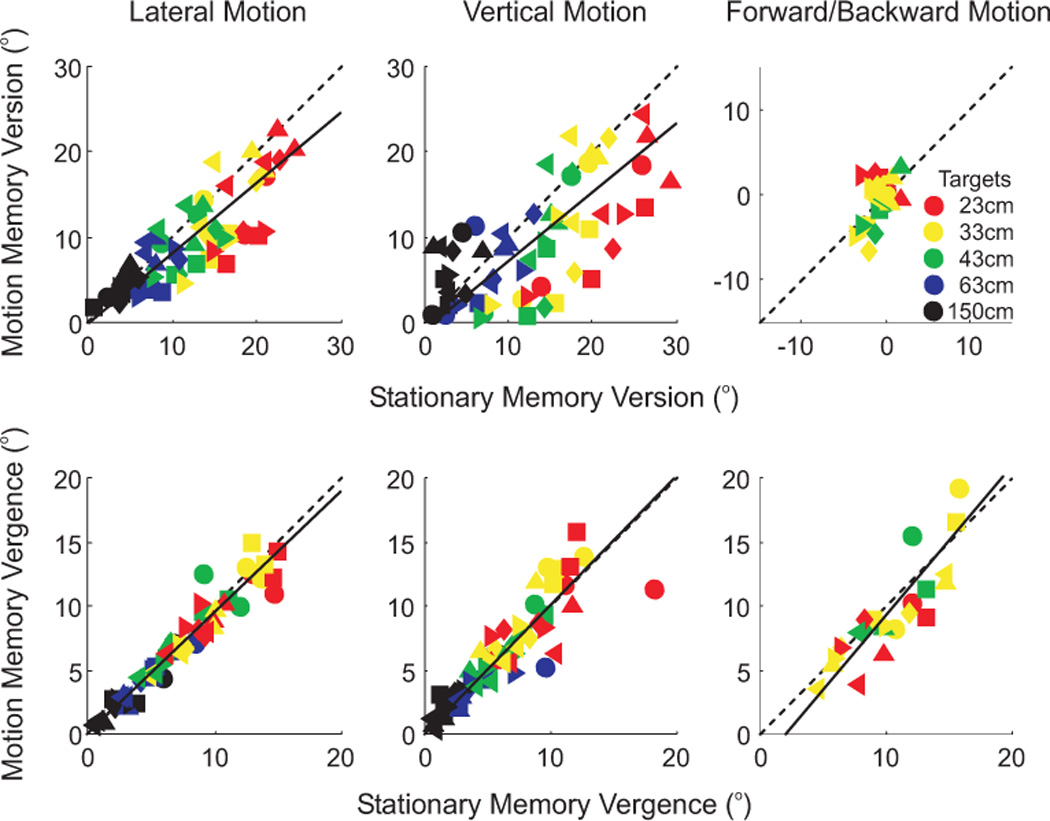

To quantify these observations across all subjects, all targets and all movement directions, we plotted the subjects’ version (figure 6, top row) and vergence (figure 6, bottom row) in the motion memory trials as a function of their version and vergence in the final stationary memory trials for lateral (left column), vertical (middle column) and forward/backward (right column) translations. Data from each subject are shown by a different symbol (see inset of figure 1B) and each target is indicated by a different color (see inset). The unity slope is indicated by the dashed black lines and the average slope across all subjects by the solid black lines. As already mentioned, the version comparison is relevant for lateral and vertical movements and the vergence comparison is relevant for forward/backward movements. The average version slope [95% confidence interval] was 0.82 [0.69, 0.94] for lateral motion and 0.81 [0.63, 0.99] for vertical motion. Because both confidence intervals lay below 1, updating was only partial for lateral and vertical translations. For forward/backward motion, vergence slopes averaged 1.17 [0.77, 1.50]; because this interval includes 1, they did not differ significantly from unity. Individual subjects’ slopes for all conditions are given in table 2.
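The logic of comparing a regression slope and its 95% confidence interval with unity can be sketched as follows. This is an illustration, not the authors' fitting procedure: it assumes an ordinary least-squares fit with an intercept and an interval of slope ± t·SE, and it leaves the Student t critical value to the caller (for example `scipy.stats.t.ppf(0.975, n - 2)`; `scipy.stats.linregress` also reports the slope's standard error). How the across-subject average slopes were obtained is not specified in this section, and the function names are hypothetical.

```python
import math


def slope_with_ci(x, y, t_crit):
    """OLS slope of y on x with a symmetric interval slope +/- t_crit * SE.

    t_crit is the two-sided Student t critical value for n - 2 degrees of
    freedom (about 3.18 for a 95% interval with n = 5); it is passed in so
    the sketch needs only the standard library.
    """
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least three paired observations")
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    # Residual sum of squares -> standard error of the slope.
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    se = math.sqrt(sse / (n - 2) / sxx)
    return slope, (slope - t_crit * se, slope + t_crit * se)


def classify_updating(ci):
    """Interpret a slope interval against unity (1 = perfect updating)."""
    low, high = ci
    if high < 1.0:
        return "partial updating (slope below 1)"
    if low > 1.0:
        return "overcompensation (slope above 1)"
    return "not different from 1"
```

Applied to the intervals reported above, `classify_updating((0.69, 0.94))` returns the partial-updating label, whereas the forward/backward interval `(0.77, 1.50)` is classified as not different from 1.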

Figure 6. Comparison of motion and final stationary trials.

Motion memory version is plotted as a function of stationary memory version (upper row) and motion memory vergence is plotted as a function of stationary memory vergence (lower row) to determine updating performance. This analysis was done separately for lateral (left column), vertical (middle column) and forward/backward (right column) motion trials. Data for each subject is shown by different symbols (see inset in figure 1B) and data for each of the five space-fixed targets is indicated by the different colors (see inset in upper-right panel). The unity slope indicating perfect updating is shown by the black dashed line, and the best fit slope across all subjects is indicated by the solid black line.

Table 2.

Linear regression parameters of motion trials versus final stationary trials.

| Subject | Motion Type | Version or Vergence | Slope ± 95% CI | R² |
|---|---|---|---|---|
| AP | Lateral | Version | 0.69 ± 0.33 | 0.74 |
| | | Vergence | 0.79 ± 0.44 | 0.68 |
| | Vertical | Version | 0.69 ± 0.50 | 0.56 |
| | | Vergence | 0.54 ± 0.49 | 0.44 |
| | Fore/Aft | Version | N/A | N/A |
| | | Vergence | 2.07 ± 3.41 | 0.77 |
| CB | Lateral | Version | 0.44 ± 0.12 | 0.90 |
| | | Vergence | 0.96 ± 0.19 | 0.94 |
| | Vertical | Version | 0.37 ± 0.35 | 0.47 |
| | | Vergence | 1.14 ± 0.27 | 0.93 |
| | Fore/Aft | Version | N/A | N/A |
| | | Vergence | 1.01 ± 2.47 | 0.61 |
| EK | Lateral | Version | 0.82 ± 0.19 | 0.92 |
| | | Vergence | 0.83 ± 0.12 | 0.97 |
| | Vertical | Version | 0.68 ± 0.53 | 0.52 |
| | | Vergence | 0.91 ± 0.25 | 0.90 |
| | Fore/Aft | Version | N/A | N/A |
| | | Vergence | 0.45 ± 0.79 | 0.74 |
| IR | Lateral | Version | 0.82 ± 0.19 | 0.92 |
| | | Vergence | 0.93 ± 0.15 | 0.96 |
| | Vertical | Version | 0.50 ± 0.25 | 0.72 |
| | | Vergence | 1.00 ± 0.34 | 0.86 |
| | Fore/Aft | Version | N/A | N/A |
| | | Vergence | 0.74 ± 0.82 | 0.88 |
| TT | Lateral | Version | 0.44 ± 0.19 | 0.79 |
| | | Vergence | 1.13 ± 0.23 | 0.94 |
| | Vertical | Version | 0.53 ± 0.32 | 0.65 |
| | | Vergence | 0.76 ± 0.42 | 0.68 |
| | Fore/Aft | Version | N/A | N/A |
| | | Vergence | 0.56 ± 0.46 | 0.93 |
| US | Lateral | Version | 0.90 ± 0.28 | 0.87 |
| | | Vergence | 0.81 ± 0.10 | 0.98 |
| | Vertical | Version | 0.71 ± 0.46 | 0.61 |
| | | Vergence | 0.66 ± 0.20 | 0.88 |
| | Fore/Aft | Version | N/A | N/A |
| | | Vergence | 0.91 ± 1.22 | 0.84 |

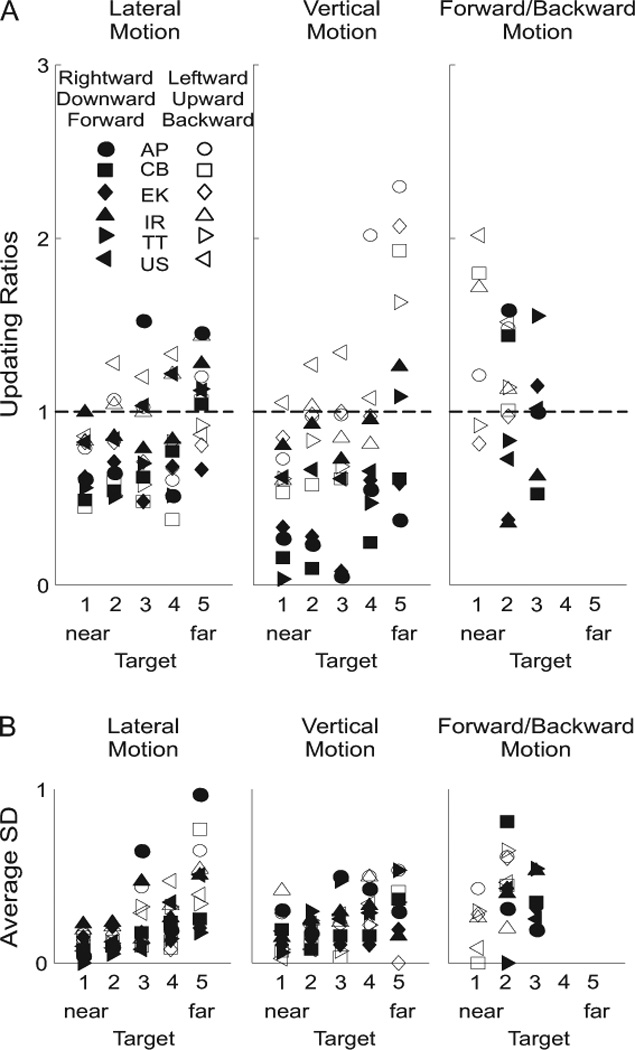

To further quantify each subject’s updating ability, we computed updating ratios for the relevant combinations of motion direction and version/vergence (figure 7; see METHODS for the formula). In figure 7A, an updating ratio of 1 indicates perfect updating (i.e., the subject’s memory version/vergence angles were equivalent to those in the final stationary trials) and a ratio of 0 indicates no updating (i.e., the memory version/vergence angles were equivalent to those in the initial stationary trials). The average updating ratio (±SD) for lateral version was 0.84 ±0.28. A univariate ANOVA, with direction of motion (leftward/rightward) and target distance (five targets in depth) as fixed factors and the updating ratio as the dependent variable, revealed no effect of direction (F(1,49)=0.55, p=0.46) but a significant effect of target distance (F(4,49)=3.14, p=0.02). A post-hoc Tukey test indicated that updating for the farthest target was significantly better than for the nearest target (target 5 vs. 1, p=0.02).
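The verbal definition above (0 = matching the initial stationary trials, 1 = matching the final stationary trials) can be expressed as a normalized difference. The exact formula is given in METHODS and is not reproduced in this section, so the sketch below simply assumes the ratio (motion - initial) / (final - initial), which satisfies both endpoints; the function names are hypothetical.

```python
from statistics import mean, stdev


def updating_ratio(motion, initial, final):
    """Fraction of the required change in eye position that was produced.

    motion, initial and final are memory version (or vergence) angles in
    degrees from the motion trial and from the initial and final stationary
    trials. Returns 0 when motion equals initial (no updating) and 1 when
    motion equals final (perfect updating).
    """
    required = final - initial
    if required == 0:
        raise ValueError("initial and final angles coincide; ratio undefined")
    return (motion - initial) / required


def summarize(ratios):
    """Mean and sample SD, matching the 'mean ±SD' format in the text."""
    return mean(ratios), stdev(ratios)
```

One consequence of this form is that the ratio becomes ill-conditioned when the initial and final angles nearly coincide, which is consistent with analyzing only the relevant component (version for lateral and vertical motion, vergence for forward/backward motion).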

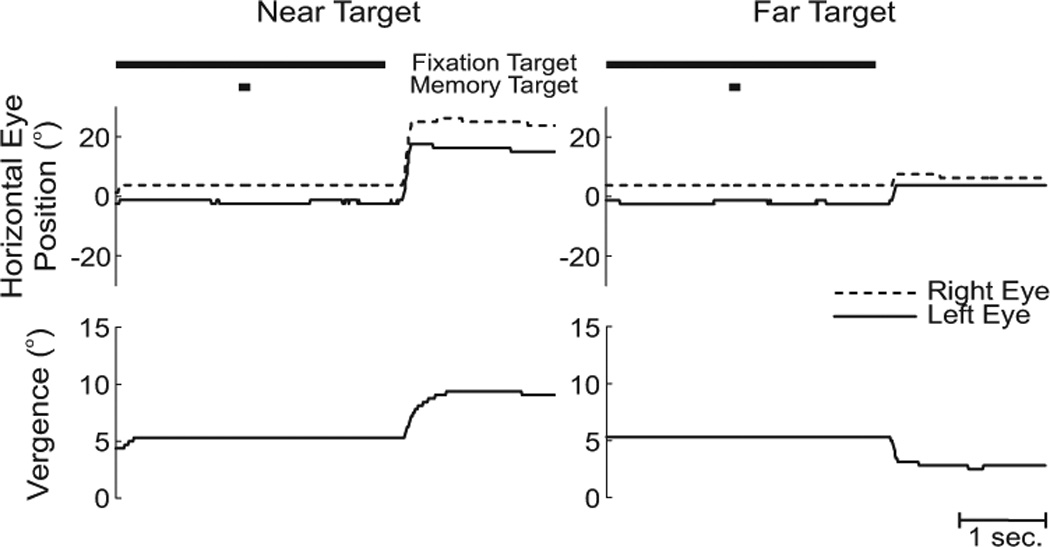

Figure 7. Updating performance.

(A) Updating ratios are plotted for lateral (left panel), vertical (middle panel) and forward/backward (right panel) motion trials. Each of the five space-fixed targets is indicated: 1 = 23cm (nearest); 2 = 33cm; 3 = 43cm; 4 = 63cm and 5 = 150cm (furthest). Each subject is indicated by a different symbol (see inset in left-most panel). Black symbols indicate rightward, downward and forward motion trials, while white symbols represent leftward, upward and backward motion trials. A value of 0 indicates no updating, while a value of 1 indicates perfect updating (dashed line). (B) Intrasubject variability in updating performance is illustrated by the average standard deviation for each subject, in each motion condition, to each of the five space-fixed targets. Same symbols and conventions as in (A).

The average updating ratio (±SD) for vertical version was 0.79 ±0.49. Here, a univariate ANOVA revealed significant effects of both direction (upward/downward; F(1,49)=56.22, p<0.01) and target depth (F(4,49)=10.60, p<0.01). Updating ratios were higher, and thus closer to 1, for upward movements (1.05 ±0.50) than for downward movements (0.51 ±0.33) (post-hoc Tukey test, p<0.01), and the updating ratio for the farthest target (150 cm) was greater than those for the four nearer targets (post-hoc Tukey tests, all p<0.01). Qualitatively, figure 7A (lateral and vertical motion panels) shows a trend for mean updating ratios to increase as the targets were placed farther from the subjects.

The average vergence updating ratio for the forward/backward motion trials was 1.12 ±0.45. We did not test for direction or target differences in this condition because the observed power was relatively low (0.42, compared with 0.70 for lateral and 1.00 for vertical translations). The lower power was due to several factors, including (1) the smaller number of space-fixed targets (three instead of five) and the use of only one direction of motion (instead of two) for two of the three space-fixed targets (see METHODS for details), and (2) the larger intrasubject variability for vergence than for version movements (Henriques et al. 2003; Medendorp et al. 2003).

To characterize the intrasubject variability in the current experiment, figure 7B plots the average standard deviation for each subject in all conditions. The standard deviations were clearly less variable than the updating ratio means themselves (figure 7A). Note, however, that here too variability tended to be greater for farther targets. This trend is likely due to motion parallax: far targets require smaller version/vergence changes than near targets, so a given angular error in eye position produces a larger deviation in an updating ratio that is normalized by the required change.
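The motion-parallax explanation can be illustrated numerically. Assuming, for illustration only, that a subject makes the same absolute angular error (an arbitrary 0.5 deg, not a measured value) at every depth, and approximating the required version change after a 10 cm lateral translation by the cyclopean angle atan(10/d) to a straight-ahead target at depth d, the resulting deviation of the updating ratio grows with target distance. The function names are hypothetical.

```python
import math


def required_version_change(depth_cm, translation_cm=10.0):
    """Approximate version change (deg) needed to refixate a straight-ahead
    space-fixed target after a lateral translation (cyclopean-eye geometry)."""
    return math.degrees(math.atan2(translation_cm, depth_cm))


def ratio_error(angle_error_deg, depth_cm, translation_cm=10.0):
    """Deviation of a ratio normalized by the required change that results
    from a fixed angular error in final eye position."""
    return angle_error_deg / required_version_change(depth_cm, translation_cm)


if __name__ == "__main__":
    for depth in (23, 33, 43, 63, 150):
        print(f"{depth:>3} cm: required {required_version_change(depth):5.1f} deg, "
              f"0.5 deg error -> ratio error {ratio_error(0.5, depth):.3f}")
```

Because the denominator shrinks roughly in proportion to 1/d for distant targets, the same angular scatter inflates the ratio's spread several-fold between the 23 cm and 150 cm targets.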

DISCUSSION

We have shown that human subjects can, to a large degree, update the locations of visual targets in space following passive translational displacements. Updating ratios were 1.12 ±0.45 after forward/backward translations, 0.84 ±0.28 after lateral (rightward and leftward) translations and 1.05 ±0.50 after upward translations, but lower (0.51 ±0.33) after downward translations. Note that direct comparisons between forward/backward and lateral/vertical updating are difficult, since the former are based on vergence eye movements and the latter on version eye movements. Large intersubject variability was also observed in each motion condition (figure 7A), as reported previously for passive updating after roll and yaw rotations (Klier et al. 2005, 2006). Intrasubject variability was low for near targets but increased with target distance (figure 7B). Finally, because version and vergence in the stationary memory trials closely matched those during fixation (figure 4), the observed updating errors likely arise from the processes associated with spatial updating rather than from mislocalization of the target's original location (Medendorp et al. 2003) or from decay of the stored memory (White et al. 1994).

These findings show that, in addition to efference copy signals of the outgoing motor command (which are generated during active displacements), extra-retinal sensory information (e.g., vestibular and/or proprioceptive cues) is also useful for translational updating in humans, as has been shown previously for rotational updating (Klier et al. 2005, 2006, 2007b). Among these sensory signals, strong evidence points to the vestibular system as a key contributor to spatial updating. Wei et al. (2006) attempted to eliminate vestibular signals by labyrinthectomizing animals trained on translational updating paradigms. They found that both lateral and forward/backward translational updating were compromised after the lesions, but that the effects were much more severe and permanent for forward/backward motion. Lateral updating ratios fell from 0.71 to 0.49 and recovered to near-normal levels after 10 weeks, implying that proprioceptive/somatosensory cues also contribute to lateral and yaw updating. In contrast, forward/backward updating ratios fell from 0.96 to nearly zero and recovered little even after 16 weeks. Thus, while vestibular signals appear to play a vital role in spatial updating, they may be more crucial for some directions of motion than for others; alternatively, this asymmetry may reflect a difference between the version and vergence systems.

Previous studies have demonstrated that humans can correctly gauge the distance traveled after passive whole-body translations (Berthoz et al. 1995; Glasauer and Brandt 2007; Israel et al. 1993, 1997; Siegler et al. 2000). Functionally, this could arise via temporal integration of velocity and/or acceleration information derived from the vestibular system and stored in spatial memory (Berthoz et al. 1995; Israel and Berthoz 1989; Israel et al. 1997). Such path integration signals may be used to update visual maps of space after translations. Alternatively, the signals used for updating may arise from efference copies of the cancellation signal used to suppress the translational vestibulo-ocular reflex (TVOR). The latter is actually more likely, since updating is only necessary when the TVOR is suppressed during self-motion (if the TVOR is intact, the final motor error is equivalent to the initial retinal error). Note, finally, that this efference copy signal is quite different from an efference copy of the motor command associated with voluntary movements. For example, the TVOR cancellation signal is available only during the passive motion and therefore cannot be used for predictive remapping (Duhamel et al. 1992), whereas the efference copy of the outgoing motor command is available before the movement is executed.
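Path integration in this sense amounts to integrating a linear-acceleration signal, such as the one carried by the otoliths, twice over time. The sketch below is a minimal illustration under stated assumptions: only the 10 cm amplitude comes from this study, while the raised-cosine velocity profile, the 1 s duration and the function names are hypothetical. Real otolith afferents also respond to head tilt relative to gravity, which this idealized signal ignores.

```python
import math

# Hypothetical motion profile; the acceleration profile used in the
# experiment is not described in this section.
DISTANCE_M = 0.10
DURATION_S = 1.0


def acceleration(t, distance=DISTANCE_M, duration=DURATION_S):
    """Acceleration (m/s^2) of a raised-cosine velocity profile that covers
    `distance` in `duration`, starting and ending at rest."""
    w = 2.0 * math.pi / duration
    return distance * w / duration * math.sin(w * t)


def path_integrate(samples, dt):
    """Integrate sampled acceleration twice (trapezoidal rule) and return
    the final displacement in metres."""
    velocity = position = 0.0
    for a_prev, a_next in zip(samples, samples[1:]):
        v_next = velocity + 0.5 * (a_prev + a_next) * dt
        position += 0.5 * (velocity + v_next) * dt
        velocity = v_next
    return position


if __name__ == "__main__":
    n = 1000
    dt = DURATION_S / n
    samples = [acceleration(i * dt) for i in range(n + 1)]
    print(f"estimated displacement: {path_integrate(samples, dt) * 100:.2f} cm")
```

A design consequence worth noting: because the signal is integrated twice, any constant bias b in the acceleration estimate grows into a position error of b·T²/2 over a movement of duration T, one reason path-integration estimates can drift.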

Monkey versus man

As mentioned, Wei et al. (2006) reported average lateral translational updating ratios of 0.71 ±0.21 (mean ±SD) and average forward/backward ratios of 0.96 ±1.10 across their two animals. Thus monkeys were better at updating for forward/backward motion than lateral motion. We can compare these monkey parameters to the human parameters found in the current study. Our average updating ratio across all subjects, targets and directions of motion was 0.84 ±0.28 for lateral motion and 1.12 ±0.45 for forward/backward motion. Thus humans and non-human primates had similar updating abilities, with forward/backward updating having higher ratios, but more variability, than lateral updating.

These similarities are notable given the differences between the updating paradigms. First, in the monkey experiments, the space-fixed target was turned on again at the end of each motion and stationary trial, allowing the monkey to make a corrective saccade and permitting learning to occur. Humans have been shown to improve on spatial updating tasks when visual feedback is provided (Israel et al. 1999); however, no feedback was provided in the current study. Yet the absence of feedback did not appear to impair our subjects’ performance relative to that of the animals, which did receive visual feedback. Second, the monkeys were trained on these tasks thousands of times over several months, sometimes up to a year, and once they were ready for testing, over 1000 trials were collected for analysis from each animal (Li et al. 2005). Our human subjects were given only a handful of practice runs before the experiment began, and only six trials of each target/motion combination could be collected per experiment. Thus here, as is typical of human experiments, the subjects had much less experience with the required tasks and far fewer data were collected. From a purely statistical standpoint, fewer trials should make the estimates more variable, yet this does not appear to have been the case here. Finally, the monkeys received a direct, physiologically relevant reward (i.e., juice) for completing the experiment, whereas the human volunteers did not.

Near versus far targets

The observed trend for lateral and vertical updating ratios to increase with target depth may partly reflect the fact that the subjects' heads were fixed. The large version movements required to update near targets accurately are typically accomplished, under natural circumstances, by combined movements of the eyes and head. In fact, the relative contribution of the head typically increases with gaze shift amplitude (Freedman and Sparks 1997; Klier et al. 2001; Martinez-Trujillo et al. 2003). Thus, the lower updating ratios observed for the nearer targets might improve if this study were repeated with the head free to move. Alternatively, if the suppressed TVOR command (which arises when the head-fixed target is fixated during translation) is used as the updating signal, then differences between near and far TVOR gains may explain the differences in updating ratios across target depths. This is because, while TVOR gain is near unity for far targets, it is consistently undercompensatory for near targets (Schwarz and Miles 1991). This lower TVOR gain for near targets could thus result in the lower updating ratios we observed.

Source of Extra-retinal Signals

If vestibular signals are used to update the locations of targets in space (as speculated above), one issue that remains to be resolved is where in the brain these vestibular signals are used. Accumulating evidence from saccadic studies has implicated the posterior parietal cortex (Colby and Goldberg 1999; Duhamel et al. 1992), extrastriate visual areas (Nakamura and Colby 2002) and the frontal cortex (Goldberg and Bruce 1990; Umeno and Goldberg 1997) as updating centers for intervening saccadic eye movements. These studies have found evidence for remapping, a phenomenon in which the representation of a visual target is shifted, or remapped, at the time of an eye movement by an amount equivalent to the amplitude and direction of the intervening eye movement. For example, when a saccade brings a cell's receptive field onto the location of a previously flashed target that is no longer present, the cell responds as if the target were still there. Thus, visual information is shifted from the coordinates of the initial eye position to those of the final eye position, thereby maintaining spatial constancy. Several other studies support the notion that target locations are indeed stored in an eye-centered reference frame (Baker et al. 2003; Batista et al. 1999; Colby and Goldberg 1999; Henriques et al. 1998; Snyder 2000).
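The remapping idea can be sketched as a vector operation in eye-centered coordinates. This is a simplified illustration under explicit assumptions, not a model from the studies cited: directions are treated as points on a flat two-dimensional plane (a small-angle approximation that ignores the three-dimensional, non-commutative geometry of eye rotations), receptive fields are circular, and the names `Vec2`, `remap` and `in_receptive_field` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec2:
    """Eye-centered direction in degrees (x: rightward, y: upward)."""
    x: float
    y: float


def remap(target: Vec2, saccade: Vec2) -> Vec2:
    """Shift a remembered target by the inverse of the saccade vector so
    that it is expressed relative to the new gaze direction."""
    return Vec2(target.x - saccade.x, target.y - saccade.y)


def in_receptive_field(location: Vec2, rf_center: Vec2, rf_radius: float) -> bool:
    """True if the location falls inside a circular receptive field."""
    return math.hypot(location.x - rf_center.x, location.y - rf_center.y) <= rf_radius


if __name__ == "__main__":
    flashed = Vec2(10.0, 0.0)   # target flashed 10 deg right of fixation
    saccade = Vec2(15.0, 0.0)   # gaze then moves 15 deg rightward
    rf = Vec2(-5.0, 0.0)        # cell's receptive field, 5 deg left of the fovea
    remapped = remap(flashed, saccade)
    print(remapped, in_receptive_field(remapped, rf, rf_radius=2.0))
```

In this example, a target flashed 10 deg right of the fovea is remapped to 5 deg left after a 15 deg rightward saccade, landing in a receptive field centered 5 deg left, so the cell would be expected to respond even though the target is gone.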

But does a similar remapping mechanism exist for changes in head/body position that are signaled by the vestibular system (during either rotational or translational motion)? Here, the location of a cell’s receptive field would need to be shifted by the amplitude and direction of the intervening head or whole-body movement. The parameters of these movements could be derived, in eye coordinates, either directly from the suppressed rotational or translational vestibulo-ocular reflex, or indirectly from path integration of the vestibular signal. Some evidence for such signals exists in cortical visuomotor areas such as the frontal eye fields (Fukushima et al. 2000), but whether these signals are actually used to remap visual receptive fields is still unknown.

If vestibular signals from the brainstem are used for vestibular spatial updating in the cortex, then one must also delineate the pathways by which these signals travel from vestibular-related brainstem areas to the cortical areas implicated in spatial updating. Two known pathways, both involving the thalamus, could transfer vestibular signals to the visuomotor cortical areas. The first involves vestibular projections to the ventrolateral thalamus (Lang et al. 1979; Meng et al. 2007), which then projects to areas 2v and 3a and to the parieto-insular vestibular cortex (PIVC) (Akbarian et al. 1992). PIVC, in turn, has connections with the frontal eye fields (Guldin et al. 1992; Huerta et al. 1987). The second involves projections from the prepositus and vestibular nuclei to the intralaminar nuclei of the thalamus (Asanuma et al. 1983; Lang et al. 1979; Warren et al. 2003), which then project to both frontal and parietal cortex (Huerta and Kaas 1990; Huerta et al. 1986; Kaufman and Rosenquist 1985; Shook et al. 1990, 1991). In fact, part of this latter pathway (from the mediodorsal thalamus to the frontal eye fields) has already been implicated in transmitting eye movement-related signals for spatial updating after saccades (Sommer and Wurtz 2004, 2006).

ACKNOWLEDGEMENTS

The authors would like to thank Urs Scheifele, Albert Züger and Elena Buffone for technical assistance. This work was supported by a Human Frontier Science Program Long-term Fellowship to EMK, Swiss National Foundation grant 31-100802/1 and a Betty and David Koetser Foundation for Brain Research grant to BJMH, and National Institutes of Health grants DC007620 and DC04260 to DEA.

REFERENCES

- Akbarian S, Grusser OJ, Guldin WO. Thalamic connections of the vestibular cortical fields in the squirrel monkey (Saimiri sciureus) J Comp Neurol. 1992;326:423–441. doi: 10.1002/cne.903260308. [DOI] [PubMed] [Google Scholar]

- Asanuma C, Thach WT, Jones EG. Distribution of cerebellar terminations and their relation to other afferent terminations in the ventral lateral thalamic region of the monkey. Brain Res. 1983;286:237–265. doi: 10.1016/0165-0173(83)90015-2. [DOI] [PubMed] [Google Scholar]

- Baker JT, Harper TM, Snyder LH. Spatial memory following shifts of gaze. I. Saccades to memorized world-fixed and gaze-fixed targets. J Neurophysiol. 2003;89:2564–2576. doi: 10.1152/jn.00610.2002. [DOI] [PubMed] [Google Scholar]

- Batista AP, Buneo CA, Snyder LH, Andersen RA. Reach plans in eye-centered coordinates. Science. 1999;285:257–260. doi: 10.1126/science.285.5425.257. [DOI] [PubMed] [Google Scholar]

- Berthoz A, Israel I, Georges-Francois P, Grasso R, Tsuzuku T. Spatial memory of body linear displacement: what is being stored? Science. 1995;269:95–98. doi: 10.1126/science.7604286. [DOI] [PubMed] [Google Scholar]

- Colby CL, Goldberg ME. Space and attention in parietal cortex. Ann Rev Neurosci. 1999;22:319–349. doi: 10.1146/annurev.neuro.22.1.319. [DOI] [PubMed] [Google Scholar]

- Duhamel JR, Colby CL, Goldberg ME. The updating of the representation of visual space in parietal cortex by intended eye movements. Science. 1992;255:90–92. doi: 10.1126/science.1553535. [DOI] [PubMed] [Google Scholar]

- Freedman EG, Sparks DL. Eye-head coordination during head-unrestrained gaze shifts in rhesus monkeys. J Neurophysiol. 1997;77:2328–2348. doi: 10.1152/jn.1997.77.5.2328. [DOI] [PubMed] [Google Scholar]

- Fukushima K, Sat T, Fukushima J, Shinmei Y, Kaneko CR. Activity of smooth pursuit-related neurons in the monkey periarcuate cortex during pursuit and passive whole-body rotation. J Neurophysiol. 2000;83:563–587. doi: 10.1152/jn.2000.83.1.563. [DOI] [PubMed] [Google Scholar]

- Glasauer S, Brandt T. Noncummutative updating of perceived self-orientation in three dimensions. J Neurophysiol. 2007;97:2958–2964. doi: 10.1152/jn.00655.2006. [DOI] [PubMed] [Google Scholar]

- Goldberg ME, Bruce CJ. Primate frontal eye fields. III. Maintenance of a spatially accurate saccade signal. J Neurophysiol. 1990;64:489–508. doi: 10.1152/jn.1990.64.2.489. [DOI] [PubMed] [Google Scholar]

- Guldin WO, Akbarian S, Grusser OJ. Cortico-cortical connections and cytoarchitectonics of the primate vestibular cortex: a study in squirrel monkeys (Saimiri sciureus) J Comp Neurol. 1992;326:375–401. doi: 10.1002/cne.903260306. [DOI] [PubMed] [Google Scholar]

- Hallett PE, Lightstone AD. Saccadic eye movements to flashed targets. Vision Res. 1976;16:107–114. doi: 10.1016/0042-6989(76)90084-5. [DOI] [PubMed] [Google Scholar]

- Henriques DY, Klier EM, Smith MA, Lowy D, Crawford JD. Gaze-centered remapping of remembered visual space in an open-loop pointing task. J Neurosci. 1998;18:1583–1594. doi: 10.1523/JNEUROSCI.18-04-01583.1998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Henriques DYP, Medendorp WP, Gielen CCAM, Crawford JD. Geometric computations underlying eye-hand coordination: orientations of the two eyes and the head. Exp Brain Res. 2003;152:70–78. doi: 10.1007/s00221-003-1523-4. [DOI] [PubMed] [Google Scholar]

- Herter TM, Guitton D. Human head-free gaze saccades to targets flashed before gaze-pursuit are spatially accurate. J. Neurophysiol. 1998;80:2785–2789. doi: 10.1152/jn.1998.80.5.2785. [DOI] [PubMed] [Google Scholar]

- Huerta MF, Kaas JH. Supplementary eye field as defined by intracortical microstimulation: connections in macaques. J Comp Neurol. 1990;293:299–330. doi: 10.1002/cne.902930211. [DOI] [PubMed] [Google Scholar]

- Huerta MF, Krubitzer LA, Kaas JH. Frontal eye field as defined by intracortical microstimulation in squirrel monkeys, owl monkeys, macaque monkeys. I. Subcortical connections. J Comp Neurol. 1986;253:415–439. doi: 10.1002/cne.902530402. [DOI] [PubMed] [Google Scholar]

- Huerta MF, Krubitzer LA, Kaas JH. Frontal eye field as defined by intracortical microstimulation in squirrel monkeys, owl monkeys, and macaque monkeys. II Cortical connections. J Comp Neurol. 1987;265:332–361. doi: 10.1002/cne.902650304. [DOI] [PubMed] [Google Scholar]

- Israel I, Berthoz A. Contribution of the otoliths to the calculation of linear displacement. J Neurophysiol. 1989;62:247–263. doi: 10.1152/jn.1989.62.1.247. [DOI] [PubMed] [Google Scholar]

- Israel I, Chapuis N, Glasauer S, Charade O, Berthoz A. Estimation of passive horizontal linear whole-body displacement in humans. J Neurophysiol. 1993;70:1270–1273. doi: 10.1152/jn.1993.70.3.1270. [DOI] [PubMed] [Google Scholar]

- Israel I, Grasso R, Georges-Francois P, Tsuzuku T, Berthoz A. Spatial memory and path integration studied by self-driven passive linear displacement. I. Basic properties. J Neurophysiol. 1997;77:3180–3192. doi: 10.1152/jn.1997.77.6.3180. [DOI] [PubMed] [Google Scholar]

- Israel I, Sievering D, Koenig E. Self-rotation estimate about the vertical axis. Acta Otolaryngol. 1995;115:3–8. doi: 10.3109/00016489509133338. [DOI] [PubMed] [Google Scholar]

- Israel I, Ventre-Dominey J, Denise P. Vestibular information contributes to update retinotopic maps. Neuroreport. 1999;10:3479–3483. doi: 10.1097/00001756-199911260-00003. [DOI] [PubMed] [Google Scholar]

- Kaufman EF, Rosenquist AC. Efferent projections of the thalamic intralaminar nuclei in the cat. Brain Res. 1985;335:257–279. doi: 10.1016/0006-8993(85)90478-0. [DOI] [PubMed] [Google Scholar]

- Klier EM, Angelaki DE, Hess BJM. Roles of gravitational cues and efference copy signals in the rotational updating of memory saccades. J Neurophysiol. 2005;94:468–478. doi: 10.1152/jn.00700.2004. [DOI] [PubMed] [Google Scholar]

- Klier EM, Angelaki DE, Hess BJM. Human visuospatial updating after non-commutative rotations. J Neurophysiol. 2007b;98:537–544. doi: 10.1152/jn.01229.2006. [DOI] [PubMed] [Google Scholar]

- Klier EM, Hess BJM, Angelaki DE. Differences in the accuracy of human visuospatial memory after yaw and roll rotations. J Neurophysiol. 2006;95:2692–2697. doi: 10.1152/jn.01017.2005. [DOI] [PubMed] [Google Scholar]

- Klier EM, Hess BJM, Angelaki DE. Spatial updating performance after lateral, vertical and fore/aft translations in humans. Soc Neurosci Abstr. 2007a in press. [Google Scholar]

- Klier EM, Wang H, Crawford JD. The superior colliculus encodes gaze commands in retinal coordinates. Nat Neurosci. 2001;4:627–632. doi: 10.1038/88450. [DOI] [PubMed] [Google Scholar]

- Lang W, Buttner-Ennever JA, Buttner U. Vestibular projections to the monkey thalamus: an autoradiographic study. Brain Res. 1979;177:3–17. doi: 10.1016/0006-8993(79)90914-4. [DOI] [PubMed] [Google Scholar]

- Li N, Angelaki DE. Updating visual space during motion in depth. Neuron. 2005;48:149–158. doi: 10.1016/j.neuron.2005.08.021. [DOI] [PubMed] [Google Scholar]

- Li N, Wei M, Angelaki DE. Primate memory saccade amplitude after intervened motion depends on target distance. J Neurophysiol. 2005;94:722–733. doi: 10.1152/jn.01339.2004. [DOI] [PubMed] [Google Scholar]

- Martinez-Trujillo JC, Klier EM, Wang H, Crawford JD. Contribution of head movement to gaze command coding in monkey frontal cortex and superior colliculus. J Neurophysiol. 2003;90:2770–2776. doi: 10.1152/jn.00330.2003. [DOI] [PubMed] [Google Scholar]

- McKenzie A, Lisberger SG. Properties of signals that determine the amplitude and direction of saccadic eye movements in monkeys. J Neurophysiol. 1986;56:196–207. doi: 10.1152/jn.1986.56.1.196. [DOI] [PubMed] [Google Scholar]

- Medendorp WP, Smith MA, Tweed DB, Crawford JD. Rotational remapping in human spatial memory during eye and head motion. J Neurosci. 2002;22:RC196. doi: 10.1523/JNEUROSCI.22-01-j0006.2002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Medendorp WP, Tweed DB, Crawford JD. Motion parallax is computed in the updating of human spatial memory. J Neurosci. 2003;23:8135–8142. doi: 10.1523/JNEUROSCI.23-22-08135.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Meng H, May PJ, Dickman JD, Angelaki DE. Vestibular signals in primate thalamus: properties and origins. J Neurosci. doi: 10.1523/JNEUROSCI.3931-07.2007. in press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Middelstaedt ML, Mittelstaedt H. Homing path integration in a mammal. Naturwissenschaften. 1980;67:566–567. [Google Scholar]

- Nakamura K, Colby CL. Updating of the visual representation in monkey striate and extrastriate cortex during saccades. Proc Natl Acad Sci. 2002;99:4026–4031. doi: 10.1073/pnas.052379899. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ohtsuka K. Properties of memory-guided saccades toward targets flashed during smooth pursuit in human subjects. Invest Ophthalmol Vis Sci. 1994;35:509–514. [PubMed] [Google Scholar]

- Schlag J, Schlag-Rey M, Dassonville P. Saccades can be aimed at the spatial location of targets flashed during pursuit. J Neurophysiol. 1990;64:575–581. doi: 10.1152/jn.1990.64.2.575. [DOI] [PubMed] [Google Scholar]

- Schwarz U, Miles FA. Ocular responses to translation and their dependence on viewing distance. I. Motion of the observer. J Neurophysiol. 1991;66:851–864. doi: 10.1152/jn.1991.66.3.851. [DOI] [PubMed] [Google Scholar]

- Siegler I, Viaud-Delmon I, Israel I, Berthoz A. Self-motion perception during a sequence of whole-body rotation in darkness. Exp Brain Res. 2000;134:66–73. doi: 10.1007/s002210000415. [DOI] [PubMed] [Google Scholar]

- Shook BL, Schlag-Rey M, Schlag J. Primate supplementary eye field: I. Comparative aspects of mesencephalic and pontine connections. J Comp Neurol. 1990;301:618–642. doi: 10.1002/cne.903010410. [DOI] [PubMed] [Google Scholar]

- Shook BL, Schlag-Rey M, Schlag J. Primate supplementary eye field. II. Comparative aspects of connections with the thalamus, corpus striatum, and related forebrain nuclei. J Comp Neurol. 1991;307:562–583. doi: 10.1002/cne.903070405. [DOI] [PubMed] [Google Scholar]

- Snyder LH. Coordinate transformations for eye and arm movements in the brain. Curr Opin Neurobiol. 2000;10:747–754. doi: 10.1016/s0959-4388(00)00152-5.

- Sommer MA, Wurtz RH. A pathway in primate brain for internal monitoring of movements. Science. 2002;296:1480–1482. doi: 10.1126/science.1069590.

- Sommer MA, Wurtz RH. Influence of the thalamus on spatial visual processing in frontal cortex. Nature. 2006;444:374–377. doi: 10.1038/nature05279.

- Sparks DL, Mays LE. Spatial localization of saccade targets. I. Compensation for stimulation-induced perturbations in eye position. J Neurophysiol. 1983;49:45–63. doi: 10.1152/jn.1983.49.1.45.

- Umeno MM, Goldberg ME. Spatial processing in the monkey frontal eye field. I. Predictive visual responses. J Neurophysiol. 1997;78:1373–1383. doi: 10.1152/jn.1997.78.3.1373.

- Warren S, Dickman JD, Angelaki DE, May PJ. Convergence of vestibular and somatosensory inputs with gaze signals in macaque thalamus. Soc Neurosci Abstr. 2003;29:391.7.

- Wei M, Li N, Newlands D, Dickman JD, Angelaki DE. Deficits and recovery in visuospatial memory during head motion after bilateral labyrinthine lesion. J Neurophysiol. 2006;96:1676–1682. doi: 10.1152/jn.00012.2006.

- Westheimer G. Mechanisms of saccadic eye movements. AMA Arch Ophthalmol. 1954;52:710–724. doi: 10.1001/archopht.1954.00920050716006.

- White JM, Sparks DL, Stanford TR. Saccades to remembered target locations: an analysis of systematic and variable errors. Vision Res. 1994;34:79–92. doi: 10.1016/0042-6989(94)90259-3.

- Yarbus AL. Eye movements and vision. New York: Plenum; 1967.

- Zivotofsky AZ, Rottach KG, Averbuch-Heller L, Kori AA, Thomas CW, Dell’Osso LF, Leigh RJ. Saccades to remembered targets: the effects of smooth pursuit and illusory stimulus motion. J Neurophysiol. 1996;76:3617–3632. doi: 10.1152/jn.1996.76.6.3617.