Abstract

There is an enormous unmet need for knowledge about how new insights from discovery and translational research can yield measurable, population-level improvements in health and reduction in mortality among those having or at risk for neurological disease. Once several well-conducted randomized controlled trials establish the efficacy of a given therapy, implementation research can generate new knowledge about barriers to uptake of the therapy into widespread clinical care and about which strategies are effective in overcoming those barriers and in addressing health disparities. Comparative effectiveness research aims to elucidate the relative value (including clinical benefit, clinical harms, and/or costs) of alternative efficacious management approaches to a neurological disorder, generally through direct comparisons, and may include comparisons of methodologies for implementation. Congress has recently appropriated resources and established an institute to prioritize funding for such research. Neurologists and neuroscientists should understand the scope and objectives of comparative effectiveness and implementation research, their range of methodological approaches (formal literature syntheses, randomized trials, observational studies, modeling), and existing research resources (centers for literature synthesis, registries, practice networks) relevant to research for neurological conditions, in order to close the well-documented “evidence-to-practice gap.” Future directions include building this research resource capacity, producing scientists trained to conduct rigorous comparative effectiveness and implementation research, and embracing innovative strategies to set research priorities in these areas.

Over the past few decades, there have been significant advances in treatment of neurological disorders; however, there is little knowledge about the comparative effectiveness of alternative medications, devices, and treatments in community practice settings1,2 and a paucity of data on how to implement research findings in practice (“translate into care”) to benefit populations with neurological conditions.3

In 2009, Congress appropriated $1.1 billion to federal agencies to invest in comparative effectiveness (CE) research. The appropriation language included an intent to promote development of tools, such as clinical registries and community-based networks, to produce this new knowledge. To provide guidance, the Institute of Medicine (IOM) produced a report on CE research priorities.4 The federal government’s CE research investment, recent high-profile articles and editorials on implementation and outcomes research, and passage of major healthcare reform legislation5 have attracted attention to the field. A consortium of NIH-funded Clinical and Translational Science Award (CTSA) institutions has formed a “Key Function Committee” on CE research that, through workshops and regular teleconferences, encourages training, methods development, and community involvement, and shares the advantages and disadvantages of specific approaches across CTSA institutions.6 Further, the NIH director has identified generating knowledge “benefitting healthcare reform” as one of five research areas “ripe for major advances” and to which NIH “can make substantial contributions.”7 The National Institute of Neurological Disorders and Stroke (NINDS), which covers several hundred disorders and supports a broad range of research on mechanisms, prevention, and treatments, convened a workshop in October 2009 to address the knowledge and training gaps in CE and implementation research for neurological disorders.8

This article’s purposes are to:

Define the scope and objectives for CE and for implementation research, to clarify how these two areas are related, how they are distinct, and how implementation research interfaces with health disparities research;

Define the range of methodological approaches for CE and implementation research;

Assess the utility and availability of existing resources for conducting CE and implementation research; and

Identify manpower needs and considerations for priority-setting strategies for such research in neurology.

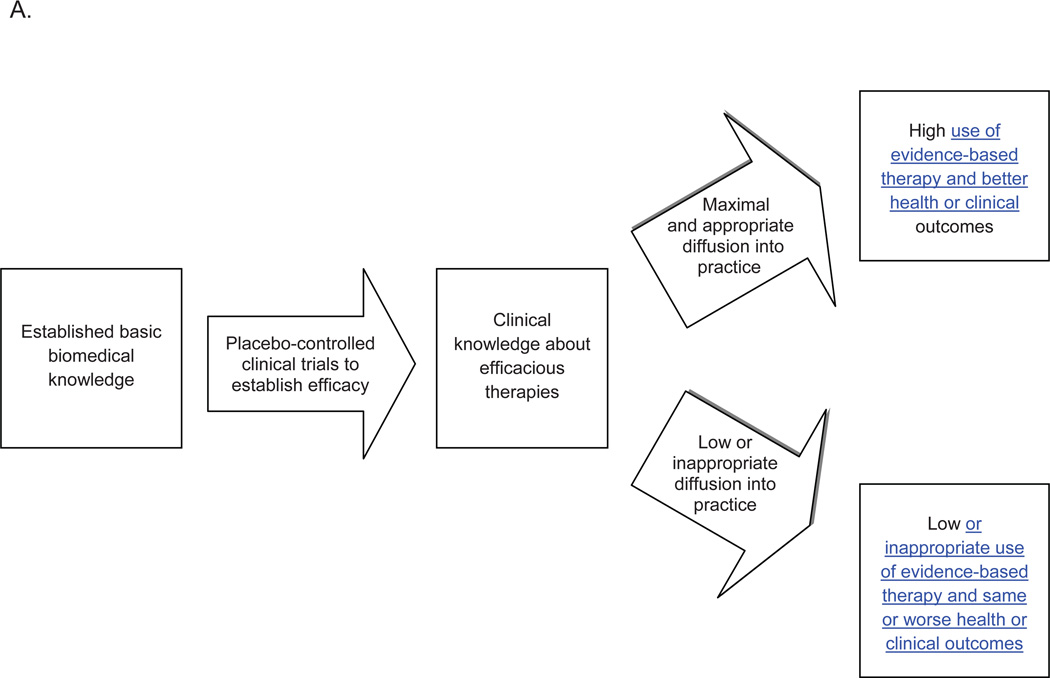

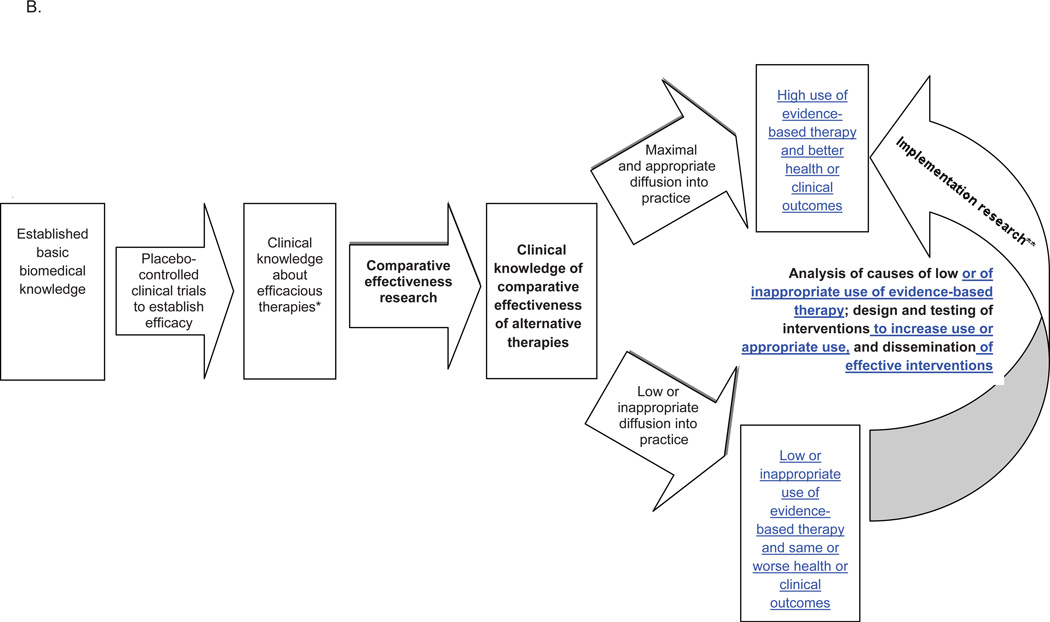

1a. Scope and Objectives of Comparative Effectiveness (CE) Research (Figure)

Figure. Roles of comparative effectiveness and implementation research in translating clinical knowledge into practice.

A. Historical model of knowledge-to-practice translation

B. Model of knowledge-to-practice translation that incorporates comparative effectiveness and implementation research

* Knowledge from efficacy studies can diffuse directly, as well

** The component of implementation research that provides knowledge of relative value of two forms of healthcare delivery or policies is also a form of comparative effectiveness research

Comparative Effectiveness Research Objective: Are there important clinical differences in outcome for condition X between alternative therapies A, B, & C? Informs practice and subsequent implementation research

Implementation Research Objectives: How is broad uptake or diffusion of treatment(s) A of known efficacy for condition X achieved? Ensures that population health improvement/benefit is derived from fruits of basic/clinical studies.

CE research is a relatively new term.2,9 The IOM report describes the knowledge gap this research addresses:

Although there may be studies that indicate that a treatment is efficacious relative to a placebo, there frequently are no studies that directly compare the different available alternatives….CER focuses attention on the evidence base to assist patients and healthcare providers across diverse health settings in making more informed decisions.4 (p. 1)

Thus, CE research can determine what preventions and treatments work for which patients, describing results at both population and subgroup levels, reflecting “the growing potential for individualized and predictive medicine – based on advances in genomics, systems biology, and other biomedical sciences”4 (p. 38).

A classic CE research study in neurology is the Veterans Administration (VA) randomized clinical trial (RCT) in the 1980s comparing phenytoin, phenobarbital, primidone, and carbamazepine as monotherapy for partial and secondarily generalized seizures.10 Its findings changed clinical practice: while seizure control was comparable across drugs, phenobarbital and primidone were less well tolerated, and they became second-line choices for initial monotherapy of partial seizures. A more recent example is a randomized trial comparing ethosuximide, valproic acid, and lamotrigine for newly diagnosed childhood absence epilepsy.11 The study compared not only freedom from treatment failure, defined by both efficacy against seizures and toxicity, but also attentional dysfunction, another important outcome in these children. Direct comparisons of a new treatment with existing treatments are uncommon, in part because FDA approval of treatments for certain conditions requires demonstration of safety and of superiority to placebo (efficacy), rather than to alternative available treatments.12 As a consequence, the pharmaceutical industry has seldom had an incentive to fund comparative effectiveness studies.

CE research also encompasses research on different methods for delivering healthcare services. For example, one CE research priority in the IOM report is to “Compare the effectiveness of comprehensive, coordinated care and usual care on objective measures of clinical status, patient-reported outcomes, and costs of care for people with multiple sclerosis”4 (p. 9). Fourteen of the 100 CE research priorities in the IOM report are specific to a particular neurologic disease; notably, several relatively common neurological disorders (e.g., Parkinson’s disease and stroke) were not included.13 However, other, more broadly framed priorities apply to neurologic disorders, for example, research comparing the effectiveness of strategies for increasing medication adherence, or comparing new remote patient monitoring and management technologies with usual care in managing chronic disease. CE research can also examine policy decisions, ranging from insurance coverage or formulary decisions to different reimbursement policies, as they affect care for neurological disorders.4

1b. Scope and Objectives of Implementation Research

One major bottleneck in the pathway from discovery research to better health across populations is implementation of knowledge about treatments of proven efficacy into widespread clinical care across diverse practice settings and patient populations.14 The ultimate goal is to improve population health through increased use of treatments proven to produce better health outcomes. An NINDS workshop participant explained, “[clinical trial] evidence should inform what to change; implementation research informs how to change” (E. Kerr, personal communication, October 1, 2009). Research to accelerate this implementation phase involves multiple scientific disciplines: economics, organizational management, psychometrics, anthropology, biostatistics, and others. As with CE research, increasingly central to successful implementation research are linked clinical and administrative databases from healthcare delivery organizations or insurers, and involvement of community-based healthcare services and practices.

Implementation research typically includes a sequence of studies beginning with measurement of current practice patterns, analysis of barriers to full implementation of an evidence-based treatment, then design and testing of multi-faceted, complex social and behavioral-based models of delivering care to produce higher uptake of treatments of proven value. Implementation success is enhanced by theoretical models of behavior change, frameworks for implementation, and evaluations (both qualitative and quantitative) to determine what processes did or did not occur, so that successful models can be sustained and widely disseminated.

Implementation research and CE research overlap in studies, primarily prospective, that compare the outcomes of different health system or policy strategies designed to increase use of evidence-based care (Figure B); other components of implementation research (analysis of barriers, etc.) and of CE research (comparing two alternative treatments) are distinct.

Health disparities research and implementation research

Socioeconomic and racial/ethnic differences in health are partially attributable to differences in access to and receipt of health care services, as well as to barriers to changing behavioral risk factors associated with adverse health outcomes.15 A 2003 IOM report stated that because of the “overwhelming” evidence of substantial disparities in healthcare delivery in the US, research should focus on developing and implementing strategies to redress them;16 research to create and test interventions to redress racial/ethnic disparities falls within implementation research. In the 1990s, a national probability sample of adults with HIV/AIDS was interviewed before and during the introduction of Highly Active Anti-Retroviral Therapy (HAART), which had just been shown to substantially reduce mortality. Although access to this therapy improved over time for all racial groups, lower access to HAART among African Americans than among whites persisted throughout this period.17 Such findings led to policy changes as well as to additional research on interventions to increase HAART use among African Americans.

Frameworks for investigating interventions to reduce disparities in neurological diseases exist.18 However, while some research has documented disparities in stroke19 and epilepsy care,20 and a recent Centers for Disease Control and Prevention study found a substantially lower median age at death among blacks than among whites with muscular dystrophies,21 implementation studies that design and test interventions to reduce disparities in neurological diseases are rare.22,23 NINDS has just completed a strategic planning process to identify gaps in disparities research for neurological disorders.24

2. Range of Methodological Approaches

CE and implementation research studies can use systematic reviews and meta-analyses of existing published literature and sometimes unpublished data; randomized controlled trials; observational studies based on claims databases or registries; and modeling. The strengths and limitations of alternative approaches can be debated; however, there is agreement that additional research is needed to improve methodology and to establish population databases with more clinical detail, in order to increase the strength of conclusions from these studies. The newly established Patient-Centered Outcomes Research Institute (PCORI) recently named a Methodology Committee, charged with establishing standards and promoting methodologic rigor in this research.

Systematic reviews and meta-analyses

Systematic reviews are the “best way to determine what is known and not known on a clinical topic” (P. Shekelle, personal communication, October 1, 2009). A systematic review is a structured method for identifying all relevant published research on a defined question, excluding studies that do not meet high standards of methodologic quality and rigor, abstracting key data from the remaining articles, and analyzing findings across relevant studies both quantitatively and qualitatively. Under certain conditions, a meta-analysis can be conducted, in which individual-level data (“pooled analysis”) or aggregate data (the most common approach) are statistically combined across trials. Original unpublished data can be obtained and included, but this is often difficult because of institutional restrictions on sharing such data. Meta-analyses may reveal a meaningful treatment effect not evident from the findings of smaller, separate trials: the efficacy of thrombolytic therapy for myocardial infarction would have been evident over a decade earlier had the separate trials been subjected to a cumulative meta-analysis, in which the pooled estimate is updated as each new trial is published.25
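To make the idea of a cumulative meta-analysis concrete, the following Python sketch pools aggregate (2x2 table) data with a fixed-effect, inverse-variance model on the log odds ratio scale and re-estimates the pooled effect each time a trial is added in publication order. The trial counts are invented for illustration, and the fixed-effect model is an assumption of this sketch; random-effects models are often preferred when trials are heterogeneous.

```python
import math
from dataclasses import dataclass


@dataclass
class Trial:
    """Hypothetical two-arm trial summarized as a 2x2 table."""
    year: int
    events_treated: int
    n_treated: int
    events_control: int
    n_control: int


def log_odds_ratio(t: Trial) -> tuple[float, float]:
    """Log odds ratio and its variance (Woolf's method); adds 0.5 to every cell if any cell is zero."""
    a = t.events_treated
    b = t.n_treated - t.events_treated
    c = t.events_control
    d = t.n_control - t.events_control
    if 0 in (a, b, c, d):
        a, b, c, d = a + 0.5, b + 0.5, c + 0.5, d + 0.5
    return math.log((a * d) / (b * c)), 1 / a + 1 / b + 1 / c + 1 / d


def cumulative_meta_analysis(trials: list[Trial]) -> list[tuple[int, float, float, float]]:
    """Fixed-effect, inverse-variance pooling, re-run each time a trial is added in year order.

    Returns (year, pooled OR, lower 95% limit, upper 95% limit) after each trial.
    """
    results = []
    sum_w = 0.0
    sum_wy = 0.0
    for t in sorted(trials, key=lambda tr: tr.year):
        y, v = log_odds_ratio(t)
        w = 1.0 / v              # inverse-variance weight
        sum_w += w
        sum_wy += w * y
        pooled = sum_wy / sum_w  # pooled log odds ratio so far
        se = math.sqrt(1.0 / sum_w)
        results.append((t.year, math.exp(pooled),
                        math.exp(pooled - 1.96 * se), math.exp(pooled + 1.96 * se)))
    return results


# Hypothetical trials (not real data): events are deaths; "treated" is the new therapy.
trials = [
    Trial(1960, 15, 100, 20, 100),
    Trial(1965, 30, 200, 42, 200),
    Trial(1970, 25, 150, 35, 150),
]
for year, odds_ratio, lo, hi in cumulative_meta_analysis(trials):
    print(f"through {year}: OR {odds_ratio:.2f} (95% CI {lo:.2f} to {hi:.2f})")
```

Because each added trial increases the total weight, the pooled interval can only narrow; the first year in which it excludes an odds ratio of 1 roughly marks when the accumulated evidence, rather than any single trial, became statistically convincing (repeated looks at accumulating data do, however, inflate false-positive risk).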

Systematic reviews and meta-analyses on comparative effectiveness synthesize all studies of sufficient quality comparing the benefits and risks of two or more alternative treatments for a condition. Such syntheses may identify important basic and clinical research gaps, supply evidence for guidelines, provide estimates for models, and uncover previously unrecognized effects (positive or negative) in subgroups. Reviews on adverse effects may be particularly useful for rare but serious adverse events. However, even a rigorous, well-done systematic review/meta-analysis cannot yield a definitive result if the underlying evidence base is lacking in quality and quantity.26 In addition, meta-analyses on the same topic may not reach the same conclusions, because of variation in the stringency of criteria for including studies. For example, a Cochrane review on speech and language therapy for aphasia following stroke concluded that there was insufficient evidence to guide recommendations for this therapy because none of the trials identified were of high enough quality to meet inclusion criteria.27 However, a non-Cochrane meta-analysis conducted at about the same time included eight RCTs of speech and language therapy following stroke and drew different conclusions about this therapy, because its inclusion criteria were less stringent than those of the Cochrane review.28

Randomized trials

Prospective trials with randomized assignment to alternative treatments or interventions are generally considered the best evidence because measured differences in outcomes are more likely to be attributable to the intervention and not to confounders such as patient health status or factors that are difficult to measure or account for in observational designs. NINDS has supported several high-profile CE trials, for example, the Carotid Revascularization Endarterectomy versus Stenting Trial (CREST), a comparison of carotid stenting with carotid endarterectomy.29 One product of CREST consonant with an objective of CE research was the ability to cautiously stratify the extent of benefit across different subgroups.

A non-inferiority trial approach may be useful if the standard treatment is associated with risk, inconvenience, or other undesirable properties; such a trial asks whether a new treatment is worse than the standard by no more than a prespecified margin. The pharmaceutical industry has supported non-inferiority RCTs aimed at determining whether its product is not worse than the standard treatment, for example, the RE-LY (Randomized Evaluation of Long-Term Anticoagulation Therapy) study of dabigatran,30 and the Prevention Regimen For Effectively avoiding Second Strokes (PROFESS) trial.31
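The logic of a non-inferiority comparison can be sketched in a few lines of Python. This is a simplified illustration, not the analysis used in RE-LY or PROFESS: it assumes a binary adverse outcome, compares absolute risks with a Wald confidence interval, and uses a hypothetical margin. Actual trials prespecify their own margins and often work on relative scales such as hazard ratios.

```python
import math


def noninferiority_risk_difference(events_new: int, n_new: int,
                                   events_std: int, n_std: int,
                                   margin: float, z: float = 1.96):
    """Compare the adverse-event risk of a new vs. standard treatment against a margin.

    The new treatment is declared non-inferior if the upper confidence limit
    for (risk_new - risk_std) lies below `margin`. z = 1.96 gives a two-sided
    95% interval, i.e., a one-sided alpha of 0.025.
    """
    p_new = events_new / n_new
    p_std = events_std / n_std
    diff = p_new - p_std
    # Wald standard error for a difference between two independent proportions
    se = math.sqrt(p_new * (1 - p_new) / n_new + p_std * (1 - p_std) / n_std)
    upper = diff + z * se
    return diff, upper, upper < margin


# Hypothetical numbers: 5000 patients per arm, margin of 1 percentage point.
diff, upper, ok = noninferiority_risk_difference(50, 5000, 50, 5000, margin=0.01)
print(f"difference {diff:.4f}, upper limit {upper:.4f}, non-inferior: {ok}")
```

Note that non-inferiority is judged only by the upper bound: a trial can fail to show non-inferiority even when the observed risks are identical, if it is too small for the interval to fall inside the margin.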

CE trials are often large, lengthy, and very costly, requiring stringent adherence to regulatory, safety, and quality protocols. Some CE trials have kept randomized assignment but departed from traditional RCTs in certain aspects of design. For example, in contrast to the 1980s VA RCT comparing different monotherapy treatments for epilepsy, the recent Standard and New Antiepileptic Drugs (SANAD) study was an open-label randomized trial. The open-label design lowered the cost, making the trial feasible, but lack of blinding can introduce a higher potential for bias.32

Other trials of healthcare delivery interventions might require randomizing a unit other than the patient (for example, a physician or clinic). Such cluster RCT designs require special expertise, but guidance on how to optimize their quality is now published.33 Qualitative process evaluations that analyze how well the intervention was put in place are essential.
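One reason cluster designs need special expertise is that patients within the same clinic tend to resemble one another, so each additional patient contributes less information than in an individually randomized trial. A standard way to quantify this is the design effect, 1 + (m − 1) × ICC, where m is the average cluster size and ICC is the intracluster correlation coefficient. The Python sketch below applies it to a sample size calculation; the planning values are hypothetical, and equal cluster sizes are assumed.

```python
import math


def design_effect(mean_cluster_size: float, icc: float) -> float:
    """Variance inflation from randomizing clusters: 1 + (m - 1) * ICC."""
    return 1 + (mean_cluster_size - 1) * icc


def clusters_per_arm(n_individual_per_arm: int, mean_cluster_size: int, icc: float) -> int:
    """Clusters per arm needed to match an individually randomized trial that would
    require `n_individual_per_arm` patients per arm (equal cluster sizes assumed)."""
    inflated_n = n_individual_per_arm * design_effect(mean_cluster_size, icc)
    return math.ceil(inflated_n / mean_cluster_size)


# Hypothetical planning values: 300 patients per arm if individually randomized,
# 20 patients per clinic, intracluster correlation of 0.05.
print(design_effect(20, 0.05))
print(clusters_per_arm(300, 20, 0.05))
```

Even a modest ICC of 0.05 nearly doubles the required sample here (design effect about 1.95), which is why recruiting more clusters usually helps more than enrolling more patients per cluster.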

One of the few implementation research studies that NINDS has supported is a trial to increase use of tPA in community hospitals, INcreasing Stroke Treatment through INteractive behavioral Change Tactic (INSTINCT). Its goal was to determine if a multi-faceted intervention using opinion leaders, targeted messaging on stroke care, performance feedback, and a ‘hotline’ for physician support, increases rates of tPA use among emergency departments and physicians in community hospitals. The intervention design was guided by review of existing literature and a qualitative study of barriers.34

Observational studies

Some questions may be addressed more feasibly through observational studies, particularly retrospective studies of existing electronic medical records (EMRs), administrative or billing claims databases, or disease registries, than through expensive prospective randomized trials. Observational study designs employed for CE research include cohort studies (preferably with a new-user design, in which patients enter the cohort when they first start treatment),35 case-control studies, natural experiments (for example, following a formulary or policy change), and area variation studies.36
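A new-user cohort can be assembled from claims-style data by requiring a drug-free "washout" period of observed enrollment before each patient's first fill, so that prevalent users (who have already survived early treatment) are excluded. The Python sketch below assumes a hypothetical data layout (a list of `(patient_id, fill_date)` pairs and a dictionary of enrollment start dates), a hypothetical 365-day washout, and continuous enrollment with no gaps; real claims data would also require checking enrollment gaps.

```python
from datetime import date, timedelta


def new_user_cohort(fills: list[tuple[str, date]],
                    enrollment_start: dict[str, date],
                    washout_days: int = 365) -> dict[str, date]:
    """Return {patient_id: index_date} for new users of a drug.

    A patient counts as a new user if the first observed fill comes at least
    `washout_days` after enrollment began, i.e., there were no fills during
    the washout window. Assumes continuous enrollment with no gaps.
    """
    first_fill: dict[str, date] = {}
    for patient_id, fill_date in fills:
        if patient_id not in first_fill or fill_date < first_fill[patient_id]:
            first_fill[patient_id] = fill_date

    cohort = {}
    for patient_id, index_date in first_fill.items():
        start = enrollment_start.get(patient_id)
        if start is not None and index_date - start >= timedelta(days=washout_days):
            cohort[patient_id] = index_date  # follow-up would begin at this index date
    return cohort


# Hypothetical records: B and C lack a full year of prior enrollment; D has no enrollment data.
fills = [("A", date(2008, 3, 1)), ("A", date(2008, 6, 1)), ("B", date(2007, 2, 1)),
         ("C", date(2009, 1, 15)), ("D", date(2008, 9, 1))]
enrollment = {"A": date(2006, 1, 1), "B": date(2006, 6, 1), "C": date(2008, 6, 1)}
print(new_user_cohort(fills, enrollment))
```

Patients B and C may well be new users, but with less than a year of observed history the data cannot distinguish them from people continuing an earlier course, so the design conservatively excludes them.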

However, a major limitation of such approaches is that key variables needed to compare groups are typically unavailable, for example, initial disease severity, which strongly influences both the likelihood of receiving certain treatments and outcomes. Analyses that do not account for such variables are likely to be biased: a retrospective analysis of warfarin versus aspirin for intracranial stenosis found that warfarin reduced stroke by half,37 but a later prospective RCT found no difference between warfarin and aspirin.38 This discrepancy could have arisen if clinicians gave warfarin to persons in better health and aspirin to persons in worse health (for example, those at higher risk of falls), and persons in better health had fewer strokes. These retrospective data did not enable investigators to adjust for differences in the selection of one treatment over the other.

Two developments may partially alleviate this central limitation: (1) establishment of carefully-designed registries of certain prospectively collected, standardized healthcare data, and (2) advances in methodologic research on analytic approaches to treatment selection bias and residual confounding in observational study designs, e.g., utilizing propensity scores.
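The following Python simulation illustrates both the bias described above and how a propensity score can correct it when the confounder is measured. Everything here is hypothetical: a binary "frailty" variable makes patients less likely to be treated and more likely to have a stroke, while the drug itself has no effect. Inverse-probability-of-treatment weighting (one of several propensity score methods, chosen here for brevity) reweights each group to resemble the whole cohort. It can remove bias only from confounders that were actually recorded; if frailty were missing from the data, no weighting could recover the true null effect.

```python
import random


def simulate_cohort(n: int, rng: random.Random) -> list[tuple[bool, bool, bool]]:
    """Hypothetical cohort of (frail, treated, stroke) tuples.

    Frailty drives both treatment choice and stroke risk; the drug has NO
    effect on stroke, so any apparent benefit is confounding.
    """
    rows = []
    for _ in range(n):
        frail = rng.random() < 0.4
        treated = rng.random() < (0.3 if frail else 0.7)   # healthier patients treated more often
        stroke = rng.random() < (0.15 if frail else 0.05)  # risk depends on frailty only
        rows.append((frail, treated, stroke))
    return rows


def naive_risk_difference(rows) -> float:
    """Unadjusted stroke risk in treated minus untreated."""
    treated = [stroke for _, t, stroke in rows if t]
    untreated = [stroke for _, t, stroke in rows if not t]
    return sum(treated) / len(treated) - sum(untreated) / len(untreated)


def ipw_risk_difference(rows) -> float:
    """Inverse-probability-of-treatment weighted risk difference.

    With a single binary confounder, the estimated propensity score
    P(treated | frail) is simply the treated share within each stratum.
    """
    propensity = {}
    for stratum in (True, False):
        treated_flags = [t for f, t, _ in rows if f == stratum]
        propensity[stratum] = sum(treated_flags) / len(treated_flags)

    num_t = den_t = num_u = den_u = 0.0
    for frail, treated, stroke in rows:
        if treated:
            w = 1.0 / propensity[frail]
            num_t += w * stroke
            den_t += w
        else:
            w = 1.0 / (1.0 - propensity[frail])
            num_u += w * stroke
            den_u += w
    return num_t / den_t - num_u / den_u


rows = simulate_cohort(200_000, random.Random(2009))
print(f"naive risk difference: {naive_risk_difference(rows):+.3f}")
print(f"IPW risk difference:   {ipw_risk_difference(rows):+.3f}")
```

The naive comparison makes the useless drug look protective (an expected risk difference of roughly 4 percentage points in its favor under these assumptions), while the weighted estimate is close to zero.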

Modeling

Modeling the costs and outcomes of alternative prevention strategies or treatments requires two steps: (1) generating a model using data from a large study such as the Framingham Heart Study, and (2) comparing projections of the impact on health across populations as inputs describing the effects of different programs or treatments are varied, which requires population data. Results of these sophisticated computer simulation models are typically used by policy-makers. Approaches are particularly well developed for coronary heart disease, because many large national datasets exist (Framingham, National Health and Nutrition Examination Survey, census data, others) from which the population-wide impact of reducing different risk factors can be simulated and compared. For example, the Coronary Heart Disease Policy Model was used to quantify the projected reduction in annual incidence of coronary heart disease and myocardial infarction, and also of stroke, from reducing dietary salt by varying amounts, as might be achieved by regulating the salt content of processed foods.39 Data needed to operate models include levels of other relevant risk factors, intervention costs, mortality or health status, and population demographics. While a stroke prevention model has been developed,40 no models exist for other neurological conditions, largely because too few large datasets and studies currently exist to apply this approach.
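Policy models such as the one cited above are far more detailed, but their core mechanic, moving a simulated population between health states year by year and comparing scenarios, can be sketched briefly. The Python example below is a deliberately simplified three-state cohort projection (well, post-event, dead). The states, all transition probabilities, and the 5% relative risk reduction are hypothetical values chosen for illustration, not inputs from any published model.

```python
def project_events(population: float, years: int, p_event: float,
                   p_death_well: float, p_death_post: float) -> dict[str, float]:
    """Project a closed cohort through three states (well, post-event, dead) in one-year cycles.

    Each year, a fraction of 'well' persons has a first event or dies, and a
    fraction of 'post-event' persons dies. Returns cumulative first events
    and the final size of each state.
    """
    if p_event + p_death_well > 1:
        raise ValueError("annual transition probabilities out of 'well' exceed 1")
    well, post, dead = float(population), 0.0, 0.0
    cumulative_events = 0.0
    for _ in range(years):
        events = well * p_event
        deaths_well = well * p_death_well
        deaths_post = post * p_death_post
        well -= events + deaths_well
        post += events - deaths_post
        dead += deaths_well + deaths_post
        cumulative_events += events
    return {"events": cumulative_events, "well": well, "post": post, "dead": dead}


# Hypothetical inputs (not from any published model): 1 million adults followed 10 years.
baseline = project_events(1_000_000, 10, p_event=0.004, p_death_well=0.01, p_death_post=0.05)
# Hypothetical intervention lowering annual event risk by 5% (relative risk 0.95).
intervention = project_events(1_000_000, 10, p_event=0.004 * 0.95,
                              p_death_well=0.01, p_death_post=0.05)
print(f"events averted over 10 years: {baseline['events'] - intervention['events']:.0f}")
```

Varying the relative risk input across scenarios corresponds to the second modeling step described above; the realism of the answer depends entirely on having population data to estimate each transition probability, which is the bottleneck for most neurological conditions.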

3. Resources for CE and Implementation Research

Major data sources for CE and implementation research include existing scientific literature or datasets (for systematic reviews/meta-analyses), registries (for observational studies and modeling), and practice networks (for randomized trials). Improving the infrastructure in these areas is one goal of recent federal CE research funding.

Centers specializing in systematic reviews/meta-analysis

The Agency for Healthcare Research and Quality (AHRQ) has an established network of Evidence-Based Practice Centers with scientific and clinical expertise in conducting systematic reviews and meta-analyses. About nine topics are funded by AHRQ yearly, nominated by “professional societies, health systems, employers, insurers, providers, and consumer groups.”41 Factors considered in selection of topics include health burden, societal cost, potential to reduce variation in care, how engaged the nominating organization will be in disseminating findings, and topic relevance to federal programs like Medicare. The CDC generates systematic reviews of existing evidence on the validity and utility of emerging genetic tests for clinical practice applications.42 The Cochrane Collaboration, established in 1993 to facilitate and collate standardized evidence reviews in support of clinical decision-making, has centers worldwide and over 4000 systematic reviews and meta-analyses.43

Registries

Defined as “an organized system that uses observational study methods to collect uniform data to evaluate specified outcomes for a population defined by a particular disease, condition, or exposure, and that serves one or more predetermined scientific, clinical, or policy purposes,”44 (p. 1) a registry can focus on a specific disease or condition, on a population that has received a device or a particular pharmaceutical, or on those who have received a certain healthcare service. A voluntary registry for a rare neurological disorder is ALS C.A.R.E., a physician-led data collection effort with quarterly feedback reports for comparing and improving care.45 A well-known national voluntary registry for a common neurological disorder is the Get With the Guidelines (GWTG) – Stroke registry.46 Modeled after a coronary artery disease registry, GWTG – Stroke aims to improve adherence to inpatient stroke care guidelines. The registry is heavily supported by the American Heart Association; as of July 2009, over one million stroke patient records had been entered. While the registry serves a quality improvement function for participating hospitals, it has also been the source of many studies of levels and determinants of stroke care and outcomes. Disadvantages of this and many other registries are that participating hospitals are not representative of US hospitals, completeness of data capture is not monitored, follow-up is costly and requires additional attention to privacy issues, there may be selection bias in which patients are entered at each site, and potential confounders for a particular research question may not be captured in the registry.

Criteria for evaluating the quality of data registries have been developed.47 AHRQ has budgeted $48 million “for the establishment or enhancement of national patient registries that can be used for researching the longitudinal effects of different interventions and collecting data on under-represented populations” (S. Smith, personal communication, October 2, 2009) and has started a “Registry of Registries.” As electronic capture of health data and linkage of different databases become easier, establishing registries of sufficient data quality to be tapped for research is increasingly feasible. For example, the National Cancer Institute (NCI) has linked cases from SEER (Surveillance, Epidemiology, and End Results), which for over 35 years has maintained detailed clinical, demographic, and cause-of-death data on persons with cancer, with Medicare claims data on health care utilization. This enables investigation of variations in the use of cancer tests, procedures, and treatments from diagnosis through treatment.48

Advances in information technology and incentives for healthcare delivery systems to adopt EMRs will further accelerate CE and implementation research. Development of “hybrid” models that link administrative claims data, EMRs, and registries (both within the organization and outside it, such as SEER) within a large integrated delivery system, such as a health maintenance organization or the VA, is moving forward rapidly. Each source captures different types of data critical for a particular research question (Table 1). Advances in health information technology may lead to systematic collection of patient-reported data on health status and of standardized data on disease severity, such as the NIH Stroke Scale. Such hybrid databases could then become powerful sources for CE research, linking enrollment data with data on drugs and procedures, hospitalizations, laboratory results, physiologic variables like blood pressure, disease severity, and patient-reported outcomes. Further, such EMR-derived registries from large health plans or healthcare networks may make CE research feasible for rare or uncommon diseases. The HMO Research Network is a consortium of 16 integrated delivery systems poised to conduct research to “transform health care practice and improve health through population-based collaborative research” (J. Selby, personal communication, October 2, 2009) via a hybrid-type data registry.
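The value of a hybrid database lies in bringing fields from different sources together for the same patient. The Python sketch below shows the simplest case, deterministic linkage on a shared patient identifier within a single delivery system, and then identifies which patients have both a blood pressure (from the EMR) and a stroke severity score (from a registry) available for adjustment. All identifiers, field names, and codes are hypothetical; linkage across organizations (as in SEER-Medicare) generally requires more elaborate matching and privacy safeguards than this sketch assumes.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LinkedRecord:
    """One patient's view across sources in a hypothetical hybrid database."""
    patient_id: str
    claims_codes: list[str] = field(default_factory=list)  # diagnosis/procedure/drug codes from claims
    systolic_bp: Optional[int] = None                       # vital sign from the EMR
    nihss: Optional[int] = None                             # stroke severity from a registry


def link_sources(enrolled: set[str],
                 claims: dict[str, list[str]],
                 emr_sbp: dict[str, int],
                 registry_nihss: dict[str, int]) -> dict[str, LinkedRecord]:
    """Deterministic linkage on a shared patient identifier, restricted to enrolled members."""
    return {
        pid: LinkedRecord(
            patient_id=pid,
            claims_codes=claims.get(pid, []),
            systolic_bp=emr_sbp.get(pid),
            nihss=registry_nihss.get(pid),
        )
        for pid in sorted(enrolled)
    }


def analyzable_for_severity_adjustment(linked: dict[str, LinkedRecord]) -> list[str]:
    """Patients with both a blood pressure and a severity score available."""
    return [pid for pid, r in linked.items() if r.systolic_bp is not None and r.nihss is not None]


# Hypothetical data; "p9" appears in claims but is not an enrolled member.
enrolled = {"p1", "p2", "p3"}
claims = {"p1": ["dx:stroke"], "p2": ["dx:stroke", "rx:statin"], "p9": ["dx:stroke"]}
emr_sbp = {"p1": 150, "p2": 132, "p3": 128}
registry_nihss = {"p1": 4, "p3": 12}

linked = link_sources(enrolled, claims, emr_sbp, registry_nihss)
print(analyzable_for_severity_adjustment(linked))
```

Restricting to enrolled members mirrors the role of enrollment data in defining the population at risk, and the final filter shows why a registry severity score matters: without it, patient p2 could not be adjusted for severity.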

Table 1.

Comparison of Types of Variables Typically* Available in Different Databases for Comparative Effectiveness and Implementation Research

| TYPE OF DATABASE (example) | Patient identifiers | Diagnosis, visit, & procedure codes (validity varies) | Vital signs (e.g., BP, weight) | Laboratory values | Medication prescriptions and fills | Patient-reported measures† | Cost |
|---|---|---|---|---|---|---|---|
| National survey (NHIS‡) | no | no (except for self-reported diagnoses) | no | no | no | yes | no |
| Claims (Medicare) | yes | yes | no | no | yes | no | yes |
| EMR in IDS§ (VA) | yes | yes | yes | yes | yes | no | no |
| Registry (GWTG∥) | yes (if longitudinal) | selective | yes | selective | selective | yes | no |
| Hybrid model¶ | yes | yes | yes | yes | yes | yes | if linked to claims |

* There are exceptions; for example, NHANES (National Health and Nutrition Examination Survey) is a national survey that includes vital signs and some laboratory data.

† Such as symptom ratings, quality of life, satisfaction with care, and out-of-pocket costs.

‡ NHIS = National Health Interview Survey

§ EMR = electronic medical record; IDS = integrated delivery system

∥ GWTG = Get With the Guidelines

¶ For example, EMR in IDS linked to a registry and/or to claims, such as the Kaiser Permanente Total Joint Replacement Registry66

Adapted from: Selby, J. (2009, October). Claims databases/hybrid models that include registries. In S. C. Johnston and B. Vickrey (co-Chairs), NINDS workshop for developing strategies to advance implementation (T-2) and comparative effectiveness research to improve the health of patients with neurological diseases. Workshop conducted in Bethesda, Maryland.

In addition to registries, existing databases can be tapped for CE and implementation research. The Society of General Internal Medicine (SGIM) website includes a comprehensive listing of 46 major datasets that can be used in clinical and health services research, and another 12 repositories or compendia of additional databases. In addition to information on access, the website includes an SGIM expert’s comments on the characteristics, strengths, and limitations of each dataset, and a partial list of publications to show examples of research using that dataset.49

Practice networks

While a practice network includes many registry elements, its primary purpose is to conduct studies on the impact of healthcare interventions in “real-world” settings through its established infrastructure. The Vermont Oxford Network, established 20 years ago, now includes over 800 hospitals worldwide contributing data on very low birthweight infants and neonatal ICU patients.50 It has conducted implementation research trials testing different approaches to improving care delivery, and has established a neonatal encephalopathy registry to track the implementation and dissemination of therapeutic hypothermia, a recently proven treatment.

4. Future Directions

Manpower needs

Multidisciplinary, collaborative research that draws heavily on social science and public health disciplines has been the norm in health services and outcomes research since its inception, and it requires advanced research training. Typically, such research is championed and led by a clinician who has completed a multi-year research fellowship and obtained at least a master’s degree in public health or a related field. There is only a small cadre of neurologist investigators in this field today. With the exception of the AAN’s Practice Research Training Fellowship, most fellowship training opportunities, such as the Robert Wood Johnson Foundation Clinical Scholars Program or VA health services research fellowships,51 are not specific to neurologists. Such research fellowship training could position neurologists to compete for NIH career development awards (K23), for awards through the VA Health Services Research and Development Service career development award mechanism,52 or for training opportunities in existing NIH-funded networks that have a training component, such as SPOTRIAS. The CTSA strategic goal committee has included CE research training within CTSA T32 and K12 awards,53 but additional opportunities are needed to develop neurologist investigators. To attract and develop future leaders in this field, training program directors and department chairs need to be aware of the field’s value and of the need for advanced training to conduct high-quality research.

Considerations for research priority-setting

Prioritizing questions of greatest significance and impact for investment of research resources across all of medicine is critical.54 A unique challenge in neurology for CE and implementation research priority-setting is the sheer number of diseases, ranging from rare diseases with very high individual health burdens to common diseases. The new PCORI will set priorities for distributing funds allocated to CE research, incorporating input from the public, “landscape reviews,” its board, its Methodology Committee, and pilot projects it is commissioning.55 It is hoped that these priorities will include neurological disorders.

At the NINDS workshop, which NINDS convened to move this research forward, representatives of other NIH institutes described how they have implemented priority-setting in CE and implementation research. NHLBI has a specific strategic goal “to generate an improved understanding of the processes involved in translating research into practice…evaluate the risks, benefits, and costs of diagnostic tests and treatments in representative populations and settings…”56 An example is the SPRINT (Systolic Blood Pressure Intervention Trial) initiative, designed to learn “whether treating to a systolic blood pressure lower than the currently recommended goal will reduce cardiovascular disease mortality and morbidity” (D. Bild, October 1, 2009). NHLBI’s formal priority-setting process begins with advice gathered through workshops and discussions with investigators, staff development, an “Idea Forum,” a Board of External Experts, and its Advisory Council, and it ends with the Director’s decision. Criteria for prioritizing an initiative are its perceived scientific importance, its potential impact on clinical practice or public health, and whether the question is unlikely to be addressed by industry. NCI has an extensive CE and implementation research program; it considers in-house multidisciplinary staff expertise advisable and leverages resources through collaboration with other NIH institutes and federal agencies. It engages the public, advocates, and patients early, and it invests in measurement and data standards. Two NIMH strategic objectives directly address CE and implementation research: developing personalized interventions and determining how interventions can be effective across diverse care settings. NIMH has a Division of Services & Intervention Research, and it increasingly encourages investigators to emphasize the public health impact of their findings, to disseminate those findings intensively as an explicit endpoint, and to propose a priori a hand-off of findings to policy-makers. Major challenges NIMH aims to address are conducting this research within actual practice settings and improving the science of dissemination and implementation research.57

Outside of NIH, the VA’s Quality Enhancement Research Initiative (QUERI) supports research on how to translate evidence into routine clinical practice.58 QUERI takes advantage of the VA’s large delivery system and its network of health services researchers to focus on practice-based implementation research designed for broad dissemination of effective interventions. At present, QUERIs exist for stroke and polytrauma (encompassing traumatic brain injury).

Strategies for priority-setting suggested at the NINDS workshop included obtaining input from high-level reports (for example, from the IOM), professional societies, advocacy organizations, NINDS program staff, other institutes and agencies (where responsibility for research on a disease is shared), and representatives of organizations such as CMS. Formal, state-of-the-art methods for optimizing such judgments, together with a transparent process, are desirable.59 Suggested priority-setting criteria include potential impact on clinical practice and public health (including when one treatment is perceived as offering possibly marginally greater benefit than another but at a much higher cost, or as being much more difficult to implement in community practice than in clinical trial settings), whether industry is unlikely to address the question, overall burden of disease, and evidence of disparities in the translation of evidence into neurological care and outcomes (Table 2).60,61

Table 2. Potential scientific opportunity criteria for comparative effectiveness and implementation research

Source: National Institute of Neurological Disorders and Stroke. NINDS Strategic Planning Disease Panel Final Report. http://www.ninds.nih.gov/about_ninds/plans/strategic_plan/disease_module.pdf. Accessed on May 3, 2011.

Funding

Both of the major federal sponsors of CE and implementation research are within the US Department of Health and Human Services (DHHS): NIH, with a budget of over $30 billion in FY2010,62 only a small percentage of which went to CE or implementation research, and AHRQ, with a budget of $403 million in FY2010, nearly all of which went to health services research. The non-profit, independent PCORI plans $3 billion in CE research funding over the next decade, to be generated from general federal revenues, the Medicare Trust Fund, and private health plans; 20% of these funds must be transferred to DHHS for dissemination.5,63,64 Among studies categorized as “comparative effectiveness” in the NIH Research Portfolio Online Reporting Tools, NINDS ranked eighth of 17 institutes in absolute FY2010 non-ARRA spending but 14th in spending as a percentage of its budget (1.1%).65 The current categorization process may undercount CE studies, but it will be refined so that expenditures can be tracked by institute and center in the future.

Conclusion

Comparative effectiveness and implementation research, concepts now coming to widespread attention, will remain prominent because linking basic and clinical research findings to better, more affordable population health is imperative. Other specialties have made substantial inroads in developing tools and conducting studies in these areas. Neurology should make a concerted effort to embrace this research within the broader neuroscience research continuum, so that the knowledge gained from high-quality research can be translated into tangible benefits for patients with neurological disorders.

Acknowledgements

The following were speakers in the October 2009 NINDS workshop on comparative effectiveness and implementation research; the authors thank them for providing information and references for many of the concepts presented in this article:

David Atkins, MD, MPH, Director, VA QUERI Program

Diane Bild, MD, MPH, NHLBI, NIH

David Chambers, DPhil, NIMH, NIH

Robert T. Croyle, PhD, NCI, NIH

Alan S. Go, MD, Kaiser Permanente Northern California

Lee Goldman, MD, MPH, Columbia University

Sheldon Greenfield, MD, University of California, Irvine

Deborah Hirtz, MD, NINDS, NIH

S. Claiborne Johnston, MD, PhD, University of California, San Francisco (Chairperson)

Petra Kaufmann, MD, NINDS, NIH

Eve Kerr, MD, MPH, University of Michigan

Walter Koroshetz, MD, NINDS, NIH

Michael Lauer, MD, NHLBI, NIH

Brian S. Mittman, PhD, VA Greater Los Angeles HealthCare System

Robert H. Pfister, MD, University of Vermont

Lee H. Schwamm, MD, Massachusetts General Hospital

Phillip Scott, MD, University of Michigan

Joe V. Selby, MD, MPH, Kaiser Permanente, Oakland, California

Paul Shekelle, MD, PhD, West Los Angeles Veterans Affairs Medical Center

Scott R. Smith, PhD, Agency for Healthcare Research and Quality

Barbara G. Vickrey, MD, MPH, University of California, Los Angeles (Chairperson)

We also thank Robert Zalusky, PhD, of NINDS for suggestions.

REFERENCES

- 1. Lauer MS, Collins FS. Using science to improve the nation’s health system: NIH’s commitment to comparative effectiveness research. JAMA. 2010;303(21):2182–2183. doi: 10.1001/jama.2010.726.

- 2. Congressional Budget Office. Research on the comparative effectiveness of medical treatment: issues and options for an expanded federal role. Washington, DC: Congressional Budget Office; 2007.

- 3. Westfall JM, Mold J, Fagnan L. Practice-based research--“Blue Highways” on the NIH roadmap. JAMA. 2007;297(4):403–406. doi: 10.1001/jama.297.4.403.

- 4. IOM (Institute of Medicine). Initial national priorities for comparative effectiveness research. Washington, DC: The National Academies Press; 2009.

- 5. The Patient Protection and Affordable Care Act, 23 (on-line) [Accessed September 23, 2011]; Available: http://frwebgate.access.gpo.gov/cgibin/getdoc.cgi?dbname=111_cong_bills&docid=f:h3590enr.txt.pdf.

- 6. Comparative Effectiveness Research Key Function Committee (on-line) [Accessed September 23, 2011]; Available: http://www.ctsaweb.org/index.cfm?fuseaction=committee.viewCommittee&com_ID=1221&abbr=CERKFC.

- 7. Collins FS. Research agenda. Opportunities for research and NIH. Science. 2010;327(5961):36–37. doi: 10.1126/science.1185055.

- 8. National Institute of Neurological Disorders and Stroke Strategic Priorities and Principles (on-line) [Accessed May 3, 2011]; Available: http://www.ninds.nih.gov/about_ninds/plans/NINDS_strategic_plan.htm.

- 9. Horn SD, Gassaway J. Practice-based evidence study design for comparative effectiveness research. Med Care. 2007;45(10 Suppl 2):S50–S57. doi: 10.1097/MLR.0b013e318070c07b.

- 10. Mattson RH, Cramer JA, Collins JF, Smith DB, Delgado-Escueta AV, Browne TR, et al. Comparison of carbamazepine, phenobarbital, phenytoin, and primidone in partial and secondarily generalized tonic-clonic seizures. N Engl J Med. 1985;313(3):145–151. doi: 10.1056/NEJM198507183130303.

- 11. Glauser TA, Cnaan A, Shinnar S, Hirtz DG, Dlugos D, Masur D, et al. Ethosuximide, valproic acid, and lamotrigine in childhood absence epilepsy. N Engl J Med. 2010;362(9):790–799. doi: 10.1056/NEJMoa0902014.

- 12. O’Connor AB. Building comparative efficacy and tolerability into the FDA approval process. JAMA. 2010;303(10):979–980. doi: 10.1001/jama.2010.257.

- 13. Johnston SC, Hauser SL. IOM task impossible: set the agenda for neurological comparative effectiveness research. Ann Neurol. 2009;66(5):A4–A6. doi: 10.1002/ana.21913.

- 14. Sung NS, Crowley WF, Jr, Genel M, Salber P, Sandy L, Sherwood LM, et al. Central challenges facing the national clinical research enterprise. JAMA. 2003;289(10):1278–1287. doi: 10.1001/jama.289.10.1278.

- 15. King G, Williams DR. Race and health: a multi-dimensional approach to African-American health. In: Amick BC, Levine S, Tarlov AR, Walsh DC, editors. Society and Health. New York: Oxford University Press; 1995.

- 16. IOM (Institute of Medicine). Unequal Treatment: Confronting Racial and Ethnic Disparities in Health Care. Washington, DC: The National Academies Press; 2003.

- 17. Shapiro MF, Morton SC, McCaffrey DF, Senterfitt JW, Fleishman JA, Perlman JF, Athey LA, Keesey JW, Goldman DP, Berry SH, Bozzette SA. Variations in the care of HIV-infected adults in the United States: results from the HIV Cost and Services Utilization Study. JAMA. 1999;281:2305–2315. doi: 10.1001/jama.281.24.2305.

- 18. Cooper LA, Hill MN, Powe NR. Designing and evaluating interventions to eliminate racial and ethnic disparities in health care. J Gen Intern Med. 2002;17(6):477–486. doi: 10.1046/j.1525-1497.2002.10633.x.

- 19. Stansbury JP, Jia H, Williams LS, Vogel WB, Duncan PW. Ethnic disparities in stroke: epidemiology, acute care, and postacute outcomes. Stroke. 2005;36(2):374–386. doi: 10.1161/01.STR.0000153065.39325.fd.

- 20. Burneo JG, Jette N, Theodore W, Begley C, Parko K, Thurman DJ, et al. Disparities in epilepsy: report of a systematic review by the North American Commission of the International League Against Epilepsy. Epilepsia. 2009;50(10):2285–2295. doi: 10.1111/j.1528-1167.2009.02282.x.

- 21. Kenneson A, Vatave A, Finkel R. Widening gap in age at muscular dystrophy-associated death between blacks and whites, 1986–2005. Neurology. 2010;75(11):982–989. doi: 10.1212/WNL.0b013e3181f25e5b.

- 22. Dromerick AW, Gibbons MC, Edwards DF, et al. Preventing recurrence of thromboembolic events through coordinated treatment in the District of Columbia. Int J Stroke. 2011;6(5):454–460. doi: 10.1111/j.1747-4949.2011.00654.x.

- 23. Cheng EM, Cunningham WE, Towfighi A, Sanossian N, Bryg RJ, Anderson TL, Guterman JJ, Gross-Schulman SG, Beanes S, Jones AS, Liu HH, Ettner SL, Saver JL, Vickrey BG. Randomized controlled trial of an intervention to enable stroke survivors throughout the Los Angeles County safety net to “Stay With the Guidelines”. Circ Cardiovasc Qual Outcomes. 2011;4:229–234. doi: 10.1161/CIRCOUTCOMES.110.951012.

- 24. NINDS (National Institute of Neurological Disorders and Stroke). Report from the strategic planning advisory panel on health disparities (on-line) [Accessed May 3, 2011]; Available: http://www.ninds.nih.gov/about_ninds/plans/NINDS_health_disparities_report.htm.

- 25. Antman EM, Lau J, Kupelnick B, Mosteller F, Chalmers TC. A comparison of results of meta-analyses of randomized control trials and recommendations of clinical experts. Treatments for myocardial infarction. JAMA. 1992;268(2):240–248.

- 26. Ioannidis JP, Cappelleri JC, Lau J. Meta-analyses and large randomized, controlled trials. N Engl J Med. 1998;338(1):59; author reply 61–62. doi: 10.1056/NEJM199801013380112.

- 27. Greener J, Enderby P, Whurr R. Speech and language therapy for aphasia following stroke. Cochrane Database Syst Rev. 2000;(2):CD000425. doi: 10.1002/14651858.CD000425.

- 28. Bhogal SK, Teasell R, Speechley M. Intensity of aphasia therapy, impact on recovery. Stroke. 2003;34(4):987–993. doi: 10.1161/01.STR.0000062343.64383.D0.

- 29. Brott TG, Hobson RW, 2nd, Howard G, Roubin GS, Clark WM, Brooks W, et al. Stenting versus endarterectomy for treatment of carotid-artery stenosis. N Engl J Med. 2010;363(1):11–23. doi: 10.1056/NEJMoa0912321.

- 30. Connolly SJ, Ezekowitz MD, Yusuf S, Eikelboom J, Oldgren J, Parekh A, et al. Dabigatran versus warfarin in patients with atrial fibrillation. N Engl J Med. 2009;361(12):1139–1151. doi: 10.1056/NEJMoa0905561.

- 31. Sacco RL, Diener H-C, Yusuf S, Cotton D, Ounpuu S, Lawton WA, et al. Aspirin and extended-release dipyridamole versus clopidogrel for recurrent stroke. N Engl J Med. 2008;359(12):1238–1251. doi: 10.1056/NEJMoa0805002.

- 32. Marson AG, Al-Kharusi AM, Alwaidh M, Appleton R, Baker GA, Chadwick DW, et al. The SANAD study of effectiveness of carbamazepine, gabapentin, lamotrigine, oxcarbazepine, or topiramate for treatment of partial epilepsy: an unblinded randomised controlled trial. Lancet. 2007;369(9566):1000–1015. doi: 10.1016/S0140-6736(07)60460-7.

- 33. Campbell MK, Elbourne DR, Altman DG. CONSORT statement: extension to cluster randomised trials. BMJ. 2004;328(7441):702–708. doi: 10.1136/bmj.328.7441.702.

- 34. Meurer WJ, Frederiksen SM, Majersik JJ, Zhang L, Sandretto A, Scott PA. Qualitative data collection and analysis methods: the INSTINCT trial. Acad Emerg Med. 2007;14(11):1064–1071. doi: 10.1197/j.aem.2007.05.005.

- 35. Magid DJ, Shetterly SM, Margolis KL, Tavel HM, O’Connor PJ, Selby JV, et al. Comparative effectiveness of angiotensin-converting enzyme inhibitors versus beta-blockers as second-line therapy for hypertension. Circ Cardiovasc Qual Outcomes. 2010;3(5):453–458. doi: 10.1161/CIRCOUTCOMES.110.940874.

- 36. Selby JV, Fireman BH, Lundstrom RJ, Swain BE, Truman AF, Wong CC, et al. Variation among hospitals in coronary-angiography practices and outcomes after myocardial infarction in a large health maintenance organization. N Engl J Med. 1996;335(25):1888–1896. doi: 10.1056/NEJM199612193352506.

- 37. Chimowitz MI, Kokkinos J, Strong J, Brown MB, Levine SR, Silliman S, et al. The Warfarin-Aspirin Symptomatic Intracranial Disease Study. Neurology. 1995;45(8):1488–1493. doi: 10.1212/wnl.45.8.1488.

- 38. Chimowitz MI, Lynn MJ, Howlett-Smith H, Stern BJ, Hertzberg VS, Frankel MR, et al. Comparison of warfarin and aspirin for symptomatic intracranial arterial stenosis. N Engl J Med. 2005;352(13):1305–1316. doi: 10.1056/NEJMoa043033.

- 39. Bibbins-Domingo K, Chertow GM, Coxson PG, Moran A, Lightwood JM, Pletcher MJ, et al. Projected effect of dietary salt reductions on future cardiovascular disease. N Engl J Med. 2010;362(7):590–599. doi: 10.1056/NEJMoa0907355.

- 40. Matchar DB, Samsa GP, Matthews JR, Ancukiewicz M, Parmigiani G, Hasselblad V, et al. The Stroke Prevention Policy Model: linking evidence and clinical decisions. Ann Intern Med. 1997;127(8 Pt 2):704–711. doi: 10.7326/0003-4819-127-8_part_2-199710151-00054.

- 41. AHRQ (Agency for Healthcare Research and Quality). EPC topic nomination and selection (on-line) [Accessed May 3, 2011]; Available: http://www.ahrq.gov/clinic/epc/epctopicn.htm.

- 42. Berg AO. The CDC’s EGAPP initiative: evaluating the clinical evidence for genetic tests. Am Fam Physician. 2009;80(11):1218.

- 43. The Cochrane Collaboration (on-line) [Accessed May 3, 2011]; Available: http://www.cochrane.org.

- 44. Gliklich RE, Dreyer NA, editors. Registries for evaluating patient outcomes: a user’s guide. 2nd ed. Rockville, MD: Agency for Healthcare Research and Quality; 2010. (Prepared by Outcome DEcIDE Center [Outcomes Sciences, Inc. dba Outcome] under Contract No. HHSA29020050035I TO3.) AHRQ Publication No. 10-EHC049.

- 45. Jackson CE, Lovitt S, Gowda N, Anderson F, Miller RG. Factors correlated with NPPV use in ALS. Amyotroph Lateral Scler. 2006;7(2):80–85. doi: 10.1080/14660820500504587.

- 46. Fonarow GC, Reeves MJ, Smith EE, Saver JL, Zhao X, Olson DW, et al. Characteristics, performance measures, and in-hospital outcomes of the first one million stroke and transient ischemic attack admissions in Get With The Guidelines-Stroke. Circ Cardiovasc Qual Outcomes. 2010;3(3):291–302. doi: 10.1161/CIRCOUTCOMES.109.921858.

- 47. AHRQ (Agency for Healthcare Research and Quality). Electronic newsletter, December 10, 2010 (on-line) [Accessed May 3, 2011]; Available: http://www.ahrq.gov/news/enews/enews301.htm#1.

- 48. Shah SA, Smith JK, Li Y, Ng SC, Carroll JE, Tseng JF. Underutilization of therapy for hepatocellular carcinoma in the Medicare population. Cancer. 2011;117(5):1019–1026. doi: 10.1002/cncr.25683.

- 49. Society of General Internal Medicine. Dataset Compendium (on-line) [Accessed October 13, 2011]; Available: http://www.sgim.org/index.cfm?pageId=760.

- 50. Vermont Oxford Network (on-line) [Accessed May 3, 2011]; Available: http://www.vtoxford.org.

- 51. Dahodwala N, Meyer A-C. Emerging subspecialties in neurology: health services research. Neurology. 2010;74(10):e37–e39. doi: 10.1212/WNL.0b013e3181d31e6f.

- 52. United States Department of Veterans Affairs. VA HSR&D Research Career and Development Program (on-line) [Accessed October 13, 2011]; Available: http://www.hsrd.research.va.gov/funding/cdp.

- 53. Selker HP, Strom BL, Ford DE, Meltzer DO, Pauker SG, Pincus HA, et al. White paper on CTSA consortium role in facilitating comparative effectiveness research: September 23, 2009 CTSA consortium strategic goal committee on comparative effectiveness research. Clin Transl Sci. 2010;3(1):29–37. doi: 10.1111/j.1752-8062.2010.00177.x.

- 54. Brook RH. Possible outcomes of comparative effectiveness research. JAMA. 2009;302(2):194–195. doi: 10.1001/jama.2009.996.

- 55. Patient-Centered Outcomes Research Institute. Report to the PCORI Board (on-line) [Accessed October 13, 2011]; Available: http://www.pcori.org/images/PDC_Report_2011-05-16.pdf.

- 56. NHLBI (National Heart, Lung, and Blood Institute). Division of prevention and population sciences strategic plan (on-line) [Accessed May 3, 2011]; Available: http://www.nhlbi.nih.gov/about/dpps/strategicplan.htm#goal2.

- 57. PAR 10-038: Dissemination and Implementation Research in Health (R01) (on-line) [Accessed May 3, 2011]; Available: http://grants.nih.gov/grants/guide/pa-files/PAR-10-038.html.

- 58. Stetler CB, Mittman BS, Francis J. Overview of the VA Quality Enhancement Research Initiative (QUERI) and QUERI theme articles: QUERI Series. Implement Sci. 2008;3:8. doi: 10.1186/1748-5908-3-8.

- 59. Jones J, Hunter D. Consensus methods for medical and health services research. BMJ. 1995;311(7001):376–380. doi: 10.1136/bmj.311.7001.376.

- 60. Gillum LA, Gouveia C, Dorsey ER, Pletcher M, Mathers CD, McCulloch CE, et al. NIH disease funding levels and burden of disease. PLoS ONE. 2011;6(2):e16837. doi: 10.1371/journal.pone.0016837.

- 61. National Institute of Neurological Disorders and Stroke. Report from Strategic Planning Advisory Panel on Health Disparities (on-line) [Accessed May 4, 2011]; Available: http://www.ninds.nih.gov/about_ninds/plans/NINDS_health_disparities_report.htm.

- 62. National Institutes of Health. Actual Obligations by Institute and Center FY 1998 – FY 2010 (on-line) [Accessed October 13, 2011]; Available: http://officeofbudget.od.nih.gov/pdfs/FY12/Actual%20Obligations%20by%20IC%201998-2010.pdf.

- 63. Patient-Centered Outcomes Research Institute (on-line) [Accessed October 13, 2011]; Available: http://www.pcori.org/

- 64. Washington AE, Lipstein SH. The Patient-Centered Outcomes Research Institute: promoting better information, decisions, and health. N Engl J Med (on-line) [Accessed October 14, 2011]. doi: 10.1056/NEJMp1109407. Available: http://www.ncbi.nlm.nih.gov/pubmed/21992473.

- 65. National Institutes of Health Research Portfolio Online Reporting Tools (RePORT), Project Listing by Category (on-line) [Accessed September 21, 2011]; Available: http://report.nih.gov/rcdc/categories/ProjectSearch.aspx?FY=2010&ARRA=N&DCat=Comparative%20Effectiveness%20Research.

- 66. Paxton EW, Inacio MCS, Khatod M, et al. Kaiser Permanente National Total Joint Replacement Registry: aligning operations with information technology. Clin Orthop Relat Res. 2010;468(10):2646–2663. doi: 10.1007/s11999-010-1463-9.