Abstract

Objective

At the balanced intersection of human and machine adaptation lies the optimally functioning brain-computer interface (BCI). In this study, we report a novel experiment in which a BCI controls a robotic quadcopter in three-dimensional physical space using noninvasive scalp EEG in human subjects. We then quantify the performance of this system using metrics suitable for asynchronous BCI. Lastly, we examine the impact that operating a real-world device has on subjects’ control, in comparison with a two-dimensional virtual cursor task.

Approach

Five human subjects were trained to modulate their sensorimotor rhythms to control an AR Drone navigating a three-dimensional physical space. Visual feedback was provided via a forward-facing camera on the hull of the drone. Individual subjects were able to accurately acquire up to 90.5% of all valid targets presented while travelling at an average straight-line speed of 0.69 m/s.

Significance

Freely exploring and interacting with the world around us is a crucial element of autonomy that can be lost to neurodegenerative disease. Brain-computer interfaces are systems that aim to restore or enhance a user’s ability to interact with the environment via a computer, using thought alone. We demonstrate for the first time the ability to control a flying robot in three-dimensional physical space using noninvasive scalp-recorded EEG in humans. Our work indicates the potential of noninvasive EEG-based BCI systems to accomplish complex control in three-dimensional physical space. The present study may serve as a framework for the investigation of multidimensional noninvasive brain-computer interface control in a physical environment using telepresence robotics.

Keywords: Brain-Computer Interface, BCI, EEG, 3D control, motor imagery, telepresence robotics

Introduction

Brain-computer interfaces (BCIs) aim to restore crucial functions to people who are severely disabled by a wide variety of neuromuscular disorders, and to enhance functions in healthy individuals (Wolpaw et al, 2002; Vallabhaneni et al, 2005; He et al, 2013). Significant advances have been made in the development of BCIs in which intracranial electrophysiological signals are recorded and interpreted to decode the intent of subjects and control external devices (Georgopoulos et al, 1982; Taylor et al, 2002; Musallam et al, 2004; Hochberg et al, 2006; Santhanam et al, 2006; Velliste et al, 2008; Hochberg et al, 2012). Noninvasive BCIs based on scalp-recorded electroencephalograms (EEGs) have also long been pursued. Among such noninvasive BCIs, sensorimotor rhythm (SMR) based BCIs have been developed using a motor imagery paradigm (Pfurtscheller et al., 1993; Wolpaw et al, 1998, 2004; Wang & He, 2004; Wang et al, 2004; Qin et al, 2004; Yuan et al, 2008, 2010a,b).

The development of BCIs is aimed at providing users with the ability to communicate with the external world through the modulation of thought. This is achieved through a closed loop of sensing, processing and actuation. Bioelectric signals are sensed and digitized before being passed to a computer system. The computer then interprets fluctuations in the signals, guided by an understanding of the underlying neurophysiology, to discern user intent. The final step is actuation, in which this intent is translated into specific commands for a computer or robotic system to execute. The user then receives feedback, adjusts his or her thoughts accordingly, and generates new, adapted signals for the BCI system to interpret.

Patients suffering from amyotrophic lateral sclerosis (ALS) have often been identified as a population who may benefit from the use of a brain-computer interface (Bai et al, 2010). In a recent survey of ALS patients, the components of a satisfactory system were identified as highly accurate command generation, a high speed of control, and a low incidence of unintentional system suspension, i.e. continuity of control (Huggins, 2011). While these needs were reported by a patient population with ALS, similar if not identical needs are likely present in a wide variety of other neurodegenerative disorders, and identifying these needs is a crucial component of future investigation. Moreover, the potential efficacy of noninvasive SMR-based BCIs in these populations is supported by research indicating that the ability to generate SMRs remains present in users with other neurodegenerative disorders such as muscular dystrophy and spinal muscular atrophy (Cincotti et al, 2008). It is crucial that researchers keep these patient-relevant parameters in mind when developing new BCI systems. One limitation of many BCI systems has been the need for a fixed schedule of events. For patients suffering from various neuromuscular disorders, a fixed schedule of command production would limit autonomy. Our BCI does not require a fixed schedule of commands, but this makes some standard BCI metrics inapplicable (Kronegg et al, 2005; Yuan et al, 2013). To remain consistent with commonly reported metrics, including information transfer rate (ITR) (McFarland et al, 2003), we used a modified ITR suited to our experimental protocol; using a modified ITR calculation has recently been suggested in the literature (Yuan et al, 2013). In addition, we report ITR values for the 2D cursor task, calculated by BCI2000 using standard techniques. Other metrics reported include success and failure rates for the acquisition of presented targets.
Protocols developed for the assessment of BCIs that allow for asynchronous interaction and exploration of the subject’s surroundings will be best equipped to aid in the development of systems with a human focus. Ultimately, the development of these systems may someday help to restore what has been undermined by disease.

In previous studies, we demonstrated the ability of users to control the flight of a virtual helicopter with two-dimensional control (Royer et al, 2010) and three-dimensional control (Doud et al, 2011) by leveraging a motor imagery paradigm with intelligent control strategies. In these studies, subjects imagined moving parts of their bodies, and SMRs were extracted in real time to control the movement of a virtual helicopter.

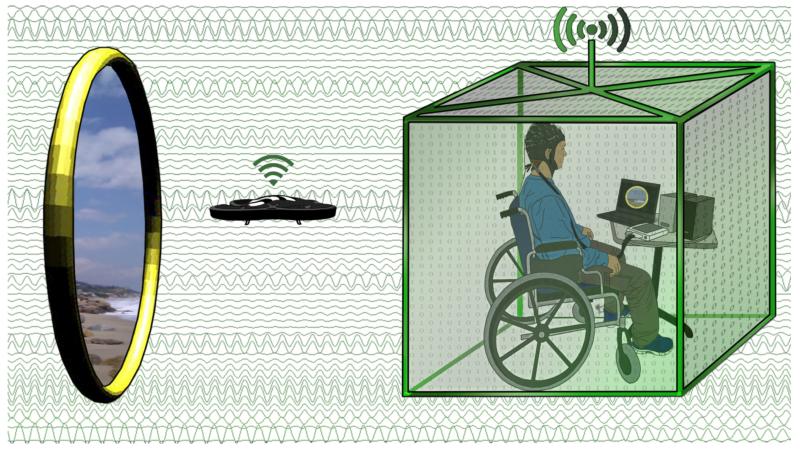

In the present study, we investigate the ability to control a flying object in three-dimensional (3D) physical space using sensorimotor rhythms derived from noninvasive EEG in a group of human subjects. While flying, subjects received feedback from a real-time video stream captured by an onboard camera. Telepresence, together with a continuous, fast and accurate control system, is a crucial element in implementing a BCI system that can reach beyond the immediate surroundings of the user and enter locations where these systems are needed. Figure 1 shows a conceptual diagram of the present study and the potential role of BCI-driven telepresence robotics in restoring autonomy to a paralyzed individual. The bioelectric signal generated from motor imagination of the hands is represented in the background of the figure. The control signal decoded from the scalp EEG is sent regularly via Wi-Fi to the quadcopter to update its movement, while the quadcopter simultaneously acquires video and sends it back to the computer workstation. While a quadcopter was chosen for its low cost and robust capacity for programmable actuation, the reader may envision any combination of remote mobile devices capable of meaningful interaction with the three-dimensional world. Restoring autonomy and the ability to freely explore the world through these means are the driving factors for the present investigation.

Figure 1.

A conceptual diagram of the potential role of brain-computer interface driven telepresence robotics in the restoration of autonomy to a paralyzed individual. The bioelectric signal generated from motor imagination of the hands is represented in the background of the figure. The signal is acquired through the amplifiers at the subject’s workstation, where it is digitized and passed to the computer system. Filtering and further processing convert the signal into a control signal that determines the movement of the quadcopter. This signal is sent regularly via Wi-Fi to the quadcopter to update its movement, while the quadcopter simultaneously acquires video and sends it back to the computer workstation. The subject adjusts control and adapts to the control parameters of the system based on the visual feedback of the quadcopter’s video on the computer screen. Restoration of autonomy and the ability to freely explore the world are the driving factors for the development of the system, which can be extended to control any number of robotic telepresence or replacement systems.

Methods

Study Overview

The study consisted of training and calibration phases, an experimental task phase, and an experimental control phase. In addition, the intrinsic ease of the experimental task was quantified to serve as a baseline comparison to better characterize the achievements of the subjects. Prior to participation in the AR Drone quadcopter experiments, each subject had received exposure to one-dimensional (1D) and two-dimensional (2D) cursor movement tasks using motor imagery. Several of the subjects had also received training in the virtual helicopter control experiments (Royer et al, 2010; Doud et al, 2011).

Regardless of training background, subjects were asked to demonstrate proficiency in 1D and 2D cursor control prior to progressing to AR Drone quadcopter training. Subjects who correctly selected 70% or more of valid targets in each of four consecutive 2D cursor trials, or who achieved an average of 70% or more of valid targets over ten consecutive 2D trials, were deemed sufficiently proficient in brain-computer interface control to participate in the AR Drone quadcopter study.
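The advancement rule above can be expressed as a short check. The sketch below is a minimal Python illustration, assuming per-trial scores are stored in chronological order as fractions of valid targets hit, and that "ten consecutive trials" means any sliding window of ten; the function name `is_proficient` and its parameters are hypothetical. Calling it with `threshold=0.80` mirrors the 1D criterion used during subject training.

```python
from typing import Sequence


def is_proficient(scores: Sequence[float], threshold: float = 0.70,
                  run_length: int = 4, window: int = 10) -> bool:
    """Check the (hypothetical encoding of the) advancement criterion.

    scores: fraction of valid targets hit in each trial, oldest first.
    Criterion A: `run_length` consecutive trials, each at or above `threshold`.
    Criterion B: the mean over some `window` consecutive trials is at or
    above `threshold`.
    """
    # Criterion A: track the current streak of trials at or above threshold.
    streak = 0
    for s in scores:
        streak = streak + 1 if s >= threshold else 0
        if streak >= run_length:
            return True
    # Criterion B: slide a window of `window` consecutive trials.
    for start in range(len(scores) - window + 1):
        if sum(scores[start:start + window]) / window >= threshold:
            return True
    return False
```

For example, `is_proficient([1.0, 0.5] * 5)` is true through the ten-trial average even though no two consecutive trials reach 70%.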

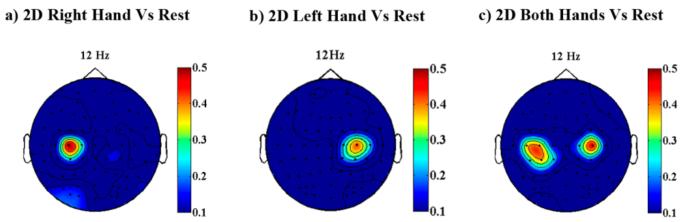

During the initial training period, a statistical optimization of the control signal was performed using the Offline Analysis toolbox released with the BCI2000 development platform (Schalk et al, 2004). This software allows researchers to identify the specific electrodes and frequencies that are most differentially active between the two imagination states of a given pair. Spectrograms of the R² value, a statistical measure of how strongly the temporal components of the EEG signal correlate with the different imagination states of a pairing, were created so that the electrode and frequency bin (3 Hz width) with the highest correlation to a given imagination state could be used. In this way, the training period was crucial in determining an optimal, subject-specific control signal that prepared each subject for entry into the real-world task. Figures 2 and 3 show the statistical analysis involved in selecting a control signal for a representative subject. The Offline Analysis toolbox produces a feature map of the R² value at each frequency and electrode, a topographical representation at a user-specified frequency, and a single-electrode plot of R² as a function of frequency. By evaluating these three figures, a researcher may quickly identify a subject-specific electrode-frequency configuration that will best serve the subject as a control signal for a motor imagery based brain-computer interface. Electrode selection for each subject can be seen in the supplementary materials.
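The R² statistic behind these maps is the squared Pearson correlation between a signal feature and the task condition. As an illustration only (not the Offline Analysis toolbox’s code), the sketch below computes it for every electrode and frequency bin with NumPy and picks the largest value. It assumes spectral amplitudes have already been computed per trial and arranged as a `(trials, electrodes, bins)` array with a 0/1 condition label per trial; the function names are hypothetical.

```python
from typing import Tuple

import numpy as np


def r_squared_map(features: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """Squared Pearson correlation between each feature and a binary label.

    features: (n_trials, n_electrodes, n_bins) spectral amplitudes.
    labels:   (n_trials,) condition per trial, e.g. 0 = rest, 1 = imagery.
    Returns an (n_electrodes, n_bins) array of r^2 values.
    """
    x = features - features.mean(axis=0)        # center each feature over trials
    y = labels - labels.mean()                  # center the condition labels
    cov = np.tensordot(y, x, axes=(0, 0))       # sum over trials of y_i * x_i
    denom = np.sqrt((x ** 2).sum(axis=0) * (y ** 2).sum())
    r = np.divide(cov, denom, out=np.zeros_like(cov), where=denom > 0)
    return r ** 2


def best_feature(r2: np.ndarray) -> Tuple[int, int]:
    """(electrode, frequency bin) index with the largest r^2."""
    e, f = np.unravel_index(np.argmax(r2), r2.shape)
    return int(e), int(f)
```

In practice the researcher inspects the full map rather than taking the single maximum, since a physiologically plausible location (for example, over sensorimotor cortex) matters as much as the raw value.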

Figure 2.

(a-b) A representative subject’s R² topography for the 12 Hz component of the right and left hand imaginations as compared to rest. A high R² value indicates a region in which the 12 Hz band is differentially activated between the imagined motion and the rest state. Event-related desynchronization in the 12 Hz band over the hemisphere contralateral to the imagined hand is a well-characterized response to motor imagery. (c) The R² topography of the 12 Hz component of the imagination of both hands as compared to rest.

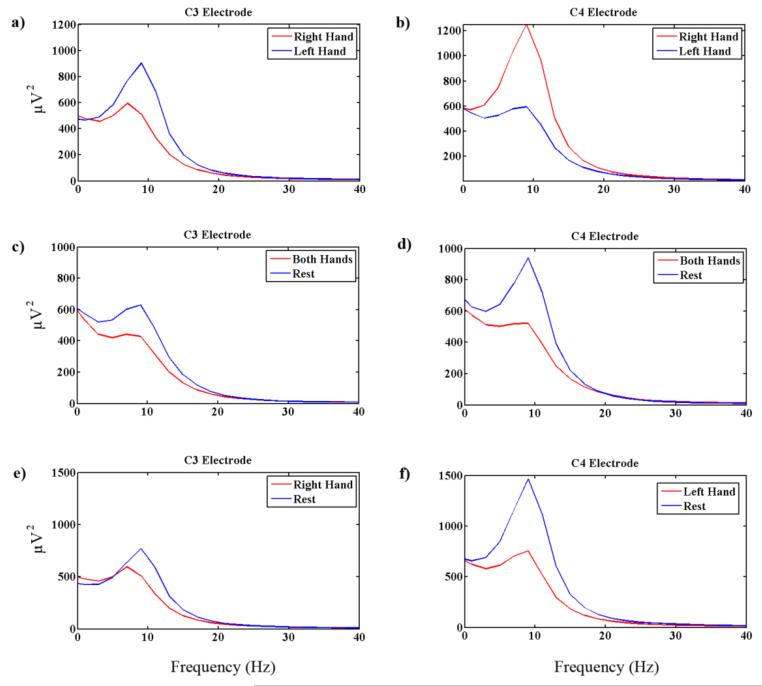

Figure 3.

Spectral power is shown in the control electrodes as a function of frequency. (a-b) Comparisons of spectral power in right vs. left hand imaginations. Note that spectral power is diminished in the electrode contralateral to the imagined hand and increased in the ipsilateral electrode. Because the unilateral motor task desynchronizes one electrode while synchronizing the other, subtracting the C3 signal from the C4 signal yields a more separable signal for left-right control. (c-d) Comparisons of spectral power for imagination of both hands vs. rest show increased power in the rest state and desynchronization during imagination of both hands. Because both electrodes desynchronize during the imagined movement, their signals can be summed to produce a separable signal for up-down control. (e-f) Spectral plots of left versus rest for the same trials as those constituting the topographies in Figure 2.

Experimental Subjects

Five human subjects, aged 21-28 (three female and two male), were included in the study. Each subject provided written consent in order to participate in a protocol approved by the Institutional Review Board of the University of Minnesota. Four of the subjects had not been exposed to BCI before this study, while the fifth subject had been trained and participated in the previous virtual helicopter study detailed in Doud et al 2011.

Subject Training

The initial training phase of the study was aimed at achieving competence in 2D control in the standard BCI2000 cursor task, as well as in a virtual helicopter simulator program developed and described by Royer et al (2010). The reductionist control strategy employed in the Royer protocol proved to be robust and relatively quick and easy to learn, so this protocol was chosen for the transition from virtual to real-world control. Subjects were introduced to and trained in the one-dimensional cursor task (Yuan et al, 2008; Royer & He, 2009) until they achieved a score of 80% or above in four consecutive 3-minute trials, or a score of 80% or more when performance was averaged across ten or more consecutive trials. In the one-dimensional cursor task, a target appeared on either the left or right side of the screen and was illuminated in yellow. The subject was instructed to perform motor imagery of left or right hand movement to guide a cursor to the illuminated target, while avoiding an invisible target located on the opposite side of the screen. Subjects were given 3 experimental sessions, each consisting of at least 9 experimental trials (3 minutes each), to complete this first phase.

A second dimension was then trained independently of the first. Subjects were presented with a second cursor task in which targets appeared at either the top or bottom of the screen. In this task, subjects were instructed to move the cursor up by imagining squeezing or curling both hands and to move the cursor down through the use of a volitional rest. The rest imagination often consisted of subjects focusing on a non-muscular part of the body and relaxing. Adding the normalized amplitudes of the selected frequency components produced the up-down control signal, whereas subtracting these same components produced the left-right control signal. This allowed for independent control: simultaneous imagination of both hands changes both components together, which cancels out in the subtracted left-right signal, while a lateralized left or right imagination in isolation changes the two components in opposite directions, which cancels out in the additive up-down signal. Rules for progressing from this phase were the same as in the previous phase, with a limit of 3 experimental sessions for completion.
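The additive and subtractive combination above can be sketched in a few lines. This is a minimal illustration assuming two lateral electrodes (C3 and C4, as in Figure 3) and amplitudes that have already been normalized. The function name and the sign convention for the up-down signal are assumptions: the sum is negated so that imagery of both hands, which desynchronizes both electrodes, maps to "up". In practice, subject-specific electrodes and weights were used.

```python
from typing import Tuple


def combine_channels(z_c3: float, z_c4: float) -> Tuple[float, float]:
    """Combine normalized amplitudes over left (C3) and right (C4) cortex.

    Returns (lr, ud): positive lr means "turn right", positive ud means "rise".
    """
    # Right-hand imagery lowers C3 (contralateral ERD) and raises C4, so the
    # difference becomes positive; changes common to both electrodes cancel.
    lr = z_c4 - z_c3
    # Both-hands imagery lowers both amplitudes and rest raises them; the sum
    # is negated here (assumed convention) so that imagery maps to "up".
    # Opposite-direction (lateralized) changes cancel in the sum.
    ud = -(z_c3 + z_c4)
    return lr, ud
```

For a lateralized right-hand pattern such as `combine_channels(-1.0, 1.0)`, the result is a pure right turn with no altitude change, while `combine_channels(-1.0, -1.0)` gives a pure rise.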

The third phase combined the control signals from the first two phases in a 2D cursor task with an array of 4 targets located at the top, bottom, left, and right sides of the monitor. Subjects progressed to the next phase of training when they could correctly select 70% or more of valid targets in four consecutive 2D cursor trials or an average of 70% or more of valid targets over ten consecutive 2D trials.

After meeting these criteria, subjects progressed to controlling a virtual helicopter (Royer et al, 2010). In this simulation, the velocity of the virtual helicopter was linearly related to the normalized amplitude of the EEG signal components. After up to three sessions of training (about 1 hour of total flight time on average) to gain familiarity with this virtual helicopter control protocol, subjects were trained with an enhanced virtual simulation of the AR Drone quadcopter. This improved simulator included an updated virtual model of the AR Drone quadcopter, similar in appearance to the physical quadcopter subjects would eventually fly in 3D real space. The new simulation program also had an improved control algorithm. It used the same Blender platform physics simulator as Doud et al (2011), but the new algorithm employed force actuation of the drone to better approximate the behaviour of the actual AR Drone in a real physical environment: subjects’ BCI control signal was linked to the acceleration applied to the quadcopter rather than to its velocity. By actuating the virtual quadcopter through force, we excluded some unrealistic movements that could arise from directly updating the velocity of the model in coordination with the control signal. While this force actuation presented a novel challenge to the subjects, it was an important step in exposing them to the real-world forces that must be managed in order to successfully control the AR Drone. More information on the simulator and its control setup can be found in the supplementary materials. Subject interaction with this simulation system was evaluated qualitatively, the major goal being to familiarize the subject with the control setup without the pressure of steering an actual robotic drone.
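The difference between velocity and force actuation can be illustrated with a one-dimensional toy model. The sketch below is hypothetical and is not the Blender simulator: the linear drag term, the gain, and the 30 ms time step are assumptions chosen only to show that a step in the control signal changes velocity instantly under velocity actuation but gradually under force actuation.

```python
from typing import Iterable, List


def simulate_velocities(signal: Iterable[float], mode: str, dt: float = 0.03,
                        gain: float = 1.0, drag: float = 0.5) -> List[float]:
    """Velocity trace of a 1-D body driven by a control signal.

    mode="velocity": the signal sets velocity directly (v = gain * u).
    mode="force":    the signal sets acceleration, with an assumed linear drag
                     (a = gain * u - drag * v), integrated with forward Euler.
    """
    if mode not in ("velocity", "force"):
        raise ValueError(f"unknown mode: {mode}")
    v = 0.0
    trace = []
    for u in signal:
        if mode == "velocity":
            v = gain * u                  # velocity jumps with the signal
        else:
            a = gain * u - drag * v       # the signal acts as a force
            v += a * dt                   # velocity changes gradually
        trace.append(v)
    return trace
```

With a constant signal of 1, velocity actuation jumps to 1 on the first step, while force actuation rises smoothly toward the terminal velocity `gain / drag`, which is the kind of inertia subjects later had to manage with the real drone.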

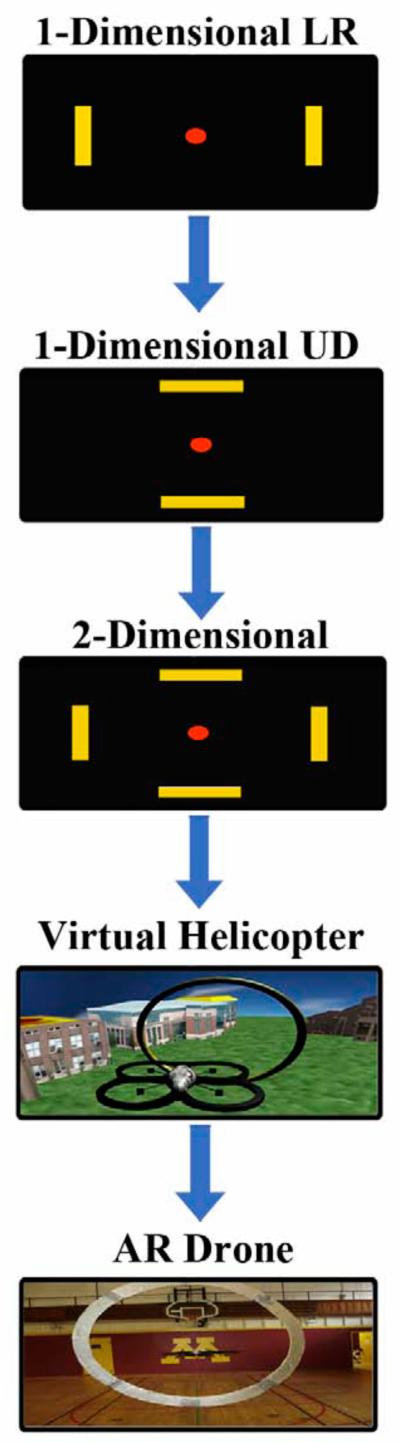

After one to three sessions of training with the virtual AR Drone (each consisting of at least 9 three-minute experimental trials), subjects started to train with the real AR Drone. The four subjects who were naïve to BCI prior to this study spent an average of 5 hours and 20 minutes in virtual training over an average period of 3 months before starting to train with the real AR Drone. This time includes the 1D left vs. right, 1D up vs. down, and 2D cursor tasks, in addition to the virtual helicopter simulation. The order of progression of training can be seen in the flowchart in Figure 4.

Figure 4.

An outline of the subjects’ progression through the training sequence that was developed to promote robust two-dimensional motor-imagery-based control. During the first three stages of cursor task training, the research team optimized the electrode positions and frequency components that contributed to each subject’s control signal. The virtual helicopter task was used to familiarize the subjects with the control system for the AR Drone. The final stage of training was to navigate the AR Drone robotic quadcopter in a real-world environment.

Upon completion of the virtual tasks, subjects were given an opportunity to calibrate their control signals and familiarize themselves with the robotic system by way of a novice-pilot training task. In both the experimental task and the novice-pilot training task, subjects were asked to pilot the AR Drone quadcopter safely through suspended foam rings. However, in the novice-pilot training task, the rings were spaced further apart and subjects were instructed to fly back and forth such that no ring was acquired more than once in a row. The design of the novice-pilot training task encouraged the user to explore the control space and gave the subject an opportunity to suggest refinements to the sensitivity of each of the BCI controls. The experimental group spent an average of 84 minutes over 3 weeks on the novice-pilot training task. When a subject felt comfortable with the calibration of the control signal in the novice-pilot training task, he or she moved on to the experimental protocol, in which speed, accuracy and continuity of control were assessed with the experimental task described below.

Data Collection and Processing

Subjects sat in a comfortable chair, facing a computer monitor, in a standard college gymnasium. The experimental set-up was such that a solid wall obscured the subject’s view of the quadcopter. A 64-channel EEG cap was securely fitted to the head of each subject according to the international 10-20 system. EEG signals were sampled at 1000 Hz and filtered from DC to 200 Hz by a Neuroscan Synamps 2 amplifier (Neuroscan Lab, VA) before being imported into BCI2000 (Schalk et al, 2004) with no spatial filtering. Details of electrode and frequency selection can be seen in the supplementary materials.

BCI2000 was parameterized during the AR Drone task so that 4-minute runs consisting of 6-second trials ran in the background of the drone control program and communicated the raw control signal via UDP. The trials were set to have no inter-trial interval or post-feedback duration. During each BCI2000 trial, the standard cursor moved through a 2D control space for 6 seconds before being reset to the center of the control space for the next trial. Since there was no inter-trial interval, this 6-second trial pacing was not perceptible to the subject, but it ensured that the system was normalized regularly. Normalization to zero mean and unit variance was performed online with a 30-second normalization buffer: the output signal during cursor movement for all target attempts entered the normalization buffer, and the normalization factors were recalculated between trials. The control signal was extracted as the spectral amplitude of the chosen electrodes at the selected frequency components, using BCI2000’s online autoregressive (AR) filter with a model order of 16 operating on a 160 ms time window for spectrum calculation. Effectively, this configuration produced a continuous control signal, with the pauses for recalculating normalization factors imperceptible to the subject.
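The between-trial normalization can be sketched as a small class. This is a minimal sketch, not BCI2000’s normalizer: it assumes one raw output value per 30 ms control update (the UDP update interval reported for this setup), keeps only the most recent 30 seconds of values, and refreshes the offset and gain only when a trial ends. `TrialNormalizer` and its methods are hypothetical names.

```python
import statistics
from collections import deque


class TrialNormalizer:
    """Zero-mean, unit-variance normalization refreshed between trials."""

    def __init__(self, buffer_seconds: float = 30.0, sample_period: float = 0.03):
        # Keep only the most recent `buffer_seconds` worth of raw outputs.
        self.buffer = deque(maxlen=int(round(buffer_seconds / sample_period)))
        self.mean = 0.0   # offset applied during the current trial
        self.std = 1.0    # divisor applied during the current trial

    def process(self, raw: float) -> float:
        """Buffer a raw output and normalize it with the current factors."""
        self.buffer.append(raw)
        return (raw - self.mean) / self.std

    def end_trial(self) -> None:
        """Recompute the normalization factors from the buffered outputs."""
        if len(self.buffer) >= 2:
            self.mean = statistics.fmean(self.buffer)
            sd = statistics.pstdev(self.buffer)
            self.std = sd if sd > 0 else 1.0   # guard against a flat buffer
```

Because the factors change only at trial boundaries, the signal within a trial is scaled consistently, while the sliding buffer lets the normalization track slow drifts in the subject’s EEG.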

Subjects attended 3 days of the experimental protocol, with each daily session consisting of 6-15 trials lasting up to four minutes each. Subjects were visually monitored for inappropriate eye blinking or muscle activity during each session and were regularly reminded of the importance of minimizing movement; in practice, observed eye blinking and muscle movement were minimal during all of the experimental sessions. The time-varying spectral component amplitudes from the EEG at predetermined subject-specific electrode locations and frequencies were selected and then integrated online by BCI2000 to produce a control signal that was sent every 30 ms via a UDP port to a Java program that communicated wirelessly with the AR Drone.
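The autoregressive spectral estimate configured in BCI2000 (model order 16 on a 160 ms window, i.e. 160 samples at the 1000 Hz sampling rate) can be approximated as follows. This sketch fits the AR model with the Yule-Walker equations, a swapped-in estimator that may differ from the one BCI2000’s AR filter actually uses, and evaluates the model’s power spectral density at chosen frequencies. The function name and defaults are assumptions; the spectral amplitude used as a control feature would be the square root of these values.

```python
import numpy as np


def ar_psd(window, freqs, order=16, fs=1000.0):
    """Yule-Walker AR power spectral density at the given frequencies (Hz)."""
    x = np.asarray(window, dtype=float)
    x = x - x.mean()                        # remove the window's DC offset
    n = x.size
    if n <= order:
        raise ValueError("window must be longer than the model order")
    # Biased autocorrelation estimates r[0], ..., r[order]
    r = np.array([np.dot(x[: n - k], x[k:]) / n for k in range(order + 1)])
    # Toeplitz system R a = r[1:], solved for AR coefficients a_1, ..., a_p
    idx = np.arange(order)
    R = r[np.abs(idx[:, None] - idx[None, :])]
    a = np.linalg.solve(R, r[1:])
    noise_var = r[0] - np.dot(a, r[1:])     # variance of the driving white noise
    # PSD(f) = noise_var / (fs * |1 - sum_k a_k exp(-2j*pi*f*k/fs)|^2)
    f = np.atleast_1d(np.asarray(freqs, dtype=float))
    k = np.arange(1, order + 1)
    h = 1.0 - np.exp(-2j * np.pi * np.outer(f, k) / fs) @ a
    return noise_var / (fs * np.abs(h) ** 2)
```

An AR model is attractive for such short windows because, unlike an FFT of 160 samples, its spectrum is not limited to a fixed grid of bins spaced by the inverse window length.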

Control Environment and Quadcopter

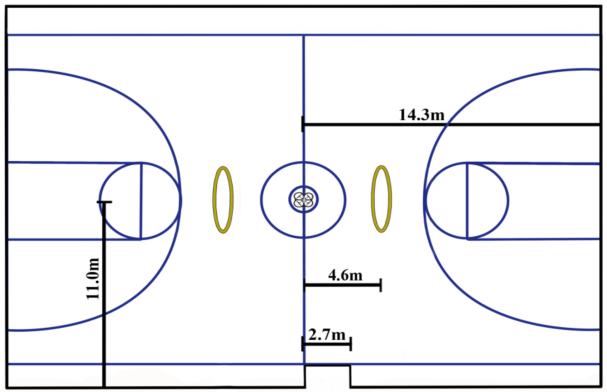

The quadcopter flight environment was set up in a standard college gymnasium. Figure 5 illustrates the experimental setup of the flight environment, and the quadcopter’s starting position relative to the two ring-shaped targets. These targets were made of lightweight foam and were suspended so that the internal edge of the bottom of the ring was approximately 1 m above the ground. Each ring had an outer diameter of 2.7 m and an inner diameter of 2.29 m. Subjects faced a solid wall on the side of the gymnasium, which ensured safety and obstructed their view of the AR Drone quadcopter. Thus, subjects did not directly see the quadcopter, but watched a computer monitor with a first-person view from a camera mounted on the hull of the quadcopter.

Figure 5.

The layout of the experimental set-up, as well as the dimensions of the quadcopter control space can be seen. The inner diameter of the ring target is 2.29 meters. A small indentation in the control space is seen on the bottom wall where the subject was located during the experiment; thus the quadcopter was not allowed to enter this area.

The AR Drone 1.0 quadcopter (Parrot SA, Paris, France) was chosen for the experiments in the described control space because it provides strong onboard stabilization while allowing for a wide range of programmable speeds and a smooth range of motion. It is a relatively low-cost option for robust control in three-dimensional space, with extensive open-source support. In addition, the AR Drone provided access to two onboard cameras, and its accelerometer, altitude, and battery data were all reported to the control software and recorded in real time.

Experimental Paradigm

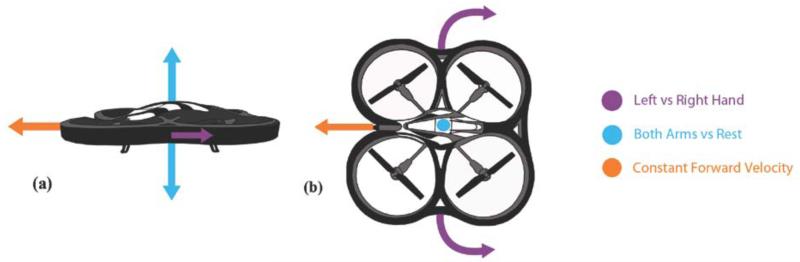

The experimental protocol consisted of three experimental sessions for each subject, with 6-15 trials per session and a maximum flight time of 4 minutes per trial. Each trial began with the AR Drone quadcopter 0.8 meters off the ground. Imagining use of the right hand turned the quadcopter right, while imagining use of the left hand turned it left. Imagining both hands together caused the quadcopter to rise, while intentionally imagining nothing caused it to fall. A constant forward signal was sent to the quadcopter such that its linear velocity was measured to be 0.69 ± 0.02 m/s in the absence of rotational movement. Figure 6 displays the actuations of the quadcopter that correspond to the subjects’ motor imaginations. Turning the quadcopter substantially attenuated its forward velocity, an example of a real-world control parameter that was absent in the virtual simulations. Subjects were allowed to pass through the rings in any order, on the condition that no event (i.e. ring collision or target acquisition) occurred within five seconds of the previous event. This time requirement prevented multiple successes from a single target attempt.

Figure 6.

Actuation of the drone is represented by the coloured arrows. The motor imaginations corresponding to each control are shown in the legend on the right. Separable control of each dimension was attained by imagining clenching of the left hand for left turns, the right hand for right turns, both hands for an increase in altitude, and no hands for a decrease in altitude. The strength of each control could be adjusted independently by weighting coefficients.

In comparison with the novice-pilot training task, the experimental protocol allowed subjects to expand their strategies, while still ensuring that no two events came from the same intentional act. In the experimental task, the rings were positioned such that each ring was 4.6 m from the take-off location of the drone.
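For a sense of scale, the minimum flight time from take-off to a ring, assuming straight flight at the measured constant forward speed with no turning, is

$$ t_{\min} = \frac{d}{v} = \frac{4.6\ \mathrm{m}}{0.69\ \mathrm{m/s}} \approx 6.7\ \mathrm{s} $$

Because turning attenuates forward velocity, actual acquisitions take longer than this lower bound.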

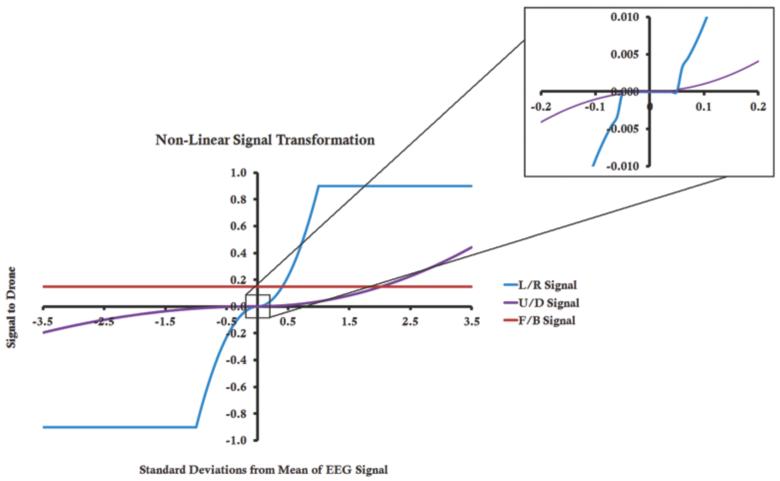

To maximize control, subjects were given an actuation scheme commonly used for remote-control vehicles, implemented as a non-linear transformation of the EEG signal applied before the control signal was sent to the quadcopter. A graph of this transformation can be seen in Figure 7. A threshold removed minor signals that were not likely to have originated from intentional control. Beyond this threshold, the signal was transformed by a quadratic function so that subjects could alter the strength of imagined actions either to achieve fine control for acquiring rings or to make large turns for general steering and obstacle avoidance.
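A dead zone followed by a quadratic can be sketched as below. The paper’s exact equations are given in its appendix and are not reproduced here; this minimal version assumes the quadratic is measured from the threshold (so the output is continuous there), clips the result to [-1, 1] to match the actuation range in Figure 7, and uses hypothetical threshold and weight values.

```python
import math


def shape_control(x: float, threshold: float = 0.2, weight: float = 1.0,
                  limit: float = 1.0) -> float:
    """Dead zone plus signed quadratic, clipped to [-limit, limit].

    threshold and weight are hypothetical values, not the subject-specific
    parameters used in the study.
    """
    magnitude = abs(x) - threshold
    if magnitude <= 0.0:
        return 0.0                        # small signals are treated as noise
    y = weight * magnitude ** 2           # quadratic growth beyond threshold
    return math.copysign(min(y, limit), x)
```

Small inputs map to zero, moderate inputs produce gentle corrections, and strong inputs grow quadratically until they saturate, which is what allows both fine alignment with a ring and sharp turns.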

Figure 7.

Control of the AR Drone is shown in terms of the amount of yaw, forward pitch, and altitude acceleration of the drone, with 1 being a maximum forward/right/upward signal and -1 being a maximum backward/left/downward actuation. The constant forward signal was set to correspond to 15% of maximum forward tilt, and indirectly to a percentage of the drone’s maximum forward speed. For the UD signal, maximum actuation would cause the quadcopter to supply the maximum amount of lift, or, for a negative signal, to slow its engines. For the LR signal, maximum actuation refers to the generation of maximum rotational force about the quadcopter’s z-axis. On the x-axis, the BCI2000-generated control signal is the cumulative amplitude of the temporal component of interest for a given electrode. Near the mean signal of zero, a threshold was used to assign a value of zero to small, erroneous signals arising from random noise, so that they were not sent to the drone in the absence of subject intention. The signals could be adjusted by weighting factors for each subject. The relevant equations are given in the appendix. This graph displays the weighted signal used by subject 1 in the second protocol.

If a subject successfully navigated the quadcopter to pass through a ring, a “target hit” was awarded; however, if the subject only collided with the ring, a “ring collision” was awarded. If the quadcopter collided with a ring, and then passed through that same ring within five seconds, a target hit but not a ring collision was awarded. Finally, if the quadcopter went outside of the boundaries of the control space, the trial was ended and the subject was assigned a “wall collision.”
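The scoring rules above, together with the five-second rule from the experimental paradigm, can be sketched as an event scorer. This is a minimal illustration under stated assumptions: events are hypothetical `Event` records with a kind of "pass", "collide", or "wall"; the five-second window is measured from the last scored event; and a pass that converts a collision into a target hit restarts that window.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional

REFRACTORY_S = 5.0  # no two scored ring events within five seconds


@dataclass
class Event:
    kind: str                   # "pass", "collide", or "wall" (hypothetical labels)
    time: float                 # seconds since the start of the trial
    ring: Optional[int] = None  # ring identifier for "pass"/"collide" events


def score_trial(events: Iterable[Event]) -> Dict[str, int]:
    counts = {"target_hit": 0, "ring_collision": 0, "wall_collision": 0}
    last: Optional[Event] = None      # most recent scored ring event
    last_label: Optional[str] = None  # how that event was scored
    for ev in sorted(events, key=lambda e: e.time):
        if ev.kind == "wall":
            counts["wall_collision"] += 1   # leaving the control space ends the trial
            break
        if ev.kind not in ("pass", "collide"):
            raise ValueError(f"unknown event kind: {ev.kind}")
        recent = last is not None and ev.time - last.time <= REFRACTORY_S
        if (recent and ev.kind == "pass" and last_label == "ring_collision"
                and last.ring == ev.ring):
            # Collision followed by a pass through the same ring within 5 s:
            # score a target hit instead of the ring collision.
            counts["ring_collision"] -= 1
            counts["target_hit"] += 1
            last, last_label = ev, "target_hit"
        elif not recent:
            label = "target_hit" if ev.kind == "pass" else "ring_collision"
            counts[label] += 1
            last, last_label = ev, label
        # Otherwise the event falls inside the refractory window and is ignored.
    return counts
```

For example, a collision with ring 1 at 12 s followed by a pass through ring 1 at 14 s is scored as a single target hit, whereas a second pass 3 s after a hit is not scored at all.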

Trials that lasted less than four minutes ended because either the quadcopter exited the bounds of the control space (i.e. a wall collision) or a drone malfunction occurred. Malfunctions consisted of occasional wireless network failures or mechanical errors that were outside of the user’s control, and they caused a small subset of trials to end early. Data from these trials were analyzed up to the point of malfunction, but no wall collision was assigned to the subject’s performance log, as the error was not within the subject’s control.

Experimental Control

A control was performed using an experimental protocol in which one naïve subject and two experimental subjects controlled the flight of the quadcopter using keyboard control instead of a BCI. In this protocol, the quadcopter was given the same constant forward velocity, as well as maximum altitude gains and turning speeds that were equivalent to the average of all trained subjects. This protocol allowed for a comparison between a standardized method of control and BCI control. The keyboard control had one advantage in that a “total zero” signal could easily be maintained such that no left/right or up/down actuation occurred. This allowed for easier achievement of straight-line flight in the control experiments. Although it can be argued that a joystick or a remote control box would be a more nuanced method of controlling the quadcopter, this control paradigm employed the discrete keyboard actuation to ensure that the actuated control was the fastest possible within the constrained parameters. In the keyboard control task, subjects were given the same instructions with regards to the manner in which the rings were to be acquired.

In addition, the intrinsic ease of the experimental task was assessed. It is common practice in the field of brain-computer interfaces to propose a novel task for a BCI to perform. When such a task has not yet been explored by other researchers, it can be difficult for readers to determine how much of the subject’s success is attributable to the control system and how much to the inherent characteristics of the task. When a study reports that a subject acquires a target 100% of the time, it is important to also know the target density and how well the system performs when given random noise. If the system can still acquire 100% of the targets in the absence of user intent, the result becomes trivial. To evaluate the intrinsic ease of the presented experimental task, a naïve subject was shown a sham video feed of the quadcopter moving in the control space. The subject was instructed to simply observe the screen while sitting quietly and still. In all other respects, the system was set up identically to the experimental protocol: the subject wore an EEG cap, and the acquired signals controlled the action of an AR Drone quadcopter in the control space. The performance of the AR Drone in the absence of user intent was measured. This setup was preferred because it ensured that realistic biological signals were used as the input to the system; thus, poor performance could not be attributed to a choice of random noise of inappropriate magnitude or characteristics.

Performance Analysis

The performance of BCIs has been quantified by various metrics. Unifying these metrics is an important part of establishing a common ground for assessment within the BCI community and encouraging progress from a mutual reference point. In 2003, McFarland et al. described a simple yet elegant information transfer rate (ITR) metric based on Shannon’s work (Shannon & Weaver, 1964). However, it has been argued that this metric cannot be directly applied to asynchronous (self-paced) or pointing-device BCIs (Kronegg et al, 2005). Because such BCIs may nevertheless be used to assist clinically paralyzed patients, an analogous assessment is needed for BCIs that do not require a fixed schedule of commands. An unfixed schedule of commands would allow users to choose and perform activities as they would in a realistic setting (such as picking up a spoon but then deciding to switch to a fork instead). In one study, subjects successfully navigated a 2D maze using forward, left, and right commands, but they were limited to a maximum of 15 seconds to make each choice (Kus et al, 2012). If no choice was made, the movement selection was restarted. Furthermore, if subjects in the 2D maze task performed incorrectly by moving into a wall, the cursor neither moved incorrectly nor stopped the navigation task, which allowed for continual trials. Recently, Yuan et al. (2013) discussed the difficulty of comparing ITRs across BCI systems because of their many intricate differences, including the aforementioned error correction. The need to explicitly redefine tasks, even when multiple choices are available, is a current limiting factor in the utility of BCIs. By contrast, an unfixed schedule of commands for reaching a target along a dynamic path requires users to re-plan their path to the target to account for their own mistakes.

In order to create an information transfer rate for an asynchronous BCI system that does not limit user attempt time, the same mathematical basis (established by Shannon and Weaver) used by the current BCI ITR standard was used to quantify an analogous ITR for the present study.

It should be noted that, with an unfixed trial length and a large field of operation, the ITR may be lower than that of comparable BCI systems, because a long trial, whether it ends in success or failure, lowers the overall average. This matters because a BCI system can provide more autonomy in the real world if it does not require the user to be reset to a position or state after a fixed interval of inactivity. Instead, pursuit of the target should continue until the task is achieved.

Using these requirements and Shannon’s basic equation for bits, we created a metric suitable for an asynchronous real-world BCI task, in which only the initial and final positions of the user are required. The derivation was as follows. The index of difficulty (ID) from Fitts’ law, written in the form of Shannon’s equation, is expressed in bits:

$$ID = \log_2\left(\frac{D}{W} + 1\right)$$

where D is the distance from the starting point to the center of the target and W is the width of the target (Soukoreff & MacKenzie, 2004). Dividing this by the time t needed to reach the target, in minutes, yields bits/min. The ITR in bits/min was thus calculated by:

$$ITR = \frac{ID}{t} = \frac{1}{t}\log_2\left(\frac{D}{W} + 1\right)$$

This equation was used to calculate the ITR for the first event after take-off (an event being a target acquisition, a ring collision, or a wall collision). The net displacement to the target in this study, as seen in figure 5, was 4.6 meters. The target width, taken as the inner diameter of the ring, was 2.29 meters. It should be noted that a ring collision, although close to a target acquisition, was counted as a failure to transfer any information. Furthermore, this ITR does not account for the fact that users started each run with a forward velocity and without facing the target. However, it allows for a simple calculation that takes into account only the initial user position and the target position. Such strict guidelines enable the user to give specific instructions throughout the entire time to target acquisition. As we have pointed out, this metric is a coarse approximation of ITR, but it has been noted in the BCI field that ITR is often calculated incorrectly when using the standard metric (McFarland et al, 2003; Yuan et al, 2013).
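A minimal Python sketch of the asynchronous ITR metric described in this section, assuming the Shannon form of Fitts' index of difficulty, ID = log2(D/W + 1), with t converted to minutes, and that a first event ending in a ring or wall collision contributes zero bits, as the text states. The function names are hypothetical, and averaging per-trial values with a simple unweighted mean is an assumption; the study reports trial-weighted group averages.

```python
import math
from typing import Iterable, Tuple

# Values reported in the text for this task (meters).
NET_DISPLACEMENT_M = 4.6   # D: start position to target center
RING_INNER_DIAM_M = 2.29   # W: target width (inner ring diameter)


def index_of_difficulty(distance_m: float, width_m: float) -> float:
    """Fitts' index of difficulty in the Shannon form, in bits."""
    return math.log2(distance_m / width_m + 1.0)


def first_event_itr(time_to_event_s: float, acquired: bool,
                    distance_m: float = NET_DISPLACEMENT_M,
                    width_m: float = RING_INNER_DIAM_M) -> float:
    """ITR in bits/min for the first event after take-off.

    Ring or wall collisions (acquired=False) transfer zero bits.
    """
    if not acquired:
        return 0.0
    if time_to_event_s <= 0:
        raise ValueError("time to target must be positive")
    return index_of_difficulty(distance_m, width_m) / (time_to_event_s / 60.0)


def mean_itr(trials: Iterable[Tuple[float, bool]]) -> float:
    """Unweighted mean ITR over (time_s, acquired) trials (an assumption)."""
    values = [first_event_itr(t, ok) for t, ok in trials]
    if not values:
        raise ValueError("no trials given")
    return sum(values) / len(values)


print(round(index_of_difficulty(NET_DISPLACEMENT_M, RING_INNER_DIAM_M), 3))  # 1.589
```

With these dimensions each successful first event carries about 1.59 bits, so a hypothetical run reaching the ring 60 s after take-off scores about 1.59 bits/min, while the same run ending in a ring collision scores 0.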

Speed of performance was assessed through the use of two metrics. The average rings per maximum flight (ARMF) metric reports how many targets a subject can acquire on average, if the quadcopter were to remain in flight for the full 4-minute trial. In addition, the average ring acquisition time (ARAT) metric was also used to evaluate the subjects’ speed of control. Continuity of control was evaluated by using the average crashes per maximum flight (ACMF) metric, which reports the average number of crashes that would occur in four minutes given their average rate of occurrence.

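ARMF was calculated by:

$$ARMF = \frac{N_{T} \times 240\ \text{s}}{T_{f}}$$

where $N_T$ is the total number of target acquisitions and $T_f$ is the total flight time in seconds.

ARAT was expressed as:

$$ARAT = \frac{T_{f}}{N_{T}}$$

In addition, ACMF was calculated by:

$$ACMF = \frac{N_{W} \times 240\ \text{s}}{T_{f}}$$

where $N_W$ is the total number of wall (boundary) collisions.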
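The speed and continuity metrics defined in this section reduce to rates normalized to the 240 s maximum flight. The sketch below assumes per-subject totals of target acquisitions, wall collisions, and flight time pooled across trials; the `FlightTotals` container and the function names are hypothetical. Under these definitions ARMF = 240 s / ARAT, which agrees with Table 1 (for example, 240/57.8 ≈ 4.2 for Subject 1).

```python
import math
from dataclasses import dataclass

MAX_FLIGHT_S = 240.0  # full 4-minute trial


@dataclass
class FlightTotals:
    """Hypothetical per-subject totals pooled over all trials."""
    target_acquisitions: int
    wall_collisions: int
    total_flight_s: float


def arat(t: FlightTotals) -> float:
    """Average ring acquisition time in seconds (inf if no rings acquired)."""
    if t.target_acquisitions == 0:
        return math.inf
    return t.total_flight_s / t.target_acquisitions


def armf(t: FlightTotals) -> float:
    """Average rings per 4-minute maximum flight."""
    return t.target_acquisitions * MAX_FLIGHT_S / t.total_flight_s


def acmf(t: FlightTotals) -> float:
    """Average wall (boundary) crashes per 4-minute maximum flight."""
    return t.wall_collisions * MAX_FLIGHT_S / t.total_flight_s


# Hypothetical example: 6 rings and 2 crashes over 8 minutes of flight.
example = FlightTotals(target_acquisitions=6, wall_collisions=2, total_flight_s=480.0)
print(arat(example), armf(example), acmf(example))  # 80.0 3.0 1.0
```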

The success rate of the present BCI system was assessed by three metrics: percent total correct (PTC), percent valid correct (PVC), and percent partial correct (PPC). If the subject successfully controlled the quadcopter to pass through a ring, one target acquisition was awarded. If the quadcopter collided with a ring without passing through it, a ring collision was recorded. If the quadcopter collided with a ring and then passed through that same ring, only the target acquisition was counted and no ring collision was recorded. PTC is the number of target acquisitions divided by the total number of attempts (target acquisitions, ring collisions, and wall collisions). In contrast, PVC was calculated as the number of target acquisitions divided by the number of valid attempts (target acquisitions and wall collisions). A valid attempt was one in which a definitive positive or negative event occurred. In addition, PPC was evaluated to show how many perfect task completions were achieved relative to partial successes.

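PTC was calculated by:

$$PTC = \frac{N_{T}}{N_{T} + N_{R} + N_{W}} \times 100\%$$

PVC was given by:

$$PVC = \frac{N_{T}}{N_{T} + N_{W}} \times 100\%$$

Lastly, PPC was calculated by:

$$PPC = \frac{N_{T} + N_{R}}{N_{T} + N_{R} + N_{W}} \times 100\%$$

where $N_T$, $N_R$, and $N_W$ are the numbers of target acquisitions, ring collisions, and wall collisions, respectively.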
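A sketch of the accuracy bookkeeping, assuming each run is logged as an ordered list of events; the event encoding and function names are hypothetical. It applies the rule that a ring collision followed by passing through the same ring counts only as a target acquisition (reading "then" as "the next logged event" is an assumption), and it computes PPC as (acquisitions + ring collisions) / all attempts, a reading consistent with Table 1 (for Subject 1, PVC = 90.5% and PTC = 85.1% imply PPC ≈ 91%).

```python
from collections import Counter
from typing import List, Optional, Tuple

# Hypothetical event encoding: ("hit" | "ring" | "wall", ring id or None).
Event = Tuple[str, Optional[int]]


def tally(events: List[Event]) -> Counter:
    """Count events, folding a ring collision into the pass that follows it."""
    counts: Counter = Counter()
    for i, (kind, ring_id) in enumerate(events):
        next_event = events[i + 1] if i + 1 < len(events) else None
        # Collision then pass through the same ring: count only the acquisition.
        if kind == "ring" and next_event == ("hit", ring_id):
            continue
        counts[kind] += 1
    return counts


def accuracy(counts: Counter) -> Tuple[float, float, float]:
    """Return (PTC, PVC, PPC) in percent."""
    hits, rings, walls = counts["hit"], counts["ring"], counts["wall"]
    attempts = hits + rings + walls
    valid = hits + walls
    if attempts == 0:
        raise ValueError("no attempts recorded")
    ptc = 100.0 * hits / attempts
    pvc = 100.0 * hits / valid if valid else float("nan")
    ppc = 100.0 * (hits + rings) / attempts
    return ptc, pvc, ppc


events = [("ring", 1), ("hit", 1), ("hit", 2), ("ring", 3), ("hit", 4), ("wall", None)]
print(accuracy(tally(events)))  # (60.0, 75.0, 80.0)
```

In this hypothetical log the collision with ring 1 is absorbed by the pass that follows it, while the collision with ring 3 stands because the next event is a pass through a different ring.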

Results

Subject Success Rate

The subjects achieved accurate control of the quadcopter in 3D real space. Table 1 displays the performance results. PVC is the subject success rate over all attempts in which a definitive result (either correct or incorrect) was achieved. The PTC metric was used as a stricter metric to ensure that each subject made a significant effort toward passing through the target rather than just reaching it. The PPC metric was most analogous to previous BCI experiments in which subjects only had to reach a target, and not also pass through it in a particular orientation after reaching it. The group trial-weighted average was 79.2% for PVC, 66.3% for PTC, and 82.6% for PPC. A summary of these and all other metrics can be seen in Table 1.

Table 1.

A summary of the average performance over the three sessions in which the subjects performed the experimental task, along with the group weighted average of performance for the same metrics. Included for comparison are the group performance metrics for keyboard control of the AR Drone by three subjects and an assessment of the baseline ease of the experimental task given the input of a random biological signal.

| Paradigm | PVC (%) | PTC (%) | PPC (%) | ARAT (s) | ARMF (rings/max flight) | ACMF (crashes/max flight) |
|---|---|---|---|---|---|---|
| Subject 1 | 90.5 | 85.1 | 91.0 | 57.8 | 4.2 | 0.4 |
| Subject 2 | 79.4 | 62.8 | 83.7 | 81.4 | 2.9 | 0.8 |
| Subject 3 | 75.0 | 62.3 | 79.2 | 72.1 | 3.3 | 1.1 |
| Subject 4 | 81.4 | 70.0 | 84.0 | 86.2 | 2.8 | 0.6 |
| Subject 5 | 69.1 | 54.3 | 75.7 | 98.8 | 2.4 | 1.1 |
| Subjects Weighted Average | 79.2 | 66.3 | 82.6 | 77.3 | 3.1 | 0.8 |
| Keyboard Control | 100.0 | 100.0 | 100.0 | 19.9 | 12.0 | 0.0 |
| Baseline Ease of Task | 8.3 | 8.3 | 8.3 | 451.0 | 0.5 | 5.9 |

Subjects demonstrated the ability to acquire multiple rings in rapid succession by minimizing turning actuations of the quadcopter. Some subjects did this by temporarily minimizing their left/right signal to achieve a more direct path, as demonstrated in Video 2. Furthermore, subjects were able to make fine turn corrections as they approached the rings in order to rotate the quadcopter to pass through the target (seen in Video 1). All subjects achieved high degrees of accuracy by using motor imagery to approach and pass through the targets.

Information Transfer Rate

The five subjects who participated in this protocol displayed an average ITR of 6.17 bits/min over 10 characteristic trials of a 2D cursor control task in which they were given 6 seconds to reach the target before being reset to the center of the screen and given a new target.

During the AR Drone experimental protocol, the five subjects achieved an average ITR of 1.16 bits/min. Individual subject values can be seen in Table 2. Although lower than the values in the 2D task, these values represent an important step toward providing BCI users with more autonomy, because the task neither mandates a fixed schedule of events nor limits time. The difference in ITR, while partially due to the strict enforcement of the ITR calculation in the real world, may also reflect challenges in bringing BCI systems into the real world. While the drop from the 2D cursor task is noteworthy, so is the fact that the subjects’ average ITR was 8.29 times higher than the ITR from the ease of control assessment, a good indication that the subjects were intentionally interacting with the environment via the BCI system. The ease of control assessment used a random biological signal to control the quadcopter, and it shows that the task could not be completed at a high success rate without an intentionally modulated signal.
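The ITR ratios quoted in this discussion follow directly from the averages in Table 2; a quick arithmetic check in Python:

```python
# Average ITRs (bits/min) taken from Table 2.
itr_bci = 1.16        # group-weighted average of the five BCI subjects
itr_keyboard = 5.60   # keyboard control
itr_baseline = 0.14   # ease-of-task assessment (random biological signal)

bci_over_baseline = itr_bci / itr_baseline   # how far BCI control exceeds chance-like input
keyboard_over_bci = itr_keyboard / itr_bci   # how far keyboard control exceeds BCI control
print(f"{bci_over_baseline:.2f} {keyboard_over_bci:.2f}")  # 8.29 4.83
```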

Table 2.

A summary of the average ITRs (bits/min) for each subject, along with the values for keyboard control and the ease-of-task assessment (random biological signal).

| Paradigm | Average ITR (bits/min) |
|---|---|
| Subject 1 | 1.92 |
| Subject 2 | 1.42 |
| Subject 3 | 1.34 |
| Subject 4 | 0.78 |
| Subject 5 | 1.53 |
| Group-Weighted Average | 1.16 |
| Keyboard Control | 5.60 |
| Baseline Ease of Task | 0.14 |

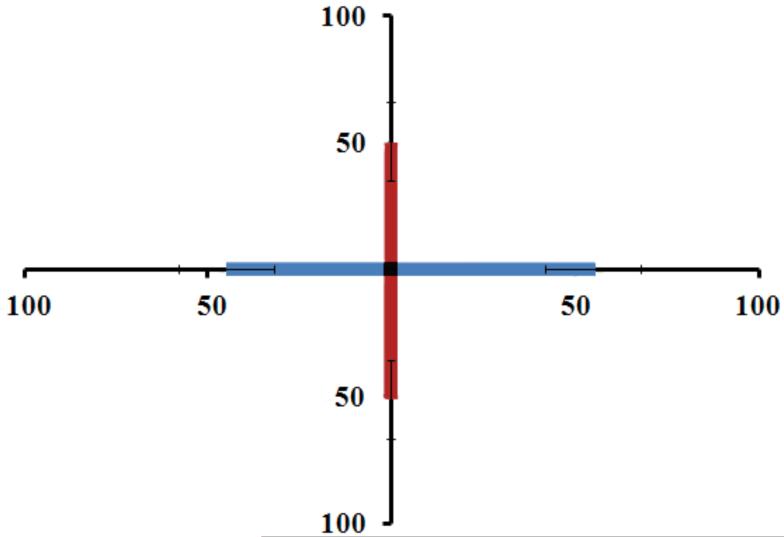

Because of the importance of ITR, it was necessary to ensure that the system neither transferred information artificially without user input nor allowed the user to inflate the ITR without using the full range of controls. Therefore, during the period of flight over which ITR was calculated, the user’s direction of control was also tracked; figure 8 compares left with right control and up with down control. This demonstrates that the users actuated all dimensions of control to move the AR Drone through the field to the target.

Figure 8.

Each direction of movement (up, down, left, and right) is shown as a percentage of the total time spent moving in that dimension (i.e., time moving left is divided by the total time moving either left or right). This characteristic plot, taken from all of the trials used to calculate ITR for Subject 1, reflects the BCI user’s use of different directions of control to reach the targets.
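Figure 8 reports, for each dimension, the share of movement time spent in each direction. A minimal sketch of that normalization, assuming the control output is logged as (left/right, up/down) values at a fixed sampling interval; the sign convention, the sampling scheme, and the function name are hypothetical.

```python
from typing import Dict, Iterable, Tuple


def direction_shares(samples: Iterable[Tuple[float, float]], dt_s: float) -> Dict[str, float]:
    """Percent of movement time per direction, normalized within each dimension.

    samples: (left_right, up_down) control values logged every dt_s seconds.
    Assumed sign convention: negative left_right = left, positive = right;
    positive up_down = up, negative = down; zero = no movement.
    """
    time_s = {"left": 0.0, "right": 0.0, "up": 0.0, "down": 0.0}
    for lr, ud in samples:
        if lr < 0:
            time_s["left"] += dt_s
        elif lr > 0:
            time_s["right"] += dt_s
        if ud > 0:
            time_s["up"] += dt_s
        elif ud < 0:
            time_s["down"] += dt_s

    def pct(part: float, whole: float) -> float:
        return 100.0 * part / whole if whole > 0 else 0.0

    lr_total = time_s["left"] + time_s["right"]
    ud_total = time_s["up"] + time_s["down"]
    return {
        "left": pct(time_s["left"], lr_total),
        "right": pct(time_s["right"], lr_total),
        "up": pct(time_s["up"], ud_total),
        "down": pct(time_s["down"], ud_total),
    }


samples = [(-0.2, 0.1), (0.3, 0.0), (0.5, -0.4), (-0.1, -0.2)]
shares = direction_shares(samples, dt_s=0.04)
print({k: round(v, 1) for k, v in shares.items()})  # {'left': 50.0, 'right': 50.0, 'up': 33.3, 'down': 66.7}
```

Because each share is normalized within its own dimension, the sampling interval cancels out; it is kept only so the intermediate totals are in seconds.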

Subject Speed of Control

For the purposes of this study, a constant forward command signal was sent to the quadcopter; however, the subjects were able to manipulate the speed by rotating and adjusting the altitude of the quadcopter. It was found that large turns in a single direction tended to increase the velocity, whereas temporarily changing directions decreased the forward linear velocity. Individual subject performance and group average performance for speed of control metrics can be seen in table 1.

The ARMF metric was calculated to better quantify the speed performance of each subject. If a subject reached the maximum flight time of four minutes, the quadcopter was automatically landed to ensure that battery drain had at most a minimal effect on the homogeneity of control. The group trial-weighted average for ARMF was 3.1 rings per maximum flight.

Subject Continuity of Control

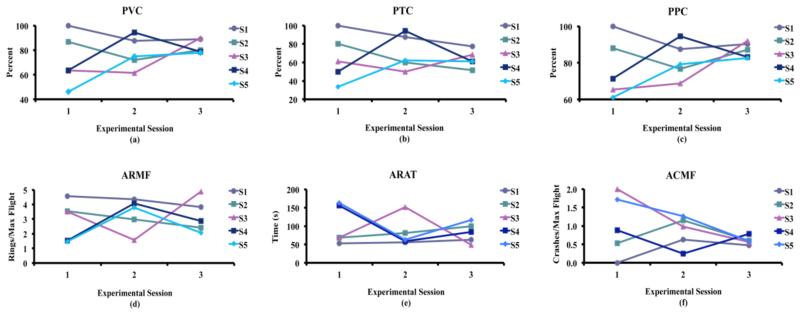

Subjects were given control of the quadcopter in 3D space for flights of up to 4 minutes. During this time, subjects were able to modulate the movements of the quadcopter as they strategized to acquire as many rings as possible. However, if a subject collided with the boundaries of the field, the trial ended. By calculating the number of boundary collisions that occurred per maximum length of flight (4 minutes), an ACMF metric was developed to compare subject performance. The group trial-weighted average for ACMF was 0.8 crashes per maximum flight. Subject progress was tracked over each session, and a comparison of subject performance, including metrics of accuracy, speed, and continuity, can be seen in figure 9. Subjects demonstrated the ability to maintain smooth control while avoiding crashes. Video 1 shows Subject 1 continuously acquiring targets while avoiding wall and ring collisions in between, demonstrating a high level of continuous control.

Figure 9.

Experimental performance metrics are reported over three subject visits (three experimental sessions). The top row reports, from left to right, percent valid correct (a), percent total correct (b), and percent partial correct (c). These metrics reflect the average accuracy of each subject’s control as calculated under different criteria for successful task completion. The bottom row reports, from left to right, average rings per maximum flight (d), average ring acquisition time (e), and average crashes per maximum flight (f). Panels (d) and (e) reflect the speed with which subjects pursued the presented targets, while (f) is a metric of continuity and safety of control.

Experimental Control Results

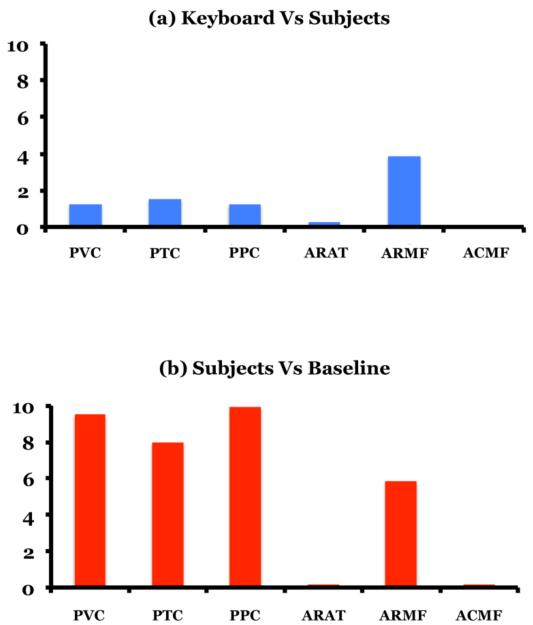

In the keyboard control paradigm, in which a naïve subject and two experimental subjects completed the experimental task using keyboard control instead of BCI, the subjects were able to achieve a group weighted average of 12.0 rings per four-minute trial. In contrast, the trained subjects were able to achieve 3.1 rings per four-minute trial using BCI. Thus, our brain-computer interface system demonstrated the ability to acquire 25.8% of the rings that were acquired via keyboard control, which is a method considered to be the gold standard in terms of reliability and prevalence for our current interactions with computer technology.

In the ease of control assessment (in which a naïve BCI user was given sham feedback), the naïve subject acquired only one target and had no ring collisions over a total flight time of about 7.5 minutes. The flight time was short, despite a normal session of 12 trials, because the quadcopter crashed quickly after take-off in each trial. The subject had a notably high ACMF of 5.9, reflecting the high number of rapid crashes observed.

In summary, the trained subjects acquired an average of 3.1 rings per maximum flight, compared with only 0.5 rings per maximum flight for the subject with no training or feedback in the ease-of-task assessment. The ratios of weighted average performance metrics for keyboard control relative to BCI control, and for BCI control relative to the intrinsic ease of the experimental task, are reported in Figure 10.

Figure 10.

(a) The ratios of weighted average performance metrics for keyboard control as compared to brain-computer interface control. Note that a smaller ARAT implies faster ring acquisition and superior performance. Since keyboard control resulted in no crashes, the ACMF value was found to be zero in the ratio of keyboard to subject crashes per max flight. (b) The ratios of weighted average subject performance metrics to baseline BCI system performance using a random EEG signal that was generated by a naive subject receiving no training or task related feedback. Lower ARAT and ACMF metrics are favourable outcomes and so a low ratio for subject to baseline performance implies superior performance by the subjects when compared to the baseline random EEG.
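The Figure 10 ratios can be approximated from the group rows of Table 1. A sketch follows; the figure may have been computed from unrounded data, so small differences from the plotted values are possible, and the dictionary layout is purely illustrative.

```python
# Group weighted averages from Table 1: PVC, PTC, PPC (%), ARAT (s), ARMF, ACMF.
METRICS = ("PVC", "PTC", "PPC", "ARAT", "ARMF", "ACMF")
subjects = dict(zip(METRICS, (79.2, 66.3, 82.6, 77.3, 3.1, 0.8)))
keyboard = dict(zip(METRICS, (100.0, 100.0, 100.0, 19.9, 12.0, 0.0)))
baseline = dict(zip(METRICS, (8.3, 8.3, 8.3, 451.0, 0.5, 5.9)))


def ratios(numerator: dict, denominator: dict) -> dict:
    """Metric-by-metric ratio numerator / denominator."""
    return {m: numerator[m] / denominator[m] for m in METRICS}


keyboard_to_bci = ratios(keyboard, subjects)   # panel (a): ACMF ratio is 0 (no keyboard crashes)
bci_to_baseline = ratios(subjects, baseline)   # panel (b): ratios below 1 favour subjects for ARAT, ACMF

# Share of keyboard ring throughput achieved with BCI (quoted as 25.8% in the text).
print(f"{100 * subjects['ARMF'] / keyboard['ARMF']:.1f}%")  # 25.8%
```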

Discussion

The three-dimensional exploration of a physical environment is an important step in the creation of brain-computer interface devices that are capable of impacting our physical world. Helicopter control has previously been demonstrated in virtual environments (Doud et al, 2011), but the transition to a system that functions in the physical world is a significant challenge. The physical environment in which our robotic system was controlled, and the intrinsic characteristics of the robotic system itself, introduced several technical obstacles that were not present in prior virtual reality research. Instead of directly influencing an ideal, simulated physics engine that updated the movement of a virtual model, subjects were now required to modulate the lift actuated by the quadcopter. In the absence of adequate lift, a subject’s down command could have an unexpectedly large effect, accelerating the quadcopter to a speed much faster than intended. Over the course of a long flight, the magnitude of certain actuated movements could also decrease slightly as the battery drained, a consideration that was not reflected in the virtual control studies.

In further contrast to the virtual helicopter control described in Doud et al. (2011), an important difference in using the AR Drone quadcopter was the obligatory first-person perspective imposed on the subjects during real-world control. Unlike in prior work, the subject could not see the entire quadcopter during navigation. The field of view was limited to the area immediately in front of the quadcopter, with limited visibility on either side, so the subject had to rotate the quadcopter and search the field to find targets. This presented additional challenges in obstacle avoidance and in the strategic planning of trajectories through the suspended foam rings. These challenges may have contributed to the greater number of ring collisions in this work compared with the virtual helicopter task, and to the lower ITR, since subjects had to search for the rings. A slight lag between the commands sent to the drone and the return of the video stream to the user’s screen necessitated new strategies, including planning ahead for unforeseen circumstances arising from brief losses of video feedback. Learning to control a physical system with intrinsic limitations like these, while still completing the task at hand, is an obstacle that recipients of future neuroprosthetics will likely face. By leveraging the adaptive software capabilities of brain-computer interface technology, together with the considerable human capacity for neuroplastic change, these obstacles are by no means insurmountable. The ability to train in such an environment and to develop techniques for managing it is therefore fundamental to the progress of the field.

Learning about the unforeseen obstacles intrinsic to a physical control environment will allow for the creation of more realistic virtual simulations, which may in turn drive the development of more robust real-world systems. Despite these technical challenges, the subjects in the present study learned to control the path of a robotic quadcopter to seek out and safely pass through rings in a physical control space the size of a basketball court, outperforming the baseline ease-of-task assessment in every metric measured. Users controlled the movement of the drone in a fast, accurate, and continuous manner.

We have also introduced an ITR metric that can easily be applied to real-world, asynchronous, and non-time-limited BCI tasks. While the values for this task are lower than those reported in the literature for synchronous, fixed-schedule tasks, there is solid justification for these differences, and such systems should be judged against others of a comparably asynchronous nature. Notably, the asynchronous task gives subjects the freedom to move in any direction at any time while reaching their goal. If subjects incorrectly actuated the AR Drone so that it moved away from the target, they were not only penalized by an increased time to target but also had to re-plan their route. This differentiates our system from previous BCI systems, such as that of Kus et al (2012), in which subjects who made an incorrect choice were allowed back onto the correct path to retry the previous task multiple times. In Kus et al’s maze task, a group average ITR of 4.5 bits/min was reported, and Rong et al reported ITRs in the range of 1.82 to 8.69 bits/min, depending on the classifier used. However, these ITR values are not directly comparable, because our ITR equation incorporates a different set of primary assumptions related to the asynchronous nature of our system. Two further factors in our system are the constant forward velocity and the subject’s point of view. Because the subjects were not initially facing the targets and had a starting velocity when the trials began, they were initially moving away from the targets. During full four-minute trials, and because of the first-person view, subjects sometimes reported getting lost or disoriented in the field. Therefore, before they could reach a target, they had to move around the field just to bring it into view.

While our system transfers a minimal amount of information from a physiological signal generated in the absence of meaningful feedback, the BCI subjects’ ITR was 8.29 times that of the ease of control assessment, a substantial margin. At the same time, the BCI subjects’ ITR was 4.83 times lower than that of keyboard control, which demonstrates a clear need for continued improvement of BCI systems. However, for people who lack all voluntary motor control, even partial improvement is valuable, so the present BCI results are encouraging. Having an able-bodied user perform the task in a conventional manner, such as with a physical keyboard, may prove useful in assessing a wide variety of BCI systems. It is our hope that by working toward an appropriate standardization of metrics, the comparative utility of BCI systems can be demonstrated more robustly.

Subject engagement is likely to increase with a compelling virtual environment, and this effect appears to carry over to interacting with a physical object in a real-world setting. Motivation for a subject’s success in this study was driven by the engaging and competitive nature of scoring highly in an exciting game. Beyond that motivation, subjects were able to see the direct consequences of failure much more clearly than is often possible in a virtual reality study. Where before a crash may have meant a quick reload to the centre of the screen, it now meant a loud noise and a jarring of the camera, possibly accompanied by a broken drone and a wait to be reset. It has been postulated that non-human primates undergoing training for the use of a robotic arm may experience a sense of embodiment with respect to the new prosthetic as part of the learning process (Velliste et al, 2008). Recent developments in the field support invasive BCI for the control of robotic limbs in 3D space by subjects paralyzed by brainstem lesions (Hochberg et al, 2012). For these subjects, a non-invasive system may eventually serve a similar role, avoiding the risks of infection and device rejection, or acting as a bridge in therapy in preparation for implantation. If embodiment does play an important role in the use of a neuroprosthetic device, it stands to reason that exposure to a non-invasive system early in the disease course, before surgery, may become a viable option and could result in better retention of function. Even with a less anthropomorphic robotic helicopter as the controlled device, subjects still often experienced a similar phenomenon. Subjects new to the task were quickly reminded that a slight bowing of the head when narrowly avoiding the top of a ring would only introduce noise into the system and not improve control. While such behaviour quickly ended with training, the fact that seeing the closeness of the ring produced an urge to duck the head may imply a sense of embodiment regarding the controlled object. Exploring which types of avatars elicit the most robust sense of embodiment in a subject may prove to be a rewarding future pursuit.

This study purposely chose to focus on a telepresence implementation of drone control. Instead of viewing the physical drone while completing the task, subjects were given a video feed from the drone’s perspective. The intention was twofold: to ensure that the subject could maintain a proper understanding of which direction the drone was facing, a problem often faced by novice remote-control model helicopter pilots, and to further promote a sense of embodiment by letting the subject see through the drone’s “eyes”. Seeing through the drone’s camera is also important because the subject’s successful control of the drone should not depend on his or her proximity to it. None of the feedback relevant to the control task depended on the subject being in the room; the subject was in the same room as the drone for experimental convenience rather than out of necessity. With minor modifications to the communication program, a remote user could conceivably control the drone from a considerable distance using a brain-computer interface. Such an implementation may introduce additional obstacles, such as communication lag or interrupted communication. However, given the speed of modern global communication, the research team expects that these challenges would be relatively simple to overcome. By establishing the subject’s ability to control the drone confidently via camera, the implications for expansion to longer-distance exploration become compelling.

Choosing rings as the targets for the experimental protocol gives the task an element of both target acquisition and of obstacle avoidance. In a real world situation of brushing one’s teeth or eating with a fork, close enough is rarely good enough. Subjects that trained using the drone protocol presented here were constantly aware that even as target acquisition was imminent, important obstacle avoidance remained essential to success.

Through a balance of positive motivators (higher performance in the task) and negative ones (the real risk of a crash), subjects were compelled to higher levels of performance and remained engaged in the experimental task. Such a balance will be crucial in determining the limits of brain-computer interfaces: a well-tuned system in the absence of subject engagement will fall well short of its potential. Because any BCI is a connection between a human mind and a machine, ensuring optimum performance of both, through system optimization and subject engagement, remains an important design requirement of any successful brain-computer interface system.

Conclusion

In the present study we performed an experimental investigation demonstrating the ability of human subjects to control a robotic quadcopter in three-dimensional physical space by means of a motor imagery EEG brain-computer interface. Using the BCI system, subjects were able to quickly, accurately, and continuously pursue a series of foam ring targets and pass through them in a real-world environment using only their “thoughts”. The study was implemented with an inexpensive and readily available AR Drone quadcopter, and provides an affordable framework for the development of multidimensional brain-computer interface control of telepresence robotics. We also presented an information transfer rate metric for asynchronous real-world brain-computer interface systems, and used this metric to assess our BCI system for the guidance of a robotic quadcopter in three-dimensional physical space. The ability to interact with the environment through exploration of the three-dimensional world is an important component of the autonomy that is lost when one suffers a paralyzing neurodegenerative disorder, and one that can have a dramatic impact on quality of life. Whether with a flying quadcopter or via some other implementation of telepresence robotics, the framework of this study allows for expansion and assessment of control from remote distances with fast and accurate actuation, qualities that will be valuable in restoring the autonomy of world exploration to paralyzed individuals and in expanding that capacity in healthy users.

Supplementary Material

Video 1. Subject 1 acquires two targets in 30 seconds. The subject actuates left and right turning to approach the rings from behind in order to be correctly oriented to make a pass at the next ring.

Video 2. Subject 2 shows the ability to suppress left and right actuation in order to take the most direct path between the two rings. This ability is assisted by the non-linear control algorithm.

Video 3. Subject 3 acquires 2 targets consecutively within 20 seconds. The subject shows an ability to indirectly modulate velocity between rings (slowing down in this case) with sudden left or right turns that shift momentum.

Video 4. Subject 4 demonstrates both continuous and rapid control of the quadcopter by successfully achieving two target acquisitions. Note that the two target acquisitions were separated by a period of 10 seconds, which is greater than the predefined threshold for a new target acquisition event.

Video 5. Subject 5 immediately acquires a ring after take-off, demonstrating fast acclimation to the BCI system when beginning a new run.

Acknowledgement

This work was supported in part by NSF CBET-0933067, NSF DGE-1069104, the Multidisciplinary University Research Initiative (MURI) through ONR (N000141110690), NIH EB006433, and in part by the Institute for Engineering in Medicine of the University of Minnesota. The authors would like to acknowledge the Codeminders team for providing an open source platform for Java development of the AR Drone.

References

- Bai O, Lin P, Huang D, Fei D-Y, Floeter MK. Towards a user-friendly brain-computer interface: Initial tests in ALS and PLS patients. Clinical Neurophysiology. 2010;121:1293–1303. doi: 10.1016/j.clinph.2010.02.157.

- Cincotti F, Mattia D, Aloise F, Bufalari S, Schalk G, Oriolo G, Cherubini A, Marciani MG, Babiloni F. Non-invasive brain-computer interface system: towards its application as assistive technology. Brain Research Bulletin. 2008;75:796–803. doi: 10.1016/j.brainresbull.2008.01.007.

- Doud AJ, Lucas JP, Pisansky MT, He B. Continuous three-dimensional control of a virtual helicopter using a motor imagery based brain-computer interface. PLoS ONE. 2011;6(10):e26322. doi: 10.1371/journal.pone.0026322.

- Doud AJ, LaFleur K, Shades K, Rogin E, Cassady K, He B. Noninvasive brain-computer interface control of a quadcopter flying robot in 3D space using sensorimotor rhythms [abstract]. World Congress 2012 on Medical Physics and Biomedical Engineering; 2012.

- Galán F, Nuttin M, Lew E, Ferrez PW, Vanacker G, et al. A brain-actuated wheelchair: Asynchronous and non-invasive brain-computer interfaces for continuous control of robots. Clinical Neurophysiology. 2008;119:2159–2169. doi: 10.1016/j.clinph.2008.06.001.

- Georgopoulos AP, Kalaska JF, Caminiti R, Massey JT. On the relations between the direction of two-dimensional arm movements and cell discharge in primate motor cortex. Journal of Neuroscience. 1982;2:1527–1537. doi: 10.1523/JNEUROSCI.02-11-01527.1982.

- He B, Gao S, Yuan H, Wolpaw J. Brain-Computer Interface. In: He B, editor. Neural Engineering. 2nd ed. Springer; 2013. pp. 87–151.

- Hochberg L, Bacher D, Jarosiewicz B, Masse N, Simeral J, Vogel J, Haddadin S, Liu J, Cash S, van der Smagt P, Donoghue J. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012;485(7398):372–375. doi: 10.1038/nature11076.

- Hochberg LR, Serruya MD, Friehs GM, Mukand JA, Saleh M, Caplan A, Branner A, Chen D, Penn R, Donoghue J. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006;442:164–171. doi: 10.1038/nature04970.

- Huggins JE, Wren PA, Gruis KL. What would brain-computer interface users want? Opinions and priorities of potential users with amyotrophic lateral sclerosis. Amyotrophic Lateral Sclerosis. 2011;12(5):318–324. doi: 10.3109/17482968.2011.572978.

- Kamousi B, Liu Z, He B. Classification of motor imagery tasks for brain-computer interface applications by means of two equivalent dipoles analysis. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2005;13:166–171. doi: 10.1109/TNSRE.2005.847386.

- Kronegg J, Voloshynovskiy S, Pun T. Information-transfer rate modeling of EEG-based synchronized brain-computer interfaces. Technical Report No. 05.03. University of Geneva, Computer Vision and Multimedia Laboratory, Computing Centre; 2005.

- Kus R, Valbuena D, Zygierewicz J, Malechka T, Graeser A, Durka P. Asynchronous BCI Based on Motor Imagery With Automated Calibration and Neurofeedback Training. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2012;20(6):823–835. doi: 10.1109/TNSRE.2012.2214789.

- McFarland DJ, Sarnacki WA, Wolpaw JR. Brain-computer interface operation: Optimizing information transfer rates. Biological Psychology. 2003;63:237–251. doi: 10.1016/s0301-0511(03)00073-5.

- McFarland DJ, Sarnacki W, Wolpaw J. Electroencephalographic (EEG) control of three-dimensional movement. Journal of Neural Engineering. 2010;7:036007. doi: 10.1088/1741-2560/7/3/036007.

- Musallam S, Corneil BD, Greger B, Scherberger H, Andersen RA. Cognitive Control Signals for Neural Prosthetics. Science. 2004;305:258–262. doi: 10.1126/science.1097938.

- Neuper C, Scherer R, Wriessnegger S, Pfurtscheller G. Motor imagery and action observation: Modulation of sensorimotor brain rhythms during mental control of a brain-computer interface. Clinical Neurophysiology. 2009;120:239–247. doi: 10.1016/j.clinph.2008.11.015.

- Pfurtscheller G, Lopes da Silva FH. Event-related EEG/MEG synchronization and desynchronization: basic principles. Clinical Neurophysiology. 1999;110:1842–1857. doi: 10.1016/s1388-2457(99)00141-8.

- Qin L, Ding L, He B. Motor Imagery Classification by Means of Source Analysis for Brain Computer Interface Applications. Journal of Neural Engineering. 2004;1:135–141. doi: 10.1088/1741-2560/1/3/002.

- Qin L, He B. A wavelet-based time-frequency analysis approach for classification of motor imagery for brain-computer interface applications. Journal of Neural Engineering. 2005;2:65. doi: 10.1088/1741-2560/2/4/001.

- Royer AS, Doud AJ, Rose ML, He B. EEG Control of a Virtual Helicopter in 3-Dimensional Space Using Intelligent Control Strategies. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2010;18:581–589. doi: 10.1109/TNSRE.2010.2077654.

- Santhanam G, Ryu SI, Yu BM, Afshar A, Shenoy KV. A high-performance brain-computer interface. Nature. 2006;442:195–198. doi: 10.1038/nature04968.

- Schalk G, McFarland DJ, Hinterberger T, Birbaumer N, Wolpaw JR. BCI2000: a general-purpose brain-computer interface (BCI) system. IEEE Transactions on Biomedical Engineering. 2004;51:1034–1043. doi: 10.1109/TBME.2004.827072.

- Scherer R, Lee F, Schlogl A, Leeb R, Bischof H, et al. Toward Self-Paced Brain-Computer Communication: Navigation Through Virtual Worlds. IEEE Transactions on Biomedical Engineering. 2008;55:675–682. doi: 10.1109/TBME.2007.903709.

- Shannon CE, Weaver W. The Mathematical Theory of Communication. University of Illinois Press; Urbana, IL; 1964.

- Soukoreff RW, MacKenzie IS. Towards a standard for pointing device evaluation, perspectives on 27 years of Fitts’ law research in HCI. International Journal of Human-Computer Studies. 2004;61(6):751–789.

- Taylor DM, Tillery SIH, Schwartz AB. Direct Cortical Control of 3D Neuroprosthetic Devices. Science. 2002;296:1829–1832. doi: 10.1126/science.1070291.

- Vallabhaneni A, Wang T, He B. Brain-computer interface. In: He B, editor. Neural Engineering. Springer; 2005.

- Velliste M, Perel S, Spalding MC, Whitford AS, Schwartz AB. Cortical control of a prosthetic arm for self-feeding. Nature. 2008;453:1098–1101. doi: 10.1038/nature06996.

- Wang T, Deng J, He B. Classifying EEG-based motor imagery tasks by means of time-frequency synthesized spatial patterns. Clinical Neurophysiology. 2004;115:2744–2753. doi: 10.1016/j.clinph.2004.06.022.

- Wang T, He B. An efficient rhythmic component expression and weighting synthesis strategy for classifying motor imagery EEG in a brain-computer interface. Journal of Neural Engineering. 2004;1:1. doi: 10.1088/1741-2560/1/1/001.

- Wolpaw JR, Birbaumer N, Heetderks WJ, McFarland DJ, Peckham PH, Schalk G, Donchin E, Quatrano LA, Robinson CJ, Vaughan TM. Brain-computer interface technology: a review of the first international meeting. IEEE Transactions on Rehabilitation Engineering. 2000;8(2):164–173. doi: 10.1109/tre.2000.847807.

- Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain-computer interfaces for communication and control. Clinical Neurophysiology. 2002;113:767–791. doi: 10.1016/s1388-2457(02)00057-3.

- Wolpaw JR, McFarland DJ. Control of a two-dimensional movement signal by a noninvasive brain-computer interface in humans. Proceedings of the National Academy of Sciences of the United States of America. 2004;101:17849–17854. doi: 10.1073/pnas.0403504101.

- Wolpaw JR, Ramoser H, McFarland DJ, Pfurtscheller G. EEG-Based Communication: Improved Accuracy by Response Verification. IEEE Transactions on Rehabilitation Engineering. 1998;6(3):326–333. doi: 10.1109/86.712231.

- Yuan H, Doud A, Gururajan A, He B. Cortical Imaging of Event-Related (de)Synchronization During Online Control of Brain-Computer Interface Using Minimum-Norm Estimates in Frequency Domain. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2008;16:425–431. doi: 10.1109/TNSRE.2008.2003384.

- Yuan H, Liu T, Szarkowski R, Rios C, Ashe J, et al. Negative covariation between task-related responses in alpha/beta-band activity and BOLD in human sensorimotor cortex: An EEG and fMRI study of motor imagery and movements. NeuroImage. 2010a;49:2596–2606. doi: 10.1016/j.neuroimage.2009.10.028.

- Yuan H, Perdoni C, He B. Relationship between speed and EEG activity during imagined and executed hand movements. Journal of Neural Engineering. 2010b;7:026001. doi: 10.1088/1741-2560/7/2/026001.

- Yuan P, Gao X, Allison B, Wang Y, Bin G, Gao S. A study of the existing problems of estimating the information transfer rate in online brain-computer interfaces. Journal of Neural Engineering. 2013;10:026014. doi: 10.1088/1741-2560/10/2/026014.


Supplementary Materials

Video 5. Subject 5 acquires a ring immediately after take-off, demonstrating fast acclimation to the BCI system at the start of a new run.

Video 1. Subject 1 acquires two targets in 30 seconds. The subject uses left and right turns to approach the rings from behind so that the quadcopter is correctly oriented to make a pass at the next ring.
