Abstract

Objective

The Orthopaedic Error Index for hospitals aims to provide the first national assessment of the relative safety of provision of orthopaedic surgery.

Design

Cross-sectional study (retrospective analysis of records in a database).

Setting

The National Reporting and Learning System is the largest national repository of patient-safety incidents in the world with over eight million error reports. It offers a unique opportunity to develop novel approaches to enhancing patient safety, including investigating the relative safety of different healthcare providers and specialties.

Participants

We extracted all orthopaedic error reports from the system over 1 year (2009–2010).

Outcome measures

The Orthopaedic Error Index was calculated as a sum of the error propensity and severity. All relevant hospitals offering orthopaedic surgery in England were then ranked by this metric to identify possible outliers that warrant further attention.

Results

155 hospitals reported 48 971 orthopaedic-related patient-safety incidents. The mean Orthopaedic Error Index was 7.09/year (SD 2.72); five hospitals were identified as outliers. Three of these units were specialist tertiary hospitals carrying out complex surgery; the remaining two outlier hospitals had unusually high Orthopaedic Error Indexes: mean 14.46 (SD 0.29) and 15.29 (SD 0.51), respectively.

Conclusions

The Orthopaedic Error Index has enabled identification of hospitals that may be putting patients at disproportionate risk of orthopaedic-related iatrogenic harm and which therefore warrant further investigation. It provides the prototype of a summary index of harm to enable surveillance of unsafe care over time across institutions. Further validation and scrutiny of the method will be required to assess its potential to be extended to other hospital specialties in the UK and also internationally to other health systems that have comparable national databases of patient-safety incidents.

Keywords: ORTHOPAEDIC & TRAUMA SURGERY, PUBLIC HEALTH

Strengths and limitations of this study.

Notable strengths of the study are that it drew on a large national dataset, comprising over 48 000 orthopaedic reports, and that the data used are specific to patient safety.

There is a trade-off between the depth and breadth of the analysis.

Existing learning from national patient-safety reporting systems is limited; some of the information is lost in translation and it is unclear whether all patient-safety incidents are indeed reported.

As with any secondary analysis of data, limitations include the absence of specific information needed and the necessity of using proxies.

Biases exist at several levels: reporting of harmful versus non-harmful events and correct classification of categories of harm. Underlying factors for these biases, such as level of patient-safety culture within institutions, were not assessed.

Introduction

The delivery of safer healthcare remains a challenge globally.1 Over a decade ago, the Institute of Medicine published the seminal report, ‘To err is human’,1 which revealed the previously under-recognised high burden of morbidity and mortality associated with iatrogenic harm. This was then followed by the equally influential Crossing the Quality Chasm, which highlighted the need to develop and make greater use of error reporting systems to enable the generation of learning from patient-safety incidents and inform opportunities for system-level interventions to reduce future risks of harm.2 The challenge for healthcare systems globally remains the consistent delivery of safer care and the associated surveillance of safety within an organisation.

Modern-day healthcare involves an array of complicated diagnostic and therapeutic decisions affected by the system within which these occur. Poorly functioning systems and teams have the potential to impact on patient safety. Inevitably, there will be unexpected variation in access, outcomes and quality of care. Whereas some variation is legitimate and indeed desirable (eg, slower surgeons should not be asked to work faster), it is the unwarranted variation that is a major cause of concern to policy-makers and regulators.3 A much-favoured approach for describing this variability is the use of hospital-wide mortality rates. Proponents have argued that these tools provide useful metrics about problems with the quality of inpatient care, uncover system-wide failures and can help patients to choose the safest hospital.4

Patient-safety reporting systems are underused sources of data for the surveillance of harm which enable learning from errors, so that the insights create opportunities for system-level interventions to reduce future risks of harm. Some successes have been reported, for example, in identifying previously undetected risks of new drugs or procedures, but there remain doubts about the wider value of investments in developing and maintaining large-scale incident reporting systems.5 Databases of error reports now exist in many parts of the world, including the UK and the USA.6–8 The UK's National Reporting and Learning System (NRLS) was launched in 2003 and has since accrued over eight million records, making it the most substantial repository of patient-safety incidents in the world. Analyses of this database have revealed risks in a range of clinical areas,9 10 but what has been lacking are high-level, valid summary metrics to allow surveillance of harm across a whole healthcare system in a way that allows, for example, comparison between hospitals, monitoring of time trends and a baseline for assessing and evaluating interventions.

In this paper, we report on the development of one such summary statistic, which, unlike the currently used proxy measures of harm such as Hospital Standardised Mortality Ratios, aims to provide a more direct measure of safety.11 We developed and tested this measure in the field of orthopaedic surgery. This specialty was chosen because it is associated with a relatively high level of harm.12 For example, from 2000 to 2006, an equivalent of US$321 million was paid in adult orthopaedic surgery-related negligence settlements in the UK.13 Similar figures were reported by the Physician Insurers Association of America.14

Methods

Developing a model for an error index

Errors will occur in complex systems such as healthcare, and their frequency will relate to the number of procedures undertaken. Such assumptions are made in other fields of risk. In road traffic accidents, for example, predicted crash frequency is a linear function of average daily traffic.15

A simple measure of error is the frequency of errors occurring in any hospital. However, since frequency is a function of the number of procedures that have been carried out, we deemed the frequency of errors per unit of procedure to be a more appropriate measure.16 We call this the error propensity. In order to calculate this, we extracted data on all orthopaedic reports made by all English hospitals reporting to the UK's NRLS over a 12-month period from 1 April 2009 to 31 March 2010. In parallel, to estimate the error propensity, we approximated the total number of orthopaedic procedures carried out in each hospital using data from the national Hospital Episode Statistics (HES; 2009–2010) database,17 which is a mandatory national database of all patient visits to National Health Service (NHS) hospitals in England. We defined an orthopaedic procedure as any patient entry that involves an Office of Population Censuses and Surveys Classification of Interventions and Procedures code in the OP1 field of HES with a treatment specialty code of 110.
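The error propensity described above is a simple rate, and a minimal Python sketch may help make the calculation concrete. The function name, the scaling to 1000 procedures and the hospital counts are illustrative assumptions; they are not taken from the study data or from the derivation in the supplementary appendix.

```python
def error_propensity(n_reports: int, n_procedures: int, per: int = 1000) -> float:
    """Return the number of error reports per `per` procedures for one hospital."""
    if n_procedures <= 0:
        raise ValueError("n_procedures must be positive")
    return per * n_reports / n_procedures


# Hypothetical (reports, procedures) counts, for illustration only.
hospitals = {
    "Hospital A": (300, 8000),
    "Hospital B": (150, 2500),
}

propensities = {
    name: error_propensity(reports, procedures)
    for name, (reports, procedures) in hospitals.items()
}

for name, value in propensities.items():
    print(f"{name}: {value:.1f} reports per 1000 procedures")
```

In this sketch, the smaller hospital has fewer reports in absolute terms but a higher propensity, which is why a rate rather than a raw count is used for comparison.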

A second component of the Orthopaedic Error Index (OEI) reflects the impact the error has on the patients, that is, how much harm it caused. We call this the error severity, which is based on categories of harm. Harm was defined by user self-reports; the degree of harm was classified as either: no harm, low harm (minimal harm—patient(s) required extra observation or minor treatment), moderate harm (short-term harm—patient(s) required further treatment, or procedure), severe harm (permanent or long-term harm) or death. Further details about the structure of the NRLS are provided in online supplementary appendix 1. We created our summary statistic using principles laid out in the Standards for Statistical Models used for Public Reporting of Health Outcomes.18

The OEI comprised the two main domains of error: the propensity of errors (P) and the severity of harm (S). The mathematical derivation of the OEI is given in online supplementary appendix 2.

Analyses

We estimated P, S and OEI and their SEs for each hospital using STATA V.11 (StataCorp 2011; Stata Statistical Software: Release 12, College Station, Texas, USA: StataCorp LP). Since P, S and the OEI deviated from normality, they were first transformed: OEI using a logarithmic transformation and propensity and severity by taking their reciprocal values. We sought to identify outliers by creating control lines at one, two and three SDs. We plotted OEI (per 1000 procedures) against number of procedures and superimposed lines representing the mean, ±2 SD and ±3 SD of predicted OEI values. Funnel plots provide a visual representation of the data and have been used widely in health services research to compare institutions. We defined outliers as those with an OEI outside the range of µ±3σ, where µ is the mean and σ is the SD for the whole sample. These hospitals require closer scrutiny.19
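The outlier rule above (log-transform the OEI, then flag values outside µ±3σ for the whole sample) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the analysis in this paper was run in Stata, the function name and the OEI values below are hypothetical, and the sketch does not reproduce the funnel plot or the predicted OEI values.

```python
import math
import statistics


def flag_outliers(oei_by_hospital: dict[str, float], k: float = 3.0) -> list[str]:
    """Return hospitals whose log-transformed OEI lies outside mean +/- k SD."""
    logs = {name: math.log(value) for name, value in oei_by_hospital.items()}
    mu = statistics.mean(logs.values())
    sigma = statistics.stdev(logs.values())  # sample SD across all hospitals
    lower, upper = mu - k * sigma, mu + k * sigma
    return sorted(name for name, x in logs.items() if x < lower or x > upper)


# Hypothetical OEI values: 20 typical hospitals and one extreme value.
oei = {f"H{i:02d}": (6.0 if i % 2 == 0 else 8.0) for i in range(20)}
oei["H20"] = 40.0

outliers = flag_outliers(oei)
print(outliers)
```

A flagged hospital is a candidate for closer scrutiny rather than a confirmed finding; the threshold `k` is exposed as a parameter so that the two-SD control line can be inspected in the same way.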

Results

OEI for all hospitals in England

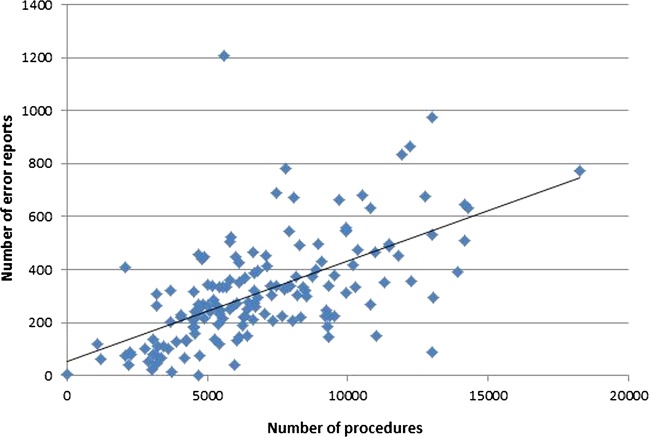

In England, 155 NHS hospitals reported 48 971 patient-safety incidents in the specialty of trauma and orthopaedic surgery during 2009–2010, with varying degrees of harm: 34 530/48 971 (70.5%) no harm; 11 529/48 971 (23.5%) low harm; 2632/48 971 (5.4%) moderate harm; 217/48 971 (0.4%) severe harm and 63/48 971 (0.1%) deaths. The mean hospital OEI was 7.09 (SD 2.72). The correlation between the number of procedures and the number of error reports was 0.40; an increase of 1000 orthopaedic procedures was associated with approximately 38 additional error reports (figure 1).

Figure 1. Relationship between number of error reports and volume of procedures.
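The breakdown of incidents by degree of harm reported in this section can be checked with a short calculation. The sketch below uses only the counts stated in the text; the variable names are illustrative.

```python
# Counts of reported incidents by degree of harm, as stated in the Results.
harm_counts = {
    "no harm": 34530,
    "low harm": 11529,
    "moderate harm": 2632,
    "severe harm": 217,
    "death": 63,
}

total = sum(harm_counts.values())

# Percentage share of each harm category, rounded to one decimal place.
shares = {
    category: round(100 * count / total, 1)
    for category, count in harm_counts.items()
}

print(total)
for category, share in shares.items():
    print(f"{category}: {share}%")
```

The categories sum to the 48 971 incidents reported, and the rounded shares match the percentages given in the text.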

Identifying outlier hospitals

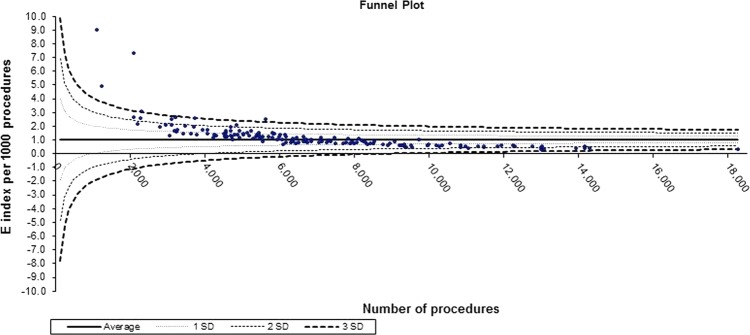

Among the 155 hospitals, 5 lay outside the prespecified control limits (figure 2). These were hospitals that had relatively small numbers of procedures, but high OEI values. Of note, there is an almost linear association with larger hospitals having fewer errors.

Figure 2. Orthopaedic Error Index for all hospitals in England.

Discussion

The OEI is the first attempt to develop automated procedures to interrogate a national database of patient-safety incidents in order to identify hospitals at disproportionate risk of iatrogenic harm. Applying this tool to all hospitals providing orthopaedic care identified five outlying hospitals: three tertiary care providers and two secondary care providers. While the higher rates may be expected because of case-mix considerations in the tertiary care sites, such deviations are not to be expected in the secondary care providers.

One of these two secondary care providers has subsequently been highlighted nationally as providing substandard care due to failures in administration, management and nursing.20

At present, the NHS and other health systems internationally lack direct indicators of safety. Mortality is a proxy measure and cannot be used in isolation to assess the safety of a hospital. Opponents argue that the construction of Hospital Standardised Mortality Ratios is flawed as the index is unable to discriminate between inevitable and preventable deaths, and that the huge variability in care suggested by these metrics cannot be accounted for by variable quality of care alone.11 Although we did not distinguish between avoidable and non-avoidable incidents in our analysis, previous work by the group has shown that most orthopaedic incidents that result in harm could have been prevented if safety measures had been implemented.21

The process of identifying outliers could be associated with stigma and extensive resource allocation, both financial and reputational.11 Nevertheless, in organisations that foster a culture of reporting and learning, this method should be viewed as one of the many tools that need to be used in parallel to understand how unsafe the care is in that particular organisation. The use of outlier analysis in isolation does not ensure safe care; it merely acts as a trigger for further checks. One of the hospitals that we identified as an outlier, and that had been the subject of national inquiries, was also noted to have a high Hospital Standardised Mortality Ratio; an excess of up to 1200 deaths occurred here.22 We have thus shown that a patient-safety reporting system, which until recently has been used as a repository collecting reports of errors, can be used to identify institutions that may pose a disproportionate risk to patient safety. However, the work requires greater scrutiny and validation; the purpose of this undertaking was to see if national patient-safety reporting systems can be used for surveillance of unsafe care. As such, we have created a novel metric, the OEI, which is a direct marker of patient safety in individual hospitals. The impetus behind this idea has been the occurrence of several high-profile cases at hospitals such as Alder Hey, Mid-Staffordshire and Stockport NHS hospitals, where a catalogue of medical errors occurred that resulted in varying degrees of harm to patients.23 Most people would agree that in an era of large datasets, regulators and advisory bodies should have mechanisms to identify hospitals that are struggling to deliver high-quality care at an earlier stage so that corrective responses can be initiated. Monitoring trends in unsafe care over time would be invaluable: in addition to identifying outliers, it would help in evaluating the effect of safety initiatives and of the case-mix of patients during different periods of the year. Despite alarming cases of unsafe care, it appears that the NHS is still ill-equipped to identify high-risk hospitals through early warning systems.24 More recently, attempts have been made in the UK to identify failing hospitals by using nationwide surveillance tools which collect data prospectively: the NHS Safety Thermometer, which collects data on four domains (venous thromboembolism, urinary tract infections, pressure ulcers and falls);25 the National Surgical Quality Improvement Program, which collects measures of outcomes to improve surgical care;26 and the Global Trigger Tool, which measures adverse events.27 However, not all hospitals use these tools. Ours is the first tool that uses data from an entire national healthcare system.

Strengths and limitations

Errors can be caused by active failures (for example, mistakes) and by latent conditions (such as failure of system processes).28 Usually primary data from small, in-depth, qualitative inquiries are used to identify factors that contributed to the errors. The main strength of this approach is that data are specific for patient safety, but a major limitation is the trade-off between the depth and breadth of the analysis. We sought to investigate the use of routinely collected patient-safety incident reports to create a numerical index that could help to provide a complementary perspective by supporting the monitoring of the overall system-wide safety of healthcare provision. Other key strengths of our work include drawing on a large national dataset, comprising over 48 000 orthopaedic reports, and utilising the full body of data reporting on patient-safety incidents spanning the full spectrum of severity from no harm to death. At present, the learning from national patient-safety reporting systems is limited; some of the information is lost in translation and it is unclear whether all patient-safety incidents are indeed reported.29 The sensitivity of the NRLS at picking up errors has been questioned in the past;30 however, the low power of the study limits generalisability of results to the entire NRLS. Furthermore, all hospitals use the same mechanism of reporting incidents, so the effects of bias and uncertainty are limited with the OEI. Nevertheless, this should not deter exploratory work such as ours. We are also cognisant of the fact that there is likely to be variation in reporting according to the patient-safety culture within hospitals, so a hospital with a high OEI may be one that has an open culture and encourages staff to report patient-safety incidents.31 Of equal importance is the fact that the NRLS was a voluntary reporting system until April 2010, when mandatory reporting was introduced for serious untoward incidents.32 In figure 2, we showed that large hospitals (by number of orthopaedic procedures) are associated with fewer errors. This must be interpreted with caution as we have not been able to adjust for patient or procedure case-mix due to the paucity and anonymity of the data. Based on work elsewhere, it has been suggested that specialised surgical services should be provided in tertiary hospitals, although geographical or logistical impediments may occur.33 We cannot make this claim based on our findings. Some local systems of risk management in hospitals opt for root cause analyses to develop local solutions to mitigate harm to patients, but these are not shared nationally, and limited information may be provided to national reporting systems. These systems rely on patient-safety experts methodically trawling through patient-safety incidents by severity and frequency, thereby leading to the production of quarterly reports, alerts and rapid response solutions.34 Such analyses are time-consuming and, as the number of reports rapidly increases, may in the future be unsustainable.35 36 There is also a non-cohesive approach globally to identifying unsafe hospitals. The multitude of quality indicators that are proxy measures of unsafe care is overwhelming. The OEI is a surveillance tool that can enable direct evaluation of safer care in hospitals. It is, we believe, a novel benchmarking tool to assess patient safety across hospitals using a large patient-safety reporting system.

The main limitations are those inherent to any secondary analysis of data, including the absence of specific information needed and the necessity of using proxies. Ideally, we would have preferred to link HES data to corresponding NRLS incidents. At present, this is not possible, as the latter does not allow for patient identification via NHS identification numbers. HES data will also give only an approximation of orthopaedic and trauma procedures due to coding inaccuracies. However, these limitations are, we believe, largely mitigated in the present analysis by the fact that the data were collected to study error, and we refer to our analyses as secondary only because the analysis approach we employed was unanticipated when the study was designed. The OEI nonetheless has several potential limitations. Reporting of patient-safety incidents is a subjective exercise and variation in the dataset is bound to exist. Biases also exist at several levels: reporting of harmful versus non-harmful events and correct classification of categories of harm.37 Underlying factors for these biases, such as the level of patient-safety culture within institutions, were not assessed.38 Further work on measuring the extent and likely impact of such biases is therefore now needed.

Conclusions

With the proliferation of patient-safety reporting systems around the world and an ever-increasing number of patient-safety incidents reported to them, sophisticated analytical techniques are required to identify hospitals that need to strengthen their emphasis on patient safety. This is the first time, to our knowledge, that a surveillance mechanism for safety has been proposed using a reporting system.

Footnotes

Contributors: SSP and GN conceived the study design, and analysed and interpreted the data. They drafted earlier versions of the article. AC-S, SJ and BP analysed and interpreted the data and helped to draft subsequent versions of the article. GP, SLD and AS analysed and interpreted the data and revised it critically for important intellectual content. All authors approved the final version to be published.

Funding: This research received no specific grant from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: The data used for this study can be requested from NHS England by interested researchers and a case by case evaluation is made. No additional unpublished data rest with the authors.

References

- 1. Kohn LT, Corrigan JM, Donaldson MS. To err is human: building a safer health system. Washington: National Academy Press, 1999

- 2. Institute of Medicine. Crossing the quality chasm. Washington: National Academy Press, 2001

- 3. The NHS Confederation. Variation in healthcare—does it matter and can anything be done? http://www.nhsconfed.org/Publications/Documents/Variation%20in%20healthcare.pdf (accessed 17 May 2013)

- 4. Bottle A, Jarman B, Aylin P. Strengths and weaknesses of hospital standardised mortality ratios. BMJ 2011;342:c7116

- 5. Leape LL. Reporting of adverse events. N Engl J Med 2002;347:1633–8

- 6. Sheikh A, Hurwitz B. A national database of medical error. J R Soc Med 1999;92:554–5

- 7. Panesar SS, Cleary K, Sheikh A. Reflections on the National Patient Safety Agency's database of medical errors. J R Soc Med 2009;102:256–8

- 8. Hickner J, Zafar A, Kuo GM, et al. Field test results of a new ambulatory care medication error and adverse drug event reporting system—MEADERS. Ann Fam Med 2010;8:517–25

- 9. Panesar SS, Cleary K, Bhandari M, et al. To cement or not in hip fracture surgery? Lancet 2009;374:1047–9

- 10. Cresswell KM, Sheikh A. Information technology-based approaches to reducing repeat drug exposure in patients with known drug allergies. J Allergy Clin Immunol 2008;121:1112–17

- 11. Lilford R, Pronovost P. Using hospital mortality rates to judge hospital performance: a bad idea that just won't go away. BMJ 2010;340:955–7

- 12. Panesar SS, Carson-Stevens A, Mann BS, et al. Mortality as an indicator of patient safety in orthopaedics: lessons from qualitative analysis of a database of medical errors. BMC Musculoskelet Disord 2012;13:93

- 13. Atrey A, Gupte CM, Corbett SA. Review of successful litigation against English health trusts in the treatment of adults with orthopaedic pathology: clinical governance lessons learned. J Bone Joint Surg Am 2010;92:e36

- 14. Parikh PD. Key trends for orthopedic medical liability claims. http://www.aaos.org/news/aaosnow/nov09/managing4.asp (accessed 17 May 2013)

- 15. Task Force on Development of the Highway Safety Manual. Highway safety manual. Washington, DC: American Association of State Highway and Transportation Officials, 2010, Fig 10-3

- 16. Morse JM, Morse RM. Calculating fall rates: methodological concerns. QRB Qual Rev Bull 1988;14:369–71

- 17. Hospital Episode Statistics. http://www.hesonline.nhs.uk/ (accessed 17 May 2013)

- 18. Krumholz HM, Brindis RG, Brush JE, et al. Standards for statistical models used for public reporting of health outcomes: an American Heart Association Scientific Statement from the Quality of Care and Outcomes Research Interdisciplinary Writing Group. Circulation 2006;113:456–62

- 19. Spiegelhalter DJ. Funnel plots for comparing institutional performance. Stat Med 2005;24:1185–202

- 20. Robert Francis Inquiry Report into Mid Staffordshire NHS Trust. http://webarchive.nationalarchives.gov.uk/20130107105354/http://www.dh.gov.uk/en/Publicationsandstatistics/Publications/PublicationsPolicyAndGuidance/DH_113018 (accessed 17 May 2013)

- 21. Panesar SS, Noble DJ, Mirza SB, et al. Can the surgical checklist reduce the risk of wrong site surgery in orthopaedics?—can the checklist help? Supporting evidence from analysis of a national patient incident reporting system. J Orthop Surg Res 2011;6:18

- 22. Dyer C. Head of Healthcare Commission excised figures on excess deaths from Mid Staffordshire report. BMJ 2011;342:d2900

- 23. Delamothe T. Repeat after me—“Mid-Staffordshire”. BMJ 2010;340:c188

- 24. Dyer C. Regulators are likely to miss failing trusts now, says Mid Staffordshire head. BMJ 2011;342:d1778

- 25. The NHS Safety Thermometer. http://www.ic.nhs.uk/services/nhs-safety-thermometer (accessed 17 May 2013)

- 26. American College of Surgeons. National Surgical Quality Improvement Program. http://site.acsnsqip.org/ (accessed 17 May 2013)

- 27. IHI Global Trigger Tool. http://www.ihi.org/knowledge/Pages/Tools/IHIGlobalTriggerToolforMeasuringAEs.aspx (accessed 17 May 2013)

- 28. Reason J. Human error: models and management. BMJ 2000;320:768–70

- 29. Lankshear A, Lowson K, Harden J, et al. Making patients safer: nurses’ responses to patient safety alerts. J Adv Nurs 2008;63:567–75

- 30. Sari AB, Sheldon TA, Cracknell A, et al. Sensitivity of routine system for reporting patient safety incidents in an NHS hospital: retrospective patient case note review. BMJ 2007;334:79

- 31. National Patient Safety Agency. Seven steps to patient safety. 2004. http://www.nrls.npsa.nhs.uk/resources/collections/seven-steps-to-patient-safety/ (accessed 16 Sep 2013)

- 32. National Patient Safety Agency. About reporting patient safety incidents. http://www.nrls.npsa.nhs.uk/report-a-patient-safety-incident/about-reporting-patient-safety-incidents/ (accessed 16 Sep 2013)

- 33. Shervin N, Rubash HE, Katz JN. Orthopaedic procedure volume and patient outcomes: a systematic literature review. Clin Orthop Relat Res 2007;457:35–41

- 34. National Patient Safety Agency. National Reporting and Learning Service. 2010. http://www.nrls.npsa.nhs.uk/ (accessed 17 May 2013)

- 35. Lamont T, Scarpello J. National Patient Safety Agency: combining stories with statistics to minimise harm. BMJ 2009;339:b4489

- 36. Catchpole K, Bell MD, Johnson S. Safety in anaesthesia: a study of 12,606 reported incidents from the UK National Reporting and Learning System. Anaesthesia 2008;63:340–6

- 37. Heinrich HW. Industrial accident prevention: a scientific approach. New York and London: McGraw-Hill Public Company, 1941

- 38. Department of Health. An organisation with memory. London: The Stationery Office, 2000
