Abstract

Numerous studies have provided clues about the ontogeny of lateralization of auditory processing in humans, but most have employed specific subtypes of stimuli and/or have assessed responses in discrete temporal windows. The present study used near-infrared spectroscopy (NIRS) to establish changes in hemodynamic activity in the neocortex of preverbal infants (aged 4-11 months) while they were exposed to two distinct types of complex auditory stimuli (full sentences and musical phrases). Measurements were taken from bilateral temporal regions, including both anterior and posterior superior temporal gyri. When the infant sample was treated as a homogeneous group, no significant effects emerged for stimulus type. However, when infants' hemodynamic responses were categorized according to their overall changes in volume, two very clear neurophysiological patterns emerged. A high responder group showed a pattern of early and increasing activation, primarily in the left hemisphere, similar to that observed in comparable studies with adults. In contrast, a low responder group showed a pattern of gradual decreases in activation over time. Although age did track with responder type, no significant differences between these groups emerged for stimulus type, suggesting that the high versus low responder characterization generalizes across classes of auditory stimuli. These results highlight a new way to conceptualize the variable cortical blood flow patterns that are frequently observed across infants and stimuli, with hemodynamic response volumes potentially serving as an early indicator of developmental changes in auditory processing sensitivity.

Keywords: Near-Infrared Spectroscopy, Infants, Music, Speech, Lateralization

1.1 Introduction

Music and speech are two of the most common complex auditory stimuli in our environment. As noted by McMullen and Saffran (2004), to adult listeners, a rock concert and an oral debate are very different auditory experiences, with each carrying very different messages. However, from the inexperienced perspective of an infant, these two sets of complex auditory stimuli have many commonalities. Both music and speech unfold in a linear fashion over time, and are processed by our perceptual systems as coherent sets of dynamic frequencies. In addition, both speech and music are composed of individual components (i.e., phonemes; notes), with different cultures utilizing differing subsets of these “alphabets” (for reviews, see Besson and Schon, 2006; Levitin and Tirovolas, 2009; McMullen and Saffran, 2004).

Behavioral research has demonstrated that even newborns are sensitive to the auditory cues specific to speech, including its phonological structure and rhythm (Christophe and Dupoux, 1996; Mehler, et al., 1988). Newborns are also sensitive to a range of auditory cues important to music processing, including meter (Bergeson and Trehub, 2002; Halit, et al., 2003; Hannon and Trehub, 2005), pitch (Trehub, 2000; Trehub, 2001), timbre (Trehub, et al., 1990) and tempo (Baruch and Drake, 1997; Drake and Bertrand, 2001; Trehub and Thorpe, 1989). Furthermore, infants appear to “co-opt” auditory cues important to music processing as an aid in speech processing and, ultimately, language learning. For example, Bergeson and Trehub (2002) showed that pitch and tempo are both critical to infants' language development and music processing. Jusczyk (1999) and Trehub (2003) separately showed that infants gather information about word and phrase boundaries (and possibly higher level semantic information) through auditory cues typically associated with music processing (e.g., melody, rhythm, timbre, meter). Saffran and colleagues demonstrated that infants use these same auditory cues to distinguish differences in the statistical structure of musical tones (Saffran, et al., 1999), as well as the statistical structure of words (Saffran, et al., 1996). Collectively, these results suggest that a set of auditory cues common to music and speech may facilitate development of the auditory processing system in early infancy.

In adults, imaging data suggest that homologous brain structures are involved in processing speech and music, with the left hemisphere specialized for processing temporal information, and the right hemisphere specialized for processing spectral information (Brown, et al., 2006; Griffiths, et al., 1998; Patterson, et al., 2002; Schneider, et al., 2002; Zatorre, et al., 2002; Zatorre, et al., 1994; Zatorre, et al., 1992). The literature provides a less coherent view of how music and speech are processed—and how this processing develops—in infancy.

Several studies (e.g., Bortfeld, et al., 2009; Dehaene-Lambertz, et al., 2002; for a review, see Minagawa-Kawai, et al., 2011a; Peña, et al., 2003) have focused on how infants process speech stimuli, from single phonemes to running speech. Understandably, the comparison conditions in these studies tend to be other forms of speech (e.g., backwards; native versus non-native) or silence, and there are more data from older infants than from very young infants. Experimental paradigms vary from lab to lab as well. For example, Taga and Asakawa (2007) exposed monolingual Japanese 2- to 4-month-olds to unfamiliar native words and found bilateral temporal activation in response to them. Notably, the majority of stimulus presentation in this case was audiovisual, as the stimuli were presented at different alternating and overlapping intervals with a flashing (4 Hz) checkerboard. In contrast, Minagawa-Kawai et al. (2011b) exposed monolingual Japanese 4-month-olds to short sentences (obtained from auditory-only film dialogs or a speech database) that were delivered by a male or female speaker. Analyses revealed left-lateralized hemodynamic activity for native compared with non-native speech conditions in this unimodal presentation format. Finally, in an important early fMRI study testing 2-3-month-olds, Dehaene-Lambertz and colleagues (2002) observed activation to forward speech that was concentrated in left hemisphere regions comparable to those observed in adult fMRI studies, as well as evidence of discrimination of forward from backward speech, some of which depended on whether infants were in an awake or asleep state. In other words, despite some variability in the data that is arguably attributable to experimental artifacts, findings point most consistently to a left-lateralized response to speech from an early age. Further evidence in support of this includes a series of NIRS studies from our own lab, demonstrating increased activation in the left relative to the right temporal regions in response to native speech stimuli in older (6- to 9-month-old) monolingual infants (Bortfeld et al., 2009).

On the other hand, evidence of other forms of early auditory processing specialization (e.g., to music) is sparse and, to the degree it exists, the findings have been substantially more mixed. While a recent fMRI study by Perani and colleagues (2010) found right hemisphere specialization for culture-specific music in newborns, there are several other findings that contradict this. For example, using NIRS, Sakatani et al. (1999) recorded blood flow changes in the bilateral frontal regions of newborns. Although many of the infants showed typical hemodynamic responses (i.e., increases in oxygenated hemoglobin) to what was described as popular piano music, a subset of the infants showed an atypical response such that their deoxygenated hemoglobin (HbR) increased in addition to the typically observed increase in oxygenated hemoglobin. Whether this atypical pattern of responding was the product of immature processing on the part of the infants or reflects problems with the data acquisition system itself is unclear. In favor of the former interpretation, Zaramella and colleagues (2001) observed that a subset of newborns exposed to a tonal sweep showed increases in deoxygenated hemoglobin along with oxygenated hemoglobin in bilateral temporal sites. No significant differences in overall levels of oxygenated hemoglobin were reported for those infants whose levels of HbR increased versus those whose levels decreased. However, although tonal sweeps provide better experimental control, they are not particularly musical.

In a study more relevant to our current focus, Kotilahti et al. (2010) exposed newborns to both speech (i.e., excerpts from a story) and music (i.e., a piano concerto) while they recorded changes in blood flow in bilateral temporal sites. Group analyses revealed that neither speech nor music elicited changes in blood flow in the right hemisphere that were significantly different from zero. In the left hemisphere, only speech elicited a hemodynamic response significantly greater than zero (and, despite this, a lateralization index revealed no difference in overall activation between the music and speech conditions). Of course, while in utero, infants are exposed much more consistently to speech (e.g., from their mother) than to any other externally produced auditory stimulus (e.g., music), so this finding (i.e., some laterality for speech, but none for music) is perhaps unsurprising. Recent fMRI data from Dehaene-Lambertz and colleagues (2010) support this interpretation. Although these researchers did not find significant laterality effects (right or left) in 2-3-month-olds in response to music, they did find a (left) laterality effect for speech. However, activity observed in the right hemisphere was significantly greater for music than for speech.

In sum, there remain discrepancies in speech laterality measures in the current literature. These can be at least partially explained by differences across experimental methodologies, infant ages, cortical measurement locations, and stimuli used (e.g., words, syllables, sentences). However, the nature of responses to different classes of complex auditory stimuli within the same infants remains unclear. The present study addressed this by comparing cortical responses in infants of different ages to speech and to music across the course of the same experimental session. Our goal was to control for this variety of confounds by using NIRS to test infants of different ages while they listened to equally complex strings of speech and music, along the lines of Dehaene-Lambertz and colleagues' (2010) fMRI study of two-month-old infants. We anticipated that by including a broad range of ages, we would see a laterality effect for music emerge over developmental time.

1.1.1 The present research

The present study was designed to establish the degree to which hemodynamic responses to different classes of complex auditory stimuli differ within individual infants at various stages of early development. Near-infrared spectroscopy (NIRS) was used to record cortical activity while infants between the ages of 4 and 11 months were exposed to both music and speech. By combining music and speech stimuli in the same experimental session, we were able to compare infants' ability to track the two types of complex auditory stimuli at different points in developmental time. We recorded from bilateral temporal regions, focusing specifically on areas T3 and T4 using the EEG 10-20 system, because past work from our own lab has demonstrated that speech stimuli elicit blood flow changes in these sites specifically in older (e.g., 6- to 9-month-old) infants (e.g., see Bortfeld, et al., 2009; Bortfeld, et al., 2007). Moreover, by recording from bilateral temporal regions, such as the middle and superior temporal gyri (for anatomical locations that correspond to these 10-20 locations, see Okamoto, et al., 2004), we could more readily compare the results from our study with those from other studies focused on comparable areas (e.g., Zaramella et al., 2001; Kotilahti et al., 2010). The wide age range was chosen because there is a dearth of research examining infants' cortical responses to both music and speech in the same experimental session, and understanding the developmental trajectory of auditory processing lateralization for different forms of auditory stimuli will help us understand the neural underpinnings that support such processing in typically developing populations.

1.2 Methods

1.2.1 Participants

Participants were 41 infants (19 males, 22 females) between the ages of 4 and 11 months. Four additional infants were tested but eliminated from the sample because of failure to obtain more than one useable block of trials (N = 1) or optical data from more than one channel for both conditions (N = 3).

Infants' names were obtained from birth announcements in local newspapers and commercially produced lists, and infants and parents were offered a new toy as compensation for their participation. Informed consent was obtained from the parents before testing began. Prior to testing, it was established based on caretaker report whether infants were of at least 37 gestational weeks at time of birth, had passed newborn hearing screening tests, and had no subsequent history of hearing or visual impairment.

1.2.2 Apparatus

During the experiment, each infant sat on a caretaker's lap in a testing booth. Infants were positioned facing a 53-cm flat panel computer monitor (Macintosh G4) 76 cm away (28.1° visual angle at infants' viewing distance based on a 36 cm wide screen). The monitor was positioned on a shelf, immediately under which audio speakers and a low-light video camera were positioned, oriented towards infants. The monitor was framed by a façade that functioned to conceal the rest of the equipment. The façade was made of three sections. The upper third was a black curtain that covered the wall from side to side and dropped down 84 cm from the ceiling. The middle section, measuring 152 cm (wall to wall horizontally) × 69 cm high, was constructed of plywood and covered with black cloth. The plywood had a rectangular hole cut out of its center that coincided with the size of the viewing surface of the computer monitor (48 cm diagonal). A black curtain hung from the bottom edge of the middle section to the floor. The testing area was separated by a sound-reducing curtain from a control area, where an experimenter operated the NIRS instrument out of the infant's view. Fiber optic cables (15 m each) extended from the instrument to the testing booth and into a custom headband on the infant's head. The cables were bundled together and secured on the wall just over the parent's right shoulder.

The NIRS instrument consisted of three major components: (1) two fiber optic cables that delivered near-infrared light to the scalp of the participant (i.e., emitter fibers); (2) four fiber optic cables that detected the diffusely reflected light at the scalp and transmitted it to the receiver (i.e., detector fibers); and (3) an electronic control box that served both as the source of the near-infrared light and the receiver of the reflected light. The signals received by the electronic control box were processed and relayed to a DELL Inspiron 7000™ laptop computer. A custom computer program recorded and analyzed the signal.

The imaging device used in these studies produced light at 690 and 830 nm wavelengths with two laser-emitting diodes (Boas, et al., 2002). Laser power emitted from the end of the fiber was 4 mW. Light was square wave modulated at audio frequencies of approximately 4 to 12 kHz. Each laser had a unique frequency so that synchronous detection could uniquely identify each laser source from the photo detector signal. Any ambient illumination that occurred during the experiment (e.g., from the visual stimuli) did not interfere with the laser signals because environmental light sources modulate at a significantly different frequency. No detector saturation occurred during the experiment.
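The frequency-encoding idea described above can be illustrated with a small simulation. This is only a sketch under stated assumptions, not the instrument's actual signal chain: the two modulation frequencies (4 kHz and 6 kHz), the sample rate, the amplitudes, and the helper names `square_wave` and `demodulate` are all hypothetical. It shows that multiplying the summed detector signal by one source's reference wave and averaging recovers that source's amplitude while rejecting the other source and any constant ambient light.

```python
def square_wave(n_samples, samples_per_period):
    """Return a +1/-1 square wave with a 50% duty cycle."""
    half = samples_per_period // 2
    return [1 if (n % samples_per_period) < half else -1
            for n in range(n_samples)]


def demodulate(detector, reference):
    """Synchronous detection: correlate the detector signal with a reference.

    A source switched on and off by `reference` contributes amplitude / 2 to
    the mean of detector * reference, hence the factor of 2.
    """
    n = len(detector)
    return 2.0 * sum(d * r for d, r in zip(detector, reference)) / n


# Hypothetical settings chosen so both waves fit a whole number of periods.
SAMPLE_RATE_HZ = 240_000
N_SAMPLES = 1_200  # 5 ms of signal

ref_a = square_wave(N_SAMPLES, SAMPLE_RATE_HZ // 4_000)  # source A at 4 kHz
ref_b = square_wave(N_SAMPLES, SAMPLE_RATE_HZ // 6_000)  # source B at 6 kHz

# Hypothetical light levels reaching one detector (arbitrary units).
amp_a, amp_b, ambient = 0.8, 0.3, 5.0

# Each laser is either on (reference = +1) or off (reference = -1);
# the detector sees both lasers plus unmodulated ambient light.
detector = [amp_a * (a + 1) / 2 + amp_b * (b + 1) / 2 + ambient
            for a, b in zip(ref_a, ref_b)]

recovered_a = demodulate(detector, ref_a)
recovered_b = demodulate(detector, ref_b)
print(recovered_a, recovered_b)
```

The separation works here because the two reference waves are uncorrelated over the averaging window and each averages to zero, so the constant ambient term drops out.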

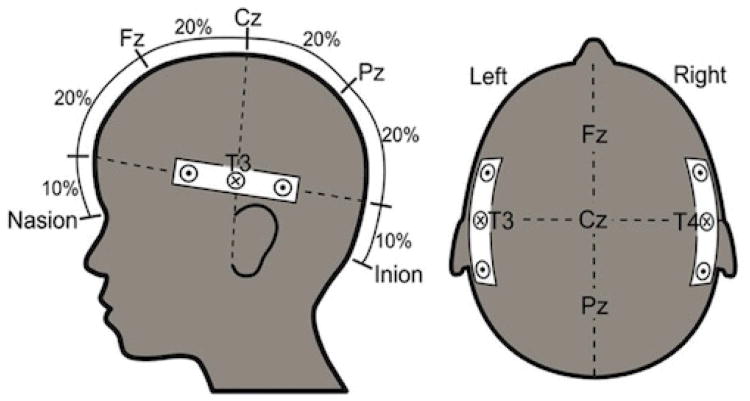

The light was delivered via fiber optic cables (i.e., fibers), each 1 mm in diameter and 15 m in length. These originated at the imaging device and terminated in the headband that was placed on the infant's head. The headband was made of elasticized terry-cloth and was fitted with the two light-emitting and four light-detecting fibers. These were grouped into two emitter/detector fiber sets (i.e., optical probes), each containing two detector fibers placed at 2 cm distance on either side of the central emitter fiber (Figure 1). One optical probe was used to deliver near-infrared light to the left temporal region at approximately position T3 according to the International 10-20 system, and the other delivered light to the right temporal region at approximately position T4. According to the adult literature, these locations correspond to middle temporal and superior temporal gyri (Okamoto, et al., 2004). NIRS data were analyzed by channel within cortical region, where one paired emitter and detector fiber within each optical probe constituted a channel and each optical probe contained two channels. Responses from the four channels were compared across cortical regions and stimulus conditions.

Figure 1.

Probe layout with emitter probe (x) and two detector probes at 2 cm on either side, with probes localized on or near T3 and T4 to permit simultaneous measurements of the left and right auditory cortex.

1.2.3 Stimuli and Design

The stimuli consisted of audio recordings with accompanying visual animations to maintain infants' attention and to provide a looking time metric to quantify attention. We presented infants with five stimulus blocks, with each block composed of two stimulus trials interspersed with two rest periods. Infants first saw a blank (dark) screen for 20 seconds before stimulus presentation began. Stimulus presentation proceeded as follows: 20 seconds of music with visual animation (music trial), 10 seconds of silence with a blank screen, 20 seconds of speech with visual animation (speech trial), 10 seconds of silence with a blank screen. Each block thus consisted of 20 seconds music + 10 seconds rest + 20 seconds speech + 10 seconds rest, in that order. This sequence repeated five times over the course of the experiment. We employed this alternating design to compare dynamic changes in cerebral blood flow during the course of exposure to alternating complex auditory events (e.g., music and speech). The alternating nature of the music and speech stimuli made it unnecessary to further counterbalance the design for familiarity and/or novelty effects. Our design is distinct from previous studies using NIRS to track infant hemodynamics during auditory processing. First, many of these have isolated aspects of the speech signal, such as using single syllables or tones (Homae, et al., 2006; Minagawa-Kawai, et al., 2004). Others have used complex (i.e., running) speech samples (e.g., Bortfeld, et al., 2009; Bortfeld, et al., 2007; Minagawa-Kawai, et al., 2011b) in which the key contrast in the stimuli was speech-based (i.e., silence versus speech; native versus non-native speech). 
Although these kinds of comparisons are critical to answering specific questions about the components that contribute to the structure inherent in speech itself, these studies did not capture the full extent of processing involved in extraction of auditory structure in a continuous stream of speech as it contrasts with another, distinct class of complex sound, such as music. Here, we contrast these two forms of auditory stimuli: running speech and running music.
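The block structure described above can be laid out as a simple timeline. The following is a minimal sketch that uses only the durations stated in the text; the function name `build_schedule` and the tuple layout are illustrative assumptions.

```python
# Durations in seconds, taken from the design description.
INITIAL_BLANK_S = 20
TRIAL_S = 20
REST_S = 10
N_BLOCKS = 5


def build_schedule():
    """Return (events, total_duration_s).

    Each event is (block, condition, onset_s, offset_s). Every trial is
    followed by a silent rest period with a blank screen.
    """
    events = []
    t = INITIAL_BLANK_S
    for block in range(1, N_BLOCKS + 1):
        for condition in ("music", "speech"):  # fixed order within a block
            events.append((block, condition, t, t + TRIAL_S))
            t += TRIAL_S + REST_S
    return events, t


schedule, total_s = build_schedule()
for block, condition, onset, offset in schedule:
    print(f"block {block}: {condition:6s} {onset:3d}-{offset:3d} s")
print("total run time:", total_s, "s")
```

Under these durations the session comprises ten stimulus trials, and every stimulus trial is preceded by a blank-screen period that can serve as its baseline.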

The speech stimuli were recorded by a female speaker reading segments from children's stories using highly animated, infant-directed speech. The content of each speech segment was different, but all contained speech from the same speaker with the same animated intonational variation and positive affective tone. Transcriptions of the story segments are included in the Appendix. Recordings were made using a Sony digital camcorder, and then converted to .wav sound files using Sound Forge 6.0™ audio editing software. Music stimuli were recordings of musical phrases taken from Chopin's Ballade No. 3 in A flat (solo, instrumental piano). Stimuli were edited so that musical phrases started with the onset and ended with the offset of each trial. Both music and speech trials were accompanied by visual stimuli to keep infants engaged; the visual stimuli were of a similar style but varied across trials, again to maintain infants' interest. All visual stimuli consisted of simple, 3-dimensional objects (e.g., spirals, circles, and rectangles) that were similar in color contrast and motion parameters and that rotated and moved slowly in front of a high-contrast, colored background. The animations were produced using 3-D Studio Max™ computer graphics software. The auditory and animated digital files were combined using Adobe Premiere 6.5™ video editing software, which produced .avi movie files that were then recorded onto a blank DVD. The recorded DVD was then played through the computer monitor and speakers. The hidden speakers were 82 cm from infants, facing them and producing audio stimuli at 75 dB SPL when measured from the approximate location of the infant.

1.2.4 Procedure

After the parent and infant were seated, a head circumference measurement was taken from the infant using a standard cloth tape measure. Each parent was instructed to refrain from talking and interacting with the infant during the course of the experiment, and to hold the infant up so that they were able to comfortably view the screen. Parents were also asked to guide infants' hands down and away from the headband if they began to reach up during the experiment. The experimenter then placed the NIRS headband on the infant. Following the 10-20 system, the two optical probes were adjusted until they were over the left and right temporal areas, centered over the T3 position (on the left) and T4 position (on the right). The experimenter moved to the control area. The room lights in both the experimental and control areas were turned off, leaving only a low intensity light to sufficiently illuminate the experimental area, and light from the computer monitor to light the control area. The source lights of the imaging device were turned on, and stimulus presentation and optical recordings began. Infants were video recorded for the duration of the experimental session for off-line coding of looking-times.

1.2.5 Optical data

The two detector fibers within each optical probe recorded raw optical signals for subsequent digitization at 200 Hz for each of the four channels. NIRS data collected for each of the two stimulus conditions were analyzed the same way. The NIRS apparatus converted the signals to optical density units, which were digitally low-pass filtered at 10.0 Hz for noise reduction, and decimated to 20 samples per second. The control computer then converted the data to relative concentrations of oxygenated (HbO2) and deoxygenated (HbR) hemoglobin using the modified Beer-Lambert law (Strangman, et al., 2002), which calculates the relationship between light absorbance and concentration of particles within a medium. Artifacts originating in infant physiology (e.g., heartbeat) and movement were spatially filtered using a principal components analysis (PCA) of the signals across the four channels (Zhang, et al., 2005). This convention is based on the assumption that systemic components of interference are spatially global and have higher energy than the signal changes evoked by the perceptual stimuli themselves (Zhang, et al., 2005).
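The conversion step named above can be sketched as follows. The modified Beer-Lambert law relates the change in optical density at each wavelength to the two concentration changes, so measurements at two wavelengths give two linear equations in two unknowns. This is an illustrative sketch only, not the software used in the study: the extinction coefficients are placeholder values chosen solely to reflect that HbR absorbs more strongly at 690 nm and HbO2 at 830 nm, the differential pathlength factor (`DPF`) is a hypothetical value, and only the 2 cm source-detector distance comes from the text.

```python
# Placeholder extinction coefficients (eps_HbO2, eps_HbR) per wavelength.
# These are NOT tabulated values; substitute a published table in practice.
EXTINCTION = {690: (0.35, 2.10), 830: (1.00, 0.78)}

DISTANCE_CM = 2.0  # source-detector separation described in the text
DPF = 6.0          # hypothetical differential pathlength factor


def forward_od(d_hbo2, d_hbr, wavelength):
    """Optical density change predicted by the modified Beer-Lambert law."""
    eps_hbo2, eps_hbr = EXTINCTION[wavelength]
    return (eps_hbo2 * d_hbo2 + eps_hbr * d_hbr) * DISTANCE_CM * DPF


def mbll(delta_od_690, delta_od_830):
    """Solve the 2x2 system for (delta HbO2, delta HbR)."""
    a, b = EXTINCTION[690]
    c, d = EXTINCTION[830]
    det = a * d - b * c
    if det == 0:
        raise ValueError("wavelengths do not separate the two chromophores")
    path = DISTANCE_CM * DPF
    y1 = delta_od_690 / path
    y2 = delta_od_830 / path
    d_hbo2 = (d * y1 - b * y2) / det
    d_hbr = (a * y2 - c * y1) / det
    return d_hbo2, d_hbr


# Round trip with hypothetical concentration changes (arbitrary units).
true_hbo2, true_hbr = 0.004, -0.001
od_690 = forward_od(true_hbo2, true_hbr, 690)
od_830 = forward_od(true_hbo2, true_hbr, 830)
est_hbo2, est_hbr = mbll(od_690, od_830)
print(est_hbo2, est_hbr)
```

Because both unknowns are recovered from the same pair of measurements, noise at either wavelength propagates into both estimates, which is one reason the filtering steps precede the conversion.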

1.2.6 Hemodynamic response functions

Hemodynamic activity was recorded at 20 samples per second for each 20-second trial. As expected, hemodynamic responses began manifesting several seconds after stimulus onset and did not begin to abate until near the stimulus offset. Rather than focusing on isolated individual peak values or single average values in a prespecified subset of the total time range (Blasi, et al., 2007; Grossmann, et al., 2008; Karen, et al., 2008; Minagawa-Kawai, et al., 2007), concentration changes in oxygenated blood were calculated by comparing the average HbO2 concentration for the last 15 seconds of each 20-second stimulus trial to the average HbO2 concentration during the last two seconds of the prior rest trial (during which no stimuli were present).

Because our goal was to identify differences in how infants of different ages process music relative to speech, we compared the general hemodynamic response function as it manifested depending upon which complex auditory stimulus (i.e., speech or music) infants were hearing. We averaged each 20-second trial by stimulus type (music or speech), focusing on the final 15 seconds as a more general indicator of neurophysiological response to our stimuli. We did not include the first five seconds of each response in our analysis because this is generally a ramping-up period for the response. We did not use a peak hemodynamic response approach either, because it would have given us information limited to only the maximum responses elicited by our stimuli. Future investigations could build on our findings to further investigate specific properties of the hemodynamic response, but our present goal was to establish the general hemodynamic response during bouts of sustained exposure to the two different forms of auditory stimuli.

Average changes in HbO2 concentration (relative to the 2 seconds during baseline prior to stimulus onset) were thus calculated for each of the (15) one second intervals in each of the (4) recording channels for each of the (2) stimulus conditions. The same procedure was followed to calculate analogous concentration changes in deoxygenated blood using data from the HbR chromophore.
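The baseline correction and binning described above can be sketched for a single channel and trial as follows. The sampling rate, the 2-second baseline, the 5-second onset window, and the 15 one-second bins come from the text; the function names and the list-based data layout are assumptions made for illustration.

```python
SAMPLES_PER_S = 20  # decimated sampling rate stated in the text


def mean(values):
    return sum(values) / len(values)


def baseline_corrected_bins(rest, trial, baseline_s=2, skip_s=5, trial_s=20):
    """Return one baseline-corrected mean per second for seconds 5-19.

    rest  : concentration samples from the rest period preceding the trial
    trial : concentration samples from the 20-second stimulus trial
    """
    if len(trial) != trial_s * SAMPLES_PER_S:
        raise ValueError("trial must contain exactly 20 s of samples")
    if len(rest) < baseline_s * SAMPLES_PER_S:
        raise ValueError("rest period is shorter than the baseline window")

    # Baseline: mean of the final two seconds before stimulus onset.
    baseline = mean(rest[-baseline_s * SAMPLES_PER_S:])

    bins = []
    for second in range(skip_s, trial_s):
        start = second * SAMPLES_PER_S
        chunk = trial[start:start + SAMPLES_PER_S]
        bins.append(mean(chunk) - baseline)
    return bins


# Hypothetical trace: flat rest, then a step increase after five seconds.
rest_trace = [1.0] * (10 * SAMPLES_PER_S)
trial_trace = [1.0] * (5 * SAMPLES_PER_S) + [3.0] * (15 * SAMPLES_PER_S)

bins = baseline_corrected_bins(rest_trace, trial_trace)
print(len(bins), mean(bins))
```

The same function applies unchanged to HbR traces, since the procedure is identical for both chromophores.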

Each infant contributed data from up to five trials of each stimulus type (i.e., 10 trials total). Given the 41 infants included in our sample, the total number of possible useable trials was 410. Through the course of data processing, 21% of the total trials were eliminated due to motion artifact or an error in trial marking. Of the trials excluded from analysis, 98% were excluded due to motion artifacts that could not be corrected. The remaining 2% of excluded trials were removed from analysis due to marking error. On average, infants' data were useable 93% of the time. There were no significant differences in the average number of useable trials per infant for each stimulus condition (t(40) = .42, p > .10). This high retention rate suggests that the infants found the complex stimuli engaging, and the total testing time (5 minutes) tolerable.

1.3 Results

1.3.1 Looking time data

Given the short run-time of the entire experiment, we did not anticipate that infants would develop expectations about the pattern of stimulus trials. Nonetheless, we coded infants' responses for any anticipatory orientation towards the screen immediately prior to each trial's onset (during which the comparison blood flow measures were established). No instances of anticipatory orientation prior to trial onset were detected for either group. The length of time that infants spent looking towards the stimulus trials was then coded by two observers as a general measure of attention. Looking times were calculated for each 20-second trial, and a grand average was computed for the music and speech conditions. The average looking time during the music condition was 16.73 seconds (SD = 3.58), while the average looking time during the speech condition was 16.91 seconds (SD = 3.49). These did not differ significantly from one another (t(40) = -.58, p > .10). Looking times during rest periods were not calculated, as no stimuli were presented (either auditory or visual) at that time. These periods were included to allow the hemodynamic changes induced by stimuli from the previous trial to wash out; these periods consisted of a completely dark screen with no accompanying audio.

An interrater-reliability of 97% was calculated across the two observers' looking time measurements; disagreements were reconciled through discussion. Data were examined to determine whether, for any given block, an infant (a) accumulated less than 10 seconds total looking time per trial or (b) looked away from the display for more than five consecutive seconds. No infants or trial blocks were excluded based on these criteria.
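The two screening criteria above can be expressed as a simple check on a coded looking record. This is a sketch only: it assumes looking was coded as one boolean per second of each 20-second trial, which the text does not specify, and the function name is hypothetical.

```python
def violates_looking_criteria(looking, samples_per_s=1,
                              min_total_s=10, max_away_s=5):
    """Return True if a trial fails either attention criterion.

    looking : sequence of booleans, True when the infant looked at the display
    (a) total looking time below min_total_s, or
    (b) a continuous look-away longer than max_away_s.
    """
    total_looking_s = sum(1 for x in looking if x) / samples_per_s

    longest_away = 0
    current_away = 0
    for is_looking in looking:
        current_away = 0 if is_looking else current_away + 1
        longest_away = max(longest_away, current_away)

    too_little = total_looking_s < min_total_s
    too_long_away = longest_away / samples_per_s > max_away_s
    return too_little or too_long_away


# Hypothetical coded trials (one value per second).
attentive = [True] * 17 + [False] * 3
long_look_away = [True] * 14 + [False] * 6
low_total = ([True] * 5 + [False] * 5 + [True] * 3
             + [False] * 5 + [True] + [False])

print(violates_looking_criteria(attentive),
      violates_looking_criteria(long_look_away),
      violates_looking_criteria(low_total))
```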

1.3.2 Hemodynamic response function data

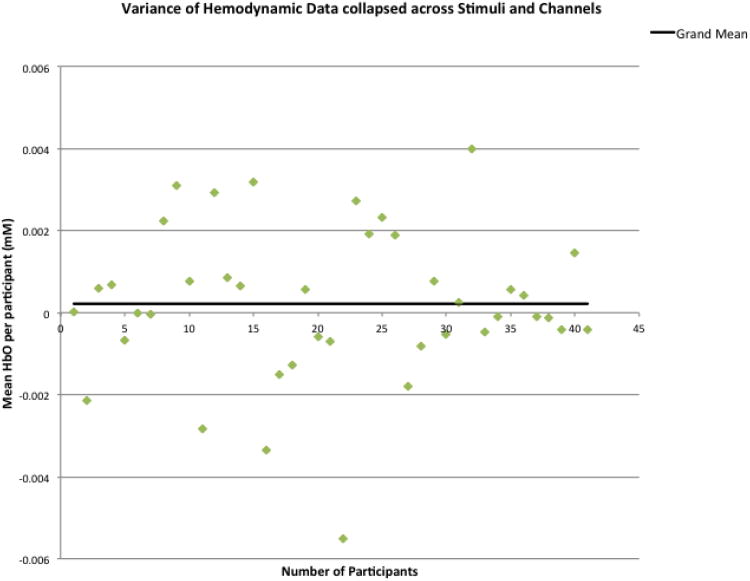

A 2 (speech, music) × 4 (channel: 1= left anterior temporal, 2= left posterior temporal, 3= right anterior temporal, 4= right posterior temporal) × 15 (timepoints per trial) within-design ANOVA was conducted. This overall analysis yielded no significant effects (time, F(14,560) = .69, p> .10; stimulus, F(1,40) = .08, p> .10; channel, F(3,120) = 1.21, p> .10). This was not unexpected, as we anticipated high variability in observed responses as a reflection of our a priori expectations that there would be developmental differences within our infant sample. Supporting this expectation, we found the grand mean hemodynamic response was very near the 2-second baseline average (+.0002), but that individual mean responding ranged dramatically from -.005 to +.004 (± .007) (see Figure 2).

Figure 2.

Variance in mean HbO2 responses per participant in relation to the overall group mean.

Because hemodynamic responding among individuals in our sample was so variable, we sought to identify whether the variance could be systematically explained. That is, if the variance in the overall sample was a result of a real developmental difference rather than random variance, then we should be able to identify a variable (or variables) that would predict a systematic difference among hemodynamic responses. One such variable could be the different chronological ages of our participants. Although others have shown that age is not a reliable predictor of language-related developmental progress in the first year (Weinberg, 1987), it is related to changes in sensitivity to specific speech sounds (e.g., Werker and Tees, 1984). Therefore, to examine how chronological age contributed to our results, we entered age as a covariate in the overall ANOVA. Indeed, there was a marginal effect for age (age, F(1, 39) = 3.45, p = .07, η2 = .09; a small effect), though no other significant effects emerged (age*time, F(14, 546) = 1.04, p > .10; age*stimulus, F(1, 39) = 2.33, p > .10; age*channel, F(3, 117) = .99, p > .10). This hints at the possibility that, had we included more infants, we may have observed a significant effect for age. However, given that only a marginal age effect emerged despite our inclusion of a relatively large sample of infants (N = 41), there appear to be other factors influencing these results.

One possibility is that age-related changes in this domain are nonlinear, a pattern that would certainly weaken the results of any test focused on linear relationships. Nonlinear age effects have been observed in other domains of infant research (e.g., Hayashi, et al., 2001; Naoi, et al., 2012) and may well be underlying our failure to find a significant age effect here. Other demonstrations of laterality effects (e.g., Perani et al., 2010; Dehaene-Lambertz et al., 2010) have been based on data from infants much younger than the youngest infants tested here. Leftward lateralization for language may follow a U-shaped function, whereby initial biases imposed by genetic constraints gradually relax and yield to experience-based tuning over the course of the first year of life. Moreover, while the variability in hemodynamic responses observed here appears to reflect differences in cortical development at least somewhat accounted for by chronological age, other (non-age related) factors were undoubtedly also in play. If infants of similar ages were at different points in development of auditory processing sensitivities, for example, due to differential auditory experience, such differences may have produced systematically different hemodynamic responses. If so, tracking potential causes of such differences may reveal important developmental effects among participants that would otherwise be hidden due to response averaging.

Therefore, in order to evaluate whether the variance in hemodynamic responses was systematic, we conducted a median split analysis on the response-volume data. Median hemodynamic activity (relative to the 2-second pretrial baseline) was calculated for each participant across conditions and channel locations. We then divided the data into a high responder group (infants with greater than median hemodynamic activity) and a low responder group (infants with lower than median activity). Because we determined hemodynamic response volumes based on each individual infant's change from his or her own pretrial baseline, splitting the sample along the median allowed us to establish whether the resulting group difference in response volumes corresponded to a group difference in response patterns. If so, this would indicate that the variance in the overall sample was not random. Two outcomes were possible. If the only group difference were the magnitude of the hemodynamic response, then the high and low responders would simply show different magnitudes of the same pattern. This in turn would suggest that all infants in the sample were processing the stimuli in the same manner, but were devoting varying amounts of processing resources to the task. Alternatively, if the two groups were to show different magnitudes of different patterns, that would suggest the presence of a real developmental difference in auditory processing.
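The grouping step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the analysis code used in the study: the function name `median_split`, the infant IDs, and the numeric values are all hypothetical, and the choice to leave an infant who falls exactly on the median unassigned is an assumption made here because the text defines the groups only as above and below the median.

```python
from statistics import median


def median_split(summary_by_infant):
    """Split infants into high and low responders around the sample median.

    summary_by_infant maps a (hypothetical) infant ID to one summary value:
    that infant's baseline-corrected HbO2 change, pooled across conditions
    and channel locations.
    """
    cutoff = median(summary_by_infant.values())
    high = sorted(i for i, v in summary_by_infant.items() if v > cutoff)
    low = sorted(i for i, v in summary_by_infant.items() if v < cutoff)
    # Assumption: an infant exactly at the median joins neither group.
    tied = sorted(i for i, v in summary_by_infant.items() if v == cutoff)
    return cutoff, high, low, tied


# Made-up values for illustration only (arbitrary units).
example = {"inf01": 0.8, "inf02": -0.3, "inf03": 0.1, "inf04": -0.6, "inf05": 1.2}
cutoff, high, low, tied = median_split(example)
```

Because each summary value is already expressed relative to the infant's own pretrial baseline, the split compares infants on the size of their change rather than on absolute signal level.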

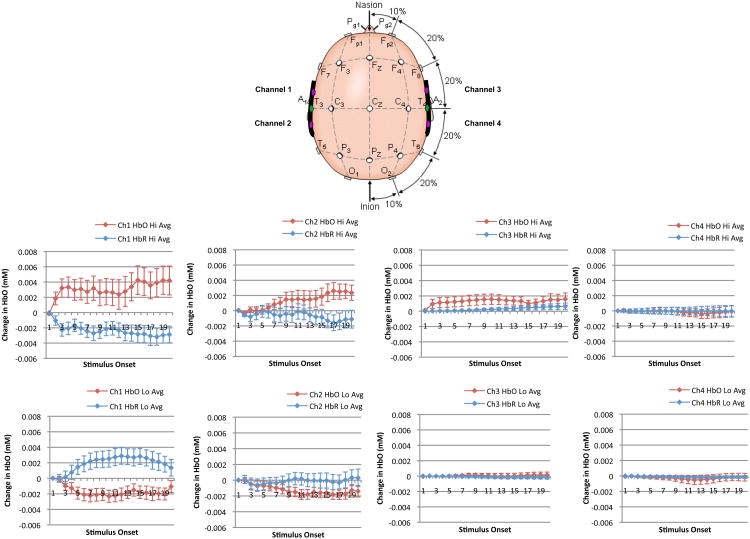

Indeed, the median split analysis revealed that the high responders produced a dramatically different pattern of auditory responses relative to the low responders, and that these different response patterns were consistent within each group (see Figure 3). Specifically, the outcomes of a 2 (high responder, low responder) × 2 (speech, music) × 4 (channel: 1 = left anterior temporal, 2 = left posterior temporal, 3 = right anterior temporal, 4 = right posterior temporal) × 15 (timepoints per trial) mixed-design ANOVA on the HbO2 chromophore (see Footnotes) confirmed a main effect of group, F(1, 42) = 102.37, p < .01, η² = .52 (a medium effect size), whereby high responders produced significantly more activation than low responders overall, as expected. However, while no significant simple effects were observed, the group difference was qualified by several important interactions: group × time, F(14, 546) = 2.49, p < .01, η² = .027; group × channel, F(3, 117) = 4.27, p < .01, η² = .00002; and group × time × channel, F(42, 1638) = 1.53, p < .05, η² = .006. Thus all of our interactions had small effect sizes.

Figure 3.

Mean hemodynamic responding in high and low responder groups per channel recording location.

The significant group × time interaction emerged because high responders began demonstrating activity increases much sooner after stimulus presentation than low responders, and that activation was sustained until near the end of each trial. In contrast, low responders overall showed a slight decrease in HbO2 activity over time. The significant group × channel interaction emerged because high responders showed significantly more activity in left hemisphere channels than low responders. The significant 3-way interaction emerged because high responders displayed more rapid and larger volume HbO2 increases across classes of auditory stimuli in left hemisphere channels across time.

Finally, although there were no significant differences in looking times between high and low responders in either the music (high responder mean 16.26 sec, SD = 4.57; low responder mean 16.63 sec, SD = 4.60; t(20) = 1.08, p > .10) or speech (high responder mean 17.21 sec, SD = 2.13; low responder mean 17.20 sec, SD = 1.78; t(19) = .001, p > .10) conditions, high and low responders did differ significantly by age (t(39) = 2.44, p < .01, d = .80). On average, high responders were 7.5 months of age (SD = 2.51) and low responders were 5.85 months of age (SD = 1.81). This is consistent with the marginal age effect noted earlier in overall hemodynamic responding, with our median split analysis further reducing the variability introduced by non-age related factors to highlight this effect.
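For readers who want to see how a standardized mean difference of this kind is typically obtained, the following is a minimal sketch of Cohen's d using a pooled standard deviation. It is not necessarily the computation behind the reported d = .80. The function name `cohens_d` is hypothetical, and the group sizes in the usage line are assumptions, since per-group ns are not given in this passage.

```python
from math import sqrt


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
    return (mean1 - mean2) / sqrt(pooled_var)


# Means and SDs (in months) are taken from the text above.
# The group sizes 20 and 21 are hypothetical placeholders.
d_age = cohens_d(7.5, 2.51, 20, 5.85, 1.81, 21)
```

The result depends on the assumed group sizes and on the rounding of the summary statistics, so it need not reproduce the reported value exactly.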

1.4 Discussion

The present study used NIRS to examine cortical activity (i.e., changes in the concentration of oxygenated and deoxygenated hemoglobin) in preverbal infants while they listened to samples of complex and continuous speech and music across the course of the same experimental session. To our knowledge, this is the first study to compare cortical processing—as established using NIRS—in awake and behaving infants during a complex music/speech contrast. Moreover, our study is unique in that it included infants across a wide range of ages, with a focus particularly on those in the second half of the first year of life. Because our study included both infant-directed narratives and musical segments composed of complete musical phrases, these complex stimulus sets had structural correspondence across them, allowing us to observe infants' cortical hemodynamics as they switched from processing one form of complex auditory information to another.

This approach has provided important information about how neurophysiological responses change across time and across stimulus type in the same infants. Our data thus build on previous work focusing primarily on 1) very young infants and/or 2) a single class of complex auditory stimuli. By alternating presentation of these two forms of auditory stimuli, we were able to compare hemodynamic activity over a relatively lengthy response interval, capturing the dynamic changes in infants' cortical blood flow during a full course of variable stimulus processing. The approach revealed several noteworthy findings pertaining to infant cortical blood flow.

First, when infants were treated as a homogenous group, the overall analysis showed no detectable differences in responses over time or between hemispheres or stimulus type. This suggested, at least initially, that processing the sorts of complex combinations of stimulus classes we employed here may not be differentially lateralized. That is, without sufficient experience and clearly segregated auditory input (e.g., blocks of music trials and blocks of speech trials, rather than alternating music and speech trials within blocks), infants appeared to process these auditory stimuli using similar cortical regions. However, rather than being a function of the stimuli, we established that this is more likely due to infants' immature allocation of physiological resources, whereby they were not able to track the rapid changes in auditory cues that distinguished one form of sound from the other.

Second, we found that the considerable variability in the overall analysis masked a reliable divergence in auditory processing patterns when infants were divided into high- and low-volume hemodynamic response groups. These groups somewhat tracked with age. Specifically, a median split analysis showed that infants with high-volume hemodynamic responses (the high responders) showed rapid and sustained cortical activation, particularly in the left temporal regions, to both classes of stimuli. This finding is consistent with other recent findings (e.g., Perani et al., 2010; Kotilahti et al., 2010; Dehaene-Lambertz et al., 2010) from younger infants showing a left hemisphere bias for processing speech. Data from these studies were unclear as to a corresponding right hemisphere bias for music; however, the direction of focal activity was generally away from the left hemisphere. Our high responding infants showed a left hemisphere bias for both speech and music, which may be attributable to the relatively intense resource demands inherent in the alternating nature of the stimulus presentation. Moreover, the low responding infants showed an overall decrease in hemodynamic activity regardless of stimulus type. Again, the differences in responder type tracked with age, indicating that the degree of underlying cortical maturation may have been a major source of variability in the data.

The convention in NIRS research is to infer that a significant increase in oxygenated hemoglobin (HbO2) concentration within a given cortical region (and a corresponding decrease in deoxygenated hemoglobin concentration) signals an increase in resources allocated to that region to facilitate processing (Strangman, et al., 2002). In the present study, high responders devoted more processing resources to both classes of auditory stimuli than did low responders. This could indicate that high responders are more developmentally advanced than low responders; alternatively, it could indicate that high responders used more resources because the task was more difficult for them. However, given that high responders were generally older than low responders, we endorse the former interpretation. Moreover, the pattern observed in the high responding infants (i.e., early, high-volume, sustained responses) was roughly similar to patterns observed in our previous NIRS study of infants processing a single form of auditory stimuli (e.g., running speech only) (Bortfeld, et al., 2009; Bortfeld, et al., 2007).

The contrasting patterns of hemodynamic responses in high and low responders in the present study suggest that the low responding infants did not process either form of auditory stimuli in a typical manner, a finding that merits further investigation with a focus on sources of variability in experience and developmental maturity. Deactivations are present in several studies using NIRS (see Sangrigoli and de Schonen, 2004); however, there is little agreement in the literature about what these atypical responses indicate and where they originate (Gallagher, et al., 2007). One explanation posited in the animal and fMRI literatures is that the deactivations correspond to a decrease in the activity of large neural populations (Enager, et al., 2004). In contrast, others propose a negative response due to redistribution of blood flow (Shmuel, et al., 2002). In this case, “redistribution” suggests a measure of global blood flow, not specific to a localized area of activity (i.e., the “blood steal” phenomenon) (Shmuel, et al., 2002). Still others suggest that deactivations correspond to inhibitory neural connections (Chen, et al., 2005). In the context of this study and our limited target regions of interest, it is not possible to identify the source of the observed decreases in oxygenated hemoglobin, because we did not collect data from other cortical areas (e.g., frontal and parietal regions). We have considered the above explanations and think it unlikely that our data reflect a decrease in neural activity in the temporal areas, as we have observed canonical hemodynamic responses to similar stimuli with a similar age group in the past (Bortfeld, et al., 2009). Moreover, both animal models (e.g., Harrison, et al., 2002; Iadecola, et al., 1997) and adult fMRI data (e.g., Smith, et al., 2004) show that it is unlikely for atypical hemodynamic response functions to cause a general change in direction of blood flow (e.g., posterior to anterior). Indeed, several mechanisms and structures within the brain regulate this process (e.g., Rodriguez-Baeza, et al., 1998). Future NIRS studies with whole-head probes will allow us to examine this issue in more detail.

We conclude that the emergence of high-volume, sustained hemodynamic responses in infants during exposure to complex auditory stimuli, as observed in the data from the high responding infants, reveals relatively mature underlying processing mechanisms as compared to the responses observed in the low responding infants. Canonical hemodynamic responses to speech were clearly observable, but not differentially lateralized for music in the high responder group. This suggests that this developmental stage may be an intermediate point between the emergence of sensitivity to informative auditory cues common to both music and speech (and supported by similar structures) and to stimulus-specific qualities that can change quickly over time (which presumably would be indicated by the right- and left-hemispheric specializations for spectral and temporal cue processing as relevant to music and speech respectively (e.g., Schönwiesner, et al., 2005)).

The view of a developmental threshold in preverbal infants' auditory processing specificity is consistent with two separate lines of behavioral evidence. First, previous research has indicated extensive structural overlap in linguistic and non-linguistic stimuli; effective processing of both music and speech relies on the common cues of rhythm, meter, pitch, timbre and tempo, as well as syntactic structure (Jusczyk, 1999; Saffran, et al., 1996; Saffran, et al., 1999). Second, there is evidence suggesting that the statistical learning mechanisms critical for language extend to analysis of non-linguistic tone sequences as well, that these emerge during preverbal infancy, and that they depend on extensive exposure to continuous (rather than discrete) stimuli (Saffran, et al., 1999). While the present study employed continuous stimuli within each trial, infants' prior experiences were no doubt biased towards exposure to continuous segments of speech relative to music.

But what of the finding that high and low responders demonstrated distinctly different cortical response patterns during auditory stimulation? How might these two patterns be interpreted in terms of a developmental threshold for processing classes of complex sound? One possibility is that the different patterns of HbO2 and HbR across the two groups provide clues about where processing resources were allocated beyond the immediate anterior and posterior temporal areas measured in this study. Although at present we can only speculate, it may be that the low responders were simply shifting attention in a way that shifted allocation of hemodynamic resources. For example, if the hemodynamic pattern demonstrated by low responders represents a less developmentally advanced treatment of auditory stimuli in particular, it may be because the accompanying moving visual stimuli were more salient to this group and cortical resources were allocated accordingly (e.g., toward posterior, visual processing regions of the brain). Conversely, it is possible that the specific hemodynamic patterns we observed in the high responders (i.e., stronger increases in HbO2 and decreases in HbR in anterior relative to posterior recording locations) for music as well as speech are attributable in part to the moving visual stimuli that accompanied the auditory stimuli. Although the visual stimuli were unrelated in any temporal way to either class of auditory stimulus, the posterior superior temporal sulcus and middle temporal gyrus have been generally implicated in biological motion perception (e.g., Beauchamp, et al., 2003; Beauchamp, et al., 2002; Pelphrey, et al., 2005). At present, there is less research on developmental neural correlates of audiovisual integration; however, existing evidence suggests that audiovisual integration may also be localized to the left in superior temporal sulcus regions (Dick, et al., 2010; Nath, et al., 2011). If infants were trying to resolve the multimodal stimuli into one percept, this may have obscured more stimulus-specific responses to the different forms of auditory stimuli. Alternatively, the results may represent an anterior shift in processing resources. For example, in an adult fMRI study (Chapin, et al., 2010), a frontal-temporal circuit was identified for allocating selective attention to complex auditory stimuli. A shift of this sort would be consistent with high responders demonstrating such a dramatic increase in overall hemodynamic activity during the auditory task for both stimulus types and relative to the low responders. Future research incorporating additional cortical measurements (e.g., beyond the temporal regions) will be needed to assess these possibilities.

The current findings highlight the complexity of auditory stimulus perception across time (e.g., blocked by type or alternating between stimulus types), and demonstrate how stimuli can influence patterns of processing in still-developing cortex. Future studies with many more simultaneous recording sites (e.g., bilateral temporal, frontal, and occipital regions) will be needed to track hemodynamic resource allocation under different exposure conditions and address the questions raised by the present study. Additional studies will also be needed to investigate whether the differential patterns of responding observed here are reflected in long-term outcomes in language ability. If such outcomes prove to be systematically related to the volume and pattern of hemodynamic responses during auditory processing in preverbal infants, these response measures may serve as an early indicator of developmental gains (or delay). For now, such a suggestion is highly speculative, as we have not linked this variable to any other behavioral or demographic/historical data. It remains unknown whether, how, or in what direction the differences in response pattern may play a role in behavior, pointing to an important avenue for future research.

Acknowledgments

The authors thank the undergraduate assistants in the Lil' Aggies' Language Learning Laboratory at Texas A&M University for their help with data collection, and the parents who so graciously agreed to have their infants participate in this research.

This research was supported by a Ruth L. Kirschstein National Research Service Award (NRSA) Individual Fellowship 1F31DC009765-01A1 to Eswen Fava, and grants from the James S. McDonnell Foundation 21st Century Research Award (Bridging Brain, Mind, and Behavior) and from HD046533 to Heather Bortfeld.

A.1 Appendix

The hippo was very hot. He sat on the riverbank and gazed at the little fishes swimming in the water. If only I could be in the water he thought, how wonderful life would be. Then he jumped in and made a mighty splash.

Robert was out riding his bike. He saw his friend Will by the old fence. Did you lose something, he asked. I thought I saw a frog, Will said. I used to have a pet frog named Greeney he'd wait for me by the pond where I lived. He must miss me a lot.

While mama hangs the wash out and pappa rakes the leaves, Oliver chases a big yellow leaf down the hill. He follows it under a twisty tree and all the way to the edge of the woods. From far away, Oliver hears mama calling him. Oliver runs all the way home.

Sometimes the chick and other young penguins dig their beaks into the ice to help them walk up the slippery hill. They toboggon down, fast, on their fluffy bellies. The chick grows and grows, in a short while he'll be a junior penguin.

One very hot afternoon, the farmer and his animals were dozing in the barn. A warm breeze blew through the open doors. The only sound was the buzz buzz of a lazy fly. Suddenly, the buzzing stopped.

Footnotes

In the interest of brevity and clarity, we do not report HbR analyses here, as we were interested in tracking activity in terms of oxygenated blood (HbO2) concentration changes. However, HbR activity followed expected patterns (i.e., the inverse of HbO2 activity) and is illustrated in Figure 3.

Contributor Information

Eswen Fava, University of Massachusetts Amherst.

Rachel Hull, Texas A&M University.

Kyle Baumbauer, University of Pittsburgh.

Heather Bortfeld, University of Connecticut & Haskins Laboratories.

References

- Baruch C, Drake C. Tempo discrimination in infants. Infant Behavior and Development. 1997;20:573–577. [Google Scholar]

- Beauchamp MS, Lee KE, Haxby JV, Martin A. fMRI Responses to Video and Point-Light Displays of Moving Humans and Manipulable Objects. Journal of Cognitive Neuroscience. 2003;15:991–1001. doi: 10.1162/089892903770007380. [DOI] [PubMed] [Google Scholar]

- Beauchamp MS, Lee KE, Haxby JV, Martin A. Parallel visual motion processing streams for manipulable objects and human movements. Neuron. 2002;34:149–159. doi: 10.1016/s0896-6273(02)00642-6. [DOI] [PubMed] [Google Scholar]

- Bergeson TR, Trehub S. Absolute pitch and tempo in mothers' songs to infants. Psychological Science. 2002;13:72–75. doi: 10.1111/1467-9280.00413. [DOI] [PubMed] [Google Scholar]

- Besson M, Schon D. Comparison between language and music. Annals of the New York Academy of Sciences. 2006;930:232–258. doi: 10.1111/j.1749-6632.2001.tb05736.x. [DOI] [PubMed] [Google Scholar]

- Blasi A, Fox S, Everdell N, Volein A, Tucker L, Csibra G, Gibson AP, Hebden JC, Johnson MH, Elwell CE. Investigation of depth dependent changes in cerebral haemodynamics during face perception in infants. Physics in Medicine and Biology. 2007;52:6849–6864. doi: 10.1088/0031-9155/52/23/005. [DOI] [PubMed] [Google Scholar]

- Boas DA, Franceschini MA, Dunn AK, Strangman G. In: In Vivo Optical Imaging of Brain Function. Frostig RD, editor. Boca Raton: CRC Press; 2002. pp. 193–221. [Google Scholar]

- Bortfeld H, Fava E, Boas DA. Identifying cortical lateralization of speech processing in infants using near-infrared spectroscopy. Developmental Neuropsychology. 2009;34:52–65. doi: 10.1080/87565640802564481. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bortfeld H, Wruck E, Boas DA. Assessing infants' cortical response to speech using near-infrared spectroscopy. NeuroImage. 2007;34:407–415. doi: 10.1016/j.neuroimage.2006.08.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brown S, Martinez MJ, Parsons LM. Music and language side by side in the brain: a PET study of the generation of melodies and sentences. European Journal of Neuroscience. 2006;23:2791–2803. doi: 10.1111/j.1460-9568.2006.04785.x. [DOI] [PubMed] [Google Scholar]

- Chapin H, Jantzen K, Kelso JAS, Steinberg F, Large E. Dynamic emotional and neural responses to music depend on performance expression and listener experience. PLOS one. 2010;5 doi: 10.1371/journal.pone.0013812. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen CC, Tyler CW, Liu CL, Wang YH. Lateral modulation of BOLD activation in unstimulated regions of the human visual cortex. Neuroimage. 2005;24:802–809. doi: 10.1016/j.neuroimage.2004.09.021. [DOI] [PubMed] [Google Scholar]

- Christophe A, Dupoux E. Bootstrapping lexical acquisition: The role of prosodic structure. Linguistic Review. 1996;13:383–412. [Google Scholar]

- Dehaene-Lambertz G, Dehaene S, Hertz-Pannier L. Functional Neuroimaging of Speech Perception in Infants. Science. 2002;298:2013–2015. doi: 10.1126/science.1077066. [DOI] [PubMed] [Google Scholar]

- Dehaene-Lambertz G, Montavont A, Jobert A, Allirol L, Dubois J, Hertz-Pannier L, Dehaene S. Language or music, mother or Mozart? Structural and environmental influences on infants' language networks. Brain and language. 2010;114:53–65. doi: 10.1016/j.bandl.2009.09.003. [DOI] [PubMed] [Google Scholar]

- Dick AS, Solodkin A, Small SL. Neural development of networks for audiovisual speech comprehension. Brain and Language. 2010;114:101–114. doi: 10.1016/j.bandl.2009.08.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Drake C, Bertrand D. The quest for universals in temporal processing in music. Annual New York Academy of Sciences. 2001;930:17–27. doi: 10.1111/j.1749-6632.2001.tb05722.x. [DOI] [PubMed] [Google Scholar]

- Enager P, Gold L, Lauritzen M. Impaired neurovascular coupling by transhemispheric diaschisis in rat cerebral cortex. Journal of Cerebal Blood Flow and Metabolism. 2004;24:713–719. doi: 10.1097/01.WCB.0000121233.63924.41. [DOI] [PubMed] [Google Scholar]

- Gallagher A, Theriault M, Maclin E, Low K, Gratton G, Fabiani M, Gagnon L, Valois K, Rouleau I, Sauerwein HC, Carmant L, Nyguyen DK, Lortie A, Lepore F, Beland R, Lassonde M. Near-infrared spectroscopy as an alternative to the Wada test for language mapping in children, adults and special populations. Epileptic Discordance. 2007;9:241–255. doi: 10.1684/epd.2007.0118. [DOI] [PubMed] [Google Scholar]

- Griffiths TD, Buchel C, Frackowiak RSJ, Patterson RD. Analysis of temporal structure in sound by the brain. Nature Neuroscience. 1998;1:422–427. doi: 10.1038/1637. [DOI] [PubMed] [Google Scholar]

- Grossmann T, Johnson MH, Lloyd-Fox S, Blasi A, Deligianni F, Elwell C, Csibra G. Early cortical specialization for face-to-face communication in human infants. Proceedings of the Royal Society B: Biological Sciences. 2008;275:2803–2811. doi: 10.1098/rspb.2008.0986. 10.1098/rspb.2008.0986. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Halit H, de Haan M, Johnson MH. Cortical specialisation for face processing: face-sensitive event-related potential components in 3- and 12-month-old infants. NeuroImage. 2003;18:1180–1193. doi: 10.1016/s1053-8119(03)00076-4. [DOI] [PubMed] [Google Scholar]

- Hannon EE, Trehub SE. Metrical categories in infancy and adulthood. Psychological Science. 2005;16:48–55. doi: 10.1111/j.0956-7976.2005.00779.x. [DOI] [PubMed] [Google Scholar]

- Harrison RV, Harel N, Panesar J, Mount RJ. Blood capillary distribution correlates with hemodynamic-based functional imaging in cerebral cortex. Cereb Cortex. 2002;12:225–233. doi: 10.1093/cercor/12.3.225. [DOI] [PubMed] [Google Scholar]

- Hayashi A, Tamekawa Y, Kiritani S. Developmental Change in Auditory Preferences for Speech Stimuli in Japanese Infants. Journal of Speech, Language & Hearing Research. 2001;44:1189. doi: 10.1044/1092-4388(2001/092). [DOI] [PubMed] [Google Scholar]

- Homae F, Watanabe H, Nakano T, Asakawa K, Taga G. The right hemisphere of sleeping infant perceives sentential prosody. Journal of Neuroscience Research. 2006;54:276–280. doi: 10.1016/j.neures.2005.12.006. [DOI] [PubMed] [Google Scholar]

- Iadecola C, Yang G, Ebner TJ, Chen G. Local and propagated vascular responses evoked by focal synaptic activity in cerebellar cortex. Journal of Neurophysiology. 1997;78:651–659. doi: 10.1152/jn.1997.78.2.651. [DOI] [PubMed] [Google Scholar]

- Juscyzk P. How infants begin to extract words from speech. TRENDS in Cognitive Sciences. 1999;3:323–328. doi: 10.1016/s1364-6613(99)01363-7. [DOI] [PubMed] [Google Scholar]

- Karen T, Morren G, Haensse D, Bauschatz AS, Bucher HU, Wolf M. Hemodynamic response to visual stimulation in newborn infants using functional near-infrared spectroscopy. Human Brain Mapping. 2008;29:453–460. doi: 10.1002/hbm.20411. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kotilahti K, Nissila I, Nissila T, Lipiainen L, Noponen T, Merilainen P, Huotilainen M, Fellman V. Hemodynamic responses to speech and music in newborn infants. Human Brain Mapping. 2010;31:595–603. doi: 10.1002/hbm.20890. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Levitin DJ, Tirovolas AK. Current advances in the cognitive neuroscience of music. Annals of the New York Academy of Sciences. 2009;1156:211–231. doi: 10.1111/j.1749-6632.2009.04417.x. [DOI] [PubMed] [Google Scholar]

- McMullen E, Saffran JR. Music and Langauge: A Developmental Comparison. Music Perception. 2004;21:289–311. [Google Scholar]

- Mehler J, Jusczyk PW, Lambertz G, Halsted G, Bertoncini J, Amiel-Tison C. A precursor of language acquisition in young infants. Cognition. 1988;29:143–178. doi: 10.1016/0010-0277(88)90035-2. [DOI] [PubMed] [Google Scholar]

- Minagawa-Kawai Y, Cristia A, Dupoux E. Cerebral lateralization and early speech acquisition: a developmental scenario. Developmental Cognitive Neuroscience. 2011a;1:217–232. doi: 10.1016/j.dcn.2011.03.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Minagawa-Kawai Y, Mori K, Naoi N, Kojima S. Neural attunement processes in infants during the acquisition of a language-specific phonemic contrast. Journal of Neuroscience. 2007;27:315–321. doi: 10.1523/JNEUROSCI.1984-06.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Minagawa-Kawai Y, Mori K, Sato Y, Koizumi T. Differential cortical responses in second language learners to different vowel contrasts. NeuroReport. 2004;15:899–903. doi: 10.1097/00001756-200404090-00033. [DOI] [PubMed] [Google Scholar]

- Minagawa-Kawai Y, van der Lely H, Ramus F, Sato Y, Mazuka R, Dupoux E. Optical brain imaging reveals general auditory and language-specific processing in early infant development. Cerebral Cortex. 2011b;21:254–261. doi: 10.1093/cercor/bhq082. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Naoi N, Minagawa-Kawai Y, Kobayashi A, Takeuchi K, Nakamura K, Yamamoto JI, Kojima S. Cerebral responses to infant-directed speech and the effect of talker familiarity. NeuroImage. 2012;59:1735–1744. doi: 10.1016/j.neuroimage.2011.07.093. [DOI] [PubMed] [Google Scholar]

- Nath AR, Fava EE, Beauchamp MS. Neural correlates of interindividual differences in children's audiovisual speech perception. Journal of Neuroscience. 2011;31 doi: 10.1523/JNEUROSCI.2605-11.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Okamoto M, Dan H, Sakamoto K, Takeo K, Shimizu K, Kohno S, Oda I, Isobe S, Suzuki T, Kohyama K, Dan I. Three-dimensional probabilistic anatomical cranio-cerebral correlation via the international 10-20 system oriented for transcranial functional brain mapping. Neuroimage. 2004;21:99–111. doi: 10.1016/j.neuroimage.2003.08.026. [DOI] [PubMed] [Google Scholar]

- Patterson RD, Uppenkamp S, Johnsrude IS, Griffiths TD. The Processing of temporal pitch and melody information in auditory cortex. Neuron. 2002;36:767–776. doi: 10.1016/s0896-6273(02)01060-7. [DOI] [PubMed] [Google Scholar]

- Pelphrey KA, Morris JP, Michelich CR, Allison T, McCarthy G. Functional Anatomy of Biological Motion Perception in Posterior Temporal Cortex: An fMRI Study of Eye, Mouth and Hand Movements. Cereb Cortex. 2005;15:1866–1876. doi: 10.1093/cercor/bhi064. 10.1093/cercor/bhi064. [DOI] [PubMed] [Google Scholar]

- Peña M, Maki A, Kovacić D, Dehaene-Lambertz G, Koizumi H, Bouquet F, Mehler J. Sounds and silence: an optical topography study of language recognition at birth. Proceedings of the National Academy of Sciences, USA. 2003;100:11702–11705. doi: 10.1073/pnas.1934290100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Perani D, Saccuman MC, Scifo P, Spada D, Andreolli G, Rovelli R, Baldoli C, Koelsch S. Functional specializations for music processing in the human newborn brain. PNAS. 2010;107:4758–4763. doi: 10.1073/pnas.0909074107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rodriguez-Baeza A, Reina de la Torre F, Ortega-Sanchez M, Sahu-Quillo-Barris J. Perivascular structures in corrosion casts of human central nervous system: A confocal laser and scanning electron microscope study. Anatomical Record. 1998;252:176–184. doi: 10.1002/(SICI)1097-0185(199810)252:2<176::AID-AR3>3.0.CO;2-1. [DOI] [PubMed] [Google Scholar]

- Saffran JR, Aslin RN, Newport EL. Statistical learning by 8-month-old infants. Science. 1996;274:1926–1928. doi: 10.1126/science.274.5294.1926. [DOI] [PubMed] [Google Scholar]

- Saffran JR, Johnson EK, Alsin RN, Newport EL. Statistical learning of tone sequences by human infants and adults. Cognition. 1999;70:27–52. doi: 10.1016/s0010-0277(98)00075-4. [DOI] [PubMed] [Google Scholar]

- Sakatani K, Chen S, Lichty W, Zuo H, Wang YP. Cerebral blood oxygenation changes induced by auditory stimulation in newborn infants measured by near infrared spectroscopy. Early Human Development. 1999;55:229–236. doi: 10.1016/s0378-3782(99)00019-5. [DOI] [PubMed] [Google Scholar]

- Sangrigoli S, de Schonen S. Effect of visual experience on face processing: a developmental study of inversion and non-native effects. Developmental Science. 2004;7:74–87. doi: 10.1111/j.1467-7687.2004.00324.x. [DOI] [PubMed] [Google Scholar]

- Schneider P, Scherg M, Dosch HG, Specht HJ, Gutschalk A, Rupp A. Morphology of Hesch's gyrus reflects enhanced activation in the auditory cortex of musicians. Nature Neuroscience. 2002;5:688–694. doi: 10.1038/nn871. [DOI] [PubMed] [Google Scholar]

- Schönwiesner M, Rübsamen R, von Cramon DY. Hemispheric asymmetry for spectral and temporal processing in the human antero-lateral auditory belt cortex. European Journal of Neuroscience. 2005;22:1521–1528. doi: 10.1111/j.1460-9568.2005.04315.x. [DOI] [PubMed] [Google Scholar]

- Shmuel A, Yacoub E, Pfeuffer J, Van de Moortele PF, Adriany G, Hu X, Ugurbil K. Sustained negative BOLD, blood flow and oxygen consumption response and its coupling to the positive response in the human brain. Neuron. 2002;36:1195–1210. doi: 10.1016/s0896-6273(02)01061-9.

- Smith AT, Williams AL, Singh KD. Negative BOLD in the visual cortex: evidence against blood stealing. Human Brain Mapping. 2004;21:213–220. doi: 10.1002/hbm.20017.

- Strangman G, Boas DA, Sutton JP. Non-invasive neuroimaging using near-infrared light. Biological Psychiatry. 2002;52:679–693. doi: 10.1016/s0006-3223(02)01550-0.

- Taga G, Asakawa K. Selectivity and localization of cortical response to auditory and visual stimulation in awake infants aged 2 to 4 months. NeuroImage. 2007;36:1246–1252. doi: 10.1016/j.neuroimage.2007.04.037.

- Trehub SE. The developmental origins of musicality. Nature Neuroscience. 2003;6:669–674. doi: 10.1038/nn1084.

- Trehub SE. In: The Origins of Music. Wallin NL, et al., editors. Cambridge, MA: MIT Press; 2000. pp. 427–448.

- Trehub SE. Musical predispositions in infancy. Annals of the New York Academy of Sciences. 2001;930:1–16. doi: 10.1111/j.1749-6632.2001.tb05721.x.

- Trehub SE, Endman M, Thorpe LA. Infants' perception of timbre: classification of complex tones by spectral structure. Journal of Experimental Child Psychology. 1990;49:300–313. doi: 10.1016/0022-0965(90)90060-l.

- Trehub SE, Thorpe LA. Infants' perception of rhythm: categorization of auditory sequences by temporal structure. Canadian Journal of Psychology. 1989;43:217–229. doi: 10.1037/h0084223.

- Weinberg A. In: Parameter Setting. Roeper T, Williams E, editors. D. Reidel Publishing Company; 1987. pp. 173–187.

- Werker JF, Tees RC. Cross-language speech perception: evidence for perceptual reorganization during the first year of life. Infant Behavior and Development. 1984;7:49–63.

- Zaramella P, Freato F, Amigoni A, Salvadori S, Marangoni P, Suppiej A, Schiavo B, Chiandetti L. Brain auditory activation measured by near-infrared spectroscopy (NIRS) in neonates. Pediatric Research. 2001;49:213–219. doi: 10.1203/00006450-200102000-00014.

- Zatorre R, Belin P, Penhune VB. Structure and function of auditory cortex: music and speech. Trends in Cognitive Sciences. 2002;6. doi: 10.1016/s1364-6613(00)01816-7.

- Zatorre R, Evans AC, Meyer E. Neural mechanisms underlying melodic perception and memory for pitch. Journal of Neuroscience. 1994;14:1908–1919. doi: 10.1523/JNEUROSCI.14-04-01908.1994.

- Zatorre R, Evans AC, Meyer E, Gjedde A. Lateralization of phonetic and pitch processing in speech perception. Science. 1992;256:846–849. doi: 10.1126/science.1589767.

- Zhang Y, Brooks DH, Franceschini MA, Boas DA. Eigenvector-based spatial filtering for reduction of physiological interference in diffuse optical imaging. Journal of Biomedical Optics. 2005;10:011014. doi: 10.1117/1.1852552.