Abstract

Introduction

This paper describes a method for automatic analysis of the choroid in OCT images of the eye fundus in ophthalmology. The problem of vascular lesions occurs e.g. in a large population of patients having diabetes or macular degeneration. Their correct diagnosis and quantitative assessment of the treatment progress are a critical part of the eye fundus diagnosis.

Material and method

The study analysed about 1’000 OCT images acquired using SOCT Copernicus (Optopol Tech. SA, Zawiercie, Poland). The proposed algorithm for image analysis enabled to analyse the texture of the choroid portion located beneath the RPE (Retinal Pigment Epithelium) layer. The analysis was performed using the profiled algorithm based on morphological analysis and texture analysis and a classifier in the form of decision trees.

Results

The location of the centres of gravity of individual objects present in the image beneath the RPE layer proved to be important in the evaluation of different types of images. In addition, the value of the standard deviation and the number of objects in a scene were equally important. These features enabled classification of three different forms of the choroid that were related to retinal pathology: diabetic edema (the classification gave accuracy ACC1 = 0.73), ischemia of the inner retinal layers (ACC2 = 0.83) and scarring fibro vascular tissue (ACC3 = 0.69). For the cut decision tree the results were as follows: ACC1 = 0.76, ACC2 = 0.81, ACC3 = 0.68.

Conclusions

The created decision tree enabled to obtain satisfactory results of the classification of three types of choroidal imaging. In addition, it was shown that for the assumed characteristics and the developed classifier, the location of B-scan does not significantly affect the results. The image analysis method for texture analysis presented in the paper confirmed its usefulness in choroid imaging. Currently the application is further studied in the Clinical Department of Ophthalmology in the District Railway Hospital in Katowice, Medical University of Silesia, Poland.

Keywords: Eye, Image processing, OCT, Texture analysis, Conditional erosion and dilation

Introduction

Choroid plays an essential role in many physico-chemical processes. The structure is important also for ciliary-retinal vessels (observed in minority of patients) originating from the choroid which supply the speckle field and protect against loss of central vision, for example in the case of central retinal artery (CRA) occlusion [1]. Visible choroidal vessels are found to a lesser extent in foveal avascular zone (FAZ). In the case of fluorescein angiography, it shows no presence of the fluorescence in that area (due to high amount of pigment). Conversely, any leakage of the pigment or FAZ staining indicates macular disease. Fluorescein diagnostic method is, in this case, profiled to carry out this type of diagnosis [2]. In practice, therefore, diagnosis of the eye fundus and choroidal layer using optical coherence tomography (OCT) also brings correct results [3,4]. The analysis of the vascular layer located beneath the RPE layer (retinal pigment ephitelium) in the OCT image of the eye is presented in a small number of publications [4-14]. They are mainly related to qualitative analysis of the choroidal layer without quantitative treatment of the characteristic distributions which occur there. Therefore, in this paper quantitative analysis of the choroidal layer using the new developed algorithm for image analysis and processing was proposed. Most of currently used OCT devices are not intended for choroidal imaging. The authors tried to obtain data resulting from the choroid reflectivity in a wide variety of patients. The algorithm was profiled to the analysis of the following types of images:

–neovascular AMD or exudations secondary to diabetic or thrombotic edema (specific layouts of shadows in the choroid caused by retinal changes) [5,6],

–diffuse macular edema without blood and exudations or ischemia of the inner retinal layers (global reduction of brightness in an OCT image) in such patients there is need to differentiate between the choroidal atrophy due to degeneration or high myopia [7],

–scarring fibrovascular tissue a uniform image proves its presence in most patients [7-9].

These types of images are presented in Table 1 they are subject to further analysis.

Table 1.

Types of images and their features visible in OCT images

| Symbol | Imaging type | Features visible in the image |

|---|---|---|

|

Z1 |

neovascular AMD or exudations secondary to diabetic or thrombotic edema |

characteristic layouts of shadows in the choroid caused by retinal changes |

|

Z2 |

diffuse macular edema without blood and exudations or ischemia of the inner retinal layers |

global reduction in brightness in the OCT image |

| Z3 | scarring fibrovascular tissue | uniform image is evidence of its presence |

Material

The study analysed about 1’000 OCT images acquired using SOCT Copernicus (Optopol Tech. SA, Zawiercie, Poland). The patients ranged in age from 12 to 78 years and had different types of choroidal structure. It was a group of patients routinely examined, analyzed retrospectively and anonymously. The routine tests were carried out in accordance with the Declaration of Helsinki. The images were acquired in DICOM or RAW format with a resolution of 256×1024 pixels at 8 bits per pixel. Image analysis was carried out in Matlab with Image Acquisition Toolbox and Signal Processing tools (version 4.0 and 7.1 respectively), whereas code optimization was carried out in the C language. The proposed algorithm for image analysis enabled to analyse the texture of the choroid portion located beneath the RPE (Retinal Pigment Epithelium) layer. The analysis of the choroid was performed using the new profiled algorithm based on texture analysis and mathematical morphology that is described below. The division into types of images (3 groups) was carried out by an ophthalmologist for 1′000 images representing learning, validation and test groups (proportion: 60%, 20%, 20% respectively). At this stage, patients with other type of images were also eliminated from further analysis - only images with visible alterations in the choroid were further analyzed.

Method

Preprocessing

The input image L GRAY with a resolution M×N = 256×1024 pixels (M – number of rows, N – number of columns of the image) was subjected to median filtering using a mask h sized M h ×N h = 3×3 pixels, thus obtaining the image L MED . The size of the filter mask h was chosen on the basis of medical evidence on the extent of artefacts found in this layer of the eye fundus OCT image and resolution of the image L GRAY . The images of successive stages of image pre-processing are shown in Figures 1 and 2. The image L MED is further analysed to detect the RPE layer y RPE ' (n) [3,15-17]. For this purpose, every n-th column of the image L MED is examined. The position of maximum brightness for each column is determined, i.e.:

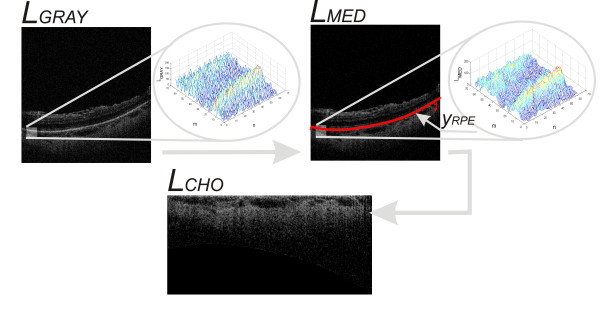

Figure 1.

The method for obtaining tomographic images of the fundus. For a sample 2D tomographic image, the RPE layer (retina pigment epithelium) and the choroid layer CHO are highlighted. Image analysis applies to the proposed algorithm which analyses the choroid layer using new methods of texture analysis and mathematical morphology. In each case, a flat two-dimensional input image is analysed, whose resolution (and that of the OCT apparatus) does not affect the obtained results.

Figure 2.

The course of image pre-processing. The subsequent steps of LGRAY image analysis: filtration with a median filter – LMED, determination of the RPE layer (retinal pigment epithelium) – yRPE(n), coordinate system conversion – LCHO. These steps are part of the image pre-processing which is necessary for further analysis of images.

| (1) |

where:

m,n – coordinates of rows and columns of the matrix m∈(1,M) and n∈(1,N).

The Equation (1) can be directly applied only if for all the analysed rows and successive columns, there is only one maximum value of brightness. In practice, it occurs in about 80% of the analysed cases at the resolution of 8 bits per pixel. The Equation (1) is also very sensitive to noise, especially in the case of single bright pixels – salt and pepper noise. This type of noise is sometimes not fully filtered during median filtering. For this reason, the Equation (1) was expanded to the following form (y RPE '' (n)):

| (2) |

| (3) |

where:

med - median

pr - coefficient determined in the range from 0 to1.

The value of the coefficient pr[18] is determined once for the Equation (2) and is 0.9 (it was determined arbitrarily). Depending on its selection (pr), the number of pixels that influence the calculation of the median is changed. An increase in the value of pr increases the number of pixels from which the median is calculated. Equations (2) and (3) by selecting pr enable correct calculations of the RPE layer individual points only if there is one cluster. In other cases, when there are two or more clusters, the calculations are more difficult. These are cases where the RPE layer is not the brightest layer for the analysed column. Such situations are very rare. The specificity of the Equations (2) and (3) enables to receive values equal to M (last row) for cases where none of the pixels is larger than maxm ∈ (1,M)LMED(m, n) * pr for the analysed column.

Based on the course of yRPE '' (n) (hereinafter abbreviated to yRPE), the image LRPE was created in the following way:

| (4) |

The values of “-1” added to the matrix L RPE enable to distinguish the area above the RPE layer from the pixels beneath the RPE with a value of “0”. The final element of image pre-processing is affine transformation of the image L RPE to the image L CHO containing only the interesting area of the choroid Figure 2. The image L CHO includes all the pixels of the image L RPE from the layer y RPE (n) to the last row (M). The remaining space in the matrix L RPE is filled with the values “-1”. Thus prepared image L CHO with a resolution M C ×N C is subjected to appropriate analysis and processing as described in the next sub-section.

Image processing

The input image L CHO shown in Figure 2 provides the basis for further processing. The specific properties of optical scanners as well as the object (eye) specificity contribute to the fact that brightness uniformity correction is necessary for further processing. For this purpose, the image L MEAN , which results from filtration with an averaging filter with a mask h 2 sized M h2 ×N h2 = 30×30 pixels, was subtracted from the image L CHO . As a result, the image L CHOM was obtained, i.e.:

| (5) |

for m∈(M h2 /2, M C -M h2 /2) and n∈(N h2 /2, N C -N h2 /2).

The size of the mask h 2 is closely related to the size of objects subjected to detection. In the case of the diseases listed in Table 1, the maximum size of objects is 20×20 pixels. Therefore, it was assumed that the size of the mask h 2 must be at least twice as large. This is necessary to carry out the removal of uneven brightness. Further processing steps are related to the detection of objects with the use of morphological operations. For these operations, it is necessary to define the binary image LBW. This image contains information on the location of pixels of the image LCHOM derived from the image LRPE i.e.:

| (6) |

The proper steps of image processing are related to sequential morphological analysis. Morphological opening operations were performed on the image L CHOM . The size of the structural element SE i was varied every 2 pixels in the range from 3×3 to 11×11 pixels (where the subscript i indicates the size, i.e.: SE 3 is a structural element sized 3×3 etc.). An opening operation was carried out for every i-th size of the symmetric structural element SE, i.e.:

| (7) |

These images (L Oi ) after normalization to the range 0 to 1 may be subjected to binarization at a constant threshold p r .

| (8) |

The value of the threshold p r is fixed at 0.5 due to the previously performed operations of removing uneven brightness and standardization. The images L Bi require adjustments related to the removal of small artefacts and holes in objects. This process was carried out using relationships of conditional erosion and dilation of the binary image L Bi . In the case of a symmetrical structural element SE i , relationships of conditional erosion and dilation [19] are simplified to the following form:

| (9) |

| (10) |

where:

LE(C)(m,n) – the resulting binary image after subjecting the image LBi to conditional erosion,

LD(C)(m,n) – the resulting binary image after subjecting the image LBi to conditional dilation,

pwe – constant erosion effectiveness,

pwd – constant dilation effectiveness,

pmn(m,n) – threshold dependent on the coordinates m, n,

sre – the mean value of the analysed area for erosion,

srd – the mean value of the analysed area for dilation.

The mean values sre, srd for erosion and dilation respectively were calculated from the following equations:

| (11) |

| (12) |

The constants p we and p wd determining the effectiveness of erosion and dilation respectively take the following values:

| (13) |

The choice of this range results from the condition of the left side of inequality, i.e.:

| (14) |

The values of p mn are in the range from 0 to 1, whereas the values of (1–p we ) should be non-negative in the range from 0 to 2. The values of (1–p we ) and (1 + p wd ) for p we = p wd = 0 are equal to 1, which means high intensity of conditional operations. For the other values of the thresholds p we and p wd , for example for p we = p wd = 1, there is a complete lack of effectiveness of erosion operations and significant effectiveness of dilation (the impact of selection of p we and p wd values is presented later in this section). However, very often p mn (m,n) = const, irrespective of the location (p mn ≠f(m,n)). Adopting p mn (m,n) = const is due to the nature of conditional operations, where in a general case a condition may not only be dependent on the mean values of s re and s rd , but also on other values of the pixel saturation degree. These special properties of conditional dilation and erosion enable to obtain effective correction of the quality of the input images L Bi . Sequential execution of conditional dilation and erosion (in this case, three times) allows to obtain corrected images L Ki (Figure 3). The shape of the structural element SEi adopted in all of these relationships was as a circle of a pre-specified size because of the shape of the recognized objects. For each image L Ki , characteristics were determined for each object. These features include:

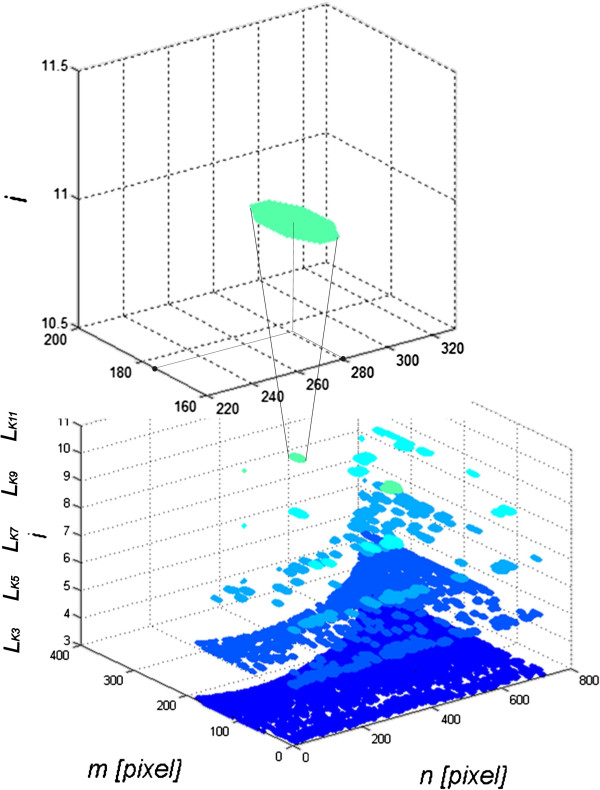

Figure 3.

Image analysis of the sequence of images LKi. Subsequent results are shown for i∈(3, 5, 7, 9, 11). For each image LKi and thus for each i the values of the features from w(1) to w(20) are calculated. For example, one of the objects whose coordinates of the centre of gravity are calculated is shown on the top of the zoom – in this case, they are (176, 281). The features w(6) to w(16) are the mean value of gravity centre coordinates of all objects in the image LKi.

w(1) to w(5) - number of objects in the image L Ki for i∈(3, 5, 7, 9, 11),

w(6) to w(10) - the average position of the centre of gravity in the x-axis for all the objects in the image L Ki for i∈(3, 5, 7, 3, 11),

w(11) to w(15) - the average position of the centre of gravity in the y-axis for all the objects in the image L Ki for i∈(3, 5, 7, 9, 11),

w(16) to w(20) - standard deviation of the mean brightness of pixels of all the objects in the image L Ki for i∈(3, 5, 7, 9, 11),

These features were selected taking into account medical conditions. They are related to the location of vascular lesions from w(6) to w(15), uniformity of brightness distribution within these changes from w(16) to w(20) and the area of changes for the appropriate number of objects from w(1) to w(5). These characteristics form the basis for building a classifier using decision trees (Figure 4 shows the block diagram of the algorithm).

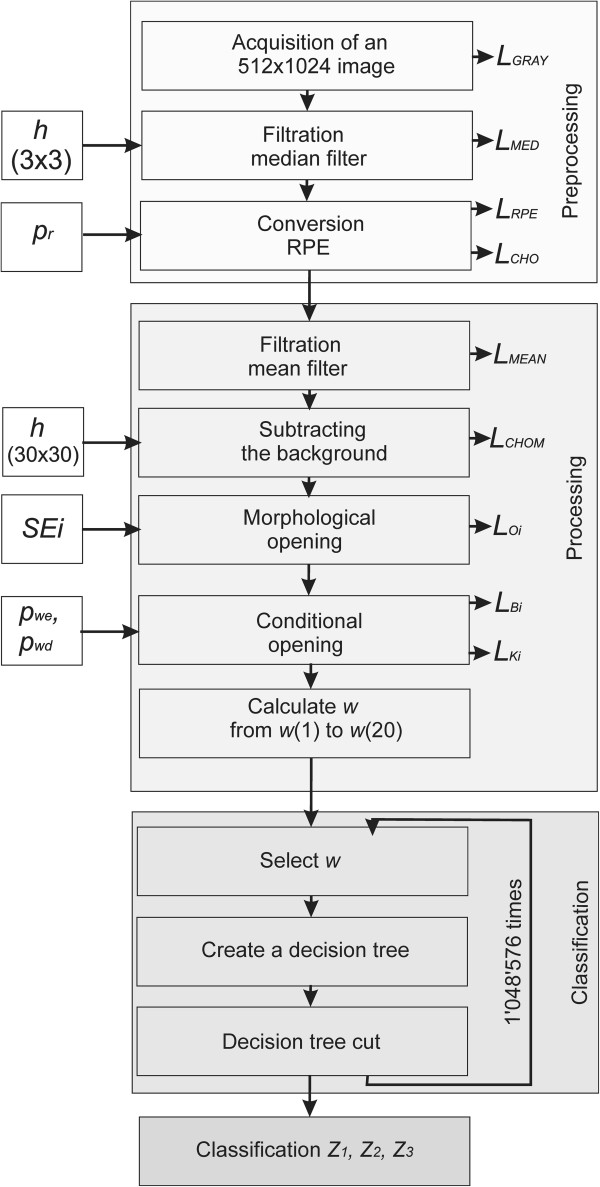

Figure 4.

The block diagram of the algorithm. The block diagram is divided into three main parts, namely image pre-processing using the known techniques of image analysis and processing, the principal analysis of images proposed by the authors and the classifier based on decision trees. The individual processing blocks are described in detail in the paper.

Results

The values of the 20 features obtained (four different types) are further used to build a decision tree. An ophthalmologist divided more than 1′000 images into three groups (Z 1 , Z 2 or Z 3 - Table 1). The division into groups Z 1 , Z 2 or Z 3 was performed manually by an ophthalmologist. As a result, the following frequency of occurrence of choroidal imaging was obtained: the group Z 1 included 23% of all cases, the group Z 2 included 44% of all cases, and the group Z 3 included 33% of all cases. This corresponds to 233, 436 and 331 images respectively. Whereas the division into learning, validation and test groups had the following proportions: 60%, 20% and 20% respectively. These groups were formed after rejecting the images with a mixed character of the changes observed - the type of the disease was not a criterion for exclusion here. A variety of overlapping diseases can be found in the excluded images. The cases of spatially invisible layer of choroid, images resulting from the errors in the acquisition or lacking a visible layer of RPE (for various reasons) were also excluded. Due to the fact that these are retrospective studies, the excluded images did not often cover the full range of the choroid, were deliberately obscured or distorted at the acquisition stage.

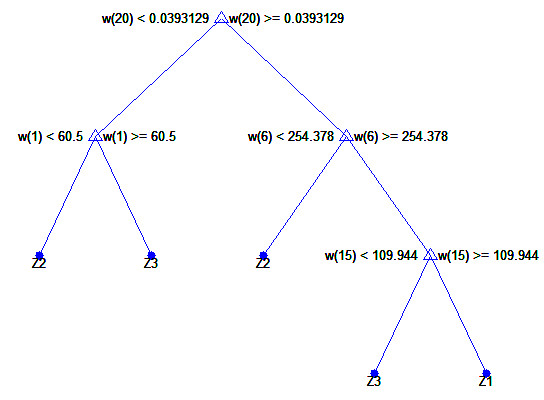

The nodes of the decision tree are different features from w(1) to w(20), the branches are the values corresponding to these attributes, and the leaves make individual decisions – type identification (Z 1 , Z 2 or Z 3 – Table 1). In all cases, a non-parametrical algorithm creating CART (Classification and Regression Trees) binary trees was used as the method for decision tree induction. An increase in the nodes purity was used as the criterion assessing the quality of CART divisions. The Gini index was used as the measure of nodes impurity. The tree creation was not limited by a minimum number of vectors in a node. As the considerations apply to the construction of a classifier based on the knowledge base, w(1) to w(20) features, a preliminary prepared tree Figure 5 was built based on the full information, using the training group. The results of the classification for the complete decision tree for the test group as follows:

Figure 5.

The complete classification tree. The decision tree was created for the classification of imaging types Z1, Z2, Z3 for the features w(1) to w(20). The characteristic elements such as the presence of the feature w(20) in the first node of the tree and successively features w(1), w(6) and w(15) for the other nodes are visible here. The results obtained on this basis are, for example, SPC2 = 0.56, TPR2 = 0.86, ACC2 = 0.70 for Z2.

–for Z1 – SPC1 = 0.74, TPR1 = 0.32, ACC1 = 0.64,

–for Z2 – SPC2 = 0.56, TPR2 = 0.86, ACC2 = 0.70,

–for Z3 – SPC3 = 0.88, TPR3 = 0.097, ACC3 = 0.63.

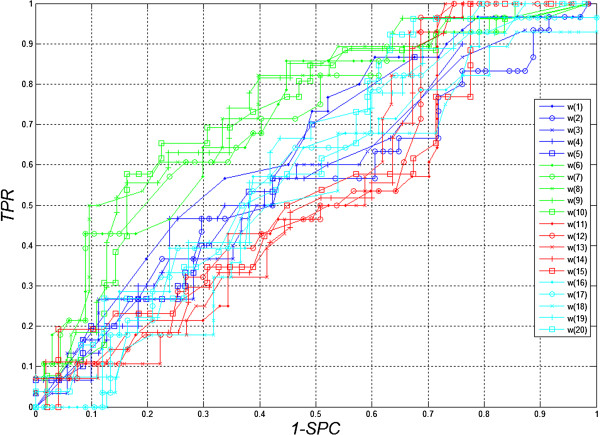

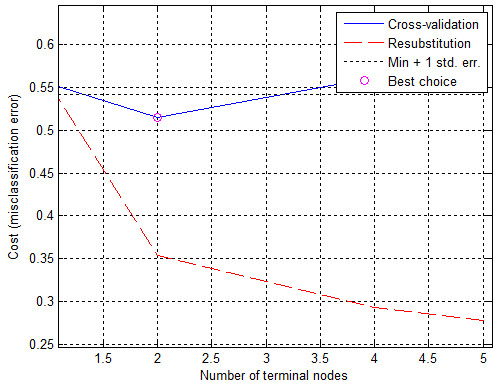

(where: SPC = TN/(FP + TN) – specificity, TPR = TP/(TP + FN) – sensitivity, TN – true negative, TP – true positive, FN – false negative, FN – false positive, subscripts “1”, “2” or “3” indicate the type of images). A closer analysis of the resulting decision tree enables, at this stage, rough assessment of the importance of individual features. For this form of the complete decision tree, there occur only features w(1), w(6), w(15) and w(20). Additional information is provided by the ROC graph (Receiver Operating Characteristic) designated for Z 1 , Z 2 , Z 3 to assess the impact of individual features from w(1) to w(20) separately. This graph (ROC) is shown in Figure 6. Based on the ROC graph and the complete decision tree, it can be concluded that there is a negligible impact of the features w(11) to w(20) on the obtained results. Additionally, the sensitivity of features to changes in the decision-making threshold value is the smallest for the feature w(11), and the highest for w(8) (Figure 6). For this reason, decision trees were created for each configuration of features, thereby forming 220 full decision trees (unpruned trees) and the same number of cut decision trees (over two million decision trees in total - 2′097′152). Figure 7 shows the graph of cost relationships (misclassification error) as a function of stratified cross-validation and resubstitution. The graph was also shown by computing a cutoff value that is equal to the minimum cost plus one standard error. The best level computed by the classregtree test method is the smallest tree under this cutoff (best level = 0 corresponds to the unpruned tree). The best results obtained for the complete decision tree are shown in Table 2. The best results of ACC 2 = 0.83 were obtained for the features w(1), w(2), w(3) and w(4).

Figure 6.

ROC Chart (Receiver Operating Characteristic). The graphs show the impact of threshold values for individual features w(1) to w(20) for the different imaging types Z1, Z2, Z3. A negligible impact of the features w(11) and w(20) on the obtained results can be inferred here. In addition, sensitivity of features to changes in decision-making threshold values is the smallest for the feature w(11) and the highest for w(8).

Figure 7.

The graph of the cost relationships (misclassification error) as a function of stratified cross-validation and resubstitution. The blue shows the cross-validation error. The best choice, the place of decision tree trimming is circled as the closest cut below the minimum error value plus a mean square error (min + 1 std. err).

Table 2.

The three top results obtained for different configurations of the features w(1) to w(20)* for the complete decision tree

| |

Z1 |

Z2 |

Z3 |

||||||

|---|---|---|---|---|---|---|---|---|---|

| w/N° | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 |

|

w(1) |

0 |

0 |

0 |

1 |

1 |

0 |

1 |

1 |

1 |

|

w(2) |

0 |

0 |

0 |

1 |

1 |

0 |

0 |

0 |

1 |

|

w(3) |

0 |

0 |

0 |

1 |

1 |

0 |

1 |

0 |

0 |

|

w(4) |

1 |

1 |

1 |

1 |

1 |

0 |

0 |

0 |

0 |

|

w(5) |

0 |

0 |

0 |

0 |

1 |

1 |

0 |

0 |

0 |

|

w(6) |

1 |

1 |

1 |

0 |

0 |

0 |

1 |

0 |

0 |

|

w(7) |

0 |

0 |

0 |

0 |

0 |

1 |

0 |

1 |

1 |

|

w(8) |

0 |

1 |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

|

w(9) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(10) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(11) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(12) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(13) |

0 |

0 |

1 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(14) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(15) |

1 |

1 |

1 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(16) |

0 |

0 |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

|

w(17) |

0 |

0 |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

|

w(18) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(19) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(20) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

| ACCi | 0.73 | 0.73 | 0.73 | 0.83 | 0.83 | 0.83 | 0.69 | 0.69 | 0.69 |

*The occurrence of a given feature w is indicated by “1” and its lack by “0”.

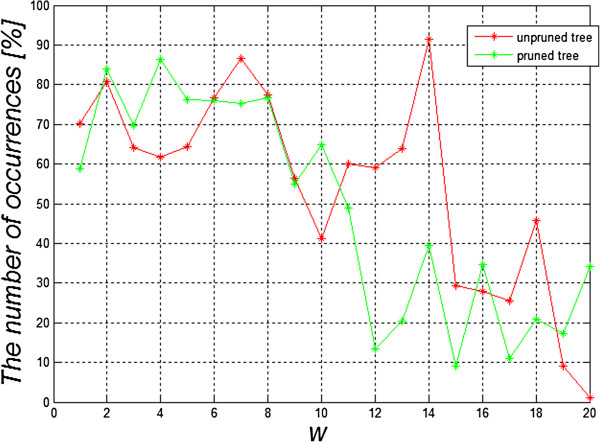

In the classification of groups Z 1 , Z 2 , Z 3 the features from w(9) to w(12) as well as from w(18) to w(20) did not occur. This means that their influence can be neglected for the best classifications (top 3 results). These features (from w(9) to w(12) as well as from w(18) to w(20)) define the average location of the centre of gravity for i = 9 and 11 in the x-axis, for i = 3, 5 in the y-axis and the standard deviation of the average brightness of pixels of all objects for i = 9 and 11. The same results of ACC2 were obtained for various configurations of the features w(1), w(2), w(3) and w(4) or w(5), w(7), w(8), w(16) and w(17), which is also quite interesting (Table 2). The best results for the cut decision tree are shown in Table 3. ACC 2 = 0.82, obtained for the features w(1), w(4) w(5), w(7) w(8), w(12) and w(15), is the best result for the cut decision tree. The results for the groups Z 1 , Z 2 , Z 3 confirm that the features from w(17) to w(20) have the smallest influence. The summary frequency chart of the occurrence of individual features from w(1) to w(20) for the cut and complete decision trees for the first 1′000 best results is interesting (Figure 8). It shows the greatest frequency of occurrence of the feature w(14) for the complete decision tree and the feature w(4) for the cut decision tree. From a practical standpoint, however, minimizing the number of features occurring in the classification seems to be the most vital. Therefore, when analysing only the three top results for the cut decision tree, one feature for Z 1 , six features Z 2 and one feature for Z 3 are obtained. This means that only the group Z 2 requires the largest number of features in the classification. This information is essential for optimizing the computational complexity of the algorithm. The analysis time for a single image does not exceed one second for the Pentium 4 CPU 3.0 GHz, 8GB RAM.

Table 3.

The three top results obtained for different configurations of the features w(1) to w(20) for the cut decision tree

| |

Z1 |

Z2

|

Z3 |

||||||

|---|---|---|---|---|---|---|---|---|---|

| w/N° | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 |

|

w(1) |

0 |

1 |

0 |

1 |

1 |

1 |

1 |

0 |

0 |

|

w(2) |

0 |

0 |

1 |

0 |

0 |

0 |

1 |

0 |

0 |

|

w(3) |

0 |

0 |

0 |

0 |

1 |

1 |

0 |

1 |

0 |

|

w(4) |

1 |

1 |

1 |

1 |

0 |

0 |

0 |

0 |

1 |

|

w(5) |

0 |

0 |

0 |

1 |

0 |

1 |

0 |

0 |

0 |

|

w(6) |

0 |

0 |

0 |

0 |

1 |

1 |

0 |

0 |

0 |

|

w(7) |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

0 |

0 |

|

w(8) |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

0 |

0 |

|

w(9) |

0 |

0 |

0 |

0 |

1 |

1 |

0 |

0 |

0 |

|

w(10) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(11) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(12) |

0 |

0 |

0 |

1 |

1 |

1 |

0 |

0 |

0 |

|

w(13) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(14) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(15) |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

0 |

0 |

|

w(16) |

0 |

0 |

0 |

1 |

1 |

1 |

0 |

0 |

0 |

|

w(17) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(18) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(19) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

w(20) |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

| ACCi | 0.76 | 0.76 | 0.76 | 0.82 | 0.81 | 0.81 | 0.68 | 0.68 | 0.68 |

Figure 8.

The graph of occurrence frequency of individual features w(1) to w(20) for the complete decision tree and the cut one for the first top 1′000 results. The graph shows that the highest occurrence concerns the feature w(14) for the complete decision tree and the feature w(4) for the cut decision tree. The occurrence frequency of the features corresponds with the results shown in Tables 2 and 3.

Comparison with other authors’ results

The analysis of the choroidal layer is dealt with in a number of publications [20-23]. In most cases, however, it is qualitative analysis. It includes the analysis of the choroidal layer thickness [20] or analysis with the use of Heidelberg Eye Explorer software [21]. In the first case, central serous chorioretinopathy case-series is presented. The following results were obtained – mean subfoveal choroidal thickness was significantly (significance level p sl = 0.04) larger in the affected eyes (455 ± 73 μm) than in the contralateral unaffected eyes (387 ± 94 μm), in which it was significantly (p sl = 0.005) larger than in the control group (289 ± 71 μm). The control group and the group of patients in this case [20] comprised 15 + 15 patients. The largest vessel diameter was significantly (p sl < 0.001, correlation coefficient: 0.73) correlated with the thickness of the total choroid. A similar small number of patients was analysed in [21]. The described software enables to measure some features of the choroid. However, this method is fully manual. Another interesting analysis is shown by Mrejen S. in [22]. The results obtained there concern the use of various imaging techniques in the diagnosis of the choroid. An automatic method for the analysis of the choroid is shown in the work of Park S.Y. [23]. The analysis presented there concerned only determination of the location of the selected layers in the tomographic image of the fundus. In addition, the correlation between the early treatment diabetic retinopathy study and EDI (enhanced depth imaging) was demonstrated. The automatically measured retinal thickness and volume of 9 early treatment diabetic retinopathy study subfields with conventional and EDI raster scan showed an intraclass correlation coefficient of 0.861 to 0.995 and 0.873 to 0.99 respectively. The number of analyzed cases was 35 patients with chorioretinal diseases and 20 healthy subjects. In this paper, the number of cases is much higher and the image analysis and processing are fully automatic. It seems that useful results can be obtained even with non-EDI SOCT. However, it should be further tested with other devices.

Summary

This paper shows a new fully automated method for the analysis of choroidal images. A division into three types of choroidal images was proposed. The created decision tree enabled to obtain satisfactory classification results. For example, for the classification of images Z 2 , the accuracy ACC 2 was 0.81 (pruned tree). Additionally, the features which have the greatest impact on the classification efficiency were characterized (creating more than 2 million decision trees). These are the characteristics from w(1) to w(9), w(12), w(15) and w(16) which are responsible for the number of objects in the scene and the average position of the centre of gravity in the x-axis for i = 3, 5, 7, 9 and the average position of the centre of gravity in the y-axis for i = 5, 11 and STD for i = 3. In addition, it was shown that for the adopted classifier of the cut decision tree, for the top 1′000 results the feature w(4) was most common. It was also noted that the different locations of scanning differentiate the images of the choroid but the classification result remains the same. Foveal location, however, has the greatest ability to differentiate changes. Individual B-scans located on the macula periphery are closer to each other. The proposed algorithm for image analysis and the classifier were implemented in the C language. Currently, the application is further studied in the Clinical Department of Ophthalmology in District Railway Hospital, Medical University of Silesia in Katowice, Poland.

The presented methodology of the procedure does not cover a large range of opportunities offered by modern methods of image analysis and processing. Currently, there is ongoing work on the optimization of the algorithm in terms of computational complexity. Moreover, its aim is to replace some of the time-consuming functions with some other more efficient ones. In particular, the possibility of applying different methods of texture analysis [23-31], Boolean function [32] or other methods of image analysis [33,34] or the impact of other factors [35-41] is considered. Other types of classifiers ensuring the analysis of other characteristics acquired from images are particularly taken into account.

Consent

Written informed consent was obtained from the patient for the publication of this report and any accompanying images.

Abbreviations

RPE: Retina pigment ephitelium; CRA: Central retinal artery; FAZ: Foveal avascular zone; ROC: Receiver operating characteristic; ACC: Accuracy; SPC: Specificity; TP: True positive; TN: True negative; FN: False negative; FP: False positive; EDI: Enhanced depth imaging.

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

RK suggested the algorithm for image analysis and processing, implemented it and analysed the images. ST, EW, ZW performed the acquisition of the OCT images and consulted the obtained results. All authors have read and approved the final manuscript.

Contributor Information

Robert Koprowski, Email: koprow@us.edu.pl.

Slawomir Teper, Email: slawomir.teper@gmail.com.

Zygmunt Wróbel, Email: zygmunt.wrobel@us.edu.pl.

Edward Wylegala, Email: wylegala@gmail.com.

Acknowledgements

No outside funding was received for this study.

References

- Kai-shun LC, Li H, Neal WR, Liu J, Yim LC, Yiu KLR, Pui PC, Shun CD. Anterior chamber angle measurement with anterior segment optical coherence tomography, a comparison between slit lamp OCT and visante OCT. IOVS. 2008;49(8):3469–3474. doi: 10.1167/iovs.07-1477. [DOI] [PubMed] [Google Scholar]

- Lehmann M, Wolff B, Vasseur V, Martinet V, Manasseh N, Sahel JA, Mauget-Faÿsse M. Retinal and choroidal changes observed with ‘En face’ enhanced-depth imaging OCT in central serous chorioretinopathy. Br J Ophthalmol. 2013;97(9):1181–1186. doi: 10.1136/bjophthalmol-2012-302974. [DOI] [PubMed] [Google Scholar]

- Koprowski R, Wróbel Z. Image Processing in Optical Coherence Tomography: Using Matlab. Katowice, Poland: University of Silesia; 2011. http://www.ncbi.nlm.nih.gov/books/NBK97169/ [PubMed] [Google Scholar]

- Warrow DJ, Hoang QV, Freund KB. Pachychoroid pigment epitheliopathy. Retina. 2013;33(8):1659–1672. doi: 10.1097/IAE.0b013e3182953df4. [DOI] [PubMed] [Google Scholar]

- Yang L, Jonas JB, Wei W. Optical coherence tomography-assisted enhanced depth imaging of central serous chorioretinopathy. Invest Ophthalmol Vis Sci. 2013;54(7):4659–4665. doi: 10.1167/iovs.12-10991. [DOI] [PubMed] [Google Scholar]

- Bousquet E, Beydoun T, Zhao M, Hassan L, Offret O, Behar-Cohen F. Mineralocorticoid receptor antagonism in the treatment of chronic central serous chorioretinopathy: a pilot study. Retina. 2013;33(10):2096–2102. doi: 10.1097/IAE.0b013e318297a07a. [DOI] [PubMed] [Google Scholar]

- Kim JH, Kang SW, Kim JR, Kim SJ. Variability of subfoveal choroidal thickness measurements in patients with age-related macular degeneration and central serous chorioretinopathy. Eye. 2013;27(7):809–815. doi: 10.1038/eye.2013.78. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Saito M, Saito W, Hashimoto Y, Yoshizawa C, Fujiya A, Noda K, Ishida S. Macular choroidal blood flow velocity decreases with regression of acute central serous chorioretinopathy. Br J Ophthalmol. 2013;97(6):775–780. doi: 10.1136/bjophthalmol-2012-302349. [DOI] [PubMed] [Google Scholar]

- Lim SH, Chang W, Sagong M. Efficacy of half-fluence photodynamic therapy depending on the degree of choroidal hyperpermeability in chronic central serous chorioretinopathy. Eye. 2013;27(3):353–362. doi: 10.1038/eye.2013.13. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Adhi M, Duker JS. Optical coherence tomography-current and future applications. Curr Opin Ophthalmology. 2013;24(3):213–221. doi: 10.1097/ICU.0b013e32835f8bf8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nicholson B, Noble J, Forooghian F, Meyerle C. Central serous chorioretinopathy: update on pathophysiology and treatment. Surv Ophthalmology. 2013;58(2):103–126. doi: 10.1016/j.survophthal.2012.07.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kang NH, Kim YT. Change in subfoveal choroidal thickness in central serous chorioretinopathy following spontaneous resolution and low-fluence photodynamic therapy. Eye. 2013;27(3):387–391. doi: 10.1038/eye.2012.273. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rahman W, Horgan N, Hungerford J. Circumscribed choroidal haemangioma mimicking chronic central serous chorioretinopathy. Fr J Ophthalmol. 2013;36(3):37–40. doi: 10.1016/j.jfo.2012.05.006. [DOI] [PubMed] [Google Scholar]

- Kuroda S, Ikuno Y, Yasuno Y, Nakai K, Usui S, Sawa M, Tsujikawa M, Gomi F, Nishida K. Choroidal thickness in central serous chorioretinopathy. Retina. 2013;33(2):302–308. doi: 10.1097/IAE.0b013e318263d11f. [DOI] [PubMed] [Google Scholar]

- Koprowski R, Wrobel Z. Identification of layers in a tomographic image of an eye based on the canny edge detection. Inf Technol Biomed Adv Intell Soft Comput. 2008;47:232–239. doi: 10.1007/978-3-540-68168-7_26. [DOI] [Google Scholar]

- Koprowski R, Teper S, Weglarz B. et al. Fully automatic algorithm for the analysis of vessels in the angiographic image of the eye fundus. Biomed Eng Online. 2012;11:35. doi: 10.1186/1475-925X-11-35. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Koprowski R, Wróbel Z. Layers recognition in tomographic eye image based on random contour analysis. Computer recognition systems 3. Adv Intell Soft Comput. 2009;57:471–478. doi: 10.1007/978-3-540-93905-4_56. [DOI] [Google Scholar]

- Otsu N. A threshold selection method from gray-level histograms. IEEE Trans. Sys., Man., Cyber. 1979;9(1):62–66. [Google Scholar]

- Koprowski R, Wróbel Z. Automatic segmentation of biological cell structures based on conditional opening and closing. Mach Graph Vis. 2005;14(3):285–307. [Google Scholar]

- Yang L, Jonas JB, Wei W. Choroidal vessel diameter in central serous chorioretinopathy. Acta Ophthalmol. 2013;91(5):358–362. doi: 10.1111/aos.12059. [DOI] [PubMed] [Google Scholar]

- Brandl C, Helbig H, Gamulescu MA. Int Ophthalmol. Springer Science + Business Media Dordrecht; 2013. Choroidal thickness measurements during central serous chorioretinopathy treatment. [DOI] [PubMed] [Google Scholar]

- Mrejen S, Spaide RF. Optical coherence tomography: Imaging of the choroid and beyond. Surv Ophthalmol. 2013;58(5):387–429. doi: 10.1016/j.survophthal.2012.12.001. [DOI] [PubMed] [Google Scholar]

- Park SY, Kim SM, Song YM, Sung J, Ham DI. Retinal thickness and volume measured with enhanced depth imaging optical coherence tomography. Am J Ophthalmol. 2013;156(3):557–566. doi: 10.1016/j.ajo.2013.04.027. [DOI] [PubMed] [Google Scholar]

- Kajić V, Povazay B, Hermann B, Hofer B, Marshall D, Rosin PL, Drexler W. Robust segmentation of intraretinal layers in the normal human fovea using a novel statistical model based on texture and shape analysis. Opt Express. 2010;18(14):14730–14744. doi: 10.1364/OE.18.014730. [DOI] [PubMed] [Google Scholar]

- Acharya UR, Dua S, Du X, Sree SV, Chua CK. Automated diagnosis of glaucoma using texture and higher order spectra features. IEEE Trans Inf Technol Biomed. 2011;15(3):449–455. doi: 10.1109/TITB.2011.2119322. [DOI] [PubMed] [Google Scholar]

- Liu YY, Chen M, Ishikawa H, Wollstein G, Schuman JS, Rehg JM. Automated macular pathology diagnosis in retinal OCT images using multi-scale spatial pyramid and local binary patterns in texture and shape encoding. Med Image Anal. 2011;15(5):748–759. doi: 10.1016/j.media.2011.06.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu YY, Ishikawa H, Chen M, Wollstein G, Duker JS, Fujimoto JG, Schuman JS, Rehg JM. Computerized macular pathology diagnosis in spectral domain optical coherence tomography scans based on multiscale texture and shape features. Invest Ophthalmol Vis Sci. 2011;52(11):8316–8322. doi: 10.1167/iovs.10-7012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lindenmaier AA, Conroy L, Farhat G, DaCosta RS, Flueraru C, Vitkin IA. Texture analysis of optical coherence tomography speckle for characterizing biological tissues in vivo. Opt Lett. 2013;38(8):1280–1282. doi: 10.1364/OL.38.001280. [DOI] [PubMed] [Google Scholar]

- Quellec G, Lee K, Dolejsi M, Garvin MK, Abràmoff MD, Sonka M. Three-dimensional analysis of retinal layer texture: identification of fluid-filled regions in SD-OCT of the macula. IEEE Trans Med Imaging. 2010;29(6):1321–1330. doi: 10.1109/TMI.2010.2047023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lingley-Papadopoulos CA, Loew MH, Zara JM. Wavelet analysis enables system-independent texture analysis of optical coherence tomography images. J Biomed Opt. 2009;14(4):044010. doi: 10.1117/1.3171943. [DOI] [PubMed] [Google Scholar]

- Gossage KW, Smith CM, Kanter EM, Hariri LP, Stone AL, Rodriguez JJ, Williams SK, Barton JK. Texture analysis of speckle in optical coherence tomography images of tissue phantoms. Phys Med Biol. 2006;51(6):1563–75. doi: 10.1088/0031-9155/51/6/014. [DOI] [PubMed] [Google Scholar]

- Porwik P. Efficient spectral method of identification of linear Boolean function. Control Cybern. 2004;33(4):663–678. [Google Scholar]

- Sonka M, Michael Fitzpatrick J. Medical Image Processing and Analysis. Belligham: SPIE; 2000. (Handbook of Medical Imaging). [Google Scholar]

- Siedlecki D, Nowak J, Zajac M. Placement of a crystalline lens and intraocular lens: retinal image quality. J Biomed Opt. 2006;11(5):054012. doi: 10.1117/1.2358959. [DOI] [PubMed] [Google Scholar]

- Castro A, Siedlecki D, Borja D. et al. Age-dependent variation of the gradient index profile in human crystalline lenses. J Mod Opt. 2011;58(19–20):1781–1787. doi: 10.1080/09500340.2011.565888. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Korzynska A, Hoppe A, Strojny W, Proceedings of the Second IASTED International Conference on Biomedical Engineering. Anaheim, Calif. Calgary, Zurich: ACTA Press; 2004. Investigation of a Combined Texture and Contour Method for Segmentation of Light Microscopy Cell Images; pp. 234–239. [Google Scholar]

- Korus P, Dziech A. Efficient method for content reconstruction with self-embedding. IEEE Trans Image Process. 2013;22(3):1134–1147. doi: 10.1109/TIP.2012.2227769. [DOI] [PubMed] [Google Scholar]

- Tadeusiewicz R, Ogiela MR. Automatic understanding of medical images new achievements in syntactic analysis of selected medical images. Biocybern Biomed Eng. 2002;22(4):17–29. [Google Scholar]

- Tadeusiewicz R, Ogiela MR. Why automatic understanding. Adapt Nat Comput Algorithms Lect Notes Comput Sci. 2007;4432(II):477–491. [Google Scholar]

- Korzynska A, Iwanowski M. Multistage morphological segmentation of bright-field and fluorescent microscopy images. Opt-Electron Rev. 2012;20(2):87–99. [Google Scholar]

- Ortiz S, Siedlecki D, Remon L. et al. Three-dimensional ray tracing on Delaunay-based reconstructed surfaces. Appl Opt. 2009;48(20):3886–3893. doi: 10.1364/AO.48.003886. [DOI] [PubMed] [Google Scholar]