Abstract

Research has demonstrated that infants recognize emotional expressions of adults in the first half-year of life. We extended this research to a new domain, infant perception of the expressions of other infants. In an intermodal matching procedure, 3.5- and 5-month-old infants heard a series of infant vocal expressions (positive and negative affect) along with side-by-side dynamic videos in which one infant conveyed positive facial affect and another infant conveyed negative facial affect. Results demonstrated that 5-month-olds matched the vocal expressions with the affectively congruent facial expressions, whereas 3.5-month-olds showed no evidence of matching. These findings indicate that by 5 months of age, infants detect, discriminate, and match the facial and vocal affective displays of other infants. Further, because the facial and vocal expressions were portrayed by different infants and shared no face-voice synchrony, temporal or intensity patterning, matching was likely based on detection of a more general affective valence common to the face and voice.

Keywords: Affect Recognition, Intersensory Perception, Emotion Perception, Intermodal Matching, Invariant Detection

Perception of emotional expressions is fundamental to social development. Facial and vocal expressions of emotion convey communicative intent, provide a basis for fostering shared experience, are central to the development of emotion regulation, and guide infant exploratory behavior (Gross, 1998; Saarni, Campos, Camras, & Witherington, 2006; Walker-Andrews, 1997). Within the first half year of life, infants are sensitive to emotional information in facial and vocal expressions (Field, Woodson, Greenberg, & Cohen, 1982; Flom & Bahrick, 2007; Walker-Andrews, 1997) and in the prosodic contours of speech (Fernald, 1985, 1989; Papousek, Bornstein, Nuzzo, Papousek, & Symmes, 1990). Much research has focused on infant discrimination of adult emotional expressions (see Walker-Andrews, 1997; Witherington, Campos, Harriger, Bryan, & Margett, 2010, for reviews), particularly for static faces. By 4 months of age, infants can discriminate among static faces depicting happy, sad, and fearful expressions (Barrera & Maurer, 1981; Field, Woodson, Greenberg, & Cohen, 1982; Field, Woodson, Cohen, Greenberg, Garcia, & Collins, 1983; La Barbera, Izard, Vietze, & Parisi, 1976). For example, La Barbera and colleagues (1976) found that 4- to 6-month-olds discriminated pictures of joyful, angry, and neutral facial expressions and preferred to look at joyful expressions. Between 5 and 7 months of age, infants discriminate between a wider range of static facial expressions including happiness, fear, anger, surprise, and sadness, and can generalize across expressions of varying intensity and across different examples of an expression performed by either the same or different individuals (Bornstein & Arterberry, 2003; Caron, Caron, & MacLean, 1988; Ludemann & Nelson, 1988; Nelson & Dolgin, 1985; Nelson, Morse, & Leavitt, 1979; Serrano, Iglesias, & Loeches, 1992).

Infants also discriminate among different vocal emotional expressions portrayed by adults. Research on perception of infant-directed speech demonstrates that infants are sensitive to changes in intonation or prosody that typically convey emotion. Infant-directed speech is characterized by higher pitch frequency, longer pauses, and exaggerated intonation as compared with adult-directed speech and has been referred to as musical speech (Fernald, 1985; Trainor, Clark, Huntley, & Adams, 1997). Infants of 4 to 5 months respond differentially to infant-directed speech conveying approval versus prohibition (Bahrick, Shuman, & Castellanos, 2004; Fernald, 1993; Papousek, Bornstein, Nuzzo, Papousek, & Symmes, 1990). By 5 months, infants can also discriminate happy versus sad or angry vocal expressions when accompanied by a static affective facial expression (Walker-Andrews & Lennon, 1991; Walker-Andrews & Grolnick, 1983) or a static affectively neutral expression (Flom & Bahrick, 2007) but not when accompanied by a checkerboard. Thus, infants discriminate a variety of vocal emotional expressions in adults and perception is enhanced in the context of a face.

Although most research on infant emotion perception has been conducted using static facial expressions, naturalistic expressions of emotion typically involve dynamic and synchronous faces and voices. Studies employing these more ecological affective events suggest that emotion perception emerges earliest in the context of dynamic, temporally coordinated facial and vocal expressions (Flom & Bahrick, 2007; Montague & Walker-Andrews, 2001; see Walker-Andrews, 1997). Flom and Bahrick (2007) found that 4-month-olds discriminated happy, angry, and sad expressions in synchronous audiovisual speech. However, infants did not discriminate these expressions in unimodal auditory stimulation (static face with accompanying speech) until 5 months of age, or in unimodal visual stimulation (dynamic face speaking silently) until 7 months of age. Studies investigating face-voice matching also indicate that matching emerges between 3.5 and 7 months of age and is based on infant detection of invariant audiovisual information including the temporal and intensity changes common across facial and vocal expressions of emotion (Kahana-Kalman & Walker-Andrews, 2001; Walker, 1982; Walker-Andrews, 1986). Matching occurs earliest for familiar faces. For example, 3.5-month-olds matched the facial and vocal expressions of their mothers but not those of an unfamiliar woman (Kahana-Kalman & Walker-Andrews, 2001). Infants even match facial and vocal expressions in the absence of visible features, using facial motion depicted by point light displays (Soken & Pick, 1992). These studies indicate that infants detect invariant temporal and intensity patterning specifying affect common across faces and voices and highlight the important role of dynamic audiovisual information in the emergence of emotion perception.

Although a variety of studies have examined infants’ perception of adult facial expressions, there is little if any research examining infants’ perception of the emotional expressions of other infants (but see reports of contagious crying in neonates, Sagi & Hoffman, 1976; Simner, 1971). However, infants of 3- to 5-months have demonstrated discrimination and matching of dynamic information depicted by their peers in other domains, including that of self/other discrimination. For example, by 3-months, infants discriminate their own face from that of another infant in dynamic videos, and look more to the face of the peer (Bahrick, Moss, & Fadil, 1996; Field, 1979; Legerstee, Anderson, & Schaffer, 1998). By 5-months, infants discriminate between a live video feedback of their own body motion and that of another infant and prefer to watch the noncontingent peer motion (Bahrick & Watson, 1985; Collins & Moore, 2008; Moore, 2006; Papousek & Papousek, 1974; Rochat & Striano, 2002; Schmuckler, 1996; Schmuckler & Fairhall, 2001; Schmuckler & Jewell, 2007), demonstrating sensitivity to invariant proprioceptive-visual relations specifying self motion.

Given that infants of 3- to 5-months distinguish between the self and other infants on the basis of facial features as well as temporal information specifying self-motion, infants of this same age range may also detect and perceive information specifying affect conveyed by their peers. The emotional expressions of peers are likely to be similar to the infant’s own emotional expressions in terms of the nature of the vocalizations, facial movements, and temporal and intensity patterning over time. This similarity may promote somewhat earlier detection of emotion in peer than in adult expressions (see Meltzoff, 2007). Further, most studies of infant affect matching have focused on matching specific facial and vocal expressions, and it is not yet known when infants can detect more general affective information specifying positive versus negative emotional valences.

The present study assessed 3.5- and 5-month-olds’ discrimination and matching of facial and vocal emotional expressions of other infants on the basis of their general affective valence. It extends prior research on infant perception of emotional expressions from the domain of adult expressions to the domain of infant expressions. Infant matching of facial and vocal affective expressions of happiness/joy versus anger/frustration in other same-aged infants was assessed using a modified intermodal matching procedure (Bahrick, 1983; 1988). Because the videos and soundtracks of emotional expressions were created from different infants, the audible and visible expressions did not share precise temporal or intensity patterning over time. Rather, they shared more general temporal and intensity information typical of positive versus negative affect. Thus, the present task differs from prior tasks involving matching specific audible and visible emotional expressions (that are temporally congruent) in that it required categorization or generalization across different examples of positive and negative emotional visual and vocal expressions. Given that infants match audible and visible emotional expressions of unfamiliar adults by 5- to 7-months of age, and those of highly familiar adults (i.e., their mothers) by 3.5-months of age (Kahana-Kalman & Walker-Andrews, 2001; Walker-Andrews, 1997), we expected infants would match the facial and vocal expressions of other infants by the age of 5-months. However, it was not known whether infants of 3.5-months would detect the affective information in the present task.

Method

Participants

Twenty 3.5-month-olds (12 females, 8 males; M=108 days, SD=3.8) and 20 5-month-olds (13 females, 7 males; M=149 days, SD=6.23) participated. The data from 19 additional infants were excluded due to experimenter error/equipment failure (n = 3 at 3.5-months; n = 3 at 5-months), excessive fussiness (n = 2 at 3.5-months), falling asleep (n = 1 at 3.5-months), or failure to look at both stimulus events (n = 7 at 3.5-months; n = 3 at 5-months). All infants were full-term with no complications during delivery. Eighty-eight percent were Hispanic, 8% were African-American, 2% were Caucasian, and 2% were Asian-American.

Stimulus Events

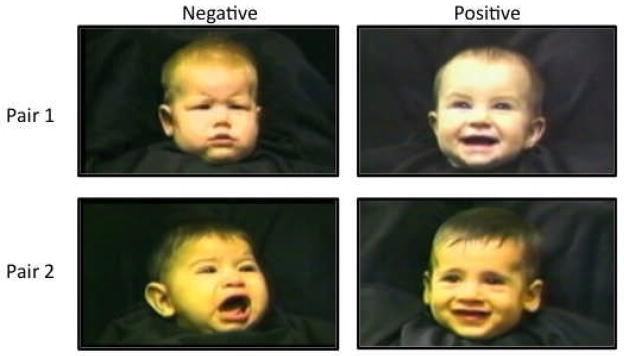

Four dynamic video recordings (see Figure 1) and four audio recordings of infants conveying positive and negative emotional expressions were created from videos of eight infants between the ages of 7.5 and 8.5 months who had participated in a previous study designed to elicit positive and negative affect. The video recordings, taken while infants watched a toy moving in front of them, consisted of their natural vocalizations and facial expressions. Infants wore a black smock and were filmed against a black background. Video recordings of eight infants who were particularly expressive were chosen from a larger set of 30 infants. The two best examples of audio and of video recordings depicting positive emotions (i.e., happiness/joy) and the two best examples of audio and of video recordings conveying negative emotions (i.e., frustration/anger) were selected from eight different infants. Stimuli were approximately 10 s long and were looped.

Figure 1.

Photos of stimulus events

Because each vocalization and facial expression came from a different infant, all films and soundtracks were asynchronous. Moreover, because each infant’s expression was idiosyncratic and was characterized by a unique temporal and intensity pattern conveying happiness/joy or frustration/anger, any evidence of infants matching the facial and vocal expressions was considered to be based on global affective information (i.e., positive vs. negative affect) common across the faces and voices rather than on lower-level temporal features or patterns.

Apparatus

Infants, seated in a standard infant seat, were positioned 102 cm in front of two side-by-side 48 cm video monitors (Panasonic BT-S1900N) that were surrounded by black curtains. A small aperture located above each monitor allowed observers to view infants’ visual fixations. The dynamic facial expressions were presented using a Panasonic edit controller (VHS NV-A500) connected to two Panasonic video decks (AG 6300 and AG 7750). Infant vocalizations were presented from a speaker located between the two video monitors.

A trained observer, unaware of the lateral positions of the video displays and unable to see the video monitors, recorded the infant’s visual fixations. The observer depressed and held one of two buttons on a button box corresponding to infant looking durations to each of the monitors.

Procedure

Infants at each age were randomly assigned to receive one of two pairs of faces. In each pair, one infant conveyed a positive facial expression and the other infant conveyed a negative facial expression (see Figure 1). Infants were tested in a modified intermodal matching procedure (see Bahrick, 1983; 1988 for details). A trial began when the infant was looking toward the monitors. At the start of each trial, infants heard the positive or negative vocalization for 3–4 s and then the two affective videos appeared side-by-side for 15 s. The vocal expressions continued to play throughout the 15 s trial. A total of 12 trials were presented in two blocks of 6 trials. Affective vocalizations were presented in one of two random orders within each block such that there were 3 positive and 3 negative vocalizations. The lateral positions of the affective facial displays were counterbalanced across subjects and within subjects from one trial block to the next.

Infants’ proportion of total looking time (PTLT; the number of seconds looking to the affectively matched facial display divided by the total number of seconds looking to both displays) and proportion of first looks (PFL; the number of first looks to the affectively matched facial display divided by the total number of first looks to each display across trials) to the affectively matched facial expression served as the dependent variables. They provide complementary measures, with PTLT assessing looking time and PFL assessing the frequency of first looks to the matching display (see Bahrick, 2002; Flom, Whipple, & Hyde, 2009). To ensure infants had seen both videos, they were required to have a minimum of 6 trials (at least 3 trials per block) during which they spent at least 5% of their total looking time fixating the least preferred of the two video displays. A second observer recorded visual fixations for five of the infants (25% of the sample), and these records were used for calculating interobserver reliability. The Pearson correlation between the two observers was r =.96.
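The two dependent measures and the inclusion criterion described above can be illustrated with a minimal sketch. The `Trial` record and every function and field name below are hypothetical and are not taken from the original study. The sketch assumes that PTLT is pooled across trials, that the 5% criterion is applied trial by trial, and that every trial contains a first look; the text does not specify these details.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Trial:
    """Looking data for one 15-s trial (all field names are hypothetical)."""
    block: int                 # 1 or 2
    match_secs: float          # seconds fixating the affectively matched face
    nonmatch_secs: float       # seconds fixating the other face
    first_look_to_match: bool  # True if the first look went to the matched face


def ptlt(trials: List[Trial]) -> float:
    """Seconds on the matched display divided by seconds on both displays."""
    match = sum(t.match_secs for t in trials)
    total = sum(t.match_secs + t.nonmatch_secs for t in trials)
    return match / total


def pfl(trials: List[Trial]) -> float:
    """First looks to the matched display divided by all first looks."""
    return sum(t.first_look_to_match for t in trials) / len(trials)


def is_valid_trial(t: Trial, min_share: float = 0.05) -> bool:
    """Assumed reading: the less-watched display received >= 5% of looking."""
    total = t.match_secs + t.nonmatch_secs
    return total > 0 and min(t.match_secs, t.nonmatch_secs) / total >= min_share


def meets_inclusion(trials: List[Trial], min_trials: int = 6,
                    min_per_block: int = 3) -> bool:
    """At least 6 valid trials overall and at least 3 in each block."""
    valid = [t for t in trials if is_valid_trial(t)]
    per_block = [sum(1 for t in valid if t.block == b) for b in (1, 2)]
    return len(valid) >= min_trials and all(n >= min_per_block for n in per_block)


# Hypothetical infant with six trials (three per block); not real data.
example = [
    Trial(1, 6.0, 4.0, True), Trial(1, 3.0, 7.0, False), Trial(1, 9.0, 1.0, True),
    Trial(2, 5.0, 5.0, True), Trial(2, 4.0, 6.0, False), Trial(2, 8.0, 2.0, True),
]
```

Calling `ptlt(example)` and `pfl(example)` returns one score per infant on each measure; block-level scores follow from passing only the trials of a single block.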

Results

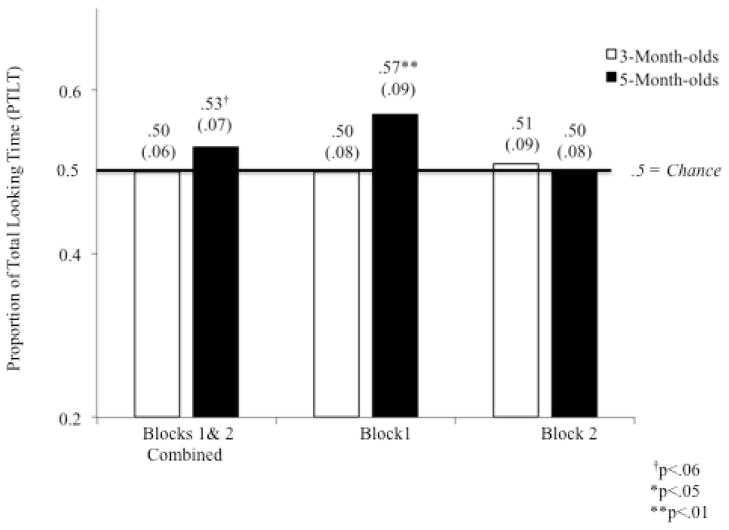

We examined whether 3.5- and 5-month-olds matched an affective vocal expression of one infant to an affectively similar facial expression of another infant. PTLTs to the affectively matched facial expression were compared against the chance value of .50 using single sample t-tests (two-tailed; see Figure 2). Results revealed that 5-month-olds reliably matched a peer’s affective vocal and facial expressions during Block 1 (M=.57, SD=.09), t (19)=3.25, p=.004, Cohen’s d=1.5, but not during Block 2 (M=.50, SD=.08), p>.1. Across Blocks 1 and 2 combined, 5-month-olds’ PTLT to the matching expression was marginally significant (M=.53, SD=.07), t (19)=2.02, p=.058, Cohen’s d=.93. In contrast, 3.5-month-olds’ PTLT to the matching expressions failed to reach significance during any block (Block 1, t (19)=.30, p>.1; Block 2, t (19)=.47, p>.1; and Blocks 1 and 2 combined, t (19)=.12, p>.1). To compare performance across age and block, a repeated measures analysis of variance (ANOVA) with Trial Block (Blocks 1 and 2) as the repeated measure and Age (3.5- and 5-months) as the between subjects factor was performed on PTLT to the matching facial expression. Results revealed a significant interaction of Age by Trial Block, F (1,38) = 6.63, p = .014, partial eta squared = .15, and no main effects. Specifically, 5-month-olds’ PTLT to the matching face (M=.57, SD=.09) was significantly greater than that of 3.5-month-olds (M=.50, SD=.08) during Block 1, but not during Block 2 or Blocks 1 and 2 combined (both ps>.1).
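The comparison of PTLT scores against chance can be sketched as follows. This is an illustration only, using the Python standard library; the function names are hypothetical, and the effect size shown (mean difference divided by the sample SD) is one common convention that is assumed here, since the text does not state which formula was used for the reported Cohen’s d values.

```python
import math
import statistics
from typing import List, Tuple


def one_sample_t(scores: List[float], chance: float = 0.50) -> Tuple[float, int]:
    """Return (t, df) for the null hypothesis that the mean equals chance."""
    n = len(scores)
    mean = statistics.fmean(scores)
    sd = statistics.stdev(scores)  # sample SD (n - 1 in the denominator)
    t = (mean - chance) / (sd / math.sqrt(n))
    return t, n - 1


def effect_size(scores: List[float], chance: float = 0.50) -> float:
    """Assumed convention: (mean - chance) / sample SD."""
    return (statistics.fmean(scores) - chance) / statistics.stdev(scores)


# Hypothetical PTLT scores, one per infant; not data from the study.
ptlt_scores = [0.40, 0.50, 0.60, 0.70]
t_value, df = one_sample_t(ptlt_scores)
```

A two-tailed p value would then be obtained from the t distribution with `df` degrees of freedom, for example with `scipy.stats.ttest_1samp(ptlt_scores, 0.50)` if SciPy is available.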

Figure 2.

Infants’ mean proportion of total looking time (PTLT) to the affectively matched facial displays as a function of age

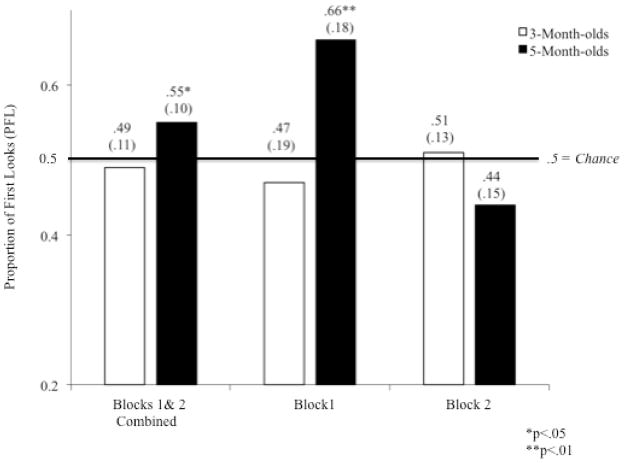

We also examined PFLs to the affectively matched facial display. Analyses and results mirrored those of the PTLTs. Single sample t-tests against .50 (i.e., chance) revealed that a significant proportion of 5-month-olds’ first looks were directed toward the facial expression that matched the vocal expressions during Block 1 (M=.66, SD=.18), t (19)=3.9, p=.001, Cohen’s d=.88. PFLs were also significant for Blocks 1 and 2 combined (M=.55, SD=.10), t (19)=2.1, p=.05, Cohen’s d=.50, but not for Block 2 alone (M=.44, SD=.15), t (19)=1.9, p=.07. At 3.5-months of age, infants showed no evidence of matching on any block (all ps>.1).

We also conducted a repeated measures ANOVA on PFLs to assess differences between age and trial block, with Trial Block (Blocks 1 and 2) as the repeated measure and Age (3.5- and 5-months) as the between subjects factor. Results revealed a significant interaction of Age by Trial Block, F (1,38) = 11.6, p = .002, partial eta squared = .24. The PFLs of 5-month-olds were greater than those of 3.5-month-olds during Block 1, t (18)=3.6, p=.002, Cohen’s d=.82, but not during Block 2, t (18)=1.4, p>.1. Results also revealed a main effect of Trial Block, F (1,37) = 4.7, p = .04, partial eta squared = .11, where PFLs for Block 1 (M=.56, SD=.21) were greater than those for Block 2 (M=.47, SD=.14). Finally, the effect of Age did not reach significance, F (1,37) = 2.8, p>.10.
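For a design with one two-level between subjects factor (Age) and one two-level repeated measure (Trial Block), the interaction F equals the square of an independent-samples t computed on each infant’s Block 1 minus Block 2 difference score, with N − 2 error degrees of freedom. The sketch below illustrates that equivalence with the standard library only; the function name and the data are hypothetical, and it is not a reconstruction of the analysis software used in the study.

```python
import math
import statistics
from typing import List, Tuple

Pair = Tuple[float, float]  # (Block 1 score, Block 2 score) for one infant


def interaction_f(group_a: List[Pair], group_b: List[Pair]) -> Tuple[float, int]:
    """Return (F, error df) for the Age x Trial Block interaction."""
    # Each infant contributes one Block 1 minus Block 2 difference score.
    diff_a = [b1 - b2 for b1, b2 in group_a]
    diff_b = [b1 - b2 for b1, b2 in group_b]
    n_a, n_b = len(diff_a), len(diff_b)
    # Pooled variance of the difference scores across the two age groups.
    pooled = ((n_a - 1) * statistics.variance(diff_a)
              + (n_b - 1) * statistics.variance(diff_b)) / (n_a + n_b - 2)
    se = math.sqrt(pooled * (1 / n_a + 1 / n_b))
    t = (statistics.fmean(diff_a) - statistics.fmean(diff_b)) / se
    return t * t, n_a + n_b - 2


# Hypothetical scores for two small groups; not data from the study.
older = [(0.6, 0.5), (0.7, 0.5), (0.5, 0.5)]
younger = [(0.5, 0.5), (0.5, 0.6), (0.6, 0.5)]
f_value, df_error = interaction_f(older, younger)
```

With 20 infants per age group, this approach yields 38 error degrees of freedom for the interaction term.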

We also examined whether infants at each age reliably matched both the positive expressions and the negative expressions. Because positive expressions may be somewhat more attractive to infants than negative expressions, we used a “difference score” approach (see Walker-Andrews, 1986). Specifically, we examined the difference in the infant’s PTLT to the face with the positive expression when they heard the positive vocal expression minus their PTLT to the face with the positive expression when they heard the negative vocal expression for Blocks 1 and 2 combined. The advantage of this approach is that it statistically controls for infants’ preference for a particular facial expression. The identical difference score analysis was also performed for PTLTs to the negative face. Difference scores were compared against the chance value of 0.0 using single sample t-tests. Results indicated that 5-month-olds increased their looking to both positive and negative faces during Blocks 1 and 2 combined (M=.06, SD=.11), t (19)=2.57, p=.02, Cohen’s d=1.3, when accompanied by the matching vocal expression, but 3.5-month-olds did not (ps>.1). Thus, even when taking into account infants’ preference for a particular facial expression, these results converge with those of the primary analyses and indicate affect matching of infant facial and vocal expressions at 5- but not 3.5-months of age.
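The difference score described above can be written out as a short sketch. The `Trial` record, its field names, and the pooling of looking time across trials are assumptions made for illustration and are not details taken from the study.

```python
from typing import List, NamedTuple


class Trial(NamedTuple):
    """One trial (hypothetical field names)."""
    voice: str            # "positive" or "negative" vocalization heard
    secs_positive: float  # seconds looking at the positive face
    secs_negative: float  # seconds looking at the negative face


def ptlt_to(face: str, trials: List[Trial]) -> float:
    """Proportion of total looking time directed to the named face."""
    target = sum(getattr(t, f"secs_{face}") for t in trials)
    total = sum(t.secs_positive + t.secs_negative for t in trials)
    return target / total


def difference_score(trials: List[Trial], face: str) -> float:
    """PTLT to `face` with the congruent voice minus with the incongruent voice."""
    congruent = [t for t in trials if t.voice == face]
    incongruent = [t for t in trials if t.voice != face]
    return ptlt_to(face, congruent) - ptlt_to(face, incongruent)


# Hypothetical infant: more looking at the positive face with the positive voice.
trials = [Trial("positive", 6.0, 4.0), Trial("negative", 5.0, 5.0)]
positive_diff = difference_score(trials, "positive")
negative_diff = difference_score(trials, "negative")
```

A score above 0 indicates more looking to a face when it is paired with the congruent vocalization. Because looking to the two faces sums to the total in every trial, the positive-face and negative-face scores coincide under the pooling assumed in this sketch.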

Secondary analyses also revealed that the overall amount of time 5-month-olds spent looking toward the visual displays (M=127s, SD=25s) did not reliably differ from that of the 3.5-month-olds (M=129s, SD=16s), p>.1. No differences were observed at either age, or in any trial block, as a function of face-voice pairing or the order of counterbalancing (all ps>.1). Finally, 3.5- and 5-month-olds also failed to show a preference for the left or right monitor during any trial block (all ps>.1).

Together, these results demonstrate that 5-month-olds, but not 3.5-month-olds, match an affective vocal expression of one infant to an affectively similar facial expression of another infant. Five-month-olds also showed reliable matching for both the positive and negative affective expressions, and their matching exceeded that of the 3.5-month-olds.

Discussion

The present findings demonstrate that by 5-months of age, infants are able to match the facial and vocal emotional expressions of other infants. Infants of 5- but not 3.5-months of age showed evidence of matching affect invariant across facial and vocal expressions according to three measures. Five-month-olds showed 1) a significant proportion of total looking time and 2) a significant proportion of first looks to the dynamic facial expression that matched the vocal expression heard across blocks 1 and 2 combined (12 trials) as well as during block 1 alone (first 6 trials). 3) They also showed a significantly greater preference for both the positive and the negative facial expressions across all trials when each was paired with the affectively congruent vocal expression than when each was paired with the incongruent vocal expression. Matching for 5-month-olds was primarily evident during the first block of trials. While it is not known why infants showed little evidence of matching during block 2 (e.g., boredom, fatigue, attention to other event properties, etc.), a number of prior studies have also shown stronger matching in the early portion of the procedure (e.g., Schmuckler & Jewell, 2007; Soken & Pick, 1992; Walker, 1982). Together, these results provide clear evidence that 5-month-olds abstract invariant properties common to facial and vocal expressions of happiness/joy and anger/frustration in other infants.

Because the facial and vocal expressions were portrayed by different infants and thus did not share specific temporal and intensity patterning, matching was likely based on detection of a more global affective valence invariant across the facial and vocal expressions rather than on face-voice synchrony or on any other temporal or intensity pattern information specific to particular facial and vocal displays. Vocal expressions of happiness/joy, for example, are typically characterized by a raised second vocal formant when the speaker is smiling (Tartter & Braun, 1994) as well as faster and louder speech in adults (Bowling, Gill, Choi, Prinz, & Purves, 2010). Negative facial expressions (such as sadness, anger or frustration) frequently affect the shape of the face, vocal tract, and source of articulation (e.g., Erickson, Yoshida, Menezes, Fujino, Mochida, & Shibuya, 2006; Ekman & Friesen, 1971) resulting in higher fundamental frequencies but more tense speech with shorter pauses (see Scherer, 1986; Juslin & Laukka, 2003, for reviews). Thus, expressions of happiness may depict softer, slower, and/or more gradual changes in face and voice over time whereas expressions of anger may depict louder, more rapid changes. These temporal and intensity patterns are invariant across face and voice and may specify different emotional valences which can be detected only in dynamic, moving expressions (Flom & Bahrick, 2007; Juslin & Laukka, 2003; Stern, 1999; Walker-Andrews, 1986) where changes in intensity and temporal patterns are evident. The present findings are the first to show that 5-month-olds match the faces and voices of other infants on the basis of global invariant properties common to facial and vocal emotional expressions.

Infants’ intermodal matching of facial and vocal affective information in unfamiliar peers appears to emerge somewhat earlier (by 5-months but not 3.5-months of age) than their matching of facial and vocal affective information in unfamiliar adults (by 7-months but not 5-months of age; Walker, 1982; Walker-Andrews, 1986). One plausible explanation for matching is that infants’ familiarity with the self, their own affective expressions, and the affective expressions of familiar adults may provide a foundation for infants’ perception of others (see Emde, 1983; Kahana-Kalman & Walker-Andrews, 2001; Moore, 2006). For example, 3- to 5-month-olds have experience with their mirror images (Bahrick, Moss, & Fadil, 1996), are adept at detecting visual-proprioceptive relations generated by feedback from live video transmissions of their own motion (Bahrick & Watson, 1985; Rochat, 2002; Rochat & Striano, 2002), and show imitation of adult facial expressions (Butterworth, 1999; Meltzoff, 2002; Rochat, 2002). Thus, information about their own facial movements may be relatively familiar and accessible and, in part, serve as a basis for matching the facial and vocal displays of peers. In the present study, affective matching was evident at 5- but not at 3.5-months of age. The lack of significance for 3.5-month-olds may be due to a combination of task difficulty and amount of experience accrued with affective displays. Three-month-olds have less experience differentiating emotional expressions than 5-month-olds, showing matching only for expressions of familiar but not unfamiliar adults (Kahana-Kalman & Walker-Andrews, 2001). Further, given that the facial and vocal expressions were portrayed by different infants and required matching on the basis of a general affective valence (rather than matching temporal properties specific to a given expression for a given individual), the task may be somewhat difficult relative to others in the literature.

Taken together, the present findings indicate that perception and matching of facial and vocal emotional expressions of other infants emerge between 3.5- and 5-months of age. By 5-months, infants are able to match the vocal expression of one infant with the facial expression of another infant when they share a common emotional valence (happy/joyous vs. angry/frustrated). These findings highlight the important role of dynamic, audiovisual information in the perception of emotion and demonstrate that 5-month-old infants perceive the general affective valence uniting facial and vocal emotional expressions of other infants.

Figure 3.

Infants’ mean proportion of first looks (PFL) to the affectively matched facial displays as a function of age

Acknowledgments

This research was supported by NICHD grants R01 HD053776, R01 HD25669, and K02 HD064943 and NIMH grant R01 MH62226, awarded to the second author. A portion of these data were presented at the Society for Research in Child Development, Tampa, FL, April, 2003. We gratefully acknowledge Katryna Anasagasti Carrasco, Melissa Argumosa, and Laura Batista-Teran for their assistance in data collection.

Contributor Information

Mariana Vaillant-Molina, Florida International University.

Lorraine E. Bahrick, Florida International University.

Ross Flom, Brigham Young University.

References

- Bahrick LE. Infants’ perception of substance and temporal synchrony in multimodal events. Infant Behavior and Development. 1983;6:429–451.

- Bahrick LE. Intermodal learning in infancy: Learning of the basis of two kinds of invariant relations in audible and visible events. Child Development. 1988;59:197–209.

- Bahrick LE. Generalization of learning in three-and-a-half-month-old infants on the basis of amodal relations. Child Development. 2002;73:667–681. doi: 10.1111/1467-8624.00431.

- Bahrick LE, Moss L, Fadil C. Development of visual self-recognition in infancy. Ecological Psychology. 1996;8:189–208.

- Bahrick LE, Shuman M, Castellanos I. Intersensory redundancy facilitates infants’ perception of meaning in speech passages. International Conference on Infant Studies; Chicago, IL. May 2004.

- Bahrick LE, Watson JS. Detection of intermodal proprioceptive-visual contingency as a potential basis of self-perception in infancy. Developmental Psychology. 1985;21:963–973.

- Barrera ME, Maurer D. The perception of facial expressions by the three-month-old. Child Development. 1981;52:203–206.

- Bornstein MH, Arterberry ME. Recognition, discrimination and categorization of smiling by five-month-old infants. Developmental Science. 2003;6:585–599.

- Bowling DL, Gill K, Choi JD, Prinz J, Purves D. Major and minor music compared to excited and subdued speech. Journal of the Acoustical Society of America. 2010;127:491–503. doi: 10.1121/1.3268504.

- Butterworth G. Neonatal imitation: Existence, mechanisms and motives. In: Nadel J, Butterworth G, editors. Imitation in Infancy: Cambridge Studies in Cognitive and Perceptual Development. New York: Cambridge University Press; 1999. pp. 63–88.

- Caron AJ, Caron RE, MacLean DJ. Infant discrimination of naturalistic emotional expressions: The role of face and voice. Child Development. 1988;59:604–616.

- Collins S, Moore C. The temporal parameters of visual proprioceptive perception in infancy. Paper presented at The International Conference for Infant Studies; Vancouver, BC. March 2008.

- Ekman P, Friesen W. Constants across cultures in the face and emotion. Journal of Personality and Social Psychology. 1971;17:124–129. doi: 10.1037/h0030377.

- Emde RN. The prerepresentational self and its affective core. The Psychoanalytic Study of the Child. 1983;38:165–192. doi: 10.1080/00797308.1983.11823388.

- Erickson D, Yoshida K, Menezes C, Fujino A, Mochida T, Shibuya Y. Exploratory study of some acoustic and articulatory characteristics of sad speech. Phonetica. 2006;63:1–25. doi: 10.1159/000091404.

- Fernald A. Four-month-old infants prefer to listen to motherese. Infant Behavior and Development. 1985;8:181–195. [Google Scholar]

- Fernald A. Intonation and communicative intent in mothers’ speech to infants: Is the melody the message? Child Development. 1989;60:1497–1510. [PubMed] [Google Scholar]

- Fernald A. Approval and disapproval: Infant responsiveness to vocal affect in familiar and unfamiliar languages. Child Development. 1993;64:657–674. [PubMed] [Google Scholar]

- Field TM. Differential behavioral and cardiac responses of 3-month-old infants to a mirror and peer. Infant Behavior and Development. 1979;2:179–184. [Google Scholar]

- Field TM, Woodson R, Cohen D, Greenberg R, Garcia R, Collins K. Discrimination and imitation of facial expressions by term and preterm infants. Infant Behavior and Development. 1983;7:19–26. [Google Scholar]

- Field TM, Woodson R, Greenberg R, Cohen D. Discrimination and imitation of facial expressions by neonates. Science. 1982;218:179–181. doi: 10.1126/science.7123230. [DOI] [PubMed] [Google Scholar]

- Flom R, Bahrick LE. The development of infant discrimination of affect in multimodal and unimodal stimulation: The role of intersensory redundancy. Developmental Psychology. 2007;43:238–252. doi: 10.1037/0012-1649.43.1.238. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Flom R, Whipple H, Hyde D. Infants’ intermodal perception of canine Canis familairis) faces and vocalizations. Developmental Psychology. 2009;45:1143–1151. doi: 10.1037/a0015367. [DOI] [PubMed] [Google Scholar]

- Gross JJ. The emerging field of emotion regulation: An integrative review. Review of General Psychology. 1998;2:271–299. [Google Scholar]

- Juslin PN, Laukka P. Communication of emotions in vocal expression and music performance: Different channels, same code? Psychological Bulletin. 2003;129:770–814. doi: 10.1037/0033-2909.129.5.770. [DOI] [PubMed] [Google Scholar]

- Kahana-Kalman R, Walker-Andrews AS. The role of person familiarity in young infants’ perception of emotional expressions. Child Development. 2001;72:352–362. doi: 10.1111/1467-8624.00283. [DOI] [PubMed] [Google Scholar]

- La Barbera JD, Izard CE, Vietze P, Parisi SA. Four- and six-month-old infants’ visual responses to joy, anger, and neutral expressions. Child Development. 1976;47:535–538. [PubMed] [Google Scholar]

- Legerstee M, Anderson D, Schaffer A. Five- and eight-month-old infants recognize their faces and voices as familiar and social stimuli. Child Development. 1998;69:37–50. [PubMed] [Google Scholar]

- Ludemann P, Nelson C. Categorical representation of facial expressions by 7-month-old infants. Developmental Psychology. 1988;24:492–501.

- Meltzoff AN. Elements of a developmental theory of imitation. In: Meltzoff AN, Prinz W, editors. The imitative mind: Development, evolution, and brain bases; Cambridge Studies in Cognitive and Perceptual Development. New York: Cambridge University Press; 2002. pp. 19–41.

- Meltzoff AN. ‘Like me’: A foundation for social cognition. Developmental Science. 2007;10:126–134. doi: 10.1111/j.1467-7687.2007.00574.x.

- Montague DPF, Walker-Andrews AS. Peekaboo: A new look at infants’ perception of emotion expressions. Developmental Psychology. 2001;37:826–838.

- Moore C. The development of commonsense psychology. Mahwah, NJ: Lawrence Erlbaum Associates; 2006. pp. 75–79, 121–125.

- Nelson C, Dolgin K. The generalized discrimination of facial expressions by seven-month-old infants. Child Development. 1985;56:58–61.

- Nelson CA, Morse PA, Leavitt LA. Recognition of facial expressions by seven-month-old infants. Child Development. 1979;50:1239–1242.

- Papousek H, Papousek M. Mirror-image and self recognition in young human infants: A new method of experimental analysis. Developmental Psychobiology. 1974;7:149–157. doi: 10.1002/dev.420070208.

- Papousek M, Bornstein MH, Nuzzo C, Papousek H, Symmes D. Infant responses to prototypical melodic contours in parental speech. Infant Behavior and Development. 1990;13:539–545.

- Rochat P. Ego function of early imitation. In: Meltzoff AN, Prinz W, editors. The imitative mind: Development, evolution, and brain bases; Cambridge Studies in Cognitive and Perceptual Development. New York: Cambridge University Press; 2002. pp. 85–97.

- Rochat P, Striano T. Who is in the mirror: Self-other discrimination in specular images by 4- and 9-month-old infants. Child Development. 2002;73:35–46. doi: 10.1111/1467-8624.00390.

- Saarni C, Campos JJ, Camras LA, Witherington D. Emotional development: Action, communication, and understanding. In: Damon W, Lerner RL, Eisenberg N, editors. Handbook of Child Psychology, Vol. 3. Social, Emotional and Personality Development. 6th ed. New York: Wiley; 2006. pp. 226–299.

- Sagi A, Hoffman ML. Empathic distress in the newborn. Developmental Psychology. 1976;12:175–176.

- Scherer KR. Vocal affect expression: A review and a model for future research. Psychological Bulletin. 1986;99:143–165.

- Schmuckler MA. Visual-proprioceptive intermodal perception in infancy. Infant Behavior and Development. 1996;19:221–232.

- Schmuckler MA, Fairhall JL. Visual-proprioceptive intermodal perception using point light displays. Child Development. 2001;72:949–962. doi: 10.1111/1467-8624.00327.

- Schmuckler MA, Jewell DT. Infants’ intermodal perception with imperfect contingency information. Developmental Psychobiology. 2007;49:387–398. doi: 10.1002/dev.20214.

- Serrano JM, Iglesias J, Loeches A. Visual discrimination and recognition of facial expressions of anger, fear, and surprise in four- to six-month-old infants. Developmental Psychobiology. 1992;25:411–425. doi: 10.1002/dev.420250603.

- Simner ML. Newborn’s response to the cry of another infant. Developmental Psychology. 1971;5:136–150.

- Soken NH, Pick AD. Intermodal perception of happy and angry expressive behaviors by seven-month-old infants. Child Development. 1992;63:787–795.

- Stern DN. Vitality contours: The temporal contour of feelings as a basic unit for constructing the infant’s social experience. In: Rochat P, editor. Early social cognition: Understanding others in the first months of life. New Jersey: Lawrence; 1999. pp. 67–80.

- Tartter V, Braun D. Hearing smiles and frowns in normal and whisper registers. Journal of the Acoustical Society of America. 1994;96:2101–2107. doi: 10.1121/1.410151.

- Trainor LJ, Clark ED, Huntley A, Adams B. The acoustic basis of infant preferences for infant-directed singing. Infant Behavior and Development. 1997;20:383–396.

- Walker AS. Intermodal perception of expressive behaviors by human infants. Journal of Experimental Child Psychology. 1982;33:514–535. doi: 10.1016/0022-0965(82)90063-7.

- Walker-Andrews AS. Intermodal perception of expressive behaviors: Relation of eye and voice? Developmental Psychology. 1986;22:373–377.

- Walker-Andrews AS. Infants’ perception of expressive behaviors: Differentiation of multimodal information. Psychological Bulletin. 1997;121:437–456. doi: 10.1037/0033-2909.121.3.437.

- Walker-Andrews AS, Grolnick W. Discrimination of vocal expression by young infants. Infant Behavior and Development. 1983;6:491–498.

- Walker-Andrews AS, Lennon E. Infants’ discrimination of vocal expressions: Contributions of auditory and visual information. Infant Behavior and Development. 1991;14:131–142.

- Witherington DC, Campos JJ, Harriger JA, Bryan C, Margett TE. Emotion and its development in infancy. In: Bremner JG, Wachs TD, editors. Handbook of Infant Development. Part 3. Vol. 1. New York: Wiley; 2010.