Abstract

The present review article summarizes and expands upon the discussions that were initiated during a meeting of the Cognitive Neuroscience Treatment Research to Improve Cognition in Schizophrenia (CNTRICS; http://cntrics.ucdavis.edu). A major goal of the CNTRICS meeting was to identify experimental procedures and measures that can be used in laboratory animals to assess psychological constructs that are related to the psychopathology of schizophrenia. The issues discussed in this review reflect the deliberations of the Motivation Working Group of the CNTRICS meeting, which included most of the authors of this article as well as additional participants. After receiving task nominations from the general research community, this working group was asked to identify experimental procedures in laboratory animals that can assess aspects of reinforcement learning and motivation that may be relevant for research on the negative symptoms of schizophrenia, as well as other disorders characterized by deficits in reinforcement learning and motivation. The tasks described here that assess reinforcement learning are the Autoshaping Task, Probabilistic Reward Learning Tasks, and the Response Bias Probabilistic Reward Task. The tasks described here that assess motivation are Outcome Devaluation and Contingency Degradation Tasks and Effort-Based Tasks. In addition to describing such methods and procedures, the present article provides a working vocabulary for research and theory in this field, as well as an industry perspective about how such tasks may be used in drug discovery. It is hoped that this review can aid investigators who are conducting research in this complex area, promote translational studies by highlighting shared research goals and fostering a common vocabulary across basic and clinical fields, and facilitate the development of medications for the treatment of symptoms mediated by reinforcement learning and motivational deficits.

Keywords: reinforcement, reward, motivation, learning, cognition

This review manuscript is a result of discussions initiated during a meeting of the Cognitive Neuroscience Treatment Research to Improve Cognition in Schizophrenia (CNTRICS; http://cntrics.ucdavis.edu/; accessed August 19, 2013) and discussions that continued among the co-authors of this manuscript. The purpose of this CNTRICS meeting was to identify experimental procedures and measures that can be used in laboratory animals to assess psychological constructs with relevance to the psychopathology of schizophrenia. The Motivation Working Group of this CNTRICS meeting, which included most of the authors of this article as well as additional participants, was asked to identify experimental procedures in laboratory animals that assess reinforcement learning and motivation, two constructs that are highly relevant to the negative symptoms cluster of schizophrenia (Andreasen, 1982). Tasks nominated by the scientific community were discussed, evaluated, and selected by the Motivation Working Group of CNTRICS based on: (i) relevance to the assessment of reinforcement learning and/or motivation; (ii) the extent of prior work establishing the construct validity of these tests (i.e., their ability to measure what they are purported to measure); and (iii) translational potential. The motivation group agreed to focus on tasks that assess the most fundamental processes involved in Reinforcement Learning and Motivation.

The objectives of this review article are to provide descriptions of methods that can be used to study these constructs in experimental animals, review how these procedures are implemented, and discuss how the results are interpreted. In addition, the validity of the measures provided by these procedures and what is known about the neural substrates of the processes involved in performing these tasks are discussed. The tasks discussed here are those that were nominated to the Motivation Working Group of CNTRICS and that, after detailed discussions, were selected as assessing processes and constructs relevant to the negative symptoms of schizophrenia. This is not necessarily an exhaustive list, and it is recognized that additional tasks exist or can be designed that can assess various aspects of the negative symptoms of schizophrenia. For example, although motivation research includes studies of both aversive and appetitive motivation, the present review focuses on appetitive learning only. Furthermore, we highlight tasks that assess fundamental processes in a relatively simple fashion. Additional, more complex tasks may also prove useful and could be highly relevant for modeling the complexity of everyday life challenges faced by patients. Nevertheless, the assessment of basic reinforcement learning and motivational processes will facilitate the analysis of the neuropathological changes in these processes that lead to the negative symptoms. Finally, this review article provides a pharmaceutical industry perspective about how such experimental procedures may be applied to drug discovery efforts for the treatment of the negative symptoms of schizophrenia.

It should be emphasized that the constructs discussed here have relevance to multiple neuropsychiatric disorders characterized by alterations in reinforcement learning and motivational functions, such as major depression, neurodegenerative diseases (e.g., Parkinsonism, Alzheimer’s disease), dementias, and ageing, in addition to having relevance to the negative symptoms of schizophrenia (Salamone et al., 2007; Der-Avakian and Markou, 2012).

Reinforcement Learning was defined by CNTRICS as “Acquired behavior as a function of both positive and negative reinforcers, including the ability to: (a) associate previously neutral stimuli with value, as in Pavlovian conditioning; (b) rapidly modify behavior as a function of changing reinforcement contingencies; and (c) slowly integrate over multiple reinforcement experiences to determine behaviors that are optimal in the long run despite environmental uncertainty”. Motivation was defined during the discussion as those processes that modulate the direction and activation (i.e., initiation, persistence, speed, or exertion of effort) of behavior in relation to significant external and internal stimuli. As revealed by the above definitions, both reinforcement learning and motivation are multifaceted processes (Salamone, 2007; Ward et al., 2012).

Several procedures, including an Autoshaping Task, Probabilistic Reward Learning, and the Response Bias Probabilistic Reward Task, are described here as procedures to assess Reinforcement Learning. Although motivation is involved in the execution of almost all experimental procedures, Effort-Based Tasks and the Outcome Devaluation and Contingency Degradation Tasks provide the opportunity to parse various components of motivation from each other.

Clinical and Preclinical Terminology

Much clinical and preclinical research has examined reinforcement learning and motivational processes in healthy humans, patients, and laboratory animals. The clinical and preclinical literature indicates that there are several different terms used to describe experimental findings in animals and humans. Some of these terms are not defined in a consistent or clear manner. Moreover, little effort has been invested in linking the preclinical and clinical realms, and therefore, terminology varies widely. These discrepancies in terminology have led to some confusion and a lack of successful communication. Thus, we attempt here to briefly relate the various terms used by clinicians, clinical researchers, and animal researchers in the hopes of enhancing the translational value of work on reward and motivation to both preclinical and clinical researchers concerned with these domains of function.

Clinical Terms

The most frequently used term to describe reduced behavioral activation/output in the clinical literature is fatigue, understood as encompassing mental or central fatigue in addition to physical or motor exhaustion (Chaudhuri and Behan, 2004). This term is used for many indications, including schizophrenia, depression, and muscular and neurodegenerative disorders, despite the lack of a strict and precise definition. Although it is used more frequently in other domains (e.g., Parkinson’s disease; cf. Friedman et al., 2010), the term fatigue is not used in scales common in schizophrenia research (Andreasen, 1981; Kay et al., 1987).

A frequently used term in the clinical realm, and sometimes in preclinical work as well, is apathy. This term is most frequently used to refer to motivational deficits in schizophrenia, dysthymia, depression, stroke, progressive supranuclear palsy, and neurodegeneration, particularly in Huntington’s disease (Ishizaki and Mimura, 2011; van Reekum et al., 2005). Apathy is defined as “diminished goal-oriented behavior and cognition, and a diminished emotional connection to goal-directed behavior” (Marin, 1991); thus, this term describes a type of motivational dysfunction relevant to the focus of this review (Clarke et al., 2011; Oakeshott et al., 2012).

Anhedonia, a term coined by the French psychologist Ribot (Ribot, 1896), is also used in both clinical and preclinical domains. Anhedonia refers to the reduced experience of, or inability to experience, pleasure during reward delivery. The term anhedonia has at times been used to refer not only to the experience but also to the pursuit of pleasure, leading to confusion in the literature. This extension of the term anhedonia to both of these aspects of reinforced behavior (i.e., both the experience of pleasure and the pursuit of rewards) is undesirable because it conflates two different psychological processes that are mediated by dissociable, albeit overlapping, neural circuits (Berridge and Robinson, 1998; Der-Avakian and Markou, 2012). Here, we restrict our use of the term anhedonia to the emotional reaction to reward as it is being experienced.

Avolition, understood as a reduction of the ability to initiate and maintain goal-directed behavior, is a term used in schizophrenia research and diagnosis as part of the negative symptom cluster. Indeed, the Scale for the Assessment of Negative Symptoms (SANS), one of the most commonly used scales in the clinic, considers an avolition-apathy domain as distinct from the other negative symptom clusters, namely anhedonia, alogia, affective flattening, asociality, and attentional impairment (Andreasen, 1981, 1982). The Positive and Negative Syndrome Scale (PANSS; Kay et al., 1987), another widely used scale, uses the term apathy in the context of social withdrawal, which is included in the negative symptom cluster, and “disturbance of volition,” which is included in a general pathology subscale. In the current paper, we restrict our definition of avolition to the processes that modulate the initiation and maintenance of goal-directed behavior.

Anergia, which is defined as “lack of perceived energy,” is used to describe the lack of physical activity without ascribing such inactivity to any particular process, although it is included in the avolition/apathy cluster in the SANS (Andreasen and Olsen, 1982). Psychomotor retardation tends to connote a slowing or reduction of motor activity in general, although it is also used to reflect a reduction of the speed of processing incoming stimuli, resulting in a slow motor response. It is difficult to empirically separate its two components, the motor and the mental slowness (bradyphrenia). Motor retardation appears in the PANSS as part of the general pathology scale (Kay et al., 1987). These clinical terms correspond most closely to what we call the activational aspects of motivation later in this paper.

Preclinical Terms and Their Relationship to the Clinical Terms

Terminology regarding reinforcement learning does not appear to differ between the preclinical and clinical domains, even though reinforcement learning has many aspects. However, the term motivation presents more challenges because motivation is the result of many subprocesses. Motivated behavior takes place in phases, and one distinction seen in the literature is between the sequences of behaviors that bring the experimental animal subject into physical proximity with the goal object (e.g., the reward or reinforcer) or increase the chances that the goal object will be delivered (anticipatory, appetitive, preparatory, approach, or seeking behavior) versus the direct interaction with the motivational stimulus or goal object (i.e., consummatory or taking behavior; Craig, 1917; Andreasen and Olsen, 1982). Note that the anticipatory behavior itself is regulated by multiple subprocesses, including those that underlie the representation of the value of a goal, the assessment of the effort needed to obtain the goal, and the cost-benefit analysis that leads to action (Salamone and Correa, 2002).

Another classic distinction in the literature is between directional aspects of motivation (i.e., the fact that behavior is directed toward or away from stimuli) versus activational aspects (i.e., the fact that motivated behavior is characterized by a high degree of activity, persistence, or effort; Cofer and Appley, 1964; Salamone, 1988). These dimensions of motivation are not mutually exclusive and often are used in concert in the literature (Berridge and Robinson, 1998; Brebion et al., 2000; Salamone, 2007). Nevertheless, these components are also dissociable experimentally (see below). Although the terms used in the human clinical literature and preclinical animal studies are sometimes at variance, there are also examples of consistency and overlap. The term liking is similar to terms such as hedonic impact or positive valence (Smith et al., 2011). Thus, anhedonia could be said to reflect a condition of diminished liking. Similarly, clinical conditions, such as fatigue, apathy, psychomotor retardation, and anergia, are thought to reflect blunted behavioral activation (Salamone, 2007, 2010). Furthermore, studies of reinforcement learning are conducted both in humans and in other animals, often using analogous procedures. Thus, it is possible to review the literature on preclinical studies of motivation and reinforcement learning in a manner that is highly relevant for the understanding of motivational dysfunctions in schizophrenia.

Reinforcement Learning Tasks

There is an extensive literature suggesting that schizophrenia patients have deficits in reinforcement learning (e.g., Yilmaz et al., 2012; Weiler et al., 2009; Farkas et al., 2008; for review, see Gold et al., 2008), although the basis for these deficits may vary across subgroups of patients (Farkas et al., 2008). Importantly, reinforcement learning deficits are associated with the expression of negative symptoms and may indeed be an important contributor to the etiology of these symptoms (e.g., Yilmaz et al., 2012). Learning deficits have been reported in both classical (Pavlovian) conditioning procedures (e.g., Hofer et al., 2001; Dowd and Barch, 2012) and instrumental or operant learning tasks (e.g., Weiler et al., 2009).

The three animal procedures selected for description here are the Autoshaping Task, which allows the assessment of Pavlovian (classical) conditioning processes, and the Probabilistic Reward Learning Task and the Response Bias Probabilistic Reward Task, which involve operant conditioning.

Autoshaping Task

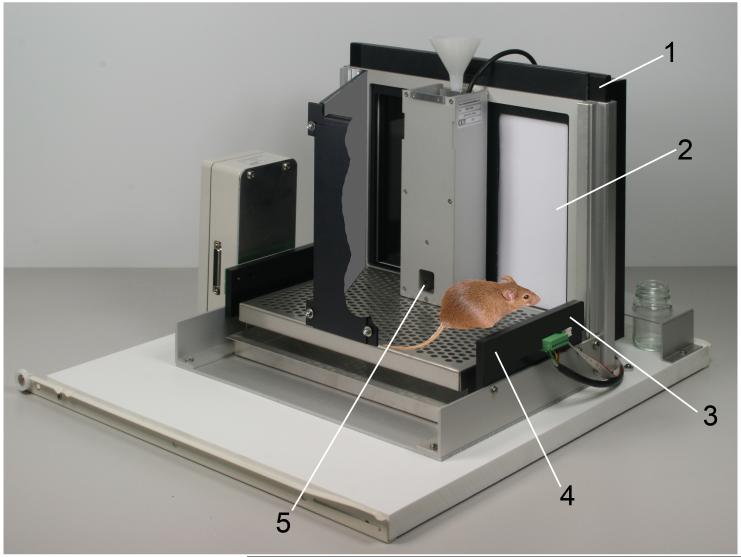

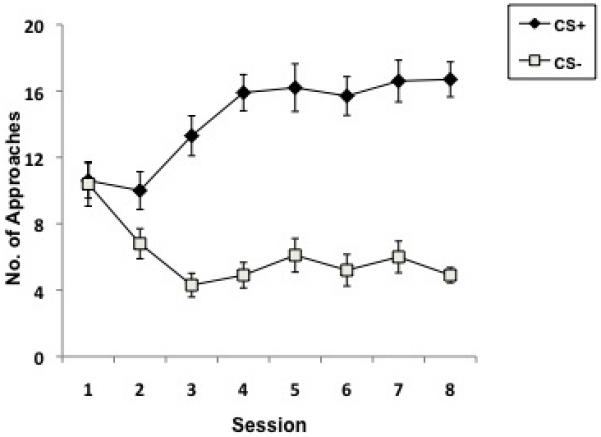

The Autoshaping Task allows the assessment of Pavlovian (classical) conditioning processes. Autoshaping was discovered in the course of training pigeons to peck an illuminated response key: researchers found that merely preceding the delivery of reinforcement with illumination of the key was sufficient to induce pigeons to peck at the illuminated key. In other words, key-pecking behavior was “shaped” without the reward actually being made contingent on pecking, hence the name autoshaping (Brown and Jenkins, 1968). In autoshaping, repeated conditioned stimulus (CS)–unconditioned stimulus (US) pairings give rise to Pavlovian approach behavior that develops across trials, allowing the assessment and comparison of animals’ reinforcement learning rates. In rodents, several computer-automated procedures are available to study autoshaping, primarily involving operant conditioning chambers equipped with levers or computer monitors fitted with touch-sensitive screens. In the operant conditioning chambers, a CS (usually a light near or within a lever, or the extension of a retractable lever into the chamber) is followed by reinforcement, and lever contacts are the recorded measure of interest. The task is best conducted as a discriminative conditioning procedure that uses a CS+ (e.g., left lever) and a CS− (e.g., right lever): reinforcement is delivered after the CS+ presentation but not after the CS− presentation, and responding on the CS− manipulandum provides a control for overall levels of responding. In the touchscreen method (Bussey et al., 1997; see Figure 1A), which is also a discriminative conditioning procedure, a stimulus (white rectangle) is shown on either the left or right of the screen for (usually) 10 s (a detailed protocol is provided in Horner et al., 2013). In both methods, the animal learns over trials that the CS+ predicts reward and makes increasingly more responses to the CS+. Responses to the CS− typically stabilize at a low level. Data from the touchscreen method using C57BL/6 mice are shown in Figure 1B. It is also possible to measure, concurrently with stimulus approaches, approaches toward the location of reinforcer delivery during the CS presentation. In this way, “sign tracking” (i.e., approaches toward the CS+) can be dissociated from “goal tracking” (i.e., approaches toward the reinforcer; Flagel et al., 2007; Danna and Elmer, 2010).
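The dependent measures described above can be summarized with simple per-session scores. The sketch below is illustrative only: the `SessionCounts` structure, the function names, and both formulas (a CS+/CS− discrimination ratio, and a bias score of the form (CS+ approaches − reward-tray entries)/(their sum) to separate sign tracking from goal tracking) are assumptions chosen for demonstration, not the scoring procedures used in the studies cited here.

```python
from dataclasses import dataclass


@dataclass
class SessionCounts:
    """Hypothetical per-session response counts for one animal."""
    cs_plus: int       # approaches/touches directed at the CS+
    cs_minus: int      # approaches/touches directed at the CS-
    magazine: int = 0  # reward-tray entries during CS presentation (goal tracking)


def discrimination_ratio(s: SessionCounts) -> float:
    """Proportion of CS-directed responses made to the CS+.

    0.5 means no discrimination; values approaching 1.0 mean responding is
    concentrated on the reward-predictive stimulus.
    """
    total = s.cs_plus + s.cs_minus
    return s.cs_plus / total if total else 0.5


def tracking_bias(s: SessionCounts) -> float:
    """Sign- versus goal-tracking bias in [-1, 1].

    +1 means all approaches were to the CS+ (pure sign tracking);
    -1 means all approaches were to the reward tray (pure goal tracking).
    """
    total = s.cs_plus + s.magazine
    return (s.cs_plus - s.magazine) / total if total else 0.0
```

For example, a session with 30 CS+ approaches, 10 CS− approaches, and 10 tray entries yields a discrimination ratio of 0.75 and a tracking bias of 0.5. A ratio, rather than raw CS+ counts, is used here so that a general increase in activity (which would raise CS+ and CS− responding alike) is not mistaken for learning.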

Figure 1.

The Autoshaping task. A. Touchscreen apparatus that shows a mouse with the CS displayed on the right. Presentation of the CS+ is followed by reward delivery; presentation of the CS− is not. 1. Touchscreen assembly. 2. CS. 3. Photocell for detecting approaches toward the CS. 4. Photocells for trial initiation. 5. Reward tray. Figure courtesy of Campden Instruments. B. Typical mouse autoshaping data. Approaches to the CS+ increase while approaches to the CS− decrease over training sessions.

The neural circuitry underlying autoshaping has been strongly implicated in the neuropathology of schizophrenia. Specifically, structures within the mesolimbic dopamine system, as well as elements of the prefrontal cortex, are critical for normal autoshaping behavior. Neurotransmitter systems relevant to schizophrenia, including the dopaminergic and glutamatergic systems, are also involved in performance of this task.

Dopamine depletion in the nucleus accumbens impairs autoshaping acquisition as well as performance of a discrimination learned before surgery (Parkinson et al., 2002; Dalley et al., 2002). The core, but not the shell, region of the nucleus accumbens is particularly important for the acquisition of autoshaping (Parkinson et al., 1999). In addition, excitotoxic lesions of the nucleus accumbens core region impair a previously acquired association (Cardinal et al., 2002). Studies involving drug infusions into the nucleus accumbens core during the acquisition of a lever-based autoshaping task revealed that the α-amino-3-hydroxy-5-methyl-4-isoxazolepropionate (AMPA)/kainate receptor antagonist LY293558 disrupted discriminated approach performance but not acquisition, whereas the N-methyl-D-aspartate (NMDA) receptor antagonist AP-5 impaired acquisition but did not interfere with performance of a previously learned approach (Di Ciano et al., 2001). The dopamine D1/D2 receptor antagonist α-flupenthixol decreased approaches to the CS+ during both acquisition and performance (Di Ciano et al., 2001). A particularly interesting finding is that in rats performing the touchscreen version of the task, injections of the dopamine D1 receptor antagonist SCH 23390 or the NMDA receptor antagonist AP-5 into the nucleus accumbens after each daily session impaired acquisition (Dalley et al., 2005). D2 receptor antagonism (sulpiride) and amphetamine infusion had no effect in this procedure (Dalley et al., 2005). These findings suggest that D1 and NMDA receptors are specifically involved in the consolidation of Pavlovian learning. In contrast to the circuits that include the nucleus accumbens, the dorsal striatum-prefrontal cortex circuit does not appear to be critically involved in autoshaping (Christakou et al., 2001).

The prefrontal cortex appears to be necessary for the acquisition of discriminated approach responses. The orbitofrontal cortex is necessary for acquisition but not once the discrimination has been learned (Chudasama and Robbins, 2003). Similarly, lesions of the post-genual anterior cingulate cortex have been shown to impair acquisition (Bussey et al., 1997). Additionally, Parkinson et al. (2000b) used a disconnection lesion procedure to show that the nucleus accumbens core and anterior cingulate cortex form part of a corticostriatal circuit involved in autoshaping. Lesions of the medial prefrontal cortex (including prelimbic, infralimbic, and pre-genual anterior cingulate cortex) do not substantially affect autoshaping, nor do lesions of the posterior cingulate cortex (Bussey et al., 1997).

Lesions of the subthalamic nucleus (STN) impair autoshaping (Winstanley et al., 2005), consistent with other reports that STN lesions impair sign-tracking (Uslaner et al., 2008). Furthermore, lesions of the pedunculopontine tegmental nucleus (PPTg), a brainstem nucleus that has been implicated in schizophrenia (Yeomans, 1995), impair autoshaping (Inglis et al., 2000), perhaps by altering attentional control or sensory gating, functions thought to be affected in schizophrenia (Nuechterlein et al., 2009; Luck and Gold, 2008).

Other structures involved in autoshaping include the central but not basolateral nucleus of the amygdala (Parkinson et al., 2000a), whereas lesions of the hippocampus may facilitate autoshaping (see below; Ito et al., 2005).

Systemic pharmacological studies also underscore the utility of the autoshaping procedure for preclinical studies relevant to schizophrenia patients. The nonselective dopamine receptor agonist apomorphine impaired autoshaping (Dalley et al., 2002). Furthermore, the atypical antipsychotic olanzapine and the typical antipsychotic haloperidol disrupted conditioned approach to the reward-predictive cue (sign-tracking), but neither drug disrupted conditioned approach to the reward (goal-tracking; Danna and Elmer, 2010). As mentioned above, a D1 receptor antagonist, but not a D2 receptor antagonist, impaired autoshaping when infused into the nucleus accumbens post-session (Dalley et al., 2005).

A few studies have shown that certain manipulations can enhance autoshaping. For example, the 5-HT1A receptor agonist 8-OH-DPAT improved the consolidation of autoshaping (Meneses and Hong, 1994). Furthermore, intracerebroventricular (ICV) infusions of the serotonergic neurotoxin 5,7-dihydroxytryptamine increased the speed and number of responses made in autoshaping (Winstanley et al., 2004); however, these effects cannot be taken to represent an “improvement” in task performance, at least not in terms of discrimination learning rate. A more convincing demonstration of increased responding, together with what appears to be an increase in learning rate, was found after excitotoxic hippocampal lesions (Ito et al., 2005).

There are conditioning paradigms available for human testing that involve at least some of the same brain regions implicated in the rodent autoshaping task (e.g., Bechara et al., 1995; Phelps et al., 2001; Delgado et al., 2006). Studies in healthy humans that have examined Pavlovian autoshaping indicate response patterns similar to those seen in experimental animals (e.g., Pithers, 1985; Wilcove and Miller, 1984). Although these studies disagree as to whether the mechanisms that underlie the behavior in humans and experimental animals are the same, the findings suggest that the development and validation of an autoshaping procedure for humans is feasible.

To summarize, there is now a substantial amount of data on the neurobiology of autoshaping, particularly in the touchscreen version of the task, showing that the task can dissociate the functions of different systems, including neurotransmitter systems and receptor subtypes, and subregions within the same subcortical nuclei. Furthermore, both decrements and increments in performance can be detected. The structures and systems that underlie autoshaping are highly relevant to schizophrenia, as are the psychological processes thought to be tapped by this task. However, whether schizophrenia patients exhibit deficits in Pavlovian (classical) conditioning is unclear. Lubow (2009) argued that the conditioned eyeblink response is not impaired in schizophrenia. By contrast, Romaniuk et al. (2010) reported that patients with schizophrenia showed abnormal activation of the amygdala, midbrain, and ventral striatum during conditioning. Indeed, in the patient group, the activation of midbrain structures correlated with the severity of delusional symptoms. Conditioning paradigms such as the autoshaping task will allow researchers to explore this important question. Classical conditioning is a fundamental type of learning, and deficits in it could profoundly affect performance in a variety of learning tasks; yet classical conditioning is not independently assessed in other reinforcement learning tasks.

Probabilistic Reward Learning Task

Reinforcement learning is generally defined as the modification of behavior based on past experience of the positive and/or negative consequences of particular predictive events (stimuli or actions). Reinforcement learning also refers to computational theories that quantitatively describe how optimal action selection emerges based on internal assignment of value to choice alternatives, where values are derived from reward and/or punishment expectancies built through prior feedback. As discussed below, there is considerable evidence that implicates frontostriatal circuitry and dopaminergic activity in reinforcement learning, neural substrates known to be dysregulated in schizophrenia and other neuropsychiatric disorders. Moreover, recent studies reporting deficits in probabilistic learning in several of these disorders (Frank et al., 2004; Waltz et al., 2007, 2011; Gold et al., 2012; Whitmer et al., 2012; Weiler et al., 2009; Heerey et al., 2008) have led to growing interest in the development of appropriate preclinical animal procedures in this domain.
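As a sketch of the computational sense of the term, one widely used formulation (assumed here for illustration; not all of the studies reviewed use it) updates the value $Q_t(i)$ of the option chosen on trial $t$ by a reward prediction error $\delta_t$, leaves unchosen options unchanged, and converts values into choice probabilities with a softmax rule. Here $r_t$ is the outcome (e.g., 1 for reward, 0 for null feedback), $\alpha$ is a learning rate, and $\beta$ is an inverse temperature that controls how deterministically the higher-valued option is chosen:

```latex
\begin{aligned}
\delta_t &= r_t - Q_t(a_t), \\
Q_{t+1}(a_t) &= Q_t(a_t) + \alpha\,\delta_t, \qquad 0 < \alpha \le 1, \\
P(a_t = i) &= \frac{\exp\big(\beta\,Q_t(i)\big)}{\sum_{j}\exp\big(\beta\,Q_t(j)\big)}.
\end{aligned}
```

With only two options, the softmax reduces to a logistic function of the value difference, $P(a_t = 1) = 1 / \big(1 + \exp(-\beta\,[Q_t(1) - Q_t(2)])\big)$.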

The majority of probabilistic reinforcement learning paradigms employ an instrumental two-alternative forced choice procedure. Subjects are presented with a series of trials and on every trial are required to select one of two possible response options. Both response options may be associated with a positive or negative reinforcing outcome, but on any given trial, delivery of the reinforcer is uncertain. The odds of outcome delivery for each response option are determined by a specified probability distribution that is unknown to the subject. Thus, even the more profitable option may sometimes produce spurious null or negative feedback. The goal of the subject is to learn to make choices that maximize positive outcomes (and/or minimize negative outcomes) over the course of the session.
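The two-alternative procedure can be simulated in a few lines. This is a minimal sketch assuming a simple delta-rule (Q-learning) learner with a softmax choice rule; the function names and the parameter values (`alpha=0.1`, `beta=5.0`, 400 trials, an 80:20% schedule) are hypothetical choices for illustration, not values taken from the tasks reviewed here.

```python
import math
import random


def simulate_two_choice(p_reward=(0.8, 0.2), n_trials=400, alpha=0.1, beta=5.0, seed=None):
    """Simulate a delta-rule learner on a two-alternative probabilistic task.

    p_reward: reward probability for options 0 and 1 (unknown to the learner).
    alpha: learning rate; beta: softmax inverse temperature (hypothetical values).
    Returns the list of choices (0 or 1) and the final value estimates.
    """
    rng = random.Random(seed)
    q = [0.0, 0.0]  # learned value of each option, starting from zero
    choices = []
    for _ in range(n_trials):
        # Two-option softmax written as a logistic function of the value difference.
        p_choose_0 = 1.0 / (1.0 + math.exp(-beta * (q[0] - q[1])))
        choice = 0 if rng.random() < p_choose_0 else 1
        # Probabilistic outcome: reward (1) or null feedback (0).
        reward = 1.0 if rng.random() < p_reward[choice] else 0.0
        # Prediction-error update for the chosen option only.
        q[choice] += alpha * (reward - q[choice])
        choices.append(choice)
    return choices, q


def preference_for_option_0(choices, last_n=100):
    """Proportion of option-0 choices over the final `last_n` trials."""
    tail = choices[-last_n:]
    return sum(1 for c in tail if c == 0) / len(tail)
```

Averaged over runs, the simulated learner comes to prefer the 80% option strongly despite occasional null feedback, whereas with `p_reward=(0.5, 0.5)` it shows no systematic preference, which is the logic behind using an equal-probability pair as a control condition.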

Several versions of instrumental probabilistic reinforcement learning tasks have been developed for rodents. Brunswik (1939) first implemented the basic procedure in an elevated T-maze; more recently, reinforcement learning with multiple alternatives has been studied in the nine-hole box (Bari et al., 2010) and in operant lever chambers (Hiraoka, 1984). In all of these task variants, rodents learn to preferentially select the more profitable response option as the number of trials increases. Two-alternative probabilistic learning tasks are also widely used in humans and nonhuman primates. However, these tasks typically involve response choices defined by visual stimuli rather than by the spatial locations that predominate in rodent versions. The simplest procedures are similar in structure to rodent tasks; that is, subjects choose between two stimulus alternatives associated with a high or low (e.g., 80:20%) probability of reward (Chamberlain et al., 2006; Kasanova et al., 2011). Other procedures have extended the basic two-choice paradigm to promote reliance on implicit reinforcement learning processes. For example, in the probabilistic classification task, subjects are asked to predict a binary outcome (e.g., sun or rain) based on a complex set of visual cues, each of which is probabilistically associated with the outcomes (Knowlton et al., 1994). Over trials, healthy subjects progressively learn to select the outcome more frequently associated with a specific cue pattern. More recently, Frank et al. (2004) developed a probabilistic selection task in an attempt to better distinguish between learning that results from positive versus negative feedback. During task acquisition, one of three pairs of visual stimuli (e.g., hiragana characters) that differ in reward probability (80:20%, 70:30%, and 60:40%) is randomly presented on each trial. Subjects are trained for up to 360 trials to gain exposure to these initial pairings and learn to select the more profitable options. To assess the contribution of positive or negative feedback, a transfer/probe phase is then implemented, in which novel combinations of stimuli are presented but no feedback is given. The extent to which the 80% stimulus is chosen over its novel partners (70%, 60%, 40%, and 30%) provides an index of positive feedback learning. Conversely, avoidance of the 20% stimulus when it is paired with the same novel partners is a marker of negative feedback learning.
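The two transfer-phase indices can be computed directly from probe-trial records. In this sketch the stimulus labels follow the convention commonly used for this task (training pairs AB = 80:20%, CD = 70:30%, EF = 60:40%), which is an assumption here, as are the trial format and the function names `choose_a` and `avoid_b`.

```python
from typing import Sequence, Tuple

# Hypothetical labelling: training pairs AB (80:20%), CD (70:30%), EF (60:40%).
REWARD_PROB = {"A": 0.8, "B": 0.2, "C": 0.7, "D": 0.3, "E": 0.6, "F": 0.4}

# One probe trial: (left stimulus, right stimulus, chosen stimulus); no feedback given.
ProbeTrial = Tuple[str, str, str]


def choose_a(trials: Sequence[ProbeTrial]) -> float:
    """Positive-feedback index: proportion of novel pairings with A (80%) in which A is chosen.

    Only pairings of A with C, D, E, or F are counted; the trained AB pair is excluded.
    """
    relevant = [t for t in trials if "A" in t[:2] and "B" not in t[:2]]
    if not relevant:
        raise ValueError("no probe trials pairing A with a novel partner")
    return sum(t[2] == "A" for t in relevant) / len(relevant)


def avoid_b(trials: Sequence[ProbeTrial]) -> float:
    """Negative-feedback index: proportion of novel pairings with B (20%) in which B is rejected."""
    relevant = [t for t in trials if "B" in t[:2] and "A" not in t[:2]]
    if not relevant:
        raise ValueError("no probe trials pairing B with a novel partner")
    return sum(t[2] != "B" for t in relevant) / len(relevant)
```

Excluding the trained AB pair from both indices keeps them restricted to novel combinations, so each index reflects how learning transfers from one stimulus rather than simple recall of the original discrimination.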

Recently, Mar and colleagues developed a novel rodent analogue of the probabilistic selection task in the touchscreen apparatus (Trecker et al., 2012). In the basic procedure, rats are trained to initiate trials in which a pair of distinct visual stimuli are presented on the touchscreen. The first novel pair of stimuli is presented as a standard two-alternative visual discrimination (100:0%) such that touching the profitable stimulus always results in a food pellet reward, and touching the unprofitable stimulus leads only to a 5-s timeout period. After the animals learn to adopt a choice preference for the profitable stimulus (~4-8 sessions of up to 200 trials each), the subjects are sequentially exposed to five novel stimulus pairs, with each pair presented for six consecutive sessions before the next stimuli are introduced. The reward probability ratios for each stimulus pair are 100:0%, 90:10%, 80:20%, 70:30%, and 50:50%, with the order of presentation counterbalanced across animals to help control for possible carry-over effects. As expected based on reinforcement learning theory, rats show a diminishing choice preference for the more profitable option as the difference between profitable and unprofitable probabilities decreases. As an important control, no significant choice preference or stimulus bias for the 50:50% pair was seen (Trecker et al., 2012). In the next phase, the five stimulus pairs are presented, interleaved randomly across trials within the same session, analogous to the human version. Two sets of probe trials are then implemented within the interleaved sessions to assess positive and negative feedback learning. First, all five pairs are presented in novel combinations (each of the 40 possible pairs presented once per session for four sessions, without reinforcement) such that the stimulus associated with higher reward probabilities can be compared with all stimuli that have lower reward probabilities and vice versa. 
Further information is also obtained by examining choice preferences in relation to the anchoring pairs of 100:0% and 50:50%. Then, each of the 10 stimuli from the original pairs is combined with a novel stimulus (10 possible pairs, each presented four times per session, without reinforcement), such that biases toward selecting previously profitable stimuli and avoiding previously unprofitable stimuli can be examined. The application of these probes to a sample of control Lister hooded rats suggested that, as in the human version, both positive and negative feedback learning take place during probabilistic discrimination. Moreover, avoidance of previously unprofitable stimuli was significantly greater than approach to previously profitable stimuli when these stimuli were paired with a novel stimulus.
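The arithmetic behind the two probe sets can be made explicit with a short sketch. Ten trained stimuli yield C(10, 2) = 45 possible pairs; removing the 5 trained pairs leaves the 40 novel combinations, while pairing each trained stimulus with one new stimulus gives 10 probes that, repeated four times, also fill a 40-trial session. The stimulus labels ("1A", "1B", and so on), the novel-stimulus label "N", and the seeded shuffling are hypothetical choices for illustration only.

```python
import random
from itertools import combinations
from typing import List, Optional, Tuple

# Hypothetical labels: in trained pair i, "iA" is the more profitable stimulus
# and "iB" the less profitable one (for the 50:50 pair neither is favored).
REWARD_RATIOS = [(100, 0), (90, 10), (80, 20), (70, 30), (50, 50)]
TRAINED_PAIRS = [(f"{i}A", f"{i}B") for i in range(1, len(REWARD_RATIOS) + 1)]
STIMULI = [s for pair in TRAINED_PAIRS for s in pair]  # 10 stimuli

Pair = Tuple[str, str]


def novel_combination_probes() -> List[Pair]:
    """Every pairing of the 10 trained stimuli except the 5 trained pairs."""
    trained = {frozenset(p) for p in TRAINED_PAIRS}
    return [p for p in combinations(STIMULI, 2) if frozenset(p) not in trained]


def novel_stimulus_probes(novel: str = "N") -> List[Pair]:
    """Each trained stimulus paired with a stimulus never seen before."""
    return [(s, novel) for s in STIMULI]


def build_probe_session(probes: List[Pair], repeats: int,
                        seed: Optional[int] = None) -> List[Pair]:
    """Unreinforced probe trials for one session, in random order."""
    trials = list(probes) * repeats
    random.Random(seed).shuffle(trials)
    return trials


print(len(novel_combination_probes()))  # 40, i.e. C(10, 2) - 5
print(len(build_probe_session(novel_combination_probes(), 1, seed=1)))  # 40
print(len(build_probe_session(novel_stimulus_probes(), 4, seed=1)))     # 40
```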

This analogue of the probabilistic selection task offers a promising translational tool for examining probabilistic reinforcement learning in rodents and potentially in nonhuman primates. The task engages stimulus and response modalities similar to those of the human version and enables assessment of identical outcome measures. This task also adds two control choice conditions (100:0% and 50:50%), which are an important innovation for better interpretation of the task results. The task presently requires approximately 50 training sessions from initial box introduction to the end of probe trial testing, but the overall training time has been compressed by giving two sessions per day. Further refinements (e.g., curtailing the task to examine only a single probability) may render the basic task more suitable for higher throughput testing and optimize it for drug discovery/development. Moreover, the procedure also lends itself well to the assessment of other constructs, such as probabilistic reversal learning, providing a further index of executive function (Gilmour et al., 2012). As this task has only recently been developed, there are as yet no psychometric data on test-retest reliability and no data on construct validity or sensitivity to pharmacological agents.

There is a growing body of empirical evidence on the neural bases of reinforcement learning. Ventral and medial areas of the prefrontal cortex have been implicated in encoding the value of a stimulus and/or expected rewards in human functional magnetic resonance imaging studies (Kable and Glimcher, 2007; Hare et al., 2008; Chib et al., 2009; FitzGerald et al., 2009; Levy et al., 2010), as well as in electrophysiological studies in nonhuman primates (Padoa-Schioppa and Assad, 2006, 2008; Kennerley et al., 2011; Padoa-Schioppa, 2009) and rats (Takahashi et al., 2011). Across these species, the medial regions of the orbitofrontal cortex have been implicated in processing reward outcome value (Arana et al., 2003; Kringelbach, 2005; Grabenhorst and Rolls, 2009; Rudebeck and Murray, 2011; Noonan et al., 2010; Gourley et al., 2010; Mar et al., 2011), whereas null or negative error-related feedback has been linked to the function of the anterior cingulate cortex (Bellebaum et al., 2010; Carter et al., 1998, 1999; Ito et al., 2003; Bryden et al., 2011). A large body of theoretical work suggests that the difference between expected and actual value signals (the prediction error) is requisite for reinforcement learning, and evidence indicates that one of the signals encoded by the phasic activity of midbrain dopamine neurons is the reward prediction error (Montague et al., 1996; Schultz et al., 1997; for reviews, see Glimcher, 2011; Schultz, 2010). Phasic dopamine signaling has been proposed to modify synaptic plasticity within the frontal cortex and basal ganglia, such that stored expected values can be updated and/or adjusted (Wickens et al., 1996; Surmeier et al., 2009; Sheynikhovich et al., 2011).
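The role of the prediction error in updating stored expected values can be illustrated with the simplest such rule, a delta (Rescorla-Wagner-style) update, V ← V + α(r − V), where r is the obtained outcome and α a learning rate. The sketch below is a generic textbook formalization chosen for illustration, not a model fitted in the studies cited above; the function names, the learning rate, and the 80% reward probability are assumptions. With repeated probabilistic reward, the learned value comes to fluctuate around the reward probability.

```python
import random
from typing import List, Tuple


def delta_rule_update(value: float, reward: float, alpha: float) -> Tuple[float, float]:
    """One learning step: prediction error = actual outcome - expected value."""
    prediction_error = reward - value
    return value + alpha * prediction_error, prediction_error


def simulate_values(p_reward: float, alpha: float, n_trials: int,
                    seed: int = 0) -> List[float]:
    """Track the learned value of a stimulus rewarded with probability p_reward."""
    rng = random.Random(seed)
    value, history = 0.0, []
    for _ in range(n_trials):
        reward = 1.0 if rng.random() < p_reward else 0.0
        value, _ = delta_rule_update(value, reward, alpha)
        history.append(value)
    return history


values = simulate_values(p_reward=0.8, alpha=0.05, n_trials=5000)
tail = values[1000:]
print(sum(tail) / len(tail))  # close to the 0.8 reward probability
```

A positive prediction error (reward larger than expected) raises the stored value and a negative one lowers it, which is the sense in which prediction errors are said to "update and/or adjust" expected values.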

While much of the work examining the neural substrates of reinforcement learning has used Pavlovian learning paradigms or instrumental tasks in which reward timing or magnitude is manipulated to generate prediction errors, relatively little work has examined the neural substrates of instrumental probabilistic reinforcement learning per se. Existing evidence from probabilistic classification and probabilistic learning tasks most consistently implicates the basal ganglia and the mesolimbic dopamine system (Shohamy et al., 2008; Maia and Frank, 2011). Empirical and computational modeling work on the probabilistic selection task in humans has suggested that dopamine may contribute both to “Go” learning (learning to select actions with a high reward probability) and to “No Go” learning (learning to avoid or suppress actions with a low reward probability) (Frank et al., 2004). Unmedicated Parkinson’s disease patients (who exhibit depleted striatal dopamine levels) have been observed to learn more from negative than from positive outcome feedback, whereas patients on medication (who generally exhibit higher striatal dopamine levels than unmedicated patients) show the opposite pattern (Frank et al., 2004; Palminteri et al., 2009). These learning biases are correlated with medication-induced increases in sensitivity to positive prediction errors and decreases in sensitivity to negative prediction errors in the ventral and dorsolateral striatum (Voon et al., 2010).
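One simple way to formalize learning that weights positive and negative outcomes differently is to give the delta rule separate learning rates for positive and negative prediction errors. The sketch below uses that formalization purely for illustration; the function names and learning-rate values are arbitrary assumptions, and it is not presented as the model used in the studies cited above. For rewards of 1 or 0, the learned value settles where expected positive and negative updates cancel, V* = p·α+ / (p·α+ + (1 − p)·α−), so a learner biased toward positive feedback overvalues a 20% stimulus, whereas one biased toward negative feedback values it lower and should avoid it more.

```python
import random


def asymmetric_update(value: float, reward: float,
                      alpha_pos: float, alpha_neg: float) -> float:
    """Delta rule with separate rates for positive ("Go") and negative ("No Go") errors."""
    prediction_error = reward - value
    alpha = alpha_pos if prediction_error > 0 else alpha_neg
    return value + alpha * prediction_error


def steady_state_value(p: float, alpha_pos: float, alpha_neg: float) -> float:
    """Value at which expected updates cancel for 1/0 rewards:
    p * alpha_pos * (1 - V) = (1 - p) * alpha_neg * V."""
    return p * alpha_pos / (p * alpha_pos + (1 - p) * alpha_neg)


def mean_learned_value(p: float, alpha_pos: float, alpha_neg: float,
                       n_trials: int = 20000, burn_in: int = 2000,
                       seed: int = 0) -> float:
    """Average simulated value after a burn-in period."""
    rng = random.Random(seed)
    value, tail = 0.5, []
    for t in range(n_trials):
        reward = 1.0 if rng.random() < p else 0.0
        value = asymmetric_update(value, reward, alpha_pos, alpha_neg)
        if t >= burn_in:
            tail.append(value)
    return sum(tail) / len(tail)


# The 20% stimulus as valued by a learner biased toward positive feedback
# versus one biased toward negative feedback (learning rates are arbitrary).
print(steady_state_value(0.2, alpha_pos=0.10, alpha_neg=0.02))  # about 0.56
print(steady_state_value(0.2, alpha_pos=0.02, alpha_neg=0.10))  # about 0.05
```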

Recent studies have also associated genetic factors related to dopamine function with probabilistic learning. A triple dissociation in probabilistic selection task performance was found for three polymorphisms that affect distinct aspects of dopamine function. DARPP-32, linked with D1 dopamine receptor function in the striatum, was associated with choosing stimuli having higher (versus lower) probabilities of reward. C957T, which affects dopamine D2 receptor mRNA translation and stability and striatal postsynaptic D2 receptor density, was associated with avoiding stimuli having lower probabilities of reward. COMT Val/Met, which affects the levels of the COMT enzyme and of dopamine in the prefrontal cortex, was associated with trial-to-trial lose-shift strategies (Frank et al., 2007). However, attempts to identify the genetic factors and neural circuits that contribute to reinforcement learning must be tempered by the recognition that reinforcement learning processes appear to be intertwined with higher cognitive functions, such as working memory (Collins and Frank, 2012).

The primary measures of interest in probabilistic reinforcement tasks are the learning rate and asymptotic choice performance. However, as computational models attest, numerous factors or mechanisms within reinforcement tasks may affect a subject’s learning and choice performance and influence interpretation of the results. A subject’s ability to associate and/or assign value to a stimulus or choice-response option may influence learning rates and has long been hypothesized to depend on or be modulated by attentional mechanisms (Mackintosh, 1975; Pearce and Hall, 1980; Dickinson, 1981). The ability to reliably detect and encode the differences between actual and expected outcomes (prediction errors) is widely considered to be the main engine of reinforcement learning (Rangel et al., 2008; Kable and Glimcher, 2009). As described briefly above, a subject’s valuation or weighting of positive or negative outcomes may differentially contribute to reinforcement learning. The extent to which prior reinforcement events are remembered or discounted may also affect individual choice patterns (e.g., a subject who only considers feedback from their last choice might engage in relatively more win-stay lose-shift behavior; Shimp, 1976; Williams, 1991; Collins and Frank, 2012). Furthermore, a subject’s capacity to update memories when outcome value or availability changes will also influence choice, as will susceptibility to bias. Finally, a subject’s propensity to adopt certain response strategies (e.g., exploitation versus exploration), use prediction error information (e.g., model-based or model-free reinforcement learning), or engage other cognitive systems (e.g., implicit or explicit) may further affect their trial-by-trial choices (Daw et al., 2006; Fu and Anderson, 2008; Doll et al., 2012). Such factors and strategic differences should thus be carefully considered when assessing the validity and translation of probabilistic reinforcement learning tasks. 
The next section suggests some methods for assessing sensitivity to changing reinforcement contingencies.
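The win-stay lose-shift pattern mentioned above is commonly summarized as two conditional probabilities: repeating a choice after a rewarded trial, and switching after an unrewarded one. The sketch below computes both from a trial sequence under a simplifying assumption, namely that consecutive trials offer the same options (in interleaved designs such as the probabilistic selection task, the same logic would be applied within each stimulus pair). The function name and data layout are hypothetical.

```python
from typing import Hashable, Sequence, Tuple


def win_stay_lose_shift(choices: Sequence[Hashable],
                        rewarded: Sequence[bool]) -> Tuple[float, float]:
    """Return (P(stay | previous trial rewarded), P(shift | previous trial unrewarded)).

    Assumes consecutive trials offer the same options, so "stay" simply means
    repeating the previous choice.
    """
    if len(choices) != len(rewarded):
        raise ValueError("choices and rewarded must have the same length")
    stays = wins = shifts = losses = 0
    for t in range(1, len(choices)):
        repeated = choices[t] == choices[t - 1]
        if rewarded[t - 1]:
            wins += 1
            stays += repeated
        else:
            losses += 1
            shifts += not repeated
    nan = float("nan")
    return (stays / wins if wins else nan, shifts / losses if losses else nan)


print(win_stay_lose_shift(["A", "A", "B", "B", "A", "A"],
                          [True, False, True, True, False, True]))  # about (0.67, 0.5)
```

A subject whose choices depend only on the most recent outcome would approach values of 1.0 on both measures, whereas one integrating over a longer reinforcement history would show lower values.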

Response Bias Probabilistic Reward Task

This task provides a measure of reward responsiveness in terms of how reinforcement history and current reinforcement contingencies affect future actions, and specifically the pursuit of rewards. The task has both a probabilistic reinforcement learning component and a signal-detection component. However, the interest is neither solely in probabilistic reinforcement learning, which is best assessed with the probabilistic learning tasks described above, nor in the accuracy of signal detection. An important difference between the classic probabilistic learning procedures and the presently described task is that the two stimuli presented are difficult to discriminate, in addition to being differentially and only partially reinforced. One stimulus is designated as the rich stimulus (i.e., the more frequently reinforced), and the other stimulus is the lean stimulus (i.e., the less frequently reinforced; the stimuli are counterbalanced across subjects). By making the two stimuli ambiguous, the procedure allows healthy control subjects to develop a response bias toward the more richly reinforced stimulus. The development of this response bias is often accompanied by differences in response accuracy between the two stimuli because the subjects tend to respond as though they have detected the rich stimulus even when the lean stimulus was presented. The degree to which this response bias develops is a measure of sensitivity to prior reinforcement contingencies (i.e., reward responsiveness) and presumably reflects the subject’s pursuit of reinforcers based on these contingencies.

This task was originally developed by Pizzagalli and colleagues (adapted from Tripp and Alsop, 1999) for use in human subjects in order to provide an objective laboratory-based measure of reward responsiveness that is not based on subjective self-reports (Pizzagalli et al., 2005). In the human version of the task, the subject is shown a cartoon face without a mouth on a computer screen. On each discrete trial, one of two mouths appears very briefly, and the subject has to press a key on the keyboard to indicate whether s/he saw a long (e.g., 13 mm) or a short (e.g., 11.5 mm) mouth. To allow the response bias to develop, correct responses are only partially reinforced (~40% of the time), with the mouth length designated as the rich stimulus being reinforced three times more frequently than the mouth length designated as the lean stimulus (Pizzagalli et al., 2005; a review of the human version of this task is provided in another CNTRICS paper; see Ragland et al., 2008). Over the duration of a single test session, healthy human subjects develop a response bias, expressed as more responses on the key that signifies detection of the rich stimulus than on the key associated with the lean stimulus. The development of this bias is reflected in progressively increased accuracy for the rich stimulus and progressively decreased accuracy for the lean stimulus over the duration of the test session (Pizzagalli et al., 2005). The construct validity of response bias as a measure of reward responsiveness is supported by data showing that depressed inpatients (Vrieze et al., 2013a) and outpatients (Pizzagalli et al., 2008), as well as college students reporting elevated depressive symptoms (Beck Depression Inventory score ≥ 16), failed to develop the response bias seen in healthy subjects (Pizzagalli et al., 2005). 
Of note, in a recent study, blunted response bias was greatest in major depressive disorder inpatients who reported elevated anhedonic symptoms, and reduced response bias predicted the chronicity of major depressive disorder diagnosis after 8 weeks of naturalistic treatment (Vrieze et al., 2013a). This pattern of results suggests that depressed patients, who are usually characterized by anhedonia and amotivation (American Psychiatric Association, 2000), and subjects with high Beck Depression Inventory “melancholic” subscores and high negative affect on the Positive and Negative Affect Schedule (PANAS-NA; Watson et al., 1988) are less affected than controls by the differences in reinforcement associated with the different options (Huys et al., 2013). Thus, the task parameters, procedures, and measures, as well as the studies in individuals with high depression scores or a depression diagnosis, support the conclusion that the response bias probabilistic reward task provides a measure of reward responsiveness that may be useful for studying dysfunctions in how reinforcement contingencies influence the pursuit of rewards.

A study by Gold and colleagues indicated that medicated schizophrenia patients exhibited no deficits in the response bias probabilistic reward task (Heerey et al., 2008). However, the facts that these were medicated “stable outpatients” with schizophrenia and that the smoking status of the study participants was not assessed are limitations and potential confounds that need to be explored in future investigations. Smoking status at the time of testing is relevant because it has been hypothesized that the high smoking rates of psychiatric populations may reflect attempts to self-medicate untreated depression-like negative symptoms (Markou et al., 1998); that is, both the prescribed antipsychotic medications and the high smoking rates of the schizophrenia subjects may have alleviated deficits in the patients who participated in this study. Relevant to this discussion are recent findings showing that reduced development of response bias in this task was associated with greater nicotine dependence in schizophrenia patients but not in control subjects (Ahnallen et al., 2012). Furthermore, nicotine increased response bias in healthy subjects (Barr et al., 2008). Taken together, these data suggest that nicotine in tobacco smoke may normalize responsiveness to reinforcement, as measured by the response bias probabilistic reward task, in schizophrenia patients. Future investigations may address whether response bias in this task is affected in unmedicated, nonsmoking schizophrenia patients as it is in depressed patients. If it is not, then this task provides the opportunity to study differences in reward processing between depressed patients and schizophrenia patients with high levels of negative symptoms. 
In this regard, the rat analogue of the response bias probabilistic reward task will provide a valuable tool in the investigation of the neurobiology of deficits in reward responsiveness that may be seen in some, but not all, neuropsychiatric populations characterized by reinforcement learning and motivational deficits.

Toward that goal, Markou, Pizzagalli, and colleagues have been working on developing a rat version of the response bias probabilistic reward task (Der-Avakian et al., under review). Virtually identical procedures and parameters have been used in the human and rat versions of the task so that the processes measured in the rat task may be homologous, and not just analogous, to those measured in the human task. In the rat version of the task, rats are gradually trained in a discrete-trial tone-duration discrimination procedure (called a bisection procedure in the literature on temporal discrimination). A trial starts with the presentation of one of two tones, one being a long duration tone and the other a short duration tone; all other parameters of the tone (e.g., frequency and volume) are identical between the two tones. After the tone presentation, the two levers that were previously retracted extend into the box, and the rat may emit a single response on either lever. When the rat responds on the correct lever associated with the previously presented tone duration (the location associated with a particular tone duration is counterbalanced across subjects), a food reinforcer is delivered. After either a correct or incorrect response (or after a limited period without any response), the levers are retracted, and after a variable intertrial interval, another trial is initiated by the presentation of another tone. Once the duration discrimination is learned, the rats are allowed to become accustomed to a partial reinforcement contingency that is the same for both types of stimuli. On the test day, which is identical in its parameters to the single test session to which the human subjects are exposed, all parameters of the task remain the same except that the two stimuli (i) become difficult to discriminate (i.e., the long and short durations are similar) and (ii) are differentially reinforced. 
That is, one stimulus is defined as the rich stimulus, and correct responses to it are reinforced 60% of the time, whereas correct responses to the other (lean) stimulus are reinforced 20% of the time, so that correct responses for rich stimuli are reinforced three times more frequently than correct responses for lean stimuli, exactly as in the human task. The measure of response bias (b) is operationally defined by the following formula:

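$$\log b = \frac{1}{2}\log\left(\frac{\text{Rich correct} \times \text{Lean incorrect}}{\text{Rich incorrect} \times \text{Lean correct}}\right) \qquad \text{[Eq. 1]}$$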

Rich correct is the number of correct responses for the rich stimulus, Lean incorrect is the number of incorrect responses for the lean stimulus, Rich incorrect is the number of incorrect responses for the rich stimulus, and Lean correct is the number of correct responses for the lean stimulus.

Higher b scores reflect increased response bias toward the stimulus associated with greater reinforcement probability, whereas lower scores reflect decreased response bias toward the same stimulus. Although response bias is the primary measure of interest derived from this task, the same data are used to calculate additional measures, such as (i) discriminability, defined as the ability to perceptually discriminate between the two stimuli, and (ii) accuracy for the rich and lean stimuli, defined as the percent correct responses for the rich and lean stimuli, respectively. The following formula is used to calculate discriminability (d), with lower numbers reflecting lower discriminability:

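$$\log d = \frac{1}{2}\log\left(\frac{\text{Rich correct} \times \text{Lean correct}}{\text{Rich incorrect} \times \text{Lean incorrect}}\right) \qquad \text{[Eq. 2]}$$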

Accuracy is of interest because it reveals the pattern of responding that underlies changes in overall response bias. For example, an increased response bias is typically reflected by increased accuracy for the rich stimulus compared with the lean stimulus. Discriminability is of interest because it provides a measure of overall task performance, which can also vary with the pattern of responding. For example, blunted response bias may co-occur with high discriminability if accuracy for both stimuli increases equally over the session, or with low discriminability if accuracy for both stimuli decreases equally over the session. As these examples show, response bias can vary independently of discriminability.
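The three measures can be computed from the four response counts with a few lines of code. This sketch assumes the signal-detection formulas commonly used with this task, log b = ½·log[(Rich correct × Lean incorrect)/(Rich incorrect × Lean correct)] and log d = ½·log[(Rich correct × Lean correct)/(Rich incorrect × Lean incorrect)], with base-10 logarithms (the base only rescales the values). Adding 0.5 to every cell to keep the logarithms finite when a count is zero is an assumption of this sketch, exposed as a parameter. The counts in the usage example are invented to mirror the cases discussed above: a biased session, and two blunted sessions that differ only in discriminability.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class TrialCounts:
    rich_correct: int
    rich_incorrect: int
    lean_correct: int
    lean_incorrect: int


def _cells(c: TrialCounts, correction: float) -> Tuple[float, float, float, float]:
    # Adding a small constant to every cell (0.5 by default here) keeps the
    # logarithms finite when a count is zero; this correction is an assumption.
    return (c.rich_correct + correction, c.rich_incorrect + correction,
            c.lean_correct + correction, c.lean_incorrect + correction)


def response_bias(c: TrialCounts, correction: float = 0.5) -> float:
    """log b: higher values = stronger bias toward the rich stimulus."""
    rc, ri, lc, li = _cells(c, correction)
    return 0.5 * math.log10((rc * li) / (ri * lc))


def discriminability(c: TrialCounts, correction: float = 0.5) -> float:
    """log d: lower values = poorer discrimination of the two stimuli."""
    rc, ri, lc, li = _cells(c, correction)
    return 0.5 * math.log10((rc * lc) / (ri * li))


def accuracy(c: TrialCounts) -> Tuple[float, float]:
    """Proportion correct for the rich and lean stimuli."""
    return (c.rich_correct / (c.rich_correct + c.rich_incorrect),
            c.lean_correct / (c.lean_correct + c.lean_incorrect))


# Made-up sessions of 100 rich and 100 lean trials each.
biased = TrialCounts(80, 20, 40, 60)          # rich accuracy > lean accuracy
blunted_high_d = TrialCounts(90, 10, 90, 10)  # both stimuli accurate
blunted_low_d = TrialCounts(55, 45, 55, 45)   # both stimuli near chance
for name, c in [("biased", biased), ("blunted, high d", blunted_high_d),
                ("blunted, low d", blunted_low_d)]:
    print(name, round(response_bias(c), 3), round(discriminability(c), 3), accuracy(c))
```

Both blunted sessions yield a response bias of zero while their discriminability differs markedly, which is the independence of the two measures described in the preceding paragraph.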

The response bias probabilistic reward task was developed in rats only very recently, and thus the effects of only a few manipulations have been compared between human and animal subjects. A recent study showed that nicotine withdrawal in both rats and humans resulted in decreased response bias in the task (Pergadia et al., in preparation). Furthermore, a single low dose of the dopamine D2/D3 receptor agonist pramipexole, which presumably acts on presynaptic receptors to decrease dopamine output, decreased response bias in both humans (Pizzagalli et al., 2008) and rats (Der-Avakian et al., under review). The decreased response bias induced by pramipexole in humans was correlated with decreased event-related potential (ERP) activation in the dorsal anterior cingulate cortex (Santesso et al., 2009), an area implicated in the representation of reinforcement value (Rushworth et al., 2007; Bussey et al., 1997). In contrast to the effects of pramipexole, pharmacological manipulations that increase dopamine transmission, that is, nicotine administration in humans (Barr et al., 2008) or rats (Pergadia et al., in preparation) and amphetamine administration in rats (Der-Avakian et al., 2013), increased response bias compared with vehicle-treated controls. These parallel observations in rats and humans provide the first demonstrations that this task has translational potential. As more such data accrue, the task will be ripe for drug discovery efforts aimed at treating reinforcement learning deficits and at uncovering the neurobiological bases of such abnormalities.

At this stage of task development, it appears prudent to exclude subjects that do not appear to perform the task as intended. For example, data from human subjects with extremely fast or slow reaction times throughout the task may be excluded because such reaction times suggest that the subject was not attending appropriately to the task. Similarly, rats are excluded if they emit an insufficient number of responses to either stimulus during the test session (e.g., many omissions, or responses on only one lever for both tones), because such responding prevents correct rich and lean responses from being reinforced at a 3:1 ratio. Although rats sometimes show inherent biases toward one of the two stimuli/levers before the actual test, such biases are controlled for by including, as a covariate in analyses of the test data, a measure of the degree of bias toward one lever/stimulus during the most recent training session in which the stimuli were equally reinforced. Furthermore, although the task shows good test-retest reliability in humans (Pizzagalli et al., 2005; Santesso et al., 2009; Ragland et al., 2008), it has not yet been determined whether test-retest reliability is also good in rats, although retesting the subjects has so far been possible. Good test-retest reliability would be very desirable because repeated-measures experimental designs are powerful and would allow more data to be derived from subjects that need to be trained for a couple of months before the test is implemented. Finally, manipulations hypothesized to induce neuropathology implicated in schizophrenia and/or other psychiatric disorders characterized by reward and motivational deficits need to be implemented, and their effects on the measures provided by the response bias probabilistic reward task assessed.
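The rat exclusion rule and the baseline-bias covariate described above can be sketched as follows. The text does not give a numeric cut-off, so the minimum of 20 responses per tone is a hypothetical placeholder, and expressing baseline bias as the share of presses on the lever later paired with the rich tone (minus 0.5) is one assumed way to quantify "the degree of bias toward one lever/stimulus" in the last equally reinforced training session.

```python
from dataclasses import dataclass

# Hypothetical criterion; the text does not specify a numeric cut-off.
MIN_RESPONSES_PER_TONE = 20


@dataclass
class LeverCounts:
    """Responses in one session, split by tone and by lever pressed.
    'rich lever' = the lever that is correct for the (to-be) rich tone."""
    rich_tone_rich_lever: int
    rich_tone_lean_lever: int
    lean_tone_rich_lever: int
    lean_tone_lean_lever: int


def should_exclude(c: LeverCounts, min_per_tone: int = MIN_RESPONSES_PER_TONE) -> bool:
    """Flag a test session in which a 3:1 rich/lean reinforcement ratio cannot be delivered."""
    rich_tone_total = c.rich_tone_rich_lever + c.rich_tone_lean_lever
    lean_tone_total = c.lean_tone_rich_lever + c.lean_tone_lean_lever
    if min(rich_tone_total, lean_tone_total) < min_per_tone:
        return True  # too many omissions for one tone
    rich_lever_total = c.rich_tone_rich_lever + c.lean_tone_rich_lever
    lean_lever_total = c.rich_tone_lean_lever + c.lean_tone_lean_lever
    return rich_lever_total == 0 or lean_lever_total == 0  # one lever for both tones


def baseline_lever_bias(training: LeverCounts) -> float:
    """Covariate from the last equally reinforced training session:
    share of presses on the to-be-rich lever minus 0.5 (0 = no bias)."""
    rich_lever_total = training.rich_tone_rich_lever + training.lean_tone_rich_lever
    total = rich_lever_total + training.rich_tone_lean_lever + training.lean_tone_lean_lever
    return rich_lever_total / total - 0.5


print(should_exclude(LeverCounts(50, 0, 50, 0)))        # True: one lever for both tones
print(should_exclude(LeverCounts(40, 10, 15, 35)))      # False
print(baseline_lever_bias(LeverCounts(30, 20, 20, 30)))  # 0.0
```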

Although no functional imaging investigations have been conducted in subjects performing the response bias probabilistic reward task, studies in humans and experimental animals have identified brain areas that are activated in response to conditioned stimuli, in preparation for approaching appetitive stimuli, and during response selection based on expected outcomes; these areas are highly likely to be involved in performance of this task. Specifically, nonhuman primate and rat studies indicate that the orbitofrontal cortex (OFC; Roesch and Olson, 2004; Feierstein et al., 2006) and dorsolateral prefrontal cortex (dlPFC; Dias et al., 1996; Kobayashi et al., 2002; Tsujimoto and Sawaguchi, 2004; Wallis and Miller, 2003) contribute to making and evaluating goal-directed decisions, even when the available information is imperfect, as is the case in the response bias probabilistic reward task. The OFC has been implicated in these tasks (Kepecs et al., 2008), and electroencephalographic studies also indicate that activation within the left dorsolateral and ventromedial prefrontal regions is associated with appetitively motivated behaviors (Pizzagalli et al., 2011; Vrieze et al., 2013b).

Finally, genetic factors also appear to modulate the effects of manipulations on the development of response bias in this task. In human subjects, an acute mild stress manipulation induced deficits in the development of response bias, which were more pronounced in subjects homozygous for the A allele at the rs12938031 position of the corticotropin-releasing hormone type 1 receptor gene (CRHR1; Bogdan et al., 2011). Similarly, self-reported perceived stress is associated with decreased response bias in human subjects who carry the S or LG allele of the 5-HTTLPR/rs25531 serotonin transporter gene polymorphism (Nikolova et al., 2012). Consistent with these results, self-perceived professional success among psychiatrically healthy subjects predicted increased response bias in individuals with the COMT rs4680 Val/Val genotype, which is associated with increased phasic dopamine signaling (Goetz et al., 2013). In rats, a psychosocial stressor involving social defeat decreased response bias in the task (Der-Avakian, Pizzagalli, and Markou, unpublished observations).

In summary, the response bias probabilistic reward task has been established in the rat in a form almost identical to the human version, to enhance the translational value of both the human and rat tasks. Validation of the task has begun and has so far yielded consistent data in humans and rats. The unique aspect of this task is that it assesses whether reinforcement contingencies are integrated in a way that guides the future pursuit of rewards.

Tasks that Assess the Construct of Motivation

It is important to note that all tasks selected to assess the construct of motivation involve reinforcement learning during their initial acquisition. However, in designing and using these tasks, investigators attempt to derive measures that are most relevant to the construct of motivation and unconfounded by learning deficits. Ways of achieving this goal include using relatively simple learning tasks in which learning deficits are unlikely to manifest and/or assessing motivation after the subjects have reached asymptotic performance in the task.

There are many component aspects of motivation that are essential for goal-directed action. Motivation is influenced by an animal’s representation of the value of future rewards, its representation of the cost of obtaining them, and the computation that discounts the value of the reward by the effort and delay costs of working to obtain it. Patients may be affected by any or all of these aspects of motivation. Consequently, it is important to analyze the component processes in patients and then, where relevant, in animal models.
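The discounting computation described above can be illustrated with a toy model. This sketch assumes hyperbolic delay discounting (value divided by 1 + k·delay, a widely used form) followed by a linear effort cost; the linear cost term, the parameter names, and all numbers are illustrative assumptions rather than a model proposed in the studies cited here. It shows how an increase in the weight given to effort alone can shift choice from a large, effortful reward to a small, easy one, without any change in the value of the rewards themselves.

```python
from typing import Dict, Tuple


def net_value(reward: float, delay_s: float, effort: float,
              k: float, effort_cost: float) -> float:
    """Hyperbolic delay discounting followed by a linear effort cost."""
    return reward / (1.0 + k * delay_s) - effort_cost * effort


def preferred_option(options: Dict[str, Tuple[float, float, float]],
                     k: float, effort_cost: float) -> str:
    """Name of the option with the highest net value; options map
    name -> (reward, delay in seconds, effort in lever presses)."""
    return max(options, key=lambda name: net_value(*options[name], k, effort_cost))


options = {"high": (4.0, 0.0, 20.0), "low": (1.0, 0.0, 1.0)}
print(preferred_option(options, k=0.1, effort_cost=0.1))  # high (net 2.0 vs 0.9)
print(preferred_option(options, k=0.1, effort_cost=0.2))  # low (net 0.0 vs 0.8)
```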

Schizophrenia patients have a deficit in the capacity to represent the value of future positive outcomes based on their past experiences (Barch and Dowd, 2010). Given the intuitive relationship between hedonia and motivation, it is surprising that several research groups have found a dissociation between hedonic reaction to rewarding stimuli and motivated behavior in patients with schizophrenia (Barch and Dowd, 2010; Cohen and Minor, 2010; Gard et al., 2007; Gold et al., 2008; Heerey and Gold, 2007; Kring et al., 2011). The majority of the current literature shows intact hedonic reaction to rewarding stimuli but impaired incentive motivation in schizophrenia patients, although a recent study reported a difference in the reaction to emotional stimuli between patients and controls (Strauss and Herbener, 2011). Specifically, patients have a deficit in representing the value of future outcomes (Gard et al., 2007; Gold et al., 2008) and, relative to controls, are less likely to increase their responding for highly rewarding alternatives compared with less rewarding ones (Kasanova et al., 2011). Thus, at least one contributor to lowered motivation in patients may be an impaired ability to represent and update the value of future outcomes.

Outcome Devaluation and Contingency Degradation Tasks

One method for assessing sensitivity to the value of future outcomes is the outcome devaluation procedure. This protocol is based on the idea that post-conditioning changes in the value of an outcome should lead subjects to alter the behavior that produces that outcome. For example, if an animal learns that a bar press produces pellets and then the pellets are devalued by being paired with an illness-inducing agent, then the animal becomes less likely to make a response that had previously produced pellets if its behavior is guided by the current value of that outcome (Yin et al., 2005; Corbit and Balleine, 2005). A typical experiment of this sort teaches the subjects to make two different responses to produce two distinctive outcomes. For example, in one session each day, the left bar might produce pellets, and in a second daily session the right bar might produce sucrose. After instrumental training, one of the outcomes is devalued. In this example, half the animals might be satiated on pellets and half on sucrose. After satiation, the animals are given a choice test in which both levers are present but neither produces any rewards. To the extent that behavior is guided by current outcome values, working on the lever associated with the devalued outcome should be suppressed relative to the non-devalued lever. Such results have been reported in rats (Balleine and Dickinson, 1992), mice (Crombag et al., 2010; Hilario et al., 2007), monkeys (West et al., 2011), and humans (Klossek et al., 2008; Valentin et al., 2007). Thus, the outcome devaluation task is suggested as an excellent translational task for assessing the capacity to update the value of future reinforcers.
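The choice test in the example above reduces to a simple proportion. The sketch below assumes a hypothetical summary measure, the share of unreinforced test presses made on the lever whose outcome was not devalued, with 0.5 indicating no preference; the function name and the press counts are invented, while the lever-outcome assignment follows the example in the text (left lever, pellets; right lever, sucrose; satiation on pellets).

```python
from typing import Dict


def devaluation_index(presses: Dict[str, int], lever_outcome: Dict[str, str],
                      devalued_outcome: str) -> float:
    """Share of choice-test presses on the lever whose outcome was NOT devalued.

    0.5 means no preference; values above 0.5 suggest that responding is guided
    by the current value of the outcome.
    """
    total = sum(presses.values())
    if total == 0:
        raise ValueError("no responses were made during the choice test")
    nondevalued = sum(n for lever, n in presses.items()
                      if lever_outcome[lever] != devalued_outcome)
    return nondevalued / total


# Example from the text: left lever -> pellets, right lever -> sucrose,
# animal satiated on pellets before an unreinforced choice test (counts invented).
print(devaluation_index({"left": 12, "right": 36},
                        {"left": "pellets", "right": "sucrose"},
                        devalued_outcome="pellets"))  # 0.75
```

Because half the animals are satiated on each outcome, computing the index relative to each animal's own devalued outcome keeps the measure comparable across the counterbalanced groups.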

While the neural underpinnings of impaired anticipatory motivation in schizophrenia are not precisely known, there appears to be homologous circuitry in humans and rodents that regulates these processes. One interesting convergence is that there appear to be distinct neurobiological substrates underlying anticipatory motivation and hedonia (Kringelbach and Berridge, 2010). For example, dopamine signaling has been shown to be critical for anticipatory motivation (Balci et al., 2010; Cagniard et al., 2006; Salamone et al., 2007) but is relatively uninvolved in hedonic reaction to reward (Berridge et al., 2010; Peciña et al., 2003). Thus, to the extent that altered dopamine signaling is an important part of the pathophysiology of schizophrenia, one might anticipate changed incentive motivation but relatively intact hedonic reactions as described above (see Ward et al., 2012).

Studies with human subjects have documented a sensitivity of behavior to the current value of an outcome similar to that found in studies with nonhuman animals. The devaluation of primary rewards (Hogarth et al., 2012; Valentin et al., 2007) as well as secondary rewards, such as stimuli associated with money (de Wit et al., 2012; Liljeholm et al., 2012), results in the differential selection of actions associated with outcomes that have not been devalued. These procedures have been adapted for use in children (Klossek et al., 2011) and in psychiatric populations (Gillan et al., 2011). In addition, the brain structures and networks involved in the selection of goal-directed action are remarkably similar across species (Balleine and O’Doherty, 2010; de Wit et al., 2012; Valentin et al., 2007).

Effort-based Tasks

As mentioned above, the activation of goal-directed action requires a computation of whether the value of a particular goal is worth the effort expended to obtain that outcome. The observation that motivated behaviors have an energetic or activational component is a consistent feature of the literature in psychology, psychiatry, and neurology over the last several decades. Motivational stimuli not only serve to direct actions to particular outcomes; they also activate or invigorate behavior. These activational aspects of motivation are highly adaptive because organisms must overcome work-related constraints or obstacles to gain access to significant stimuli, either by foraging over large distances in the wild or by lever pressing or climbing barriers in a laboratory. The vigor or persistence of work output in stimulus-seeking behavior is widely seen as a fundamental aspect of motivation. Furthermore, organisms must make effort-related decisions based on cost/benefit analyses, allocating behavioral resources to goal-directed behaviors based on differential assessments of motivational value and response costs (Salamone et al., 2007, 2009, 2012). These activational aspects of motivation are widely studied in behavioral neuroscience, and they are also clinically relevant. As discussed above, clinicians have come to emphasize the importance of motivational symptoms related to effort expenditure, such as psychomotor slowing, apathy, and anergia in major depression, fatigue in parkinsonism and multiple sclerosis, and avolition in schizophrenia (Demyttenaere et al., 2005; Oakeshott et al., 2012; Salamone et al., 2006, 2010; Ward et al., 2011; Treadway and Zald, 2011). Moreover, it has been argued that many people with psychopathologies have fundamental deficits in reward seeking, exertion of effort, and effort-related decision making that do not simply depend on any problems that they may have with experiencing pleasure (Treadway and Zald, 2011). 
For these reasons, the establishment of effort-based behavioral tasks in animals can be a critical component of the development of preclinical models of motivational symptoms that are relevant for human psychopathology.

A number of behavioral tasks have been used to assess effort-related motivational processes in animals. One such procedure is the progressive-ratio schedule (i.e., a schedule in which the number of lever presses required per reinforcer gradually increases). As the ratio requirement increases, animals eventually reach a point at which they stop responding, which is generally known as the breakpoint. Although changes in progressive-ratio breakpoints are sometimes interpreted only in terms of “reward value,” breakpoints clearly reflect more than alterations in the appetitive motivational properties of a reinforcing stimulus. For example, changing the kinetic requirements of the instrumental response by increasing the height of the lever decreases progressive-ratio breakpoints (Skjoldager et al., 1993; Schmelzeis and Mittleman, 1996). Thus, although some researchers have maintained that the breakpoint provides a direct measure of the appetitive motivational characteristics of a stimulus, it is, as discussed in a classic review by Stewart (1974), most directly a measure of how much work the organism will do to obtain access to that stimulus. Fundamentally, a progressive-ratio breakpoint is the outcome of effort-related decision-making processes: the organism is making a cost/benefit decision about whether or not to respond, based partly on the value of the reinforcer but also on the work-related response costs and time constraints imposed by the ratio schedule (Salamone, 2006). Progressive-ratio responding has been used to assess motivational impairments related to schizophrenia; striatal-specific increases in dopamine D2 receptor expression in mice led to decreases in progressive-ratio responding for food reinforcement that were generally unrelated to changes in appetite and other nonspecific effects (Drew et al., 2007; Simpson et al., 2011).
Recent studies with a progressive-ratio/chow feeding choice task have shown that the dopamine receptor antagonist haloperidol suppresses food-reinforced progressive-ratio responding and lowers breakpoints but leaves intact the consumption of a concurrently available, less preferred food source (Randall et al., 2012). The actions of haloperidol on this task differed markedly from those produced by reinforcer devaluation (pre-feeding) and by an appetite suppressant drug (the cannabinoid CB1 inverse agonist AM251; Randall et al., 2012). Moreover, high levels of progressive-ratio output were associated with increased expression of phosphorylated DARPP-32 (Thr34) in the nucleus accumbens core (Randall et al., 2012).
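The breakpoint logic described above can be made concrete with a short sketch. It assumes a hypothetical linear progression (each ratio is `step` presses larger than the previous one) and a hypothetical session-termination rule (a fixed period elapsing without a reinforcer being earned); laboratories use a variety of progressions and termination criteria, and the function names here are illustrative only.

```python
from typing import Callable, Iterable


def linear_progression(step: int) -> Callable[[int], int]:
    """Hypothetical progression: the k-th ratio (0-based) is 1 + k * step presses."""
    return lambda k: 1 + k * step


def pr_breakpoint(press_times: Iterable[float],
                  progression: Callable[[int], int],
                  timeout_s: float) -> int:
    """Return the breakpoint, defined here as the last ratio completed before
    the session ends (0 if no ratio was completed).

    The session is assumed to end once `timeout_s` seconds pass without a
    reinforcer (measured from session start or from the last reinforcer).
    """
    breakpoint_ratio = 0
    k = 0                  # index of the ratio currently in effect
    presses = 0            # presses made toward the current ratio
    last_reinforcer = 0.0
    for t in sorted(press_times):
        if t - last_reinforcer >= timeout_s:
            break          # termination criterion was met before this press
        presses += 1
        if presses == progression(k):
            breakpoint_ratio = progression(k)  # ratio completed: reinforcer delivered
            k += 1
            presses = 0
            last_reinforcer = t
    return breakpoint_ratio
```

For example, with `linear_progression(2)` the ratios are 1, 3, 5, 7, and so on; presses at 1 through 9 s complete the first three ratios, so if the next presses occur at 100 and 101 s, a 60 s timeout ends the session and the breakpoint is 5.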

Another way of controlling work requirements in an operant schedule is to vary the fixed-ratio (FR) requirement across different schedules. In untreated animals, the overall relationship between ratio size (i.e., the number of lever presses required per reinforcer) and response rate is an inverted U. Up to a point, animals adjust to increasing ratio requirements by increasing response output. However, if the ratio requirement is high enough (i.e., if the cost is too high), further increases in the requirement actually suppress responding. This pattern is known as ratio strain and is analogous to a breakpoint on the progressive-ratio schedule. For example, Aberman and Salamone (1999) studied a range of ratio schedules (FR1, FR4, FR16, and FR64) to assess the effects of nucleus accumbens dopamine depletions. FR1 performance was unaffected by dopamine depletion, and FR4 responding was only mildly and transiently suppressed; however, performance on the schedules with large ratio requirements (FR16 and FR64) was severely impaired. In fact, dopamine-depleted rats that lever pressed on the FR64 schedule made significantly fewer responses than those performing on the FR16 schedule. In behavioral economic terms, this pattern can be described as reflecting a change in the elasticity of demand for food reinforcement (Salamone et al., 2009).
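The behavioral economic reading of these data can be sketched by treating the FR requirement as the "price" of a reinforcer and the number of reinforcers earned as "consumption". Because total responses equal price times consumption, responding rises with the ratio while demand is inelastic (elasticity between 0 and -1) and falls once demand becomes elastic (elasticity below -1), which correspond to the ascending and descending limbs of the inverted U. The midpoint (arc) elasticity formula, the function names, and the response counts in the usage note are illustrative assumptions, not data or methods from the studies cited.

```python
import math
from typing import List, Sequence, Tuple


def arc_elasticity(p1: float, q1: float, p2: float, q2: float) -> float:
    """Midpoint (arc) elasticity of consumption q with respect to price p."""
    if q1 + q2 == 0:
        return math.nan  # undefined when nothing is earned at either price
    pct_q = (q2 - q1) / ((q1 + q2) / 2)
    pct_p = (p2 - p1) / ((p1 + p2) / 2)
    return pct_q / pct_p


def demand_curve(ratios: Sequence[int], responses: Sequence[int]) -> List[Tuple[int, int]]:
    """Treat each FR value as a price; reinforcers earned = completed ratios."""
    return [(fr, n // fr) for fr, n in zip(ratios, responses)]


def elasticities(ratios: Sequence[int], responses: Sequence[int]) -> List[float]:
    """Arc elasticity between each pair of adjacent FR values."""
    d = demand_curve(ratios, responses)
    return [arc_elasticity(p1, q1, p2, q2) for (p1, q1), (p2, q2) in zip(d, d[1:])]
```

For hypothetical response totals of 100, 320, 800, and 640 on FR1, FR4, FR16, and FR64, the reinforcers earned are 100, 80, 50, and 10, and the three arc elasticities are about -0.19, -0.38, and -1.11: demand is inelastic over the steps where responding climbs and becomes elastic at the step where responding drops. With the midpoint formula this correspondence is exact, since an arc elasticity above -1 holds precisely when price times consumption increases.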

One drawback of ratio schedules such as FR or progressive-ratio schedules is that, as the ratio level increases, reinforcement also becomes more intermittent, because completing a larger ratio takes longer. One way of controlling for this confound is to use tandem variable interval-fixed ratio (VI FR) schedules, which are interval schedules with a ratio requirement attached: once the interval has elapsed, the animal must complete the ratio to obtain the reinforcer. Comparing the effects of a manipulation on a VI schedule with an FR1 requirement attached with its effects on a comparable VI schedule with a higher ratio attached (FR5 or FR10) holds the time interval constant while the ratio requirement is varied independently. These schedules have been used to demonstrate that the effects of nucleus accumbens dopamine depletions or adenosine receptor antagonism grow larger as the ratio increases, even when the time interval requirement is controlled for (Correa et al., 2002; Mingote et al., 2005, 2008). Another way of controlling for the influence of time intervals is to compare the effects of a manipulation on progressive-ratio responding with its effects on a progressive-interval schedule (e.g., Wakabayashi et al., 2004; Ward et al., 2011).
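The tandem VI FR contingency can be sketched as a small schedule controller. This is a minimal illustration under stated assumptions, not the software used in the cited studies: presses made before the current interval has elapsed are assumed to have no programmed consequence, the next interval is assumed to start at reinforcer delivery, and the exponential interval generator is a hypothetical stand-in for whatever interval distribution a laboratory actually uses.

```python
import random
from typing import Callable


def exponential_intervals(mean_s: float, seed: int = 0) -> Callable[[], float]:
    """Hypothetical interval generator: exponentially distributed intervals with the given mean."""
    rng = random.Random(seed)
    return lambda: rng.expovariate(1.0 / mean_s)


class TandemVIFR:
    """Minimal tandem VI FR schedule controller (illustrative sketch).

    Once the current interval has elapsed, `fr` presses are required for the
    reinforcer. With fr=1 this reduces to an ordinary VI schedule.
    """

    def __init__(self, fr: int, next_interval: Callable[[], float], start_s: float = 0.0):
        self.fr = fr
        self.next_interval = next_interval
        self._start_interval(start_s)

    def _start_interval(self, now_s: float) -> None:
        self.interval_end = now_s + self.next_interval()
        self.count = 0  # presses counted toward the ratio component

    def press(self, t_s: float) -> bool:
        """Register a lever press at time t_s; return True if it earns a reinforcer."""
        if t_s < self.interval_end:
            return False  # interval component still running
        self.count += 1
        if self.count >= self.fr:
            self._start_interval(t_s)  # next interval starts at reinforcer delivery
            return True
        return False
```

Running the same press sequence through an FR1 and an FR5 controller built with identical interval generators keeps the interval component the same for both, so that only the ratio requirement differs, which is the comparison described above.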

As noted above, animals must continually make effort-related choices that involve assessments of work-related response costs and the potential benefits of responding. Tests of effort-related choice behavior (or effort-related decision making) generally involve tasks in which animals have choices between high effort/high reward and low effort/low reward options. There are several ways of assessing effort-related choice behavior in rodents. One of the procedures that has been used to assess effort-related choice behavior is a concurrent lever pressing/chow feeding task, which offers rodents the option of either lever pressing to obtain a relatively preferred food (e.g., high carbohydrate pellets; usually obtained by lever pressing on an FR5 schedule), or approaching and consuming a less preferred food (lab chow) that is concurrently available in the chamber (Salamone et al., 1991). Extensively trained rats under baseline or control conditions typically get most of their food by lever pressing and consume only small quantities of chow. Several dopamine receptor antagonists with different patterns of selectivity for the various dopamine receptors, including cis-flupenthixol, haloperidol, raclopride, eticlopride, SCH 23390, SKF83566, and ecopipam, all decreased lever pressing for food but substantially increased the intake of the concurrently available chow (Salamone et al., 1991, 1996, 2002; Cousins et al., 1994; Koch et al., 2000; Sink et al., 2008; Worden et al., 2009). Moreover, dopamine transporter (DAT) knockdown mice show the opposite pattern; they display increases in lever pressing and decreases in chow intake (Cagniard et al., 2006). The use of this task for assessing effort-related choice behavior has been validated in several studies. 
For example, the low dose of haloperidol that produced the shift from lever pressing to chow intake (0.1 mg/kg) neither affected total food intake nor altered preference between these two specific foods in free-feeding choice tests (Salamone et al., 1991). Whereas dopamine receptor antagonists have been shown to reduce FR5 lever pressing and increase chow intake, appetite suppressants from different classes, including amphetamines (Cousins et al., 1994), fenfluramine (Salamone et al., 2002), and cannabinoid CB1 receptor antagonists (Sink et al., 2008), did not increase chow intake at doses that suppressed lever pressing. Similarly, pre-feeding to reduce food motivation suppressed both lever pressing and chow intake (Salamone et al., 1991). In the absence of any drug treatment, raising the ratio requirement (up to FR20) caused rats to shift from lever pressing to chow intake (Salamone et al., 1997), indicating that this task is sensitive to workload. The concurrent FR/chow intake task also has been used to assess a model of motivational impairments in schizophrenia: increased dopamine D2 receptor expression in mice decreased both lever pressing and chow intake (Ward et al., 2012).

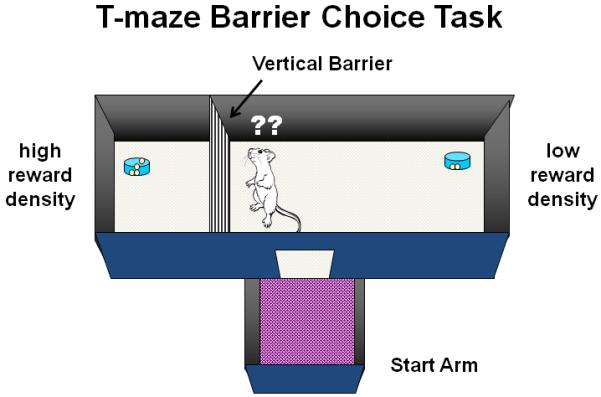

A T-maze barrier choice procedure has been developed to assess the effects of drug or lesion manipulations on effort-related decision making in rodents (Salamone et al., 1994). In this task, the two choice arms of the maze can have different reinforcement densities (e.g., 4 vs. 2 food pellets, or 4 vs. 0), and under some conditions a large barrier is placed in the arm with the higher density of food reinforcement to present the animal with an effort-related challenge. Administration of the dopamine receptor antagonist haloperidol and nucleus accumbens dopamine depletions dramatically affect choice behavior when the high-density arm (4 pellets) has the barrier in position and the arm without the barrier contains an alternative food source (2 pellets). Dopamine depletions or antagonism decrease the choice of the high-density arm and increase the choice of the low-density arm (Salamone et al., 1994; Cousins et al., 1996; Denk et al., 2005; Mott et al., 2009). The results of these T-maze studies in rodents, together with the findings from the operant concurrent choice studies reviewed above, indicate that low doses of dopamine receptor antagonists and nucleus accumbens dopamine depletions cause animals to reallocate their instrumental response selection based on the response requirements of the task and to select lower-effort alternatives for obtaining reinforcers. Like the operant concurrent choice task, the T-maze task for measuring effort-based choice behavior has undergone considerable behavioral validation and evaluation (Salamone et al., 1994; Cousins et al., 1996; Van den Bos et al., 2006). Although rats treated with dopamine receptor antagonists or given nucleus accumbens dopamine depletions are slower than rats tested under control conditions, the choice deficit does not appear to be secondary to a latency deficit (Salamone et al., 1994; Bardgett et al., 2009).
For example, although the increases in latency induced by nucleus accumbens dopamine depletions show rapid post-surgical recovery, the alteration in choice is much more persistent (Salamone et al., 1994). In addition, drug-induced effects on latency and arm choice in the T-maze are pharmacologically dissociable (Bardgett et al., 2009). When no barrier is placed in the arm with the high reinforcement density, rats mostly choose that arm, and neither haloperidol nor nucleus accumbens dopamine depletion alters their choice (Salamone et al., 1994). When the arm with the barrier contained four pellets but the other arm contained none, rats with nucleus accumbens dopamine depletions were very slow but still chose the high-density arm, climbed the barrier, and consumed the pellets (Cousins et al., 1996). A recent T-maze choice study with mice confirmed that haloperidol reduced the choice of the arm with the barrier and also demonstrated that haloperidol had no effect on choice when both arms had a barrier in place (Pardo et al., 2012). Thus, dopaminergic manipulations do not alter the preference for the higher reinforcement density over the lower one, and they do not affect discrimination or memory processes related to arm preference.
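The logic of these control conditions can be illustrated with a deliberately simple cost/benefit choice model. This is a hypothetical toy model introduced here for illustration, not a model proposed in the studies cited: each arm's net value is its pellet count minus `effort_weight` times its barrier cost (both in arbitrary units), and choice between the two arms follows a logistic (two-option softmax) rule with inverse temperature `beta`.

```python
import math


def p_choose_high(high_pellets: float, low_pellets: float,
                  high_barrier: float, low_barrier: float,
                  effort_weight: float, beta: float = 1.0) -> float:
    """Toy model: probability of entering the high-density arm.

    Net value of an arm = pellets - effort_weight * barrier cost (hypothetical
    units). Raising effort_weight stands in for a manipulation that makes
    effort more costly; it leaves the valuation of pellets unchanged.
    """
    v_high = high_pellets - effort_weight * high_barrier
    v_low = low_pellets - effort_weight * low_barrier
    return 1.0 / (1.0 + math.exp(-beta * (v_high - v_low)))
```

With 4 versus 2 pellets and a barrier (cost 1) only in the high-density arm, raising `effort_weight` from 0.5 to 3 moves choice from the high-density to the low-density arm. With barriers in both arms the effort terms cancel, so the choice probability is identical at both weights, and with 4 versus 0 pellets an `effort_weight` of 3 still favors the barrier arm. In this toy model, a pure increase in effort cost therefore reproduces the qualitative pattern of the controls described above without any change in how reward magnitude is valued.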

Recent experiments have used effort-discounting procedures to study the effects of dopaminergic manipulations. Bardgett et al. (2009) developed a T-maze effort-discounting task in which the amount of food in the high-density arm of the maze was reduced on each trial on which the rat selected that arm (i.e., an “adjusting-amount” variant of the T-maze procedure that allows an indifference point to be determined for each rat). Administration of either the dopamine D1 receptor family antagonist SCH 23390 or the D2 receptor family antagonist haloperidol altered effort discounting, making rats more likely to choose the arm with the smaller reward. Amphetamine blocked the effects of SCH 23390 and haloperidol and also biased rats toward choosing the high-reward/high-cost arm. Floresco et al. (2008) used operant procedures to study the effects of dopaminergic and glutamatergic drugs on both effort (i.e., ratio) and delay discounting; the dopamine receptor antagonist haloperidol altered effort discounting even when the effects of time delay were controlled for (Floresco et al., 2008).
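The adjusting-amount logic can be sketched as follows. The text specifies only that the high-density arm's reward is reduced each time that arm is chosen; the increment applied after a low-density choice, the step size, the bounds, and the definition of the indifference point as the mean amount over the last few trials are assumptions made for this illustration and may differ from the procedure of Bardgett et al. (2009). The `choose_high` callback is a hypothetical stand-in for the animal's choice on each trial.

```python
from typing import Callable, List, Tuple


def adjusting_amount_session(choose_high: Callable[[int], bool],
                             n_trials: int,
                             start: int = 4,
                             step: int = 1,
                             floor: int = 0,
                             last_k: int = 5) -> Tuple[List[int], float]:
    """Simulate one adjusting-amount session and estimate an indifference point.

    choose_high(amount) returns True if the subject enters the high-density
    (barrier) arm when that arm currently holds `amount` pellets.
    """
    amount = start
    history: List[int] = []  # amount in the high-density arm on each trial
    for _ in range(n_trials):
        history.append(amount)
        if choose_high(amount):
            amount = max(floor, amount - step)  # reduction after choosing the high-density arm
        else:
            amount = min(start, amount + step)  # assumed titration back toward the start value
    tail = history[-last_k:]
    return history, sum(tail) / len(tail)       # assumed indifference-point estimate
```

In this sketch, a simulated subject that enters the barrier arm only when it holds more than 2 pellets oscillates between 3 and 2 pellets and settles at an indifference point of 2.6 over ten trials, whereas one that requires more than 3 pellets settles at 3.4.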