Abstract

One of the most important aspects of visual attention is its flexibility; our attentional “window” can be tuned to different spatial scales, allowing us to perceive large-scale global patterns and local features effortlessly. We investigated whether the perception of global and local motion competes for a common attentional resource. Subjects viewed arrays of individual moving Gabors that group to produce a global motion percept when subjects attended globally. When subjects attended locally, on the other hand, they could identify the direction of individual uncrowded Gabors. Subjects were required to devote their attention to one scale of motion or to divide it between the global and local scales. We measured direction discrimination as a function of the validity of a precue, which was varied in opposite directions for global and local motion such that when the precue was valid for global motion, it was invalid for local motion and vice versa. There was a trade-off between global and local motion thresholds, such that increasing the validity of precues at one spatial scale simultaneously reduced thresholds at that spatial scale but increased thresholds at the other spatial scale. In a second experiment, we found a similar pattern of results for static oriented Gabors: Attending to local orientation information impaired the subjects’ ability to perceive globally defined orientation and vice versa. Thresholds were higher for orientation than for motion, however, suggesting that motion discrimination in the first experiment was not driven by orientation information alone but by motion-specific processing. The results of these experiments demonstrate that a shared attentional resource flexibly moves between different spatial scales and allows for the perception of both local and global image features, whether these features are defined by motion or orientation.

Keywords: attention, spatial scale, global, local, motion perception, integration, segmentation

Introduction

When watching the flight path of a flock of birds, there may be differences between the flock’s direction of motion and that of any individual bird. The visual system faces a nontrivial challenge when processing motion information available in the environment. To perceive useful information from cluttered, dynamic scenes, we need to be able to selectively segment and attend to some locations while ignoring others. Additionally, motion information often needs to be integrated across space, enabling the perception of global properties or grouped motion patterns (Braddick, 1993; Dakin, Mareschal, & Bex, 2005; Nakayama, 1985; Raymond, 2000; Smith, Snowden, & Milne, 1994; Watt & Phillips, 2000; Williams & Sekuler, 1984). Local motion and global motion, however, are often at odds, leading to ambiguities that complicate scene segmentation and grouping. Several lines of evidence suggest that to resolve the discrepancies that often arise between different spatial scales of analysis, the visual system relies on attention (Cavanagh, 1992; Hock, Balz, & Smollon, 1998; Michael & Desmedt, 2004; Raymond, 2000; Reynolds & Desimone, 1999; Sàenz, Buraĉas, & Boynton, 2003).

While the ability to switch attention between competing, spatially disparate events has been extensively studied, the mechanisms for switching attention between local and global attributes of the same stimuli are less well understood. Yet, this aspect of attention is one of the most compelling because it affects fundamental processes in vision: the segmentation and integration of images. Control over the spatial extent of our attentional “window” is essential for the flexible perception of global attributes and local details within a cluttered scene. The local motion of one particular bird in a flock, for example, seems just as recognizable as the global motion of the entire flock, as long as we attend to the appropriate spatial scale. Because both scales of motion are usually available and important in a given scene, it is necessary not only to flexibly change from one spatial scale to another but also to be able to divide attention between them (e.g., a driver must simultaneously attend to spatially global motion or optic flow for heading and to the local motion of pedestrians).

The processes of motion segmentation and integration occur in early visual areas in a nearly obligatory manner, beginning as early as the retina (Ölveczky, Baccus, & Meister, 2003). Psychophysical studies have revealed that spatial integration of motion varies with several stimulus properties including eccentricity, contrast, and duration (Bex & Dakin, 2005; Burr & Santoro, 2001; Melcher, Crespi, Bruno, & Morrone, 2004; Murakami & Shimojo, 1993; Neri, Morrone, & Burr, 1998; Sceniak, Ringach, Hawken, & Shapley, 1999; Tadin & Lappin, 2005; Tadin, Lappin, Gilroy, & Blake, 2003; Watamaniuk & McKee, 1998; Watamaniuk & Sekuler, 1992; Watt & Phillips, 2000). Several lines of evidence suggest that spatial summation or integration in cluttered scenes occurs passively and outside of awareness (Harp, Bressler, & Whitney, in press; Parkes, Lund, Angelucci, Solomon, & Morgan, 2001). Physiological studies have shown that as information is processed in higher visual areas, from V1 to MT+, there is greater spatial integration of directional motion signals (Britten & Heuer, 1999; Britten, Shadlen, Newsome, & Movshon, 1993; Seidemann & Newsome, 1999). This may help explain the perception of global motion in random-dot kinematograms (RDKs), plaids, and other stimuli (Adelson & Movshon, 1982; Nakayama, 1985; Raymond, 2000; Snowden, 1990).

Although some mechanisms of motion summation or integration are passive, it is now clear that top–down attention can affect the processing and perception of motion as well. Several electrophysiological and functional neuroimaging studies have shown that attention strongly modulates processing in motion-sensitive areas such as MT, MST, and even V1 (Culham, He, Dukelow, & Verstraten, 2001; Huk & Heeger, 2000; Robertson, 2003; Sasaki et al., 2001; Treue, 2001; Treue & Maunsell, 1996; Watanabe & Shimojo, 1998; Watanabe et al., 1998). Further, several neurological disorders suggest that deficits of attention may affect processing of visual information at different spatial scales; these include neglect, Balint’s syndrome, autism, and schizophrenia (Chen, Bidwell, & Holzman, 2005; Mottron, Burack, Iarocci, Belleville, & Enns, 2003; Plaisted, Swettenham, & Rees, 1999; Robertson, 2003).

The role of exogenous or stimulus-driven attention and top–down voluntary attentional guidance (endogenous attention) in local and global motion perception, however, remains controversial (Chong & Treisman, 2005; Duke et al., 1998; Hock et al., 1998; Melcher et al., 2004; Pomerantz, 1983; Raymond, O’Donnell, & Tipper, 1998; Watamaniuk & McKee, 1998; Watt & Phillips, 2000). For example, the psychophysical studies of Hock et al. (1998) show that the spatial spread of attention alters perceived motion. This could indicate that spatial integration of motion is, to some degree, under voluntary attentional control. However, given that integration and segmentation of dynamic scenes occur at multiple stages along the visual pathway, it is likely that both passive (bottom–up) and top–down mechanisms play a crucial role in the perception of multiple spatial scales of motion.

Although global and local information may be at odds with one another, it is probable that an observer has simultaneous access to both levels—at least to some degree. Watamaniuk and McKee (1998) had subjects judge both the local motion of one target dot and the global motion of an ensemble RDK. They found that the precision of motion judgments at either scale was largely unaffected by the degree to which the trajectories of the two scales differed. Additionally, motion discriminations at each scale were comparable in conditions where attention was equally divided between local and global spatial scales compared to trial runs in which only one spatial scale was judged throughout. Watamaniuk and McKee suggested that both local motion and global motion are detected simultaneously and in parallel with relatively little cost. In that study, however, there was a slight difference in the characteristics of the local stimulus and the individual components of the global stimuli, raising the possibility that preattentive segmentation processes could have contributed to the lack of attentional modulation.

The goal of the experiments here was to further characterize the role that voluntary attention plays in coding local and global visual motion in a dynamic scene. Specifically, we will test whether the perception of global and local motion shares a common attentional resource. To address this question, we presented subjects with arrays of moving elements and psychophysically measured direction discrimination of both local and global motion while subjects divided their attention between different spatial scales. By varying the validity of an attentional precue, we were able to measure the relative “cost” that allocating attention toward one spatial scale (e.g., local) had on the discrimination of the same (local) or other scale (global). The results will show that a common attentional resource allows for both global and local discriminations with a characteristic cost to thresholds when dividing attention between the spatial scales.

Methods

General

Four subjects with normal or corrected-to-normal vision participated in the main experiment (two of whom were naive to the purpose of the experiment). Two subjects from this group were used in a control and follow-up experiment. Stimuli were presented binocularly while the subject’s head rested on a chin rest at a distance of 57 cm in a darkened, sound-dampened room. Test stimuli were presented on a Sony Multiscan G520 color monitor controlled by a Macintosh G4 with a refresh rate of 100 Hz and a display resolution of 1,024 × 768 pixels. The luminance of the background was 35.1 cd/m². The CRT was linearized with a gamma correction, and physical linearity was confirmed using a Minolta CS100A photometer. Subjects reported psychophysical judgments with the computer keypad.
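The stimulus dimensions that follow are given in degrees of visual angle. As a minimal sketch of how such values map onto the display at the 57-cm viewing distance, the conversion can be written as below. The physical width of the visible screen is not reported in the text, so `screen_width_cm` is a hypothetical parameter that the reader would have to measure.

```python
import math

def deg_to_cm(angle_deg, viewing_distance_cm=57.0):
    """On-screen extent (cm) subtending angle_deg, centered on the line of sight."""
    return 2.0 * viewing_distance_cm * math.tan(math.radians(angle_deg) / 2.0)

def deg_to_pixels(angle_deg, screen_width_cm, screen_width_px=1024,
                  viewing_distance_cm=57.0):
    """Convert a visual angle to pixels.

    screen_width_cm is an assumption: the visible width of the monitor
    is not stated in the text.
    """
    return deg_to_cm(angle_deg, viewing_distance_cm) * screen_width_px / screen_width_cm

# At 57 cm, 1 deg of visual angle spans very nearly 1 cm on the screen.
one_degree_cm = deg_to_cm(1.0)
```

At this viewing distance, 1° corresponds to approximately 1 cm, which is why 57 cm is a convenient choice.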

Stimuli

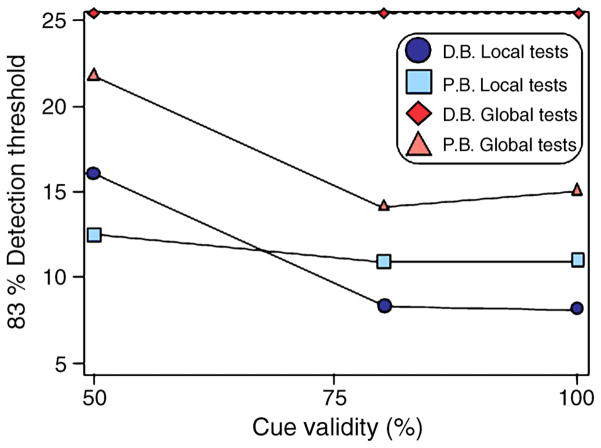

Stimuli consisted of two arrays of drifting Gabors (similar to Whitney, 2005, 2006). Each array contained 20 individual Gabors (Figure 1). Each Gabor consisted of a drifting sine-wave luminance carrier windowed by a static Gaussian contrast envelope. The spatial frequency of the carrier was 0.78 cycles/deg, and the Michelson contrast was 99%. The phase of each Gabor was set randomly at the beginning of each trial. The standard deviation of the Gaussian contrast envelope was 0.5°. The center-to-center separation between adjacent Gabors was 2.58° of visual angle. The local Gabor was centered 8° from fixation, and the center of the global array (i.e., the center of the 4 × 5 matrix of Gabors) was at an eccentricity of 12.5°. The temporal frequency of the carrier motion was 0.59 Hz.
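To make the Gabor definition concrete, the following is a minimal sketch of a single drifting Gabor using the parameter values stated above. It is not the authors' stimulus code. The angle convention (counterclockwise from rightward) and the assumption that the mean luminance of the patch equals the 35.1 cd/m² background are ours.

```python
import math

SPATIAL_FREQ_CPD = 0.78   # carrier spatial frequency, cycles/deg (from the text)
TEMPORAL_FREQ_HZ = 0.59   # carrier temporal frequency, Hz (from the text)
ENVELOPE_SD_DEG = 0.5     # SD of the Gaussian envelope, deg (from the text)
CONTRAST = 0.99           # Michelson contrast (from the text)

# Speed implied by the two carrier frequencies, in deg/s.
DRIFT_SPEED_DEG_PER_S = TEMPORAL_FREQ_HZ / SPATIAL_FREQ_CPD

def gabor_contrast(x_deg, y_deg, t_sec, direction_deg, phase_rad=0.0):
    """Signed contrast at (x, y), relative to the patch center, at time t.

    direction_deg is the drift direction measured counterclockwise from
    rightward (an assumed convention, not taken from the text).
    """
    theta = math.radians(direction_deg)
    # Position along the drift axis.
    u = x_deg * math.cos(theta) + y_deg * math.sin(theta)
    # The carrier moves toward +u as t increases; the envelope is static.
    carrier = math.sin(2.0 * math.pi * (SPATIAL_FREQ_CPD * u
                                        - TEMPORAL_FREQ_HZ * t_sec) + phase_rad)
    envelope = math.exp(-(x_deg ** 2 + y_deg ** 2) / (2.0 * ENVELOPE_SD_DEG ** 2))
    return CONTRAST * envelope * carrier

def luminance(contrast_value, background_cd_m2=35.1):
    """Luminance in cd/m^2, assuming the patch mean equals the background."""
    return background_cd_m2 * (1.0 + contrast_value)
```

With these values the carrier drifts at roughly 0.76 deg/s, and only the carrier moves; the envelope stays fixed on the screen.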

Figure 1.

Sample stimulus for local and global judgments. For global discriminations (red), subjects were required to judge which entire pattern was more upward moving (left, in this example). For local trials, subjects judged the relative motion of two elements (circled in blue) in the display (left, in this example).

The global motion direction was calculated as the arithmetic mean of the directions of the entire array of individual Gabors. The absolute global direction in the two arrays was randomly set within a 63° range centered around 45.5° clockwise (i.e., toward the upper right of the monitor from the subject’s perspective). With this stimulus display, the relative motion of the global Gabor arrays or individual elements can be more upward or more rightward. The difference in the global and local directions of motion between the two arrays could be one of six values from 0° to 15°, in 3° increments. This way, the local Gabors’ motion or the global direction of motion in the two arrays could be the same or could differ by up to 15°. The direction of motion in each individual Gabor (or local patch) was randomly determined within a 30° window, centered on the global motion direction.
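The sampling scheme described above can be sketched as follows. The text does not say whether the local directions were adjusted so that their mean matched the intended global direction exactly; the recentering step below is therefore an assumption, included because the mean of 20 unconstrained uniform draws would otherwise vary by about 2° from array to array. All function names are hypothetical.

```python
import random

DIFFERENCE_LEVELS_DEG = list(range(0, 16, 3))  # 0, 3, ..., 15 deg (from the text)

def sample_array_directions(global_deg, n_gabors=20, window_deg=30.0, rng=random):
    """Draw local directions uniformly within a window centered on global_deg."""
    half = window_deg / 2.0
    draws = [rng.uniform(-half, half) for _ in range(n_gabors)]
    # Assumption: remove the sampling offset so the array mean equals
    # global_deg exactly. Individual directions may then fall marginally
    # outside the nominal window.
    offset = sum(draws) / n_gabors
    return [global_deg + d - offset for d in draws]

def global_direction(directions_deg):
    """Arithmetic mean of the local directions, as defined in the text.

    A plain mean is adequate here because all directions lie within a
    narrow range and never wrap around 0/360 deg.
    """
    return sum(directions_deg) / len(directions_deg)

rng = random.Random(0)
example_array = sample_array_directions(45.5, rng=rng)
```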

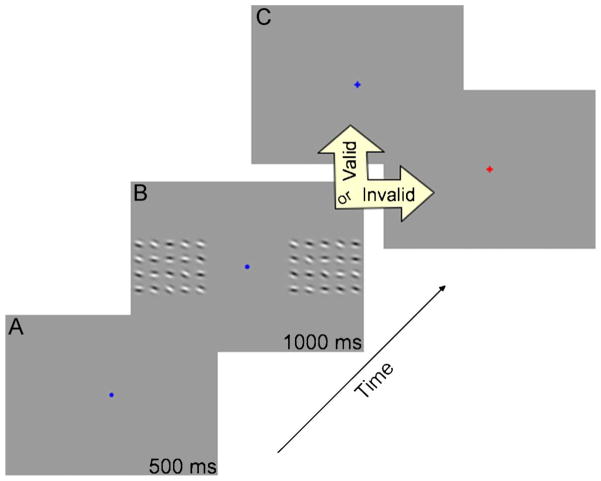

Task design

Subjects maintained fixation on a central dot (0.76° of visual angle) throughout all experiments. At the beginning of each trial, a colored precue was presented for 500 ms to indicate the spatial scale of the to-be-made motion discrimination (either local or global). A red flash indicated that subjects should attend to the global direction of motion, and a blue flash indicated that subjects should attend to the local motion of the nearest Gabor at the bottom of the arrays (circled in blue in Figure 1). Following the precue, the test arrays of Gabors were presented for 1,000 ms. The arrays were then removed, and, following a 500-ms delay, a blue or red postcue was presented at the fixation point until the subject responded. This postcue instructed subjects to judge either the local or the global motion. Figure 2 shows the sequence of each trial.

Figure 2.

Experimental task overview. (A) A color-coded attentional precue specified the spatial scale of the to-be-made motion discrimination. (B) Bilateral test arrays of drifting Gabors could drift more or less upward and contained both local and global motion. (C) Depending on the experimental condition, a postcue indicated whether subjects should judge local or global motion. The postcue either matched or did not match the precue (cue-valid and cue-invalid trials, respectively).

The precue validity, or degree to which the precue matched the postcue, was set at 100%, 80%, or 50% depending on the trial block. In the 100% cue-valid condition, the precue always matched the postcue, validly indicating the spatial scale of the test discrimination on every trial during that session. In the 50% cue-valid condition, the precue matched the postcue on only 50% of the trials (i.e., 50% of trials were cue valid, and the other 50% were cue invalid). In this condition, subjects should divide their attention equally across both scales of motion. In the 80% cue-valid condition, the precue matched the postcue on 80% of the trials, and the remaining 20% of the trials were cue invalid. Thresholds for the 20% invalid trials were not included in the analysis. In all sessions, subjects were informed of the precue validity for that trial block so that they could allocate attention between the global and local spatial scales accordingly. Precue validity was held constant throughout each block.

Depending on the color of the postcue, subjects judged the direction of either the global motion (mean direction difference across the arrays; red circles in Figure 1) or the local motion of two Gabors at the bottom of each array, closest to fixation (blue circles in Figure 1). Subjects judged which array (at the local or global scale) contained more vertically upward motion in a method of constant stimuli, two-alternative forced-choice direction discrimination task. For example, if the postcue was blue, subjects judged which array contained local motion in a more vertical direction. Each session consisted of 2 spatial scales, 6 angular separations (within a 15° range), and 20 trials at each of these 12 conditions, for a total of 240 motion discriminations per trial block. Each subject participated in at least four sessions at each cue validity (counterbalanced order), for a total of at least 2,880 trials. Subjects participated in at least one practice run at each cue validity. If a threshold was not calculable for the 100% validity condition, the subject participated in an additional practice run.
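The block structure and trial counts described above can be checked with a short sketch. It assumes that the 12 conditions are defined by the judged (postcued) scale and the angular separation, and that the stated validity applies within each condition; the text does not spell out either detail, and the helper name `build_block` is hypothetical.

```python
import random

SCALES = ("local", "global")
SEPARATIONS_DEG = (0, 3, 6, 9, 12, 15)
TRIALS_PER_CONDITION = 20

def build_block(validity, rng):
    """Return a shuffled list of trials for one block at a given precue validity."""
    n_valid = round(TRIALS_PER_CONDITION * validity)
    trials = []
    for judged in SCALES:
        other = "global" if judged == "local" else "local"
        for separation in SEPARATIONS_DEG:
            for i in range(TRIALS_PER_CONDITION):
                # Valid trials: precue names the scale that will be judged.
                precue = judged if i < n_valid else other
                trials.append({"precue": precue,
                               "postcue": judged,
                               "separation_deg": separation})
    rng.shuffle(trials)
    return trials

block = build_block(0.8, random.Random(1))
trials_per_block = len(SCALES) * len(SEPARATIONS_DEG) * TRIALS_PER_CONDITION
minimum_total_trials = trials_per_block * 4 * 3  # 4 sessions x 3 cue validities
```

Under these assumptions, each block contains 240 trials and the minimum total across four sessions at three validities is 2,880, matching the counts given in the text.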

Within each session, for each spatial scale (local and global), a logistic psychometric function was fit to the cumulative response probability (accuracy) as a function of the angular separation between the two arrays. As the separation in direction increased (for local or global direction), the accuracy with which subjects discriminated which array contained more vertically upward motion increased. The logistic function was expressed as

| (1) |

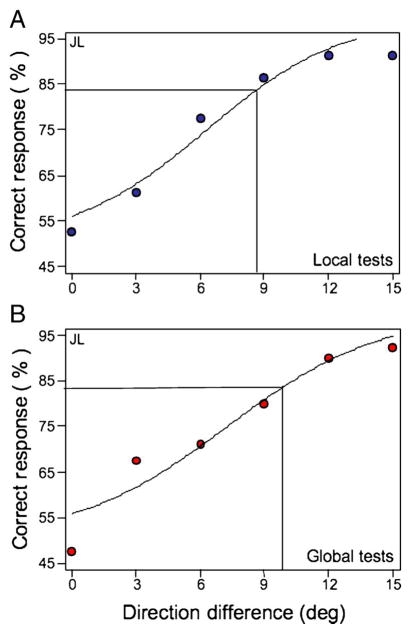

where α is the slope and β is the 83% correct threshold. Figure 3 displays single subject psychometric functions for local and global thresholds at 100% cue validity.

Figure 3.

Psychometric function showing 83% direction discrimination thresholds for a single subject in the 100% cue-valid condition. A logistic function was fit to the data for both local (top panel) and global (bottom panel) data.
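For readers who wish to reproduce this kind of analysis, the following is a minimal sketch of fitting a logistic psychometric function and reading off an 83% correct threshold. The parameterization is an assumption chosen so that β is directly the 83% point of a two-alternative task with a 50% guessing rate; it is not necessarily the form of Equation 1. The grid-search fit and the synthetic, noise-free data are likewise for illustration only.

```python
import math

TARGET = 0.83  # proportion correct defining threshold (from the text)
CHANCE = 0.5   # guessing rate in a two-alternative task

# Offset that makes p_correct(beta) equal TARGET under the assumed form.
_OFFSET = math.log((TARGET - CHANCE) / (1.0 - TARGET))

def p_correct(x, alpha, beta):
    """Assumed logistic: rises from CHANCE toward 1 with slope alpha."""
    z = alpha * (x - beta) + _OFFSET
    return CHANCE + (1.0 - CHANCE) / (1.0 + math.exp(-z))

def neg_log_likelihood(alpha, beta, xs, n_correct, n_trials):
    """Binomial negative log-likelihood of the data under the model."""
    total = 0.0
    for x, k, n in zip(xs, n_correct, n_trials):
        p = min(max(p_correct(x, alpha, beta), 1e-9), 1.0 - 1e-9)
        total -= k * math.log(p) + (n - k) * math.log(1.0 - p)
    return total

def fit_threshold(xs, n_correct, n_trials):
    """Coarse maximum-likelihood grid search; returns (alpha, beta)."""
    best = None
    for a_step in range(1, 201):      # alpha from 0.01 to 2.00
        alpha = a_step * 0.01
        for b_step in range(0, 301):  # beta from 0 to 30 deg in 0.1 steps
            beta = b_step * 0.1
            nll = neg_log_likelihood(alpha, beta, xs, n_correct, n_trials)
            if best is None or nll < best[0]:
                best = (nll, alpha, beta)
    return best[1], best[2]

# Synthetic, noise-free example (not data from the experiment).
xs = [0, 3, 6, 9, 12, 15]
n_trials = [20] * len(xs)
n_correct = [20 * p_correct(x, 0.3, 9.0) for x in xs]
alpha_hat, beta_hat = fit_threshold(xs, n_correct, n_trials)
```

A gradient-based optimizer would normally replace the grid search; the grid is used here only to keep the sketch dependency-free.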

Because the global motion direction is composed of local Gabor motion, the local direction of motion in the Gabors correlates with the global motion direction. However, subjects cannot reliably use the local motion to judge the global motion or vice versa. This is because the range of local motion directions is far wider than the range of global motion directions. Even if the global motion directions of the two arrays are separated by 15°, the two local Gabors can move in identical directions or can even differ in the direction opposite to the global difference. Therefore, the local motion is not reliably predictive of the global motion direction.

In a control experiment, we removed the precue to test whether the 50% precue in the first experiment was equivalent to having no precue. The experiment was identical to Experiment 1 with the exception that no precue was presented (the fixation point remained gray at all times) and two subjects made a dual judgment—consecutively reporting both local and global motion in the test display. The ordering of the judgments was balanced across trial runs.

Lastly, we ran a follow-up experiment with identical methods and stimuli as Experiment 1 with the exception that static Gabors were used instead of moving ones, and subjects made judgments of Gabor orientation rather than motion direction. The purpose of this experiment was to determine whether direction discrimination thresholds in the first experiment were determined entirely by static orientation information rather than motion processing per se. Further, this experiment tested whether global and local orientation judgments share an attentional resource.

Results

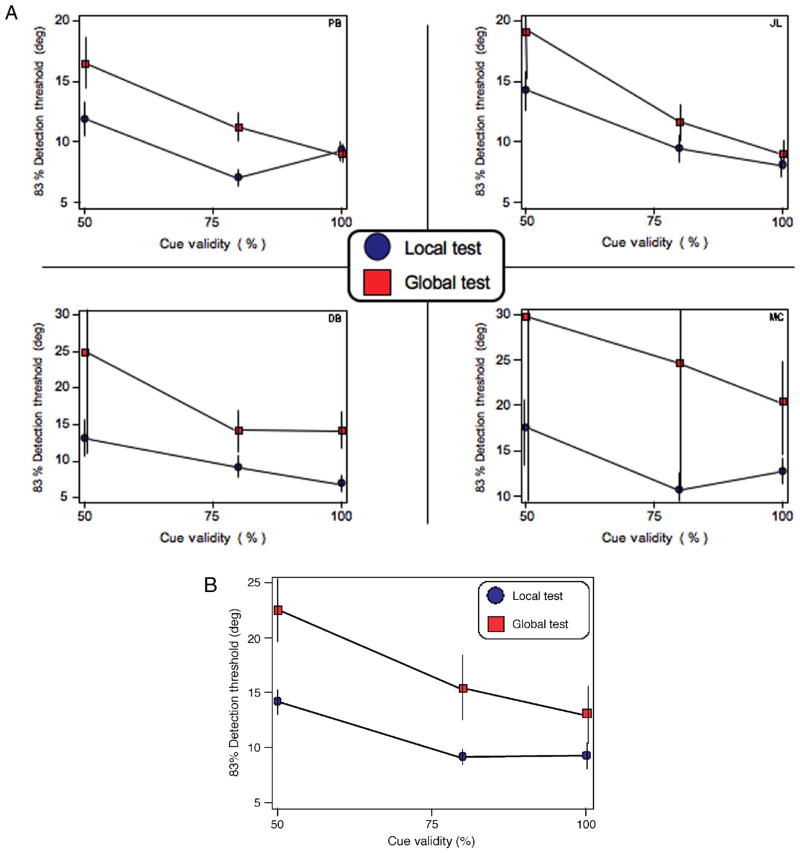

There was a significant effect of cue validity on the precision of motion direction discrimination for global and local spatial scales, F(2, 6) = 91.4, p < .01, η² = .968. Thus, there was an attentional trade-off between spatial scales: As subjects’ attention was increasingly directed toward one scale of motion (e.g., local), there was a significant decrease in the precision of judging motion at the opposing scale (e.g., global). Direction discrimination thresholds for local motion were lower (better) than those for global motion, although this difference was only marginally significant, F(1, 3) = 8.7, p = .06, η² = .74, and it did not mitigate the effect of cue validity. Figure 4 shows the individual and group data across spatial scales and all cue validities. A linear regression indicated that, on average, for every 10% decrease in local cue validity, there was a direction discrimination threshold elevation of 1.07°. A similar trend was found for global motion, with every 10% decrease in global cue validity resulting in a 1.93° threshold elevation (local motion: r = −.70, p = .011; global motion: r = −.61, p = .036). The difference in slopes in Figure 4B was reflected in a sizable but nonsignificant interaction between spatial scale (local vs. global) and cue validity, F(2, 6) = 4.4, p = .07, η² = .60.

Figure 4.

(A) Direction discrimination thresholds as a function of precue validity for four individual subjects. Each subject displayed a characteristic trade-off in direction discrimination thresholds: Increasing cue validity at one spatial scale reduced thresholds at that spatial scale while simultaneously elevating thresholds at the other spatial scale. The data presented at 50% cue validity in the graphs include both valid and invalid trials (Figure 5 separates the valid and invalid trials, for comparison). (B) Group results. There was a significant effect of cue validity on direction discrimination thresholds, F(2, 6) = 91.38, p < .01, η² = .968. Error bars denote ±SEM.
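As a consistency check on the reported statistics, the effect sizes can be recomputed from the F ratios and their degrees of freedom. This sketch assumes that the effect sizes reported alongside each F test are partial eta squared; the text does not name the measure, but the values agree with that reading to within rounding.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# (F, df_effect, df_error) as reported in the Results.
cue_validity = partial_eta_squared(91.4, 2, 6)   # main effect of cue validity
spatial_scale = partial_eta_squared(8.7, 1, 3)   # local vs. global thresholds
interaction = partial_eta_squared(4.4, 2, 6)     # scale x cue validity
```

The three values come out close to .968, .74, and .60, respectively; the small discrepancy for the interaction is within what rounding of the F ratio to one decimal place would produce.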

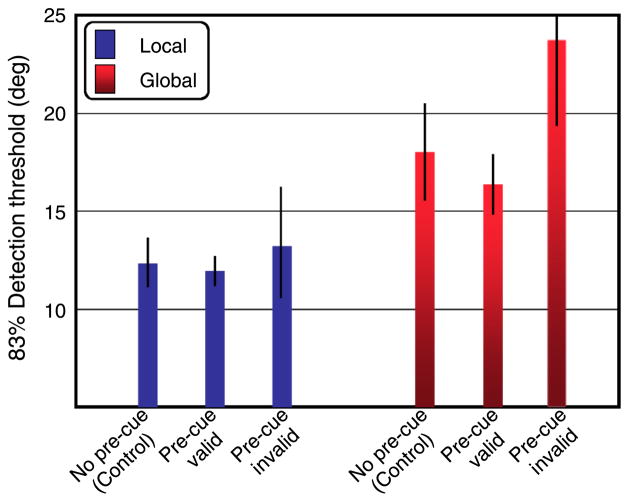

Both local and global motion thresholds were significantly higher (worse) when the judged spatial scale differed from the cued spatial scale. Interestingly, this was the case even in the 50% cue-valid condition, where the precue matched the postcue in half of the trials. Thresholds were lower on trials where the precue happened to match the test discrimination, for both local and global judgments, t(3) = 6.9, p < .01; t(3) = 2.83, p < .05. This pattern of results suggests that subjects were still using the pretrial cue in their strategy or allocation of their attentional resources despite understanding that the predictive power of the precue was no better than chance. In a control experiment, therefore, two subjects made consecutive global and local judgments after viewing test stimuli identical to those in the 50% cue condition but without a precue (see the Methods section). Figure 5 shows that discrimination thresholds for both global and local motion in the no-precue control condition fell directly between their individual thresholds for correctly and incorrectly cued trials in the original 50% condition.

Figure 5.

Results of a control experiment for two subjects. The discrimination thresholds for the no-precue control condition fell between the cue-valid and cue-invalid conditions (from Experiment 1) for both local and global scales, as expected. This pattern of results for the control condition indicates that subjects can simultaneously encode multiple scales of motion information regardless of the precue.

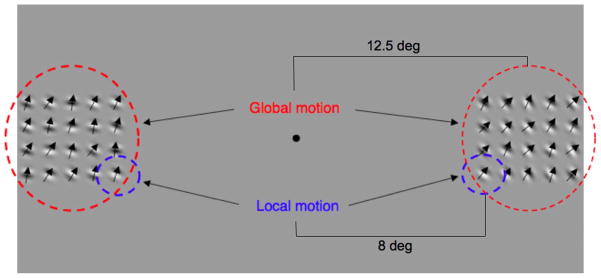

To address the question of whether the observed attentional trade-off was specific to motion or is a general property of spatial attention, we ran a follow-up experiment in which observers judged static oriented Gabors using the same procedure as the main experiment (Figure 6). Although global static orientation was successfully extracted by one observer, consistent with previous studies (Dakin & Watt, 1997), another subject was not able to discriminate the global orientation of the arrays of static Gabors (dashed line in Figure 6). This speaks to the relative ease with which motion is integrated over space, at least for the stimuli used here. In fact, for the subject who could make global discriminations in both static and moving arrays (subject P.B.), thresholds were significantly higher for static orientation than for motion direction, t(5) = −4.0, p = .01 (compare Figures 4A and 6). This suggests that static orientation information, although available, was not responsible for the fine discrimination thresholds in Experiment 1. Yet, the same general trade-off between spatial scales was observed for static stimuli as was found in the first experiment for moving stimuli (Figure 6): Attending to one spatial scale impaired discrimination at the other spatial scale. The results of this follow-up experiment suggest that the trade-off is a characteristic of spatial attention in general and is not specific to a particular feature (i.e., motion or orientation).

Figure 6.

Results of a follow-up experiment, using static Gabors, for two subjects. With increasing cue validity, thresholds declined, consistent with the first experiment. Threshold orientation discrimination at both local and global scales was higher than it was for motion direction discrimination (cf. Figure 4). Subject D.B. was unable to discriminate global orientation at any cue validity (dotted line).

Discussion

We measured direction discrimination thresholds for both local elements and global arrays as subjects’ attention was systematically divided across the spatial scales. This situation is surprisingly common in everyday visual scenes and requires us to flexibly change the size of our attentional “window”. The experiments here revealed that a common attentional resource is employed when judging both local and global motion (and orientation).

Our results are partly at odds with previous studies of motion encoding at multiple spatial scales. Watamaniuk and McKee (1998) found that subjects were able to judge both local and global motion directions equally well whether or not subjects knew beforehand which scale they were to judge. Further, the difference in trajectories between each type of motion in their RDK stimulus did not affect thresholds at either level. These authors suggested that motion information across different spatial scales is simultaneously encoded and equally available regardless of the spatial scale of the subject’s attentional focus. The “local” motion in Watamaniuk and McKee’s study, however, was different than the motion contained in the “global” stimulus. The individual dots that defined the global motion of the RDK had very brief lifetimes, and each followed a random-walk trajectory. The local motion was a single dot that followed a continuous trajectory that passed near the fovea and was visible 10 times longer than any of the dots that made up the global stimulus. This may have resulted in preattentive segmentation or popout of the local dot (Nothdurft, 1993, 2002; Rosenholtz, 1999), thereby reducing or even obviating the need for spatially directed attention. Consistent with this suggestion, it is known that motion can be preattentively coded (e.g., motion adaptation occurs under crowded conditions in which subjects are not aware of motion direction; Aghdaee, 2005; Aghdaee & Zandvakili, 2005; Whitney, 2005, 2006). In our stimulus, however, the local motion did not easily segment or pop out from the global motion—the local motion was, in all respects, identical to the individual elements that comprised the global motion. Spatially directed attention was therefore necessary.

The second experiment revealed a similar pattern of results for static orientation information as was found for local and global motion; attention to one spatial scale impaired discrimination of features at the other spatial scale. However, static orientation thresholds were substantially higher than those for local or global motion, suggesting that the results of the first experiment were specific to motion processing and not a by-product of orientation cues. Because there was a similar attentional trade-off for orientation and for motion judgments, it seems likely that the results here are due to a generalized mechanism of spatial attention, one that can operate at either local or global scales quite well but not both simultaneously or in parallel.

To make sense of natural scenes that contain motion information on several spatial scales, it is imperative that perception be flexible. The current study builds on previous experiments, suggesting that attention plays a strong modulating role in perceiving different scales of motion (e.g., Cavanagh, 1992; Hock et al., 1998; Raymond, 2000; Reynolds & Desimone, 1999; Sàenz et al., 2003). Although attention (to spatial scale and motion) is known to produce effects throughout the visual cortex, the neural mechanisms that control these effects (i.e., the source of the attentional modulation) remain unclear. Nevertheless, the present results demonstrate that global and local motion processes compete for common attentional resources, as attention toward one scale comes at a characteristic “cost” or loss in precision in discriminating the other scale. These findings could help in identifying the neural mechanism of spatial attention in future single-unit or neuroimaging studies. These results further imply that the processes of motion segmentation and integration at least partly overlap. That is, we can perceive the forest, or the trees, and sometimes both, and this dramatic perceptual ability arises from the flexibility in the spatial scale of motion processing that attention affords.

Conclusions

Attention can be flexibly distributed to detect motion at local or global scales but not in parallel. When attention is deployed toward one spatial scale, there is a characteristic cost to detecting motion on the other, less attended, scale. These findings suggest that a common attentional resource underlies the coding of motion across different spatial scales.

Acknowledgments

The authors would like to thank the anonymous reviewers for their thoughtful comments.

Footnotes

Commercial relationships: none.

Contributor Information

Paul F. Bulakowski, Email: pbulakowski@ucdavis.edu, Department of Psychology, University of California, Davis, USA, http://mindbrain.ucdavis.edu/people/pb

David W. Bressler, Email: bressler@berkeley.edu, University of California, Davis, USA, http://argentum.ucbso.berkeley.edu/david_b.html

David Whitney, Email: dwhitney@ucdavis.edu, Department of Psychology, University of California, Davis, USA, http://mindbrain.ucdavis.edu/content/Labs/Whitney/.

References

- Adelson EH, Movshon JA. Phenomenal coherence of moving visual patterns. Nature. 1982;300:523–525. doi: 10.1038/300523a0. [DOI] [PubMed] [Google Scholar]

- Aghdaee SM. Adaptation to spiral motion in crowding condition. Perception. 2005;34:155–162. doi: 10.1068/p5298. [DOI] [PubMed] [Google Scholar]

- Aghdaee SM, Zandvakili A. Adaptation to spiral motion: Global but not local motion detectors are modulated by attention. Vision Research. 2005;45:1099–1105. doi: 10.1016/j.visres.2004.11.012. [DOI] [PubMed] [Google Scholar]

- Bex PJ, Dakin SC. Spatial interference among moving targets. Vision Research. 2005;45:1385–1398. doi: 10.1016/j.visres.2004.12.001. [DOI] [PubMed] [Google Scholar]

- Braddick O. Segmentation versus integration in visual motion processing. Trends in Neurosciences. 1993;16:263–268. doi: 10.1016/0166-2236(93)90179-p. [DOI] [PubMed] [Google Scholar]

- Britten KH, Heuer HW. Spatial summation in the receptive fields of MT neurons. Journal of Neuroscience. 1999;19:5074–5084. doi: 10.1523/JNEUROSCI.19-12-05074.1999. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Britten KH, Shadlen MN, Newsome WT, Movshon JA. Responses of neurons in macaque MT to stochastic motion signals. Visual Neuroscience. 1993;10:1157–1169. doi: 10.1017/s0952523800010269. [DOI] [PubMed] [Google Scholar]

- Burr DC, Santoro L. Temporal integration of optic flow, measured by contrast and coherence thresholds. Vision Research. 2001;41:1891–1899. doi: 10.1016/s0042-6989(01)00072-4. [DOI] [PubMed] [Google Scholar]

- Cavanagh P. Attention-based motion perception. Science. 1992;257:1563–1565. doi: 10.1126/science.1523411. [DOI] [PubMed] [Google Scholar]

- Chen Y, Bidwell LC, Holzman PS. Visual motion integration in schizophrenia patients, their first-degree relatives, and patients with bipolar disorder. Schizophrenia Research. 2005;74:271–281. doi: 10.1016/j.schres.2004.04.002. [DOI] [PubMed] [Google Scholar]

- Chong SC, Treisman A. Attentional spread in the statistical processing of visual displays. Perception & Psychophysics. 2005;67:1–13. doi: 10.3758/bf03195009. [DOI] [PubMed] [Google Scholar]

- Culham J, He S, Dukelow S, Verstraten FA. Visual motion and the human brain: What has neuroimaging told us? Acta Psychologica. 2001;107:69–94. doi: 10.1016/s0001-6918(01)00022-1. [DOI] [PubMed] [Google Scholar]

- Dakin SC, Mareschal I, Bex PJ. Local and global limitations on direction integration assessed using equivalent noise analysis. Vision Research. 2005;45:3027–3049. doi: 10.1016/j.visres.2005.07.037. [DOI] [PubMed] [Google Scholar]

- Dakin SC, Watt RJ. The computation of orientation statistics from visual texture. Vision Research. 1997;37:3181–3192. doi: 10.1016/s0042-6989(97)00133-8. [DOI] [PubMed] [Google Scholar]

- Duke CC, Crewther SG, Lawson ML, Henry L, Kiely PM, West SJ, et al. Motion perception in global versus local attentional modes. Australian and New Zealand Journal of Ophthalmology. 1998;26:S114–S116. doi: 10.1111/j.1442-9071.1998.tb01357.x. [DOI] [PubMed] [Google Scholar]

- Harp T, Bressler D, Whitney D. Position shifts following crowded second order motion adaptation reveal processing of local and global motion without awareness. Journal of Vision. in press. doi: 10.1167/7.2.15.

- Hock HS, Balz GW, Smollon W. Attentional control of spatial scale: Effects on self-organized motion patterns. Vision Research. 1998;38:3743–3758. doi: 10.1016/s0042-6989(98)00023-6.

- Huk AC, Heeger DJ. Task-related modulation of visual cortex. Journal of Neurophysiology. 2000;83:3525–3536. doi: 10.1152/jn.2000.83.6.3525.

- Melcher D, Crespi S, Bruno A, Morrone MC. The role of attention in central and peripheral motion integration. Vision Research. 2004;44:1367–1374. doi: 10.1016/j.visres.2003.11.023.

- Michael GA, Desmedt S. The human pulvinar and attentional processing of visual distractors. Neuroscience Letters. 2004;362:176–181. doi: 10.1016/j.neulet.2004.01.062.

- Mottron L, Burack JA, Iarocci G, Belleville S, Enns JT. Locally oriented perception with intact global processing among adolescents with high-functioning autism: Evidence from multiple paradigms. Journal of Child Psychology and Psychiatry. 2003;44:904–913. doi: 10.1111/1469-7610.00174.

- Murakami I, Shimojo S. Motion capture changes to induced motion at higher luminance contrasts, smaller eccentricities, and larger inducer sizes. Vision Research. 1993;33:2091–2107. doi: 10.1016/0042-6989(93)90008-k.

- Nakayama K. Biological image motion processing: A review. Vision Research. 1985;25:625–660. doi: 10.1016/0042-6989(85)90171-3.

- Neri P, Morrone MC, Burr DC. Seeing biological motion. Nature. 1998;395:894–896. doi: 10.1038/27661.

- Nothdurft HC. The role of features in preattentive vision: Comparison of orientation, motion and color cues. Vision Research. 1993;33:1937–1958. doi: 10.1016/0042-6989(93)90020-w.

- Nothdurft HC. Attention shifts to salient targets. Vision Research. 2002;42:1287–1306. doi: 10.1016/s0042-6989(02)00016-0.

- Ölveczky BP, Baccus SA, Meister M. Segregation of object and background motion in the retina. Nature. 2003;423:401–408. doi: 10.1038/nature01652.

- Parkes L, Lund J, Angelucci A, Solomon JA, Morgan M. Compulsory averaging of crowded orientation signals in human vision. Nature Neuroscience. 2001;4:739–744. doi: 10.1038/89532.

- Plaisted K, Swettenham J, Rees L. Children with autism show local precedence in a divided attention task and global precedence in a selective attention task. Journal of Child Psychology and Psychiatry. 1999;40:733–742.

- Pomerantz JR. Global and local precedence: Selective attention in form and motion perception. Journal of Experimental Psychology: General. 1983;112:516–540. doi: 10.1037//0096-3445.112.4.516.

- Raymond JE. Attentional modulation of visual motion perception. Trends in Cognitive Sciences. 2000;4:42–50. doi: 10.1016/s1364-6613(99)01437-0.

- Raymond JE, O’Donnell HL, Tipper SP. Priming reveals attentional modulation of human motion sensitivity. Vision Research. 1998;38:2863–2867. doi: 10.1016/s0042-6989(98)00145-x.

- Reynolds JH, Desimone R. The role of neural mechanisms of attention in solving the binding problem. Neuron. 1999;24:111–125. doi: 10.1016/s0896-6273(00)80819-3.

- Robertson LC. Binding, spatial attention and perceptual awareness. Nature Reviews Neuroscience. 2003;4:93–102. doi: 10.1038/nrn1030.

- Rosenholtz R. A simple saliency model predicts a number of popout phenomena. Vision Research. 1999;39:3157–3163. doi: 10.1016/s0042-6989(99)00077-2.

- Sàenz M, Buraĉas GT, Boynton GM. Global feature-based attention for motion and color. Vision Research. 2003;43:629–637. doi: 10.1016/s0042-6989(02)00595-3.

- Sasaki Y, Hadjikhani N, Fischl B, Liu AK, Marrett S, Dale AM, et al. Local and global attention are mapped retinotopically in human occipital cortex. Proceedings of the National Academy of Sciences of the United States of America. 2001;98:2077–2082. doi: 10.1073/pnas.98.4.2077.

- Sceniak MP, Ringach DL, Hawken MJ, Shapley R. Contrast’s effect on spatial summation by macaque V1 neurons. Nature Neuroscience. 1999;2:733–739. doi: 10.1038/11197.

- Seidemann E, Newsome WT. Effect of spatial attention on the responses of area MT neurons. Journal of Neurophysiology. 1999;81:1783–1794. doi: 10.1152/jn.1999.81.4.1783.

- Smith AT, Snowden RJ, Milne AB. Is global motion really based on spatial integration of local motion signals? Vision Research. 1994;34:2425–2430. doi: 10.1016/0042-6989(94)90286-0.

- Snowden RJ. Suppressive interactions between moving patterns: Role of velocity. Perception & Psychophysics. 1990;47:74–78. doi: 10.3758/bf03208167.

- Tadin D, Lappin JS. Optimal size for perceiving motion decreases with contrast. Vision Research. 2005;45:2059–2064. doi: 10.1016/j.visres.2005.01.029.

- Tadin D, Lappin JS, Gilroy LA, Blake R. Perceptual consequences of centre-surround antagonism in visual motion processing. Nature. 2003;424:312–315. doi: 10.1038/nature01800.

- Treue S. Neural correlates of attention in primate visual cortex. Trends in Neurosciences. 2001;24:295–300. doi: 10.1016/s0166-2236(00)01814-2.

- Treue S, Maunsell JH. Attentional modulation of visual motion processing in cortical areas MT and MST. Nature. 1996;382:539–541. doi: 10.1038/382539a0.

- Watamaniuk SN, McKee SP. Simultaneous encoding of direction at a local and global scale. Perception & Psychophysics. 1998;60:191–200. doi: 10.3758/bf03206028.

- Watamaniuk SN, Sekuler R. Temporal and spatial integration in dynamic random-dot stimuli. Vision Research. 1992;32:2341–2347. doi: 10.1016/0042-6989(92)90097-3.

- Watanabe K, Shimojo S. Attentional modulation in perception of visual motion events. Perception. 1998;27:1041–1054. doi: 10.1068/p271041.

- Watanabe T, Sasaki Y, Miyauchi S, Putz B, Fujimaki N, Nielsen M, et al. Attention-regulated activity in human primary visual cortex. Journal of Neurophysiology. 1998;79:2218–2221. doi: 10.1152/jn.1998.79.4.2218.

- Watt RJ, Phillips WA. The function of dynamic grouping in vision. Trends in Cognitive Sciences. 2000;4:447–454. doi: 10.1016/s1364-6613(00)01553-9.

- Whitney D. Motion distorts perceived position without awareness of motion. Current Biology. 2005;15:324–326. doi: 10.1016/j.cub.2005.04.043.

- Whitney D. Contribution of bottom–up and top–down motion processes to perceived position. Journal of Experimental Psychology: Human Perception and Performance. 2006;32:1380–1397. doi: 10.1037/0096-1523.32.6.1380.

- Williams DW, Sekuler R. Coherent global motion percepts from stochastic local motions. Vision Research. 1984;24:55–62. doi: 10.1016/0042-6989(84)90144-5.