Abstract

In a previous paper we reported the frequency selectivity, temporal resolution, nonlinear cochlear processing, and speech recognition in quiet and in noise for 5 listeners with normal hearing (mean age 24.2 years) and 17 older listeners (mean age 68.5 years) with bilateral, mild sloping to profound sensory hearing loss (Gifford et al., 2007). Since that report, 2 additional participants with hearing loss completed experimentation for a total of 19 listeners. Of the 19 with hearing loss, 16 ultimately received a cochlear implant. The purpose of the current study was to provide information on the pre-operative psychophysical characteristics of low-frequency hearing and speech recognition abilities, and on the resultant postoperative speech recognition and associated benefit from cochlear implantation. The current preoperative data for the 16 listeners receiving cochlear implants demonstrate: 1) reduced or absent nonlinear cochlear processing at 500 Hz, 2) impaired frequency selectivity at 500 Hz, 3) normal temporal resolution at low modulation rates for a 500-Hz carrier, 4) poor speech recognition in a modulated background, and 5) highly variable speech recognition (from 0 to over 60% correct) for monosyllables in the bilaterally aided condition. As reported previously, measures of auditory function were not significantly correlated with pre- or post-operative speech recognition – with the exception of nonlinear cochlear processing and preoperative sentence recognition in quiet (p=0.008) and at +10 dB SNR (p=0.007). These correlations, however, were driven by the data obtained from two listeners who had the highest degree of nonlinearity and preoperative sentence recognition. All estimates of postoperative speech recognition performance were significantly higher than preoperative estimates for both the ear that was implanted (p<0.001) as well as for the best-aided condition (p<0.001). 
It can be concluded that older individuals with mild sloping to profound sensory hearing loss have very little to no residual nonlinear cochlear function, resulting in impaired frequency selectivity as well as poor speech recognition in modulated noise. These same individuals exhibit highly significant improvement in speech recognition in both quiet and noise following cochlear implantation. For older individuals with mild to profound sensorineural hearing loss who have difficulty in speech recognition with appropriately fitted hearing aids, there is little to lose in terms of psychoacoustic processing in the low-frequency region and much to gain with respect to speech recognition and overall communication benefit. These data further support the need to consider factors beyond the audiogram in determining cochlear implant candidacy, as older individuals with relatively good low-frequency hearing may exhibit vastly different speech perception abilities – illustrating the point that signal audibility is not a reliable predictor of performance on supra-threshold tasks such as speech recognition.

Keywords: cochlear implant, older, aging, psychoacoustic function, low-frequency hearing, bimodal, frequency resolution, temporal resolution, speech recognition

Background

Individuals with considerable low-frequency hearing are receiving cochlear implants at an increasing rate. Current U.S. Food and Drug Administration (FDA) labeled candidacy indications include individuals with moderate sloping to profound sensorineural hearing loss. Thus it is logical that greater attention has been placed on understanding and describing the psychoacoustic properties of low-frequency hearing (e.g., Gifford et al., 2007, 2010; He et al., 2008; Brown and Bacon, 2009; Peters and Moore, 1992), since individuals are combining electric and acoustic hearing either across ears (bimodal hearing) or within the same ear in cases of hearing preservation with cochlear implantation.

Psychophysical estimates of frequency selectivity obtained by deriving auditory filter (AF) shapes using the notched-noise method (Patterson et al., 1982) have shown frequency selectivity to be negatively correlated with audiometric threshold at the signal frequency (fs) (e.g., Peters and Moore, 1992). Thus for individuals with even mild to moderate hearing loss in the lower frequency region, impaired frequency selectivity is not unexpected. Impaired frequency selectivity is associated with broadened auditory filters, which smear speech spectra across adjacent filters, resulting in significantly poorer speech intelligibility, particularly in the presence of background noise (e.g., Baer and Moore, 1994; Moore and Glasberg, 1993).

Audiometric threshold is also negatively correlated with nonlinear cochlear processing. In other words, increases in sensory hearing loss are associated with greater dysfunction and/or destruction of outer hair cells – which are responsible for the active or nonlinear cochlear mechanism. Individuals with mild to moderate hearing loss are expected to demonstrate reduced nonlinear cochlear processing, a mechanism responsible for high sensitivity, broad dynamic range, sharp frequency tuning, and enhanced spectral contrasts via suppression. Thus, any reduction in the magnitude of the nonlinearity may result in one or more functional deficits, including impaired speech recognition.

Given the known relationships between hearing loss, active cochlear mechanics, and spectral resolution, one might hypothesise that individuals with hearing loss rely more heavily upon temporal resolution for speech and sound recognition. Research has shown that temporal resolution in the apical cochlea of individuals with relatively good low-frequency hearing should be comparable to that of a normal-hearing listener under conditions of the same restricted listening bandwidth (e.g., Bacon and Viemeister, 1985; Bacon and Gleitman, 1992). Thus, it is reasonable to believe that when combining acoustic and electric hearing in bimodal listening, normal or near-normal low-frequency acoustic temporal resolution will be associated with high speech recognition performance.

Speech recognition in the presence of a temporally modulated background noise (as compared to a steady-state noise) provides an estimate of the degree of masking release or the listener’s ability to listen in the dips. Past research has shown that listeners with hearing loss (e.g., Bacon et al., 1998) and listeners with cochlear implants (e.g., Nelson et al., 2003) demonstrate either a reduced or an absent masking release relative to listeners with normal hearing. It is believed that the degree of masking release represents a functional measure of temporal resolution. In particular, the masking of speech by 100% modulated noise is probably dominated by forward masking (e.g., Bacon et al., 1998) – for which temporal resolution will impact performance. Qin and Oxenham (2003) examined the effects of simulated cochlear-implant processing on speech perception in quiet, steady-state maskers and in temporally fluctuating maskers. They found that even with a large number of processing channels, the effects of simulated implant processing were more detrimental to speech intelligibility in the presence of the temporally complex masker than in the steady-state masker. Thus, speech perception measures in a temporally fluctuating background may provide a more realistic description of the listening and recognition difficulties experienced by cochlear implant recipients.

In a previous study, we reported on the psychophysical measures of frequency selectivity, temporal resolution, nonlinear cochlear processing, and speech recognition in quiet and in noise, for 5 listeners with normal hearing (mean age 24.2 years) and 17 listeners (mean age 68.5 years) with bilateral sensory hearing loss with audiograms that would have qualified for the North American clinical trial of Med El’s electric and acoustic stimulation (EAS) device or the Nucleus Hybrid implant (Gifford et al., 2007). Since that report, 2 additional participants with hearing loss completed experimentation, for a total of 19 listeners. Of the 19 with hearing loss, 16 ultimately received a cochlear implant. Thus the purpose of the current project was to provide, for these 16 older individuals with mild sloping to profound sensory hearing loss, information on the pre-operative psychophysical characteristics of low-frequency auditory function and speech recognition, and on the resultant postoperative speech recognition and associated benefit from cochlear implantation.

Methods

Participants

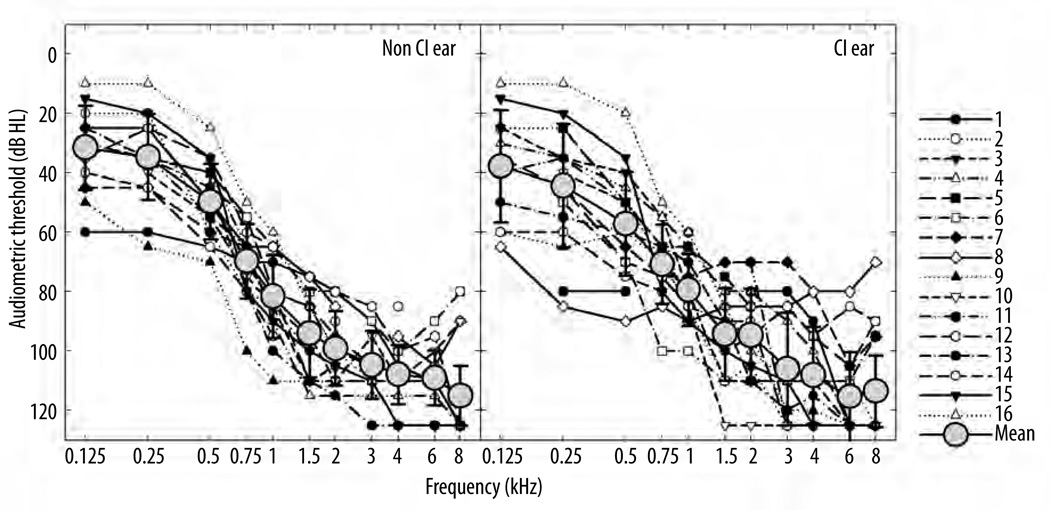

Sixteen participants (12 male, 4 female) with hearing loss were evaluated. The participants had been previously recruited for a preoperative study examining psychophysical function of low-frequency hearing (Gifford et al., 2007). All preoperative estimates of psychoacoustic function were obtained monaurally in the ear to be implanted, as per the referenced 2007 study. These listeners then went on to receive a cochlear implant, which allowed for a comparison of pre- and post-implant auditory function. The mean age was 67.7 years with a range of 47 to 85 years. All participants were paid an hourly wage for their participation. Figure 1 displays, for all participants, individual and mean preoperative audiometric thresholds for the implanted and non-implanted ears. The preoperative low-frequency pure tone average (LF PTA, mean threshold for 125, 250, and 500 Hz) in the ear to be implanted is shown in Table 1. Preoperative inclusion criteria for the study required that all participants meet, for at least one ear, the audiometric threshold criteria for inclusion in the North American clinical trial of EAS as outlined by Med El Corporation (e.g., Gifford et al., 2007) or for the Nucleus Hybrid S8 device as outlined by Cochlear Americas (Gantz et al., 2009). It is important to note, however, that although the listeners had EAS-qualifying audiograms, they did not undergo hearing preservation surgery with the EAS or the Hybrid device. Rather, all study participants chose to undergo conventional cochlear implantation with a standard long electrode. Participant demographic data including age at implantation, device implanted, and months of experience with the implant at the test point are shown in Table 1.

Figure 1.

Individual and mean preoperative audiometric thresholds, in dB HL, for the ear to be implanted as well as the non-implanted ear. Error bars represent ±1 standard deviation.

Table 1.

Individual and mean demographic data including age at implantation, device implanted, months experience with implant at test point, and preoperative low-frequency pure tone average (LF PTA) in the implanted ear, in dB HL. Also displayed are individual and mean psychoacoustic estimates of frequency selectivity [equivalent rectangular bandwidth (ERB) of the auditory filter in Hertz], nonlinear cochlear function (Schroeder phase effect, SPE, in dB), amplitude modulation (AM) detection thresholds [average of 16 and 32 Hz in dB (20 log m)], and the speech reception threshold (SRT, in dB SNR) for steady-state (SS) and square-wave (SQ) noise. All psychoacoustic data were obtained preoperatively in the ear to be implanted. A horizontal line indicates that auditory filter shape could not be derived. See results section for additional detail.

| Subject | Age at CI (yrs) | Device | Months experience | Pre-CI LF PTA (dB HL) | ERB (Hz) | SPE at 500 Hz (dB) | Mean AM detection threshold, 16–32 Hz (dB) | SRT, SS, SQ (dB SNR) |
|---|---|---|---|---|---|---|---|---|
| 1 | 55 | CI24RE(CA) | 7 | 61.7 | − | −0.8 | −16.6 | >20, >20 |
| 2 | 78 | HR90K 1j | 8 | 33.3 | 276 | −1.5 | −17.7 | >20, >20 |
| 3 | 77 | CI24RE(CA) | 6 | 33.3 | 199 | 2.8 | −15.5 | 17, >20 |
| 4 | 85 | HR90K 1j | 6 | 35.0 | 235 | 2.0 | −17.4 | >20, >20 |
| 5 | 84 | HR90K 1j | 7 | 37.5 | 236 | 7.0 | −23.9 | 17.3, 16.0 |
| 6 | 80 | CI24RE(CA) | 5 | 36.7 | 259 | 2.8 | −16.7 | >20, >20 |
| 7 | 67 | CI24RE(CA) | 18 | 50.0 | 134 | 13.9 | −22.1 | 14.7, 11.7 |
| 8 | 47 | CI24RE(CA) | 39 | 33.3 | 232 | 5.6 | −18.5 | >20, >20 |
| 9 | 70 | CI24RE(CA) | 28 | 61.7 | − | 1.2 | −19.8 | >20, >20 |
| 10 | 77 | HR90K 1j | 12 | 47.5 | 222 | 0.5 | −21.3 | 16.3, 9.7 |
| 11 | 75 | HR90K 1j | 7 | 51.7 | 281 | 0.2 | −21.6 | >20, >20 |
| 12 | 64 | HR90K 1j | 18 | 31.7 | − | 5.0 | −20.9 | 8.0, 8.0 |
| 13 | 62 | HR90K 1j | 23 | 35.0 | 229 | 5.7 | −22.6 | 17.0, 13.0 |
| 14 | 62 | CI24RE(CA) | 20 | 50.0 | 178 | 2.7 | −24.0 | 15.7, 12.7 |
| 15 | 48 | CI24RE(CA) | 70 | 23.3 | − | −3.5 | −22.6 | >20, >20 |
| 16 | 52 | CI24RE(CA) | 15 | 15.0 | 338 | 15.8 | −18.7 | 10.7, 7.7 |
| Mean | 67.7 | N/A | 18.1 | 39.8 | 234.9 | 3.7 | −20.0 | 14.6, 11.3 |
| St dev | 12.5 | N/A | 16.8 | 12.9 | 52.2 | 5.2 | 2.8 | 3.4, 3.0 |

General laboratory procedures

Recorded speech recognition stimuli were presented in the sound field via a single loudspeaker placed in front of the subject (0° azimuth) at a distance of 1 meter. The calibrated presentation level for the speech recognition stimuli was 70 dB SPL (A weighted). Stimuli used in the measurement of low-frequency acoustic processing were presented monaurally via Sennheiser HD250 stereo headphones. All psychophysical testing utilised an adaptive, three-interval, forced-choice paradigm with a decision rule to track 79.4% correct (Levitt, 1971). Stimuli were generated and produced digitally with a 20-kHz sampling rate. All gated stimuli were shaped with 10-ms cos² rise/fall times. All test stimuli were temporally centered within the masker. Interstimulus intervals were 300 ms in all masking experiments. Testing was completed in a double-walled sound booth.
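The 79.4% tracking point follows from the transformed up-down logic of Levitt (1971). As a minimal sketch, assuming the decision rule was a three-down, one-up rule (the text states only the target percentage, so the rule itself is an inference, and the function name is illustrative), the convergence point can be computed as follows.

```python
def n_down_one_up_target(n_down):
    """Proportion correct at which an n-down, 1-up staircase converges.

    The track is stationary when the probability of n consecutive correct
    responses equals 0.5, that is, when p ** n_down == 0.5.
    """
    return 0.5 ** (1.0 / n_down)


for n in (1, 2, 3):
    target = 100 * n_down_one_up_target(n)
    print(f"{n}-down/1-up converges at {target:.1f}% correct")
# The 3-down/1-up line prints 79.4% correct, matching the value in the text.
```

Under this assumption, the signal (or masker) level at threshold corresponds to the level yielding roughly 79.4% correct in the three-interval task.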

Stimuli and conditions

Frequency resolution

As discussed in Gifford et al. (2007), frequency resolution was assessed by deriving auditory filter (AF) shapes using the notched-noise method (Patterson, 1976) in a simultaneous-masking paradigm. Each noise band (0.4 times fs) was placed symmetrically or asymmetrically about the 500-Hz signal (Stone et al., 1992). The signal was fixed at 10 dB above absolute threshold [or 10 dB sensation level (SL)], and the masker level was varied adaptively. The masker and signal were 400 and 200 ms in duration, respectively.

Temporal resolution

Temporal resolution was assessed via both amplitude modulation (AM) detection and speech recognition in temporally modulated noise. Amplitude modulation detection was assessed for modulation rates from 4 to 32 Hz, in octave steps. The 500-Hz carrier was fixed at 20 dB SL and gated with each 500-ms observation interval. Modulation depth was varied adaptively. Level compensation was applied to the modulated stimulus (Viemeister, 1979).
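To make the units concrete, the sketch below converts a modulation threshold expressed in dB (20 log m) to modulation depth m and applies a power-equalising gain of the kind described by Viemeister (1979). It assumes sinusoidal amplitude modulation with unit carrier amplitude and omits the onset and offset ramps; the function names and the 16-Hz default rate are illustrative choices, not details taken from the study.

```python
import math


def depth_from_db(threshold_db):
    """Convert a modulation threshold in dB (20 log m) to modulation depth m."""
    return 10 ** (threshold_db / 20)


def compensation_gain(m):
    """Gain that keeps overall power constant for sinusoidal AM of depth m.

    The power of [1 + m*sin(.)] times a carrier is (1 + m**2 / 2) times the
    carrier power, so the waveform is scaled by the inverse square root.
    """
    return 1 / math.sqrt(1 + m * m / 2)


def sam_tone(m, fm=16.0, fc=500.0, dur=0.5, fs=20000):
    """Level-compensated, sinusoidally amplitude-modulated tone (no ramps)."""
    gain = compensation_gain(m)
    n_samples = int(round(dur * fs))
    return [gain * (1 + m * math.sin(2 * math.pi * fm * n / fs))
            * math.sin(2 * math.pi * fc * n / fs) for n in range(n_samples)]


def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))


m = depth_from_db(-20.0)  # group mean threshold reported in Table 1
print(round(m, 3))        # 0.1, i.e. 10% modulation depth
print(round(rms(sam_tone(m)) / rms(sam_tone(0.0)), 6))  # 1.0: equal overall level
```

With the compensation applied, the modulated and unmodulated intervals have the same overall level, so detection cannot be based on an intensity cue.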

Speech recognition in temporally modulated noise was assessed via speech reception threshold (SRT) for the Hearing in Noise Test (HINT; Nilsson et al., 1994) using sentences in both steady-state (SS) and 10-Hz square wave (SQ, 100% modulation depth) noise. The noise spectrum was shaped to match the long-term average spectrum of the HINT sentences. The noise was fixed at an overall level of 70 dB SPL and the sentences were varied adaptively to achieve 50% correct. The SRT was achieved by concatenating two 10-sentence HINT lists that were presented as a single run. The last six presentation levels for sentences 15 through 20 were averaged to provide an SRT for that run. Two runs were completed per condition and the SRTs were averaged to yield a final SRT for each listening condition. Prior to data collection, every subject was presented with a trial run of 20 sentences for task familiarisation in both the bimodal and best-aided EAS listening conditions. The difference in the thresholds for the SS and SQ noises provides a measure of masking release or the listener’s ability to “listen in the dips” to obtain information about the speech stimulus and is thought to reflect a measure of temporal resolution (e.g., Bacon et al., 1998).

Nonlinear cochlear processing

Nonlinear cochlear processing was assessed via masked thresholds for 500-Hz signals in the presence of both positively scaled (m+) and negatively scaled (m–) Schroeder phase harmonic complexes (e.g., Schroeder, 1970; Lentz and Leek, 2001). The m+ and m– Schroeder phase harmonic complexes have identical flat envelopes, as they are simply time-reversed versions of one another. However, the m+ complexes tend to be less effective maskers. Researchers have hypothesised that the difference in masking effectiveness results from the m+ complexes producing a more peaked response along the basilar membrane (BM), coupled with fast-acting compression (e.g., Carlyon and Datta, 1997; Recio and Rhode, 2000; see also Oxenham and Dau, 2001). This effect is maximised when the phase curvature of the harmonic complex is equal in magnitude, but opposite in sign, to the phase curvature of the auditory filter in which the complex is centered. Masker overall level was fixed at 75 dB SPL (63.9 dB SPL per component) and signal level was varied adaptively. The masker spectrum ranged from 200 to 800 Hz with a fundamental frequency of 50 Hz. The durations of the masker and signal were 400 and 200 ms, respectively. The signal was placed in the temporal center of the masker.
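The per-component level and the time-reversal relation between the two maskers can be checked numerically. The sketch below is illustrative only: it assumes equal-amplitude components and the phase rule θ_k = ±πk(k − 1)/N indexed over the N components present, which is one common form of the Schroeder (1970) rule; the exact indexing and sign convention used in the study are not stated in the text.

```python
import math

F0 = 50.0                 # fundamental frequency (Hz), from the text
LOW, HIGH = 200.0, 800.0  # masker spectrum limits (Hz), from the text
OVERALL_DB = 75.0         # overall masker level (dB SPL), from the text
FS = 20000                # sampling rate (Hz), from the general procedures

# Harmonic numbers whose frequencies fall within the masker passband.
harmonics = [n for n in range(1, int(HIGH / F0) + 1) if n * F0 >= LOW]
n_comp = len(harmonics)

# Equal-amplitude components: each sits 10*log10(N) dB below the overall level.
per_component_db = OVERALL_DB - 10 * math.log10(n_comp)


def schroeder_phases(sign):
    """Component phases; sign=+1 is taken as m+, sign=-1 as m- (assumed convention)."""
    return [sign * math.pi * k * (k - 1) / n_comp for k in range(1, n_comp + 1)]


def one_period(sign):
    """One period (1/F0) of the complex with unit component amplitudes."""
    phases = schroeder_phases(sign)
    n_samples = int(FS / F0)
    return [sum(math.cos(2 * math.pi * h * F0 * t / FS + ph)
                for h, ph in zip(harmonics, phases))
            for t in range(n_samples)]


m_plus, m_minus = one_period(+1), one_period(-1)
print(n_comp, round(per_component_db, 1))  # 13 63.9
```

Thirteen components between 200 and 800 Hz reproduce the 63.9 dB SPL per-component level given in the text, and negating every phase yields the time-reversed waveform, which is why the two maskers share the same envelope and long-term spectrum.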

Estimates of speech recognition in quiet and in noise

Preoperative speech recognition was assessed for all participants for words, sentences, and sentences in noise in the sound field at a calibrated presentation level of 70 dB SPL. Word recognition was assessed using one 50-item list of the consonant-nucleus-consonant (CNC; Peterson and Lehiste, 1962) monosyllables. Sentence recognition was assessed using two 20-sentence lists of the AzBio sentences (Spahr et al., 2012) presented in quiet as well as at +10 and +5 dB SNR (4-talker babble). The same metrics and presentation levels were used for all listeners both pre- and post-implant.

Results

Psychophysical estimates of auditory function

Auditory filter (AF) shapes were derived using a roex (p,k) model (Patterson et al., 1982) and the bandwidth was characterised in terms of equivalent rectangular bandwidth [(ERB), Glasberg and Moore, 1990]. The individual and mean preoperative AF bandwidth values for the implanted ears are shown in Table 1. AF shapes could not be derived for four of the participants (#1, 9, 12, and 15) given that the probe could not be masked for the widest notch condition at the highest allowable masker spectrum level (50 dB SPL); for these four listeners, the ERB values were listed as horizontal dashed lines indicating that the AF shape and corresponding ERB could not be determined. The mean AF width, and associated standard deviation, was 234.9 and 52.2 Hz, respectively (with a range of 134 to 338 Hz). As reported by Gifford and colleagues (2007), mean AF width for young listeners with normal hearing on this same task was 104 Hz with a range of 78 to 120 Hz. Thus even preoperatively, the participants with EAS-qualifying audiograms – who had relatively good low-frequency hearing – exhibited impaired frequency selectivity.
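For orientation, the ERB values above can be related to the filter slope parameter and to the published normative ERB function. The sketch below uses two standard relations: the Glasberg and Moore (1990) formula ERB = 24.7(4.37F + 1), with F in kHz, and the identity ERB = 4fc/p for a symmetric, single-parameter roex(p) filter. The filters in the study were fitted with a roex(p,k) model and asymmetric notches, so the p values printed here are only an approximation under the symmetric assumption; the function names are illustrative.

```python
def erb_normal_hz(fc_hz):
    """Normative ERB in Hz (Glasberg and Moore, 1990): 24.7 * (4.37 * F + 1), F in kHz."""
    return 24.7 * (4.37 * fc_hz / 1000.0 + 1.0)


def roex_p_from_erb(erb_hz, fc_hz=500.0):
    """Slope parameter p of a symmetric roex(p) filter, for which ERB = 4 * fc / p."""
    return 4.0 * fc_hz / erb_hz


FC = 500.0
groups = [("normal-hearing mean (Gifford et al., 2007)", 104.0),
          ("hearing-impaired mean (Table 1)", 234.9)]
for label, erb in groups:
    print(f"{label}: ERB = {erb} Hz, p = {roex_p_from_erb(erb, FC):.1f}")

print(f"Normative ERB at 500 Hz: {erb_normal_hz(FC):.1f} Hz")   # 78.7 Hz
print(f"Impaired / normal mean ERB: {234.9 / 104.0:.2f}")       # 2.26
```

On these numbers the mean auditory filter of the hearing-impaired group was a little more than twice as wide as that of the young normal-hearing listeners tested with the same procedure.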

Individual and mean modulation detection thresholds for the temporal modulation transfer function (TMTF) averaged across 16 and 32 Hz are listed in Table 1. The mean modulation detection threshold averaged across 16 and 32 Hz was –20.0 dB with a range of –24.0 to –15.5 dB. As reported in Gifford et al. (2007), the mean TMTF threshold averaged across 16 and 32 Hz for the normal-hearing listeners was –18.5 dB with a range of –23.2 to –11.8 dB. Consistent with what was reported in our prior work, temporal resolution, as determined by modulation detection at relatively low rates, was normal in this population of hearing-impaired listeners.

Individual and mean SRTs for the preoperative HINT in both the steady-state (SS) and square-wave (SQ) background noises are listed in Table 1. Within the hearing-impaired group, there were 8 listeners who could not achieve 50% correct even at +20 dB SNR – these listeners’ SRTs are displayed as >20. Mean SRTs for the 8 listeners who were able to complete the task for the SS noise and the 7 listeners able to complete the task for the SQ noise were 14.6 and 11.3 dB SNR, respectively. For the listeners with normal hearing reported in Gifford et al. (2007), mean SRTs were –2.7 and –17.5 dB SNR for the SS and SQ noises. Thus the normal-hearing listeners exhibited considerable temporal release from masking or the ability to listen in the dips. As compared to the listeners with normal hearing, the hearing-impaired listeners showed little to no benefit from listening in the dips of a modulated noise masker.
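Masking release, defined in the methods as the difference between the SS and SQ thresholds, can be computed directly from the Table 1 entries for the listeners with a measurable SRT in both noises. The per-listener differences below are derived here for illustration and are not values reported in the source; listener #3 is omitted because the SQ threshold exceeded +20 dB SNR.

```python
# (SS, SQ) speech reception thresholds in dB SNR, copied from Table 1 for the
# seven listeners with a measurable SRT in both noise types.
srt_db = {
    5: (17.3, 16.0),
    7: (14.7, 11.7),
    10: (16.3, 9.7),
    12: (8.0, 8.0),
    13: (17.0, 13.0),
    14: (15.7, 12.7),
    16: (10.7, 7.7),
}

# Masking release = SRT in steady-state noise minus SRT in square-wave noise.
release_db = {subject: ss - sq for subject, (ss, sq) in srt_db.items()}
mean_release = sum(release_db.values()) / len(release_db)

# Group mean SRTs for the normal-hearing listeners (Gifford et al., 2007).
nh_release = -2.7 - (-17.5)

for subject, value in release_db.items():
    print(f"listener {subject}: {value:.1f} dB")
print(f"hearing-impaired mean: {mean_release:.1f} dB")  # 3.0 dB
print(f"normal-hearing mean:   {nh_release:.1f} dB")    # 14.8 dB
```

Even among the listeners who could complete the task, the release from masking was on the order of 3 dB, compared with nearly 15 dB for the normal-hearing group.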

Estimates of nonlinear cochlear processing, as defined by the peak-to-valley threshold differences for the m+ and m– Schroeder-masked thresholds, are shown in Table 1 for individual participants as well as for the mean. The mean Schroeder phase effect (SPE) was 3.7 dB with a range of –3.5 to 15.8 dB. For the individuals with normal hearing in Gifford et al. (2007), the mean SPE was 18.0 dB with a range of 14.5 to 21.5 dB. Thus the majority of individuals with hearing loss exhibited little to no residual nonlinear cochlear function.

Speech recognition

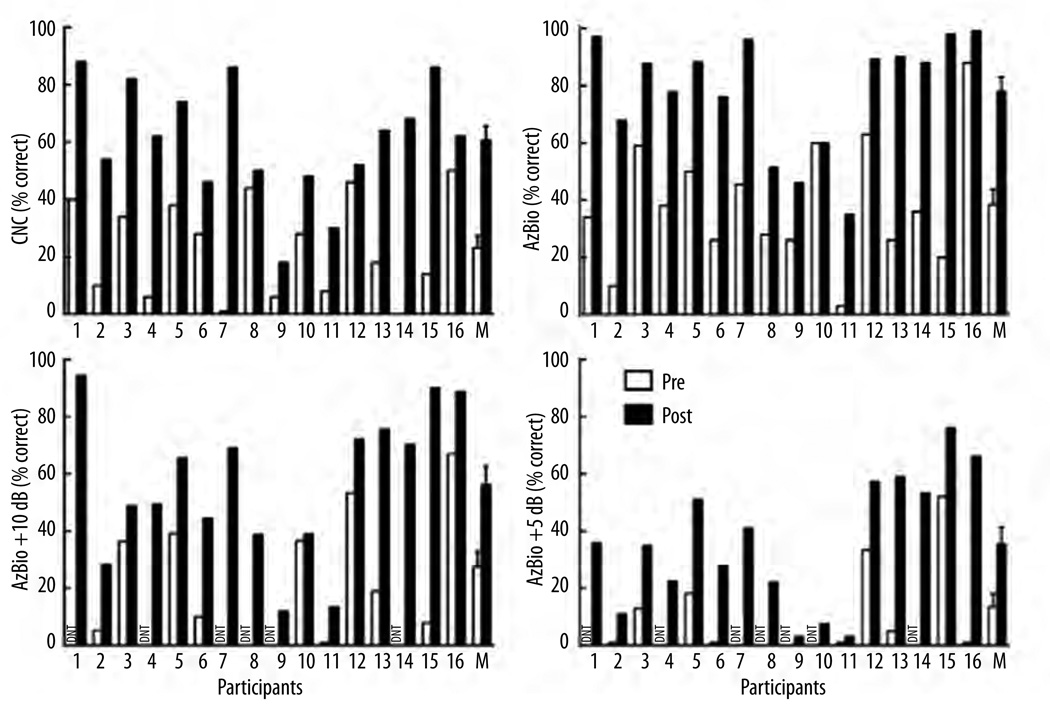

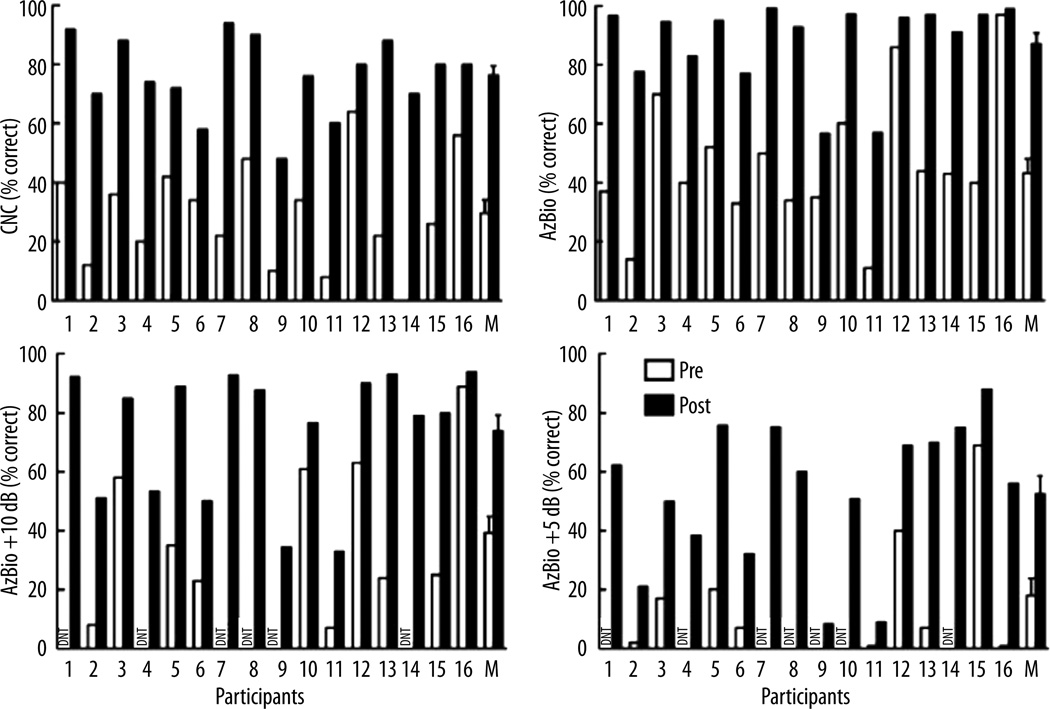

Individual and mean speech recognition scores for both the pre- and post-implant conditions are displayed in Figure 2 and Figure 3 for the ear that was implanted and for the best-aided condition, respectively. For any given measure administered preoperatively, there was considerable variability across listeners, with inter-subject differences up to 85 percentage points for CNC word recognition. This variability represents nearly the entire range of possible scores for a group of individuals who had relatively similar preoperative EAS-like audiograms. Post-operatively, all participants exhibited improvement in performance for both the implanted ear and the best-aided condition. At the group level, postoperative performance was significantly higher than preoperative performance for all measures tested. A two-way analysis of variance was completed with metric and test point (pre- versus post-implant) as the variables. The analysis revealed a highly significant effect of test point (F(1,15)=53.5, p<0.001) such that postoperative performance was significantly higher than preoperative performance. There was also an effect of metric (F(1,3)=31.1, p<0.001), which was not unexpected given that performance levels differ across word recognition, sentence recognition in quiet, and sentence recognition in various levels of background noise. There was no interaction between test point and metric (p=0.51); that is, the postoperative improvement did not vary as a function of the administered speech metric.

Figure 2.

Individual and mean speech recognition scores for the ear that was implanted in the preoperative (unfilled bars) and postoperative (filled bars) conditions for CNC monosyllabic words, and AzBio sentences in quiet, at +5 dB, and at +10 dB SNR. Error bars represent ±1 standard error.

Figure 3.

Individual and mean speech recognition scores for the best aided condition in the preoperative (unfilled bars, bilaterally aided) and postoperative (filled bars, bimodal hearing) conditions for CNC monosyllabic words, and AzBio sentences in quiet, at +5 dB, and +10 dB SNR. Error bars represent ±1 standard error.

Individual speech recognition performance was assessed using a binomial distribution statistic for a 50-item list of monosyllabic words (Thornton and Raffin, 1978) and the AzBio sentences (Spahr et al., 2012). At the individual level, postoperative CNC word recognition was significantly higher for all but 4 listeners (#6, 10, 12, and 16) in the implanted ear and all but 1 listener (#12) for the best-aided condition. For AzBio sentences in quiet, individual performance was significantly higher in the ear that was implanted for 14 of the 16 listeners (excluding #9 and 10); note that post-implant performance for participant #9 was 20 percentage points higher in the implanted ear, but did not reach significance for 2-list administration (Spahr et al., 2012). Comparing the best-aided conditions pre- and post-implant, AzBio sentence recognition was significantly better for all 16 listeners postoperatively. For AzBio sentences at +10 dB SNR, 14 of the 16 listeners (excluding #3 and 10) exhibited significantly higher postoperative performance as compared to preoperative listening in the ear that was implanted. In a comparison of the best-aided conditions, all but 1 listener exhibited statistically significant improvement in performance for AzBio sentences at +10 dB SNR; the exception, participant #10, did not reach significance despite a 17-percentage-point improvement. For AzBio sentence recognition at +5 dB SNR, all individuals exhibited significant improvement in performance for both the ear that was implanted and the best-aided condition.
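The reason a sizeable raw improvement can fail to reach significance is the test-retest variability inherent in a score based on a finite number of items. The sketch below illustrates the idea with a plain normal approximation to the binomial; it is a simplified stand-in, not the published critical-difference tables of Thornton and Raffin (1978) or Spahr et al. (2012), and the example scores are hypothetical.

```python
import math


def score_sd(p, n_items):
    """Standard deviation of a proportion-correct score under a binomial model."""
    return math.sqrt(p * (1 - p) / n_items)


def z_difference(p1, p2, n_items):
    """Approximate z statistic for the difference between two scores.

    Both scores are assumed to come from lists of n_items independent items;
    a pooled proportion is used for the standard error.
    """
    pooled = (p1 + p2) / 2
    se = math.sqrt(2 * pooled * (1 - pooled) / n_items)
    if se == 0:
        return 0.0  # both scores at floor or ceiling: no measurable difference
    return (p2 - p1) / se


# Variability of a 50% score on a 50-item word list, in percentage points.
print(round(100 * score_sd(0.5, 50), 1))        # 7.1

# Hypothetical pre/post scores on a 50-item list.
print(round(z_difference(0.30, 0.60, 50), 2))   # 3.02, beyond the 1.96 criterion
print(round(z_difference(0.40, 0.50, 50), 2))   # 1.01, within the 1.96 criterion
```

Under this approximation, a 10-percentage-point change on a 50-item list falls well within chance variability, whereas a 30-point change does not.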

In an attempt to relate speech recognition performance to psychophysical function, correlational analyses were completed. The psychophysical metrics used for correlation were AF width in ERBs, AM detection threshold in dB (20 log m) averaged across 16 and 32 Hz, degree of masking release in dB, and SPE in dB. Each of the four psychophysical metrics was compared to performance on the preoperative and postoperative measures of speech recognition in the ear that was implanted. For the majority of Pearson product moment correlation analyses, there were no significant correlations between the psychophysical metrics and speech recognition performance. The exceptions were SPE versus preoperative AzBio sentence recognition in quiet (r=0.64, p=0.008) and at +10 dB SNR (r=0.79, p=0.007). These correlations, however, were primarily driven by data for two participants (#7 and 16) who exhibited the highest SPE as well as the highest preoperative speech recognition performance. No correlations were found to be significant in preoperative measures of psychoacoustic function and postoperative speech recognition in the same ear.
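As a rough check on how a correlation coefficient maps onto significance, the t statistic for a Pearson r can be computed from r and the sample size. The sketch below assumes that all 16 listeners contributed to the correlation in quiet; the text does not state the number of listeners entering each correlation, so n is an assumption and the function name is illustrative.

```python
import math


def t_from_r(r, n):
    """t statistic (n - 2 degrees of freedom) for a Pearson correlation r."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# SPE versus preoperative AzBio sentence recognition in quiet.
t_quiet = t_from_r(0.64, 16)  # n = 16 is assumed, not stated in the text
print(round(t_quiet, 2))      # 3.12
```

With 14 degrees of freedom, the two-tailed critical value at the 0.01 level is approximately 2.98, so a t of about 3.12 is consistent with the reported p value of 0.008.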

Conclusions

The primary goal of this analysis was to revisit data collected for 16 individuals with EAS-qualifying audiograms describing psychoacoustic function for low-frequency hearing (Gifford et al., 2007) in the preoperative condition as compared to postoperative performance for standard clinical measures of speech recognition. As reported by Gifford et al. (2007) there were significant impairments noted in frequency selectivity, masking release (the difference in SRT between the SS and SQ conditions), and nonlinear cochlear processing for the individuals with EAS-qualifying audiograms in the preoperative listening condition. Temporal resolution at low modulation rates was essentially equivalent to that observed in young normal-hearing listeners.

Fourteen of the original 17 individuals with hearing loss reported in Gifford et al. (2007) went on to receive a cochlear implant, and 2 additional participants were recruited for pre- and post-implant testing. Thus these data offer a unique look at pre-implant estimates of psychoacoustic function as well as pre- and post-implant speech recognition abilities for individuals with EAS-like audiograms.

Preoperative speech recognition performance was highly variable across the listeners, and in some conditions the range of performance covered nearly the entire possible range of scores. This range was observed in individuals who all had EAS-qualifying audiograms. Thus these data support the need to consider factors beyond the audiogram, as signal audibility is not a reliable predictor of performance on supra-threshold tasks such as speech recognition. Further, preoperative scores were, in some cases, much higher than expected for a traditional implant candidate. Despite having relatively good sentence recognition abilities, all individuals in the current study reported considerable difficulty with everyday communication (which precipitated an appointment for preoperative cochlear implant candidacy evaluation). Further, nearly all listeners exhibited significant improvement in speech recognition performance when considering individual subject performance using a binomial distribution statistic, and all listeners demonstrated an improvement in raw performance scores.

The current results suggest that individuals with EAS-like hearing loss have little to lose in terms of psychoacoustic low-frequency function and much to gain in terms of speech understanding, representing a highly favorable assessment of risk versus benefit. Further, preoperative measures of tonal detection (i.e., audiometric thresholds), frequency resolution, and temporal resolution were not correlated with post-implant speech recognition. Thus it is critical to consider the whole patient when determining implant candidacy, as neither the audiogram nor pre-implant speech recognition will accurately predict the degree of postoperative benefit with a cochlear implant. These data also provide further evidence for the expansion of adult cochlear implant criteria to include individuals with low-frequency thresholds in even the near-normal range, as significant postoperative benefit is noted for speech understanding.

Acknowledgements

This work was supported by NIDCD grants F32 DC006538 (RHG), R01 DC009404, and DC00654 (MFD).

Footnotes

A portion of these data were presented at the 2012 Med El Presbycusis Meeting in Munich.

References

1. Bacon SP, Opie JM, Montoya DY. The effects of hearing loss and noise masking on the masking release for speech in temporally complex backgrounds. J Speech Lang Hear Res. 1998;41:549–563. doi: 10.1044/jslhr.4103.549.

2. Bacon SP, Gleitman RM. Modulation detection in subjects with relatively flat hearing losses. J Speech Lang Hear Res. 1992;35:642–653. doi: 10.1044/jshr.3503.642.

3. Bacon SP, Viemeister NF. Temporal modulation transfer functions in normal-hearing and hearing-impaired listeners. Audiology. 1985;24:117–134. doi: 10.3109/00206098509081545.

4. Baer T, Moore BCJ. Effects of spectral smearing on the intelligibility of sentences in the presence of interfering speech. J Acoust Soc Am. 1994;95:2277–2280. doi: 10.1121/1.408640.

5. Brown CA, Bacon SP. Low-frequency speech cues and simulated electric-acoustic hearing. J Acoust Soc Am. 2009;125:1658–1665. doi: 10.1121/1.3068441.

6. Carlyon RP, Datta J. Excitation produced by Schroeder-phase complexes: Evidence for fast-acting compression in the auditory system. J Acoust Soc Am. 1997;101:3636–3647. doi: 10.1121/1.418324.

7. Gantz BJ, Hansen MR, Turner CW, et al. Hybrid 10 clinical trial: preliminary results. Audiol Neurotol. 2009;14(Suppl.1):32–38. doi: 10.1159/000206493.

8. Gifford RH, Dorman MF, Spahr AJ, Bacon SP. Auditory function and speech understanding in listeners who qualify for EAS surgery. Ear Hear. 2007;28:114S–118S. doi: 10.1097/AUD.0b013e3180315455.

9. Gifford RH, Dorman MF, Brown CA. Psychophysical properties of low-frequency hearing: implications for perceiving speech and music via electric and acoustic stimulation. Adv Otorhinolaryngol. 2010;67:51–60. doi: 10.1159/000262596.

10. Glasberg BR, Moore BCJ. Derivation of auditory filter shapes from notched-noise data. Hear Res. 1990;47:103–138. doi: 10.1016/0378-5955(90)90170-t.

11. He NJ, Mills JH, Ahlstrom JB, Dubno JR. Age-related differences in the temporal modulation transfer function with pure-tone carriers. J Acoust Soc Am. 2008;124:3841–3849. doi: 10.1121/1.2998779.

12. Lentz JJ, Leek MR. Psychophysical estimates of cochlear phase response: masking by harmonic complexes. J Assoc Res Otolaryngol. 2001;2:408–422. doi: 10.1007/s101620010045.

13. Levitt H. Transformed up-down methods in psychoacoustics. J Acoust Soc Am. 1971;49:467–477.

14. Moore BCJ, Glasberg BR. Simulation of the effects of loudness recruitment and threshold elevation on the intelligibility of speech in quiet and in a background of speech. J Acoust Soc Am. 1993;94:2050–2062. doi: 10.1121/1.407478.

15. Nelson PB, Jin SH, Carney AE, Nelson DA. Understanding speech in modulated interference: cochlear implant users and normal-hearing listeners. J Acoust Soc Am. 2003;113(2):961–968. doi: 10.1121/1.1531983.

16. Nilsson MJ, Soli SD, Sullivan J. Development of a hearing in noise test for the measurement of speech reception threshold. J Acoust Soc Am. 1994;95:1085–1099. doi: 10.1121/1.408469.

17. Oxenham AJ, Dau T. Reconciling frequency selectivity and phase effects in masking. J Acoust Soc Am. 2001;110:1525–1538. doi: 10.1121/1.1394740.

18. Patterson RD. Auditory filter shapes derived with noise stimuli. J Acoust Soc Am. 1976;59:640–654. doi: 10.1121/1.380914.

19. Patterson RD, Nimmo-Smith I, Weber DL, Milroy R. The deterioration of hearing with age: frequency selectivity, the critical ratio, the audiogram and speech threshold. J Acoust Soc Am. 1982;72:1788–1803. doi: 10.1121/1.388652.

20. Peters RW, Moore BCJ. Auditory filter shapes at low center frequencies in young and elderly hearing-impaired subjects. J Acoust Soc Am. 1992;91(1):256–266. doi: 10.1121/1.402769.

21. Peterson GE, Lehiste I. Revised CNC lists for auditory tests. J Speech Hear Disord. 1962;27:62–70. doi: 10.1044/jshd.2701.62.

22. Qin MK, Oxenham AJ. Effects of envelope-vocoder processing on F0 discrimination and concurrent-vowel identification. Ear Hear. 2005;26:451–460. doi: 10.1097/01.aud.0000179689.79868.06.

23. Recio A, Rhode WS. Basilar membrane responses to broadband stimuli. J Acoust Soc Am. 2000;108:2281–2298. doi: 10.1121/1.1318898.

24. Schroeder MR. Synthesis of low peak-factor signal and binary sequences with low autocorrelation. IEEE Transactions on Information Theory. 1970;16:85–89.

25. Spahr AJ, Dorman MF, Litvak LL, et al. Development and Validation of the AzBio Sentence Lists. Ear Hear. 2012;33:112–117. doi: 10.1097/AUD.0b013e31822c2549.

26. Stone MA, Glasberg BR, Moore BCJ. Simplified measurement of impaired auditory filter shapes using the notched-noise method. Br J Audiol. 1992;26:329–334. doi: 10.3109/03005369209076655.

27. Thornton AR, Raffin MJ. Speech discrimination scores modeled as a binomial variable. J Speech Hear Res. 1978;21:507–518. doi: 10.1044/jshr.2103.507.

28. Viemeister NF. Temporal modulation transfer functions based upon modulation thresholds. J Acoust Soc Am. 1979;66:1364–1380. doi: 10.1121/1.383531.