Abstract

Networks of molecular interactions regulate key processes in living cells. Therefore, understanding their functionality is a high priority in advancing biological knowledge. Boolean networks are often used to describe cellular networks mathematically and are fitted to experimental datasets. The fitting often results in ambiguities since the interpretation of the measurements is not straightforward and since the data contain noise. In order to facilitate a more reliable mapping between datasets and Boolean networks, we develop an algorithm that infers network trajectories from a dataset distorted by noise. We analyze our algorithm theoretically and demonstrate its accuracy using simulation and microarray expression data.

Keywords: Boolean network, Inference, Conditional entropy, Gradient descent

Introduction

Boolean networks were introduced by Kauffman [1] several decades ago as a model for gene regulatory networks. In this model, every gene corresponds to a node in the network. Every node is assigned an initial Boolean value, which is its value at time 0. A Boolean value of 1 means that the gene is active; in other words, its product is present in the cell and can perform its designated role. A Boolean value of 0 means exactly the opposite - a gene is not active and its product is absent from the cell. Since the activity or inactivity of genes affects the activity or inactivity of other genes, the Boolean value of a node at time point T + 1 is determined by the Boolean values of other nodes at time T. More specifically, the Boolean value of a node is determined by a time-invariant Boolean function that takes as input the Boolean values of a set of network nodes at the preceding time point. The set of nodes that constitute the input to the Boolean function is called its regulators, and the output node is referred to as target. The vector of the Boolean values of all the network nodes is called the network state. A sequence of states that evolves from some initial state according to the Boolean functions is called a trajectory. The trajectories of network states can be complex, displaying chaos or order depending on the network structure and the initial state [2]. When the outputs of all the Boolean functions at state S produce the state S itself, S is called a steady state. Since in every state every node is set to one of two Boolean values, the number of possible network states is exponential to the number of nodes. Figure 1 illustrates a simple Boolean network.

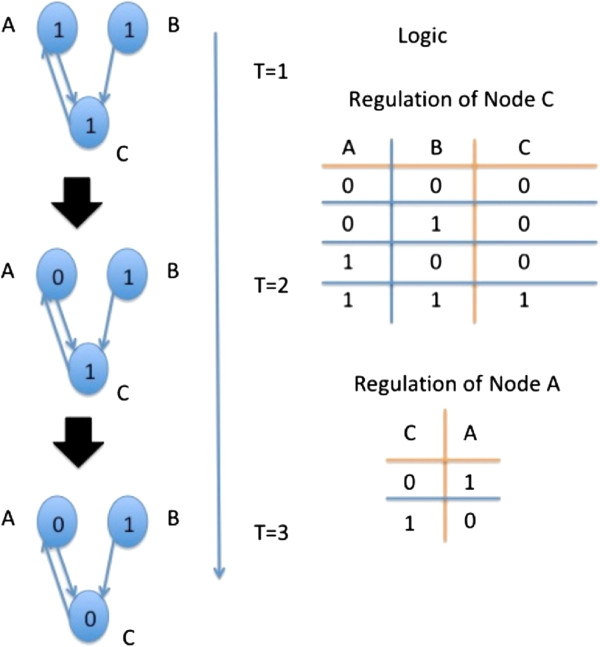

Figure 1.

A simple Boolean network. The network has three nodes, denoted as A, B, and C. Nodes A and B can change the Boolean value of node C, according to the rules given at the right side of the figure. Similarly, node C can change the value of node A. At the left side of the figure, a trajectory of length 3 is illustrated. In the initial state (T = 1), all the nodes receive a Boolean 1. According to the logical rule by which C changes the value of A, the value of A changes to 0 at time T = 2. At time point T = 3, the node C changes its value, since the input to the logical rule that determines its value has changed.

In recent years, new experimental technologies in molecular biology enabled a broader examination of gene activity in cells [3-5] and consequently, significant efforts have been invested in the application of gene regulatory networks modeling [6]. However, experimental procedures produce continuous values that do not determine conclusively the activity or inactivity of a gene. Hence, these values cannot be mapped into states of Boolean networks unambiguously, and the resulting picture of the cell state contains errors. Computational methods address this problem in various ways, for example, by using additional data such as the genomic sequences of gene promoters [7], by mapping the continuous measurements into discrete values and then optimally fitting the transformed dataset to a network model [8,9], or by using a prior distribution on states [10]. It is well recognized that an improved ability to probe the state of a cell can lead to improvement in our understanding of a broad range of biological processes.

With this motivation in mind, we propose a novel algorithm for inferring the state of a Boolean network using a continuous noisy expression dataset and the network structure, i.e., the genes and their regulators. The algorithm is based on the following idea: High expression values are more likely to correspond to a Boolean 1, while low to a Boolean 0. By combining the network structure and the expression dataset, we can estimate the likelihood of each continuous value to correspond to a Boolean value of 1 or 0. We can then update the likelihood (equivalently the expression value) of each gene accordingly and repeat the process until any further change would either (a) change a gene towards a Boolean value that is less likely for it or (b) change a gene towards a Boolean value that is as likely as the opposite Boolean value (i.e., make an arbitrary guess). The update scheme should be such that if enough updates were possible, the final probability distribution will describe the states of a Boolean network.

The next section explains how to implement this idea using the conditional entropy [11] of the network. It will be shown that changing the gene probabilities in the opposite direction of the conditional entropy gradient is equivalent to executing the inference algorithm that we outlined above. The section begins by analyzing a simple network and then extends the results to general networks.

In the ‘Testing’ section, we use simulation and real data in order to test the performance of the algorithm. We generate noisy datasets for several Boolean network structures and use a microarray time-series dataset from a study of the Saccharomyces cerevisiae cell cycle. We find that using the simulated datasets, the algorithm infers a large proportion of the Boolean states correctly; and using the yeast dataset, it infers Boolean states that agree with the conclusion of the study. We conclude by summarizing our results and suggesting research directions that can lead to further progress in this domain.

Main text

Analysis

Consider the following simple network: gene X negatively regulates gene Y. In other words, when X is active Y is inactive, and vice versa. X is also said to be a repressor of Y or to repress Y. The Boolean function that controls the value of Y is called NOT.

An experimental device can measure the states of X and Y. If a gene is active, it measures a value from a normal distribution with a large positive average μ and small standard deviation σ. If a gene is inactive, the device measures a value from a normal distribution with a negative average − μ and standard deviation σ.

The input to our problem is a series of N i.i.d. measurements of the genes X, Y (for example, under different stimulations given to the cells). X can be active or inactive in every measurement with equal probabilities. We are also given the structure of the network. We do not know the logic with which X regulates Y, but the values in the dataset will reflect this logic.

Our goal is to find the states of X and Y in each measurement. Clearly, we cannot always recover the ‘true’ states from every measurement, since there is a nonzero probability that the device will measure a large value for the inactive gene and, at the same time, a small value for the active gene. Nevertheless, the best strategy is to identify X as a repressor and then predict that in each pair of values the larger one corresponds to an active gene and the smaller to an inactive - the larger the difference, the higher our confidence. The inference algorithm, which we will shortly describe, will apply a generalization of this strategy. We will show that in the case of the simple network, the algorithm predicts the network states in the optimal way. Then, we will explain how it generalizes to more complex networks. Before we describe the algorithm, we need to define several random variables.

Denote the N measurements by C1, C2,…,CN, and the continuous values of X and Y in measurement Ci as xi and yi, respectively. As a convention, we will use uppercase and lowercase letters to define variables that assume discrete values and continuous values, respectively. The terms measurement i and Ci are used interchangeably.

We define the following continuous values:

The role of the logistic function is to map continuous values to probabilities. For example, if xi is close to the average of its distribution μ, it will have a high probability to correspond to a Boolean 1, because μ is a large positive number. The use of the logistic function will also enable us to implement the update step in our algorithm, in which we update the probabilities of the previous iteration.

Similarly we define

Using these values, we define the discrete random variable [X;Y]i ∈ {00, 10, 01, 11}:

The probability distribution of [X;Y]i is well defined, since all probabilities are in (0,1) and sum to 1.

Since each of xi and yi is from one of the normal distributions N(μ,σ2), N(−μ,σ2) with a small σ2, the probabilities P([X;Y]i = 11) and P([X;Y]i = 00) will be small.

Similar to [X;Y]i, we can define the discrete random variable Xi with probability function:

We define the discrete random variable Yi by replacing with in the definition of Xi.

The discrete random variables that we defined so far correspond to specific experiments. We also need to define discrete random variables that correspond to the set of experiments as a whole. For example, such variables would answer the question: What is the probability of seeing X = 1 and Y = 0 in the whole dataset? In order to do that, note that as σ2 becomes smaller and the number of measurements N larger, by the law of large numbers:

which is what one expects intuitively - either X is active and Y is inactive, or vice versa, but they cannot both be active or inactive in the same measurement, because X represses Y. Although it is possible to have a high probability P([X;Y]i = 00) for some i, such deviations will have little effect on the average of the N samples. Hence, we define a variable [X;Y] ∈ {00, 01, 10, 11} with a distribution that is an average of the probabilities of the variables [X;Y]i.

Since X can be inactive or active in any measurement with equal probabilities, similarly to [X;Y] we define the variable X using the distribution

and in a similar way a discrete random variable Y. Note that the probability of [X;Y] is an estimation of the joint probabilities of X and Y, P(X,Y).

How can we infer the probabilities of variables that do not conform to the X → Y network, for example, when xi and yi are both positive? We can use the average of all the samples, which is rather accurate, and estimate the probabilities of Xi = 1 and Yi = 1. Then we will correct the values of xi and yi accordingly. This estimation and correction process is in fact equivalent to changing xi and yi in the opposite direction of the gradient of the conditional entropy H(Y|X). We have defined the probability distributions P(X), P(Y), P(X,Y) as functions of the continuous values xi, yi. We can therefore partially derive the conditional entropy H(Y|X) according to each continuous value and obtain the gradient ∇H(Y|X). This leads to the following algorithm:

Algorithm 1: State Inference

|

We now show that the algorithm obtains the desired solution for our simple network. More specifically, if yi >xi, then λ (xi) will approach 0 and λ(yi) will approach 1 and vice versa.

First, in order to compute the gradient, we use the chain rule for conditional entropy: H(Y|X) = H(Y,X) − H(X).

It is easy to see [12] that

Expanding the partial derivative we have

The direction of change in xi (positive or negative, i.e., towards Boolean 1 or Boolean 0) will be determined by the ratio within the log. If this ratio is greater than 1, the direction of change will be negative (because the change is in the opposite direction of the gradient). If it is smaller than 1, the change will be positive.

The expression (*) expresses three properties of the data:

1. How certain we are in xi. If xi is very high or very low, the whole expression, and the change it implies to xi, will be small. This is a result of the factor [λ(xi) · (1 − λ(xi))] that has its maximum at λ(xi) = 0.5 and approaches 0 when λ(xi) approaches 1 or 0.

2. The more likely Boolean value to assign to yi. The exponent of P(X,Y)P(Yi = Y)) will decrease the weight of the probability P(X,Y) in the ratio if P(Yi = Y) is low, and vice versa.

3. The more likely Boolean (X,Y) vectors. For example, if P(Yi = 0) ≈ 0, we will have within the log a ratio between P(X = 0, Y = 1) and P(X = 1, Y = 1). If P(X = 0, Y = 1) is more likely, the ratio will be greater than 1; and if P(X = 1, Y = 1) is more likely, it will be smaller than 1.

A symmetric expression can be developed for yi. Note that since all regulator values are equally likely, the term is 0 (otherwise it negates the bias).

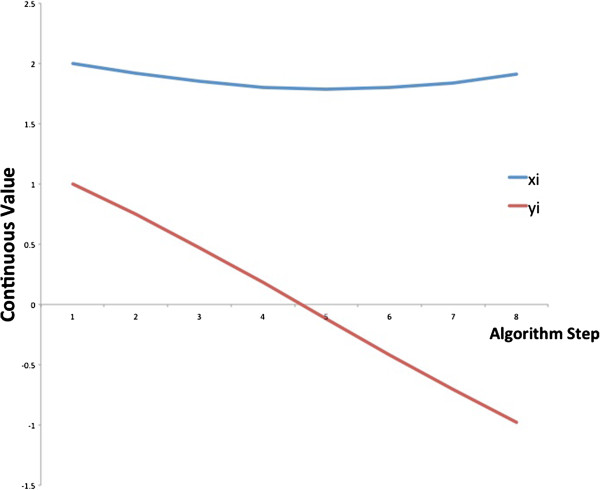

Now assume that P((X,Y) = (1,0)) = P((X,Y) = (0,1)) = 0.49; and P((X,Y) = (0,0)) = P((X,Y) = (1,1)) = 0.01. We look at measurement i in which xi = 2 and yi = 1 and plot the changes to xi, yi in eight consecutive steps of the algorithm (Figure 2). We choose δ = N and therefore the constant 1/N is canceled out. As can be seen in the figure, xi does not change significantly, while yi is reduced sharply to a negative value. This is in agreement with our optimal solution scheme for the simple X → Y network.

Figure 2.

Changes in xi, yi in eight consecutive gradient descent steps. We set P(XY = 01) = P(XY = 10) = 0.49; and P(XY = 11) = P(XY = 00) = 0.01. Since xi is larger than yi in the beginning, it is hardly reduced. In contrast, yi is reduced sharply to a negative value. The inferred states for measurement i are gene X is active gene Y is inactive.

We used a very simple network in order to explain the principles of our algorithm, and we now turn to more complex networks. Any network can be described by a directed graph G(V,E), where the set of nodes V contains a node for every gene, and the set of edges E contains edges from every regulator to each of its targets. The entropy of every node Yi is conditional on its set of regulators XYi. The conditional entropy of the network becomes

The dataset of more complex networks may contain steady states, like in the case of the simple network, but it may also include longer trajectories. In the latter case, if two measurements i, i + 1 correspond to two consecutive states in a trajectory, the value of yi + 1 should be taken from Ci + 1 and the values xi of its regulators from Ci.

In the simple network that we discussed so far, V contains two nodes, one for gene X and one for gene Y, and E contains one directed edge from the node of X to the node of Y. Each measurement is a vector of size 2, (xi,yi). For calculating (*), we needed to find the probability P(X,Y) of vectors of size 2.

In the general case, in order to derive the conditional entropy of the network by the value xi of one of the regulators X at the measurement i, we need to find the probability of a Boolean assignment to vectors of arbitrary size. We can do this in the same way as we did for P([X;Y]i) - by multiplying the individual probabilities of the vector entries. The probability of seeing a Boolean vector in the dataset as a whole is again an average of its probabilities in the N measurements.

Denote by Mx the number of targets that X regulates. Denote by , a Boolean assignment to XYj ∪ {Yj}/ X, where Yj is the jth target of X, and XYj is the set of regulators of Yj, at the ith measurement. Denote as any Boolean vector of size . We generalize the derivative by xi given by (*) as follows:

The expression (**) determines the change to xi in the same way as (*), taking into account all the targets of gene X in the network. If X is itself a target of other regulators, then Mx increases by 1, and will correspond to a Boolean assignment to the regulators of X at measurement i.

Note that if we decrease the step size of the gradient descent δ by a factor C, the change in the xi values will decrease by a factor of C. However, since the logistic function maps the xi values to the finite interval (0,1), equal probabilities λ(xi) = P(Xi = 1) may not change by the same factor. For a ratio within the log in (**) that is very large for some xi, and smaller for another xj, the change in P(Xj) as a result of decreasing δ can remain large while the change in P(Xi) becomes small. In addition, if the change in the total entropy becomes very small as a result of decreasing δ, the algorithm will proceed to step 4.

It may be the case that the dataset is not sufficiently informative for inferring all the states. For example, if in the simple X → Y network xi = yi, the algorithm will change both values to 0. On the other hand, if all xi and yi are different, there are always parameters τ, δ for which the algorithm will change all xi and yi to have opposite signs, and H(Y|X) will approach 0. A situation as the former can also occur in more complex networks. We would like to prove that if it does not occur, i.e., if the dataset is informative enough, our algorithm will infer the states of a Boolean network. This is shown by the following theorem:

Theorem 1:Let G = (V,E) be a graph that describes the structure of a Boolean network and D a dataset of N measurements.

Let XYbe a set of nodes that regulate some node Y, i.e., ∀ X′ ∈ XY, (X′ → Y) ∈ E

Denote byan assignment of Boolean values to the nodes in XYat measurement i. Similarly, Yiis a Boolean assignment to Y at measurement i.

If the algorithm converges to a global minimum and updates dataset D to become D′, then for any two measurements i,j in D′:

Proof

The conditional entropy of the network is a sum of conditional entropies. Since conditional entropy is always nonnegative, the global minimum is reached when the conditional entropy of the network is 0, and every term in the sum is also 0.

The conditional entropy of gene Y and its set of regulators XYcan be written as

Since the log is non-positive and the probabilities are non-negative, H(Y|XY) reaches its minimum when for every either , , or .

If , the value of the regulators never occurs in the data.

Otherwise, if , then since it must hold that . Similarly, if then .

Hence, for a specific assignment of the regulators, the target Y is either 0 or 1 but never both.□

To summarize the analysis section, we showed that the algorithm infers the states of a simple network optimally if the dataset is informative enough. We then generalized the inference process to general networks, and showed that if the algorithm converges it will infer the states of a Boolean network.

In the ‘Testing’ section, we test the algorithm using simulation and real microarray expression data.

Testing

Boolean networks with two regulators per node

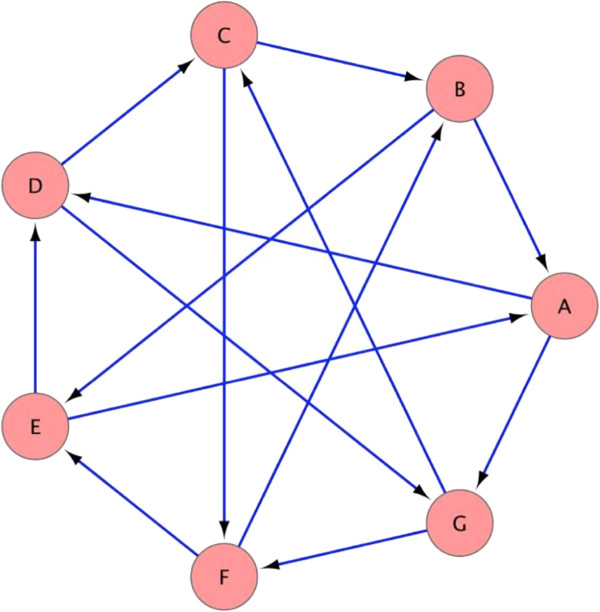

We can evaluate the accuracy of the algorithm without bias by using a known Boolean network structure. We use the Boolean network that is illustrated in Figure 3 and generate our dataset according to the following procedure:

Figure 3.

The structure of the Boolean network used in the simulation. For example, the regulator set of node A contains nodes B and E. The figure was generated using Cytoscape [13].

1. Assign logic rules to all the nodes. We use the same logic function for all the nodes - XOR in the first experiment and NOR in the second experiment. XOR's output is 1 if and only if the values of the regulator nodes differ. NOR's output is 1 if and only if the values of the regulator nodes are both 0

2. Randomly choose an initial state

3. Generate a trajectory of length 400 states

4. Convert the Boolean trajectory to a continuous trajectory as follows:

(a) Replace every Boolean 1 by a value from a normal distribution with an average of 1 and a standard deviation of 1.1

(a) Replace every Boolean 0 by a value from a normal distribution with an average of −1 and a standard deviation of 1.1

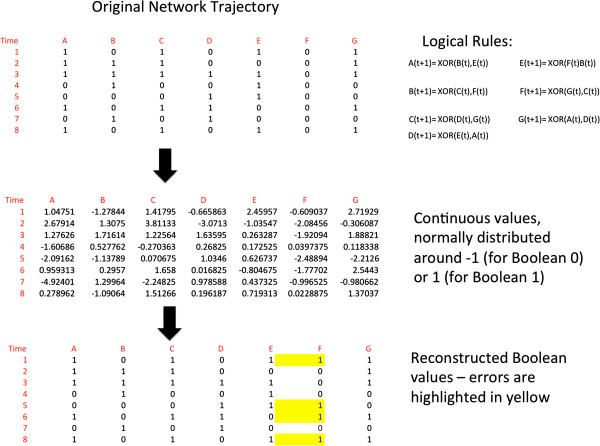

We use a C implementation of the algorithm as described in [12], without normalizing the continuous values. The process is illustrated in Figure 4. A trajectory of length 400 corresponds to the size of biological datasets that are available in public databases [14].

Figure 4.

The process by which datasets are generated in the simulation. A Boolean trajectory is generated using a Boolean network and a set of logical rules (top). The Boolean values are translated into normally distributed continuous values (middle). The continuous values are reconstructed into Boolean values that are then compared to the original Boolean trajectory (bottom).

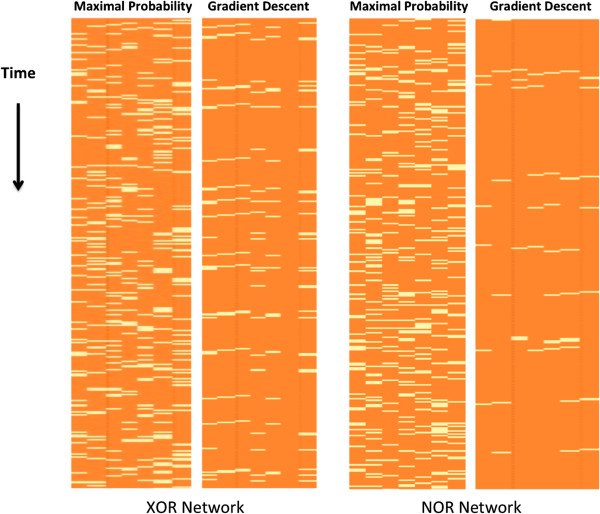

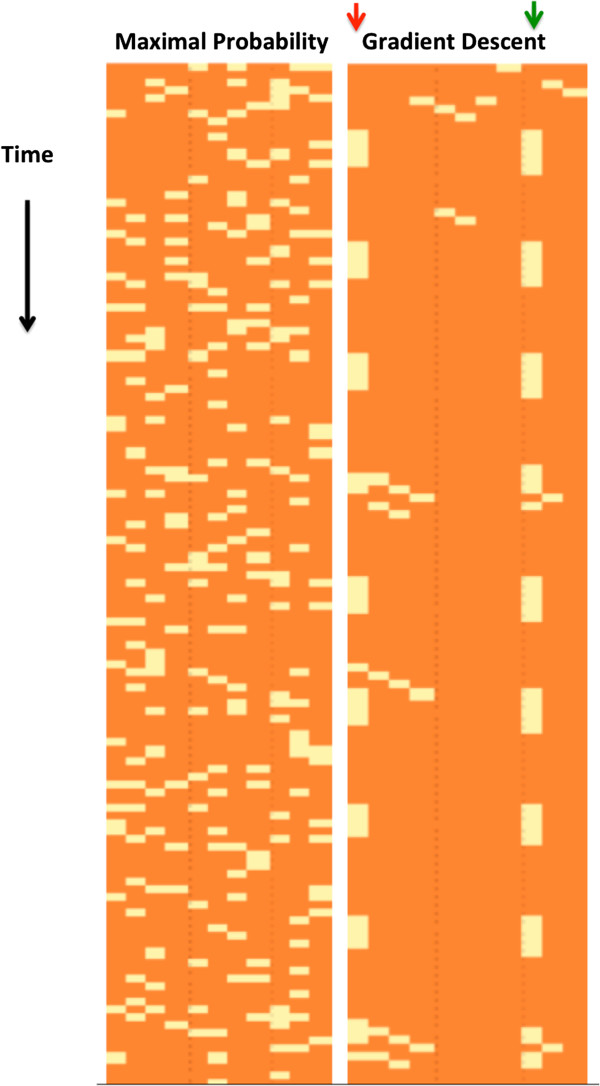

In [15] Shmulevich and Zhang describe a mapping of continuous values to Boolean values that maps every value above some threshold to 1 and below that threshold to 0. We will compare the results of our inference process to this method, which we will refer to as ‘maximal probability reconstruction.’ The threshold that we will use is 0. Figure 5 illustrates this comparison. As can be seen in the figure, the gradient descent makes significantly less mistakes in its reconstructed trajectory. Its mistakes tend to cluster at consecutive time points, since if it makes a mistake in a regulator at time T, it is more likely to make mistakes in its target at time T + 1.

Figure 5.

Maximal probability reconstruction vs. gradient descent reconstruction. Maximal probability reconstruction vs. gradient descent reconstruction of trajectories of the Boolean XOR (left) and Boolean NOR (right) networks. Rows correspond to the time points and columns to network nodes. For display purposes, only a prefix of the trajectory is shown. The yellow color represents mistakes, i.e., values which are different than the real Boolean values, and orange represents a correct value. In each of the two comparisons, the maximal probability reconstruction is presented to the left of the gradient descent reconstruction. Overall, the gradient descent is more accurate than the maximal probability reconstruction. The percentages of incorrect reconstructed values for the latter method are 17.6% (XOR) and 18% (NOR), and for the gradient descent reconstruction, 6.7% (XOR) and 2.2% (NOR).

Boolean networks with imperfect structure

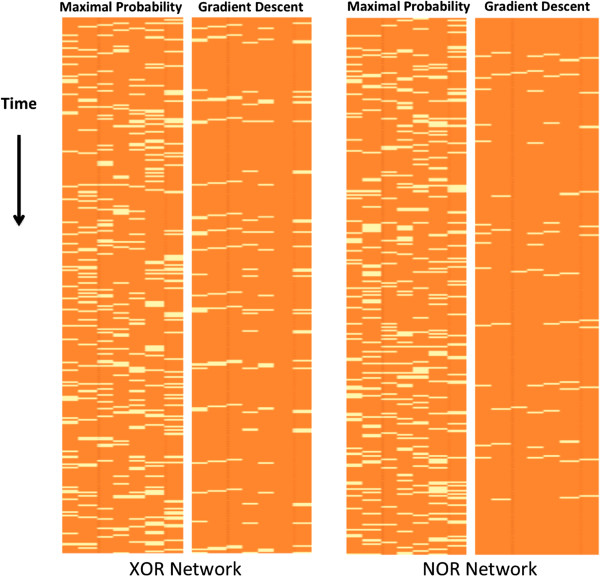

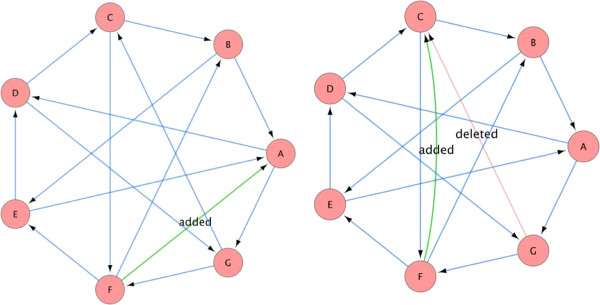

In the previous experiment, we assumed that we know the regulator set of each node. However, it is often the case that the network structure is not perfectly known, for example, some regulator set may contain incorrect nodes. Therefore, we now use the same continuous dataset, but give the algorithm an incorrect structure as input. We perform two experiments. In the first we add an incorrect node to one of the regulator sets, and in the second experiment we replace a node in a regulator set by a node that does not belong to that set. These changes are illustrated in Figure 6. As can be seen in Figure 7 when using a wrong structure, the algorithm can make more mistakes in the reconstruction of the network trajectories. However, even with an imperfect network structure, the trajectories reconstructed by the algorithm are more accurate than the maximal probability trajectories.

Figure 6.

Incorrect structures that were given to the algorithm as input. Incorrect structures that were given to the algorithm as input with the dataset generated by the network in Figure 2. The wrong structure on the left was given as input with the dataset generated for the XOR network (see text). The wrong structure on the right was given as input with the dataset generated for the NOR network (see text). Edges that were removed (regulator sets that were changed) are colored in faded red and marked ‘deleted’. Edges that were added are colored in green and marked ‘added’. The figure was generated using Cytoscape [13].

Figure 7.

Maximal probability reconstruction vs. gradient descent reconstruction. Maximal probability reconstruction vs. gradient descent reconstruction of trajectories of the Boolean XOR (left) and Boolean NOR (right) networks. The gradient descent algorithm is given an inaccurate structure. The rows correspond to the time points and columns to the network nodes. For display purposes, only a prefix of the trajectory is shown. The yellow color represents mistakes, i.e., values different than the real Boolean values, and orange represents a correct value. In each of the two comparisons, the maximal probability reconstruction is presented to the left of the gradient descent reconstruction. Overall, the gradient descent is more accurate than the maximal probability reconstruction despite the imperfect structures that are given to it as input. The XOR network's trajectory reconstruction is not affected by the error in structure, while the NOR network's reconstruction is slightly less accurate. The percentages of incorrect reconstructed values for maximal probability reconstruction are 17.6% (XOR) and 18% (NOR), and for the gradient descent reconstruction, 6.7% (XOR) and 3% (NOR).

The cell cycle network of Li et al

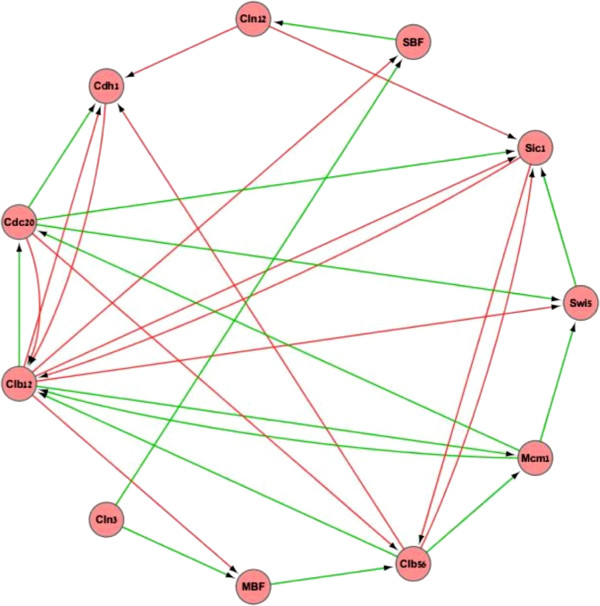

The cell cycle is a process by which cells grow and multiply. It constitutes several distinct phases through which the cell grows and divides. Its daughter cells start the cycle from the first phase and so on. A gene regulatory network controls this process. Li et al. [16] created a Boolean network model of the yeast cell cycle. In their model, every node in the regulator set is assigned a repressing or an activating role and is referred to as a repressor or an activator, respectively. A node is activated by its regulator set if the sum of active activators is greater than the sum of active repressors and repressed if the former sum is lesser than the latter sum. If the sums are equal, a node either remains unchanged or is assigned a Boolean 0, meaning that without sufficient activation the gene product is degraded. Li et al. showed that the trajectories of their model converge to the first phase of the yeast cell cycle, and given an external trigger the network resumes the cycle. The network is illustrated in Figure 8.

Figure 8.

The structure of the yeast cell cycle network of Li et al. [[16]]. Edges in green correspond to activators (see text), and red edges to repressors (see text). The node Cln3 has no regulators but receives an external signal that causes the network to go through the phases of the cell cycle. The figure was generated using Cytoscape [13].

We repeated our data generation procedure for the cell cycle network of Li et al. Since this network converges to the first phase of the cell cycle and awaits a trigger to continue cycling, we provided that trigger repeatedly and generated a trajectory of length 400. The results of reconstructing the Boolean states are illustrated in Figure 9. As in the previous experiments, the reconstructed trajectory is more accurate than the maximal probability trajectory. The mistakes in this case were mainly concentrated to the node Cln3 and its direct target MBF. The reason is that when we generated the dataset, we repeatedly changed Cln3 to provide a trigger for cycling, but we did not include any regulators for Cln3 in the network structure. This creates a discrepancy between the input that we provided to the algorithm and the network behavior - the algorithm does not expect Cln3 to change its value along the trajectory if it does not have regulators.

Figure 9.

Maximal probability reconstruction vs. gradient descent reconstruction. Maximal probability reconstruction vs. gradient descent reconstruction of the trajectory of the yeast cell cycle network of Li et al. [16]. Rows correspond to the time points and columns to network nodes. For display purposes only a prefix of the trajectory is shown. The yellow color represents mistakes, i.e., values different than the real Boolean values, and orange represents a correct value. The maximal probability reconstruction is presented to the left of the gradient descent reconstruction. Overall, the gradient descent is more accurate than the maximal probability reconstruction. The percentages of wrongly reconstructed values for the latter method are 17.9%, and for the gradient descent reconstruction, 7.4%. The gradient descent algorithm makes more mistakes for the node Cln3 that has no regulators and receives an external input (red arrow) and for the MBF that has Cln3 in its regulator set (green arrow).

Conway's game of life

Conway's game of life is composed of a square grid of cells in which each cell's Boolean value is controlled by the values of neighboring cells, and changes over time [17]. The grid can generate complex patterns that may vary significantly depending on the initial values. We modeled the game of life with grid size 7×7 as a Boolean network. Each node has 3 to 8 regulators, depending on the number of grid neighbors, and the initial state is chosen randomly. The results of reconstructing a trajectory of length 100 with the same level of noise as in previous experiments are displayed in Additional file 1: Movie 1. The left frame is the real trajectory, the middle frame is a maximal probability reconstruction, and the right frame is the gradient descent reconstruction. Boolean 1 is represented by a black cell and Boolean 0 by a white cell. As can be observed in the movie, the reconstruction algorithm makes more mistakes in the early states than in the later states. The reason for this is most likely the fact that at later states, the network enters a 3-cycle, i.e., a trajectory in which three states occur in the same order repeatedly. Since the relationships between the nodes occur more than once, the algorithm can learn these relationships and use them in reconstruction. The algorithm also identifies the existence of a 3-cycle, in the sense that it predicts a repetitive sequence of three patterns that are similar to the real patterns of the 3-cycle. In contrast, in the early time points, the states vary and do not reoccur, which makes it harder to learn some of the dependencies that play a role in generating these states. Note that most nodes have eight regulators, which means that their logic function has 256 different inputs. The number of possible network states is 249.

The maximal probability reconstruction makes an error on 18.6% of the nodes. In the initial 50 states, it errs on 18.2% of the nodes, and in the last 50 states, on 19% of the nodes. The gradient descent reconstruction assigns the wrong values to 8.8% of the nodes. In the initial 50 states, its error rate is 12.8%, and in the last 50 states, 4.8%.

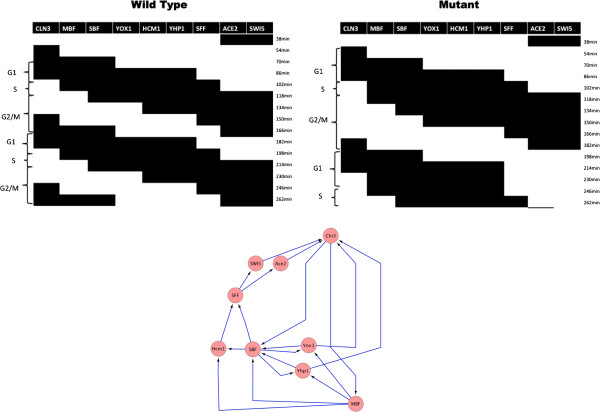

Microarray expression data

Orlando et al. [18] compared gene expression patterns in wild type yeast compared to a cyclin mutant strain. They observed that many genes are expressed in a cyclic pattern in both strains. In order to explain this observation, they suggested a Boolean network of nine transcription factors and transcription complexes. They showed that for logic functions of their choice and most initial states, the network traverses the cell cycle stages and, therefore, can explain their observation. We will use the expression data of the transcription factors and the network structure from [18] and infer the network states in wild type and mutant cells. If the states represent the cell cycle in both strains, then our analysis will support the conclusion of the study.

For the MBF, SBF, and SFF complexes, we use the expression profiles of their members STB1, SWI4, and FKH1, respectively. The dataset of [18] contains four time series of 15 microarrays for time points from 30 to 260 min, two replicates for the wild type and two for the mutant. Since all expression values are positive values, we need to map them to a symmetric range centered at 0, as the input of the simulations. However, different arrays will typically contain biases; for example, a gene can have a higher value in an array that has a higher mean expression value. Therefore, mapping two identical values from two different arrays to the same value may result in a bad estimation of the initial probabilities.

Shmulevich and Zhang [15] showed that bias in different arrays can be eliminated by applying a normalization process. We use the following normalization: The network is expected to perform about two cell cycles during the measured time points. The expression levels of a gene at the 2 cycles should correlate. Based on this observation, we normalize in every replicate the genes on the first set of seven arrays and the second set of eight arrays to average 0 and unit standard deviation. Using the resulting initial probability estimates and the network structure, we apply our inference process and compare the resulting set of Boolean states with the pattern hypothesized in the study (Figure 10). As can be seen in the figure, the network performs a cyclic trajectory in both strains, while the trajectory of the mutant corresponds to a slower cell cycle. This finding is in agreement with a slower cell cycle for the mutant as reported in [18]. It also indicates that the network structure may not account for all the regulatory interactions in the network, since both networks start from the same initial state.

Figure 10.

Inferred trajectories of the Boolean network for wild type and mutant strains. Inferred trajectories of the Boolean network from [18] for the wild type and mutant strains (one replicate is shown for each). The network structure is displayed at the bottom. Black cells correspond to a Boolean 1 and white cells to a Boolean 0. The corresponding cell cycle stages are marked at the left side of each trajectory. Both trajectories start with active ACE2 and SWI5, which are the last active factors at the completion of the previous cycle, followed activation of CLN3 and an initiation of a new cycle. The network seems to cycle a little faster in the wild type. The network structure was generated using Cytoscape [13].

Conclusions

In this study we presented a problem that arises in molecular biology, namely, that of inferring the activity of cellular network components given noisy measurements, and defined it as mapping continuous measurements to Boolean network states. We developed an algorithm that given a network structure infers its Boolean states from a dataset of continuous measurements. Our results show that the algorithm can successfully reconstruct Boolean states from inaccurate continuous data. The algorithm performs reasonably well even if the relations between the nodes of the network contain errors. We also showed that it can be used to interpret real microarray data and examine experimental hypotheses.

Our approach is highly dependent on a network structure, and when that is not available, methods that rely solely on expression should be used [15,19]. We did not define a concept of prior knowledge, which has been used in various works to integrate information into Bayesian models [20,21]. While this makes our method arguably less flexible, it also exempts us from the need to define prior distributions. Finally, the algorithm is defined for deterministic Boolean networks, in contrast to probabilistic Boolean networks that may better express biological noise [22].

Further research could improve inference accuracy and explore various aspects of the problem. One such aspect is the amount of information about the network trajectory that is lost due to noise. In the simple network that we described in the analysis section, the proportion of information that will be lost is the sum of probabilities of two events:

When one of these two events occurs, it is impossible to reconstruct the original states of X and Y. In more complex networks, information loss is a more complex. Determining an upper limit on the number of Boolean values that can be recovered given a certain amount of noise may prove insightful.

Another aspect that should be investigated is how to choose parameters that optimize the performance of the algorithm, such as the parameters of the logistic function or the step size δ and threshold τ of the gradient descent.

As Boolean networks can generate a diverse range of dynamic behaviors, the accuracy of reconstructing trajectories that arise in different dynamic regimes should also be characterized. For example, are chaotic trajectories harder to reconstruct then those that display order? More simulation tests can better define the relationships between the quality of data and different classes of networks.

Current experimental techniques produce an ever-greater number of measurements, and there is a pressing need for methods that will enable researchers to interpret it accurately and without bias. An accurate method for inferring the state of a cell can translate this richness of data into important discoveries.

Competing interests

The author declares that he has no competing interests.

Supplementary Material

Movie 1. Reconstruction of a trajectory of Conway’s Game of Life. The left frame is the real trajectory, the middle frame is a maximal probability reconstruction, and the right frame is the gradient descent reconstruction. Boolean 1 is represented by a black cell and Boolean 0 by a white cell.

References

- Kauffman SA. Metabolic stability and epigenesis in randomly constructed genetic nets. J. 1969;22:437–467. doi: 10.1016/0022-5193(69)90015-0. [DOI] [PubMed] [Google Scholar]

- Kauffman SA. The Origins of Order, Self-Organization and Selection in Evolution. Oxford: Oxford University Press; 1993. [Google Scholar]

- Yu X, Schneiderhan-Marra N, Joos TO. Protein microarrays and personalized medicine. Ann. 2011;69(1):p17–p29. doi: 10.1684/abc.2010.0512. [DOI] [PubMed] [Google Scholar]

- Liu F, Kuo Jenssen WPTK, Hovig E. Performance comparison of multiple microarray platforms for gene expression profiling. Methods Mol. 2012;802:141–155. doi: 10.1007/978-1-61779-400-1_10. [DOI] [PubMed] [Google Scholar]

- Roy NC, Alterman E, Park ZA, McNabb WC. A comparison of analog and next-generation transcriptomic tools for mammalian studies. Brief. Funct. Genomics. 2011;10(3):p135–p150. doi: 10.1093/bfgp/elr005. [DOI] [PubMed] [Google Scholar]

- Karlebach G, Shamir R. Modeling and analysis of gene regulatory networks. Nat. 2008;9:770–780. doi: 10.1038/nrm2503. [DOI] [PubMed] [Google Scholar]

- Pan Y, Durfee T, Bockhorst J, Craven M. Connecting quantitative regulatory-network models to the genome. Bioinformatics. 2007;23:p367–p376. doi: 10.1093/bioinformatics/btm228. [DOI] [PubMed] [Google Scholar]

- Akutsu T, Miyano S, Kuhara S. Identification of genetic networks from a small number of gene expression patterns under the Boolean network model. Pac. 1999;1999:17–28. doi: 10.1142/9789814447300_0003. [DOI] [PubMed] [Google Scholar]

- Sharan R, Karp RM. Reconstructing Boolean models of signaling. J. 2013;3:p1–p9. doi: 10.1089/cmb.2012.0241. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gat-Viks I, Tanay A, Raijman D, Shamir R. A probabilistic methodology for integrating knowledge and experiments on biological networks. J. 2006;13:p165–p181. doi: 10.1089/cmb.2006.13.165. [DOI] [PubMed] [Google Scholar]

- Shannon CE. A mathematical theory of communication. Bell Syst. 1948;27(379–423):623–656. [Google Scholar]

- Karlebach G, Shamir R. Constructing logical models of gene regulatory networks by integrating transcription factor-DNA interactions with expression data: an entropy-based approach. J. 2012;19:p30–p41. doi: 10.1089/cmb.2011.0100. [DOI] [PubMed] [Google Scholar]

- Shannon P, Markiel A, Ozier O, Baliga NS, Wang JT, Ramage D, Amin N, Schwikowski B, Ideker T. Cytoscape: a software environment for integrated models of biomolecular interaction networks. Genome Res. 2003;13:p2498–p2504. doi: 10.1101/gr.1239303. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Edgar R. et al. Gene expression omnibus: NCBI gene expression and hybridization array data repository. Nucleic Acids Res. 2002;30(1):p207–p210. doi: 10.1093/nar/30.1.207. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Shmulevich I, Zhang W. Binary analysis and optimization-based normalization of gene expression data. Bioinformatics. 2002;18(4):555–565. doi: 10.1093/bioinformatics/18.4.555. [DOI] [PubMed] [Google Scholar]

- Li F, Long T, Lu Y, Ouyang Q, Tang C. The yeast cell-cycle network is robustly designed. Proc. 2004;101:p4781–p4786. doi: 10.1073/pnas.0305937101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gardner M. Mathematical games - the fantastic combinations of John Conway's new solitaire game “life”. Scientific Am. 1970;223:120–123. [Google Scholar]

- Orlando DA, Lin CY, Bernard A, Wang JY, Socolar JES, Iversen ES, Hartemink AJ, Haase SB. Global control of cell-cycle transcription by coupled CDK and network oscillators. Nature. 2008;453:944–948. doi: 10.1038/nature06955. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhou X, Wang X, Dougherty E. Binarization of microarray data based on a mixture model. Mol Cancer Therap. 2003;2(7):679–684. [PubMed] [Google Scholar]

- Gat-Viks I, Tanay A, Raijman D, Shamir R. A probabilistic methodology for integrating knowledge and experiments on biological networks. J. 2006;13:165–181. doi: 10.1089/cmb.2006.13.165. [DOI] [PubMed] [Google Scholar]

- Friedman N, Linial M, Nachman I, Pe'er D. Using Bayesian networks to analyze expression data. J. 2000;7:601–620. doi: 10.1089/106652700750050961. [DOI] [PubMed] [Google Scholar]

- Shmulevich I, Dougherty ER, Kim S, Zhang W. Probabilistic Boolean networks: a rule-based uncertainty model for gene regulatory networks. Bioinformatics. 2002;18:261–274. doi: 10.1093/bioinformatics/18.2.261. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Movie 1. Reconstruction of a trajectory of Conway’s Game of Life. The left frame is the real trajectory, the middle frame is a maximal probability reconstruction, and the right frame is the gradient descent reconstruction. Boolean 1 is represented by a black cell and Boolean 0 by a white cell.