Abstract

Objective

This study examines observations of client in-session engagement and fidelity of implementation to the Family Check-Up (FCU) as they relate to improvements in caregivers’ positive behavior support (PBS) and children’s problem behavior in the context of a randomized prevention trial. The psychometric properties of fidelity scores obtained with a new rating system are also explored.

Method

The FCU feedback sessions of 79 families with children with elevated problem behavior scores at age 2 were coded by trained raters of fidelity, who used an observational coding system developed specifically for this intervention model.

Results

Path analysis indicated that fidelity to the FCU results in greater caregiver engagement in the feedback session, which directly predicts improvements in caregivers’ PBS 1 year later (β = 0.06, 95% CI [.007, .129]). Similarly, engagement and PBS directly predict reductions in children’s problem behavior measured 2 years later (β = −0.24, 95% CI [−.664, −.019]).

Conclusions

These results suggest that fidelity matters within the context of this randomized intervention trial: ratings of fidelity to the FCU covary with observed improvements in parenting and children’s problem behavior in early childhood. Overall reliability of the fidelity scores was found to be acceptable, but some single-item reliability estimates were low, suggesting revisions to the rating system might be needed. Accurately assessing fidelity and understanding its relationship to change during intervention studies is an underdeveloped area of research that has produced some inconsistent findings. Our results shed light on the mixed conclusions of previous studies, suggesting that future research ought to assess the role of intervening variables, such as observed engagement.

Keywords: fidelity, positive behavior support, Family Check-Up, problem behavior, engagement

Central to assessing the effectiveness of an intervention is ensuring that implementation of the intervention adheres to its intended process and content. A breakdown in treatment fidelity threatens the experimental validity of a study and the ability to draw valid inferences regarding a treatment effect (e.g., Kazdin, 2003; Perepletchikova & Kazdin, 2005). Without adequate evaluation of fidelity to the intervention’s content and processes, one cannot determine whether failure to produce or replicate a treatment effect has resulted from a problem with the intervention program or with its application. These concerns are valid both when researchers are conducting a randomized controlled trial (RCT) and when they are attempting to translate effective mental health interventions into real-world settings (Brownson, Colditz, & Proctor, 2012; Perepletchikova & Kazdin, 2005). The ongoing monitoring of fidelity in clinical practice reduces the potential to drift from an evidence-supported intervention protocol, which affects the benefits clients are likely to experience as a result of receiving the intervention. Despite the methodological and practical importance of treatment fidelity, a recent review by Perepletchikova, Treat, and Kazdin (2007) revealed that less than 4% of the evaluated RCTs published in six influential psychology and psychiatry journals had adequately implemented fidelity procedures, which casts some doubt on the validity of the studies’ conclusions.

Treatment fidelity comprises two primary elements: adherence and competence. Waltz, Addis, Koerner, and Jacobson (1993) defined adherence as “the extent to which a therapist used interventions and approaches prescribed by the treatment manual and avoided the use of intervention procedures proscribed by the manual” (p. 620). They defined competence as “the level of skill shown by the therapist in delivering the treatment” (p. 620). These definitions are widely accepted in the psychological intervention and implementation science literatures. It is scientifically reasonable to expect that implementation fidelity will result in significant effects on the outcomes specifically targeted by theoretically based and well-designed interventions. The literature generally substantiates this expectation across a variety of psychological interventions and client populations (e.g., Barber et al., 2006; Barber, Sharpless, Klostermann, & McCarthy, 2007; Forgatch, Patterson, & DeGarmo, 2005; Henggeler, Melton, Brondino, Scherer, & Hanley, 1997). However, competence and adherence have been shown to independently predict outcomes. For example, Barber, Crits-Christoph, and Luborsky (1996) found that adherence and competence each contributed to the outcomes of brief dynamic therapy for depression, whereas competence was the better predictor of outcomes of a cognitive behavioral intervention for depression (Shaw et al., 1999).

Recent reviews on this topic have noted mixed results in the link between the components of fidelity and intervention outcomes (e.g., Barber et al., 2007; Leichsenring et al., 2011). Some studies have failed to find direct effects of fidelity on treatment outcomes. For example, Elkin (1988) found that adherence to a cognitive behavioral therapy (CBT) protocol added little to the prediction of outcome. Similarly, Svartberg and Stiles (1994) found that competence did not predict change in dysfunctional attitudes in a study of short-term anxiety-provoking psychotherapy, yet was predictive of reductions in general symptomatic distress. In a study of adherence and competence in the Individual Drug Counseling arm of the National Institute on Drug Abuse Collaborative Cocaine Treatment Study, Barber et al. (2006) found no association between competence and outcome. However, Barber and colleagues (2006) found support for a curvilinear relationship between adherence and outcome, indicating that both very high levels of adherence, perhaps signifying inflexibility, and very low levels, suggesting poor intervention implementation, impede positive outcomes. Hogue et al. (2008) found a similar relationship when examining CBT and family therapy interventions for adolescent problem behaviors.

In the context of family-based interventions for child and adolescent problem behaviors, the relationship between treatment fidelity and outcome appears to be more consistent. Huey, Henggeler, Brondino, and Pickrel (2000) found that greater adherence to multisystemic therapy principles resulted in improved parenting practices. Hogue et al. (2008) demonstrated a relationship between fidelity to multidimensional family therapy concepts and reductions in adolescent problem behavior. Forgatch and colleagues (Forgatch & DeGarmo, 2011; Forgatch, DeGarmo, & Beldavs, 2005; Forgatch, Patterson, & DeGarmo, 2005) found that fidelity to a parent management training intervention was predictive of long-term improvements in the quality of observed parenting practices. These published studies suggest that fidelity to skills-based parenting interventions predicts changes in both parenting practices and youth outcomes. Despite the inconsistencies in the broader literature, the evidence is promising that fidelity to family-based interventions improves outcomes.

One potential explanation for inconsistent findings in the broader literature is the assessment and measurement of the components of fidelity. Competence in the context of empirically supported treatments is most often measured as the delivery of a specific intervention protocol or treatment modality. Further muddying the waters is the difficulty of measuring adherence and competence, which are conceptually unique but undoubtedly interrelated. As Perepletchikova and Kazdin (2005) pointed out, treatment adherence and therapist competence are inextricably linked, such that it is impossible to competently deliver a specified treatment without adherence to that treatment. Reviews of this topic provided by Barber and colleagues (2006, 2007), Leichsenring et al. (2011), and Perepletchikova and Kazdin (2005) describe conceptualization and measurement issues in significant detail. The conclusion seems to be that measurement of fidelity ought to encompass both adherence and competence in a meaningful manner.

A second explanation for the inconsistent findings regarding direct effects of fidelity on outcome is the possible relationship between fidelity and other therapeutic processes. Pertinent to our study are client engagement in intervention sessions and the therapeutic alliance. The Vanderbilt psychotherapy process–outcomes study revealed that in-session observable client engagement accounted for approximately 20% of the variance in treatment outcome (O’Malley, Suh, & Strupp, 1983). Client engagement is a particularly important clinical process in motivational interviewing (MI), in which the therapist’s style of collaboration, egalitarianism, and empathy is hypothesized to increase observable in-session engagement (Miller & Rollnick, 2002), a hypothesis supported by the MI literature (Hettema, Steele, & Miller, 2005). Specifically, therapists’ use of prescribed MI behaviors is associated with greater observable in-session client engagement (Boardman, Catley, Grobe, Little, & Ahluwalia, 2006). Engaging caregivers, particularly those with high-risk youth, is a primary goal of family-based prevention programs (e.g., Dishion, Kavanagh, Schneiger, Nelson, & Kaufman, 2002; Spoth & Redmond, 2000).

Establishing an alliance with caregivers and the youth is a second, closely related process variable that has been found in meta-analysis to account for an average of 4% of the variance in child outcomes (Shirk & Karver, 2003). Hawley and Weisz (2005) found that parent–therapist alliance was instrumental to engagement in treatment but did not predict child outcome, whereas child–therapist alliance predicted symptom improvement but not engagement in treatment. A stronger parent–therapist alliance in family therapy was found to predict improvements in adolescent drug use and externalizing symptoms, but this relationship was not found in individual CBT (Hogue, Dauber, Stambaugh, Cecero, & Liddle, 2006). These findings suggest that therapists who are effective at fostering significant clinical change in their clients exhibit skills that increase the therapeutic alliance and client engagement in sessions, which perhaps paves the way for enhanced parental skill acquisition and subsequent behavior change.

Evaluating Collaborative Assessment Feedback Processes

Collaborative assessment-driven family intervention models, including the Family Check-Up (FCU; Dishion & Stormshak, 2007), are being used with increasing frequency (see Finn, Fischer, & Handler, 2012), and a number of studies support their effectiveness in reducing children’s behavior problems (e.g., Dishion et al., 2008; Smith, Handler, & Nash, 2010). Finn et al. (2012) stress that the effectiveness of these models hinges upon delivering feedback in a collaborative manner to increase client engagement in the intervention (Tharinger et al., 2008). FCU feedback sessions provide therapists with the opportunity to share the results of the ecological assessment, which is conducted as part of the FCU and is discussed further in the Method section, in a manner that increases families’ motivation to change parenting practices and implement the necessary parenting skills to reduce youth behavior problems. This is accomplished by using collaborative, MI-based feedback procedures, which are addressed in a number of the COACH dimensions. Moreover, a strong collaborative set between the therapist and client during parent management training reduces resistance to change in the context of treatment (e.g., Patterson & Forgatch, 1985). Therefore, fidelity assessed via observations of therapists’ behaviors during the FCU feedback session is most likely to be related to intervention outcomes. Furthermore, FCU techniques during this session are intended to increase client in-session engagement and motivation to participate in subsequent services, if indicated.

This Study

In this study, we examined the fidelity of implementation of the FCU, a brief, assessment-driven family intervention that has been shown to be an effective treatment for early childhood problem behaviors (e.g., Dishion et al., 2008; Gardner, Shaw, Dishion, Burton, & Supplee, 2007). We introduce the COACH rating system, a newly developed measure for assessing fidelity to the FCU (Dishion, Knutson, Brauer, Gill, & Risso, 2010). The goals of this study were twofold: (a) evaluate therapist fidelity to the FCU with a subsample of families with children exhibiting clinically elevated problem behavior from a larger parent project called Early Steps and (b) demonstrate reliability and validity of scores obtained using the COACH rating system by linking fidelity scores to primary parent- and child-level outcomes of the intervention model. We examined the role of observed caregiver engagement as a potential intervening variable between fidelity and later outcomes. We first present the conceptual underpinnings of the FCU intervention and then a description of the COACH.

The FCU

The FCU is an ecological approach to family intervention and treatment designed to improve children’s adjustment across settings (home, school, neighborhood) by motivating positive behavior support (PBS) and other family management practices (e.g., effective limit setting, parental monitoring) in those settings. Family-based intervention programs with a parent management training focus have been found to reduce child behavior problems (e.g., Dishion, Patterson, & Kavanagh, 1992). Two key features of the intervention are that it is assessment driven and individually tailored to the needs of youth and families. An ecological assessment is accomplished through a brief, three-session intervention that is assessment driven, based on MI, and modeled on the Drinker’s Check-Up (Miller & Rollnick, 2002). Typically, the three meetings include an initial contact session, a home-based multi-informant ecological observational assessment session, and a feedback session (Dishion & Stormshak, 2007). Feedback emphasizes parenting and family strengths, yet draws attention to possible areas of change. One goal of the FCU feedback session is to collaboratively help caregivers better understand the ecological factors influencing the child’s problem behaviors and to enhance the family’s motivation to change using collaborative, MI-based therapeutic techniques, such as promoting change talk, highlighting discrepancies, and fostering motivation to address key problems in parenting either on their own or with professional support. Research has indicated that participation in the FCU leads to reductions in problem behaviors during the preschool years (e.g., Dishion et al., 2008; Gardner et al., 2007). Of particular importance to our study, Dishion et al. (2008) found that the FCU’s effect on child problem behaviors is mediated by improvements in caregivers’ observed PBS, which is a prevalent and effective educational behavior management principle that emphasizes the use of nonaversive, reinforcing caregiver–child interactions to promote development (e.g., Horner & Carr, 1997).

In previous studies of the FCU with parents of children and adolescents with behavior problems, Dishion and colleagues (Connell, Dishion, Yasui, & Kavanagh, 2007; Dishion et al., 2013; Dishion & Connell, 2008) found that families at greater risk were more likely to engage in the treatment. In contrast to these previous FCU studies, which operationalized engagement as participation versus nonparticipation and retention over time, our study observationally assessed caregivers’ engagement in feedback sessions. The individually tailored and adaptive nature of the FCU; collaborative, client-driven decision making regarding intervention foci; and motivation-enhancing processes were designed to increase client engagement in sessions and promote continued participation. These therapeutic processes are reflected in each of the dimensions of the COACH.

The COACH Rating System

The COACH is an observational system designed to quantify the extent to which an interventionist exhibits fidelity to the core components of the FCU. The system assesses five dimensions of observable therapist skill prescribed by the FCU intervention, as well as client engagement in the session. The engagement items of the system are described in the Measures section. The five COACH categories are rated separately on a 9-point scale: needs work (1–3), acceptable work (4–6), good work (7–9). Scores in the “needs work” range indicate minimal knowledge and skill implementing the FCU. Scores in the “acceptable work” range indicate an acceptable level of process skill and conceptual understanding of the model as evidenced by a demonstration of key basic skills. Scores in the “good work” range indicate clear understanding of the principles and mastery of the process skills of the model: Execution is relatively fluid, barriers to change are tactfully addressed, feedback is effective and realistic, motivation enhancement techniques are skillfully applied, and the therapist capitalizes on opportunities to proactively enhance behavior change and motivation to change. A score of 5 is considered “sufficient” fidelity to the model, which is based on the treatment developer’s descriptions of therapist skill in this range. This benchmark was used as a reference to describe therapist skill at the upper and lower anchors.
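The banding of the 9-point scale can be written down compactly. The following Python sketch is illustrative only: the function names `coach_band` and `meets_benchmark` are hypothetical, and reading the benchmark as "a rating of 5 or higher" is an assumption, because the text names 5 as "sufficient" without stating a decision rule.

```python
def coach_band(score: int) -> str:
    """Map a 1-9 COACH dimension rating to its descriptive band."""
    if not 1 <= score <= 9:
        raise ValueError("COACH ratings run from 1 to 9")
    if score <= 3:
        return "needs work"
    if score <= 6:
        return "acceptable work"
    return "good work"


# Benchmark value named in the text as "sufficient" fidelity.
SUFFICIENT_FIDELITY = 5


def meets_benchmark(score: int) -> bool:
    """Assumption: 'sufficient' is read as a rating at or above 5."""
    if not 1 <= score <= 9:
        raise ValueError("COACH ratings run from 1 to 9")
    return score >= SUFFICIENT_FIDELITY
```

Under this reading, a rating of 4 falls in the "acceptable work" band yet sits below the benchmark, which is why the band and the benchmark are kept as separate checks.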

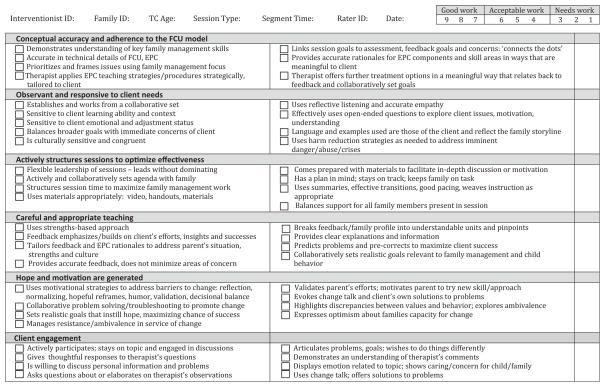

Following are descriptions of the five dimensions. The COACH manual (available by request) provides additional description of each dimension, which is followed by explication of the key process skills. Clinical examples reflecting exemplar (conceptually accurate) and nonexemplar (conceptually inaccurate) execution of each concept are also provided in the manual. Additionally, more specific, operational definitions of the dimensions are provided in Figure 1.

Figure 1. COACH Rating Form (Version 1). FCU = Family Check-Up; EPC = Everyday Parenting Curriculum.

Conceptual accuracy and adherence. The therapist demonstrates an accurate understanding of the FCU model in terms of its emphasis on family-centered change, caregiver leadership in the change process, and support of specific skills that define family management. The model is assessment driven and tailored to the specific needs of children and families; this unique aspect of the model shapes all therapist–caregiver interactions.

Observant and responsive to client needs. It is essential that the therapist observes the client’s immediate and pressing concerns and contextual factors and responds accordingly while giving feedback or while working with a caregiver on changing a specific behavior. The delivery of feedback is appropriately modified to align with the client’s context and unique cultural and individual needs (e.g., intellectual or mental health factors).

Actively structures sessions. The therapist actively structures the change process using an assessment-driven case conceptualization as a guide. Aside from listening, being supportive, and being empathetic, the therapist can use actions such as constructing useful questions, conducting role-plays, and redirecting discussions to motivate and empower the caregivers to behave differently in their interactions with children. As an active agent in the therapy process, the therapist encourages caregiver involvement and uses active strategies to teach family management skills, which often require caregiver effort and self-regulation.

Careful and appropriate teaching. The foundation of the model is to use assessment data to direct the course of the family-centered intervention. Therapists actively provide feedback to caregivers to increase their accurate self-appraisals and motivation to either build on existing strengths or take corrective action in one or more areas. This dimension of the COACH evaluates whether the therapist sensitively provides feedback and guidance to increase caregivers’ motivation to change. Useful therapist skills include reframes that incorporate family strengths, skillful questions that help caregivers reevaluate their motivations, and supportive statements that validate the complexity of the change process.

Hope and motivation are generated. Specific therapeutic techniques from MI are integrated into the FCU to promote caregivers’ hope, motivation, and change. The motivational approach means (a) providing feedback to the caregiver, (b) acknowledging that the caregiver is responsible for the change process, (c) informing the caregiver about known effective change strategies, (d) providing the caregiver with a menu of change options and not controlling the change process by offering only one option, (e) expressing empathy for the caregiver’s situation, and (f) promoting the caregiver’s self-efficacy. These process skills are used in moment-by-moment interaction with caregivers to help the caregiver become an agent of positive family change and enhance motivation to work toward that end.

The COACH was developed on the basis of the Fidelity of Implementation Rating System (FIMP; Knutson, Forgatch, & Rains, 2003) because of similar conceptual and theoretical underpinnings, mainly the role of coercive family processes and family management training in the escalation and treatment, respectively, of child problem behaviors. As such, COACH fidelity terminology is consistent with the definition provided by Forgatch, Patterson, and DeGarmo (2005): The term fidelity refers to the incorporation of two concepts: “adherence to the intervention’s core content components and competent execution using accomplished clinical and teaching practices” (p. 3). Adherence refers to therapists’ following FCU-specific procedures while covering specific content or topic areas consistent with the intervention model. Competent adherence, as defined by Forgatch, Patterson, and DeGarmo (2005), “requires that the procedures be carried out with sophisticated clinical and teaching skills that promote behavior change” (p. 4).

As is the parenting intervention for which the FIMP was designed, the FCU is theory based, meaning that rigid adherence to treatment manuals is neither necessary nor desired in most cases, as long as the core interventional components of the model are implemented in a manner that promotes positive parenting change. Thus, evaluation of fidelity to the FCU requires assessment of both content delivery and process. Competent adherence reflects a combination of constructs that have been found in other studies to be distinct components of the superordinate construct of treatment fidelity. FIMP scores of competent adherence have been found to have reliable kappa coefficients (.67–.74) and intraclass correlation coefficients (.76–.82) and were found to be predictive of improved child and family outcomes (Forgatch & DeGarmo, 2011; Forgatch, DeGarmo, & Beldavs, 2005; Forgatch, Patterson, & DeGarmo, 2005). Evaluating adherence to the content of the FCU can be accomplished using a checklist, which is provided to coders on the COACH rating form (Version 1; see Figure 1). Coders are instructed to first determine whether or not the key content of the FCU was delivered, using the checkboxes, and then evaluate the therapist’s clinical and teaching skill level in delivering those interventions in a manner consistent with the model and in the service of promoting behavior change.

Method

Participants

This study examined a subsample of the original 731 mother–child dyads (49% female) recruited for an RCT of the FCU from the Women, Infants, and Children Nutrition Program (WIC) in three geographically and culturally diverse U.S. regions near Charlottesville, VA (188 dyads), Eugene, OR (271), and Pittsburgh, PA (272). WIC families with children between age 2 years 0 months and 2 years 11 months whose screening indicated socioeconomic, family, or child risk factors for future child behavior problems were invited to participate in the study. Comprehensive information regarding the recruitment and randomization protocol can be found in Dishion et al. (2008). Inclusion in this study was determined by either the primary or alternate caregiver reporting clinical or borderline range scores on the Externalizing scale of the Child Behavior Checklist (CBCL) 1.5/5 (Achenbach & Rescorla, 2001) at the age 2 assessment. Seventy-nine families (VA, 23; OR, 28; PA, 28) met inclusion criteria, and their FCU feedback sessions were rated using the COACH. Of this subsample, 64 (81%) of the families elected to receive the FCU again at age 3. The children in this subsample of 79 families were 48% female, had a mean age of 29.9 months (SD = 3.2) at the time of the age 2 assessment, and were reported by caregivers to be European American (51%), African American (30%), Hispanic/Latino (7%), Native American/American Indian (1%), or biracial (12%).

Procedure

Home observation assessment protocol

Caregivers (i.e., predominantly mothers and, if available, alternative caregivers, such as fathers or grandmothers) and children who agreed to participate in the study were scheduled for a 2.5-hr home visit. Each assessment began by introducing the child to an assortment of age-appropriate toys and having them play for 15 min while the mother completed questionnaires. Following this free-play period, the primary caregiver and child participated in a cleanup task (5 min) followed by a delay-of-gratification task (5 min), four teaching tasks (3 min each), a second free play (4 min), a second cleanup task (4 min), the presentation of two inhibition-inducing toys (2 min each), and a meal preparation and lunch task (20 min). Exactly the same home visit and observation protocol were repeated at age 3. During this home assessment, assessment staff completed ratings of caregiver involvement with and supervision of their child (described in the Measures section). In addition, in-home interaction tasks were videotaped for later coding. The average time between age 2 and age 3 assessments and age 3 and age 4 assessments was 11.86 months (SD = 1.21) and 12.02 months (SD = 1.38), respectively.

Intervention

For the purposes of the randomized research protocol and the internal validity of the study, the home-based assessment was the first contact and occurred prior to randomization. Those families randomized to the intervention condition (following the assessment) were then offered the FCU, which comprises the initial interview and feedback session. All the families in this study sample elected to participate in the FCU and completed the feedback session. In the overall Early Steps study, 77.9% (286) of the 367 families assigned to the intervention group participated in the FCU at child age 2.

Measures

Fidelity

Therapist adherence and competence in the FCU feedback session were jointly assessed using the COACH. Coders were an advanced undergraduate student, a graduate student, and a professional family interventionist who had received approximately 20 hr of training in the COACH. The reliability criterion at the conclusion of training requires scoring three sessions in a row with 85% agreement and a kappa greater than .65. A reliable code during the initial training period in the COACH system is one in which two raters are within one rating point on each dimension. Raters attended weekly meetings to maintain reliability and minimize rater drift. Raters viewed the entire FCU assessment feedback session. We randomly selected 20% of the sessions to be independently coded by two different members of the coding team in order to calculate interrater reliability. Thus, a one-way random-effects model intraclass correlation coefficient (ICC [1,1]; Shrout & Fleiss, 1979) was used. According to previous interpretative guidelines (Cicchetti, 1994), COACH ICC single-item values were in the “fair” to “excellent” range (C = .59; O = .72; A = .70; C = .57; H = .76). The overall ICC of the COACH composite score used in the final analysis was in the “good” range at .74.
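The reliability statistics described above can be illustrated with a short computation. The Python sketch below is a minimal illustration, not the authors' analysis code: it computes the one-way random-effects, single-rating ICC from the between-session and within-session mean squares, labels the result with the Cicchetti (1994) bands (below .40 poor, .40–.59 fair, .60–.74 good, .75 and above excellent), and computes the proportion of paired ratings that fall within one scale point of each other. The function names are hypothetical.

```python
from statistics import mean


def icc_1_1(ratings):
    """One-way random-effects, single-rating ICC (ICC[1,1]).

    ratings: list of rows, one row per session, each holding k ratings.
    ICC = (BMS - WMS) / (BMS + (k - 1) * WMS)
    """
    n = len(ratings)
    k = len(ratings[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need at least 2 sessions, each with the same k >= 2 ratings")
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    # Between-session mean square.
    bms = k * sum((m - grand) ** 2 for m in row_means) / (n - 1)
    # Within-session mean square (rater and error variance are not separated).
    wms = sum((x - m) ** 2 for row, m in zip(ratings, row_means) for x in row) / (n * (k - 1))
    return (bms - wms) / (bms + (k - 1) * wms)


def cicchetti_label(icc):
    """Interpretive band for a reliability coefficient (Cicchetti, 1994)."""
    if icc < 0.40:
        return "poor"
    if icc < 0.60:
        return "fair"
    if icc < 0.75:
        return "good"
    return "excellent"


def within_one_agreement(rater_a, rater_b):
    """Proportion of paired ratings that differ by at most one scale point."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equally long, non-empty rating lists")
    hits = sum(abs(a - b) <= 1 for a, b in zip(rater_a, rater_b))
    return hits / len(rater_a)
```

Applied to the values reported here, `cicchetti_label(0.74)` returns "good" for the composite and `cicchetti_label(0.57)` returns "fair" for the lowest single item.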

Client engagement

Clients’ level of engagement with the therapist was rated at the same time and by the same coders who used the COACH, following the same training and reliability procedures. High Engagement scores (7–9) indicate a client’s active participation in the session as exemplified by engaging in conversation with the therapist and staying on topic; giving complete, thoughtful responses to open-ended questions; using change talk; being willing to discuss personal information; asking questions and elaborating on the therapist’s comments; showing initiative; and actively participating in role-plays and other session tasks. Moderate Engagement scores (4–6) are exemplified by clients’ modest signs of engagement in similar areas, such as occasional verbal and nonverbal signs of interest and understanding, modest elaboration of therapist comments, short responses to questions, and reluctance to disclose and share personal information and problems. Low Engagement scores (1–3) indicate that the client appears inattentive or disengaged as exemplified by shutting out the therapist, lacking nonverbal engagement (e.g., eye contact, head nodding), displaying signs of boredom, providing very brief responses to the therapist’s open-ended questions, repeatedly attempting to steer the conversation away from parent management topics, being unwilling to participate in role-plays and other activities, and seeming dishonest or disingenuous. The one-way random-effects model ICC was “excellent” at .87.

PBS

Interactions between the child and his or her primary caregiver were observed in the home at ages 2 and 3. Four observational measures of parenting were used to build the PBS construct, which is represented as a z-score in the analyses because the four measures are on different scales. The home-based assessment protocol, described previously, was recorded for observational coding by a team of trained undergraduate coders. Additional information regarding the measurement model of PBS and the coder training and reliability procedures can be found in previous research from the parent study from which this subsample was drawn (Dishion et al., 2008; Lunkenheimer et al., 2008).

Parent involvement. Home visitors rated parent involvement using the following items from the Home Observation for Measurement of the Environment (Bradley, Corwyn, McAdoo, & Garcia-Coll, 2001): “Parent keeps child in visual range, looks at often”; “Parent talks to child while doing household work”; “Parent structures child’s play periods” (Yes/No).

Positive behavior support. This measure is based on videotape coding (durations) of caregivers prompting and reinforcing young children’s positive behavior as captured in the following Relationship Process Code (RPC; Jabson, Dishion, Gardner, & Burton, 2004) scores: positive reinforcement (verbal and physical), prompts and suggestions of positive activities, and positive structure (e.g., providing choices in a request for behavior change). Noldus Observer 5 (Noldus Information Technology, 2003), which was used to process observation data, provided a kappa coefficient of .86 at both ages.

Engaged parent–child interactions. RPC codes, such as Talk and Neutral Physical Contact, were used to develop a measure of the average duration of parent–child sequences that involve talking or physical interactions, such as turn taking or playing a game. Kappa coefficients were .86 at both ages.

Proactive parenting. Coders rated each parent on his or her tendency to anticipate potential problems and to provide prompts or other changes to avoid young children becoming upset and/or involved in problem behavior on the following six items from the Coder Impressions Inventory (Dishion, Hogansen, Winter, & Jabson, 2004): parent gives child choices for behavior change whenever possible; parent communicates to the child in calm, simple, and clear terms; parent gives understandable, age-appropriate reasons for behavior change; parent adjusts/defines the situation to ensure the child’s interest, success, and comfort; parent redirects the child to more appropriate behavior if the child is off task or misbehaves; parent uses verbal structuring to make the task manageable. Cronbach’s alphas for ages 2 and 3 were .84 and .87, respectively.
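Because the four parenting measures are on different scales, they must be placed on a common metric before they can be combined. The measurement model actually used is documented in the cited reports; the Python sketch below only illustrates the general idea, using an unweighted mean of z-scores as an assumed stand-in. The function names and the dictionary layout are hypothetical.

```python
from statistics import mean, stdev


def zscores(values):
    """Standardize a list of scores to mean 0 and sample SD 1."""
    m, s = mean(values), stdev(values)
    if s == 0:
        raise ValueError("cannot standardize a constant measure")
    return [(v - m) / s for v in values]


def standardized_composite(measures):
    """Average z-scored measures family by family.

    measures: dict mapping a measure name to a list of scores,
    with every list in the same family order.
    Assumption: equal weighting; this is not the published measurement model.
    """
    lengths = {len(v) for v in measures.values()}
    if len(lengths) != 1:
        raise ValueError("every measure needs one score per family")
    standardized = [zscores(v) for v in measures.values()]
    return [mean(family) for family in zip(*standardized)]
```

A count-based rating and a duration in seconds then contribute equally to the composite, regardless of their raw units.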

Child problem behavior

The Child Behavior Checklist for Ages 1.5–5 (CBCL; Achenbach & Rescorla, 2001) is a 99-item questionnaire used to assess behavioral problems in young children. Caregivers completed the CBCL at the home assessments each year. The Externalizing factor of the CBCL is a broadband scale used to assess problem behavior. Cronbach’s alphas for Externalizing were .74 and .91 at ages 2 and 4, respectively, in the current study sample. Primary and secondary caregiver scores at age 2 were used to determine study inclusion, because at-risk or clinical range scores indicate clinically significant levels of problem behavior. Only primary caregiver reports were available for all children at both the age 2 and age 4 assessments, so these scores were used in the subsequent analyses reported in this article.

Data Analytic Plan and Hypotheses

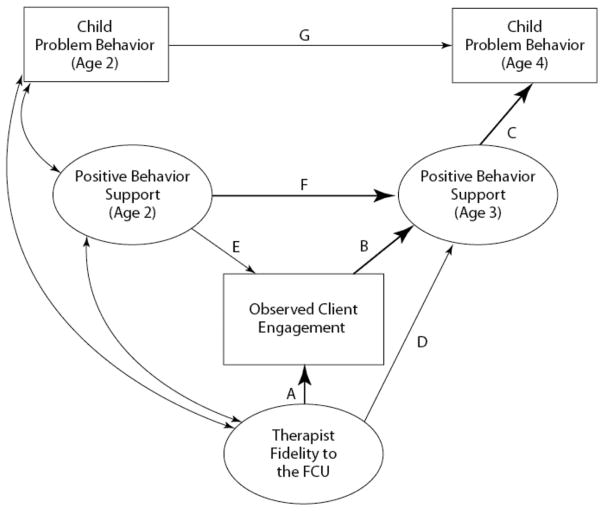

We first examined correlations between the five dimensions assessed in the COACH system (see Table 1). Pearson’s r correlations ranging from .56 to .78 indicated the appropriateness of creating a composite score. This approach was verified using a principal axis factor analysis, which resulted in a one-factor solution with loadings ranging from .54 to .79. The five dimensions were equally weighted when creating the composite variable for further analyses. Our conceptual model (see Figure 2) reflected our two hypotheses: (a) The composite COACH fidelity score would be related to observed client engagement in the age 2 assessment feedback session, which would act as an intervening variable on PBS at age 3, and (b) COACH fidelity scores would have a significant indirect effect on children’s problem behavior at age 4, through engagement and PBS scores at age 3. We examined both the direct effects of COACH fidelity on engagement and PBS and the indirect effect of COACH scores on PBS with observed engagement as a mediator. We then examined the indirect effects of the COACH on caregiver-reported problem behaviors at age 4. The child’s enrollment in Head Start and the family’s receipt of FCU feedback at age 3 were examined as potential covariates.
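The composite-building step described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' analysis code: the ratings are simulated (a shared factor plus noise), and the variable names are hypothetical. Only the logic mirrors the text, namely inspecting the Pearson intercorrelations among five dimensions and then averaging them with equal weights.

```python
import numpy as np

rng = np.random.default_rng(0)
n_sessions, n_dims = 79, 5

# Simulated ratings (NOT study data): one shared factor plus
# dimension-specific noise, so the five dimensions intercorrelate.
shared = rng.normal(size=(n_sessions, 1))
ratings = 5.6 + 1.1 * shared + 0.9 * rng.normal(size=(n_sessions, n_dims))

# 5 x 5 Pearson correlation matrix among the dimensions.
corr = np.corrcoef(ratings, rowvar=False)
off_diagonal = corr[np.triu_indices(n_dims, k=1)]
print(f"r ranges from {off_diagonal.min():.2f} to {off_diagonal.max():.2f}")

# Equally weighted composite: the mean of the five dimension scores.
composite = ratings.mean(axis=1)
print(f"composite M = {composite.mean():.2f}, SD = {composite.std(ddof=1):.2f}")
```

Equal weighting is the simplest defensible choice when a one-factor solution shows broadly similar loadings; a factor-score composite would weight the dimensions by their loadings instead.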

Table 1.

Intercorrelations Between COACH Treatment Fidelity Variables, Observed Caregiver Engagement, and Positive Behavior Support

| Variable | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. Conceptual accuracy and adherence to the FCU model | — | .57** | .58** | .66** | .61** | .80** | .30** | .06 | .06 | .19 | .05 |
| 2. Observant and responsive to client needs | | — | .62** | .56** | .68** | .82** | .44** | .02 | −.04 | .03 | .02 |
| 3. Accurately structures sessions to optimize effectiveness | | | — | .66** | .71** | .84** | .37** | .03 | .01 | .18 | .20 |
| 4. Careful and appropriate teaching and corrective feedback | | | | — | .78** | .87** | .21 | .22 | .05 | .06 | .08 |
| 5. Hope and motivation are generated | | | | | — | .90** | .38** | .23* | .13 | .07 | .01 |
| 6. COACH composite score | | | | | | — | .40** | .14 | .05 | .14 | .09 |
| 7. Observed client engagement in the feedback session | | | | | | | — | −.01 | .21 | .04 | .05 |
| 8. Positive behavior support (child age 2) | | | | | | | | — | .44* | −.29* | −.39** |
| 9. Positive behavior support (child age 3) | | | | | | | | | — | −.31** | −.30** |
| 10. Child problem behavior on CBCL (age 2) | | | | | | | | | | — | .12 |
| 11. Child problem behavior on CBCL (age 4) | | | | | | | | | | | — |
| M | 5.81 | 5.59 | 5.40 | 5.62 | 5.67 | 5.59 | 6.00 | | | 66.37 | 57.64 |
| SD | 1.33 | 1.56 | 1.47 | 1.51 | 1.70 | 1.28 | 1.61 | | | 6.30 | 10.47 |

Note. N = 79. Correlations were calculated using a Pearson’s r. Means and standard deviations are not reported for positive behavior support because this variable is a z-score. Externalizing scores on the Child Behavior Checklist (CBCL) are reported as a T-score. FCU = Family Check-Up.

*p < .05. **p < .01.

Figure 2.

Conceptual model: The relationship between treatment fidelity, observed engagement in the feedback FCU session, improvement in caregivers’ positive behavior support 1 year later, and the child’s problem behaviors 2 years after intervention. Paths in bold are significant. FCU = Family Check-Up.

The typical requirements for mediation include a significant direct effect of the predictor on the presumed mediator (i.e., Path A; see conceptual model in Figure 2) and on the distal outcome (i.e., Path D), a significant direct effect of the mediator on the outcome (i.e., Path B), and a significant indirect effect of the predictor on the outcome via the mediator (Judd, Kenny, & McClelland, 2001; MacKinnon & Dwyer, 1993). However, some have argued that this approach is too restrictive (e.g., MacKinnon, Lockwood, Hoffman, West, & Sheets, 2002) and have advocated for the consideration of other approaches that focus solely on the joint significance of the paths from the predictor to the proposed mediator and from the mediator to the outcome (i.e., Path A*B). In the absence of a direct effect of the predictor on the outcome, MacKinnon et al. (2002) refer to the purported mediator as an intervening variable. Given the inconsistent literature regarding the direct effect of fidelity on outcome, as we previously discussed, we hypothesized engagement to be an intervening variable: a variable that is affected by fidelity to the FCU that, in turn, promotes beneficial improvements in parents’ PBS and thus is theoretically and clinically meaningful (Sandler, Schoenfelder, Wolchik, & MacKinnon, 2011). In our model, we controlled for the effect of baseline PBS (i.e., age 2) on PBS 1 year later (i.e., age 3; Path F). Second, consistent with previous findings from the Early Steps study indicating the mediating role of PBS between intervention and child problem behavior outcomes (Dishion et al., 2008), we hypothesized engagement and PBS at age 3 to be intervening variables on caregiver-reported problem behaviors at age 4 (Path A*B*C). Again, we controlled for the effect of baseline problem behavior (i.e., age 2) on age 4 (Path G).

Path modeling was conducted using structural equation modeling with Mplus 6.12 (L. K. Muthén & Muthén, 2012). A Bayesian estimator was used because of its robust performance under conditions of smaller sample sizes compared with maximum likelihood estimation (Lee & Song, 2004). Similar to full information maximum likelihood, the Bayesian estimator uses all available data to estimate parameters (Asparouhov & Muthén, 2010; Little & Rubin, 2002). However, there were no missing data to be estimated when conducting the analyses; thus, the full 79-subject subsample was included. Mplus provides a 95% credibility interval for all estimated effects in structural equation models using Bayesian estimation. When using the Bayes estimator, if the provided credibility interval (CI) of the specified indirect effect using the Model Constraint command does not contain zero, the effect is considered to be statistically significant (Yuan & MacKinnon, 2009).

Results

Correlations among model variables are provided in Table 1, along with means and standard deviations. In terms of the overall treatment fidelity of the sample, the mean scores of the five COACH dimensions were within the “good work” range (5.40–5.81) and were above the minimum level of competency cutoff (5). As previously discussed, there was a high level of intercorrelation between the five COACH dimensions, indicating the appropriateness of using a manifest fidelity variable in subsequent path analyses (i.e., a mean composite score). Similarly, each of the COACH dimensions was modestly correlated with observed client engagement in the session. Engagement was modestly correlated with PBS 12 months later. PBS was negatively correlated with caregivers’ reports of the children’s problem behavior such that higher levels of observed PBS were associated with lower levels of reported problem behaviors.

Path analysis began with an examination of the fit of the model presented in Figure 2. For models estimated with a Bayesian estimator, fit is evaluated using the posterior predictive checking (PPC) method. The PPC yields two indicators of model fit: (a) Mplus produces a 95% CI for the difference between the observed and the replicated chi-square values, in which a negative lower limit is considered to be one indicator of good model fit (B. O. Muthén, 2010), and (b) Mplus also provides a posterior predictive p value (PPP) of model fit based on the usual chi-square test of the null hypothesis against the alternative hypothesis. PPP values approaching zero (e.g., .05) indicate poor fit (B. O. Muthén, 2010). Our model provided good fit to the data: PPC 95% CI [−8.666, 26.191] and PPP = .250. With adequate model fit, we proceeded by examining the results of the path model.
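The logic of posterior predictive checking can be illustrated with a deliberately simple toy model. The sketch below is not the path model and not the Mplus implementation: it assumes simulated data, a normal model with known unit variance and a flat prior on the mean, and a sum-of-squares discrepancy standing in for the chi-square statistic. All names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)

# Simulated data (NOT study data) and a toy model: y ~ Normal(mu, 1)
# with a flat prior on mu, so the posterior of mu is Normal(mean(y), 1/n).
y = rng.normal(loc=0.3, scale=1.0, size=79)
n = y.size
mu_draws = rng.normal(loc=y.mean(), scale=1.0 / np.sqrt(n), size=5000)


def discrepancy(data, mu):
    """Chi-square-type misfit of the data around the model-implied mean."""
    return np.sum((data - mu) ** 2)


# For each posterior draw, compare the misfit of the observed data with
# the misfit of a data set replicated from the model at that draw.
diffs = np.empty(mu_draws.size)
for i, mu in enumerate(mu_draws):
    y_rep = rng.normal(loc=mu, scale=1.0, size=n)
    diffs[i] = discrepancy(y, mu) - discrepancy(y_rep, mu)

# PPP: share of draws in which the replicated misfit exceeds the observed misfit.
ppp = np.mean(diffs < 0)
ci_low, ci_high = np.percentile(diffs, [2.5, 97.5])
print(f"PPP = {ppp:.3f}; 95% interval of the difference = [{ci_low:.2f}, {ci_high:.2f}]")
```

When the model reproduces the data well, the observed and replicated discrepancies are similar, so the interval of their difference straddles zero and the PPP sits away from zero, which is the pattern reported for the path model above.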

The path coefficients for the model in Figure 2 are provided in Table 2. Fidelity scores as assessed using the COACH were significantly and directly related to observed client engagement in the session (Path A). However, the direct path from COACH scores to PBS at age 3 (Path D), controlling for PBS at age 2 (Path F), was not significant. Higher levels of observed client engagement in the feedback session had a significant direct effect on PBS at age 3 (Path B), controlling for baseline levels. Furthermore, PBS at age 3 was significantly and negatively associated with children’s reported problem behavior at age 4 (Path C), controlling for baseline levels (Path G). Baseline levels of PBS were not significantly related to the level of observed engagement in the feedback session (Path E). Correlations between the baseline variables (i.e., age 2 scores on PBS, problem behavior, and COACH) were not significant. The R² values for the three outcome variables in our model (see Table 2) were significant and trustworthy, based on the credibility intervals. Enrollment in Head Start and receipt of FCU feedback at age 3 were not related to age 3 PBS or age 4 problem behavior scores and were not included in the final model.

Table 2.

Results of Path Analysis

| Model path | B | Posterior SD | β | 95% credibility interval |
|---|---|---|---|---|
| A. Fidelity → engagement | .51*** | .12 | .41 | [.207, .579] |
| B. Engagement → PBS (age 3) | .11** | .05 | .28 | [.049, .484] |
| C. PBS (age 3) → problem behavior (age 4) | −5.30*** | 1.93 | −.32 | [−.497, −.052] |
| D. Fidelity → PBS (age 3) | −.05 | .05 | −.10 | [−.328, .091] |
| E. PBS (age 2) → engagement | −.22 | .27 | −.08 | [−.271, .127] |
| F. PBS (age 2) → PBS (age 3) | .51*** | .12 | .48 | [.298, .671] |
| G. Problem behavior (age 2) → problem behavior (age 4) | .04 | .19 | .02 | [−.228, .263] |
| R² | | | | |
| Engagement | .19*** | .08 | | [.051, .344] |
| PBS (age 3) | .29*** | .09 | | [.134, .461] |
| Problem behavior (age 4) | .11*** | .06 | | [.015, .249] |

Note. N = 79. PBS = positive behavior support construct; problem behavior = primary caregiver report of child’s problem behaviors on the Child Behavior Checklist.

**p < .01. ***p < .001.

Two intervening variable effects were of interest in this study: (a) COACH scores on observed PBS at age 3, by way of observed client engagement, and (b) COACH scores on caregiver-reported problem behavior at age 4, by way of observed client engagement and observed PBS at age 3. The results of the indirect effect analyses are presented in Table 3. The 95% CIs for the indirect effects tested do not contain zero and are thus considered significant (Yuan & MacKinnon, 2009). In regard to the first indirect effect, observed engagement in feedback acted as a significant intervening variable (MacKinnon et al., 2002) between COACH fidelity scores and PBS at age 3, controlling for prior levels. Results indicate that higher fidelity scores were related to higher levels of engagement and higher levels of observed PBS 12 months later. The second indirect analysis was also significant and contained two intervening variables between COACH scores at the age 2 feedback and problem behavior at age 4: observed engagement and observed PBS at age 3. Results indicate a negative indirect effect between the COACH scores and problem behavior, showing that higher COACH scores are related to decreased problem behavior 2 years later.

Table 3.

Indirect Effects

| Indirect effects | B | Posterior SD | 95% CI |
|---|---|---|---|
| 1. Fidelity → engagement → PBS | .06 | .03 | [.007, .129] |
| 2. Fidelity → engagement → PBS → problem behavior | −.24 | .19 | [−.664, −.019] |

Note. Effect is considered significant if the 95% confidence interval (CI) does not contain zero. PBS = positive behavior support construct at age 3; problem behavior = primary caregiver report of child’s problem behavior on the Child Behavior Checklist at age 4.
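The decision rule in the note above can be sketched by simulating the first indirect effect as the product of Paths A and B. This is an approximation under loudly stated assumptions, not a reproduction of the Mplus analysis: the two posteriors are assumed to be independent and normal, with means and SDs taken from the unstandardized estimates and posterior SDs in Table 2, whereas Mplus works with the actual MCMC draws. Variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2013)
n_draws = 100_000

# ASSUMPTION: independent normal posteriors built from Table 2
# (unstandardized B and posterior SD); the real posteriors may differ.
path_a = rng.normal(0.51, 0.12, n_draws)  # Path A: fidelity -> engagement
path_b = rng.normal(0.11, 0.05, n_draws)  # Path B: engagement -> PBS (age 3)

# Indirect effect for each draw is the product of the two paths (A*B).
indirect = path_a * path_b
lower, upper = np.percentile(indirect, [2.5, 97.5])

# The effect is treated as significant if the 95% interval excludes zero.
significant = not (lower <= 0.0 <= upper)
print(f"median = {np.median(indirect):.3f}, SD = {indirect.std(ddof=1):.3f}")
print(f"95% interval = [{lower:.3f}, {upper:.3f}], excludes zero: {significant}")
```

Because the product of two coefficients is generally skewed, a percentile interval from the draws is preferable to a symmetric interval built from the product's standard deviation. The simulated values will not match Table 3 exactly, because the independence and normality assumptions are only approximations.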

Discussion

The goals of this study were to present and evaluate the reliability and validity of the COACH rating system, a measure of fidelity to the FCU intervention model. The reliability of the COACH is within the acceptable range with respect to the overall internal consistency of ratings (overall ICC = .74). However, single-item ICCs of the first and fourth dimensions, Conceptual accuracy and adherence to the model and Careful and appropriate teaching and corrective feedback, were .59 and .57, respectively. One explanation for this finding is the advanced clinical skill required within these dimensions. An untrained clinician rating sessions for fidelity might not recognize or label these skills. A second consideration is the heterogeneity of the coders trained in the COACH for this study; one had not been trained as a clinician and had not practiced the FCU model firsthand, which might have made deciphering the quality of the therapists’ conceptual accuracy to the model and careful and appropriate teaching and feedback more difficult. Reliability is likely to increase when using coders who are clinicians trained in the model. Low reliability could also be an indication of potential misspecification of the underlying constructs, suggesting that revision of the system is needed. We have undertaken a systematic study of the COACH system to address the psychometric concerns identified in this study. The results indicate that raters who are trained clinicians in the FCU, who are also provided with the family’s assessment data, produce more reliable scores (Smith, Dishion, & Knoble, 2013). Although the reliability of single-item scores on the COACH was low in this study, the reliability of the overall score used in the path analysis was good (ICC = .74), and we did not intend for the individual items to be used as separate indicators of fidelity to the FCU.

In the context of the larger RCT in which this study was conducted, it is important to note that the therapists associated with the cases selected for this study were found to have sufficient fidelity to the model. That is, average scores on each of the COACH dimensions were above the minimum preestablished threshold of 5, with a mean of 5.62. In comparison, Smith, Stormshak, and Kavanagh (2013) found that therapists with less training and implementation support achieved a mean score of 4.46 on the COACH when delivering the FCU outside of the laboratory environment in community mental health clinics. These findings suggest the sensitivity of scores obtained by the COACH rating system. As Perepletchikova and Kazdin (2005) and others have noted, demonstrating fidelity to the treatment model is critical when drawing valid inferences regarding treatment effects. Additional assessment of fidelity across more diverse families, ages, and session types (i.e., initial sessions) remains an area of focus in the Early Steps study and for research with the FCU model in general.

In terms of the predictive validity of the COACH, our study proposed two hypotheses, both of which were supported by the results of the path analysis. The direction of the two significant indirect effects indicates that therapists who more competently adhere to the content and process of the FCU feedback are better able to engage clients in the session, which is related to the parent using more PBS 1 year later and to reduced child problem behavior the following year. Although this finding is rather intuitive, the intervening effect of client engagement between fidelity, parenting practices, and later child outcomes had not previously been demonstrated in the family intervention literature. Thus far, it appears that researchers have neglected identifying potential intervening variables between fidelity and outcome. Yet, identifying salient intervening variables in intervention and prevention research is valuable in developing more effective and efficient interventions that focus on processes that lead to change over time (Sandler et al., 2011). Engagement could possibly be seen as a prerequisite for future changes: If therapists are unable to engage parents in an intervention, even if they can competently and skillfully use the intervention’s techniques, as evidenced by high fidelity, parents might be unlikely to retain skills that result in changes in their parenting practices. The same could likely be said for other prevention programs in which engagement and participation in the intervention are viewed as an outcome in and of themselves. Similarly, model-specific components of the FCU could very likely be responsible for increasing caregiver engagement. As such, strategies that foster engagement, and perhaps alliance, may not be generic; rather, specific components of the FCU and fidelity to these components engage caregivers. At least one other study has found support for the distinctive contribution of model-specific components of manualized CBT therapy in cultivating salient process variables (Malik, Beutler, Alimohamed, Gallagher-Thompson, & Thompson, 2003). This consideration illuminates the mutually dependent nature of fidelity and engagement: It could very well be that better fidelity increases client engagement. Yet, an engaged client arguably enables the therapist greater ease of implementing the model. Our findings support the proposed model of implementation presented by Berkel, Mauricio, Schoenfelder, and Sandler (2011), who identified client engagement as a mediating variable between fidelity of implementation and client outcomes.

Our findings suggest that engagement and other potential intervening variables related to therapeutic process ought to be examined in future studies of treatment fidelity and outcome. Although we did not directly assess this construct in our study, therapeutic alliance might well be an important intervening variable in the relationship between fidelity and outcome. The larger psychotherapy literature has consistently found that therapeutic alliance accounts for a significant amount of the variance in the dependent measures (e.g., Martin, Garske, & Davis, 2000). The family intervention literature has similarly demonstrated a relationship between alliance and outcomes (e.g., Hogue et al., 2006, 2008; Shelef, Diamond, Diamond, & Liddle, 2005; Shirk & Karver, 2003). A study by Boardman et al. (2006), which tested an MI intervention for smokers, revealed not only a strong relationship between therapists’ use of MI techniques and increased observable engagement and a stronger therapeutic alliance, but also a very strong relationship between therapeutic alliance and observed engagement (r = .85; from Table 3, p. 334). The conceptual linkages between these two constructs suggest that intervention models ought to prescribe therapeutic processes that increase in-session engagement and foster a strong therapeutic relationship.

Limitations

This study requires replication and has some limitations that require acknowledgment. First, the sample size is adequate for the statistical methods used (path analysis with Bayesian estimation; Asparouhov & Muthén, 2010) but is still relatively small, especially when compared with the larger study from which the data were drawn. The focus of the study is also rather narrow in terms of examining only FCU assessment feedback sessions at age 2 and with families in which the target child had elevated problem behavior. Although this study took place in the larger context of a prevention program for early problem behaviors, the results and conclusions might be more applicable to treatment models, given the elevated problem behavior scores of the subsample we examined. Yet, these findings are still applicable with respect to the goal of prevention programs to reach families with the highest degree of need for intervention.

Second, fidelity ratings in this study are relatively high and lack variation. Future studies might consider a research design that could increase the variability in treatment integrity between interventionists or service delivery sites. This issue is significant when translating intervention programs from highly controlled randomized designs, in which therapists receive considerable and ongoing training and supervision, to real-world service delivery sites, in which the training and supervision infrastructure will undoubtedly vary and affect fidelity, resulting in a voltage drop in effectiveness (e.g., Carroll et al., 2007; McHugh & Barlow, 2010).

Last, the COACH system ratings were completed based on full sessions averaging about 60 min in duration. Future studies should examine the reliability and validity of ratings conducted on shorter segments of the feedback session (e.g., 15–20 min), which would make the system more cost-effective and applicable to implementation research in real-world clinical settings. Empirical demonstration that segment and full-session ratings are equivalent is needed as a precursor to altering the current COACH rating procedures.

Conclusions

Clients benefit from well-implemented treatments. This is one of the central assumptions of psychological intervention research, yet empirical support for this assumption is not consistently demonstrated. Our study adds to a growing body of literature demonstrating the importance of fidelity to family-based interventions for problems in childhood and the outcomes of those intervention models. Illumination of the role of engagement in assessment feedback sessions provides a unique contribution to this literature and opens the door for future studies of the relationship between germane therapeutic processes, fidelity, and outcome. This study also presents a reliable and valid tool for assessing fidelity to the FCU. Feasible, psychometrically sound, and valid measures of implementation fidelity are sorely needed to advance the field of implementation science and move evidence-supported treatments into the community settings for which they were designed (e.g., Aarons, Sommerfeld, Hecht, Silovsky, & Chaffin, 2009; Carroll et al., 2007; Forgatch & DeGarmo, 2011; McHugh & Barlow, 2010). Sound assessment of fidelity, empirical demonstration of its relationship to salient clinical outcomes, and use of fidelity measurement and monitoring in the community will help close the chasm between health research and real-world practice identified by the Institute of Medicine (2001). This issue will undoubtedly be one of the primary challenges facing mental health intervention researchers in the 21st century (Institute of Medicine, 2001, 2006; Report of the President’s New Freedom Commission on Mental Health, 2004).

Acknowledgments

This research was supported by National Institute on Drug Abuse Grant DA016110, awarded to Thomas J. Dishion, Daniel S. Shaw, and Melvin N. Wilson. Justin D. Smith received support from National Institute of Mental Health Research Training Grant T32 MH20012, awarded to Elizabeth Stormshak. We thank Mark Van Ryzin for his assistance with data analysis; David MacKinnon for consultation regarding intervening variable effects and Bayesian estimation; Cheryl Mikkola for her editorial support; the treatment fidelity raters; the members of the observational coding team at the Child and Family Center; the rest of the Early Steps team in Eugene, Pittsburgh, and Charlottesville; and the families who participated in the study. Specifically, we appreciate the contribution of Lisa Brauer for her work in developing the COACH fidelity coding system and coding of videotaped intervention sessions.

Contributor Information

Justin D. Smith, Child and Family Center, University of Oregon

Thomas J. Dishion, Prevention Research Center, Arizona State University, and Child and Family Center, University of Oregon

Daniel S. Shaw, University of Pittsburgh

Melvin N. Wilson, University of Virginia

References

- Aarons GA, Sommerfeld DH, Hecht DB, Silovsky JF, Chaffin MJ. The impact of evidence-based practice implementation and fidelity monitoring on staff turnover: Evidence for a protective effect. Journal of Consulting and Clinical Psychology. 2009;77:270–280. doi: 10.1037/a0013223. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Achenbach TM, Rescorla LA. Manual for the ASEBA school-age forms and profiles. Washington, DC: ASEBA; 2001. [Google Scholar]

- Asparouhov T, Muthén BO. Bayesian analysis using Mplus: Technical implementation. Mplus Technical Report. 2010 Retrieved from http://www.statmodel.com.

- Barber JP, Crits-Christoph P, Luborsky L. Effects of therapist adherence and competence on patient outcome in brief dynamic therapy. Journal of Consulting and Clinical Psychology. 1996;64:619–622. doi: 10.1037/0022-006X.64.3.619. [DOI] [PubMed] [Google Scholar]

- Barber JP, Gallop R, Crits-Christoph P, Frank A, Thase ME, Weiss RD, Connolly Gibbons MB. The role of therapist adherence, therapist competence, and alliance in predicting outcome of individual drug counseling: Results from the National Institute on Drug Abuse Collaborative Cocaine Treatment Study. Psychotherapy Research. 2006;16:229–240. doi: 10.1080/10503300500288951. [DOI] [Google Scholar]

- Barber JP, Sharpless BA, Klostermann S, McCarthy KS. Assessing intervention competence and its relation to therapy outcome: A selected review derived from the outcome literature. Professional Psychology: Research and Practice. 2007;38:493–500. doi: 10.1037/0735-7028.38.5.493. [DOI] [Google Scholar]

- Berkel C, Mauricio AM, Schoenfelder EN, Sandler IN. Putting the pieces together: An integrated model of program implementation. Prevention Science. 2011;12:23–33. doi: 10.1007/s11121-010-0186-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Boardman T, Catley D, Grobe JE, Little TD, Ahluwalia JS. Using motivational interviewing with smokers: Do therapist behaviors relate to engagement and therapeutic alliance? Journal of Substance Abuse Treatment. 2006;31:329–339. doi: 10.1016/j.jsat.2006.05.006. [DOI] [PubMed] [Google Scholar]

- Bradley RH, Corwyn RF, McAdoo HP, Garcia-Coll C. The home environments of children in the United States. Part I: Variations by age, ethnicity, and poverty status. Child Development. 2001;72:1844–1867. doi: 10.1111/1467-8624.t01-1-00382. [DOI] [PubMed] [Google Scholar]

- Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and implementation research in health: Translating science to practice. New York, NY: Oxford University Press; 2012. 10.1093/acprof: oso/9780199751877.001.0001. [Google Scholar]

- Carroll C, Patterson M, Wood S, Booth A, Rick J, Balain S. A conceptual framework for implementation fidelity. Implementation Science. 2007;2:40–48. doi: 10.1186/1748-5908-2-40. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cicchetti DV. Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments in psychology. Psychological Assessment. 1994;6:284–290. doi: 10.1037/1040-3590.6.4.284. [DOI] [Google Scholar]

- Connell AM, Dishion TJ, Yasui M, Kavanagh K. An adaptive approach to family intervention: Linking engagement in family-centered intervention to reductions in adolescent problem behavior. Journal of Consulting and Clinical Psychology. 2007;75:568–579. doi: 10.1037/0022-006X.75.4.568. [DOI] [PubMed] [Google Scholar]

- Dishion TJ, Brennan LM, Shaw DS, McEachern AD, Wilson MN, Jo B. Prevention of problem behavior through annual Family Check-Ups in early childhood: Intervention effects from the home to the second grade of elementary school. Journal of Abnormal Child Psychology. 2013 doi: 10.1007/s10802-013-9768-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dishion TJ, Connell AM. An ecological approach to family intervention to prevent adolescent drug use: Linking parent engagement to long-term reductions of tobacco, alcohol and marijuana use. In: Heinrichs N, Hahlweg K, Doepfner M, editors. Strengthening families: Evidence-based approaches to support child mental health. Munster, Ireland: Verlag fur Psychotherapie; 2008. pp. 403–433. [Google Scholar]

- Dishion TJ, Hogansen J, Winter C, Jabson JM. The Coder Impressions Inventory. Child and Family Center; 6217 University of Oregon, Eugene, OR 97403: 2004. Unpublished coding manual. [Google Scholar]

- Dishion TJ, Kavanagh K, Schneiger A, Nelson SE, Kaufman N. Preventing early adolescent substance use: A family-centered strategy for public middle school. Prevention Science. 2002;3:191–201. doi: 10.1023/A:1019994500301. [DOI] [PubMed] [Google Scholar]

- Dishion TJ, Knutson N, Brauer L, Gill A, Risso J. Family Check-Up: COACH ratings manual. Child and Family Center; 6217 University of Oregon, Eugene, OR 97403: 2010. Unpublished coding manual. [Google Scholar]

- Dishion TJ, Patterson GR, Kavanagh K. An experimental test of the coercion model: Linking theory, measurement, and intervention. In: McCord J, Tremblay R, editors. Preventing antisocial behavior: Interventions from birth through adolescence. New York, NY: Guilford Press; 1992. pp. 253–282. [Google Scholar]

- Dishion TJ, Shaw DS, Connell A, Gardner FEM, Weaver C, Wilson M. The Family Check-Up with high-risk indigent families: Preventing problem behavior by increasing parents’ positive behavior support in early childhood. Child Development. 2008;79:1395–1414. doi: 10.1111/j.1467-8624.2008.01195.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dishion TJ, Stormshak EA. Intervening in children’s lives: An ecological, family-centered approach to mental health care. Washington, DC: American Psychological Association; 2007. [DOI] [Google Scholar]

- Elkin I. Relationship of therapists’ adherence to treatment outcome in the Treatment of Depression Collaborative Research Program. Paper presented at the annual meeting of the Society for Psychotherapy Research; Santa Fe, NM. 1988. [Google Scholar]

- Finn SE, Fischer CT, Handler L, editors. Collaborative/ therapeutic assessment: A casebook and guide. Hoboken, NJ: Wiley; 2012. [Google Scholar]

- Forgatch MS, DeGarmo DS. Sustaining fidelity following the nationwide PMTO™ implementation in Norway. Prevention Science. 2011;12:235–246. doi: 10.1007/s11121-011-0225-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Forgatch MS, DeGarmo DS, Beldavs ZG. An efficacious theory-based intervention for stepfamilies. Behavior Therapy. 2005;36:357–365. doi: 10.1016/S0005-7894(05)80117-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Forgatch MS, Patterson GR, DeGarmo DS. Evaluating fidelity: Predictive validity for a measure of competent adherence to the Oregon Model of Parent Management Training. Behavior Therapy. 2005;36:3–13. doi: 10.1016/S0005-7894(05)80049-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gardner FEM, Shaw DS, Dishion TJ, Burton J, Supplee L. Randomized prevention trial for early conduct problems: Effects on proactive parenting and links to toddler disruptive behavior. Journal of Family Psychology. 2007;21:398–406. doi: 10.1037/0893-3200.21.3.398. [DOI] [PubMed] [Google Scholar]

- Hawley KM, Weisz JR. Youth versus parent working alliance in usual clinical care: Distinctive associations with retention, satisfaction, and treatment outcome. Journal of Clinical Child and Adolescent Psychology. 2005;34:117–128. doi: 10.1207/s15374424jccp3401_11. 10.1207/s15374424 jccp3401_11. [DOI] [PubMed] [Google Scholar]

- Henggeler SW, Melton GB, Brondino MJ, Scherer DG, Hanley JH. Multisystemic therapy with violent and chronic juvenile offenders and their families: The role of treatment fidelity in successful dissemination. Journal of Consulting and Clinical Psychology. 1997;65:821–833. doi: 10.1037/0022-006X.65.5.821.

- Hettema J, Steele J, Miller WR. Motivational Interviewing. Annual Review of Clinical Psychology. 2005;1:91–111. doi: 10.1146/annurev.clinpsy.1.102803.143833.

- Hogue A, Dauber S, Stambaugh LF, Cecero JJ, Liddle HA. Early therapeutic alliance and treatment outcome in individual and family therapy for adolescent behavior problems. Journal of Consulting and Clinical Psychology. 2006;74:121–129. doi: 10.1037/0022-006X.74.1.121.

- Hogue A, Henderson CE, Dauber S, Barajas PC, Fried A, Liddle HA. Treatment adherence, competence, and outcome in individual and family therapy for adolescent behavior problems. Journal of Consulting and Clinical Psychology. 2008;76:544–555. doi: 10.1037/0022-006X.76.4.544.

- Horner RH, Carr EG. Behavioral support for students with severe disabilities: Functional assessment intervention. Journal of Special Education. 1997;31:84–104. doi: 10.1177/002246699703100108.

- Huey SJ, Jr, Henggeler SW, Brondino MJ, Pickrel SG. Mechanisms of change in multisystemic therapy: Reducing delinquent behavior through therapist adherence and improved family and peer functioning. Journal of Consulting and Clinical Psychology. 2000;68:451–467. doi: 10.1037/0022-006X.68.3.451.

- Institute of Medicine. Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academy Press; 2001.

- Institute of Medicine. Adaption to mental health and addictive disorder: Improving the quality of health care for mental and substance-use conditions. Washington, DC: National Academy Press; 2006.

- Jabson JM, Dishion TJ, Gardner FEM, Burton J. Relationship Process Code v-2.0 training manual: A system for coding relationship interactions. Unpublished coding manual. Child and Family Center, 6217 University of Oregon, Eugene, OR 97403; 2004.

- Judd CM, Kenny DA, McClelland GH. Estimating and testing mediation and moderation in within-subject designs. Psychological Methods. 2001;6:115–134. doi: 10.1037/1082-989X.6.2.115.

- Kazdin AE. Research design in clinical psychology. 4th ed. Boston, MA: Allyn & Bacon; 2003.

- Knutson NM, Forgatch MS, Rains LA. Fidelity of Implementation Rating System (FIMP): The training manual for PMTO. Eugene: Oregon Social Learning Center; 2003.

- Lee S, Song X. Evaluation of the Bayesian and maximum likelihood approaches in analyzing structural equation models with small sample sizes. Multivariate Behavioral Research. 2004;39:653–686. doi: 10.1207/s15327906mbr3904_4.

- Leichsenring F, Salzer S, Hilsenroth MJ, Leibing E, Leweke F, Rabung S. Treatment integrity: An unresolved issue in psychotherapy research. Current Psychiatry Reviews. 2011;7:313–321. doi: 10.2174/157340011797928259.

- Little RJA, Rubin DB. Statistical analysis with missing data. 2nd ed. New York, NY: Wiley; 2002.

- Lunkenheimer ES, Dishion TJ, Shaw DS, Connell AM, Gardner FEM, Wilson MN, Skuban EM. Collateral benefits of the Family Check-Up on early childhood school readiness: Indirect effects of parents’ positive behavior support. Developmental Psychology. 2008;44:1737–1752. doi: 10.1037/a0013858.

- MacKinnon DP, Dwyer JH. Estimating mediated effects in prevention studies. Evaluation Review. 1993;17:144–158. doi: 10.1177/0193841X9301700202.

- MacKinnon DP, Lockwood CM, Hoffman JM, West SG, Sheets V. A comparison of methods to test mediation and other intervening variable effects. Psychological Methods. 2002;7:83–104. doi: 10.1037/1082-989X.7.1.83.

- Malik ML, Beutler LE, Alimohamed S, Gallagher-Thompson D, Thompson L. Are all cognitive therapies alike? A comparison of cognitive and noncognitive therapy process and implications for the application of empirically supported treatments. Journal of Consulting and Clinical Psychology. 2003;71:150–158. doi: 10.1037/0022-006X.71.1.150.

- Martin DJ, Garske JP, Davis KM. Relation of the therapeutic alliance with outcome and other variables: A meta-analytic review. Journal of Consulting and Clinical Psychology. 2000;68:438–450. doi: 10.1037/0022-006X.68.3.438.

- McHugh RK, Barlow DH. The dissemination and implementation of evidence-based psychological treatments: A review of current efforts. American Psychologist. 2010;65:73–84. doi: 10.1037/a0018121.

- Miller WR, Rollnick S. Motivational interviewing: Preparing people for change. 2nd ed. New York, NY: Guilford Press; 2002.

- Muthén BO. Bayesian analysis in Mplus: A brief introduction (Technical Report, Version 3). Los Angeles, CA: Muthén & Muthén; 2010. Retrieved from http://www.statmodel.com/

- Muthén LK, Muthén BO. Mplus (Version 7.0). Los Angeles, CA: Author; 2012.

- Noldus Information Technology. The Observer XT reference manual 5.0. Wageningen, the Netherlands: Author; 2003.

- O’Malley SS, Suh CS, Strupp HH. The Vanderbilt Psychotherapy Process Scale: A report on the scale development and a process-outcome study. Journal of Consulting and Clinical Psychology. 1983;51:581–586. doi: 10.1037/0022-006X.51.4.581.

- Patterson GR, Forgatch MS. Therapist behavior as a determinant for client resistance: A paradox for the behavior modifier. Journal of Consulting and Clinical Psychology. 1985;53:846–851. doi: 10.1037/0022-006X.53.6.846.

- Perepletchikova F, Kazdin AE. Treatment integrity and therapeutic change: Issues and research recommendations. Clinical Psychology: Science and Practice. 2005;12:365–383. doi: 10.1093/clipsy.bpi045.

- Perepletchikova F, Treat TA, Kazdin AE. Treatment integrity in psychotherapy research: Analysis of the studies and examination of the associated factors. Journal of Consulting and Clinical Psychology. 2007;75:829–841. doi: 10.1037/0022-006X.75.6.829.

- Report of the President’s New Freedom Commission on Mental Health. Final report. 2004. Retrieved from http://govinfo.library.unt.edu/mentalhealthcommission/reports/FinalReport/toc.html

- Sandler IN, Schoenfelder EN, Wolchik SA, MacKinnon DP. Long-term impact of prevention programs to promote effective parenting: Lasting effects but uncertain processes. Annual Review of Psychology. 2011;62:299–329. doi: 10.1146/annurev.psych.121208.131619.

- Shaw BF, Elkin I, Yamaguchi J, Olmsted M, Vallis TM, Dobson KS, Imber SD. Therapist competence ratings in relation to clinical outcome in cognitive therapy of depression. Journal of Consulting and Clinical Psychology. 1999;67:837–846. doi: 10.1037/0022-006X.67.6.837.

- Shelef K, Diamond GM, Diamond GS, Liddle HA. Adolescent and parent alliance and treatment outcome in Multidimensional Family Therapy. Journal of Consulting and Clinical Psychology. 2005;73:689–698. doi: 10.1037/0022-006X.73.4.689.

- Shirk SR, Karver M. Prediction of treatment outcome from relationship variables in child and adolescent therapy: A meta-analytic review. Journal of Consulting and Clinical Psychology. 2003;71:452–464. doi: 10.1037/0022-006X.71.3.452.

- Shrout P, Fleiss J. Intraclass correlations: Uses in assessing rater reliability. Psychological Bulletin. 1979;86:420–428. doi: 10.1037/0033-2909.86.2.420.

- Smith JD, Dishion TJ, Knoble N. An experimental study of the psychometric properties of a fidelity of implementation rating system for the Family Check-Up. 2013. Manuscript submitted for publication.

- Smith JD, Handler L, Nash MR. Therapeutic Assessment for preadolescent boys with oppositional-defiant disorder: A replicated single-case time-series design. Psychological Assessment. 2010;22:593–602. doi: 10.1037/a0019697.

- Smith JD, Stormshak EA, Kavanagh K. Results of a randomized trial implementing the Family Check-Up in community mental health agencies. 2013. Manuscript submitted for publication.

- Spoth RL, Redmond C. Research on family engagement in preventive interventions: Toward improved use of scientific findings in primary prevention practice. The Journal of Primary Prevention. 2000;21:267–284. doi: 10.1023/A:1007039421026.

- Svartberg M, Stiles TC. Therapeutic alliance, therapist competence, and client change in short-term anxiety-provoking psychotherapy. Psychotherapy Research. 1994;4:20–33. doi: 10.1080/10503309412331333872.

- Tharinger DJ, Finn SE, Hersh B, Wilkinson AD, Christopher G, Tran A. Assessment feedback with parents and pre-adolescent children: A collaborative approach. Professional Psychology: Research and Practice. 2008;39:600–609. doi: 10.1037/0735-7028.39.6.600.

- Waltz J, Addis ME, Koerner K, Jacobson NS. Testing the integrity of a psychotherapy protocol: Assessment of adherence and competence. Journal of Consulting and Clinical Psychology. 1993;61:620–630. doi: 10.1037/0022-006X.61.4.620.

- Yuan Y, MacKinnon DP. Bayesian mediation analysis. Psychological Methods. 2009;14:301–322. doi: 10.1037/a0016972.