Introduction

The study of decision making (DM) draws on psychology, statistics, economics, finance, engineering (e.g., quality control), political science, philosophy, ethics and jurisprudence. The neuroscience behind decision making touches on only a fraction of these areas, although it is a constant source of delight when a connection emerges between neural mechanisms and each of these areas. While decision making, per se, fascinates, what makes the neuroscience of DM special is the insight it promises on a deeper topic. For the neurobiology of DM is really the neurobiology of cognition — or at the very least a large component of cognition that is tractable to experimental neuroscience. It exposes principles of neuroscience which underlie a variety of mental functions. Moreover, we believe these same principles, enumerated below, will furnish critical insight into the pathophysiology of diseases that compromise cognitive function, and ultimately they will supply the key to ameliorating cognitive dysfunction.

For this special issue of Neuron’s 25th Anniversary, we focus on a line of research that began almost exactly 25 years ago, in the laboratory of Bill Newsome. It is an honor to share our perspective on the field: its roots, an overview of the progress we have made, and some ideas about the directions we might pursue in the next 25 years.

From perception to decision making

Approximately 25 years ago, Bill Newsome, Ken Britten and Tony Movshon recorded from neurons in extrastriate area MT/V5 of rhesus monkeys while those monkeys performed a demanding direction discrimination task. They made two important discoveries. First, the fidelity of the single neuron response to motion rivaled the fidelity of the monkey’s behavioral reports (in other words, its accuracy). Fidelity of a neural response here means some characterization of the relationship between the signal-to-noise ratio (SNR) of the neural response and stimulus difficulty level. Second, the trial-to-trial variability of single neurons (the noise part of “signal to noise”) exhibited a weak but reliable correlation with the trial-to-trial variability of the monkey’s choices.

These two observations seemed to imply that the monkey was basing decisions either on a small number of neurons or, more likely, a large number of neurons that share a portion of their variability. Shared variability, termed noise correlation, curtails the improvement in performance one would expect from signal averaging (Box 1). Recall that the SNR of an average improves by the square root of the number of independent samples. However, if the noise is not independent but instead characterized by weak positive correlation, then the improvement in SNR approaches asymptotic levels at 50–100 samples, beyond which more samples fail to improve matters. The levels of correlation seen in pairs of neurons (nearby neurons that carry similar signals, that is to say neurons that one would imagine ought to be averaged) would limit the improvement in SNR to ~2.5 to 3-fold compared to a single neuron.
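The saturation of SNR with pool size can be illustrated with a minimal sketch. It assumes N neurons of equal variance and a single uniform pairwise correlation r; the value r = 0.15 is an illustrative assumption rather than a figure taken from the text, and `snr_gain` is a hypothetical helper name.

```python
import math

def snr_gain(n, r):
    """SNR of the average of n equal-variance responses, relative to one neuron.

    Assumes every pair of neurons shares the same correlation r (0 <= r < 1).
    The variance of the mean is sigma^2 * (1 + (n - 1) * r) / n.
    """
    return math.sqrt(n / (1.0 + (n - 1) * r))

r = 0.15  # assumed, illustrative pairwise noise correlation
for n in (1, 10, 50, 100, 1000):
    print(n, round(snr_gain(n, r), 2))

# As n grows, the gain approaches 1 / sqrt(r) instead of growing as sqrt(n).
print("asymptote:", round(1.0 / math.sqrt(r), 2))
```

Under these assumptions the gain is already close to its ceiling of 1/sqrt(r) by 50 to 100 neurons, which is the sense in which additional samples fail to improve matters.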

Box 1. Noise.

One might wonder why the brain would allow for such inefficiency. There are two answers which stem from a deeper truth. First, it probably can’t be helped. To build responses that are similar enough to be worthy of averaging, it may be impossible to avoid sharing inputs, and this leads inevitably to weak noise correlation. Second, the real benefit of averaging is to achieve a fast representation of firing rate. A neuron that is receiving a signal should not have to wait for many spikes to arrive in order to sense the intensity of the signal it is receiving. It samples from many neurons. The density of spikes across the pool furnishes a near-instantaneous estimate of spike rate. So the deeper truth is that neurons in cortex do not compute with spikes but with spike rate. Moreover, it is this need for many neurons to represent spike rate in a fraction of the interval between the spikes of any one neuron that leads to this particular form of redundancy and the surfeit of excitation that this would bring to a target cell were it not balanced by inhibition. It is from this insight that the essential role of balanced E/I in cortical neural circuits arises. E/I balance in the high input regime is what makes neurons noisy in the first place (Shadlen and Newsome, 1994, 1998), and it requires fine tuning since it must be maintained over the range of cortical spike rates, throughout which the spike intervals scale but the time constants of neurons do not. Together, this argument explains why E/I balance is such a general principle and perhaps why it seems to be implicated in many disorders affecting higher brain function.

This simple insight goes a long way toward explaining why single neurons can rival a well-trained monkey and why the variation from just one neuron (out of the many that could have been sampled) would exhibit any covariation with the trial-to-trial variation in the monkey’s responses.

Signal Detection Theory

The quantitative study of perception, or psychophysics, has embraced decision theory since its inception with Fechner. The focus of psychophysics is to infer from choice-behavior (e.g., present/absent, more/less, left/right) properties of the sensory “evidence”. How does SNR scale with contrast or other physical properties of the stimulus? Which stimulus features interfere with each other? This inference relies on a decision rule that connects the representation of the evidence to the subject’s choice (Fig 1A). The success of psychophysics and the reason it remains such an influential platform for the study of DM is that this decision step was held to rigorous predictions. This is exemplified by the application of signal detection theory (SDT from here on) to perception (Green and Swets, 1966). We should remind ourselves of this standard as neuroscience moves past the representation of evidence to the study of the decision process itself.

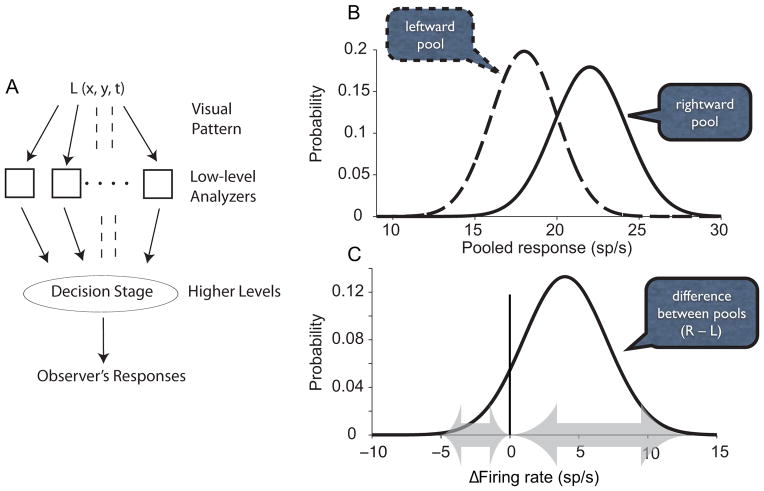

Figure 1. Psychophysics and signal detection theory.

A. Psychophysics deploys simple behavioral measures of detection, discrimination and identification to deduce the conversion of physical properties of stimuli to signals in low level processes. The approach identifies a decision stage that connects low level processors to a behavioral report. L(x,y,t) represents the pattern of luminance as a function of space and time. Adapted from Graham (1989).

B. Application of signal detection theory to a direction discrimination task. A weak rightward motion stimulus gives rise to samples of evidence from the rightward and leftward preferring neurons, respectively, conceived as random draws of average firing rate from the two pools. The decision rule is to choose the direction corresponding to the larger sample of the pair. Most sample pairs will exhibit the correct sign of the inequality (right sample > left sample), but the overlap of the distributions occasionally gives rise to the opposite, hence an error.

C. The depiction in B can be simplified by subtracting the left sample from the right to construct a single difference (Δ) in firing rate. The distribution has a mean equal to the difference of the two means in B and a variance equal to the sum of the two variances. The decision rule is to choose rightward if Δ>0. The error rate is the total probability of Δ<0, that is the area to the left of the criterion line at Δ=0.
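The arithmetic behind panels B and C can be sketched as follows. The sketch assumes the two samples are independent Gaussian draws, so that the difference has mean equal to the difference of the means and variance equal to the sum of the variances. The firing rates and variances are hypothetical values chosen for illustration, as are the function names.

```python
import math

def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def sdt_error_rate(mean_right, mean_left, var_right, var_left):
    """Probability that the difference (right - left) falls below zero.

    Assumes independent Gaussian samples and that rightward is correct
    (mean_right >= mean_left). The criterion sits at a difference of 0.
    """
    mean_delta = mean_right - mean_left
    sd_delta = math.sqrt(var_right + var_left)
    return normal_cdf(-mean_delta / sd_delta)

# Hypothetical firing rates (spikes/s) and variances for weak rightward motion.
print(sdt_error_rate(22.0, 20.0, 16.0, 16.0))
```

When the two means are equal the function returns 0.5, which corresponds to chance performance for a stimulus with no net motion.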

One of the great dividends of SDT was its displacement of so-called “high threshold theory”, which explained error rates as guesses arising from a failure of a noisy signal to surpass a threshold. SDT replaced the threshold with a flexible criterion and this gave a more parsimonious theory of error rates — one that is consilient with neuroscience. By inducing changes in the criterion or setting up the experiment to test in a “criterion free” way, it became clear that errors do not arise because a signal did not make it past some threshold of activation. The signal (and noise) is available; it is only a matter of adjusting the criterion.

There is a larger point to be made about SDT that distinguishes it from many other popular mathematical frameworks. It specifies how a single observation leads to a single response. Other popular frameworks (e.g., information theory, game theory and probabilistic classification) can explain ensemble behavior captured by psychometric functions (e.g., proportion correct over many trials), but they provide less satisfying accounts of the decision process on single trials (DeWeese and Meister, 1999; Laming, 1968). Often they presume that single trials are random realizations of the probabilities captured by the ensemble choice frequencies (see Value/Social-based decisions, below). This presumption is antithetical to SDT, which explains variability of choice using a deterministic decision rule applied to noisy evidence.

From evidence to decision variable

In SDT, there is a notion that the raw representation of evidence gives rise to a so-called decision variable (DV), upon which the brain applies a “decision rule” to say yes/no, more/less or category A/B. In classic SDT, the DV is a simple transformation of the sensory data that satisfies the weak constraint that it be monotonically related to likelihood (the probability of observing this value given a state of the world, such as rightward), and the decision rule is effectively a comparison to a criterion.

For the motion task, the original idea put forth by Newsome et al was that the decision is based on a comparison of the spike counts from a pair of neurons that are most sensitive to the two directions of motion. This is equivalent to saying that the DV is the difference in the spike counts and the criterion is at DV=0 (Fig 1C). There are several implicit assumptions. The monkey knows which neurons to monitor and counts all the spikes from these neurons while the stimulus (random dot motion, RDM) is shown. Moreover, the responses of a neuron to motion in its anti-preferred direction are a proxy for the responses of another “anti-neuron” to motion in its preferred direction. These assumptions were later amended to replace the neuron-antineuron pair with pools of noisy weakly correlated neurons and to restrict the epoch of spike counting to shorter epochs than the entire duration of the stimulus (Kiani et al., 2008; Mazurek et al., 2003). Nonetheless, the idea remained that the DV can be inferred from recordings of a single neuron whose direction preference (and receptive field) are suited to the discriminanda.

The main transformations from the evidence in MT to the DV are subtraction (i.e., comparison) and the accumulation of spikes in time, which we will refer to as integration. Both of these transformations are appealing in principle. Regarding the first, a difference between two positive random numbers yields a new random variable that is apt for quantifying degree of belief (Gold and Shadlen, 2002; Shadlen et al., 2006), as we will explain later. The appeal of integration is that it implies processing on a time scale that is liberated from the immediacy of the sensory events. It underlies the most general feature of cognition.

From SDT to sequential analysis

In SDT there is no natural explanation for the amount of time it takes to complete a decision. This is an extremely important property of decisions, especially as viewed as a window on cognition. After all, outside the lab, there is no such thing as a trial structure. Even the simplest of perceptual decisions presuppose decisions about context, which include whether, when and for how long to acquire evidence. There are two ways to answer the how long question: based on elapsed time itself, as in a deadline, and based on a level of evidence or certainty. These are not mutually exclusive.

Even for simple perceptual decisions, evidence may be acquired over time scales greater than the natural integration times of sensory receptors. For vision, this would encompass a decision that extends past ~60–100 ms (e.g., the limits of Bloch’s law; cite Watson). In that case, we must countenance an evolving DV that is updated in time. In many situations, accumulating samples of evidence (that is, some type of integration) may be sensible. We put it this way to emphasize that integration is not the only operation that can be used for decision-making. Different operations may be advantageous in different contexts (e.g., differentiation for a detection task, and belief propagation for inference about whether two parts of an object are connected).

Nonetheless a simple but powerful idea is that in many situations evidence is accumulated to some threshold level, whence the decision terminates in a choice, even if provisional (Resulaj et al., 2009). If the two directions are equally likely (i.e., neutral prior probability), then we represent the process as an accumulation of signal plus noise to decision bounds (Fig 2A). The upper and lower bounds support termination in favor of a right or left choice, respectively. In the brain, this process looks more like a race between two mechanisms, one that accumulates evidence for right (against left), and the other that does the opposite (Fig 2B). This detail matters for correspondence with the physiology (Fig 3).
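Accumulation of signal plus noise to a pair of bounds can be sketched with a short simulation. This is a minimal illustration under stated assumptions, not the model fitted in the experiments: the noise is normalized to unit variance per second, the bounds are flat and symmetric, and the names `simulate_trial`, `drift` and `bound` are hypothetical.

```python
import random

def simulate_trial(drift, bound, rng, dt=0.001, max_time=5.0):
    """Accumulate noisy evidence until it reaches +bound or -bound.

    drift: mean evidence per second (positive favors the upper bound).
    Noise has unit variance per second (an assumed normalization).
    Returns (choice, decision_time), where choice is +1 or -1.
    """
    x, t = 0.0, 0.0
    sd_step = dt ** 0.5
    while t < max_time:
        x += drift * dt + rng.gauss(0.0, sd_step)
        t += dt
        if x >= bound:
            return +1, t
        if x <= -bound:
            return -1, t
    # No bound reached in time: decide by the sign of the accumulation.
    return (+1 if x >= 0 else -1), t

rng = random.Random(0)
for drift in (0.0, 0.5, 1.0, 2.0):
    trials = [simulate_trial(drift, 1.0, rng) for _ in range(200)]
    p_upper = sum(c == +1 for c, _ in trials) / len(trials)
    mean_t = sum(t for _, t in trials) / len(trials)
    print(drift, round(p_upper, 2), round(mean_t, 2))
```

In this sketch a stronger drift produces both more frequent upper-bound choices and shorter decision times, which is the joint dependence of accuracy and speed on stimulus strength that the framework is meant to capture.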

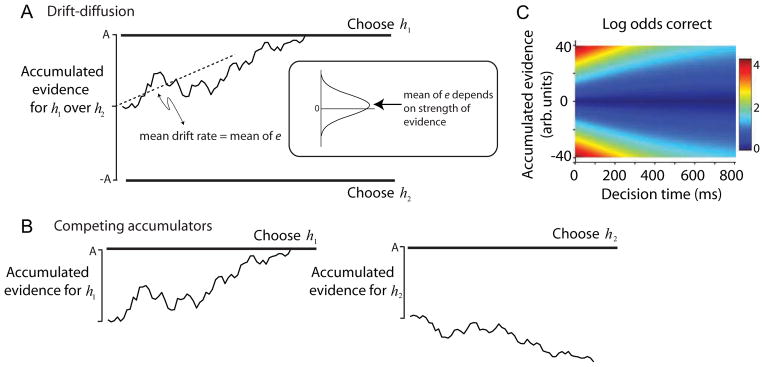

Figure 2. Bounded evidence accumulation explains decision accuracy, speed and confidence.

A. Drift-diffusion with symmetric bounds. Noisy momentary evidence for/against hypotheses h1 and h2 is accumulated (irregular trace) until it reaches an upper or lower termination bound, leading to a choice in favor of h1 or h2. In the motion task, h1 and h2 are opposite directions (e.g., right and left). The momentary evidence is the difference in firing rates between pools of direction-selective neurons that prefer the two directions. At each moment, this difference is a noisy draw from a Gaussian distribution (inset) with mean proportional to motion strength. The mean difference is the expected drift rate of the diffusion process. The process reconciles the speed and accuracy of choices with two parameters, bound height (±A) and mean of e. If the stimulus is extinguished before the accumulated evidence reaches a bound, then the decision is based on the sign of the accumulation.

B. Competing accumulators. The same mechanism is realized by two accumulators that race. If the evidence for h1 and h2 are opposite, then the race is mathematically identical to symmetric drift-diffusion. The race is a better approximation to the physiology, since there are neurons in LIP that represent accumulated evidence for each of the choices. This mechanism extends to account for choices and RT when there are more than two alternatives. If the stimulus is extinguished before one of the accumulations reaches a bound, then the decision is based on the accumulator with the larger value (as in Fig 1B).

C. Certainty. The heat map displays the correspondence between the state of the accumulated evidence in panel A and the log of the odds that the decision it precipitates will be the correct one. The mapping depends on the possible difficulties that might be encountered. This corresponds to the possible motion strengths in the direction discrimination experiments. The mapping does not depend on presence or shape of the bound. Notice that the same amount of accumulated evidence supports less certainty as time passes. Cooler colors indicate low certainty (e.g., log odds equal to 0 implies that a correct choice and an error are equally likely). In the post decision wagering experiment, the monkey opts out of the discrimination and chooses the sure-but-small reward when the accumulated evidence is in the low certainty (cooler) region of the map.

Adapted from Gold & Shadlen, 2007 and Kiani & Shadlen, 2009.

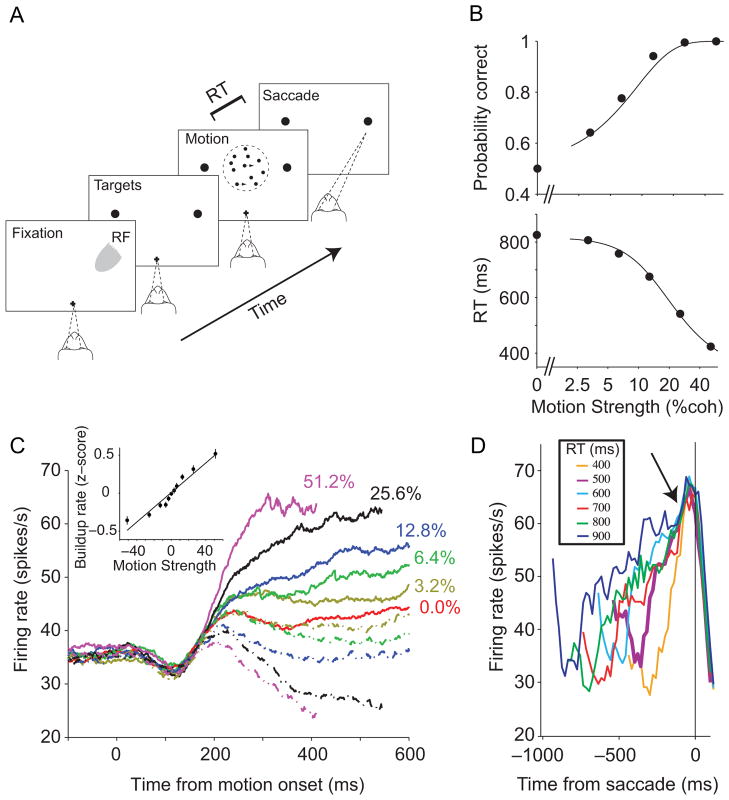

Figure 3. Neural and behavioral support for bounded evidence accumulation.

A. Choice-reaction time (RT) version of the direction discrimination task. The subject views a patch of dynamic random dots and decides the net direction of motion. The decision is indicated by an eye movement to a peripheral target. The subject controls the viewing duration by terminating each trial with an eye movement whenever ready. The gray patch shows the location of the response field of an LIP neuron.

B. Effect of stimulus difficulty on choice accuracy and decision time. The solid curve in the lower panel is a fit of the bounded accumulation model to the reaction time data. The model can be used to predict the monkey’s accuracy (upper panel). The solid curve is the predicted accuracy based on bound and sensitivity parameters derived from the fit to the RT data.

C. Response of LIP neurons during decision formation. Average firing rate from 54 LIP neurons is shown for six levels of difficulty. Responses are grouped by motion strength (color) and direction (solid/dashed toward/away from the RF). Firing rates are aligned to onset of random-dot motion and truncated at the median RT. Inset shows the rate of rise of neural responses as a function of motion strength. These buildup rates are calculated based on spiking activity of individual trials 200–400 ms after motion onset. Data points are the averaged normalized buildup rates across cells. Positive/negative values indicate increasing/decreasing firing rate functions.

D. Responses grouped by reaction time and aligned to eye movement. Only Tin choices are shown. Arrow shows the stereotyped firing rate ~70 ms before saccade initiation.

Adapted from Shadlen et al. (2006) and Roitman & Shadlen (2002).

The beauty of this idea is that a single mechanism can thus account for both which decision is made and how much time (or how many samples) it takes to commit to an answer — in other words the balance between accuracy and speed. As shown in Figure 3B the framework is so powerful that one can fit the reaction time data to establish the model parameters — an estimate of the bound height and a coefficient that converts motion strength to units of SNR — and then predict the accuracy at each of the motion strengths (solid curve). This is a rare feat in psychophysics: to make a set of measurements and to use it to predict another. It convinced us that there is merit to the idea (Box 2).
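For the simplest case of flat, symmetric bounds and unit-variance noise, bounded accumulation has well-known closed-form expressions for accuracy and mean decision time, and these show why parameters obtained from RT also constrain accuracy. The sketch below assumes that flat-bound case. The non-decision time `t_nd` and all parameter values are hypothetical stand-ins for a fit, not values reported in the experiments.

```python
import math

def predicted_accuracy(coh, k, bound):
    """Probability of a correct choice for flat symmetric bounds at +/-bound.

    coh: motion strength (0 to 1); k converts it to drift in units of SNR.
    """
    return 1.0 / (1.0 + math.exp(-2.0 * k * coh * bound))

def predicted_mean_rt(coh, k, bound, t_nd):
    """Mean reaction time: mean decision time plus non-decision time t_nd."""
    mu = k * coh
    if abs(mu) < 1e-12:
        # Limit of (bound / mu) * tanh(mu * bound) as mu approaches 0.
        return bound ** 2 + t_nd
    return (bound / mu) * math.tanh(mu * bound) + t_nd

# Hypothetical parameters, standing in for values fitted to RT data.
k, bound, t_nd = 15.0, 0.8, 0.35
for coh in (0.0, 0.032, 0.064, 0.128, 0.256, 0.512):
    print(coh,
          round(predicted_mean_rt(coh, k, bound, t_nd), 3),
          round(predicted_accuracy(coh, k, bound), 3))
```

Because `k` and `bound` appear in both functions, estimating them from the RT curve alone leaves no free parameters for the accuracy curve, which is what makes the prediction a genuine test.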

Box 2. The death and resurrection of a theory.

Here is a cautionary tale that ought to interest theorists, experimentalists, philosophers and historians of science. The concept of bounded evidence integration originated in the field of quality control, which draws on statistical inference from sequential samples of data. Abraham Wald began this secretly as a way to decide whether batches of munitions were of sufficient quality to ship. He developed the sequential probability ratio test as the optimal procedure to test a hypothesis against its alternative, using the minimal number of samples (effectively a speed vs. accuracy tradeoff) (Wald, 1947; Wald and Wolfowitz, 1947). The test involves accumulation of evidence in the form of a log likelihood ratio (logLR), or a proportional quantity, to a pair of terminating bounds, which trigger acceptance of the respective hypotheses. Alan Turing developed the same algorithm as a part of the code-breaking work in WWII (Gold and Shadlen, 2002; Good, 1979). A decade later, several psychologists recognized the implications for choice and RT (e.g., Laming, 1968; Stone, 1960). However, the field realized that this model predicts that for a fixed stimulus strength (e.g., 12% coherent motion), the mean RT for correct and erroneous choices should be identical. In fact the distributions should be scaled replicas of one another. This prediction was clearly incorrect. In experiments like the ones we have described, errors are typically slower. This discrepancy led the field to abandon the model. A few stubborn individuals stuck with the bounded accumulation framework (e.g., Stephen Link and Roger Ratcliff), but there was little enthusiasm from the community of psychophysics and almost no penetration into neuroscience.

It turns out that this was a misguided prediction. There is no reason to assume the bounds are flat. If the conversion of evidence to logLR is known or if the source of evidence is statistically stationary, then flat bounds are optimal in the sense mentioned above. But if the reliability is not known (e.g., the motion strength varies from trial to trial) or there is an effort cost of deliberation time (within trial), then the bounds should decline as a function of elapsed decision time (Busemeyer and Rapoport, 1988; Drugowitsch et al., 2012; Rapoport and Burkheimer, 1971). Uncertainty about reliability implies a mixture of difficulties across decisions (i.e., experimental trials). Intuitively, if after many samples the accumulated evidence is still meandering near the neutral point, then it is likely that the source of evidence was unreliable and the probability of making a correct decision is lower. This leads to a normative solution to sequential sampling in which bounds collapse over time. This results in slow errors simply because errors are more frequent when the bounds are lower. There are other solutions to this dilemma (Link and Heath, 1975; Ratcliff and Rouder, 1998), but we favor the collapsing bounds because they are more consistent with physiology.

This is a cautionary tale about the application of normative theory. In this case there was a mistaken assumption that a normative model would apply more widely than the conditions of its derivation. There is also the question of what is optimized. It is also a cautionary tale about the role of experimental refutation. Sometimes it is worthwhile to persist with a powerful idea even when the experimental facts seem to offer a clear refutation. If only we knew when to do this!
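As a concrete illustration of the sequential probability ratio test described in Box 2, the sketch below accumulates a log likelihood ratio over binary samples until it crosses one of two flat bounds. It assumes Bernoulli observations and uses Wald's standard approximations for the bounds in terms of target error rates; the function name and the example probabilities are hypothetical.

```python
import math
import random

def sprt(samples, p0, p1, alpha=0.05, beta=0.05):
    """Sequential probability ratio test for Bernoulli samples.

    Accumulates log[P(x | h1) / P(x | h0)] until it crosses a bound.
    Bounds use Wald's approximations for target error rates alpha and beta.
    Returns (decision, n_samples); decision is 'h1', 'h0', or None.
    """
    upper = math.log((1.0 - beta) / alpha)
    lower = math.log(beta / (1.0 - alpha))
    log_lr = 0.0
    n = 0
    for n, x in enumerate(samples, start=1):
        if x:
            log_lr += math.log(p1 / p0)
        else:
            log_lr += math.log((1.0 - p1) / (1.0 - p0))
        if log_lr >= upper:
            return "h1", n
        if log_lr <= lower:
            return "h0", n
    return None, n  # samples ran out before either bound was reached

# Hypothetical source that emits a 1 with probability 0.6 (so h1 is true).
rng = random.Random(0)
stream = (rng.random() < 0.6 for _ in range(10000))
print(sprt(stream, p0=0.4, p1=0.6))
```

The number of samples consumed varies from run to run, which is the sense in which the procedure trades speed against accuracy rather than fixing the sample size in advance.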

There is another virtue of evidence accumulation that is not yet widely appreciated. It establishes a mapping between a DV and the probability that a decision made on the basis of this DV will be the correct one. The brain has implicit knowledge of this mapping and can use it to give rise to a sense of certainty or confidence about success, reward and punishment. Confidence is crucial for guiding behavior in a complex environment. For example, if the consequences of our decisions are not immediate, our subsequent decisions can be guided solely by our certainty.

Until recently confidence has been largely ignored in neuroscience, in large part because it seemed impossible to measure behaviorally in non-verbal animals. However, introduction of post-decision wagering has begun to change this (Hampton, 2001; Kepecs et al., 2008; Kiani and Shadlen, 2009; Kornell et al., 2007; Middlebrooks and Sommer, 2012; Shields et al., 1997). The strategy is to allow an animal to opt out of a decision for a secure but small reward, a “sure bet”. The testable assertion is that the animal uses this option to indicate lack of confidence on the main decision. The assertion can be tested by comparing choice accuracy under two conditions: trials in which the animal is not given the “sure bet” option and trials in which the option is available but waived. In both cases the animal renders a decision. If the monkey takes the sure bet more frequently when the evidence is less reliable, then it ought to improve its accuracy on the remaining trials. This prediction was confirmed, implying that the animal’s behavior is informed by a veridical sense of certainty (Hampton, 2001; Kiani and Shadlen, 2009).

The mapping between the DV and the probability of being correct explains certainty and provides a unified theory of choice, RT and confidence. The mapping for the RDM experiment is shown by the heat map in Figure 2C. This mapping is more sophisticated than a monotonic function of the amount of evidence accumulated for the winning option. We think it also involves two other quantities: the evidence that has been accumulated for the losing alternatives and the amount of time that has elapsed, or really the number of samples of evidence. The first of these was proposed by Vickers to explain the observation that stimulus difficulty affects confidence even in RT experiments (Vickers, 1979). If there were just one DV, and if it were stereotyped at the end of the decision, there would be no explanation for different levels of confidence. The second, elapsed time, shapes the monotonic relationship between the DV and confidence so that the same DV can map to different degrees of confidence (note the curved iso-certainty contours in Fig 2C). The intuition is simple. The reliability of the evidence is often unknown to the decision maker at the beginning of deliberation (i.e., the first sample of evidence). If time goes by and the DV has not meandered too far from its origin, then it is likely that the evidence came from a less reliable source (e.g., a difficult motion strength). This insight suggests that brain structures such as orbitofrontal cortex, which represent quantities dependent on certainty (e.g., expected reward), must have access to the relevant variables: elapsed decision time, the DV, and any variables that would corrupt the correspondence between the DV and accumulated evidence (e.g., the urgency signal described below).
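The dependence of certainty on both the DV and elapsed time can be sketched by computing the log odds of a correct choice under a mixture of difficulties. The sketch assumes unit-variance diffusion with drift proportional to motion strength, equally likely strengths and directions, and a choice that follows the sign of the DV. The scaling `k` and the list of strengths are illustrative assumptions, and the function name is hypothetical.

```python
import math

def log_odds_correct(x, t, strengths, k=10.0):
    """Log odds that the sign of x matches the true direction.

    x: accumulated evidence (unit-variance diffusion, drift = +/- k * c).
    t: elapsed time in seconds (t > 0).
    strengths: nonzero stimulus strengths c, assumed equally likely,
               with both directions equally likely.
    """
    a = abs(x)
    # Likelihood of the state (a, t) under each drift sign; factors common
    # to both signs cancel in the ratio and are omitted.
    agree = sum(math.exp(k * c * a - 0.5 * (k * c) ** 2 * t) for c in strengths)
    oppose = sum(math.exp(-k * c * a - 0.5 * (k * c) ** 2 * t) for c in strengths)
    return math.log(agree / oppose)

strengths = [0.032, 0.064, 0.128, 0.256, 0.512]  # assumed mixture
for t in (0.1, 0.5, 1.0):
    print(t, round(log_odds_correct(0.5, t, strengths), 3))
```

With a single strength the log odds reduce to 2·k·c·|x| and do not depend on time. The time dependence arises only from the mixture: as time passes, a DV that has stayed modest becomes more likely to have come from a weak stimulus.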

A neural correlate of a decision variable

The question is where to look in the brain for a neural correlate of a decision variable. The main criterion must be the existence of temporally prolonged responses that are neither purely motor nor purely sensory but which reveal aspects of both. Other criteria are access to the motion evidence, and access to the oculomotor system since the animal reports direction with a saccade to a target, but the responses should outlast the immediate responses of visual cortical neurons and they cannot precipitate an eye movement. The lateral intraparietal area (LIP) seemed an obvious candidate. LIP was defined as the part of Brodmann area 7 that projects to oculomotor areas FEF and superior colliculus (SC) (Andersen et al., 1990). It receives input from the appropriate visual areas and the pulvinar, and its neurons are known to respond persistently through intervals of up to seconds when an animal is instructed to make, but required to withhold, a saccade to a target (Barash et al., 1991; Gnadt and Andersen, 1988). It seems obvious that one could construct a task like a delayed eye movement and substitute a decision about motion for the instruction. Under this condition, LIP neurons ought to, at the very least, signal the monkey’s answer in the delay period after the decision is made. In other words, the neurons should signal the planned saccade to (or away from) the choice target in its receptive field (RF). That was immediately confirmed; no surprise, as it was almost guaranteed by targeting LIP (Glimcher, 2001).

Far more interesting, however, were the dynamic changes in neural firing during the period of random dot viewing. The evolution of this activity occurs in just the right time frame for decision formation (Fig 3). Indeed, the average firing rate in LIP approximates the integration (i.e., accumulation) of the difference between the averaged firing rates of pools of neurons in MT whose RFs overlap the random dot motion stimulus. It is known that the firing rate of MT neurons is approximated by a constant plus a value that is proportional to motion strength in the preferred direction (Britten et al., 1993). For motion in the opposite direction, the response is approximated by a constant minus a value proportional to motion strength. The difference is simply proportional to motion strength. Interestingly, in LIP, the initial rate of rise in the average firing rate is proportional to motion strength (Fig 3C, inset), suggesting that the linking computation is integration with respect to time (Roitman and Shadlen, 2002; Shadlen and Newsome, 1996).
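The chain of reasoning from MT rates to a ramp in LIP can be made explicit with a noise-free sketch. It assumes the linear description of MT responses given above and treats the LIP response as a plain running integral of the difference between the two pools. The baseline, gain and scale values are hypothetical, as are the function names.

```python
def mt_rates(coh, baseline=20.0, gain=30.0):
    """Mean rates (spikes/s) of pools preferring and opposing the motion.

    Assumes rate = baseline +/- gain * coh, with coh between 0 and 0.6 here
    so that both rates stay positive for these hypothetical values.
    """
    return baseline + gain * coh, baseline - gain * coh

def lip_ramp(coh, duration=0.4, dt=0.01, scale=1.0):
    """Running integral of the MT difference, returned as a list of values."""
    pref, null = mt_rates(coh)
    ramp, total = [], 0.0
    for _ in range(int(round(duration / dt))):
        total += scale * (pref - null) * dt  # the baseline cancels here
        ramp.append(total)
    return ramp

for coh in (0.0, 0.064, 0.256, 0.512):
    ramp = lip_ramp(coh)
    slope = ramp[-1] / 0.4  # average rate of rise over the 0.4 s window
    print(coh, round(slope, 2))
```

Because the baseline cancels in the difference, the rate of rise in this sketch equals 2 × gain × coh, so doubling the motion strength doubles the slope.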

This integration step is supported directly by inserting brief motion “pulses” in the display and demonstrating their lasting effect on the LIP response, choice and RT (Huk and Shadlen, 2005). Moreover, the signal that is integrated is noisy, giving rise to a neural correlate of both drift and diffusion. The former is evident in the mean firing rates of LIP, whereas the latter is adduced from the evolving pattern of variance and autocorrelation of the LIP response across trials (Churchland et al., 2011). Together these observations make a strong case for the representation of the integral of the sensory signal plus noise, beginning ~200 ms after onset of motion. This is a long time compared to visual responses of neurons in MT and LIP, but this is not a visual response. Remember, the RDM is not in the receptive field of the LIP neuron. The brain must establish a flow of information such that motion in one part of the visual field bears on the salience of a choice target in another location. Below, we refer to this operation as “circuit configuration”. It is one of the mysteries we hope to understand in the next decade. It is unlikely to be achieved by direct connections from MT to LIP. It requires too much flexibility. Indeed, a cue at the beginning of a trial can change the configuration of what evidence supports what possible action. This is why we believe that even this simple task involves a level of function that is more similar to the flexible operations underlying cognition than it is to the specialized processes that support sensory processing.

Recall that the behavioral data (choice and RT) support the idea that each decision terminates when the DV reaches a threshold or bound. A neural correlate of this event can be seen in the traces in Figure 3D, which shows the responses leading up to a decision in favor of the target in the RF (Tin). The responses achieve a stereotyped level of firing rate 70–100 ms before the eye movement. So the bound or threshold inferred from the behavior has its neural correlate in a level of firing rate in LIP. This holds for the Tin choices, but not when the monkey makes the other choice. The idea is that this is when another population of LIP neurons (the ones with the other choice target in their RF) reaches a threshold.

One implication is that the bounded evidence accumulation is better displayed as a race between two DVs, one supporting right and the other supporting left, as mentioned earlier (Fig 2B). This is convenient because it allows the mechanism to extend to decisions among more than two options (Bollimunta et al., 2012; Churchland et al., 2008; Ditterich, 2010; Usher and McClelland, 2001). It is just a matter of expanding the number of races. With a large number of accumulators the system can even approximate direction estimation (Beck et al., 2008; Furman and Wang, 2008; Jazayeri and Movshon, 2006). A race architecture also introduces some flexibility into the way the bound height is implemented in the brain. In behavior, when a subject works in a slow but more accurate regime, we infer that the bound is further away from the starting point. Envisioned as a race, the change in excursion can be achieved by a higher bound or by a lower starting point. It appears that the latter is more consistent with physiology (Churchland et al., 2008).

A race architecture also provides a simple way to incorporate the cost of decision time (Drugowitsch et al., 2012) or deadline (Heitz and Schall, 2012). One might imagine decision bounds that squeeze inward as a function of time, thereby lowering the criterion for termination. However, the brain achieves this by adding a time-dependent (evidence-independent) signal to the accumulated evidence, which we refer to as an “urgency” signal (Churchland et al., 2008; Cisek et al., 2009). The urgency signal adds to the accumulated evidence in all races, bringing DVs closer to the bound rather than bringing the bounds closer to the DVs. The bound itself is a fixed firing rate threshold (as in Fig 3D, see also Hanes and Schall, 1996). This suggests that the termination mechanism could be achieved with a simple threshold crossing, unencumbered by details such as the cost of time, the tradeoff between speed and accuracy, and other policies that affect the decision criteria. By implementing these policies in areas like LIP, the brain can use the same mechanism to sense a threshold crossing as it exercises different decision criteria for different processes. For example, it may take less accumulated evidence to decide to look at something than to grasp or eat it.
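The relationship between an additive urgency signal and a collapsing bound can be sketched with two versions of the same race. The sketch assumes an urgency signal that grows linearly with time, which is an illustrative choice rather than a form stated in the text, and all function names are hypothetical.

```python
def race_with_urgency(evidence, bound, urgency_rate, dt):
    """Race between accumulators that share an additive urgency signal.

    evidence: list of time steps; each step holds one momentary evidence
              sample per accumulator.
    The urgency grows linearly with time (an assumed form) and is added to
    every accumulator; the bound itself stays fixed.
    Returns (winner_index, step), or (None, None) if no bound is reached.
    """
    totals = [0.0] * len(evidence[0])
    for step, samples in enumerate(evidence, start=1):
        urgency = urgency_rate * step * dt
        totals = [v + s for v, s in zip(totals, samples)]
        dvs = [v + urgency for v in totals]
        best = max(range(len(dvs)), key=dvs.__getitem__)
        if dvs[best] >= bound:
            return best, step
    return None, None

def race_with_collapsing_bound(evidence, bound, collapse_rate, dt):
    """Same race, but the bound falls over time and no urgency is added."""
    totals = [0.0] * len(evidence[0])
    for step, samples in enumerate(evidence, start=1):
        current_bound = bound - collapse_rate * step * dt
        totals = [v + s for v, s in zip(totals, samples)]
        best = max(range(len(totals)), key=totals.__getitem__)
        if totals[best] >= current_bound:
            return best, step
    return None, None

# Three accumulators receiving constant, hypothetical evidence per step.
evidence = [[0.5, 0.25, 0.125]] * 8
print(race_with_urgency(evidence, 2.0, 1.0, 0.25))
print(race_with_collapsing_bound(evidence, 2.0, 1.0, 0.25))
```

The two functions terminate at the same step with the same winner, since adding u(t) to every DV and comparing with a fixed bound is the same test as comparing the raw accumulation with a bound lowered by u(t). The urgency version keeps the termination rule itself a simple fixed threshold.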

We are suggesting that different brain modules, supporting different provisional intentions, can operate on the same information in parallel and apply different criteria. This insight effectively reconciles SDT with high threshold theory: the bound is the high threshold, but it (or starting point) is also an adjustable criterion, which may be deployed differently depending on policies and desiderata. As explained later, this parallel intentional architecture lends insight into seemingly mysterious distinctions, such as preparation without awareness of volition (Haggard, 2008), subliminal cuing and non-conscious cognitive processing (Dehaene et al., 2006; Del Cul et al., 2009). Put simply, too little evidence to pierce consciousness might be enough information to prepare another behavior. The key is to recognize consciousness as just another kind of decision to engage in a certain way (Shadlen and Kiani, 2011).
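The idea that parallel modules apply different criteria to the same information can be sketched with a single accumulating DV and two thresholds. Everything here is hypothetical: the labels "look" and "grasp", the criterion values and the assumption that accumulation continues after the first crossing are chosen only for illustration.

```python
import random

def first_crossings(criteria, drift=0.5, noise_sd=1.0, dt=0.01,
                    max_steps=100_000, seed=0):
    """Return {name: first time the shared DV reaches that criterion}.

    A single stream of noisy evidence is accumulated once; each read-out
    module applies its own criterion to the same running total.
    """
    rng = random.Random(seed)
    dv = 0.0
    step_sd = noise_sd * dt ** 0.5
    times = {}
    for step in range(1, max_steps + 1):
        dv += drift * dt + rng.gauss(0.0, step_sd)
        for name, criterion in criteria.items():
            if name not in times and dv >= criterion:
                times[name] = step * dt
        if len(times) == len(criteria):
            break
    return times

# A lower criterion for looking than for grasping (values are arbitrary).
times = first_crossings({"look": 0.5, "grasp": 1.5}, seed=2)
```

Because both criteria are applied to the same trajectory, the lower criterion can never be reached later than the higher one. On trials stopped early, the evidence may therefore suffice for one behavior and not the other.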

To be clear, we suspect that LIP is one of many areas that represent a DV, and it does so only because the decision before the monkey is not “Which direction?” but “Which eye movement target?” Other areas are involved if the decision is about reaching to a target (e.g., Pesaran et al., 2008; Scherberger and Andersen, 2007) and still others, presumably, if the decision is about whether an item is a match or nonmatch. This, however, will remain a matter of speculation until a neural correlate of a DV is demonstrated in these situations.

We place emphasis on the DV because it is a level of representation that can be dissociated from sensory processing of evidence and motor planning. The DV is not the decision. It is built from the evidence, incorporates other signals related to value, time and prior probability, and it must be “read out” by neurons that sense thresholds, calculate certainty and trigger the next step, be it a movement or another decision. The argument is not that all decisions require long integration times but that those that do permit insights that are otherwise difficult to discern. To support a neural correlate of a DV, we must at least try to (i) distinguish the response from a sensory response, (ii) distinguish it from a motor plan reflecting only the outcome of the decision, and (iii) demonstrate a correspondence with the decision process. To achieve these we need more than tests of whether mean responses are different under choice A vs. B. We would like to reconcile quantitatively the neural response with the DV inferred from a rich analysis of behavior: error rates, reaction time means and distributions, confidence ratings. We say try because there are reasons we do not expect a slam dunk on any of these criteria. For example the motor system might reflect the DV (Gold and Shadlen, 2000; Selen et al., 2012; Song and Nakayama, 2008, 2009; Spivey et al., 2005), and noisy sensory responses often bear a weak relationship to choice (Britten et al., 1996; Nienborg and Cumming, 2009; Parker and Newsome, 1998). Nonetheless, for the case of motion bearing on a choice target in the response fields of LIP neurons, the correspondence to a DV seems reasonably compelling.

Principles, extensions and unknowns

Box 3 summarizes some of the principles that have arisen from a narrow line of investigation. We would like to think that such principles will apply more generally to many types of decisions and to other cognitive functions that bear no obvious connection to decision making. Of course, many principles are yet to be discovered, and even those that seem solid are not understood at the refined circuit-level that will be required to reap the benefits of this knowledge in medicine. From here on, we will branch outward, beginning with other types of perceptual decisions, then decisions that are not about perception, and on to aspects of cognition that do not at first glance appear to have anything to do with decision-making but which may benefit from this perspective.

Box 3. Emerging principles.

The neurobiology of decision making has exposed features of computation and neural processing that may be viewed as principles of cognitive neuroscience.

Flexibility in time. The process is not tied reflexively to immediate changes in the environment or to the real time demands of motor control.

Integration. The process involves an accumulation of evidence in time or from multiple modalities or across space or possibly across propositions (as in a directed graph).

Probabilistic representation. Neural firing rates are associated with a degree of belief or degree of commitment to a proposition. This is facilitated by converting a sample of evidence, e, to a difference between firing rates of neurons that assign positive and negative weight to e, with respect to a proposition (Gold and Shadlen, 2001; Shadlen et al., 2006).

Direct representation of a decision variable. Neurons represent in their firing rates a combination of quantities in a low dimensional variable that supports a decision. The DV greatly simplifies the process leading to commitment and the certainty or confidence that the decision will be correct (or successful).

Continuous flow. Partial information, or an evolving decision variable, can affect downstream effector structures despite the fact that these effectors are only brought into play after the decision is made.

Termination. The decision process incorporates a stopping rule based on the state of evidence and/or time. This is an operation like threshold applied to the DV.

Intentional framework. The word intentional is meant to contrast with “representational”. The suggestion is that information flow is not toward progressively abstract concepts but is instead in the service of propositions, which in their simplest rendering resemble affordances or provisional intentions. Although no action need occur, many decisions are likely to obey an organization from the playbook of a flexible sensory to motor transformation. In one sense, this justifies the existence of a DV in brain areas associated with directing the gaze or a reach.

Other perceptual decisions

Visual neuroscience was poised to contribute to the neurobiology of decision making because of a confluence of progress in psychophysics (Graham, 1989), quantitative reconciliation of signal and noise in the retina (Barlow et al., 1971; Parker and Newsome, 1998), the successful application of similar quantitative approaches to understanding processing in primary visual cortex (e.g., Tolhurst et al., 1983) and emerging detailed knowledge of central visual processes beyond the striate cortex (Maunsell and Newsome, 1987). The move to more central representations of signal plus noise led to the measurements from Newsome et al. in the awake monkey. We also believe that the discovery of persistent neural activity in prefrontal and parietal association cortex (Funahashi et al., 1991; Fuster, 1973; Fuster and Alexander, 1971; Gnadt and Andersen, 1988) was key. An obvious but fruitful step will be the advancement of knowledge about other perceptual decisions, involving other modalities.

Touch

Vernon Mountcastle spearheaded a quantitative program linking the properties of neurons in the somatosensory system to the psychophysics of vibrotactile sensation. The theory and the physiology were a decade ahead of vision (Johnson, 1980a, b; Mountcastle et al., 1969a; Mountcastle et al., 1969b), but the link to decision making did not occur until recently. The main difficulty was the reliance on a two-interval comparison of vibration frequency which required a representation of the first stimulus in working memory. This was absent in S1. Recently, Ranulfo Romo and colleagues advanced this paradigm by recording from association areas of the prefrontal cortex. There is now compelling evidence for a representation of the first frequency in the inter-stimulus interval as well as the outcome of the decision (Romo and Salinas, 2003), but the DV has proven more challenging. There are hints of an evolving DV in ventral premotor cortex (Romo et al., 2004), but the period in which the decision evolves (during the 2nd stimulus) is brief and thus hard to differentiate from a sensory representation and decision outcome. Nonetheless, this paradigm has taught us more about the prefrontal cortex involvement in decision making than vision, which has focused mainly on posterior parietal cortex. Somatosensory discrimination also holds immense promise for the study of decision making in rodents. Texture discrimination via the whiskers has particular appeal because it involves an active sensing component (i.e., whisking) and integration across whiskers, hence cortical barrels and time (e.g., Diamond et al., 2008).

Smell and taste

This perceptual system and the experimental methods are far better developed in rodents than in primates. The chief advantage of the system is its molecular characterization, which replaced the arcane ideas of coding before Axel and Buck’s discovery of the odor receptors (ORs) (Buck and Axel, 1991) and the organization they imposed on a chemical map in the olfactory bulb (Rubin and Katz, 1999), but the system is not without its challenges. Odors are difficult to control spatially and temporally, and despite the elegant organization of the sensory system through the olfactory bulb, we do not know the natural ligands for most ORs. Perhaps the biggest drawback of the system is that it has proven difficult to establish an integration window that is longer than a sniff (Uchida et al., 2006). These challenges notwithstanding, we believe olfactory decisions will allow the field to exploit the power of molecular biology to delve deeper into refined mechanisms underlying the principles in Box 3. Similar considerations apply to gustatory decisions (Chandrashekar et al., 2006; Chen et al., 2011; Miller and Katz, 2010). Animals naturally forage for food. Presumably they can be coerced to deliberate. Indeed the learning literature is full of experiments that can be viewed from the perspective of perceptual decision making (e.g., Bunsey and Eichenbaum, 1996; Pfeiffer and Foster, 2013). It might be argued that learning is the establishment of the conditions under which a circuit will be activated. We speculate below that this might be regarded as a change in circuit configuration that is itself the outcome of a decision process.

Hearing

Signal detection theory made its entry into psychophysics via the auditory system, but the neurophysiology of cortex was decades behind somatosensory and visual systems neuroscience. There has been tremendous progress in this field over the past 10–20 years (e.g., Beitel et al., 2003; Recanzone, 2000; Zhou and Wang, 2010), but there may be a fundamental problem that will be difficult to overcome. It seems that there is a paucity of association cortex devoted to audition in old world monkeys (Poremba et al., 2003). Just where the intraparietal sulcus ought to pick up auditory association areas, it vanishes to lissencephaly. One wonders if the auditory association cortex is a late bloomer in old world monkeys. Perhaps this is why language capacities developed only recently in hominid evolution.

Interval timing

We do not sense time through a sensory epithelium, but timing is key to many aspects of behavior, especially foraging and learning. Interval timing exhibits regularities that mimic those of traditional sensory systems. The best known is a potent version of Weber’s law (the JND is proportional to the baseline for comparison) known as scalar timing (Gallistel and Gibbon, 2000; Gibbon, 1997). In our experience, animals learn temporal contingencies far more quickly than they learn the kinds of visual tasks we employ in our studies. Among the first things an animal knows about its environment are the temporal expectations associated with a strategy. Of all the “senses” mentioned, interval timing may be the easiest to train an animal on. There are challenges, to be sure, since time is not represented the way vision or olfaction are. But it is represented in the form of an anticipation (or hazard) function by the same types of neurons that represent a DV (Janssen and Shadlen, 2005), and we suspect timing is a ubiquitous feature of the association cortex. It is the price it pays for freedom from the immediacy of sensation and action. Deciding when is as important as deciding whether. Interestingly, it has been proposed that deciding when can be explained by a bounded accumulation mechanism like the one in Figure 2A (Simen et al., 2011).

Beyond perceptual decisions

Obviously not all decisions revolve around perception. This section serves a dual purpose: (i) to extend and amend principles that arise in other types of decisions that have been studied in neurophysiology and (ii) to examine a few cognitive processes from the perspective of decision making.

Value-based and social decisions

An open question is whether the neural mechanisms underlying perceptual decisions are similar to those involving decisions about value and social interactions (Rorie and Newsome, 2005). Value-based decisions involve choices among goods, money, food and punishments. Social decisions involve mating, fighting, sharing and establishing dominance. Both incorporate evidence (e.g., what is the valence of the juice or what is my rival about to do), but the process underlying these assessments is not the focus, because this is typically the easy part of the problem, analogous to an easy perceptual decision (Deaner et al., 2005; Platt and Glimcher, 1999; Rorie et al., 2010). As we pointed out earlier in the essay, value has been integrated into signal detection theory and all mathematical formalisms of decision theory in economics. We would like to focus on one issue that might distinguish value-based and social decision-making from perceptual decision making. It concerns an almost philosophical issue about randomness in behavior.

It is common to model many social decisions as competitive games. This has led to the concept of a premium on being unpredictable. If this is correct, then social decisions differ fundamentally from perceptual decisions, because the former embraces a decision rule that is effectively a consultation with a random number generator. Consider a binary choice and imagine that the brain has accumulated evidence that renders one choice better than the other with probability 0.7. According to some game-theoretic approaches, the agent should choose that option probabilistically as if flipping a weighted coin that will come up heads with probability 0.7 (Barraclough et al., 2004; Glimcher, 2005; Karlsson et al., 2012; Lau and Glimcher, 2005; Sugrue et al., 2005) (but see Krajbich et al., 2012; Webb, 2013).

This way of thinking is antithetical to the way we think about the variation in choice in perceptual decisions. Such variation arises because the evidence is noisy. In the discussion of certainty (above), we pointed out that a DV is associated with a probability or degree of belief, but the decision rule is itself deterministic. For example, suppose that in the RDM task, on some trial, the DV is positive (meaning favoring rightward) and happens to correspond to P=0.7 that the rightward choice is correct. The decision is not rendered via consultation with a random number generator to match the probability 0.7. The stochastic variation (across repetitions) arises by selection of the best option in each instance. The variation is explained by signal-to-noise considerations on an otherwise deterministic mechanism. Put another way, suppose that a monkey achieves 70% correct rightward choices on 100 trials of a weak rightward RDM display. The job of the neuroscientist is to explain why the DV is on the wrong side of the choice criterion on 30% of trials. This requires reconciliation of evidence strength, noise and biases owing to asymmetric values placed on the options. The assumption that the decision process is itself random (that is, beyond the inescapable noise) could lead to incorrect conclusions about value and cost. For example, it would nullify a dividend for exploration, which comes for free by flipping a weighted coin (or applying the popular “softmax” operation) (Daw et al., 2006).
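The distinction between the two sources of variability can be made concrete with a small simulation. The sketch below is illustrative only: the unit-variance Gaussian DV, the function names and the number of trials are assumptions and are not quantities from the studies cited.

```python
import random
from statistics import NormalDist

def fraction_rightward(mean_dv, n_trials=100_000, seed=0):
    """Fraction of trials on which a deterministic rule chooses rightward.

    On each trial the DV is a noisy sample (Gaussian, s.d. 1) and the rule
    is fixed: choose rightward whenever the DV is positive. All of the
    trial-to-trial variation in choice comes from noise in the evidence.
    """
    rng = random.Random(seed)
    right = sum(1 for _ in range(n_trials) if rng.gauss(mean_dv, 1.0) > 0.0)
    return right / n_trials

# Mean DV (in units of its s.d.) that lands on the rightward side of the
# criterion on 70% of trials.
mean_dv = NormalDist().inv_cdf(0.7)

def accuracy_best_option(p):
    """Expected accuracy when the more probable option is always chosen."""
    return max(p, 1.0 - p)

def accuracy_weighted_coin(p):
    """Expected accuracy when the option is chosen with probability p."""
    return p * p + (1.0 - p) * (1.0 - p)
```

Under these assumptions, `fraction_rightward(mean_dv)` comes out near 0.7 even though no random number is consulted at the decision stage. On a single trial whose DV corresponds to P = 0.7, always selecting the better option is correct with probability 0.7, whereas flipping a weighted coin is correct with probability 0.7 × 0.7 + 0.3 × 0.3 = 0.58.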

Probabilistic reasoning

Humans and monkeys can learn complex reasoning that involves probabilistic cues. For example, in a weather prediction task (Knowlton et al., 1996; Knowlton et al., 1994) a monkey may view a sequence of cues that bear differently on the outcome and decide the highest paying option (Yang and Shadlen, 2007). Behaviorally, the monkeys seem to reason rationally by assigning weights proportional to the log likelihood that a cue would support one choice or another. The strategy reduces the inference process to the integration of evidence in appropriate units. Interestingly, the firing rates of parietal neurons represent this accumulation of evidence in units proportional to logLR (movies of neural responses during this task can be viewed online http://www.nature.com/nature/journal/v447/n7148/suppinfo/nature05852.html). We do not know how this occurs, but it must involve the learning of an association between the cue (shape) and an intensity. As in the RDM task, the capacity of the brain to accumulate evidence in units of logLR could serve as a basis of rationality. Note the connection to the confidence map (Fig 2C). The firing rates of neurons in the association cortex represent, through addition, subtraction and accumulation, a degree of belief in a proposition. We would like to think that this principle will apply more generally to neural computations in association cortex (Box 3).
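Why accumulation in units of logLR reduces inference to addition can be shown in a few lines. This is a sketch under stated assumptions: the shape names and their weights are invented for illustration, the cues are treated as independent, and base-10 logarithms are an arbitrary choice of unit.

```python
# Hypothetical weights of evidence: log10 likelihood ratio in favor of
# choice A over choice B carried by each shape (values are invented).
WEIGHTS = {"square": 0.9, "circle": 0.3, "triangle": -0.3, "star": -0.9}

def accumulate_log_lr(shapes, weights=WEIGHTS):
    """Running sum of logLR after each shape in the sequence.

    Assumption: the cues are independent, so their likelihood ratios
    multiply and their logarithms add.
    """
    total = 0.0
    trajectory = []
    for shape in shapes:
        total += weights[shape]
        trajectory.append(total)
    return trajectory

def probability_a(log10_lr, log10_prior_odds=0.0):
    """Convert accumulated log10 odds into the probability that A is correct."""
    return 1.0 / (1.0 + 10.0 ** -(log10_lr + log10_prior_odds))

dv = accumulate_log_lr(["square", "triangle", "circle", "circle"])
belief = probability_a(dv[-1])
```

In this toy example the running total plays the role of the DV, and a monotonic transformation of it gives the degree of belief in the proposition "A is the better choice". A prior enters the same sum as one more additive term.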

Memory retrieval

In Box 2, we mentioned that Roger Ratcliff effectively saved bounded evidence accumulation (or bounded drift diffusion) from the dust bin. Interestingly, his efforts focused largely on lexical decisions involving memory retrieval (Ratcliff, 1978; Ratcliff and McKoon, 1982). It is intriguing that the speed and accuracy of memory retrieval would appear to be explained by a process resembling the bounded accumulation of evidence bearing on a perceptual decision. Without taking the analogy too literally, the observation suggests that there is a sequential character to the memory retrieval. Perhaps the “a-ha” moment of remembering involves a commitment to a proposition based on accumulated evidence for similitude. Related ideas have been promoted by memory researchers investigating the role of the striatum in memory retrieval (e.g., Donaldson et al., 2010; Schwarze et al., 2013; Scimeca and Badre, 2012; Wagner et al., 2005). This is intriguing since the striatum is suspected to play protean roles in perceptual decision making too: value representation, time costs, bound setting and termination (Bogacz and Gurney, 2007; Ding and Gold, 2010, 2012; Lo and Wang, 2006; Malapani et al., 1998). Of course memory retrieval is the source of evidence in most decisions that are not based on evidence from perception. The process could impose a sequential character to the evidence samples that guide the complex decisions that humans make (Gyslain Giguère, 2013; Wimmer and Shohamy, 2012). If so, integrating the fields might permit experimental tests of the broad thesis of this essay — that the principles and mechanisms of simple perceptual decisions also support complex cognitive functions of humans.

Finally, one cannot help but wonder: if memory retrieval resembles a perceptual decision, perhaps we should view storage as a strategy to encode degree of similitude so that the recall process can choose correctly — where choice is activation of a circuit and its accompanying certainty. For example, the assignment of similitude might resemble the process that we exploited in Yang’s study of probabilistic reasoning (see above). Recall that the monkeys effectively assigned a number to each of the shapes. Each time a shape appeared, it triggered the incorporation of a weight into a DV. That is, the shape activated a parietal circuit that assembles evidence for a hypothesis. Perhaps something like this happens when we retrieve a memory. The cue to the memory is effectively the context that establishes a “relatedness” hypothesis, analogous to the choice targets in Yang’s study. Instead of reacting to visual shapes to introduce weights to the DV, the context triggers a directed search, analogous to foraging in a complex environment, such that each step introduces weights that increment and decrement a DV bearing on similitude. As in foraging, mini-decisions are made about the success or failure of the search strategy and a decision is made to explore elsewhere or deeper in the tree.

Viewing the retrieval process as a series of decisions about similitude invites us to speculate that what is stored, consolidated and reconsolidated in memory is not a connection but values like those associated with the shapes in the Yang study: a context-dependent value (a weight of evidence, as opposed to a synaptic weight) bearing on a decision about relevance. We recognize that this is embarrassingly vague, but we hope more serious scholars of learning and memory will perceive some value in the decision-making perspective.

Strategy and abstraction

When we make a decision about a proposition but we do not know how we will communicate or act upon that decision, then structures like LIP are unlikely sites of integration, and a DV is unlikely to “flow” to brain structures involved in motor preparation (Gold and Shadlen, 2003; Selen et al., 2012). Such abstract decisions are likely to use similar mechanisms of bounded evidence accumulation and so forth (e.g., see O’Connell et al., 2012), but there is much work to be done on this. In a sense an abstract decision about motion is a decision about rule or context. For example, if a monkey learns to make an abstract decision about direction, it must know that ultimately it will be asked to provide the answer somehow, for example by indicating with a color, as in red for right, green for left. The idea is that during deliberation, there is accumulation of evidence bearing not on an action but on a choice of rule: when the opportunity arises, choose red or green.

There are already relevant studies in the primate that suggest rule is represented in the dorsolateral prefrontal cortex (e.g., Wallis et al., 2001). A rule must be translated to the activation, selection and configuration of another circuit. In the future, it would be beneficial to elaborate such tasks so that the decision about which rule requires deliberation. Were it extended in time, we predict a DV (about rule) would be represented in structures that effect the implementation of the rule.

More generally, we see great potential in the idea that the outcome of a decision may not be an action but the initiation of another decision process. It invites us to view the kind of strategizing apparent in animal foraging as a rudimentary basis for creativity — that is, non-capricious exploration within a context with overarching goals — and it allows us to appreciate why larger brains support the complexity of human cognition. With a bigger brain comes the ability to make decisions about decisions about decisions. We suspect that this architecture will displace the concept of a global workspace (Baars, 1988; Sergent and Dehaene, 2004), which currently seems necessary to explain abstract ideation. Pat Goldman-Rakic (Goldman-Rakic, 1996) made a similar argument, as has John Duncan under the theme of a multiple demands system (Duncan, 2013).

Decisions about relevance

Most decisions we make do not depend on just one stream of data. The brain must have a way to allow some sources of information to access the decision variable and to filter out others. These might be called decisions about relevance. It is a reasonable way to construe the process of attention allocation, and we have already mentioned a potential role in decisions based on evidence from memory. The outcome of a “decision about relevance” is not an action but a change in the routing of information in the brain. Norman and Shallice (Norman and Shallice, 1986; Power and Petersen, 2013) referred to this as controlling (in contrast with processing). For neurophysiology, we might term this circuit selection and configuration. We suspect its neural substrate is a yet-to-be-discovered function of supragranular cortex, and it is enticing to think that it has a signature in neural signals that can be dissociated from modulation of spike rate. Examples include field potentials, the fMRI BOLD signal and phenomena observed with voltage sensitive dyes.

Decisions to move or engage: volition and consciousness

We have gradually meandered to the territory of cognitive functions, which at first glance do not resemble decisions. The idea is that we might approach some of the more mysterious functions from a vantage point of decision making. The potential dividend is that the mechanisms identified in the study of decision making might advance our understanding of some seemingly elusive phenomena.

Consider the problem of volition: the conscious will to perform an action. Like movements made without much awareness, specification and initiation of willful action probably involve the accumulation of evidence bearing on what to do along with a termination rule that combines thresholds in time (i.e., a deadline) and evidence. What about the sensation of “willing”? We conceive of this as another decision process that uses the same evidence to commit to some kind of internal report — or an explicit report if that is what we are asked to supply. It should come as no surprise that this commitment would require less evidence than the decision to actually act, but it is based on a DV determining specification and initiation. Thus we should not be shocked by the observation that brain activity precedes “willing” which precedes the actual act (Haggard, 2008; Libet et al., 1983; Roskies, 2010). Of course, if an actor is not engaging the question about “willing”, the threshold for committing to such a provisional report might not be reached before an action, in which case we have action without explicit willing. Finally, since it is possible to revise a decision with information available after an initial choice (Resulaj et al., 2009), we can imagine that the second scenario could support endorsement of “willing” after the fact. Nothing we have speculated seems terribly controversial. Viewed from the perspective of decision-making, willing, initiating, preparing subliminally and endorsing do not seem mysterious.

An even more intriguing idea is that consciousness, that holy grail of psychology and neuroscience, is explained as a decision to engage in a certain way. When a neurologist assesses consciousness, she is concerned with a spectrum of wakefulness spanning coma through stupor to full attentive engagement. The transition from sleep to wakefulness involves a kind of decision to engage: to do so for the cry of the baby but not the sound of the rain or the traffic. These are perceptual decisions that result in turning on the circuitry that wakes us, circuitry that involves brainstem, ascending systems and intralaminar nuclei of the thalamus. Of course this is not the kind of consciousness that fascinates psychologists and philosophers. But it may be related. We have already suggested that the outcome of a decision may be the selection and parameterization of another circuit. We do not understand these steps, but we speculate they involve similar thalamic circuitry. Indeed, the association thalamic nuclei (e.g., pulvinar) contain a class of neurons that exhibit projection patterns (and other features) that resemble the neurons in the intralaminar nuclei. Ted Jones referred to this as the thalamic matrix (Jones, 2001). These matrix neurons could function to translate the outcome of one decision to the “engagement” of another circuit.

Such a mechanism is likely a ubiquitous feature of cognition, and we assume it does not require the kind of conscious awareness referred to as a holy grail. We do not need conscious awareness to make a provisional decision to eat, return later, explore elsewhere, reach for, court, or inspect. But when we decide to engage in the manner of a provisional report —to another agent or to oneself — we introduce narrative and a form of subjectivity. Consider the spatiality of an object that I decide to provisionally report to another agent. The object is not a provisional affordance—something that has spatiality as an object I might look at, or grasp in a certain way, or sit upon— but instead occupies a spatiality shared by me and another agent (about whom I have a theory of mind). It has a presence independent of my own gaze perspective. For example, it has a back that I cannot see but which can be seen (inspected) by another agent, or by me if I move. This example serves as a partial account of what is commonly referred to as qualia or the so-called hard problem. But it is no harder than an affordance — a quality of an object that would satisfy an action like sitting on, looking at. It only seems hard if one is wed to the idea that representation is sufficient for perception, which is obviously false (Churchland et al., 1994; Rensink, 2000).

Viewed as a decision to engage, the problem of conscious awareness is not solved but tamed. The neural mechanisms are not all that mysterious. They involve the elements of decision making and probably co-opt the mechanisms of arousal from sleep. This is speculative to be sure, but it is also liberating, and we hope it will inspire experiments.

Towards a more refined understanding

The broad scope of decision-making belies a more significant impact, for we believe that principles revealed through the study of decision making expose mechanisms that underlie many of the core functions of cognition. This is because the neural mechanisms that support integration, bound setting, initiation and termination and so forth are mechanisms that keep the normal brain “not confused”. We suspect that a breakdown of these mechanisms not only leads to confusion but to diverse manifestations of cognitive dysfunction, depending on the nature of the failure and the particular brain system that is compromised. It seems conceivable that in the next 25 years, we will know enough about these mechanisms to begin to devise therapies to correct or ameliorate dysfunction and possibly even reverse it. These therapies will target circuits in ways we cannot imagine right now, because we lack the refined understanding of neural mechanism at the appropriate level.

The findings reviewed in this essay afford insight into mechanism at a systems and computational level. We might begin our steps toward refinement by listing three open questions about the decision process described in the beginning of this essay. (1) LIP neurons represent the integral of evidence but we do not know how the integration occurs or if LIP plays an essential role. (2) The coalescence of firing rate before Tin choices suggests the mechanism for termination is a threshold or bound crossing, but we do not know where in the brain the comparison is made, how it is made or how the bound is set. We think the bound is not sensed in LIP itself and that, when it is sensed, integration stops, but we do not know how a threshold detection leads to a change in the state of the LIP circuit. We also do not know what starts the integration. There’s a reproducible starting time ~200 ms after the onset of motion, but we do not know why this is so long and what is taking place in the 100+ ms between the onset of relevant directional signals in visual cortex and their representation in LIP. (3) We do not know how values are added to the integral of the evidence. We’re fairly certain that time dependent quantities, such as the urgency signal mentioned earlier or a dynamic bias signal (Hanks et al., 2011), are added, but we do not know if they are represented independently of the DV and how they are incorporated into the DV.

The answers to these questions will require the study of neural processing in other cortical and subcortical structures, including the thalamus, basal ganglia and possibly the cerebellum. It makes little sense to say the decision takes place in area LIP, or any other area for that matter. Even for the part of the decision process with which LIP aligns — representation of a DV — it seems unlikely that the pieces of the computation arise de novo in LIP. Still, it will be important to determine which aspects of the circuitry play critical roles.

Perhaps the most important problem to solve is the mechanism of integration. It is commonly assumed that this capacity is an extension of a simpler capacity of neurons to achieve a steady persistent activity for tenths of seconds to seconds (Wang, 2002), but this remains an open question. There are several elegant computational theories that would explain integration by balancing recurrent excitation with leaks and inhibition (Albantakis and Deco, 2009; Bogacz et al., 2006; Machens et al., 2005; Miller and Katz, 2013; Usher and McClelland, 2001; Wong and Wang, 2006) along with a variety of extensions that overcome sensitivity to mistuning (Cain et al., 2013; Goldman et al., 2003; Koulakov et al., 2002). These theories would support integration within the cortical module (e.g., LIP). In contrast, our favorite idea for integration would involve control signals that effectively switch the LIP circuit between modes that either defend the current firing rate or allow the rate to be perturbed by external input (e.g., momentary evidence from the visual cortex). A similar idea has been put forth by Schall and colleagues (Purcell et al., 2010). This is part of the larger idea mentioned in the section on decisions about relevance. The result of this decision is a change in the configuration of the LIP circuit such that the new piece of evidence can perturb the DV.
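
To see why balance matters in the first class of theories, consider a single linear rate unit whose leak is offset by recurrent excitation. The following is a minimal sketch under assumed dynamics, dx/dt = -(leak - recurrent) * x + input, and it does not implement any of the cited models; the function name `run_integrator`, the Euler update and the parameter values are hypothetical.

```python
def run_integrator(inputs, leak=10.0, recurrent=10.0, dt=0.001):
    """Euler simulation of dx/dt = -(leak - recurrent) * x + input.

    leak and recurrent are rates in 1/s; when they are equal, the unit
    neither decays nor grows and so holds the running integral of its input.
    """
    x = 0.0
    trace = []
    for u in inputs:
        x += dt * (-(leak - recurrent) * x + u)
        trace.append(x)
    return trace


# A 100 ms pulse of unit input followed by 900 ms of silence.
pulse = [1.0] * 100 + [0.0] * 900

balanced = run_integrator(pulse)                  # excitation cancels the leak
leaky = run_integrator(pulse, recurrent=8.0)      # too little excitation
unstable = run_integrator(pulse, recurrent=12.0)  # too much excitation

if __name__ == "__main__":
    for name, trace in (("balanced", balanced), ("leaky", leaky),
                        ("unstable", unstable)):
        print(f"{name}: value 900 ms after the pulse = {trace[-1]:.4f}")
```

Only the balanced unit retains the integral of the pulse after the input ends. A 20% mismatch in either direction makes the stored value decay or grow over the following second, which is the sensitivity to mistuning that the cited extensions aim to overcome.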

To begin to address the circuit-level analyses of integration we need better techniques that can be used in primates and we need better testing paradigms in rodents. There is great promise in both of these areas and emerging enthusiasm for interaction between these traditionally separate cultures. We need to control elements of the microcircuit in primates with optogenetics and DREADD (designer receptors exclusively activated by designer drugs, Rogan and Roth, 2011) technologies, and we need to identify relevant physiological properties of cortical circuits in more tractable animals (e.g., the mouse) that can be studied in detail. Ideally, the variety, reliability and safety of viral expression systems will support such work in highly trained monkeys (e.g., Diester et al., 2011; Han et al., 2009; Jazayeri et al., 2012). And the behavioral paradigms in mice will achieve the sensitivity to serve as assays for subtle manipulations of the circuit. Recall that the most compelling microstimulation studies in the field of perceptual decision making (e.g., Salzman et al., 1990) would have failed had the task included only easy conditions! Promising work from several labs supports the possibility of achieving this in rats (e.g., Brunton et al., 2013; Lima et al., 2009; Raposo et al., 2012; Rinberg et al., 2006; Znamenskiy and Zador, 2013). Mice cannot be far behind (Carandini and Churchland, 2013). Indeed, it now appears possible to study persistent activity in behaving mice (Harvey et al., 2012). These are early days, but we are hopeful that the molecular tools available in the mouse will yield answers to fundamental questions about integration and eventually to some of the other “principles” listed in Box 3.

Many of the most important questions concern interactions between circuits. Here we need technologies that will make it routine, that is, possible without heroic effort (e.g., Sommer and Wurtz, 2006), to record from and manipulate neurons identified by their connection to other brain structures. For example, if we could record from neurons in both the caudate and cortex that receive input from the same dorsal pulvinar neuron, we might begin to understand the process of circuit selection. As another example, we are interested in the ~100 ms epoch in which motion information is available in the visual cortex but not yet apparent in LIP. What processes are responsible for gating, routing, selecting and configuring association areas like LIP?

Closing remarks

We have covered much ground in this essay, but we have only touched on a fraction of what the topic of decision making means to psychologists, economists, political scientists, jurists, philosophers and artists. And despite our attempt to connect perceptual decision making to other types of decisions, even many neuroscientists will be right to criticize the authors for parochialism and gross omissions. Perhaps thinking about the next quarter century ought to begin with an acknowledgement that the field will integrate and penetrate other disciplines. This is an exciting theme to contemplate as an educator wishing to advance interdisciplinary knowledge. However, as neuroscience insinuates itself into these disciplines, it may be wise to avoid two potential missteps. The first is to believe that neuroscience offers more fundamental explanations of phenomena traditionally studied by other fields. Our limited interactions with philosophers and ethicists have taught us that one of the hardest questions to answer is why (and how) a neuroscientific explanation would affect a concept. The second is to assert that a neuroscientific explanation renders a phenomenon unreal. A neuroscientific explanation of musical aesthetic does not make music less beautiful. Explaining is not explaining away.

This is the 25th anniversary of Neuron, which invites us to think of the neuron as the cornerstone of brain function. We see no reason to exclude cognitive functions, like decision making, from the party. Indeed ~25 years ago, when vision began its migration from extrastriate visual cortex to the parietal association cortex, some of us received very clear advice that the days of connecting the firing rates of single neurons with variables of interest were behind us. We were warned that the important computations would be revealed only in complex patterns of activity across vast populations of neurons. We were skeptical of this advice, because we had ideas about why neurons were noisy (and so found the patterns less compelling). We believed the noise arose from a generic problem that had to be solved by any cortical module that operates in what we termed a “high input” regime (Shadlen and Newsome, 1998) (Box 1), and the association cortex should be no exception. It seemed likely that when a module computes a quantity, even one as high level as degree of belief in a proposition, the variables that are represented and combined would be reflected directly in the firing rates of single neurons.

Horace Barlow referred to this property as a direct (as opposed to distributed) code (Barlow, 1995). It is a far more profound concept than a grandmother cell, for it is not about representation (at least not solely) but concerns the intermediate steps of neural computation. In vision, it is a legacy of Hubel and Wiesel, expanded and elaborated by Movshon, Ferster and many others. The concept seems to be holding up to the study of decision making. No high-dimensional dynamical structures are needed for assembly, at least not so far. In the next 25 years, the field will tackle problems that encompass various levels of explanation, from molecule to networks of circuits. But in the end, the key mechanisms that support cognition are likely to be understood as computations supported by the firing rates of neurons that relate directly to relevant quantities of information, evidence, plans and the steps along the way.

Regarding decision-making, we have arrived at a point where the three pillars of choice behavior — accuracy, reaction time and confidence (Link; Vickers)— are reconciled by a common neural mechanism. It has taken 25 years to achieve this, and it will take another 25, at least, to achieve the degree of understanding we desire at the level of cells, circuits and circuit-circuit interaction. It will be worth the effort. If cognition is decision-making writ large, then the window on cognition mentioned in the title of this essay may one day be a portal to interventions in diseases that affect the mind.

Acknowledgments

MNS is supported by HHMI, NEI and HFSP. We thank Chris Fetsch, Daphna Shohamy, Luke Woloszyn, Shushruth and Naomi Odean for helpful feedback.

References

- Albantakis L, Deco G. The encoding of alternatives in multiple-choice decision making. Proc Natl Acad Sci U S A. 2009;106:10308–10313. doi: 10.1073/pnas.0901621106.

- Andersen RA, Asanuma C, Essick G, Siegel RM. Corticocortical connections of anatomically and physiologically defined subdivisions within the inferior parietal lobule. J Comp Neurol. 1990;296:65–113. doi: 10.1002/cne.902960106.

- Baars BJ. A Cognitive Theory of Consciousness. Cambridge University Press; 1988.

- Barash S, Bracewell RM, Fogassi L, Gnadt JW, Andersen RA. Saccade-related activity in the lateral intraparietal area: I. Temporal properties; comparison with area 7a. J Neurophysiol. 1991;66:1095–1108. doi: 10.1152/jn.1991.66.3.1095.

- Barlow HB. The neuron doctrine in perception. In: Gazzaniga M, editor. The Cognitive Neurosciences. Cambridge, Mass: MIT Press; 1995. pp. 415–435.

- Barlow HB, Levick WR, Yoon M. Responses to single quanta of light in retinal ganglion cells of the cat. Vision Res Suppl. 1971;3:87–101. doi: 10.1016/0042-6989(71)90033-2.

- Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci. 2004;7:404–410. doi: 10.1038/nn1209.

- Beck JM, Ma WJ, Kiani R, Hanks T, Churchland AK, Roitman J, Shadlen MN, Latham PE, Pouget A. Probabilistic population codes for Bayesian decision making. Neuron. 2008;60:1142–1152. doi: 10.1016/j.neuron.2008.09.021.

- Beitel RE, Schreiner CE, Cheung SW, Wang X, Merzenich MM. Reward-dependent plasticity in the primary auditory cortex of adult monkeys trained to discriminate temporally modulated signals. Proc Natl Acad Sci U S A. 2003;100:11070–11075. doi: 10.1073/pnas.1334187100.

- Bogacz R, Brown E, Moehlis J, Holmes P, Cohen JD. The physics of optimal decision making: a formal analysis of models of performance in two-alternative forced-choice tasks. Psychol Rev. 2006;113:700–765. doi: 10.1037/0033-295X.113.4.700.

- Bogacz R, Gurney K. The basal ganglia and cortex implement optimal decision making between alternative actions. Neural Comput. 2007;19:442–477. doi: 10.1162/neco.2007.19.2.442.

- Bollimunta A, Totten D, Ditterich J. Neural dynamics of choice: single-trial analysis of decision-related activity in parietal cortex. J Neurosci. 2012;32:12684–12701. doi: 10.1523/JNEUROSCI.5752-11.2012.

- Britten KH, Newsome WT, Shadlen MN, Celebrini S, Movshon JA. A relationship between behavioral choice and the visual responses of neurons in macaque MT. Vis Neurosci. 1996;13:87–100. doi: 10.1017/s095252380000715x.

- Britten KH, Shadlen MN, Newsome WT, Movshon JA. Responses of neurons in macaque MT to stochastic motion signals. Vis Neurosci. 1993;10:1157–1169. doi: 10.1017/s0952523800010269.

- Brunton BW, Botvinick MM, Brody CD. Rats and humans can optimally accumulate evidence for decision-making. Science. 2013;340:95–98. doi: 10.1126/science.1233912.

- Buck L, Axel R. A novel multigene family may encode odorant receptors: a molecular basis for odor recognition. Cell. 1991;65:175–187. doi: 10.1016/0092-8674(91)90418-x.

- Bunsey M, Eichenbaum H. Conservation of hippocampal memory function in rats and humans. Nature. 1996;379:255–257. doi: 10.1038/379255a0.

- Busemeyer JR, Rapoport A. Psychological models of deferred decision making. J Math Psychol. 1988;32:91–134.

- Cain N, Barreiro AK, Shadlen M, Shea-Brown E. Neural integrators for decision making: a favorable tradeoff between robustness and sensitivity. J Neurophysiol. 2013;109:2542–2559. doi: 10.1152/jn.00976.2012.

- Carandini M, Churchland AK. Probing perceptual decisions in rodents. Nat Neurosci. 2013;16:824–831. doi: 10.1038/nn.3410.

- Chandrashekar J, Hoon MA, Ryba NJ, Zuker CS. The receptors and cells for mammalian taste. Nature. 2006;444:288–294. doi: 10.1038/nature05401.

- Chen X, Gabitto M, Peng Y, Ryba NJ, Zuker CS. A gustotopic map of taste qualities in the mammalian brain. Science. 2011;333:1262–1266. doi: 10.1126/science.1204076.

- Churchland AK, Kiani R, Chaudhuri R, Wang XJ, Pouget A, Shadlen MN. Variance as a signature of neural computations during decision making. Neuron. 2011;69:818–831. doi: 10.1016/j.neuron.2010.12.037.

- Churchland AK, Kiani R, Shadlen MN. Decision-making with multiple alternatives. Nat Neurosci. 2008;11:693–702. doi: 10.1038/nn.2123.

- Churchland PS, Ramachandran VS, Sejnowski TJ. A critique of pure vision. In: Koch C, Davis JL, editors. Large-scale neuronal theories of the brain. Cambridge, Mass: MIT Press; 1994. pp. 23–60.