Abstract

More than a quarter of Medicare beneficiaries are enrolled in Medicare Advantage, which was created in large part to improve the efficiency of health care delivery by promoting competition among private managed care plans. This paper explores the spillover effects of the Medicare Advantage program on the traditional Medicare program and other patients, taking advantage of changes in Medicare Advantage payment policy to isolate exogenous increases in Medicare Advantage enrollment and trace out the effects of greater managed care penetration on hospital utilization and spending throughout the health care system. We find that when more seniors enroll in Medicare managed care, hospital costs decline for all seniors and for commercially insured younger populations. Greater managed care penetration is not associated with fewer hospitalizations, but is associated with lower costs and shorter stays per hospitalization. These spillovers are substantial – offsetting more than 10% of increased payments to Medicare Advantage plans.

I. Introduction

The Medicare program consists of two distinct components for covering non-drug services: traditional Medicare (TM), a government-administered fee-for-service insurance plan with a legislatively defined benefit structure, administered prices, and few utilization controls; and Medicare Advantage (MA), a program of competing private health plans that may offer additional benefits and utilize various cost-containment and quality-improvement strategies. Beneficiaries who choose to enroll in MA receive health insurance for all TM covered services from their chosen MA plan, and may also receive additional services (such as dental and eye care) and/or reduced cost sharing relative to TM. In return for providing care for enrollees, Medicare pays MA plans a monthly risk-adjusted payment per beneficiary.

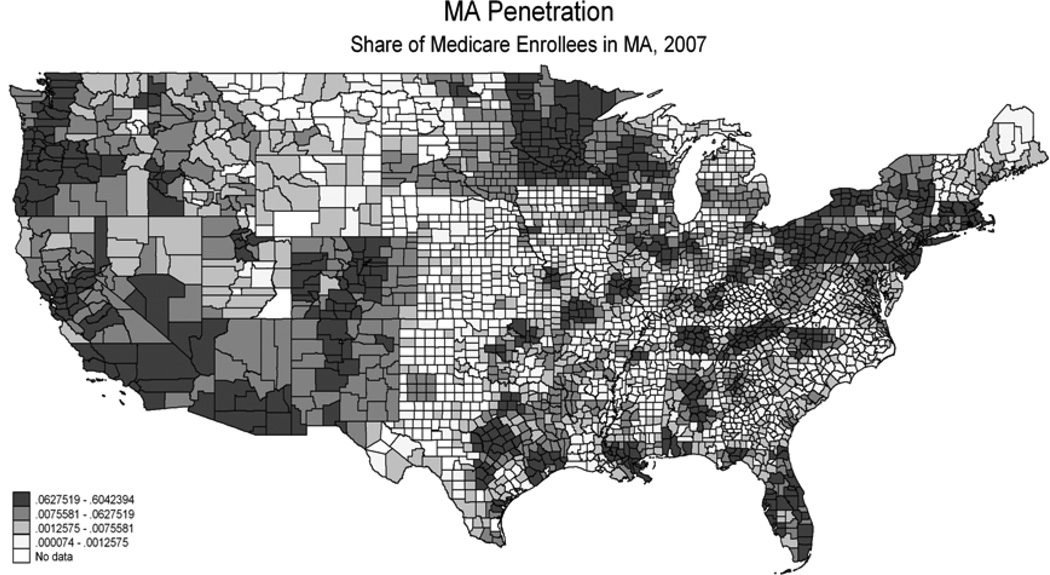

MA enrollment has expanded rapidly as payments have increased,1 with 27% of beneficiaries enrolled in MA plans in 2012, up from only 14% a decade earlier, after enrollment declined in the 1990s.(1–5) MA enrollment varies substantially across states: in 2012, 14 states had 30 percent or more of beneficiaries enrolled in MA (MA "penetration") and 6 states had less than 10 percent (see Figure 1, described in more detail below). Penetration also varies substantially within states: in 2009, within-state variation accounted for 66% of the total variation in penetration rates.

Figure 1.

Notes: Data from Medicare denominator file, 2007. Share of Medicare beneficiaries enrolled in Medicare Advantage plans, by county.

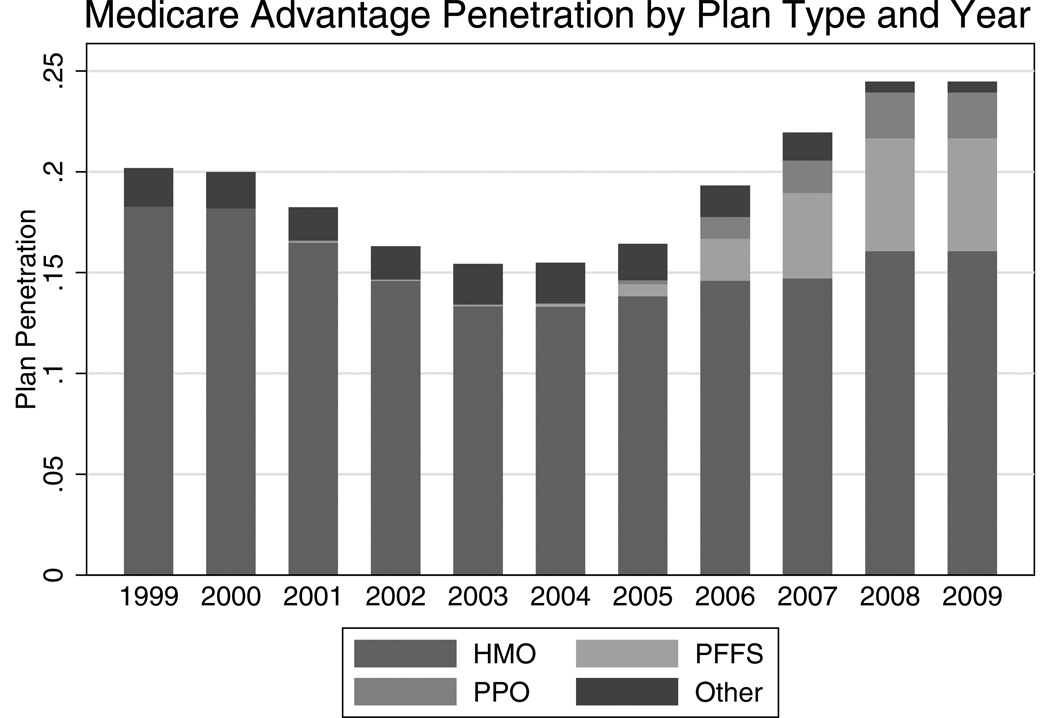

The MA program was introduced in the hope that private competition and managed care would deliver more efficient care at lower cost than conventional fee-for-service health insurance.(6) Initially only HMO-type plans were allowed to enter, although more recently other types of plans, such as PPOs and even "private" fee-for-service (PFFS) plans, have entered the MA market (see Figure 2, described in more detail below). HMOs continue to dominate the MA market, although their share of total MA enrollment declined from 91% in 1999 to 66% in 2009. The difference has been taken up by private FFS and PPO plans, which in 2009 accounted for 23% and 9% of total MA enrollment, respectively.

Figure 2.

Notes: Data from Medicare Beneficiary Denominator File, 1999–2009. Share of Medicare beneficiaries enrolled in Medicare Advantage, by plan type and year.

This evolution in the MA market has meant, in part, that more providers both contract with MA plans and serve patients in many other plans.2 Although MA plans may have less direct control over providers in these arrangements, the same providers generally serve both MA and TM patients, so changes in care induced by the MA program may "spill over" to care delivered to TM enrollees and, indeed, to all patients. The ramifications of MA incentives may thus be felt throughout the health care system if, for example, they affect standards of care or hospital investment. Previous research in other contexts, such as the spread of commercial managed care plans in the 1990s, suggests that these spillovers may be substantial, but there is as yet little research on spillovers from MA plans. Any spillover effects of MA plans on others' spending or outcomes have direct implications for designing an efficient MA program, but gauging their magnitude and establishing causal connections requires careful empirical research.

This paper examines the effect of changes in the MA sector induced by MA payment changes on the care received by other patients, focusing on hospitalization rates, quality of care, and costs for Medicare enrollees (in TM) and the commercially insured. We first provide background on potential mechanisms for and previous estimates of spillover effects, as well as detail on the evolution of the MA program. We then outline our empirical strategy and the data we bring to bear. After describing our empirical results, we conclude by drawing implications for public policy.

II. Background

More than 27% of Medicare beneficiaries are now enrolled in MA. MA payment structure and program parameters directly affect MA plans and enrollees and may indirectly affect the entire health care system. Much of the rationale for the current MA program rests on the premise that MA plans can provide higher-quality, lower-cost care than the TM system, and that this efficiency will enable more generous benefits at lower premiums. There are multiple avenues through which any efficiency improvements associated with MA may ripple through the health care system.

A. Spillover Pathways

Payment policies that do not account for "spillovers" (externalities) from MA to other segments of the market are likely to be inefficient from a social welfare perspective. There are many pathways through which the care received by some patients might affect the care received by others; we highlight several here.3 Models focusing on different factors, such as the structure of health care production, demand for services, and interactions among providers, payers, and patients, generate different predictions about the sign of spillovers.

A number of spillover mechanisms imply convergence in patterns of care across patient populations. First, managed care can influence physician practice styles more broadly: if managed care changes how a physician treats managed care patients, those changes may carry over to how he or she treats other patients. Managed care plans deploy a number of techniques to control utilization, such as pre-authorization, utilization review, referral requirements, restricted networks, and (full or partial) capitation. These tools may change how physicians practice medicine for all of their patients, not just those in the managed care plan.(7) The "norms hypothesis," first studied in the context of health insurance by Newhouse and Marquis, posits that physicians base their practice style on the average or typical insurance coverage of their patients, so a change in one patient's coverage, by shifting the average, affects the care others receive.(8, 9) There is indeed evidence that physicians make decisions based on their overall mix of patients, or even on the mix of patients in the area.(10)

Second, managed care can influence health care investment and the adoption of technology, which can in turn affect system-wide utilization. For example, an increase in managed care activity in an area could reduce the number of MRI machines and thereby the total number of MRIs performed. Several previous studies of the effect of managed care on health care investment and the subsequent use of particular high-cost services suggest that managed care affects hospital infrastructure (11) and the use of high-cost procedures.(12)

Third, changes in managed care activity may also affect health care prices. If managed care plan entry in an area increases competition, prices could decline across all providers. This effect is likely to be weak for TM patients, however: under the inpatient prospective payment system for hospital admissions and the fee schedule for physician visits, prices are set administratively, although market price changes may eventually be incorporated into payment rate updates.

Other spillover mechanisms imply divergence in care patterns across patient populations. For example, an increasing supply curve for a service implies that decreased demand from one group reduces marginal cost for other groups, leading to increases in their use of the service. Particular objective functions of providers may themselves lead to divergence. Specifically, if physicians seek a "target income," then increasing MA enrollment that reduces provider income would motivate physicians to recover this income by inducing demand among other patients. Even without particular targets, strong income effects (i.e., decreasing marginal utility of income) can generate divergent spillovers.(13) Of course, multiple forces may be at work, and effects may vary with underlying conditions. Some models suggest that the net effect of competition on premiums may depend on the elasticity of demand,(14) and that practice patterns may converge when competition drives down market power but diverge when it induces demand.(15)

All of this means that empirical evidence must be brought to bear to gauge the sign and magnitude of spillovers, and the sign of those spillovers drives optimal payment policy. If the marginal benefits of care in TM are less than its cost, positive spillovers from MA that reduce costs in TM are welfare-improving, while negative spillovers that lead to higher utilization in TM are welfare-reducing.4 From the perspective of full social costs and benefits, if changes in MA enrollment confer positive (negative) spillovers on TM enrollees or other populations, MA payment rates should be higher (lower) than they would be if based only on the effect on MA enrollees. Otherwise, the "missing market" for the gains from spillovers leads to socially inefficient under- (over-) entry by MA plans.(16)

Empirically, evidence that MA penetration substantially improves quality and/or reduces costs market-wide would suggest that the optimal payment to MA plans exceeds expected enrollee cost. Such spillovers are not only an important determinant of the optimal payment structure but also complicate the evaluation of existing policies: many studies use non-enrollees as a control group against which to gauge the effect of a particular policy change, but if these non-enrollees are themselves affected by the intervention, those estimates may be biased.5 (17)
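As a stylized illustration of this argument (our own simplification, not a model estimated in this paper), let $c$ denote the expected cost of covering a marginal MA enrollee and $s$ the resulting per-enrollee change in costs for everyone else, signed so that $s > 0$ means cost-reducing (positive) spillovers. A payer that internalizes the spillover would set the payment $P^{*}$ as

$$
P^{*} = c + s, \qquad P^{*} > c \iff s > 0 .
$$

A payment of $P = c$ ignores $s$: with positive spillovers it is too low and leads to under-entry by MA plans, and with negative spillovers ($s < 0$) it is too high and leads to over-entry.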

B. Previous Literature

There is ample evidence that an individual’s health insurance coverage affects that person’s own utilization of health care, and more mixed evidence that it affects that person’s ultimate health outcomes.(18–22) There is less definitive empirical evidence, however, about how changes in one person’s health insurance coverage affect the health care use and outcomes of other patients. There is substantial prior research on market-level effects of managed care penetration that has found some evidence that increased penetration leads to lower costs or premiums for all insurers and greater adherence to recommended patterns of care. However, these studies generally suffer from several shortcomings. First, many are not able to account adequately for the potential endogeneity of market choice by insurers and of payer mix at the provider level. Second, data limitations make it difficult to identify the mechanism producing changes in performance as managed care penetration increases. Finally, previous studies largely predate the Medicare Modernization Act (MMA) of 2003, limiting the applicability of their findings to the current policy context.

A large number of studies examine the spillover effect of managed care on health care expenditures and utilization. Robinson evaluates the relationship between hospital cost growth and HMO penetration in California.(23) He finds that hospital expenditures grew 44% less rapidly in markets with high HMO penetration (15.2%) than in markets with low penetration (0.6%), mainly because of reductions in the volume and mix of services. Gaskin and Hadley also examine the effect of managed care market share on hospital cost growth and find that hospitals in areas with high HMO penetration (40% of the population enrolled in HMOs) had a 25% slower growth rate in costs than hospitals in low-penetration areas (5% of the population enrolled in HMOs).(24) Both studies attempt to account for the endogeneity of HMO location in high-cost areas by using first differences in hospital costs.

Baker and coauthors examine the effect of HMO penetration on spending and utilization by other (non-HMO) beneficiaries in a series of papers, with mixed results.(14, 25–29) Baker finds a concave relationship between managed care penetration (instrumented with firm characteristics) and both Traditional Medicare Part A and Part B FFS spending. (25) Part A and Part B expenditures are increasing in HMO penetration until a maximum is reached at 16% and 18% penetration, respectively, after which they are decreasing in penetration. Baker argues the concavity is mainly due to changes in quantity rather than price; under Medicare regulations, price variation is limited, although some indirect price effects are possible. In a separate study, Baker and coauthors find that managed care penetration (instrumented with firm characteristics) initially reduced market-wide insurance premiums, but eventually led to increased selection.(14) It is worth noting that IV estimates differed from OLS, highlighting the importance of accounting for endogenous firm entry.

Chernew, DeCicca, and Town, in an analysis similar in spirit to ours, find that increasing MA penetration reduces spending by TM beneficiaries, particularly those with chronic conditions.(30) Using data from an earlier period, they find that in an OLS specification a 1 percentage point increase in MA HMO penetration decreases TM utilization by 0.3%, but when they account for endogenous penetration by using payments as an instrument, they find a decrease of 0.9%. Finkelstein explores the system-level effects of the introduction of the Medicare program on hospital and health care investments, with estimates implying that the spread of insurance resulted in a 37 percent increase in hospital expenditures, half from new hospital entry and half from higher spending at existing hospitals.(31, 32) The magnitude of Finkelstein's finding is far greater than that in prior work, suggesting that the spillover impacts of large-scale changes in insurance may be much greater than smaller incremental changes would imply.

Other studies examine the effect of managed care not just on overall utilization and premiums but also on patterns and quality of care, although much of this work cannot draw on a source of exogenous variation in penetration. Heidenreich et al. find that greater managed care penetration was associated with greater use of beta blockers and aspirin among TM heart attack patients, but lower use of more technologically intensive interventions such as coronary angiography.(33) Bundorf et al. also find that managed care penetration affected rates of revascularization and cardiac catheterization among TM heart attack patients, but that spillovers dissipated as competition between managed care plans increased.(34) That study had rich controls but no available instrument, and there is some evidence that insurers enter markets endogenously based on profitability.(35, 36) Van Horn et al. find that increased managed care penetration at the hospital level led to more efficient resource utilization, but also that hospitals "cost shifted" to their non-managed care patients, although here, too, results were sensitive to strategies for accounting for endogeneity.6 (37–39) Glied and Zivin find evidence of spillovers in practice patterns for all patients as physicians' managed care share increases.(15)

The results from this literature imply that spillovers are likely when managed care patients make up a sufficiently large share of a hospital's or physician's practice, but methodological issues interfere with clear interpretation. Baker has called attention to problems with estimating the reduced-form models employed in the spillover literature.(35) Most importantly, unobserved market-level variables may be correlated with managed care entry decisions, penetration, and outcomes, confounding identification. Below we describe our strategy for improving on the existing literature by using an exogenous source of variation in managed care penetration.

C. Medicare Advantage Payment Policy

MA payment policy has evolved over time in an effort to maintain access to private plans while containing costs.(6) We take advantage of the fact that the idiosyncrasies of those changes generate exogenous shocks to MA penetration, which allow us to isolate the causal effect of MA penetration on system-wide health care use. Figure 3 shows substantial growth in real payment rates, from an average of $624 a month in 1997 to $860 a month in 2009, as well as the degree of variation in those payments. Because of the floor payments described below, the distribution has little lower tail, but it has a substantial right tail.

Figure 3. Distribution of MA Payment Rates.

Notes: Payment rate data from the CMS Ratebook files, located online at http://www.cms.gov/Medicare/Health-Plans/MedicareAdvtgSpecRateStats/Ratebooks-and-Supporting-Data.html. Distribution of Medicare Advantage Aged payment rates, by year.

The Tax Equity and Fiscal Responsibility Act (TEFRA) of 1982 authorized Medicare to contract with HMOs to provide managed care coverage to Medicare beneficiaries. The Medicare program pays HMOs a monthly capitation fee per enrollee to provide all services covered under Medicare Parts A and B. To attract enrollees, HMOs can also provide supplementary services that TM does not cover. While many aspects of the original program have not changed, the calculation of the capitation amount has been subject to numerous legislative changes over the last thirty years.

From 1985 to 1997 (before our data period), Medicare’s payments to HMOs per enrollee were based on actuarial estimates of the per person TM expenditures in a beneficiary’s county of residence, adjusted for a limited set of demographics. The Balanced Budget Act of 1997 (BBA) significantly altered the types of private Medicare plans as well as the plan payment methodology. First, the BBA authorized new types of private plans to contract with Medicare: preferred-provider organizations (PPOs), provider-sponsored organizations (PSOs) and private fee-for-service plans (PFFS). PSOs are similar to HMOs, while PFFS plans are similar to indemnity plans.

The BBA also changed the way payments to plans were calculated. Instead of basing county rates on average TM costs, plans were paid the maximum of three amounts: (1) a "blended" payment rate, calculated as a weighted average of the county's average TM costs and national TM costs; (2) a "floor amount," i.e., a minimum amount specified by law ($367 per month in 1998); and (3) a 2% increase over the prior year's rate. The BBA also changed the individual-level adjustments to the county-level base rate: the base rate plans received was now adjusted for enrollee health status as well as demographics. The health status risk adjustment was phased in gradually: from 2000 to 2003, 10% of payments were based on an enhanced risk-adjustment system that accounted for inpatient diagnoses.
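The three-part BBA rule is simply a maximum over its components. The sketch below is a minimal illustration, not CMS's rate-setting code: the function name `bba_county_rate` and the blend weight `local_weight` are hypothetical inputs of ours, all amounts are monthly dollars, and nothing beyond the three components listed above (for example, risk adjustment) is modeled.

```python
def bba_county_rate(local_tm_cost: float, national_tm_cost: float,
                    local_weight: float, floor: float,
                    prior_rate: float) -> float:
    """Monthly county rate under a sketch of the BBA rule.

    The rate is the maximum of (1) a blend of county and national TM costs,
    (2) the statutory floor, and (3) a 2% increase over the prior year's rate.
    `local_weight` (the blend weight on county costs) is a hypothetical input.
    """
    blended = local_weight * local_tm_cost + (1.0 - local_weight) * national_tm_cost
    minimum_update = 1.02 * prior_rate
    return max(blended, floor, minimum_update)


# Hypothetical low-cost county: the 1998 floor of $367 binds.
low_cost = bba_county_rate(300.0, 450.0, local_weight=0.9, floor=367.0, prior_rate=350.0)

# Hypothetical high-cost county: the blended rate binds.
high_cost = bba_county_rate(700.0, 450.0, local_weight=0.9, floor=367.0, prior_rate=600.0)

print(low_cost, high_cost)
```

Because the floor sets a minimum, low-cost counties bunch at the floor, which is consistent with the thin lower tail visible in Figure 3.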

In 2003 the Medicare Prescription Drug, Improvement, and Modernization Act (MMA) again changed the payment methodology. Medicare now calculated a benchmark equal to the highest of five amounts: (1) an urban or rural floor payment; (2) 100% of county risk-adjusted TM costs (calculated using a five-year moving average lagged three years); (3) an update based on the prior year's national average growth in TM costs; (4) a 2% update over the prior year's payment; and (5) a "blend" update (identical to the BBA "blend"), which was discontinued after 2004. Moreover, individual risk adjustment of payment rates was refined with the adoption of the Hierarchical Condition Category (HCC) risk-adjustment model, which takes into account information from ambulatory care claims, inpatient admissions, and demographic factors. This more complete risk-adjustment system was given 30% weight in 2004 and was fully phased in by 2007.
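The MMA benchmark can be sketched the same way, as a maximum over five candidate amounts. This is again a hypothetical helper rather than CMS code: `mma_benchmark` and its parameter names are ours, inputs are assumed to be monthly dollar amounts already computed for the county (including the lagged moving average of TM costs), and individual risk adjustment is not modeled.

```python
from typing import Optional


def mma_benchmark(year: int, floor: float, county_tm_cost: float,
                  prior_benchmark: float, national_growth: float,
                  blend_rate: Optional[float] = None) -> float:
    """Monthly county benchmark under a sketch of the MMA rule (max of five amounts)."""
    candidates = [
        floor,                                      # (1) urban or rural floor
        county_tm_cost,                             # (2) 100% of county risk-adjusted TM costs
        prior_benchmark * (1.0 + national_growth),  # (3) national TM growth update
        prior_benchmark * 1.02,                     # (4) minimum 2% update
    ]
    # (5) the BBA-style blend, which was discontinued after 2004
    if blend_rate is not None and year <= 2004:
        candidates.append(blend_rate)
    return max(candidates)


print(mma_benchmark(2004, 700.0, 720.0, 690.0, 0.05, blend_rate=900.0))  # blend still counts
print(mma_benchmark(2005, 700.0, 720.0, 690.0, 0.05, blend_rate=900.0))  # blend ignored
```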

Starting in 2006, the MMA introduced a bidding process for plan payments. Each year, plans bid their estimated cost to provide TM covered benefits for an average risk patient. This bid amount is compared to the county’s benchmark (calculated as above): if a plan’s bid is higher than the benchmark, it is required to collect the difference through a premium on its enrollees. If the bid is lower, seventy-five percent of the difference is returned to enrollees in the form of increased benefits, while twenty-five percent is returned to Medicare.7
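The settlement of a bid against the benchmark can be illustrated with a short function. This is a minimal sketch of the rule as stated above only: `settle_bid` is a hypothetical name, amounts are monthly dollars per average-risk enrollee, and risk adjustment of bids and benchmarks is ignored.

```python
def settle_bid(bid: float, benchmark: float, rebate_share: float = 0.75):
    """Split the bid-benchmark gap as described in the text (monthly dollars).

    Returns (enrollee_premium, enrollee_rebate, medicare_savings).
    """
    if bid > benchmark:
        # Bid above benchmark: the plan charges enrollees the difference.
        return bid - benchmark, 0.0, 0.0
    savings = benchmark - bid
    # Bid at or below benchmark: 75% funds extra benefits, 25% returns to Medicare.
    return 0.0, rebate_share * savings, (1.0 - rebate_share) * savings


print(settle_bid(760.0, 800.0))  # (0.0, 30.0, 10.0)
print(settle_bid(850.0, 800.0))  # (50.0, 0.0, 0.0)
```

One implication of this rule is that a higher benchmark raises the rebate available to low-bidding plans, which is one channel through which benchmark changes can affect MA enrollment.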

III. Empirical Strategy

We examine the effect of MA enrollment on spending, utilization, and quality at both the county and hospital levels, using several different identification strategies.

We begin with a baseline specification describing the relationship between Medicare Advantage penetration and a range of outcomes:

$$
Y_{ijt} = \alpha_i + \beta\,\text{MA Penetration}_{i,t-1} + X_{it}'\gamma + \text{Year}_t'\delta + Z_{ijt}'\theta + \varepsilon_{ijt} \tag{1}
$$

where $Y_{ijt}$ is a measure of spending, utilization, or another outcome for individual $j$ in area $i$ in year $t$; $\alpha_i$ is an area fixed effect; $\text{MA Penetration}_{i,t-1}$ is MA penetration in area $i$ in year $t-1$ (focusing on managed care plans and excluding private FFS, although we explore sensitivity to this choice); $X_{it}$ is a vector of time-varying area characteristics (including measures of area-level population demographics and economic conditions); $\text{Year}_t$ is a vector of year dummies; and $Z_{ijt}$ is a vector of individual characteristics (including risk adjusters). In hospital-level regressions the "area" is the hospital, and in county-level regressions it is the county. Some analyses are restricted to subsets of the population, such as those covered by TM or those under age 65. Other specifications aggregate individuals up to the county level for population-based analysis. We also explore potential non-linearities in the effect of penetration by including penetration and its square.
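To make the specification concrete, the sketch below estimates equation (1) by OLS with area and year dummies on simulated area-year panel data. Everything in it is an assumption for illustration: the data are invented, the true coefficient is set to −0.5, and because it works at the area-year level the individual controls $Z_{ijt}$ are omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
n_areas, n_years = 50, 11
beta_true = -0.5  # hypothetical effect of lagged MA penetration on the outcome

# pen[i, k] is penetration in area i in calendar year k (k = 0..n_years).
# Outcomes are observed in years 1..n_years, so outcome year t uses pen[i, t-1].
pen = rng.uniform(0.0, 0.4, size=(n_areas, n_years + 1))
pen_lag = pen[:, :-1].ravel()

area = np.repeat(np.arange(n_areas), n_years)        # area index i for each row
year = np.tile(np.arange(1, n_years + 1), n_areas)   # outcome year t for each row
x = rng.normal(size=n_areas * n_years)               # one area-time covariate X_it

area_fe = rng.normal(size=n_areas)[area]             # alpha_i
year_fe = 0.1 * year                                 # common national trend
y = area_fe + year_fe + beta_true * pen_lag + 0.3 * x + rng.normal(scale=0.05, size=area.size)

# Regressors: lagged penetration, X_it, a full set of area dummies,
# and year dummies with the first outcome year omitted to avoid collinearity.
area_dummies = (area[:, None] == np.arange(n_areas)).astype(float)
year_dummies = (year[:, None] == np.arange(2, n_years + 1)).astype(float)
design = np.column_stack([pen_lag, x, area_dummies, year_dummies])

coef, *_ = np.linalg.lstsq(design, y, rcond=None)
beta_hat = coef[0]
print(f"estimated beta: {beta_hat:.3f}")
```

Adding a squared penetration column to `design` would give the non-linear variant mentioned above.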

While these specifications control for any factors about the area that are fixed over time as well as any national trends, there may still be time-varying omitted factors within areas that drive both MA penetration and the care received by other segments of the population. For example, if care in a particular area grows more expensive in a way that makes it less profitable for MA plans to enroll new beneficiaries, we might observe lower penetration associated with higher costs even though the relationship is not causal. To abstract from such confounding factors, we use an instrumental variables approach that exploits plausibly exogenous changes in the MA payment schedule, which affect the profitability of MA enrollment but are not correlated with local care patterns or beneficiary characteristics.
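The confounding example above, and how an instrument addresses it, can be illustrated with a small simulation. This is a hypothetical sketch, not the paper's estimation code: the data-generating process and all coefficients are invented. An unobserved local shock both raises costs and deters MA enrollment, so naive OLS converges to about −0.8 here and overstates the cost reduction, while two-stage least squares using the payment shifter recovers the assumed effect of −0.5.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 20_000
beta_true = -0.5  # hypothetical causal effect of penetration on cost

payment = rng.normal(size=n)      # instrument: shifts MA profitability only
local_shock = rng.normal(size=n)  # unobserved: raises costs and deters MA enrollment
penetration = 0.8 * payment - 0.6 * local_shock + rng.normal(size=n)
cost = beta_true * penetration + 1.0 * local_shock + rng.normal(size=n)


def ols_slope(y, x):
    """Slope from an OLS regression of y on x with an intercept."""
    X = np.column_stack([np.ones_like(x), x])
    return np.linalg.lstsq(X, y, rcond=None)[0][1]


# Naive OLS mixes the causal effect with the local shock.
beta_ols = ols_slope(cost, penetration)

# Two-stage least squares: the first stage projects penetration on the payment
# instrument; the second stage regresses cost on the fitted values.
Z = np.column_stack([np.ones(n), payment])
penetration_hat = Z @ np.linalg.lstsq(Z, penetration, rcond=None)[0]
beta_2sls = ols_slope(cost, penetration_hat)

print(f"OLS: {beta_ols:.2f}, 2SLS: {beta_2sls:.2f}")
```

Running the second stage by hand gives the correct 2SLS point estimate but not valid standard errors; inference requires an IV routine that accounts for the generated regressor.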

We begin by using the benchmark payment rate (described in Section II.C) as an instrument for penetration:

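$$
\text{MA Penetration}_{i,t-1} = \pi_i + \lambda\,\text{Benchmark}_{i,t-1} + X_{it}'\phi + \text{Year}_t'\mu + Z_{ijt}'\psi + u_{ijt} \tag{3}
$$

where $\text{Benchmark}_{i,t-1}$ is the MA benchmark payment rate for area $i$, $\pi_i$ is an area fixed effect, and the remaining terms are as defined for equation (1).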

The validity of our IV approach rests on the assumption that changes in payment rates for MA plans are not correlated with contemporaneous changes in TM costs or outcomes (except through their effect on MA enrollment). Under this assumption, payment-driven changes in MA enrollment are independent of local costs and market features, and the resulting estimates can be interpreted as causal effects. We also estimate a first stage with year-specific payment instruments:

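$$
\text{MA Penetration}_{i,t-1} = \pi_i + \sum_{s}\lambda_s\,\big(\text{Benchmark}_{i,t-1}\times \mathbf{1}[t=s]\big) + X_{it}'\phi + \text{Year}_t'\mu + Z_{ijt}'\psi + u_{ijt} \tag{4}
$$

where $\mathbf{1}[t=s]$ indicates year $s$, $\pi_i$ is an area fixed effect, and the remaining terms are as defined for equation (1).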

There may be some concern about our assumption that payment rates are unrelated to local spending on TM FFS enrollees, since TM costs are an element of the benchmark calculation. These TM costs enter with a substantial lag (a 5-year moving average, lagged 3 years), but if there were strong serial correlation in growth rates (not just levels), that assumption would be problematic. Previous research finds no evidence of serial correlation in spending growth in TM.(30) We see little correlation in our data between MA payment increases and lagged TM costs: a regression of county-level payment growth (in log real dollars) on the 5-year growth in TM costs (in log real expenditures) from 3 years earlier yields a small and statistically insignificant coefficient (−0.003, standard error 0.008). One might still be concerned that payment increases are systematically related to other unmeasured county traits, creating omitted variable bias, but the correlations between payment changes and observed cost-related county traits, such as hospital beds per capita, physicians per capita (in aggregate and by specialty category), and managed care penetration, are also all small (all below 0.06).8 It thus seems reasonable to treat the correlation between current payment changes (which are based on lagged cost increases) and contemporaneous cost changes as near zero.
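The falsification check described above amounts to a bivariate regression with a conventional standard error. The sketch below shows that computation on simulated county data in which, by construction, payment growth is unrelated to lagged TM cost growth; the data, distributions, and the helper name `slope_and_se` are hypothetical.

```python
import numpy as np


def slope_and_se(y, x):
    """OLS slope of y on x (with intercept) and its conventional standard error."""
    X = np.column_stack([np.ones_like(x), x])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    dof = len(y) - X.shape[1]
    sigma2 = resid @ resid / dof
    cov = sigma2 * np.linalg.inv(X.T @ X)
    return coef[1], float(np.sqrt(cov[1, 1]))


# Hypothetical county data: payment growth drawn independently of lagged TM cost growth.
rng = np.random.default_rng(2)
n_counties = 2_000
lagged_tm_growth = rng.normal(0.25, 0.10, n_counties)  # 5-year log growth ending 3 years earlier
payment_growth = rng.normal(0.03, 0.05, n_counties)    # current-year log payment growth
slope, se = slope_and_se(payment_growth, lagged_tm_growth)
print(f"coefficient {slope:.3f} (standard error {se:.3f})")
```

A coefficient that is small relative to its standard error, as in the estimate reported above, is what the identifying assumption predicts.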

Nevertheless, we test the robustness of our findings to alternative instruments based on a simulated benchmark payment that is purged of variation in (even lagged) TM FFS costs. Using CMS data on the individual elements, we construct a benchmark from all elements of the actual formula except the TM cost component. We also use a benchmark constructed at the state, rather than county, level to capture the regional factors that may drive insurer entry and plan-offering decisions.
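One plausible way to build such a simulated benchmark is to roll it forward using only the non-TM-cost elements of the formula. The sketch below is our assumption about the construction, not necessarily the paper's: it drops both the county TM-cost term and the blend (which also contains county TM costs), applies each update to the previous *simulated* value rather than the actual prior benchmark, and starts from a hypothetical base-year value.

```python
def simulate_benchmarks(base_value, floors, national_growth):
    """Roll a benchmark forward using only non-TM-cost elements of the formula.

    base_value: starting benchmark (hypothetical). floors and national_growth:
    dicts keyed by year. Each year's value is the maximum of the floor, the
    national-growth update, and the minimum 2% update, applied to the previous
    simulated value so that county TM costs never enter.
    """
    path = {}
    prior = base_value
    for year in sorted(floors):
        value = max(floors[year], prior * (1.0 + national_growth[year]), prior * 1.02)
        path[year] = value
        prior = value
    return path


path = simulate_benchmarks(600.0, floors={2005: 500.0, 2006: 500.0},
                           national_growth={2005: 0.05, 2006: 0.01})
print(path)
```

Carrying the simulated value forward matters: reusing the actual prior benchmark would reintroduce lagged county TM costs through the update terms.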

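$$
\text{MA Penetration}_{i,t-1} = \pi_i + \lambda\,\widetilde{\text{Benchmark}}_{i,t-1} + X_{it}'\phi + \text{Year}_t'\mu + Z_{ijt}'\psi + u_{ijt} \tag{5}
$$

where $\widetilde{\text{Benchmark}}_{i,t-1}$ is the simulated (or state-level) benchmark, $\pi_i$ is an area fixed effect, and the remaining terms are as defined for equation (1).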

Another concern with using changes in MA payment rates as a source of exogenous variation in MA penetration is that new enrollees may differ systematically from existing ones. For example, if higher payment rates induce MA plans to seek new enrollees by offering benefits that appeal to healthier TM enrollees, then MA plan expansion could leave the TM pool sicker.(40, 41) Observed differences in treatments and outcomes for TM enrollees would then combine selection and spillovers, so any selection of this type would bias our estimates against finding cost-reducing spillovers.
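The direction of this bias can be seen in a deliberately extreme simulation. In the hypothetical sketch below, MA enrolls exactly the healthiest beneficiaries and cost per unit of risk is held fixed, so there is no spillover at all; average TM cost nonetheless rises as MA penetration grows, which would mask any genuine cost reductions. The risk distribution and cost scale are invented.

```python
import numpy as np

rng = np.random.default_rng(3)
risk = rng.lognormal(mean=0.0, sigma=0.5, size=100_000)  # hypothetical risk scores
COST_PER_UNIT_RISK = 10_000.0  # held fixed: no spillover in this simulation


def tm_mean_cost(ma_share: float) -> float:
    """Mean TM cost if MA enrolls exactly the healthiest `ma_share` of beneficiaries."""
    if ma_share <= 0:
        return COST_PER_UNIT_RISK * risk.mean()
    cutoff = np.quantile(risk, ma_share)
    return COST_PER_UNIT_RISK * risk[risk > cutoff].mean()


for share in (0.0, 0.1, 0.2):
    print(share, round(tm_mean_cost(share)))
```

This is a worst case; the studies cited below suggest that the actual shift in TM case mix from payment-driven expansions is small.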

Evidence from recent studies suggests this kind of selection bias is likely to be small. While MA plans appear to attract healthier enrollees on average, (42–45) the change in average case mix in TM in response to payment-change-driven expansions, the relevant value for our purposes, appears small. Mello et al.(46) find that MA penetration does not affect the distribution of risks in TM. Chernew, DeCicca and Town find no evidence for differential patient severity in observables for TM beneficiaries due to within-county variation in penetration.(47) Additional recent work finds that increased county-level MA penetration has no appreciable effect on the risk scores of beneficiaries in TM. (48–50)

We employ several strategies to assess this potential bias. First, we study the effect of increases in MA penetration not only on TM enrollees but also on non-Medicare enrollees in commercial plans. This population is not subject to selection between TM and MA, so any observed effect can be interpreted as a spillover. Second, we examine population-based outcomes at the county level, which are also insensitive to selection between plans. Third, we examine how observable risk factors affect our estimated spillovers, to help gauge the likelihood that unobservable factors exert a substantial influence. To the extent that results are sensitive, estimated changes in the average risk score could be used to assess the magnitude of the bias.

IV. Data

We use several data sources to implement this empirical strategy; the data are summarized in Table 1. In 2009, the average cost of a hospitalization in our sample was $12,422 and the average length of stay was 5.4 days. Over 1999–2009, about 40% of all hospitalized patients in our sample were insured by Medicare, 28% by commercial insurers, and 13% by Medicaid.

Table 1.

Summary Statistics

| | 1999–2009 | 2009 | | 1999–2009 | 2009 |
|---|---|---|---|---|---|
| Hospitalizations (Patient-level) | | | MA (County-level, Unweighted) | | |
| Cost (dollars) | 11,258 (17,906) | 12,422 (18,653) | MA Penetration (share) | 0.0726 (0.106) | 0.147 (0.116) |
| Length of Stay (days) | 5.701 (8.821) | 5.423 (8.422) | MA HMO Penetration (share) | 0.0403 (0.0906) | 0.0459 (0.0924) |
| Died During Hospitalization (share) | 0.0304 (0.172) | 0.0260 (0.159) | Benchmark Payment (dollars) | 672.4 (105.4) | 795.6 (76.09) |
| PQI (share) | 0.143 (0.350) | 0.133 (0.340) | Simulated Benchmark (dollars) | 646.3 (91.98) | 788.8 (70.16) |
| PSI (share) | 0.0184 (0.134) | 0.0131 (0.114) | | | |
| Per Person, County level | | | Insurance Among Hospitalized (share) | | |
| Cost (dollars) | 1,203 (366.0) | 1,348 (367.1) | Medicare | 0.396 (0.489) | 0.375 (0.484) |
| Number of Hospitalizations | 0.119 (0.0312) | 0.121 (0.0317) | Medicaid | 0.129 (0.335) | 0.143 (0.350) |
| Total Days in Hospital | 0.616 (0.174) | 0.650 (0.188) | Commercially Insured | 0.281 (0.450) | 0.262 (0.440) |
| Mortality in Hospital (share) | 0.00355 (0.0012) | 0.00306 (0.00104) | Self-Pay | 0.0433 (0.203) | 0.0461 (0.210) |
| PQI (visits) | 0.0171 (0.0075) | 0.0156 (0.00611) | | | |

Note: Means, with standard deviations in parentheses. Data are from the Healthcare Cost and Utilization Project's State Inpatient Databases for NY, MA, AZ, FL, and CA for 1999–2009 and from the Medicare enrollment files for 1998–2009. PQIs are admissions for ambulatory care sensitive conditions, as described in the text. N = 16.8 million for hospitalizations (20% HCUP sample); N = 2,192 for counties (based on 100% HCUP). Costs are calculated as total charges times the hospital cost-to-charge ratio, which is calculated from the yearly Medicare Hospital Cost Report file.

A. MA Payments and Enrollment

We use data from CMS to quantify payment rates and plan characteristics for 1999–2009. County-level payment rates come from the Medicare Rate Book and the State/County/Plan Database. Enrollment data come from the CMS State/County/Plan Enrollment Data File. Figure 1 shows substantial variation in MA penetration both within and between states: in 2007, for example, about 32% of the variation in county-level MA penetration was accounted for by between-state variation and 68% by within-state variation. Figure 2 shows the distribution of plan types over time. Over the sample period, 64% of MA enrollees were enrolled in HMO plans, 31% in PFFS plans, and 5% in PPO plans. There is also substantial variation in payment rates. In 1999 payment rates varied two-fold across counties, with MA plans in New York, NY, receiving $750 a month and those in "floor" counties such as Essex, VT, receiving $379. Under the BBA this variation across counties decreased, from a standard deviation of $50 in 1999 to $30 in 2003. The MMA payment regime reversed this trend starting in 2004, and by 2009 the standard deviation in payment rates was $80, with a maximum of $1,365 and a minimum of $740.
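The between-state and within-state shares quoted above come from a standard sum-of-squares decomposition. The sketch below shows that calculation on a tiny invented dataset; the helper name is hypothetical, and it weights counties equally (whether the paper weights by beneficiaries is not stated here).

```python
from collections import defaultdict
from statistics import fmean


def between_within_shares(values_by_county):
    """values_by_county: list of (state, penetration) pairs, one per county.

    Returns (between_share, within_share) of the total sum of squares.
    """
    grand_mean = fmean(v for _, v in values_by_county)
    by_state = defaultdict(list)
    for state, v in values_by_county:
        by_state[state].append(v)
    state_means = {s: fmean(vs) for s, vs in by_state.items()}
    total_ss = sum((v - grand_mean) ** 2 for _, v in values_by_county)
    between_ss = sum(len(vs) * (state_means[s] - grand_mean) ** 2 for s, vs in by_state.items())
    within_ss = total_ss - between_ss
    return between_ss / total_ss, within_ss / total_ss


# Hypothetical counties in two states.
counties = [("A", 0.1), ("A", 0.3), ("B", 0.2), ("B", 0.4)]
print(between_within_shares(counties))
```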

B. Healthcare Cost and Utilization Project’s (HCUP) State Inpatient Databases (SID)

For selected states, the SID includes the universe of all discharges, including information on insurance provider and type of plan. We use data for Florida, New York, California, Arizona, and Massachusetts. There are several advantages to using these states. First, at least 15 percent of Medicare beneficiaries in each of these states are enrolled in Medicare Advantage, ranging from 15% in Massachusetts to 31% in California. In California, for example, more than 30% of the almost 4 million hospital discharges in 2004 were attributable to Medicare patients, and 25% of those were for patients in a Medicare Advantage plan. Together, the Medicare Advantage enrollees in these 5 states make up 49% of all Medicare Advantage enrollees nationally (excluding U.S. territories). There is also substantial variation in county-level MA penetration within these states: only 9% of the variation in county Medicare Advantage enrollment in these states is attributable to between-state variation, leaving 91% within states. Second, each of these states reports whether Medicare enrollees are in TM or an MA plan. Third, in addition to the patient's zip code of residence, each of these states reports hospital identifiers that can be matched with American Hospital Association data. While each state's health care system, resources, and population differ, these states are reasonably representative of the nation as a whole: as shown in Table 2, Medicare expenditures and most population characteristics in the 5 states are similar to national averages, although the 5 states have a larger Hispanic population share (23% vs. 13%) and more specialists per capita (10.2 vs. 8.5 per 10,000).

Table 2.

5 HCUP States vs All 50 States

| | All 50 States | 5 HCUP States |
|---|---|---|
| Medicare Expenditures, 2003–2009 ($) | | |
| Total Expenditures | 8,633 | 8,852 |
| Hospital Expenditures | 4,314 | 4,247 |
| Physician Expenditures | 2,421 | 2,833 |
| Hospital Outpatient Expenditures | 906 | 790 |
| Area Resource File Covariates, 1999–2009 | | |
| % Female | 51 | 51 |
| % White | 81 | 79 |
| % Black | 13 | 11 |
| % Hispanic | 13 | 23 |
| % Below Poverty Level | 13 | 13 |
| % Unemployed | 5 | 5 |
| Per Capita Income | 37,755 | 40,678 |
| % Over 65 | 13 | 13 |
| GPs / 10,000 pop | 2.9 | 2.5 |
| Specialists / 10,000 pop | 8.5 | 10.2 |
| Surgeons / 10,000 pop | 5.1 | 5.5 |

5 HCUP states include NY, MA, AZ, FL and CA. All dollar figures are in 2009 dollars. Medicare expenditure data from the Dartmouth Atlas.

The HCUP data report total inpatient facility charges, which can be converted into costs by multiplying the charge amounts by the hospital's cost-to-charge ratio. The cost-to-charge ratio is calculated annually for each hospital using information from the hospital's Medicare Cost Reports, and the resulting costs do not include the professional fees paid to physicians. Importantly, this measure of cost does not capture variation in traditional Medicare expenditures, because hospitals are paid a fixed amount per admission based on the patient's condition. The HCUP data also allow calculation of two different measures of quality of care developed by AHRQ. The first, Patient Safety Indicators, measure the quality of hospital care that patients receive by assessing the presence of 20 adverse events, including complications of anesthesia, post-operative sepsis, iatrogenic pneumothorax, and death in low-mortality DRGs. These patient outcomes were designed to be sensitive to quality of care in the hospital. The second, Prevention Quality Indicators, assess access to and quality of primary care services that can reduce the risk of hospitalization. These indicators, based on hospital inpatient discharge data, calculate the prevalence of 14 "ambulatory care sensitive" conditions that serve as markers of access to outpatient care and its quality.(51)
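
The charge-to-cost conversion described above is a single multiplication per discharge. The sketch below assumes a hypothetical lookup table keyed by (hospital ID, year); the identifiers and ratio values are made up for illustration and are not actual cost-report figures.

```python
import math

# Hypothetical hospital-year cost-to-charge ratios (CCRs); in the paper these are
# computed annually for each hospital from its Medicare Cost Reports.
CCR = {("H001", 2005): 0.32, ("H002", 2005): 0.45}


def facility_cost(total_charges, hospital_id, year, ccr=CCR):
    """Estimated inpatient facility cost = total charges x hospital-year CCR.

    As noted in the text, the result excludes professional fees paid to physicians.
    """
    return total_charges * ccr[(hospital_id, year)]


def log_cost(total_charges, hospital_id, year, ccr=CCR):
    """Natural log of estimated cost, the outcome in the cost regressions."""
    return math.log(facility_cost(total_charges, hospital_id, year, ccr))


# The same $50,000 in charges implies different costs at hospitals with different CCRs
print(facility_cost(50_000, "H001", 2005))
print(facility_cost(50_000, "H002", 2005))
```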

C. Area Resource File

The ARF provides county-level economic and demographic covariates by year, which can be merged based on patient or hospital county identifiers. The ARF also provides county-level aggregated hospital characteristics, including the number and type of providers (such as the number of general practitioners, the number of specialists, the number of registered nurses, etc.) and hospital capacity (the number of beds, the number of intensive care unit beds, etc.).

V. Results

A. First Stage

Table 3 shows the results of our first-stage estimation. All regressions include year fixed effects, hospital fixed effects (county fixed effects for the county-level aggregates), and other covariates. Standard errors are clustered on county here and in all specifications in subsequent tables; because this is a conservative choice, Table 3 also reports results clustered at the hospital level. We show results at the hospitalization level as well as aggregated to the county level for the counties in our 5-state HCUP sample. The "payment*year" column aggregates the year-specific coefficients to show a comparable average.

Table 3.

First Stage

| Outcome: | Hospitalization-Level Penetration | | | | | | | | County-Level Penetration | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| IV: | Payment | Payment*Year | Simulated County Benchmark | Simulated State Benchmark | Payment | Payment*Year | Simulated County Benchmark | Simulated State Benchmark | Payment | Payment*Year | Simulated County Benchmark | Simulated State Benchmark |
| Payment | .032913*** | .0474*** | .0523*** | .189*** | .032913*** | .0474*** | .0523*** | .189*** | .0334*** | .045*** | .0506*** | .1405*** |
| | (.0112644) | (.0170) | (.0142) | (.002) | (.00544) | (.0067) | (.00773) | (.0173) | (.0107) | (.0125) | (.0129) | (.0232) |
| Covariates | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Hospital FEs | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No | No | No | No |
| County FEs | No | No | No | No | No | No | No | No | Yes | Yes | Yes | Yes |
| Year FEs | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Cluster | County | County | County | County | Hospital | Hospital | Hospital | Hospital | County | County | County | County |
| Observations | 13,678,534 | 13,678,534 | 13,678,534 | 13,678,534 | 13,678,534 | 13,678,534 | 13,678,534 | 13,678,534 | 2,376 | 2,376 | 2,376 | 2,376 |
| R-squared | .4825 | .51 | .4614 | .51 | .4825 | .51 | .4614 | .51 | .445 | .465 | .465 | .4921 |
| F-stat | 8.54 | 4.03 | 13.66 | 24.51 | 36.66 | 22.2 | 45.85 | 119.5 | 9.7 | 4.9 | 15.3 | 36.6 |

Note: Dependent variable is MA Managed Care penetration, defined as MA HMO penetration + MA PPO penetration, with units in percentages. Individual level covariates are age, sex, race, and type of insurance. Hospital level covariates are teaching hospital status, for-profit status, and number of beds. County level covariates are population size, % in poverty, % unemployed, per capita income, % male, % white, % black, % Hispanic, % pop under 15, % aged 15–19, % aged 20–24, % aged 25–44, % aged 45–64, % aged 65 and older, # of general practitioners per capita, # of specialists per capita, # of surgeons per capita, # of other physicians per capita. Robust standard errors in parentheses (clustered on county unless the Cluster row indicates hospital).

*** p<0.01, ** p<0.05, * p<0.1.

Data are from Healthcare Cost and Utilization Project’s state inpatient database for NY, MA, AZ, FL, and CA (20% sample) for 1999–2009 and from the Medicare enrollment files for 1998–2009. The “payment*year” column aggregates the year-specific coefficients to show a comparable average.

The results suggest that an increase in the benchmark payment of $100 (about 1 standard deviation) increases penetration by about 3–5 percentage points (about .3 standard deviations) using the payment and simulated county benchmark instruments, all significant at the p<0.01 level. Previous studies have found that a $100 increase in payment rates increases penetration by around 1 to 3.4 percentage points, but most of these studies do not include fixed effects in their regression models (52–54).
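
With a single excluded instrument, the first-stage F-statistic is the square of the instrument's t-statistic. This means the single-instrument Payment columns of Table 3 can be checked against their coefficients and clustered standard errors. The Payment*Year columns use a full set of year interactions, so their F-statistics are joint tests and cannot be checked this way. The sketch below does this arithmetic and converts the per-dollar coefficient into the effect of a $100 increase in the monthly benchmark. The function name is illustrative.

```python
def first_stage_check(coef_per_dollar, se):
    """Return (pp change for +$100, t-statistic, implied F with one instrument).

    coef_per_dollar: first-stage coefficient of MA managed care penetration
    (percentage points) on the monthly benchmark payment (dollars).
    """
    t = coef_per_dollar / se
    return 100 * coef_per_dollar, t, t ** 2


# Single-instrument, county-clustered Payment columns of Table 3:
# (coefficient, standard error, reported F)
table3 = {
    "Hospitalization level, Payment": (0.032913, 0.0112644, 8.54),
    "County level, Payment": (0.0334, 0.0107, 9.7),
}
for name, (coef, se, f_reported) in table3.items():
    effect, t, f_implied = first_stage_check(coef, se)
    print(f"{name}: +$100 -> {effect:.1f} pp, t = {t:.2f}, "
          f"t^2 = {f_implied:.2f} (reported F = {f_reported})")
```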

Results using a full set of payment-year dummy interactions produce a similar average value (4.7 percentage points). Alternative specifications are shown for comparison, including using the county- and state-level simulated benchmark payments that abstract from lagged TM costs, as described above. These produce similar responses, although changes in state-wide payments produce larger changes in county penetration.

B. Hospitalization-Level Outcomes

We begin by analyzing inpatient outcomes at the individual level. Recall that this will only capture costs and care conditional on having been admitted to the hospital. Table 4 shows the effect of MA penetration on the natural log of hospital costs. We show OLS and IV results, using our preferred specification of year-specific payment rates as instruments (robustness to alternative specifications is shown below). The first panel shows results for all hospitalizations in our 5-state sample, and includes specifications with and without individual-level health risk adjusters (HCCs). The next panels show results broken down by the insurance status of the inpatient. These regressions are run on a 20% sample of the full HCUP (comprising more than 13.5 million admissions). Subsequent tables follow this format.

Table 4.

Effect of Penetration on Log Hospitalization Costs

| Dep. Var.: Log Costs | Full Sample | | | | Traditional Medicare FFS | | MA | | Commercial | |
|---|---|---|---|---|---|---|---|---|---|---|
| | OLS | OLS | IV Payment*Year | IV Payment*Year | OLS | IV Payment*Year | OLS | IV Payment*Year | OLS | IV Payment*Year |
| MA Managed Care Penetration | −0.0027*** | −0.00239*** | −0.0051** | −0.0047** | −0.0025*** | −0.0045** | −0.0029*** | −0.00204 | −0.0026*** | −0.0042** |
| | (0.00075) | (0.00066) | (0.00200) | (0.0019) | (0.00060) | (0.0019) | (0.0010) | (0.00263) | (0.00056) | (0.0017) |
| Risk Adjusters | No | Yes | No | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Covariates | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Hospital FEs | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Year FEs | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Observations | 13,678,534 | 13,678,534 | 13,678,534 | 13,678,534 | 5,813,942 | 5,813,942 | 1,253,227 | 1,253,227 | 3,632,805 | 3,632,805 |
| R-squared | 0.031 | 0.1822 | 0.030 | 0.182 | 0.154 | 0.154 | 0.190 | 0.190 | 0.212 | 0.212 |

Note: Costs are in real 2009 dollars. MA Managed Care Penetration is in percentages (ranging from 0 to 100). Individual level covariates are age, sex, race, and type of insurance. Hospital level covariates are teaching hospital status, for-profit status, and number of beds. County level covariates are population size, % in poverty, % unemployed, per capita income, % male, % white, % black, % Hispanic, % pop under 15, % aged 15–19, % aged 20–24, % aged 25–44, % aged 45–64, % aged 65 and older, # of general practitioners per capita, # of specialists per capita, # of surgeons per capita, # of other physicians per capita. Robust standard errors in parentheses (clustered on county).

*** p<0.01, ** p<0.05, * p<0.1.

Data are from Healthcare Cost and Utilization Project’s state inpatient database for NY, MA, AZ, FL, and CA for 1999–2009 (20% sample) and from the Medicare enrollment files for 1998–2009.

The first panel of Table 4 suggests that a 10 percentage point increase in MA penetration yields a 2.4% decline in hospitalization costs (not necessarily Medicare spending) in the OLS specification, but a 4.7% decline in the IV specification. The gap is consistent with penetration rising endogenously in higher-cost areas, which would bias the OLS estimate toward zero. Results are robust to the inclusion or exclusion of individual-level risk adjusters, suggesting that selection on unmeasured risk is unlikely to be driving results. The next panels look at subsets based on insurance status. In areas with higher MA penetration, cost per hospitalization is lower for TM enrollees and for the under-65 commercially insured. In the IV specifications, the effect of MA penetration on hospital costs of MA patients is roughly half the size of the effect for TM and commercial patients and is not statistically significant. This is consistent with MA patients receiving less intensive care that eventually spills over to lower costs for other patients: the average (regression-adjusted) cost of an MA patient's admission is $10,700, compared with $11,400 for a TM patient.
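
The direction of the OLS bias can be illustrated with a small simulation. The sketch below assumes a hypothetical data-generating process in which an unobserved area cost level raises both MA penetration and log costs, while the payment instrument shifts penetration only. All parameter values are illustrative assumptions. This is not the paper's estimation code, which also includes hospital and year fixed effects, covariates, and year-specific payment instruments. With one instrument and centered variables, the IV slope reduces to cov(z, y) / cov(z, x).

```python
import numpy as np


def ols_vs_iv(n=200_000, beta=-0.0047, seed=0):
    """Simulate hypothetical data in which an unobserved area cost level raises
    both MA penetration and hospital log costs, while the payment instrument
    shifts penetration only. Returns (OLS slope, IV slope) of log cost on
    penetration. All parameter values are illustrative assumptions."""
    rng = np.random.default_rng(seed)
    payment = rng.normal(0.0, 100.0, n)      # benchmark payment deviation, $
    area_cost = rng.normal(0.0, 1.0, n)      # unobserved confounder
    penetration = 0.047 * payment + 5.0 * area_cost + rng.normal(0.0, 5.0, n)  # pp
    log_cost = beta * penetration + 0.02 * area_cost + rng.normal(0.0, 0.5, n)

    # Center the variables so slopes are ratios of (co)variances
    x = penetration - penetration.mean()
    y = log_cost - log_cost.mean()
    z = payment - payment.mean()
    ols = float(x @ y / (x @ x))
    iv = float(z @ y / (z @ x))   # just-identified IV (Wald) estimator
    return ols, iv


ols, iv = ols_vs_iv()
print(f"true beta -0.0047, OLS {ols:.4f}, IV {iv:.4f}")
```

Because the confounder pushes penetration and costs in the same direction, OLS lands closer to zero than the true effect, while the instrument-based estimate recovers it.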

The magnitude of these spillovers is consistent with findings of previous studies. Baker finds that a 10% increase in MA penetration is associated with a 4.5% decrease in Part A TM expenditures, the same magnitude as our 4.5% decrease in TM hospitalization costs. Chernew et al. find a 10% increase in MA penetration is associated with a larger 9% decrease in TM expenditures.
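
The percentages quoted in this section read ten times a log-cost coefficient as a percent change (a log-point approximation). For coefficients this small, the approximation is close to the exact change, exp(10β) − 1. The sketch below computes both for the IV coefficients in Table 4. The helper name is illustrative.

```python
import math


def pct_change(coef_per_pp, delta_pp=10.0):
    """Return (log-point approximation, exact % change) in costs implied by a
    log-cost coefficient for a delta_pp percentage-point rise in penetration."""
    log_points = coef_per_pp * delta_pp
    return 100 * log_points, 100 * math.expm1(log_points)


for label, coef in [("Full sample, IV", -0.0047), ("Traditional Medicare, IV", -0.0045)]:
    approx, exact = pct_change(coef)
    print(f"{label}: {approx:.1f}% (log points) vs {exact:.2f}% (exact)")
```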

Table 5 shows the effect of MA penetration on length of stay. A 10 percentage point increase in MA penetration has no significant effect on length of stay in the full-sample OLS specification, but the IV regressions suggest a shortening of approximately .2 days (compared with an average length of stay of about 5 days). Here, too, the reduction is seen system-wide, across both TM and commercially insured patients. The IV estimate for MA patients is smaller in magnitude than that for TM patients and only marginally significant (p<0.1), consistent with lower-intensity treatment of MA patients eventually spilling over to other patients. The average (regression-adjusted) length of stay for an MA patient is 5.6 days, compared with 6.3 for a TM patient.
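
The implied changes and significance levels discussed above can be recomputed from the Table 5 IV coefficients and standard errors. The sketch below uses a normal approximation for two-sided p-values, 2(1 − Φ(|z|)) = erfc(|z|/√2). The paper's reported significance may rest on slightly different critical values, so these p-values are approximate.

```python
import math


def implied_change_and_p(coef, se, delta_pp=10.0):
    """Change in the outcome for a delta_pp-point rise in MA penetration, the z-statistic,
    and a two-sided p-value from the normal approximation, 2 * (1 - Phi(|z|))."""
    z = coef / se
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return coef * delta_pp, z, p


# IV (payment*year) length-of-stay estimates from Table 5: (coefficient, SE)
table5_iv = {
    "Full sample": (-0.0231, 0.0092),
    "Traditional Medicare": (-0.0309, 0.014),
    "MA": (-0.0216, 0.013),
    "Commercial": (-0.0186, 0.0053),
}
for group, (coef, se) in table5_iv.items():
    days, z, p = implied_change_and_p(coef, se)
    print(f"{group}: {days:+.2f} days per 10 pp, z = {z:.2f}, p = {p:.3f}")
```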

Table 5.

Effect of MA Penetration on Length of Hospital Stay

| Dep. Var.: LOS | Full Sample | | | | Trad. Medicare FFS | | MA | | Commercial | |
|---|---|---|---|---|---|---|---|---|---|---|
| | OLS | OLS | IV Payment*Year | IV Payment*Year | OLS | IV Payment*Year | OLS | IV Payment*Year | OLS | IV Payment*Year |
| MA Managed Care Penetration | −.0037 | −.0030 | −.018** | −.0231** | −.00281 | −.0309** | −.00783** | −.0216* | −.00625*** | −.0186*** |
| | (0.0028) | (0.0023) | (0.0075) | (0.0092) | (0.0033) | (0.014) | (0.0035) | (0.013) | (0.0018) | (0.0053) |
| Risk Adjusters | No | Yes | No | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Covariates | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Hospital FEs | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Year FEs | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Observations | 13,678,534 | 13,678,534 | 13,678,534 | 13,678,534 | 5,813,942 | 5,813,942 | 1,253,227 | 1,253,227 | 3,632,805 | 3,632,805 |
| R-squared | 0.013 | 0.1019 | 0.013 | 0.098 | 0.087 | 0.087 | 0.085 | 0.085 | 0.108 | 0.108 |

Note: LOS is in days. MA Managed Care Penetration is in percentages. Individual level covariates are age, sex, race, and type of insurance. Hospital level covariates are teaching hospital status, for-profit status, and number of beds. County level covariates include population, % in poverty, % unemployed, per capita income, % male, % white, % black, % Hispanic, % pop under 15, % aged 15–19, % aged 20–24, % aged 25–44, % aged 45–64, % aged 65 and older, # of general practitioners per capita, # of specialists per capita, # of surgeons per capita, # of other physicians per capita. Robust standard errors in parentheses (clustered on county).

*** p<0.01, ** p<0.05, * p<0.1.

Data are from Healthcare Cost and Utilization Project’s state inpatient database for NY, MA, AZ, FL, and CA (20% sample) for 1999–2009 and from the Medicare enrollment files for 1998–2009.

Table 6 presents robustness checks, including restricting the commercially insured sample to those aged 45–64 and using alternative instruments in the first stage (the payment*year specification for each sample reproduces the main results shown in Tables 4 and 5). Point estimates are generally similar in sign. They are often not statistically significant when we use the annual simulated county-level benchmark instruments, and they are larger in magnitude with the simulated state benchmark. We also see similar spillovers over time, across refinements of the risk adjustment regime.9 Furthermore, we find no statistically significant effect of the squared term in specifications that include both penetration and its square.

Table 6.

Robustness

| Sample | Instrument | Panel A: Log Total Costs | R-squared | Panel B: Length of Stay | R-squared | Observations |
|---|---|---|---|---|---|---|
| Full Sample | Payment*Year | −0.00471** (0.00194) | 0.182 | −0.0231** (0.00921) | 0.098 | 13,678,534 |
| Full Sample | Payment | −0.00367 (0.00344) | 0.182 | −0.0289** (0.0136) | 0.102 | 13,678,534 |
| Full Sample | Sim. County Benchmark*Year | −0.00621 (0.00510) | 0.182 | −0.0127 (0.0132) | 0.102 | 13,678,534 |
| Full Sample | Sim. State Benchmark | −0.0143*** (0.00349) | 0.180 | −0.0563*** (0.0139) | 0.101 | 13,678,534 |
| Traditional Medicare FFS | Payment*Year | −0.00451** (0.00186) | 0.154 | −0.0309** (0.0135) | 0.087 | 5,813,942 |
| Traditional Medicare FFS | Payment | −0.00449 (0.00282) | 0.155 | −0.0378** (0.0185) | 0.089 | 5,813,942 |
| Traditional Medicare FFS | Sim. County Benchmark*Year | −0.00611 (0.00521) | 0.154 | −0.0156 (0.0225) | 0.090 | 5,813,942 |
| Traditional Medicare FFS | Sim. State Benchmark | −0.0147*** (0.00329) | 0.152 | −0.0788*** (0.0211) | 0.088 | 5,813,942 |
| MA | Payment*Year | −0.00204 (0.00263) | 0.190 | −0.0216* (0.0128) | 0.085 | 1,253,227 |
| MA | Payment | −0.0118** (0.00490) | 0.190 | −0.0314* (0.0180) | 0.091 | 1,253,227 |
| MA | Sim. County Benchmark*Year | 0.00327 (0.00331) | 0.190 | −0.0181 (0.0178) | 0.091 | 1,253,227 |
| MA | Sim. State Benchmark | −0.0118** (0.00460) | 0.190 | −0.0325** (0.0139) | 0.091 | 1,253,227 |
| Commercial | Payment*Year | −0.00420** (0.00174) | 0.212 | −0.0186*** (0.00533) | 0.108 | 3,632,805 |
| Commercial | Payment | −0.00352 (0.00290) | 0.212 | −0.0116* (0.00657) | 0.113 | 3,632,805 |
| Commercial | Sim. County Benchmark*Year | −0.00482 (0.00431) | 0.212 | −0.0249** (0.0110) | 0.113 | 3,632,805 |
| Commercial | Sim. State Benchmark | −0.0117*** (0.00333) | 0.210 | −0.0360*** (0.00956) | 0.113 | 3,632,805 |
| Commercial 45–64 | Payment*Year | −0.00320* (0.00174) | 0.212 | −0.0192*** (0.00553) | 0.212 | 1,981,146 |
| Commercial 45–64 | Payment | −0.00353 (0.00276) | 0.216 | −0.0108 (0.00687) | 0.119 | 1,981,146 |
| Commercial 45–64 | Sim. County Benchmark*Year | −0.00224 (0.00404) | 0.216 | −0.0289** (0.0113) | 0.119 | 1,981,146 |
| Commercial 45–64 | Sim. State Benchmark | −0.0106*** (0.00316) | 0.215 | −0.0371*** (0.00938) | 0.119 | 1,981,146 |

Note: Cells report the coefficient on MA Managed Care Penetration. Individual level covariates are age, sex, race, and type of insurance. Hospital level covariates are teaching hospital status, for-profit status, and number of beds. County level covariates include population, % in poverty, % unemployed, per capita income, % male, % white, % black, % Hispanic, % pop under 15, % aged 15–19, % aged 20–24, % aged 25–44, % aged 45–64, % aged 65 and older, # of general practitioners per capita, # of specialists per capita, # of surgeons per capita, # of other physicians per capita. Robust standard errors in parentheses (clustered on county).

*** p<0.01, ** p<0.05, * p<0.1.

Data are from Healthcare Cost and Utilization Project's state inpatient database for NY, MA, AZ, FL, and CA (20% sample) for 1999–2009 and from the Medicare enrollment files for 1998–2009.

C. County-Level Outcomes

Table 7 aggregates the hospitalization-level data to the county level (based on patients' county of residence). We use these aggregates to gauge hospital utilization and outcomes at the population level. For example, they show how MA penetration affects the rate of hospitalization, which cannot be measured with hospitalization-level data alone. These county-level aggregates for the 215 counties in our 5 states are derived from the full 100% HCUP data. We further decompose population-level outcomes into the population over age 65 (in TM or MA) and the population under age 65 (commercially insured). The over-65 panel thus aggregates all hospitalizations experienced by county residents over age 65 and divides by the number of residents over age 65 to yield a per capita measure. Because the groups are defined by age rather than insurance type, this decomposition is not affected by beneficiaries moving between Medicare insurance types.10
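
The county-level aggregation described above can be sketched as follows. The record fields ('county', 'age', 'los_days') and the population dictionary are hypothetical stand-ins for the HCUP and ARF variables. In the paper, the under-65 group is restricted to the commercially insured; that payer filter is omitted here for brevity.

```python
from collections import defaultdict


def per_thousand_rates(discharges, population):
    """Aggregate discharge records to county x age-group rates per 1,000 residents.

    discharges: iterable of dicts with hypothetical keys 'county' (patient's county
    of residence), 'age', and 'los_days'.
    population: dict mapping (county, group) -> residents, group in {'65+', '<65'}.
    Grouping is by age only, so moves between TM and MA do not shift records
    between groups.
    """
    admissions = defaultdict(int)
    days = defaultdict(float)
    for d in discharges:
        group = "65+" if d["age"] >= 65 else "<65"
        key = (d["county"], group)
        admissions[key] += 1
        days[key] += d["los_days"]

    return {
        key: {
            "admissions_per_1000": 1000 * admissions[key] / residents,
            "days_per_1000": 1000 * days[key] / residents,
        }
        for key, residents in population.items()
    }


records = [
    {"county": "X", "age": 70, "los_days": 5},
    {"county": "X", "age": 80, "los_days": 3},
    {"county": "X", "age": 40, "los_days": 2},
]
pop = {("X", "65+"): 2_000, ("X", "<65"): 8_000}
print(per_thousand_rates(records, pop))
```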

Table 7.

MA Penetration on Population-Level Hospital Use and Outcomes

| | Full Sample | | Over 65 | | Under 65 | |
|---|---|---|---|---|---|---|
| | OLS | IV Payment*Year | OLS | IV Payment*Year | OLS | IV Payment*Year |
| Ln(Cost) | | | | | | |
| MA Managed Care Penetration | −0.00282*** | −0.00678** | −0.00240*** | −0.00412 | −0.00282*** | −0.00813** |
| | (0.000795) | (0.00343) | (0.000785) | (0.00337) | (0.000881) | (0.00356) |
| Days in Hospital per Thousand Residents | | | | | | |
| MA Managed Care Penetration | −0.864** | −4.975** | −1.714 | −8.916 | −0.480 | −2.469** |
| | (0.437) | (1.933) | (1.423) | (5.497) | (0.292) | (1.092) |
| Number of Hospitalizations per Thousand Residents | | | | | | |
| MA Managed Care Penetration | 0.0670 | −0.0804 | 0.308 | 0.748 | 0.0348 | −0.101 |
| | (0.0592) | (0.229) | (0.194) | (0.795) | (0.0360) | (0.133) |
| PQI per Thousand Residents | | | | | | |
| MA Managed Care Penetration | 0.0104 | −0.0909** | 0.0571 | 0.0546 | 0.00855 | −0.0585** |
| | (0.0107) | (0.0446) | (0.0376) | (0.126) | (0.00564) | (0.0261) |

Note: County level covariates include population, % in poverty, % unemployed, per capita income, % male, % white, % black, % Hispanic, % pop under 15, % aged 15–19, % aged 20–24, % aged 25–44, % aged 45–64, % aged 65 and older, # of general practitioners per capita, # of specialists per capita, # of surgeons per capita, # of other physicians per capita. Robust standard errors in parentheses (clustered on county).

*** p<0.01, ** p<0.05, * p<0.1.

Data are from Healthcare Cost and Utilization Project’s state inpatient database for NY, MA, AZ, FL, and CA for 1999–2009 (100% sample) and from the Medicare enrollment files for 1998–2009 aggregated to 215 counties. PQIs are admissions for ambulatory care sensitive conditions as described in text.

The first panel of Table 7 shows lower total hospitalization costs for areas with greater MA penetration. The magnitude of these declines is consistent with that seen at the hospitalization level (with larger point estimates but overlapping confidence intervals). These estimates are consistent with the existing literature but are at the larger end of the range. The second panel explores the effect on total days in the hospital per thousand residents. Here, too, the pattern seen at the population level reflects that seen at the hospitalization level: greater MA penetration is associated with fewer days spent in the hospital overall.

The third panel of Table 7 explores the potential effect on the number of hospitalizations. The full-sample IV estimate shows a statistically insignificant decline (consistent with population-level hospital costs falling by somewhat more than per-hospitalization costs), but it is imprecise enough that substantial changes cannot be ruled out. The bottom panel looks specifically at admissions for ambulatory care sensitive conditions (PQIs). The decline here is similar in magnitude to that in total admissions but is statistically significant, consistent with the avoided admissions being those amenable to better outpatient management.

D. Discussion

The spillovers we find suggest that increasing Medicare Advantage penetration reduces the intensity of care during an inpatient stay, with little effect on the rate of hospitalization. This moves care in TM populations closer to the care patterns seen in MA populations. That convergence is consistent with spillovers operating through norms and practice patterns, or through investment in shared technology or infrastructure. It is less consistent with income targeting or other mechanisms that would imply divergence. The magnitude of such spillovers, which improve overall system performance and could eventually reduce Medicare spending if they facilitate lower payment updates, has implications for optimal payment policy.

We can use the decline in population-level expenditures to gauge the rough magnitude of the spillover effects of MA. For example, using the estimated effect from Table 3, increasing MA monthly payments by $100 (about one standard deviation) would increase the share of beneficiaries in MA by just under 5 percentage points. In the counties represented in the 5-state HCUP sample, this would raise penetration from an average of about 35% in 2009 to about 40%, increasing the number of enrollees in these states by about 400,000. This would raise MA spending by $100 per month for both existing and new enrollees, or almost $5 billion in total for these states. Overall hospital costs are estimated to fall by something like 2% when MA penetration increases by 5 percentage points. Applied to the just under $30 billion in total hospital costs for the TM population remaining in these states (after the implied shift to MA), this is a reduction of about $600 million.11 Hospital costs for those in TM would thus fall by upwards of 10% of the increase in spending on MA.
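
The back-of-envelope arithmetic above can be laid out explicitly. The dollar inputs below are the rounded figures stated in the text. The mapping from "something like 2%" to a specific coefficient is an assumption: multiplying the over-65 IV log-cost coefficient in Table 7 by the roughly 4.7 percentage point penetration change reproduces a figure close to 2%. The text does not state which estimate it used.

```python
def spillover_offset(tm_hospital_base, pct_decline, added_ma_spending):
    """Savings on remaining TM hospital costs and their share of the added MA payments."""
    savings = tm_hospital_base * pct_decline / 100.0
    return savings, savings / added_ma_spending


# Rounded inputs as stated in the text
delta_pp = 100 * 0.0474          # +$100/month x Table 3 payment*year coefficient, ~4.7 pp
# Assumption: the "something like 2%" decline corresponds to the over-65 IV
# log-cost coefficient in Table 7 (-0.00412 per percentage point)
implied_pct = 100 * 0.00412 * delta_pp
savings, share = spillover_offset(tm_hospital_base=30e9, pct_decline=2.0,
                                  added_ma_spending=5e9)
print(f"implied decline {implied_pct:.2f}%, savings ${savings / 1e6:,.0f} million, "
      f"offset {share:.0%} of added MA spending")
```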

It is important to note, however, that while these represent reductions in real resource use (such as fewer days in the hospital), the savings do not all accrue immediately to the Medicare program: Medicare pays hospitals prospectively, based on the “diagnosis-related group” (DRG) with which patients are admitted rather than the individual costs incurred. Some DRGs are in fact defined by treatments delivered as well as underlying conditions, so to the extent that changes in utilization affect the mix of DRGs they will affect Medicare payments more directly. Other changes in hospital costs may eventually affect the prospective payments (as they affect hospital margins that are an input into revisions of the payment structure), but are not immediately recaptured by the program. These substantial offsets nonetheless suggest that optimal payments for MA plans may be higher than models that ignore spillovers might suggest.
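
The payment mechanics described above can be illustrated with a highly simplified prospective payment sketch. The base rate and DRG weights below are hypothetical and are not actual IPPS values. The sketch also ignores the many adjustments in the real system. It shows two things. A cost reduction within the same DRG changes the hospital's margin but not what Medicare pays. A change that moves an admission into a different DRG changes the payment itself.

```python
# Hypothetical base rate and DRG weights for illustration only (not actual IPPS values)
BASE_RATE = 5_500.0
DRG_WEIGHT = {"DRG_A": 1.2, "DRG_B": 2.0}


def medicare_payment(drg):
    """Prospective payment: depends on the admission's DRG, not on resources used."""
    return BASE_RATE * DRG_WEIGHT[drg]


def hospital_margin(drg, cost):
    """Hospital's margin on one admission."""
    return medicare_payment(drg) - cost


# A shorter stay lowers the cost of a DRG_A admission: Medicare pays the same,
# and the saving shows up in the hospital's margin instead.
print(hospital_margin("DRG_A", 7_000.0), hospital_margin("DRG_A", 6_500.0))
# If changed treatment moves the admission into a different DRG, the payment itself changes.
print(medicare_payment("DRG_B") - medicare_payment("DRG_A"))
```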

VI. Conclusion

The MA program was designed to give enrollees more choices among insurance plans and thereby provide higher-value care. Because the same health care delivery system serves most patients, changes in the MA system may affect care delivered system-wide. Any spillover effects of MA plans to others’ spending or outcomes have direct implications for payment rates in designing an efficient MA program. Previous research in other contexts suggests that these spillovers may be substantial, but there is limited evidence from the modern MA era that abstracts from potential confounding factors.

We take advantage of changes in payment policy and rich data on hospital use across populations to gauge the causal effect of MA enrollment on system-wide care. We find that increasing MA penetration results in lower hospitalization costs and shorter lengths of stay system-wide. The magnitude of these spillovers is substantial, and taking them into account suggests higher optimal MA payments than would otherwise be the case. Future research will focus on other types of utilization and more nuanced measures of the quality of care.

Footnotes


1. Historically, the MA program has been more costly than traditional Medicare because of higher base payments. In the mid-1990s, private plan enrollees are estimated to have cost Medicare 5 to 7 percent more than it would have paid to cover them through TM. These generous payments resulted in more generous MA benefits but also higher Medicare spending (an estimated $14 billion more in 2009 for MA enrollees than if they had been enrolled in FFS). Reductions in these "overpayments" represent a substantial source of savings in the 2010 Patient Protection and Affordable Care Act (ACA). Specifically, the ACA freezes payments to MA plans for 2011 and reduces MA payments in 2012 and 2013 to bring them in line with TM costs. The CBO estimates that this drop in payments will reduce MA enrollment every year until 2017, saving $135.6 billion over the FY2010–FY2019 period.

2. The Institute of Medicine notes that "most providers receive payment from a variety of payers that may rely on different methods. Therefore, any given provider faces a mix of incentives and rewards, rather than a consistent set of expectations" (Institute of Medicine 2001). In a survey of physicians, Remler et al. (1997) found that the mean physician practice received capitation for 13% of its patients, and 41% of all practices received some capitated payments. Integrated plans like Kaiser are an exception.

3. See Baker (2003) for a comprehensive discussion of possible spillover pathways (10).

4. Negative spillovers due to increasing marginal costs in production (e.g., easing access for TM patients due to more economical patterns of care in MA) could be interpreted as "pecuniary externalities," with no implication of inefficiency. However, in the presence of excess utilization due to moral hazard in health insurance, the real externality is generated by increased use in TM.

5. See Card et al. (17) for an example of using the non-enrolled as a control group for estimating the effect of Medicare coverage on the health and utilization of beneficiaries.

6. Viewed in the context of the Roy model proposed by Chandra and Staiger, these results are consistent with a shift in the equilibrium from an intensive technology to a less intensive one or, alternatively, with managed care penetration being endogenously determined by local practice patterns.

7. In 2006 the MMA also introduced a lock-in period, such that enrollees could switch between MA plans and FFS only during annual open enrollment.

8. For example, the correlations between changes in payment rates and changes in unemployment rates, per capita income, and the number of surgeons per capita are .002 (p-value=.77), −.011 (p-value=.05), and .004 (p-value=.45), respectively. Regression coefficients (including fixed effects, and thus reflecting within-area changes) give context for the magnitude of these relationships. Regressing log payment rates on log population yields a statistically insignificant coefficient of .001 (or .05 when excluding "floor" counties); regressing them on the unemployment rate yields a statistically insignificant −.001.

9. There is no consistent difference in the pattern of spillovers under the updated HCC regime relative to the earlier period. For example, a regression of length of stay on instrumented MA penetration and instrumented MA penetration interacted with a post-2004 dummy produces a coefficient of −.028 (s.e. .013) on the main effect and an insignificant coefficient of −.007 (s.e. .006) on the interaction.

10. We do not see a consistent pattern of differences between the over-65 and under-65 population results. Results for the 45–64 year old county population are qualitatively similar to the under-65 results, but often with point estimates closer to the over-65 estimates. For example, re-estimating the last column of the log-cost specification limited to residents aged 45–64 yields an estimate of −.0068 (s.e. .0031).

11. We assume that the increase in MA payments applies to existing and new MA enrollees (who switch as a result of the more generous payment) and apply the resulting reduction in hospital spending to the remaining TM enrollees only. This rests on two assumptions: that (at least in the short run) MA payments do not change when MA enrollees' marginal costs change, and that the Medicare program's expenses for the beneficiaries who moved from TM to MA were on average the same at baseline. This calculation does not take into account any changes in other types of utilization or spillovers to the privately insured or uninsured, but gives some sense of the magnitude of the estimated effects.

References

1. Gold M, Jacobson G. Medicare Advantage 2012 Data Spotlight: Enrollment Market Update. Kaiser Family Foundation; 2012 [cited 2012 September 5]. Available from: http://www.kff.org/medicare/8323.cfm.

2. MedPAC. Report to the Congress: Medicare Payment Policy, March. Washington, DC: 1998.

3. Medicare Payment Advisory Commission. Report to the Congress: Medicare Payment Policy. Washington, DC: 2010.

4. CBO. The Long Term Budget Outlook: Fiscal Years 2010 to 2020. Washington, DC: 2010.

5. CBO. Comparison of Projected Enrollment in Medicare Advantage Plans and Subsidies for Extra Benefits Not Covered by Medicare Under Current Law and Under Reconciliation Legislation Combined with H.R. 3590 as Passed by Senate. Washington, DC: Mar 19, 2010.

6. McGuire T, Sinaiko A, Newhouse J. An Economic History of Medicare Part C. Milbank Quarterly. 2011 Jun;89(2):289–332. doi: 10.1111/j.1468-0009.2011.00629.x.

7. Baker LC. Managed Care Spillover Effects. Annual Review of Public Health. 2003;24:435–456. doi: 10.1146/annurev.publhealth.24.100901.141000.

8. Newhouse JP, Marquis MS. The Norms Hypothesis and the Demand for Medical Care. The Journal of Human Resources. 1978;13:159–182. (Supplement: National Bureau of Economic Research Conference on the Economics of Physician and Patient Behavior)

- 9.Fuchs VR, Newhouse JP. The conference and unresolved problems (introduction to Proceedings of the Conference on the Economics and Physician and Patient Behavior) Journal of Human Resources. 1978;13(Supplement):5–18. [PubMed] [Google Scholar]

- 10.Phelps CE. Information Diffusion and Best Practice Adoption. In: Culyer A, Newhouse J, editors. Handbook of Health Economics. 1A: North-Holland Press; 2000. [Google Scholar]

11. Chernew M. The impact of non-IPA HMOs on the number of hospitals and capacity. Inquiry. 1995;32(2):143–154.

12. Miller R, Luft H. Does managed care lead to better or worse quality of care? Health Affairs. 1997;16(5):7–25. doi: 10.1377/hlthaff.16.5.7.

13. McGuire TG, Pauly MV. Physician Response to Fee Changes with Multiple Payers. Journal of Health Economics. 1991;10(4):385–410. doi: 10.1016/0167-6296(91)90022-f.

14. Baker L, Corts K. HMO Penetration and the Cost of Health Care: Market Discipline or Market Segmentation? American Economic Review. 1996;86(2):389–394.

15. Glied S, Zivin J. How Do Doctors Behave When Some (But Not All) of Their Patients Are In Managed Care? Journal of Health Economics. 2002;21(2):337–353. doi: 10.1016/s0167-6296(01)00131-x.

16. Arrow KJ. Uncertainty and the Welfare Economics of Medical Care. American Economic Review. 1963 Dec;53(5):941–973.

17. Card D, Dobkin C, Maestas N. The Impact of Nearly Universal Health Care Coverage on Health Care Utilization: Evidence from Medicare. American Economic Review. 2008 Dec;98(5):2242–2258. doi: 10.1257/aer.98.5.2242.

18. McWilliams JM, Meara ER, Zaslavsky AM, Ayanian JZ. Health of Previously Uninsured Adults After Acquiring Medicare Coverage. Journal of the American Medical Association. 2007 Dec 26;298(24):2886–2894. doi: 10.1001/jama.298.24.2886.

19. McWilliams JM, Meara ER, Zaslavsky AM, Ayanian JZ. Health Service Use and Costs of Care for Previously Uninsured Medicare Beneficiaries. New England Journal of Medicine. 2007;357(2):143–153. doi: 10.1056/NEJMsa067712.

20. Newhouse JP. Free For All: Lessons from the Health Insurance Experiment. Harvard University Press; 1993.

21. Finkelstein A, Taubman S, Wright B, Bernstein M, Gruber J, Newhouse J, et al. The Oregon Health Insurance Experiment: Evidence from the First Year. Quarterly Journal of Economics. 2012 Aug;127(3):1057–1106. doi: 10.1093/qje/qjs020.

22. Baicker K, Taubman S, Allen H, Bernstein M, Gruber J, Newhouse J, et al. The Oregon Experiment: Medicaid’s Effects on Clinical Outcomes. New England Journal of Medicine. 2013;368(18). doi: 10.1056/NEJMsa1212321.

23. Robinson JC. Decline in Hospital Utilization and Cost Inflation under Managed Care in California. Journal of the American Medical Association. 1996;276(13):1060–1064.

24. Gaskin D, Hadley J. The impact of HMO penetration on the rate of hospital cost inflation, 1985–1993. Inquiry. 1997;34(3):205–216.

25. Baker L. The effect of HMOs on fee-for-service health care expenditures: evidence from Medicare. Journal of Health Economics. 1997;16:453–482. doi: 10.1016/s0167-6296(96)00535-8.

26. Baker L, Cantor JC, Long SH, Marquis MS. HMO Market Penetration and Costs of Employer-Sponsored Health Plans. Health Affairs. 2000;19(5):121–128. doi: 10.1377/hlthaff.19.5.121.

27. Baker LC. Association of Managed Care Market Share and Health Expenditures for Fee-for-Service Medicare Patients. Journal of the American Medical Association. 1999;281(5):432–437. doi: 10.1001/jama.281.5.432.

28. Baker LC, Phibbs CS. Managed Care, Technology Adoption, and Health Care: The Adoption of Neonatal Intensive Care. RAND Journal of Economics. 2002;33(3):524–548.

29. Heidenreich P, et al. The Relation Between Managed Care Market Share and the Treatment of Elderly Fee-for-Service Patients with Myocardial Infarction. 2001. doi: 10.1016/s0002-9343(01)01098-1.

30. Chernew M, DeCicca P, Town R. Managed care and medical expenditures of Medicare beneficiaries. Journal of Health Economics. 2008;27(6):1451–1461. doi: 10.1016/j.jhealeco.2008.07.014.

31. Finkelstein A. The Interaction of Partial Public Insurance Programs and Residual Private Insurance Markets: Evidence from the U.S. Medicare Program. Journal of Health Economics. 2004;23(1):1–24. doi: 10.1016/j.jhealeco.2003.07.003.

32. Finkelstein A. The Aggregate Effects of Health Insurance: Evidence from the Introduction of Medicare. Quarterly Journal of Economics. Forthcoming; 2007.

33. Heidenreich P, et al. The Relation Between Managed Care Market Share and the Treatment of Elderly Fee-for-Service Patients with Myocardial Infarction. NBER Working Paper 8065; 2001.

34. Bundorf MK, Schulman KA, Gaskin D, Jollis JG, Escarce JJ. Impact of Managed Care on the Treatment, Costs and Outcomes of Fee-for-Service Medicare Patients with Acute Myocardial Infarction. Health Services Research. 2004;39(1):131–152. doi: 10.1111/j.1475-6773.2004.00219.x.

35. Baker L. HMOs and Fee-for-Service Health Care Expenditures: Evidence from Medicare. Journal of Health Economics. 1997;16(4):453–481. doi: 10.1016/s0167-6296(96)00535-8.

36. Ho K. Insurer-Provider Networks in Medical Care Markets. NBER Working Paper 11822; 2006.

37. Van Horn RL, Burns LR, Wholey DR. The Impact of Physician Involvement in Managed Care on Efficient Use of Hospital Resources. Medical Care. 1997;35(9):873–889. doi: 10.1097/00005650-199709000-00001.

38. Chandra A, Staiger D. Productivity Spillovers in Healthcare: Evidence from the Treatment of Heart Attacks. Journal of Political Economy. 2007;115(1):103–140. doi: 10.1086/512249.

39. Baicker K, Chandra A. Medicare Spending, the Physician Workforce, and Beneficiaries’ Quality of Care. Health Affairs. 2004;Web Exclusive(W4):184–197. doi: 10.1377/hlthaff.w4.184.

40. Feldman R, Dowd B. Simulation of a Health Insurance Market with Adverse Selection. Operations Research. 1982 Nov-Dec;30(6):1027–1042. doi: 10.1287/opre.30.6.1027.

41. Altman D, Cutler DM, Zeckhauser RJ. Adverse Selection and Adverse Retention. American Economic Review. 1998 May;88(2):122–126.

42. Mello MM, Stearns SC, Norton EC. Do Medicare HMOs Still Reduce Health Services Use After Controlling for Selection Bias? Health Economics. 2002;11(4):323–340. doi: 10.1002/hec.664.

43. Brown RS, Clement DG, Hill JW, Retchin SM, Bergeron JW. Do Health Maintenance Organizations Work for Medicare? Health Care Financing Review. 1993;15(1):7–23.

44. Riley G, Tudor C, Chiang Y-P, et al. Health Status of Medicare Enrollees in HMOs and Fee-for-Service in 1994. Health Care Financing Review. 1996;17(4):65–76.

45. Greenwald LM, Levy JM, Ingber MJ. Favorable Selection into the Medicare+Choice Program: New Evidence. Health Care Financing Review. 2000;21(3):127–134.

46. Mello MM, Stearns SC, Norton EC, Ricketts TC. Understanding Biased Selection in Medicare HMOs. Health Services Research. 2003;38(3):961–992. doi: 10.1111/1475-6773.00156.

47. Chernew ME, DeCicca P, Town R. Managed Care and Medical Expenditures of Medicare Beneficiaries. NBER Working Paper 13747; 2008.

48. Newhouse J, Price M, Huang J, McWilliams M, Hsu J. Steps to Reduce Favorable Risk Selection in Medicare Advantage Largely Succeeded, Boding Well for Health Insurance Exchanges. Health Affairs. 2012;31(12):2618–2628. doi: 10.1377/hlthaff.2012.0345.

49. McWilliams M, Hsu J, Newhouse J. New risk-adjustment system was associated with reduced favorable selection in Medicare Advantage. Health Affairs. 2012;31(12):2630–2640. doi: 10.1377/hlthaff.2011.1344.

50. Brown J, Duggan M, Kuziemko I, Woolston W. How Does Risk Selection Respond to Risk Adjustment? Evidence from the Medicare Advantage Program. NBER Working Paper 16977; 2011.

51. Agency for Healthcare Research and Quality, Center for Financing, Access, and Cost Trends. Population Characteristics. 2003 MEPS HC-064: P7R3/P8R1.

52. Penrod JD, McBride TD, Mueller KJ. Geographic variation in determinants of Medicare managed care enrollment. Health Services Research. 2001 Aug;36(4):733–750.

53. Welch WP. Growth in HMO share of the Medicare market, 1989–1994. Health Affairs. 1996 Fall;15(3):201–214. doi: 10.1377/hlthaff.15.3.201.

54. Cawley J, Chernew ME, McLaughlin C. HMO Participation in Medicare+Choice. Journal of Economics and Management Strategy. 2005;14(3).