Abstract

Interest has recently surged in the neural mechanisms of audition, particularly with regard to functional imaging studies in human subjects. This review emphasizes recent work on two aspects of auditory processing. The first explores auditory spatial processing and the role of the auditory cortex in both nonhuman primates and human subjects. The interactions with visual stimuli, the ventriloquism effect, and the ventriloquism aftereffect are also reviewed. The second aspect is temporal processing. Studies investigating temporal integration, forward masking, and gap detection are reviewed, as well as examples from the birdsong system and echolocating bats.

Keywords: temporal processing, spatial processing, macaque monkey, human imaging, audition, perception

INTRODUCTION

Humans and animals use many different senses to explore and experience the world around them. Of all of the mammalian sensory systems, we currently have the best understanding of the visual system, largely due to the consistent interest in this topic and the tremendous effort that has been made to explore and attempt to understand the neural mechanisms of visual perception. Our understanding of the neural basis of auditory perception is far behind that of vision, but interest in this area has surged over the past decade. Two major strategies that are used to elucidate the neural correlates of auditory perception are functional imaging techniques in humans and single-neuron responses in macaque monkeys. This review highlights some of these studies and places them in a broader context of the neurological basis of auditory perception.

The auditory system must process many different features of an acoustic stimulus to give rise to the salient features that we perceive; one must determine where a sound came from, what different spectral and temporal properties it has, and what exactly the sound represents. This complexity is much too great to be dealt with in a single review, so we have elected to focus on spatial and temporal processing. Further, this review focuses on the cortical mechanisms most closely related to auditory perception, i.e., what the listener actually perceives. In the auditory system, the acoustic signal is extensively processed by nuclei in the brainstem, midbrain, and thalamus (Figure 1). Clearly, the role of these subcortical structures is paramount in providing the necessary input to the cerebral cortex for auditory perception. For instance, bilateral cochlear lesions result in deafness regardless of cortical function. Similarly, lesions of the superior olivary complex in the brainstem result in sound localization deficits despite an intact auditory cortex (see Masterton et al. 1967). However, the cerebral cortex is necessary for the perception of auditory signals (see Heffner 1997, Heffner & Heffner 1990). The focus of this review is on recent studies using both single-neuron recordings in animal preparations and functional imaging in humans that have shed new light on how neural processing gives rise to auditory perception.

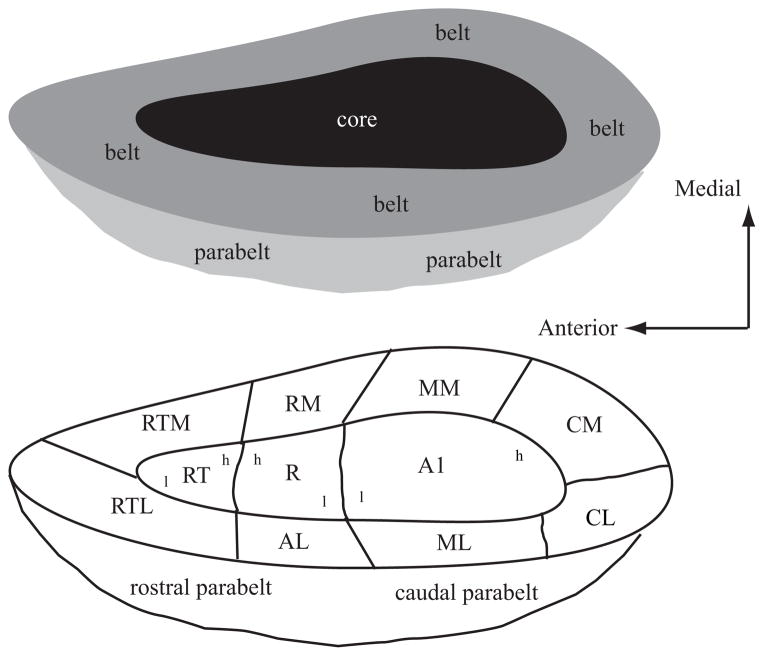

Figure 1.

Schematic diagram of the ascending auditory system. Shown are the major subcortical nuclei that process acoustic information. Each cochlea projects to the ipsilateral cochlear nucleus in the brainstem. Not shown are the three different subdivisions of this nucleus, the dorsal cochlear nucleus, the anterior ventral cochlear nucleus, and the posterior ventral cochlear nucleus. These neurons project to the superior olivary complex (SOC) in the brainstem, which contains the lateral superior olive (LSO) and medial superior olive (MSO). Each cochlear nucleus projects to the SOC bilaterally; thus, neurons in these regions have binaural response properties that are projected upward to each ascending nucleus. The nucleus of the trapezoid body is not shown. SOC neurons project to the inferior colliculus (IC), which itself is composed of several subnuclei. The IC is the obligatory relay nucleus to the thalamus. Not shown is the nucleus of the lateral lemniscus. The IC neurons project to the medial geniculate body of the dorsal thalamus, which in turn projects to the auditory cortex.

THE ORGANIZATION OF THE PRIMATE AUDITORY CORTEX

The use of the macaque monkey as an animal model in studies of the neural basis of audition has increased dramatically in the past few years. Monkeys and humans share similarities in the anatomy, electrophysiology, and functional roles of the cortical areas involved in audition. Recent studies have investigated the role of the primate cerebral cortex in auditory spatial processing, which is one emphasis of this review, so this section provides an overview of the basic organization of these areas.

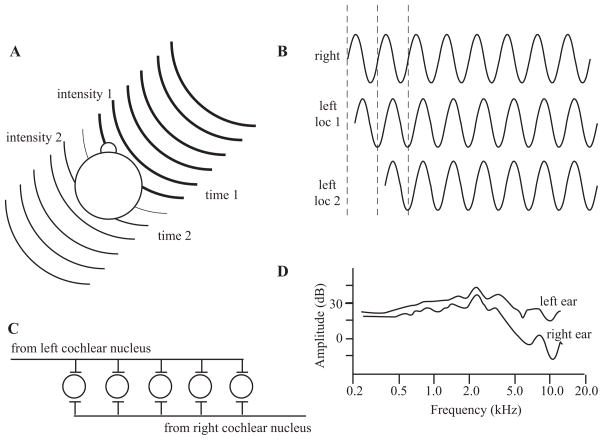

The auditory cortex of the primate can be divided into three main regions, each composed of several cortical areas (Figure 2; see Jones 2003, Kaas & Hackett 2000, Pandya 1995, Rauschecker & Tian 2000). The organization can be described as a core-belt-parabelt arrangement, with the cortical hierarchy advancing at each stage. Each of these fields can be distinguished based on histological appearance, corticocortical and thalamocortical connections, and functional response properties (Hackett et al. 2001, Jones et al. 1995, Kosaki et al. 1997, Merzenich & Brugge 1973, Molinari et al. 1995, Morel et al. 1993, Rauschecker & Tian 2004, Rauschecker et al. 1995, Recanzone et al. 2000a, Tian & Rauschecker 2004, Woods et al. 2006). The core region is composed of three cortical areas. The primary auditory cortex, A1, has koniocortical cytoarchitecture and is believed to be homologous with A1 in other mammals (Hackett et al. 2001). Rostral to A1 are two other core cortical fields, the rostral field (R) and rostrotemporal field (RT). Physiologically, A1 and R are organized tonotopically (Merzenich & Brugge 1973, Morel et al. 1993, Recanzone et al. 2000b), with a reversal at the low-frequency border between the two fields. Area RT is also suspected of having a tonotopic organization, but this has yet to be explicitly demonstrated. Surrounding the core field are several belt fields. In the lateral belt (caudolateral, mediolateral, and anterolateral, or CL, ML, and AL, respectively), neurons respond better to broad spectral stimuli, such as broadband and narrowband noise, than to tones (Merzenich & Brugge 1973, Rauschecker & Tian 2004, Rauschecker et al. 1995, Recanzone et al. 2000a, Tian & Rauschecker 2004). However, if narrowband noise is used, the corresponding center frequency of the stimulus that elicits the best response does show tonotopy, with reversals at the borders between these lateral belt fields (Rauschecker & Tian 2004). 
The medial belt fields have been less well defined physiologically, although they generally also show broad spectral tuning (Kosaki et al. 1997, Woods et al. 2006).

Figure 2.

Schematic diagram of the primate auditory cortex. Top schematic shows the general organization of core (black), belt (gray), and parabelt (light gray). Bottom schematic shows the approximate locations of the different cortical areas. The core is made up of the primary auditory cortex (AI), the rostral field (R), and the rostrotemporal (RT) area. These three fields have a tonotopic organization as shown by the lower-case letters h (high frequency) and l (low frequency). The medial belt includes the caudomedial (CM) area, middle medial (MM) area, rostromedial (RM) area, and medial rostrotemporal (RTM) area. The lateral belt includes the caudolateral (CL) area, middle lateral (ML) area, anterolateral (AL) area, and the lateral rostrotemporal (RTL) area. The parabelt is divided into rostral and caudal areas. Drawing is not to scale as the medial belt is significantly narrower than the lateral belt. Adapted from Kaas & Hackett (2000).

Lateral to the belt fields is the parabelt, which is subdivided into rostral and caudal regions. The thalamocortical and corticocortical connections have been described (Hackett et al. 1998a,b; see Jones 2003), but there have been relatively few published electrophysiology studies of neurons in these areas. Two imaging studies in the macaque monkey have been able to define the parabelt cortex (as well as the other fields shown in Figure 2) by the change in the blood oxygenation level–dependent response to acoustic stimulation (Kayser et al. 2007, Petkov et al. 2006). The results from these anatomical and functional imaging studies in monkeys and functional imaging studies in humans (referenced below) indicate that the parabelt is likely processing complex acoustic features.

In humans, multiple studies over the past decade have explored the functional organization of the auditory cortex. These experiments have been hampered by the wide individual variability of the gross anatomical landmarks of the upper bank of the superior temporal gyrus (e.g., Leonard et al. 1998), which therefore do not consistently identify the locations of the different cortical fields (see also Morosan et al. 2001, Petkov et al. 2004). The human auditory cortex is also composed of three core fields defined histologically as in the macaque (Morosan et al. 2001, Rademacher et al. 2001). Functional imaging studies note that there are several tonotopic fields in the human auditory cortex (e.g., Bilecen et al. 1998, Talavage et al. 2000). Other features of auditory responses in different cortical areas are consistent with the results seen in macaques (e.g., Ahveninen et al. 2006, Altmann et al. 2007, Brunetti et al. 2005, Krumbholz et al. 2005, Petkov et al. 2004, Viceic et al. 2006, Wessinger et al. 2001, Zimmer & Macaluso 2005).

One central theme in investigating auditory cortical processing is the working hypothesis that spatial and nonspatial features of acoustic stimuli are processed in two parallel streams (Rauschecker 1998) similar to that proposed for the dorsal “where” and ventral “what” streams in visual cortex (Ungerleider & Haxby 1994, Ungerleider & Mishkin 1982). Work in both nonhuman primates (Recanzone et al. 2000b, Romanski et al. 1999, Tian et al. 2001, Woods et al. 2006; see Kaas & Hackett 2000, Rauschecker & Tian 2000) and humans (Ahveninen et al. 2006, Altmann et al. 2007, Arnott et al. 2004, Sestieri et al. 2006) tends to support this model in that spatial features are preferentially processed in caudal cortical areas, and nonspatial features are processed in more ventral cortical areas. Thus, it appears that the macaque monkey is an excellent animal model for human auditory cortical processing.

AUDITORY SPATIAL PROCESSING

Auditory spatial processing has been a topic of interest for as long as the auditory system has been investigated experimentally. One key feature of spatial processing in the auditory system is that, unlike the visual and somatosensory systems, space is not directly represented in the sensory epithelium. The central nervous system must compute the spatial location of a stimulus by integrating the information provided by many individual inner hair cells and their corresponding spiral ganglion cells. These computations are initiated very early, at the level of the second synapses in the central nervous system in the superior olivary complex (Figure 1). These early processing stations have been the topic of much interest and research, and the contribution of these stations to spatial processing is too extensive to review here. Instead, we focus on the cortical mechanisms of spatial localization and how these responses can potentially account for the sound localization ability of humans and nonhuman primates. In this section, we describe psychophysical studies and the potential spatial cues that can be used to compute the spatial location of stimuli. The electrophysiological and functional imaging evidence in support of a caudal processing stream of acoustic space is then summarized.

Sound Localization Psychophysics

The ability of humans and animals to detect either changes in or the absolute location of an acoustic stimulus has been studied extensively for several decades (see Blauert 1997, Carlile et al. 1997, Heffner 2004, Populin & Yin 1998, Recanzone & Beckerman 2004, Recanzone et al. 1998, Su & Recanzone 2001). These studies have noted that two basic parameters influence sound localization ability (see Blauert 1997). The first is spectral bandwidth. The greater the bandwidth, the better subjects are able to localize the stimuli, particularly in elevation. For example, broadband noise is better localized than bandpassed noise, which is better localized than tones (see Recanzone et al. 2000b for examples in monkeys). This is likely due to the ability to recruit more neurons to provide information about stimulus location as more neurons are activated by the greater spectral energy of the stimulus. The second basic stimulus parameter is the intensity of the stimulus, with low-intensity stimuli being most difficult to localize and louder stimuli more easily localized (e.g., Altshuler & Comalli 1975, Comalli & Altshuler 1976, Recanzone & Beckerman 2004, Sabin et al. 2005, Su & Recanzone 2001). Again, as the louder sounds will engage a greater population of neurons, it is possible that the size of the neuronal pool is important in processing acoustic space information. This is reasonable to assume, as the individual binaural cues can be ambiguous when only a single frequency is present, and the monaural cues depend on both a broad spectral bandwidth and reasonable intensity levels to be effective, as described below.

Binaural Cues

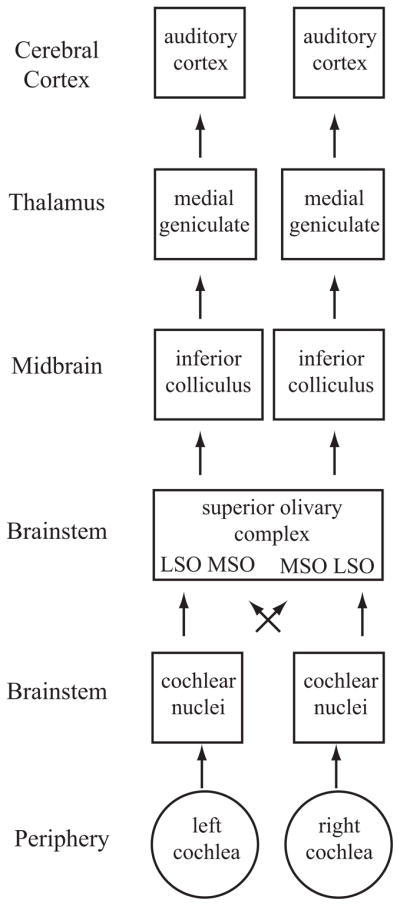

Two binaural cues can be used to calculate the location of an acoustic source. The first is interaural intensity differences. If a sound source is not in the plane of the midline, primarily the head but also the body will absorb some of the energy and shadow the far ear from the acoustic source, thereby making the intensity of the stimulus at that ear less than the intensity of the closer ear (Figure 3A). The second binaural cue results from the fact that any sound deviating from the midline will arrive at the near ear before it arrives at the far ear. This interaural timing difference can occur at the onset of the stimulus, but can also continue as a phase difference of the signal between the two ears. One advantage of encoding phase differences is that there are multiple samples (each phase of the stimulus) that can be compared. The disadvantage is that ambiguities can exist for higher frequencies as the same phase difference will occur when the location corresponds to multiples of a full cycle of the stimulus (Figure 3B).
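The timing cue and its phase ambiguity can be illustrated with a short numerical sketch. It is only an illustration under stated assumptions: the spherical-head (Woodworth) approximation for the interaural time difference, a head radius of 8.75 cm, and a sound speed of 343 m/s are not taken from the studies reviewed here, and the function names are hypothetical.

```python
import math

HEAD_RADIUS_M = 0.0875      # assumed head radius (illustrative value)
SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound in air


def itd_seconds(azimuth_deg):
    """Interaural time difference for a distant source at 0-90 degrees azimuth,
    using the spherical-head approximation ITD = (r / c) * (theta + sin(theta))."""
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


def phase_cycles(itd_s, freq_hz):
    """Ongoing interaural phase difference as a fraction of one cycle, in [0, 1).
    Whole cycles are discarded because phase alone cannot encode them."""
    return (itd_s * freq_hz) % 1.0


def is_phase_ambiguous(freq_hz):
    """True when one period is shorter than the full range of possible ITDs
    (far left to far right), so one phase value can match several locations."""
    return itd_seconds(90.0) * freq_hz > 0.5


if __name__ == "__main__":
    print(f"largest ITD: {itd_seconds(90.0) * 1e6:.0f} microseconds")
    for freq in (500.0, 4000.0):
        print(f"{freq:.0f} Hz ambiguous: {is_phase_ambiguous(freq)}")
```

Under these assumptions, two interaural delays that differ by exactly one period of the tone give the same value of `phase_cycles`, which is the ambiguity described above; the onset time difference is not affected by it.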

Figure 3.

Sound localization cues. (A) Interaural intensity and timing cues. The head and body will absorb some of the sound energy, resulting in a lower-intensity stimulus at the far ear (intensity 2) in comparison with the near ear (intensity 1), represented here by the lines becoming thinner. The wave fronts will also arrive at the near ear (time 1) before the far ear (time 2), giving rise to interaural timing cues. (B) Interaural time and phase cues. The waveform will reach the far ear at a later time than the near ear. Ambiguities exist when only considering the phase and not the onset, as two locations can have the same phase disparity if they differ by multiples of a single cycle. (C) Jeffress’s model of computing interaural timing differences. The lengths of the axons will result in coincident activation of only one neuron, depending on the location of the stimulus. (D) Head-related transfer functions. The acoustic energy measured at the eardrum varies between the two ears as a function of the stimulus location. Panel D is adapted from Wightman & Kistler (1989a).

The superior olivary complex (SOC) of the brainstem is the first processing stage of these binaural interactions, and lesions of these structures can cause sound localization deficits (see Casseday & Neff 1975, Kavanagh & Kelly 1992, Masterton et al. 1967). Binaural processing seems to be selectively disrupted, as the animals can still hear the sounds but make random responses with respect to the perceived location of the stimulus. Jeffress (1948) presented one initial hypothesis, based on a place code in the SOC (Figure 3C), that could account for the ability to make microsecond discriminations. In this model, the length of the axons determines a place code where the action potentials from the two cochlear nuclei arrive at the postsynaptic cell simultaneously. This is an attractively simple model that has been shown to exist in the avian brain, but it has yet to be demonstrated in the mammalian brain (see Carr & Koppl 2004, McAlpine 2005), which may use a different mechanism.
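The place-code idea can be sketched as an array of coincidence detectors fed by opposing delay lines. The sketch below illustrates only the hypothesized scheme, not any measured circuit; the number of detectors, the 800-microsecond maximum axonal delay, and the function names are hypothetical choices made for the example.

```python
def build_delay_lines(n_detectors, max_delay_us):
    """Return one (left_delay, right_delay) pair per detector, in microseconds.
    Axonal delay from the left side grows along the array while the delay
    from the right side shrinks by the same amount."""
    step = max_delay_us / (n_detectors - 1)
    return [(i * step, max_delay_us - i * step) for i in range(n_detectors)]


def most_coincident_detector(itd_us, delay_lines):
    """Index of the detector whose two inputs arrive closest together in time.
    itd_us > 0 means the sound reached the left ear first."""
    left_arrival, right_arrival = 0.0, itd_us
    mismatches = [
        abs((left_arrival + left_delay) - (right_arrival + right_delay))
        for left_delay, right_delay in delay_lines
    ]
    return mismatches.index(min(mismatches))


if __name__ == "__main__":
    lines = build_delay_lines(n_detectors=21, max_delay_us=800.0)
    for itd in (-400.0, 0.0, 400.0):
        print(f"ITD {itd:+.0f} us -> detector {most_coincident_detector(itd, lines)}")
```

In this toy array a sound on the midline drives the central detector, and increasing interaural delays shift the best-matched detector toward one end, so that position along the array stands in for source location.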

Monaural Cues

The binaural cues are quite effective in providing the necessary information to the brain to localize acoustic stimuli in azimuth, as evidenced by deficits in sound localization perception in the unilaterally deaf or after one ear is plugged or occluded (see Blauert 1997, Middlebrooks & Green 1991). However, binaural cues cannot provide information with respect to the elevation of the stimulus, as mammalian ears are symmetrically placed on the head in elevation. A class of monaural cues provides information about azimuth and, especially, elevation (see Wightman & Kistler 1989a,b). As the sound travels to the listener, the reflections of the sounds off the head, body, and particularly the pinna cause the energy at certain frequencies to be cancelled out and therefore reduced. This leaves a characteristic sound spectrum, called the head-related transfer function (HRTF; Figure 3D), that is different from that of the sound in the air. Transforming sounds using the HRTF to recreate spatial location and presenting them via earphones permits the sound to be perceived from outside the listener’s head (Wightman & Kistler 1989b), allowing spatial hearing to be studied in small environments such as in functional magnetic resonance imaging (fMRI) scanners (Altmann et al. 2007, Fujiki et al. 2002, Hunter et al. 2003, Pavani et al. 2002, Warren et al. 2002).
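How a reflection removes energy at particular frequencies can be shown with a deliberately simplified sketch: a direct sound summed with a single delayed, attenuated copy of itself. A measured HRTF combines many direction-dependent paths and is obtained empirically; the single reflection, the 62.5-microsecond delay, and the reflection gain of 0.8 used here are hypothetical values chosen only to make the arithmetic easy to follow.

```python
import cmath
import math


def gain_with_reflection(freq_hz, delay_s, reflection_gain):
    """Magnitude of the direct sound plus one delayed copy:
    |1 + a * exp(-j * 2 * pi * f * tau)|."""
    return abs(1.0 + reflection_gain * cmath.exp(-2j * math.pi * freq_hz * delay_s))


def first_notch_hz(delay_s):
    """Lowest frequency at which the reflection arrives half a cycle late
    and therefore cancels the direct sound most strongly."""
    return 1.0 / (2.0 * delay_s)


if __name__ == "__main__":
    delay = 62.5e-6  # hypothetical extra path delay of the reflection
    print(f"first notch near {first_notch_hz(delay):.0f} Hz")
    for freq in (2000.0, 8000.0, 16000.0):
        print(f"{freq:.0f} Hz gain: {gain_with_reflection(freq, delay, 0.8):.2f}")
```

Because the delay of such a reflection would change with the direction of the source, the notch frequency would change as well, which is the sense in which the spectrum at the eardrum carries location information.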

For these spectral cues to be effective, the stimulus must have a broad spectral bandwidth. These cues also require that the stimulus be loud enough to permit detection of all of the decreases in energy, or notches. Thus, pure tone stimuli are extremely difficult to localize in elevation (e.g., Recanzone et al. 2000b), as are very-low-intensity stimuli (e.g., Recanzone & Beckerman 2004, Sabin et al. 2005, Su & Recanzone 2001). Because the spectral bandwidth of neurons in the primary auditory cortex can be quite narrow (Recanzone et al. 2000a), a population-coding scheme is necessary to encode acoustic space at the cortical level. Several varieties of such models have been proposed (Fitzpatrick et al. 1997, Furukawa et al. 2000, Harper & McAlpine 2004, Recanzone et al. 2000b, Skottun 1998, Stecker et al. 2005a).

Lesion Experiments

Lesions in normal animals have shown that the auditory cortex is necessary for the perception of acoustic space. Early studies in cats suggested that the primary auditory cortex (A1) is both necessary and sufficient to account for sound localization ability (Jenkins & Merzenich 1984; see King et al. 2007 for review). More recent studies have suggested that although A1 is certainly necessary, it may not be sufficient for sound localization (Malhotra & Lomber 2007). Experiments in primates have concurred with the necessity argument but have not addressed whether A1 is sufficient for sound location perception (Heffner & Heffner 1990, Sanchez-Longo & Forster 1958, Thompson & Cortez 1983, Zatorre & Penhune 2001; see also Beitel & Kaas 1993). The sound localization deficits are rarely complete, and lesioned animals are able to detect the same acoustic stimuli; thus, it seems the best explanation is that the animals are unable to perceive the location of the sound (see Heffner 1997).

Physiology Experiments

The spatial response properties of cortical neurons have been studied extensively over the years, primarily using the cat as an animal model (e.g., Brugge et al. 1996, Imig et al. 1990, Middlebrooks et al. 1994, Middlebrooks & Pettigrew 1981, Rajan et al. 1990). A handful of studies have investigated spatial response properties in the macaque monkey, in both anesthetized (Rauschecker & Tian 2000, 2004; Tian et al. 2001) and alert preparations (Ahissar et al. 1992, Benson et al. 1981, Recanzone et al. 2000b, Woods et al. 2006). Tian et al. (2001) tested whether neurons were better tuned to the spatial location or to the type of stimulus (monkey vocalization) in three different areas of the lateral belt (CL, ML, and AL). They found that neurons in the caudal fields are much more sharply spatially tuned than neurons in the more rostral fields, which were more selective for the type of vocalization. In a similar study in alert monkeys, Recanzone et al. (2000b) showed that neurons in the caudal belt field CM had sharper spatial tuning functions than those in A1 in both azimuth and elevation. Furthermore, the population response indicated that CM neurons could account for the localization performance of these animals as a function of the frequency and spectral bandwidth of the stimulus. Most recently, Woods et al. (2006) measured the spatial receptive fields of neurons in two core (A1 and R) and four belt (MM, CL, CM, and ML) cortical areas. As predicted by Rauschecker (1998), neurons in the caudal fields had sharper spatial tuning to broadband noise stimuli presented at different stimulus intensities.

Imaging Experiments

There has been a surge of functional imaging experiments investigating auditory spatial processing in humans. Initial studies in humans primarily used magnetoencephalography or positron emission tomography imaging techniques because it is difficult to create external sound sources in the confines of an fMRI magnet (e.g., Weeks et al. 1999). More recently, fMRI studies have taken advantage of HRTFs to externalize the stimuli (Altmann et al. 2007, Fujiki et al. 2002, Hunter et al. 2003, Pavani et al. 2002, Warren et al. 2002). These studies, as well as those using traditional headphone stimuli, have indicated that the posterior regions of auditory cortex, both Heschl’s gyrus and the planum temporale, are preferentially activated by the spatial aspects of the stimulus location (Ahveninen et al. 2006, Altmann et al. 2007, Brunetti et al. 2005, Krumbholz et al. 2005, Viceic et al. 2006, Zimmer & Macaluso 2005; see Arnott et al. 2004 for a review of earlier work) whereas nonspatial aspects of the stimuli are preferentially processed by more rostral regions. This is consistent with single-neuron experiments in nonhuman primates, as discussed above.

Visual Influences on Auditory Spatial Processing

The spatial resolution of the auditory system is quite remarkable given that acoustic space must be calculated by the nervous system. However, it is clearly inferior to the spatial resolution of the visual or somatosensory system, where the spatial features of the stimulus are encoded by the location of the sensory receptor. In some cases, spatially disparate auditory and visual stimuli can both be perceived to originate from the location of the visual stimulus. This type of intersensory bias is known as the ventriloquism effect (Howard & Templeton 1966), but similar interactions occur between the auditory, visual, and proprioceptive senses in both space and time (see Bertelson 1999, Recanzone 2003, Welch 1999, Welch & Warren 1980). Both cognitive and noncognitive factors influence the incidence and magnitude of the ventriloquism effect (see Welch 1999). The cognitive factors include the “unity assumption,” which is that the subject has the a priori assumption that the two stimuli are part of the same real-world object or event.

Three main noncognitive factors affect the strength of the ventriloquism effect. The first is timing, as there has to be close temporal synchrony between the auditory and visual stimuli for the illusion to occur (Slutsky & Recanzone 2001). Subjects are also more likely to report the two stimuli as different if the visual stimulus leads the auditory stimulus compared to the reverse. The second parameter is compellingness (Warren et al. 1981), which is how well the sound matches what the listener expects of the visual object, i.e., deep pitches for large objects and high pitches for small objects. Finally, there is a spatial influence (Jack & Thurlow 1973). If the auditory and visual stimuli are too far apart spatially, the illusion breaks down. Of course, all three of these parameters interact. For example, the distance between a tone and flash of light where the illusion breaks down is much smaller than when voices are presented with images of people talking.

Early studies interpreted the sensory bias in terms of capture: one sensory modality would capture the other based on the relative acuities of the two, with little bias of the dominant sensory modality by the nondominant sensory modality. For the ventriloquism effect, therefore, the high-acuity visual system will capture the location of the auditory stimulus, with little or no bias of the visual location by the auditory stimulus (see Warren et al. 1981). More recently, Alais & Burr (2004) have hypothesized that a model of optimal combination can explain this phenomenon. In this case, the variance in the prediction for the location of the stimulus in each modality is taken into account to determine where the bisensory stimulus originated. By increasing the difficulty in localizing the visual stimuli, they showed that the variance of the subjects’ visual localization estimates increased, and for conditions where the visual acuity was very low, the auditory stimulus tended to dominate the percept of where both stimuli were located.
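One common way to formalize such a combination is inverse-variance weighting of the two location estimates, which is the maximum-likelihood solution when the noise in each modality is independent and Gaussian. The sketch below assumes that formulation; it is not presented as the exact model fitted by Alais & Burr (2004), and the locations, standard deviations, and function name are hypothetical.

```python
def combine_estimates(visual_deg, visual_sd, auditory_deg, auditory_sd):
    """Inverse-variance weighted average of two location estimates.
    Returns (combined_location_deg, combined_sd, visual_weight)."""
    visual_var = visual_sd ** 2
    auditory_var = auditory_sd ** 2
    # The less variable modality receives the larger weight.
    visual_weight = auditory_var / (visual_var + auditory_var)
    combined = visual_weight * visual_deg + (1.0 - visual_weight) * auditory_deg
    combined_sd = (visual_var * auditory_var / (visual_var + auditory_var)) ** 0.5
    return combined, combined_sd, visual_weight


if __name__ == "__main__":
    # Hypothetical case: light at 0 degrees, sound at 10 degrees.
    for label, visual_sd in (("sharp visual stimulus", 1.0), ("blurred visual stimulus", 16.0)):
        location, sd, weight = combine_estimates(0.0, visual_sd, 10.0, 4.0)
        print(f"{label}: percept at {location:.1f} deg, visual weight {weight:.2f}")
```

With a precise visual estimate the combined location lies close to the light, as in the classic ventriloquism effect; when the visual estimate is made much more variable than the auditory one, the same rule places the combined location close to the sound.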

The results from a second set of experiments are consistent with the idea that the sensory system with the higher acuity dominates the percept (Phan et al. 2000). Those experiments tested a subject with bilateral parietal lobe lesions. This subject could detect visual stimuli across the entire visual field, but had extreme difficulty discriminating whether two visual stimuli came from the same location or different locations, particularly in the right visual field. His auditory localization was relatively normal. When he was discriminating the location of visual stimuli while unattended auditory stimuli were simultaneously presented from the same location, his visual acuity was greatly improved. Again, the sensory modality that has the greater acuity, regardless of which it is, tends to dominate the percept of where both stimuli are located.

Ventriloquism Aftereffect

An intriguing aspect of the ventriloquism effect is that it can be long lasting (Lewald 2002, Radeau & Bertelson 1974, Recanzone 1998, Woods & Recanzone 2004). If a subject is presented with auditory and visual stimuli with a consistent spatial disparity for a period of tens of minutes, the subject’s percept of acoustic space will be shifted in the direction of the visual stimulus when tested in complete darkness. This indicates that the representation of acoustic space has somehow been altered by the disparate visual experience. This can occur when the auditory and visual stimuli are displaced by a small amount and are consistently perceived to be from the same location (Recanzone 1998) or when the spatial disparity is quite large (Lewald 2002). Under either condition, the effect does not transfer across frequencies, i.e., training at one frequency does not influence the spatial representation at other frequencies. The frequency range over which this integration occurs remains to be explored. Recanzone (1998) used 750 Hz and 3000 Hz stimuli, and Lewald (2002) used 1000 Hz and 4000 Hz, so potentially a frequency difference of less than two octaves could show a transference effect. The duration of the effect also remains untested. It is possible that the effect will remain until the subject is put back into the “normal” environment and the auditory and visual stimuli are again spatially coincident.
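The two-octave figure follows directly from the frequency ratios of the stimulus pairs quoted above. The short check below makes the arithmetic explicit; the function name is hypothetical.

```python
import math


def octaves_between(low_hz, high_hz):
    """Frequency separation in octaves: log2 of the frequency ratio."""
    return math.log2(high_hz / low_hz)


if __name__ == "__main__":
    for low, high in ((750.0, 3000.0), (1000.0, 4000.0)):
        print(f"{low:.0f} Hz to {high:.0f} Hz: {octaves_between(low, high):.1f} octaves")
```

Both stimulus pairs have a ratio of 4, that is, a separation of two octaves, so separations smaller than two octaves are the range that has not been tested.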

It is important to note that this aftereffect is quite different from other aftereffects that are the result of adaptation of neurons in the visual motion processing areas, such as the waterfall illusion (Hautzel et al. 2001, Tootell et al. 1995, van Wezel & Britten 2002). The ventriloquism aftereffect lasts tens of minutes, does not transfer across frequencies, and more importantly, is in the same direction as the adapting stimulus.

Potential Neural Mechanisms of the Ventriloquism Effect

The underlying neuronal mechanisms of the ventriloquism effect and aftereffect remain unclear. It remains difficult to test where in the brain neurons shift their spatial receptive fields to account for this change in perception. Functional imaging studies in humans do not currently have the spatial resolution to detect such subtle differences in spatial processing, and the broad spatial receptive fields increase the difficulty of determining changes in spatial processing at the single-neuron level. Although the ventriloquism aftereffect has been shown in macaque monkeys (Woods & Recanzone 2004), it is always difficult to have an animal perform a task in which an illusion may (or may not) occur. Does the experimenter reward the animal for responding as though it experienced the illusion and run the risk that the animal learns to make the experimenter-desired response even though it did not perceive the illusion? The alternative is to not reward the animal on illusion trials, and run the risk that the animal does experience the illusion and will not be able to form a relationship between the stimulus, the response, and the reward. One way around this is to use “probe” trials that are very infrequent and either are never or are randomly rewarded. The inherent disadvantage of probe trials is that very few of the interesting trials are presented, which either vastly increases the time to perform the experiment or greatly decreases the amount of data collected.

Regardless, several candidate structures could be involved in this multisensory processing. First, there is a direct projection from the auditory cortex to the visual cortex representing the periphery in primates (Falchier et al. 2002, Rockland & Ojima 2003), and visual cortex projects to auditory cortical fields in ferrets (Bizley et al. 2006). Indeed, visual influences at early auditory cortical levels have been demonstrated. Ghazanfar et al. (2005) showed that the majority of locations tested had an enhanced or a suppressed response (or both) when video images of monkeys making vocalizations were paired with the vocalization, compared with the video or audio component alone. This effect was greater in the lateral belt areas than in A1 and was consistent with earlier reports of audio-visual interactions in speech processing in humans (Calvert et al. 1999). In addition, other nonauditory factors such as eye position and task demands can also influence the responses of auditory cortical neurons (Brosch et al. 2005, Fu et al. 2004, Werner-Reiss et al. 2003, Woods et al. 2006), indicating that there is processing beyond simple acoustic feature representations even in core auditory cortex in the primate.

Previous studies have documented several regions of the nonhuman primate brain that have multisensory responses, including the superior temporal sulcus (e.g., Benevento et al. 1977, Bruce et al. 1981, Cusick 1997), the parietal lobe (e.g., Cohen et al. 2005, Mazzoni et al. 1996, Stricanne et al. 1996), and the frontal lobe (Benevento et al. 1977, Russo & Bruce 1994). Each of these areas could potentially be involved in multisensory processing leading to the ventriloquism effect, but to date studies that directly investigate how these illusions are represented at the level of the single neuron have not been reported.

Human imaging studies have used a wide variety of auditory and visual stimuli and a variety of tasks for the subjects to perform. The results from these studies have varied considerably in detail. This variability is likely due to the differences in experimental paradigms and the difficulty in comparing cross-modal matching, intramodal matching, spatial attention, and cross-modal learning paradigms (see Calvert 2001). Nonetheless, these studies are consistent in implicating a number of different cortical areas, including the superior temporal sulcus, inferior parietal lobe, prefrontal cortex, and the insula (e.g., Bushara et al. 2001, 2003; Lehmann et al. 2006; Sestieri et al. 2006). A few studies have directly assessed audio-visual interactions that are relevant to the ventriloquism effect (Bischoff et al. 2007, Busse et al. 2005, Stekelenburg & Vroomen 2005, Stekelenburg et al. 2004). Findings from these studies are consistent in showing differential activation of the multisensory processing areas noted above, namely, the superior temporal sulcus, intraparietal areas, insula, and frontal cortex, when sensory binding occurs in contrast to when it does not. How these different brain pathways integrate or parse the different sensory modalities into the percept of one or two sensory objects or events remains unknown.

TEMPORAL PROCESSING

The study of how the auditory system processes the temporal features of sounds also has a long history. Only recently have the neural mechanisms of this processing been explored in nonhuman primate models (e.g., Bartlett & Wang 2005, Phan & Recanzone 2007, Werner-Reiss et al. 2006), although there is a rich literature on human auditory temporal processing using event-related recording and magnetoencephalography techniques. For the purposes of this review, we concentrate on five basic temporal processing features and draw from a number of different species to illustrate potential neural correlates.

With respect to the auditory system, the term “temporal processing” can be ambiguous. Temporal processing can either refer to the ability of the auditory system to process temporal components of the acoustic stimulus, or it can refer to the ability of the neurons to encode acoustic parameters in the temporal pattern of neural activity. For this review, unless otherwise noted, we use the term to refer to the former. An additional point of confusion can come about from the auditory usage of the term “temporal processing” when it is contrasted with spectral processing. Spectral processing refers to the ability of the nervous system to analyze the frequencies of a sound; technically, sound frequency is a temporal property. In general, spectral processing is used to refer to analysis of sound where frequency can be resolved by place on the cochlea. Spectral processing has recently been reviewed elsewhere (Sutter 2005) and is not the topic of this article. Finally, investigators often discuss two types of temporal processing: temporal integration and temporal resolution (for a review, see Eddins & Green 1995). In this article, we focus on forms of temporal integration and resolution that have been studied both psychophysically and electrophysiologically.

Temporal Integration

Temporal integration refers to the ability to integrate acoustic features over time. This integration is ecologically important because it takes time to transmit large quantities of high-quality information and because integrating over time in realistic noisy environments allows random noise to be averaged out, thereby making the signal easier to detect. However, many realistic biological problems, like hunting prey or avoiding predation, require a rapid response for which integrating over long times would be counterproductive. Therefore, a compromise or tradeoff must be established.

One basic way to assess this tradeoff is by measuring the ability of subjects to detect a sound as a function of its duration. Increasing the duration of a sound decreases the detection threshold up to a point, after which longer durations do not lead to further improvements (Dallos & Olsen 1964, Garner & Miller 1947, Hughes 1946). This type of relationship has been modeled primarily in two ways. One is to reciprocally relate threshold and duration (Bloch’s law). Another is through the leaky integrator model, which models temporal integration as the charging of a capacitor in parallel with a resistor (Munson 1947, Plomp & Bouman 1959, Zwislocki 1960). With this model, a time constant (in milliseconds) can be calculated that reflects how long it takes integration to reach 63% of asymptotic value.
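To make the two models concrete, the following minimal sketch computes detection threshold as a function of duration under each. The 100-msec time constant, the 100-msec critical duration, the 0-dB asymptotic threshold, and all function names are illustrative assumptions rather than values or methods from the studies cited above. Capping Bloch’s law at a critical duration is likewise an assumption, added so that thresholds stop improving for long sounds.

```python
import math


def leaky_integrator_fraction(duration_ms, tau_ms):
    """Fraction of the asymptotic integrated value reached after duration_ms."""
    return 1.0 - math.exp(-duration_ms / tau_ms)


def leaky_threshold_db(duration_ms, tau_ms, asymptote_db=0.0):
    """Threshold (dB) under the leaky integrator: a shorter sound needs more
    intensity to reach the same integrated value."""
    fraction = leaky_integrator_fraction(duration_ms, tau_ms)
    return asymptote_db - 10.0 * math.log10(fraction)


def bloch_threshold_db(duration_ms, critical_ms, asymptote_db=0.0):
    """Threshold (dB) when intensity x duration is constant up to critical_ms
    (the cap at critical_ms is an assumption of this sketch)."""
    if duration_ms >= critical_ms:
        return asymptote_db
    return asymptote_db + 10.0 * math.log10(critical_ms / duration_ms)


if __name__ == "__main__":
    tau_ms = 100.0       # assumed time constant
    critical_ms = 100.0  # assumed critical duration
    for duration_ms in (10, 25, 50, 100, 200, 400, 800):
        print(duration_ms,
              round(leaky_threshold_db(duration_ms, tau_ms), 2),
              round(bloch_threshold_db(duration_ms, critical_ms), 2))
```

With these assumed parameters, a sound lasting one time constant reaches about 63% of the asymptotic integrated value, and both models predict progressively smaller threshold improvements as duration grows.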

Integration time constants of 50–200 msec have been found in most investigations of human subjects (Eddins & Green 1995; Eddins et al. 1992; Garner & Miller 1947; Gerken et al. 1983, 1990; Green & Birshall 1957; Plomp & Bouman 1959; Watson & Gengel 1969) and other animal subjects (Clack 1966, Costalupes 1983, Fay & Coombs 1983, O’Connor et al. 1999, Solecki & Gerken 1990), although estimates vary significantly both within and across species. A more recent reanalysis (with nonlinear curve fitting) of the data available in the literature suggests mean integration time constants of ~30 msec in humans and medians of ~45 msec in other animals, but there was still a large degree of variation, with time constants of 25–150 msec in humans and 25–350 msec in other animals (O’Connor & Sutter 2003, O’Connor et al. 1999). Several sources of experimental differences can account for the large range of values. One is the use of arbitrary subjective criteria for curve fitting (O’Connor & Sutter 2003). Another is the possibility that neither the power law nor the leaky integrator model completely captures temporal integration: Performance for very short stimuli deviates substantially from the model predictions, resulting in a strong relationship between reported time constants and the minimum duration stimulus used in the experiments. In addition, the frequencies used can influence the estimate of integration times. The general results of these behavioral and psychophysical studies, therefore, are mixed, which makes it difficult to interpret comparisons across studies.

There have been only a few single-unit studies of temporal integration. One found very similar time constants for cochlear nucleus neurons and behavior in chinchillas (Clock et al. 1993). The exponential (leaky integrator) function fit the behavioral data very well. However, when the same analysis was applied to auditory nerve data, the neurons of the auditory nerve had time constants much longer than neurons in the cochlear nucleus. One possible explanation for this is that the longest stimulus used was one second. By counting spikes (a form of integration) for the entire second, the integration time of neurons could have been exceeded, and therefore the calculations of adding spikes would continue to show improvement (even if the animal could not use this). Because this technique integrates the neural data, it is quite possible that the shorter cochlear nucleus integration times (relative to the auditory nerve) reflect adaptation of the cochlear nucleus neurons. Further analysis would need to be performed to determine whether the cochlear nucleus neurons themselves appear to integrate or receive an already integrated signal.
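The point about spike counting can be illustrated with a small calculation. The sketch below assumes Poisson firing at constant rates with no adaptation, which is a simplification and not the analysis used in the cited study; the rates (20 and 30 spikes/sec) and the function name are hypothetical. Under these assumptions, the discriminability of a spike count keeps growing with the square root of the counting window, so an analysis that counts over the whole stimulus shows continued improvement regardless of what the animal can use.

```python
import math


def count_dprime(spont_rate_hz, driven_rate_hz, window_s):
    """d' between spike counts with and without a stimulus, assuming Poisson
    firing at constant rates (variance of a count equals its mean)."""
    mean_difference = (driven_rate_hz - spont_rate_hz) * window_s
    pooled_variance = 0.5 * (driven_rate_hz + spont_rate_hz) * window_s
    return mean_difference / math.sqrt(pooled_variance)


if __name__ == "__main__":
    # Hypothetical rates: 20 spikes/sec spontaneous, 30 spikes/sec driven.
    for window_s in (0.05, 0.25, 1.0):
        print(window_s, round(count_dprime(20.0, 30.0, window_s), 3))
```

Quadrupling the counting window doubles d' in this sketch. A neuron that adapts would break the constant-rate assumption and show less improvement at long durations.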

In the dorsal zone of the cat auditory cortex (Middlebrooks & Zook 1983, Sutter & Schreiner 1991), neurons have been found with integrative properties on the timescale observed in temporal integration (He 1998, He et al. 1997). These neurons have long latencies and are tuned to respond to longer sounds. These responses appear to have an already integrated output, except that the response is transient. One caveat is that this result is based on the subset of dorsal zone neurons with latencies greater than 30 msec, yet approximately one third of dorsal zone neurons have shorter latencies (Middlebrooks & Zook 1983, Stecker et al. 2005b, Sutter & Schreiner 1991). A little more than half (78/150) of the neurons with latencies greater than 30 msec had responses that increased with greater duration and at some point reached an asymptotic value. However, in 36% (54/150) of the neurons the activity decreased as duration was increased. This does not pose a major problem for a population code because the joint activity of a neuron that increases rate with increasing duration and one that decreases rate provides more information than either one individually. Although curves were not fit to the data, inspection suggests that the time constants of the curves relating firing rate to duration were 100–200 msec, consistent with the psychophysical literature. One important limitation of this study, with respect to this review, is that most of these recordings were made at highly suprathreshold intensities. Neurons in the dorsal zone are known to have relatively high thresholds (Middlebrooks & Zook 1983, Sutter & Schreiner 1991). Thus, how dorsal zone neurons could contribute to detection thresholds remains unclear. However, it should be noted that temporal integration of loudness shares many properties with that of detection (Zwislocki 1960).

Forward Masking

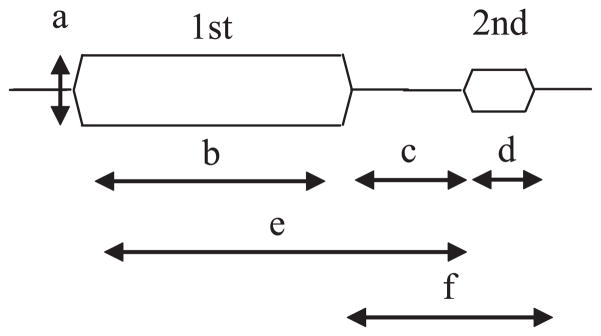

Temporal integration has also been studied using a forward masking paradigm. Two sounds are presented sequentially and subjects’ ability to detect the second sound (thresholds in dB) is measured as a function of the duration of the first. Masking occurs when the ability to detect the second sound decreases. There is a rich psychophysical literature on this topic and, in general, as the masker (first sound) duration is increased, the ability to detect the target (second sound) decreases (Figure 4; e.g., Weber & Green 1978). The idea with respect to integration is that the masker causes adaptation or habituation to energy at the frequencies present in the sound, and therefore the target is less perceivable. The difficulty in comparing perceptual masking to physiology is that results are influenced by many stimulus variables in addition to masker duration (shown by arrows in Figure 4). Therefore, in almost all of the reported studies, the differences in results can be accounted for by differences in the employed stimulus set. Nevertheless, based on physiological data, several neural mechanisms have been proposed for forward masking, including adaptation of auditory nerve fibers (Harris & Dallos 1979). Although peripheral adaptation almost certainly contributes to forward masking, a signal detection analysis indicates that limitations introduced more central to the auditory nerve are also necessary (Relkin & Turner 1988). Findings indicate roles for central adaptation and inhibition in areas including the cochlear nucleus (Boettcher et al. 1990, Kaltenbach et al. 1993) and cortex (Brosch & Schreiner 1997, Calford & Semple 1995). Most single-unit studies of forward masking have focused on how the response to the target sound recovers with a longer delay between the first and second sound. In the few studies that have investigated the effect of masker duration (Harris & Dallos 1979), the general trend is for more masking as the duration is increased, a trend consistent with the psychophysical results.

Figure 4.

Stimulus parameters in forward masking experiments. The first stimulus is the masker and the subject is asked to detect the second stimulus. Parameters that influence the strength of masking include the intensity of the masker (a), the duration of the masker (b), the interstimulus interval (c), the duration of the target (d), the onset interval between the masker and target (e), and the combined interstimulus interval and target duration (f).
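The relationships among the timing parameters in Figure 4 can be written out explicitly. The sketch below is only an illustration: the class and field names are hypothetical, and it assumes that the onset interval (e) is measured from masker onset to target onset, so that e = b + c and f = c + d. Because e and f are derived from b, c, and d, changing one timing parameter necessarily changes others, which is part of why results are hard to compare across stimulus sets.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ForwardMaskingTrial:
    """Hypothetical container for the stimulus parameters of one trial."""
    masker_level_db: float     # (a) intensity of the masker
    masker_duration_ms: float  # (b) duration of the masker
    interstimulus_ms: float    # (c) silent interval between masker and target
    target_duration_ms: float  # (d) duration of the target

    @property
    def onset_interval_ms(self) -> float:
        """(e) masker onset to target onset, assumed to equal b + c."""
        return self.masker_duration_ms + self.interstimulus_ms

    @property
    def isi_plus_target_ms(self) -> float:
        """(f) interstimulus interval plus target duration, c + d."""
        return self.interstimulus_ms + self.target_duration_ms


if __name__ == "__main__":
    trial = ForwardMaskingTrial(masker_level_db=70.0, masker_duration_ms=200.0,
                                interstimulus_ms=10.0, target_duration_ms=20.0)
    print(trial.onset_interval_ms, trial.isi_plus_target_ms)
```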

Neuroethological Studies

Other interesting properties of the biological basis of temporal integration can be gleaned from neuroethological studies. In songbirds, the ability to discriminate a song improves by increasing the sample time of the song (Ratcliffe & Weisman 1986). Neurons in a part of the forebrain called the high vocal center (also known as hyperstriatum ventrale pars caudalis) selectively respond to the bird’s own song (Margoliash 1983, 1986; Margoliash & Konishi 1985; Theunissen & Doupe 1998). The pronounced selectivity of these neurons was investigated by dissecting the song into individual syllables. The results showed that these neurons integrate across several syllables, sometimes up to the order of a second (Margoliash & Fortune 1992, Margoliash et al. 1994). For these neurons, if the song’s structure was degraded in any manner prior to the integration time, responsiveness dropped dramatically. Such neuronal response selectivity achieved by combining elements over such a long time is a very rare finding. Furthermore, it was found that within the high vocal center most neurons responded similarly to song. However, by changing the width of the analysis window, selectivity to different syllables in the song could be revealed (Sutter & Margoliash 1994). In other words, the bird’s forebrain could pull different features out of a sound by applying different integration times. The combination of results in the bird suggests that neurons in the forebrain can analyze a sound by simultaneously applying multiple integration times in parallel.

Echolocating bats provide another neuroethological example of neural integration. In these bats, neurons in one cortical area are highly selective for delays between echolocation emission and the returning echo (O’Neill & Suga 1979, Suga & O’Neill 1979). These neurons will only respond to a very narrow range of delays. To achieve this, these neurons integrate the emission and returning echo over a timescale of approximately 10–20 msec. In both of the provided examples (birds and bats) we can see cases where neurons that are highly selective for biologically relevant sounds can be found at higher levels in the brain, and that such selectivity comes about by integrating different sequentially occurring components of the auditory signal over time.

Gap Detection

In contrast to temporal integration, temporal resolution is the ability to resolve events in time. One way to study temporal resolution is to measure the ability to detect a gap or silent period in a sound. Gap detection can be studied with broadband noise or narrowband sounds such as tones. Because gap detection in narrowband sounds contains strong spectral cues that subjects can use, we first focus on the ability to detect a gap in noise. Humans’ ability to detect a gap in a broadband noise is on the scale of a few milliseconds (Eddins et al. 1992, Forrest & Green 1987, Snell et al. 1994). Many animal studies report remarkably similar gap detection thresholds, in the 2–6 msec range for broadband noise (e.g., Ison et al. 1991, Leitner et al. 1997, Syka et al. 2002, Wagner et al. 2003), including in macaques (personal observation). Although gap detection appears to be fairly simple, there is strong support for a role of the auditory cortex (Bowen et al. 2003, Ison et al. 1991, Kelly et al. 1996, Syka et al. 2002). Thresholds usually increase with auditory cortical lesions, inactivation, or interference. There have been surprisingly few studies of the neural correlate of gap detection. One difficulty in finding a single-unit response correlated with gap detection is that it is difficult to detect a dip in the response of neurons with sustained firing rates. In the auditory nerve, fibers reduce activity during a gap in broadband noise, and then give a slightly elevated onset response followed by sustained activity. For short gaps down to 1–2 msec, this can be seen in the average activity (Salvi & Arehole 1985). The average value for all single units sampled was significantly different at gap widths ≥2 msec compared with the response when no gap was present. This suggests that the population of auditory nerve fibers sampled has the information to detect a gap that is consistent with psychophysical performance.
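A gap-in-noise stimulus, and the kind of dip in sustained activity described above, can be sketched in a few lines. This is only an illustration under stated assumptions: the 20-kHz sample rate, the 1-msec analysis window, the criterion of 0.1, and all function names are hypothetical, and a windowed energy measure stands in for averaged neural activity; it is not a model of auditory nerve fibers.

```python
import math
import random


def gap_noise(duration_ms, gap_start_ms, gap_ms, fs_hz=20000, seed=0):
    """Gaussian broadband noise with a silent gap (samples set to zero)."""
    rng = random.Random(seed)
    n_samples = int(duration_ms * fs_hz / 1000)
    gap_first = int(gap_start_ms * fs_hz / 1000)
    gap_last = gap_first + int(gap_ms * fs_hz / 1000)
    return [0.0 if gap_first <= i < gap_last else rng.gauss(0.0, 1.0)
            for i in range(n_samples)]


def windowed_rms(signal, window_samples):
    """RMS level in consecutive, non-overlapping windows."""
    return [math.sqrt(sum(x * x for x in signal[i:i + window_samples])
                      / window_samples)
            for i in range(0, len(signal) - window_samples + 1, window_samples)]


def has_gap(signal, fs_hz=20000, window_ms=1.0, criterion=0.1):
    """Report a gap if any window's level dips below criterion x overall level."""
    window_samples = int(window_ms * fs_hz / 1000)
    overall = math.sqrt(sum(x * x for x in signal) / len(signal))
    return min(windowed_rms(signal, window_samples)) < criterion * overall


if __name__ == "__main__":
    print(has_gap(gap_noise(100, 50, 4)))  # 4-msec gap
    print(has_gap(gap_noise(100, 50, 0)))  # no gap
```

In this sketch the analysis window sets the resolution: a gap shorter than the window may never fill a whole window with silence, so the dip is diluted by the surrounding noise.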

As the auditory system ascends to the IC, the representation becomes much more dependent on phasic responses to the re-onset of the sound (Walton et al. 1997, 1998). Here the most sensitive neurons are those with an onset response to noise. The trend toward onset response encoding strengthens even more at the cortical level (Eggermont 1999). The IC and auditory cortex studies indicate sensitivity comparable to behavioral thresholds; however, because they are based on observations from averaged activity and do not employ signal detection metrics, it is not possible to make definitive statements. Nevertheless, the finding that such activity is obvious in the averaged responses suggests that these higher-level areas can encode gaps by pooling across neurons.
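One example of a signal detection metric that could be applied to trial-by-trial responses is the area under the receiver operating characteristic (ROC) curve. The sketch below is a generic illustration, not the analysis of any study cited here; the function name and the spike counts are hypothetical. It estimates the probability that a randomly chosen gap trial yields a larger spike count than a randomly chosen no-gap trial, with ties counted as one half.

```python
def roc_area(gap_counts, no_gap_counts):
    """Area under the ROC curve from per-trial spike counts.

    Returns 0.5 when the two distributions are indistinguishable and 1.0
    when every gap trial has a larger count than every no-gap trial.
    """
    wins = 0.0
    for gap_count in gap_counts:
        for no_gap_count in no_gap_counts:
            if gap_count > no_gap_count:
                wins += 1.0
            elif gap_count == no_gap_count:
                wins += 0.5
    return wins / (len(gap_counts) * len(no_gap_counts))


if __name__ == "__main__":
    # Hypothetical spike counts in a fixed window after the gap re-onset.
    print(roc_area([5, 7, 9], [1, 3, 5]))
```

Repeating such a calculation at each gap width would give a neurometric function that can be compared directly with behavioral thresholds, which averaged responses alone do not allow.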

There is a wide variety of results with respect to the ability to detect gaps in narrowband sounds. This is partially because rapid transitions in narrowband sounds (like tones) generate additional frequencies, making a click-like artifact that can be used by the subjects to determine that there is a gap, and different methods are more or less effective at eliminating this cue. However, an interesting illusion can be created by introducing a gap into a “foreground” sound and then filling the gap with broadband noise (Bashford et al. 1988; Warren 1970; Warren et al. 1972, 1988). Under these conditions, not only is gap detection impaired but subjects also report the foreground as being continuous. This effect has several names (auditory induction, continuity illusion, perceptual or phonemic restoration, amodal completion, and auditory fill-in) and can be thought of as resulting from the auditory system reallocating the energy present in the noise to the foreground (Bregman 1990). Induction occurs for the spectral content of sounds, but recently it has also been demonstrated for the temporal properties of amplitude-modulated sounds (Lyzenga et al. 2005). Induction has also been found in cats (Sugita 1997), cotton-top tamarin monkeys (Miller et al. 2001), and macaque monkeys (Petkov et al. 2003). Recent studies in macaques compared the single-neuron responses in A1 to stimuli that produce induction in these animals (Petkov et al. 2007). As was the case with gap detection, teasing apart the contribution of onset, offset, and sustained responses was useful. Onset, offset, and sustained responders contribute such that the noise removes the ability to observe the foreground sound’s transitions by obliterating onset responses, and it induces the perception of a continuous foreground sound through the sustained response. An inability to hear transitions of foreground sounds has been shown psychophysically to be a requirement for induction (Bregman & Dannenbring 1977).

SUMMARY POINTS

The primate auditory cortex is organized, functionally and anatomically, in a core-belt-parabelt manner that is similar between macaque monkeys and humans.

Spatial processing is similar between humans and macaques, and both appear to selectively process acoustic spatial information in caudal regions of the auditory cortex.

Visual stimuli can strongly influence auditory spatial representations and could do so by influencing early cortical areas, including A1, as well as multisensory areas.

Temporal integration time constants are similar between humans and other animals.

Gap detection appears to rely mainly on onset responses in the IC and auditory cortex, and there is a neural correlate of auditory fill-in in the auditory cortex of macaque monkeys.

Acknowledgments

The authors thank C. Broaddus, K. Campi, J. Engle, M. Fletcher, J. Johnson, M. Niwa, S. Nyon, and K. O’Connor for their contributions to the manuscript. The authors are supported by NIH grants EY013458 and AG024372 (GHR) and DC02514 (MLS), and the McDonnell Foundation (MLS).

Glossary

- A1: primary auditory cortex

- Koniocortical cytoarchitecture: cortical areas characterized by a well-developed inner granular layer (layer 4) corresponding to primary sensory areas

- Tonotopic organization: the regular and progressive spatial arrangement of tone frequency representations

- IC: inferior colliculus

- Place code: encoding a specific stimulus parameter by the activity of neurons located at a particular place within the structure

- HRTF: head-related transfer function

- fMRI: functional magnetic resonance imaging

- Bloch’s law: the perceived loudness and sound duration are reciprocally related so that their product is constant (loudness × sound duration = constant)

- Phasic responses: responses of a neuron at the onset or offset of the stimulus only, regardless of the length of the stimulus

Contributor Information

Gregg H. Recanzone, Email: ghrecanzone@ucdavis.edu.

Mitchell L. Sutter, Email: mlsutter@ucdavis.edu.

LITERATURE CITED

- Ahissar M, Ahissar E, Bergman H, Vaadia E. Encoding of sound-source location and movement: activity of single neurons and interactions between adjacent neurons in the monkey auditory cortex. J Neurophysiol. 1992;67:203–15. doi: 10.1152/jn.1992.67.1.203.

- Ahveninen J, Jääskeläinen IP, Raij T, Bonmassar G, Devore S, et al. Task-modulated “what” and “where” pathways in human auditory cortex. Proc Natl Acad Sci USA. 2006;103:14608–13. doi: 10.1073/pnas.0510480103.

- Alais D, Burr D. The ventriloquist effect results from near-optimal bimodal integration. Curr Biol. 2004;14:257–62. doi: 10.1016/j.cub.2004.01.029.

- Altmann CF, Bledowski C, Wibral M, Kaiser J. Processing of location and pattern changes of natural sounds in the human auditory cortex. NeuroImage. 2007;35:1192–200. doi: 10.1016/j.neuroimage.2007.01.007.

- Altshuler MW, Comalli PE. Effect of stimulus intensity and frequency on median horizontal plane sound localization. J Aud Res. 1975;15:262–65.

- Arnott SR, Binns MA, Grady CL, Alain C. Assessing the auditory dual-pathway model in humans. NeuroImage. 2004;22:401–8. doi: 10.1016/j.neuroimage.2004.01.014.

- Bartlett EL, Wang X. Long-lasting modulation by stimulus context in primate auditory cortex. J Neurophysiol. 2005;94:83–104. doi: 10.1152/jn.01124.2004.

- Bashford JA Jr, Meyers MD, Brubaker BS, Warren RM. Illusory continuity of interrupted speech: speech rate determines durational limits. J Acoust Soc Am. 1988;84:1635–38. doi: 10.1121/1.397178.

- Beitel RE, Kaas JH. Effects of bilateral and unilateral ablation of auditory cortex in cats on the unconditioned head orienting response to acoustic stimuli. J Neurophysiol. 1993;70:351–69. doi: 10.1152/jn.1993.70.1.351.

- Benevento LA, Fallon J, Davis BJ, Rezak M. Auditory-visual interaction in single cells in the cortex of the superior temporal sulcus and the orbital frontal cortex of the macaque monkey. Exp Neurol. 1977;57:849–72. doi: 10.1016/0014-4886(77)90112-1.

- Benson DA, Hienz RD, Goldstein MH Jr. Single-unit activity in the auditory cortex of monkeys actively localizing sound sources: spatial tuning and behavioral dependency. Brain Res. 1981;219:249–67. doi: 10.1016/0006-8993(81)90290-0.

- Bertelson P. Ventriloquism: a case of crossmodal perceptual grouping. In: Aschersleben G, Bachmann T, Musseler J, editors. Cognitive Contributions to the Perception of Spatial and Temporal Events. New York: Elsevier; 1999. pp. 346–62.

- Bilecen D, Scheffler K, Schmid N, Tschopp K, Seelig J. Tonotopic organization of the human auditory cortex as detected by BOLD-fMRI. Hear Res. 1998;126:19–27. doi: 10.1016/s0378-5955(98)00139-7.

- Bischoff M, Walter B, Blecker CR, Morgen K, Vaitl D, Sammer G. Utilizing the ventriloquism-effect to investigate audio-visual binding. Neuropsychologia. 2007;45:578–86. doi: 10.1016/j.neuropsychologia.2006.03.008.

- Bizley JK, Nodal RF, Bajo VM, Nelken I, King AJ. Physiological and anatomical evidence for multisensory interactions in auditory cortex. Cereb Cortex. 2006 Nov 29; doi: 10.1093/cercor/bhl128. (Epub ahead of print)

- Blauert J. Spatial Hearing: The Psychophysics of Human Sound Localization. Cambridge, MA: MIT Press; 1997.

- Boettcher FA, Salvi RJ, Saunders SS. Recovery from short-term adaptation in single neurons in the cochlear nucleus. Hear Res. 1990;48:125–44. doi: 10.1016/0378-5955(90)90203-2.

- Bowen GP, Lin D, Taylor MK, Ison JR. Auditory cortex lesions in the rat impair both temporal acuity and noise increment thresholds, revealing a common neural substrate. Cereb Cortex. 2003;13:815–22. doi: 10.1093/cercor/13.8.815.

- Bregman AS. Auditory Scene Analysis. Cambridge, MA: MIT Press; 1990.

- Bregman AS, Dannenbring GL. Auditory continuity and amplitude edges. Can J Psychol. 1977;31:151–59. doi: 10.1037/h0081658.

- Brosch M, Schreiner CE. Time course of forward masking tuning curves in cat primary auditory cortex. J Neurophysiol. 1997;77:923–43. doi: 10.1152/jn.1997.77.2.923.

- Brosch M, Selezneva E, Scheich H. Nonauditory events of a behavioral procedure activate auditory cortex of highly trained monkeys. J Neurosci. 2005;25:6797–806. doi: 10.1523/JNEUROSCI.1571-05.2005.

- Bruce C, Desimone R, Gross CG. Visual properties of neurons in a polysensory area in superior temporal sulcus of the macaque. J Neurophysiol. 1981;46:369–84. doi: 10.1152/jn.1981.46.2.369.

- Brugge JF, Reale RA, Hind JE. The structure of spatial receptive fields of neurons in primary auditory cortex of the cat. J Neurosci. 1996;16:4420–37. doi: 10.1523/JNEUROSCI.16-14-04420.1996.

- Brunetti M, Belardinelli P, Caulo M, Del Gratta C, Della Penna S, et al. Human brain activation during passive listening to sounds from different locations: an fMRI and MEG study. Hum Brain Mapp. 2005;26:251–61. doi: 10.1002/hbm.20164.

- Bushara KO, Grafman J, Hallett M. Neural correlates of auditory-visual stimulus onset asynchrony detection. J Neurosci. 2001;21:300–4. doi: 10.1523/JNEUROSCI.21-01-00300.2001.

- Bushara KO, Hanakawa T, Immisch I, Toma K, Kansaku K, Hallett M. Neural correlates of cross-modal binding. Nat Neurosci. 2003;6:190–95. doi: 10.1038/nn993.

- Busse L, Roberts KC, Crist RE, Weissman DH, Woldorff MG. The spread of attention across modalities and space in a multisensory object. Proc Natl Acad Sci USA. 2005;102:18751–56. doi: 10.1073/pnas.0507704102.

- Calford MB, Semple MN. Monaural inhibition in cat auditory cortex. J Neurophysiol. 1995;73:1876–91. doi: 10.1152/jn.1995.73.5.1876.

- Calvert GA. Crossmodal processing in the human brain: insights from functional neuroimaging studies. Cereb Cortex. 2001;11:1110–23. doi: 10.1093/cercor/11.12.1110.

- Calvert GA, Brammer MJ, Bullmore ET, Campbell R, Iversen SD, David AS. Response amplification in sensory-specific cortices during crossmodal binding. NeuroReport. 1999;10:2619–23. doi: 10.1097/00001756-199908200-00033.

- Carlile S, Leong P, Hyams S. The nature and distribution of errors in sound localization by human listeners. Hear Res. 1997;114:179–96. doi: 10.1016/s0378-5955(97)00161-5.

- Carr CE, Koppl C. Coding interaural time differences at low best frequencies in the barn owl. J Physiol Paris. 2004;98:99–112. doi: 10.1016/j.jphysparis.2004.03.003.

- Casseday JH, Neff WD. Auditory localization: role of auditory pathways in brain stem of the cat. J Neurophysiol. 1975;38:842–58. doi: 10.1152/jn.1975.38.4.842.

- Clack TD. Effect of signal duration on the auditory sensitivity of humans and monkeys (Macaca mulatta). J Acoust Soc Am. 1966;40:1140–46. doi: 10.1121/1.1910199.

- Clock AE, Salvi RJ, Saunders SS, Powers NL. Neural correlates of temporal integration in the cochlear nucleus of the chinchilla. Hear Res. 1993;71:37–50. doi: 10.1016/0378-5955(93)90019-w.

- Cohen YE, Russ BE, Gifford GW III. Auditory processing in the posterior parietal cortex. Behav Cogn Neurosci Rev. 2005;4:218–31. doi: 10.1177/1534582305285861.

- Comalli PE, Altshuler MW. Effect of stimulus intensity, frequency, and unilateral hearing loss on sound localization. J Aud Res. 1976;16:275–79.

- Costalupes JA. Temporal integration of pure tones in the cat. Hear Res. 1983;9:43–54. doi: 10.1016/0378-5955(83)90133-8.

- Cusick CG. The superior temporal polysensory region in monkeys. Cereb Cortex. 1997;12:435–68.

- Dallos PJ, Olsen WO. Integration of energy at threshold with gradual rise-fall tone pips. J Acoust Soc Am. 1964;36:743–51. [Google Scholar]

- Eddins DA, Green DM. Temporal integration and temporal resolution. In: Moore BCJ, editor. Hearing (Handbook of Perception and Cognition) 2 San Diego, CA: Academic; 1995. pp. 207–42. [Google Scholar]

- Eddins DA, Hall JW, 3rd, Grose JH. The detection of temporal gaps as a function of frequency region and absolute noise bandwidth. J Acoust Soc Am. 1992;91:1069–77. doi: 10.1121/1.402633. [DOI] [PubMed] [Google Scholar]

- Eggermont JJ. Neural correlates of gap detection in three auditory cortical fields in the cat. J Neurophysiol. 1999;81:2570–81. doi: 10.1152/jn.1999.81.5.2570. [DOI] [PubMed] [Google Scholar]

- Falchier A, Clavagnier S, Barone P, Kennedy H. Anatomical evidence of multimodal integration in primate striate cortex. J Neurosci. 2002;22:5749–59. doi: 10.1523/JNEUROSCI.22-13-05749.2002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fay RR, Coombs S. Neural mechanisms in sound detection and temporal summation. Hear Res. 1983;10:69–92. doi: 10.1016/0378-5955(83)90018-7. [DOI] [PubMed] [Google Scholar]

- Fitzpatrick DC, Batra R, Stanford TR, Kuwada S. A neuronal population code for sound localization. Nature. 1997;388:871–74. doi: 10.1038/42246. [DOI] [PubMed] [Google Scholar]

- Forrest TG, Green DM. Detection of partially filled gaps in noise and the temporal modulation transfer function. J Acoust Soc Am. 1987;82:1933–43. doi: 10.1121/1.395689. [DOI] [PubMed] [Google Scholar]

- Fu KM, Shah AS, O’Connell MN, McGinnis T, Eckholdt H, et al. Timing and laminar profile of eye-position effects on auditory responses in primate auditory cortex. J Neurophysiol. 2004;92:3522–31. doi: 10.1152/jn.01228.2003. [DOI] [PubMed] [Google Scholar]

- Fujiki N, Riederer KAJ, Jousmaki V, Makela JP, Hari R. Human cortical representation of virtual auditory space: differences between sound azimuth and elevation. Eur J Neurosci. 2002;16:2207–13. doi: 10.1046/j.1460-9568.2002.02276.x. [DOI] [PubMed] [Google Scholar]

- Furukawa S, Xu L, Middlebrooks JC. Coding of sound-source location by ensembles of cortical neurons. J Neurosci. 2000;20:1216–28. doi: 10.1523/JNEUROSCI.20-03-01216.2000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Garner WR, Miller GA. The masked threshold of pure tones as a function of duration. J Exp Psychol. 1947;37:293–303. doi: 10.1037/h0055734. [DOI] [PubMed] [Google Scholar]

- Gerken GM, Bhat VK, Hutchison-Clutter M. Auditory temporal integration and the power function model. J Acoust Soc Am. 1990;88:767–78. doi: 10.1121/1.399726.

- Gerken GM, Gunnarson AD, Allen CM. Three models of temporal summation evaluated using normal-hearing and hearing-impaired subjects. J Speech Hear Res. 1983;26:256–62. doi: 10.1044/jshr.2602.256.

- Ghazanfar AA, Maier JX, Hoffman KL, Logothetis NK. Multisensory integration of dynamic faces and voices in rhesus monkey auditory cortex. J Neurosci. 2005;25:5004–12. doi: 10.1523/JNEUROSCI.0799-05.2005.

- Green DM, Birdsall TG. Signal detection as a function of signal intensity and duration. J Acoust Soc Am. 1957;29:523–31.

- Hackett TA, Preuss TM, Kaas JH. Architectonic identification of the core region in auditory cortex of macaques, chimpanzees, and humans. J Comp Neurol. 2001;441:197–222. doi: 10.1002/cne.1407.

- Hackett TA, Stepniewska I, Kaas JH. Subdivisions of auditory cortex and ipsilateral cortical connections of the parabelt auditory cortex in macaque monkeys. J Comp Neurol. 1998a;394:475–95. doi: 10.1002/(sici)1096-9861(19980518)394:4<475::aid-cne6>3.0.co;2-z.

- Hackett TA, Stepniewska I, Kaas JH. Thalamocortical connections of the parabelt auditory cortex in macaque monkeys. J Comp Neurol. 1998b;400:271–86. doi: 10.1002/(sici)1096-9861(19981019)400:2<271::aid-cne8>3.0.co;2-6.

- Harper NS, McAlpine D. Optimal neural population coding of an auditory spatial cue. Nature. 2004;430:682–86. doi: 10.1038/nature02768.

- Harris DM, Dallos P. Forward masking of auditory nerve fiber responses. J Neurophysiol. 1979;42:1083–107. doi: 10.1152/jn.1979.42.4.1083.

- Hautzel H, Taylor JG, Krause BJ, Schmitz N, Tellmann L, et al. The motion aftereffect: more than area V5/MT? Evidence from 15O-butanol PET studies. Brain Res. 2001;892:281–92. doi: 10.1016/s0006-8993(00)03224-8.

- He J. Long-latency neurons in auditory cortex involved in temporal integration: theoretical analysis of experimental data. Hear Res. 1998;121:147–60. doi: 10.1016/s0378-5955(98)00076-8.

- He J, Hashikawa T, Ojima H, Kinouchi Y. Temporal integration and duration tuning in the dorsal zone of cat auditory cortex. J Neurosci. 1997;17:2615–25. doi: 10.1523/JNEUROSCI.17-07-02615.1997.

- Heffner HE. The role of macaque auditory cortex in sound localization. Acta Otolaryngol Stockh Suppl. 1997;532:22–27. doi: 10.3109/00016489709126140.

- Heffner HE, Heffner RS. Effect of bilateral auditory cortex lesions on sound localization in Japanese macaques. J Neurophysiol. 1990;64:915–31. doi: 10.1152/jn.1990.64.3.915.

- Heffner RS. Primate hearing from a mammalian perspective. Anat Rec A Discov Mol Cell Evol Biol. 2004;281A:1111–22. doi: 10.1002/ar.a.20117.

- Howard IP, Templeton WB. Human Spatial Orientation. New York: Wiley; 1966.

- Hughes JW. The threshold of audition for short periods of stimulation. Proc R Soc Lond B Biol Sci. 1946;133:486–90. doi: 10.1098/rspb.1946.0026.

- Hunter MD, Griffiths TD, Farrow TFD, Zheng Y, Wilkinson ID, et al. A neural basis for the perception of voices in external auditory space. Brain. 2003;126:161–69. doi: 10.1093/brain/awg015.

- Imig TJ, Irons WA, Samson FR. Single-unit selectivity to azimuthal direction and sound pressure level of noise bursts in cat high-frequency primary auditory cortex. J Neurophysiol. 1990;63:1448–66. doi: 10.1152/jn.1990.63.6.1448.

- Ison JR, O’Connor K, Bowen GP, Bocirnea A. Temporal resolution of gaps in noise by the rat is lost with functional decortication. Behav Neurosci. 1991;105:33–40. doi: 10.1037//0735-7044.105.1.33.

- Jack CE, Thurlow WR. Effects of degree of visual association and angle of displacement on the “ventriloquism” effect. Percept Mot Skills. 1973;37:967–79. doi: 10.1177/003151257303700360.

- Jeffress LA. A place theory of sound localization. J Comp Psychol. 1948;41:35–39. doi: 10.1037/h0061495.

- Jenkins WM, Merzenich MM. Role of cat primary auditory cortex for sound-localization behavior. J Neurophysiol. 1984;52:819–47. doi: 10.1152/jn.1984.52.5.819.

- Jones EG. Chemically defined parallel pathways in the monkey auditory system. Ann NY Acad Sci. 2003;999:218–33. doi: 10.1196/annals.1284.033.

- Jones EG, Dell’Anna ME, Molinari M, Rausell E, Hashikawa T. Subdivisions of macaque monkey auditory cortex revealed by calcium-binding protein immunoreactivity. J Comp Neurol. 1995;362:153–70. doi: 10.1002/cne.903620202.

- Kaas JH, Hackett TA. Subdivisions of auditory cortex and processing streams in primates. Proc Natl Acad Sci USA. 2000;97:11793–99. doi: 10.1073/pnas.97.22.11793.

- Kaltenbach JA, Meleca RJ, Falzarano PR, Myers SF, Simpson TH. Forward masking properties of neurons in the dorsal cochlear nucleus: possible role in the process of echo suppression. Hear Res. 1993;67:35–44. doi: 10.1016/0378-5955(93)90229-t.

- Kavanagh GL, Kelly JB. Midline and lateral field sound localization in the ferret (Mustela putorius): contribution of the superior olivary complex. J Neurophysiol. 1992;67:987–1016. doi: 10.1152/jn.1992.67.6.1643.

- Kayser C, Petkov CI, Augath M, Logothetis NK. Functional imaging reveals visual modulation of specific fields in auditory cortex. J Neurosci. 2007;27:1824–35. doi: 10.1523/JNEUROSCI.4737-06.2007.

- Kelly JB, Rooney BJ, Phillips DP. Effects of bilateral auditory cortical lesions on gap-detection thresholds in the ferret (Mustela putorius). Behav Neurosci. 1996;110:542–50. doi: 10.1037//0735-7044.110.3.542.

- King AJ, Bajo VM, Bizley JK, Campbell RAA, Nodal FR, et al. Physiological and behavioral studies of spatial coding in the auditory cortex. Hear Res. 2007 Jan 19; doi: 10.1016/j.heares.2007.01.001. (Epub ahead of print)

- Kosaki H, Hashikawa T, He J, Jones EG. Tonotopic organization of auditory cortical fields delineated by parvalbumin immunoreactivity in macaque monkeys. J Comp Neurol. 1997;386:304–16.

- Krumbholz K, Schonwiesner M, Rubsamen R, Zilles K, Fink GR, von Cramon DY. Hierarchical processing of sound location and motion in the human brainstem and planum temporale. Eur J Neurosci. 2005;21:230–38. doi: 10.1111/j.1460-9568.2004.03836.x.

- Lehmann C, Herdener M, Esposito F, Hubl D, di Salle F, et al. Differential patterns of multisensory interactions in core and belt areas of human auditory cortex. NeuroImage. 2006;31:294–300. doi: 10.1016/j.neuroimage.2005.12.038.

- Leitner DS, Carmody DP, Girten EM. A signal detection theory analysis of gap detection in the rat. Percept Psychophys. 1997;59:774–82. doi: 10.3758/bf03206023.

- Leonard CM, Puranik C, Kuldau JM, Lombardino LJ. Normal variation in the frequency and location of human auditory cortex landmarks. Heschl’s gyrus: Where is it? Cereb Cortex. 1998;8:397–406. doi: 10.1093/cercor/8.5.397.

- Lewald J. Rapid adaptation to auditory-visual spatial disparity. Learn Mem. 2002;9:268–78. doi: 10.1101/lm.51402.

- Lyzenga J, Carlyon RP, Moore BC. Dynamic aspects of the continuity illusion: perception of level and of the depth, rate, and phase of modulation. Hear Res. 2005;210:30–41. doi: 10.1016/j.heares.2005.07.002.

- Malhotra S, Lomber SG. Sound localization during homotopic and heterotopic bilateral cooling deactivation of primary and nonprimary auditory cortical areas in the cat. J Neurophysiol. 2007;97:26–43. doi: 10.1152/jn.00720.2006.

- Margoliash D. Acoustic parameters underlying the responses of song-specific neurons in the white-crowned sparrow. J Neurosci. 1983;3:1039–57. doi: 10.1523/JNEUROSCI.03-05-01039.1983.

- Margoliash D. Preference for autogenous song by auditory neurons in a song system nucleus of the white-crowned sparrow. J Neurosci. 1986;6:1643–61. doi: 10.1523/JNEUROSCI.06-06-01643.1986.

- Margoliash D, Fortune ES. Temporal and harmonic combination-sensitive neurons in the zebra finch’s HVc. J Neurosci. 1992;12:4309–26. doi: 10.1523/JNEUROSCI.12-11-04309.1992.

- Margoliash D, Fortune ES, Sutter ML, Yu AC, Wren-Hardin BD, Dave A. Distributed representation in the song system of oscines: evolutionary implications and functional consequences. Brain Behav Evol. 1994;44:247–64. doi: 10.1159/000113580.

- Margoliash D, Konishi M. Auditory representation of autogenous song in the song system of white-crowned sparrows. Proc Natl Acad Sci USA. 1985;82:5997–6000. doi: 10.1073/pnas.82.17.5997.

- Masterton B, Jane JA, Diamond IT. Role of brainstem auditory structures in sound localization. I. Trapezoid body, superior olive, and lateral lemniscus. J Neurophysiol. 1967;30:341–59. doi: 10.1152/jn.1967.30.2.341.

- Mazzoni P, Bracewell RM, Barash S, Andersen RA. Spatially tuned auditory responses in area LIP of macaques performing delayed memory saccades to acoustic targets. J Neurophysiol. 1996;75:1233–41. doi: 10.1152/jn.1996.75.3.1233.

- McAlpine D. Creating a sense of auditory space. J Physiol. 2005;566:21–28. doi: 10.1113/jphysiol.2005.083113.

- Merzenich MM, Brugge JF. Representation of the cochlear partition on the superior temporal plane of the macaque monkey. Brain Res. 1973;50:275–96. doi: 10.1016/0006-8993(73)90731-2.

- Middlebrooks JC, Clock AE, Xu L, Green DM. A panoramic code for sound location by cortical neurons. Science. 1994;264:842–44. doi: 10.1126/science.8171339.

- Middlebrooks JC, Green DM. Sound localization by human listeners. Annu Rev Psychol. 1991;42:135–59. doi: 10.1146/annurev.ps.42.020191.001031.

- Middlebrooks JC, Pettigrew JD. Functional classes of neurons in primary auditory cortex of cat distinguished by sensitivity to sound location. J Neurosci. 1981;1:107–20. doi: 10.1523/JNEUROSCI.01-01-00107.1981.

- Middlebrooks JC, Zook JM. Intrinsic organization of the cat’s medial geniculate body identified by projections to binaural response-specific bands in the primary auditory cortex. J Neurosci. 1983;3:203–24. doi: 10.1523/JNEUROSCI.03-01-00203.1983.

- Miller CT, Dibble E, Hauser MD. Amodal completion of acoustic signals by a nonhuman primate. Nat Neurosci. 2001;4:783–84. doi: 10.1038/90481.

- Molinari M, Dell’Anna ME, Rausell E, Leggio MG, Hashikawa T, Jones EG. Auditory thalamocortical pathways defined in monkeys by calcium-binding protein immunoreactivity. J Comp Neurol. 1995;362:171–94. doi: 10.1002/cne.903620203.

- Morel A, Garraghty PE, Kaas JH. Tonotopic organization, architectonic fields, and connections of auditory cortex in macaque monkeys. J Comp Neurol. 1993;335:437–59. doi: 10.1002/cne.903350312.

- Morosan P, Rademacher J, Schleicher A, Amunts K, Schormann T, Zilles K. Human primary auditory cortex: cytoarchitectonic subdivisions and mapping into a spatial reference system. NeuroImage. 2001;13:684–701. doi: 10.1006/nimg.2000.0715.

- Munson WA. The growth of auditory sensation. J Acoust Soc Am. 1947;19:584–91.

- O’Connor K, Sutter ML. Auditory temporal integration in primates: a comparative analysis. In: Ghazanfar A, editor. Primate Audition: Ethology and Neurobiology. Boca Raton, FL: CRC Press; 2003. pp. 27–43.

- O’Connor KN, Barruel P, Hajalilou R, Sutter ML. Auditory temporal integration in the rhesus macaque (Macaca mulatta). J Acoust Soc Am. 1999;106:954–65. doi: 10.1121/1.427108.

- O’Neill WE, Suga N. Target range-sensitive neurons in the auditory cortex of the mustache bat. Science. 1979;203:69–73. doi: 10.1126/science.758681.

- Pandya DN. Anatomy of the auditory cortex. Rev Neurol (Paris). 1995;151:486–94.

- Pavani F, Macaluso E, Warren JD, Driver J, Griffiths TD. A common cortical substrate activated by horizontal and vertical sound movement in the human brain. Curr Biol. 2002;12:1584–90. doi: 10.1016/s0960-9822(02)01143-0.

- Petkov CI, Kang X, Alho K, Bertrand O, Yund EW, Woods DL. Attentional modulation of human auditory cortex. Nat Neurosci. 2004;7:658–63. doi: 10.1038/nn1256.

- Petkov CI, Kayser C, Augath M, Logothetis NK. Functional imaging reveals numerous fields in the monkey auditory cortex. PLoS Biol. 2006;4:e215. doi: 10.1371/journal.pbio.0040215.

- Petkov CI, O’Connor KN, Sutter ML. Illusory sound perception in macaque monkeys. J Neurosci. 2003;23:9155–61. doi: 10.1523/JNEUROSCI.23-27-09155.2003.

- Petkov CI, O’Connor KN, Sutter ML. Encoding of illusory continuity in primary auditory cortex. Neuron. 2007;54:153–65. doi: 10.1016/j.neuron.2007.02.031.

- Phan ML, Recanzone GH. Single-neuron responses to rapidly presented temporal sequences in the primary auditory cortex of the awake macaque monkey. J Neurophysiol. 2007;97:1726–37. doi: 10.1152/jn.00698.2006.