Abstract

Distance learning is an important tool for training HIV health workers. However, there is limited evidence on the design and evaluation of distance learning HIV curricula and tools. We therefore designed, implemented, and evaluated a distance learning course on HIV management for clinical care providers in India. Participant scores rose significantly from a mean of 78.4% correct on the pretest to 87.5% correct on the posttest (P < .001). After course completion, participants were more likely to report confidence in starting an initial antiretroviral (ARV) regimen, understanding ARV toxicities, encouraging patient adherence, diagnosing immune reconstitution syndrome, and monitoring patients on ARV medications (P ≤ .05). All responding participants (100%) strongly agreed or agreed that they would recommend this course to others, and 96% strongly agreed or agreed that they would take a course in this format again. A pragmatic approach to HIV curriculum development and evaluation resulted in reliable learning outcomes, as well as learner satisfaction and improvement in knowledge.

Keywords: HIV, distance learning, India, evaluation, Internet

Introduction

eLearning (electronically supported teaching and learning), particularly online or distance learning, has been advocated as an important tool for training health workers in low- and middle-income countries (LMICs), and its deployment has grown substantially.1,2 eLearning offers opportunities for cost-effective capacity building in LMICs and can bring together educators and learners who might not otherwise be able to interact easily.3,4 In particular, the field of HIV has developed a number of eLearning resources disseminated by disparate organizations across the globe.5–7

However, despite this abundance of source material, there is limited expertise and evidence in the HIV community on the design and evaluation of HIV-related eLearning curricula and tools.8 Proper, pragmatic evaluations of educational programs are important to optimize their impact and to ensure that they achieve the desired results.9 We therefore systematically designed, implemented, and evaluated a multimodal distance learning course on HIV management for clinical care providers in India and report our experiences here.

Methods

Study Setting

The Johns Hopkins Center for Clinical Global Health Education (CCGHE, www.ccghe.jhmi.edu) was established in 2005 to provide health care providers in LMICs with access to high-quality training.10 The CCGHE made a strategic decision to develop, use, and evaluate distance learning platforms to achieve its mission. In late 2009, in collaboration with B. J. Medical College, a leading research and education institution in Pune, India, CCGHE designed, implemented, and evaluated one of its eLearning HIV courses. This study was approved by the institutional review board of Johns Hopkins University.

Curriculum Design

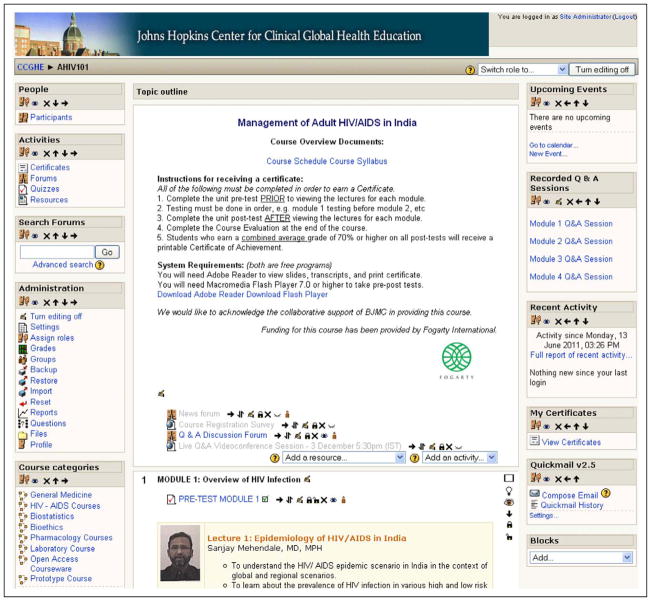

The course on the management of adult HIV/AIDS in India was designed using established principles of curriculum development.9 The course was multimodal, consisting of 16 online expert lectures divided into 4 content areas covering fundamental aspects of HIV/AIDS care, 4 live question-and-answer videoconferencing sessions, pre-/posttests, and the awarding of a certificate (Figures 1–4). Course directors, coordinators, and instructors were a mix of local and US-based educators. The free course was advertised through 3 leading HIV/AIDS organizations in India (B. J. Medical College, National AIDS Research Institute, and Y. R. Gaitonde Centre for AIDS Research and Education). Registration, online lectures, and tests were administered over a 1-month period using the free, open-source learning management system Moodle (www.moodle.org; see Figure 1).

Figure 1.

HIV distance learning course Moodle homepage.

Figure 4.

Example of certificate granted after completing distance learning course.

Evaluation Design

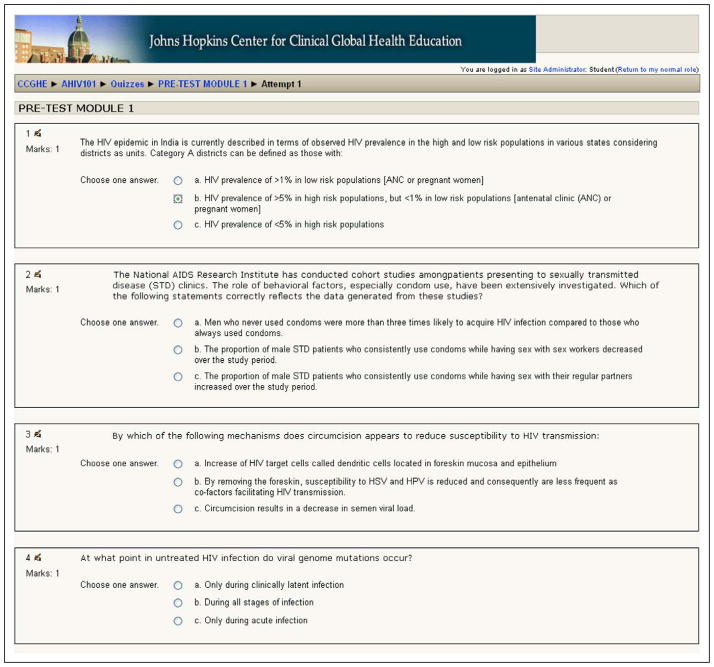

Participant knowledge was assessed through pre-/posttests consisting of online multiple-choice examinations. Before the course was implemented, each instructor wrote questions based on the learning objectives of his or her lecture. Instructors, all of whom had expertise in HIV, were asked to follow a 1-page question-writing guide (available upon request) that applies established principles of question writing to improve question quality and uniformity.11 Two authors (L.W.C. and S.S.) then edited the questions for clarity and content. In total, 108 questions were developed. These questions were randomly assigned, stratified by lecture, to either the pretest or the posttest to help balance question difficulty and content between the 2 tests. Participant satisfaction and self-reported intended behaviors were evaluated with pre-/postcourse surveys containing categorical and Likert scale items, administered through surveymonkey.com via a link provided on Moodle.
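The stratified randomization described above can be sketched as follows. This is a minimal illustration, not the procedure the authors actually ran (they point to services such as www.random.org); it assumes each question is identified by a hypothetical `(question_id, lecture_id)` pair and uses Python's `random.Random` in place of an external randomization service. The rule for placing a leftover question when a lecture has an odd number of items is also an assumption.

```python
import random
from collections import defaultdict

def split_pre_post(questions, seed=None):
    """Randomly assign questions to a pretest or posttest pool, stratified by lecture.

    `questions` is a list of (question_id, lecture_id) pairs (hypothetical format).
    Within each lecture the questions are shuffled and split in half; when a lecture
    has an odd number of questions, the leftover item goes to whichever pool is
    currently smaller (ties broken at random), so the pools never differ by more than 1.
    """
    rng = random.Random(seed)
    by_lecture = defaultdict(list)
    for qid, lecture in questions:
        by_lecture[lecture].append(qid)

    pretest, posttest = [], []
    for lecture in sorted(by_lecture):
        items = by_lecture[lecture][:]
        rng.shuffle(items)
        half = len(items) // 2
        pretest.extend(items[:half])
        posttest.extend(items[half:2 * half])
        if len(items) % 2:  # odd count: place the leftover item
            extra = items[-1]
            if len(pretest) < len(posttest) or (
                len(pretest) == len(posttest) and rng.random() < 0.5
            ):
                pretest.append(extra)
            else:
                posttest.append(extra)
    return pretest, posttest

# Hypothetical example: 108 question IDs spread across 16 lectures.
questions = [(f"Q{i}", i % 16) for i in range(108)]
pre, post = split_pre_post(questions, seed=2009)
print(len(pre), len(post))  # 54 54
```

Because each lecture contributes (nearly) equal numbers of items to each test, content coverage stays balanced even though difficulty is only balanced in expectation.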

Analytic Methods

Pre-/posttest quality was evaluated with test item analysis using a simple Excel spreadsheet designed by our group (available upon request), and t tests were used to compare pretest scores with posttest scores. Participant survey responses were analyzed descriptively, and responses before and after the course were compared using Wilcoxon-Mann-Whitney tests, a nonparametric analog of the independent-samples t test. Statistical tests were performed using SAS 9.2 (SAS Institute Inc, Cary, North Carolina).
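Because Likert responses take only 5 values, the before/after survey samples contain many ties. The following sketch shows one way a Wilcoxon-Mann-Whitney comparison handles this: midranks for tied values and a tie-corrected normal approximation for the P value. It is written in Python for illustration and is not the authors' SAS code; the optional 0.5 continuity correction is an assumption, since the analysis settings are not reported.

```python
import math

def midranks(values):
    """Return 1-based ranks for `values`, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def mann_whitney(x, y, continuity=True):
    """Two-sided Wilcoxon-Mann-Whitney test (normal approximation with tie correction).

    Returns (U for sample x, approximate two-sided P value).
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    combined = list(x) + list(y)
    ranks = midranks(combined)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    # Tie correction: sum of (t^3 - t) over each group of t tied values.
    counts = {}
    for v in combined:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t**3 - t for t in counts.values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:  # every response identical: no evidence of a difference
        return u1, 1.0
    diff = abs(u1 - mean_u)
    if continuity:
        diff = max(diff - 0.5, 0.0)
    z = diff / math.sqrt(var_u)
    return u1, math.erfc(z / math.sqrt(2))  # erfc(z/sqrt 2) = 2 * (1 - Phi(z))
```

Called as `mann_whitney(before, after)` on lists of Likert codes (1–5), it returns the U statistic and an approximate P value; the independent-samples form matches the unpaired survey design described in the Discussion.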

Results

Participant Characteristics

In all, 115 participants registered for the course, of whom 92 (80%) completed all sessions and tests. Most participants responding to the precourse survey (n = 78) were attending physicians (59%), residents (31%), or students (13%). Most were men (76%), and all were affiliated with a research institution. The majority specialized in general medicine (81%), with smaller numbers specializing in obstetrics/gynecology (8%) and pediatrics (6%). Most participants were 26 to 35 years of age (78%), followed by 18 to 25 (11%) and 36 to 45 (8%) years. Most participants indicated that they had access to a computer (81%) or the Internet (71%) "all the time" or "daily."

Test Quality

Item analysis of the pre-/posttests found internal consistency and reliability to be acceptable. The pretest had an average item discrimination of 0.36 (discrimination describes an item's ability to sensitively measure individual differences; values range from −1 to +1, and values >0.30 are usually considered acceptable11), an average item difficulty (proportion of examinees answering an item correctly) of 0.78, and a Cronbach α of .88 (values >0.70 are usually considered acceptable). The posttest had an average discrimination of 0.30, an average item difficulty of 0.87, and a Cronbach α of .83.
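The three item statistics above can be computed from a 0/1 score matrix (rows are examinees, columns are items). The sketch below is an assumption about how such a spreadsheet might work, not a reproduction of the authors' Excel tool: difficulty is the proportion correct, discrimination is taken to be the corrected item-total (point-biserial) correlation, which ranges from −1 to +1 (an upper-lower group index is a common alternative), and Cronbach α is k/(k − 1) × (1 − Σ item variances / total-score variance).

```python
from statistics import mean, pstdev, pvariance

def pearson(x, y):
    """Pearson correlation; NaN when either variable has no variance."""
    sx, sy = pstdev(x), pstdev(y)
    if sx == 0 or sy == 0:
        return float("nan")  # e.g., an item every examinee answered correctly
    mx, my = mean(x), mean(y)
    return mean((a - mx) * (b - my) for a, b in zip(x, y)) / (sx * sy)

def item_analysis(scores):
    """Return (difficulty per item, discrimination per item, Cronbach alpha).

    `scores` is a list of examinee rows, each a list of 0/1 item scores.
    """
    k = len(scores[0])
    totals = [sum(row) for row in scores]
    difficulty, discrimination = [], []
    for j in range(k):
        item = [row[j] for row in scores]
        difficulty.append(mean(item))  # proportion answering correctly
        # Correlate the item with the total of the *other* items (corrected item-total).
        rest = [t - x for t, x in zip(totals, item)]
        discrimination.append(pearson(item, rest))
    total_var = pvariance(totals)
    if total_var == 0:
        return difficulty, discrimination, float("nan")
    item_var = sum(pvariance([row[j] for row in scores]) for j in range(k))
    alpha = k / (k - 1) * (1 - item_var / total_var)
    return difficulty, discrimination, alpha
```

Averaging `difficulty` and `discrimination` over the 54 items of each test would give summary figures of the kind reported above.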

Participant Outcomes

The mean proportion of questions answered correctly rose significantly from 78.4% on the pretest (mean 42.4 of 54 items correct; standard deviation 8.1; range 23–54) to 87.5% on the posttest (mean 47.2 of 54 items correct; standard deviation 5.6; range 23–54; P < .001, t test).
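As a rough arithmetic check, a two-sample t statistic can be recomputed from the reported means and standard deviations. The text does not state which t test was used or how many participants took each test, so this sketch assumes Welch's unequal-variance t test and n = 92 per test (the number who completed all sessions and tests); both are assumptions. The P value uses a normal approximation, which is reasonable only because the degrees of freedom are large.

```python
import math

def welch_t(m1, s1, n1, m2, s2, n2):
    """Welch's t statistic (group 2 minus group 1) and Welch-Satterthwaite df."""
    v1, v2 = s1**2 / n1, s2**2 / n2
    t = (m2 - m1) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df

# Reported items correct out of 54; n = 92 per test is an ASSUMPTION.
t, df = welch_t(42.4, 8.1, 92, 47.2, 5.6, 92)
# Normal approximation to the two-sided P value (adequate for large df).
p_approx = math.erfc(abs(t) / math.sqrt(2))
```

Under these assumptions t is roughly 4.7 with about 160 degrees of freedom, consistent with the reported P < .001.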

As shown in Table 1, after completing the course, responding participants were more likely to report confidence in several aspects of patient management, including starting an initial antiretroviral (ARV) regimen, understanding ARV toxicities, encouraging patient adherence, diagnosing immune reconstitution syndrome, and monitoring patients on ARV medications (all Ps ≤ .05). Table 2 shows additional postcourse survey responses, which were mostly positive about the course's influence on participant knowledge and self-reported intended future behaviors. All responding participants (100%) strongly agreed or agreed that they would recommend this course to others, and 96% strongly agreed or agreed that they would take a course in this format again.
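The "strongly agreed or agreed" percentages correspond to responses coded 1 or 2 on the 5-point scale used in Tables 1 and 2. For example, a range of [1–2] for the recommendation item means every response was 1 or 2, hence 100%. A minimal helper, assuming responses are stored as integer codes (the data in the usage line are hypothetical):

```python
def percent_agree(responses):
    """Percentage of valid Likert codes (1-5) that are 1 (strongly agree) or 2 (agree)."""
    valid = [r for r in responses if r in (1, 2, 3, 4, 5)]
    if not valid:
        raise ValueError("no valid Likert responses")
    return 100 * sum(r <= 2 for r in valid) / len(valid)

print(percent_agree([1, 1, 2, 3]))  # 75.0 (hypothetical responses)
```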

Table 1.

Participant Survey Responses Before and After Completion of an HIV Distance Learning Course

| Statement | Before (n = 70)ᵃ, Mean (SD) [Range] | After (n = 55), Mean (SD) [Range] | P Valueᵇ |
|---|---|---|---|
| I am confident taking care of patients with HIV. | 1.83 (0.82) [1–4] | 1.58 (0.60) [1–3] | .12 |
| I am confident performing a comprehensive initial evaluation of a patient with HIV. | 1.67 (0.76) [1–4] | 1.56 (0.58) [1–3] | .63 |
| I am confident starting an initial antiretroviral regimen in a patient with HIV. | 2.04 (1.0) [1–5] | 1.55 (0.60) [1–3] | .0092 |
| I am confident with my understanding of HIV transmission. | 1.53 (0.70) [1–4] | 1.40 (0.56) [1–3] | .35 |
| I am confident in my understanding of antiretroviral toxicities. | 2.09 (0.89) [1–5] | 1.65 (0.62) [1–3] | .005 |
| I am confident in my ability to encourage patients to adhere to their antiretrovirals. | 1.71 (0.67) [1–3] | 1.48 (0.62) [1–3] | .05 |
| I am confident in knowing what positive prevention interventions are needed for my patients with HIV. | 1.70 (0.67) [1–3] | 1.52 (0.61) [1–3] | .15 |
| I am confident in my ability to diagnose immune reconstitution syndrome. | 2.10 (1.0) [1–5] | 1.60 (0.69) [1–4] | .0064 |
| I am confident in my understanding of how to best monitor my patients on antiretrovirals. | 2.04 (0.95) [1–5] | 1.56 (0.63) [1–3] | .0038 |

Abbreviation: SD, standard deviation.

ᵃLikert scale response options were as follows: 1 = Strongly agree; 2 = Agree; 3 = Neutral; 4 = Disagree; 5 = Strongly disagree.

ᵇWilcoxon 2-sample test.

Table 2.

Participant Survey Responses After Completing an HIV Distance Learning Course

| Statement | Responseᵃ (n = 55), Mean (SD) [Range] |
|---|---|
| I will care for my HIV-infected patients differently as a result of this course. | 1.58 (0.69) [1–4] |
| I have a worse understanding of HIV counseling and testing after this course. | 3.34 (1.5) [1–5] |
| I will encourage ART adherence more with my patients as a result of this course. | 1.60 (0.66) [1–3] |
| I will change when I start ART on my patients as a result of this course. | 2.06 (0.99) [1–5] |
| I will monitor my patients on ART differently as a result of this course. | 1.83 (0.94) [1–5] |
| I have a worse understanding of ART toxicities as a result of this course. | 3.40 (1.4) [1–5] |
| I will start some of my patients on different ART regimens as a result of this course. | 2.33 (1.0) [1–5] |
| I will encourage more positive prevention with my patients as a result of this course. | 1.58 (0.63) [1–3] |
| I would recommend this course to a friend or colleague. | 1.27 (0.45) [1–2] |
| I would take a course in this format again. | 1.40 (0.57) [1–3] |

Abbreviations: ART, antiretroviral therapy; SD, standard deviation.

ᵃLikert scale response options were as follows: 1 = Strongly agree; 2 = Agree; 3 = Neutral; 4 = Disagree; 5 = Strongly disagree.

Discussion

A multimodal distance learning course on the management of HIV in India was successfully designed, implemented, and evaluated in a stepwise fashion. Evaluation results demonstrated good baseline knowledge, improved participant knowledge after course completion, high satisfaction, and encouraging self-reported intended behaviors. This pragmatic, stepwise process can be implemented with modest resources and expertise, offering 1 model for similar HIV eLearning programs seeking to optimize their assessments and impact.

The course implementation and evaluation process we used has 3 key components that can be readily emulated: course design, the pre-/posttest, and the pre-/postcourse survey. Initial design of the course was informed by a straightforward curriculum design process.9 This process stresses the need to first identify problems and needs, particularly learner needs, then set goals and objectives, and finally align curriculum content and educational strategies with those objectives. Our group's past experience with distance learning and a long-standing relationship between the collaborating institutions also aided this process and promoted sustainable capacity building.

The second component of the process was the design of participant knowledge tests. This component required instructors to follow a simple set of question-writing guidelines. Authors with experience in test item creation still edited some items, but only a minority (<10%) required editing, and most of these edits addressed grammar or clarity. Skill in writing and editing high-quality test items can be developed using a number of didactic resources.11 We also chose to randomize knowledge test items into a pretest and a posttest. This randomization was a pragmatic way to balance difficulty between the 2 tests without pretesting items. Randomization programs are freely available on the Internet, for example, www.random.org. Although randomization does not guarantee that difficulty is evenly balanced, pretesting and analyzing test items is time and resource intensive and may not be practical for many organizations.

The final component of our implementation and evaluation was the pre-/postcourse survey. In this straightforward method, implementers first gathered baseline data with a precourse survey covering not only participants' demographic and professional characteristics but also their self-reported intended behaviors and competencies. The postcourse survey results then allowed straightforward comparison of differences using simple statistical techniques, as well as assessment of course satisfaction and identification of areas for improvement. Although self-reported intended behavior is only a proximal outcome of educational interventions, it is nevertheless valuable and simple to assess.12

This study has several important limitations. It involved a relatively small sample of participants and reports on a single distance learning experience. Additionally, our survey response rate was incomplete, which may have introduced bias and prevented us from using more rigorous paired analysis methods. Future courses may benefit from incentives, for example, requiring completion of the pre-/postcourse surveys to receive a certificate. Finally, the internal validity of our evaluation could be further strengthened with more rigorous methods, such as pretesting all test items. However, the process we used was deliberately pragmatic, aiming to make efficient use of limited resources.

In summary, without the need for extensive resources or expertise, a pragmatic stepwise approach to curriculum development and evaluation of a distance learning HIV course resulted in reliable learning outcomes, as well as learner satisfaction and improvement in knowledge. Further study is needed to determine whether HIV-specific learner behaviors and patient outcomes change as a result of these types of educational activities.

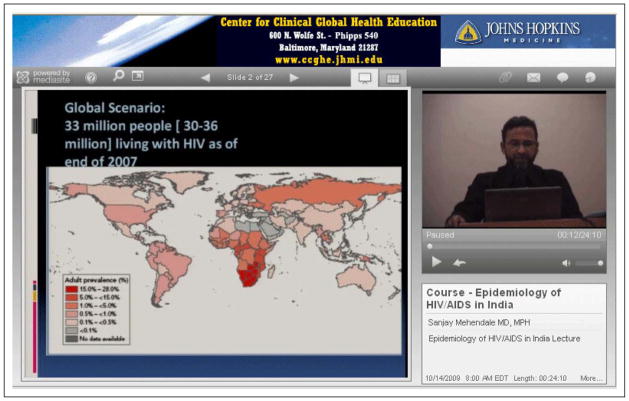

Figure 2.

Example of an online lecture with PowerPoint slides and speaker video.

Figure 3.

Example of an online multiple-choice test.

Acknowledgments

Funding

This course and evaluation were sponsored by a grant from the Fogarty International Center of the National Institutes of Health.

Footnotes

Reprints and permission: sagepub.com/journalsPermissions.nav

Declaration of Conflicting Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

References

1. Kwankam SY. What e-Health can offer. Bull World Health Organ. 2004;82(10):800–802.

2. International Telecommunication Union. World Telecommunication/ICT Development Report. 9th ed. Geneva, Switzerland; 2010.

3. Nartker AJ, Stevens L, Shumays A, Kalowela M, Kisimbo D, Potter K. Increasing health worker capacity through distance learning: a comprehensive review of programmes in Tanzania. Hum Resour Health. 2010;8:30. doi: 10.1186/1478-4491-8-30.

4. Ruiz JG, Mintzer MJ, Leipzig RM. The impact of E-learning in medical education. Acad Med. 2006;81(3):207–212. doi: 10.1097/00001888-200603000-00002.

5. Armstrong WS, del Rio C. HIV-associated resources on the internet. Top HIV Med. 2009;17(5):151–162.

6. Krakower D, Kwan CK, Yassa DS, Colvin RA. iAIDS: HIV-related internet resources for the practicing clinician. Clin Infect Dis. 2010;51(7):813–822. doi: 10.1086/656237.

7. Kiviat AD, Geary MC, Sunpath H, et al. HIV Online Provider Education (HOPE): the internet as a tool for training in HIV medicine. J Infect Dis. 2007;196(suppl 3):S512–S515. doi: 10.1086/521117.

8. Hamel J. ICT4D and the Human Development and Capabilities Approach: The Potentials of Information and Communication Technology. United Nations Development Programme Human Development Reports Research Paper; 2010.

9. Kern DE, Thomas PA, Hughes MT. Curriculum Development for Medical Education: A Six-Step Approach. Baltimore, MD: The Johns Hopkins University Press; 2011.

10. Bollinger RC, McKenzie-White J, Gupta A. Building a global health education network for clinical care and research. The benefits and challenges of distance learning tools. Lessons learned from the Hopkins Center for Clinical Global Health Education. Infect Dis Clin North Am. 2011;25(2):385–398. doi: 10.1016/j.idc.2011.02.006.

11. Haladyna TM. Developing and Validating Multiple-Choice Test Items. 3rd ed. Mahwah, NJ: Lawrence Erlbaum Associates, Inc; 2004.

12. Miller GE. The assessment of clinical skills/competence/performance. Acad Med. 1990;65(9 suppl):S63–S67. doi: 10.1097/00001888-199009000-00045.