Abstract

In this work the measured variable, such as temperature, is treated as a random variable showing fluctuations. The loss of information caused by diffusion waves in non-destructive testing can be described by stochastic processes. In non-destructive imaging, the information about the spatial pattern of a sample's interior has to be transferred to the sample surface by certain waves, e.g., thermal waves. At the sample surface these waves can be detected and the interior structure is reconstructed from the measured signals. The amount of information about the interior of the sample that can be gained from the waves detected at the sample surface is essentially influenced by their propagation from the point of excitation to the surface. Diffusion causes entropy production and information loss for the propagating waves. Mandelis has developed a unifying framework for treating diverse diffusion-related periodic phenomena, such as thermal waves, under the global mathematical label of diffusion-wave fields. Thermography uses the time-dependent diffusion of heat (either pulsed or modulated periodically), which goes along with entropy production and a loss of information. Several attempts have been made to compensate for this diffusive effect to get a higher resolution for the reconstructed images of the sample's interior. In this work it is shown that fluctuations limit this compensation. Therefore, the spatial resolution for non-destructive imaging at a certain depth is also limited by theory.

Keywords: Diffusion, Entropy, Fluctuation, Information, Non-destructive imaging, Resolution

Introduction

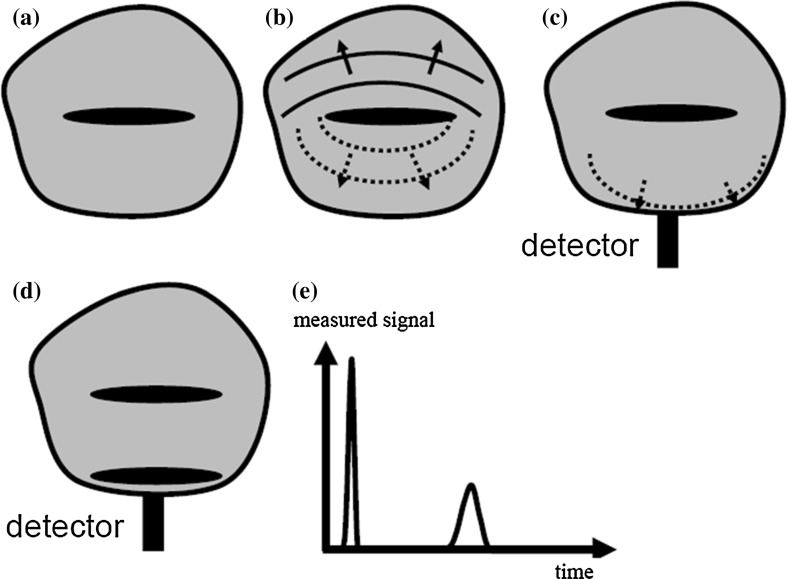

In non-destructive imaging, the information about the spatial pattern of a sample's interior has to be transferred to the sample surface by certain waves, e.g., thermal or ultrasound waves. At the sample surface these waves can be detected and the interior structure is reconstructed from the measured signals (Fig. 1). The amount of information about the interior of the sample that can be gained from the waves detected at the sample surface is essentially influenced by their propagation from the point of excitation to the surface. Scattering, dissipation, or diffusion causes entropy production, which results in a loss of information for the propagating waves. Mandelis [1] has developed a unifying framework for treating diverse diffusion-related periodic phenomena under the global mathematical label of diffusion-wave fields, such as thermal waves, charge-carrier-density waves, diffuse-photon-density waves, and also modulated eddy currents, neutron waves, or harmonic mass-transport diffusion waves.

Fig. 1.

Information loss in non-destructive imaging: the spatial resolution is essentially influenced by the propagation of certain waves, e.g., thermal or ultrasound waves, to the surface. Scattering, dissipation, or diffusion causes entropy production and a loss of information of the propagating waves: (a) Sample containing an internal structure, which should be imaged. (b) Propagation of the waves to the sample surface: entropy production goes along with fluctuations (“fluctuation–dissipation theorem”) and causes a loss of information. (c) Detection of the waves at the sample surface. (d) The same structure is contained twice in the sample: just beneath the surface and at a greater depth. (e) Measured signal at the detector surface as a function of time for the sample shown in (d): due to diffusion or dissipation, the signal from the deeper structure not only has a smaller amplitude but is also broadened compared to the signal from the structure just beneath the surface.

Several attempts have been made to compensate for these diffusive or dissipative effects to get a higher resolution for the reconstructed images of the sample interior. In this work it is shown that thermodynamical fluctuations limit this compensation, and therefore, the spatial resolution for non-destructive imaging at a certain depth is also limited. This loss of information can be described by stochastic processes, e.g., for thermal diffusion with temperature as a random variable.

Thermography uses the time-dependent diffusion of heat (either pulsed or modulated periodically) which goes along with entropy production and a loss of information. Thermal waves are a good example as they exist only because of thermal diffusion. The amplitude of a thermal wave decreases by more than 500 times at a distance of just one wavelength [2].

Another example is ultrasound imaging, taking acoustic attenuation of the generated ultrasound wave into account. Here, the pressure of the acoustic wave is described by a stochastic process. As for any other dissipative process, the energy in attenuated acoustic waves is not lost but is transferred to heat, which can be described in thermodynamics by an entropy increase. This increase in entropy is equal to a loss of information, as defined by Shannon [3], and no algorithm can compensate for this loss of information. This is a limit given by the second law of thermodynamics.

The outstanding role of entropy and information in statistical mechanics was described in 1963 by Jaynes [4]. Already in 1957 he gave an information-theoretical derivation of equilibrium thermodynamics, showing that among all possible probability distributions with particular expectation values (equal to the macroscopic values like energy or pressure), the distribution which maximizes the Shannon information is realized in thermodynamics [5]. Jaynes explicitly showed for the canonical distribution, which is the thermal equilibrium distribution for a given mean value of the energy, that the Shannon or Gibbs entropy change is equal to the dissipated heat divided by the temperature, which is the entropy as defined in phenomenological thermodynamics [5, 6]. This “experimental entropy” in conventional thermodynamics is only defined for equilibrium states, but by using the equality to Shannon information, Jaynes recognized that this “gives a generalized definition of entropy applicable to arbitrary nonequilibrium states, which still has the property that it can only increase in a reproducible experiment” [6].

In non-destructive imaging, the sample is not in equilibrium, e.g., for thermography a short pulse from a laser or a flash light induces a non-equilibrium state which in the end evolves into an equilibrium state. For states near thermal equilibrium in the linear regime, we use in the next section the theory of non-equilibrium thermodynamics presented by de Groot and Mazur [7]. In this regime microscopic time reversibility entails, as Onsager has first shown, a relation between fluctuation and dissipation, since one cannot distinguish between the average regression following an external perturbation or an equilibrium fluctuation [8]. This relation is known as the fluctuation–dissipation theorem and is due to Callen and Greene [9], Callen and Welton [10], and Greene and Callen [11]. It represents in fact a generalization of the famous Johnson [12]–Nyquist [13] formula in the theory of electric noise.

Over the last two decades, time reversibility of deterministic or stochastic dynamics has been shown to imply relations between fluctuation and dissipation in systems far from equilibrium, taking the form of a number of intriguing equalities, the fluctuation theorem [14, 15], the Jarzynski equality [16], and the Crooks relation [17]. The conceptual framework of “stochastic thermodynamics” relates applied or extracted work, exchanged heat, and changes in internal energy along a single fluctuating trajectory [18]. In an ensemble one gets probability distributions for these quantities, and since dissipated heat is typically associated with changes in entropy, one gets also a distribution for the entropy. In the next section a consequence of these equalities will be used: that thermodynamic entropy production is equal to the relative entropy, which is a quantitative measure of the information loss.

In this paper the measured variable, such as temperature, is treated as a time-dependent random variable with a mean value and a variance as a function of time. More about random variables and stochastic processes can be found, e.g., in the book “Statistical Physics” by Honerkamp [19]. An introduction to stochastic processes on an elementary level has been published by Lemons [20], also containing “On the Theory of Brownian Motion” by Langevin [21]. An introduction to Markov processes on a slightly more advanced level is given by Gillespie [22]. To be able to use the results of some “model” stochastic processes given in the literature, such as the Ornstein–Uhlenbeck process for a model of thermal waves, we have changed the initial conditions: instead of a defined initial value (with zero variance), we have taken the stochastic process at equilibrium before time zero. At time zero ($t = 0$) a certain perturbation is applied to the process (e.g., a rapid change in the velocity of a Brownian particle, called a kicked Ornstein–Uhlenbeck process). Reconstruction of the size of this perturbation at time $t = 0$ from the measurement after a time $t$ shows how the information about the size of this kick at $t = 0$ gets lost with increasing time if diffusive or dissipative processes occur.

Information Loss and Entropy Production

In this section we shall first assume that the time varying stochastic processes will have Gauss–Markov character. In doing so we do not wish to assume that all macroscopic processes considered belong to this specific class of processes. It may, however, be surmised that a number of real phenomena near equilibrium may, with a certain approximation, be adequately described by such Gauss–Markov processes [7]. At the end of this section we will see that the same results can be achieved for general processes from relations between fluctuation and dissipation in systems far from equilibrium, which have been derived in recent years.

The advantage of specifying more precisely the nature of the processes considered is that it enables us to discuss, on the level of the theory of random processes, the behavior of entropy production and of information loss. Following the theory of random fluctuations given, e.g., by de Groot and Mazur [7], we take as a starting point equations analogous to the Langevin equation used to describe the velocity of a Brownian particle:

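$$\frac{\mathrm{d}\mathbf{x}(t)}{\mathrm{d}t} = -\mathbf{M}\,\mathbf{x}(t) + \boldsymbol{\varepsilon}(t) \tag{1}$$

The components of the vector $\mathbf{x}$ are the $N$ random variables $x_1, \ldots, x_N$, which have zero mean at equilibrium. The matrix $\mathbf{M}$ of real phenomenological coefficients is independent of time $t$. The vector $\boldsymbol{\varepsilon}(t)$ represents white noise, which is uncorrelated at different times. The distribution density of $\mathbf{x}$ turns out to be an $N$-dimensional Gaussian distribution:

$$f(\mathbf{x},t) = \frac{1}{\sqrt{(2\pi)^N \det\boldsymbol{\Sigma}}}\,\exp\!\left(-\tfrac{1}{2}\,\bigl(\mathbf{x}-\bar{\mathbf{x}}(t)\bigr)^{\mathsf T}\boldsymbol{\Sigma}^{-1}\bigl(\mathbf{x}-\bar{\mathbf{x}}(t)\bigr)\right) \tag{2}$$

with the mean value $\bar{\mathbf{x}}(t)$ and the covariance matrix $\boldsymbol{\Sigma}$, which is usually also a function of time. Using equilibrium as the initial condition has a significant advantage compared to the initial conditions used in the literature: it turns out that the covariance stays constant in time, while the mean value is a function of time. This facilitates the calculation of the entropy production and of the information loss due to the stochastic process. $\det\boldsymbol{\Sigma}$ is the determinant of the covariance matrix.

If the initial mean value of $\mathbf{x}$ at time zero is given by $\mathbf{x}_0$, the mean value at a later time $t$ will be

$$\bar{\mathbf{x}}(t) = e^{-\mathbf{M}t}\,\mathbf{x}_0 \tag{3}$$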

By an adequate coordinate transformation of x, the matrix M can be diagonalized: the eigenvalues of M are the elements of the diagonal matrix.

According to the second law of thermodynamics, the entropy of an adiabatically insulated system must increase monotonically until thermodynamic equilibrium is established within the system, where the entropy is set to zero. Then the entropy at a time $t$ is

$$S(t) = -k_B\, D(f_t \,\|\, f_\infty) = -k_B \int f_t(\mathbf{x})\,\ln\frac{f_t(\mathbf{x})}{f_\infty(\mathbf{x})}\,\mathrm{d}\mathbf{x} \tag{4}$$

with the Boltzmann constant $k_B$, ln is the logarithm to the base e, and $D(f_t \,\|\, f_\infty)$ is the relative entropy or Kullback–Leibler distance between the distribution $f_t$ at time $t$ and the distribution $f_\infty$ at equilibrium ($t$ at infinity). Stein’s lemma [23] gives a precise meaning to the relative entropy between two distributions: if $n$ data from $f_\infty$ are given, the probability of guessing incorrectly that the data come from $f_t$ is bounded by $e^{-n D(f_t \| f_\infty)}$, for large $n$ [24]. Therefore, the relative entropy is a quantitative measure of the information loss due to the stochastic process and, multiplied by $-k_B$, is equal to the thermodynamic entropy $S(t)$.

For Gaussian distributions with mean value $\bar{\mathbf{x}}(t)$ and a constant covariance matrix $\boldsymbol{\Sigma}$, the Kullback–Leibler distance results in

$$D(f_t \,\|\, f_\infty) = \tfrac{1}{2}\,\bar{\mathbf{x}}(t)^{\mathsf T}\,\boldsymbol{\Sigma}^{-1}\,\bar{\mathbf{x}}(t) \tag{5}$$
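For Gaussians that share one covariance matrix, the Kullback–Leibler distance reduces to a quadratic form in the mean. The following is a minimal Python sketch of that statement, assuming NumPy; the function names `kl_equal_cov` and `kl_gauss` and the numerical values are illustrative assumptions and are not taken from the paper. It compares the quadratic form with the general Gaussian expression:

```python
import numpy as np


def kl_equal_cov(mean_t, cov):
    """D(N(mean_t, cov) || N(0, cov)) = 0.5 * mean_t^T cov^-1 mean_t (in nats)."""
    m = np.asarray(mean_t, dtype=float)
    return 0.5 * float(m @ np.linalg.solve(cov, m))


def kl_gauss(m0, c0, m1, c1):
    """General Kullback-Leibler distance D(N(m0, c0) || N(m1, c1)) in nats."""
    m0 = np.asarray(m0, dtype=float)
    m1 = np.asarray(m1, dtype=float)
    n = m0.size
    diff = m1 - m0
    c1_inv = np.linalg.inv(c1)
    _, logdet0 = np.linalg.slogdet(c0)
    _, logdet1 = np.linalg.slogdet(c1)
    return 0.5 * float(np.trace(c1_inv @ c0) + diff @ c1_inv @ diff
                       - n + logdet1 - logdet0)


# Illustrative (assumed) numbers: a 2D process with constant covariance.
cov = np.array([[2.0, 0.3], [0.3, 1.0]])
mean_t = np.array([1.5, -0.5])

d_closed = kl_equal_cov(mean_t, cov)
d_general = kl_gauss(mean_t, cov, np.zeros(2), cov)
print(d_closed, d_general)
```

Because the trace and log-determinant terms cancel for equal covariances, only the mean value carries information about the distance from equilibrium.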

Before modeling heat diffusion as a Gauss–Markov process, we give a simple example: the Ornstein–Uhlenbeck process with only one component of x as a model, e.g., for the velocity of a Brownian particle.

Kicked Ornstein–Uhlenbeck Process

If the random vector x in Eq. 1 has only one component, we get the Langevin equation

$$\frac{\mathrm{d}v(t)}{\mathrm{d}t} = -\gamma\, v(t) + \sigma\, \eta(t) \tag{6}$$

which was used to describe the Brownian motion of a particle. The random variable $v$ is the particle velocity, $\gamma$ is the viscous drag, $\sigma$ is the amplitude of the random fluctuations, and $\eta(t)$ is white noise. The Langevin equation governs an Ornstein–Uhlenbeck process, named after Ornstein and Uhlenbeck, who formalized the properties of this continuous Markov process [25]. Now we assume that initially we have thermal equilibrium with a zero mean velocity, and at time zero the particle is kicked, which causes an immediate change in the velocity of $v_0$. Following Eq. 3, the mean value shows an exponential decay:

$$\bar{v}(t) = v_0\, e^{-\gamma t} \tag{7}$$

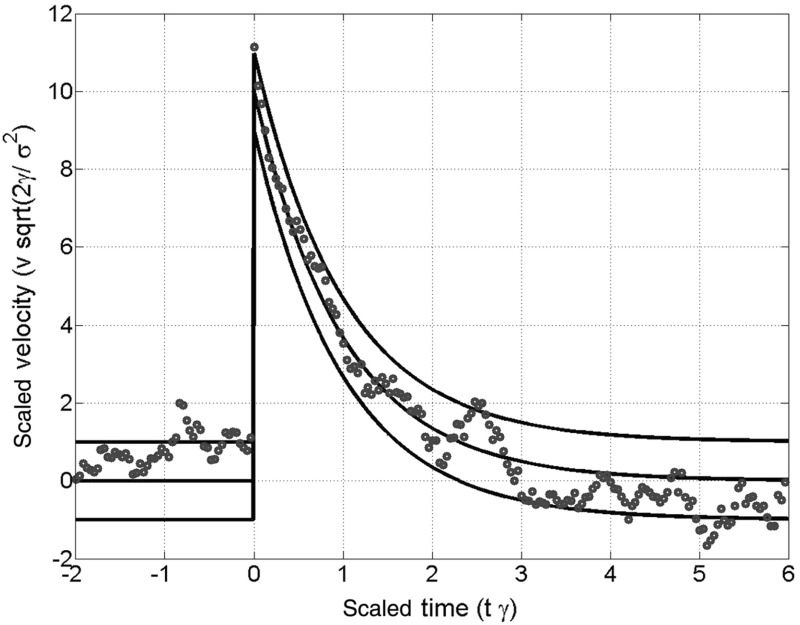

The variance of the velocity turns out to stay constant in time and is therefore equal to the equilibrium value (e.g., [19]). In Fig. 2 the time and velocity are scaled to be dimensionless and the standard deviation (square root of the variance) of the velocity is normalized.

Fig. 2.

Points on a sample path of the normalized kicked Ornstein–Uhlenbeck process defined by the Langevin Eq. 6. The solid lines represent the mean and the mean ± standard deviation of the scaled velocity coordinate. At the time $t = 0$ a kick $v_0$ has been added to the scaled velocity. After some time the information about the amplitude of the kick is increasingly lost due to the fluctuations.
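The behavior of the kicked process can be sketched numerically. The following Python example is a minimal sketch, assuming NumPy, scaled units with unit equilibrium variance, and an illustrative kick of 10 (the function name `simulate_kicked_ou` and all numerical values are assumptions, not the settings used for Fig. 2). It uses the exact one-step update of an Ornstein–Uhlenbeck process and starts from the equilibrium ensemble before adding the kick:

```python
import numpy as np


def simulate_kicked_ou(v0, gamma, sigma, t_end, n_steps, n_paths, seed=0):
    """Ensemble of Ornstein-Uhlenbeck paths, in equilibrium before a kick v0 at t = 0."""
    rng = np.random.default_rng(seed)
    var_eq = sigma**2 / (2.0 * gamma)            # stationary (equilibrium) variance
    dt = t_end / n_steps
    decay = np.exp(-gamma * dt)                  # exact decay of the mean per step
    noise_std = np.sqrt(var_eq * (1.0 - decay**2))
    # Equilibrium ensemble (zero mean, variance var_eq) plus the kick at t = 0.
    v = rng.normal(0.0, np.sqrt(var_eq), n_paths) + v0
    paths = np.empty((n_steps + 1, n_paths))
    paths[0] = v
    for n in range(1, n_steps + 1):
        v = decay * v + noise_std * rng.normal(size=n_paths)
        paths[n] = v
    t = np.linspace(0.0, t_end, n_steps + 1)
    return t, paths


gamma, sigma, v0 = 1.0, np.sqrt(2.0), 10.0       # assumed scaled units, variance 1
t, paths = simulate_kicked_ou(v0, gamma, sigma, t_end=5.0, n_steps=100, n_paths=20000)

# Ensemble mean should follow v0 * exp(-gamma * t); the variance should stay constant.
mean_error = np.max(np.abs(paths.mean(axis=1) - v0 * np.exp(-gamma * t)))
var_error = np.max(np.abs(paths.var(axis=1) - sigma**2 / (2.0 * gamma)))
print(f"max mean error: {mean_error:.3f}, max variance error: {var_error:.3f}")
```

Starting from the equilibrium ensemble rather than from a sharp initial value is what keeps the variance constant, so that only the mean carries the decaying information about the kick.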

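From Eq. 5 the information loss, equal to the entropy production, until the time $t$ after a kick of size $v_0$ is

$$\Delta S = \frac{k_B}{2\,\mathrm{Var}(v)}\left(v_0^2 - \bar{v}(t)^2\right) \tag{8}$$

where $\bar{v}(t)$ is the mean velocity at time $t$ and $\mathrm{Var}(v)$ is the constant variance of the velocity. On the other hand the entropy production known from thermodynamics is the dissipated energy $\Delta Q$, which is the kinetic energy of the Brownian particle of mass $m$, divided by the temperature $T$:

$$\Delta S = \frac{\Delta Q}{T} = \frac{m}{2T}\left(v_0^2 - \bar{v}(t)^2\right) \tag{9}$$

The thermodynamic entropy production in Eq. 9 has to be equal to the loss of information in Eq. 8, and therefore we get for the variance of the velocity:

$$\mathrm{Var}(v) = \frac{\sigma^2}{2\gamma} = \frac{k_B T}{m} \tag{10}$$

where $\sigma^2/(2\gamma)$ is the stationary variance of the Ornstein–Uhlenbeck process with noise amplitude $\sigma$ and viscous drag $\gamma$. Equation 10 states a connection between the strength of the fluctuations, given by $\sigma$, and the strength of the dissipation $\gamma$. This is the fluctuation–dissipation theorem (FDT) in its simplest form for uncorrelated white noise, and this derivation of Eq. 10 shows the information-theoretical background of the FDT.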
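As a numerical illustration of this argument, the following Python sketch evaluates the entropy production in both ways for a Brownian particle. The particle mass, temperature, drag, and kick size are assumed example values, and the helper names are hypothetical. The information-theoretic expression uses the equipartition variance $k_B T/m$, and the noise amplitude follows from the stationary variance $\sigma^2/(2\gamma)$ of an Ornstein–Uhlenbeck process:

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K


def velocity_variance(temperature, mass):
    """Equilibrium velocity variance k_B * T / m."""
    return K_B * temperature / mass


def noise_amplitude(gamma, temperature, mass):
    """Noise amplitude sigma with sigma^2 / (2 * gamma) = k_B * T / m."""
    return math.sqrt(2.0 * gamma * velocity_variance(temperature, mass))


def entropy_from_information(v0, gamma, t, var_v):
    """k_B times the decrease of the relative entropy until time t."""
    mean_t = v0 * math.exp(-gamma * t)
    return K_B * (v0**2 - mean_t**2) / (2.0 * var_v)


def entropy_from_heat(v0, gamma, t, mass, temperature):
    """Dissipated kinetic energy of the mean motion divided by the temperature."""
    mean_t = v0 * math.exp(-gamma * t)
    return mass * (v0**2 - mean_t**2) / (2.0 * temperature)


# Assumed example values (not from the paper).
mass, temperature, gamma, v0, t = 1.0e-15, 300.0, 1.0e6, 1.0e-3, 1.0e-6

var_v = velocity_variance(temperature, mass)
sigma = noise_amplitude(gamma, temperature, mass)
ds_info = entropy_from_information(v0, gamma, t, var_v)
ds_heat = entropy_from_heat(v0, gamma, t, mass, temperature)
print(ds_info, ds_heat, sigma)
```

Both expressions agree only if the variance equals $k_B T/m$, which is the content of the fluctuation–dissipation relation in this simple case.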

Another simple model for a stochastic process is the stochastically damped kicked harmonic oscillator, which combines oscillatory with diffusive behavior and is therefore a good starting point to model attenuated acoustic waves. It can be treated similarly to the kicked Ornstein–Uhlenbeck process, and the equations of motion were solved already in 1943 by Chandrasekhar [26] for definite initial values of position and velocity. Again we have changed the initial conditions to an oscillator with zero mean values kicked by an initial momentum at time zero. Further details are given in Sect. 5 of our book chapter on attenuated acoustic waves [27].

Entropy Production for General Processes

In Sect. 2.1, the stochastic process was assumed to have Gauss–Markov character. Now we will see that the same results can be achieved for general processes from relations between fluctuation and dissipation in systems far from equilibrium, which have been derived in recent years [24, 28, 29]. Jarzynski described a “forward” process starting from an equilibrium state at a temperature $T$, during which a system evolves in time as a control parameter $\lambda$ is varied from an initial value $\lambda_A$ to a final value $\lambda_B$. $W$ is the external work performed on the system during one realization of the process; $\Delta F$ is the free energy difference between the two equilibrium states of the system, corresponding to $\lambda_A$ and $\lambda_B$. The “reverse” process starts from an equilibrium state with $\lambda_B$ and evolves to $\lambda_A$. For the relative entropy between the forward and reverse processes, it was shown that

$$\langle W \rangle - \Delta F = k_B T\, D(\rho \,\|\, \tilde{\rho}) \tag{11}$$

with the average performed work $\langle W \rangle$ and the probability density in phase space $\rho$ for the forward process and $\tilde{\rho}$ for the reverse process. For a sudden change of the control parameter, which could be, e.g., the sudden heat pulse at a time $t = 0$ for pulse thermography, this gives the same result as Eq. 4, but now not only for Gaussian distributions (details can be found in [29]).

As summarized in Sect. 2, the equality of thermodynamic entropy production and information loss, measured as the relative entropy, was shown for general non-equilibrium processes. The underlying assumptions are that we start with an equilibrium process and that the “disturbance” at time $t = 0$ has to be short, but it does not have to be small. For thermography these conditions are fulfilled if the initial pulse is short compared to the time needed for the diffusion of heat. Therefore, the equality of thermodynamic entropy production and information loss will be applied to thermal diffusion after such a short pulse in the next section.

Thermal Diffusion as a Stochastic Process

Thermal Diffusion Equation in Real and Fourier Space

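Thermal diffusion can be described by the differential equation (Fourier, 1823, or, e.g., Rosencwaig [2]):

$$\nabla^2 T(\mathbf{r},t) - \frac{1}{\alpha}\,\frac{\partial T(\mathbf{r},t)}{\partial t} = -\frac{1}{\kappa}\, q(\mathbf{r},t) \tag{12}$$

where $T$ is the temperature and $q$ is the thermal source volumetric density as a function of space $\mathbf{r}$ and time $t$. $\nabla^2$ is the Laplacian operator. $\alpha$ is the thermal diffusivity and $\kappa$ is the thermal conductivity, which are both material properties.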

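In the very well developed theory of heat transfer (e.g., by Carslaw and Jaeger [30]), usually a bilateral Fourier transform over time is applied to the temperature $T$ and the source density $q$ in the diffusion equation:

$$\hat{T}(\mathbf{r},\omega) = \int_{-\infty}^{\infty} T(\mathbf{r},t)\, e^{-i\omega t}\,\mathrm{d}t \tag{13}$$

where $i = \sqrt{-1}$ and $\omega$ is the angular frequency. When Eq. 13 is applied to $T$ and $q$ in Eq. 12, one gets for the Fourier transformed $\hat{T}$ and $\hat{q}$ the inhomogeneous form of the Helmholtz equation, with the thermal diffusivity $\alpha$ and the thermal conductivity $\kappa$:

$$\nabla^2 \hat{T}(\mathbf{r},\omega) - \frac{i\omega}{\alpha}\,\hat{T}(\mathbf{r},\omega) = -\frac{1}{\kappa}\,\hat{q}(\mathbf{r},\omega) \tag{14}$$

This equation may be solved by the application of a bilateral Fourier transform over space, defined as

$$\tilde{T}(\mathbf{k},\omega) = \int \hat{T}(\mathbf{r},\omega)\, e^{-i\mathbf{k}\cdot\mathbf{r}}\,\mathrm{d}\mathbf{r} \tag{15}$$

where $\mathbf{k}$ is the wave vector with length $k = |\mathbf{k}|$ (see, e.g., Buckingham [31]). When Eq. 15 is applied to $\hat{T}$ and $\hat{q}$ of Eq. 14, the Helmholtz equation reduces to

$$\left(k^2 + \frac{i\omega}{\alpha}\right)\tilde{T}(\mathbf{k},\omega) = \frac{1}{\kappa}\,\tilde{q}(\mathbf{k},\omega) \tag{16}$$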

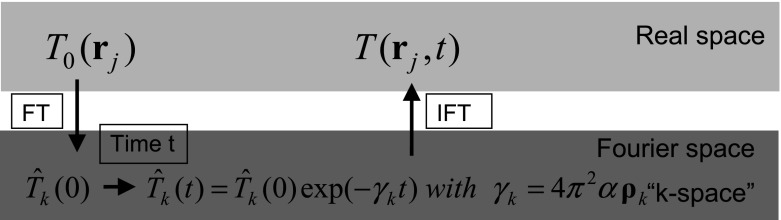

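The same result can be achieved if first the spatial Fourier transform (Eq. 15) is applied to the temperature $T$ and the source density $q$ in Eq. 12, which gives for the transforms $\tilde{T}(\mathbf{k},t)$ and $\tilde{q}(\mathbf{k},t)$, with $k = |\mathbf{k}|$, the thermal diffusivity $\alpha$, and the thermal conductivity $\kappa$,

$$\frac{\partial \tilde{T}(\mathbf{k},t)}{\partial t} = -\alpha k^2\, \tilde{T}(\mathbf{k},t) + \frac{\alpha}{\kappa}\,\tilde{q}(\mathbf{k},t) \tag{17}$$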

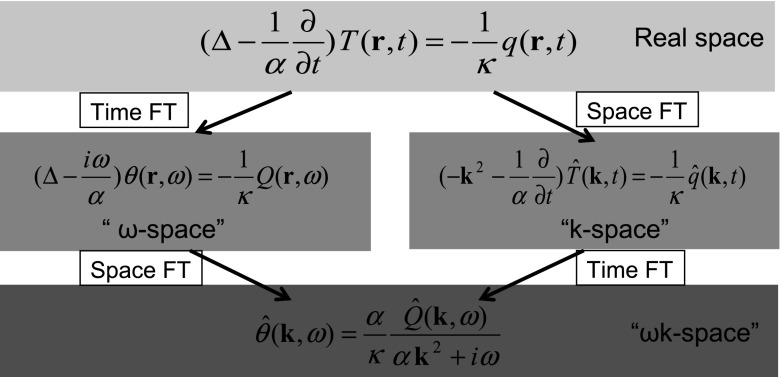

and then the temporal Fourier transform (Eq. 13) is applied to Eq. 17, as shown in Fig. 3.

Fig. 3.

Diffusion Eq. 12 can be solved by a Fourier transform (FT) in time (Eq. 13) and a subsequent FT in space (Eq. 15). The same result in “$(\mathbf{k},\omega)$-space” is obtained if the space FT is performed first, and then the time FT.

In the past, most publications have used the Helmholtz equation (“$(\mathbf{r},\omega)$-space” on the left of Fig. 3). For example, Mandelis has solved this equation in his book “Diffusion-Wave Fields” [1] in one, two, or three dimensions and for different boundary conditions (e.g., adiabatic boundary conditions where no heat can be “lost” at the sample boundary) by using Green functions. For a delta source in space and time and no boundaries, one gets in one dimension:

| 18 |

The thermal wave is a superposition of waves with angular frequency $\omega$ and $k = \sqrt{\omega/(2\alpha)}$ as the wavenumber, where $\alpha$ is the thermal diffusivity. At a distance $x$ the amplitude is damped by $e^{-kx}$. Therefore, the damping factor after a distance of one wavelength $2\pi/k$ is $e^{-2\pi} \approx 1/535$. This is the reason why thermal waves are a good example for “very dispersive” waves, as stated in the Introduction.
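The damping over one wavelength can be checked with a few lines of Python. This is a minimal sketch that assumes the standard thermal-wave relation in which the wavenumber and the damping constant are both $\sqrt{\omega/(2\alpha)}$; the modulation frequency and diffusivity below are arbitrary example values, and the function names are hypothetical:

```python
import math


def thermal_wavenumber(omega, alpha):
    """Wavenumber (and damping constant) of a thermal wave: sqrt(omega / (2 * alpha))."""
    return math.sqrt(omega / (2.0 * alpha))


def damping_factor(distance, omega, alpha):
    """Amplitude ratio after propagating the given distance."""
    return math.exp(-thermal_wavenumber(omega, alpha) * distance)


omega = 2.0 * math.pi * 10.0     # assumed 10 Hz modulation
alpha = 1.0e-5                   # assumed diffusivity in m^2/s
wavelength = 2.0 * math.pi / thermal_wavenumber(omega, alpha)
factor = damping_factor(wavelength, omega, alpha)
print(f"wavelength = {wavelength:.3e} m, amplitude ratio = 1/{1.0 / factor:.0f}")
```

The ratio after one wavelength is independent of the frequency and the material, because both the wavelength and the damping length scale with the same wavenumber.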

In “$(\mathbf{k},t)$-space” the thermal wave is a superposition of waves damped in time (see, e.g., Buckingham [31]). Now for a delta source in space and time and no boundaries, one gets in one dimension,

| 19 |

Of course, the result is the same as in Eq. 18. These two different representations of a damped wave correspond to a real frequency and a complex wave vector or vice versa. In the next section the exponential damping in time in Eq. 19 will be realized by an Ornstein–Uhlenbeck process, as described in Sect. 2.1.

Thermal Diffusion Described by Ornstein–Uhlenbeck Processes

At time $t = 0$, an initial temperature distribution is given on the whole sample volume $V$. The sample is thermally isolated, which results in adiabatic boundary conditions: the normal derivative of the temperature at the sample boundary vanishes at all times $t$. Therefore, after a long time a thermal equilibrium will be established with a uniform equilibrium temperature.

For numerical calculations we use a discrete space , where are points on a cubic lattice with a spacing of within the sample volume . At a time the temperature distribution can be represented by the Fourier series [32] (including the time with the known initial temperature distribution ,

| 20 |

is a support function which is one within the sample volume and zero outside. ’s are integer points on an infinite 3D lattice in -space. The index should correspond to , and therefore, is equal to the equilibrium temperature s which is constant in time. From the diffusion Eq. 12, we get

| 21 |

In Fourier space the time evolution is just an exponential decay in time (Fig. 4). Only for the index corresponding to zero wavenumber is the Fourier coefficient constant in time and equal to the equilibrium temperature, as stated above.

Fig. 4.

The initial temperature distribution just after the laser pulse is Fourier transformed (FT). The time evolution of the Fourier series coefficients can be described similar to the mean value of an Ornstein–Uhlenbeck process (Eq. 7). The temperature distribution after a time is then calculated by an inverse Fourier transform (IFT)
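The scheme of Fig. 4 (transform, multiply by an exponential decay, transform back) can be sketched for the mean temperature in one dimension. The following Python example assumes NumPy and, for simplicity, periodic boundaries with an FFT instead of the adiabatic boundaries described in the text, a grid spacing of one, and a decay rate of $\alpha k^2$ for wavenumber $k$; the function name `diffuse_fourier` is hypothetical. The profile parameters follow the one-dimensional example of this section (400 points, peak of 600 K at depth 200, baseline 300 K, full width at 1/e of 16, diffusivity 50), while the evaluation time is an assumed value:

```python
import numpy as np


def diffuse_fourier(temp0, alpha, t, dx=1.0):
    """Propagate a 1D temperature profile by time t in Fourier space (periodic grid)."""
    n = temp0.size
    k = 2.0 * np.pi * np.fft.rfftfreq(n, d=dx)        # angular wavenumbers
    coeff0 = np.fft.rfft(temp0)                       # FT of the initial profile
    coeff_t = coeff0 * np.exp(-alpha * k**2 * t)      # exponential decay per wavenumber
    return np.fft.irfft(coeff_t, n=n)                 # IFT back to real space


x = np.arange(400.0)
temp0 = 300.0 + 300.0 * np.exp(-((x - 200.0) / 8.0) ** 2)   # full width at 1/e = 16
temp_t = diffuse_fourier(temp0, alpha=50.0, t=1.0)          # t = 1 is an assumed time

# A Gaussian stays Gaussian: its variance grows from 32 to 32 + 2 * alpha * t.
expected_peak = 300.0 + 300.0 * np.sqrt(32.0 / (32.0 + 2.0 * 50.0 * 1.0))
print(temp_t.max(), expected_peak)
```

The coefficient for zero wavenumber is left unchanged, so the spatial mean of the profile, and with it the equilibrium temperature, is conserved.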

Now we assume that the temporal evolution of the temperature is realized by a Gauss–Markov process. The coordinate transformation, which diagonalizes the matrix M from Eq. 3, is the Fourier transform Eq. 20. The elements of the diagonalized matrix M are the decay constants for the exponential decay of the Fourier coefficients, which increase with higher order of the wavenumber index (quadratically with its length). To get the variance of the Gaussian distribution for the Fourier coefficients, we use again the fact that the loss of information has to be equal to the thermodynamic entropy production. From Eq. 5 we get for the loss of information:

| 22 |

The term with is subtracted and therefore the sum starts with to ensure that the information loss is zero for the equilibrium distribution. Compared to Eq. 5, the sign is changed as the entropy production is positive.

On the other hand one gets from thermodynamics for the entropy production of a discrete temperature distribution around the equilibrium value in real space [7] and in Fourier space:

| 23 |

where is the volume of volume element number . Their sum is the sample volume . is the heat capacity at a constant volume. Comparison with Eq. 22 shows that the variance in Fourier space is the same for all wavenumbers with index :

| 24 |

For each wave number index , in Eq. 21 gives the strength of dissipation and gives the amplitude of the random fluctuations for the Ornstein–Uhlenbeck process for . The stochastic differential equation according to Eq. 6 is

| 25 |

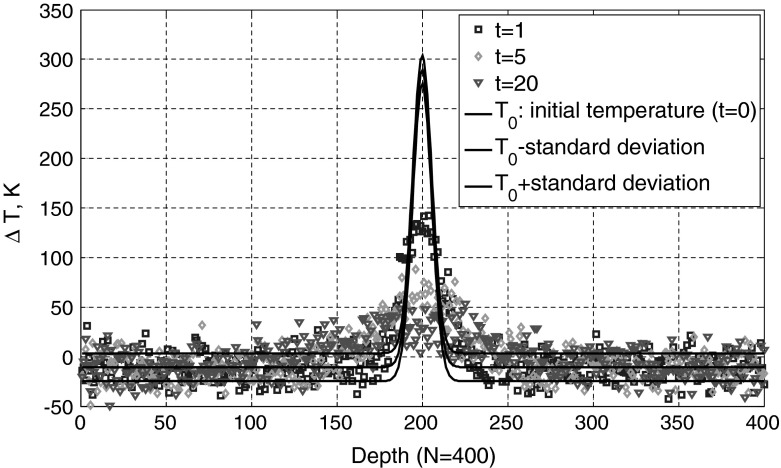

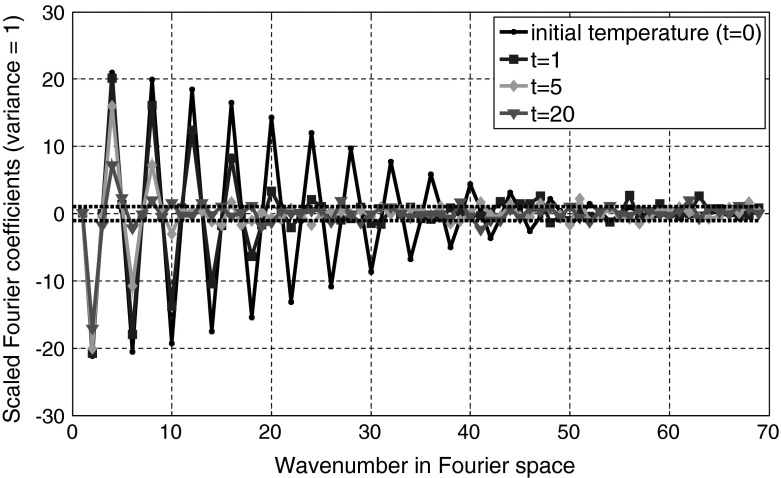

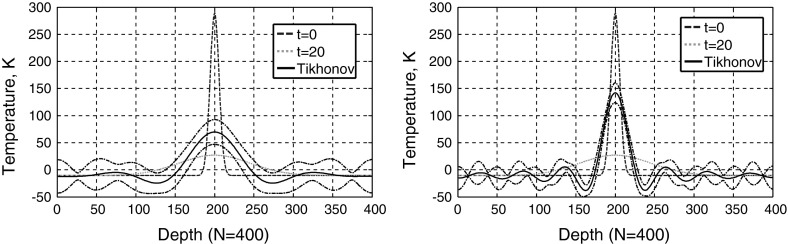

Figures 5 and 6 show a one-dimensional example. The initial temperature distribution is Gaussian-shaped with a maximum of 600 K at a depth of 200 arb. units, and the full width at 1/e of the maximum is 16 arb. units. The minimum temperature is 300 K. The deviation from the equilibrium temperature is shown in black in Fig. 5 in real space and in Fig. 6 in Fourier space (“k-space”). The thermal diffusivity is chosen as 50. The temperature profiles at the 400 discrete points are calculated by 400 independent Ornstein–Uhlenbeck processes for the Fourier coefficients at three successive times. In Fourier space higher orders of the wavenumber show a rapid decrease. The Fourier coefficients are scaled to give a variance of one. The standard deviation in real space is 14.12 K and is constant in depth and time, as the initial state is the temperature distribution of the equilibrium state increased by the initial perturbation (analogous to the kicked Ornstein–Uhlenbeck process). The standard deviation is selected rather high for this simulation to show clearly the influence of fluctuations.

Fig. 5.

Deviation from equilibrium temperature in real space as a function of time. The initial temperature distribution is Gaussian-shaped with a maximum of 600 K at a depth of 200 arb. units, and the full width at 1/e of the maximum is 16 arb. units. The minimum temperature is 300 K. The standard deviation in real space is constant in depth and time.

Fig. 6.

Fourier coefficients as a function of time. Coefficients with a higher wavenumber show a rapid decay with time according to Eq. 21. The Fourier coefficients are scaled to have a variance of one (independent of wavenumber)

After a long time the mean value of all Fourier coefficients with non-zero wavenumber will vanish. Only the Fourier coefficient for zero wavenumber is constant and equal to the equilibrium temperature. The entropy from Eq. 22 goes to zero, and all the information about the shape of the initial temperature profile is lost. For earlier times the temperature profile is broadened (Fig. 5), but the initial temperature can be reconstructed to a certain extent, which depends on the size of the fluctuations. How to get the best reconstruction of the initial temperature profile from a “measured” temperature distribution at a later time will be the topic of the next section.

Time Reversal and Regularization Methods

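In the previous section the initial temperature distribution was given and the temperature distribution after a time $t$ was calculated (Fig. 4). Now we want to reconstruct the initial temperature distribution from the measured temperature distribution at a time $t$ (inverse problem). Without fluctuations, this reconstruction in k-space would be (from Eq. 21)

$$\hat{T}_k(0) = \hat{T}_k(t)\, e^{\gamma_k t} \tag{26}$$

where $\hat{T}_k(t)$ is the Fourier coefficient with wavenumber index $k$ and $\gamma_k$ is its decay rate. These exponential time evolution factors can reach high values for increasing length of the wave vectors. Then even small fluctuations are “blown up” exponentially and the reconstruction error is large. Therefore, regularization methods have to be used for this inverse problem, like truncated singular value decomposition (SVD) or Tikhonov regularization [33]. For truncated SVD only the wavenumbers up to a cut-off index $k_{\mathrm{cut}}$ are taken, and the other wavenumbers, for which the exponential factors produce high errors, are set to zero:

$$\hat{T}_k^{\mathrm{SVD}}(0) = \begin{cases} \hat{T}_k(t)\, e^{\gamma_k t}, & |k| \le k_{\mathrm{cut}} \\ 0, & |k| > k_{\mathrm{cut}} \end{cases} \tag{27}$$

Tikhonov regularization looks for a solution $\mathbf{x}$ which fulfills the linear vector equation $\mathbf{A}\mathbf{x} = \mathbf{b}$ as well as possible, but is also a smooth solution, minimizing $\|\mathbf{x}\|$. The regularization parameter $\lambda$ gives the tradeoff between these two requirements: $\min_{\mathbf{x}} \{\|\mathbf{A}\mathbf{x} - \mathbf{b}\|^2 + \lambda^2 \|\mathbf{x}\|^2\}$. Here $\mathbf{x}$ contains the coefficients $\hat{T}_k(0)$, $\mathbf{b}$ contains the measured coefficients $\hat{T}_k(t)$, and $\mathbf{A}$ is diagonal with the elements $e^{-\gamma_k t}$. Differentiating with respect to $\mathbf{x}$ and setting the derivative equal to zero results in

$$\hat{T}_k^{\mathrm{Tik}}(0) = \frac{e^{-\gamma_k t}}{e^{-2\gamma_k t} + \lambda^2}\, \hat{T}_k(t) \tag{28}$$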
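The effect of the two regularization methods can be illustrated with a small one-dimensional Python sketch. It assumes NumPy, a periodic grid with an FFT, a decay factor $e^{-\alpha k^2 t}$ for wavenumber $k$, additive white measurement noise, and the standard Tikhonov filter for a diagonal forward operator. The function names, noise level, cut-off wavenumber, and regularization parameter are illustrative assumptions and are not the values used for Fig. 7:

```python
import numpy as np


def decay_factors(n, alpha, t, dx=1.0):
    """Decay factor exp(-alpha * k^2 * t) for each rfft wavenumber k."""
    k = 2.0 * np.pi * np.fft.rfftfreq(n, d=dx)
    return np.exp(-alpha * k**2 * t), k


def diffuse(temp0, alpha, t):
    """Forward problem: damp every Fourier coefficient."""
    decay, _ = decay_factors(temp0.size, alpha, t)
    return np.fft.irfft(np.fft.rfft(temp0) * decay, n=temp0.size)


def reconstruct(temp_t, alpha, t, method, lam=1e-2, k_cut=1.0):
    """Inverse problem with naive, truncated (tsvd) or Tikhonov inversion."""
    decay, k = decay_factors(temp_t.size, alpha, t)
    if method == "naive":
        inverse = 1.0 / decay                          # amplifies noise exponentially
    elif method == "tsvd":
        inverse = np.where(k <= k_cut, 1.0 / decay, 0.0)
    elif method == "tikhonov":
        inverse = decay / (decay**2 + lam**2)
    else:
        raise ValueError(f"unknown method: {method}")
    return np.fft.irfft(np.fft.rfft(temp_t) * inverse, n=temp_t.size)


def rms(values):
    return float(np.sqrt(np.mean(values**2)))


rng = np.random.default_rng(1)
x = np.arange(400.0)
temp0 = 300.0 * np.exp(-((x - 200.0) / 8.0) ** 2)      # deviation from equilibrium
alpha, t = 50.0, 0.1                                   # t is an assumed time
measured = diffuse(temp0, alpha, t) + rng.normal(0.0, 0.01, x.size)

err_blur = rms(measured - temp0)                       # error without compensation
errors = {m: rms(reconstruct(measured, alpha, t, m) - temp0)
          for m in ("naive", "tsvd", "tikhonov")}
print(err_blur, errors)
```

In this sketch the unregularized inversion is dominated by amplified noise, while both regularized reconstructions come closer to the initial profile than the blurred measurement itself.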

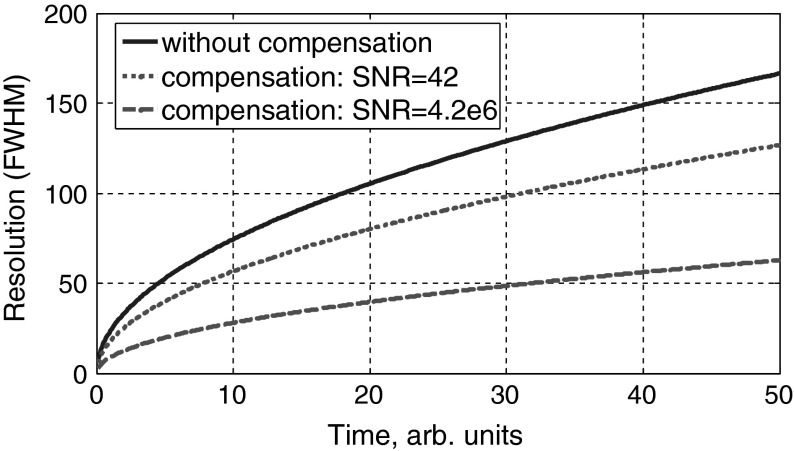

Figure 7, left, shows the Tikhonov reconstruction of the initial temperature distribution and its standard deviation from the “measured” data shown in Fig. 5 at a later time $t$, with a variance in Fourier space (k-space) of one (Fig. 6). Figure 7, right, shows the reconstruction for the signal-to-noise ratio (SNR) multiplied by 10. The width of the reconstructed peak is smaller for the higher SNR and therefore enables a better spatial resolution. The spatial resolution is defined as the minimal distance of two peaks which can still be reconstructed as two individual peaks. This is equal to the width of the peak if the initial temperature peak at $t = 0$ is a sharp peak like a delta source in space and time. The analytic expression is shown in Eq. 19 and shows a width (standard deviation) of $\sqrt{2\alpha t}$, with the thermal diffusivity $\alpha$. In k-space the corresponding Gaussian $e^{-\alpha k^2 t}$ has the inverse width $1/\sqrt{2\alpha t}$.

Fig. 7.

Tikhonov reconstruction of the initial temperature and its standard deviation for a low signal-to-noise-ratio (SNR) from the data shown in Fig. 5 (left) and a SNR multiplied by 10 (right). The width of the reconstructed peak is smaller for the higher SNR and therefore enables a better spatial resolution

In k-space, SVD reconstruction (Eq. 27) and, to a good approximation for higher signal-to-noise ratios (SNRs), also Tikhonov reconstruction (Eq. 28) give a rectangular function up to a cut-off wavenumber. After Fourier transformation this gives a sinc function in real space for the reconstructed initial temperature profile (Fig. 7):

| 29 |

In Fig. 8 the spatial resolution (defined as the width between the two inflection points of the central peak of the sinc function) for a reconstructed initial temperature profile from the measured temperature after a certain time is shown. This width can be approximated for higher SNRs by the distance between the zero points of the reconstructed temperature profile:

| 30 |
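How such a resolution limit scales with time and SNR can be sketched in Python under explicit assumptions: a decay factor $e^{-\alpha k^2 t}$ in k-space, a cut-off wavenumber chosen where the amplification $e^{\alpha k^2 t}$ of the noise equals the SNR, and the resolution taken as the distance between the first zeros on both sides of the central sinc peak. These assumptions, the function names, and the numbers are illustrative and should not be read as the exact expressions behind Fig. 8:

```python
import math


def cutoff_wavenumber(snr, alpha, t):
    """Assumed cut-off: exp(alpha * k_cut^2 * t) = snr."""
    return math.sqrt(math.log(snr) / (alpha * t))


def resolution_zero_distance(snr, alpha, t):
    """Distance between the first zeros of sin(k_cut * x) / x, i.e. 2 * pi / k_cut."""
    return 2.0 * math.pi / cutoff_wavenumber(snr, alpha, t)


alpha = 50.0                                   # diffusivity of the 1D example
res_early = resolution_zero_distance(100.0, alpha, t=1.0)
res_late = resolution_zero_distance(100.0, alpha, t=4.0)
res_high_snr = resolution_zero_distance(1000.0, alpha, t=1.0)
print(res_early, res_late, res_high_snr)
```

Under these assumptions the resolution degrades with the square root of time, while increasing the SNR helps only logarithmically.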

Fig. 8.

Possible spatial resolution (FWHM) from a measured temperature profile after a certain time without compensation (solid line) and with compensation for a small signal-to-noise ratio (SNR) from the data shown in Fig. 5 (dotted line) and a SNR of (dashed line)

Discussion, Conclusions, and Outlook

In Sect. 3 the whole one-dimensional temperature distribution was detected at a certain time after a short generation pulse. From these data the initial temperature distribution was reconstructed. For this situation we could give the limit of spatial resolution as a function of the signal-to-noise ratio (SNR) and time $t$.

In non-destructive imaging as sketched in Fig. 1, the temperature is measured at the surface of the sample as a function of time. In that case the limit of spatial resolution can be given as a function of SNR and depth. For this ongoing work the heat diffusion is modeled as a stochastic process in “$(\mathbf{r},\omega)$-space” (Fig. 3) instead of “$(\mathbf{k},t)$-space,” as was done in Sect. 3 (Fig. 4).

Future work should describe the limit of spatial resolution for different kinds of excitation, not only for short pulses as in this contribution. This could, for example, describe the difference in spatial resolution between lock-in thermography and pulse thermography. It is also planned to extend this work to two- or three-dimensional problems.

A similar description with pressure as a random variable can be used to describe the possible compensation of ultrasound attenuation in photoacoustic imaging [27].

Acknowledgments

This work has been carried out with financial support from the “K-Project for Non-Destructive Testing and Tomography” supported by the COMET-program of the Austrian Research Promotion Agency (FFG), Grant No. 820492 and was supported by the Christian Doppler Research Association, by the Federal Ministry of Economy, Family and Youth, by the Austrian Science Fund (FWF) project numbers S10503-N20 and TRP102-N20, by the European Regional Development Fund (EFRE) in the framework of the EU-program Regio 13, and the federal state Upper Austria.

References

- 1. Mandelis A. Diffusion-Wave Fields: Mathematical Methods and Green Functions. New York: Springer; 2001.

- 2. Rosencwaig A. In: Mandelis A, editor. Non-Destructive Evaluation: Progress in Photothermal and Photoacoustic Science and Technology. New York: Elsevier; 1992.

- 3. Shannon CE. Bell Syst. Tech. J. 1948;27:379, 623. doi: 10.1002/j.1538-7305.1948.tb00917.x.

- 4. Jaynes ET. In: Ford K, editor. Statistical Physics. New York: Benjamin; 1963. p. 181.

- 5. Jaynes ET. Phys. Rev. 1957;106:620. doi: 10.1103/PhysRev.106.620.

- 6. Jaynes ET. Am. J. Phys. 1965;33:391. doi: 10.1119/1.1971557.

- 7. de Groot SR, Mazur P. Non-Equilibrium Thermodynamics. New York: Dover Publications; 1984.

- 8. Cleuren B, Van den Broeck C, Kawai R. Phys. Rev. Lett. 2006;96:050601. doi: 10.1103/PhysRevLett.96.050601.

- 9. Callen HB, Greene RF. Phys. Rev. 1952;86:702. doi: 10.1103/PhysRev.86.702.

- 10. Callen HB, Welton TA. Phys. Rev. 1951;83:34. doi: 10.1103/PhysRev.83.34.

- 11. Greene RF, Callen HB. Phys. Rev. 1952;88:1387. doi: 10.1103/PhysRev.88.1387.

- 12. Johnson JB. Phys. Rev. 1928;32:97. doi: 10.1103/PhysRev.32.97.

- 13. Nyquist H. Phys. Rev. 1928;32:110. doi: 10.1103/PhysRev.32.110.

- 14. Evans DJ, Cohen EGD, Morriss GP. Phys. Rev. Lett. 1993;71:2401.

- 15. Gallavotti G, Cohen EGD. Phys. Rev. Lett. 1995;74:2694. doi: 10.1103/PhysRevLett.74.2694.

- 16. Jarzynski C. Phys. Rev. Lett. 1997;78:2690. doi: 10.1103/PhysRevLett.78.2690.

- 17. Crooks GE. Phys. Rev. E. 1999;60:2721. doi: 10.1103/PhysRevE.60.2721.

- 18. Seifert U. Phys. Rev. Lett. 2005;95:040602. doi: 10.1103/PhysRevLett.95.040602.

- 19. Honerkamp J. Statistical Physics. New York: Springer; 1998.

- 20. Lemons DS. An Introduction to Stochastic Processes in Physics. Baltimore: The Johns Hopkins University Press; 2002.

- 21. Langevin P. Comptes rendus Académie des Sciences (Paris). 1908;146:530. (Trans. Gythiel A. Am. J. Phys. 1997;65:1079.)

- 22. Gillespie DT. Markov Processes. New York: Academic Press; 1992.

- 23. Cover TM, Thomas JA. Elements of Information Theory. 2nd ed. Hoboken, NJ: Wiley; 2006.

- 24. Parrondo JMR, Van den Broeck C, Kawai R. New J. Phys. 2009;11:073008. doi: 10.1088/1367-2630/11/7/073008.

- 25. Uhlenbeck GE, Ornstein LS. Phys. Rev. 1930;36:823. doi: 10.1103/PhysRev.36.823.

- 26. Chandrasekhar S. Rev. Mod. Phys. 1943;15:1. doi: 10.1103/RevModPhys.15.1.

- 27. Burgholzer P, Roitner H, Bauer-Marschallinger J, Grün H, Berer T, Paltauf G. Compensation of Ultrasound Attenuation in Photoacoustic Imaging. In: Beghi MG, editor. Acoustic Waves - From Microdevices to Helioseismology. 2011. ISBN: 978-953-307-572-3. http://www.intechopen.com/articles/show/title/compensation-of-ultrasound-attenuation-in-photoacoustic-imaging

- 28. Jarzynski C. Phys. Rev. E. 2006;73:046105. doi: 10.1103/PhysRevE.73.046105.

- 29. Kawai R, Parrondo JMR, Van den Broeck C. Phys. Rev. Lett. 2007;98:080602. doi: 10.1103/PhysRevLett.98.080602.

- 30. Carslaw HS, Jaeger JC. Conduction of Heat in Solids. 2nd ed. Oxford: Clarendon Press; 1959.

- 31. Buckingham MJ. Phys. Rev. E. 2005;72:026610. doi: 10.1103/PhysRevE.72.026610.

- 32. Barrett HH, Denny JL, Wagner RF, Myers KJ. J. Opt. Soc. Am. A. 1995;12:834. doi: 10.1364/JOSAA.12.000834.

- 33. Hansen PC. Rank-Deficient and Discrete Ill-Posed Problems: Numerical Aspects of Linear Inversion. Philadelphia: SIAM; 1987.