Abstract

Localization of sound sources is a considerable computational challenge for the human brain. Whereas the visual system can process basic spatial information in parallel, the auditory system lacks a straightforward correspondence between external spatial locations and sensory receptive fields. Consequently, the question of how different acoustic features supporting spatial hearing are represented in the central nervous system is still open. Functional neuroimaging studies in humans have provided evidence for a posterior auditory “where” pathway that encompasses non-primary auditory cortex areas, including the planum temporale (PT) and posterior superior temporal gyrus (STG), which are strongly activated by horizontal sound direction changes, distance changes, and movement. However, these areas are also activated by a wide variety of other stimulus features, posing a challenge for the interpretation that the underlying areas are purely spatial. This review discusses behavioral and neuroimaging studies on sound localization, and some of the competing models of representation of auditory space in humans.

1. Introduction

Determining the location of perceptual objects in extrapersonal space is essential in many everyday situations. For objects outside the field of vision, hearing is the only sense that provides such information. Thus, spatial hearing is a fundamental prerequisite for our efficient functioning in complex communication environments. For example, consider a person reaching for a ringing phone or a listener using audiospatial information to help focus on one talker in a chattering crowd (Brungart et al., 2002; Gilkey et al., 1997; Middlebrooks et al., 1991; Shinn-Cunningham et al., 2001). Spatial hearing has two main functions: it enables the listener to localize sound sources and to separate sounds based on their spatial locations (Blauert, 1997). While the spatial resolution is higher in vision (Adler, 1959; Recanzone, 2009; Recanzone et al., 1998), the auditory modality allows us to monitor objects located anywhere around us. The ability to separate sounds based on their location makes spatial auditory processing an important factor in auditory scene analysis (Bregman, 1990), a process of creating individual auditory objects, or streams, and separating them from background noise (Moore, 1997). Auditory localization mechanisms in humans can differ from those in other species used in animal neurophysiological studies. For example, in contrast to cats, we cannot move our ears towards the sound sources. Further, unlike in barn owls, our ears are at symmetrical locations on our heads, and sound elevation needs to be determined based on pinna-related spectral cues, which is less accurate than comparing the sounds received at the two asymmetric ears (Knudsen, 1979; Rakerd et al., 1999). In the following, we will review key findings that have elucidated the psychophysics and neural basis of audiospatial processing in humans.

2. Psychophysics of auditory spatial perception

2.1. Sound localization cues in different spatial dimensions

Spatial hearing is based on “binaural” and “monaural” cues (Yost et al., 1987). The two main binaural cues are differences in the time of arrival (the interaural time difference, ITD, or interaural phase difference, IPD) and differences in the received intensity (the interaural level difference, ILD) (Middlebrooks et al., 1991). The most important monaural localization cue is the change in the magnitude spectrum of the sound caused by the interaction of the sound with the head, body, and pinna before entering the ear (Blauert, 1997; Macpherson et al., 2007; Middlebrooks et al., 1991; Shaw, 1966; Wightman et al., 1989). Another monaural cue is the direct-to-reverberant energy ratio (DRR), which expresses the amount of sound energy that reaches our ears directly from the source vs. the amount that is reflected off the walls in enclosed spaces (Larsen et al., 2008). In general, monaural cues are more ambiguous spatial cues than binaural cues because the auditory system must make a priori assumptions about the acoustic features of the original sound in order to estimate the filtering effects corresponding to the monaural spatial cues.
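
As a rough illustration of the two binaural cues defined above, the following minimal Python sketch estimates an ITD (as the lag that maximizes the interaural cross-correlation) and an ILD (as the ratio of root-mean-square levels) from a pair of ear signals. The function names, the toy noise stimulus, the 10-sample delay, and the assumed 44.1 kHz sampling rate are illustrative assumptions, not methods taken from the cited studies.

```python
import math
import random

def rms(x):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))

def estimate_ild_db(left, right):
    """ILD in dB; positive when the right-ear signal is more intense."""
    return 20.0 * math.log10(rms(right) / rms(left))

def estimate_itd_samples(left, right, max_lag):
    """Lag (in samples) that maximizes the cross-correlation.

    A positive value means the left-ear signal lags the right-ear signal,
    i.e., the sound reached the right ear first.
    """
    n = len(left)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                score += right[i] * left[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

# Toy stimulus (assumption): broadband noise at the right ear; the left-ear
# signal is the same noise delayed by 10 samples (about 227 microseconds at
# an assumed 44.1 kHz sampling rate) and attenuated by a factor of two.
rng = random.Random(0)
right = [rng.gauss(0.0, 1.0) for _ in range(2000)]
delay = 10
left = [0.0] * delay + [0.5 * v for v in right[:-delay]]

itd = estimate_itd_samples(left, right, max_lag=20)
ild = estimate_ild_db(left, right)
print(itd, round(ild, 2))
```

In this toy case both cues point to the same (right) side; real ear signals are additionally shaped by frequency-dependent head and pinna filtering, which this sketch ignores.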

Positions of objects in three-dimensional (3D) space are usually described using either Cartesian (x, y, z) or spherical (azimuth, elevation, distance) coordinates. For studies of spatial hearing, the most natural coordinate system uses bipolar spherical coordinates (similar to the coordinate system used to describe a position on the globe) with the two poles at the two ears and the origin at the middle point between the ears (Duda, 1997). In this coordinate system the azimuth (or horizontal location) of an object is defined by the angle between the source and the interaural axis, the elevation (or vertical location) is defined as the angle around the interaural axis, and distance is measured from the center of the listener’s head. Using this coordinate system is natural when discussing spatial hearing because different auditory localization cues map onto these coordinate dimensions in a natural, monotonic manner. However, note that, if the examination is restricted to certain sub-regions of space, the Cartesian and spherical representations can be nearly equivalent. For example, for sources directly ahead of the listener the two representations are very similar.
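
The interaural coordinate system described above can be sketched as a conversion from Cartesian coordinates. The axis convention (x toward the right ear, y straight ahead, z up) and the function name are assumptions made for this example. The sketch reports the lateral angle measured from the median plane, which is the complement (90° minus) of the angle between the source and the interaural axis.

```python
import math

def cartesian_to_interaural(x, y, z):
    """Convert head-centered Cartesian coordinates to interaural-polar ones.

    Assumed axes: x points toward the right ear (along the interaural axis),
    y points straight ahead, z points up. Returns a tuple
    (lateral_deg, polar_deg, distance):
      lateral_deg: angle from the median plane, -90 (left) to +90 (right)
      polar_deg:   angle around the interaural axis, 0 = front, 90 = above,
                   180 = behind
      distance:    distance from the center of the head
    """
    distance = math.sqrt(x * x + y * y + z * z)
    if distance == 0.0:
        raise ValueError("the origin has no defined direction")
    lateral_deg = math.degrees(math.asin(x / distance))
    polar_deg = math.degrees(math.atan2(z, y))
    return lateral_deg, polar_deg, distance

print(cartesian_to_interaural(0.0, 1.0, 0.0))   # source straight ahead
print(cartesian_to_interaural(0.0, 0.0, 2.0))   # source overhead, 2 units away
```

All points sharing one lateral angle lie on a cone around the interaural axis; such points have approximately equal interaural differences in a spherical-head approximation, which is one reason this parameterization separates the binaural dimension from the spectral (polar-angle) dimension.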

Binaural cues are the primary cues for perception in the azimuthal dimension. The perceived azimuth of low-frequency sounds (below 1–2 kHz) is dominated by the ITD. For high-frequency stimuli (above 1–2 kHz), the auditory system weights the ILD more when determining the azimuth. This simple dichotomy (ITD for low frequencies, ILD for high frequencies) is referred to as the duplex theory (Strutt, 1907). It can be explained by considering physical and physiological aspects of how these cues change with azimuth and how they are neuronally extracted. However, there are limitations to the applicability of this theory. For example, for nearby sources, the ILD is available even at low frequencies (Shinn-Cunningham, 2000). Similarly, the ITD cue in the envelope of the stimulus, as opposed to the ITD in the fine structure, can be extracted by the auditory system even from high-frequency sounds (van de Par et al., 1997). Finally, in theory, the azimuth of a sound source can be determined also monaurally, because the high-frequency components of the sound are attenuated more compared to low-frequency components as the sound source moves contralaterally (Shub et al., 2008).
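
To give a sense of the magnitudes involved, the dependence of ITD on azimuth can be approximated with the classic spherical-head (Woodworth) formula, ITD ≈ (a/c)(θ + sin θ). This formula is not taken from the studies cited above; the head radius, speed of sound, and function name below are assumed values for illustration, and the azimuth θ is measured here from straight ahead rather than from the interaural axis.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: air at roughly 20 degrees Celsius
HEAD_RADIUS_M = 0.0875      # assumed: typical adult head radius

def woodworth_itd_seconds(azimuth_deg,
                          head_radius_m=HEAD_RADIUS_M,
                          c=SPEED_OF_SOUND_M_S):
    """Approximate ITD for a rigid spherical head and a distant source.

    azimuth_deg is measured from straight ahead and should lie within
    -90..+90 degrees for this approximation.
    """
    theta = math.radians(azimuth_deg)
    return (head_radius_m / c) * (theta + math.sin(theta))

for az in (0, 30, 60, 90):
    print(az, round(woodworth_itd_seconds(az) * 1e6, 1), "microseconds")
```

Under these assumptions the ITD grows monotonically from zero at the midline to roughly 0.65 ms for a source directly to the side.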

The main cue the human auditory system uses to determine the elevation of a sound source is the monaural spectrum determined by the interaction of the sound with the pinnae (Wightman et al., 1997). Specifically, there is a spectral notch that moves in frequency from approximately 5 kHz to 10 kHz as the source moves from 0° (directly ahead of listener) to 90° (above the listener’s head), considered to be the main elevation cue (Musicant et al., 1985). However, small head asymmetries may provide a weak binaural elevation cue.

The least understood dimension is distance (Zahorik et al., 2005). The basic monaural distance cue is the overall received sound level (Warren, 1999). However, to extract this cue, the listener needs a priori knowledge of the emitted level, which can be difficult since the level at which sounds are produced often varies. For nearby, lateral sources the ILD changes with source distance and provides a distance cue (Shinn-Cunningham, 2000). In reverberant rooms, the auditory system uses some aspect of reverberation to determine the source distance (Bronkhorst et al., 1999). This cue is assumed to be related to the DRR (Kopco et al., 2011). Finally, other factors like vocal effort for speech (Brungart et al., 2001) can also be used.
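
One simple way to quantify the DRR is to split a room impulse response into a short window around the direct-sound peak and the remaining reflected energy. The sketch below does this for a toy impulse response; the 2.5 ms window, the function name, and the toy values are assumptions, and published studies may define the direct portion differently.

```python
import math

def direct_to_reverberant_db(impulse_response, fs_hz, direct_window_ms=2.5):
    """DRR in dB: energy near the direct-sound peak vs. all other energy.

    The direct portion is taken as +/- direct_window_ms around the largest
    absolute sample (an assumed, simplified definition).
    """
    peak = max(range(len(impulse_response)),
               key=lambda i: abs(impulse_response[i]))
    half = int(round(direct_window_ms * 1e-3 * fs_hz))
    start = max(0, peak - half)
    stop = min(len(impulse_response), peak + half + 1)
    direct = sum(v * v for v in impulse_response[start:stop])
    reverberant = (sum(v * v for v in impulse_response[:start])
                   + sum(v * v for v in impulse_response[stop:]))
    if reverberant == 0.0:
        return float("inf")  # anechoic case: no reflected energy
    return 10.0 * math.log10(direct / reverberant)

# Toy impulse responses (assumption): one direct impulse and one reflection.
near = [0.0] * 100
near[10], near[60] = 1.0, 0.1
# Farther source: direct sound halved, reflected energy unchanged.
far = list(near)
far[10] = 0.5

drr_near = direct_to_reverberant_db(near, fs_hz=1000)
drr_far = direct_to_reverberant_db(far, fs_hz=1000)
print(round(drr_near, 2), round(drr_far, 2))
```

In the toy example, halving the direct-sound amplitude while leaving the reflection unchanged lowers the DRR by about 6 dB, which illustrates why the ratio can carry distance information that does not depend on the emitted level.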

To determine which acoustic localization cues are available in the sound produced by a target at a given location in a given environment, the Head-related transfer functions (HRTFs) and Binaural room impulse responses (BRIRs) can be measured and analyzed (Shinn-Cunningham et al., 2005). These functions/responses provide complete acoustic characterization of the spatial information available to the listener for a given target and environment, they vary slightly from listener to listener, and they also can be used to simulate the target in a virtual acoustic environment.
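
Simulating a target in a virtual acoustic environment amounts, in its simplest form, to convolving a source signal with the left-ear and right-ear impulse responses. The following minimal sketch shows the operation with hypothetical toy impulse responses (a pure delay and gain); measured HRTFs or BRIRs would be substituted in practice, and the function names are assumptions.

```python
def convolve(signal, impulse_response):
    """Direct-form linear convolution of two sample sequences."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out

def render_binaural(mono, ir_left, ir_right):
    """Return (left, right) ear signals for a mono source signal."""
    return convolve(mono, ir_left), convolve(mono, ir_right)

# Hypothetical toy impulse responses for a source on the right:
# the left ear receives the sound two samples later and at half amplitude.
ir_right = [1.0, 0.0, 0.0]
ir_left = [0.0, 0.0, 0.5]

left_ear, right_ear = render_binaural([1.0, 2.0, 3.0], ir_left, ir_right)
print(left_ear)
print(right_ear)
```

For long measured responses, an FFT-based convolution would normally replace the quadratic-time loop used here.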

2.2. Natural environments: Localization in rooms and complex scenes

While the basic cues and mechanisms of spatial hearing in simple scenarios are well understood, much less is known about natural environments, in which multiple acoustic objects are present in the scene and where room reverberation distorts the cues. When the listener is in a room or other reverberant environment the direct sound received at the ears is combined with multiple copies of the sound reflected off the walls before arriving at the ears. Reverberation alters the monaural spectrum of the sound as well as the ILDs and IPDs of the signals reaching the listener (Shinn-Cunningham et al., 2005). These effects depend on the source position relative to the listener as well as on the listener position in the room. On the other hand, reverberation itself can provide a spatial cue (DRR). Most studies of sound localization were performed in an anechoic chamber (Brungart, 1999; Hofman et al., 1998; Makous et al., 1990; Wenzel et al., 1993; Wightman et al., 1997). There are also several early studies of localization in reverberant environments (Hartmann, 1983; Rakerd et al., 1985; Rakerd et al., 1986). They show that reverberation causes small degradations in directional localization accuracy. However, performance can improve with practice (Irving et al., 2011; Shinn-Cunningham, 2000). In addition, several recent studies measured the perceived source distance (Bronkhorst et al., 1999; Kolarik et al., 2013; Kopco et al., 2011; Ronsse et al., 2012; Zahorik et al., 2005). These studies show that in reverberant space, distance perception is more accurate, due to additional information provided by the DRR cue.

Processing of spectral and binaural spatial cues becomes particularly critical when multiple sources are presented concurrently. Under such conditions, the auditory system’s ability to correctly process spatial information about the auditory scene is essential both for speech processing (Best et al., 2008; Brungart et al., 2007) and target localization (Drullman et al., 2000; Hawley et al., 1999; Simpson et al., 2006). However, the strategies and cues the listeners use in complex environments are not well understood. It is clear that factors like the ability to direct selective spatial attention (Sach et al., 2000; Shinn-Cunningham, 2008; Spence et al., 1994) or the ability to take advantage of a priori information about the distribution of targets in the scene (Kopco et al., 2010) can improve performance. On the other hand, the localization accuracy can be adversely influenced by preceding stimuli (Kashino et al., 1998) or even by the context of other targets that are clearly spatio-temporally separated from the target of interest (Kopco et al., 2007; Maier et al., 2010).

2.3. Implications for non-invasive imaging studies of spatial processing

Psychophysical studies provide hypotheses and methodology for non-invasive studies of spatial brain processing. It is critical to understand differences between these two approaches when linking psychophysical and neuronal data. One example in spatial hearing research is the examination of ILD. For any signal, whenever its ILD is changed, by definition the monaural level of the signal at one of the ears (or at both) has to change. Therefore, when examining the ILD cue behaviorally or neuronally, one has to make sure that the observed effects of changing ILD are not actually caused by the accompanying change in the monaural levels. Behaviorally, the usefulness of the monaural level cues can be easily eliminated by randomly roving the signal level at both ears from presentation to presentation, while the ILD is kept constant or varied systematically to isolate its usefulness (Green, 1988). However, the same approach results in monaural level variations at both ears, which, although not useful behaviorally, will result in additional broad neuroimaging activations of various feature detectors. Several methods have been used to mitigate this issue, for example, comparing the activation resulting from varying ILD to activation when varying only the monaural levels (Stecker et al., 2013). Since none of the alternative methods completely eliminates monaural level cues, the available neuroimaging studies of ILD processing all have monaural cues as a potential confound (Lehmann et al., 2007; Zimmer et al., 2006).
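
The level-roving procedure described above can be sketched as follows: on every presentation a random overall level offset is applied to both ears, so the monaural level at either ear is uninformative while the ILD is preserved exactly. The base level, roving range, and function name are assumed values chosen only for illustration.

```python
import random

def roved_ear_levels(ild_db, base_level_db, rove_range_db, rng):
    """Return (left_db, right_db) for one presentation.

    The same random offset, drawn uniformly from +/- rove_range_db / 2,
    is added at both ears, so right_db - left_db always equals ild_db.
    """
    rove = rng.uniform(-rove_range_db / 2.0, rove_range_db / 2.0)
    left_db = base_level_db + rove - ild_db / 2.0
    right_db = base_level_db + rove + ild_db / 2.0
    return left_db, right_db

rng = random.Random(1)
trials = [roved_ear_levels(ild_db=10.0, base_level_db=65.0,
                           rove_range_db=20.0, rng=rng)
          for _ in range(50)]
left_levels = [left for left, _ in trials]
print(round(min(left_levels), 1), round(max(left_levels), 1))
```

The printed range shows the side effect noted in the text: the monaural level at each ear now varies from presentation to presentation, which is irrelevant for the listener's task but can still drive level-sensitive neural responses.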

One of the largest challenges for neuroimaging of spatial auditory processing is the necessity to use virtual acoustics techniques to simulate sources arriving from various locations (Carlile, 1996). These techniques, based on simulating the sources using HRTFs or BRIRs, require individual measurements of these responses for veridical simulation of sources in virtual space. While this issue can be relatively minor in simple acoustic scenarios, the human ability to correctly interpret the distorted virtual spatial cues might be particularly limited in a complex auditory scene, in which the cognitive load associated with the tasks is much higher. Therefore, it is important that the psychophysical validation accompanies neuroimaging studies of spatial hearing in virtual environments (Schechtman et al., 2012).

The following sections provide an overview of the neuroimaging studies of auditory spatial processing. The overview focuses on processing of basic auditory cues and on spatial processing in simple auditory conditions, as very little attention has been paid in the neuroimaging literature to the mechanisms and strategies used by the listeners in natural, complex multi-target environments.

3. Non-invasive studies of spatial processing in human auditory cortex

Non-human primate models (Thompson et al., 1983) and studies with neurological patients (Zatorre et al., 2001) show that accurate localization of sound sources is not possible without intact auditory cortex (AC), although reflexive orienting toward sound sources may be partially preserved in, for example, AC-ablated cats (Thompson et al., 1978) or monkeys (Heffner et al., 1975). In comparison to the other sensory systems, the functional organization of human ACs is still elusive. Nonhuman primate studies (Kaas et al., 2000; Kosaki et al., 1997; Merzenich et al., 1973; Rauschecker et al., 1995) suggest several distinct AC subregions processing different sound attributes. The subregion boundaries have been identified based on reversals of tonotopic (or cochleotopic) gradients (Kosaki et al., 1997; Rauschecker et al., 1995). This cochleotopic organization is analogous to the hierarchical retinotopic representation of visual fields, that is, the different locations of the basilar membrane are represented in a topographic fashion up to the higher-order belt areas of non-human primate AC. In humans, the corresponding area boundaries have been studied using non-invasive measures such as functional MRI (fMRI) (Formisano et al., 2003; Langers et al., 2012; Seifritz et al., 2006; Talavage et al., 2004). However, the results across the different studies have not been fully consistent and the exact layout of human AC fields is still unknown.

Characterizing cortical networks contributing to spatial auditory processing requires methods allowing whole-brain sampling with high spatial and temporal resolution. For example, although single-unit recordings in animals provide a very high spatiotemporal resolution, multisite recordings are still challenging and limited in the number of regions that can be measured simultaneously (Miller et al., 2005). There are also essential differences in how different species process auditory spatial information. Meanwhile, although single-unit recordings of AC have been reported in humans (Bitterman et al., 2008), intracranial recordings are not possible except in rare circumstances where they are required as part of a presurgical preparation process. Non-invasive neuroimaging techniques are, thus, clearly needed. However, neuroimaging of human AC has been challenging for a number of methodological reasons. The electromagnetic methods EEG and magnetoencephalography (MEG) provide temporally accurate information, but their spatial localization accuracy is limited due to the ill-posed inverse problem (any extracranial electromagnetic field pattern can be explained by an infinite number of different source configurations) (Helmholtz, 1853). fMRI provides spatially accurate information, but its temporal accuracy is limited and further reduced when sparse sampling paradigms are used to eliminate the effects of acoustical scanner noise. These challenges are further complicated by the relatively small size of the adjacent subregions in ACs. Consequently, although the amount of information from neuroimaging studies is accumulating, there is still no widely accepted model of human AC, which has contributed to disagreements on how sounds are processed in the brain.

Although results comparable to the detailed mapping achieved in human visual cortices are not yet available, evidence for broader divisions between the anterior “what” vs. posterior “where” pathways of non-primary ACs is accumulating from non-human primate and human neuroimaging studies (Ahveninen et al., 2006; Barrett et al., 2006; Rauschecker, 1998; Rauschecker et al., 2000; Rauschecker et al., 1995; Tian et al., 2001). In humans, the putative posterior auditory “where” pathway, encompassing the planum temporale (PT) and posterior superior temporal gyrus (STG), is strongly activated by horizontal sound direction changes (Ahveninen et al., 2006; Brunetti et al., 2005; Deouell et al., 2007; Tata et al., 2005), movement (Baumgart et al., 1999; Formisano et al., 2003; Krumbholz et al., 2005; Warren et al., 2002), intensity-independent distance cues (Kopco et al., 2012), and under conditions where separation of multiple sound sources is required (Zündorf et al., 2013). However, it is still unclear how the human AC encodes the acoustic space: Is there an orderly topographic organization of neurons representing different spatial origins of sounds, or are sound locations, even at the level of non-primary cortices, computed by neurons that are broadly tuned to more basic cues such as ITD and ILD, using a “two-channel” rate code (McAlpine, 2005; Middlebrooks et al., 1994; Middlebrooks et al., 1998; Stecker et al., 2003; Werner-Reiss et al., 2008)? At the most fundamental level, it is thus still debatable whether sound information is represented based on the same hierarchical and parallel processing principles found in the other sensory systems, or whether AC processes information in a more distributed fashion.

In the following, we will review human studies of spatial processing of sound information, obtained by non-invasive measurements of brain activity. Notably, spatial cues are extensively processed in the subcortical auditory pathways, and the functionally segregated pathways for spectral cues, ITD, and ILD have been thoroughly investigated in animal models (Grothe et al., 2010). In particular, the subcortical mechanisms of ITD processing are a topic of debate, as the applicability of long-prevailing theories of interaural coincidence detection (Jeffress, 1948) to mammals has been recently challenged (McAlpine, 2005). Here, we will, however, concentrate on cortical mechanisms of sound localization that have been intensively studied using human neuroimaging, in contrast to the relatively limited number of fMRI (Thompson et al., 2006) or EEG (Junius et al., 2007; Ozmen et al., 2009) studies on subcortical activations to auditory spatial cues. On the same note, human neuropsychological (Adriani et al., 2003; Clarke et al., 2000; Clarke et al., 2002) and neuroimaging (Alain et al., 2001; Arnott et al., 2004; Bushara et al., 1999; De Santis et al., 2006; Huang et al., 2012; Kaiser et al., 2001; Maeder et al., 2001; Rämä et al., 2004; Weeks et al., 1999) studies have produced detailed information on networks contributing to higher-order cognitive control of auditory spatial processing beyond ACs, including posterior parietal (e.g., intraparietal sulcus) and frontal regions (e.g., premotor cortex/frontal eye fields, lateral prefrontal cortex). In this review, we will, however, specifically concentrate on studies focusing on auditory areas of the superior temporal cortex.

3.1. Cortical processing of ITD and ILD cues: separate channels or joint representations?

Exactly how the human brain represents binaural cues is currently a matter of active debate. According to the classic “place code model” of subcortical processing (Jeffress, 1948), delay lines from each ear form a coincidence detector that activates topographically organized ITD-sensitive neurons in the nucleus laminaris, the bird analogue of medial superior olive in mammals. As for cortical processing in humans, several previous EEG and MEG results, demonstrating change-related auditory responses that increase monotonically as a function of ITD (McEvoy et al., 1993; McEvoy et al., 1991; Nager et al., 2003; Paavilainen et al., 1989; Sams et al., 1993; Shestopalova et al., 2012) or ILD (Paavilainen et al., 1989), as well as fMRI findings of responses that increase as a function of ITD separation between competing sound streams (Schadwinkel et al., 2010b), could be interpreted in terms of topographical ITD/ILD representations in AC (McEvoy et al., 1993). However, given the lack of direct neurophysiological evidence of sharply tuned ITD neurons in mammals, an alternative “two channel model” has been more recently presented, predicting that neuron populations tuned relatively loosely to ipsilateral or contralateral hemifields vote for the preferred perception of horizontal directions (McAlpine, 2005; Stecker et al., 2005). Evidence for the two-channel model in humans was found, for example, in a recent neuroimaging study showing that when ITD increases, the laterality of midbrain fMRI responses switches sides, even though perceived location remains on the same side (Thompson et al., 2006). Subsequent MEG (Salminen et al., 2010a) and EEG (Magezi et al., 2010) studies have, in turn, provided support for the two-channel model at the level of human AC.
It is, however, important to note that the two-channel model is generally restricted to ITD, and its behavioral validation is very limited, compared to a range of studies that found a good match between human psychophysical data and place-code models (Bernstein et al., 1999; Colburn et al., 1997; Stern et al., 1996; van de Par et al., 2001). Additional mechanisms are needed for explaining our ability to discriminate between the front and the back, vertical directions, and sound source distances. Most importantly, a higher-level model of auditory spatial processing, which would describe how the separate cues are integrated into one coherent 3D representation of auditory space, is still missing.
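
The idea behind a two-channel rate code can be illustrated with a deliberately simplified toy model: two broadly tuned populations, each preferring one hemifield, whose relative activity is read out to recover azimuth. The logistic tuning shape, the slope parameter, and the readout below are assumptions chosen for mathematical convenience; they are not the specific models proposed in the cited studies.

```python
import math

def hemifield_channels(azimuth_deg, slope_deg=30.0):
    """Return (left, right) channel activity for a given azimuth.

    Each channel is a broad logistic function of azimuth (assumed shape);
    positive azimuths are to the right. The two activities sum to one.
    """
    right = 1.0 / (1.0 + math.exp(-azimuth_deg / slope_deg))
    left = 1.0 - right
    return left, right

def decode_azimuth(left, right, slope_deg=30.0):
    """Read out azimuth from the ratio of the two channel activities."""
    return slope_deg * math.log(right / left)

for az in (-60.0, 0.0, 25.0):
    left, right = hemifield_channels(az)
    print(az, round(left, 3), round(right, 3),
          round(decode_azimuth(left, right), 3))
```

In contrast to a place code, no unit in this sketch is sharply tuned to a particular direction; location is carried only by the balance between the two channels, with the steepest change in activity near the midline.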

A related open question, therefore, is whether ITD, ILD, and other spatial features are processed separately at the level of non-primary AC “where” areas, or whether higher-order ACs include “complex” neurons representing feature combinations of acoustic space. The former hypothesis of separate subpopulations is supported by early event-related potential (ERP) studies comparing responses elicited by unexpected changes in ITD or ILD (Schröger, 1996). Similarly, differences in early (i.e., less than 200 ms after sound onset) cortical response patterns to ITDs vs. ILDs were observed in recent MEG (Johnson et al., 2010) and EEG (Tardif et al., 2006) studies, interpreted to suggest separate processing of different binaural cues in AC. These studies also suggested that a joint representation of ILD and ITD could be formed at the earliest 250 ms after sound onset, reportedly (Tardif et al., 2006) dominated by structures beyond the AC itself. However, when interpreting the results of EEG/ERP and MEG studies, it should be noted that separating the exact neuronal structures contributing to the reported effects is complicated (particularly with EEG). Moreover, few previous studies reporting response differences to ITD and ILD have considered the fact that, for a fixed target azimuth, ILD changes as a function of sound frequency (Shinn-Cunningham et al., 2000). Particularly if broadband stimuli are presented, using a fixed ILD should activate neurons representing different directions at different frequency channels, leading to an inconsistency between the respective ITD and ILD representations and to an inherent difference in the response profiles.

A different conclusion could be reached from a recent MEG study (Palomäki et al., 2005), involving a comprehensive comparison of different auditory spatial cues, which showed that ITD or ILD alone are not sufficient for producing direction-specific modulations in non-primary ACs. A combination of ITD and ILD was reportedly the “minimum requirement” to achieve genuinely spatially selective responses at this processing level, and including all 3D cues available in individualized HRTF measurements resulted in the clearest direction-specific results. These results are in line with earlier observations, suggesting sharper mismatch responses to free-field direction changes than to the corresponding ILD/ITD differences (Paavilainen et al., 1989), as well as with recent fMRI studies comparing responses to sounds with all available (i.e., “externalized”) vs. impoverished spatial cues (Callan et al., 2012). Meanwhile, a recent EEG study (Junius et al., 2007) on brainstem auditory evoked potentials (BAEP, peaking <10 ms from sound onset) and middle-latency auditory evoked potentials (MAEP, peaking <50 ms from sound onset) suggests that ITD and ILD are processed separately at the level of the brainstem and primary AC. Similar conclusions could be reached based on 80-Hz steady-state response (SSR) studies (Zhang et al., 2008) and BAEP measurements of the so-called cochlear latency effect (Ozmen et al., 2009). Interestingly, the study of Junius and colleagues also showed that, unlike the responses reflecting non-primary AC function (Callan et al., 2012; Palomäki et al., 2005), the BAEP and MAEP components were not larger for individualized 3D simulations containing all direction cues than for ITD/ILD. This could be speculated to suggest that processing of spectral direction cues occurs in non-primary ACs, and that joint representations of auditory space do not exist before this stage.
Taken together, the existing human results suggest that ILD and ITD are processed separately at least at the level of the brainstem (Ozmen et al., 2009), consistent with predictions of numerous previous animal neurophysiological studies (Grothe et al., 2010). Further, it seems that the combination of individual cues into cognitive representations does not occur before non-primary AC areas (Junius et al., 2007; Zhang et al., 2008), and that the latency window of the formation of a joint representation of acoustic space is somewhere between 50–250 ms (Johnson et al., 2010; Palomäki et al., 2005; Tardif et al., 2006). The interpretation that AC processes feature combinations of acoustic space, in contrast to the processing of simpler cues that occurs in subcortical structures, is also consistent with non-human primate evidence that ablation of AC is particularly detrimental to functions that require the combination of auditory spatial features into a complete spatial representation (Heffner et al., 1975; Heffner et al., 1990).

3.2. Motion sensitivity or selectivity of non-primary auditory cortex

Given the existing evidence for separate processing pathways for stationary and moving visual stimuli, the neuronal processes underlying motion perception have also been intensively studied in the auditory system. Early EEG studies suggested that auditory apparent motion following from ITD manipulations results in an auditory ERP shift that differs from responses to stationary sounds (Halliday et al., 1978). Evidence for direction-specific motion sensitive neurons in human AC was also found in an early MEG study (Mäkelä et al., 1996), which manipulated the ILD and binaural intensity of auditory stimuli to simulate an auditory object moving along the azimuth, or moving towards or away from the subject. An analogous direction-specific phenomenon was observed in a more recent ERP study (Altman et al., 2005).

After the initial ERP studies, auditory motion perception has been intensively studied using metabolic/hemodynamic imaging measures. A combined fMRI and positron emission tomography (PET) study by Griffiths and colleagues (1998) showed motion-specific activations in right posterior parietal areas, consistent with preceding neuropsychological evidence (Griffiths et al., 1996). However, in the three subjects measured using fMRI, there seemed to be an additional activation cluster near the boundary between parietal lobe and posterior superior temporal plane. The first study that reported motion-related activations in AC itself was conducted by Baumgart and colleagues (1999), who showed significantly increased activations for amplitude modulated (AM) sounds containing motion-inducing IPD vs. stationary AM sounds in the right PT. Indications of roughly similar effects, with the strongest activations in the right posterior AC, were found in the context of an fMRI study comparing visual, somatosensory, and auditory motion processing (Bremmer et al., 2001). A combined PET and fMRI study by Warren and colleagues (2002), in turn, showed a more bilateral pattern of PT activations in a variety of well-controlled comparisons between moving and stationary auditory stimuli, simulated using both IPD manipulations and generic HRTF simulations. Posterior non-primary AC activations to acoustic motion, with more right-lateralized and posterior-medial focus than those related to frequency modulations (FM), were also found in a subsequent factorial fMRI study (Hart et al., 2004). The role of PT and posterior STG in motion processing has also been supported by fMRI studies comparing acoustic motion simulated by ITD cues to spectrally matched stationary sounds (Krumbholz et al., 2007; Krumbholz et al., 2005), and by recent hypothesis-free fMRI analyses (Alink et al., 2012).
It has also been shown that posterior non-primary AC areas are similarly activated by horizontal and vertical motion, simulated by using individualized spatial simulations during fMRI measurements (Pavani et al., 2002) or generated by using free-field loudspeaker arrays during EEG measurements (Getzmann et al., 2010).

Previous fMRI studies have also compared neuronal circuits activated during “looming” vs. receding sound sources (Hall et al., 2003; Seifritz et al., 2002; Tyll et al., 2013). The initial fMRI studies in humans would seem to suggest that increasing and decreasing sound intensity, utilized to simulate motion, activates similar posterior non-primary AC structures as horizontal or vertical acoustic motion (Seifritz et al., 2002). However, it is still an open question whether looming vs. receding sound sources are differentially processed in AC, as suggested by non-human primate models (Maier et al., 2007) and some recent human fMRI studies (Tyll et al., 2013), or whether the perceptual saliency associated with looming emerges in higher-level polymodal association areas (Hall et al., 2003; Seifritz et al., 2002).

Taken together, there is evidence from studies comparing auditory motion to “stationary” sounds that a motion-sensitive area may exist in posterior non-primary ACs, including PT and posterior STG. However, it is not yet entirely clear whether the underlying activations reflect genuinely motion-selective neurons, or whether auditory motion is inferred from an analysis of position changes of discretely sampled loci in space. More specifically, AC neurons are known to be “change detectors”. Activation to a constant stimulus repeated at a high rate gets adapted, i.e., suppressed in amplitude, while a change in any physical dimension, including the direction of origin, results in a release from adaptation (Butler, 1972; Jääskeläinen et al., 2007; Jääskeläinen et al., 2011; Näätänen et al., 1988; Ulanovsky et al., 2003) (for a brief introduction, see Fig. 1a; Fig. 2a). Evidence for direction-specific auditory-cortex adaptation effects has been found in numerous previous ERP studies (Altman et al., 2010; Butler, 1972; Nager et al., 2003; Paavilainen et al., 1989; Shestopalova et al., 2012). One might, thus, hypothesize that increased fMRI activations to moving sounds reflect release from adaptation in populations representing discrete spatial directions, as a function of increased angular distance that the moving sound “travels” in relation to the stationary source. Evidence supporting this speculation was found in an fMRI study (Smith et al., 2004) suggesting similar PT activations to “moving” noise bursts, with smoothly changing ILD, and “stationary” noise bursts with eight randomly-picked discrete ILD values from the corresponding range. This result, interpreted to disprove the existence of motion-selective neurons, was supported by a subsequent event-related adaptation fMRI study by the same authors (Smith et al., 2007) that showed no differences in adaptation to paired motion patterns vs. paired stationary locations in the same direction ranges.
Analogous results were also found in a subsequent ERP study (Getzmann et al., 2011), which compared suppression of responses to moving sounds (to left or right from 0 degrees) that followed either stationary (0 degrees, or 32 degrees to the right or left) or “scattered” adaptor sounds (scattered directions 0–32 degrees right or left, or from 32 left to 32 right). EEG responses were adapted most pronouncedly by a scattered adaptor that was spatially congruent with the motion probe. However, it is also noteworthy that, particularly in the fMRI studies that purportedly refuted the existence of motion selective neurons (Smith et al., 2007; Smith et al., 2004), the differences in directions occurred at larger angular distance steps in the stationary than in the motion sounds. Noting that release from adaptation should increase as a function of the angular distance between the subsequently activated representations, one might have expected larger responses during the stationary than moving sounds (Shestopalova et al., 2012). The lack of such increases leaves room for an interpretation that an additional motion specific population could have contributed to the cumulative responses of moving sounds at least in the fMRI experiments (Smith et al., 2007; Smith et al., 2004).
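The adaptation argument above can be illustrated with a toy calculation. The following Python sketch is not a model taken from any of the cited studies; it simply assumes that the response to each sound is released from adaptation as a smooth, monotonic function of the angular step from the immediately preceding sound. The function names, the Gaussian form, the tuning width, the adapted floor, and the direction sequences are all hypothetical choices made for illustration only.

```python
import math


def release_from_adaptation(step_deg, tuning_width_deg=30.0, adapted_floor=0.2):
    """Response to a sound that follows another sound `step_deg` away.

    Assumption: the overlap between the populations driven by the two
    sounds falls off as a Gaussian of their angular separation. With full
    overlap the response is suppressed to `adapted_floor`; with no overlap
    it recovers to the unadapted value of 1.0.
    """
    overlap = math.exp(-0.5 * (step_deg / tuning_width_deg) ** 2)
    return adapted_floor + (1.0 - adapted_floor) * (1.0 - overlap)


def summed_response(directions_deg):
    """Sum of responses over a sequence of sound directions (in degrees)."""
    total = 1.0  # the first sound in the sequence is assumed to be unadapted
    for previous, current in zip(directions_deg, directions_deg[1:]):
        total += release_from_adaptation(abs(current - previous))
    return total


# Hypothetical eight-sound sequences spanning the same direction range.
constant = [0] * 8                                # one repeated direction
moving = [-28, -20, -12, -4, 4, 12, 20, 28]       # smooth sweep, 8-degree steps
scattered = [-28, 4, -20, 12, -12, 20, -4, 28]    # same directions, larger steps

for name, sequence in [("constant", constant),
                       ("moving", moving),
                       ("scattered", scattered)]:
    print(f"{name:9s} summed response = {summed_response(sequence):.2f}")
```

Under these assumptions, the scattered sequence yields the largest summed response, because every one of its steps is larger than the steps of the smooth sweep. A purely adaptation-based account would therefore predict larger responses to scattered stationary sounds than to moving sounds, which is why equal responses in the two conditions leave room for an additional motion-specific contribution.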

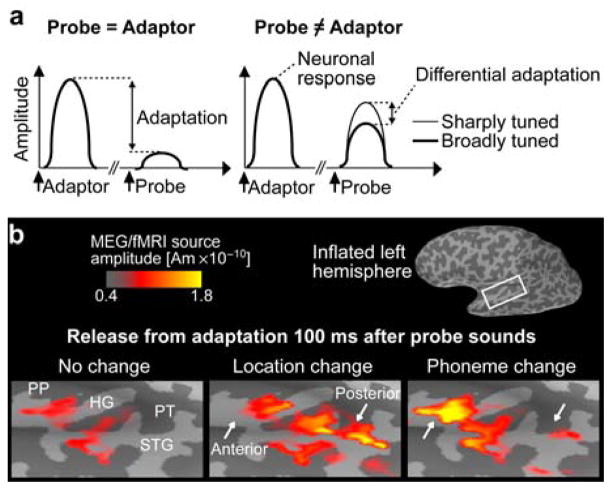

Figure 1.

Adaptation studies of auditory spatial processing. (a) Neuronal adaptation refers to suppression of responses to Probe sounds, as a function of their similarity and temporal proximity to preceding Adaptor sounds. Differential release from adaptation due to Probe vs. Adaptor differences may reveal populations tuned to the varied feature dimension. (b) Our previous MEG/fMRI adaptation data (Ahveninen et al., 2006), revealing differential release from adaptation due to directional vs. phonetic differences between Probe and Adaptor sounds, which supports the existence of anterior “what” and posterior “where” AC pathways (i.e., there is specific release from adaptation in posterior AC following a sound location change from Adaptor to Probe). An fMRI-weighted MEG source estimate is shown in a representative subject.

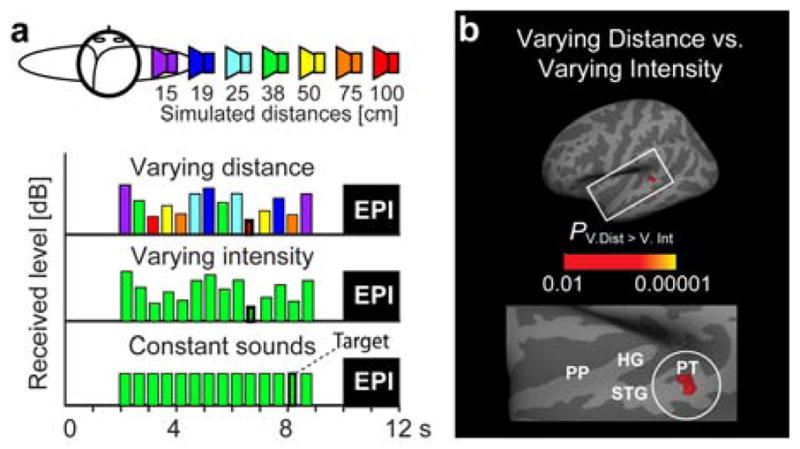

Figure 2.

fMRI studies on intensity-independent auditory distance processing. (a) Our recent fMRI adaptation paradigm (Kopco et al., 2012), comparing responses to sounds that are “Constant”, or contain “Varying distance” cues (all possible 3D distance cues 15–100 cm from the listener) or “Varying intensity” cues (other cues corresponding constantly to 38 cm). This kind of adaptation fMRI design presumably differentiates the tuning properties of neurons within each voxel (Grill-Spector et al., 2001), analogously to the MEG/EEG adaptation example above. (Subjects paid attention to unrelated, randomly presented duration decrements, to control attention effects.) (b) Adaptation fMRI data (Kopco et al., 2012), demonstrating a comparison between the varying distance and varying intensity conditions (N=11). Maximal difference is observed in the posterior STG/PT, possibly reflecting neurons sensitive to intensity-independent distance cues.

3.3. Adaptation evidence for differential populations sensitive to sound identity and location

Recent studies have provided accumulating evidence that adaptation designs may help examine feature tuning of adjacent areas of AC (Figs. 1, 2). Specifically, in line with the predictions of the dual pathway model (Rauschecker et al., 2000), our cortically constrained MEG/fMRI study (Ahveninen et al., 2006) suggested that neuron populations in posterior non-primary AC (PT, posterior STG) demonstrate a larger release from adaptation due to spatial than phonetic changes, while the areas anterior to HG demonstrated larger release from adaptation due to phonetic than spatial changes (Fig. 1b). The activation of the presumptive posterior “where” pathway preceded the anterior pathway by about 10–30 ms in the MEG/fMRI source estimates of the N1 peak latency, in line with our earlier MEG/fMRI study suggesting that the putative anterior and posterior N1 components may reflect parallel processing of features relevant for sound-object identity vs. location processing (Jääskeläinen et al., 2004).

Similar results, which could be interpreted in terms of neuronal adaptation, have emerged in fMRI studies comparing activations to sound pitch and locations. Warren and Griffiths (2003) showed strongest activations (i.e., release from adaptation) to varying vs. constant pitch sequences in anterior non-primary ACs, while the activations to varying vs. fixed sound locations peaked in more posterior AC areas. A subsequent fMRI study by Barrett and Hall (2006) manipulated the level of pitch (no pitch, fixed pitch, varying pitch) and spatial information (“diffuse” sound source, compact source at fixed location, compact source at varying location) in a full factorial design: the varying vs. fixed pitch comparison resulted in strongest release from adaptation in anterior non-primary AC, while the varying vs. fixed location contrast activated the bilateral PT. Evidence for a very similar distribution of “what” vs. “where” activations was provided in a subsequent study comparing fMRI and EEG activations during location and pattern changes in natural sounds (Altmann et al., 2007), as well as in an fMRI study that compared activations to temporal pitch and acoustic motion stimuli (Krumbholz et al., 2007). Interestingly, an adaptation fMRI study by Deouell and colleagues (2007) showed bilateral posterior non-primary AC activations to spatially changing vs. constant sounds while the subjects’ attention was actively directed to a visual task. The adaptation fMRI study of Deouell et al. (2007) also provided support for the dual pathway model, as one of the supporting experiments contained a pitch-deviant oddball condition, which suggested strongest release from adaptation as a function of pitch changes in anterior non-primary AC areas.

3.4. Contralateral vs. bilateral representation of acoustic space

Human studies have shown that monaural stimuli (i.e., infinite ILD) generate a stronger response in the hemisphere contralateral to the stimulated ear (e.g., Virtanen et al., 1998). However, recent fMRI studies comparing monaural and binaural stimuli suggest that the hard-wired lateralization is stronger in primary than non-primary AC (Langers et al., 2007; Woods et al., 2009b), which is in line with previous observations showing stronger contralaterality effects in primary than in posterior non-primary AC activations to 3D sounds (Pavani et al., 2002). Meanwhile, although certain animal lesion studies suggest contralateralized localization deficits after unilateral AC lesions (Jenkins et al., 1982), human AC lesion data seem to support right-hemispheric dominance of auditory spatial processing (Zatorre et al., 2001). A pattern where the right AC responds to ITDs lateralized to both hemifields but the left AC responds only to right-hemifield ITDs has been replicated in several MEG studies (Kaiser et al., 2000; Salminen et al., 2010b) and fMRI studies using motion stimuli (Krumbholz et al., 2005). Indirect evidence for such a division has been provided by right-hemisphere dominance of motion-induced fMRI activations (Baumgart et al., 1999; Griffiths et al., 1998; Hart et al., 2004) or MEG activations to directional stimuli (Palomäki et al., 2005; Tiitinen et al., 2006).

3.5. New directions in imaging studies on auditory spatial processing

3.5.1. Neuronal representations of distance cues

Indices of distinct auditory circuits processing auditory distance were originally found in studies of looming, i.e., sound sources approaching vs. receding from the listener (Seifritz et al., 2002). In these studies, the essential distance cue has been sound intensity. In the AC, the greatest activations for sounds with changing vs. constant level were specifically observed in the right temporal plane. However, humans can discriminate the distance of sound sources even without sound intensity cues. Therefore, we recently applied an adaptation fMRI design, analogous to that of Deouell et al. (2007), to investigate the neuronal bases of auditory distance perception in humans. The results suggested that posterior superior temporal gyrus (STG) and PT may include neurons sensitive to intensity-independent cues of sound-source distance (Kopco et al., 2012) (Fig. 2).

3.5.2. Spatial frames of reference

Previous neuroimaging studies have almost exclusively concentrated on auditory spatial cues that are craniocentric, i.e., presented relative to the subject’s head coordinate system. However, the human brain can preserve a constant perception of auditory space although our head moves relative to the acoustic environment, such as when one turns the head to focus on one of many competing sound sources. The neuronal basis of such allocentric auditory space perception is difficult to study using methods such as fMRI, PET, or MEG. In contrast, EEG allows for head movements because the electrodes are attached to the subject’s scalp, while the other neuroimaging methods are based on fixed sensor arrays. However, head movements may lead to large physiological and other artefacts in EEG. Despite the expected technical complications, recent auditory oddball EEG studies conducted using HRTFs (Altmann et al., 2009) or in the free field (Altmann et al., 2012) have reported differences in change-related responses to craniocentric deviants (subjects’ head turned but sound stimulation kept constant) vs. allocentric deviants (subjects’ head turned, sound direction moved relative to the environment but not relative to the head). The results suggested that craniocentric differences are processed in the AC, and that allocentric differences could involve parietal structures. More detailed examination of the underlying neuronal processes in humans is clearly warranted.

Similar questions about reference frames have been posed with respect to audiovisual perception, since the visual spatial representation is primarily eye-centered (retinotopic representation relative to the direction of gaze) while auditory perception is primarily craniocentric (Porter et al., 2006). The ventriloquism effect and aftereffect are illusions that make it possible to study how the spatial information from the two sensory modalities is aligned, what transformations the unimodal representations undergo in this process, and the short-term plasticity that can result from the perceptual alignment process (Bruns et al., 2011; Recanzone, 1998; Wozny et al., 2011). Using the ventriloquism aftereffect illusion in humans and non-human primates, we recently showed that the coordinate frame in which vision calibrates the auditory spatial representation is a mixture of eye-centered and craniocentric frames, suggesting that perhaps both representations get transformed in a way that is most consistent with the motor commands of the response to stimulation in either modality (Kopco et al., 2009).

3.5.3. Top-down modulation of auditory cortex spatial representations

In contrast to the accurate spatial tuning curves found in certain animals such as the barn owl, few previous studies in mammals have managed to identify neurons representing specific locations of space (Recanzone et al., 2011). Recent neurophysiological studies in the cat, however, suggest that the specificity of primary AC neurons is sharpened by attention, that is, when the animal is engaged in an active spatial auditory task (Lee et al., 2011). Indices of similar effects in humans were observed in our MEG/fMRI study (Ahveninen et al., 2006), suggesting that the stimulus-specificity of neuronal adaptation to location changes is enhanced in posterior non-primary AC areas, while recent fMRI evidence suggests stronger activations to spatial vs. pitch features in the same stimuli in posterior non-primary AC areas (Krumbholz et al., 2007) (however, see also Altmann et al., 2008, and Rinne et al., 2012). Very recent evidence suggests that the spatial selectivity of human AC neurons is also modulated by visual attention (Salminen et al., 2013). However, the vast majority of previous selective auditory spatial attention studies have used dichotic paradigms, which have been highly useful for investigating many fundamental aspects of selective attention, but are perhaps suboptimal for determining how the processing of different 3D features, per se, is top-down modulated. Further studies are therefore needed to examine how attention modulates auditory spatial processing.

3.6. Confounding and conflicting neuroimaging findings

Taken together, a large proportion of existing studies support the notion that posterior aspects of non-primary AC are particularly sensitive to spatial information. However, the presumed spatial processing areas PT and pSTG have been reported to respond to a great variety of other kinds of sounds, including phonemes (Griffiths et al., 2002; Zatorre et al., 1992), harmonic complexes and frequency modulated sounds (Hall et al., 2002), amplitude modulated sounds (Giraud et al., 2000), sounds involving “spectral motion” (Thivard et al., 2000), environmental sounds (Engelien et al., 1995), or pitch (Schadwinkel et al., 2010a). These conflicting findings have led to alternative hypotheses, suggesting that, instead of purely spatial processing, PT and adjacent AC areas constitute a more general spectrotemporal processing center (Belin et al., 2000; Griffiths et al., 2002). A recent fMRI study (Smith et al., 2010), which compared the number of talkers occurring in one or several locations and either moving around or being stationary at one location, suggested that PT is not a spatial processing area, but merely sensitive to “source separation” of auditory objects.

It is, however, noteworthy that, in contrast to visual objects, sounds are dynamic signals that contain information about the activity that generated them (Scott, 2005). Many biologically relevant “action sounds” may activate vivid multisensory representations that involve a lot of motion. It is also possible that sounds very relevant to our behavior and functioning lead to an automatic activation in the spatial processing stream: visual motion has been shown to activate posterior non-primary AC areas (Howard et al., 1996). Evidence supporting this speculation can also be found in fMRI studies, which have shown that PT responds significantly more strongly to speech sounds that are presented from an “outside the head” vs. “inside the head” location (Hunter et al., 2003).

One answer to some of the inconsistencies in imaging evidence may be found in the connectivity of the posterior aspect of the superior temporal lobe. In addition to auditory inputs that arrive both from auditory subcortical structures and from primary AC, there are inputs from the adjacent visual motion areas as well as inputs from the speech motor areas (Rauschecker, 2011; Rauschecker et al., 2009). Posterior aspects of PT near the boundary to the parietal lobe are activated during covert speech production (Hickok et al., 2003). This area could, thus, be speculated to be involved in an audiomotor “how” pathway, analogously to the modification of the visual dual pathway model (Goodale et al., 1992) derived from the known role of the dorsal pathway in visuomotor functions and visuospatial construction (Fogassi et al., 2005; Leiguarda, 2003). Thus, for example, in the case of learning of speech perception during early development, one might hypothesize that the visual (spatial and movement) information from lip-reading would be merged both with the information/knowledge of the speech motor schemes, via a mirroring type of process, and with the associated speech sounds (Jääskeläinen, 2010). In terms of spatial processing, it is crucial for the learning infant to be able to co-localize his/her own articulations with the speech sounds that he/she generates, and, on the other hand, with the lip movements of others producing the same sounds. All this requires spatial overlay of the auditory inputs with visual and motor cues and coordinate transformations, which might again be supported by the close proximity of the putative visual spatial processing stream in parietal cortex and the auditory one in posterior temporal (or temporo-parietal) cortex.

3.7. How to improve non-invasive measurements of human auditory spatial processing?

Many inconsistencies concerning the specific roles of different AC areas could be addressed by improving the resolution and accuracy of functional imaging estimates. Given the relatively small size of human AC subregions (Woods et al., 2009a; Woods et al., 2009b), one might need smaller voxels, which is feasible at ultra-high magnetic fields (De Martino et al., 2012), and more precise anatomical coregistration approaches than the volume-based methods that have been used in the majority of previous audiospatial fMRI studies. At the same time, anatomical definitions of areas such as PT may vary across different atlases, such as those used by Fischl et al. (2004) vs. Westbury et al. (1999). With sufficiently accurate methods, more pronounced differences might emerge within posterior non-primary ACs, for example, among more anterolateral areas activated by pitch cues (Hall et al., 2009) and frequency modulations (Hart et al., 2004), slightly more caudomedial spatial activations (Hart et al., 2004), and the most posterior aspects of PT, bordering parietal regions, demonstrating auditory-motor response properties during covert speech production (Hickok et al., 2003). This speculation is supported by the meta-analytical comparisons in Figure 3, which show clear anterior-posterior differences between spatial and non-spatial effects, even though many of the original findings have been interpreted as originating in the same “planum temporale” regions (e.g., the so-called “spectral motion”). Promising new results have been found by using approaches that combine different data analysis methods and imaging techniques. For example, a recent study used a combination of fMRI and diffusion-tensor imaging (DTI) to separate subregions of PT that were selectively associated with audiospatial perception and sensorimotor functions (Isenberg et al., 2012).
In our own studies, we have strongly advocated the combination of temporally accurate MEG/EEG and spatially precise fMRI techniques, to help discriminate between activations originating in anatomically very close-by areas (Ahveninen et al., 2011; Ahveninen et al., 2006; Jääskeläinen et al., 2004). A combination of these techniques also allows for analyses in the frequency domain (e.g., Ahveninen et al., 2012; Ahveninen et al., 2013), which might help non-invasively discriminate and categorize neuronal processes despite the fact that pooled activation estimates, such as those provided by fMRI or PET alone, reveal no differences in the overall activation pattern. Finally, methods such as transcranial magnetic stimulation might also offer possibilities for causally testing the role of the posterior AC in auditory spatial processing.

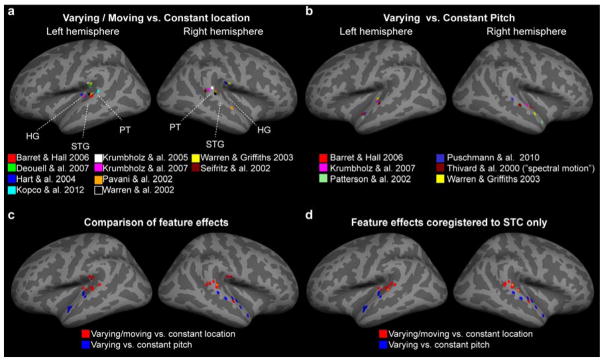

Figure 3.

Adaptation fMRI meta-analysis of release from adaptation due to spatial or pitch/spectral variation. From each study, the reported voxels showing largest signals during varying location (a; motion vs. stationary, varying location vs. stationary) or pitch (b; varying vs. constant pitch) have been coregistered to the nearest cortical vertex in the Freesurfer standard brain representation. In cases where separate subregions were reported, such as PT vs. HG voxels (Hart et al., 2004; Warren et al., 2003), the AC voxel showing the largest signal was selected. (c) The comparisons of feature effects suggest quite a clear division between the putative posterior “where” pathway (observations labeled red; orange in the case of two overlapping voxels) and the anterior areas demonstrating release from adaptation due to pitch-related variation (blue; possibly with further subregions). (d) Finally, an additional analysis where the peak voxels have been coregistered to the closest superior temporal cortex (STC) vertex is presented, to account for possible misalignment along the vertical axis across the studies. Note that Thivard and colleagues (2000) did not investigate pitch, per se. The results are shown due to their importance for influential alternative hypotheses related to “spectral motion” and PT (Belin et al., 2000; Griffiths et al., 2002). Altogether, this surface-based “adaptation-fMRI meta-analysis” demonstrates the importance of consistent anatomical frameworks in evaluating the distinct AC subareas.
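The coregistration step described in the caption of Figure 3 amounts to a nearest-neighbor search from each reported peak coordinate to the vertices of a common cortical surface. The Python sketch below illustrates that idea only; it is not the authors' analysis code and does not use the Freesurfer software. The function name `nearest_vertex`, the `allowed` parameter, and all coordinates are hypothetical and chosen for illustration.

```python
import math


def nearest_vertex(peak_xyz, vertices_xyz, allowed=None):
    """Return (index, distance) of the surface vertex closest to a peak.

    peak_xyz     -- (x, y, z) coordinate of a reported peak voxel
    vertices_xyz -- list of (x, y, z) vertex coordinates in the same space
    allowed      -- optional list of vertex indices to which the search is
                    restricted (for example, a region-of-interest label)
    """
    candidates = range(len(vertices_xyz)) if allowed is None else allowed
    best = min(candidates, key=lambda i: math.dist(peak_xyz, vertices_xyz[i]))
    return best, math.dist(peak_xyz, vertices_xyz[best])


# Made-up surface vertices and a made-up peak coordinate (millimeters).
vertices = [
    (-60.0, -30.0, 10.0),
    (-55.0, -20.0, 5.0),
    (-50.0, -10.0, 0.0),
    (-58.0, -32.0, 20.0),
]
peak = (-57.0, -31.0, 18.0)

# Unrestricted search over the whole surface.
index_all, distance_all = nearest_vertex(peak, vertices)

# Search restricted to a hypothetical region label (vertices 0 to 2), in the
# spirit of panel (d), where peaks are assigned to the closest vertex within
# a predefined region.
index_roi, distance_roi = nearest_vertex(peak, vertices, allowed=[0, 1, 2])

print(index_all, round(distance_all, 2))
print(index_roi, round(distance_roi, 2))
```

Restricting the candidate set changes which vertex a peak is assigned to, which is one reason why a shared anatomical framework matters when peaks from different studies are compared.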

4. Concluding remarks

Taken together, a profusion of neuroimaging studies support the notion that spatial acoustic features activate the posterior aspects of non-primary AC, including the posterior STG and PT. These areas are, reportedly, activated by a number of other features as well, such as pitch and phonetic stimuli. However, studies using factorial designs and/or adaptation paradigms, specifically contrasting spatial and identity-related features, suggest that sound-location changes or motion are stronger activators of the most posterior aspects of AC than identity-related sound features (see, e.g., Figure 3). Based on non-invasive measurements in humans, one might also speculate that posterior higher-order AC areas contain neurons sensitive to combinations of different auditory spatial cues, such as ITD, ILD, and spectral cues. However, further studies are needed to determine how exactly acoustic space is represented at the different stages of the human auditory pathway, as few studies published so far have provided evidence for a topographic organization of spatial locations in AC, analogous to, for example, cochleotopic representations. In addition to animal models, human studies are of critical importance in this quest: as shown by the rare examples of single-cell recordings conducted in humans (Bitterman et al., 2008), the tuning properties of human AC neurons may in some cases be fundamentally different from those in other species. Single-cell studies are, naturally, difficult to accomplish in healthy humans. Therefore, future studies using tools that combine information from different imaging modalities, and provide a better spatiotemporal resolution than that allowed by the routine approaches utilized to date, could help elucidate the neuronal mechanisms of spatial processing in the human auditory system.

Highlights.

Behavioral and neuroimaging studies/theories on sound localization are discussed.

Posterior non-primary auditory cortices (AC) are sensitive to spatial sounds.

Differential neuronal adaptation may reveal subregions of posterior non-primary AC.

High-order AC neuron populations may process combinations of acoustic spatial cues.

Multimodal imaging/psychophysics on spatial hearing in complex environments needed.

Acknowledgments

We thank Dr. Beata Tomoriova for her comments on the initial versions of the manuscript. This research was supported by the National Institutes of Health (NIH) grants R21DC010060, R01MH083744, R01HD040712, R01NS037462, and P41EB015896, as well as by the Academy of Finland grants 127624 and 138145, the European Community’s 7FP/2007-13 Grant PIRSESGA-2009-247543, and the Slovak Scientific Grant Agency Grant VEGA 1/0492/12. The content is solely the responsibility of the authors and does not necessarily represent the official views of the funding agencies.

Abbreviations

- 3D: Three dimensional

- AC: Auditory cortex

- AM: Amplitude modulation

- BAEP: Brainstem auditory evoked potentials

- BRIR: Binaural room impulse responses

- DRR: Direct-to-reverberant ratio

- DTI: Diffusion-tensor imaging

- EEG: Electroencephalography

- ERP: Event-related potential

- FM: Frequency modulation

- fMRI: Functional magnetic resonance imaging

- HRTF: Head-related transfer function

- ILD: Interaural level difference

- IPD: Interaural phase difference

- ITD: Interaural time difference

- MAEP: Middle-latency auditory evoked potentials

- MEG: Magnetoencephalography

- MRI: Magnetic resonance imaging

- PET: Positron emission tomography

- PT: Planum temporale

- SSR: Steady-state response

- STG: Superior temporal gyrus

References

- Adler FH. Physiology Of The Eye. C. V. Mosby; St. Louis, MO: 1959. [Google Scholar]

- Adriani M, Maeder P, Meuli R, Thiran AB, Frischknecht R, Villemure JG, Mayer J, Annoni JM, Bogousslavsky J, Fornari E, Thiran JP, Clarke S. Sound recognition and localization in man: specialized cortical networks and effects of acute circumscribed lesions. Exp Brain Res. 2003;153:591–604. doi: 10.1007/s00221-003-1616-0. [DOI] [PubMed] [Google Scholar]

- Ahveninen J, Huang S, Belliveau JW, Chang WT, Hämäläinen M. Dynamic oscillatory processes governing cued orienting and allocation of auditory attention. J Cogn Neurosci. 2013 doi: 10.1162/jocn_a_00452. In press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ahveninen J, Jääskeläinen IP, Belliveau JW, Hämäläinen M, Lin FH, Raij T. Dissociable Influences of Auditory Object vs. Spatial Attention on Visual System Oscillatory Activity. PLoS One. 2012;7:e38511. doi: 10.1371/journal.pone.0038511. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ahveninen J, Hämäläinen M, Jääskeläinen IP, Ahlfors SP, Huang S, Lin FH, Raij T, Sams M, Vasios CE, Belliveau JW. Attention-driven auditory cortex short-term plasticity helps segregate relevant sounds from noise. Proc Natl Acad Sci U S A. 2011;108:4182–7. doi: 10.1073/pnas.1016134108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ahveninen J, Jääskeläinen IP, Raij T, Bonmassar G, Devore S, Hämäläinen M, Levänen S, Lin FH, Sams M, Shinn-Cunningham BG, Witzel T, Belliveau JW. Task-modulated “what” and “where” pathways in human auditory cortex. Proc Natl Acad Sci U S A. 2006;103:14608–13. doi: 10.1073/pnas.0510480103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Alain C, Arnott SR, Hevenor S, Graham S, Grady CL. “What” and “where” in the human auditory system. Proc Natl Acad Sci U S A. 2001;98:12301–6. doi: 10.1073/pnas.211209098. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Alink A, Euler F, Kriegeskorte N, Singer W, Kohler A. Auditory motion direction encoding in auditory cortex and high-level visual cortex. Hum Brain Mapp. 2012;33:969–78. doi: 10.1002/hbm.21263. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Altman JA, Vaitulevich SP, Shestopalova LB, Varfolomeev AL. Mismatch negativity evoked by stationary and moving auditory images of different azimuthal positions. Neurosci Lett. 2005;384:330–5. doi: 10.1016/j.neulet.2005.05.002. [DOI] [PubMed] [Google Scholar]

- Altman JA, Vaitulevich SP, Shestopalova LB, Petropavlovskaia EA. How does mismatch negativity reflect auditory motion? Hear Res. 2010;268:194–201. doi: 10.1016/j.heares.2010.06.001. [DOI] [PubMed] [Google Scholar]

- Altmann CF, Wilczek E, Kaiser J. Processing of auditory location changes after horizontal head rotation. J Neurosci. 2009;29:13074–8. doi: 10.1523/JNEUROSCI.1708-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Altmann CF, Getzmann S, Lewald J. Allocentric or craniocentric representation of acoustic space: an electrotomography study using mismatch negativity. PLoS One. 2012;7:e41872. doi: 10.1371/journal.pone.0041872. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Altmann CF, Bledowski C, Wibral M, Kaiser J. Processing of location and pattern changes of natural sounds in the human auditory cortex. Neuroimage. 2007;35:1192–200. doi: 10.1016/j.neuroimage.2007.01.007. [DOI] [PubMed] [Google Scholar]

- Altmann CF, Henning M, Doring MK, Kaiser J. Effects of feature-selective attention on auditory pattern and location processing. Neuroimage. 2008;41:69–79. doi: 10.1016/j.neuroimage.2008.02.013. [DOI] [PubMed] [Google Scholar]

- Arnott SR, Binns MA, Grady CL, Alain C. Assessing the auditory dual-pathway model in humans. Neuroimage. 2004;22:401–8. doi: 10.1016/j.neuroimage.2004.01.014. [DOI] [PubMed] [Google Scholar]

- Barrett DJ, Hall DA. Response preferences for “what” and “where” in human non-primary auditory cortex. Neuroimage. 2006;32:968–77. doi: 10.1016/j.neuroimage.2006.03.050. [DOI] [PubMed] [Google Scholar]

- Baumgart F, Gaschler-Markefski B, Woldorff MG, Heinze HJ, Scheich H. A movement-sensitive area in auditory cortex. Nature. 1999;400:724–6. doi: 10.1038/23390. [DOI] [PubMed] [Google Scholar]

- Belin P, Zatorre RJ. ‘What’, ‘where’ and ‘how’ in auditory cortex. Nat Neurosci. 2000;3:965–6. doi: 10.1038/79890. [DOI] [PubMed] [Google Scholar]

- Bernstein LR, van de Par S, Trahiotis C. The normalized interaural correlation: accounting for NoS pi thresholds obtained with Gaussian and “low-noise” masking noise. J Acoust Soc Am. 1999;106:870–6. doi: 10.1121/1.428051. [DOI] [PubMed] [Google Scholar]

- Best V, Ozmeral EJ, Kopco N, Shinn-Cunningham BG. Object continuity enhances selective auditory attention. Proceedings of the National Academy of Sciences of the USA. 2008;105:13174–13178. doi: 10.1073/pnas.0803718105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bitterman Y, Mukamel R, Malach R, Fried I, Nelken I. Ultra-fine frequency tuning revealed in single neurons of human auditory cortex. Nature. 2008;451:197–201. doi: 10.1038/nature06476. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blauert J. The psychophysics of human sound localization. MIT Press; Cambridge, MA: 1997. Spatial hearing. [Google Scholar]

- Bregman AS. Auditory Scene Analysis: The Perceptual Organization of Sound. MIT Press; Cambridge, MA: 1990. [Google Scholar]

- Bremmer F, Schlack A, Shah NJ, Zafiris O, Kubischik M, Hoffmann K, Zilles K, Fink GR. Polymodal motion processing in posterior parietal and premotor cortex: a human fMRI study strongly implies equivalencies between humans and monkeys. Neuron. 2001;29:287–96. doi: 10.1016/s0896-6273(01)00198-2. [DOI] [PubMed] [Google Scholar]

- Bronkhorst AW, Houtgast T. Auditory distance perception in rooms. Nature. 1999;397:517–20. doi: 10.1038/17374. [DOI] [PubMed] [Google Scholar]

- Brunetti M, Belardinelli P, Caulo M, Del Gratta C, Della Penna S, Ferretti A, Lucci G, Moretti A, Pizzella V, Tartaro A, Torquati K, Olivetti Belardinelli M, Romani GL. Human brain activation during passive listening to sounds from different locations: an fMRI and MEG study. Hum Brain Mapp. 2005;26:251–61. doi: 10.1002/hbm.20164. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brungart DS. Auditory localization of nearby sources III: Stimulus effects. J Acoust Soc Am. 1999;106:3589–3602. doi: 10.1121/1.428212. [DOI] [PubMed] [Google Scholar]

- Brungart DS, Scott KR. The effects of production and presentation level on the auditory distance perception of speech. J Acoust Soc Am. 2001;110:425–440. doi: 10.1121/1.1379730. [DOI] [PubMed] [Google Scholar]

- Brungart DS, Simpson BD. The effects of spatial separation in distance on the informational and energetic masking of a nearby speech signal. J Acoust Soc Am. 2002;112:664–676. doi: 10.1121/1.1490592. [DOI] [PubMed] [Google Scholar]

- Brungart DS, Simpson BD. Cocktail party listening in a dynamic multitalker environment. Perception & Psychophysics. 2007;69:79–91. doi: 10.3758/bf03194455. [DOI] [PubMed] [Google Scholar]

- Bruns P, Liebnau R, Roder B. Cross-Modal Training Induces Changes in Spatial Representations Early in the Auditory Processing Pathway. Psych Sci. 2011;22:1120–1126. doi: 10.1177/0956797611416254. [DOI] [PubMed] [Google Scholar]

- Bushara KO, Weeks RA, Ishii K, Catalan MJ, Tian B, Rauschecker JP, Hallett M. Modality-specific frontal and parietal areas for auditory and visual spatial localization in humans. Nat Neurosci. 1999;2:759–66. doi: 10.1038/11239.

- Butler RA. The influence of spatial separation of sound sources on the auditory evoked response. Neuropsychologia. 1972;10:219–25. doi: 10.1016/0028-3932(72)90063-2.

- Callan A, Callan DE, Ando H. Neural correlates of sound externalization. Neuroimage. 2012;66C:22–27. doi: 10.1016/j.neuroimage.2012.10.057.

- Carlile S. Virtual Auditory Space: Generation and Applications. RG Landes; New York: 1996.

- Clarke S, Bellmann A, Meuli RA, Assal G, Steck AJ. Auditory agnosia and auditory spatial deficits following left hemispheric lesions: evidence for distinct processing pathways. Neuropsychologia. 2000;38:797–807. doi: 10.1016/s0028-3932(99)00141-4.

- Clarke S, Bellmann Thiran A, Maeder P, Adriani M, Vernet O, Regli L, Cuisenaire O, Thiran JP. What and where in human audition: selective deficits following focal hemispheric lesions. Exp Brain Res. 2002;147:8–15. doi: 10.1007/s00221-002-1203-9.

- Colburn HS, Isabelle SK, Tollin DJ. Modeling binaural detection performance for individual masker waveforms. In: Gilkey R, Anderson T, editors. Binaural and Spatial Hearing in Real and Virtual Environments. Lawrence Erlbaum Associates; Mahwah, NJ: 1997. pp. 533–556.

- De Martino F, Schmitter S, Moerel M, Tian J, Ugurbil K, Formisano E, Yacoub E, van de Moortele PF. Spin echo functional MRI in bilateral auditory cortices at 7 T: an application of B(1) shimming. Neuroimage. 2012;63:1313–20. doi: 10.1016/j.neuroimage.2012.08.029.

- De Santis L, Clarke S, Murray MM. Automatic and Intrinsic Auditory “What” and “Where” Processing in Humans Revealed by Electrical Neuroimaging. Cereb Cortex. 2006. doi: 10.1093/cercor/bhj119.

- Deouell LY, Heller AS, Malach R, D’Esposito M, Knight RT. Cerebral responses to change in spatial location of unattended sounds. Neuron. 2007;55:985–96. doi: 10.1016/j.neuron.2007.08.019.

- Drullman R, Bronkhorst AW. Multichannel speech intelligibility and talker recognition using monaural, binaural, and three-dimensional auditory presentation. J Acoust Soc Am. 2000;107:2224–2235. doi: 10.1121/1.428503.

- Duda RO. Elevation dependence of the interaural transfer function. In: Gilkey RH, Anderson TB, editors. Binaural and Spatial Hearing in Real and Virtual Environments. Lawrence Erlbaum Associates; Mahwah, NJ: 1997. pp. 49–75.

- Engelien A, Silbersweig D, Stern E, Huber W, Doring W, Frith C, Frackowiak RS. The functional anatomy of recovery from auditory agnosia. A PET study of sound categorization in a neurological patient and normal controls. Brain. 1995;118(Pt 6):1395–409. doi: 10.1093/brain/118.6.1395.

- Fischl B, van der Kouwe A, Destrieux C, Halgren E, Segonne F, Salat DH, Busa E, Seidman LJ, Goldstein J, Kennedy D, Caviness V, Makris N, Rosen B, Dale AM. Automatically parcellating the human cerebral cortex. Cereb Cortex. 2004;14:11–22. doi: 10.1093/cercor/bhg087.

- Fogassi L, Luppino G. Motor functions of the parietal lobe. Curr Opin Neurobiol. 2005;15:626–31. doi: 10.1016/j.conb.2005.10.015.

- Formisano E, Kim DS, Di Salle F, van de Moortele PF, Ugurbil K, Goebel R. Mirror-symmetric tonotopic maps in human primary auditory cortex. Neuron. 2003;40:859–69. doi: 10.1016/s0896-6273(03)00669-x.

- Getzmann S, Lewald J. Shared cortical systems for processing of horizontal and vertical sound motion. J Neurophysiol. 2010;103:1896–904. doi: 10.1152/jn.00333.2009.

- Getzmann S, Lewald J. The effect of spatial adaptation on auditory motion processing. Hear Res. 2011;272:21–9. doi: 10.1016/j.heares.2010.11.005.

- Gilkey R, Anderson T. Binaural and Spatial Hearing in Real and Virtual Environments. Lawrence Erlbaum Associates; Mahwah, NJ: 1997.

- Giraud AL, Lorenzi C, Ashburner J, Wable J, Johnsrude I, Frackowiak R, Kleinschmidt A. Representation of the temporal envelope of sounds in the human brain. J Neurophysiol. 2000;84:1588–98. doi: 10.1152/jn.2000.84.3.1588.

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15:20–5. doi: 10.1016/0166-2236(92)90344-8.

- Green DM. Profile Analysis: Auditory Intensity Discrimination. Oxford University Press; 1988.

- Griffiths TD, Warren JD. The planum temporale as a computational hub. Trends Neurosci. 2002;25:348–53. doi: 10.1016/s0166-2236(02)02191-4.

- Griffiths TD, Rees A, Witton C, Shakir RA, Henning GB, Green GG. Evidence for a sound movement area in the human cerebral cortex. Nature. 1996;383:425–7. doi: 10.1038/383425a0.

- Griffiths TD, Rees G, Rees A, Green GG, Witton C, Rowe D, Buchel C, Turner R, Frackowiak RS. Right parietal cortex is involved in the perception of sound movement in humans. Nat Neurosci. 1998;1:74–9. doi: 10.1038/276.

- Grill-Spector K, Malach R. fMR-adaptation: a tool for studying the functional properties of human cortical neurons. Acta Psychol (Amst). 2001;107:293–321. doi: 10.1016/s0001-6918(01)00019-1.

- Grothe B, Pecka M, McAlpine D. Mechanisms of sound localization in mammals. Physiol Rev. 2010;90:983–1012. doi: 10.1152/physrev.00026.2009.

- Hall DA, Moore DR. Auditory neuroscience: the salience of looming sounds. Curr Biol. 2003;13:R91–3. doi: 10.1016/s0960-9822(03)00034-4.

- Hall DA, Plack CJ. Pitch processing sites in the human auditory brain. Cereb Cortex. 2009;19:576–85. doi: 10.1093/cercor/bhn108.

- Hall DA, Johnsrude IS, Haggard MP, Palmer AR, Akeroyd MA, Summerfield AQ. Spectral and temporal processing in human auditory cortex. Cereb Cortex. 2002;12:140–9. doi: 10.1093/cercor/12.2.140.

- Halliday R, Callaway E. Time shift evoked potentials (TSEPs): method and basic results. Electroencephalogr Clin Neurophysiol. 1978;45:118–21. doi: 10.1016/0013-4694(78)90350-4.

- Hart HC, Palmer AR, Hall DA. Different areas of human non-primary auditory cortex are activated by sounds with spatial and nonspatial properties. Hum Brain Mapp. 2004;21:178–90. doi: 10.1002/hbm.10156.

- Hartmann WM. Localization of sound in rooms. J Acoust Soc Am. 1983;74:1380–91. doi: 10.1121/1.390163.

- Hawley ML, Litovsky RY, Colburn HS. Speech intelligibility and localization in a multi-source environment. J Acoust Soc Am. 1999;105:3436–3448. doi: 10.1121/1.424670.

- Heffner H, Masterton B. Contribution of auditory cortex to sound localization in the monkey (Macaca mulatta). J Neurophysiol. 1975;38:1340–58. doi: 10.1152/jn.1975.38.6.1340.

- Heffner HE, Heffner RS. Effect of bilateral auditory cortex lesions on sound localization in Japanese macaques. J Neurophysiol. 1990;64:915–31. doi: 10.1152/jn.1990.64.3.915.

- Helmholtz H. Ueber einige Gesetze der Vertheilung elektrischer Ströme in körperlichen Leitern, mit Anwendung auf die thierisch-elektrischen Versuche. Ann Phys Chem. 1853;89:211–233, 353–377.

- Hickok G, Buchsbaum B, Humphries C, Muftuler T. Auditory-motor interaction revealed by fMRI: speech, music, and working memory in area Spt. J Cogn Neurosci. 2003;15:673–82. doi: 10.1162/089892903322307393.

- Hofman PM, Van Opstal AJ. Spectro-temporal factors in two-dimensional human sound localization. J Acoust Soc Am. 1998;103:2634–48. doi: 10.1121/1.422784.

- Howard RJ, Brammer M, Wright I, Woodruff PW, Bullmore ET, Zeki S. A direct demonstration of functional specialization within motion-related visual and auditory cortex of the human brain. Curr Biol. 1996;6:1015–9. doi: 10.1016/s0960-9822(02)00646-2.

- Huang S, Belliveau JW, Tengshe C, Ahveninen J. Brain networks of novelty-driven involuntary and cued voluntary auditory attention shifting. PLoS One. 2012;7:e44062. doi: 10.1371/journal.pone.0044062.

- Hunter MD, Griffiths TD, Farrow TF, Zheng Y, Wilkinson ID, Hegde N, Woods W, Spence SA, Woodruff PW. A neural basis for the perception of voices in external auditory space. Brain. 2003;126:161–9. doi: 10.1093/brain/awg015.

- Irving S, Moore DR. Training sound localization in normal hearing listeners with and without a unilateral ear plug. Hear Res. 2011;280:100–8. doi: 10.1016/j.heares.2011.04.020.

- Isenberg AL, Vaden KI, Jr, Saberi K, Muftuler LT, Hickok G. Functionally distinct regions for spatial processing and sensory motor integration in the planum temporale. Hum Brain Mapp. 2012;33:2453–63. doi: 10.1002/hbm.21373.

- Jääskeläinen IP. The role of speech production system in audiovisual speech perception. Open Neuroimag J. 2010;4:30–6. doi: 10.2174/1874440001004020030.

- Jääskeläinen IP, Ahveninen J, Belliveau JW, Raij T, Sams M. Short-term plasticity in auditory cognition. Trends Neurosci. 2007;30:653–61. doi: 10.1016/j.tins.2007.09.003.

- Jääskeläinen IP, Ahveninen J, Andermann ML, Belliveau JW, Raij T, Sams M. Short-term plasticity as a neural mechanism supporting memory and attentional functions. Brain Res. 2011;1422:66–81. doi: 10.1016/j.brainres.2011.09.031.

- Jääskeläinen IP, Ahveninen J, Bonmassar G, Dale AM, Ilmoniemi RJ, Levänen S, Lin FH, May P, Melcher J, Stufflebeam S, Tiitinen H, Belliveau JW. Human posterior auditory cortex gates novel sounds to consciousness. Proc Natl Acad Sci U S A. 2004;101:6809–14. doi: 10.1073/pnas.0303760101.

- Jeffress LA. A place theory of sound localization. J Comp Physiol Psychol. 1948;41:35–9. doi: 10.1037/h0061495.

- Jenkins WM, Masterton RB. Sound localization: effects of unilateral lesions in central auditory system. J Neurophysiol. 1982;47:987–1016. doi: 10.1152/jn.1982.47.6.987.

- Johnson BW, Hautus MJ. Processing of binaural spatial information in human auditory cortex: neuromagnetic responses to interaural timing and level differences. Neuropsychologia. 2010;48:2610–9. doi: 10.1016/j.neuropsychologia.2010.05.008.

- Junius D, Riedel H, Kollmeier B. The influence of externalization and spatial cues on the generation of auditory brainstem responses and middle latency responses. Hear Res. 2007;225:91–104. doi: 10.1016/j.heares.2006.12.008.

- Kaas JH, Hackett TA. Subdivisions of auditory cortex and processing streams in primates. Proc Natl Acad Sci U S A. 2000;97:11793–9. doi: 10.1073/pnas.97.22.11793.

- Kaiser J, Lutzenberger W. Parietal gamma-band activity during auditory spatial precueing of motor responses. Neuroreport. 2001;12:3479–82. doi: 10.1097/00001756-200111160-00021.

- Kaiser J, Lutzenberger W, Preissl H, Ackermann H, Birbaumer N. Right-hemisphere dominance for the processing of sound-source lateralization. J Neurosci. 2000;20:6631–9. doi: 10.1523/JNEUROSCI.20-17-06631.2000.

- Kashino M, Nishida S. Adaptation in the processing of interaural time differences revealed by the auditory localization aftereffect. J Acoust Soc Am. 1998;103:3597–3604. doi: 10.1121/1.423064.

- Knudsen EI. Mechanisms of sound localization in the barn owl (Tyto alba). J Comp Physiol. 1979;133:13–21.

- Kolarik AJ, Cirstea S, Pardhan S. Evidence for enhanced discrimination of virtual auditory distance among blind listeners using level and direct-to-reverberant cues. Exp Brain Res. 2013;224:623–633. doi: 10.1007/s00221-012-3340-0.