Abstract

This paper deals with the estimation of a high-dimensional covariance with a conditional sparsity structure and fast-diverging eigenvalues. By assuming sparse error covariance matrix in an approximate factor model, we allow for the presence of some cross-sectional correlation even after taking out common but unobservable factors. We introduce the Principal Orthogonal complEment Thresholding (POET) method to explore such an approximate factor structure with sparsity. The POET estimator includes the sample covariance matrix, the factor-based covariance matrix (Fan, Fan, and Lv, 2008), the thresholding estimator (Bickel and Levina, 2008) and the adaptive thresholding estimator (Cai and Liu, 2011) as specific examples. We provide mathematical insights when the factor analysis is approximately the same as the principal component analysis for high-dimensional data. The rates of convergence of the sparse residual covariance matrix and the conditional sparse covariance matrix are studied under various norms. It is shown that the impact of estimating the unknown factors vanishes as the dimensionality increases. The uniform rates of convergence for the unobserved factors and their factor loadings are derived. The asymptotic results are also verified by extensive simulation studies. Finally, a real data application on portfolio allocation is presented.

Keywords: High-dimensionality, approximate factor model, unknown factors, principal components, sparse matrix, low-rank matrix, thresholding, cross-sectional correlation, diverging eigenvalues

1 Introduction

Information and technology make large data sets widely available for scientific discovery. Much statistical analysis of such high-dimensional data involves the estimation of a covariance matrix or its inverse (the precision matrix). Examples include portfolio management and risk assessment (Fan, Fan and Lv, 2008), high-dimensional classification such as Fisher discriminant (Hastie, Tibshirani and Friedman, 2009), graphic models (Meinshausen and Bühlmann, 2006), statistical inference such as controlling false discoveries in multiple testing (Leek and Storey, 2008; Efron, 2010), finding quantitative trait loci based on longitudinal data (Yap, Fan, and Wu, 2009; Xiong et al. 2011), and testing the capital asset pricing model (Sentana, 2009), among others. See Section 5 for some of those applications. Yet, the dimensionality is often either comparable to the sample size or even larger. In such cases, the sample covariance is known to have poor performance (Johnstone, 2001), and some regularization is needed.

Realizing the importance of estimating large covariance matrices and the challenges brought by the high dimensionality, in recent years researchers have proposed various regularization techniques to consistently estimate Σ. One of the key assumptions is that the covariance matrix is sparse, namely, many entries are zero or nearly so (Bickel and Levina, 2008, Rothman et al, 2009, Lam and Fan 2009, Cai and Zhou, 2010, Cai and Liu, 2011). In many applications, however, the sparsity assumption directly on Σ is not appropriate. For example, financial returns depend on the equity market risks, housing prices depend on the economic health, gene expressions can be stimulated by cytokines, among others. Due to the presence of common factors, it is unrealistic to assume that many outcomes are uncorrelated. An alternative method is to assume a factor model structure, as in Fan, Fan and Lv (2008). However, they restrict themselves to the strict factor models with known factors.

A natural extension is the conditional sparsity. Given the common factors, the outcomes are weakly correlated. In order to do so, we consider an approximate factor model, which has been frequently used in economic and financial studies (Chamberlain and Rothschild, 1983; Fama and French 1993; Bai and Ng, 2002, etc):

| (1.1) |

Here yit is the observed response for the ith (i = 1, …, p) individual at time t = 1, …, T; bi is a vector of factor loadings; ft is a K × 1 vector of common factors, and uit is the error term, usually called idiosyncratic component, uncorrelated with ft. Both p and T diverge to infinity, while K is assumed fixed throughout the paper, and p is possibly much larger than T.

We emphasize that in model (1.1), only yit is observable. It is intuitively clear that the unknown common factors can only be inferred reliably when there are sufficiently many cases, that is, p → ∞. In a data-rich environment, p can diverge at a rate faster than T. The factor model (1.1) can be put in a matrix form as

| (1.2) |

where yt = (y1t, …, ypt)′, B = (b1, …, bp)′ and ut = (u1t, …, upt)′. We are interested in Σ, the p × p covariance matrix of yt, and its inverse, which are assumed to be time-invariant. Under model (1.1), Σ is given by

| (1.3) |

where Σu = (σu,ij)p×p is the covariance matrix of ut. The literature on approximate factor models typically assumes that the first K eigenvalues of Bcov(ft)B′ diverge at rate O(p), whereas all the eigenvalues of Σu are bounded as p → ∞. This assumption holds easily when the factors are pervasive in the sense that a non-negligible fraction of factor loadings should be non-vanishing. The decomposition (1.3) is then asymptotically identified as p → ∞. In addition to it, in this paper we assume that Σu is approximately sparse as in Bickel and Levina (2008) and Rothman et al. (2009): for some q ∈ [0, 1),

does not grow too fast as p → ∞. In particular, this includes the exact sparsity assumption (q = 0) under which mp = maxi≤p Σj≤p I(σu,ij≠0), the maximum number of nonzero elements in each row.

The conditional sparsity structure of (1.2) was explored by Fan, Liao and Mincheva (2011) in estimating the covariance matrix, when the factors {ft} are observable. This allows them to use regression analysis to estimate . This paper deals with the situation in which the factors are unobservable and have to be inferred. Our approach is simple, optimization-free and it uses the data only through the sample covariance matrix. Run the singular value decomposition on the sample covariance matrix Σ̂sam of yt, keep the covariance matrix formed by the first K principal components, and apply the thresholding procedure to the remaining covariance matrix. This results in a Principal Orthogonal complEment Thresholding (POET) estimator. When the number of common factors K is unknown, it can be estimated from the data. See Section 2 for additional details. We will investigate various properties of POET under the assumption that the data are serially dependent, which includes independent observations as a specific example. The rate of convergence under various norms for both estimated Σ and Σu and their precision (inverse) matrices will be derived. We show that the effect of estimating the unknown factors on the rate of convergence vanishes when p log p ≫ T, and in particular, the rate of convergence for Σu achieves the optimal rate in Cai and Zhou (2012).

This paper focuses on the high-dimensional static factor model (1.2), which is innately related to the principal component analysis (PCA), as clarified in Section 2. This feature makes it different from the classical factor model with fixed dimensionality (e.g., Lawley and Maxwell 1971). In the last ten years, much theory on the estimation and inference of the static factor model has been developed, for example, Stock and Watson (1998, 2002), Bai and Ng (2002), Bai (2003), Doz, Giannone and Reichlin (2011), among others. Our contribution is on the estimation of covariance matrices and their inverse in large factor models.

The static model considered in this paper is to be distinguished from the dynamic factor model as in Forni, Hallin, Lippi and Reichlin (2000); the latter allows yt to also depend on ft with lags in time. Their approach is based on the eigenvalues and principal components of spectral density matrices, and on the frequency domain analysis. Moreover, as shown in Forni and Lippi (2001), the dynamic factor model does not really impose a restriction on the data generating process, and the assumption of idiosyncrasy (in their terminology, a p-dimensional process is idiosyncratic if all the eigenvalues of its spectral density matrix remain bounded as p → ∞) asymptotically identifies the decomposition of yit into the common component and idiosyncratic error. The literature includes, for example, Forni et al. (2000, 2004), Forni and Lippi (2001), Hallin and Liška (2007, 2011), and many other references therein. Above all, both the static and dynamic factor models are receiving increasing attention in applications of many fields where information usually is scattered through a (very) large number of interrelated time series.

There has been extensive literature in recent years that deals with sparse principal components, which has been widely used to enhance the convergence of the principal components in high-dimensional space. d’Aspremont, Bach and El Ghaoui (2008), Shen and Huang (2008), Witten, Tibshirani, and Hastie (2009) and Ma (2011) proposed and studied various algorithms for computations. More literature on sparse PCA is found in Johnstone and Lu (2009), Amini and Wainwright (2009), Zhang and El Ghaoui (2011), Birnbaum et al. (2012), among others. In addition, there has also been a growing literature that theoretically studies the recovery from a low-rank plus sparse matrix estimation problem, see for example, Wright et al. (2009), Lin et al. (2009), Candès et al. (2011), Luo (2011), Agarwal, Nagahban, Wainwright (2012), Pati et al. (2012). It corresponds to the identifiability issue of our problem.

There is a big difference between our model and those considered in the aforementioned literature. In the current paper, the first K eigenvalues of Σ are spiked and grow at a rate O(p), whereas the eigenvalues of the matrices studied in the existing literature on covariance estimation are usually assumed to be either bounded or slowly growing. Due to this distinctive feature, the common components and the idiosyncratic components can be identified, and in addition, PCA on the sample covariance matrix can consistently estimate the space spanned by the eigenvectors of Σ. The existing methods of either thresholding directly or solving a constrained optimization method can fail in the presence of very spiked principal eigenvalues. However, there is a price to pay here: as the first K eigenvalues are “too spiked”, one can hardly obtain a satisfactory rate of convergence for estimating Σ in absolute term, but it can be estimated accurately in relative term (see Section 3.3 for details). In addition, Σ−1 can be estimated accurately.

We would like to further note that the low-rank plus sparse representation of our model is on the population covariance matrix, whereas Candès et al. (2011), Wright et al. (2009), Lin et al. (2009)1 considered such a representation on the data matrix. As there is no Σ to estimate, their goal is limited to producing a low-rank plus sparse matrix decomposition of the data matrix, which corresponds to the identifiability issue of our study, and does not involve estimation and inference. In contrast, our ultimate goal is to estimate the population covariance matrices as well as the precision matrices. For this purpose, we require the idiosyncratic components and common factors to be uncorrelated and the data generating process to be strictly stationary. The covariances considered in this paper are constant over time, though slow-time-varying covariance matrices are applicable through localization in time (time-domain smoothing). Our consistency result on Σu demonstrates that the decomposition (1.3) is identifiable, and hence our results also shed the light of the “surprising phenomenon” of Candès et al. (2011) that one can separate fully a sparse matrix from a low-rank matrix when only the sum of these two components is available.

The rest of the paper is organized as follows. Section 2 gives our estimation procedures and builds the relationship between the principal components analysis and the factor analysis in high-dimensional space. Section 3 provides the asymptotic theory for various estimated quantities. Section 4 illustrates how to choose the thresholds using cross-validation and guarantees the positive definiteness in any finite sample. Specific applications of regularized covariance matrices are given in Section 5. Numerical results are reported in Section 6. Finally, Section 7 presents a real data application on portfolio allocation. All proofs are given in the appendix. Throughout the paper, we use λmin(A) and λmax(A) to denote the minimum and maximum eigenvalues of a matrix A. We also denote by ||A||F, ||A||, ||A||1 and ||A||max the Frobenius norm, spectral norm (also called operator norm), L1-norm, and elementwise norm of a matrix A, defined respectively by ||A||F = tr1/2(A′A), , ||A||1 = maxj Σi |aij| and ||A||max = maxi,j |aij|. Note that when A is a vector, both ||A||F and ||A|| are equal to the Euclidean norm. Finally, for two sequences, we write aT ≫ bT if bT = o(aT) and aT ≍ bT if aT = O(bT) and bT = O(aT).

2 Regularized Covariance Matrix via PCA

There are three main objectives of this paper: (i) understand the relationship between principal component analysis (PCA) and the high-dimensional factor analysis; (ii) estimate both covariance matrices Σ and the idiosyncratic Σu and their precision matrices in the presence of common factors, and (iii) investigate the impact of estimating the unknown factors on the covariance estimation. The propositions in Section 2.1 below show that the space spanned by the principal components in the population level Σ is close to the space spanned by the columns of the factor loading matrix B.

2.1 High-dimensional PCA and factor model

Consider a factor model

where the number of common factors, K = dim(ft), is small compared to p and T, and thus is assumed to be fixed throughout the paper. In the model, the only observable variable is the data yit. One of the distinguished features of the factor model is that the principal eigenvalues of Σ are no longer bounded, but growing fast with the dimensionality. We illustrate this in the following example.

Example 2.1

Consider a single-factor model yit = bift + uit where bi ∈ ℝ. Suppose that the factor is pervasive in the sense that it has non-negligible impact on a non-vanishing proportion of outcomes. It is then reasonable to assume for some c > 0. Therefore, assuming that λmax(Σu) = o(p), an application of (1.3) yields,

for all large p, assuming var(ft) > 0.

We now elucidate why PCA can be used for the factor analysis in the presence of spiked eigenvalues. Write B = (b1, …, bp)′ as the p × K loading matrix. Note that the linear space spanned by the first K principal components of Bcov(ft)B′ is the same as that spanned by the columns of B when cov(ft) is non-degenerate. Thus, we can assume without loss of generality that the columns of B are orthogonal and cov(ft) = IK, the identity matrix. This canonical form corresponds to the identifiability condition in decomposition (1.3). Let b̃1, · · ·, b̃K be the columns of B, ordered such that is in a non-increasing order. Then, are eigenvectors of the matrix BB′ with eigenvalues and the rest zero. We will impose the pervasiveness assumption that all eigenvalues of the K × K matrix p−1B′B are bounded away from zero, which holds if the factor loadings are independent realizations from a non-degenerate population. Since the non-vanishing eigenvalues of the matrix BB′ are the same as those of B′B, from the pervasiveness assumption it follows that are all growing at rate O(p).

Let be the eigenvalues of Σ in a descending order and be their corresponding eigenvectors. Then, an application of Weyl’s eigenvalue theorem (see the appendix) yields that

Proposition 2.1

Assume that the eigenvalues of p−1B′B are bounded away from zero for all large p. For the factor model (1.3) with the canonical condition

| (2.1) |

we have

In addition, for j ≤ K, lim infp→∞ ||b̃j||2/p > 0.

Using Proposition 2.1 and the sin θ theorem of Davis and Kahn (1970, see the appendix), we have the following:

Proposition 2.2

Under the assumptions of Proposition 2.1, if are distinct, then

Propositions 2.1 and 2.2 state that PCA and factor analysis are approximately the same if ||Σu|| = o(p). This is assured through a sparsity condition on Σu = (σu,ij)p×p, which is frequently measured through

| (2.2) |

The intuition is that, after taking out the common factors, many pairs of the cross-sectional units become weakly correlated. This generalized notion of sparsity was used in Bickel and Levina (2008) and Cai and Liu (2011). Under this generalized measure of sparsity, we have

if the noise variances { } are bounded. Therefore, when mp = o(p), Proposition 2.1 implies that we have distinguished eigenvalues between the principal components and the rest of the components and Proposition 2.2 ensures that the first K principal components are approximately the same as the columns of the factor loadings.

The aforementioned sparsity assumption appears reasonable in empirical applications. Boivin and Ng (2006) conducted an empirical study and showed that imposing zero correlation between weakly correlated idiosyncratic components improves forecast2. More recently, Phan (2012) empirically estimated the level of sparsity of the idiosyncratic covariance using the UK market data.

Recent developments on random matrix theory, for example, Johnstone and Lu (2009) and Paul (2007), have shown that when p/T is not negligible, the eigenvalues and eigenvectors of Σ might not be consistently estimated from the sample covariance matrix. A distinguished feature of the covariance considered in this paper is that there are some very spiked eigenvalues. By Propositions 2.1 and 2.2, in the factor model, the pervasiveness condition

| (2.3) |

implies that the first K eigenvalues are growing at a rate p. Moreover, when p is large, the principal components are close to the normalized vectors when mp = o(p). This provides the mathematics for using the first K principal components as a proxy of the space spanned by the columns of the factor loading matrix B. In addition, due to (2.3), the signals of the first K eigenvalues are stronger than those of the spiked covariance model considered by Jung and Marron (2009) and Birnbaum et al. (2012). Therefore, our other conditions for the consistency of principal components at the population level are much weaker than those in the spiked covariance literature. On the other hand, this also shows that, under our setting the PCA is a valid approximation to factor analysis only if p → ∞. The fact that the PCA on the sample covariance is inconsistent when p is bounded was also previously demonstrated in the literature (See e.g., Bai (2003)).

With assumption (2.3), the standard literature on approximate factor models has shown that the PCA on the sample covariance matrix Σ̂sam can consistently estimate the space spanned by the factor loadings (e.g., Stock and Watson (1998), Bai (2003)). Our contribution in Propositions 2.1 and 2.2 is that we connect the high-dimensional factor model to the principal components, and obtain the consistency of the spectrum in the population level Σ instead of the sample level Σ̂sam. The spectral consistency also enhances the results in Chamberlain and Rothschild (1983). This provides the rationale behind the consistency results in the factor model literature.

2.2 POET

Sparsity assumption directly on Σ is inappropriate in many applications due to the presence of common factors. Instead, we propose a nonparametric estimator of Σ based on the principal component analysis. Let λ̂1 ≥ λ̂2 ≥ · · · ≥ λ̂p be the ordered eigenvalues of the sample covariance matrix Σ̂sam and be their corresponding eigenvectors. Then the sample covariance has the following spectral decomposition:

| (2.4) |

where is the principal orthogonal complement, and K is the number of diverging eigenvalues of Σ. Let us first assume K is known.

Now we apply thresholding on R̂K. Define

| (2.5) |

where sij(·) is a generalized shrinkage function of Antoniadis and Fan (2001), employed by Rothman et al. (2009) and Cai and Liu (2011), and τij > 0 is an entry-dependent threshold. In particular, the hard-thresholding rule sij(x) = xI(|x| ≥ τij) (Bickel and Levina, 2008) and the constant thresholding parameter τij = δ are allowed. In practice, it is more desirable to have τij be entry-adaptive. An example of the adaptive thresholding is

| (2.6) |

where r̂ii is the ith diagonal element of R̂K. This corresponds to applying the thresholding with parameter τ to the correlation matrix of R̂K.

The estimator of Σ is then defined as:

| (2.7) |

We will call this estimator the Principal Orthogonal complEment thresholding (POET) estimator. It is obtained by thresholding the remaining components of the sample covariance matrix, after taking out the first K principal components. One of the attractiveness of POET is that it is optimization-free, and hence is computationally appealing. 3

With the choice of τij in (2.6) and the hard thresholding rule, our estimator encompasses many popular estimators as its specific cases. When τ = 0, the estimator is the sample covariance matrix and when τ = 1, the estimator becomes that based on the strict factor model (Fan, Fan, and Lv, 2008). When K = 0, our estimator is the same as the thresholding estimator of Bickel and Levina (2008) and (with a more general thresholding function) Rothman et al. (2009) or the adaptive thresholding estimator of Cai and Liu (2011) with a proper choice of τij.

In practice, the number of diverging eigenvalues (or common factors) can be estimated based on the sample covariance matrix. Determining K in a data-driven way is an important topic, and is well understood in the literature. We will describe the POET with a data-driven K in Section 2.4.

2.3 Least squares point of view

The POET (2.7) has an equivalent representation using a constrained least squares method. The least squares method seeks for and such that

| (2.8) |

subject to the normalization

| (2.9) |

The constraints (2.9) correspond to the normalization (2.1). Here we assume that the mean of each variable has been removed, that is, Eyit = Efjt = 0 for all i ≤ p, j ≤ K and t ≤ T. Putting it in a matrix form, the optimization problem can be written as

| (2.10) |

where Y = (y1, …, yT) and F′ = (f1, · · ·, fT). For each given F, the least-squares estimator of B is Λ = T−1YF, using the constraint (2.9) on the factors. Substituting this into (2.10), the objective function now becomes . The minimizer is now clear: the columns of are the eigenvectors corresponding to the K largest eigenvalues of the T × T matrix Y′Y and Λ̂K = T−1YF̂K (see e.g., Stock and Watson (2002)).

We will show that under some mild regularity conditions, as p and T → ∞, consistently estimates the true uniformly over i ≤ p and t ≤ T. Since Σu is assumed to be sparse, we can construct an estimator of Σu using the adaptive thresholding method by Cai and Liu (2011) as follows. Let , and . For some pre-determined decreasing sequence ωT > 0, and large enough C > 0, define the adaptive threshold parameter as . The estimated idiosyncratic covariance estimator is then given by

| (2.11) |

where for all z ∈ ℝ (see Antoniadis and Fan, 2001),

It is easy to verify that sij(·) includes many interesting thresholding functions such as the hard thresholding (sij(z) = zI(|z|≥τij)), soft thresholding (sij (z) = sign(z)(|z| − τij)+), SCAD, and adaptive lasso (See Rothman et al. (2009)).

Analogous to the decomposition (1.3), we obtain the following substitution estimators

| (2.12) |

and by the Sherman-Morrison-Woodbury formula, noting that ,

| (2.13) |

In practice, the true number of factors K might be unknown to us. However, for any determined K1 ≤ p, we can always construct either (Σ̂K1, ) as in (2.7) or (Σ̃K1, ) as in (2.12) to estimate (Σ, Σu). The following theorem shows that for each given K1, the two estimators based on either regularized PCA or least squares substitution are equivalent. Similar results were obtained by Bai (2003) when K1 = K and no thresholding was imposed.

Theorem 2.1

Suppose that the entry-dependent threshold in (2.5) is the same as the thresholding parameter used in (2.11). Then for any K1 ≤ p, the estimator (2.7) is equivalent to the substitution estimator (2.12), that is,

In this paper, we will use a data-driven K̂ to construct the POET (see Section 2.4 below), which has two equivalent representations according to Theorem 2.1.

2.4 POET with Unknown K

Determining the number of factors in a data-driven way has been an important research topic in the econometric literature. Bai and Ng (2002) proposed a consistent estimator as both p and T diverge. Other recent criteria are proposed by Kapetanios (2010), Onatski (2010), Alessi et al. (2010), etc.

Our method also allows a data-driven K̂ to estimate the covariance matrices. In principle, any procedure that gives a consistent estimate of K can be adopted. In this paper we apply the well-known method in Bai and Ng (2002). It estimates K by

| (2.14) |

where M is a prescribed upper bound, F̂K1 is a T × K1 matrix whose columns are times the eigenvectors corresponding to the K1 largest eigenvalues of the T × T matrix Y′Y; g(T, p) is a penalty function of (p, T) such that g(T, p) = o(1) and min{p, T}g(T, p) → ∞. Two examples suggested by Bai and Ng (2002) are

Throughout the paper, we let K̂ be the solution to (2.14) using either IC1 or IC2. The asymptotic results are not affected regardless of the specific choice of g(T, p). We define the POET estimator with unknown K as

| (2.15) |

The procedure is as stated in Section 2.2 except that K̂ is now data-driven.

3 Asymptotic Properties

3.1 Assumptions

This section presents the assumptions on the model (1.2), in which only are observable. Recall the identifiability condition (2.1).

The first assumption has been one of the most essential ones in the literature of approximate factor models. Under this assumption and other regularity conditions, the number of factors, loadings and common factors can be consistently estimated (e.g., Stock and Watson (1998, 2002), Bai and Ng (2002), Bai (2003), etc.).

Assumption 3.1

All the eigenvalues of the K × K matrix p−1B′B are bounded away from both zero and infinity as p → ∞.

Remark 3.1

It implies from Proposition 2.1 in Section 2 that the first K eigenvalues of Σ grow at rate O(p). This unique feature distinguishes our work from most of other low-rank plus sparse covariances considered in the literature, e.g., Luo (2011), Pati et al. (2012), Agarwal et al. (2012), Birnbaum et al. (2012). 4

Assumption 3.1 requires the factors to be pervasive, that is, to impact a non-vanishing proportion of individual time series. See Example 2.1 for its meaning. 5

As to be illustrated in Section 3.3 below, due to the fast diverging eigenvalues, one can hardly achieve a good rate of convergence for estimating Σ under either the spectral norm or Frobenius norm when p > T. This phenomenon arises naturally from the characteristics of the high-dimensional factor model, which is another distinguished feature compared to those convergence results in the existing literature.

Assumption 3.2

{ut, ft}t≥1 is strictly stationary. In addition, Euit = Euitfjt = 0 for all i ≤ p, j ≤ K and t ≤ T.

There exist constants c1, c2 > 0 such that λmin(Σu) > c1, ||Σu||1 < c2, and mini≤p,j≤p var(uitujt) > c1.

- There exist r1, r2 > 0 and b1, b2 > 0, such that for any s > 0, i ≤ p and j ≤ K,

Condition (i) requires strict stationarity as well as the non-correlation between {ut} and {ft}. These conditions are slightly stronger than those in the literature, e.g., Bai (2003), but are still standard and simplify our technicalities. Condition (ii) requires that Σu be well-conditioned. The condition ||Σu||1 ≤ c2 instead of a weaker condition λmax(Σu) ≤ c2 is imposed here in order to consistently estimate K. But it is still standard in the approximate factor model literature as in Bai and Ng (2002), Bai (2003), etc. When K is known, such a condition can be removed. Our working paper6 shows that the results continue to hold for a growing (known) K under the weaker condition λmax(Σu) ≤ c2. Condition (iii) requires exponential-type tails, which allows us to apply the large deviation theory to and .

We impose the strong mixing condition. Let and denote the σ-algebras generated by {(ft, ut) : t ≤ 0} and {(ft, ut) : t ≥ T} respectively. In addition, define the mixing coefficient

| (3.1) |

Assumption 3.3

Strong mixing: There exists r3 > 0 such that , and C > 0 satisfying: for all T ∈ ℤ+,

In addition, we impose the following regularity conditions.

Assumption 3.4

There exists M > 0 such that for all i ≤ p, t ≤ T and s ≤ T,

||bi||max < M,

,

.

These conditions are needed to consistently estimate the transformed common factors as well as the factor loadings. Similar conditions were also assumed in Bai (2003), and Bai and Ng (2006). The number of factors is assumed to be fixed. Our conditions in Assumption 3.4 are weaker than those in Bai (2003) as we focus on different aspects of the study.

3.2 Convergence of the idiosyncratic covariance

Estimating the covariance matrix Σu of the idiosyncratic components {ut} is important for many statistical inferences. For example, it is needed for large sample inference of the unknown factors and their loadings, for testing the capital asset pricing model (Sentana, 2009), and large-scale hypothesis testing (Fan, Han and Gu, 2012). See Section 5.

We estimate Σu by thresholding the principal orthogonal complements after the first K̂ principal components of the sample covariance are taken out: . By Theorem 2.1, it also has an equivalent expression given by (2.11), with . Throughout the paper, we apply the adaptive threshold

| (3.2) |

where C > 0 is a sufficiently large constant, though the results hold for other types of thresholding. As in Bickel and Levina (2008) and Cai and Liu (2011), the threshold chosen in the current paper is in fact obtained from the optimal uniform rate of convergence of maxi≤p,j≤p |σ̂ij − σu,ij|. When direct observation of uit is not available, the effect of estimating the unknown factors also contributes to this uniform estimation error, which is why p−1/2 appears in the threshold.

The following theorem gives the rate of convergence of the estimated idiosyncratic covariance. Let . In the convergence rate below, recall that mp and q are defined in the measure of sparsity (2.2).

Theorem 3.1

Suppose log p = o(Tγ/6), T = o(p2), and Assumptions 3.1–3.4 hold. Then for a sufficiently large constant C > 0 in the threshold (3.2), the POET estimator satisfies

If further , then the eigenvalues of are all bounded away from zero with probability approaching one, and

When estimating Σu, p is allowed to grow exponentially fast in T, and can be made consistent under the spectral norm. In addition, is asymptotically invertible while the classical sample covariance matrix based on the residuals is not when p > T.

Remark 3.2

Consistent estimation of Σu indicates that Σu is identifiable in (1.3), namely, the sparse Σu can be separated perfectly from the low-rank matrix there. The result here gives another proof (when assuming of the “surprising phenomenon” in Candès et al (2011) under different technical conditions.

Fan, Liao and Mincheva (2011) recently showed that when are observable and q = 0, the rate of convergence of the adaptive thresholding estimator is given by . Hence when the common factors are unobservable, the rate of convergence has an additional term , coming from the impact of estimating the unknown factors. This impact vanishes when p log p ≫ T, in which case the minimax rate as in Cai and Zhou (2010) is achieved. As p increases, more information about the common factors is collected, which results in more accurate estimation of the common factors .

When K is known and grows with p and T, with slightly weaker assumptions, our working paper (Fan et al. 2011) shows that under the exactly sparse case (that is, q = 0), the result continues to hold with convergence rate .

3.3 Convergence of the POET estimator

Since the first K eigenvalues of Σ grow with p, one can hardly estimate Σ with satisfactory accuracy in the absolute term. This problem arises not from the limitation of any estimation method, but is due to the nature of the high-dimensional factor model. We illustrate this using a simple example.

Example 3.1

Consider an ideal case where we know the spectrum except for the first eigenvector of Σ. Let be the eigenvalues and vectors, and assume that the largest eigenvalue λ1 ≥ cp for some c > 0. Let ξ̂1 be the estimated first eigenvector and define the covariance estimator . Assume that ξ̂1 is a good estimator in the sense that ||ξ̂1 − ξ1||2 = Op(T−1). However,

which can diverge when T = O(p2).

In the presence of very spiked eigenvalues, while the covariance Σ cannot be consistently estimated in absolute term, it can be well estimated in terms of the relative error matrix

which is more relevant for many applications (see Example 5.2). The relative error matrix can be measured by either its spectral norm or the normalized Frobenius norm defined by

| (3.3) |

In the last equality, there are p terms being added in the trace operation and the factor p−1 plays the role of normalization. The loss (3.3) is closely related to the entropy loss, introduced by James and Stein (1961). Also note that

where ||A||Σ = p−1/2||Σ−1/2AΣ−1/2||F is the weighted quadratic norm in Fan et al (2008).

Fan et al. (2008) showed that in a large factor model, the sample covariance is such that , which does not converge if p > T. On the other hand, Theorem 3.2 below shows that ||Σ̂K̂ − Σ||Σ can still be convergent as long as p = o(T2). Technically, the impact of high-dimensionality on the convergence rate of Σ̂K̂ − Σ is via the number of rows in B. We show in the appendix that B appears in ||Σ̂K̂ − Σ||Σ through B′Σ−1B whose eigenvalues are bounded. Therefore it successfully cancels out the curse of high-dimensionality introduced by B.

Compared to estimating Σ, in a large approximate factor model, we can estimate the precision matrix with a satisfactory rate under the spectral norm. The intuition follows from the fact that Σ−1 has bounded eigenvalues.

The following theorem summarizes the rate of convergence under various norms.

Theorem 3.2

Under the assumptions of Theorem 3.1, the POET estimator defined in (2.15) satisfies

In addition, if , then Σ̂K̂ is nonsingular with probability approaching one, with

Remark 3.3

When estimating Σ−1, p is allowed to grow exponentially fast in T, and the estimator has the same rate of convergence as that of the estimator in Theorem 3.1. When p becomes much larger than T, the precision matrix can be estimated at the same rate as if the factors were observable.

-

As in Remark 3.2, when K > 0 is known and grows with p and T, the working paper Fan et al. (2011) proves the following results (when q = 0) 7:

The results state explicitly the dependence of the rate of convergence on the number of factors.

The relative error ||Σ−1/2 Σ̂K̂ Σ−1/2 − Ip|| in operator norm can be shown to have the same order as the maximum relative error of estimated eigenvalues. It does not converge to zero nor diverge. It is much smaller than ||Σ̂K̂ − Σ||, which is of order (see Example 3.1).

3.4 Convergence of unknown factors and factor loadings

Many applications of the factor model require estimating the unknown factors. In general, factor loadings in B and the common factors ft are not separably identifiable, as for any matrix H such that H′H = IK, Bft = BH′Hft. Hence (B, ft) cannot be identified from (BH′, Hft). Note that the linear space spanned by the rows of B is the same as that by those of BH′. In practice, it often does not matter which one is used.

Let V denote the K̂ × K̂ diagonal matrix of the first K̂ largest eigenvalues of the sample covariance matrix in decreasing order. Recall that F′ = (f1, …, fT) and define a K̂ × K̂ matrix . Then for t ≤ T, Hft = T−1V−1F̂′(Bf1, …, BfT)′Bft. Note that Hft depends only on the data V−1F̂′ and an identifiable part of parameters . Therefore, there is no identifiability issue in Hft regardless of the imposed identifiability condition.

Bai (2003) obtained the rate of convergence for both b̂i and f̂t for any fixed (i, t). However, the uniform rate of convergence is more relevant for many applications (see Example 5.1). The following theorem extends those results in Bai (2003) in a uniformity sense. In particular, with a more refined technique, we have improved the uniform convergence rate for f̂t.

Theorem 3.3

Under the assumptions of Theorem 3.1,

As a consequence of Theorem 3.3, we obtain the following: (recall that the constant r2 is defined in Assumption 3.2.)

Corollary 3.1

Under the assumptions of Theorem 3.1,

The rates of convergence obtained above also explain the condition T = o(p2) in Theorems 3.1 and 3.2. It is needed in order to estimate the common factors uniformly in t ≤ T. When we do not observe , in addition to the factor loadings, there are KT factors to estimate. Intuitively, the condition T = o(p2) requires the number of parameters introduced by the unknown factors be “not too many”, so that we can consistently estimate them uniformly. Technically, as demonstrated by Bickel and Levina (2008), Cai and Liu (2011) and many other authors, achieving uniform accuracy is essential for large covariance estimations.

4 Choice of Threshold

4.1 Finite-sample positive definiteness

Recall that the threshold value , where C is determined by the users. To make POET operational in practice, one has to choose C to maintain the positive definiteness of the estimated covariances for any given finite sample. We write , where the covariance estimator depends on C via the threshold. We choose C in the range where . Define

| (4.1) |

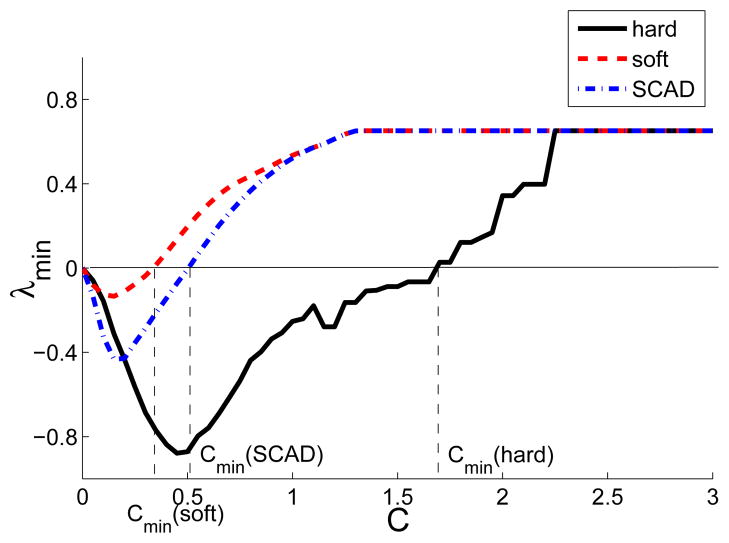

When C is sufficiently large, the estimator becomes diagonal, while its minimum eigenvalue must retain strictly positive. Thus, Cmin is well defined and for all C > Cmin, is positive definite under finite sample. We can obtain Cmin by solving , C ≠ 0. We can also approximate Cmin by plotting as a function of C, as illustrated in Figure 1. In practice, we can choose C in the range (Cmin + ε, M) for a small ε and large enough M. Choosing the threshold in a range to guarantee the finite-sample positive definiteness has also been previously suggested by Fryzlewicz (2010).

Figure 1.

Minimum eigenvalue of as a function of C for three choices of thresholding rules. The plot is based on the simulated data set in Section 6.2.

4.2 Multifold Cross-Validation

In practice, C can be data-driven, and chosen through multifold cross-validation. After obtaining the estimated residuals {ût}t≤T by the PCA, we divide them randomly into two subsets, which are, for simplicity, denoted by {ût}t∈J1 and {ût}t∈J2. The sizes of J1 and J2, denoted by T(J1) and T (J2), are T (J1) ≍ T and T (J2) + T (J1) = T. For example, in sparse matrix estimation, Bickel and Levina (2008) suggested to choose T(J1) = T (1 − (log T)−1).

We repeat this procedure H times. At the jth split, we denote by the POET estimator with the threshold on the training data set {ût}t∈J1. We also denote by the sample covariance based on the validation set, defined by . Then we choose the constant C* by minimizing a cross-validation objective function over a compact interval

| (4.2) |

Here Cmin is the minimum constant that guarantees the positive definiteness of for C > Cmin as described in the previous subsection, and M is a large constant such that is diagonal. The resulting C* is data-driven, so depends on Y as well as p and T via the data. On the other hand, for each given N × T data matrix Y, C* is a universal constant in the threshold in the sense that it does not change with respect to the position (i, j). We also note that the cross-validation is based on the estimate of Σu rather than Σ because POET thresholds the error covariance matrix. Thus cross-validation improves the performance of thresholding.

It is possible to derive the rate of convergence for under the current model setting, but it ought to be much more technically involved than the regular sparse matrix estimation considered by Bickel and Levina (2008) and Cai and Liu (2011). To keep our presentation simple we do not pursue it in the current paper.

5 Applications of POET

We give four examples to which the results in Theorems 3.1–3.3 can be applied. Detailed pursuits of these are beyond the scope of the paper.

Example 5.1 (Large-scale hypothesis testing)

Controlling the false discovery rate in large-scale hypothesis testing based on correlated test statistics is an important and challenging problem in statistics (Leek and Storey, 2008; Efron, 2010; Fan, et al., 2012). Suppose that the test statistic for each of the hypothesis

is Zi ~ N (μi, 1) and these test statistics Z are jointly normal N (μ, Σ) where Σ is unknown. For a given critical value x, the false discovery proportion is then defined as FDP(x) = V (x)/R(x) where V (x) = p−1 Σμi=0 I(|Zi| > x) and are the total number of false discoveries and the total number of discoveries, respectively. Our interest is to estimate FDP(x) for each given x. Note that R(x) is an observable quantity. Only V (x) needs to be estimated.

If the covariance Σ admits the approximate factor structure (1.3), then the test statistics can be stochastically decomposed as

| (5.1) |

By the principal factor approximation (Theorem 1, Fan, Han, Gu, 2012)

| (5.2) |

when mp = o(p) and the number of true significant hypothesis {i : μi ≠ 0} is o(p), where zx is the upper x-quantile of the standard normal distribution, ηi = (Bf)i and ai = var(ui)−1.

Now suppose that we have n repeated measurements from the model (5.1). Then, by Corollary 3.1, {ηi} can be uniformly consistently estimated, and hence p−1V (x) and FDP(x) can be consistently estimated. Efron (2010) obtained these repeated test statistics based on the bootstrap sample from the original raw data. Our theory (Theorem 3.3) gives a formal justification to the framework of Efron (2007, 2010).

Example 5.2 (Risk management)

The maximum elementwise estimation error ||Σ̂K̂ − Σ||max appears in risk assessment as in Fan, Zhang and Yu (2012). For a fixed portfolio allocation vector w, the true portfolio variance and the estimated one are given by w′Σw and w′Σ̂K̂w respectively. The estimation error is bounded by

where ||w||1, the L1-norm of w, is the gross exposure of the portfolio. Usually a constraint is placed on the total percentage of the short positions, in which case we have a restriction ||w||1 ≤ c for some c > 0. In particular, c = 1 corresponds to a portfolio with no-short positions (all weights are nonnegative). Theorem 3.2 quantifies the maximum approximation error.

The above compares the absolute error of perceived risk and true risk. The relative error is bounded by

for any allocation vector w. Theorem 3.2 quantifies this relative error.

Example 5.3 (Panel regression with a factor structure in the errors)

Consider the following panel regression model

where xit is a vector of observable regressors with fixed dimension. The regression error εit has a factor structure and is assumed to be independent of xit, but bi, ft and uit are all unobservable. We are interested in the common regression coefficients β. The above panel regression model has been considered by many researchers, such as Ahn, Lee and Schmidt (2001), Pesaran (2006), and has broad applications in social sciences.

Although OLS (ordinary least squares) produces a consistent estimator of β, a more efficient estimation can be obtained by GLS (generalized least squares). The GLS method depends, however, on an estimator of , the inverse of the covariance matrix of εt = (ε1t, …, εpt)′. By assuming the covariance matrix of (u1t, …, upt) to be sparse, we can successfully solve this problem by applying Theorem 3.2. Although εit is unobservable, it can be replaced by the regression residuals ε̂it, obtained via first regressing Yit on xit. We then apply the POET estimator to . By Theorem 3.2, the inverse of the resulting estimator is a consistent estimator of under the spectral norm. A slight difference lies in the fact that when we apply POET, is replaced with , which introduces an additional term in the estimation error.

Example 5.4 (Validating an asset pricing theory)

A celebrated financial economic theory is the capital asset pricing model (CAPM, Sharpe 1964) that makes William Sharpe win the Nobel prize in Economics in 1990, whose extension is the multi-factor model (Ross, 1976, Chamberlain and Rothschild, 1983). It states that in a frictionless market, the excessive return of any financial asset equals the excessive returns of the risk factors times its factor loadings plus noises. In the multi-period model, the excess return yit of firm i at time t follows model (1.1), in which ft is the excess returns of the risk factors at time t. To test the null hypothesis (1.2), one embeds the model into the multivariate linear model

| (5.3) |

and wishes to test H0 : α = 0. The F-test statistic involves the estimation of the covariance matrix Σu, whose estimates are degenerate without regularization when p ≥ T. Therefore, in the literature (Sentana, 2009, and references therein), one focuses on the case p is relatively small. The typical choices of parameters are T = 60 monthly data and the number of assets p = 5, 10 or 25. However, the CAPM should hold for all tradeable assets, not just a small fraction of assets. With our regularization technique, non-degenerate estimate can be obtained and the F-test or likelihood-ratio test statistics can be employed even when p ≫ T.

To provide some insights, let α̂ be the least-squares estimator of (5.3). Then, when ut ~ N (0, Σu), α̂ ~ N(α, Σu/cT) for a constant cT which depends on the observed factors. When Σu is known, the Wald test statistic is . When it is unknown and p is large, it is natural to use the F-type of test statistic . The difference between these two statistics is bounded by

Since under the null hypothesis α̂ ~ N(0, Σu/cT), we have . Thus, it follows from boundness of ||Σu|| that . Theorem 3.1 provides the rate of convergence for the above difference. Detailed development is out of the scope of the current paper, and we will leave it as a separate research project.

6 Monte Carlo Experiments

In this section, we will examine the performance of the POET method in a finite sample. We will also demonstrate the effect of this estimator on the asset allocation and risk assessment. Similarly to Fan, et al. (2008, 2011), we simulated from a standard Fama-French three-factor model, assuming a sparse error covariance matrix and three factors. Throughout this section, the time span is fixed at T = 300, and the dimensionality p increases from 1 to 600. We assume that the excess returns of each of p stocks over the risk-free interest rate follow the following model:

The factor loadings are drawn from a trivariate normal distribution b ~ N3(μB, ΣB), the idiosyncratic errors from ut ~ Np(0, Σu), and the factor returns ft follow a VAR(1) model. To make the simulation more realistic, model parameters are calibrated from the financial returns, as detailed in the following section.

6.1 Calibration

To calibrate the model, we use the data on annualized returns of 100 industrial portfolios from the website of Kenneth French, and the data on 3-month Treasury bill rates from the CRSP database. These industrial portfolios are formed as the intersection of 10 portfolios based on size (market equity) and 10 portfolios based on book equity to market equity ratio. Their excess returns (ỹt) are computed for the period from January 1st, 2009 to December 31st, 2010. Here, we present a short outline of the calibration procedure.

Given as the input data, we fit a Fama-French-three-factor model and calculate a 100 × 3 matrix B̃, and 500 × 3 matrix F̃, using the principal components method described in Section 3.1.

We summarize 100 factor loadings (the rows of B̃) by their sample mean vector μB and sample covariance matrix ΣB, which are reported in Table 1. The factor loadings bi = (bi1, bi2, bi3)T for i = 1, …, p are drawn from N3(μB, ΣB).

We run the stationary vector autoregressive model ft = μ + Φft−1 + εt, a VAR(1) model, to the data F̃ to obtain the multivariate least squares estimator for μ and Φ, and estimate Σε. Note that all eigenvalues of Φ in Table 2 fall within the unit circle, so our model is stationary. The covariance matrix cov(ft) can be obtained by solving the linear equation cov(ft) = Φcov(ft) Φ′ + Σε. The estimated parameters are depicted in Table 2 and are used to generate ft.

-

For each value of p, we generate a sparse covariance matrix Σu of the form:

Here, Σ0 is the error correlation matrix, and D is the diagonal matrix of the standard deviations of the errors. We set D = diag(σ1, …, σp), where each σi is generated independently from a Gamma distribution G(α, β), and α and β are chosen to match the sample mean and sample standard deviation of the standard deviations of the errors. A similar approach to Fan et al. (2011) has been used in this calibration step. The off-diagonal entries of Σ0 are generated independently from a normal distribution, with mean and standard deviation equal to the sample mean and sample standard deviation of the sample correlations among the estimated residuals, conditional on their absolute values being no larger than 0.95. We then employ hard thresholding to make Σ0 sparse, where the threshold is found as the smallest constant that provides the positive definiteness of Σ0. More precisely, start with threshold value 1, which gives Σ0 = Ip and then decrease the threshold values in a grid until positive definiteness is violated.

Table 1.

Mean and covariance matrix used to generate b

| μB | ΣB | ||

|---|---|---|---|

| 0.0047 | 0.0767 | −0.00004 | 0.0087 |

| 0.0007 | −0.00004 | 0.0841 | 0.0013 |

| −1.8078 | 0.0087 | 0.0013 | 0.1649 |

Table 2.

Parameters of ft generating process

| μ | cov(ft) | Φ | ||||

|---|---|---|---|---|---|---|

| −0.0050 | 1.0037 | 0.0011 | −0.0009 | −0.0712 | 0.0468 | 0.1413 |

| 0.0335 | 0.0011 | 0.9999 | 0.0042 | −0.0764 | −0.0008 | 0.0646 |

| −0.0756 | −0.0009 | 0.0042 | 0.9973 | 0.0195 | −0.0071 | −0.0544 |

6.2 Simulation

For the simulation, we fix T = 300, and let p increase from 1 to 600. For each fixed p, we repeat the following steps N = 200 times, and record the means and the standard deviations of each respective norm.

Generate independently , and set B = (b1, …, bp)′.

Generate independently .

Generate as a vector autoregressive sequence of the form ft = μ + Φft−1 + εt.

Calculate from yt = Bft + ut.

Set hard-thresholding with threshold . Estimate K using Bai and Ng (2002)’s IC1. Calculate covariance estimators using the POET method. Calculate the sample covariance matrix Σ̂sam.

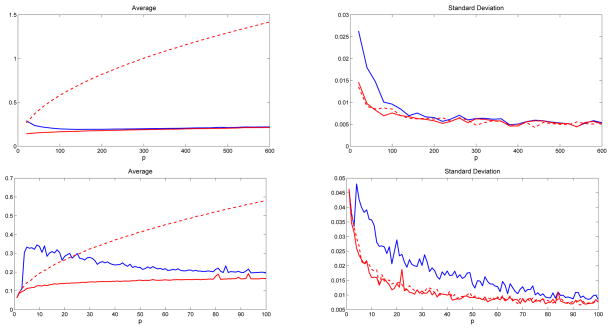

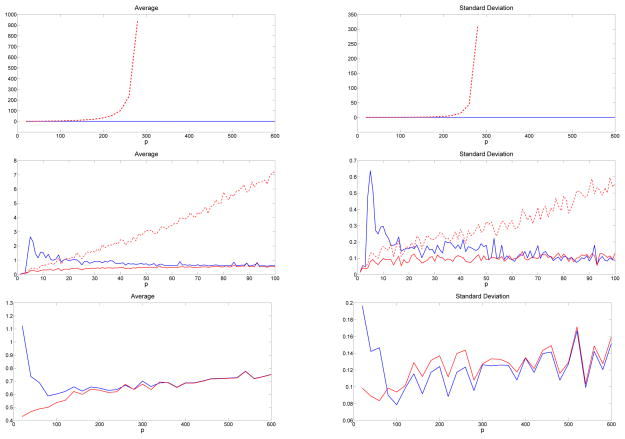

In the graphs below, we plot the averages and standard deviations of the distance from Σ̂K̂ and Σ̂sam to the true covariance matrix Σ, under norms ||.||Σ, ||.|| and ||.||max. We also plot the means and standard deviations of the distances from (Σ̂K̂)−1 and to Σ−1 under the spectral norm. The dimensionality p ranges from 20 to 600 in increments of 20. Due to invertibility, the spectral norm for is plotted only up to p = 280. Also, we zoom into these graphs by plotting the values of p from 1 to 100, this time in increments of 1. Notice that we also plot the distance from Σ̂obs to Σ for comparison, where Σ̂obs is the estimated covariance matrix proposed by Fan et al. (2011), assuming the factors are observable.

6.3 Results

In a factor model, we expect POET to perform as well as Σ̂obs when p is relatively large, since the effect of estimating the unknown factors should vanish as p increases. This is illustrated in the plots below.

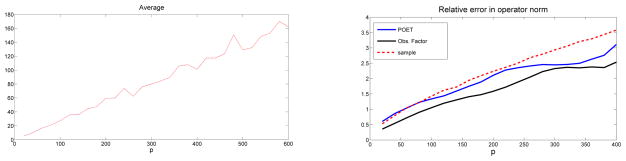

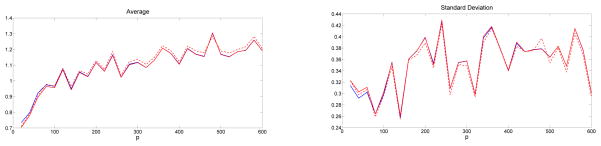

From the simulation results, reported in Figures 2–5, we observe that POET under the unobservable factor model performs just as well as the estimator in Fan et al. (2011) if the factors are known, when p is large enough. The cost of not knowing the factors is approximately of order . It can be seen in Figures 2 and 3 that this cost vanishes for p ≥ 200. To give a better insight of the impact of estimating the unknown factors for small p, a separate set of simulations is conducted for p ≤ 100. As we can see from Figures 2 (bottom panel) and 3 (middle and bottom panels), the impact decreases quickly. In addition, when estimating Σ−1, it is hard to distinguish the estimators with known and unknown factors, whose performances are quite stable compared to the sample covariance matrix. Also, the maximum absolute elementwise error (Figure 4) of our estimator performs very similarly to that of the sample covariance matrix, which coincides with our asymptotic result. Figure 5 shows that the performances of the three methods are indistinguishable in the spectral norm, as expected.

Figure 2.

Averages (left panel) and standard deviations (right panel) of the relative error p−1/2||Σ−1/2Σ̂Σ−1/2 − Ip||F with known factors (Σ̂ = Σ̂obs solid red curve), POET (Σ̂ = Σ̂K̂ solid blue curve), and sample covariance (Σ̂ = Σ̂sam dashed curve) over 200 simulations, as a function of the dimensionality p. Top panel: p ranges in 20 to 600 with increment 20; bottom panel: p ranges in 1 to 100 with increment 1.

Figure 5.

Averages of ||Σ̂ − Σ|| (left panel) and ||Σ−1/2Σ̂Σ−1/2 − Ip|| with known factors (Σ̂ = Σ̂obs solid red curve), POET (Σ̂= Σ̂K̂ ω solid blue curve), and sample covariance (Σ̂ = Σ̂sam dashed curve) over 200 simulations, as a function of the dimensionality p. The three curves are hardly distinguishable on the left panel.

Figure 3.

Averages (left panel) and standard deviations (right panel) of ||Σ̂−1 − Σ−1|| with known factors (Σ̂ = Σ̂obs solid red curve), POET (Σ̂ = Σ̂K̂ solid blue curve), and sample covariance (Σ̂ = Σ̂sam dashed curve) over 200 simulations, as a function of the dimensionality p. Top panel: p ranges in 20 to 600 with increment 20; middle panel: p ranges in 1 to 100 with increment 1; Bottom panel: the same as the top panel with dashed curve excluded.

Figure 4.

Averages (left panel) and standard deviations (right panel) of ||Σ̂ − Σ||max with known factors (Σ̂ = Σ̂obs solid red curve), POET (Σ̂ = Σ̂K̂ solid blue curve), and sample covariance (Σ̂ = Σ̂sam dashed curve) over 200 simulations, as a function of the dimensionality p. They are nearly indifferentiable.

6.4 Robustness to the estimation of K

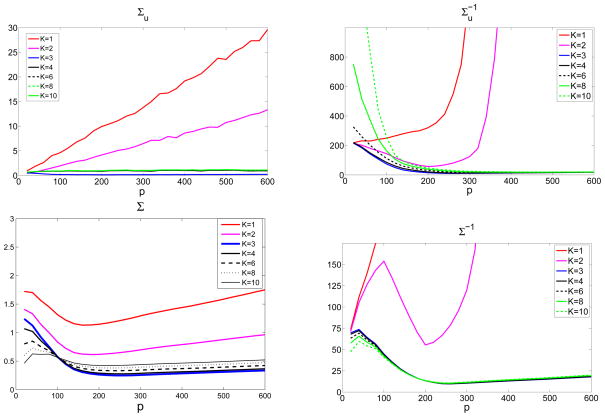

The POET estimator depends on the estimated number of factors. Our theory uses a consistent esimator K̂. To assess the robustness of our procedure to K̂ in finite sample, we calculate for K = 1, 2, …, 10. Again, the threshold is fixed to be .

6.4.1 Design 1

The simulation setup is the same as before where the true K0 = 3. We calculate and ||Σ̂K − Σ||Σ for K = 1, 2, …, 10. Figure 6 plots these norms as p increases but with a fixed T = 300. The results demonstrate a trend that is quite robust when K ≥ 3; especially, the estimation accuracy of the spectral norms for large p are close to each other. When K = 1 or 2, the estimators perform badly due to modeling bias. Therefore, POET is robust to over-estimated K, but not to under-estimation.

Figure 6.

Robustness of K as p increases for various choices of K (Design 1, T = 300). Top left: || ||; top right: || ||; bottom left: ||Σ̂K − Σ||Σ; bottom right: || ||.

6.4.2 Design 2

We also simulated from a new data generating process for the robustness assessment. Consider a banded idiosyncratic matrix

We still consider a K0 = 3 factor model, where the factors are independently simulated as

Table 3 summarizes the average estimation error of covariance matrices across K in the spectral norm. Each simulation is replicated 50 times and T = 200.

Table 3.

Robustness of K. Design 2, estimation errors in spectral norm

| K | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | 6 | 8 | |||

| p = 100 |

|

10.70 | 5.23 | 1.63 | 1.80 | 1.91 | 2.04 | 2.22 | |

|

|

2.71 | 2.51 | 1.51 | 1.50 | 1.44 | 1.84 | 2.82 | ||

|

|

2.69 | 2.48 | 1.47 | 1.49 | 1.41 | 1.56 | 2.35 | ||

| Σ̂K | 94.66 | 91.36 | 29.41 | 31.45 | 30.91 | 33.59 | 33.48 | ||

| Σ−1/2 Σ̂K Σ−1/2 | 17.37 | 10.04 | 2.05 | 2.83 | 2.94 | 2.95 | 2.93 | ||

|

| |||||||||

| p = 200 |

|

11.34 | 11.45 | 1.64 | 1.71 | 1.79 | 1.87 | 2.01 | |

|

|

2.69 | 3.91 | 1.57 | 1.56 | 1.81 | 2.26 | 3.42 | ||

|

|

2.67 | 3.72 | 1.57 | 1.55 | 1.70 | 2.13 | 3.19 | ||

| Σ̂K | 200.82 | 195.64 | 57.44 | 63.09 | 64.53 | 60.24 | 56.20 | ||

| Σ−1/2 Σ̂K Σ−1/2 | 20.86 | 14.22 | 3.29 | 4.52 | 4.72 | 4.69 | 4.76 | ||

|

| |||||||||

| p = 300 |

|

12.74 | 15.20 | 1.66 | 1.71 | 1.78 | 1.84 | 1.95 | |

|

|

7.58 | 7.80 | 1.74 | 2.18 | 2.58 | 3.54 | 5.45 | ||

|

|

7.59 | 7.49 | 1.70 | 2.13 | 2.49 | 3.37 | 5.13 | ||

| Σ̂K | 302.16 | 274.12 | 87.92 | 92.47 | 91.90 | 83.21 | 92.50 | ||

| Σ−1/2 Σ̂K Σ−1/2 | 23.43 | 16.89 | 4.38 | 6.04 | 6.16 | 6.14 | 6.20 | ||

Table 3 illustrates some interesting patterns. First of all, the best estimation accuracy is achieved when K = K0. Second, the estimation is robust for K ≥ K0. As K increases from K0, the estimation error becomes larger, but is increasing slowly in general, which indicates the robustness when a slightly larger K has been used. Third, when the number of factors is under-estimated, corresponding to K = 1, 2, all the estimators perform badly, which demonstrates the danger of missing any common factors. Therefore, over-estimating the number of factors, while still maintaining a satisfactory estimation accuracy of the covariance matrices, is much better than under-estimating. The resulting bias caused by under-estimation is more severe than the additional variance introduced by over-estimation. Finally, estimating Σ, the covariance of yt, does not achieve a good accuracy even when K = K0 in the absolute term ||Σ̂ − Σ||, but the relative error ||Σ−1/2 Σ̂KΣ−1/2 − Ip|| is much smaller. This is consistent with our discussions in Section 3.3.

6.5 Comparisons with Other Methods

6.5.1 Comparison with related methods

We compare POET with related methods that address low-rank plus sparse covariance estimation, specifically, LOREC proposed by Luo (2012), the strict factor model (SFM) by Fan, Fan and Lv (2008), the Dual Method (Dual) by Lin et al. (2009), and finally, the singular value thresholding (SVT) by Cai, Candès and Shen (2008). In particular, SFM is a special case of POET which employs a large threshold that forces Σ̂u to be diagonal even when the true Σu might not be. Note that Dual, SVT and many others dealing with low-rank plus sparse, such as Candès et al. (2011) and Wright et al. (2009), assume a known Σ and focus on recovering the decomposition. Hence they do not estimate Σ or its inverse, but decompose the sample covariance into two components. The resulting sparse component may not be positive definite, which can lead to large estimation errors for and Σ̂−1.

Data are generated from the same setup as Design 2 in Section 6.4. Table 4 reports the averaged estimation error of the four comparing methods, calculated based on 50 replications for each simulation. Dual and SVT assume the data matrix has a low-rank plus sparse representation, which is not the case for the sample covariance matrix (though the population Σ has such a representation). The tuning parameters for POET, LOREC, Dual and SVT are chosen to achieve the best performance for each method.8

Table 4.

Method Comparison under spectral norm for T = 100. RelE represents the relative error ||Σ−1/2Σ̂Σ−1/2 − Ip||

| Σ̂u |

|

RelE | Σ̂−1 | Σ̂ | |||

|---|---|---|---|---|---|---|---|

| p = 100 | POET | 1.624 | 1.336 | 2.080 | 1.309 | 29.107 | |

| LOREC | 2.274 | 1.880 | 2.564 | 1.511 | 32.365 | ||

| SFM | 2.084 | 2.039 | 2.707 | 2.022 | 34.949 | ||

| Dual | 2.306 | 5.654 | 2.707 | 4.674 | 29.000 | ||

| SVT | 2.59 | 13.64 | 2.806 | 103.1 | 29.670 | ||

|

| |||||||

| p = 200 | POET | 1.641 | 1.358 | 3.295 | 1.346 | 58.769 | |

| LOREC | 2.179 | 1.767 | 3.874 | 1.543 | 62.731 | ||

| SFM | 2.098 | 2.071 | 3.758 | 2.065 | 60.905 | ||

| Dual | 2.41 | 6.554 | 4.541 | 5.813 | 56.264 | ||

| SVT | 2.930 | 362.5 | 4.680 | 47.21 | 63.670 | ||

|

| |||||||

| p = 300 | POET | 1.662 | 1.394 | 4.337 | 1.395 | 65.392 | |

| LOREC | 2.364 | 1.635 | 4.909 | 1.742 | 91.618 | ||

| SFM | 2.091 | 2.064 | 4.874 | 2.061 | 88.852 | ||

| Dual | 2.475 | 2.602 | 6.190 | 2.234 | 74.059 | ||

| SVT | 2.681 | > 103 | 6.247 | > 103 | 80.954 | ||

6.5.2 Comparison with direct thresholding

This section compares POET with direct thresholding on the sample covariance matrix without taking out common factors (Rothman et al. 2009, Cai and Liu 2011. We denote this method by THR). We also run simulations to demonstrate the finite sample performance when Σ itself is sparse and has bounded eigenvalues, corresponding to the case K = 0. Three models are considered and both POET and THR use the soft thresholding. We fix T = 200. Reported results are the average of 100 replications.

Model 1: one-factor

The factors and loadings are independently generated from N(0, 1). The error covariance is the same banded matrix as Design 2 in Section 6.4. Here Σ has one diverging eigenvalue.

Model 2: sparse covariance

Set K = 0, hence Σ = Σu itself is a banded matrix with bounded eigenvalues.

Model 3: cross-sectional AR(1)

Set K = 0, but Σ = Σu = (0.85|i−j|)p×p. Now Σ is no longer sparse (or banded), but is not too dense either since Σij decreases to zero exponentially fast as |i − j| → ∞. This is the correlation matrix if follows a cross-sectional AR(1) process: yit = 0.85yi−1,t + εit.

For each model, POET uses an estimated K̂ based on IC1 of Bai and Ng (2002), while THR thresholds the sample covariance directly. We find that in Model 1, POET performs significantly better than THR as the latter misses the common factor. For Model 2, IC1 estimates K̂ = 0 precisely in each replication, and hence POET is identical to THR. For Model 3, POET still outperforms. The results are summarized in Table 5.

Table 5.

Method Comparison. T = 200

| ||Σ̂ − Σ|| | ||Σ̂−1 − Σ−1|| | K̂ | ||||

|---|---|---|---|---|---|---|

| POET | THR | POET | THR | |||

| p = 200 | Model 1 | 26.20 | 240.18 | 1.31 | 2.67 | 1 |

| Model 2 | 2.04 | 2.04 | 2.07 | 2.07 | 0 | |

| Model 3 | 7.73 | 11.24 | 8.48 | 11.40 | 6.2 | |

|

| ||||||

| p = 300 | Model 1 | 32.60 | 314.43 | 2.18 | 2.58 | 1 |

| Model 2 | 2.03 | 2.03 | 2.08 | 2.08 | 0 | |

| Model 3 | 9.41 | 11.29 | 8.81 | 11.41 | 5.45 | |

The reported numbers are the averages based on 100 replications.

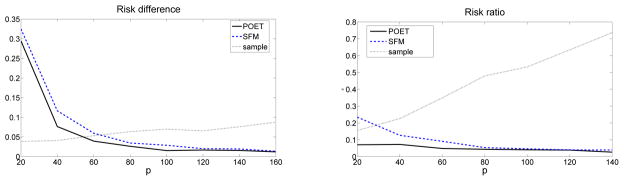

6.6 Simulated portfolio allocation

We demonstrate the improvement of our method compared to the sample covariance and that based on the strict factor model (SFM), in a problem of portfolio allocation for risk minimization purposes.

Let Σ̂ be a generic estimator of the covariance matrix of the return vector yt, and w be the allocation vector of a portfolio consisting of the corresponding p financial securities. Then the theoretical and the empirical risk of the given portfolio are R(w) = w′Σw and R̂ (w) = w′Σ̂w, respectively. Now, define

the estimated (minimum variance) portfolio. Then the actual risk of the estimated portfolio is defined as R(ŵ) = ŵ′Σŵ, and the estimated risk (also called empirical risk) is equal to R̂ (ŵ) = ŵ′Σ̂ŵ. In practice, the actual risk is unknown, and only the empirical risk can be calculated.

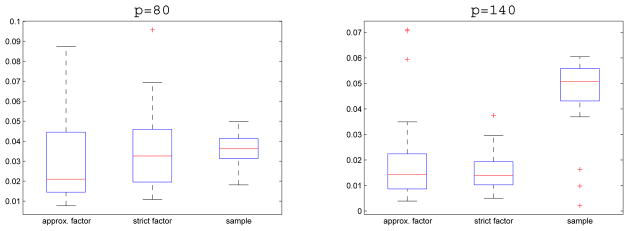

For each fixed p, the population Σ was generated in the same way as described in Section 6.1, with a sparse but not diagonal error covariance. We use three different methods to estimate Σ and obtain ŵ: strict factor model Σ̂diag (estimate Σu using a diagonal matrix), our POET estimator Σ̂POET, both are with unknown factors, and sample covariance Σ̂Sam. We then calculate the corresponding actual and empirical risks.

It is interesting to examine the accuracy and the performance of the actual risk of our portfolio ŵ in comparison to the oracle risk R* = minw′1=1 w′Σw, which is the theoretical risk of the portfolio we would have created if we knew the true covariance matrix Σ. We thus compare the regret R(ŵ) − R*, which is always nonnegative, for three estimators of Σ̂. They are summarized by using the box plots over the 200 simulations. The results are reported in Figure 7. In practice, we are also concerned about the difference between the actual and empirical risk of the chosen portfolio ŵ. Hence, in Figure 8, we also compare the average estimation error |R(ŵ) − R̂ (ŵ)| and the average relative estimation error |R̂ (ŵ)/R(ŵ) − 1| over 200 simulations. When ŵ is obtained based on the strict factor model, both differences - between actual and oracle risk, and between actual and empirical risk, are persistently greater than the corresponding differences for the approximate factor estimator. Also, in terms of the relative estimation error, the factor model based method is negligible, where as the sample covariance does not process such a property.

Figure 7.

Box plots of regrets R(ŵ) − R* for p = 80 and 140. In each panel, the box plots from left to right correspond to ŵ obtained using Σ̂ based on approximate factor model, strict factor model, and sample covariance, respectively.

Figure 8.

Estimation errors for risk assessments as a function of the portfolio size p. Left panel plots the average absolute error |R(ŵ) − R̂ (ŵ)| and right panel depicts the average relative error |R̂ (ŵ)/R(ŵ) − 1|. Here, ŵ and R̂ are obtained based on three estimators of Σ̂.

7 Real Data Example

We demonstrate the sparsity of the approximate factor model on real data, and present the improvement of the POET estimator over the strict factor model (SFM) in a real-world application of portfolio allocation.

7.1 Sarsity of Idiosyncratic Errors

The data were obtained from the CRSP (The Center for Research in Security Prices) database, and consists of p = 50 stocks and their annualized daily returns for the period January 1st, 2010-December 31st, 2010 (T = 252). The stocks are chosen from 5 different industry sectors, (more specifically, Consumer Goods-Textile & Apparel Clothing, Financial-Credit Services, Healthcare-Hospitals, Services-Restaurants, Utilities-Water utilities), with 10 stocks from each sector. We made this selection to demonstrate a block diagonal trend in the sparsity. More specifically, we show that the non-zero elements are clustered mainly within companies in the same industry. We also notice that these are the same groups that show predominantly positive correlation.

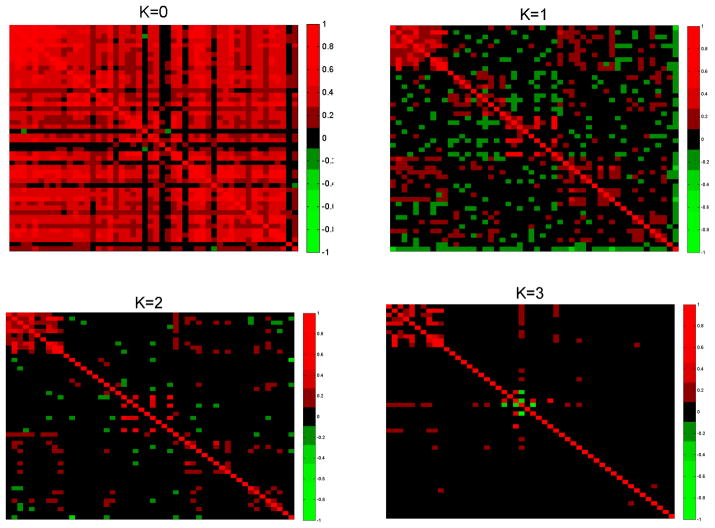

The largest eigenvalues of the sample covariance equal 0.0102, 0.0045 and 0.0039, while the rest are bounded by 0.0020. Hence K = 0, 1, 2, 3 are the possible values of the number of factors. Figure 9 shows the heatmap of the thresholded error correlation matrix (for simplicity, we applied hard thresholding). The threshold has been chosen using the cross validation as described in Section 4. We compare the level of sparsity (percentage of non-zero off-diagonal elements) for the 5 diagonal blocks of size 10 × 10, versus the sparsity of the rest of the matrix. For K = 2, our method results in 25.8% non-zero off-diagonal elements in the 5 diagonal blocks, as opposed to 7.3% non-zero elements in the rest of the covariance matrix. Note that, out of the non-zero elements in the central 5 blocks, 100% are positive, as opposed to a distribution of 60.3% positive and 39.7% negative amongst the non-zero elements in off-diagonal blocks. There is a strong positive correlation between the returns of companies in the same industry after the common factors are taken out, and the thresholding has preserved them. The results for K = 1, 2 and 3 show the same characteristics. These provide stark evidence that the strict factor model is not appropriate.

Figure 9.

Heatmap of thresholded error correlation matrix for number of factors K = 0, K = 1, K = 2 and K = 3.

7.2 Portfolio Allocation

We extend our data size by including larger industrial portfolios (p = 100), and longer period (ten years): January 1st, 2000 to December 31st, 2010 of annualized daily excess returns. Two portfolios are created at the beginning of each month, based on two different covariance estimates through approximate and strict factor models with unknown factors. At the end of each month, we compare the risks of both portfolios.

The number of factors is determined using the penalty function proposed by Bai and Ng (2002), as defined in (2.14). For calibration, we use the last 100 consecutive business days of the above data, and both IC1 and IC2 give K̂ = 3. On the 1st of each month, we estimate Σ̂diag (SFM) and Σ̂K̂ (POET with soft thresholding) using the historical data of excess daily returns for the proceeding 12 months (T = 252). The value of the threshold is determined using the cross-validation procedure. We minimize the empirical risk of both portfolios to obtain the two respective optimal portfolio allocations ŵ = ŵ1 and ŵ2 (based on Σ̂ = Σ̂diag and Σ̂K̂): ŵ = arg minw̃′1=1 w′Σ̂w. At the end of the month (21 trading days), their actual risks are compared, calculated by

We can see from Figure 10 that the minimum-risk portfolio created by the POET estimator performs significantly better, achieving lower variance 76% of the time. Amongst those months, the risk is decreased by 48.63%. On the other hand, during the months that POET produces a higher-risk portfolio, the risk is increased by only 17.66%.

Figure 10.

Risk of portfolios created with POET and SFM (strict factor model)

Next, we demonstrate the impact of the choice of number of factors and threshold on the performance of POET. If cross-validation seems computationally expensive, we can choose a common soft-threshold throughout the whole investment process. The average constant in the cross-validation was 0.53, close to our suggested constant 0.5 used for simulation. We also present the results based on various choices of constant C = 0.5, 0.75, 1 and 1.25, with soft threshold . The results are summarized in Table 6. The performance of POET seems consistent across different choices of these parameters.

Table 6.

Comparisons of the risks of portfolios using POET and SFM: The first number is proportion of the time POET outperforms and the second number is percentage of average risk improvements. C represents the constant in the threshold.

| C | K̂ = 1 | K̂ = 2 | K̂ = 3 |

|---|---|---|---|

| 0.25 | 0.58/29.6% | 0.68/38% | 0.71/33% |

| 0.5 | 0.66/31.7% | 0.70/38.2% | 0.75/33.5% |

| 0.75 | 0.68/29.3% | 0.70/29.6% | 0.71/25.1% |

| 1 | 0.66/20.7% | 0.62/19.4% | 0.69/18% |

8 Conclusion and Discussion

We study the problem of estimating a high-dimensional covariance matrix with conditional sparsity. Realizing unconditional sparsity assumption is inappropriate in many applications, we introduce a latent factor model that has a conditional sparsity feature, and propose the POET estimator to take advantage of the structure. This expands considerably the scope of the model based on the strict factor model, which assumes independent idiosyncratic noise and is too restrictive in practice. By assuming sparse error covariance matrix, we allow for the presence of the cross-sectional correlation even after taking out the common factors. The sparse covariance is estimated by the adaptive thresholding technique.

It is found that the rates of convergence of the estimators have an extra term approximately Op(p−1/2) in addition to the results based on observable factors by Fan et al. (2008, 2011), which arises from the effect of estimating the unobservable factors. As we can see, this effect vanishes as the dimensionality increases, as more information about the common factors becomes available. When p gets large enough, the effect of estimating the unknown factors is negligible, and we estimate the covariance matrices as if we knew the factors.

The proposed POET also has wide applicability in statistical genomics. For example, Carvalho et al. (2008) applied a Bayesian sparse factor model to study the breast cancer hormonal pathways. Their real-data results have identified about two common factors that have highly loaded genes (about half of 250 genes). As a result, these factors should be treated as “pervasive” (see the explanation in Example 2.1), which will result in one or two very spiked eigenvalues of the gene expressions’ covariance matrix. The POET can be applied to estimate such a covariance matrix and its network model.

Acknowledgments

The research was partially supported by NIH R01GM100474-01, NIH R01-GM072611, DMS-0704337, and Bendheim Center for Finance at Princeton University.

APPENDIX

A Estimating a sparse covariance with contaminated data

We estimate Σu by applying the adaptive thresholding given by (2.11). However, the task here is slightly different from the standard problem of estimating a sparse covariance matrix in the literature, as no direct observations for are available. In many cases the original data are contaminated, including any type of estimate of the data when direct observations are not available. This typically happens when represent the error terms in regression models or when data is subject to measurement of errors. Instead, we may observe . For instance, in the approximate factor models, .

We can estimate Σu using the adaptive thresholding proposed by Cai and Liu (2011): for the threshold , define

| (A.1) |

where sij(.) satisfies: for all z ∈ ℝ, sij(z) = 0, when |z| ≤ τij; |sij(z) − z| ≤ τij.

When is close enough to , we can show that is also consistent. The following theorem extends the standard thresholding results in Bickel and Levina (2008) and Cai and Liu (2011) to the case when no direct observations are available, or the original data are contaminated. For the tail and mixing parameters r1 and r3 defined in Assumptions 3.2 and 3.3, let .

Theorem A.1

Suppose (log p)6α = o(T), and Assumptions 3.2 and 3.3 hold. In addition, suppose there is a sequence aT = o(1) so that , and maxi≤p,t≤T |uit − ûit| = op(1); Then there is a constant C > 0 in the adaptive thresholding estimator (A.1) with

such that

If further ωT mp = o(1), then is invertible with probability approaching one, and

Proof

By Assumptions 3.2 and 3.3, the conditions of Lemmas A.3 and A.4 of Fan, Liao and Mincheva (2011, Ann. Statist, 39, 3320–3356) are satisfied. Hence for any ε > 0, there are positive constants M, θ1 and θ2 such that each of the events

occurs with probability at least 1 − ε. By the condition of threshold function, . Now for , under the event A1 ∩ A2,

Let M1 = (Cθ1+M)(M−q+(Cθ1+M)−q). Then with probability at least 1 − 2ε, . Since ε is arbitrary, we have . If in addition, ωT mp = o(1), then the minimum eigenvalue of is bounded away from zero with probability approaching one since λmin(Σu) > c1. This then implies .

B Proofs for Section 2

We first cite two useful theorems, which are needed to prove propositions 2.1 and 2.2. In Lemma B.1 below, let be the eigenvalues of Σ in descending order and be their associated eigenvectors. Correspondingly, let be the eigenvalues of Σ̂ in descending order and be their associated eigenvectors.

Lemma B.1

(Weyl’s Theorem) |λ̂i − λi| ≤ ||Σ̂ − Σ||.

- (sin θ Theorem, Davis and Kahan, 1970)

Proof of Proposition 2.1

Proof

Since are the eigenvalue of Σ and are the first K eigenvalues of BB′ (the remaining p − K eigenvalues are zero), then by the Weyl’s theorem, for each j ≤ K,

For j > K, |λj| = |λj − 0| ≤ ||Σu||. On the other hand, the first K eigenvalues of BB are also the eigenvalues of B′B. By the assumption, the eigenvalues of p−1B′B are bounded away from zero. Thus when j ≤ K, ||b̃j||2/p are bounded away from zero for all large p.

Proof of Proposition 2.2

Proof

Applying the sin θ theorem yields

For a generic constant c > 0, |λj−1 − ||b̃j||2| ≥ |||b̃j−1||2 − ||b̃j||2| − |λj−1 − ||b̃j−1||2| ≥ cp for all large p, since |||b̃j−1||2 − ||b̃j||2| ≥ cp but |λj−1 − ||b̃j−1||2| is bounded by Prosposition 2.1. On the other hand, if j < K, the same argument implies |||b̃j||2 − λj+1| ≥ cp. If j = K, |||b̃j||2 − λj+1| = p|||b̃K||2/p − λK+1/p|, where ||b̃K||2/p is bounded away from zero, but λK+1/p = O(p−1). Hence again, |||b̃j||2 − λj+1| ≥ cp.

Proof of Theorem 2.1

Proof

The sample covariance matrix of the residuals using least squares method is given by . where we used the normalization condition F̂′F̂ = TIK and Λ̂ = YF̂/T. If we show that , then from the decompositions of the sample covariance

we have R̂ = Σ̂u. Consequently, applying thresholding on Σ̂u is equivalent to applying thresholding on R̂, which gives the desired result.

We now show indeed holds. Consider again the least squares problem (2.8) but with the following alternative normalization constraints: , and is diagonal. Let (Λ̃, F̃) be the solution to the new optimization problem. Switching the roles of B and F, then the solution of (2.10) is Λ̃ = (ξ̂1, · · ·, ξ̂K) and F̃ = p−1Y′Λ̃. In addition, T−1F̃′F̃= diag(λ̂1, · · ·, λ̂K). From Λ̂F̂′ = Λ̃F̃′, it follows that .

C Proofs for Section 3

We will proceed by subsequently showing Theorems 3.3, 3.1 and 3.2.

C.1 Preliminary lemmas

The following results are to be used subsequently. The proofs of Lemmas C.1, C.2 and C.3 are found in Fan, Liao and Mincheva (2011).

Lemma C.1

Suppose A, B are symmetric semi-positive definite matrices, and λmin(B) > cT for a sequence cT > 0. If ||A − B|| = op(cT ), then λmin(A) > cT/2, and

Lemma C.2