Abstract

We investigated the effects of listeners' head movements and proprioceptive feedback during sound localization practice on the subsequent accuracy of sound localization performance. The effects were examined under both restricted and unrestricted head movement conditions in the practice stage. In both cases, the participants were divided into two groups: a feedback group performed a sound localization drill with accurate proprioceptive feedback; a control group conducted it without the feedback. Results showed that (1) sound localization practice, while allowing for free head movement, led to improvement in sound localization performance and decreased actual angular errors along the horizontal plane, and that (2) proprioceptive feedback during practice decreased actual angular errors in the vertical plane. Our findings suggest that unrestricted head movement and proprioceptive feedback during sound localization training enhance perceptual motor learning by enabling listeners to use variable auditory cues and proprioceptive information.

Keywords: head movement, proprioceptive feedback, training of sound localization, binaural differences, spectral shape cues, perceptual motor learning

1. Introduction

Human sound localization in the horizontal plane (azimuth) is based mainly on the evaluation of interaural differences in the sound level and time of arrival (Blauert, 1997; Middlebrooks & Green, 1991). On the other hand, spectral shape cues generated by the head and pinnae play important roles in localizing acoustic objects along the vertical plane (elevation) (Blauert, 1970, 1997; Hofman & van Opstal, 1998; Kulkarni & Colburn, 1998; Langendijk & Bronkhorst, 2002; Shaw, 1966). Recently, Aytekin, Moss, and Simon (2008) pointed out in a review article that sound localization is not a purely acoustic phenomenon: it is a combination of multisensory information processing, experience-dependent plasticity, and movement. Here, we investigated the effects of head movement and proprioceptive feedback on the improvement of sound localization.

When we listen to a sound, we sometimes move our head, consciously or unconsciously, to localize it (Wallach, 1939). A listener's head movements are known to be important for identifying the direction of incidence of a sound. For example, Thurlow and Runge (1967) revealed that a blindfolded person's head rotation is especially effective at reducing horizontal localization error for both high-frequency and low-frequency noise, as well as click stimuli. In addition, Perrett and Noble (1997) demonstrated that a moving auditory system generates information that can indicate sound source positions, even when the movement does not result in the listener facing the source. More recently, results of sound localization experiments using an acoustical telepresence robot demonstrated that head movement improves horizontal-plane sound localization performance even when the shape of the dummy head differs from that of the user's head (Toshima, Aoki, & Hirahara, 2008).

Similar findings related to the benefits of head movements have also been observed in animal experiments. For example, Populin (2006) reported that monkeys were able to localize sounds more accurately and with less variation when they were allowed free head movement, compared with the results obtained when using a head restraint. Furthermore, Tollin, Populin, Moore, Ruhland, and Yin (2005) investigated the sound localization performance of cats that had been trained to indicate the location of auditory and visual targets via eye position, using operant conditioning with restrained and unrestrained head movements. Results revealed that sound localization performance was substantially worse in the head-restrained condition relative to that in the head-unrestrained condition (Tollin et al., 2005). Consequently, these findings suggest that a listener's head movements are a crucially important method used for achieving correct sound localization. Furthermore, these findings underscore that binaural time and level disparity cues from a listener's head movements are important for accurate horizontal sound localization.

When learning to localize sounds accurately, we might obtain positional and spatial information not only from active head movements but also from sensory feedback (such as vision, audition, or proprioception). Perceptual learning refers to performance changes brought about through practice or experience, which improves an organism's ability to respond to its environment (Goldstone, 1998; Hawkey, Amitay, & Moore, 2004). It is possible that perceptual learning is similar in several respects to procedural learning, but procedural learning refers to an improvement in performance at a task arising from learning its response demands (Hawkey et al., 2004). King (1999) pointed out that previous studies of sound localization conducted on both humans and animals provide evidence for perceptual learning, i.e., improved performance with practice. In this review article, King (1999) reported that the plasticity of auditory localization in adults can result from changes in the activity patterns of sensory inputs or as a consequence of behavioral training, such as perceptual learning.

In fact, several studies have demonstrated that visual systems can train and calibrate the acoustic localization process by providing accurate spatial feedback. For instance, Hofman, van Riswick, and van Opstal (1998) investigated spatial calibration in the adult human auditory system. Their study assessed the accuracy of sound localization in four adult subjects who had custom molds fitted into their conchae for up to 6 weeks. Subjects received no specific localization training during this period. Results demonstrated that localization of sound elevation was dramatically degraded immediately after the modification, but accurate performance was steadily reacquired thereafter. In addition, results revealed that learning the new spectral cues did not interfere with the neural representation of the original cues because subjects were able to localize sounds with both normal and modified ears. These findings indicated that the adult human auditory system can adjust considerably well in response to altered spectral cues after several weeks of passive adaptation. Afterward, they considered the adaptive capability of the human auditory localization system to be contingent on the availability of a sufficiently rich set of spectral cues, as well as on visual feedback related to actual performance in daily life (Hofman et al., 1998).

More recent studies have specifically examined the effects of active feedback on adaptation of the auditory system. For example, Zahorik, Bangayan, Sundareswaran, Wang, and Tam (2006) attempted to determine if controlled multimodal feedback related to the correct sound source location engenders rapid improvements in sound localization accuracy, presumably through a process of perceptual recalibration, when spectral cues are inappropriate. In this study, they examined the efficacy of a sound localization training procedure that provided listeners with auditory, visual, and proprioceptive/vestibular feedback related to the correct sound source position. Spectral cues were modified through the use of a virtual auditory display that used non-individualized head-related transfer functions (HRTFs) instead of the ear-mold manipulation used by Hofman et al. (1998). Results demonstrated that a brief perceptual training procedure (two 30-min sessions) that provides listeners with auditory, visual, and proprioceptive feedback related to true target locations can improve the localization accuracy for stimulus conditions in which a mismatch in spectral cues to the source direction exists. Additionally, they reported that training improvements appear to last for at least four months (Zahorik et al., 2006).

These findings from results of previous studies suggest that sound localization learning with accurate feedback plays an important role in perceptual recalibration of the human auditory system. However, few studies have examined the effects of a listener's proprioceptive feedback on the improvement of sound localization accuracy. For instance, Blum, Katz, and Warusfel (2004) reported that providing proprioceptive information to blindfolded listeners with normal vision can facilitate recalibration to altered spectral cues effectively. Furthermore, Parseihian & Katz (2012) revealed that rapid perceptual adaptation to non-individual HRTF through an audio proprioceptive and vestibular feedback is possible. In this study, they found that participants using non-individualized HRTFs can cut down localization errors in elevation by 10° after three 12-min sessions of perceptual adaptation. These findings indicate that proprioceptive feedback has beneficial effects on sound localization training. However, it remains unclear whether restrictions to the listener's head movement have any impact on the proprioceptive feedback effects on sound localization improvement.

Therefore, this study investigated the separate and combined effects of both head movement and proprioceptive feedback during sound localization training on the accuracy of sound localization afterward. The findings of this study might provide some insight into how varied auditory cues and proprioceptive information contribute to perceptual motor learning.

2. Methods

2.1. Participants

Thirty-two listeners (16 women and 16 men) participated in this experiment. All participants were undergraduate or graduate students of Tohoku University (mean age = 22.06 years, SD = 1.50). They were naïve to the purpose of the experiment. All participants reported normal hearing and normal or corrected-to-normal vision. Written informed consent was obtained from each participant before participation in the study.

2.2. Apparatus and stimuli

All tasks were conducted in a soundproof room with a speaker array of 1.2-m radius comprising 36 loudspeakers. The loudspeakers were positioned at 30° azimuth intervals (0°, ±30°, ±60°, ±90°, ±120°, ±150°, and 180° on either side of the sagittal plane) at each of three elevations (0° and ±30°). The speaker array frame was covered with sound-absorbing material. The blindfolded participant sat on a chair placed in the center of the speaker array. The experimenter adjusted the chair height so that the participant's ear level was at a height of 140 cm (0° elevation on the speaker array).
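As a minimal sketch of the array geometry described above, the loudspeaker positions can be enumerated as azimuth and elevation pairs. The variable names and the convention of writing the rear position as +180° are illustrative assumptions; only the angles and the total of 36 loudspeakers come from the text.

```python
from itertools import product

# Azimuths at 30-degree steps; -150..180 gives 12 distinct directions.
# (Representing the rear speaker as +180 is an assumed convention.)
AZIMUTHS_DEG = list(range(-150, 181, 30))

# Three elevation rings, as stated in the text.
ELEVATIONS_DEG = [-30, 0, 30]

# Every (azimuth, elevation) combination is one loudspeaker position.
SPEAKERS = list(product(AZIMUTHS_DEG, ELEVATIONS_DEG))

print(len(AZIMUTHS_DEG), len(ELEVATIONS_DEG), len(SPEAKERS))
```

Twelve azimuths at three elevations account for the 36 loudspeakers reported.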

The target sound consisted of an AM triangle wave (200 Hz) modulated with a 25-Hz sinusoidal envelope, with a sampling frequency of 32 kHz. The A-weighted sound pressure level (A-weighted SPL) of each stimulus was adjusted to 70 dB at the center of the head. The duration of the stimulus was one second. The target stimulus was delivered through a randomly selected loudspeaker.
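The stimulus described above can be approximated with a short synthesis sketch. The carrier frequency, envelope frequency, sampling rate, and duration are taken from the text; the modulation depth (assumed here to be 100%), the starting phases, and the function names are assumptions, and level calibration to 70 dB is not modeled.

```python
import math

SAMPLE_RATE_HZ = 32_000   # sampling frequency from the text
CARRIER_HZ = 200.0        # triangle-wave frequency from the text
ENVELOPE_HZ = 25.0        # sinusoidal envelope frequency from the text
DURATION_S = 1.0          # stimulus duration from the text


def triangle(phase_cycles):
    """Unit-amplitude triangle wave; the phase is given in cycles."""
    frac = phase_cycles - math.floor(phase_cycles + 0.5)  # in [-0.5, 0.5)
    return 2.0 * abs(2.0 * frac) - 1.0


def make_target_sound(depth=1.0):
    """Return samples in [-1, 1]; `depth` is an assumed modulation depth."""
    n = int(SAMPLE_RATE_HZ * DURATION_S)
    samples = []
    for i in range(n):
        t = i / SAMPLE_RATE_HZ
        # Sinusoidal envelope scaled so that its peak value is 1.
        envelope = (1.0 + depth * math.sin(2.0 * math.pi * ENVELOPE_HZ * t)) / (1.0 + depth)
        samples.append(envelope * triangle(CARRIER_HZ * t))
    return samples


samples = make_target_sound()
```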

The background sound was presented to the participant using four loudspeakers that were spaced equally outside of the speaker array during the tasks. We used background noise with the intention of masking any noise that might derive from the experimenter. The experimenter checked and recorded the participants' performance near the speaker array to prevent blindfolded participants from falling off the chair. The background sound was pink noise. The noise level was maintained at 60 dB at the center of the head.

Honda et al. (2007) investigated human sound localization performance using the same speaker array system. In their study, the average correct rate for initial sound localization tasks was 47% using the same sounds (Honda et al., 2007). This result indicates that the sound localization tasks were neither unreasonably difficult nor trivial. For this reason, we decided to use the same loudspeaker array as used by Honda et al. (2007).

2.3. Procedure

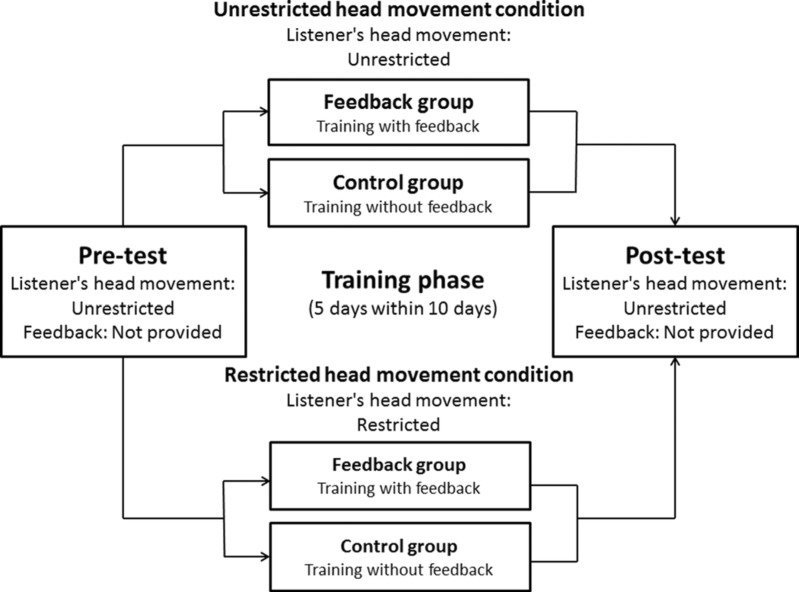

All participants performed all tasks blindfolded. They performed the sound localization task on the first day (pre-test) and were asked to do the same task 10 days later (post-test), after five practice sessions. A schematic illustration of the experimental design is presented in Figure 1.

Figure 1.

Schematic illustration of the experimental design used. All participants performed all tasks blindfolded. In the pre-test and post-test, participants performed sound localization tasks divided into two sessions of 36 trials each. In the training phases, participants were divided into two groups of either restricted or unrestricted head movement. In addition, both groups were further divided into two subgroups. The feedback group performed sound localization training with accurate proprioceptive feedback for five days within a 10-day period; the control group did the training without feedback. During the training phases, all participants were asked to conduct sound localization tasks of 36 trials.

In the pre-test, a short rehearsal was conducted for the participants to adapt to the blindfold and the experiment procedure. The pre-test consisted of 72 trials. After the pre-test, all participants were divided into two groups, with restricted or unrestricted head movement for sound localization practice. Both groups were further divided into two subgroups. A feedback group performed sound localization training with accurate proprioceptive feedback for five days within a 10-day period. Meanwhile, a control group conducted the training without feedback. During the training phases, all participants were asked to conduct 36 trials of a sound localization task. Finally, a post-test was conducted to evaluate changes in the sound localization performance of the participants. The post-test consisted of 72 trials. In all cases, participants were informed that the target sound was delivered through a randomly selected loudspeaker.

All participants were asked to enter the loudspeaker array and maintain the head at a specific position (facing a loudspeaker positioned at an azimuth angle of 0° and elevation of 0°) before the target sound onset. The participants in the unrestricted head movement groups were allowed to change their head posture freely after the target sound onset. In contrast, the participants in the restricted head movement groups were asked not to move the head even after the target sound onset. In all tasks, the participants were asked to identify the active loudspeaker position by touching it with a rod (100 cm long, 60 g) after the sound offset. The experimenter asked each participant to pinpoint another direction when they localized a position between the loudspeakers (e.g., speaker array frame).

During the training phase, the feedback group was informed orally whether their sound localization was correct or incorrect at each trial. Furthermore, an experimenter led their mislocated rod to the correct loudspeaker position when their localization was inaccurate. In contrast, the control group received no feedback during the training phases. The experimenter, who was near the loudspeakers, confirmed that all the participants followed the instructions related to head movement during each trial. In this study, we conducted all tasks for the unrestricted head movement condition first. Afterward, we carried out all tasks for the restricted head movement groups.

3. Results

In this study, all participants showed average sound localization performance during the pre-test (see the Appendix). To obtain an insight into the overall effects of head movement and proprioceptive feedback during training, we analyzed the proportion of correct sound localization and the actual angular errors in the vertical and horizontal planes. The actual angular errors were calculated as the difference between the localized position and the target position.
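The two measures can be illustrated with a small sketch. It assumes that each response and target is recorded as an (azimuth, elevation) pair in degrees, that errors are taken as absolute differences, and that azimuth differences are wrapped so that they never exceed 180°. The text does not specify these details, and the function names are hypothetical.

```python
def horizontal_error_deg(response_az, target_az):
    """Absolute azimuth difference, wrapped into the range 0..180 degrees."""
    diff = (response_az - target_az + 180.0) % 360.0 - 180.0
    return abs(diff)


def vertical_error_deg(response_el, target_el):
    """Absolute elevation difference in degrees."""
    return abs(response_el - target_el)


def summarize(trials):
    """trials: list of ((resp_az, resp_el), (target_az, target_el)) pairs."""
    n = len(trials)
    correct = sum(1 for resp, target in trials if resp == target)
    mean_h = sum(horizontal_error_deg(r[0], t[0]) for r, t in trials) / n
    mean_v = sum(vertical_error_deg(r[1], t[1]) for r, t in trials) / n
    return correct / n, mean_h, mean_v


# Hypothetical trials, for illustration only (not data from the study).
example_trials = [
    ((0, 0), (0, 0)),        # correct response
    ((30, 0), (0, 0)),       # 30 degrees off in azimuth
    ((180, 30), (-150, 0)),  # wraps across the rear: 30 degrees in each plane
]
rate, mean_h, mean_v = summarize(example_trials)
```

The wrap-around step matters for rear targets: a response at 180° to a target at -150° is counted as a 30° error rather than 330°.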

3.1. Sound localization performance during the training phase

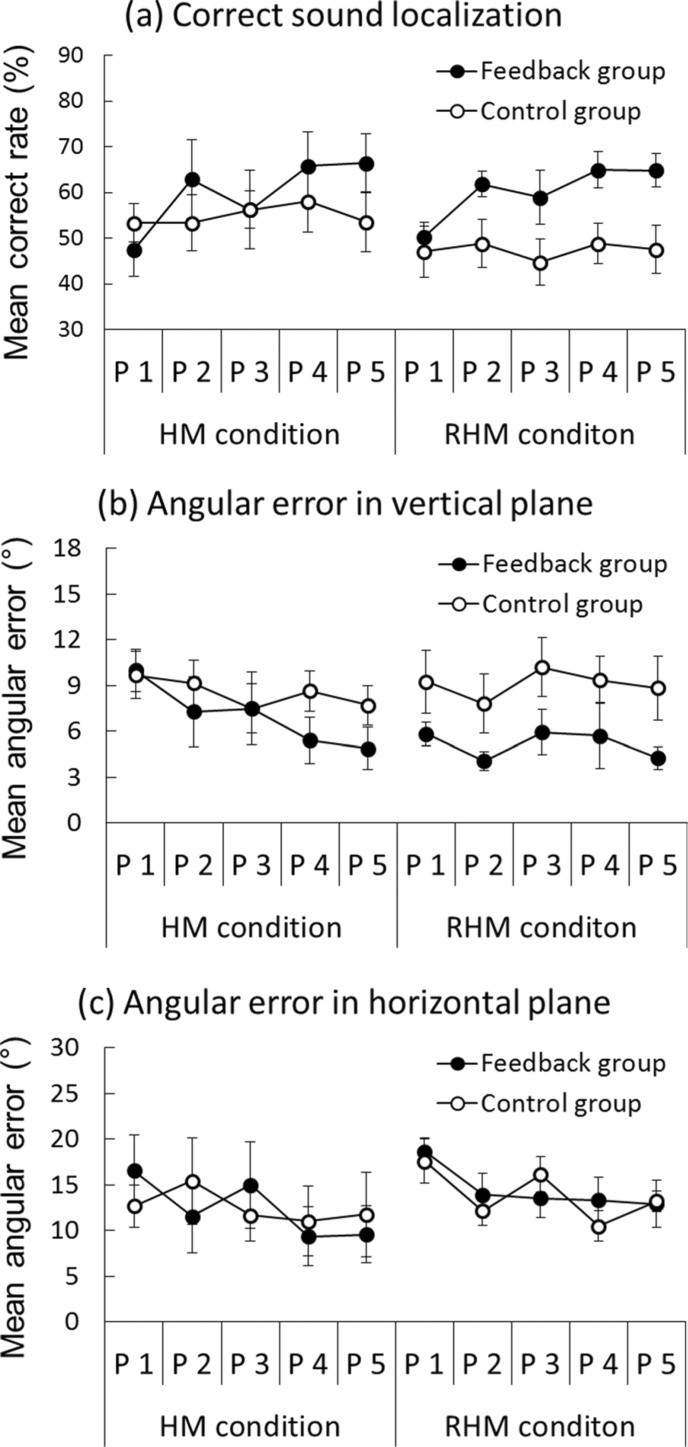

A three-way analysis of variance (ANOVA) of the correct response rate was performed, considering the condition (restricted or unrestricted head movement), the group (feedback, control), and the training phase (1, 2, 3, 4, 5) as factors (see Figure 2a). The interaction between the group and the training phase was significant [F (4, 112) = 4.58, p < 0.01]. The simple main effect of the training phase was found on the feedback group [F (4, 112) = 11.18, p < 0.001]. Post-hoc analyses (Ryan's) revealed that the feedback group's correct rate in phase 1 was lower than in the other phases (all p < 0.01). Simple main effects of the group were found for phase 4 [F (1, 140) = 4.33, p < 0.05] and phase 5 [F (1, 140) = 6.97, p < 0.01]. The feedback group (M = 65% in phase 4 and M = 66% in phase 5) showed higher correct rates than those of the control group (M = 54% in phase 4 and M = 51% in phase 5). The main effect of the training phase was also significant [F (4, 112) = 6.99, p < 0.001]. Although the feedback group value was slightly better than that of the control group (60% vs. 51%), no significant effect was found for the group difference [F (1, 28) = 3.03, p = 0.09].
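The degrees of freedom reported here follow from the design, which the sketch below reconstructs. It assumes a mixed design with two between-participant factors (condition and group, two levels each) and one within-participant factor, with 32 participants in total. The function name is hypothetical, and the pooled error term is one common convention for simple main effects of a between-participant factor that appears consistent with the reported (1, 140); the text does not state which error term was used.

```python
def mixed_anova_df(n_participants, between_levels, within_levels):
    """Error and effect degrees of freedom for a mixed factorial design."""
    cells = 1
    for k in between_levels:
        cells *= k  # number of between-participant cells
    between_error = n_participants - cells
    within_effect = within_levels - 1
    within_error = within_effect * between_error
    return {
        "between_error": between_error,
        "within_effect": within_effect,
        "within_error": within_error,
        # Assumed convention: between and within error terms pooled.
        "pooled_error": between_error + within_error,
    }


training_df = mixed_anova_df(32, (2, 2), 5)  # five training phases
test_df = mixed_anova_df(32, (2, 2), 2)      # pre-test and post-test
print(training_df)
print(test_df)
```

For the training phases this gives F(1, 28) for the group effect and F(4, 112) for effects involving the training phase, matching the values in the text.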

Figure 2.

Mean correct response rate (%) and angular errors (°) of sound localization during training phases for the feedback and control groups. Unrestricted head movement condition results are labeled as the HM condition, whereas restricted head movement condition results are labeled as the RHM condition. P1, P2, P3, P4, and P5 respectively denote phases 1, 2, 3, 4, and 5. The black circles depict the performance of the feedback group. The white circles denote the performance of the control group. Standard error bars are shown.

Three-way ANOVAs were also performed on the actual angular errors with the head movement condition, the group, and the training phase as factors. These data are presented in Figures 2(b) and (c). Significant main effects of the training phase were observed on the horizontal angular error [F (4, 112) = 2.79, p < 0.05] and the vertical angular error [F (4, 112) = 7.07, p < 0.001]. Post-hoc analyses (Ryan's) revealed that the participants' vertical sound localization was significantly improved in phase 5 (M = 6.43) over phase 1 (M = 8.70, p < 0.001). Participants showed significantly larger horizontal angular error in phase 1 (M = 16.38) than in phase 2 (M = 13.28, p < 0.01), phase 4 (M = 11.07, p < 0.001), and phase 5 (M = 11.88, p < 0.001). Although the feedback group (M = 6.09) showed less vertical angular error than the control group did (M = 8.82), no significant main effect of the group was found [F (1, 28) = 3.91, p = 0.06]. A three-way interaction was only significant for the actual angular errors in the horizontal plane [F (4, 112) = 2.49, p < 0.05]. Two-way interaction between the group and the training phase showed significance of the head movement condition [F (4, 112) = 2.49, p < 0.05]. Simple–simple main effects of the training phase were found for the feedback group under unrestricted head movement [F (4, 112) = 4.32, p < 0.01] and for the control group with restricted head movement [F (4, 112) = 3.47, p < 0.05].

In brief, our results showed that the participants' sound localization performance was improved during the training phase. Particularly, a significant improvement of sound localization accuracy was observed in the feedback group. Proprioceptive feedback slightly decreased the participants' actual angular errors in the vertical plane.

3.2. Sound localization performance in the test phase

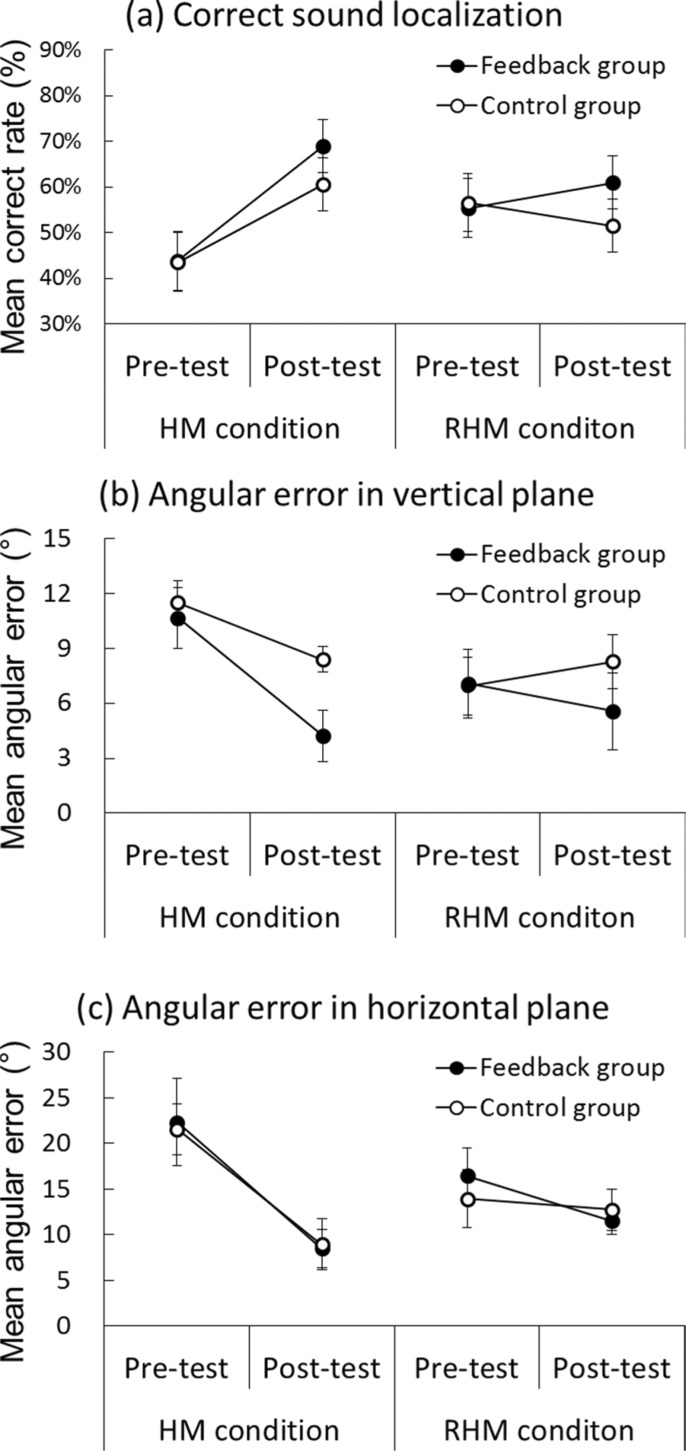

A three-way ANOVA on the correct response rate was conducted, considering the condition (restricted or unrestricted head movement), the group (feedback and control), and the test phase (pre-test and post-test) as factors (see Figure 3a). The interaction between the condition and the test was significant [F (1, 28) = 22.55, p < 0.001]. The correct response rate for the unrestricted head movement was significantly higher in the post-test (M = 65%) than in the pre-test (M = 44%) [F (1, 28) = 46.20, p < 0.001]. Furthermore, the simple main effect of head movement was found in the pre-test [F (1, 56) = 4.03, p < 0.05]. The interaction between the group and the test phase was also significant [F (1, 28) = 4.60, p < 0.05]. The simple main effect revealed that the correct rate of the feedback group was significantly higher in the post-test (M = 65%) than in the pre-test (M = 50%) [F (1, 28) = 24.56, p < 0.001]. A significant main effect of the test phase was observed [F (1, 28) = 23.65, p < 0.001]. No other main effect or interaction was found to be significant.

Figure 3.

Mean correct response rate (%) and angular errors (°) of sound localization in the test phases for the feedback and control groups. The unrestricted head movement condition is labeled as the HM condition, whereas the restricted head movement condition is labeled as the RHM condition. Black circles denote the performance of the feedback group. White circles represent the performance of the control group. Standard error bars are shown.

Three-way ANOVAs were performed on actual angular errors with the head movement condition, the group, and the test phase as factors. These data are presented in Figures 3(b) and (c). Significant interactions between the condition and the test were observed on the vertical angular error [F (1, 28) = 10.76, p < 0.01] and the horizontal angular error [F (1, 28) = 18.22, p < 0.001]. The simple main effects revealed that the unrestricted head movement condition engendered significantly smaller angular errors in the post-test than in the pre-test [vertical angular error, F (1, 28) = 22.23, p < 0.001; horizontal angular error, F (1, 28) = 61.84, p < 0.001]. In addition, the simple main effects of the head movement condition were found in the pre-test [vertical angular error, F (1, 28) = 6.85, p < 0.05; horizontal angular error, F (1, 28) = 5.26, p < 0.05]. In the pre-test, the restricted head movement condition produced smaller actual angular errors than the unrestricted head movement condition. Significant interaction between the group and the test was found only on the actual angular error in the vertical plane [F (1, 28) = 4.65, p < 0.05]. The vertical angular error of the feedback group was significantly smaller in the post-test (M = 4.90) than in the pre-test (M = 8.89) [F (1, 28) = 15.37, p < 0.001]. Furthermore, the simple main effect of the group was found in the post-test [F (1, 56) = 4.85, p < 0.05]. Significant main effects of the test phase were also observed [vertical angular error, F (1, 28) = 11.48, p < 0.01; horizontal angular error, F (1, 28) = 46.95, p < 0.001]. No other main effect or interaction was found to be significant.

In summary, results showed that sound localization training under head movement increased the accuracy of sound localization and decreased the actual angular errors in the vertical and horizontal planes. In addition, results revealed that training with the proprioceptive feedback contributed to improvement in the accuracy of sound localization in the vertical plane. Furthermore, the pre-test results showed that the restricted head movement condition group had better sound localization than the unrestricted head movement group had.

4. Discussion

This study investigated the effects of head movement and proprioceptive feedback during training in the improvement of sound localization. Particularly, we examined the effects of feedback under conditions in which the participant's head movements were restricted or unrestricted during sound localization training. Previous studies mainly examined whether visual feedback might aid in the training and calibration of acoustic localization processes (e.g., Hofman et al., 1998). Results of recent studies suggest that proprioceptive feedback has beneficial effects on sound localization training (Blum et al., 2004; Parseihian & Katz, 2012). In this study, participants showed average sound localization ability during a pre-test (see the Appendix). We anticipate that sound localization drills to improve the participant's sound localization skills can be more effective with the addition of proprioceptive feedback.

During the training phases, the sound localization accuracy of the participants improved significantly. Particularly, a significant improvement of sound localization accuracy was observed for the feedback group. This result demonstrates that proprioceptive feedback can boost the effects of sound localization training. Results of previous studies suggested that proprioceptive feedback has beneficial effects on sound localization training (Blum et al., 2004; Parseihian & Katz, 2012). Our study showed that the feedback group exhibited slightly smaller angular errors in the vertical plane than those of the control group. Consequently, the results from this study are consistent with those reported in previous studies (Blum et al., 2004; Parseihian & Katz, 2012).

Test phase results demonstrated that the participants' sound localization performances were improved significantly after sound localization training. Our results from the test phase revealed that (1) sound localization training with unrestricted head movement decreased the actual angular errors in the vertical and horizontal planes, and that (2) training with the proprioceptive feedback contributed to improvement of the sound localization accuracy and decreased the vertical angular error. Results of sound localization training under head movement are in agreement with those obtained by Honda et al. (2007), who investigated the effects of training with a virtual auditory game on sound localization performance. An important finding among our results is that significant differences between the feedback and control groups were found despite equal test phases for all groups, with unrestricted head movement and no feedback. Previous reports have described that head movements during sound localization tasks are effective to increase the localization accuracy (e.g., Populin, 2006; Thurlow & Runge, 1967). A new result of our study shows that head movement during training is important to enhance its subsequent effects on sound localization. This is particularly the case for horizontal sound localization.

Furthermore, our results indicate that head movement and accurate proprioceptive feedback during training might contribute in different ways to sound localization learning: head movement might be related to the improvement of horizontal sound localization, whereas proprioceptive feedback might be associated with the enhancement of vertical sound localization. van Wanrooij & van Opstal (2005) pointed out that human sound localization relies primarily on the processing of binaural differences in sound levels and arrival times for locations in the horizontal plane, and of spectral shape cues generated by the head and pinnae for positions in the vertical plane. One interpretation of the results is that the participants in the unrestricted head movement groups might learn how to use the information of binaural difference in sound level and arrival time by actively moving their heads. Afterward, they can determine sound positions in the horizontal plane with greater accuracy. The positive effect of head movement on horizontal sound localization is consistent with the results reported by Thurlow & Runge (1967). Sound localization in the vertical plane is generally believed to be more difficult than that in the horizontal plane. Our results suggest that proprioceptive feedback might be useful in the processing of spectral information. Therefore, the use of feedback during training engenders an increase in the performance of vertical sound localization afterward.

However, the results obtained in this study do not conclusively associate proprioceptive feedback with the listeners' performance during vertical sound localization. For example, our findings differ from the results described by Zahorik et al. (2006), who showed no clear decrease in elevation localization error during the course of adaptation. One possible explanation for the differences in our results might be the type of multimodal feedback. Zahorik et al. (2006) provided listeners with auditory, visual, and proprioceptive feedback as to the correct sound source position. In contrast, our study, as well as that of Parseihian & Katz (2012), mainly used proprioceptive feedback of auditory spatial information. Blum et al. (2004) reported that proprioceptive feedback can facilitate sound localization learning effectively. Therefore, it is important to investigate the multimodal feedback effects on sound localization training further. For example, the feedback group of our study was informed orally that their sound localization was either correct or incorrect. Furthermore, the experimenter led their mislocated rod to the correct loudspeaker position when their localization was inaccurate. In contrast, the control group received no feedback during training phases. To observe proprioceptive feedback effects more precisely, it might be better to prepare an additional control group that is informed only whether their sound localization is correct or not.

Furthermore, we observed an unexpected difference in sound localization performance between restricted and unrestricted head movement conditions during the pre-test. This result suggests that the restricted head movement condition group (mean correct rate was 56%) presented better sound localization than the unrestricted head movement condition group (44%). This observation is likely to be the result of using a between-subject design; however, it is difficult to confirm that presupposition. Future studies must assess those effects of head movement during training for sound localization.

Although further research must be undertaken, results of our study suggest that proprioceptive feedback (i.e. change of participants' body posture when the experimenter provided the feedback) might have led to increased performance of vertical sound localization. Particularly, some reports have described that the short-term deprivation of vision can improve sound localization in the horizontal plane. For example, Abel and Shelly Paik (2004) reported that both sighted and blindfolded listeners showed improved horizontal sound localization with practice, with the blindfolded group exhibiting this to a greater degree. However, few studies have examined the effects of short-term vision deprivation on vertical plane sound localization. Lewald (2002) reported that vision might be used to accurately calibrate the relation between the vertical coordinates of auditory space and the body position. Therefore, it is important to explore the relation between visual information and vertical plane sound localization.

The results of this study have some limitations. First, we did not conduct a follow-up test to evaluate the persistence of localization accuracy improvements resulting from the training procedure. Therefore, it remains unclear how long the observed effects of sound localization training persist. For example, Zahorik et al. (2006) reported that the training improvements appear to last at least four months. Future studies are necessary to examine the persistence of the effects of head movement and proprioceptive feedback in sound localization training. Second, we only measured sound localization performance under a blindfolded condition. Lewald (2007) reported that sighted participants showed more accurate sound localization after a short period of light deprivation (90 min). Future studies should investigate whether the phenomena observed in this study differ between blindfolded and sighted conditions. Third, it remains unclear whether similar results might be obtained using different types of sounds. In particular, the target sound in this study might be considered non-standard because it was chosen in accordance with Honda et al. (2007), where the simulated sound of a bee was used in a game. Therefore, future studies must be undertaken to clarify whether more general sounds also cause the same effects. Finally, we did not record how many times participants were asked to localize another appropriate loudspeaker position when they pointed to a position between the loudspeakers. This parameter might be important for additional understanding of the effects of head movement and proprioceptive feedback in the training for sound localization.

Despite these points of uncertainty, our results show that proprioceptive feedback has beneficial effects on sound localization training irrespective of whether the listener's head movements are restricted. Furthermore, our findings suggest that unrestricted head movement and proprioceptive feedback during sound localization training can enhance perceptual motor learning by enabling listeners to make better use of proprioceptive information and of the varied auditory cues that head movement provides.

Acknowledgments

We appreciate valuable support from Kaoru Abe and Jorge Trevino. This research was supported by a Ministry of Education, Culture, Sports, Science and Technology Grant-in-Aid for Specially Promoted Research (No. 19001004) and a Grant-in-Aid for Scientific Research (A) (No. 24240016). The funding sources had no role in the design of this study, data collection and analysis, decision to publish, or preparation of the manuscript.

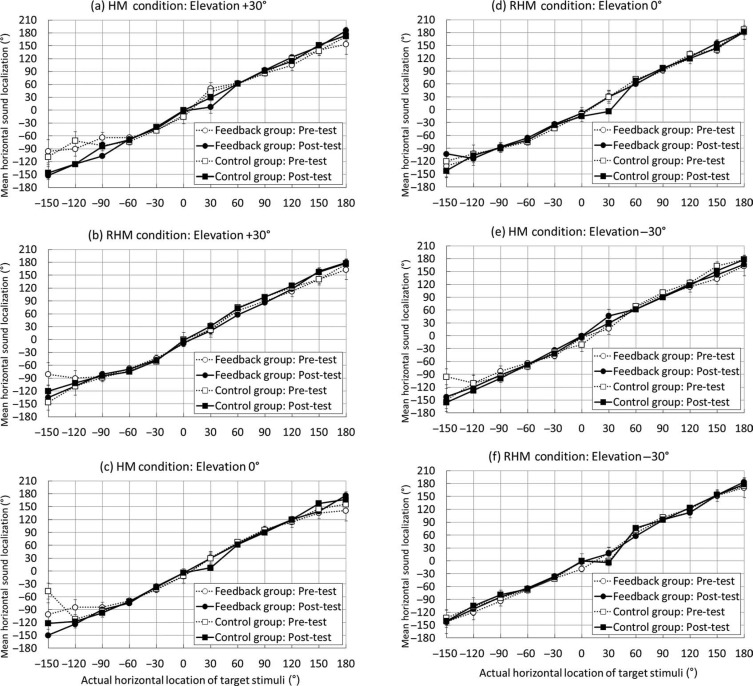

Appendix

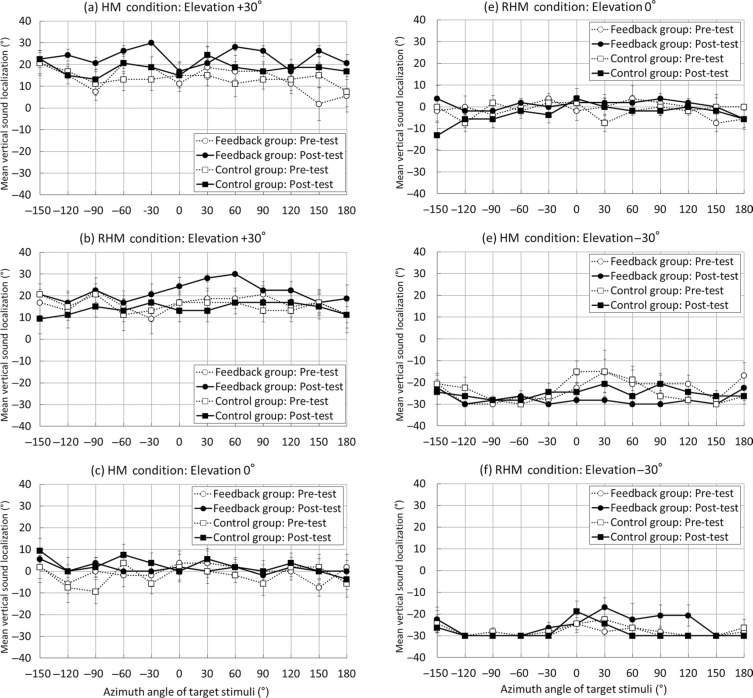

In this appendix, we present the data of actual sound localization performance for each target loudspeaker position observed during the pre-test and post-test phases. During these phases, all participants were allowed to change their head posture freely after the onset of the target sound. However, they received no feedback during any trial.

Figure A1.

Mean horizontal sound localization performance in the pre-test and post-test phases. The unrestricted head movement condition group is labeled as the HM condition. The restricted head movement condition group is labeled as the RHM condition. Circles denote the feedback group performance, whereas blocks show the control group performance. White-marked, dotted lines represent the pre-test performance, whereas black-marked, continuous lines represent the post-test performance. Standard error bars are shown.

Figure A2.

Mean vertical sound localization performance in the pre-test and post-test phases. The unrestricted head movement condition group is labeled as the HM condition. The restricted head movement condition group is labeled as the RHM condition. Circles denote the feedback group performance, whereas blocks show the control group performance. White-marked, dotted lines represent the pre-test performance, whereas black-marked, continuous lines represent the post-test performance. Standard error bars are shown.

Contributor Information

Akio Honda, Research Institute of Electrical Communication, Tohoku University, 2-1-1, Katahira, Aoba-ku, Sendai, Miyagi 980-8577, Japan, Currently at: Department of Welfare Psychology, Tohoku Fukushi University, 1-8-1, Kunimi, Aoba-ku, Sendai, Miyagi 981-8522, Japan; e-mail: a-honda@tfu-mail.tfu.ac.jp.

Hiroshi Shibata, Research Institute of Electrical Communication, Tohoku University, 2-1-1, Katahira, Aoba-ku, Sendai, Miyagi 980-8577, Japan; Department of Psychology, Graduate School of Arts and Letters, Tohoku University, 27-1, Kawauchi, Aoba-ku, Sendai, Miyagi 980-8576, Japan, Currently at: Faculty of Medical Science and Welfare, Tohoku Bunka Gakuen University, 6-45-1, Kunimi, Aoba-ku, Sendai, Miyagi 981-0943, Japan; e-mail: hshibata@rehab.tbgu.ac.jp.

Souta Hidaka, Department of Psychology, Rikkyo University, 1-2-26, Kitano, Niiza-shi, Saitama 352-8558, Japan; e-mail: hidaka@rikkyo.ac.jp.

Jiro Gyoba, Department of Psychology, Graduate School of Arts and Letters, Tohoku University, 27-1, Kawauchi, Aoba-ku, Sendai, Miyagi 980-8576, Japan; e-mail: gyoba@sal.tohoku.ac.jp.

Yukio Iwaya, Research Institute of Electrical Communication, Tohoku University, 2-1-1, Katahira, Aoba-ku, Sendai, Miyagi 980-8577, Japan, Currently at: Faculty of Engineering, Tohoku Gakuin University, 1-13-1, Chuo, Tagajo, Miyagi 985-8537, Japan; e-mail: iwaya.yukio@tjcc.tohoku-gakuin.ac.jp.

Yôiti Suzuki, Research Institute of Electrical Communication, Tohoku University, 2-1-1, Katahira, Aoba-ku, Sendai, Miyagi 980-8577, Japan; e-mail: yoh@riec.tohoku.ac.jp.

References

- Abel S. M., Shelly Paik J. E. The benefit of practice for sound localization without sight. Applied Acoustics. 2004;65:229–241. doi: 10.1016/j.apacoust.2003.10.003.

- Aytekin M., Moss C. F., Simon J. Z. A sensorimotor approach to sound localization. Neural Computation. 2008;20:603–635. doi: 10.1162/neco.2007.12-05-094.

- Blauert J. Sound localization in the median plane. Acustica. 1970;22:205–213.

- Blauert J. Spatial hearing: The psychophysics of human sound localization. rev. Ed. Cambridge, MA: MIT; 1997.

- Blum A., Katz B. F. G., Warusfel O. Eliciting adaptation to non-individual HRTF spectral cues with multi-modal training. Proceedings of Joint Meeting of the German and the French Acoustical Societies (CFA/DAGA '04), Strasbourg, France. 2004. pp. 1225–1226.

- Goldstone R. L. Perceptual Learning. Annual Review of Psychology. 1998;49:585–612. doi: 10.1146/annurev.psych.49.1.585.

- Hawkey D. J. C., Amitay S., Moore D. R. Early and rapid perceptual learning. Nature Neuroscience. 2004;7:1055–1056. doi: 10.1038/nn1315.

- Hofman P. M., van Opstal A. J. Spectro-temporal factors in two-dimensional human sound localization. The Journal of the Acoustical Society of America. 1998;103:2634–2648. doi: 10.1121/1.422784.

- Hofman P. M., van Riswick J. G. A., van Opstal A. J. Relearning sound localization with new ears. Nature Neuroscience. 1998;1:417–421. doi: 10.1038/1633.

- Honda A., Shibata H., Gyoba J., Saitou K., Iwaya Y., Suzuki Y. Transfer effects on sound localization performances from playing a virtual three-dimensional auditory game. Applied Acoustics. 2007;68:885–896. doi: 10.1016/j.apacoust.2006.08.007.

- King A. J. Auditory perception: Does practice make perfect? Current Biology. 1999;9:R143–R146. doi: 10.1016/S0960-9822(99)80084-0.

- Kulkarni A., Colburn H. Role of spectral detail in sound-source localization. Nature. 1998;396:747–749. doi: 10.1038/25526.

- Langendijk E. H., Bronkhorst A. W. Contribution of spectral cues to human sound localization. The Journal of the Acoustical Society of America. 2002;112:1583–1596. doi: 10.1121/1.1501901.

- Lewald J. Vertical sound localization in blind humans. Neuropsychologia. 2002;40:1868–1872. doi: 10.1016/S0028-3932(02)00071-4.

- Lewald J. More accurate sound localization induced by short-term light deprivation. Neuropsychologia. 2007;45:1215–1222. doi: 10.1016/j.neuropsychologia.2006.10.006.

- Middlebrooks J. C., Green D. M. Sound localization by human listeners. Annual Review of Psychology. 1991;42:135–159. doi: 10.1146/annurev.ps.42.020191.001031.

- Parseihian G., Katz B. F. Rapid head-related transfer function adaptation using a virtual auditory environment. The Journal of the Acoustical Society of America. 2012;131:2948–2957. doi: 10.1121/1.3687448.

- Perrett S., Noble W. The contribution of head motion cues to localization of low-pass noise. Perception and Psychophysics. 1997;59:1018–1026. doi: 10.3758/BF03205517.

- Populin L. C. Monkey sound localization: Head-restrained versus head-unrestrained orienting. Journal of Neuroscience. 2006;26:9820–9832. doi: 10.1523/JNEUROSCI.3061-06.2006.

- Shaw E. A. G. Ear canal pressure generated by using a free sound field. The Journal of the Acoustical Society of America. 1966;39:465–470. doi: 10.1121/1.1909913.

- Thurlow W., Runge P. Effect of induced head movements on localization of direction of sounds. The Journal of the Acoustical Society of America. 1967;42:480–488. doi: 10.1121/1.1910604.

- Tollin D. J., Populin L. C., Moore J. M., Ruhland J. L., Yin T. C. Sound-localization performance in the cat: The effect of restraining the head. Journal of Neurophysiology. 2005;93:1223–1234. doi: 10.1152/jn.00747.2004.

- Toshima I., Aoki S., Hirahara T. Sound localization using an acoustical telepresence robot: TeleHead II. Presence: Teleoperators and Virtual Environments. 2008;17:365–375. doi: 10.1162/pres.17.4.392.

- van Wanrooij M. M., van Opstal A. J. V. Relearning sound localization with a new ear. Journal of Neuroscience. 2005;25:5413–5424. doi: 10.1523/JNEUROSCI.0850-05.2005.

- Wallach H. On sound localization. The Journal of the Acoustical Society of America. 1939;10:270–274. doi: 10.1121/1.1915985.

- Zahorik P., Bangayan P., Sundareswaran V., Wang K., Tam C. Perceptual recalibration in human sound localization: Learning to remediate front–back reversals. The Journal of the Acoustical Society of America. 2006;120:343–359. doi: 10.1121/1.2208429.