Abstract

Despite growing recognition of the important role implementation plays in successful prevention efforts, relatively little work has sought to demonstrate a causal relationship between implementation factors and participant outcomes. In turn, failure to explore the implementation-to-outcome link limits our understanding of the mechanisms essential to successful programming. This gap is partially due to the inability of current methodological procedures within prevention science to account for the multitude of confounders responsible for variation in implementation factors (i.e., selection bias). The current paper illustrates how propensity and marginal structural models can be used to improve causal inferences involving implementation factors not easily randomized (e.g., participant attendance). We first present analytic steps for simultaneously evaluating the impact of multiple implementation factors on prevention program outcome. Then we demonstrate this approach for evaluating the impact of enrollment and attendance in a family program, over and above the impact of a school-based program, within PROSPER, a large scale real-world prevention trial. Findings illustrate the capacity of this approach to successfully account for confounders that influence enrollment and attendance, thereby more accurately representing true causal relations. For instance, after accounting for selection bias, we observed a 5% reduction in the prevalence of 11th grade underage drinking for those who chose to receive a family program and school program compared to those who received only the school program. Further, we detected a 7% reduction in underage drinking for those with high attendance in the family program.

Keywords: Implementation Science, Causal Inference, Propensity Scores, Potential Outcomes Framework, Family-Based Intervention

Prevention scientists are increasingly concerned with evaluating the impact of implementation on the effectiveness of prevention efforts (Elliott & Mihalic, 2004; Domitrovich, Gest, Jones, Gill, & DeRousie, 2010; Kam, Greenberg, & Walls, 2003). In particular, implementation broadly appears to be related to the effectiveness of programs delivered in school and family contexts (August, Bloomquist, Lee, Realmuto, & Hektner, 2006; Carroll et al., 2007).¹ Implementation comprises many factors, including a prevention effort's reach (e.g., enrollment and attendance rates), delivery quality (e.g., fidelity), and capacity to plan for program delivery (Berkel, Mauricio, Schoenfelder, & Sandler, 2010; Glasgow, Vogt, & Boles, 1999; Glasgow, McKay, Piette, & Reynolds, 2001; Spoth, Redmond, & Shin, 2000a).

Despite growing recognition of the important role implementation plays in successful prevention efforts, relatively little work has sought to demonstrate a causal link between implementation factors and participant outcomes (cf. Hill, Brooks-Gunn, & Waldfogel, 2003; Imbens, 2000; Foster, 2003). In turn, failure to explore the implementation-to-outcome relationship limits our understanding of the mechanisms essential to successful programming. This gap is partially due to the inability of current methodological procedures within prevention science to account for the multitude of confounders responsible for variation in implementation factors (i.e., selection bias). The current paper illustrates a novel approach for improving causal inferences involving different implementation factors not easily randomized (e.g., participant attendance, program fidelity). Specifically, we seek to demonstrate the capacity of this approach to account for selection bias resulting from voluntary enrollment and participation in a family prevention program. Participant enrollment and attendance rates are implementation factors often associated with the reach of a prevention effort (Durlak & DuPre, 2008; Glasgow et al., 1999; Graczyk, Domitrovich, & Zins, 2003). For this demonstration we selected factors related to reach, both to build on related efforts to improve causal inference in behavioral intervention (Angrist & Hahn, 2006; Connell, Dishion, Yasui, & Kavanagh, 2007; Imbens, 2000) and to illustrate this methodological approach with data that are often available to researchers (e.g., attendance records as opposed to fidelity observations).

In this context, we illustrate that propensity and marginal structural models (Holland, 1986; Robins, Hernán, & Brumback, 2000; Rubin, 2005) can be used to simultaneously estimate the effect of multiple implementation factors. We first present background on the role of implementation within prevention science, and then describe analytic steps for evaluating multiple implementation factors using propensity and marginal structural models. We demonstrate the utility of this approach in an outcome analysis of a real-world prevention trial known as PROSPER.

Accounting for Participant Selection Effects

Prevention researchers have drawn few causal inferences about how implementation factors impact program outcomes (cf. McGowan, Nix, Murphy, Bierman, & CPPRG, 2010; Stuart, Perry, Le, & Ialongo, 2008). This is largely because current methodological approaches have a limited capacity to successfully account for selection biases that influence variation in implementation (Taylor, Graham, Cumsille, & Hansen, 2000). With regard to a prevention effort's reach, a variety of factors may influence participants' enrollment and attendance in family-focused prevention programs, including demographic factors (youth gender, race, SES; Dumas, Moreland, Gitter, Pearl, & Nordstrom, 2008; Winslow, Bonds, Wolchik, Sandler, & Braver, 2009), youth factors (past delinquency and parent perceptions; Brody, Murry, Chen, Kogan, & Brown, 2006; Dumas, Nissley-Tsiopinis, & Moreland, 2006), and logistical factors (e.g., access to programming; Rohrbach et al., 1994; Spoth, Redmond, & Shin, 2000a; see Table 1). For example, pre-existing characteristics of enrolled participants (e.g., parenting style, education level, youth risk-level) may influence an outcome more than the intervention itself. Consequently, we demonstrate the value of using propensity models to account for the effects of these factors (i.e., confounders) on program enrollment and attendance. Specifically, we first examine the impact of program enrollment on underage drinking during middle and high school. Second, we simultaneously examine the impact of different levels of program attendance (high and low) on underage drinking. To accomplish this, we model data from the PROSPER prevention trial.

Table 1.

Confounders of Program Use and Attendance in the Literature

| Confounder | Variable Included in Model | References |
|---|---|---|
| Family Ethnicity | Youth Ethnicity | Albin, Lee, Dumas, Slater, & Witmer, 1985; Holden, Lavigne, & Cameron, 1990; Prinz & Miller, 1994 |
| Parent & Child Age | Child Age | |
| Marital Status | Marital Status | |
| Family SES | Participation in School Lunch Program | Toomey et al., 1996; Williams et al., 1995; Dumas, Moreland, Gitter, Pearl, & Nordstrom, 2008; Winslow, Bonds, Wolchik, Sandler, & Braver, 2009 |
| Educational Attainment | Youth Grades, Youth School Attitude, Bonding and Absences | Rohrbach et al., 1994 |
| Parent Gender | Parent Gender | Dumas, Nissley-Tsiopinis, & Moreland, 2006; Spoth & Redmond, 1995; Spoth, Redmond, Kahn, & Shin, 1997 |
| Perceived Child Susceptibility to Teen Problem Behaviors | Youth Engagement in Problem Behaviors, Parent-Child Affective Quality | |
| Perceived Severity of Teen Problem Behaviors | Youth Engagement in Problem Behaviors, Family Cohesion Scale | |
| Perceptions of Intervention Benefits | Child Management Techniques Employed: Monitoring, Reasoning, Discipline | |
| Time Demands & Scheduling Issues | Distance from Program, Frequency of Youth-Parent Activities | |

Traditionally, prevention researchers include confounders, unaccounted for by experimental designs, as covariates within their models (e.g., ANCOVA; Coffman, 2011; Schafer & Kang, 2008). However, this approach can account for only a limited number of confounders, especially if those confounders span multiple levels (e.g., individual, family, and community factors). One method to account for a large number of confounders is to employ propensity scores to estimate the probability of a given level of a factor (e.g., enrolling in or attending a program; Rosenbaum & Rubin, 1983). Propensity scores (πi) are traditionally the probability that an individual i receives a program (Ai) given measured confounders (Xi) (D'Agostino, 1998; Harder, Stuart, & Anthony, 2008, 2010; Rosenbaum & Rubin, 1983):

$$\pi_i = P(A_i = 1 \mid X_i)$$

Propensity scores may be used within a marginal structural model to weight individuals in order to create balanced groups of participants based upon the confounders included in the propensity model. Marginal structural models are a class of causal models that may be used to define and estimate, from observational data, the causal effect of an intervention in the presence of time-varying exposures and confounders (Robins et al., 2000). Thus, assuming that no confounders are unaccounted for, this procedure results in groups that approximate those that would be achieved through randomization (Rosenbaum & Rubin, 1983, 1985; Sampson, Sharkey, & Raudenbush, 2008). Using propensity scores to balance different groups allows researchers to estimate the mean differences between the groups. This difference is commonly known as the 'average causal effect' (Schafer & Kang, 2008). Interpreting this effect, under the assumption that all confounders are accounted for in the estimation of the propensity scores, allows researchers to draw causal inferences about group differences with a greater degree of confidence than would be possible otherwise (Coffman, 2011; Luellen, Shadish, & Clark, 2005; Schafer & Kang, 2008). The potential outcomes framework shares this assumption with traditional regression approaches (Rubin, 2005). A key distinction between common approaches for handling selection bias (e.g., ANCOVA) and marginal structural models is that the latter control for confounding by reweighting the data to mimic what would be obtained in a randomized experiment, whereas ANCOVA controls for confounding by including covariates in the model for the outcome. Next we describe the analytic steps for applying the potential outcomes framework and marginal structural models to outcome evaluation of the PROSPER prevention trial.
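To make the weighting idea concrete, the following is a minimal sketch and not the analysis reported in this paper. It assumes a single binary confounder `x`, so that the propensity can be estimated as the treated share within each level of `x`; the toy `records` data and all function names are hypothetical. Outcomes are constructed as y = a + 2x, so the true average causal effect is 1 by design.

```python
from collections import defaultdict

# Hypothetical toy data: (x, a, y) = (confounder, program receipt, outcome).
# Outcomes follow y = a + 2 * x, so the true average causal effect is 1.
records = (
    [(1, 1, 3.0)] * 6 + [(1, 0, 2.0)] * 2    # x = 1: most units receive the program
    + [(0, 1, 1.0)] * 2 + [(0, 0, 0.0)] * 6  # x = 0: most units do not
)


def stratum_propensities(data):
    """Estimate P(A = 1 | X = x) as the treated share within each level of x."""
    counts = defaultdict(lambda: [0, 0])  # x -> [number treated, number total]
    for x, a, _ in data:
        counts[x][0] += a
        counts[x][1] += 1
    return {x: treated / total for x, (treated, total) in counts.items()}


def weighted_mean(values, weights):
    """Weighted average of values."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def naive_mean_difference(data):
    """Unadjusted difference in mean outcome, treated minus untreated."""
    treated = [y for _, a, y in data if a == 1]
    control = [y for _, a, y in data if a == 0]
    return sum(treated) / len(treated) - sum(control) / len(control)


def ipw_mean_difference(data):
    """Difference in inverse-propensity-weighted mean outcomes."""
    pi = stratum_propensities(data)
    y_t, w_t, y_c, w_c = [], [], [], []
    for x, a, y in data:
        if a == 1:
            y_t.append(y)
            w_t.append(1.0 / pi[x])          # weight by 1 / P(A = 1 | x)
        else:
            y_c.append(y)
            w_c.append(1.0 / (1.0 - pi[x]))  # weight by 1 / P(A = 0 | x)
    return weighted_mean(y_t, w_t) - weighted_mean(y_c, w_c)


naive = naive_mean_difference(records)
adjusted = ipw_mean_difference(records)
```

With these constructed data, `naive` is 2.0 because the confounder inflates the treated group's outcomes, whereas `adjusted` recovers the built-in effect of 1.0 (up to floating-point error). The sketch requires every stratum to contain both treated and untreated units; otherwise a weight would be undefined.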

Illustrative Example: The PROSPER Delivery System

The PROSPER (PROmoting School-university-community Partnerships to Enhance Resilience) study is a community-randomized trial of an innovative approach for delivering substance use prevention programs (see Spoth, Greenberg, Bierman, & Redmond, 2004). There were two program components implemented in all intervention communities. All families were offered a family-based prevention program in the evenings when their child was in 6th grade (Strengthening Families Program 10–14), and 17% of families enrolled. The following year, all youth in intervention communities received evidence-based preventive interventions (EBIs) during the regular school day in the 7th grade. Evaluations of the PROSPER trial (28 communities, 17,701 adolescent participants) have demonstrated the system's effectiveness in promoting EBI adoption and implementation (Spoth, Guyll, Lillehoj, Redmond, & Greenberg, 2007; Spoth, Guyll, Redmond, Greenberg, & Feinberg, 2011; Spoth & Greenberg, 2011) as well as significantly reducing rates of adolescent alcohol and substance abuse (Spoth et al., 2007; 2011).

Our goal is to assess the specific impact of family program enrollment and attendance, in addition to the school program, on participant outcomes. In previous reports, 10th grade youth in PROSPER intervention communities, compared to those in the control communities, were significantly less likely to report ever consuming alcohol (Spoth et al., 2011). This evaluation differentiated between those that received only the school-based program and those that received both the school- and family-based programs. In addition, this evaluation assessed the potential differences between those enrolled in the family program with low versus high program attendance. Consequently, we seek to tease apart the effects of these two aspects of implementation on rates of underage drinking during middle and high school to demonstrate the value of the potential outcomes framework and marginal structural models for the study of implementation.

Methods

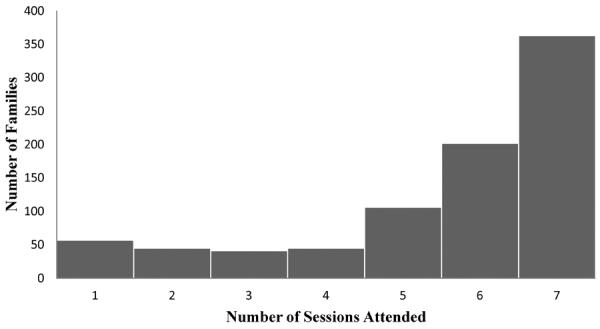

To evaluate the impact of program enrollment and attendance within the PROSPER trial, it is necessary to account for two types of selection bias: (1) the possible bias that impacts whether a family decides to enroll in the family program, and (2) the possible bias that influences enrolled families' rate of attendance. The family program delivered within PROSPER was a seven-session EBI known as the Strengthening Families Program: For Parents and Youth 10–14 (Spoth, Redmond, & Shin, 2000b, 2001). Thus, families who were enrolled (N = 859) could attend one to seven sessions. Figure 1 shows the distribution of families' attendance across all sessions within PROSPER. Most families in the trial attended five or more sessions (N = 671), but some families attended only one to four sessions (N = 188). There appears to be a natural break in the distribution between those attending four or fewer sessions and those attending five or more. Although differential participant attendance may be aggregated in a variety of ways, for the purpose of this demonstration we considered those who attended one to four sessions as having 'low attendance' and those attending five or more sessions as having 'high attendance'.
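The attendance grouping described above can be sketched as a small helper. This is an illustration only: the function name, its parameters, and the string labels are hypothetical, and treating zero sessions as a separate "none" level is an assumption made so that the same helper could also cover families who enroll but never attend.

```python
def attendance_level(sessions_attended, total_sessions=7, high_cutoff=5):
    """Map a session count to 'none', 'low' (1 to 4), or 'high' (5 to 7)."""
    if not 0 <= sessions_attended <= total_sessions:
        raise ValueError("sessions_attended must be between 0 and total_sessions")
    if sessions_attended == 0:
        return "none"
    return "high" if sessions_attended >= high_cutoff else "low"


# Levels for every possible session count, 0 through 7.
levels = [attendance_level(s) for s in range(8)]
```

Keeping the cutoff as a parameter makes it straightforward to check how sensitive results are to where the break between low and high attendance is placed.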

Figure 1.

Distribution of Family Attendance in SFP 10–14 in PROSPER Trial

Measures

Confounders

A wide variety of available measures are included in the propensity model to account for factors that may contribute to family program enrollment and attendance (Table 1). These include youth demographics (gender, ethnicity, age, enrollment in school lunch programs), functioning (stress management, problem solving, assertiveness, frequency of risk activities, frequency of self-oriented activities, academic achievement, frequency of school absences, attitude towards school, school bonding and achievement, deviant behaviors, antisocial peer behavior), proclivity towards alcohol and substance abuse (substance refusal intentions and efficacy, positive expectations, perceptions of and attitudes toward substance abuse, as well as cumulative alcohol and substance initiation and use indices), as well as family environment (parent marital status, youth residence with biological parents, affective quality between parents and youth, parental involvement, parent child management, family cohesion) and each family's geographic distance from the program site (see Chilenski & Greenberg, 2009; Redmond et al., 2009).

Underage Drinking

Each youth participant's initiation of alcohol consumption was measured annually from 6th through 11th grade. A dichotomous (Yes/No) self-report measure was used to evaluate the initiation of underage alcohol consumption (“Ever drunk more than a few sips of alcohol”).

Procedure

Applying the potential outcomes framework to evaluate the impact of multiple implementation factors entails five steps. These include (1) defining the causal effects, (2) estimating individuals' propensity scores, (3) calculating inverse propensity weights (IPWs), (4) evaluating balance, and (5) estimating the marginal structural models. We employed a multiple imputation approach to account for any missing data (20 imputations; D'Agostino & Rubin, 2000; Graham, Olchowski, & Gilreath, 2007; Little & Rubin, 2002; Schafer & Graham, 2002).
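The text does not state how estimates were combined across the 20 imputed data sets. As a hedged illustration, the sketch below applies Rubin's rules, the conventional way to pool a point estimate and its standard error across imputations; the function name and the three example values are hypothetical.

```python
import math
from statistics import mean, variance


def pool_rubin(estimates, squared_ses):
    """Pool per-imputation estimates and squared standard errors via Rubin's rules."""
    m = len(estimates)
    if m < 2 or m != len(squared_ses):
        raise ValueError("need at least two imputations with matching lengths")
    pooled = mean(estimates)                    # pooled point estimate
    within = mean(squared_ses)                  # average within-imputation variance
    between = variance(estimates)               # between-imputation variance (divisor m - 1)
    total = within + (1.0 + 1.0 / m) * between  # total variance
    return pooled, math.sqrt(total)


# Hypothetical estimates from three imputed data sets.
pooled_estimate, pooled_se = pool_rubin([1.0, 2.0, 3.0], [0.5, 0.5, 0.5])
```

In a full analysis, each of the five steps would be run within every imputed data set and only the final model estimates would be pooled in this way.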

Defining Causal Effects

The first step is to define the causal effects that will be estimated in order to isolate each effect within the model. For the purpose of this analysis, let e denote program enrollment and a denote attendance. The potential outcomes for individual i at time t in community c are Yitc(e,a). Thus, Yitc(0,0) is the potential outcome if the individual does not enroll and does not attend, Yitc(1,0) is the potential outcome if the individual enrolls but does not attend, Yitc(1,1) is the potential outcome if the individual enrolls and attends at least 1 but fewer than 5 sessions, and Yitc(1,2) is the potential outcome if the individual enrolls and attends 5 or more sessions. Note that there is a monotonic function in that if an individual does not enroll, then they cannot have a = 1 or a = 2; thus the potential outcomes Yitc(0,1) and Yitc(0,2) are equal to Yitc(0,0). By employing the potential outcomes framework, we may estimate (1) the effect of enrolling in the family program but not attending any sessions vs. not enrolling; (2) the effect of enrolling and attending at least 1 but fewer than 5 sessions vs. enrolling but attending no sessions; (3) the effect of enrolling and attending at least 1 but fewer than 5 sessions vs. not enrolling; (4) the effect of enrolling and attending 5 or more sessions vs. enrolling but attending no sessions; and (5) the effect of enrolling and attending 5 or more sessions vs. not enrolling at all. The effects are expressed as follows:

$$E[Y_{itc}(1,0)] - E[Y_{itc}(0,0)] \tag{1}$$

$$E[Y_{itc}(1,1)] - E[Y_{itc}(1,0)] \tag{2}$$

$$E[Y_{itc}(1,1)] - E[Y_{itc}(0,0)] \tag{3}$$

$$E[Y_{itc}(1,2)] - E[Y_{itc}(1,0)] \tag{4}$$

$$E[Y_{itc}(1,2)] - E[Y_{itc}(0,0)] \tag{5}$$

Our notation for the potential outcomes implicitly makes the stable unit treatment value assumption at the community level (i.e., SUTVA; VanderWeele, 2008). This implies that for individual j living in Community A, enrollment or attendance is not influenced by the enrollment or attendance of an individual i in Community B although it may be influenced by other individuals in Community A. For the remainder of the article we will drop the subscripts for individual, time, and community. Additionally, we will not estimate any random effects for the community level although we account for the nested structure in the models.

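The marginal structural model is given as follows:

$$E[Y(e,a)] = \beta_0 + \beta_1 e + \beta_2 (e \times a)$$

Thus, the causal contrasts given in Equations 1–5 are equal to:

$$
\begin{aligned}
E[Y(1,0)] - E[Y(0,0)] &= \beta_1 \\
E[Y(1,1)] - E[Y(1,0)] &= \beta_2 \\
E[Y(1,1)] - E[Y(0,0)] &= \beta_1 + \beta_2 \\
E[Y(1,2)] - E[Y(1,0)] &= 2\beta_2 \\
E[Y(1,2)] - E[Y(0,0)] &= \beta_1 + 2\beta_2
\end{aligned}
$$

Note that by leaving out the main effect term for attendance, we have a monotonic function because

$$E[Y(0,1)] = E[Y(0,2)] = E[Y(0,0)] = \beta_0$$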

Propensity Score Estimation

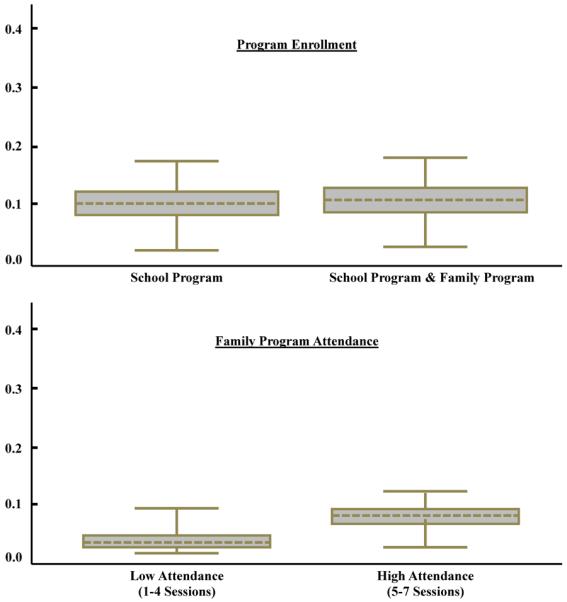

We first estimated propensity scores (πe) for each youth who received either the school program or the school and family program together using a logistic regression model (i.e., the propensity model). Then a multinomial probit model was used to estimate the propensity scores of intervention participants having no, low, or high attendance (πa) in the family program. We evaluated the 'overlap', which indicates whether there are individuals with similar propensity scores in each of the groups, by considering the distributions of the propensities for being in either the school only or school and family program groups (Rosenbaum & Rubin, 1983). Without overlap, causal comparisons between the two groups are generally not warranted. Here we observed substantial overlap between the family program enrollment and no-enrollment distributions (see Figure 2). Similarly, we considered the overlap of the low and high attendance groups and again observed substantial overlap of the propensities between the levels of attendance (see Figure 2).
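Overlap is usually judged graphically, as in Figure 2, but a simple numeric check can complement the plots. The sketch below is one possible check and not the diagnostic used in this paper: it finds the range of propensity scores shared by all groups and the share of each group falling inside it. The function names and the example scores are hypothetical.

```python
def common_support(scores_by_group):
    """Return (lower, upper) bounds of the propensity range shared by all groups."""
    lower = max(min(scores) for scores in scores_by_group.values())
    upper = min(max(scores) for scores in scores_by_group.values())
    return lower, upper


def share_in_range(scores, lower, upper):
    """Proportion of scores that fall inside [lower, upper]."""
    return sum(1 for s in scores if lower <= s <= upper) / len(scores)


# Hypothetical estimated propensities of enrolling, split by observed group.
propensities = {
    "enrolled": [0.10, 0.20, 0.35, 0.60],
    "not_enrolled": [0.05, 0.15, 0.30, 0.50],
}
lower, upper = common_support(propensities)
coverage = {
    group: share_in_range(scores, lower, upper)
    for group, scores in propensities.items()
}
```

If `lower` exceeds `upper`, the groups share no common range at all. A low `coverage` value for any group signals that many of its members have no comparable counterparts in the other group.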

Figure 2.

Overlap Diagnostics for Family Program Enrollment and Program Attendance Levels

Inverse Propensity Weight Calculation

Next, the IPWs for the level of program enrollment e and attendance a actually received by each participant were calculated. The IPWs are similar to survey weights and allow us to make adjustments to the sample data to account for selection effects influencing both participant enrollment and attendance by up-weighting those that are underrepresented and down-weighting those that are overrepresented (Hirano & Imbens, 2001). When modeling multiple independent variables, such as the two implementation factors considered here, the product of the weights may be used (IPWe × IPWa; Robins et al., 2000; Spreeuwenberg et al., 2010):

$$
IPW_e =
\begin{cases}
1/\hat{\pi}_{e=0} & \text{if } e = 0 \\
1/\hat{\pi}_{e=1} & \text{if } e = 1
\end{cases}
\qquad
IPW_a =
\begin{cases}
1/\hat{\pi}_{a=0} & \text{if } a = 0 \\
1/\hat{\pi}_{a=1} & \text{if } a = 1 \\
1/\hat{\pi}_{a=2} & \text{if } a = 2
\end{cases}
$$

where $\hat{\pi}_{e=0}$ is the estimated propensity that a participant did not enroll in the family program, $\hat{\pi}_{e=1}$ is the estimated propensity that an individual enrolled in the family program, $\hat{\pi}_{a=0}$ is the estimated propensity that a participant attended no family sessions, $\hat{\pi}_{a=1}$ is the estimated propensity that a participant attended between 1 and 4 sessions, and $\hat{\pi}_{a=2}$ is the estimated propensity that a participant attended between 5 and 7 family sessions.
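The product-of-weights calculation can be sketched as follows. This is an illustration under stated assumptions: each participant's estimated propensities are stored in dictionaries keyed by level (e in {0, 1}; a in {0, 1, 2}), and the function names and example propensities are hypothetical.

```python
def inverse_propensity_weight(propensities, level):
    """Return 1 / (estimated propensity of the level actually received)."""
    p = propensities[level]
    if not 0.0 < p <= 1.0:
        raise ValueError("propensity must lie in (0, 1]")
    return 1.0 / p


def combined_weight(pi_enroll, pi_attend, e, a):
    """Product of the enrollment and attendance weights for one participant."""
    return (
        inverse_propensity_weight(pi_enroll, e)
        * inverse_propensity_weight(pi_attend, a)
    )


# Hypothetical participant who enrolled (e = 1) and had high attendance (a = 2).
pi_enroll = {0: 0.8, 1: 0.2}          # estimated P(e = 0), P(e = 1)
pi_attend = {0: 0.1, 1: 0.3, 2: 0.6}  # estimated P(a = 0), P(a = 1), P(a = 2)
weight = combined_weight(pi_enroll, pi_attend, e=1, a=2)
```

Here the participant receives a weight of (1 / 0.2) × (1 / 0.6), or about 8.33: enrolling was unlikely given this participant's confounders, so the observation is up-weighted. Very small propensities produce very large weights, which is one reason overlap is inspected before weighting.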

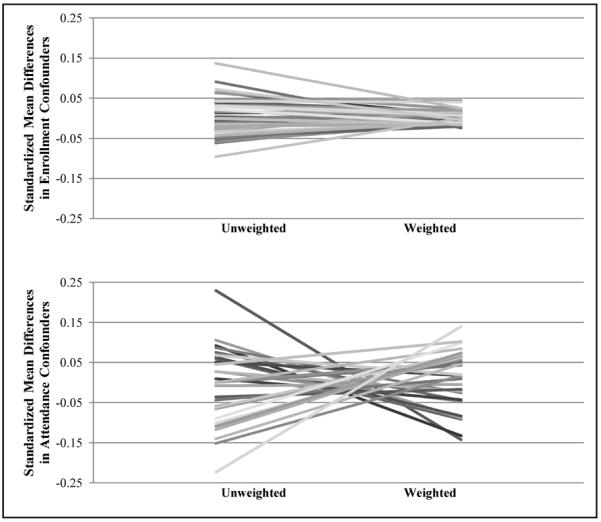

Balance Diagnostics

We evaluated the balance of the different groups, before and after weighting, to ascertain whether the adjustment using the IPWs successfully resulted in group equivalence on the modeled confounders (Harder et al., 2010). This evaluation included a comparison of the standardized mean differences (SMDs) of the unweighted sample to the weighted sample for each of the confounders included in the propensity model, allowing us to ascertain whether the SMDs decreased after weighting (i.e., effect size comparison). It is recommended that these differences be less than .2 (in absolute value), which is considered a “small” effect size (Cohen, 1992; Harder et al., 2010). Figure 3 illustrates balance for both program enrollment and attendance within the PROSPER trial, as all weighted SMDs are less than .2.
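The balance check can be sketched for a single confounder as below. Several SMD conventions exist; this sketch assumes the difference in (weighted) means is divided by the pooled standard deviation of the unweighted groups, which is one common choice and not necessarily the one used here. The function names, data, and weights are hypothetical.

```python
import math
from statistics import variance


def weighted_mean(values, weights):
    """Weighted average of values."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def standardized_mean_difference(x_t, x_c, w_t=None, w_c=None):
    """SMD of one confounder between two groups, optionally weighted."""
    w_t = w_t if w_t is not None else [1.0] * len(x_t)
    w_c = w_c if w_c is not None else [1.0] * len(x_c)
    # Denominator: pooled SD of the unweighted groups, so that the
    # before and after values are measured on the same scale.
    pooled_sd = math.sqrt((variance(x_t) + variance(x_c)) / 2.0)
    return (weighted_mean(x_t, w_t) - weighted_mean(x_c, w_c)) / pooled_sd


# Hypothetical confounder values for enrolled and non-enrolled youth.
enrolled = [1.0, 2.0, 3.0]
not_enrolled = [2.0, 3.0, 4.0]
smd_before = standardized_mean_difference(enrolled, not_enrolled)
smd_after = standardized_mean_difference(
    enrolled, not_enrolled, w_c=[5.0, 1.0, 0.0]  # hypothetical IPWs
)
balanced = abs(smd_after) < 0.2
```

In this toy case the SMD shrinks from -1.0 before weighting to about -0.17 after weighting, which falls under the .2 threshold. In practice the same computation would be repeated for every confounder in the propensity model.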

Figure 3.

SMDs of Confounders Predicting Enrollment in School or School and Family Program and Attendance of Family Program

Outcome Analysis

The fifth step was to evaluate how differential implementation impacted underage drinking using the IPWs within marginal structural models to account for selection bias. In this case we modeled whether youth reports of underage drinking varied across different levels of program enrollment and attendance. To evaluate the effect of implementation, we constructed logistic generalized estimating equations for the marginal models to examine differences between implementation groups across time (categorical) using the IPW estimation method. The model is given as

$$\operatorname{logit} P[Y_t(e,a) = 1] = \beta_0 + \beta_1 e + \beta_2 (e \times a) + \beta_3 t$$

where β1 is the effect of enrolling in the family program, β2 is the effect of enrolling and having low attendance in the family program versus not attending any family sessions, and β1+β2 is the effect of enrolling in and having low attendance versus not enrolling and not attending any family sessions. Additionally, 2β2 is the effect of enrolling in and having high attendance versus enrolling but not attending any family sessions, and β1+2β2 is the effect of enrolling and having high attendance versus not enrolling and not attending any family sessions. β3 is the effect of time, which was coded to capture the unequal interval between baseline, 6 month follow-up, and subsequent annual assessments of underage alcohol use (e.g., 0, .5, 1, 2, etc.). We estimated the model using PROC GLIMMIX in SAS 9.1 (SAS Institute, 2004), in which the outcome was the binary alcohol measure of whether youth had ever consumed alcohol. PROC GLIMMIX allows the logit link function for dichotomous outcome data, and error terms for non-normally distributed dependent variables (in this case binomial). Additionally, PROC GLIMMIX allows a weighting function that may be employed to include IPWs in the model. This procedure allowed us to analyze the three-level, nested design of the model, with measurement occasions nested within individuals and individuals nested within communities (Littell, Milliken, Stroup, Wolfinger, & Schabenberger, 2006).
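The coefficient interpretations above can be checked with a short sketch. It assumes the linear predictor has the form β0 + β1·e + β2·(e × a) + β3·t, which is the form implied by those interpretations, with a coded 0, 1, 2 for no, low, and high attendance. The coefficient values are hypothetical and are not estimates from PROSPER; all function names are illustrative.

```python
import math


def linear_predictor(beta, e, a, t):
    """Log-odds of alcohol initiation: b0 + b1*e + b2*(e*a) + b3*t."""
    if e not in (0, 1) or a not in (0, 1, 2):
        raise ValueError("e must be 0 or 1 and a must be 0, 1, or 2")
    b0, b1, b2, b3 = beta
    return b0 + b1 * e + b2 * (e * a) + b3 * t


def predicted_probability(beta, e, a, t):
    """Inverse-logit transform of the linear predictor."""
    return 1.0 / (1.0 + math.exp(-linear_predictor(beta, e, a, t)))


def contrast(beta, level, reference, t=0.0):
    """Difference in log-odds between two (e, a) combinations at time t."""
    return linear_predictor(beta, *level, t) - linear_predictor(beta, *reference, t)


beta = (-1.0, -0.2, -0.1, 0.5)  # hypothetical (b0, b1, b2, b3)

contrasts = {
    "enroll_none_vs_not_enrolled": contrast(beta, (1, 0), (0, 0)),  # b1
    "low_vs_enroll_none": contrast(beta, (1, 1), (1, 0)),           # b2
    "low_vs_not_enrolled": contrast(beta, (1, 1), (0, 0)),          # b1 + b2
    "high_vs_enroll_none": contrast(beta, (1, 2), (1, 0)),          # 2 * b2
    "high_vs_not_enrolled": contrast(beta, (1, 2), (0, 0)),         # b1 + 2 * b2
}
```

Because attendance enters only through the product e × a, the predictor for a non-enrolled youth is the same whatever value of a is supplied, which mirrors the monotonicity restriction. `predicted_probability` converts any of these log-odds to the probability scale used when reporting prevalence.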

Results

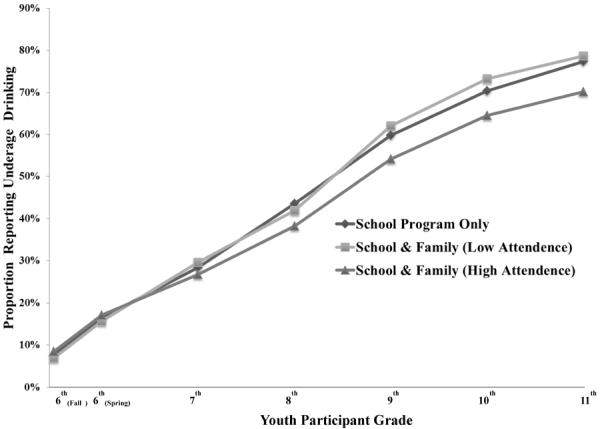

Utilizing the potential outcomes framework and marginal structural models described above, we evaluated the impact of enrolling in the family program in addition to the school program on reduction of underage drinking. We found a significant difference in the probability of alcohol use between those that received the school program and those that received both the family and school program (F = 2.66, p = .01). Results are plotted in Figure 4. As illustrated by the plot, the effect of receiving the family program in addition to the school program vs. only the school program translated into about a 5% decrease in the prevalence of youth who have ever consumed alcohol in the 11th grade (see Figure 4).

Figure 4.

Weighted Prevalence of Underage Drinking by Program and Attendance Level

Next, we assessed the impact of differential program attendance in the context of program enrollment. We did not find significant differences between youth who enrolled in the school and family program and had low attendance compared to those who did not enroll in the family program at all (F = 1.15, p = .32). However, we found a significant difference between those who did not enroll in the family program and those that enrolled and had high attendance in the family program (F = 3.03, p < .001). Attendance in 5 or more sessions of the family program—compared to not enrolling in the family program—translated into about a 7% decrease in the prevalence rate of underage drinking (see Figure 4).

Discussion

These results illustrate the value of employing IPWs within marginal structural models for disentangling the effects of implementation factors in real-world contexts. Through the application of the potential outcomes framework, this approach provides a method to account for selection effects that lead to differential program enrollment and attendance. This method permits researchers to make stronger causal inferences about the impact of differential implementation. Here these methods allowed us to evaluate the impact of enrolling in a family program in addition to a school-based EBI to reduce underage alcohol use. Below we discuss three major implications of this application for the field of prevention science.

The first implication pertains to the development of increasingly efficient prevention programs. As researchers seek to optimize prevention efforts, they will need to assess the value of each program, session, and component and trim away any material that is ineffective or counterproductive. While increasingly sophisticated experimental designs are being used to conduct such work (e.g., Collins, Murphy, Nair, & Strecher, 2005), researchers may also apply the approach demonstrated above to existing data when undertaking this optimization process. For instance, here we demonstrated the added value of receiving a family-based EBI in addition to a school-based EBI and emphasized the importance of program attendance. If participants' attendance in different components or sessions is tracked, this method could be applied to program optimization in a similar fashion, allowing researchers to test the added value of specific curriculum components as opposed to the program as a whole.

A second implication of this work is the value that information from evaluations such as the one presented here may have when scaling up EBIs. Specifically, because evaluations that employ propensity scores may be used in the context of real-world prevention trials (e.g., PROSPER), the inferences are likely to be more generalizable than those drawn from smaller efficacy trials. This is illustrated by the fact that program implementation in dissemination trials is likely to be much more comparable to that of real-world settings than is implementation in evaluations that use more restrictive designs (Flay et al., 2005).

A third implication pertains to ongoing efforts to enhance and maintain implementation factors—in particular enrollment and attendance rates. Using these statistical approaches we were able to better represent the differential influence of family program attendance. This information may in turn be persuasive to local implementers about the importance of program attendance. Possibly findings such as these could even encourage community efforts to allocate additional resources to increasing participants' attendance in family-based EBIs.

Conclusions

This work demonstrates the value of using potential outcomes and marginal structural models for the study of implementation factors within prevention science. Studies that collect large amounts of data on a variety of domains, including on implementation factors and the dynamics that may influence implementation factors, are especially suited for this type of analysis. One limitation of this work is that, while it is possible to include multiple implementation factors within a marginal structural model, we included only two within this evaluation. Further multivariate analyses of how implementation factors influence participant outcomes could provide greater insight into best practices for facilitating EBI implementation.

Acknowledgments

This project was supported by the National Institute on Drug Abuse (NIDA) grants P50-DA010075-15 and R03 DA026543. The content is solely the responsibility of the authors and does not necessarily represent the official views of NIDA or the National Institutes of Health.

Footnotes

¹ Here we consider implementation in the context of a larger prevention effort, as opposed to a single program in isolation. In particular, we focus on the role of participant choice to enroll and engage in programs as key factors that influence the success of prevention efforts (as opposed to more narrow definitions of implementation that focus on the role of a facilitator to adhere to program curricula; i.e., implementation quality).

References

- Albin JB, Lee B, Dumas J, Slater J, Witmer J. Parent training with Canadian families. Canada's Mental Health. 1985 Dec;:20–24. [Google Scholar]

- Angrist J, Kuersteiner G. Semiparametric Causality Tests Using the Policy Propensity Score. The National Bureau of Economic Research; 2004. Working Paper. [Google Scholar]

- August GJ, Bloomquist ML, Lee SS, Realmuto GM, Hektner JM. Can evidence-based prevention programs be sustained in community practice settings? The Early Risers' Advanced-Stage effectiveness trial. Prevention Science. 2006;7:151–165. doi: 10.1007/s11121-005-0024-z. doi:10.1007/s11121-005-0024-z. [DOI] [PubMed] [Google Scholar]

- Berkel C, Mauricio AM, Schoenfelder E, Sandler IN. Putting the pieces together: An integrated model of program implementation. Prevention Science. 2010;12:23–33. doi: 10.1007/s11121-010-0186-1. doi:10.1007/s11121-010-0186-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brody GH, Murry VM, Chen Y.-fu, Kogan SM, Brown AC. Effects of family risk factors on dosage and efficacy of a family-centered preventive intervention for rural African Americans. Prevention Science. 2006;7:281–291. doi: 10.1007/s11121-006-0032-7. doi:10.1007/s11121-006-0032-7. [DOI] [PubMed] [Google Scholar]

- Carroll C, Patterson M, Wood S, Booth A, Rick J, Balain S. A conceptual framework for implementation fidelity. Implementation Science. 2007;2:40. doi: 10.1186/1748-5908-2-40. doi:10.1186/1748-5908-2-40. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chilenski SM, Greenberg MT. The importance of the community context in the epidemiology of early adolescent substance use and delinquency in a rural sample. American Journal of Community Psychology. 2009;44:287–301. doi: 10.1007/s10464-009-9258-4. doi:10.1007/s10464-009-9258-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Coffman DL. Estimating causal effects in mediation analysis using propensity scores. Structural Equation Modeling. 2011;18:357–369. doi: 10.1080/10705511.2011.582001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohen J. A power primer. Psychological Bulletin. 1992;112:155–159. doi: 10.1037//0033-2909.112.1.155. doi:10.1037/0033-2909.112.1.155. [DOI] [PubMed] [Google Scholar]

- Collins LM, Murphy SA, Nair VN, Strecher VJ. A strategy for optimizing and evaluating behavioral interventions. Annals of Behavioral Medicine. 2005;30:65–73. doi: 10.1207/s15324796abm3001_8. doi:10.1207/s15324796abm3001_8. [DOI] [PubMed] [Google Scholar]

- Connell AM, Dishion TJ, Yasui M, Kavanagh K. An adaptive approach to family intervention: Linking engagement in family-centered intervention to reductions in adolescent problem behavior. Journal of Consulting and Clinical Psychology. 2007;75:568–579. doi:10.1037/0022-006X.75.4.568.

- McGowan M, Nix R, Murphy S, Bierman K, Conduct Problems Prevention Research Group. Investigating the impact of selection bias in dose-response analyses of preventive interventions. Prevention Science. 2010;11:239–251. doi:10.1007/s11121-010-0169-2.

- D'Agostino RB. Propensity score methods for bias reduction in the comparison of a treatment to a non-randomized control group. Statistics in Medicine. 1998;17:2265–2281. doi:10.1002/(sici)1097-0258(19981015)17:19<2265::aid-sim918>3.0.co;2-b.

- D'Agostino RB, Rubin DB. Estimating and using propensity scores with partially missing data. Journal of the American Statistical Association. 2000;95:749–759.

- Domitrovich CE, Gest SD, Jones D, Gill S, DeRousie RMS. Implementation quality: Lessons learned in the context of the Head Start REDI trial. Early Childhood Research Quarterly. 2010;25:284–298. doi:10.1016/j.ecresq.2010.04.001.

- Dumas JE, Moreland AD, Gitter AH, Pearl AM, Nordstrom AH. Engaging parents in preventive parenting groups: Do ethnic, socioeconomic, and belief match between parents and group leaders matter? Health Education & Behavior. 2006;35:619–633. doi:10.1177/1090198106291374.

- Dumas JE, Nissley-Tsiopinis J, Moreland AD. From intent to enrollment, attendance, and participation in preventive parenting groups. Journal of Child and Family Studies. 2006;16:1–26. doi:10.1007/s10826-006-9042-0.

- Durlak JA, DuPre EP. Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology. 2008;41:327–350. doi:10.1007/s10464-008-9165-0.

- Elliott DS, Mihalic S. Issues in disseminating and replicating effective prevention programs. Prevention Science. 2004;5:47–53. doi:10.1023/B:PREV.0000013981.28071.52.

- Flay BR, Biglan A, Boruch RF, Castro FG, Gottfredson D, Kellam S, Mościcki EK, et al. Standards of evidence: Criteria for efficacy, effectiveness and dissemination. Prevention Science. 2005;6:151–175. doi:10.1007/s11121-005-5553-y.

- Foster M. Propensity score matching: An illustrative analysis of dose response. Medical Care. 2003;41:1183–1192. doi:10.1097/01.MLR.0000089629.62884.22.

- Glasgow RE, McKay HG, Piette JD, Reynolds KD. The RE-AIM framework for evaluating interventions: What can it tell us about approaches to chronic illness management? Patient Education and Counseling. 2001;44:119–127. doi:10.1016/S0738-3991(00)00186-5.

- Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: The RE-AIM framework. American Journal of Public Health. 1999;89:1322–1327. doi:10.2105/AJPH.89.9.1322.

- Graczyk P, Domitrovich C, Zins J. Facilitating the implementation of evidence-based prevention and mental health promotion efforts in schools. In: Weist M, editor. Handbook of school mental health: Advancing practice and research. Springer; New York: 2007.

- Graham JW, Olchowski AE, Gilreath TD. How many imputations are really needed? Some practical clarifications of multiple imputation theory. Prevention Science. 2007;8:206–213. doi:10.1007/s11121-007-0070-9.

- Harder VS, Stuart EA, Anthony JC. Adolescent cannabis problems and young adult depression: Male-female stratified propensity score analyses. American Journal of Epidemiology. 2008;168:592–601. doi:10.1093/aje/kwn184.

- Harder VS, Stuart EA, Anthony JC. Propensity score techniques and the assessment of measured covariate balance to test causal associations in psychological research. Psychological Methods. 2010;15:234–249. doi:10.1037/a0019623.

- Hill JL, Brooks-Gunn J, Waldfogel J. Sustained effects of high participation in an early intervention for low-birth-weight premature infants. Developmental Psychology. 2003;39:730–744. doi:10.1037/0012-1649.39.4.730.

- Hirano K, Imbens G. Estimation of causal effects using propensity score weighting: An application to data on right heart catheterization. Health Services and Outcomes Research Methodology. 2001;2:259–278.

- Holden G, Rosenberg G, Barker K, Tuhrim S, Brenner B. The recruitment of research participants: A review. Social Work in Health Care. 1993;19:1–44. doi:10.1300/J010v19n02_01.

- Holland PW. Statistics and causal inference. Journal of the American Statistical Association. 1986;81:945–970.

- Imbens GW. The role of the propensity score in estimating dose-response functions. Biometrika. 2000;87:706–710.

- Kam C, Greenberg M, Walls C. Examining the role of implementation quality in school-based prevention using the PATHS curriculum. Prevention Science. 2003;4:55–63. doi:10.1023/a:1021786811186.

- Littell R, Milliken G, Stroup W, Wolfinger R, Schabenberger O. SAS for mixed models. 2nd ed. SAS Institute; Cary, NC: 2006.

- Little R, Rubin D. Statistical analysis with missing data. 2nd ed. John Wiley; New York: 2002.

- Luellen JK, Shadish WR, Clark MH. Propensity scores: An introduction and experimental test. Evaluation Review. 2005;29:530–558. doi:10.1177/0193841X05275596.

- Prinz RJ, Miller GE. Family-based treatment for childhood antisocial behavior: Experimental influences on dropout and engagement. Journal of Consulting and Clinical Psychology. 1994;62:645–650. doi:10.1037//0022-006x.62.3.645.

- Redmond C, Spoth RL, Shin C, Schainker LM, Greenberg MT, Feinberg M. Long-term protective factor outcomes of evidence-based interventions implemented by community teams through a community–university partnership. The Journal of Primary Prevention. 2009;30:513–530. doi:10.1007/s10935-009-0189-5.

- Robins J, Hernan MA, Brumback B. Marginal structural models and causal inference in epidemiology. Epidemiology. 2000;11:550–560. doi:10.1097/00001648-200009000-00011.

- Rohrbach LA, Hodgson CS, Broder BI, Montgomery SB, Flay BR, Hansen WB, Pentz MA. Parental participation in drug abuse prevention: Results from the Midwestern Prevention Project. Journal of Research on Adolescence. 1994;4:295–317. doi:10.1207/s15327795jra0402_7.

- Rosenbaum PR, Rubin DB. The central role of the propensity score in observational studies for causal effects. Biometrika. 1983;70:41–55.

- Rubin DB. Causal inference using potential outcomes. Journal of the American Statistical Association. 2005;100:322–331. doi:10.1198/016214504000001880.

- Sampson RJ, Sharkey P, Raudenbush SW. Durable effects of concentrated disadvantage on verbal ability among African-American children. Proceedings of the National Academy of Sciences. 2008;105:845–852. doi:10.1073/pnas.0710189104.

- SAS Institute Inc. SAS/STAT 9.1 User's Guide. SAS Institute Inc.; Cary, NC: 2004.

- Schafer JL, Graham JW. Missing data: Our view of the state of the art. Psychological Methods. 2002;7:147–177. doi:10.1037/1082-989X.7.2.147.

- Schafer JL, Kang JDY. Average causal effects from non-randomized studies: A practical guide and simulated example. Psychological Methods. 2008;13:279–313. doi:10.1037/a0014268.

- Spoth R, Greenberg M. Impact challenges in community science-with-practice: Lessons from PROSPER on transformative practitioner-scientist partnerships and prevention infrastructure development. American Journal of Community Psychology. 2011;48:106–119. doi:10.1007/s10464-010-9417-7.

- Spoth R, Greenberg M, Bierman K, Redmond C. PROSPER community–university partnership model for public education systems: Capacity-building for evidence-based, competence-building prevention. Prevention Science. 2004;5:31–39. doi:10.1023/B:PREV.0000013979.52796.8b.

- Spoth R, Guyll M, Lillehoj CJ, Redmond C, Greenberg M. PROSPER study of evidence-based intervention implementation quality by community–university partnerships. Journal of Community Psychology. 2007;35:981–999. doi:10.1002/jcop.20207.

- Spoth R, Guyll M, Redmond C, Greenberg M, Feinberg M. Six-year sustainability of evidence-based intervention implementation quality by community–university partnerships: The PROSPER study. American Journal of Community Psychology. 2011;48:412–425. doi:10.1007/s10464-011-9430-5.

- Spoth R, Redmond C. Parent motivation to enroll in parenting skills programs: A model of family context and health belief predictors. Journal of Family Psychology. 1995;9:294–310.

- Spoth R, Redmond C, Kahn J, Shin C. A prospective validation study of inclination, belief, and context predictors of family-focused prevention involvement. Family Process. 1997;36:403–429. doi:10.1111/j.1545-5300.1997.00403.x.

- Spoth R, Redmond C, Shin C. Modeling factors influencing enrollment in family-focused preventive intervention research. Prevention Science. 2000a;1:213–225. doi:10.1023/a:1026551229118.

- Spoth R, Redmond C, Shin C. Reducing adolescents' aggressive and hostile behaviors: Randomized trial effects of a brief family intervention 4 years past baseline. Archives of Pediatrics and Adolescent Medicine. 2000b;154:1248–1257. doi:10.1001/archpedi.154.12.1248.

- Spoth R, Redmond C, Shin C, Greenberg M, Clair S, Feinberg M. Substance-use outcomes at 18 months past baseline. American Journal of Preventive Medicine. 2007;32:395–402. doi:10.1016/j.amepre.2007.01.014.

- Spreewenberg M, Bartak A, Croon M, Hagenaars J, Buschblach J, Andrea H, Twisk J, Stijnen T. The multiple propensity score as control for bias in the comparison of more than two treatment arms. Medical Care. 2010;48:166–174. doi:10.1097/MLR.0b013e3181c1328f.

- Stuart EA, Perry DF, Le H-N, Ialongo NS. Estimating intervention effects of prevention programs: Accounting for noncompliance. Prevention Science. 2008;9:288–298. doi:10.1007/s11121-008-0104-y.

- Taylor B, Graham J, Cumsille P, Hansen W. Modeling prevention program effects on growth in substance use: Analysis of data from the Adolescent Alcohol Prevention Trial. Prevention Science. 2000;1:183–197. doi:10.1023/A:1026547128209.

- Toomey TL, Williams CL, Perry CL, Murray DM, Dudovitz B, Veblen-Mortenson S. An alcohol primary prevention program for parents of 7th graders: The Amazing Alternatives! Home Program. Journal of Child and Adolescent Substance Abuse. 1996;5:35–53.

- VanderWeele TJ. Ignorability and stability assumptions in neighborhood effects research. Statistics in Medicine. 2008;27:1934–1943. doi:10.1002/sim.3139.

- Williams CL, Perry CL, Dudovitz B, Veblen-Mortenson S, Anstine PS, Komro KA, Toomey TL. A home-based prevention program for sixth-grade alcohol use: Results from Project Northland. Journal of Primary Prevention. 1995;16:125–147. doi:10.1007/BF02407336.

- Winslow EB, Bonds D, Wolchik S, Sandler I, Braver S. Predictors of enrollment and retention in a preventive parenting intervention for divorced families. The Journal of Primary Prevention. 2009;30:151–172. doi:10.1007/s10935-009-0170-3.