Abstract

The most dramatic progress in the restoration of hearing takes place in the first months after cochlear implantation. To map the brain activity underlying this process, we used positron emission tomography (PET) at three time points: within 14 days, three months, and six months after switch-on. Fifteen recently implanted adult cochlear implant (CI) recipients listened to running speech or speech-like noise in four sequential PET sessions at each milestone. CI listeners with postlingual hearing loss showed differential activation of left superior temporal gyrus during speech and speech-like stimuli, unlike CI listeners with prelingual hearing loss. Furthermore, Broca's area was activated as an effect of time, but only in CI listeners with postlingual hearing loss. The study demonstrates that adaptation to the cochlear implant is highly related to the history of hearing loss. Speech processing in patients whose hearing loss occurred after the acquisition of language involves brain areas associated with speech comprehension, which is not the case for patients whose hearing loss occurred before the acquisition of language. Finally, the findings confirm the key role of Broca's area in restoration of speech perception, but only in individuals in whom Broca's area has been active prior to the loss of hearing.

1. Introduction

The cochlear implant (CI) transforms acoustic signals from the environment into electric impulses, which are then used to stimulate intact fibers of the auditory nerve. With this treatment, individuals with profound hearing loss (HL) are given the opportunity to gain or regain the sense of hearing. Current technology and speech processing strategies allow many CI recipients to achieve impressive accuracy in open-set speech recognition, and the CI is arguably the most effective neural prosthesis ever developed [1–3]. However, the success of the outcome depends both on duration of deafness prior to implantation [4, 5] and on the onset of deafness before (prelingually) [4–7] or after (postlingually) [8] critical stages in the acquisition of language. In many cases, the greatest gains of performance occur in the first three months of use [9–11]. The dramatic improvements following implantation not only demonstrate the efficiency of the CI technology, but also point to the role of cortical plasticity as a means to reactivate brain function.

Plasticity is a term used to describe the reorganization of the central nervous system by means of synaptic changes and rewiring of neural circuits. In cases of cochlear implantation, neural plasticity associated with deprivation of auditory input and adaptation to the absence of stimuli is of particular interest. Reduced input to the brain from impaired auditory pathways results in significant changes in the central auditory system [12] and is accompanied by a recruitment of deprived cortices in response to input from the intact senses [13–17]. When auditory input to the brain is reintroduced, this novel auditory experience may itself induce additional plasticity [18]. The sensory reafferentation provided by the CI thus offers a unique opportunity to study the effects of preceding deafness on functional brain organization.

In normal-hearing (NH) adults, language processing is associated with extensive frontal activation in the left cerebral hemisphere, including the anterior (Brodmann's Areas (BA) 45 and 47) and posterior (BA 44 and 45) parts of the left inferior frontal gyrus (LIFG), the latter often referred to as Broca's area [19, 20]. Traditionally, this area is mainly assigned an expressive language function, but several studies show a relationship between the perception of language and left frontal activity, both when stimuli are presented aurally [21–24] and visually [24–28]. Neuroimaging experiments comparing auditory responses of CI users and normal-hearing control participants, while listening to speech or complex nonspeech, generally reveal bilateral activity in the primary and secondary auditory cortices, including both superior and middle temporal gyri [12, 29–34]. One consistent outcome of these studies is the more dominant right temporal activity of CI users listening to speech, that is, the observation of more bilateral activity than would be expected on the basis of the classical presumption of left-lateralized activity of language processing in normal-hearing listeners [35]. However, in these studies, activation of other classic language regions such as Broca's area was not a consistent finding. Naito and colleagues found Broca's area to be activated only when the CI participants silently repeated sentences [31, 36]. Mortensen et al. [37] compared brain activity in experienced CI users according to their levels of speech comprehension performance. They found that single words and speech yielded raised activity in the left inferior prefrontal cortex (LIPC) in CI users with excellent speech perception, but not in CI users with low speech comprehension.

Some observed activations outside the classic language areas, including anterior cingulate, parietal regions, and left hippocampus, have been attributed to nonspecific attentional mechanisms and memory in CI users [31, 32]. Furthermore, some studies have reported convincing evidence of visual activity in response to auditory stimuli or auditory activity in response to visual stimuli in CI users. Although there is much debate about the identity of the brain systems that are changed and the mechanisms that mediate these changes, the general belief is that this cross-modal reorganization is associated with the strong visual speech-reading skills developed by CI users during the period of deafness, which are maintained or even improved after cochlear implantation, despite progressive recovery of auditory function [5, 11, 33, 38–41]. Possible reasons for these mixed results include differences in experimental paradigms, small sample sizes, heterogeneous populations, variance in statistical thresholds, and a lack of longitudinal control of plasticity.

With the present study, we tested the cortical mechanisms underlying the restoration of hearing and speech perception in the first six-month period following implant switch-on. We expected to see inactive neuronal pathways reactivated in CI recipients within three to six months of switch-on, and engagement of cortical areas resembling those of normal-hearing control participants. Furthermore, previous findings notwithstanding, we expected to see Broca's area involved in speech perception. Finally, we expected to see a difference in the progress of adaptation and the involvement of cortical areas between CI users with postlingual hearing loss and CI users with prelingual hearing loss.

2. Materials and Methods

2.1. Participants

Over the course of two years, patients who were approved for implantation were contacted by mail and invited to take part in the research project, including positron emission tomography (PET) and speech perception measures. From a total of 41 patients, 15 accepted and were included in the study (6 women, 9 men, M age = 51.8 years, age range: 21–73 years). All participants were unilaterally implanted. Four participants had a prelingual onset of HL, indicated by their estimated age at onset of deafness and main use of signed language as their communicative strategy. The remaining 11 participants had a postlingual or progressive onset of HL, as indicated by their main use of residual hearing, supported by lip-reading. In accordance with local practice, all CI participants followed standard aural/oral therapy for six months in parallel with the study. The therapy program includes weekly one-hour individually adapted sessions and trains speech perception and articulation. Table 1 lists the demographic and clinical data for the 15 participants.

Table 1.

Clinical and demographic data for the 15 participants included in the study.

| Participant (gender) | Age at project start (y) | Etiology of deafness | Side of implant | Pre/postᵈ | Duration of HL (y) | Degree of deafnessᵉ (dB HL) | Implant type | CI sound processor | CI sound processing strategy |
|---|---|---|---|---|---|---|---|---|---|
| CI 1 (F) | 49.8 | Cong. non spec.ᵃ | R | Post | 45.8 | 80–90 | Nucleusᶠ | Freedom | ACE 900 |
| CI 2 (F) | 21.4 | Ototoxic | R | Pre | 20.7 | >90 | Nucleus | Freedom | ACE 250 |
| CI 3 (M) | 31.7 | Meningitis | L | Post | 30.2 | 80–90 | Nucleus | Freedom | ACE 900 |
| CI 4 (M) | 56.0 | Cong. non spec. | R | Post | 48.0 | 80–90 | Nucleus | Freedom | ACE 1800 |
| CI 5 (F) | 70.3 | Cong. non spec. | R | Post | 30.3 | 80–90 | Nucleus | Freedom | ACE 900 |
| CI 6 (F) | 47.5 | Unknown | L | Post | 10.5 | 80–90 | Nucleus | Freedom | ACE 1200 |
| CI 7 (F) | 56.2 | Hered. non spec.ᵇ | R | Post | 37.6 | 80–90 | Nucleus | Freedom | ACE 1200 |
| CI 8 (M) | 58.5 | Meningitis | R | Pre | 53.5 | >90 | Nucleus | Freedom | ACE 900 |
| CI 9 (F) | 29.1 | Monᶜ | L | Post | 19.1 | 80–90 | Nucleus | Freedom | ACE 1200 |
| CI 10 (F) | 44.8 | Unknown | R | Post | 9.8 | 80–90 | Nucleus | Freedom | ACE 1200 |
| CI 11 (M) | 60.4 | Unknown | L | Post | 16.4 | 70–90 | Nucleus | Freedom | ACE 900 |
| CI 12 (F) | 50.6 | Cong. non spec. | R | Pre | 47.6 | >90 | A.B.ᵍ | Harmony | Fid. 120 |
| CI 13 (M) | 63.5 | Cong. non spec. | L | Pre | 57.5 | >90 | Nucleus | Freedom | ACE 500 |
| CI 14 (F) | 63.0 | Unknown | R | Post | 5.0 | 70–90 | Nucleus | Freedom | ACE 720 |
| CI 15 (M) | 73.3 | Trauma | R | Post | 19.3 | 70–90 | Nucleus | CP 810 | ACE 720 |
| Mean | 51.8 (SD 15) | | | | 29.7 | | | | |

ᵃNon specified congenital HL. ᵇNon specified hereditary HL. ᶜMondini dysplasia. ᵈPre- or postlingual HL. ᵉMeasured as the average of pure-tone hearing thresholds at 500, 1000, and 2000 Hz, expressed in dB with reference to normal thresholds. Ranges indicate a difference between left and right ear hearing thresholds. ᶠCochlear. ᵍAdvanced Bionics.

2.2. Normal-Hearing Reference

To obtain a normal-hearing reference, we recruited a group of NH adults (4 women, 2 men, M age = 54.29 years, age range: 47–64 years) for a single PET/test session. All NH participants met the criteria for normal-hearing by passing a full audiometric test.

All participants were right-handed Danish speakers and gender was not considered important [42].

2.3. Ethical Approval

The study was conducted at the PET center, Aarhus University Hospital, Denmark, in accordance with the Declaration of Helsinki, and approved by the Research Ethics Committee of the Central Denmark Region. Informed consent was obtained from all participants.

2.4. Design

NH participants underwent PET once, while CI participants were tested consecutively at three time points: (1) within 14 days after switch-on of the implant (baseline, BL), (2) after three months (midpoint, MP), and (3) after six months (endpoint, EP). For purposes of analysis, two subgroups were identified: (1) the postlingual (POST) HL subgroup (N = 11) and (2) the prelingual (PRE) HL subgroup (N = 4).

2.5. Behavioral Measures

We assessed the participants' speech perception progress by the Hagerman speech perception test [43]. The Hagerman test is an open-set test which presents sentences organized in lists of ten. The sentences have identical name-verb-number-adjective-noun structures, which the participant is required to repeat. Normally, the test is presented in background noise. However, considering the participants' inexperience with the implant, we removed the background noise. The participants were given one training list with feedback and two trial lists without feedback (max. score = 100 pts.). Sound was played back on a laptop computer through an active loudspeaker (Fostex 6301B, Fostex Company, Japan) placed in front of the participant. The stimuli were presented at 65 dB sound pressure level (SPL), and CI users were instructed to use their preferred CI settings during the entire test session and to adjust their processors to a comfortable loudness level. Furthermore, participants who used a hearing aid were instructed to turn it off and leave it plugged in. The CI participants performed the Hagerman tests in separate sessions at the same milestones as selected for PET data acquisition (BL, MP, and EP). Different lists were used at the three times of testing. The NH group performed a single Hagerman test along with their PET scan session.

To identify effects of time, we performed a repeated measures analysis of variance. Due to nonnormal distributions in the data, this was done using a nonparametric Friedman's ANOVA in the POST and PRE subgroups separately. Post hoc tests and between-group analyses were performed with Mann-Whitney nonparametric tests, Bonferroni corrected at alpha = 0.016. The Hagerman data were analyzed in SPSS and plotted with SigmaPlot for Windows 11.0 (Systat Software Inc.).
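The nonparametric procedure described above can be sketched in Python with SciPy. This is only an illustration: the study itself used SPSS, the scores below are hypothetical placeholders rather than the study data, and the helper names `effect_of_time` and `between_groups` are invented for this sketch. The corrected threshold is taken as 0.05 divided by three comparisons, which is an assumption about how the reported alpha of 0.016 was obtained.

```python
import numpy as np
from scipy.stats import friedmanchisquare, mannwhitneyu

# Hypothetical scores (0-100), NOT the study data.
# Rows are participants; columns are the milestones BL, MP, EP.
post = np.array([
    [30, 62, 80],
    [45, 70, 92],
    [10, 40, 66],
    [55, 81, 96],
    [25, 58, 77],
    [38, 65, 88],
])
pre = np.array([
    [0, 2, 6],
    [0, 0, 4],
    [0, 5, 3],
    [0, 1, 8],
])

ALPHA = 0.05
N_COMPARISONS = 3  # assumed: one comparison per milestone
alpha_corrected = ALPHA / N_COMPARISONS  # about 0.0167


def effect_of_time(scores):
    """Friedman's ANOVA across the three repeated measurements."""
    stat, p = friedmanchisquare(scores[:, 0], scores[:, 1], scores[:, 2])
    return float(stat), float(p)


def between_groups(a, b):
    """Two-sided Mann-Whitney U test per milestone with a Bonferroni threshold."""
    results = []
    for col, label in enumerate(("BL", "MP", "EP")):
        u, p = mannwhitneyu(a[:, col], b[:, col], alternative="two-sided")
        results.append((label, float(u), float(p), bool(p < alpha_corrected)))
    return results
```

Running `effect_of_time` once per subgroup mirrors the separate POST and PRE analyses, and `between_groups(post, pre)` mirrors the per-milestone group comparison.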

2.6. Apparatus and Stimuli

MRI. A high-resolution T1-weighted MR scan was acquired prior to PET scanning. In the case of CI participants, this was performed preoperatively.

Stimuli. All participants were examined in 2 conditions: (1) multitalker babble (BAB) from multiple simultaneous speakers with a complexity close to that of speech and perceived by the listeners as speech-like but devoid of meaning [44] and (2) “running” speech (RS), narrating the history of a familiar geographical locality at the rate of 142 words per minute, generated in Danish by a standard female voice [45]. The stimuli were played back on a laptop computer in the freeware sound editor software Audacity (http://audacity.sourceforge.net/) and delivered directly from the computer's headphone jack to the external input port of the implant speech processor. Bimodally aided participants removed their hearing aid and were fitted with an earplug in the nonimplanted ear during the tomography. These measures were taken to preclude background noise and possible cross-talk from the contralateral ear. The NH participants listened to the stimuli binaurally through a pair of headphones (AKG, K 271). All stimuli were presented at the most comfortable level. To define this level, participants were exposed to the two stimuli once before the tomography. In the tomograph, prior to bolus injection, participants had no information about the nature of the next stimulus, but they were instructed to listen attentively in all cases. After each of the four scans, participants were required to describe what they had heard and, if possible, review the content of the narration.

PET. Positron emission tomography (PET) is a molecular imaging method that measures brain activity by detecting changes in regional cerebral blood flow (rCBF). This is done by computing and comparing the spatial distributions of the uptake of a blood flow tracer. PET measurements are generally limited by their spatial and temporal resolution and by the invasiveness of the procedure, which requires injection of oxygen-15-labelled water. In this case, however, anatomical and temporal specificity could not have been improved by using functional magnetic resonance imaging (fMRI), as the auditory implants are not MRI compatible. In addition, PET is an almost noiseless imaging modality, which is useful both for CI participants and for the study of speech. Finally, because only the head of the participant is positioned in the tomograph, in contrast to the whole-body positioning required for fMRI, it is possible to communicate visually with the participant during tomography.

We measured raised or reduced cerebral activity as the change of the brain uptake of H₂¹⁵O (oxygen-15-labeled water), which matches the distribution of cerebral blood flow (CBF), using an ECAT EXACT HR 47 Tomograph (Siemens/CTI). Emission scans were initiated at 60,000 true counts per second after repeated intravenous bolus injections of doses of tracer with an activity of 500 MBq (13.5 mCi). The tomography took place in a darkened room with participants' eyes closed.

The babble and running speech conditions were duplicated, generating a total of four tomography sessions. The uptake lasted 90 seconds (single frame) at intervals of 10 min. Each frame registered 47 sections of the brain, each 3.1 mm thick. After correction for scatter and measured attenuation, each PET frame was reconstructed with filtered back projection and smoothed with a postreconstruction 10 mm Gaussian filter, resulting in a resolution of 11 mm full-width-at-half-maximum (FWHM).

2.7. Restrictions

Regulations stipulate that participants who volunteer for scientific experiments may receive a maximum total radiation dose of 6 millisieverts (mSv) within one year. Here, the total radiation dose administered over the three scanning sessions was approximately 5.58 mSv. Due to these restrictions, no preoperative baseline scans could be acquired.
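The dose budget can be made explicit with a short calculation. The sketch below uses only figures stated in the text (a 6 mSv annual limit, 5.58 mSv in total, 500 MBq per injection, three milestones with four scans each). The per-injection dose and the dose coefficient are derived here, not reported in the study, and they assume that every injection contributed equally at the nominal activity.

```python
ANNUAL_LIMIT_MSV = 6.0              # regulatory ceiling stated in the text
TOTAL_DOSE_MSV = 5.58               # total over the three milestones (stated)
ACTIVITY_PER_INJECTION_MBQ = 500.0  # stated tracer activity per injection
SESSIONS = 3                        # BL, MP, EP
SCANS_PER_SESSION = 4               # two conditions, each duplicated


def dose_budget():
    """Derive per-injection dose and remaining headroom (illustrative only)."""
    injections = SESSIONS * SCANS_PER_SESSION
    total_activity_mbq = injections * ACTIVITY_PER_INJECTION_MBQ
    # Assumption: all injections contribute equally to the total dose.
    per_injection_msv = TOTAL_DOSE_MSV / injections
    microsievert_per_mbq = TOTAL_DOSE_MSV / total_activity_mbq * 1000.0
    headroom_msv = ANNUAL_LIMIT_MSV - TOTAL_DOSE_MSV
    # Number of further injections that would still fit under the limit.
    extra_injections = int(headroom_msv // per_injection_msv)
    return {
        "injections": injections,
        "per_injection_msv": per_injection_msv,
        "microsievert_per_mbq": microsievert_per_mbq,
        "headroom_msv": headroom_msv,
        "extra_injections": extra_injections,
    }
```

Under these assumptions the remaining headroom is smaller than the dose of a single injection, which is consistent with the statement that no additional preoperative scan could be accommodated.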

2.8. Image Preprocessing

Participants' MR images were coregistered to an MR template averaged across 85 individual MR scans in Talairach space [46], using a combination of linear and nonlinear transformations [47]. Each summed PET emission recording was linearly coregistered to the corresponding MR image using automated algorithms. To accommodate individual anatomical differences and variation in gyral anatomy, the PET images were blurred with a Gaussian filter, resulting in a final isotropic resolution of 14 mm full-width-at-half-maximum (FWHM).
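For readers who want to relate the reported resolutions to filter widths, the sketch below uses the standard property that successive Gaussian blurs combine in quadrature. The kernel width needed to go from 11 mm to 14 mm FWHM is not stated in the text; the value computed here (roughly 8.7 mm) is an inference that holds only if both the image resolution and the filter are well approximated by Gaussians. The function names are hypothetical.

```python
import math

# Conversion factor between FWHM and the Gaussian standard deviation.
FWHM_TO_SIGMA = 1.0 / (2.0 * math.sqrt(2.0 * math.log(2.0)))  # about 0.4247


def fwhm_to_sigma(fwhm_mm):
    """Standard deviation (mm) of a Gaussian with the given FWHM (mm)."""
    return fwhm_mm * FWHM_TO_SIGMA


def combined_fwhm(*fwhms_mm):
    """FWHM after applying several Gaussian blurs in sequence."""
    return math.sqrt(sum(f * f for f in fwhms_mm))


def implied_kernel_fwhm(before_mm, after_mm):
    """Kernel FWHM that takes a Gaussian resolution from before_mm to after_mm."""
    if after_mm < before_mm:
        raise ValueError("smoothing cannot improve resolution")
    return math.sqrt(after_mm ** 2 - before_mm ** 2)


# Inferred, not reported: kernel that takes 11 mm FWHM to 14 mm FWHM.
kernel_mm = implied_kernel_fwhm(11.0, 14.0)
```

The same relation applied to the reconstruction step (a 10 mm filter giving 11 mm) would imply an intrinsic resolution of about 4.6 mm, again under the Gaussian assumption.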

2.9. Data Analysis

All images were processed using Statistical Parametric Mapping 8 (SPM8; Wellcome Neuroimaging Department, UK; http://www.fil.ion.ucl.ac.uk/spm/). Local maxima of activation clusters were identified using the Montreal Neurological Institute (MNI) coordinate system and then cross-referenced with a standard anatomical brain coordinate atlas [46]. Differences in global activity were controlled using proportional normalization (gray matter average per volume). The significance threshold for task main effects was set to P < 0.05, family-wise error (FWE) corrected for multiple comparisons. We tested the effect of side of implant and type of implant in a separate preanalysis. As we found neither main effects of these variables nor interactions between them and the functional data, we concluded that these factors had no significant effect on the results. They were thus not included in further analyses.
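Proportional normalization, as mentioned above, rescales each volume so that its gray matter average matches a common value, which removes scan-to-scan differences in global tracer uptake. The NumPy sketch below illustrates the idea only; it is not the SPM8 implementation, the function name is hypothetical, and the target value of 50 is an arbitrary choice made for this example.

```python
import numpy as np


def proportional_normalize(volume, gray_mask, target=50.0):
    """Scale a volume so that its gray-matter mean equals `target`.

    volume    : array of voxel values for one scan
    gray_mask : boolean array of the same shape marking gray-matter voxels
    target    : common gray-matter mean after scaling (assumed value)
    """
    volume = np.asarray(volume, dtype=float)
    gray_mask = np.asarray(gray_mask, dtype=bool)
    if not gray_mask.any():
        raise ValueError("gray-matter mask is empty")
    gm_mean = volume[gray_mask].mean()
    if gm_mean <= 0:
        raise ValueError("gray-matter mean must be positive")
    return volume * (target / gm_mean)
```

Two scans that share the same spatial pattern but differ in overall intensity become identical after this scaling, so only regional differences remain for the statistical model.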

Three analyses were performed as described below.

Analysis 1. The first analysis identified the main effects of time, speech/babble contrast, and history of hearing loss (POST HL versus PRE HL) and possible interactions between these effects in the whole brain, across CI participants. This analysis was performed as a single SPM matrix in a factorial 3-way design with time, contrast, and subgroup as factors. To define a region-of-interest (ROI), we created a mask based on the main effect of contrast.

Analysis 2. The second analysis identified possible main effects of contrast, time, and interactions between these factors in the inferior frontal gyrus (IFG), more specifically Broca's area (BA 44/45). This analysis was performed as two 2-way factorial analyses of the POST HL and the PRE HL subgroups separately. To define a region-of-interest (ROI), we created a mask based on bilateral inferior frontal gyri (Broca's region), including the putative Brodmann regions 44, 45, and 47 using the WFU pick-atlas [48].

Analysis 3. The third analysis investigated main effects of contrast and group (CI versus NH) at the CI baseline and possible interactions between these effects. This analysis was performed as a single SPM matrix in a factorial 2-way design with condition and group as factors. To define a ROI, we created a mask based on the main effect of contrast.
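The logic of restricting a test to a region of interest defined by another effect, as in Analyses 1 and 3, can be sketched with NumPy. The study used SPM8 and the WFU pick-atlas for this purpose; the code below is a simplified illustration with hypothetical function names and an arbitrary threshold, and it assumes the statistic maps are already available as arrays on the same voxel grid.

```python
import numpy as np


def roi_mask_from_effect(stat_map, threshold):
    """Boolean mask of voxels where a main-effect statistic exceeds a threshold."""
    return np.asarray(stat_map) > threshold


def peak_within_roi(stat_map, mask):
    """Location and value of the largest statistic inside the mask."""
    stat_map = np.asarray(stat_map, dtype=float)
    mask = np.asarray(mask, dtype=bool)
    if not mask.any():
        raise ValueError("ROI mask is empty")
    # Voxels outside the ROI are excluded from the search.
    masked = np.where(mask, stat_map, -np.inf)
    flat_index = int(np.argmax(masked))
    index = tuple(int(i) for i in np.unravel_index(flat_index, masked.shape))
    return index, float(stat_map[index])
```

Because the search is confined to the masked voxels, the correction for multiple comparisons covers a smaller volume than in a whole-brain analysis, which is why an effect can reach significance in the ROI analysis without doing so across the whole brain.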

3. Results

3.1. Analysis 1

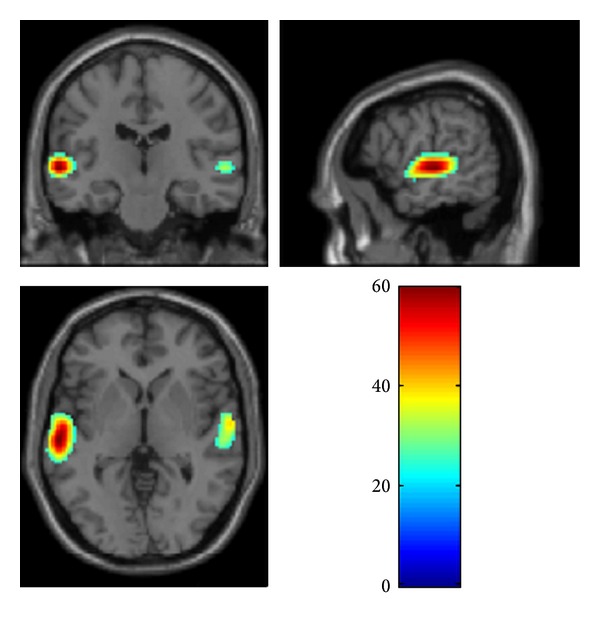

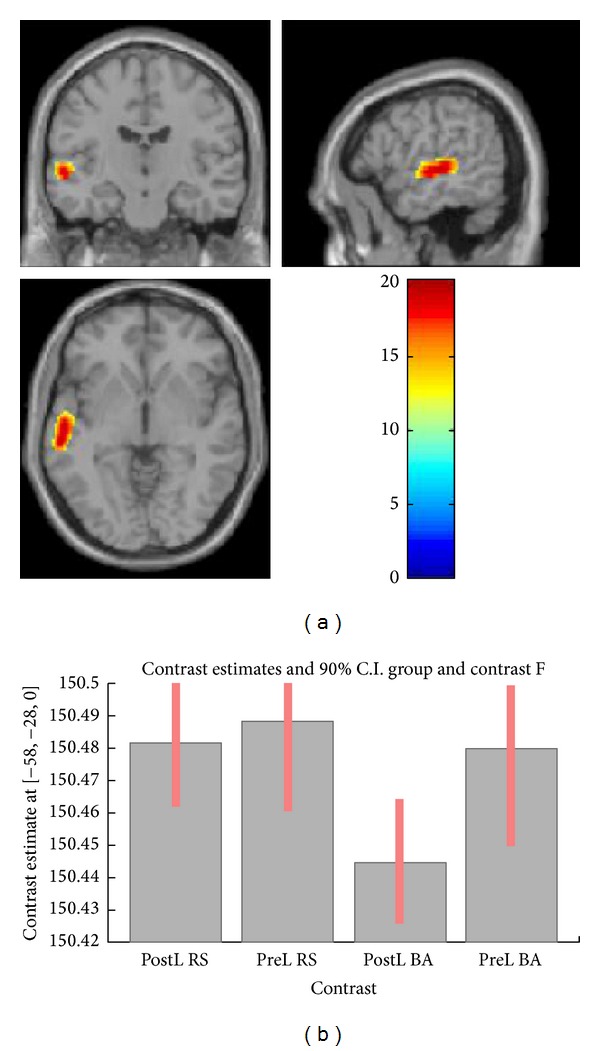

We found a main effect of speech/babble contrast across CI participants, regardless of subgroup, in bilateral superior temporal gyri (F(1,78) = 60.14). A t-test confirmed that the effect was driven by higher activity during running speech (Table 2; Figure 1). There was no significant main effect of time or group, nor any interaction between the effects. The ROI analysis revealed significant interaction between the effects of contrast and group in BA 21/22 in the left superior temporal gyrus (F(1,78) = 20.42) (Table 2; Figure 2). A plot of contrast estimates showed a difference between running speech and babble that was larger in the postlingual subgroup than in the prelingual subgroup (Figure 2).

Table 2.

Main effects and interactions of analysis 1 performed across CI participants on the whole brain (top) and on a region-of-interest based on main effect of contrast (bottom).

| Effect | Coordinate x | Coordinate y | Coordinate z | Z score | Region | Brodmann area |
|---|---|---|---|---|---|---|
| Whole brain analysis | | | | | | |
| Main effect of contrast (RS versus BAB) | −58 | −20 | 0 | 6.55 | L STG | BA 21/22 |
| | 58 | 0 | −6 | 5.67 | R STG | BA 21/22 |
| | 64 | −10 | 0 | 5.47 | R STG | BA 21 |
| Main effect of time | NS | | | | | |
| Interaction time x contrast | NS | | | | | |
| Main effect of group | NS | | | | | |
| Interaction contrast x group | NS | | | | | |
| Interaction time x group | NS | | | | | |
| Region of interest analyses | | | | | | |
| Main effect of group | NS | | | | | |
| Main effect of time | NS | | | | | |
| Interaction contrast x group | −58 | −26 | 0 | 4.09 | L STG | BA 21/22 |
| | −56 | −16 | −3 | 3.24 | L MTG | BA 21 |

RS: running speech. BAB: babble. L STG: left superior temporal gyrus. R STG: right superior temporal gyrus. L MTG: left middle temporal gyrus, NS: non-significant.

Figure 1.

Activation map for main effect of contrast across subgroups in the whole brain analysis showing greater activity in superior temporal gyri (BA 21/22) during speech comprehension.

Figure 2.

(a) Activation map for interaction between effect of contrast and effect of PRE/POST subgroup in the ROI analysis (L BA 21/22) showing greater activation during speech for the postlingual group. (b) Contrast estimates of conditions in the two subgroups showing a larger difference in the postlingual subgroup than in the prelingual subgroup. PostL: postlingual group; PreL: prelingual group; RS: running speech condition; BA: multitalker babble condition.

3.2. Analysis 2

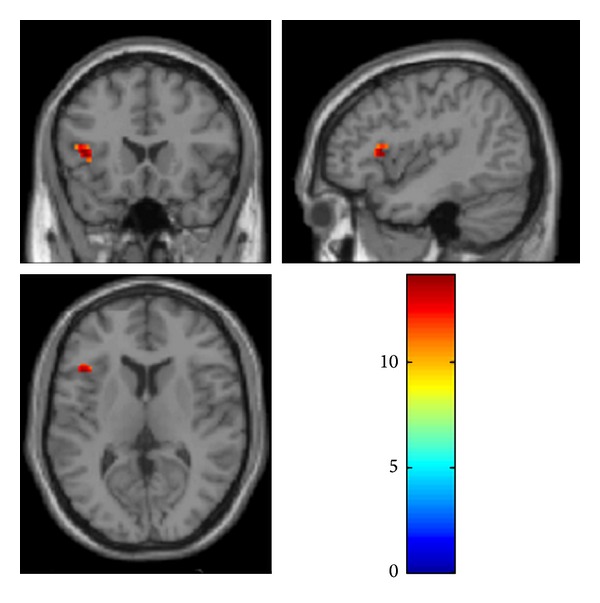

In the bilateral IFG ROI analysis, we found a main effect of speech/babble contrast in BA 47 in the postlingual subgroup. A t-test confirmed that the effect was driven by higher activity during running speech. Furthermore, we found a main effect of time in left IFG (Broca's area BA 45; F(2,60) = 14.19; P = 0.006, FWE corrected) (Figure 3), with no significant interaction between contrast and time. The prelingual subgroup had no main effects in the bilateral IFG ROI analysis (Table 3).

Figure 3.

Activation map for main effect of time in the separate analysis of the POST HL group with ROI based on bilateral IFG showing activation of left IFG (Broca's area BA 45).

Table 3.

Main effects and interactions of analysis 2 performed separately for the two CI-subgroups on a region-of-interest based on bilateral inferior frontal gyri.

| Effect | Coordinate x | Coordinate y | Coordinate z | Z score | Region | Brodmann area |
|---|---|---|---|---|---|---|
| POST HL subgroup | | | | | | |
| Region of interest analysis (bil. IFG) | | | | | | |
| Main effect of contrast (RS versus BAB) | −46 | 14 | −6 | 4.91 | L IFG | BA 47 |
| | 52 | 16 | −6 | 3.85 | R IFG | BA 47 |
| | 46 | 16 | −9 | 3.74 | R IFG | BA 47 |
| Main effect of time | −42 | 20 | 9 | 4.29 | L IFG | BA 45 |
| Interaction contrast x time | NS | | | | | |
| PRE HL subgroup | | | | | | |
| Main effect of contrast (RS versus BAB) | NS | | | | | |
| Main effect of time | NS | | | | | |

L IFG: left inferior frontal gyrus. R IFG: right inferior frontal gyrus.

3.3. Analysis 3

We found a main effect of speech/babble contrast across the CI and NH groups bilaterally in superior temporal gyri, in the left middle temporal gyrus, and in the right inferior parietal lobule. t-tests showed that the superior temporal gyri bilaterally and the left middle temporal gyrus were more active during running speech, while the right inferior parietal lobule was more active during babble. We found a main effect of CI versus NH exclusively in the caudate nucleus. A t-test showed that this effect was due to higher activity of this area in the NH group than in the CI group. No interaction was found between the effect of contrast and the effect of group in whole-brain analysis (Table 4).

Table 4.

Main effects and interactions of analysis 3 performed across CI- and NH-participants at baseline on the whole brain (top) and on a region-of-interest based on main effect of contrast (bottom).

| Effect | Coordinate x | Coordinate y | Coordinate z | Z score | Region | Brodmann area |
|---|---|---|---|---|---|---|
| Cochlear Implant group versus NH group | | | | | | |
| Main effect of contrast (RS versus BAB) | −58 | −18 | 0 | 6.7 | L STG | BA 22/21 |
| | −54 | 4 | −9 | 5.25 | L MTG | BA 21/38 |
| | 62 | −8 | 0 | 6.53 | R STG | BA 21/22 |
| | 54 | −44 | 36 | 4.64 | R IPL | BA 40 |
| Main effect of group | 12 | 20 | 3 | 4.84 | Caudate | |
| Interaction contrast x group | NS | | | | | |
| Region of interest analyses | | | | | | |
| Main effect of CI versus NH (ROI) | 58 | −8 | 6 | 3.37 | R STG | BA 22 |
| Interaction contrast x group (ROI) | 52 | −46 | 39 | 3.45 | R IPL | BA 40 |

L STG: left superior temporal gyrus. R STG: right superior temporal gyrus. L MTG: left middle temporal gyrus. R IPL: right inferior parietal lobule.

The ROI analysis based on the main effect of contrast yielded a main effect of CI versus NH in secondary auditory cortex including BA 22 in the right superior temporal gyrus. A t-test showed that this effect was due to higher activity of this area in the NH group than in the CI group. Furthermore, in the ROI analysis, we found an interaction between the effect of speech/babble contrast and the effect of group in the right inferior parietal lobule (Table 4).

3.4. Behavioral Measures

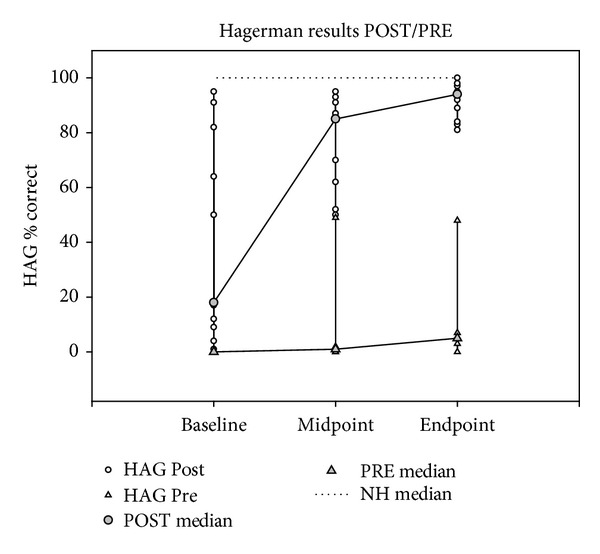

We found a significant effect of time in the POST group (χ²(2,2) = 19.62, P < 0.001), but not in the PRE group. Post hoc tests showed that the effect was driven by the gain of 51.3 percentage points at endpoint (Mann-Whitney U = 12.5, P = 0.002). Furthermore, the POST group scored significantly higher than the PRE group at all three points of measurement (BL: Mann-Whitney U = 0.000, P = 0.004; MP: Mann-Whitney U = 0.000, P = 0.004; EP: Mann-Whitney U = 0.000, P = 0.004). Ceiling performance (100% correct) was observed in all NH participants (Figure 4).

Figure 4.

Mixed line and scatter plot showing individual and median Hagerman speech perception scores for the POST and the PRE subgroups at the three milestones. The dotted line represents the median score of the NH reference group.

4. Discussion

In this study, we aimed to examine the plastic changes which underlie the recovery of hearing after cochlear implantation. Our results showed that across all CI recipients and all points of measurement, the bilateral middle and superior temporal gyri (including, more specifically, Brodmann areas 21 and 22) were significantly more active when participants listened to running speech than when they listened to multitalker babble. Thus, on average, the auditory brain regions known to be involved in the processing of complex auditory stimuli [49–51] displayed a clear distinction between speech-like noise and speech in recently implanted CI recipients. This confirms that both hemispheres are involved in the speech perception process, even during monaural auditory stimulation [29, 36, 52, 53].

Furthermore, the results showed a difference in the way CI recipients with postlingual hearing loss and recipients with prelingual hearing loss distinguish between speech and babble. The CI users with postlingual hearing loss displayed a greater activation during speech than during babble in BA 21 and 22 in the left superior temporal gyrus, indicating differential processing of the stimuli by the two subgroups. We speculate that the postlingually deaf listeners disengage attention when they are presented with the incomprehensible babble stimulus. This disengagement is then reflected in decreased temporal brain activity. In contrast, the prelingually deaf CI listeners may be equally attentive to the two stimuli, regardless of their nature, as reflected in undifferentiated activity.

This difference between postlingual and prelingual deafness was mirrored in the behavioral measures. The postlingual subgroup not only possessed a moderate level of speech perception at baseline (within 14 days after switch-on of the implant), but also made significant gains in performance, the majority of which occurred in the first three-month period. In contrast, the prelingual subgroup had no baseline speech perception and only modest, if any, progress during the study period. This finding is consistent with expectation and implies an association between behavioral performance and brain activity related to the history of hearing loss. In prelingual deafness, the neuronal connections of the auditory pathways (e.g., measured as cortical auditory evoked potentials) may not be established in the appropriate time window of opportunity [54–56]. The subsequent electric stimulation at some time in adulthood may produce some hearing sensation, but the discrimination of sounds and time intervals remains defective [57, 58]. The findings are compatible with Naito et al. [29], who suggested that the reduced speech activation in prelingually deaf implant users could be explained by insufficient development of neuronal networks or their degeneration due to prolonged deafness. Follow-up studies in the present population may provide interesting insight into the degree to which speech perception progresses in the prelingual subgroup in the long term.

We found a main effect of time exclusively in Broca's area, and only in the postlingual subgroup. This is in line with recent studies showing that, in CI users, the activation of Broca's area during speech processing is negatively correlated with the duration of deafness and positively correlated with the progress in the restoration of speech comprehension [7, 8, 37, 59]. This indicates that the changes in the auditory recovery process are most profoundly manifested in this specific area, which is associated with speech perception and production. Surprisingly, we found no interaction between the speech/babble contrast and time. This suggests either that the area becomes increasingly activated regardless of whether the stimulus makes semantic sense, or that it is active in the distinction between sense and nonsense. However, the absence of difference between speech and babble in Broca's area may also be explained by an increasingly high activity at rest in CI patients, as reported by Strelnikov et al. [39].

4.1. Cochlear Implanted Participants at Baseline versus Normal-Hearing Participants

Our whole-brain analysis revealed a significant activation of bilateral superior temporal gyri and left middle temporal gyrus during speech across CI participants at baseline and NH individuals. This is partly consistent with Naito et al. [31], who, in addition to bilateral superior temporal gyri, found significant speech activation in the right middle temporal gyrus, the left posterior inferior frontal gyrus (Broca's area), and the left hippocampus in both normal participants and CI users. These further findings could be explained by the use of silence as contrast relative to the use of babble in the present study. Interestingly, even though the NH participants received unilateral stimulation (6 right; 6 left), the Naito study found no significant difference in any brain area in either NH participants or CI users between right ear stimulation and left ear stimulation.

The significant involvement of the right inferior parietal lobule in the CI participants during babble suggests that, at this initial stage of the CI adaptation, CI listeners, unlike NH individuals, need to pay attention to the speech-like noise to determine its possible character [60]. The observation that during speech stimulation the NH participants involved the caudate nucleus more than the CI participants may be explained by a reduction of the effort needed by the NH participants to deal with the well-known task of receiving a message. The caudate nucleus is a part of the striatum, which subserves, among other tasks, the learning of slowly modulated skills or habits [61]. To the normal-hearing listener, the reception of auditory information is an everyday experience similar to following a known route; see Wallentin et al. [23] for a similar argument. In contrast, to CI listeners, auditory stimuli are nonhabitual in the strongest sense of the word, thus relying on other sources of processing. Interestingly, Naito et al. [31] made a similar finding and speculated that, although the caudate nucleus has been associated with various tasks ranging from sensorimotor tasks to pure thinking, the result may provide further evidence that the area has a cognitive function and shows increased activity along with the increased activity in cortical language areas.

In contrast to the significantly higher right-lateralized activation observed in NH individuals in the present study, Giraud et al. [33] showed a left-lateralized activation of temporal and frontal regions in NH controls. However, direct comparison between the two studies is difficult, as they differ in several aspects of study design and in the implant experience of the participants. Mortensen et al. [37] found increased activity in right cerebellar cortex when running speech was comprehended relative to babble, but only in CI listeners with high speech comprehension. The authors speculated that this could be due to cognitive work of the cerebellum subserving verbal working memory or a contribution of the right cerebellar hemisphere to precise representation of temporal information for phonetic processing. However, this finding was not replicated in the present study, which may reflect differences in duration of implant use and a mixture of speech comprehension levels.

4.2. Cross-Modal Plasticity

Giraud and colleagues [34] consistently demonstrated activation of areas BA 17/18 in the visual cortex when CI users responded to meaningful sounds. The authors argued that the process was associated with improvement of lip-reading proficiency, which is supported by findings in a behavioral study by Rouger [11]. This cross-modal interaction between vision and hearing was not replicated in the current study. Differences in the methodology used in the two studies may explain this discrepancy. The Giraud study involved repetition of words and syllables and naming of environmental sounds, contrasted with noise bursts, as opposed to the current study, which involved passive listening to a story contrasted with speech-like noise. Furthermore, in the Giraud study, sound was presented in free field, whereas in the current study, the auditory stimuli bypassed the microphones of the speech processor and were fed directly to the auxiliary input. Finally, the strict conservative statistical methods used here preclude reporting of results that are not statistically significant when corrected for multiple comparisons.

4.3. Limitations of the Study

In Scandinavia, as in most European countries, cochlear implantation is administered by the public health care system and offered free of charge to all patients who meet the clinical implantation criteria (<40% open-set word-recognition scores). This includes patients with a prelingual hearing loss, despite recognition that these patients may have very limited linguistic benefit. As a result of this policy, the group of CI users in general is very heterogeneous, which was also the case in this study. While the difference in group size was far from optimal and may have confounded the results, we maintain that it is of high importance to also study cortical activity in prelingually deaf patients following cochlear implantation. In a previous study involving music training, we found that while little gain was achieved in speech perception, prelingual deafness did not preclude acquisition of some aspects of music perception [62]. This implies that plastic changes also take place in the long-term deaf brain and that specific training measures could help these patients achieve an improved implant outcome [58, 63].

Our normal-hearing control group was smaller than optimal, which may have made direct comparisons between groups less valid than desired. However, ethical restrictions limit the number of healthy participants in studies involving PET.

As stated, the degree of deafness (i.e., residual hearing) varied across subjects and may have influenced the adaptation to the implant. Unfortunately, a correlation analysis between preoperative speech understanding and PET and behavioral results was not possible since such data were not available for all participants.

5. Conclusion

The present PET study tested brain activation patterns in a group of recently implanted adult CI recipients and a group of normal-hearing controls, who listened to speech and nonspeech stimuli. CI listeners with postlingual hearing loss showed differential activation of left superior temporal gyrus during speech and speech-like stimuli, unlike CI listeners with prelingual hearing loss. This group difference was also reflected in a behavioral advantage for patients with postlingual hearing loss. Furthermore, Broca's area was activated as an effect of time, but only in CI listeners with postlingual hearing loss. Comparison of the CI listeners and the normal-hearing controls revealed significantly higher activation of the caudate nucleus in the normal-hearing listeners. The study demonstrates that processing of the information provided by the cochlear implant is highly related to the history of hearing loss. Patients whose hearing loss occurred after the acquisition of language recruit brain areas associated with speech comprehension, which is not the case for patients whose hearing loss occurred before the acquisition of language. Finally, the findings confirm the key role of Broca's area in restoration of speech perception, but only in individuals in whom Broca's area has been active prior to the loss of hearing.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

Acknowledgments

The authors wish to dedicate this article to the memory of Dr. Malene Vejby Mortensen, PhD, who passed away on August 5th, 2011 from leukemia. She was an eminent MD and ENT specialist, who was dedicated to research in cochlear implant outcome and prognostication and was a driving force in the implementation and completion of this study. The authors also wish to acknowledge all of the participants for their unrestricted commitment to the study. Furthermore, they wish to thank Minna Sandahl, Roberta Hardgrove Hansen, Anne Marie Ravn, and Susanne Mai from the Department of Audiology, Aarhus University Hospital, for invaluable help with participant recruitment and provision of clinical data. Finally, they thank Kim Vang Hansen and Anders Bertil Rodell for assistance with preprocessing of PET data; Arne Møller, Helle Jung Larsen, and Birthe Hedegaard Jensen for assistance in scheduling and completion of PET scans; and Mads Hansen for assistance with analysis of behavioral data.

References

- 1. Friesen LM, Shannon RV, Baskent D, Wang X. Speech recognition in noise as a function of the number of spectral channels: comparison of acoustic hearing and cochlear implants. Journal of the Acoustical Society of America. 2001;110(2):1150–1163. doi: 10.1121/1.1381538.

- 2. Wilson BS, Dorman MF. The surprising performance of present-day cochlear implants. IEEE Transactions on Biomedical Engineering. 2007;54(6, part 1):969–972. doi: 10.1109/TBME.2007.893505.

- 3. Moore DR, Shannon RV. Beyond cochlear implants: awakening the deafened brain. Nature Neuroscience. 2009;12(6):686–691. doi: 10.1038/nn.2326.

- 4. Gantz BJ, Tyler RS, Woodworth GG, Tye-Murray N, Fryauf-Bertschy H. Results of multichannel cochlear implants in congenital and acquired prelingual deafness in children: five-year follow-up. American Journal of Otology. 1994;15(supplement 2):1–7.

- 5. Tyler RS, Parkinson AJ, Woodworth GG, Lowder MW, Gantz BJ. Performance over time of adult patients using the Ineraid or Nucleus cochlear implant. Journal of the Acoustical Society of America. 1997;102(1):508–522. doi: 10.1121/1.419724.

- 6. Manrique M, Cervera-Paz FJ, Huarte A, Perez N, Molina M, García-Tapia R. Cerebral auditory plasticity and cochlear implants. International Journal of Pediatric Otorhinolaryngology. 1999;49(supplement 1):193–197. doi: 10.1016/s0165-5876(99)00159-7.

- 7. Caposecco A, Hickson L, Pedley K. Cochlear implant outcomes in adults and adolescents with early-onset hearing loss. Ear and Hearing. 2012;33(2):209–220. doi: 10.1097/AUD.0b013e31822eb16c.

- 8. Green KMJ, Julyan PJ, Hastings DL, Ramsden RT. Auditory cortical activation and speech perception in cochlear implant users: effects of implant experience and duration of deafness. Hearing Research. 2005;205(1-2):184–192. doi: 10.1016/j.heares.2005.03.016.

- 9. Spivak LG, Waltzman SB. Performance of cochlear implant patients as a function of time. Journal of Speech and Hearing Research. 1990;33(3):511–519. doi: 10.1044/jshr.3303.511.

- 10. Ruffin CV, Tyler RS, Witt SA, Dunn CC, Gantz BJ, Rubinstein JT. Long-term performance of Clarion 1.0 cochlear implant users. Laryngoscope. 2007;117(7):1183–1190. doi: 10.1097/MLG.0b013e318058191a.

- 11. Rouger J. Evidence that cochlear-implanted deaf patients are better multisensory integrators. Proceedings of the National Academy of Sciences of the United States of America. 2007;104(17):7295–7300. doi: 10.1073/pnas.0609419104.

- 12. Lee JS, Lee DS, Seung HO, et al. PET evidence of neuroplasticity in adult auditory cortex of postlingual deafness. The Journal of Nuclear Medicine. 2003;44(9):1435–1439.

- 13. Giraud AL, Truy E, Frackowiak R. Imaging plasticity in cochlear implant patients. Audiology and Neuro-Otology. 2001;6(6):381–393. doi: 10.1159/000046847.

- 14. Rettenbach R, Diller G. Do deaf people see better? Texture segmentation and visual search compensate in adult but not in juvenile subjects. Journal of Cognitive Neuroscience. 1999;11(5):560–583. doi: 10.1162/089892999563616.

- 15. Bavelier D, Tomann A, Hutton C, et al. Visual attention to the periphery is enhanced in congenitally deaf individuals. The Journal of Neuroscience. 2000;20(17):RC93. doi: 10.1523/JNEUROSCI.20-17-j0001.2000.

- 16. Röder B, Teder-Sälejärvi W, Sterr A, Rösler F, Hillyard SA, Neville HJ. Improved auditory spatial tuning in blind humans. Nature. 1999;400(6740):162–166. doi: 10.1038/22106.

- 17. Weeks R, Horwitz B, Aziz-Sultan A, et al. A positron emission tomographic study of auditory localization in the congenitally blind. The Journal of Neuroscience. 2000;20(7):2664–2672. doi: 10.1523/JNEUROSCI.20-07-02664.2000.

- 18. Ito K. Cortical activation shortly after cochlear implantation. Audiology and Neuro-Otology. 2004;9(5):282–293. doi: 10.1159/000080228.

- 19. Poldrack RA, Wagner AD, Prull MW, Desmond JE, Glover GH, Gabrieli JDE. Functional specialization for semantic and phonological processing in the left inferior prefrontal cortex. NeuroImage. 1999;10(1):15–35. doi: 10.1006/nimg.1999.0441.

- 20. Amunts K, Zilles K. Architecture and organizational principles of Broca's region. Trends in Cognitive Sciences. 2012;16(8):418–426. doi: 10.1016/j.tics.2012.06.005.

- 21. Harasty J, Binder JR, Frost JA, et al. Language processing in both sexes: evidence from brain studies (multiple letters). Brain. 2000;123(2):404–406. doi: 10.1093/brain/123.2.404.

- 22. Davis MH, Johnsrude IS. Hierarchical processing in spoken language comprehension. The Journal of Neuroscience. 2003;23(8):3423–3431. doi: 10.1523/JNEUROSCI.23-08-03423.2003.

- 23. Wallentin M, Roepstorff A, Glover R, Burgess N. Parallel memory systems for talking about location and age in precuneus, caudate and Broca’s region. NeuroImage. 2006;32(4):1850–1864. doi: 10.1016/j.neuroimage.2006.05.002.

- 24. Christensen KR, Wallentin M. The locative alternation: distinguishing linguistic processing cost from error signals in Broca’s region. NeuroImage. 2011;56(3):1622–1631. doi: 10.1016/j.neuroimage.2011.02.081.

- 25. Burholt Kristensen L, Engberg-Pedersen E, Højlund Nielsen A, Wallentin M. The influence of context on word order processing: an fMRI study. Journal of Neurolinguistics. 2013;26(1):73–88.

- 26. Price CJ, Wise RJS, Warburton EA, et al. Hearing and saying. The functional neuro-anatomy of auditory word processing. Brain. 1996;119(3):919–931. doi: 10.1093/brain/119.3.919.

- 27. Friederici AD, Opitz B, von Cramon DY. Segregating semantic and syntactic aspects of processing in the human brain: an fMRI investigation of different word types. Cerebral Cortex. 2000;10(7):698–705. doi: 10.1093/cercor/10.7.698.

- 28. Seghier ML, Boëx C, Lazeyras F, Sigrist A, Pelizzone M. fMRI evidence for activation of multiple cortical regions in the primary auditory cortex of deaf subjects users of multichannel cochlear implants. Cerebral Cortex. 2005;15(1):40–48. doi: 10.1093/cercor/bhh106.

- 29. Naito Y, Hirano S, Honjo I, et al. Sound-induced activation of auditory cortices in cochlear implant users with post- and prelingual deafness demonstrated by Positron Emission Tomography. Acta Oto-Laryngologica. 1997;117(4):490–496. doi: 10.3109/00016489709113426.

- 30. Wong D, Miyamoto RT, Pisoni DB, Sehgal M, Hutchins GD. PET imaging of cochlear-implant and normal-hearing subjects listening to speech and nonspeech. Hearing Research. 1999;132(1-2):34–42. doi: 10.1016/s0378-5955(99)00028-3.

- 31. Naito Y, Tateya I, Fujiki N, et al. Increased cortical activation during hearing of speech in cochlear implant users. Hearing Research. 2000;143(1-2):139–146. doi: 10.1016/s0378-5955(00)00035-6.

- 32. Giraud A, Truy E, Frackowiak RSJ, Grégoire M, Pujol J, Collet L. Differential recruitment of the speech processing system in healthy subjects and rehabilitated cochlear implant patients. Brain. 2000;123(part 7):1391–1402. doi: 10.1093/brain/123.7.1391.

- 33. Giraud A, Price CJ, Graham JM, Truy E, Frackowiak RSJ. Cross-modal plasticity underpins language recovery after cochlear implantation. Neuron. 2001;30(3):657–663. doi: 10.1016/s0896-6273(01)00318-x.

- 34. Giraud AL, Price CJ, Graham JM, Frackowiak RSJ. Functional plasticity of language-related brain areas after cochlear implantation. Brain. 2001;124(part 7):1307–1316. doi: 10.1093/brain/124.7.1307.

- 35. Gjedde A. Gradients of the brain. Brain. 1999;122(11):2013–2014. doi: 10.1093/brain/122.11.2013.

- 36. Naito Y, Okazawa H, Honjo I, et al. Cortical activation with sound stimulation in cochlear implant users demonstrated by positron emission tomography. Cognitive Brain Research. 1995;2(3):207–214. doi: 10.1016/0926-6410(95)90009-8.

- 37. Mortensen MV, Mirz F, Gjedde A. Restored speech comprehension linked to activity in left inferior prefrontal and right temporal cortices in postlingual deafness. NeuroImage. 2006;31(2):842–852. doi: 10.1016/j.neuroimage.2005.12.020.

- 38. Rouger J, Lagleyre S, Démonet J, Fraysse B, Deguine O, Barone P. Evolution of crossmodal reorganization of the voice area in cochlear-implanted deaf patients. Human Brain Mapping. 2012;33(8):1929–1940. doi: 10.1002/hbm.21331.

- 39. Strelnikov K, Rouger J, Demonet J-F, et al. Does brain activity at rest reflect adaptive strategies? Evidence from speech processing after cochlear implantation. Cerebral Cortex. 2010;20(5):1217–1222. doi: 10.1093/cercor/bhp183.

- 40. Sandmann P, Dillier N, Eichele T, et al. Visual activation of auditory cortex reflects maladaptive plasticity in cochlear implant users. Brain. 2012;135(part 2):555–568. doi: 10.1093/brain/awr329.

- 41. Buckley KA, Tobey EA. Cross-modal plasticity and speech perception in pre- and postlingually deaf cochlear implant users. Ear and Hearing. 2011;32(1):2–15. doi: 10.1097/AUD.0b013e3181e8534c.

- 42. Wallentin M. Putative sex differences in verbal abilities and language cortex: a critical review. Brain and Language. 2009;108(3):175–183. doi: 10.1016/j.bandl.2008.07.001.

- 43. Wagener K, Josvassen JL, Ardenkjaer R. Design, optimization and evaluation of a Danish sentence test in noise. International Journal of Audiology. 2003;42(1):10–17. doi: 10.3109/14992020309056080.

- 44. ICRA. ICRA Noise Signals Ver. 0.3. International Collegium of Rehabilitative Audiology; 1997.

- 45. Elberling C, Ludvigsen C, Lyregaard PE. Dantale: a new Danish speech material. Scandinavian Audiology. 2010;18(3):169–175. doi: 10.3109/01050398909070742.

- 46. Talairach J, Tournoux P. A Co-Planar Stereotaxic Atlas of the Human Brain. New York, NY, USA: Thieme; 1988.

- 47. Grabner G, Janke AL, Budge MM, Smith D, Pruessner J, Collins DL. Symmetric atlasing and model based segmentation: an application to the hippocampus in older adults. Medical Image Computing and Computer-Assisted Intervention. 2006;9(part 2):58–66. doi: 10.1007/11866763_8.

- 48. Tzourio-Mazoyer N, Landeau B, Papathanassiou D, et al. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. NeuroImage. 2002;15(1):273–289. doi: 10.1006/nimg.2001.0978.

- 49. Creutzfeldt O, Ojemann G, Lettich E. Neuronal activity in the human lateral temporal lobe. I. Responses to speech. Experimental Brain Research. 1989;77(3):451–475. doi: 10.1007/BF00249600.

- 50. Howard D, Patterson K, Wise R, et al. The cortical localization of the lexicons. Positron emission tomography evidence. Brain. 1992;115(6):1769–1782. doi: 10.1093/brain/115.6.1769.

- 51. Hirano S, Naito Y, Okazawa H, et al. Cortical activation by monaural speech sound stimulation demonstrated by positron emission tomography. Experimental Brain Research. 1997;113(1):75–80. doi: 10.1007/BF02454143.

- 52. Fujiki N, Naito Y, Hirano S, et al. Correlation between rCBF and speech perception in cochlear implant users. Auris Nasus Larynx. 1999;26(3):229–236. doi: 10.1016/s0385-8146(99)00009-7.

- 53. Okazawa H, Naito Y, Yonekura Y, et al. Cochlear implant efficiency in pre- and postlingually deaf subjects. A study with H₂¹⁵O and PET. Brain. 1996;119(4):1297–1306. doi: 10.1093/brain/119.4.1297.

- 54. Sharma A, Dorman MF. Central auditory development in children with cochlear implants: clinical implications. Advances in Oto-Rhino-Laryngology. 2006;64:66–88. doi: 10.1159/000094646.

- 55. Sharma A, Dorman MF, Kral A. The influence of a sensitive period on central auditory development in children with unilateral and bilateral cochlear implants. Hearing Research. 2005;203(1-2):134–143. doi: 10.1016/j.heares.2004.12.010.

- 56. Zhang LI. Persistent and specific influences of early acoustic environments on primary auditory cortex. Nature Neuroscience. 2001;4(11):1123–1130. doi: 10.1038/nn745.

- 57. Mortensen MV, Madsen S, Gjedde A. Cortical responses to promontorial stimulation in postlingual deafness. Hearing Research. 2005;209(1-2):32–41. doi: 10.1016/j.heares.2005.05.011.

- 58. Honjo I. Brain function of cochlear implant users. Advances in Oto-Rhino-Laryngology. 2000;57:42–44. doi: 10.1159/000059181.

- 59. Lee H, Giraud A, Kang E, et al. Cortical activity at rest predicts cochlear implantation outcome. Cerebral Cortex. 2007;17(4):909–917. doi: 10.1093/cercor/bhl001.

- 60. Johannsen P, Jakobsen J, Bruhn P, Gjedde A. Cortical responses to sustained and divided attention in Alzheimer’s disease. NeuroImage. 1999;10(3 I):269–281. doi: 10.1006/nimg.1999.0475.

- 61. Gabrieli JDE. Cognitive neuroscience of human memory. Annual Review of Psychology. 1998;49:87–115. doi: 10.1146/annurev.psych.49.1.87.

- 62. Petersen B, Mortensen MV, Hansen M, Vuust P. Singing in the key of life: a study on effects of musical ear training after cochlear implantation. Psychomusicology. 2012;22(2):134–151.

- 63. Looi V, She J. Music perception of cochlear implant users: a questionnaire, and its implications for a music training program. International Journal of Audiology. 2010;49(2):116–128. doi: 10.3109/14992020903405987.