Abstract

Emerging technology, especially robotic technology, has been shown to be appealing to children with autism spectrum disorders (ASD). Such interest may be leveraged to provide repeatable, accurate and individualized intervention services to young children with ASD based on quantitative metrics. However, existing robot-mediated systems tend to have limited adaptive capability that may impact individualization. Our current work seeks to bridge this gap by developing an adaptive and individualized robot-mediated technology for children with ASD. The system is composed of a humanoid robot with its vision augmented by a network of cameras for real-time head tracking using a distributed architecture. Based on the cues from the child’s head movement, the robot intelligently adapts itself in an individualized manner to generate prompts and reinforcements with potential to promote skills in the ASD core deficit area of early social orienting. The system was validated for feasibility, accuracy, and performance. Results from a pilot usability study involving six children with ASD and a control group of six typically developing (TD) children are presented.

Keywords: Rehabilitation robotics, robot and autism, robot-assisted autism intervention, social human–robot interaction

I. Introduction

Autism spectrum disorders (ASD) are characterized by difficulties in social communication as well as repetitive and atypical patterns of behavior [1]. According to a new report by the Centers for Disease Control and Prevention (CDC), an estimated 1 in 88 children and 1 in 54 boys in the United States have ASD [2]. That is a 78% increase since the last CDC report in 2009 [3]. The average lifetime cost of care for an individual with autism is estimated at around $3.2 million, and average medical expenditures for individuals with ASD are 4.1–6.2 times greater than for those without ASD [4]. Given these alarming prevalence figures, effective identification and treatment of ASD is considered a public health emergency [5]. At present, there is no cure or single accepted intervention for autism, and lifespan outcomes of the disorder vary widely [1].

While the characteristics responsible for differential response to treatment are unclear, the strongest evidence for effecting change comes from interventions that incorporate intensive behavioral intervention [6]. Intensive behavioral interventions, however, typically involve long hours from qualified therapists who are not available in many communities or whose services are beyond the financial resources of families and service systems [7]. As a result, such high-quality behavioral intervention is often inaccessible to much of the ASD population [8], [9], which is increasingly identified at younger ages.

Emerging technology [10] may ultimately play a crucial role in filling the gap for those who cannot otherwise access behavioral intervention. Moreover, technologies such as computer systems [11]–[13], virtual reality environments [14]–[17], and robotic systems [18], [19] show potential to make current interventions more individualized and thus more powerful [20]. In fact, a number of recent studies suggest that specific applications of robotic systems can be effectively harnessed to provide important new directions for intervention, given their potential to make treatments highly individualized, intensive, flexible, and adaptive based on novel, highly relevant quantitative measurements of engagement and performance [21].

The aim of this work was to develop a robot-mediated architecture that allows practice of social orientation skills such as joint attention (JA) skills in a dynamic closed-loop manner for young children with ASD. We chose early joint attention skills [22], since these skills are thought to be fundamental, or pivotal, social communication building blocks that are central to the etiology of the disorder itself [23], [24]. At a basic level, joint attention refers to a triadic exchange in which a child coordinates attention between a social partner and an aspect of the environment. Such exchanges enable young children to socially coordinate their attention with people to more effectively learn from others and their environment. Fundamental differences in early joint attention skills likely underlie the deleterious neurodevelopmental cascade of effects associated with the disorder itself, including language and social outcomes across the ASD spectrum [22], [24], [25].

We hypothesized that an appropriately designed robotic system would be able to administer joint attention tasks as well as an experienced therapist and, as a consequence, could serve as a useful ASD intervention tool that, in addition to administering the therapy, collects objective data and metrics from which new individualized intervention paradigms can be derived. In this paper, we empirically test this hypothesis. The contributions of the paper are twofold: first, we designed a closed-loop adaptive robot-mediated ASD intervention architecture called ARIA, in which a humanoid robot works in coordination with a network of spatially distributed cameras and display monitors to enable dynamic closed-loop interaction with a participant; and second, we performed a usability study with this new system and compared ARIA's performance with that of a human therapist. We believe that such a comparative study of a robotic system in the context of a response to joint attention task has not been performed before.

The rest of the paper is organized as follows. Section II discusses the state of the art in robot-mediated ASD intervention and the need for adaptive and individualized intervention. Section III is devoted to the development of the test-bed system, ARIA (Adaptive Robot-mediated Intervention Architecture). Section IV explains the usability study designed to evaluate ARIA. Section V presents the results and discusses their implications. Finally, Section VI summarizes the paper and highlights future directions.

II. Robot-Mediated Therapy for ASD

Well-controlled research on the impact of specific clinical applications of robot-assisted ASD systems has been very limited [21], [26], [27]. The most promising finding documents a preference for technological over human interaction for some children in certain circumstances. Specifically, data from several research groups have demonstrated that many individuals with ASD show a preference for robot-like characteristics over nonrobotic toys and humans [28], [29], and in some circumstances even respond faster when cued by robotic movement than by human movement [30], [31]. While this research has been conducted with school-aged children and adults, research noting that very young children with autism preferentially orient to nonsocial contingencies rather than biological motion suggests a downward extension of this intervention. Broad reactions and behaviors during interactions with robots have been investigated in this age group, but studies have yet to apply appropriately controlled methodologies with well-indexed groups of young children with ASD. Such studies are needed because the bias toward nonsocial stimuli may be more salient for younger children [32], [33].

Beyond the need for research with younger groups, there have been very few applications of robotic technology to teaching, modeling, or facilitating interactions through more directed intervention and feedback approaches. In the only identified study in this category to date, Duquette et al. [34] demonstrated improvements in affect and attention sharing with co-participating partners during a robotic imitation interaction task. This study provides initial support for the use of robotic technology in ASD intervention. Existing robotic systems, however, are primarily remotely operated and/or unable to perform autonomous closed-loop interaction, which limits their application in intervention settings that require extended and meaningful interactions [35]–[37]. Studies have yet to investigate the use of intelligent and dynamic robotic interaction to directly address skills related to the core deficits of ASD. In this context, our preliminary results with computer- and robot-based adaptive response technology for intervention indicate the potential of the technology to flexibly adapt to children with ASD [14], [18], [38], [39]. Feil-Seifer et al. [40] used distance-based features to autonomously detect and classify positive and negative robot interactions, while Liu et al. [41] used peripheral physiological signals to adaptively change robot behavior based on the psychological state of the participant. These are among the few adaptive robot-assisted autism therapy systems to date.

A. Objectives and Scope

This work focuses on 1) developing an individualized and adaptive robotic therapy platform that is capable of administering joint attention therapy on its own; and 2) conducting a usability study that investigates the potential of such robot-mediated ASD intervention compared with a similar human therapist-based intervention. Although it is beyond the scope of the current study, we hope that robot-mediated intervention can eventually be used to teach skills that transfer to real-world situations through co-robotic paradigms in which a human caretaker is integrated within the robotic interaction framework.

III. ARIA-System Development

A. Architecture Overview

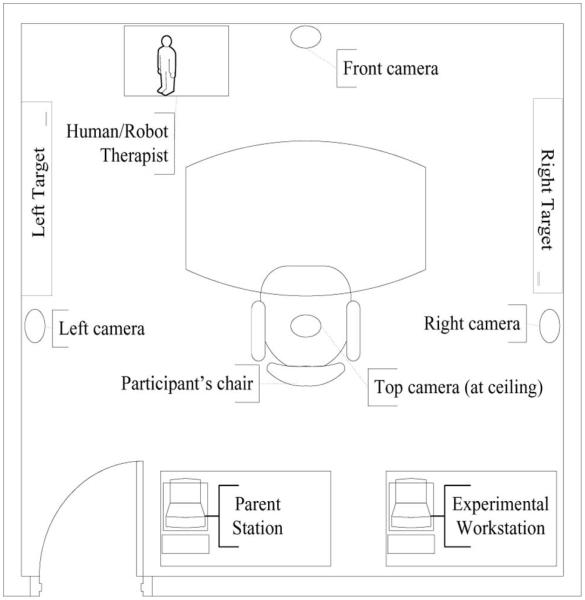

A humanoid robot, capable of performing many novel embodied actions, is the central component of the Adaptive Robot-mediated Intervention Architecture (ARIA) (Fig. 1). It presented joint attention prompts adaptively using gestures (pointing to the target by hand), gaze/head shifts, voice commands, and audiovisual stimuli. The system had two computer monitors, mounted at specific but modifiable locations in the experiment room, that provided visual and auditory stimuli. The stimuli included static pictures of interest to the children (e.g., pictures of children's characters), videos of similar content, and discrete audio and visual events (i.e., a specific sound or video played as an additional trigger if the child did not respond to the robot's cues alone). These stimuli could be adaptively changed in form or content to provide additional prompt levels based on the participant's response, and they also served as potential reinforcement and reward (i.e., contingent activation).

Fig. 1.

Experiment room setup.

The participant's gaze in response to the joint attention prompt, as approximated by head pose, was inferred in real time by a network of spatially distributed cameras mounted on the walls of the room. This gaze information was fed back to a software supervisory controller that coordinated the robotic system's next action. The adaptive and individualized closed-loop dynamic interaction proceeded over a set of fixed-duration trials. Fig. 1 shows the experiment room setup and how the cameras and the stimulus monitors were placed relative to the participant.

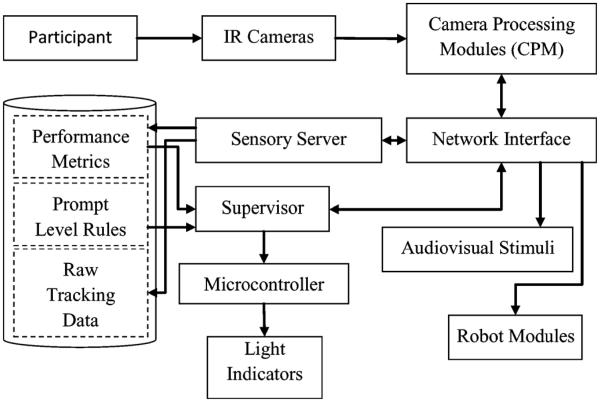

The robot and each camera had their own processing modules. The system was developed using a distributed architecture in which a supervisory module (i.e., a software supervisory controller) made decisions about task progression. The participant wore a hat with arrays of infrared (IR) LEDs sewn in straight lines on its top and sides; this hat was used in conjunction with the network of cameras to infer the participant's gaze direction. Individual modules ran as separate processes. Using a network interface, the supervisory controller sent commands and data to, and received them from, the camera processing modules (CPMs), the humanoid robot, and the stimuli controllers (SCs), as shown in Fig. 2. It also made decisions based on performance metrics computed from the sensory data collected by the CPMs.

Fig. 2.

System schematics for closed-loop interaction.

The CPMs processed the images from their IR cameras and measured approximate gaze directions in their respective projective views. The stimuli controllers controlled the presentation of stimuli on the audiovisual targets. A sensory network protocol was implemented as a client-server architecture in which each CPM was a client of a server that, when triggered by the supervisory controller, collected raw time-stamped tracking data from the CPMs.

The server accumulated the raw data for the duration of a trial, computed performance metrics at the end of each trial, and sent these metrics to a client embedded in the supervisory controller. Another server embedded in the supervisory controller generated feedback and communicated with the robot (NAO) and the stimuli controllers, each of which had an embedded client. Communication with the robot used remote procedure calls (RPCs) to modules instantiated on the robot, made through a proxy using the Simple Object Access Protocol (SOAP).
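The per-trial data flow described above can be sketched as follows. This is a minimal in-memory illustration, not the authors' implementation: network transport (sockets, SOAP) is omitted, the class and field names are hypothetical, and the two metrics (fraction of samples whose gaze ray hit the target, and time to first hit) are assumed examples, since the text does not list the exact performance metrics.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Sample:
    camera_id: str    # hypothetical view label, e.g. "top" or "side"
    timestamp: float  # seconds; all CPMs are assumed to share one clock
    hit: bool         # True if this view's gaze ray crossed the target segment


@dataclass
class TrialAggregator:
    """Hypothetical stand-in for the tracking server: buffers raw CPM
    samples during one trial and reduces them to per-camera metrics."""
    samples: List[Sample] = field(default_factory=list)
    start: float = 0.0
    duration: float = 0.0

    def start_trial(self, start: float, duration: float) -> None:
        # Called when the supervisory controller triggers a trial.
        self.samples.clear()
        self.start, self.duration = start, duration

    def add_sample(self, sample: Sample) -> None:
        # Keep only samples time-stamped inside the trial window.
        if self.start <= sample.timestamp <= self.start + self.duration:
            self.samples.append(sample)

    def end_trial(self) -> Dict[str, Dict[str, Optional[float]]]:
        # Illustrative metrics only: hit fraction and time to first hit.
        by_camera: Dict[str, List[Sample]] = defaultdict(list)
        for s in self.samples:
            by_camera[s.camera_id].append(s)
        metrics: Dict[str, Dict[str, Optional[float]]] = {}
        for cam, group in by_camera.items():
            hit_times = [s.timestamp for s in group if s.hit]
            metrics[cam] = {
                "hit_fraction": len(hit_times) / len(group),
                "latency_s": min(hit_times) - self.start if hit_times else None,
            }
        return metrics
```

In a networked version, each CPM client would push `Sample`-like records to the server, and the per-camera summary returned by `end_trial` is the kind of message the supervisory controller would consume when choosing the next prompt or reward.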

B. The Humanoid Robot, NAO

The humanoid robot used in this project was NAO, made by Aldebaran Robotics. NAO is a child-sized humanoid robot with a height of 58 cm and a weight of approximately 4.3 kg. Its body is made of plastic, and it has 25 degrees of freedom (DOF). It is equipped with software modules that support RPC and distributed processing. NAO's software is built on NAOqi, a broker process for object sharing to which modules developed for specific tasks are attached. This allows computationally intensive algorithms to be offloaded to a remote machine rather than run on the robot.

In this work, we augmented NAO's vision with a distributed network of external IR cameras forming part of the head tracker for closed-loop interaction. NAO's two vertically arranged CMOS cameras are low-performance sensors with frame rates of 4–5 frames per second (FPS) at their native resolution of 640 × 480, which made them unsuitable for detecting head movement in real time. Fig. 2 shows how we designed the communication between the different parts of the system and the participant to form the closed-loop real-time interaction. To compare the performance of the robot and the human therapist meaningfully, we used the head tracker in both the human therapist and the robot sub-sessions. Light indicators controlled by a microcontroller signaled success or failure to the human therapist; they were located behind the participant, in front of the human therapist.

C. Rationale for the Development of a Head Tracker

In responding to joint attention (RJA) tasks, the administrator of the task is interested in knowing whether the child looks at the desired target following a prompt [42]. Gaze inference is usually performed using eye trackers. However, such systems often restrict head movement and detection range. They are also expensive and generally sensitive to large head movements, especially the type of head movements needed for RJA tasks. Moreover, eye trackers need calibration (often multiple times) with each participant, and their range typically requires participants to be seated within 1–2 ft of the tracker/stimuli. Joint attention tasks, on the other hand, require large head movements, and the objects of interest are typically placed 5–10 ft from the participant. Although JA tasks require knowing whether the child is looking at a target object, unlike typical eye tracking tasks they do not require precise gaze coordinates. Commercial marker-based head trackers (such as TrackIR4 and inertial cubes) were initially considered. However, they are designed for specific applications (e.g., video games), and their tracking algorithms are not flexible enough for JA tasks, which require very large head movements around the room. We therefore decided to develop a low-cost head tracker that could accommodate large head movements.

To determine whether the child is responding to RJA prompts, we designed a near-IR, light-marker-based head tracker using low-cost off-the-shelf components, under the assumption that gaze direction can be inferred from head direction using projective transformations.

D. The Head Tracker

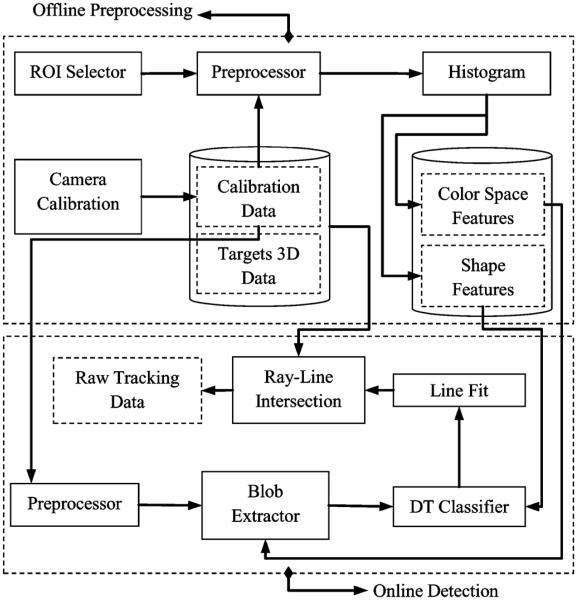

The head tracker was composed of near-infrared cameras, arrays of IR light-emitting diodes (LEDs) sewn on the top and sides of a hat as markers, and the camera processing modules (CPMs) (Fig. 3). The CPMs processed input images captured by the top and side cameras, which tracked the top and side LED arrays on the hat, respectively.

Fig. 3.

The hat holding the LED arrays on its side and top.

The Head Tracker has the following components:

1) IR Cameras

The IR cameras were custom-modified from inexpensive Logitech Pro 9000 webcams to image only in the near-infrared. The original camera's IR filter was carefully removed and replaced with glass of similar refractive index and thickness.

2) Camera Processing Module

Each module comprised a contour-based image processor operating in the XYZ color space and a simple decision-tree-like, rule-based object detector that used geometric and color features. The XYZ color space was chosen because it isolates brightness cues well (its Y component corresponds to luminance), which made it suitable for detecting LEDs in the IR spectrum.
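As a rough illustration of the color stage, the sketch below converts RGB pixels to XYZ and keeps only pixels that satisfy per-channel bounds in all three channels. It assumes OpenCV-style conversion coefficients (the linear RGB-to-XYZ matrix documented for `cv::cvtColor`, applied without gamma correction) and float images scaled to [0, 1]; the function names and the bounds `lo` and `hi`, which stand in for the offline ROI statistics, are hypothetical.

```python
import numpy as np

# Linear RGB -> CIE XYZ matrix as documented for OpenCV's cvtColor
# (RGB to XYZ); rows produce X, Y, Z. No gamma linearization is applied.
RGB_TO_XYZ = np.array([
    [0.412453, 0.357580, 0.180423],
    [0.212671, 0.715160, 0.072169],
    [0.019334, 0.119193, 0.950227],
])


def rgb_to_xyz(image: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 float RGB image (values in [0, 1]) to XYZ."""
    return image @ RGB_TO_XYZ.T


def led_mask(xyz: np.ndarray, lo: np.ndarray, hi: np.ndarray) -> np.ndarray:
    """Keep pixels whose X, Y and Z values all lie in [lo, hi].

    Each channel is thresholded separately and the three binary masks are
    AND-ed, so a pixel survives only if it meets every channel constraint.
    """
    per_channel = (xyz >= lo) & (xyz <= hi)  # H x W x 3 booleans
    return np.all(per_channel, axis=-1)      # H x W boolean mask
```

For a pure white pixel the Y value is 1.0, since the Y row sums to one; bright IR LEDs appearing near-white in the modified cameras would therefore land at the top of the Y range.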

Camera calibration was performed with the common square-grid fiducial, and the camera matrix (hence the focal length) and distortion coefficients were estimated using the Levenberg–Marquardt optimization algorithm [43].
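For reference, calibration of this kind usually estimates the parameters of the pinhole camera model with radial and tangential lens distortion, as used by common toolboxes such as OpenCV. The text does not state which distortion terms were estimated, so the model below is an assumption. A point with camera-frame coordinates (X, Y, Z), normalized coordinates x = X/Z, y = Y/Z, and r² = x² + y² maps to pixel coordinates (u, v) as shown, and Levenberg–Marquardt minimizes the total squared reprojection error over calibration views i and grid corners j, where m_ij is a detected corner and M_j its known position on the grid.

```latex
\begin{aligned}
x_d &= x\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + 2 p_1 x y + p_2 (r^2 + 2 x^2),\\
y_d &= y\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + p_1 (r^2 + 2 y^2) + 2 p_2 x y,\\
\begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
  &= K \begin{bmatrix} x_d \\ y_d \\ 1 \end{bmatrix},
\qquad
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix},\\
\min_{K,\,k_1,k_2,k_3,p_1,p_2,\,\{R_i,t_i\}} &\;
  \sum_{i}\sum_{j}
  \left\lVert \mathbf{m}_{ij} - \hat{\mathbf{m}}\!\left(K, k_1, k_2, k_3, p_1, p_2, R_i, t_i, \mathbf{M}_j\right) \right\rVert^2 .
\end{aligned}
```

The focal lengths f_x and f_y are the diagonal entries of K, which is why estimating the camera matrix also yields the focal length.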

The camera processing modules had two stages: an offline preprocessing stage and an online detection stage (Fig. 4). The offline preprocessing stage included camera calibration and manual feature selection. Regions of interest (ROIs) containing only LEDs were manually selected, and the input images were masked by the selected ROIs. Preprocessing (such as noise filtering and undistortion using the camera distortion coefficients) was performed before feature extraction. Then, color space histogram statistics (such as the minimum, mean, and maximum of each of the three color channels) and geometric shape features (such as area, roundness, and perimeter) were computed inside the ROIs. These features were stored for use in the online real-time detection stage.
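The offline features listed above could be computed along the following lines. This is a numpy-only sketch under stated assumptions: the ROI is given as a boolean mask, the image is already in XYZ, the perimeter is approximated by counting exposed pixel edges (a contour-based library would give smoother estimates), and the function names are hypothetical.

```python
import math

import numpy as np


def color_stats(xyz: np.ndarray, roi: np.ndarray) -> dict:
    """Min / mean / max of each XYZ channel over pixels where roi is True."""
    pixels = xyz[roi]  # N x 3 array of the ROI pixels
    return {
        "min": pixels.min(axis=0),
        "mean": pixels.mean(axis=0),
        "max": pixels.max(axis=0),
    }


def shape_features(blob: np.ndarray) -> dict:
    """Area, perimeter and roundness of a binary blob (approximation).

    Perimeter counts every blob-pixel side that borders a non-blob pixel.
    Roundness = 4*pi*area / perimeter**2 is larger for compact blobs and
    smaller for elongated ones.
    """
    blob = blob.astype(bool)
    area = int(blob.sum())
    padded = np.pad(blob, 1, constant_values=False)
    neighbours = (padded[:-2, 1:-1], padded[2:, 1:-1],   # up, down
                  padded[1:-1, :-2], padded[1:-1, 2:])   # left, right
    # Each blob pixel contributes one unit of perimeter per exposed side.
    perimeter = int(sum((blob & ~n).sum() for n in neighbours))
    roundness = 4 * math.pi * area / perimeter ** 2 if perimeter else 0.0
    return {"area": area, "perimeter": perimeter, "roundness": roundness}
```

With an edge-count perimeter, even a filled square scores only π/4 and a disc also stays below 1, so any classification thresholds would need to be set using the same feature definitions that are applied online.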

Fig. 4.

CPM schematics.

In the online detection stage, the input images were undistorted using the distortion coefficients, and a 9 × 9 Gaussian smoothing filter was then applied to remove artifacts. The blob extractor applied the color space features to each channel of the XYZ color space to obtain candidate blobs, and the resulting single-channel images were logically combined so that every qualifying blob satisfied all of the color space constraints. To remove further artifact blobs, extreme geometric feature pruning and morphological opening were performed. The remaining candidate blobs were passed through a simple classifier that used the geometric features to label each blob as either an LED or background. A line was fitted to the blobs classified as LEDs and extended indefinitely as a ray.

Finally, an intersection test between this ray and a line segment (the projection of a target) was performed to approximate the participant's gaze in the respective projective plane. When the child moved his or her head, the LED arrays and their 2-D projections moved with it, so the ray swept across the projection plane, while the targets remained at rest relative to the cameras' frames of reference. The intersections of the rays with the projections of the targets in the top and side projection planes gave, respectively, the x- and y-coordinates of the approximate gaze point on the corresponding image planes. A brief description of the ray-line segment intersection algorithm used in this work is given in [44]. Note that this system considered the yaw and pitch angles of head movement but not roll, which was considered less important for this study.
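The final two steps, fitting a line to the LED blobs and intersecting the resulting ray with a target segment, might look like the sketch below. It is not necessarily the algorithm of [44]: the line is fitted by total least squares (the principal axis of the centred blob centroids, via SVD) so that near-vertical LED arrays are handled, and the ray direction is chosen with a hypothetical `forward_hint` vector, because a fitted line alone has no orientation. The intersection solves o + t·d = a + u·(b - a) with 2-D cross products and accepts it only for t ≥ 0 and 0 ≤ u ≤ 1.

```python
from typing import Optional, Tuple

import numpy as np


def fit_ray(centroids: np.ndarray,
            forward_hint: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Fit a line to N x 2 LED blob centroids and orient it as a ray."""
    origin = centroids.mean(axis=0)
    _, _, vt = np.linalg.svd(centroids - origin)
    direction = vt[0]  # unit vector along the principal axis
    if np.dot(direction, forward_hint) < 0:
        direction = -direction  # point the ray the way the head faces
    return origin, direction


def cross2(a: np.ndarray, b: np.ndarray) -> float:
    """z-component of the 2-D cross product a x b."""
    return float(a[0] * b[1] - a[1] * b[0])


def ray_segment_intersection(origin: np.ndarray, direction: np.ndarray,
                             seg_a: np.ndarray, seg_b: np.ndarray,
                             eps: float = 1e-12) -> Optional[np.ndarray]:
    """Point where origin + t*direction (t >= 0) meets seg_a + u*(seg_b - seg_a)
    (0 <= u <= 1), or None if the ray misses the segment."""
    e = seg_b - seg_a
    w = seg_a - origin
    denom = cross2(direction, e)
    if abs(denom) < eps:  # parallel or collinear: treat as a miss
        return None
    t = cross2(w, e) / denom
    u = cross2(w, direction) / denom
    if t >= 0 and 0 <= u <= 1:
        return origin + t * direction
    return None
```

Running the same test in the top-camera and side-camera views would give the x- and y-coordinates of the approximate gaze point, respectively.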

IV. Usability Study

To test and verify the feasibility of the ARIA system, a usability study was designed based on common paradigms for indexing early joint attention and social orienting capacity, such as the Early Social Communication Scales (ESCS) and the Autism Diagnostic Observation Schedule (ADOS) [45], [46]. We developed the behavior protocol used in this study to mirror standard practice in assessment and treatment of children with autism regarding early joint attention skills. Consistency in prompt procedures across robot and human administrators was of primary importance given our aim to determine whether a robot administrator could carry out a joint attention task similar to a human administrator. We therefore used only one prompting method. We chose a least-to-most prompt (LTM) hierarchy [47], which essentially provides support to the learner only when needed. The method allows for independence at the outset of the task with increasing help only after the child has been given an opportunity to display independent skills. Prompt levels arranged from least-to-most supportive are also commonly used in “gold-standard” diagnostic or screening tools (e.g., ADOS, STAT, and ESCS). The LTM approach allowed us to identify the lowest level of support needed by each child to emit the correct response (i.e., looking toward the joint-attention target). Our paradigm, adopting the least-to-most prompting systems of these common assessments, involves an administrator cuing a child to look toward specific targets and adding additional cues or reinforcement based on performance.

In each sub-session, the child sat facing either the human therapist or the robot, and the administrator presented the joint attention task using the hierarchical prompt protocol described below. Note that this is a preliminary usability study using a well-accepted prompting strategy common in ASD assessment and intervention; the study itself is not an intervention. A future study applying the system and indexing learning over time would be a natural extension of this comparative behavioral study.

A. Hierarchical Prompt Protocol

A hierarchical prompt protocol was designed in a least-to-most fashion, with higher prompt levels provided only as needed. In this robot-mediated joint attention task, the robot or the human therapist initiated the task (IJA) using speech as the first level of interaction, cueing the participant to look at a specific picture displayed on one of the two computer monitors hung on the wall. The next prompt level added a pointing gesture toward the target by the robot or the human therapist. The level after that added an audio prompt at the target on top of the pointing gesture and the verbal cue. The final level added a brief video to the audio, pointing, and verbal cues. The audio and video prompts at the target did not directly address the child; rather, they added novelty to attract the child's attention. There were six prompt levels in each trial. These hierarchical prompts were administered stepwise whenever no response or an inappropriate response was detected, progressing in least-to-most fashion (Table I). The robot and the human therapist followed exactly the same prompt protocol. The way the prompts were presented could, however, differ slightly, because the robot might not be able to produce all of the subtle nonverbal cues of the human therapist. For instance, the nonbiological (robotic) movements of the head and arms were starkly different from smooth human limb motions. Moreover, the robot's eyes cannot move, so eye gaze was approximated by a head turn. The LEDs in the robot's eyes could have been toggled to give a sense of direction, but this would have been unnatural and would have confounded the comparison with the human therapist.

TABLE I.

Levels of the Hierarchical Protocol

| Prompt Level (PL) | Robot Prompt for a Child Named Max |
|---|---|
| PL 1 | "Max, look" + shift (robot's) head to target |
| PL 2* | If NR after 5 s: "Max, look" + shift head to target |
| PL 3 | If NR after 5 s: "Max, look at that." + head shift + point to target |
| PL 4* | If NR after 5 s: "Max, look at that." + head shift + point to target |
| PL 5 | If NR after 5 s: "Max, look at that." + head shift + point to target + audio clip sounds at target |
| PL 6 | If NR after 5 s: "Max, look at that." + head shift + point to target + audio clip sounds at target, then video onset for 30 s |

*PL 2 and PL 4 are intentionally repeated versions of PL 1 and PL 3, respectively.

NR means no or inappropriate response.

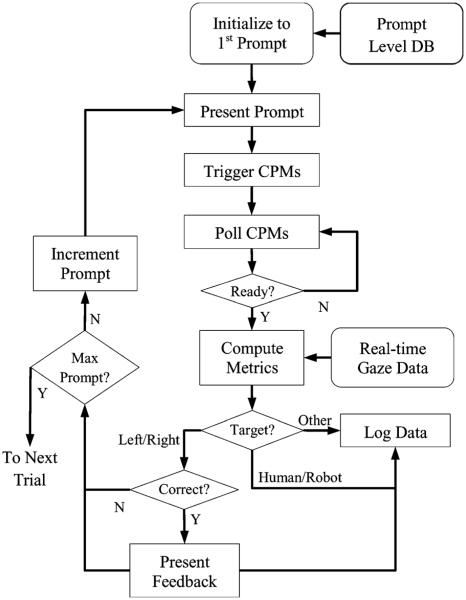

When the robot issued a prompt, the supervisory controller triggered the head tracker, activating the camera processing modules for the specified trial duration to accumulate time-stamped head movement data from which to infer where the participant was looking. At the end of the trial, several performance metrics were computed and sent to the supervisory controller, which used them to generate rewards to be executed by the robot. For example, if the response was correct, the robot would say “Good Job!” and provide encouragement (e.g., the static picture shown on the monitor turned into a movie clip). If there was no response or an incorrect response, the robot issued the next prompt level.
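The least-to-most escalation in Table I and the feedback rule above can be summarized as a short control loop. In this sketch the cue labels are hypothetical encodings of Table I, and `present`, `responded`, and `reward` are placeholder hooks standing in for the robot/stimulus commands and the head-tracker metrics; the actual supervisory controller is not described at this level of detail.

```python
from typing import Callable, List, Sequence

# Cumulative cues per prompt level, transcribed from Table I
# (PL2 and PL4 deliberately repeat PL1 and PL3). Labels are hypothetical.
PROMPT_LEVELS: List[Sequence[str]] = [
    ("verbal:look", "head_shift"),                                     # PL1
    ("verbal:look", "head_shift"),                                     # PL2
    ("verbal:look_at_that", "head_shift", "point"),                    # PL3
    ("verbal:look_at_that", "head_shift", "point"),                    # PL4
    ("verbal:look_at_that", "head_shift", "point", "audio"),           # PL5
    ("verbal:look_at_that", "head_shift", "point", "audio", "video"),  # PL6
]


def run_prompt_hierarchy(present: Callable[[Sequence[str]], None],
                         responded: Callable[[], bool],
                         reward: Callable[[], None]) -> int:
    """Escalate one prompt level at a time until the child looks.

    Returns the 1-based level that elicited a response, or 0 if none did.
    """
    for level, cues in enumerate(PROMPT_LEVELS, start=1):
        present(cues)      # issue the cues (the tracker would be triggered here)
        if responded():    # decision from the monitoring-window metrics
            reward()       # e.g. "Good Job!" + picture turns into a movie clip
            return level
    return 0
```

Returning the level that first elicited a response also yields, as a by-product, the "lowest level of support needed" that the least-to-most approach is meant to identify.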

The pictures, audio, and video clips were carefully selected from children's TV programs such as Bob the Builder and Dora the Explorer. Segmented clips from six age-appropriate preschool shows, in which characters performed specific actions, were selected for inclusion. The selected clips were segments of these shows in which a character carried out a dance, performance, or action, so that each clip could be started and ended without an abrupt beginning or end. The clips were also selected based on consultant review confirming that the segments were developmentally appropriate and potentially reinforcing for our participant population. From each show, videos were selected to serve as prompts and as feedback. Each prompting video was chosen to contain a short, action-filled segment for use at the sixth prompt level, while the reinforcement videos were selected to be enjoyable for the participant and contained segments filled with play, joy, and multiple characters. The prompting videos were short segments (5 s) compared with the longer reinforcement segments (30 s). In almost all of the videos, the characters did not directly address the participant; the audio and video played on the targets served only as a simple attention-grabbing mechanism rather than as an additional source of prompts in their own right. Each audio and video clip was directly matched to the initial picture displayed on the presentation target. For example, if the initial target was a picture of Scooby-Doo, the audio and video prompts as well as the reinforcement video would also feature Scooby-Doo, the only difference being that the reinforcement video showed multiple characters in a single segment.

The target monitors were 24 in (58 cm × 36 cm). In the top camera's reference frame, each monitor was offset 148 cm horizontally and 55 cm vertically; the monitors were hung at a height of 150 cm above the floor and 174 cm from the top (ceiling) camera (Fig. 1).

B. The Joint Attention Task and Procedure

Each participant took part in one experimental session lasting approximately 30 min. A typical session consisted of four sub-sessions, each 2–4 min long: two human therapist and two robot therapist sub-sessions, interleaved (H-R-H-R or R-H-R-H). Each sub-session contained four trials, two per target, presented in randomized order. Each trial was 8 s long, with 3–5 s of prompting and an additional 3–5 s monitoring interval. Fig. 5 presents the flow of the process.
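A trial schedule with this structure can be generated as below. The sketch assumes two targets labeled "left" and "right" (hypothetical names for the two monitors) and reads "two trials randomized per target" as two trials per target in shuffled order within each sub-session. Per the procedure, `robot_first=True` would correspond to the first three participants in each group.

```python
import random
from typing import List, Optional, Sequence, Tuple


def session_schedule(robot_first: bool,
                     targets: Sequence[str] = ("left", "right"),
                     rng: Optional[random.Random] = None
                     ) -> List[Tuple[str, List[str]]]:
    """Build one session: four interleaved sub-sessions of four trials each."""
    rng = rng or random.Random()
    order = ["robot", "human"] if robot_first else ["human", "robot"]
    schedule = []
    for i in range(4):
        # Two trials per target, shuffled within the sub-session.
        trials = [t for t in targets for _ in range(2)]
        rng.shuffle(trials)
        schedule.append((order[i % 2], trials))  # R-H-R-H or H-R-H-R
    return schedule
```

Passing a seeded `random.Random` makes a participant's schedule reproducible, which helps when aligning logged tracker data with the trials afterward.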

Fig. 5.

Flowchart of the prompt-feedback mechanism for one trial.

At the beginning, a researcher (a trained therapist) described the procedures and tasks involved in the study to the participant and his or her parent(s) and obtained written parental consent. An assent document was then read to children older than three years. The child was instructed to tell the researcher or parents if he or she became uncomfortable and could choose to withdraw at any time. The participant was then seated and buckled into a Rifton chair to start the first sub-session. The child remained buckled in the chair during sub-sessions (approximately 2–4 min long) but was encouraged to take a break from the chair between sub-sessions. For the first three participants in each group (children with ASD and typically developing children), the robot was presented first, while for the remaining participants in each group, the human therapist started the session. The prompt presenter (either the robot or the human therapist) first cued the participant verbally only, for example, “Max, look!” if the participant's name was Max (Table I). If the participant did not respond, the presenter hierarchically increased the prompting level to include pointing to the target as well as audio and video triggers for 5 s (Table I).

C. Subjects

A total of 12 participants in two groups (children with ASD and a control group of typically developing (TD) children) completed the tasks with their parents' consent. Initially, 18 participants (10 with ASD and 8 TD) were recruited. Six TD children (male: n = 4; female: n = 2) aged 2–5 years (M = 4.26 years, SD = 1.05 years) and six children with ASD (male: n = 5; female: n = 1) aged 2–5 years (M = 4.7 years, SD = 0.7 years) successfully completed the study. Details of the TD and ASD participants who completed the study are given in Tables II and III.

Of the 10 ASD and eight TD children recruited, four ASD and two TD participants did not complete the study. Three of the four children with ASD withdrew because they could not tolerate wearing the hat, and one became distressed during the interaction, so the session was discontinued. Two TD children were unwilling to participate after parental consent had been given. Table II shows the diagnostic profiles of the participants with ASD who completed the study. All participants with ASD were recruited through existing clinical research programs at Vanderbilt University and had an established clinical diagnosis of ASD. The study was approved by the Vanderbilt Institutional Review Board (IRB). To be eligible, participants had to be 2–5 years of age; children in the ASD group had to have an established diagnosis of ASD based on the gold standard in autism assessment, the Autism Diagnostic Observation Schedule-Generic (ADOS-G) [48]; and all participants had to be willing to take part (with parental consent) and physically and mentally able to perform the tasks adequately.

TABLE II.

Profile of the Participants With ASD in Robot-Mediated JA Study

| Subject (Gender) | ASD1 (m) | ASD2 (m) | ASD3 (f) | ASD4 (m) | ASD5 (m) | ASD6 (m) | Average (SD) |
|---|---|---|---|---|---|---|---|
| Age (years) | 5.14 | 3.24 | 4.92 | 5.27 | 4.49 | 5.17 | 4.70 (0.7) |
| ADOS-G (cutoff=7) | 10 | 13 | 14 | 25 | 25 | 9 | 16.0 (6.58) |
| ADOS CSS (cutoff=8) | 6 | 6 | 7 | 10 | 10 | 5 | 7.33 (1.97) |
| ADOS-G RJA | 0 | 1 | 1 | 2 | 3 | 0 | 1.17 (1.07) |
| SRS (cutoff=60) | 51 | 58 | 70 | 85 | 81 | 77 | 70.33 (12.24) |
| SCQ (cutoff=15) | 5 | 11 | 8 | 21 | 20 | 15 | 13.33 (5.91) |
| IQ | 101 | 54 | 102 | 50 | 49 | 73 | 71.5 (22.65) |
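The Average (SD) column of Table II can be reproduced from the per-subject values using the population standard deviation (dividing by n rather than n − 1); for instance, the ADOS-G scores 10, 13, 14, 25, 25, 9 give 16.0 (6.58). The short check below uses only Python's standard `statistics` module; the dictionary layout is an illustrative choice, not something taken from the study.

```python
from statistics import mean, pstdev

# Per-subject values from Table II (ASD1 to ASD6).
table_ii = {
    "Age": [5.14, 3.24, 4.92, 5.27, 4.49, 5.17],
    "ADOS-G": [10, 13, 14, 25, 25, 9],
    "ADOS CSS": [6, 6, 7, 10, 10, 5],
    "ADOS-G RJA": [0, 1, 1, 2, 3, 0],
    "SRS": [51, 58, 70, 85, 81, 77],
    "SCQ": [5, 11, 8, 21, 20, 15],
    "IQ": [101, 54, 102, 50, 49, 73],
}

for measure, values in table_ii.items():
    # pstdev divides by n, which matches the reported SDs; stdev (n - 1) would not
    print(f"{measure}: {mean(values):.2f} ({pstdev(values):.2f})")
```

Using `statistics.stdev` instead would give larger values (e.g., about 7.21 rather than 6.58 for ADOS-G), so the divisor convention matters when comparing these summaries with other studies.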

To ensure that TD participants did not show ASD-specific impairments, and to quantify ASD symptoms across groups, parents of TD participants completed two ASD screening/symptom measures: the Social Responsiveness Scale (SRS) [49] and the Social Communication Questionnaire (SCQ) [50]. Efforts were made to match the groups by age and gender whenever possible.

V. Results and Discussion

A. Validation Results

First, the head tracker was validated by attaching an infrared (IR) laser pointer to the hat and aligning it so that the direction of the laser beam approximated the gaze direction. The projection of the laser onto one of the targets (computer monitors) was recorded against a 2 cm × 2 cm grid displayed on the monitor. Twenty validation points were uniformly distributed across the screen, and 10 s of data were recorded and averaged at each point. At a distance of approximately 1.2 m, the head tracker had average validation errors of 2.6 cm (1.20) in the x coordinate and 1.5 cm (0.70) in the y coordinate, with good repeatability. Because joint attention requires large head movements, an average error of 2.6 cm was acceptable (for details, see [44]).
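The validation arithmetic can be sketched as follows. The text does not specify how the per-point error was computed, so this sketch assumes it is the absolute per-axis difference between the tracker's estimated screen point and the laser projection (each already averaged over the 10 s window), summarized as mean (population SD). The function names and sample coordinates are hypothetical. Converting the linear error to a visual angle at the 1.2 m viewing distance shows what the reported errors mean for gaze direction.

```python
import math
from statistics import mean, pstdev
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) on the monitor, in cm


def axis_errors(tracked: List[Point], laser: List[Point]) -> Tuple[List[float], List[float]]:
    """Absolute x and y errors between tracker estimates and laser projections."""
    ex = [abs(t[0] - l[0]) for t, l in zip(tracked, laser)]
    ey = [abs(t[1] - l[1]) for t, l in zip(tracked, laser)]
    return ex, ey


def summarize(errors: List[float]) -> Tuple[float, float]:
    """Mean (SD) of per-point errors; the population SD is an assumption here."""
    return mean(errors), pstdev(errors)


def error_to_degrees(error_cm: float, distance_cm: float = 120.0) -> float:
    """Visual angle subtended by a linear error at the given viewing distance."""
    return math.degrees(math.atan2(error_cm, distance_cm))
```

With these definitions, `error_to_degrees(2.6)` is about 1.24° and `error_to_degrees(1.5)` about 0.72°, i.e., the reported errors correspond to roughly one degree of head direction, small compared with the angular separation between the targets and the presenter.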

The overall system was also tested with two typically developing children, aged two and four years. The system worked as designed: all data were logged properly, and the robot administered the JA task accurately. This preliminary testing helped identify and fix issues and prepared the system for the usability study.

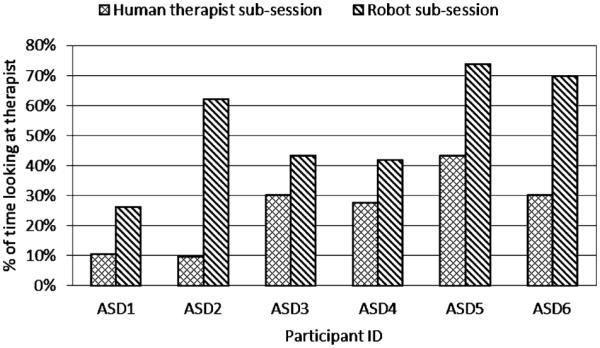

B. Preferential Looking Towards the Robot

The results of this study indicated that children in both the ASD and TD groups spent more time looking at the robot than at the human therapist. A paired-samples (dependent) t-test was used to assess statistical significance. The children in the ASD group looked at the robot therapist for 52.76% (17.01%)¹ of the robot sub-session time. By comparison, they looked at the human therapist for 25.11% (11.82%) of the human sub-session time. Thus, the ASD group looked at the robot therapist 27.65 percentage points longer than at the human therapist, p < 0.005. Fig. 6 shows an individual comparison of the time children in the ASD group spent looking at the therapist in the robot and human sub-sessions, respectively.
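The looking-time percentages can be read as the fraction of head-tracker samples in a sub-session whose estimated gaze falls on the prompt presenter. A minimal sketch, assuming the tracker output has already been mapped to labeled regions at a fixed sampling rate (the region labels such as `"robot"` are hypothetical):

```python
from collections import Counter
from typing import Iterable


def percent_time_on(region: str, labels: Iterable[str]) -> float:
    """Percentage of gaze samples in a sub-session labeled with `region`.

    Assumes uniformly spaced samples, so sample counts are proportional to time.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    return 100.0 * counts[region] / total if total else 0.0
```

Because each sub-session is normalized by its own duration, robot and human sub-sessions of different lengths remain comparable, and differences between them are naturally expressed in percentage points.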

Fig. 6.

Percentage of time spent looking at robot and human therapists during respective sub-sessions for the ASD group.

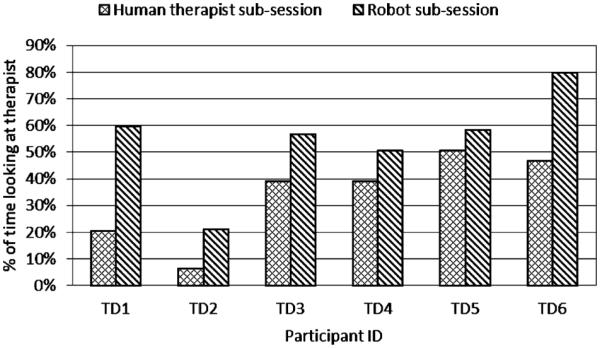

The children in the TD control group looked at the robot therapist for 54.27% (17.65%) of the robot sub-session time. By comparison, they looked at the human therapist for 33.64% (16.04%) of the human therapist sub-session time. Thus, the children in the TD group looked at the robot therapist 20.63 percentage points longer than at the human therapist, p < 0.005. Fig. 7 shows an individual comparison of the time spent looking at the therapist in the robot and human sub-sessions, respectively, for the TD group.

Fig. 7.

Percentage of time spent looking at robot and human therapists during respective sub-sessions for the TD group.

The results indicated a statistically significant preferential orientation towards the robot compared with the human therapist for both groups. Individual and group comparisons indicate that the children spent more time looking at the robot than at the human therapist. This difference was most pronounced for children in the ASD group, who looked at the human therapist for a smaller percentage of the human sub-sessions than did children in the TD group (the TD group looked at the human therapist 8.53 percentage points more than the ASD group).
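Because each child experienced both a robot and a human sub-session, the within-group comparison is a paired design. The sketch below computes the paired t statistic with the standard library only; the input values in the usage note are invented for illustration and are not the study's data (per-child values appear only in Figs. 6 and 7). In practice, `scipy.stats.ttest_rel` returns the same statistic together with a p-value.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(robot: Sequence[float], human: Sequence[float]) -> Tuple[float, int]:
    """Paired-samples t statistic and degrees of freedom for robot - human."""
    if len(robot) != len(human) or len(robot) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [r - h for r, h in zip(robot, human)]
    # Sample SD of the per-child differences (n - 1 denominator)
    se = stdev(diffs) / math.sqrt(len(diffs))
    return mean(diffs) / se, len(diffs) - 1
```

For four hypothetical children with robot percentages `[50, 60, 40, 70]` and human percentages `[30, 35, 30, 40]`, `paired_t` gives t ≈ 4.98 with 3 degrees of freedom. Working on per-child differences removes between-child variability in overall attentiveness, which is why the paired test suits this design.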

The robotic system, together with the dynamic target stimuli, administered the joint attention task with a 95.83% success rate for the ASD group and a 97.92% success rate for the TD group. Success was measured as the percentage of successful trials (trials that resulted in an eventual response to the targets at any of the six prompt levels) out of the total available trials. The human therapist achieved 100% success for both groups. Taken together with the preferential looking data, the robot performed the task with success rates similar to those of the human therapist, with the potential advantage of increased engagement. Success in attending to the target before the dynamic audio/video target stimuli were presented was also measured to rate the robot's performance in administering the trials. By this measure, the robot achieved success rates of 77.08% for the ASD group and 93.75% for the TD group, while the human therapist achieved 93.75% and 100% for the ASD and TD groups, respectively. Thus, for the ASD group, the robot's prompts alone resulted in a lower success rate than the human therapist's, which highlights the importance of the dynamic stimuli presented on the targets themselves. The gap was considerably smaller for the TD control group.
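Both success measures above are ratios of successful trials to available trials; they differ only in which prompt levels count as success. A minimal sketch, assuming each trial is logged as the prompt level at which the child looked at the target (or `None` if no level succeeded). The `max_level` cut-off that separates "before dynamic stimuli" from the full hierarchy is a hypothetical parameter whose value would come from Table I.

```python
from typing import Optional, Sequence


def success_rate(levels: Sequence[Optional[int]],
                 max_level: Optional[int] = None) -> float:
    """Percentage of trials that succeeded, optionally at or below `max_level`.

    Each entry is the prompt level at which the child looked at the target,
    or None if no level succeeded. Passing the last level before the dynamic
    audio/video target stimuli (a hypothetical cut-off) gives the
    "success before dynamic stimuli" rate.
    """
    if not levels:
        return 0.0
    hits = sum(1 for lv in levels
               if lv is not None and (max_level is None or lv <= max_level))
    return 100.0 * hits / len(levels)
```

As a purely arithmetic illustration, 46 successful trials out of 48 would give `success_rate([1] * 46 + [None] * 2)` ≈ 95.83%.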

C. Frequency of Looking to Target and Number of Required Levels for Success

Objective performance metrics were computed to quantitatively measure “hit frequency” (i.e., the frequency of looks to the target after the prompt was issued within a trial) and “levels required for success” (the prompt level at which the child successfully looked at the target), expressed as prompt level/total levels in a given sub-session.
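The two metrics can be sketched from per-sample gaze data and per-trial success levels. This is an illustrative interpretation, not the authors' code: it assumes a hit is a transition of the gaze onto the target (so a sustained look counts once), and that "prompt level/total levels" means the summed success levels divided by the levels available across all trials in the sub-session. The six-level default and all names are assumptions.

```python
from statistics import mean
from typing import List, Sequence


def hit_count(on_target: Sequence[bool]) -> int:
    """Separate looks to the target within one trial, counted from prompt onset.

    `on_target` holds one boolean per head-tracker sample (True = gaze on the
    target). A run of consecutive True samples counts as a single hit.
    """
    hits, previous = 0, False
    for sample in on_target:
        if sample and not previous:
            hits += 1
        previous = sample
    return hits


def hit_frequency(trials: Sequence[Sequence[bool]]) -> float:
    """Mean number of hits per trial over a sub-session."""
    return mean(hit_count(trial) for trial in trials)


def levels_required_pct(success_levels: List[int], num_levels: int = 6) -> float:
    """Prompt levels used as a percentage of all levels available in a sub-session."""
    return 100.0 * sum(success_levels) / (num_levels * len(success_levels))
```

Counting transitions rather than raw on-target samples keeps hit frequency independent of how long each look lasts, which is what lets it distinguish erratic gaze shifting from sustained attention.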

Too large a hit frequency is undesirable because it indicates erratic gaze movement rather than engagement, whereas too small a hit frequency might indicate a “sticky” attention scenario [51]. The children in the ASD group showed comparable hit frequencies of 2.06 (0.71) in the human therapist sub-session and 2.02 (0.28) in the robot sub-session.

Children in the TD group showed hit frequencies of 2.17 (0.65) in the human therapist sub-session and 1.67 (0.35) in the robot sub-session. There was no significant difference in hit frequency between the ASD and TD groups, p > 0.1.

Levels required for success reflects whether the child registered the prompt and the child's ability to respond to a bid for joint attention. Children in the ASD group required 14.58% more of the total number of levels available in the robot sub-sessions than in the human therapist sub-sessions, and children in the TD group required 9.37% more. Both differences were statistically significant, p < 0.05.

As seen in Section V-B, preferential looking toward the robot was statistically significant for both groups. In addition, a trained observer and a parent rated behavioral engagement, and both reported that most children appeared excited to see the robot and its actions. The higher number of levels required for success in robot sub-sessions may therefore be due to this attentional bias towards the robot.

D. General Comparisons of ASD Group With TD Group

Generally, compared with the human therapist sub-sessions, in the robot sub-sessions both groups 1) spent more time looking at the prompt presenter (p < 0.005, both groups); 2) exhibited a higher latency before the first hit on the target (p < 0.05, ASD; p < 0.005, TD); 3) spent a lower percentage of time on the targets (p < 0.05, ASD; p < 0.005, TD); and 4) required a higher average number of prompt levels (p < 0.005, both groups).
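Latency before the first hit can be computed from the same per-sample gaze labels used for hit frequency. A minimal sketch, assuming sample 0 coincides with prompt onset and a constant tracker sampling rate; the function name and the `sample_rate_hz` value used in the usage note are hypothetical.

```python
from typing import Optional, Sequence


def latency_to_first_hit(on_target: Sequence[bool],
                         sample_rate_hz: float) -> Optional[float]:
    """Seconds from prompt onset (sample 0) to the first on-target sample.

    Returns None when the child never looked at the target in the trial.
    """
    for index, sample in enumerate(on_target):
        if sample:
            return index / sample_rate_hz
    return None
```

At a hypothetical 30 Hz, a trial whose first on-target sample is the 16th (index 15) yields a latency of 0.5 s. Returning `None` for trials without a hit keeps those trials out of latency averages instead of silently counting them as zero.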

In the robot sub-sessions, children in the TD group looked towards the robot at rates roughly equivalent to those of the ASD group. In the human therapist sub-sessions, however, children in the TD group looked towards the human therapist 8.53 percentage points more than children in the ASD group. This suggests excellent engagement with the robot regardless of group status. As expected, the human therapist garnered the attention of children in the TD group more than that of children in the ASD group; however, this difference was not statistically significant at the 0.05 level (p < 0.1, independent two-sample unequal-variance t-test).
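The between-group comparison uses independent samples with possibly unequal variances, i.e., Welch's t-test. The sketch below computes Welch's t statistic and the Welch–Satterthwaite degrees of freedom with the standard library; the inputs in the usage note are toy values, not study data. `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same test and also returns a p-value.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Welch's unequal-variance t statistic and Welch-Satterthwaite df."""
    # Squared standard errors of each group mean (sample variance, n - 1)
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df
```

For the toy samples `[1, 2, 3, 4]` and `[2, 4, 6, 8]`, this gives t = −√3 ≈ −1.73 with df = 75/17 ≈ 4.41. The non-integer df reflects that Welch's test does not pool the two variances, which is appropriate when, as here, the ASD and TD groups have different spreads.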

Regarding levels required for success, children in the TD group generally required fewer prompt levels than children in the ASD group: on average, 11.46% fewer in the robot sub-session and 6.25% fewer in the human therapist sub-session.

Generally, children in the ASD group attended more to the robot and were less attracted by the targets than their TD counterparts. The relatively higher number of prompt levels required by children with ASD might be better attributed to their attentional bias toward the robot than to the nature of the disorder itself. The relatively small and nonsignificant differences in these performance metrics for children in the ASD group may be explained by participant characteristics (i.e., mostly high-functioning children with closer to normal RJA scores; see Table II for the participants’ profiles).

TABLE III.

Profile of the TD Participants in Robot-Mediated JA Study

| Participant (Gender) | Age (years) | SRS (cutoff=60) | SCQ (cutoff=15) |
|---|---|---|---|
| TD1 (f) | 4.72 | 47 | 2 |
| TD2 (m) | 5.27 | 39 | 0 |
| TD3 (m) | 4.74 | 45 | 2 |
| TD4 (f) | 3.20 | 46 | 5 |
| TD5 (m) | 5.18 | 50 | 11 |
| TD6 (m) | 2.46 | 46 | 3 |
| Average (SD) | 4.26 (1.05) | 45.5 (3.3) | 3.83 (3.53) |

VI. Conclusion and Future Work

We studied the development and application of an innovative adaptive robotic system with potential relevance to core areas of deficit in young children with ASD. The ultimate objective of this study was to empirically test the feasibility and usability of an adaptive robotic system capable of intelligently administering joint attention prompts and adaptively responding based on within-system measurements of performance. We also conducted a preliminary comparison of participants’ performance across robot and human administrators.

Like their TD counterparts, children with ASD spent significantly more time looking at the humanoid robot than at the human administrator, and they spent less time looking at the human administrator than TD children did. Preschool children with ASD looked away from target stimuli at rates comparable to typically developing peers. Collectively, these findings are promising. They suggest that robotic systems enhanced to push children toward correct orientation to the target, either through systematically faded prompting or by embedding coordinated action with human partners, might be able to take advantage of a baseline preference for nonsocial stimuli in order to meaningfully enhance skills related to coordinated attention [33]. Both children with ASD and TD children required higher levels of prompting with the robot than with the human administrator. This may be due to the novelty of the robot, an effect that might decline over time and should be examined in a larger study.

There are several limitations of the current study that are important to highlight. The small sample size and the limited time frame of interaction are the most important limitations. Data from this preliminary study do not suggest that robotic interaction itself constitutes an intervention paradigm that can meaningfully improve core areas of impairment for children with ASD. Further, because exposure in the current paradigm was brief, it cannot determine whether the heightened attention paid to the robotic system during the study was simply an artifact of novelty or reflects a more characteristic pattern of preference that could be harnessed over time. Further study with a larger sample is needed to determine 1) whether the preferential gaze towards the robot stems from the novelty of the robot; and 2) how this attention can be redirected towards the target over time for meaningful skill learning. The other major limitation of the current system was the requirement to wear a hat for the marker-based tracker to work. A dropout rate of 33% (which is comparable to clinical dropout rates for minimally invasive devices) highlights the need for a completely noninvasive remote monitoring mechanism. Further work is underway to develop a remote, noncontact eye gaze monitoring mechanism to replace the hat: we are developing an approximate gaze tracker based on markerless face and eye tracking.

Despite these limitations, to our knowledge this work is the first to design, empirically evaluate, and compare the usability, feasibility, and preliminary efficacy of a closed-loop interactive technology capable of adapting based on objective performance metrics on joint attention tasks. Only a few other existing robotic systems, developed for other aspects of autism intervention [40], [41], have specifically addressed how to detect and flexibly respond to individually derived social and disorder-relevant behavioral cues within an intelligent adaptive robotic paradigm for young children with ASD. Progress in this direction may introduce the possibility of technological intervention tools that are not simply response systems, but systems capable of necessary and more complex adaptations. Systems capable of such adaptation may ultimately be used to promote meaningful change related to the complex and important social communication impairments of the disorder itself.

Ultimately, questions of generalization of skills remain perhaps the most important ones to answer for the expanding field of robotic intervention for ASD. While we are hopeful that sophisticated clinical applications of adaptive robotic technologies will in the future demonstrate key meaningful improvements for young children with ASD, it is important to note that in the near future robots are more likely to be useful as assistants in intervention than as replacements for human therapists. However, if we are able to discern measurable and modifiable aspects of adaptive robotic intervention with meaningful effects on skills seen as important to neurodevelopment, or important to caregivers, we may realize robotic technologies that transform and accelerate intervention and have pragmatic real-world application.

Acknowledgement

The authors would like to thank A. Vehorn (TRIAD, Vanderbilt Kennedy Center) for her role in the study as human therapist and human therapist observer. The authors would also like to thank J. Steinmann–Hermsen and Z. Zheng for their help as key research personnel while the study was conducted. Special thanks to the study participants and their families.

Biographies

Esubalew T. Bekele (S’07) received the M.S. degree in electrical engineering, in 2009, from Vanderbilt University, Nashville, TN, USA, where he is currently working toward the Ph.D. degree in electrical engineering.

He served as a junior faculty member at Mekelle University, Ethiopia, before joining Vanderbilt University. His current research interests include human–machine interaction, robotics, affect recognition, machine learning, and computer vision.

Uttama Lahiri (S’11–M’12) received the Ph.D. degree in mechanical engineering from Vanderbilt University, Nashville, TN, USA, in 2011.

Currently, she is an Assistant Professor at the Indian Institute of Technology, Gandhinagar, Gujarat, India. Her research interests include adaptive response systems, signal processing, machine learning, human–computer interaction, and human–robot interaction.

Dr. Lahiri is a member of the Society of Women Engineers.

Amy R. Swanson received the M.A. degree in social science from University of Chicago, Chicago, IL, USA, in 2006.

Currently she is a Research Analyst at Vanderbilt Kennedy Center’s Treatment and Research Institute for Autism Spectrum Disorders, Nashville, TN, USA.

Julie Crittendon received the Ph.D. degree in clinical psychology from the University of Mississippi, Oxford, MS, USA, in 2009.

She completed her pre-doctoral internship at the Kennedy Krieger Institute and the Johns Hopkins School of Medicine and her postdoctoral fellowship at the Vanderbilt Kennedy Center/Treatment and Research Institute for Autism Spectrum Disorders. Currently, she is an Assistant Professor of Pediatrics and Psychiatry at Vanderbilt University, Nashville, TN, USA.

Zachary E. Warren received the Ph.D. degree from the University of Miami, Miami, FL, USA, in 2005. He completed his pre-doctoral internship at Children’s Hospital Boston/Harvard Medical School in and his postdoctoral fellowship at the Medical University of South Carolina. Currently, he is an Assistant Professor of Pediatrics and Psychiatry at Vanderbilt University. He is the Director of the Treatment and Research Institute for Autism Spectrum Disorders at the Vanderbilt Kennedy Center as well as Director of Autism Clinical Services within the Division of Genetics and Developmental Pediatrics.

Nilanjan Sarkar (S’92–M’93–SM’04) received the Ph.D. degree in mechanical engineering and applied mechanics from the University of Pennsylvania, Philadelphia, PA, USA, in 1993.

He was a Postdoctoral Fellow at Queen’s University, Canada. Then, he joined the University of Hawaii as an Assistant Professor. In 2000, he joined Vanderbilt University, Nashville, TN, USA, where he is currently a Professor of mechanical engineering and computer engineering. His current research interests include human–robot interaction, affective computing, dynamics, and control.

Dr. Sarkar was an Associate Editor for the IEEE Transactions on Robotics.

Footnotes

The format is Mean (Standard Deviation) throughout the paper.

REFERENCES

- 1. Diagnostic and Statistical Manual of Mental Disorders: Quick Reference to the Diagnostic Criteria DSM-IV-TR. Am. Psychiatric Pub.; Washington, DC, USA: 2000.

- 2. Baio JA. Prevalence of Autism Spectrum Disorders—Autism and Developmental Disabilities Monitoring Network, 14 Sites, United States, 2008. National Center on Birth Defects and Developmental Disabilities, Centers Disease Control Prevent., U.S. Dept. Health Human Ser.; Washington, DC, USA: 2012.

- 3. Prevalence of autism spectrum disorders-ADDM Network. Centers Disease Control Prevent.; Washington, DC, USA: 2009. MMWR Weekly Rep. 58.

- 4. Autism Spectrum Disorders Prevalence Rate. Autism Speaks Center Disease Control; Washington, DC, USA: 2011.

- 5. Interagency Autism Coordinating Committee. Strategic Plan for Autism Spectrum Disorder Research. U.S. Dept. Health Human Services; 2009 [Online]. Available: http://iacc.hhs.gov/strategic-plan/2009/

- 6. Warren Z, Veenstra-VanderWeele J, et al. Therapies for children with autism spectrum disorders. Comparative effectiveness review, Vanderbilt Evidence-Based Practice Center, HHSA 290 2007 10065 1. 2011.

- 7. Eikeseth S. Outcome of comprehensive psycho-educational interventions for young children with autism. Res. Develop. Disabil. 2009;30(1):158–178. doi: 10.1016/j.ridd.2008.02.003.

- 8. Knapp M, Romeo R, Beecham J. Economic cost of autism in the UK. Autism. 2009;13(3):317–336. doi: 10.1177/1362361309104246.

- 9. Chasson GS, Harris GE, Neely WJ. Cost comparison of early intensive behavioral intervention and special education for children with autism. J. Child Family Stud. 2007;16(3):401–413.

- 10. Goodwin MS. Enhancing and accelerating the pace of autism research and treatment. Focus Autism Other Develop. Disabil. 2008;23(2).

- 11. 2008;66(9):662–677.

- 12. Moore D, McGrath P, Thorpe J. Computer-aided learning for people with autism-a framework for research and development. Innovat. Edu. Train. Int. 2000;37(3):218–228.

- 13. Bernard-Opitz V, Sriram N, Nakhoda-Sapuan S. Enhancing social problem solving in children with autism and normal children through computer-assisted instruction. J. Autism Develop. Disorders. 2001;31(4):377–384. doi: 10.1023/a:1010660502130.

- 14. Conn K, Liu C, Sarkar N, Stone W, Warren Z. Affect-sensitive assistive intervention technologies for children with autism: An individual-specific approach. Proc. 17th IEEE Int. Symp. Robot Human Interactive Commun.; Munich, Germany; 2008. pp. 442–447.

- 15. Lahiri U, Bekele E, Dohrmann E, Warren Z, Sarkar N. Design of a virtual reality based adaptive response technology for children with autism spectrum disorder. In: Affective Computing and Intelligent Interaction. Springer; New York: 2011. pp. 165–174.

- 16. Welch K, Lahiri U, Liu C, Weller R, Sarkar N, Warren Z. An affect-sensitive social interaction paradigm utilizing virtual reality environments for autism intervention. In: Human-Computer Interaction. Ambient, Ubiquitous and Intelligent Interaction. Springer; New York: 2009. pp. 703–712.

- 17. Mitchell P, Parsons S, Leonard A. Using virtual environments for teaching social understanding to 6 adolescents with autistic spectrum disorders. J. Autism Develop. Disord. 2007;37(3):589–600. doi: 10.1007/s10803-006-0189-8.

- 18. Liu C, Conn K, Sarkar N, Stone W. Online affect detection and adaptation in robot assisted rehabilitation for children with autism. Proc. 16th IEEE Int. Symp. Robot Human Interact. Commun.; Jeju Island, Korea; 2007. pp. 588–593.

- 19. Dautenhahn K, Billard A. Games children with autism can play with Robota, a humanoid robotic doll. Proc. 1st Cambridge Workshop Universal Access Assist. Technol.; Cambridge, U.K.; 2002. pp. 179–190.

- 20. Sandall SR. DEC Recommended Practices: A Comprehensive Guide for Practical Application in Early Intervention/Early Childhood Special Education. DEC; Missoula, MT: 2005.

- 21. Diehl JJ, Schmitt LM, Villano M, Crowell CR. The clinical use of robots for individuals with autism spectrum disorders: A critical review. Res. Autism Spectrum Disord. 2011;6(1):249–262. doi: 10.1016/j.rasd.2011.05.006.

- 22. Kasari C, Paparella T, Freeman S, Jahromi LB. Language outcome in autism: Randomized comparison of joint attention and play interventions. J. Consult. Clin. Psychol. 2008;76(1):125–137. doi: 10.1037/0022-006X.76.1.125.

- 23. Mundy P, Neal AR. Neural plasticity, joint attention, and a transactional social-orienting model of autism. Int. Rev. Res. Mental Retardat. 2000;23:139–168.

- 24. Poon KK, Watson LR, Baranek GT, Poe MD. To what extent do joint attention, imitation, and object play behaviors in infancy predict later communication and intellectual functioning in ASD? J. Autism Develop. Disord. 2011. doi: 10.1007/s10803-011-1349-z.

- 25. Kasari C, Gulsrud AC, Wong C, Kwon S, Locke J. Randomized controlled caregiver mediated joint engagement intervention for toddlers with autism. J. Autism Develop. Disord. 2010;40(9):1045–1056. doi: 10.1007/s10803-010-0955-5.

- 26. Feil-Seifer D, Matarić M. Toward socially assistive robotics for augmenting interventions for children with autism spectrum disorders. Exp. Robot. 2009;54:201–210.

- 27. Tapus A, Mataric M, Scassellati B. The grand challenges in socially assistive robotics. IEEE Robot. Autom. Mag. 2007 Mar.;14(1):35–42.

- 28. Dautenhahn K, Werry I. Towards interactive robots in autism therapy: Background, motivation and challenges. Pragmat. Cognit. 2004;12(1).

- 29. Robins B, Dautenhahn K, Dubowski J. Does appearance matter in the interaction of children with autism with a humanoid robot? Interaction Studies. 2006;7(3):509–542.

- 30. Bird G, Leighton J, Press C, Heyes C. Intact automatic imitation of human and robot actions in autism spectrum disorders. Proc. R. Soc. B: Biol. Sci. 2007;274(1628):3027–3031. doi: 10.1098/rspb.2007.1019.

- 31. Pierno AC, Mari M, Lusher D, Castiello U. Robotic movement elicits visuomotor priming in children with autism. Neuropsychologia. 2008;46(2):448–454. doi: 10.1016/j.neuropsychologia.2007.08.020.

- 32. Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch. General Psychiatry. 2002;59(9):809–816. doi: 10.1001/archpsyc.59.9.809.

- 33. Klin A, Lin DJ, Gorrindo P, Ramsay G, Jones W. Two-year-olds with autism orient to nonsocial contingencies rather than biological motion. Nature. 2009;459(7244). doi: 10.1038/nature07868.

- 34. Duquette A, Michaud F, Mercier H. Exploring the use of a mobile robot as an imitation agent with children with low-functioning autism. Auton. Robots. 2008;24(2):147–157.

- 35. Billard A, Robins B, Nadel J, Dautenhahn K. Building Robota, a mini-humanoid robot for the rehabilitation of children with autism. RESNA Assist. Technol. J. 2006;19(1):37–49. doi: 10.1080/10400435.2007.10131864.

- 36. Dautenhahn K, Werry I, Rae J, Dickerson P, Stribling P, Ogden B. Robotic playmates: Analysing interactive competencies of children with autism playing with a mobile robot. In: Socially Intelligent Agents—Creating Relationships With Computers and Robots. Kluwer; Dordrecht: 2002. pp. 117–124.

- 37. Kozima H, Nakagawa C, Yasuda Y. Interactive robots for communication-care: A case-study in autism therapy. IEEE Int. Workshop Robot Human Interactive Commun.; Nashville, TN, USA; 2005. pp. 341–346.

- 38. Liu C, Conn K, Sarkar N, Stone W. Affect recognition in robot assisted rehabilitation of children with autism spectrum disorder. Proc. 15th IEEE Int. Conf. Robot. Automat. 2006:1755–1760.

- 39. Rani P, Liu C, Sarkar N, Vanman E. An empirical study of machine learning techniques for affect recognition in human-robot interaction. Pattern Anal. Appl. 2006;9(1):58–69.

- 40. Feil-Seifer D, Mataric M. Automated detection and classification of positive vs. negative robot interactions with children with autism using distance-based features. Proc. 6th Int. Conf. ACM/IEEE Human-Robot Interact.; New York, NY, USA; 2011. pp. 323–330.

- 41. Liu C, Conn K, Sarkar N, Stone W. Online affect detection and robot behavior adaptation for intervention of children with autism. IEEE Trans. Robotics. 2008 Aug.;24(4):883–896.

- 42. Mundy P, Block J, Delgado C, Pomares Y, Van Hecke AV, Parlade MV. Individual differences and the development of joint attention in infancy. Child Develop. 2007;78(3):938–954. doi: 10.1111/j.1467-8624.2007.01042.x.

- 43. Moré JJ. The Levenberg-Marquardt algorithm: Implementation and theory. Numerical Anal. 1978:105–116.

- 44. Bekele E, Lahiri U, Davidson J, Warren Z, Sarkar N. Development of a novel robot-mediated adaptive response system for joint attention task for children with autism. IEEE Int. Symp. Robot Man Interact.; 2011. pp. 276–281.

- 45. Mundy P, Delgado C, Block J, Venezia M, Hogan A, Seibert J. Early Social Communication Scales (ESCS). Univ. Miami; Coral Gables, FL: 2003.

- 46. Kasari C, Freeman S, Paparella T. Joint attention and symbolic play in young children with autism: A randomized controlled intervention study. J. Child Psychol. Psychiatry. 2006;47(6):611–620. doi: 10.1111/j.1469-7610.2005.01567.x.

- 47. Demchak MA. Response prompting and fading methods: A review. Am. J. Mental Retardat. 1990;94(6):603–615.

- 48. Lord C, Risi S, Lambrecht L, Cook EH, Leventhal BL, DiLavore PC, Pickles A, Rutter M. The autism diagnostic observation schedule—Generic: A standard measure of social and communication deficits associated with the spectrum of autism. J. Autism Develop. Disord. 2000;30(3):205–223.

- 49. Constantino J, Gruber C. The Social Responsiveness Scale. Western Psychol. Services; Los Angeles, CA, USA: 2002.

- 50. Rutter M, Bailey A, Lord C, Berument S. Social Communication Questionnaire. Western Psychol. Services; Los Angeles, CA, USA: 2003.

- 51. Landry R, Bryson SE. Impaired disengagement of attention in young children with autism. J. Child Psychol. Psychiatry. 2004;45(6):1115–1122. doi: 10.1111/j.1469-7610.2004.00304.x.