Abstract

This paper shows that the various computations underlying spatial cognition can be implemented using statistical inference in a single probabilistic model. Inference is implemented using a common set of ‘lower-level’ computations involving forward and backward inference over time. For example, to estimate where you are in a known environment, forward inference is used to optimally combine location estimates from path integration with those from sensory input. To decide which way to turn to reach a goal, forward inference is used to compute the likelihood of reaching that goal under each option. To work out which environment you are in, forward inference is used to compute the likelihood of sensory observations under the different hypotheses. For reaching sensory goals that require a chaining together of decisions, forward inference can be used to compute a state trajectory that will lead to that goal, and backward inference to refine the route and estimate control signals that produce the required trajectory. We propose that these computations are reflected in recent findings of pattern replay in the mammalian brain. Specifically, that theta sequences reflect decision making, theta flickering reflects model selection, and remote replay reflects route and motor planning. We also propose a mapping of the above computational processes onto lateral and medial entorhinal cortex and hippocampus.

Author Summary

The ability of mammals to navigate is well studied, both behaviourally and in terms on the underlying neurophysiology. Navigation is a well studied topic in computational fields such as machine learning and signal processing. However, studies in computational neuroscience, which draw together these findings, have mainly focused on specific navigation tasks such as spatial localisation. In this paper, we propose a single probabilistic model which can support multiple tasks, from working out which environment you are in, to computing a sequence of motor commands that will take you to a sensory goal, such as being warm or viewing a particular object. We describe how these tasks can be implemented using a common set of lower level algorithms that implement ‘forward and backward inference over time’. We relate these algorithms to recent findings in animal electrophysiology, where sequences of hippocampal cell activations are observed before, during or after a navigation task, and these sequences are played either forwards or backwards. Additionally, one function of the hippocampus that is preserved across mammals is that it integrates spatial and non-spatial information, and we propose how the forward and backward inference algorithms naturally map onto this architecture.

Introduction

This paper describes a dynamic Bayesian model of spatial cognition. Here we define spatial cognition as including the tasks of localisation (estimating where you are in a known environment), sensory imagery (constructing a virtual scene), decision making (deciding which way to turn to reach a goal), model selection (working out which environment you are in) and motor planning (computing a sequence of motor commands that will lead to a sensory goal). We show that all of these tasks can be implemented using statistical inference in a single probabilistic model. We note that the above formulation is slightly different to previous definitions by OKeefe and Nadel [1], Gallistel [2], and Redish [3] which stress the capacity of determining and performing a path from a current position towards a desired location.

The model has hidden states comprising speed, direction and allocentric location, control variables comprising change in direction and speed, and sensory states representing olfactory, somatosensory and visual information. The model describes the dynamical evolution of hidden states, and provides a mapping from hidden to sensory states. Inference in the model is then implemented using a common set of ‘lower-level’ computations involving forward and backward inference over time. We propose that these computations are reflected in recent empirical findings of pattern replay in the mammalian brain [4], [5]. Specifically, we propose that theta sequences reflect decision making, theta flickering reflects model selection, and remote replay reflects route and motor planning. Our use of the terms ‘forward’ and ‘backward’ here relate to time and should not be confused with the direction of message passing in a cortical hierarchy [6].

Our approach falls into the general category of ‘map-based’ or ‘model-based’ planning [1], [7]–[10], or ‘model-based decision making’ [11]. The term ‘model-based’ refers to making and updating a representation of the world (such as a cognitive map). This is to be contrasted, for example, with ‘model-free’ approaches in which agents merely react to stimuli, after having previously learnt stimulus-response mappings through extensive exposure to an environment [12].

More generally, agents will use a variety of navigation strategies depending on their cognitive capabilities and familiarity with an environment. Spatial decisions can, for example, be classified [13] as being cue-guided (eg. move towards the red house), stimulus triggered (eg. turn left at the red house), route based (turn left at the red house then right at the blue house). There is a good deal of evidence showing that the brain has multiple decision making or control systems, each with its own strengths and weaknesses [14]–[16].

The usefulness of model-based planning is most apparent after an agent has sufficient experience to learn a model of an environment and when, subsequently, local changes to that environment are made which affect the optimal route to a goal [15]. In statistical terms, these would be referred to as nonstationarities. For spatial models this could be, for example, a hole appearing in a wall enabling an agent to take a shortcut, or a new object appearing preventing an agent taking a habitual route. Another strength of model-based control is that it can reduce learning time. Tse et al. [17], for example, studied decision making in rats and found that learning required fewer trials when it occurred against a background of prior knowledge. This allows new information to be assimilated into an existing schema or model.

The model-based versus model-free distinction has become important for the study of decision making in general as the underlying neuroanatomical differences are being delineated [11], [15]. Khamassi and Humphries [18] argue that, due to the shared underlying neuroanatomy, spatial navigation strategies that were previously described as being either place-driven or cue-driven are better thought of as being model-based versus model-free. Daw et al. [15] propose that arbitration between model-based and model-free controllers is based on the relative uncertainty of the decisions and more recently, Pezzulo et al. [19] have embedded both types of decision making systems into a single ‘mixed instrumental controller’.

This paper describes the computations underlying spatial cognition, initially, at a rather abstract level of manipulations of probability densities and then employs vector and matrix representations of variables and connectivities. Although we later on go on to describe how our model relates to underlying neuronal implementations, the model itself is not specified at a neuronal level. This style of modelling has many precedents in the literature. For example, Bousquet et al. [20] have conceived of the hippocampus as a Kalman filter. This requires that the hippocampus has an ‘observation model’ relating hidden states (places specified in allocentric coordinates) to sensory cues, and a dynamic model relating previous to current state via path integration. Kalman filtering then refers to the forward inference algorithm that combines path integral estimates of state with current sensory cues to provide optimal updates of the agent's location. The main function of Kalman filtering in this context is therefore one of localisation. One of the key points of this paper is that if an agent has taken the trouble to construct a ‘dynamic model’ and an ‘observation model’ then they can be used for more than just localisation; the same models, when combined with additional inference steps, can also be used for model selection, decision making and motor planning and to construct sensory imagery.

Other statistical treatments of hippocampal function address the issue of context learning [21]. Here, a context is defined in statistical terms as a stationary distribution of experiences. The problem of context learning is then reduced to one of clustering together an agent's experiences into a finite number of contexts. This is addressed through the use of Hidden Markov Models (HMMs) and it is shown how this perspective explains experimental findings in rat navigation concerning sequence and reversal learning and place-cell remapping. Johnson et al. [22] provide a normative statistical model of exploratory behaviour called Information Foraging (IF). ‘Passive IF’ describes the temporal distribution of an agent's sampling process (eg. spending longer investigating novel versus familiar objects) whereas ‘Directed IF’ describes its spatial distribution (eg. where it should move to next). Additionally, IF is conceived to apply both to the environment and the agent's memory of the environment. Directed IF proposes a common hippocampal substrate for constructive memory (eg. scene construction), vicarious trial and error behaviour, model-based facilitation of memory performance, and memory consolidation. The IF framework samples spatial locations, or episodic memories using an information theoretic criterion. To compute this criterion it is necessary for the agent to possess an observation model of the sort described in our article below. A further statistical treatment of hippocampal function comprises a two-stage processing model of memory formation in the entorhinal-hippocampal loop [23]. The first stage, which is proposed to take place during theta activity, allows hippocampus to temporally decorrelate and sparsify its input, and develop representations based on an Independent Component Analysis. The second stage, which is proposed to take place during Sharp Wave Ripples [24], allows hippocampus to replay these new representations to neocortex where long term memories are held to be instantiated.

This paper is concerned with computational processes underlying spatial cognition and we describe how the underlying computations may be instantiated in hippocampus and associated brain regions. The hippocampal formation is, however, implicated in a much broader array of functions [25], such as episodic memory, that our model does not address. Indeed one of the key differences between our approach and some other models of spatial cognition [10], [16] is that the approach we describe has no episodic component. Specifically, the sequences that are generated in our model are the result of online computation rather than memory recall. However, as we highlight in the discussion, the interactions between episodic memory and the computations we describe would be especially interesting to examine in future work.

The paper is structured as follows. The computer simulations in this paper describe an agent acting in a simple two-dimensional environment. This environment produces visual, somatosensory and olfactory cues as described in the methods section on the ‘Environmental Model’. The agent then develops its own model of the environment as described in the ‘Probabilistic Model’ section. This describes the two elements of the model (i) a dynamical model describing the evolution of hidden states and (ii) a mapping from hidden states to sensory states. The section on ‘Spatial Cognition as Statistical Inference’ then describes how the various tasks of localisation, decision making (and sensory imagery), model selection and motor planning can be described in probabilistic terms. The section on ‘Forward and Backward Inference’ describes the common set of forward and backward recursions for estimating the required probability densities. The section on ‘Results’ describes an implementation of the above algorithms and provides some numerical results. The discussion section on ‘Neuronal Implementation’ then describes our proposal for how these algorithms are implemented in the brain and how functional connectivity among a candidate set of brain regions changes as a function of task. We conclude with a discussion of how the above computations might relate to pattern replay and what are the specific predictions of our model.

Methods

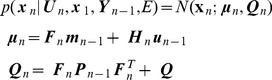

In what follows matrices are written in upper case bold type and vectors in lower case bold. Scalars are written in upper or lower case plain type. We use  to denote a multivariate Gaussian density over the random variable

to denote a multivariate Gaussian density over the random variable  having mean

having mean  and covariance

and covariance  . Table 1 provides a list of all the symbols used in the main text.

. Table 1 provides a list of all the symbols used in the main text.

Table 1. Description of mathematical symbols used in the main text.

| Environmental Model | |

|

Scaling of olfactory source |

|

Allocentric location of olfactory source |

|

Spatial diffusion of olfactory source |

|

Sequence of sensory states from environmental model |

| Sensory State Variables | |

|

Olfactory, somatosensory and visual states |

|

Sensory state (comprising  ) ) |

|

Sequence of sensory states up to time  (observations or goals) (observations or goals) |

|

Sensory noise |

|

Variance of olfactory noise |

|

Variance of somatosensory noise |

|

Covariance of visual noise |

|

Sensory noise covariance (blkdiag( )) )) |

| Control Variables | |

|

Control signal (virtual input or motor efference copy) |

|

Sequence of control signals up to time index

|

|

Estimate of control signal from backward inference |

|

Uncertainty in est. of control signal from backward inference |

| Hidden State Variables | |

|

Allocentric location comprising  and and

|

|

Speed |

|

Direction of heading |

|

Hidden state (comprising  ) at time step ) at time step

|

|

Hidden state sequence up to time index

|

|

Flow term describing change of state wrt. previous state |

|

Flow term describing change of state wrt. input |

|

Hidden state noise |

|

Hidden state noise covariance |

|

State estimate from path integration (forward inference) |

|

State estimate based on Bayes rule (forward inference) |

|

State estimate from backward inference |

|

Covariance of state estimate from path integration |

|

Covariance of state estimate from Bayes rule (forward inference) |

|

Covariance of state estimate from backward inference |

| Agent's Observation Model | |

|

Model of environment i |

, ,  , ,

|

Agent's predictions of olfactory, somatosensory and visual state |

|

Agent's predictions of sensory state |

|

Local linearisation of observation model |

|

Precision of head direction cells |

|

Output of  th head direction cell th head direction cell |

|

Output of  th spatial basis function th spatial basis function |

, ,  , ,

|

Weights in agent's olfactory, somatosensory and visual models |

Environmental Model

Computer simulations are implemented in Matlab (R2012a, The MathWorks, Inc.) and are based on an agent navigating in a simple 2D environment depicted in Figure 1. The location of the agent is specified using orthogonal allocentric coordinates  and its direction of heading (clockwise from positive

and its direction of heading (clockwise from positive  ) is

) is  . The environment contains two inner walls and four boundary walls. The agent is equipped with a touch sensor that detects the minimum Euclidian distance to a wall,

. The environment contains two inner walls and four boundary walls. The agent is equipped with a touch sensor that detects the minimum Euclidian distance to a wall,  . It is also equipped with a nose that detects olfactory input,

. It is also equipped with a nose that detects olfactory input,  . In this paper we consider a single olfactory source located at allocentric coordinates

. In this paper we consider a single olfactory source located at allocentric coordinates  . We assume this source diffuses isotropically with scale parameter

. We assume this source diffuses isotropically with scale parameter  so that olfactory input at location

so that olfactory input at location  is given by an exponential function

is given by an exponential function

| (1) |

All of the simulations use a single olfactory source with  ,

,  and

and  . More realistic environments with multiple olfactory sources and turbulence [26] are beyond the scope of this paper.

. More realistic environments with multiple olfactory sources and turbulence [26] are beyond the scope of this paper.

Figure 1. Model of environment.

Allocentric representation (left panel) and egocentric view (right panel). The agent (white triangle) is at allocentric location  and oriented at

and oriented at  degrees (clockwise relative to the positive

degrees (clockwise relative to the positive  axis). The environment contains two inner walls and four boundary walls. The agent is equipped with whiskers that detect the minimum Euclidian distance to a wall,

axis). The environment contains two inner walls and four boundary walls. The agent is equipped with whiskers that detect the minimum Euclidian distance to a wall,  . It is also equipped with a nose that detects the signal from an olfactory source placed at

. It is also equipped with a nose that detects the signal from an olfactory source placed at  ,

,  in the south-west corner of the maze (white circle). The agent also has a retina that is fixed in orientation and always aligned with the direction of heading,

in the south-west corner of the maze (white circle). The agent also has a retina that is fixed in orientation and always aligned with the direction of heading,  . The retina provides one-dimensional visual input,

. The retina provides one-dimensional visual input,  (displayed as a one-dimensional image in the right panel), from −45 to +45 degrees of visual angle around

(displayed as a one-dimensional image in the right panel), from −45 to +45 degrees of visual angle around  and comprising

and comprising  pixels.

pixels.

The agent is also equipped with a retina that is aligned with the direction of heading. The retina provides one-dimensional visual input,  , from −45 to +45 degrees of visual angle around

, from −45 to +45 degrees of visual angle around  and comprises

and comprises  pixels. The retina provides information about the ‘colour’ of the walls within its field of view. In our simulations ‘colour’ is a scalar variable which we have displayed using colormaps for ease of visualisation. The scalar values corresponding to the various walls are 0.14 (north border), 0.29 (east border), 0.43 (south border), 0.57 (west border), 0.71 (west wall), 0.86 (east wall). These map onto the colours shown in Figure 1 using Matlab's default colour map. Although classical laboratory navigation tasks do not involve walls with different colours, they employ extra-maze cues which enable experimental subjects to localize themselves. For the sake of simplicity, here we provide such visual information to the simulated agent by variation of wall colour.

pixels. The retina provides information about the ‘colour’ of the walls within its field of view. In our simulations ‘colour’ is a scalar variable which we have displayed using colormaps for ease of visualisation. The scalar values corresponding to the various walls are 0.14 (north border), 0.29 (east border), 0.43 (south border), 0.57 (west border), 0.71 (west wall), 0.86 (east wall). These map onto the colours shown in Figure 1 using Matlab's default colour map. Although classical laboratory navigation tasks do not involve walls with different colours, they employ extra-maze cues which enable experimental subjects to localize themselves. For the sake of simplicity, here we provide such visual information to the simulated agent by variation of wall colour.

The environmental model of retinal input takes the values of  and

and  and produces

and produces  using calculations based on the two-dimensional geometrical relation of the agent with the environment. This uses a simple ray-tracing algorithm. The agent then has its own predictive model of retinal input, described in the ‘vision’ section below, which predicts

using calculations based on the two-dimensional geometrical relation of the agent with the environment. This uses a simple ray-tracing algorithm. The agent then has its own predictive model of retinal input, described in the ‘vision’ section below, which predicts  from

from  and

and  using a basis set expansion. The agent has similar models of olfactory and somatosensory input (see ‘Olfaction’ and ‘Touch’ below). Overall, the environmental model produces the signals

using a basis set expansion. The agent has similar models of olfactory and somatosensory input (see ‘Olfaction’ and ‘Touch’ below). Overall, the environmental model produces the signals  ,

,  and

and  which form the sensory inputs to the agent's spatial cognition model (see next section). We write this as

which form the sensory inputs to the agent's spatial cognition model (see next section). We write this as  to denote sensory signals from the environment. For a sequence of signals we write

to denote sensory signals from the environment. For a sequence of signals we write  . These sensory inputs are surrogates for the compact codes produced by predictive coding in sensory cortices [27]. We emphasise that the agent has its own model of sensory input (an ‘observation model’) which is distinct from the environmental input itself. The agent's observation model is learnt from exposure to the environment.

. These sensory inputs are surrogates for the compact codes produced by predictive coding in sensory cortices [27]. We emphasise that the agent has its own model of sensory input (an ‘observation model’) which is distinct from the environmental input itself. The agent's observation model is learnt from exposure to the environment.

Probabilistic Model

We investigate agents having a model comprising two parts (i) a dynamical model and (ii) an observation model. The dynamical model describes how the agent's internal state,  is updated from the previous time step

is updated from the previous time step  and motor efference copy

and motor efference copy  . The observation model is a mapping from hidden states

. The observation model is a mapping from hidden states  to sensory states

to sensory states  . Our probabilistic model falls into the general class of discrete-time nonlinear state-space models

. Our probabilistic model falls into the general class of discrete-time nonlinear state-space models

| (2) |

where  is a control input,

is a control input,  is state noise and

is state noise and  is sensory noise. The noise components are Gaussian distributed with

is sensory noise. The noise components are Gaussian distributed with  and

and  . This is a Nonlinear Dynamical System (NDS) with inputs and hidden variables. We consider a series of time points

. This is a Nonlinear Dynamical System (NDS) with inputs and hidden variables. We consider a series of time points  and denote sequences of sensory states, hidden states, and controls using

and denote sequences of sensory states, hidden states, and controls using  ,

,  , and

, and  . These are also referred to as trajectories. The above equations implicitly specify the state transition probability density

. These are also referred to as trajectories. The above equations implicitly specify the state transition probability density  and the observation probability density

and the observation probability density  . This latter probability depends on the agent's model of its environment,

. This latter probability depends on the agent's model of its environment,  . Together these densities comprise the agent's generative model, as depicted in Figure 2 (top left).

. Together these densities comprise the agent's generative model, as depicted in Figure 2 (top left).

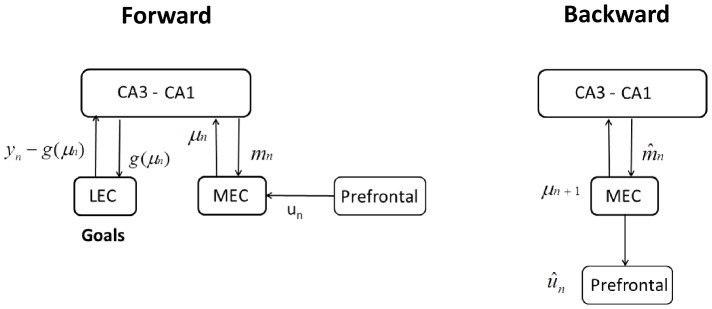

Figure 2. Generative model for spatial cognition.

The agent's dynamical model is embodied in the red arrows,  , and its observation model in the blue arrows,

, and its observation model in the blue arrows,  . All of the agent's spatial computations are based on statistical inference in this same probabilistic generative model. The computations are defined by what variables are known (gray shading) and what the agent wishes to estimate. Sensory Imagery Given a known initial state,

. All of the agent's spatial computations are based on statistical inference in this same probabilistic generative model. The computations are defined by what variables are known (gray shading) and what the agent wishes to estimate. Sensory Imagery Given a known initial state,  , and virtual motor commands

, and virtual motor commands  , the agent can generate sensory imagery

, the agent can generate sensory imagery  . Decision Making Given initial state

. Decision Making Given initial state  , a sequence of putative motor commands

, a sequence of putative motor commands  (eg. left turn), and sensory goals

(eg. left turn), and sensory goals  , an agent can compute the likelihood of attaining those goals given

, an agent can compute the likelihood of attaining those goals given  and

and  ,

,  . This computation requires a single sweep of forward inference. The agent can then repeat this for a second putative motor sequence (eg. right turn), and decide which turn to take based on the likelihood ratio. Model Selection Here, the agent has made observations

. This computation requires a single sweep of forward inference. The agent can then repeat this for a second putative motor sequence (eg. right turn), and decide which turn to take based on the likelihood ratio. Model Selection Here, the agent has made observations  and computes the likelihood ratio under two different models of the environment. Planning can be formulated as estimation of a density over actions

and computes the likelihood ratio under two different models of the environment. Planning can be formulated as estimation of a density over actions  given current state

given current state  and desired sensory states,

and desired sensory states,  . This requires a forward sweep to compute the hidden states that are commensurate with the goals, and a backward sweep to compute the motor commands that will produce the required hidden state trajectory.

. This requires a forward sweep to compute the hidden states that are commensurate with the goals, and a backward sweep to compute the motor commands that will produce the required hidden state trajectory.

Path integration

During spatial localisation, an agent's current location can be computed using path integration. This takes the previous location, direction of heading, velocity and elapsed time and uses them to compute current position, by integrating the associated differential equation. We assume that the agent is in receipt of a control signal  which delivers instructions to change direction,

which delivers instructions to change direction,  , and speed,

, and speed,  . During navigation, for example, these signals will correspond to motor efference copy. Later we will show how these control signals can be inferred by conditioning on desirable future events (i.e. how the agent performs planning). For the moment we assume the controls are known. The dynamical model is

. During navigation, for example, these signals will correspond to motor efference copy. Later we will show how these control signals can be inferred by conditioning on desirable future events (i.e. how the agent performs planning). For the moment we assume the controls are known. The dynamical model is

|

(3) |

Here the state variables are two orthogonal axes of allocentric location,  , speed

, speed  and direction

and direction  (clockwise angle relative to the positive

(clockwise angle relative to the positive  axis). Motion is also subject to frictional forces as defined by the constant

axis). Motion is also subject to frictional forces as defined by the constant  . We set

. We set  . We can write a state vector

. We can write a state vector  . The control signals

. The control signals  and

and  change the agent's speed and direction. We can write

change the agent's speed and direction. We can write

| (4) |

which can be integrated to form a discrete-time representation

| (5) |

using local linearisation as described in Text S1. If the deterministic component of the dynamics is originally described using differential equations, the flow terms  and

and  can be computed as shown in Text S1. Here

can be computed as shown in Text S1. Here  describes how the current hidden state depends on the previous hidden state, and

describes how the current hidden state depends on the previous hidden state, and  how it depends on the previous input. An example of using the above equations for implementing path integration is described in the ‘Sensory Imagery’ simulation section below. Errors in path integration, perhaps due to inaccuracies in the representation of time or in local linearisation, can also be included, i.e.

how it depends on the previous input. An example of using the above equations for implementing path integration is described in the ‘Sensory Imagery’ simulation section below. Errors in path integration, perhaps due to inaccuracies in the representation of time or in local linearisation, can also be included, i.e.

| (6) |

where  is a random variable. This corresponds to a locally linearised version of equation 2. For the results in this paper we used a local regression method, due to Schaal et al. [28], to compute

is a random variable. This corresponds to a locally linearised version of equation 2. For the results in this paper we used a local regression method, due to Schaal et al. [28], to compute  and

and  as this resulted in more robust estimates. This is described in Text S1.

as this resulted in more robust estimates. This is described in Text S1.

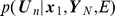

Multisensory input

We consider agents with sensory states,  having olfactory, somatosensory and visual components. Sensory states will typically be low-dimensional codes that index richer multimodal representations in sensory cortices. During navigation and model selection these will correspond to inputs from the environmental model,

having olfactory, somatosensory and visual components. Sensory states will typically be low-dimensional codes that index richer multimodal representations in sensory cortices. During navigation and model selection these will correspond to inputs from the environmental model,  . During decision making and motor planning these will correspond to internally generated sensory goals. The agent associates hidden states with sensory states using the mapping

. During decision making and motor planning these will correspond to internally generated sensory goals. The agent associates hidden states with sensory states using the mapping  , a nonlinear function of the state variables. We have

, a nonlinear function of the state variables. We have

| (7) |

where  is zero-mean Gaussian noise with covariance

is zero-mean Gaussian noise with covariance  . During localisation and model selection

. During localisation and model selection  corresponds to the agent's prediction of its sensory input, and

corresponds to the agent's prediction of its sensory input, and  specifies the covariance of the prediction errors. These predictions can be split into modality-specific components

specifies the covariance of the prediction errors. These predictions can be split into modality-specific components  with associated prediction errors having (co-)variances

with associated prediction errors having (co-)variances  ,

,  and

and  . Equation 7 defines the likelihood

. Equation 7 defines the likelihood

| (8) |

We assume the different modalities are independent given the state so that

| (9) |

where

|

(10) |

so that  . We now describe the agent's model for generating the predictions

. We now describe the agent's model for generating the predictions  ,

,  and

and  . Olfactory input is predicted using a basis set

. Olfactory input is predicted using a basis set

| (11) |

where  is the number of basis functions,

is the number of basis functions,  is the location, and

is the location, and  are parameters of the olfactory model. Here we use a local basis function representation where

are parameters of the olfactory model. Here we use a local basis function representation where

is the response of the  th basis cell. Following Foster et al. [29]

th basis cell. Following Foster et al. [29]

may be viewed as an idealised place cell output, where

may be viewed as an idealised place cell output, where  is the spatial location of the centre of cell i's place field, and

is the spatial location of the centre of cell i's place field, and  its breadth. We assume that the parameters governing the location and width of these cells have been set in a previous learning phase. In this paper we used

its breadth. We assume that the parameters governing the location and width of these cells have been set in a previous learning phase. In this paper we used  and the centres of the place fields

and the centres of the place fields  were arranged to form a 10-by-10 grid in allocentric space. The same set of cells were used as a basis for predicting olfactory, somatosensory and visual input.

were arranged to form a 10-by-10 grid in allocentric space. The same set of cells were used as a basis for predicting olfactory, somatosensory and visual input.

The parameters  will have to be learnt for each new environment. For the results in this paper they are learnt using a regression approach, which assumes knowledge of the agent's location. More generally, they will have to be learnt without such knowledge and on a slower time scale than (or after learning of) the place cell centres and widths. This is perfectly feasible but beyond the scope of the current paper. We return to this issue in the discussion.

will have to be learnt for each new environment. For the results in this paper they are learnt using a regression approach, which assumes knowledge of the agent's location. More generally, they will have to be learnt without such knowledge and on a slower time scale than (or after learning of) the place cell centres and widths. This is perfectly feasible but beyond the scope of the current paper. We return to this issue in the discussion.

In the agent's model, somatosensory input is predicted using a basis set

| (12) |

where  are the parameters of the somatosensory model. Here we envisage that processing in somatosensory cortex is sufficiently sophisticated to deliver a signal

are the parameters of the somatosensory model. Here we envisage that processing in somatosensory cortex is sufficiently sophisticated to deliver a signal  that is the minimum distance to a physical boundary. If the agent had whiskers, a simple function of

that is the minimum distance to a physical boundary. If the agent had whiskers, a simple function of  would correspond to the amount of whisker-related neural activity. More sophisticated generative models of somatosensory input would have a directional, and perhaps a dynamic component. But this is beyond the scope of the current paper.

would correspond to the amount of whisker-related neural activity. More sophisticated generative models of somatosensory input would have a directional, and perhaps a dynamic component. But this is beyond the scope of the current paper.

The agent's retina is aligned with the direction of heading,  . The retina provides one-dimensional visual input,

. The retina provides one-dimensional visual input,  , from −45 to +45 degrees of visual angle around

, from −45 to +45 degrees of visual angle around  and comprising

and comprising  pixels. An example of retinal input is shown in the right panel of Figure 1. The agent's prediction of this visual input is provided by a weighted conjunction of inputs from populations of place/grid and head direction cells. The head direction cells are defined as

pixels. An example of retinal input is shown in the right panel of Figure 1. The agent's prediction of this visual input is provided by a weighted conjunction of inputs from populations of place/grid and head direction cells. The head direction cells are defined as

| (13) |

where  is the preferred angle of the

is the preferred angle of the  th basis function and

th basis function and  defines the range of angles to which it is sensitive. The output for retinal angle

defines the range of angles to which it is sensitive. The output for retinal angle  is given simply by

is given simply by  . Visual input at retinal angle

. Visual input at retinal angle  is then predicted to be

is then predicted to be

| (14) |

This sort of conjunctive representation is widely used to provide transformations among coordinate systems and, for sensorimotor transforms, is thought to be supported by parietal cortex [30]. The above mapping is adaptable and can be optimised by choosing appropriate weights  and these will have to be learnt for each new environment.

and these will have to be learnt for each new environment.

It is a gross simplification to predict retinal input, or egocentric views, with a single stage of computation as in the above equation. More realistic models of this process [31], [32] propose separate representations of the spatial and textural components of landmarks, with bilateral connectivity to cells in a parietal network which effect a transform between allocentric and egocentric coordinates. Egocentric view cells are then also connected to this parietal network. This level of detail is omitted from our current model, as our aim is to focus on temporal dynamics.

Overall, the agent's model of multisensory input has parameters  . For each new environment,

. For each new environment,  , the agent has a separate set of parameters. Experiments on rats have found that changes to the environment cause changes in the pattern of firing of place cells [33], [34]. This could happen in our model if the cells fire at rates

, the agent has a separate set of parameters. Experiments on rats have found that changes to the environment cause changes in the pattern of firing of place cells [33], [34]. This could happen in our model if the cells fire at rates  ,

,  and

and  and the parameters

and the parameters  are updated to reflect changes in sensory features. In the simulations that follow the

are updated to reflect changes in sensory features. In the simulations that follow the  parameters are set using a separate learning phase prior to spatial cognition. More detailed models of this learning process propose that cells in the dentate gyrus select which CA3 cells will be engaged for encoding a new environment [35]. Connections from EC to selected CA3 cells are then updated to learn the relevant place-landmark associations.

parameters are set using a separate learning phase prior to spatial cognition. More detailed models of this learning process propose that cells in the dentate gyrus select which CA3 cells will be engaged for encoding a new environment [35]. Connections from EC to selected CA3 cells are then updated to learn the relevant place-landmark associations.

Spatial Cognition as Statistical Inference

This section describes, initially at the level of manipulations of probability densities, how the various computations underlying spatial cognition can be implemented. It then describes a practical algorithm based on local linearisation. If an agent has a probabilistic model of its environment,  , then the various tasks that together comprise spatial cognition are optimally implemented using statistical inference in that model. These inferences will be optimal in the sense of maximising likelihood. The various tasks - localisation, imagery, decision making, model selection and planning - all rely on the same statistical model. They are differentiated by what variables are known and what the agent wishes to compute. This is depicted in the panels in Figure 2 where shaded circles denote known quantities. Additionally, for each task, the information entering the system may be of a different nature. For example, for imagery, the inputs,

, then the various tasks that together comprise spatial cognition are optimally implemented using statistical inference in that model. These inferences will be optimal in the sense of maximising likelihood. The various tasks - localisation, imagery, decision making, model selection and planning - all rely on the same statistical model. They are differentiated by what variables are known and what the agent wishes to compute. This is depicted in the panels in Figure 2 where shaded circles denote known quantities. Additionally, for each task, the information entering the system may be of a different nature. For example, for imagery, the inputs,  , are virtual motor commands and for localisation they are motor efference copies. Similarly, during localisation and model selection the agent receives inputs from sensory cortices. For the simulations in this paper these come from the environmental model,

, are virtual motor commands and for localisation they are motor efference copies. Similarly, during localisation and model selection the agent receives inputs from sensory cortices. For the simulations in this paper these come from the environmental model,  . However, during decision making and motor planning these inputs do not derive from the agent's environment but are generated internally and correspond to the agent's goals

. However, during decision making and motor planning these inputs do not derive from the agent's environment but are generated internally and correspond to the agent's goals  .

.

Localisation

The use of dynamic models with hidden states for spatial localisation is well established in the literature [20], [36], [37]. Estimation of spatial location requires motor efference copy  , and sensory input

, and sensory input  . The initial location

. The initial location  may be known or specified with some degree of uncertainty. Forward inference over states (in time) can then be used to optimally combine probabilistic path integration with sensory input to estimate location. This produces the density

may be known or specified with some degree of uncertainty. Forward inference over states (in time) can then be used to optimally combine probabilistic path integration with sensory input to estimate location. This produces the density  . A Gaussian approximation to this density based on a local linearisation is described below in the section on forward inference over states (see equation 24). The agent's best estimate of its location is then given by the maximum likelihood estimate

. A Gaussian approximation to this density based on a local linearisation is described below in the section on forward inference over states (see equation 24). The agent's best estimate of its location is then given by the maximum likelihood estimate

| (15) |

We refer to this as a maximum likelihood estimate because there is no distribution over  prior to observing the sequence

prior to observing the sequence  . This is commensurate with standard terminology [38]. However, one could also think of this as a posterior estimate, due to the sequential nature of the estimation process (see below), in that there is a distribution over

. This is commensurate with standard terminology [38]. However, one could also think of this as a posterior estimate, due to the sequential nature of the estimation process (see below), in that there is a distribution over  prior to the observation at a single time point

prior to the observation at a single time point  . For the Gaussian approximation to this density, we have

. For the Gaussian approximation to this density, we have  where

where  is the mean of the Gaussian.

is the mean of the Gaussian.

It is also possible to improve the above estimates retrospectively

| (16) |

where  . For example, upon leaving an underground metro system and turning left you may not know that you are heading north until you encounter a familiar landmark. You can then use this observation to update your estimate about where you have been previously. Estimation of

. For example, upon leaving an underground metro system and turning left you may not know that you are heading north until you encounter a familiar landmark. You can then use this observation to update your estimate about where you have been previously. Estimation of  requires forward and backward inference over hidden states (see equation 30). The Gaussian approximation to this density has mean

requires forward and backward inference over hidden states (see equation 30). The Gaussian approximation to this density has mean  , so that under the local linear approximation we have

, so that under the local linear approximation we have  .

.

Decision making

Given initial state  , a sequence of putative motor commands

, a sequence of putative motor commands  (eg. left turn), and sensory goals

(eg. left turn), and sensory goals  , an agent can compute the likelihood of attaining those goals,

, an agent can compute the likelihood of attaining those goals,  . This computation requires a single sweep (or ‘replay’ - see discussion) of forward inference (see equation 29 in the section on ‘Likelihood’ below). The agent can then repeat this for a second putative motor sequence (eg. right turn),

. This computation requires a single sweep (or ‘replay’ - see discussion) of forward inference (see equation 29 in the section on ‘Likelihood’ below). The agent can then repeat this for a second putative motor sequence (eg. right turn),  , and decide which turn to take based on the likelihood ratio.

, and decide which turn to take based on the likelihood ratio.

| (17) |

Here  are internally generated task goals rather than sensory input from the environment

are internally generated task goals rather than sensory input from the environment  . Decisions based on the likelihood ratio are statistically optimal [38]. In probabilistic models of sequential data the likelihood can be computed by a single forward pass of inference, as described below. We would therefore need two forward passes to compute the LR, one for each putative motor sequence.

. Decisions based on the likelihood ratio are statistically optimal [38]. In probabilistic models of sequential data the likelihood can be computed by a single forward pass of inference, as described below. We would therefore need two forward passes to compute the LR, one for each putative motor sequence.

This formulation of decision making is based on sets of motor primitives being combined to form actions such as ‘turn left’ or ‘turn right’. This can therefore also be regarded as motor planning (see below) at some higher level. Additionally, the generation of sensory imagery can be viewed as a component of decision making because, to evaluate the likelihood, sensory goals must be compared with sensory predictions from the agent's generative model. In later sections we consider sensory imagery in its own right.

Model selection

Given motor efference copy  , and sensory input

, and sensory input  the agent computes the likelihood ratio under two different models of the environment. The agent's best estimate of which environment it is in, is given by the maximum likelihood estimate

the agent computes the likelihood ratio under two different models of the environment. The agent's best estimate of which environment it is in, is given by the maximum likelihood estimate

| (18) |

For consistency with terminology in statistics, we refer to this as model selection. This can be implemented using multiple sweeps of forward inference, one for each potential environment. The likelihood can be computed, for example, for two maze models  and

and  each hypothesising that the agent is in a particular environment. To decide which environment the observations are drawn from one can compute the likelihood ratio

each hypothesising that the agent is in a particular environment. To decide which environment the observations are drawn from one can compute the likelihood ratio

| (19) |

where each probability is computed using equation 29 in the section on ‘Likelihood’ below.

Motor planning

Given current state  and sensory goals,

and sensory goals,  , planning can be formulated as estimation of a density over actions

, planning can be formulated as estimation of a density over actions  , as depicted in Figure 2. This requires a forward sweep to compute the hidden states that are commensurate with the goals, and a backward sweep to compute the motor commands that will produce the required hidden state trajectory. This is described in the section below on ‘Inference over Inputs’ and can be implemented using equations 33 and 34. The agent's best estimate of the motor commands needed to attain sensory goals

, as depicted in Figure 2. This requires a forward sweep to compute the hidden states that are commensurate with the goals, and a backward sweep to compute the motor commands that will produce the required hidden state trajectory. This is described in the section below on ‘Inference over Inputs’ and can be implemented using equations 33 and 34. The agent's best estimate of the motor commands needed to attain sensory goals  is given by the maximum likelihood estimate

is given by the maximum likelihood estimate

| (20) |

Here  are internally generated task goals rather than sensory input from the environment

are internally generated task goals rather than sensory input from the environment  .

.

Forward and Backward Inference

Text S2 describes how the required probability densities can be computed at the very general level of manipulations of probability densities. However, these operations cannot be implemented exactly. They can only be implemented approximately and there are basically two types of approximate inference methods. These are based either on sampling [39] or Local Linearization (LL) [40]. In this paper we adopt an LL approach although this is not without disadvantages. We return to this important issue in the discussion. The following subsections describe the forward and backward inference algorithms under LL assumptions. Readers unfamiliar with statistical inference for dynamical systems models may benefit from textbook material [38].

Forward inference over hidden states

The problem of estimating the hidden states given current and previous sensory states is solved using Forward Inference. This produces the marginal densities  . Estimation of the state

. Estimation of the state  is based only on information up to that time point. For Linear Dynamical Systems (LDS), forward inference corresponds to the Kalman Filter, and for nonlinear dynamical systems under LL, forward inference can be instantiated using an Extended Kalman Filter (EKF) [40]. After local linearisation the state-space model can be written as

is based only on information up to that time point. For Linear Dynamical Systems (LDS), forward inference corresponds to the Kalman Filter, and for nonlinear dynamical systems under LL, forward inference can be instantiated using an Extended Kalman Filter (EKF) [40]. After local linearisation the state-space model can be written as

| (21) |

where  ,

,  and

and  are Jacobian matrices (see Text S1 and below). There is a long history of applying KFs, EKFs and related state-space models to the problem of localisation [20], [36]. Indeed one of the key implementations of the KF is for solving the localisation problem. These probabilistic algorithms have been used in a formalism known as Simultaneous Localisation and Mapping (SLAM) [37]. The goal of SLAM research is to develop an algorithm that would allow an agent to explore and map novel environments.

are Jacobian matrices (see Text S1 and below). There is a long history of applying KFs, EKFs and related state-space models to the problem of localisation [20], [36]. Indeed one of the key implementations of the KF is for solving the localisation problem. These probabilistic algorithms have been used in a formalism known as Simultaneous Localisation and Mapping (SLAM) [37]. The goal of SLAM research is to develop an algorithm that would allow an agent to explore and map novel environments.

In the context of localisation, forward inference allows information from path integration and sensory input to be combined in an optimal way. Under a local linear approximation the state estimates are Gaussian

| (22) |

and these quantities can be estimated recursively using an EKF. Here  is the agent's estimate of

is the agent's estimate of  based only on information up to time index

based only on information up to time index  . The covariance

. The covariance  quantifies the agent's uncertainty about

quantifies the agent's uncertainty about  , again based on information up to that time point. The agent's best estimate of location, based on forward inference, is then given by the first two entries in

, again based on information up to that time point. The agent's best estimate of location, based on forward inference, is then given by the first two entries in  (the third and fourth entries are speed and direction, see equation 3). The EKF equations can be expressed in two steps. The first is a prediction step

(the third and fourth entries are speed and direction, see equation 3). The EKF equations can be expressed in two steps. The first is a prediction step

|

(23) |

where  is the state noise covariance defined earlier. During localisation this corresponds to probabilistic path integration. The second is a correction step

is the state noise covariance defined earlier. During localisation this corresponds to probabilistic path integration. The second is a correction step

|

(24) |

where the ‘Kalman Gain’ is

| (25) |

and the  th entry in

th entry in  is given by

is given by

| (26) |

evaluated at  . The correction step provides optimal combination of probabilistic path integration with sensory input. More specifically, probabilistic path integration produces an estimate of the current state

. The correction step provides optimal combination of probabilistic path integration with sensory input. More specifically, probabilistic path integration produces an estimate of the current state  . The agent produces a prediction of sensory input

. The agent produces a prediction of sensory input  and compares it with actual sensory input

and compares it with actual sensory input  . The final estimate of the current state is then

. The final estimate of the current state is then  plus the Kalman gain times the prediction error

plus the Kalman gain times the prediction error  . This very naturally follows predictive coding principles, as described below in the section on Neuronal Implementation. Together, the above updates implement an EKF and these recursions are initialised by specifying the initial distribution over hidden states.

. This very naturally follows predictive coding principles, as described below in the section on Neuronal Implementation. Together, the above updates implement an EKF and these recursions are initialised by specifying the initial distribution over hidden states.

| (27) |

Likelihood

As described in Text S2, we can use the predictive densities to compute the likelihood of a data sequence. Under local linearisation the predictive density is given by

|

(28) |

The log-likelihood of a sequence of observations is then

|

(29) |

where  is the prediction error. The (log) likelihood of sensory input

is the prediction error. The (log) likelihood of sensory input  can thus be computed using equation 29. The first term in this equation corresponds to an accumulation of sum-squared prediction errors weighted by the inverse variance (precision). During decision making, the likelihood of attaining sensory goals

can thus be computed using equation 29. The first term in this equation corresponds to an accumulation of sum-squared prediction errors weighted by the inverse variance (precision). During decision making, the likelihood of attaining sensory goals  under a proposed control sequence

under a proposed control sequence  is computed using this method. During model selection, the likelihood of sensory observations

is computed using this method. During model selection, the likelihood of sensory observations  , under a proposed model of the environment,

, under a proposed model of the environment,  , is also computed using this method.

, is also computed using this method.

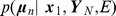

Backward inference over hidden states

Forward inference over the states is used to estimate a distribution over  using all observations up to time point

using all observations up to time point  . Backward inference over the states can then be used to improve these estimates by using observations up to time point

. Backward inference over the states can then be used to improve these estimates by using observations up to time point  i.e. future observations. The resulting estimates are therefore retrospective. An example of when this retrospective updating is beneficial is when the observation of a new landmark disambiguates where you have previously been located. For locally linear systems, Backward Inference over states is implemented using

i.e. future observations. The resulting estimates are therefore retrospective. An example of when this retrospective updating is beneficial is when the observation of a new landmark disambiguates where you have previously been located. For locally linear systems, Backward Inference over states is implemented using

|

(30) |

Here,  is the optimal state estimate given all sensory data up to time

is the optimal state estimate given all sensory data up to time  . Intuitively, the state estimate based on data up to time

. Intuitively, the state estimate based on data up to time  ,

,  , is improved upon based on state estimates at future time points (

, is improved upon based on state estimates at future time points ( for

for  ). The resulting sequence

). The resulting sequence  will provide more accurate state estimates than those based on purely forward inference,

will provide more accurate state estimates than those based on purely forward inference,  .

.

The above formulae are known as the ‘gamma recursions’ (see Text S2). An alternative algorithm for computing  , based on the ‘beta recursions’, requires storage of the data sequence

, based on the ‘beta recursions’, requires storage of the data sequence  and so is not an online algorithm. The gamma recursions may therefore have a simpler neuronal implementation (see below).

and so is not an online algorithm. The gamma recursions may therefore have a simpler neuronal implementation (see below).

The above recursions depend on a number of quantities from forward inference. These are  ,

,  ,

,  and

and  . The gamma recursions are initialised with

. The gamma recursions are initialised with  and

and  . For an LDS the above equations constitute the well-known Rauch-Tung-Striebel (RTS) smoother. Various reparameterisations can be made to remove computation of matrix inverses [41]. A predictive coding interpretation is readily applied to the second row of the above equation. The backward estimate

. For an LDS the above equations constitute the well-known Rauch-Tung-Striebel (RTS) smoother. Various reparameterisations can be made to remove computation of matrix inverses [41]. A predictive coding interpretation is readily applied to the second row of the above equation. The backward estimate  is equal to the forward estimate

is equal to the forward estimate  plus a correction term which is given by a learning rate matrix

plus a correction term which is given by a learning rate matrix  times a prediction error. This prediction error is the difference between the estimate of the next state based on the entire data sequence,

times a prediction error. This prediction error is the difference between the estimate of the next state based on the entire data sequence,  , minus the prediction of the next state based only on data up to the current time point,

, minus the prediction of the next state based only on data up to the current time point,  .

.

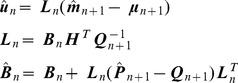

Inference over inputs

This section describes forward and backward inference over hidden states and inputs. If the controls are unknown we can estimate them by computing  where

where  is the current state and

is the current state and  are the desired sensory states. This probability can be computed via forward and backward inference in the following locally linearised model

are the desired sensory states. This probability can be computed via forward and backward inference in the following locally linearised model

| (31) |

with  ,

,  and

and  . The initial control values are distributed as

. The initial control values are distributed as

| (32) |

Informally, the forward sweep is necessary to compute the hidden states that are commensurate with sensory goals, and the backward sweep for computing the inputs that will produce the required state trajectory. Text S3 shows how inferences about the unknown controls can be made by creating an augmented state-space model and using the previously described equations for forward and backward inference over the states. The density over estimated inputs is a Gaussian

| (33) |

with mean  and covariance

and covariance  . In the absence of correlations between inputs and hidden states the backward inference formulae have the simplified form

. In the absence of correlations between inputs and hidden states the backward inference formulae have the simplified form

|

(34) |

Effectively, the optimal inputs are estimated using a model-based deconvolution of the desired sensory states.

Results

This section describes computer simulations showing how the agent's model can be used to generate visual imagery, and how inference in that model can implement decision making, model selection and motor planning. Here, ‘model selection’ refers to estimating which model of the environment is most likely given sensory data. An agent would use this to figure out what maze it was in.

In what follows we assume the agent is already equipped with the correct dynamical model  . The first section below describes a preliminary learning phase in which the sensory mapping

. The first section below describes a preliminary learning phase in which the sensory mapping  is learnt for a given environment

is learnt for a given environment  . Once the agent has a dynamical and a sensory mapping it is in effect equipped with a model of its environment which can be thought of as its own virtual reality system. It can then predict the sensory consequences of the control signals it receives.

. Once the agent has a dynamical and a sensory mapping it is in effect equipped with a model of its environment which can be thought of as its own virtual reality system. It can then predict the sensory consequences of the control signals it receives.

The degree to which each sensory modality is used in the following simulations is determined by the relative values of observation noise covariance (see Text S4 for details). Here we set  ,

,  and

and  (see equation 10). This means that the agent is guided most by olfaction and touch, and least by vision. Note, however, that as there are many more visual than somatosensory or olfactory inputs this differential weighting is perhaps less distinct than it might first appear. All the simulations use

(see equation 10). This means that the agent is guided most by olfaction and touch, and least by vision. Note, however, that as there are many more visual than somatosensory or olfactory inputs this differential weighting is perhaps less distinct than it might first appear. All the simulations use  time points with a time step of

time points with a time step of  . The simulations also used a very low level of dynamical noise,

. The simulations also used a very low level of dynamical noise,  , except for the planning example where we used

, except for the planning example where we used  .

.

Sensory Imagery

This section describes a preliminary learning phase in which an agent is exposed to an environment to learn the sensory mapping from states  to observations

to observations  . Here the agent is provided with the observations

. Here the agent is provided with the observations  and also exact knowledge of the hidden states

and also exact knowledge of the hidden states  . More realistic simulations would also require the agent to infer the hidden states

. More realistic simulations would also require the agent to infer the hidden states  whilst learning. This is in principle straightforward but is beyond the scope of the current paper, as our focus is on temporal dynamics. We return to this point in the discussion.

whilst learning. This is in principle straightforward but is beyond the scope of the current paper, as our focus is on temporal dynamics. We return to this point in the discussion.

The olfactory and sensorimotor models use a 10-by-10 grid of basis cells giving 100 cells in all. We assume that the parameters governing the location and width of these cells have been set in a previous learning phase. The weight vectors  and

and  (see equations 11 and 12) were optimised using least squares regression and 225 training exemplars with uniform spatial sampling. The retinal model used the same number and location of basis cells. It additionally used 32 head direction cells each having a directional precision parameter

(see equations 11 and 12) were optimised using least squares regression and 225 training exemplars with uniform spatial sampling. The retinal model used the same number and location of basis cells. It additionally used 32 head direction cells each having a directional precision parameter  . The conjunctive representation comprised 3200 basis cells. The weight vector

. The conjunctive representation comprised 3200 basis cells. The weight vector  (see equation 14) was optimised using least squares and a training set comprising 10,575 exemplars. These were generated from spatial positions taken uniformly throughout the maze. Visual input from the environmental model for multiple directions at each spatial location was used to create the training examples. At the end of this learning phase the agent is exquisitely familiar with the environment.

(see equation 14) was optimised using least squares and a training set comprising 10,575 exemplars. These were generated from spatial positions taken uniformly throughout the maze. Visual input from the environmental model for multiple directions at each spatial location was used to create the training examples. At the end of this learning phase the agent is exquisitely familiar with the environment.

A trained model can then be used to generate visual imagery. This is implemented by specifying a synthetic control sequence, running path integration and generating predictions from the model. For example, Figure 3A shows a control sequence that is used to generate the ‘north-east’ trajectory shown in Figure 3C. We also generated ‘north-west’, ‘south-west’ and ‘south-east’ trajectories by changing the sign of direction change,  , and/or the initial direction,

, and/or the initial direction,  .

.

Figure 3. Visual imagery.

(A) Control sequence used to generate visual imagery for the ‘north-east’ trajectory. The input signals are acceleration,  , and change in direction,

, and change in direction,  . These control signals change the agent's state according to equation 3. (B) The state variables speed

. These control signals change the agent's state according to equation 3. (B) The state variables speed  and direction

and direction  produced by the control sequence in A. (C) The state variables

produced by the control sequence in A. (C) The state variables  and

and  shown as a path (red curve). This is the ‘north-east’ trajectory. The state variable time series in B and C were produced by integrating the dynamics in equation 3 using the local linearisation approach of equation 5. (D) Accuracy of visual imagery produced by agent as compared to sensory input that would have been produced by the environmental model. The figure shows the proportion of variance,

shown as a path (red curve). This is the ‘north-east’ trajectory. The state variable time series in B and C were produced by integrating the dynamics in equation 3 using the local linearisation approach of equation 5. (D) Accuracy of visual imagery produced by agent as compared to sensory input that would have been produced by the environmental model. The figure shows the proportion of variance,  , explained by the agent's model as a function of retinal angle,

, explained by the agent's model as a function of retinal angle,  . This was computed separately for the north-east (black), north-west (red), south-east (blue) and south-west (green) trajectories. Only activity in the centre of the retina is accurately predicted.

. This was computed separately for the north-east (black), north-west (red), south-east (blue) and south-west (green) trajectories. Only activity in the centre of the retina is accurately predicted.

To quantitatively assess the accuracy of these imagery sequences,  , we compared them to the sequence of visual inputs that would have been received from the environmental model,

, we compared them to the sequence of visual inputs that would have been received from the environmental model,  . Figure 3D plots the proportion of variance explained by the agent's model as a function of retinal angle. These plots were computed separately for each trajectory, and show that only activity in the central retina is accurately predicted. This is due to the increased optic flow in peripheral regions of the agent's retina. The asymmetry in Figure 3D is due to the particular spatial arrangement and numerical values of the visual cues. These results suggest that it would be better to have a retina with lower spatial resolution in the periphery.

. Figure 3D plots the proportion of variance explained by the agent's model as a function of retinal angle. These plots were computed separately for each trajectory, and show that only activity in the central retina is accurately predicted. This is due to the increased optic flow in peripheral regions of the agent's retina. The asymmetry in Figure 3D is due to the particular spatial arrangement and numerical values of the visual cues. These results suggest that it would be better to have a retina with lower spatial resolution in the periphery.

Localisation

This simulation shows how an agent can localise itself in an environment. The agent was located centrally and moved according to the south-east trajectory. Its exact path was computed using noiseless path integration and the appropriate environmental inputs were provided to the agent.

In the discussion section below we propose a mapping of the forward and backward inference equations onto the hippocampal-entorhinal complex. We now report the results of two simulations. The first used the standard forward inference updates in equations 23 and 24. This corresponds to the algorithm that an agent with an intact hippocampus would use. The second, however, had a ‘lesioned hippocampus’ in that only the path integral updates in equation 23 were used (we set  ). This in effect removed the top down input from hippocampus to MEC (see ‘Localisation’ subsection in the discussion) so that path integral errors are not corrected by sensory input. In both cases the agent's path updates,

). This in effect removed the top down input from hippocampus to MEC (see ‘Localisation’ subsection in the discussion) so that path integral errors are not corrected by sensory input. In both cases the agent's path updates,  , were subject to a small amount of noise (with standard deviation 0.01) at each time step.

, were subject to a small amount of noise (with standard deviation 0.01) at each time step.

Figure 4 shows the results for single and multiple trials. Here, localisation with an intact hippocampus results in better tracking of the agent's location. Localisation accuracy was assessed over multiple trials ( ) and found to be significantly more accurate with, rather than without, a hippocampus (

) and found to be significantly more accurate with, rather than without, a hippocampus ( ). The mean localisation error was 60 per cent smaller with a hippocampus.

). The mean localisation error was 60 per cent smaller with a hippocampus.

Figure 4. Localisation.

Left: Representative result from a single trial showing true route computed using noiseless path integration (black curve), localisation with a noisy path integrator and no Hippocampus (blue curve) and localisation with a noisy path integrator and a Hippocampus (red curve). Right: Boxplots of localisation error over trials with medians indicated by red bars, box edges indicating 25th and 75th percentiles, whiskers indicating more extreme points, and outliers plotted as red crosses.

For the above simulations we disabled somatosensory input by setting  . This was found to be necessary as this input is not a reliable predictor of location (the distance from a boundary is the same at very many locations in an environment).

. This was found to be necessary as this input is not a reliable predictor of location (the distance from a boundary is the same at very many locations in an environment).

Decision Making

This simulation shows how an agent can make a decision about which direction to turn by computing likelihood ratios. To demonstrate this principle, we selected the ‘north-west’ and ‘north-east’ trajectories as two possible control sequences. The sensory goal  was set equal to the sensory input that would be received at the end of the ‘north-east’ trajectory. This goal was set to be identical at all time points

was set equal to the sensory input that would be received at the end of the ‘north-east’ trajectory. This goal was set to be identical at all time points  .

.

The agent's starting location was  and

and  with initial speed set to zero. The log of the likelihood ratio (see equation 28),

with initial speed set to zero. The log of the likelihood ratio (see equation 28),  , for model 1 versus model 2 was then computed at each time step. Figure 5 shows the accumulated

, for model 1 versus model 2 was then computed at each time step. Figure 5 shows the accumulated  as a function of the

as a function of the  to

to  time points along the trajectory. A

time points along the trajectory. A  of 3 corresponds to a probability of 95% [42]. This indicates that a confident decision can be made early on in the hypothesized trajectories.

of 3 corresponds to a probability of 95% [42]. This indicates that a confident decision can be made early on in the hypothesized trajectories.

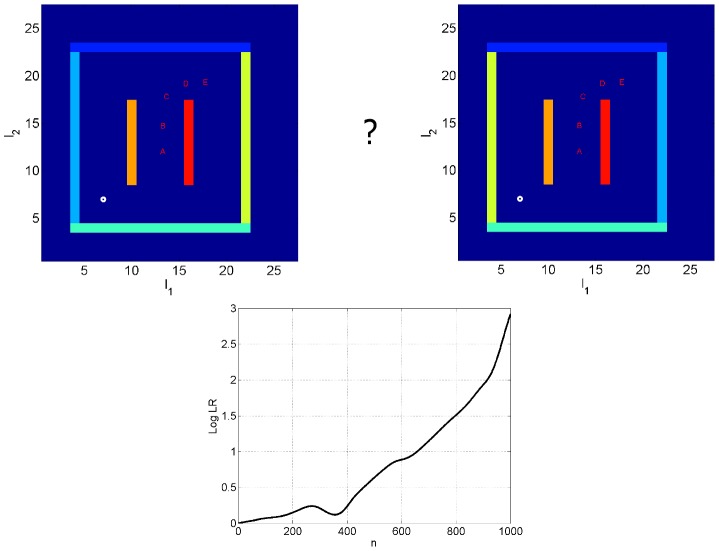

Figure 5. Decision making.

The task of decision making is to decide whether to make a left or a right turn (hence the question mark in the above graphic). Top Left: Locations on the route of the ‘left turn’ or north-west trajectory (red curve) Top Right: The markers A, B, C, D and E denote locations on the ‘right turn’ or north-east trajectory corresponding to time points  and

and  respectively. Bottom: The log likelihood ratio (of north-east versus north-west),

respectively. Bottom: The log likelihood ratio (of north-east versus north-west),  , as a function of the number of time points along the trajectory.

, as a function of the number of time points along the trajectory.

The degree to which each sensory modality is used in the above computations is determined by the relative values of observation noise covariances (see Text S4). These were initially fixed to the values described at the beginning of the simulations section. Whilst a confident decision could soon be reached using the above default values, decomposition of the LR into modality specific terms showed a strong contribution from both olfactory and visual modalities, but a somatosensory contribution that was initially rather noisy. This is due to small idiosyncrasies in the predictions of somatosensory values. We therefore experimented with the level of somatosensory noise covariance. Figure 5 was produced using a value of  which means LR effectively ignores this contribution (although we also have

which means LR effectively ignores this contribution (although we also have  , there are 20 visual inputs).