Abstract

The neural mechanism underlying simple perceptual decision-making in monkeys has recently been conceptualized as an integrative process in which sensory evidence supporting different response options accumulates gradually over time. For example, intraparietal neurons accumulate motion information over time in favour of a specific oculomotor choice. It is unclear, however, whether this mechanism generalizes to more complex decisions based on arbitrary stimulus-response associations. Here, in a task requiring subjects to associate visual stimuli (faces or places) arbitrarily with different actions (eye or hand-pointing movements), we show that activity in effector-specific regions of human posterior parietal cortex reflects the ‘strength’ of the sensory evidence in favour of the preferred response. These regions, which do not respond to the sensory stimuli per se, integrate sensory evidence toward the outcome of an arbitrary decision after learning. We conclude that even arbitrary decisions can be mediated by sensory-motor mechanisms that are entirely triggered by contextual stimulus-response associations.

Human decision-making is thought to involve higher-order, task-independent cognitive processes, which are distinct from task-specific perceptual mechanisms providing evidence in favour of a particular choice, and from motor mechanisms responsible for producing the chosen action1, 2. However, at least in monkeys, simple visual decisions are mediated by neural mechanisms embedded in the sensory-motor apparatus. In the critical experiments, performed in monkeys trained to discriminate the direction of moving dots, oculomotor neurons in the lateral intraparietal area (LIP) increase their response in proportion to the level of sensory evidence in favour of a saccadic choice toward the receptive field3, 4.

It is currently unknown, however, whether this mechanism also generalizes to human decisions, which are instead characterized by arbitrary stimulus-response associations that change over time according to contextual factors. To understand how sensory representations are converted into the behavioural outcome of arbitrary human decisions, we trained human subjects to associate visual stimuli (faces or places) arbitrarily with different actions (saccadic eye or hand-pointing movements). We systematically manipulated the level of noise added to the visual stimuli in order to study decisions as a function of the quantity of sensory evidence available and, especially for stimuli near the psychophysical threshold (i.e. stimuli yielding 50% place responses, at chance accuracy), to evaluate decisions between competing response options in the absence of valid sensory evidence. By analogy with the monkey studies3, 4, if arbitrary human visual decisions rely on similar mechanisms, we predicted that the level of certainty of the decision should be reflected in the cortical regions responsible for selecting the appropriate response. We therefore targeted a specific set of regions in human parietal and frontal cortex that carry motor signals specific for planning or executing either hand-pointing or saccadic eye movements, and asked whether preparatory activity in these regions represents the level of sensory evidence in favour of or against the motor choice for which they are selective. Critically, in our paradigm sensory evidence was provided by visual stimuli (faces or places) that do not normally drive these regions, but that had been arbitrarily associated with a specific response in the context of the decision task.
We found that two pointing-selective regions in medial parietal cortex, which do not respond to face or place stimuli per se, show a pattern of activity that scales with the ‘strength’ of the sensory evidence in favour of a pointing response. A saccade-selective region in the posterior intraparietal sulcus shows a more complex pattern, with a mixture of decision signals and attention signals related to perceptual difficulty.

RESULTS

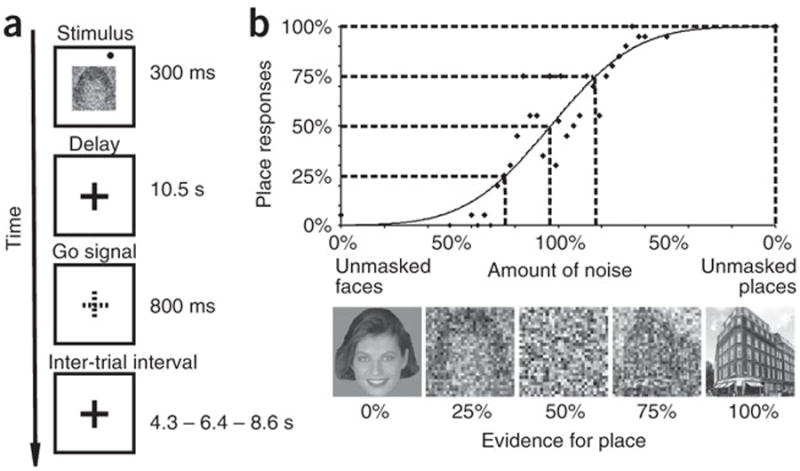

We recorded blood oxygen level dependent (BOLD) signal time-series with functional magnetic resonance imaging (fMRI) during a visual decision task, using an event-related design. In a typical trial (Fig. 1a), either a face or a place image was presented centrally, along with a peripheral visual target. Following a delay, subjects reported whether they had seen a face or a place by performing a saccade or a pointing movement, respectively, toward the remembered location of the visual target. A variable amount of noise was added to the face/place image on each trial in order to manipulate the amount of sensory evidence in favour of a saccadic/pointing decision. To match the level of sensory evidence across subjects, each subject performed a behavioural session in which images were categorized as either faces or places. Responses were fitted with a psychometric function5, 6, which was then interpolated to select, for each subject, stimuli categorized as places 100%, 75%, 50%, 25% and 0% of the time (Fig. 1b).
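The stimulus-selection step can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure of refs 5, 6: it assumes a logistic psychometric function, a hypothetical stimulus axis running from -1 (clear face) through 0 (pure noise) to +1 (clear place), a coarse maximum-likelihood grid search, and 99% and 1% as practical stand-ins for the 100% and 0% levels, which a logistic curve only approaches asymptotically. The behavioural counts are invented for illustration.

```python
import math

def logistic(x, mu, sigma):
    """P('place' response) at stimulus level x (assumed logistic form)."""
    return 1.0 / (1.0 + math.exp(-(x - mu) / sigma))

def fit_logistic(levels, n_place, n_total):
    """Maximum-likelihood (mu, sigma) found by a coarse grid search."""
    best, best_ll = None, -math.inf
    for i in range(-50, 51):            # mu in [-0.5, 0.5], step 0.01
        mu = i / 100.0
        for j in range(1, 101):         # sigma in [0.005, 0.5], step 0.005
            sigma = j / 200.0
            ll = 0.0
            for x, k, n in zip(levels, n_place, n_total):
                # Clip to avoid log(0) for very steep candidate curves.
                p = min(max(logistic(x, mu, sigma), 1e-9), 1.0 - 1e-9)
                ll += k * math.log(p) + (n - k) * math.log(1.0 - p)
            if ll > best_ll:
                best, best_ll = (mu, sigma), ll
    return best

def level_for(p, mu, sigma):
    """Invert the fitted curve: stimulus level giving P('place') = p."""
    return mu + sigma * math.log(p / (1.0 - p))

# Hypothetical behavioural data from one subject (20 trials per level).
levels = [-1.0, -0.5, -0.25, -0.1, 0.0, 0.1, 0.25, 0.5, 1.0]
n_place = [0, 1, 4, 8, 10, 12, 16, 19, 20]
n_total = [20] * len(levels)

mu, sigma = fit_logistic(levels, n_place, n_total)
# 99% and 1% stand in for the 100% and 0% levels (assumption).
targets = {100: 0.99, 75: 0.75, 50: 0.50, 25: 0.25, 0: 0.01}
selected = {pct: level_for(p, mu, sigma) for pct, p in targets.items()}
```

Because these invented data are symmetric around 0, the fitted midpoint `mu` is 0 and the 75% and 25% levels sit symmetrically around it. A real analysis would use a finer optimizer and whichever functional form refs 5, 6 specify.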

Figure 1. Decision task.

a. Paradigm. b. Psychophysics. X-axis: levels of sensory evidence for place categorization and pointing decision, with examples of 5 stimuli carrying different levels of noise that yield a ‘place’ response 100%, 75%, 50%, 25% and 0% of the time in one subject. Y-axis: % of place responses. The solid curve represents the best-fitting psychometric function, while the scatter plot shows the raw data upon which the fit is based.

Action-selective and preparatory activity in parietal and frontal cortex

Because we predicted that sensory evidence in favour of a given choice would be represented by the neural regions responsible for selecting the corresponding response, the main analysis was conducted on a specific set of pointing- and saccade-selective regions of interest (ROIs). These were localized individually in each subject from a separate set of fMRI scans recorded during blocks of memory-guided pointing or saccadic eye movements to visual targets.
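How a localizer can split voxels into pointing- and saccade-selective sets is sketched below. This is a deliberately simplified stand-in, not the study's localizer analysis (whose model and thresholds are given in its Methods): it assumes one summary value per block for each voxel, compares pointing and saccade blocks with a Welch t statistic, and uses a hypothetical cutoff `t_crit`. The voxel ids and values are invented.

```python
import math
from statistics import mean, variance

def welch_t(a, b):
    """Welch t statistic for mean(a) - mean(b)."""
    return (mean(a) - mean(b)) / math.sqrt(variance(a) / len(a) + variance(b) / len(b))

def localize_rois(voxels, t_crit=3.0):
    """Split voxels into pointing- and saccade-selective sets.

    voxels maps a voxel id to (pointing_block_values, saccade_block_values);
    t_crit is a hypothetical, uncorrected threshold.
    """
    pointing, saccade = [], []
    for vid, (point_vals, sacc_vals) in voxels.items():
        t = welch_t(point_vals, sacc_vals)
        if t > t_crit:
            pointing.append(vid)
        elif t < -t_crit:
            saccade.append(vid)
    return sorted(pointing), sorted(saccade)

# Hypothetical block-averaged responses for three voxels.
voxels = {
    "v1": ([1.2, 1.1, 1.3, 1.25], [0.2, 0.3, 0.25, 0.1]),   # prefers pointing
    "v2": ([0.2, 0.1, 0.3, 0.2], [1.0, 1.1, 0.9, 1.05]),    # prefers saccades
    "v3": ([0.5, 0.7, 0.4, 0.6], [0.6, 0.4, 0.7, 0.5]),     # no preference
}
pointing_roi, saccade_roi = localize_rois(voxels)
```

Here `v1` falls in the pointing set, `v2` in the saccade set, and `v3` in neither.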

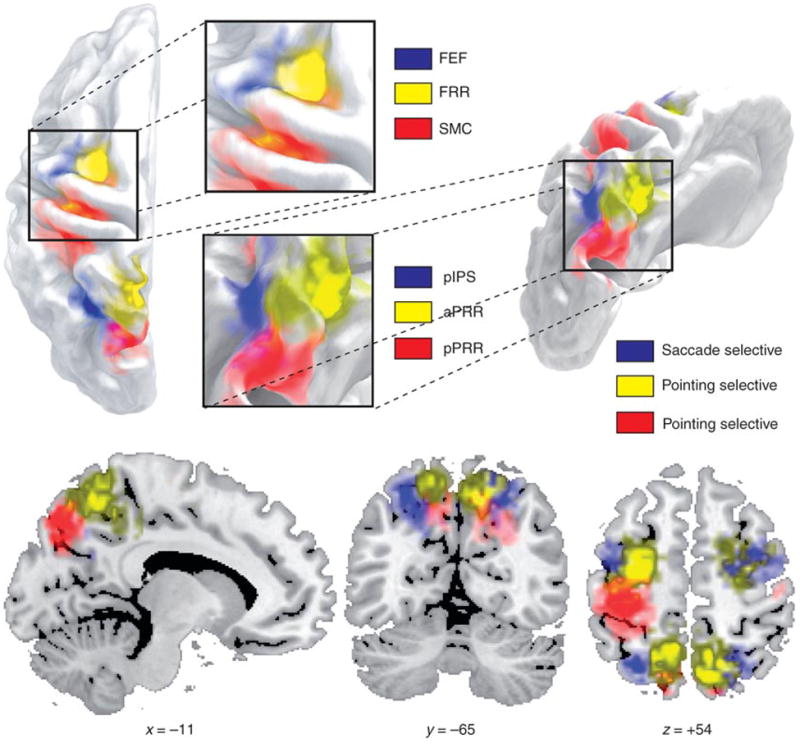

Two pointing-selective ROIs were identified in the precuneus, on the medial surface of the parietal lobe, and were labelled anterior parietal reach region (aPRR) and posterior parietal reach region (pPRR) according to their relative location in the localizer scans. In frontal cortex we identified two additional pointing-selective ROIs: a left central sulcus region spanning sensory-motor cortex (SMC), and a region in the dorsal aspect of the precentral sulcus that was labelled frontal reach region (FRR) (Fig. 3a, 4a, 6a). We also localized two saccade-selective ROIs: one in the medial bank of the posterior intraparietal sulcus (pIPS) (Fig. 5a), and the other at the intersection of the precentral sulcus with the posterior end of the superior frontal sulcus (frontal eye fields, FEF) (Fig. 6d). Based on their location (see Fig. 2 for an overview) and on previous functional imaging mapping studies, the pointing-selective regions in posterior parietal cortex may be homologues of macaque areas MIP and V6A, which are part of the monkey parietal reach region (PRR)7-10, while the saccade-selective regions pIPS and FEF may be homologues of macaque areas LIP and FEF, respectively10-14.

Figure 3. Anterior parietal reach (aPRR) region.

a. Anatomical location of aPRR in the left and right hemispheres. Colour scale indicates the overlap of individual ROIs selected from the localizer scans, displayed on the inflated surface of the PALS atlas43. b. Percent signal change to face and place stimuli during passive stimulation before training on the decision task (passive), and during the decision task (decision). c. BOLD signal time-series for pointing or saccadic eye movements to contralateral or ipsilateral targets. X-axis: time-points of MR scans and onset of stimulus and go signal. Y-axis: percent signal change. d. Time-series for trials in which stimuli provided no useful information (50% evidence) and subjects selected either pointing or eye movements. e. Time-series for trials in which subjects selected a pointing movement to place stimuli with different levels of ‘positive’ sensory evidence (100%, 75%, 50%). f. Time-series for trials in which subjects selected an eye movement to face stimuli at different levels of sensory evidence (100%, 75%, 50%).

Figure 4. Posterior parietal reach (pPRR) region.

a-f. As Figure 3.

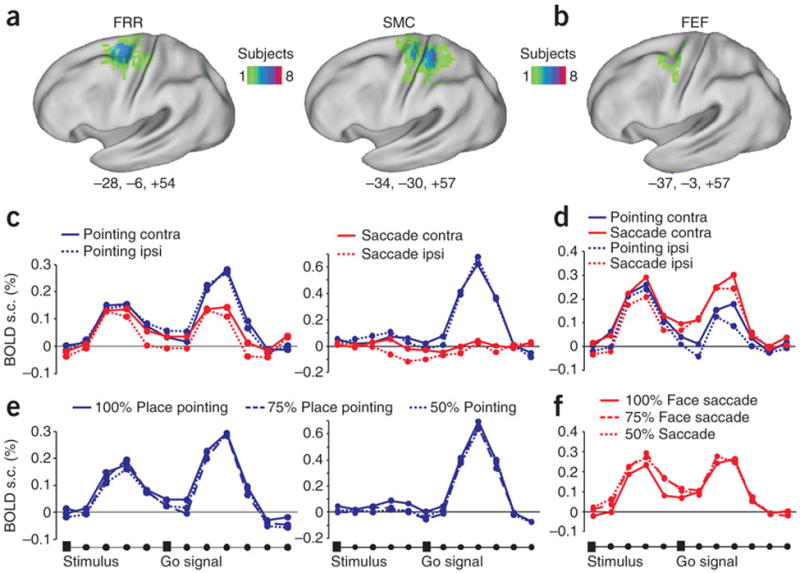

Figure 6. Frontal reach (FRR), sensory-motor cortex (SMC) and frontal eye field (FEF).

a, d. Left-hemisphere anatomical location of the FRR, SMC (pointing) and FEF (saccade) regions; same as Figure 3a. b, e. BOLD signal time-series for pointing or eye movements to contralateral or ipsilateral targets. c. Time-series for trials in which subjects selected a pointing movement to place stimuli at different levels of sensory evidence (100%, 75%, 50%). f. Time-series for trials in which subjects selected an eye movement to face stimuli at different levels of sensory evidence (100%, 75%, 50%).

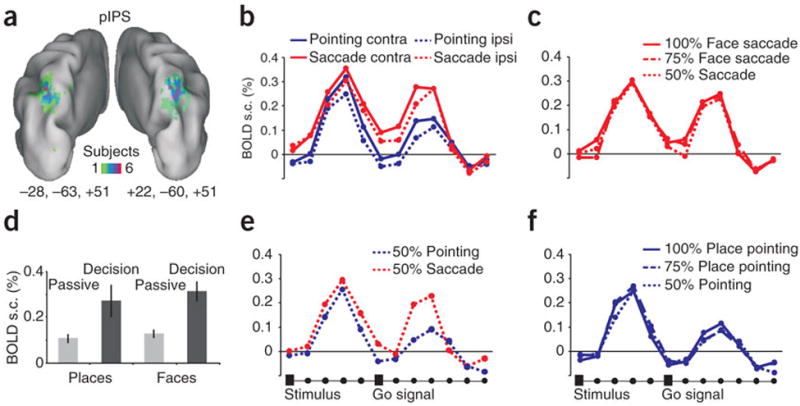

Figure 5. Posterior intra-parietal (pIPS) region.

a-f. Same as Figure 3.

Figure 2. Pointing- and saccade-selective regions in posterior parietal and frontal cortex.

Conjunction of individual ROIs from the localizer scans for the frontal reach region (FRR), frontal eye fields (FEF), sensory-motor cortex (SMC), posterior intraparietal sulcus region (pIPS), anterior parietal reach region (aPRR) and posterior parietal reach region (pPRR), shown on different views of the inflated surface of the PALS atlas43 and on coronal, sagittal and transverse slices of the Colin brain44.

Activity was estimated in each ROI by averaging the BOLD signal time-series for each condition across trials in each individual, and then averaging the time-series across subjects (see Methods). The time-series show a first peak, corresponding to the presentation of the visual stimulus (face or place) and the ensuing decision, and a second peak corresponding to the executed movement. First, we determined whether activity during the first peak was significantly modulated by the planned response. When movement selection was easier, i.e. when the place/face stimulus was clearly visible (100% level of evidence), both aPRR and pPRR responded more strongly when subjects planned a pointing movement than an eye movement (Fig. 3c, 4c) [Response Effector (pointing, saccade) by Time (time-points 1-6) interaction, aPRR: F(5,55)=8.35, P<0.0005; pPRR: F(5,45)=4.37, P=0.002]. These robust pointing-selective intentional signals in posterior parietal cortex contrasted with relatively weak preparatory activity in the SMC region (albeit still significantly stronger for pointing than for saccades) and with a lack of pointing selectivity in the frontal FRR region (Fig. 6b). Saccade-selective signals in pIPS, on the other hand, were weaker and emerged toward the end of the delay period [Response Effector main effect, pIPS: F(1,9)=3.59, P=0.09; post-hoc time-point 5, P=0.001; time-point 6, P=0.004], while no saccade-selective delay activity was found in FEF (Fig. 5c, 6e).
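The two-stage averaging described above (trials within each subject, then subjects) can be sketched as follows. The data layout, a list of per-subject dictionaries mapping a condition name to equal-length trial time-series, is an assumption for illustration, and the values are invented. Averaging within subjects first gives every subject equal weight regardless of how many trials they contributed to a condition.

```python
from statistics import mean

def average_timecourse(series):
    """Point-by-point mean of equal-length time-series."""
    return [mean(points) for points in zip(*series)]

def group_timecourse(subjects, condition):
    """Average trials within each subject, then average across subjects."""
    per_subject = [average_timecourse(s[condition]) for s in subjects]
    return average_timecourse(per_subject)

# Hypothetical percent-signal-change values at three time-points.
subjects = [
    {"pointing": [[0.0, 1.0, 2.0], [0.0, 3.0, 4.0]]},  # subject A: two trials
    {"pointing": [[0.0, 0.0, 1.0]]},                   # subject B: one trial
]
group = group_timecourse(subjects, "pointing")
pooled = average_timecourse(subjects[0]["pointing"] + subjects[1]["pointing"])
```

Here `group` is `[0.0, 1.0, 2.0]`, whereas pooling all three trials (`pooled`) gives roughly `[0.0, 1.33, 2.33]`, letting subject A dominate.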

Accumulation of sensory evidence in parietal cortex

Next, we considered whether the level of activity in these regions co-varied with the level of sensory evidence. On trials in which subjects selected a pointing response, the magnitude of the first peak in both aPRR and pPRR varied with the strength of the sensory evidence (100% > 75% > 50% evidence) (Fig. 3e, 4e) [Evidence by Time interaction, aPRR: F(10,110)=2.71, P=0.005; pPRR: F(10,90)=3.04, P=0.002]. In the pIPS region, the modulation by sensory evidence emerged relatively late in the delay, similarly to what we observed for saccade selectivity [Evidence by Time interaction: F(12,108)=2.1, P=0.02] (Fig. 5e). Interestingly, in all regions this difference was no longer evident in the second peak, related to movement execution. This is consistent with the idea that decisions were made early after stimulus presentation, and that the quality of sensory information does not affect the execution of a movement once a decision threshold is reached.
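The interpretation above, in which activity builds up at a rate set by evidence strength until a decision threshold is reached, can be illustrated with a noise-free bounded accumulator. This is a toy sketch, not a model fitted to these data: the linear mapping from evidence level to drift rate, the bound and the time step are all assumptions, and standard accumulator models add noise, which is what allows 50%-evidence trials to reach a bound at all.

```python
def drift_for(evidence_pct):
    """Assumed linear mapping: 100% -> 1.0, 75% -> 0.5, 50% -> 0.0."""
    return (evidence_pct - 50) / 50

def accumulate(drift, bound=1.0, dt=0.01, max_steps=10_000):
    """Integrate constant evidence until it reaches the decision bound.

    Returns (time_to_bound or None, trace of accumulated values).
    """
    x, trace = 0.0, []
    for step in range(1, max_steps + 1):
        x += drift * dt
        trace.append(x)
        if x >= bound:
            return step * dt, trace
    return None, trace  # bound never reached (no net evidence)

results = {pct: accumulate(drift_for(pct)) for pct in (100, 75, 50)}
# Accumulated value after 0.5 time units (step 50): scales with evidence.
early = {pct: trace[49] for pct, (_, trace) in results.items()}
```

Activity sampled at a fixed early time (`early`) is ordered 100% > 75% > 50%, mirroring the first-peak modulation, while every trajectory that reaches the bound stops at the same level, in line with the absence of an evidence effect at execution.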

To test further the notion of an accumulator mechanism that integrates sensory evidence into a premotor plan, we looked for modulation by ‘negative’ sensory evidence, i.e. evidence favouring the non-preferred response. As shown in Figures 3f, 4f and 5f, at least in aPRR and pPRR, the weakest peak response was observed when 100% face stimuli, strongly linked to a saccadic eye movement, were presented. This qualitative observation was validated by an ANOVA comparing, across time-points, levels of sensory evidence for the preferred effector. For example, in pointing-selective regions we compared responses to 100% place, 75% place, 25% place (75% face), and 0% place (100% face) stimuli. We observed a significant interaction of sensory evidence level by time [aPRR: F(15,165)=3.5, P<0.001; pPRR: F(15,135)=1.9, P=0.02], with significant differences at the peak of the response (time-point 4) (post-hoc t-tests, aPRR: 100% place > 75% place, P=0.0001; > 25% place, P<0.00001; > 0% place, P<0.00001; pPRR: 100% place > 75% place, P=0.01; > 25% place, P=0.04; > 0% place, P=0.0003). In the saccadic region pIPS, we also found an interaction of sensory evidence level by time [F(15,135)=1.8, P=0.03], with significant differences at the end of the delay (time-point 6), similarly to what we found for saccade selectivity and positive sensory evidence [post-hoc t-tests, 100% face > 25% face, P=0.004; > 0% face, P=0.0003]. These findings indicate that all effector-specific regions in posterior parietal cortex are modulated by both positive and negative sensory evidence.

Overall, these findings strongly suggest that arbitrary decisions (e.g. ‘when you see a place image, point to the target’) rely, as do simpler perceptual decisions in monkeys, on an accumulator that integrates sensory evidence toward a decision. To rule out the possibility that this modulation reflected a low-level perceptual effect, such as a stronger response to clearly visible (100% face or place) than to noisy (50% face/place) stimuli, or a differential specificity of pointing or saccade regions for these stimulus categories, we compared sensory responses to face and place stimuli before and after subjects were exposed to the decision task.

To this end, blocks of trials involving the passive presentation of face and place stimuli were run before subjects were ever exposed to the visual decision task. Before training, passive sensory responses to place or face images were generally weak in posterior parietal cortex. During the decision task (i.e. after training), however, responses became strong and selective (Fig. 3b, 4b, 5b). At least in aPRR, this difference cannot be accounted for by variations in arousal or attention, as activation became not only stronger but also more selective for the stimulus category associated with the preferred response [Experiment (passive, decision) by Stimulus (face, place) interaction, F(1,10)=8.1, P=0.01]. In pPRR we observed the same trend, though it was not significant, while in the pIPS region the response to both types of stimuli increased during the decision task. In summary, the response in these regions is not due to low-level sensory factors, but is likely the product of learning the arbitrary visuo-motor association.
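The Experiment by Stimulus interaction above comes from a 2 × 2 within-subject design. In such a design the interaction F(1, n - 1) equals the square of a one-sample t statistic computed on each subject's difference of differences, which the sketch below uses. The input values are hypothetical, and the sketch illustrates the statistic rather than reproducing the study's analysis pipeline.

```python
import math
from statistics import mean, stdev

def interaction_f(passive_face, passive_place, decision_face, decision_place):
    """Experiment x Stimulus interaction for a 2 x 2 within-subject design.

    Each argument holds one value per subject, in the same subject order.
    Returns (F, df_error), where F(1, n - 1) is the squared one-sample t
    of the per-subject difference of differences.
    """
    contrasts = [
        (dec_place - dec_face) - (pas_place - pas_face)
        for pas_face, pas_place, dec_face, dec_place in zip(
            passive_face, passive_place, decision_face, decision_place)
    ]
    n = len(contrasts)
    t = mean(contrasts) / (stdev(contrasts) / math.sqrt(n))
    return t * t, n - 1

# Hypothetical data for four subjects: only the decision-task place
# response differs, so the per-subject contrasts are [1, 2, 3, 2].
f_value, df_error = interaction_f(
    [0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0], [1.0, 2.0, 3.0, 2.0])
```

With these numbers F(1, 3) = 24. A positive mean contrast means that the place-over-face preference grew from passive viewing to the decision task.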

Spatially-selective signals in parietal cortex

Activity in posterior parietal cortex was modulated not only by the strength of the sensory evidence, but also by the direction of movement or the spatial location of the target. Both the pointing regions aPRR and pPRR and the saccadic pIPS region showed stronger responses for targets/movements in contralateral visual space [Visual Field by Time interaction, aPRR: F(5,55)=5.69, P=0.0002; pIPS: F(5,45)=5.21, P=0.0007; Visual Field main effect, pPRR: F(1,9)=12.1, P=0.006] (Fig. 3c, 4c, 5c). Moreover, the lack of a significant Response Effector by Visual Field interaction suggests that spatial signals were independent of motor planning signals.

Signals predictive of the decision outcome in parietal cortex

Lastly, we tested whether these regions contained signals that predicted the outcome of the motor decision independently of the quality of sensory information. Figures 3d, 4d and 5d show the response in aPRR, pPRR and pIPS when the stimuli provided no useful information (50% evidence), as a function of whether subjects selected pointing or eye movements. Although aPRR showed no modulation by motor choice, preparatory signals in both pPRR and pIPS predicted the subject’s decision. In pPRR, the early part of the time-series (including the peak) did not distinguish between pointing and eye movements, consistent with an ongoing competition between the two response options; a differentiation emerged later in the delay (time-point 5), consistent with a relative delay in reaching a decision when the sensory evidence was poor [Response Effector by Time interaction, pPRR: F(5,45)=2.73, P=0.03; post-hoc t-tests on time-point 5, P=0.001]. In pIPS, consistent with the late emergence of its other selective signals (see above), the decision to make a saccade modulated activity after the peak, toward the end of the delay period [Response Effector main effect, F(1,9)=6.35, P=0.03]. In summary, while all regions were sensitive to the effector, the quality of sensory information, and the spatial location of the target, pPRR and pIPS were also modulated by the actual ‘internal’ decision to move.

Accumulation of sensory evidence in frontal cortex

In contrast to the parietal regions, the frontal reach region (FRR) and the saccade-selective FEF were not modulated by the level of sensory evidence during the decision delay [Evidence by Time interaction, FRR: F(10,110)=0.7, P=ns; FEF: F(10,80)=0.8, P=ns] (Fig. 6c, f). Although these regions were classified as pointing- and saccade-selective based on the localizer scans, and showed effector-specific activity during execution (second peak, Fig. 6b, e), they did not show strong selective planning activity and did not predict the outcome of the decision when the stimuli were ambiguous. This constellation of negative findings during the delay, contrasted both with their selectivity during execution and with the pattern in posterior parietal cortex, suggests that in this paradigm the premotor regions FRR and FEF were more involved in late motor selection or execution than in the transformation of sensory information into motor plans. In sensory-motor cortex (SMC), however, we did observe some evidence of accumulation of sensory evidence and of specificity for arm motor planning (Fig. 6b, c). The response during the decision delay was stronger for 100% than for 75% or 50% place stimuli [Sensory evidence main effect, F(2,22)=7.1, P=0.003], and delay activity was stronger for pointing than for saccades [Response Effector main effect, F(1,11)=8.2, P=0.01].

Sensory evidence and decision outside action-selective regions

Although our results strongly suggest that posterior parietal cortex predominantly reflects the accumulation of sensory evidence and the ensuing motor decisions, two important questions remain unanswered by the main ROI analysis. First, are there any other effector-specific regions that also show decision signals? Second, are there any brain regions that accumulate sensory evidence independently of motor plans or response type? In other words, is there evidence for a neural correlate of a general decision-making module? Two recent fMRI studies reported that activity in left superior dorsolateral prefrontal cortex (DLPFC) is compatible with such a mechanism15, 16. We addressed these two questions with a voxel-wise ANOVA over the whole brain, with Response Effector, Sensory Evidence and Time as factors. A Response Effector by Sensory Evidence by Time interaction identifies voxels sensitive to both response selection and sensory evidence. Conversely, a significant Sensory Evidence by Time interaction highlights voxels that are modulated by sensory evidence independently of the specific motor response, and that may thus represent a more general decision-making mechanism.

Figure 7b presents a z-map of the Response Effector by Sensory Evidence by Time interaction. In agreement with the ROI analysis, we found a medial parietal region in the precuneus that completely overlaps with aPRR (see the conjunction map of individual aPRR ROIs in Fig. 7a). Its BOLD time-series shows a stronger response for pointing than for saccadic movements during the decision delay, and a modulation by sensory evidence in the expected direction (100% > 75% > 50% evidence) (Fig. 7c). The only other significant region was located in the left central sulcus, and it was entirely included in the SMC region. These analyses independently confirm the specificity of posterior parietal cortex and sensory-motor cortex for the accumulation of sensory-motor evidence.

Figure 7. Whole brain analysis: sensory evidence and effector selectivity.

a. Colour scale indicates the overlap of individual aPRR ROIs from the localizer scan, mapped onto transverse slices of the Colin brain44. b. Multiple-comparison corrected z-map of the Response Effector by Sensory Evidence by Time interaction. c. BOLD signal time-series from the aPRR region in b, for trials in which subjects selected either a pointing or an eye movement to stimuli at different levels of sensory evidence.

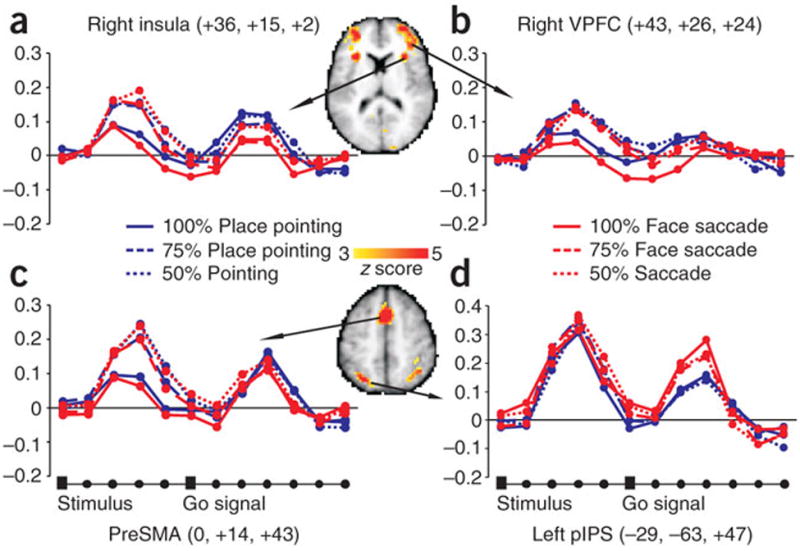

Next, we considered whether any region in the brain showed an effect of sensory evidence irrespective of the response effector. Several anterior regions, including ventral (Left: -35, 43, 10; Right: 29, 39, 19; 38, 46, 4; 43, 26, 24) and lateral prefrontal cortex (Left: -36, -5, 25; Right: 36, 0, 36), bilateral insula (Left: -30, 18, 6; Right: 36, 15, 2), pre-supplementary motor area (pre-SMA: 0, 14, 43), and anterior cingulate (-4, 46, 1), showed a significant main effect of sensory evidence (Fig. 8b, c, d). Their time-series show stronger activation for stimuli that are difficult to discriminate (50% and 75% evidence) than for easy stimuli (100% evidence). Critically, this effect was independent of the response (saccade or pointing). This pattern of results is consistent with a role of these prefrontal regions in perceptual analysis or in the allocation of attentional resources, but not in the accumulation of sensory evidence or decision-making.
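The inference in this paragraph and the next turns on the ordering and sign of responses across evidence levels: an accumulator should be activated most by 100% evidence, a difficulty- or attention-driven region is activated most by the ambiguous 50% and 75% stimuli, and a default-network region is deactivated more deeply for difficult stimuli. A minimal sketch of that distinction follows; the function name, the decision rules and the example values are hypothetical.

```python
def classify_profile(resp):
    """Label a region's evidence profile from its peak responses.

    resp maps evidence level (100, 75, 50) to response amplitude
    (percent signal change; negative values are deactivations).
    """
    if resp[100] > resp[75] > resp[50] and resp[100] > 0:
        return "evidence-like"          # activated most by clear stimuli
    if resp[50] > resp[100] and resp[75] > resp[100]:
        return "difficulty-like"        # activated most by ambiguous stimuli
    if max(resp.values()) < 0 and resp[100] > resp[50]:
        return "difficulty-driven deactivation"  # deeper dip when hard
    return "unclassified"

# Hypothetical peak values for three kinds of region.
parietal_like = classify_profile({100: 0.6, 75: 0.4, 50: 0.2})
prefrontal_like = classify_profile({100: 0.1, 75: 0.3, 50: 0.35})
default_like = classify_profile({100: -0.1, 75: -0.25, 50: -0.3})
```

The positivity check matters: without it, the deactivated profile in `default_like` would also satisfy the 100% > 75% > 50% ordering.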

We also observed a main effect of sensory evidence bilaterally in the IPS (Left: -29, -63, 47; Right: 30, -61, 46). The execution response in this region was saccade-selective (second peak in Fig. 8a) and overlapped with the saccadic pIPS region described above. This part of posterior parietal cortex therefore contains a mixture of perceptual-attentional, sensory evidence, and motor decision signals. Finally, several other brain regions showed a deactivation that was independent of stimulus category and response, and that increased with perceptual difficulty, i.e. was stronger for 50% and 75% than for 100% evidence. These regions included posterior cingulate, ventro-medial prefrontal cortex and angular gyrus, the main nodes of the so-called “default system”, a functional network that is consistently deactivated during goal-directed behaviour compared with rest17, 18. Supplementary Figure 1 shows the location of these regions in relation to the topography of the default network. The negative modulation of these regions as a function of task difficulty is compatible with similar load- or attention-dependent modulations described in the default network19, 20, but hardly compatible with a role in the accumulation of sensory evidence. Interestingly, after atlas coordinate transformation, the superior prefrontal area described by Heekeren et al.16 falls within the borders of the default network and, not surprisingly, shows a signal deactivation (see Supplementary Fig. 1a). In conclusion, in our study no prefrontal region shows a positive modulation related to sensory evidence that is independent of the motor response.

Figure 8. Whole brain analysis: sensory evidence.

a-d. Multiple-comparison corrected z-maps of the main effect of sensory evidence superimposed on selected transverse slices of the Colin brain, with BOLD signal time-series for pointing and eye movements as a function of sensory evidence. L pIPS: left posterior intraparietal sulcus; preSMA: pre-supplementary motor area; VPFC: ventro-medial prefrontal cortex.

DISCUSSION

Sensory evidence and decision signals in parietal cortex

The main finding of this study is that arbitrary visual decisions involving the association of a stimulus with a response do not involve a general decision-making module, but rather depend on specific sensory-motor mechanisms that accumulate sensory information and plan motor actions. Several regions in human posterior parietal cortex specific for planning and executing either pointing or saccadic eye movements respond more strongly to stimuli that provide more sensory evidence toward a motor decision and that are linked to the preferred response in the context of the decision task. The modulation by sensory evidence cannot be explained by a difference in the sensory response to highly visible (100% evidence) vs. noisy (50% evidence) stimuli, because these regions responded only weakly to highly visible stimuli shown in a passive stimulation paradigm. Rather, parietal cortex became strongly responsive to the relevant stimulus category (face or place) in the context of the decision task. While higher arousal or attention may partly account for an increase in the sensory drive of these regions, at least in aPRR the enhancement was specific for the stimulus category (places) associated with the preferred movement (pointing). Higher responsiveness to task-relevant stimuli is consistent with reports that neurons in parietal cortex flexibly code stimulus features instructing a selective task set21-23.

Further support for the hypothesis that parietal cortex functions as an accumulator of sensory information comes from the modulation by both ‘positive’ sensory evidence, i.e. related to the preferred response, and ‘negative’ sensory evidence, i.e. related to the non-preferred response (e.g. saccade responses to face stimuli in a pointing region). In all three parietal regions (aPRR, pPRR, pIPS) the response scaled with evidence for both the preferred and the non-preferred response, e.g. across 100% place, 75% place, 25% place (75% face), and 0% place (100% face) stimuli in the pointing-selective aPRR and pPRR. This control, suggested by one of the reviewers, is important because it argues against a ‘premotor’ interpretation. A highly visible (high-evidence) relevant stimulus leads to a quicker decision, and thus potentially to an earlier and stronger build-up of premotor activity for the preferred movement. Conversely, a noisy (low-evidence) stimulus leads to a delayed decision, and possibly to a delayed and weaker motor build-up. A premotor interpretation, however, predicts no modulation by negative evidence linked to the non-preferred response. In contrast, we find that parietal activity scales as a function of both positive and negative evidence, which is more consistent with a sensory mechanism that weights the available sensory information3, 4.
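The two interpretations make different predictions for an effector-selective region across the four evidence levels, which the toy sketch below spells out. Both prediction functions are illustrative assumptions, not models from the study: the ‘sensory’ account scales with signed evidence for the region's preferred response, while the ‘premotor’ account builds up only when evidence favours the preferred response and is therefore flat across negative evidence.

```python
def signed_evidence(place_pct, preferred):
    """Evidence for the region's preferred response, scaled to [-1, 1]."""
    toward_place = (place_pct - 50) / 50
    return toward_place if preferred == "pointing" else -toward_place

def sensory_prediction(place_pct, preferred):
    """Sensory account: response tracks signed evidence."""
    return signed_evidence(place_pct, preferred)

def premotor_prediction(place_pct, preferred):
    """Premotor account: build-up only for evidence favouring the preferred move."""
    return max(signed_evidence(place_pct, preferred), 0.0)

# Evidence levels used for the pointing-selective regions (% place).
place_levels = [100, 75, 25, 0]
sensory = [sensory_prediction(p, "pointing") for p in place_levels]
premotor = [premotor_prediction(p, "pointing") for p in place_levels]
```

Only the sensory account separates 25% place from 0% place stimuli (-0.5 vs -1.0); the premotor account predicts 0.0 for both, so a graded drop for negative evidence favours the sensory account.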

Parietal cortex contains not only sensory evidence signals but also motor signals related to the decision. This conclusion is based on three observations. First, these parietal regions were selected on the basis of their specificity for motor effectors in a localizer task that combined planning and execution, and during the decision task all three regions showed effector-specific planning activity. A functional subdivision of posterior parietal cortex into action-specific regions (eye, face, arm) is consistent across humans and monkeys. The location of the pointing-selective regions aPRR and pPRR, extending from the precuneus to the superior parietal lobule, matches the localization of pointing-selective activity in other neuroimaging studies7, 8, as well as the relative position of putatively homologous reach-specific regions in the macaque monkey (parietal reach region, PRR; medial intraparietal area, MIP; area V6A)24. Similarly, the location of the saccade-selective region pIPS, in the posterior aspect and medial bank of the intraparietal sulcus, is consistent with the localization of a topographically organized region responsive to eye movements and spatial attention in humans7, 11, 25-27. In agreement with previous work7, this region shows weaker saccade-specific preparatory signals but strong selectivity during execution. It is the putative homologue of the macaque lateral intraparietal area (LIP), which shows similar response properties10, 12.

Second, two of the three regions (pPRR, pIPS) responded more strongly when subjects selected the preferred response under conditions in which the stimulus did not provide any useful information (50% sensory evidence). Activity in these regions therefore predicts a motor decision independently of sensory evidence. This is consistent with recent reports of monkey PRR neurons responding to spontaneous choices of arm movements in the absence of instructing cues28.

Third, these regions were spatially selective for target location or movement direction, i.e. the response during the decision delay was stronger for contralateral than for ipsilateral targets/movements. A spatially selective response may underlie either selection of the target stimulus or planning of movement direction. Interestingly, effector-selective and spatially selective signals were independent and contributed additively to the response in these regions. This is consistent with results from both PRR and LIP in monkeys, in which spatial and effector-specific signals are also largely independent of one another29, 30.

In summary, the combined functional properties of our posterior parietal regions strongly indicate that they contain the right mixture of signals (sensory evidence, motor, spatial) to implement a simple decision-making mechanism based on the continuous transformation of sensory information into motor decisions. Here the association between stimuli and responses was entirely arbitrary and not tuned to the sensory properties of an area. This result generalizes to more complex human decisions a basic mechanism previously identified in macaques during simpler perceptual decisions3, 4. However, the limited spatial resolution of fMRI does not allow us to determine whether these signals converge on the same neuronal population or are distributed over different neuronal types or layers within the same region.

Parietal vs. Frontal cortex

In contrast to the strong decision-related signals in posterior parietal cortex, we found relatively weak evidence for accumulation of sensory evidence in frontal cortex. The pointing-selective frontal reach region (FRR) and the saccade-selective FEF in premotor cortex were in fact modulated neither by sensory evidence level nor by decision outcome. The sensory-motor cortex (SMC) showed weak effects of sensory evidence and motor planning, but no modulation by decision outcome or target location. Furthermore, in a voxel-wise analysis looking for a modulation of sensory evidence by effector, we again found significant modulation corresponding to the pointing-selective aPRR in posterior parietal cortex and to primary sensory-motor cortex, but no effect in premotor or prefrontal cortex. This negative finding must be weighed against single-unit evidence of sensory accumulation in dorsolateral prefrontal cortex31, and against the limited ability of fMRI to resolve individual neuronal populations within the same area.

In contrast, prefrontal regions, including ventral and dorsolateral prefrontal cortex, anterior insula, and anterior cingulate, showed strong modulation by sensory information independently of effector. However, this effect was due to stronger responses to difficult (e.g. 50% evidence) than to easy (e.g. 100% evidence) stimuli, which can be attributed to either perceptual difficulty or attentional load, but not to sensory evidence accumulation.

In summary, within the resolution of the fMRI methods used in this study, we conclude that sensory-motor decision mechanisms appear to be specific to posterior parietal cortex. The modulation of preparatory activity in SMC by sensory evidence is consistent with continuous flow models of decision-making, in which sensory evidence flows continuously from sensory to motor regions of the brain32.

Sensory-motor vs. general decision-making mechanisms

The localization of decision signals in effector-specific regions of the parietal lobe, together with the absence of any other region in the brain, including prefrontal cortex, whose activity increases with the degree of sensory evidence independently of the response, argues against the idea of a general, abstract decision-making mechanism as postulated in traditional psychological models1.

A previous study by Heekeren et al. reported signals in a left superior prefrontal region that scaled with the level of sensory evidence independently of the motor response16. Subjects performed a motion discrimination task on displays containing different levels of coherent motion, similar to the original experiments by Shadlen and Newsome3, 4, and reported their decision with either an eye movement or a key press. The superior prefrontal region responded more strongly to high- than to low-evidence stimuli and showed no specificity for the movement. Given current evidence in non-human primates that perceptual decisions are closely tied to motor plans3, 4, 31, Heekeren and colleagues argued that a more general decision-making module has developed in the human brain to accommodate the broader range and greater flexibility of human decisions2. With the caveat that responses are being compared across studies, we find that the prefrontal region described by Heekeren et al.16 falls within regions of prefrontal cortex that are differentially deactivated by stimuli at different levels of difficulty. The deactivation is deeper for more difficult (low-evidence) than for easier (high-evidence) stimuli, so a direct contrast between these two conditions, as in the Heekeren study, may yield a positive response. The prefrontal region of Heekeren et al. is part of a more distributed network, including lateral parietal cortex, posterior cingulate-precuneus, and ventromedial prefrontal cortex, that shows the same pattern of deactivation as a function of sensory evidence. This network corresponds to the so-called ‘default’ network17, 18, which is commonly deactivated during goal-directed behaviour and more strongly suppressed during difficult perceptual or attentionally demanding tasks19, 20.
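
The sign logic of this argument can be shown with a few numbers: a region can be below baseline in every condition and still produce a positive high-minus-low-evidence contrast, which then looks like an evidence-scaling signal. The values below are hypothetical percent BOLD changes chosen only to illustrate the arithmetic; they are not measurements from this study or from Heekeren et al.

```python
# Hypothetical responses (% BOLD change relative to fixation) in a default-network
# region, keyed by % sensory evidence; illustrative values, not data.
responses = {100: -0.10, 75: -0.25, 50: -0.40}


def high_minus_low(resp, high=100, low=50):
    """Direct subtraction of low-evidence from high-evidence trials."""
    return resp[high] - resp[low]


contrast = high_minus_low(responses)
all_deactivated = all(v < 0 for v in responses.values())
print(f"all conditions below baseline: {all_deactivated}; high - low = {contrast:+.2f}")
# prints: all conditions below baseline: True; high - low = +0.30
```

Comparing each condition against fixation, rather than only against each other, is what separates graded deactivation from graded activation.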

Conclusions

This is the first report to show that human posterior parietal cortex contains a sensory-motor mechanism for arbitrary visual decisions. Activity in effector-specific regions of posterior parietal cortex involved in movement planning was modulated, while decisions were being formed, by the level of sensory evidence, by the position of the target, and, in the absence of informative sensory evidence, by the outcome of the decision. These signals represent the neural correlate of a mechanism, established entirely through the learned experimental association, by which sensory evidence accumulates toward the behavioural outcome of an arbitrary decision. Moreover, in our hands, visual decisions in human subjects did not necessarily involve high-level representations independent of sensory-motor systems2, 16. Rather, decision processes seem to be embodied in the direct transformations between relevant sensory and motor representations, with premotor circuitries querying sensory systems for evidence toward learned behavioural choices. These findings support the emerging idea of “embodied cognition”33, according to which abstract cognitive functions do not depend on specialized modules but are built on simpler sensory-motor processing mechanisms. They are also consistent with the view that “to see and decide is, in effect, to plan a motor response”34.

METHODS

Subjects

Twelve healthy right-handed volunteers (8 females; mean age 24.2 years) participated in the study after providing written informed consent. The experimental protocol was approved by the institutional ethics committee of the University “G. D’Annunzio” of Chieti. Participants completed a behavioural session to select noise levels yielding 100%, 75%, 50%, 25%, and 0% place responses; an fMRI session comprising delayed saccade and pointing tasks, to localize effector-specific regions, and passive-viewing scans, to measure passive sensory responses to face and place stimuli in these same regions; and at least two fMRI sessions to measure signals in these regions during the decision task.

Stimuli

Images were 240 × 240 pixel grey-scale digitized photographs of faces and buildings, selected from a larger set developed by Neal Cohen and used in previous experiments35-37. Visual stimuli were generated with a personal computer running in-house software, back-projected onto a translucent screen by an LCD video projector, and viewed through a mirror attached to the head coil. Stimulus presentation was triggered by, and synchronized with, the acquisition of fMRI images.

Passive view scans

Participants passively viewed alternating 16 s blocks of unmasked faces and places, presented for 300 ms every 500 ms and interleaved with fixation periods lasting 15 s on average. Each subject underwent two 8-minute scans, for a total of 16 blocks per condition.

Localizer scans

Participants kept fixation on a central black cross and kept their right index finger on a key of a button box positioned centrally on their abdomen. Blocks of delayed pointing movements, saccadic eye movements, and fixation alternated every 16 s, for a total of two scans and 16 blocks per condition. Each block started with a written instruction (FIX, EYE, HAND) and contained 4 trials.
Each trial began with the onset of a peripheral target (a filled white circle, 0.9 deg in diameter) presented for 300 ms at one of 8 radial locations (π/8, 3π/8, 5π/8, 7π/8, 9π/8, 11π/8, 13π/8, or 15π/8 rad) at 4 deg eccentricity. The target indicated the location of the impending movement. After a variable delay (1.5, 2.5, 3.5, or 4.5 s), the fixation point changed colour (go signal), and participants moved either their right index finger or their eyes to the memorized target location and then immediately returned to the starting position. In the saccade task, subjects were instructed to prepare a saccadic eye movement toward the memorized target location and, after the go signal, to look at that location and then quickly look back at the fixation point. In the pointing task, subjects were instructed to prepare a pointing movement with their right index finger toward the memorized target location and to point toward it after the go signal. The right index finger rested on a key of a button box positioned on the middle of the abdomen and immobilized with Velcro straps attached to the scanner bed. At the go signal, subjects released the key and pointed as quickly as possible in the direction of the memorized target location by rotating the wrist, without moving the shoulder or the arm, and then returned to press the starting key.
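
The target geometry can be checked with a few lines of code. The sketch below assumes angles are measured counterclockwise from the rightward horizontal meridian (the text does not state the convention) and uses the hypothetical helper name `target_positions`. Because every angle is an odd multiple of π/8, no target falls on a cardinal meridian, so each target lies unambiguously in the left or right visual field, which is what a contralateral/ipsilateral comparison requires.

```python
import math

ECCENTRICITY_DEG = 4.0  # target eccentricity from the Methods


def target_positions(ecc=ECCENTRICITY_DEG):
    """Return (angle_rad, x_deg, y_deg, hemifield) for the 8 radial targets.

    Angles are odd multiples of pi/8, measured counterclockwise from the
    rightward horizontal meridian (an assumed convention).
    """
    targets = []
    for k in range(8):
        theta = (2 * k + 1) * math.pi / 8
        x, y = ecc * math.cos(theta), ecc * math.sin(theta)
        hemifield = "right" if x > 0 else "left"  # x is never 0 for these angles
        targets.append((theta, x, y, hemifield))
    return targets


for theta, x, y, side in target_positions():
    print(f"{math.degrees(theta):6.1f} deg  x={x:+.2f}  y={y:+.2f}  {side}")
```

Four targets fall in each hemifield, and the closest any target comes to the vertical meridian is 4 · cos(67.5°), about 1.5 deg.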

Psychophysical calibration

The session was performed inside the MR scanner to ensure stable stimulation conditions. Face/place images were presented for 300 ms, followed by an inter-stimulus interval of 2 s, and subjects responded by pressing one key for faces and another for places. On each trial, a variable amount of noise (0% to 100%), in the form of 8-pixel squares of white noise, was added to the image. Thirty-five equally spaced noise levels were tested, forming a continuous range from unmasked faces (100% evidence for faces) through pure noise (50% evidence for either faces or places) to unmasked places (100% evidence for places). Twenty trials were presented for each level in a randomized sequence. A probit analysis of the binomial responses, based on maximum-likelihood estimation5, 6, was used to estimate the threshold and slope of the psychometric function describing the probability of a place response as a function of the evidence level in the image. Five noise levels (yielding 100%, 75%, 50%, 25%, and 0% place responses) were then selected for each individual by interpolation of his or her psychometric function.
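
The calibration fit can be sketched in a few lines. This is a minimal illustration, not the authors' software: it fits P(place | x) = Φ(a + b·x) by maximum likelihood using Fisher scoring (one standard way to maximize a probit likelihood), on a hypothetical signed evidence axis x running from −1 (unmasked face) through 0 (pure noise) to +1 (unmasked place). The 25%, 50%, and 75% levels are obtained by inverting the fitted curve; the 0% and 100% conditions are taken to be the unmasked images, since a probit curve never reaches 0 or 1. The simulated observer in the usage lines is hypothetical.

```python
from statistics import NormalDist

N = NormalDist()  # standard normal: N.cdf, N.pdf, N.inv_cdf


def fit_probit(x, k, n, iters=100, tol=1e-10):
    """Maximum-likelihood probit fit of P(place | x) = Phi(a + b * x) by Fisher scoring.

    x: signed evidence per level (hypothetical scale, -1 face .. +1 place)
    k: number of "place" responses at each level; n: number of trials at each level.
    Returns (threshold, sigma): the 50% point -a/b and the spread 1/b (inverse slope).
    """
    a, b = 0.0, 1.0
    eps = 1e-12  # keeps p away from 0 and 1
    for _ in range(iters):
        u0 = u1 = i00 = i01 = i11 = 0.0  # score vector and expected information matrix
        for xi, ki, ni in zip(x, k, n):
            eta = a + b * xi
            p = min(max(N.cdf(eta), eps), 1.0 - eps)
            dens = N.pdf(eta)
            var = p * (1.0 - p)
            r = (ki - ni * p) * dens / var  # d(log-likelihood)/d(eta)
            w = ni * dens * dens / var      # expected information for this level
            u0 += r
            u1 += r * xi
            i00 += w
            i01 += w * xi
            i11 += w * xi * xi
        det = i00 * i11 - i01 * i01
        da = (i11 * u0 - i01 * u1) / det  # solve I @ delta = U for the 2 x 2 case
        db = (i00 * u1 - i01 * u0) / det
        a, b = a + da, b + db
        if abs(da) + abs(db) < tol:
            break
    return -a / b, 1.0 / b


def select_levels(threshold, sigma, targets=(0.25, 0.50, 0.75)):
    """Evidence levels at which the fitted curve predicts each target proportion of place responses."""
    return {p: threshold + sigma * N.inv_cdf(p) for p in targets}


# Usage with a simulated observer (hypothetical threshold 0.1, sigma 0.25):
# 35 equally spaced levels with 20 trials each, as in the calibration session.
levels = [-1 + 2 * i / 34 for i in range(35)]
trials = [20] * 35
place = [round(20 * N.cdf((xi - 0.1) / 0.25)) for xi in levels]
threshold, sigma = fit_probit(levels, place, trials)
print(f"threshold {threshold:.3f}, sigma {sigma:.3f}")
print(select_levels(threshold, sigma))
```

Inverting the fitted curve directly is equivalent to interpolating it at the target proportions; the threshold is the 50% point by construction.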

Decision task

Each trial began with a 300 ms presentation of a face/place image at the centre of the screen, together with a peripheral visual target (same size and locations as in the localizer scans). The image was masked by an amount of noise individually selected during psychophysical calibration. After a delay of 10.5 s, the fixation cross changed colour, and subjects reported whether they had seen a face or a place by performing an eye or a pointing movement, respectively, toward the remembered position of the visual target. The next trial started after a variable intertrial interval (4.3, 6.4, or 8.6 s, corresponding to 2, 3, and 4 MR time-points, respectively). Pointing and saccadic responses were executed in the same way as in the localizer scans. Subjects underwent a 1-hour psychophysical training session to learn the stimulus-response association; eye movements were recorded with an eye tracker during this session but not during fMRI. Pointing responses were monitored by the release of a key at movement onset and, during the training session, also with a video camera. In total, 480 trials were presented in 20 runs of 24 trials each. Based on the psychophysical session, we presented 120 stimuli at 100% evidence (60 faces, 60 places), 240 stimuli at 75% evidence (120 faces, 120 places), and 120 stimuli at 50% evidence.
Given this theoretical distribution of stimuli and the individually selected noise levels, subjects' own decisions produced the following distribution of trials per stimulus/response category (averaged across subjects):

- 100% place stimulus, pointing response: 59.1
- 100% face stimulus, saccade response: 59.6
- 75% place stimulus, pointing response: 82.7
- 75% place stimulus, saccade response: 36.8
- 75% face stimulus, saccade response: 90.6
- 75% face stimulus, pointing response: 28.9
- 50% face/place stimulus, pointing response: 62.2
- 50% face/place stimulus, saccade response: 57.3

The final distribution of subjects' decisions therefore agreed well with the theoretical distribution.
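
As a quick arithmetic check, the category counts can be converted back into the proportion of place (pointing) decisions at each stimulus level and compared with the calibration targets of 100%, 75%, 50%, 25%, and 0% place responses. The sketch simply reuses the reported numbers; the dictionary layout and the function name `place_proportion` are illustrative, and mapping 75% face stimuli to the 25%-place level is our reading of the design. Note that the listed categories sum to 477.2 of the 480 presented trials.

```python
# Mean trial counts per (stimulus, response) category, as reported in the text.
# Pointing reports a "place" decision; a saccade reports a "face" decision.
counts = {
    ("100% place", "pointing"): 59.1,
    ("100% face", "saccade"): 59.6,
    ("75% place", "pointing"): 82.7,
    ("75% place", "saccade"): 36.8,
    ("75% face", "saccade"): 90.6,
    ("75% face", "pointing"): 28.9,
    ("50% face/place", "pointing"): 62.2,
    ("50% face/place", "saccade"): 57.3,
}

# Calibration target: proportion of place responses each stimulus level was chosen to produce.
targets = {"100% place": 1.00, "75% place": 0.75, "50% face/place": 0.50,
           "75% face": 0.25, "100% face": 0.00}


def place_proportion(counts, stimulus):
    """Proportion of pointing ("place") decisions among all trials of one stimulus class."""
    total = sum(n for (stim, _), n in counts.items() if stim == stimulus)
    return counts.get((stimulus, "pointing"), 0.0) / total


for stimulus, target in targets.items():
    observed = place_proportion(counts, stimulus)
    print(f"{stimulus:>15}: observed {observed:.3f}, target {target:.2f}")
```

The observed proportions (about 0.69, 0.52, and 0.24 at the intermediate levels) lie close to the 0.75, 0.50, and 0.25 targets.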

fMRI scan acquisition and data analysis

A Siemens Vision 1.5 T scanner and an asymmetric spin-echo echoplanar sequence were used to measure BOLD contrast over the entire brain [repetition time (TR), 2.16 s; echo time (TE), 37 ms; flip angle, 90°; 16 contiguous 8-mm-thick axial slices; 3.75 × 3.75 mm in-plane resolution]. Anatomical images were acquired using a sagittal magnetization-prepared rapid acquisition gradient echo (MP-RAGE) sequence (TR, 97 ms; TE, 4 ms; flip angle, 12°; voxel size, 1 × 1 mm). Hemodynamic responses in the localizer and passive view scans were modelled by convolving an idealized representation of the neural waveform for each condition with a gamma function, with a distinct regressor for each condition. ROIs were extracted from single-subject z maps of contrasts38. Hemodynamic responses in the decision experiment were estimated at the voxel level, without any assumption about their shape, using the general linear model39. Random-effects analyses were performed by entering the individual time-points of each estimated hemodynamic response into regional ANOVAs. Functional data were realigned within and across scans to correct for head motion using six-parameter rigid-body realignment. A whole-brain normalization factor was applied to equate signal intensity across scans. Differences in the acquisition time of each slice within a frame were compensated for by sinc interpolation. For each subject, an atlas transformation40 to an atlas-representative target image41 was computed using a 12-parameter general affine transformation. Functional data were interpolated to 3 mm cubic voxels in atlas space and analyzed voxel by voxel according to the general linear model. Trials from the decision task were modelled by a set of 12 delta functions covering 12 consecutive time-points of 2.16 s each (25.92 s in total), aligned with the onset of the face/place image. The model also included, for each scan, an intercept, a linear trend, and a temporal high-pass filter with a cut-off frequency of 0.009 Hz.
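
The shape-free (finite impulse response) model described above can be sketched as a design matrix. This is an illustration under stated assumptions, not the study's analysis software: trial onsets are given in whole frames, and the 0.009 Hz high-pass filter is implemented as cosine (DCT) nuisance regressors with frequencies below the cut-off, which is one common choice. Because each 12-point window is longer than a trial, windows of consecutive trials overlap and are summed; the jittered intertrial intervals are what keep the FIR columns separable, so the onsets in the usage line are hypothetical and deliberately irregular.

```python
import math

TR = 2.16          # s, repetition time from the Methods
N_FIR = 12         # delta functions (time-points) per trial, from the Methods
HP_CUTOFF = 0.009  # Hz, high-pass cut-off from the Methods


def fir_design(onsets, n_frames, tr=TR, n_fir=N_FIR, cutoff=HP_CUTOFF):
    """Return an FIR design matrix for one scan as a list of rows.

    onsets: trial onsets in frames (image onsets assumed to coincide with frame starts).
    Columns: n_fir delta regressors, intercept, linear trend, and cosine regressors
    with frequency below `cutoff` (an assumed DCT implementation of the high-pass filter).
    """
    # Cosine m has frequency m / (2 * n_frames * tr) Hz; keep those below the cut-off.
    n_dct = 0
    while (n_dct + 1) / (2 * n_frames * tr) < cutoff:
        n_dct += 1
    rows = []
    for t in range(n_frames):
        fir = [0.0] * n_fir
        for onset in onsets:
            lag = t - onset
            if 0 <= lag < n_fir:
                fir[lag] += 1.0  # windows of overlapping trials add linearly
        trend = (t - (n_frames - 1) / 2) / n_frames
        dct = [math.cos(math.pi * m * (t + 0.5) / n_frames) for m in range(1, n_dct + 1)]
        rows.append(fir + [1.0, trend] + dct)
    return rows


# Hypothetical 150-frame scan with jittered onsets (7 to 9 frames apart).
X = fir_design(onsets=[0, 7, 15, 24, 31, 40, 47, 55, 64, 71, 80, 88, 95, 104, 112, 120, 127, 136],
               n_frames=150)
print(len(X), "frames x", len(X[0]), "columns")
# prints: 150 frames x 19 columns
```

Fitting this matrix by least squares gives one amplitude per time-point per condition, i.e. an estimated hemodynamic response with no assumed shape.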
Pointing- (saccade-) specific regions were identified in each individual from the localizer scans as the intersection of two statistical parametric maps, resulting from the one-tailed contrasts of pointing (saccades) versus saccades (pointing) and of pointing (saccades) versus fixation, each thresholded at z > 2 (p < 0.05, uncorrected). An in-house clustering algorithm was used to extract ROIs from these maps: ROIs were 10 mm diameter spheres centered on map peaks with z > 2, and spheres within 16 mm of each other were consolidated into a single ROI. Critically, these ROIs were then used for an independent time-series analysis of the decision experiment. For each ROI, regional time-series for each condition of the decision task were estimated over 12 MR time-points by averaging across all voxels in the ROI. Group analyses, on either the regional data or single voxels, were conducted with random-effects ANOVAs in which the experimental factors, including Sensory evidence, Response, and Visual field, were crossed with the Time factor. Separate ANOVAs were conducted on the first 6 and the second 6 time-points. Group-average ANOVA F maps were transformed to z maps, corrected for multiple comparisons, and adjusted for correlations across time-points using previously published methods42. An automated algorithm that searched for local maxima and minima identified the coordinates of responses in the z maps and localized them according to a stereotactic atlas40.
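
The ROI construction can be sketched as follows. This is not the authors' in-house clustering algorithm: peak detection is not shown (peaks are passed in as coordinates), merging peaks whose centres lie within 16 mm by single linkage with a union-find structure is our assumption about how spheres were "consolidated", and all function names are hypothetical.

```python
import math

Z_THRESH = 2.0       # per-map threshold from the Methods
SPHERE_RADIUS = 5.0  # mm, i.e. 10 mm diameter spheres
MERGE_DIST = 16.0    # mm, peaks this close are consolidated into one ROI


def conjunction(z_vs_other_effector, z_vs_fixation, thresh=Z_THRESH):
    """Voxels (x, y, z in mm) exceeding thresh in both one-tailed contrast maps."""
    return {v for v, z in z_vs_other_effector.items()
            if z > thresh and z_vs_fixation.get(v, 0.0) > thresh}


def consolidate_peaks(peaks, merge_dist=MERGE_DIST):
    """Group peaks lying within merge_dist of one another (single linkage via union-find)."""
    parent = list(range(len(peaks)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    for i in range(len(peaks)):
        for j in range(i + 1, len(peaks)):
            if math.dist(peaks[i], peaks[j]) <= merge_dist:
                parent[find(i)] = find(j)
    groups = {}
    for i, peak in enumerate(peaks):
        groups.setdefault(find(i), []).append(peak)
    return list(groups.values())


def roi_from_group(group, grid, radius=SPHERE_RADIUS):
    """Union of spheres around each peak in a group, over voxel centres in grid (mm)."""
    return {v for v in grid if any(math.dist(v, p) <= radius for p in group)}


# Hypothetical peaks (mm): the first two are 10 mm apart and merge; the third stays separate.
for group in consolidate_peaks([(0, 0, 0), (10, 0, 0), (40, 0, 0)]):
    print(group)
```

Single linkage means a chain of peaks each within 16 mm of the next becomes one ROI, even if its ends are farther apart; a stricter rule would compare every pair.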

The sensory response to face and place stimuli was assessed within pointing-selective ROIs by contrasting the magnitude of the BOLD response during the passive sensory stimulation scans. Conjunctions of individual ROIs were projected onto the PALS atlas43, and group-average z maps were projected onto the Colin brain atlas44.
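
For the block-design scans (localizer and passive view), the Methods describe convolving an idealized neural waveform with a gamma function. A minimal sketch of such a regressor follows. The delayed gamma form is a common choice, but the parameter values (n = 3, tau = 1.25 s, delay = 2.5 s), the 0.1 s sampling step, and the block schedule in the usage lines are illustrative assumptions rather than values taken from this study.

```python
import math

TR = 2.16  # s, repetition time from the Methods


def gamma_hrf(t, n=3, tau=1.25, delay=2.5):
    """Unit-area delayed gamma impulse response; n, tau (s) and delay (s) are illustrative."""
    s = t - delay
    if s <= 0:
        return 0.0
    return (s / tau) ** (n - 1) * math.exp(-s / tau) / (tau * math.factorial(n - 1))


def block_regressor(blocks, n_frames, tr=TR, dt=0.1, kernel_s=30.0):
    """Boxcar of (onset_s, duration_s) blocks convolved with gamma_hrf, sampled once per frame."""
    n_fine = int(round(n_frames * tr / dt))
    box = [0.0] * n_fine
    for onset, duration in blocks:
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + duration) / dt)))
        for i in range(start, stop):
            box[i] = 1.0
    kernel = [gamma_hrf(j * dt) * dt for j in range(int(round(kernel_s / dt)))]
    conv = [sum(box[i - j] * kernel[j] for j in range(min(i + 1, len(kernel))))
            for i in range(n_fine)]
    return [conv[int(round(f * tr / dt))] for f in range(n_frames)]


# Hypothetical passive-view schedule: 16 s face and place blocks separated by 15 s of fixation.
face = block_regressor([(10.0, 16.0), (72.0, 16.0)], n_frames=60)
place = block_regressor([(41.0, 16.0), (103.0, 16.0)], n_frames=60)
```

With one such regressor per condition in the general linear model, a face versus place comparison reduces to a contrast between the two fitted amplitudes.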

Supplementary Material

Acknowledgments

We thank Eleonora Leombardi and Sara Di Marco for help with data collection, and Christopher Lewis, Carlo Sestieri, and Avi Snyder for technical support with data analysis and software. This work was supported by the EU FP6-MEXC-CT-2004-006783 project (acronym IBSEN), by National Institute of Mental Health Grant R01MH71920-06 and National Institutes of Health Grant NS48013 to M.C., by Italian Ministry of University and Research Grant PRIN 2005119851_004 to G.G., and by the 3rd PhD Internationalization Program of the Italian Ministry of University and Research.

Footnotes

Author Contributions: A.T., G.G., and M.C. were involved in experimental design; A.T. was responsible for data acquisition and data analysis; A.T., G.G., G.L.R., and M.C. were involved in data interpretation and manuscript writing.

References

- 1. Tversky A, Kahneman D. The framing of decisions and the psychology of choice. Science. 1981;211:453–8. doi: 10.1126/science.7455683.

- 2. Heekeren HR, Marrett S, Ungerleider LG. The neural systems that mediate human perceptual decision making. Nat Rev Neurosci. 2008;9:467–79. doi: 10.1038/nrn2374.

- 3. Shadlen MN, Newsome WT. Motion perception: Seeing and deciding. Proceedings of the National Academy of Sciences of the United States of America. 1996;93:628–633. doi: 10.1073/pnas.93.2.628.

- 4. Shadlen MN, Newsome WT. Neural basis of a perceptual decision in the parietal cortex (area LIP) of the rhesus monkey. J Neurophysiol. 2001;86:1916–36. doi: 10.1152/jn.2001.86.4.1916.

- 5. Finney DJ. Probit Analysis. Cambridge University Press; 1971.

- 6. McKee SP, Klein SA, Teller DY. Statistical properties of forced-choice psychometric functions: implications of probit analysis. Percept Psychophys. 1985;37:286–98. doi: 10.3758/bf03211350.

- 7. Astafiev SV, et al. Functional organization of human intraparietal and frontal cortex for attending, looking, and pointing. J Neurosci. 2003;23:4689–99. doi: 10.1523/JNEUROSCI.23-11-04689.2003.

- 8. Connolly JD, Andersen RA, Goodale MA. FMRI evidence for a 'parietal reach region' in the human brain. Exp Brain Res. 2003. doi: 10.1007/s00221-003-1587-1.

- 9. Galletti C, Fattori P, Kutz D, Gamberini M. Brain location and visual topography of cortical area V6A in the macaque monkey. European Journal of Neuroscience. 1999;11:575–582. doi: 10.1046/j.1460-9568.1999.00467.x.

- 10. Snyder LH, Batista AP, Andersen RA. Coding of intention in the posterior parietal cortex. Nature. 1997;386:167–170. doi: 10.1038/386167a0.

- 11. Sereno MI, Pitzalis S, Martinez A. Mapping of contralateral space in retinotopic coordinates by a parietal cortical area in humans. Science. 2001;294:1350–4. doi: 10.1126/science.1063695.

- 12. Colby CL, Duhamel JR, Goldberg ME. Visual, presaccadic, and cognitive activation of single neurons in monkey lateral intraparietal area. Journal of Neurophysiology. 1996;76:2841–2852. doi: 10.1152/jn.1996.76.5.2841.

- 13. Bruce CJ, Goldberg ME. Primate frontal eye fields. I. Single neurons discharging before saccades. Journal of Neurophysiology. 1985;53:603–635. doi: 10.1152/jn.1985.53.3.603.

- 14. Paus T. Location and function of the human frontal eye-field: a selective review. Neuropsychologia. 1996;34:475–483. doi: 10.1016/0028-3932(95)00134-4.

- 15. Heekeren HR, Marrett S, Bandettini PA, Ungerleider LG. A general mechanism for perceptual decision-making in the human brain. Nature. 2004;431:859–62. doi: 10.1038/nature02966.

- 16. Heekeren HR, Marrett S, Ruff DA, Bandettini PA, Ungerleider LG. Involvement of human left dorsolateral prefrontal cortex in perceptual decision making is independent of response modality. Proc Natl Acad Sci U S A. 2006;103:10023–8. doi: 10.1073/pnas.0603949103.

- 17. Shulman GL, et al. Common blood flow changes across visual tasks: II. Decreases in cerebral cortex. Journal of Cognitive Neuroscience. 1997;9:648–663. doi: 10.1162/jocn.1997.9.5.648.

- 18. Raichle ME, et al. Inaugural Article: A default mode of brain function. Proc Natl Acad Sci U S A. 2001;98:676–682. doi: 10.1073/pnas.98.2.676.

- 19. McKiernan KA, Kaufman JN, Kucera-Thompson J, Binder JR. A parametric manipulation of factors affecting task-induced deactivation in functional neuroimaging. Journal of Cognitive Neuroscience. 2003;15:394–408. doi: 10.1162/089892903321593117.

- 20. Weissman DH, Roberts KC, Visscher KM, Woldorff MG. The neural bases of momentary lapses in attention. Nature Neuroscience. 2006;9:971–978. doi: 10.1038/nn1727.

- 21. Toth LJ, Assad JA. Dynamic coding of behaviourally relevant stimuli in parietal cortex. Nature. 2002;415:165–8. doi: 10.1038/415165a.

- 22. Freedman DJ, Assad JA. Experience-dependent representation of visual categories in parietal cortex. Nature. 2006;443:85–8. doi: 10.1038/nature05078.

- 23. Stoet G, Snyder LH. Single neurons in posterior parietal cortex of monkeys encode cognitive set. Neuron. 2004;42:1003–12. doi: 10.1016/j.neuron.2004.06.003.

- 24. Snyder LH, Batista AP, Andersen RA. Intention-related activity in the posterior parietal cortex: a review. Vision Res. 2000;40:1433–41. doi: 10.1016/s0042-6989(00)00052-3.

- 25. Silver MA, Ress D, Heeger DJ. Topographic maps of visual spatial attention in human parietal cortex. J Neurophysiol. 2005;94:1358–71. doi: 10.1152/jn.01316.2004.

- 26. Schluppeck D, Glimcher P, Heeger DJ. Topographic organization for delayed saccades in human posterior parietal cortex. J Neurophysiol. 2005;94:1372–84. doi: 10.1152/jn.01290.2004.

- 27. Jack AI, et al. Changing human visual field organization from early visual to extra-occipital cortex. PLoS ONE. 2007;2:e452. doi: 10.1371/journal.pone.0000452.

- 28. Cui H, Andersen RA. Posterior parietal cortex encodes autonomously selected motor plans. Neuron. 2007;56:552–9. doi: 10.1016/j.neuron.2007.09.031.

- 29. Calton JL, Dickinson AR, Snyder LH. Non-spatial, motor-specific activation in posterior parietal cortex. Nat Neurosci. 2002;5:580–8. doi: 10.1038/nn0602-862.

- 30. Dickinson AR, Calton JL, Snyder LH. Nonspatial saccade-specific activation in area LIP of monkey parietal cortex. J Neurophysiol. 2003;90:2460–4. doi: 10.1152/jn.00788.2002.

- 31. Kim J-N, Shadlen MN. Neural correlates of a decision in the dorsolateral prefrontal cortex of the macaque. Nature Neuroscience. 1999;2:176–185. doi: 10.1038/5739.

- 32. Coles MGH, Gratton G, Bashore TR, Eriksen CW, Donchin E. A psychophysiological investigation of the continuous flow model of human information processing. Journal of Experimental Psychology: Human Perception and Performance. 1985;11:529–553. doi: 10.1037//0096-1523.11.5.529.

- 33. Wilson M. Six views of embodied cognition. Psychon Bull Rev. 2002;9:625–36. doi: 10.3758/bf03196322.

- 34. Rorie AE, Newsome WT. A general mechanism for decision-making in the human brain? Trends Cogn Sci. 2005;9:41–3. doi: 10.1016/j.tics.2004.12.007.

- 35. Kelley WM, et al. Hemispheric specialization in human dorsal frontal cortex and medial temporal lobe for verbal and nonverbal memory encoding. Neuron. 1998;20:927–936. doi: 10.1016/s0896-6273(00)80474-2.

- 36. Corbetta M, et al. A functional MRI study of preparatory signals for spatial location and objects. Neuropsychologia. 2005;43:2041–56. doi: 10.1016/j.neuropsychologia.2005.03.020.

- 37. Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392:598–601. doi: 10.1038/33402.

- 38. Boynton GM, Engel SA, Glover GH, Heeger DJ. Linear systems analysis of functional magnetic resonance imaging in human V1. Journal of Neuroscience. 1996;16:4207–4221. doi: 10.1523/JNEUROSCI.16-13-04207.1996.

- 39. Ollinger JM, Shulman GL, Corbetta M. Separating Processes within a Trial in Event-Related Functional MRI I. The Method. Neuroimage. 2001;13:210–217. doi: 10.1006/nimg.2000.0710.

- 40. Talairach J, Tournoux P. Co-Planar Stereotaxic Atlas of the Human Brain. Thieme Medical Publishers, Inc.; New York: 1988.

- 41. Snyder AZ. In: Quantification of Brain Function Using PET. Myer R, Cunningham VJ, Bailey DL, Jones T, editors. Academic Press; San Diego, CA: 1995. pp. 131–137.

- 42. Ollinger JM, McAvoy MP. A homogeneity correction for post-hoc ANOVAs in fMRI. Neuroimage. 2000;11:S604.

- 43. Van Essen DC. A Population-Average, Landmark- and Surface-based (PALS) atlas of human cerebral cortex. Neuroimage. 2005;28:635–62. doi: 10.1016/j.neuroimage.2005.06.058.

- 44. Van Essen DC, et al. Mapping visual cortex in monkeys and humans using surface-based atlases. Vision Res. 2001;41:1359–78. doi: 10.1016/s0042-6989(01)00045-1.

- 45. Fox MD, et al. The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proc Natl Acad Sci U S A. 2005;102:9673–8. doi: 10.1073/pnas.0504136102.
