Abstract

Recently, attempts have been made to disentangle the neural underpinnings of preparatory processes related to reward and attention. Functional magnetic resonance imaging (fMRI) research has shown that the anticipation of reward and of attentional demands invokes neural activity patterns with large-scale overlap, along with some differences and interactions. Due to the limited temporal resolution of fMRI, however, the temporal dynamics of these processes remain unclear. Here, we report an event-related potential (ERP) study in which cued attentional demands and reward prospect were combined in a factorial design. Results showed that reward prediction dominated early cue processing, as well as the early and later parts of the contingent negative variation (CNV), a slow-wave ERP component that has been associated with task-preparation processes. Moreover, these reward-related electrophysiological effects correlated across participants with response-time speeding on reward-prospect trials. In contrast, cued attentional demands affected only the later part of the CNV, with the highest amplitudes following cues predicting high-difficulty potential-reward targets, suggesting maximal task preparation when the task requires it and entails reward prospect. Consequently, we suggest that task-preparation processes triggered by reward can arise earlier, and potentially more directly, than strategic top-down aspects of preparation based on attentional demands.

Keywords: Reward, attention, event-related potentials, contingent negative variation, visual attention

1. Introduction

Everyday human behavior is guided by internal states and objectives that interact with external factors. Central among these external influences are reward and reward prediction. The dopaminergic midbrain is known to play a critical role in these reward-related processes and to be central to reinforcement learning (e.g., Glimcher, 2011; Wise and Rompre, 1989). Stimuli predicting the possibility of obtaining a reward have been shown to invoke neuronal activity similar to that triggered by the reward itself, in both animal (e.g., Mirenowicz and Schultz, 1994; Schultz et al., 1997) and human research (e.g., D’Ardenne et al., 2008; Knutson and Cooper, 2005; Knutson et al., 2005; Schott et al., 2008; Zaghloul et al., 2009). This process is believed to simultaneously energize cognitive and motor processes that may help to obtain the reward (Salamone and Correa, 2012). Along these lines, the anticipation of reward has been shown to enhance a wide range of cognitive operations, including memory and novelty processing (e.g., Adcock et al., 2006; Krebs et al., 2009; Wittmann et al., 2005, 2008), perceptual discrimination (e.g., Engelmann and Pessoa, 2007; Engelmann et al., 2009), cognitive flexibility (e.g., Aarts et al., 2010) and conflict resolution (e.g., Padmala and Pessoa, 2011; Stürmer et al., 2011).

Effects of reward and attention have largely been considered distinct phenomena and have therefore been investigated mainly in separate fields. However, it has been pointed out that most studies cannot distinguish direct reward effects from effects of voluntary attentional enhancement (Maunsell, 2004). Previous studies have shown that attention and reward clearly interact: visual attention is more efficient when conditions or stimuli are motivationally significant (Engelmann and Pessoa, 2007), and rewarded stimulus aspects draw more attention (Krebs et al., 2010, 2013). These studies, however, have generally not been able to differentiate between more direct low-level influences of reward and indirect strategic attentional effects, although some recent studies have shown that reward associations can have a direct impact on early stages of visual, cognitive, and oculomotor processes, without the mediation of strategic attention (Della Libera and Chelazzi, 2006; Hickey and van Zoest, 2012; Hickey et al., 2010). These early-stage effects are thought to rely on the direct association between task-relevant stimulus features and reward, and hence do not reflect preparatory or strategic effects that require a cue-target sequence. Baines et al. (2011), in turn, investigated the dynamics of spatial attention and motivation in an event-related potential (ERP) study, but likewise focused on target processing. They showed that motivation and attention had early, independent effects on the visual processing of the target stimulus, with interactions arising only later.

Whereas the above studies tried to dissociate influences of reward and attention largely during target discrimination, possible dissociations of attention and reward prospect during task preparation have received little attention so far. Yet effective preparatory brain mechanisms can be crucial for successful task performance. Moreover, it has been suggested that the dopaminergic system plays an important role in improving task performance mostly in pro-active/preparatory contexts (Braver et al., 2007). Importantly, the dopaminergic response typically related to reward anticipation is usually assumed to be elicited only by extrinsic factors (but see Salamone and Correa, 2012). A recent paper by our group (Boehler et al., 2011), however, challenged this idea. In this fMRI study, participants performed a visual discrimination task in which a cue informed them of the task demands (high or low) of the upcoming trial. Despite the absence of reward or any other immediate extrinsic motivator, the dopaminergic midbrain showed enhanced activity for high compared to low task demands. Thus, the anticipation of attentionally demanding tasks, independent of any extrinsic factor, can invoke neural processes that resemble the anticipation of reward. This suggests that the dopaminergic midbrain is more generally engaged in flexible resource allocation to meet situational requirements, and that it can be recruited in different ways (see also Nieoullon, 2002; Salamone et al., 2005).

To further investigate the overlap and distinctiveness of the neural networks related to reward-dependent and reward-independent recruitment of processing resources, Krebs et al. (2012) systematically crossed reward prediction and attentional-demand prediction in a subsequent fMRI study. Both factors activated selective but also similar neural networks, with mostly additive effects but also interactions in some areas, including the dopaminergic midbrain, which showed maximal activity in response to cues that predicted difficult potential-reward trials. These findings were taken to support the view that the dopaminergic midbrain is part of a broader network involved in the control of neuro-cognitive processing resources to optimize behavior when it is particularly worthwhile. Importantly, that task required attentional orienting and task preparation immediately in response to the cue, which sets it apart from typical neuroeconomic experiments that emphasize evaluative processes and conceptualize task demands as costs that are discounted from the possible reward (Croxson et al., 2009; McGuire and Botvinick, 2010). There are, however, important questions that cannot be addressed with fMRI because of the sluggishness of the hemodynamic response. Most importantly, fMRI cannot separate processing stages related to cue evaluation from those related to task preparation, nor can it resolve potential differences in the temporal dynamics of these processes for the anticipation of reward versus task demands. The present study was designed to address these questions of timing by using ERPs in an adapted version of the paradigm of Krebs et al. (2012).

Our central aim was to systematically investigate how predictions of attentional demands and of reward availability are registered over time and lead to adjustments in preparatory activity preceding target onset. After the initial registration of the relevant cue features, we expected differential effects on neural markers of task preparation and attentional orienting. An ERP component that is particularly interesting in this regard is the contingent negative variation (CNV), a slow negative wave with a central scalp distribution that is typically observed between a warning stimulus (cue) and an imperative stimulus (target). The CNV has been shown to reflect anticipation of, or orienting to, the upcoming stimulus as well as response preparation, and has been related to preparatory attention, motivation and response readiness (Grent-’t-Jong and Woldorff, 2007; Tecce, 1972; van Boxtel and Brunia, 1994; Walter et al., 1964). We expected that cue information about reward availability and task demands could lead to dissociations in the interpretation of the cue and in subsequent task preparation, not only in amplitude but also in time. Specifically, the two manipulations could begin to influence brain processes at different points in time, with reward effects potentially arising earlier, since reward is known to be a salient stimulus feature that can even modify early visual processes directly (e.g., Hickey et al., 2010) and a reward-predicting cue can become an inherently motivating stimulus (Bromberg-Martin and Hikosaka, 2009; Mirenowicz and Schultz, 1994).

2. Materials and Methods

2.1. Participants

Twenty-two healthy right-handed participants with normal color vision participated in the present study (three male; mean age 20, range 18–23). The study was approved by the local ethics board and written informed consent was obtained from all participants according to the Declaration of Helsinki prior to participation. Participants were compensated at 15€ per hour plus an additional performance-based bonus between 4 and 8€.

2.2. Stimuli and procedure

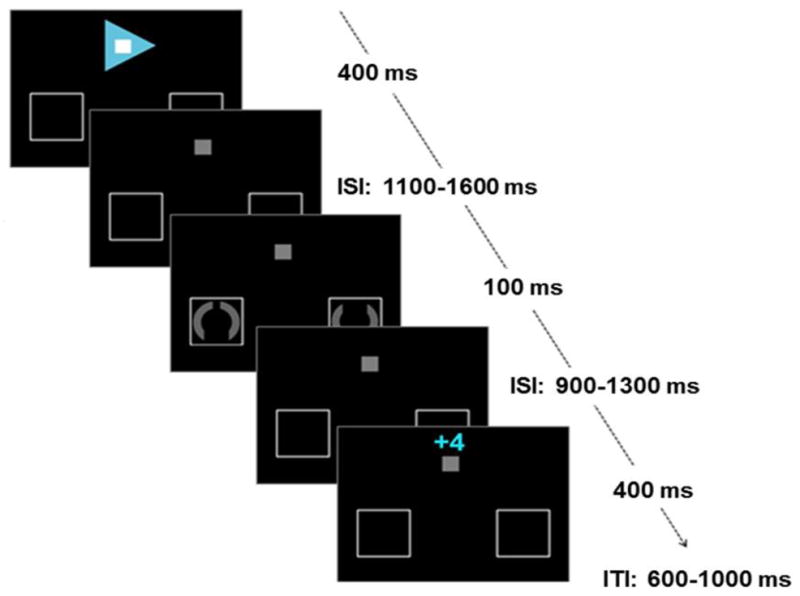

The present experiment was based on an earlier fMRI study (Krebs et al., 2012), using a very similar version of the paradigm with some adjustments for electroencephalography (EEG) methodology (Fig. 1). A central grey fixation square (0.5°) and two placeholder frames, one in each visual field (6° lateral and 6° below fixation), were continuously present on a black background throughout the experiment. Each trial started with a centrally presented arrow cue (400 ms duration) that predicted the target location (left or right) as well as reward availability and task difficulty. The arrow color, green or blue, indicated whether a fast correct answer would be rewarded or not. In addition, a white or black square in the center of the arrow specified the difficulty (high or low) of the upcoming task. The assignment of colors to reward (green and blue) and task difficulty (white and black) was counterbalanced across participants. To enable links to earlier studies in this attentional-cueing field (e.g., Grent-’t-Jong and Woldorff, 2007), catch-cue trials were also included, in which the cue was a grey upward-pointing arrow enclosing a small dark grey square, indicating that no target would follow; these trials were, however, not used in the present analysis.
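The cue coding described above amounts to a lookup from physical cue features to trial meaning. The sketch below is a hypothetical illustration: the text states only that the color assignments were counterbalanced across participants, so cycling participants through the four possible mappings (participant index modulo 4) and the function names are assumptions made for this example.

```python
from itertools import product

# Possible color assignments (assumed scheme; only counterbalancing is reported).
REWARD_COLOR_ORDERS = [("green", "blue"), ("blue", "green")]        # (reward, no-reward)
DIFFICULTY_COLOR_ORDERS = [("white", "black"), ("black", "white")]  # (high, low)
MAPPINGS = list(product(REWARD_COLOR_ORDERS, DIFFICULTY_COLOR_ORDERS))  # 4 combinations


def cue_mapping(participant_index):
    """Hypothetical assignment: cycle participants through the four color mappings."""
    (reward_col, no_reward_col), (high_col, low_col) = MAPPINGS[participant_index % len(MAPPINGS)]
    return {
        "arrow": {reward_col: "reward", no_reward_col: "no-reward"},
        "square": {high_col: "high", low_col: "low"},
    }


def decode_cue(mapping, direction, arrow_color, square_color):
    """Translate a cue (arrow direction, arrow color, square color) into its meaning."""
    return direction, mapping["arrow"][arrow_color], mapping["square"][square_color]


mapping = cue_mapping(1)
print(decode_cue(mapping, "left", "green", "black"))  # ('left', 'reward', 'high')
```

Keeping the mapping as data rather than hard-coding colors makes the counterbalancing explicit and lets the same decoding function serve every participant.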

Figure 1.

Paradigm. In active-attention trials, cues indicated the target location (direction of arrow), availability of reward (color of arrow) and task difficulty (color of fixation square). After a variable ISI, a target was presented and participants indicated whether the top or bottom gap was larger. Subsequent feedback indicated the amount of money won or lost (4 eurocents for reward trials or 0 eurocents for no-reward trials).

After a variable inter-stimulus interval (ISI) of 1100 to 1600 ms, target stimuli were presented in the placeholder frames for 100 ms, whereas catch cues were followed at that time point by another cue that started a new trial. Targets were grey circles (radius 1°) interrupted by two opposing gaps. Participants were asked to respond only to the covertly attended stimulus at the cued location, while ignoring the stimulus in the opposite hemifield, by indicating which gap was larger (index versus middle finger of the right hand for a larger gap at the bottom versus the top, respectively). On low-difficulty trials, one gap was clearly larger than the other (gap angles of 90° versus 20°). On high-difficulty trials, the two gaps were more similar (40° versus 20°) and thus harder to discriminate. A response time-out was adjusted after every high-difficulty trial to maintain a constant ratio of 75% correct versus 25% error or missed trials, thereby ensuring that the task was similarly difficult for all participants. This variable time-out determined only the visual feedback during task performance; it was not applied when analyzing the behavioral data or the cue- and target-related ERPs.
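One standard way to hold the proportion of successful trials at a fixed level is a weighted up-down staircase; the text does not specify which procedure was actually used, so the following is a minimal sketch under that assumption. The starting value, step size and limits are illustrative. After a fast correct response the time-out is shortened by one step, and after an error, miss or too-slow response it is lengthened by three steps, so the expected change is zero when 75% of trials succeed (0.75 × step = 0.25 × 3 × step).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TimeoutStaircase:
    """Weighted up-down staircase for the response time-out (illustrative sketch).

    Starting value, step size and limits are assumptions; only the 75% target and
    the update after every high-difficulty trial are taken from the text.
    """
    timeout_ms: float = 700.0
    down_step_ms: float = 10.0   # shortening after a fast correct response
    target_p: float = 0.75       # desired proportion of fast correct responses
    min_ms: float = 200.0
    max_ms: float = 1500.0

    @property
    def up_step_ms(self) -> float:
        # Equilibrium when target_p * down_step == (1 - target_p) * up_step.
        return self.down_step_ms * self.target_p / (1.0 - self.target_p)

    def update(self, correct: bool, rt_ms: Optional[float]) -> bool:
        """Score one high-difficulty trial and adapt the time-out.

        Returns True for a fast correct response (which determines the feedback).
        A missed response is passed as rt_ms=None.
        """
        success = correct and rt_ms is not None and rt_ms <= self.timeout_ms
        if success:
            self.timeout_ms -= self.down_step_ms
        else:
            self.timeout_ms += self.up_step_ms
        # Keep the time-out within the assumed bounds.
        self.timeout_ms = min(max(self.timeout_ms, self.min_ms), self.max_ms)
        return success


stairs = TimeoutStaircase()
stairs.update(correct=True, rt_ms=520.0)    # success: 700 -> 690 ms
stairs.update(correct=False, rt_ms=480.0)   # error: 690 -> 720 ms
```

In this sketch only high-difficulty trials call `update()`, matching the text; how the same time-out was applied to low-difficulty trials is left open here.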

A feedback display was presented after a variable ISI of 900 to 1300 ms. In potential-reward trials, four cents could be won or lost: ‘+4’ was displayed above the standard fixation square after correct and fast responses, and ‘−4’ after incorrect or too-slow responses. To preserve the similarity of trial structure, feedback in no-reward trials consisted of ‘+0’ or ‘−0’ for correct and incorrect/missed trials, respectively. The feedback stimulus was displayed for 400 ms, followed by an inter-trial interval of 600–1000 ms. Additionally, the total amount gained was presented after each experimental run.
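The feedback rule can be stated compactly. The sketch below assumes that a response counts as successful only if it is both correct and within the current time-out, as the text states for reward trials, and applies the same criterion to the ‘+0’/‘−0’ display on no-reward trials; the ASCII hyphen stands in for the minus sign, and the function names are hypothetical.

```python
def feedback_text(reward_trial, correct, fast_enough):
    """Feedback shown above the fixation square after each active-attention trial."""
    amount = 4 if reward_trial else 0              # eurocents at stake
    sign = "+" if (correct and fast_enough) else "-"
    return f"{sign}{amount}"


def run_total_cents(trials):
    """Net amount for one run; each trial is (reward_trial, correct, fast_enough)."""
    total = 0
    for reward_trial, correct, fast_enough in trials:
        if reward_trial:
            total += 4 if (correct and fast_enough) else -4
    return total


print(feedback_text(True, True, True))     # +4
print(feedback_text(False, False, False))  # -0
```

Using the same two-part display on both trial types keeps the visual structure identical, so that only the amount differs between conditions.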

Participants started with a short practice run to become acquainted with the task. After practice, they performed three runs of 200 trials each. In every run, the factors reward and task difficulty were crossed and presented in randomized order, resulting in 20 trials per condition (high-difficulty reward, low-difficulty reward, high-difficulty no-reward, low-difficulty no-reward) per target side (left vs. right), plus 40 catch trials. Across runs, this yielded a total of 60 trials per active-attention condition and target side (120 when combining left- and rightward cues), and 120 catch trials. Participants sat in a shielded room and were monitored with a camera. They were asked to sit in a relaxed position, limit blinking, and keep their gaze on the fixation square throughout the task. In each run, five 20-s breaks were inserted in which participants could move and rest their eyes.
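The trial counts follow directly from the design: 4 conditions × 2 target sides × 20 trials plus 40 catch trials gives 200 trials per run, and three runs give 60 trials per condition and side, 120 per condition collapsed over side, and 120 catch trials. The sketch below builds such runs by simple shuffling; this is an assumption for illustration, since the text does not state whether any sequence constraints were applied.

```python
import random
from collections import Counter
from itertools import product
from typing import Optional

# Active-attention cells: reward x difficulty x target side.
CELLS = list(product(("reward", "no-reward"), ("high", "low"), ("left", "right")))


def make_run(n_per_cell: int = 20, n_catch: int = 40, seed: Optional[int] = None) -> list:
    """One run: n_per_cell trials per cell plus catch trials, in shuffled order."""
    trials = [cell for cell in CELLS for _ in range(n_per_cell)]
    trials += [("catch",)] * n_catch
    random.Random(seed).shuffle(trials)
    return trials


runs = [make_run(seed=i) for i in range(3)]
counts = Counter(trial for run in runs for trial in run)
# Collapse over target side (first two tuple elements), excluding catch trials.
collapsed = Counter(trial[:2] for trial in counts.elements() if trial != ("catch",))

print(len(runs[0]))                        # 200
print(counts[("reward", "high", "left")])  # 60
print(collapsed[("reward", "high")])       # 120
print(counts[("catch",)])                  # 120
```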

2.3. EEG acquisition and preprocessing

EEG activity was recorded with a Biosemi ActiveTwo measurement system (BioSemi, Amsterdam, Netherlands) using 64 Ag-AgCl scalp electrodes mounted in an elastic cap and arranged according to the standard international 10–20 system. Four external electrodes were additionally attached: one on each mastoid, used for offline re-referencing to the average of these two channels, and a bilateral electro-oculogram (EOG) pair next to the outer canthi of the eyes, referenced to each other to measure horizontal eye movements. Signals were amplified and digitized at a sampling rate of 1024 Hz.
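Offline re-referencing to the averaged mastoids and derivation of the bipolar horizontal EOG amount to simple channel arithmetic. The NumPy sketch below assumes a channels × samples array in microvolts; the channel labels "M1", "M2", "LO1" and "LO2" are hypothetical placeholders (BioSemi recordings label external channels differently), and this is not the EEGLAB routine the authors used.

```python
import numpy as np


def rereference_to_mastoids(data, ch_names, left="M1", right="M2"):
    """Subtract the mean of the two mastoid channels from every channel.

    data: array of shape (n_channels, n_samples), in microvolts.
    """
    reference = (data[ch_names.index(left)] + data[ch_names.index(right)]) / 2.0
    return data - reference  # broadcasts the (n_samples,) reference over channels


def bipolar_heog(data, ch_names, left="LO1", right="LO2"):
    """Horizontal EOG as the difference between the two outer-canthus electrodes."""
    return data[ch_names.index(left)] - data[ch_names.index(right)]
```

After re-referencing, the two mastoid channels are mirror images of each other, which is a quick sanity check on the channel indices.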

EEG data were processed using EEGLAB (Delorme and Makeig, 2004) and the ERPLAB plugin (http://erpinfo.org/erplab), running on MATLAB. Blink artifacts were corrected by independent component analysis (ICA). Epochs were time-locked to the onset of the relevant stimulus (cue, target or feedback) and included a 200 ms pre-stimulus period, which was used for baseline correction. Epoch length varied with stimulus type: the post-stimulus segment equaled the duration of the stimulus presentation plus the shortest subsequent ISI. Epochs with horizontal eye movements, detected by a step function (threshold 60 μV, moving window of 400 ms) applied to the bipolar EOG channel, were rejected. We also rejected epochs with drifts exceeding ±200 μV at any scalp electrode. For cue-related data, this led to the rejection of 6% of epochs on average, with very similar rejection rates across cueing conditions (5.6% to 7.2%). For targets, 4.5% of epochs were excluded on average, with minimal differences between conditions (4.2% to 4.8%). On average, 5.6% of the correct-feedback epochs were rejected, again with similar percentages for all conditions (5.1% to 6.2%). Next, the epochs were averaged by condition for each participant, and these individual averages were combined into grand-average ERPs across participants.
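Baseline correction and the two rejection criteria can be sketched as follows. The step-function test follows the general logic of a moving-window step detector, comparing the mean of the first and second half of each 400 ms window against the 60 μV threshold. The 10 ms window step, the function names and the exact implementation are assumptions, not the ERPLAB code used in the study.

```python
import numpy as np

FS = 1024          # sampling rate in Hz (from the text)
BASELINE_MS = 200  # pre-stimulus interval; epochs start at -200 ms


def ms_to_samples(ms, fs=FS):
    return int(round(ms * fs / 1000.0))


def baseline_correct(epoch, baseline_ms=BASELINE_MS):
    """Subtract each channel's mean over the pre-stimulus interval (channels x samples)."""
    n_base = ms_to_samples(baseline_ms)
    return epoch - epoch[:, :n_base].mean(axis=1, keepdims=True)


def has_step(heog, threshold_uv=60.0, window_ms=400.0, step_ms=10.0):
    """Flag a step-like HEOG change: |mean(second half) - mean(first half)| > threshold."""
    win = ms_to_samples(window_ms)
    half = win // 2
    step = max(1, ms_to_samples(step_ms))  # window step size is an assumption
    for start in range(0, len(heog) - win + 1, step):
        first = heog[start:start + half].mean()
        second = heog[start + half:start + win].mean()
        if abs(second - first) > threshold_uv:
            return True
    return False


def reject_epoch(epoch, heog, abs_limit_uv=200.0):
    """Reject if the HEOG shows a step or any scalp sample exceeds +/-abs_limit_uv."""
    return has_step(heog) or bool(np.any(np.abs(epoch) > abs_limit_uv))
```

A step detector of this kind is insensitive to slow drifts, which is why the separate ±200 μV amplitude criterion is still needed.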

2.4. EEG analyses

Although we were mostly interested in cue-phase activity, ERP responses to the target and feedback stimuli were also analyzed to investigate possible effects of preparation on target and feedback processing. Analyses of the cue data included all trials, whereas analyses of the target and feedback stimuli were limited to trials with correct responses. Error responses and negative feedback would also be of interest, but we did not analyze these data, mainly because there were too few error trials for a reliable ERP analysis, in particular when separating trials with incorrect responses from trials with correct but too-late responses.

Mean amplitudes were computed within component-specific time windows and averaged across the electrodes of a region of interest (ROI). Time windows and ROIs were defined from ERP waveforms and topographic maps collapsed across conditions, so that channel and time-window selection was orthogonal to the conditions of interest. Based on this approach, the cue-related P1 was quantified at posterior electrodes PO7, PO8, PO3, PO4, O1 and O2 between 70 and 130 ms. This component was followed by a negative wave (N1) over the same posterior area from 130 to 180 ms. A P2 with a central positive deflection at electrode sites C1, C2, Cz and CPz was detected from 200 to 250 ms, followed from 250 to 300 ms by a negative anterior deflection in the N2 range (electrodes FC1, FC2, F1, F2, FCz and Fz). A clear P3 component was observed at occipito-parietal electrode sites (P1, P2, PO3, PO4, Pz and POz) and quantified between 300 and 500 ms. The CNV, a late negative-going wave for active-attention cues, was detected within a central ROI (C1, C2 and Cz) between 700 and 1500 ms (the earliest possible target onset). Consistent with earlier studies (Broyd et al., 2012; Connor and Lang, 1969; Goldstein et al., 2006; Jonkman et al., 2003), this long time window was divided into two parts, 700–1100 ms and 1100–1500 ms, yielding an early and a late CNV component.
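The mean-amplitude measure reduces to averaging an ERP over the ROI channels and the samples of a time window. In the sketch below, the sampling rate (1024 Hz) and the −200 ms epoch start come from the Methods, and three of the windows and ROIs listed above are included; the dictionary layout and function names are illustrative assumptions.

```python
import numpy as np

FS = 1024              # Hz
EPOCH_START_MS = -200  # epochs include a 200 ms pre-stimulus baseline

# (ROI channels, (start ms, end ms)) for a few cue-locked measures from the text.
MEASURES = {
    "cue_P2": (["C1", "C2", "Cz", "CPz"], (200, 250)),
    "CNV_early": (["C1", "C2", "Cz"], (700, 1100)),
    "CNV_late": (["C1", "C2", "Cz"], (1100, 1500)),
}


def window_to_slice(t0_ms, t1_ms, fs=FS, start_ms=EPOCH_START_MS):
    """Convert a latency window (ms re. stimulus onset) to a sample slice, end inclusive."""
    i0 = int(round((t0_ms - start_ms) * fs / 1000.0))
    i1 = int(round((t1_ms - start_ms) * fs / 1000.0))
    return slice(i0, i1 + 1)


def mean_amplitude(erp, ch_names, measure):
    """Mean of a (channels x samples) ERP over the ROI channels and time window."""
    channels, (t0, t1) = MEASURES[measure]
    rows = [ch_names.index(ch) for ch in channels]
    return float(erp[rows][:, window_to_slice(t0, t1)].mean())
```

Because windows and ROIs are fixed in advance (from condition-collapsed data), the same function can be applied unchanged to every participant and condition.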

For targets, the P1 was quantified over lateral posterior sites (PO7, PO8, PO3, PO4, O1 and O2) between 70 and 130 ms, followed by a negative N1 between 150 and 200 ms over the same sites. From 180 to 230 ms after target onset, a P2 component was present that was maximal at central electrode sites C1, C2, Cz and CPz. The N2 amplitude was analyzed at frontal electrode sites (F1, F2, FC1, FC2, FCz and Fz) between 250 and 300 ms. A late target P3 was visible from 300 to 600 ms over parietal regions (P1, P2, Pz and POz). A feedback-related component was observed over centro-parietal electrodes (CP1, CP2, CPz) starting around 200 ms after feedback onset, and was quantified between 200 and 400 ms.

Amplitudes were examined using a repeated-measures analysis of variance (rANOVA) with the factors reward (reward, no-reward) and task difficulty (high, low). To avoid over-correction, which could produce false negatives, results are generally reported without strict Bonferroni correction for the multiple ERP components considered. However, when interpreting the rather exploratory early and mid-range potentials (P1, N1, P2, N2 and P3) in the cue and target phases, we also refer to Bonferroni-corrected significance, i.e., a threshold of p<0.05/5 = 0.01.
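Because both factors have only two levels, each F(1, 21) of the 2 × 2 rANOVA equals the squared one-sample t statistic of a per-participant contrast score: the difference between the two marginal means for each main effect, and the difference of differences for the interaction. The pure-Python sketch below illustrates this equivalence and the Bonferroni threshold used for the five exploratory components. It is not the statistics software used by the authors, and the cell keys are hypothetical.

```python
from statistics import mean, variance


def contrast_f(scores):
    """F(1, n-1) for one within-subject contrast: F = t**2 = n * mean**2 / variance."""
    return len(scores) * mean(scores) ** 2 / variance(scores)


def rm_anova_2x2(cells):
    """cells: one dict per participant mapping (reward, difficulty) -> mean amplitude."""
    rew, diff, inter = [], [], []
    for c in cells:
        rh, rl = c[("reward", "high")], c[("reward", "low")]
        nh, nl = c[("no-reward", "high")], c[("no-reward", "low")]
        rew.append((rh + rl) / 2 - (nh + nl) / 2)    # reward main effect
        diff.append((rh + nh) / 2 - (rl + nl) / 2)   # difficulty main effect
        inter.append((rh - rl) - (nh - nl))          # difference of differences
    return {
        "df": (1, len(cells) - 1),
        "reward": contrast_f(rew),
        "difficulty": contrast_f(diff),
        "interaction": contrast_f(inter),
    }


# Bonferroni threshold for five exploratory components.
BONFERRONI_ALPHA = 0.05 / 5
```

With the 22 participants of the present study, the returned degrees of freedom would be (1, 21), matching the F statistics reported in the Results. With only one numerator degree of freedom per effect, no sphericity correction is required.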

3. Results

3.1. Behavioral results

Response times (RTs) were shorter in trials with potential reward (M = 514.96 ms, SD = 50.69 ms) than in those without (M = 526.28 ms, SD = 54.43 ms), as indicated by a main effect of reward (F(1,21)=22.81, p<0.001; see table 1). There was also a significant main effect of task difficulty (F(1,21)=109.36, p<0.001), with faster responses on low-difficulty trials (M = 491.29 ms, SD = 50.13 ms) than on high-difficulty trials (M = 549.95 ms, SD = 57.47 ms). The interaction of reward and task difficulty approached significance (F(1,21)=4.02, p=0.058), reflecting a larger RT difference between high-difficulty and low-difficulty trials on reward trials than on no-reward trials.

Table 1.

Behavioral results. Response times in milliseconds (ms) and percentage correct responses in all four main conditions with corresponding standard deviations in brackets.

| Condition | Measure | High-difficulty | Low-difficulty |
|---|---|---|---|
| Reward | RT (ms) | 546 (58) | 484 (46) |
| Reward | Correct (%) | 83 (6) | 97 (3) |
| No-reward | RT (ms) | 554 (58) | 499 (54) |
| No-reward | Correct (%) | 79 (6) | 94 (4) |

Analyses of the accuracy data yielded a main effect of reward (F(1,21)=14.03, p=0.001), with more correct responses on reward trials (M = 90%, SD = 4%) than on no-reward trials (M = 87%, SD = 4%). Unsurprisingly, accuracy was higher when the discrimination task was easy (M = 95%, SD = 3%) than when it was difficult (M = 81%, SD = 5%; F(1,21)=234.38, p<0.001). No significant interaction of reward and task difficulty was found for accuracy (F(1,21)=1.689, p=0.27). All these results are in line with the behavioral effects in the previous fMRI version of this task (Krebs et al., 2012).
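Because each participant contributed data to all four conditions, the marginal means reported in the text can be approximately recovered from the cell means of Table 1 by equal-weight averaging; small discrepancies from the reported values (e.g., 515.0 vs. 514.96 ms) reflect rounding in the table. The sketch below also computes the high-minus-low RT difference within each reward condition, which underlies the trend-level interaction.

```python
# Cell means from Table 1: RT in ms, accuracy in % correct.
RT = {("reward", "high"): 546, ("reward", "low"): 484,
      ("no-reward", "high"): 554, ("no-reward", "low"): 499}
ACC = {("reward", "high"): 83, ("reward", "low"): 97,
       ("no-reward", "high"): 79, ("no-reward", "low"): 94}


def marginal_mean(table, factor, level):
    """Equal-weight mean over the cells where factor (0=reward, 1=difficulty) == level."""
    values = [v for key, v in table.items() if key[factor] == level]
    return sum(values) / len(values)


print(marginal_mean(RT, 0, "reward"), marginal_mean(RT, 0, "no-reward"))    # 515.0 526.5
print(marginal_mean(RT, 1, "low"), marginal_mean(RT, 1, "high"))            # 491.5 550.0
print(marginal_mean(ACC, 0, "reward"), marginal_mean(ACC, 0, "no-reward"))  # 90.0 86.5

# High-minus-low RT cost within each reward condition (basis of the interaction).
print(RT[("reward", "high")] - RT[("reward", "low")])        # 62
print(RT[("no-reward", "high")] - RT[("no-reward", "low")])  # 55
```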

3.2. ERP results: Cue-locked

3.2.1. Early and mid-range potentials

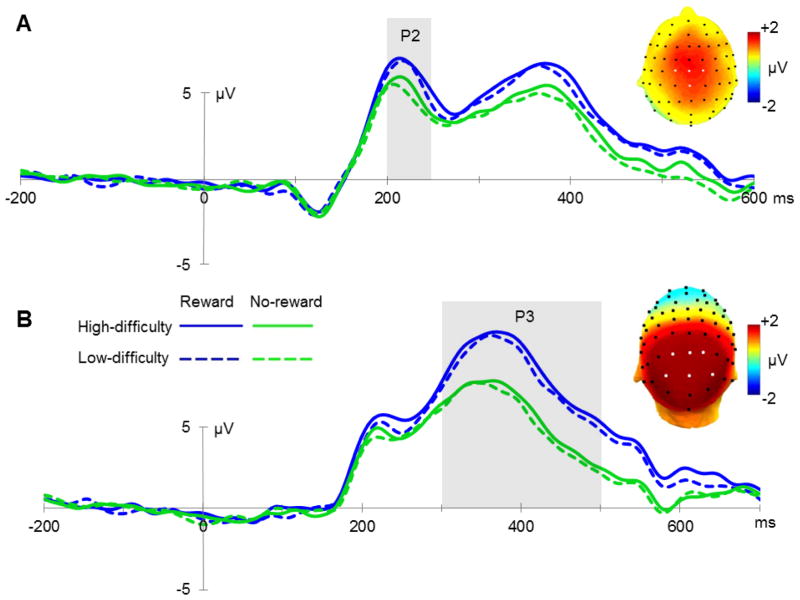

None of the early sensory components elicited by the cues (P1 and N1) were modulated by our task manipulations (all p>0.1). The cue-related P2 component had a larger amplitude for reward cues than for no-reward cues, as indicated by a main effect of reward (F(1,21)=13.09, p=0.002; see figure 2A). Task difficulty did not influence the amplitude of this component (F(1,21)=1.65, p=0.21), and there was no significant interaction between the two factors (F(1,21)=0.14, p=0.71). The mean amplitude of the N2 component showed a trend-level main effect of reward (F(1,21)=3.63, p=0.07), with a larger amplitude for no-reward cues. Neither a main effect of task difficulty nor an interaction between reward and task difficulty was observed for this component (both F(1,21)<1). Since the N2 follows the P2 very closely in time, modulations of these two components are not easily distinguishable. The key finding here, however, is that reward availability is registered as early as 200 ms after cue onset (P2 effect). The subsequent P3 amplitude was larger for reward cues than for no-reward cues (F(1,21)=22.07, p<0.001; see figure 2B). No significant main effect of task difficulty (F(1,21)=2.86, p=0.11) or interaction (F(1,21)<1) was found for the P3 response.

Figure 2.

Mid-range cue-related potentials. (A) Grand average ERPs elicited by cues in all four conditions at electrode sites C1, C2, Cz and CPz between 200 and 250 ms, and a topographic map reflecting the difference in P2 amplitude between reward-predicting cues and trials without reward prediction (electrodes of interest are indicated by white markers). (B) Grand average ERPs locked to the onset of the cue at electrode sites P1, P2, PO3, PO4, Pz and POz between 300 and 500 ms, reflecting P3 amplitudes in all conditions, and a topographic plot for reward condition versus no-reward condition.

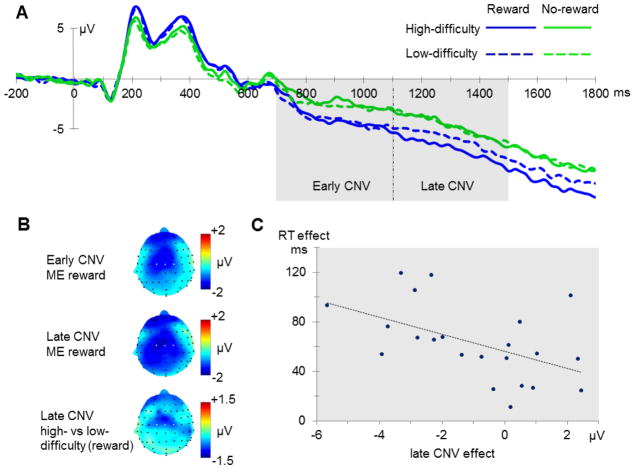

3.2.2. Contingent Negative Variation (CNV)

For the early part of the CNV, amplitudes were enhanced for reward-predicting cues (F(1,21)=19.41, p<0.001), whereas neither a main effect of task difficulty nor an interaction between reward and task difficulty was observed (both F(1,21)<1; see figure 3B). Reward also modulated the late part of the CNV (F(1,21)=22.88, p<0.001), again with larger amplitudes on reward trials. This later main effect, however, was qualified by an interaction between reward and task difficulty (F(1,21)=4.32, p=0.05; see figure 3C). The interaction reflected a larger difference between high-difficulty and low-difficulty cues on reward trials than on no-reward trials, with the largest late CNV deflection for high-difficulty reward trials.

Figure 3.

Contingent negative variation. (A) Electrophysiological waveform indicating the CNV, with an early (700–1100 ms) and late (1100–1500 ms) phase at electrode sites C1, C2 and Cz. (B) Topographic maps resulting from condition-wise contrasts in the early and late time window of the CNV (ME = main effect). (C) Correlation between difficulty effect in the reward condition on the late CNV amplitude and target RTs (high minus low task difficulty, respectively).

To fully capture this result pattern (see Nieuwenhuis et al., 2011), a three-way rANOVA with the additional factor time (early vs. late CNV) was conducted. A main effect of time was present (F(1,21)=75.44, p<0.001), with more negative-polarity activity in the later window. Again, CNV amplitudes were larger for reward cues than for no-reward cues, resulting in a main effect of reward across both time periods (F(1,21)=22.95, p<0.001). There was also a significant interaction between time and task difficulty (F(1,21)=10.85, p=0.003), due to a larger difference between high-difficulty and low-difficulty trials in the late phase, with high-difficulty trials being more negative. Moreover, a marginally significant three-way interaction between time, task difficulty and reward was observed (F(1,21)=3.52, p=0.075), reflecting that the interaction between task difficulty and reward arose only at a later stage of the preparation process.

Finally, this late-window difference in CNV amplitude between high-difficulty and low-difficulty cues on reward trials was related to subsequent target performance: it correlated with the corresponding high-versus-low difficulty difference in RTs to the following potentially rewarded target (r=−0.5, p=0.017; see figure 3C). In contrast, the correlation between the late-CNV difference for high-difficulty versus low-difficulty cues without potential reward and the corresponding difficulty effect in target RTs was not significant (p=0.7). Moreover, the reward effect (reward minus no-reward) in RTs did not correlate significantly with the reward effect in either early or late CNV amplitude (p=0.76 and p=0.38, respectively).
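The brain-behavior analysis correlates two per-participant difference scores (high minus low difficulty, once in late CNV amplitude and once in RT). A minimal pure-Python sketch of the Pearson correlation and its t statistic (df = n − 2) is given below; the function names are illustrative. For r = −0.5 and n = 22, t(20) ≈ −2.58, which lies between the two-tailed critical values for p = 0.02 (2.53) and p = 0.01 (2.85), consistent with the reported p = 0.017 given that r is rounded.

```python
from math import sqrt


def difference_scores(high, low):
    """Per-participant high-minus-low difficulty differences."""
    return [h - l for h, l in zip(high, low)]


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing a correlation against zero."""
    return r * sqrt(n - 2) / sqrt(1 - r ** 2)


print(round(r_to_t(-0.5, 22), 2))  # -2.58
```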

3.3. ERP results: Target-locked and feedback-locked

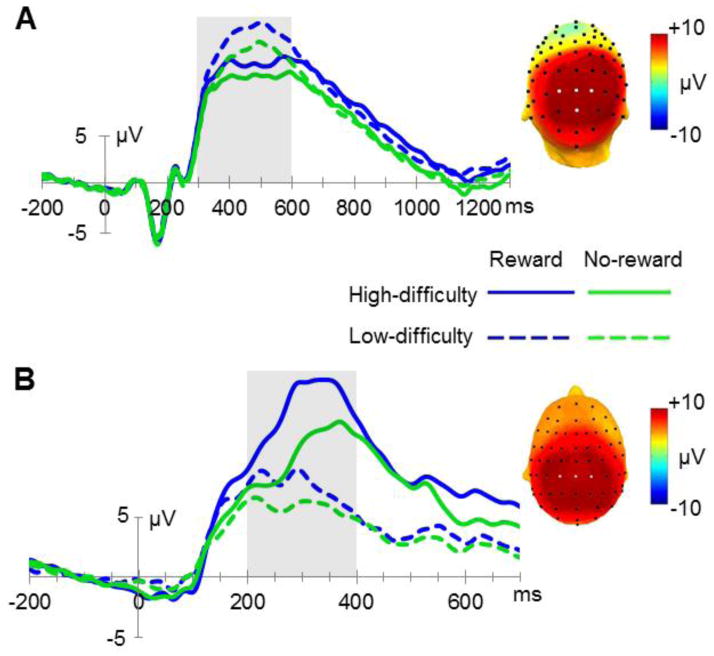

Albeit of subordinate priority, we also analyzed the ERPs elicited by the target stimuli. No significant differences between conditions were detected in the mean amplitudes of the early P1 and N1 components (all p>0.1). A significant interaction (F(1,21)=4.88, p=0.04) was obtained for the P2 component, reflecting a larger difference between high-difficulty and low-difficulty targets on reward trials (with a more positive wave for low-difficulty reward trials) than on no-reward trials. However, this P2 interaction should be considered exploratory, as it would not survive a Bonferroni correction across the five ERP components analyzed here (threshold p<0.01). Subsequently, a more negative N2 deflection was observed for targets on no-reward trials, as revealed by a main effect of reward (F(1,21)=11.31, p=0.003). Neither the main effect of task difficulty (F(1,21)=1.61, p=0.22) nor the interaction (F(1,21)<1) reached significance for this component. For the P3 component, a main effect of reward was observed (F(1,21)=23.65, p<0.001; see figure 4A), with a larger amplitude for targets on reward trials than on no-reward trials. Additionally, the P3 amplitude was larger for low-difficulty than for high-difficulty targets, as reflected by a main effect of task difficulty (F(1,21)=32.86, p<0.001). No significant interaction of reward and task difficulty was observed for the P3 (F(1,21)=2.36, p=0.14).

Figure 4.

Target- and feedback-related potentials. (A) Grand average ERPs showing target P3 amplitudes at parietal electrode sites P1, P2, Pz and POz between 300 and 600 ms, and a topographic map of the average of all four main conditions, with the ROI indicated by white electrode markers. (B) Waveforms time-locked to feedback onset at electrodes CP1, CP2 and CPz (200 to 400 ms), and a topographic map averaging the four main conditions.

A prominent feedback-related component that was visible over posterior central electrode sites showed a significant main effect of reward (F(1,21)=105.33, p<0.001), with larger positive amplitudes for the reward condition compared to the no-reward condition. The main effect of task difficulty was also highly significant (F(1,21)=127.99, p<0.001) due to more positive amplitudes for high-difficulty than low-difficulty trials. Moreover, a highly significant interaction was observed (F(1,21)=29.82, p<0.001), explained by a larger amplitude difference in high-difficulty versus low-difficulty trials in the reward condition compared to the no-reward condition (see figure 4B).

4. Discussion

In the present study, participants performed a cued visual discrimination task in which cues indicated not only the target location but also the level of task difficulty and the possibility of receiving a monetary reward for a correct and fast response. Krebs et al. (2012) already demonstrated the utility of this task for assessing cognitive processes related to the prospect of reward and task demands. In the current study, the experimental manipulations again proved successful: reward improved discrimination performance (more accurate and faster responses), and for RTs this benefit showed a trend-level interaction with task difficulty.

The central aim of the present study was to explore neural activity related to the anticipation of both reward and attentional demands (i.e., discrimination difficulty), and more specifically, the respective time courses of such activity. The present results support the idea that these processes can be dissociated temporally during task preparation. In this preparation phase, reward availability modulated cue processing starting 200 ms after cue onset, with larger P2 amplitudes for potential-reward trials than for no-reward trials. In addition, the main effect of reward was present in all later cue-locked components (P3 and both CNV windows). The impact of reward on the amplitude of later components elicited by warning stimuli, particularly the P3, has been shown in previous studies (Goldstein et al., 2006; Hughes et al., 2012). In contrast, reports on how reward availability affects the anticipatory CNV are rather inconsistent. Some researchers have reported CNV amplitudes that vary with the rewarding characteristics of the warning stimulus (Hughes et al., 2012; Pierson et al., 1987), whereas others have failed to find such modulations (Goldstein et al., 2006; Sobotka et al., 1992). Another anticipatory slow-wave component similar to the CNV is the stimulus-preceding negativity (SPN), which reflects anticipatory attention disentangled from motor preparation (van Boxtel and Böcker, 2004; Brunia and van Boxtel, 2001; Brunia et al., 2011). The SPN has also been shown to be affected by the motivational relevance of a stimulus; more precisely, and in line with the current results, the SPN is more negative when a rewarding event is expected (Brunia et al., 2011; Fuentemilla et al., 2013). Hence, in agreement with previous reports, the present study supports the notion that reward can influence the attentional anticipation of, and the preparation for, an upcoming target.

On the other hand, and more importantly, task-difficulty effects arose only later in the preparation phase, as reflected by an interaction effect in the late CNV. Specifically, CNV differences following cues predicting high-difficulty versus low-difficulty targets were more apparent in reward trials than in no-reward trials, but only in the late part of the CNV. As a consequence, the most negative-going wave was observed for high-difficulty reward trials. Importantly, this difference in task preparation was related to subsequent target-discrimination performance: participants with a larger difference in late CNV amplitude between high-difficulty and low-difficulty cues in the reward condition also showed faster responses for high-difficulty reward targets relative to low-difficulty reward ones. Such correlations between CNV amplitude and behavioral performance have been shown before (Birbaumer et al., 1990; Fan et al., 2007; Haagh and Brunia, 1985; Wascher et al., 1996) and correspond to the notion that the CNV reflects both motor preparation and attention or stimulus anticipation (Connor and Lang, 1969; Rohrbaugh et al., 1976; Tecce, 1972; van Boxtel and Brunia, 1994). It should be noted that reward and task difficulty might influence these two kinds of processes differently, but the current experiment does not allow attentional orienting to be distinguished from motor preparation.
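The across-participant brain-behavior relationship described above can be sketched as a correlation between two per-participant difference scores. This is a minimal illustration, not the authors' analysis code: it assumes that each participant's mean late-CNV amplitude and mean RT are already available for the high- and low-difficulty reward conditions, and the function names and toy numbers are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of numbers."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def high_minus_low(high, low):
    """Per-participant difficulty effect: high-difficulty minus low-difficulty value."""
    return [h - l for h, l in zip(high, low)]


def cnv_rt_correlation(cnv_high, cnv_low, rt_high, rt_low):
    """Correlate the late-CNV difficulty effect with the RT difficulty effect.

    Inputs are hypothetical per-participant means from the reward condition:
    CNV in microvolts (more negative = larger CNV), RT in milliseconds.
    """
    cnv_effect = high_minus_low(cnv_high, cnv_low)
    rt_effect = high_minus_low(rt_high, rt_low)
    return pearson_r(cnv_effect, rt_effect)


# Toy values for four hypothetical participants (not data from this study).
r = cnv_rt_correlation(
    cnv_high=[-5.0, -6.0, -7.0, -8.0],
    cnv_low=[-4.0, -4.0, -4.0, -4.0],
    rt_high=[510.0, 500.0, 490.0, 480.0],
    rt_low=[500.0, 500.0, 500.0, 500.0],
)
print(f"r = {r:.2f}")
```

With these toy numbers the relation is perfectly linear, so the sketch prints `r = 1.00`. Note the sign convention: because the CNV is negative-going, a more negative CNV difference paired with a more negative (i.e., relatively faster) RT difference yields a positive coefficient.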

Although a main effect of task difficulty was found for RTs, there was no clear difference between CNV amplitudes in high-difficulty no-reward trials and low-difficulty no-reward trials. This might be explained by a motivational account, in which additional strategic attention is employed only when it is worth the effort. In that case, high-difficulty cues would not trigger extra preparation processes in situations without the potential of being rewarded. This finding is probably context-dependent, since participants usually also engage attentional resources in difficult tasks that lack (the prospect of) reward. In the current experiment, however, no-reward trials may have been perceived as disappointing, leading to a lack of motivation to spend processing resources on them. Alternatively, control processes elicited by task difficulty might be qualitatively different in the reward and no-reward conditions, along the lines of a proactive vs. reactive distinction (e.g., Braver, 2012). Specifically, high task difficulty in a reward context engages proactive control mechanisms, as indexed here by the CNV. In contrast, different levels of task difficulty in the no-reward condition of the current experiment might have invoked different levels of reactive control (i.e., during target processing), which could be difficult to detect in the target-related ERPs. A final possibility is that participants did not invoke any additional control processes, either proactively or reactively, for high-difficulty compared with low-difficulty trials in the no-reward condition. The current ERP data cannot adjudicate between these alternatives.

Studies in patients and in healthy individuals have indicated that the CNV might be related to the dopaminergic system (e.g., Amabile et al., 1986; Gerschlager et al., 1999; Linssen et al., 2011). Consequently, the observed interaction between task difficulty and reward in this component appears consistent with the results of the previous fMRI study by Krebs et al. (2012), which showed a very similar interaction pattern in the dopaminergic midbrain, with the highest activation levels in response to cues that predicted both reward and high difficulty. Of course, ERP measurements do not directly reflect activity in deep brain structures such as the dopaminergic midbrain (Cohen et al., 2011); they can only capture the cortical consequences of its involvement. This possible link to the dopaminergic system raises another alternative, or possibly supplementary, interpretation of the current results. Slower, sustained activation of the dopaminergic system has been shown to increase with higher levels of (reward) uncertainty (Fiorillo et al., 2003; Preuschoff et al., 2006). The current late-CNV results are in line with this finding. The amplitude is lowest for cues that do not predict reward: not only do these trials not feature reward, but reward uncertainty is also lowest here (for both high-difficulty and low-difficulty trials). In reward trials, reward uncertainty is present in both conditions, but it is most pronounced when cues predict a high-difficulty trial; correspondingly, the largest CNV amplitude was observed in this condition. However, considering the established characteristics of the CNV as a typical preparatory component reflecting anticipatory attention and motor preparation, this uncertainty-based interpretation appears less likely than the task-preparation account to provide the full explanation of the data pattern.
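The uncertainty argument above can be made concrete with one common formalization of outcome uncertainty, the variance p(1 − p) of an all-or-none reward delivered with probability p, which is zero at p = 0 or 1 and peaks at p = 0.5. This is a sketch under stated assumptions: the variance measure is chosen here for illustration, and the per-condition reward probabilities are hypothetical, not the hit rates observed in the study.

```python
def reward_uncertainty(p_reward):
    """Variance p * (1 - p) of an all-or-none reward delivered with probability p."""
    if not 0.0 <= p_reward <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    return p_reward * (1.0 - p_reward)


# Hypothetical probabilities of earning the reward per cue condition
# (illustrative assumptions, not the hit rates observed in this study).
P_REWARD = {
    "no-reward, low difficulty": 0.0,
    "no-reward, high difficulty": 0.0,
    "reward, low difficulty": 0.90,
    "reward, high difficulty": 0.65,
}

for condition, p in P_REWARD.items():
    print(f"{condition:<28} p = {p:.2f}  uncertainty = {reward_uncertainty(p):.4f}")
```

The resulting ordering (no-reward lowest, high-difficulty reward highest) holds only because both assumed reward probabilities lie above 0.5, with the high-difficulty probability closer to it; with probabilities below 0.5 the ranking could change.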

The central finding of the current study is the temporal dissociation between processes related to the anticipation of potential reward and those related to attentional demands. The earlier and more pronounced effect of reward compared to task difficulty suggests that reward might influence visual processing of the cue stimuli in a more bottom-up way, whereas anticipated attentional demands seem to exert a more voluntary (top-down) influence that arises later. This might relate to the previously suggested idea that the dopaminergic system can be recruited via different routes (e.g., Salamone et al., 2005). Also, in patients with Parkinson’s disease, which is characterized by major disturbances of the dopaminergic system, voluntary attention mechanisms are affected, while performance in automatic attention tasks can remain intact (Brown and Marsden, 1988, 1990; Yamaguchi and Kobayashi, 1998). Other studies have shown that reward associations, especially for task-irrelevant stimulus aspects, can distract participants from task-relevant aspects and impair performance (e.g., Hickey et al., 2010; Krebs et al., 2010, 2011, 2013), which adds further evidence for potentially automatic influences of reward on task processes. We suggest that reward influences cue-related processes relatively directly, whereas strategically implemented attentional orienting plays a role only later in processing, in an attempt to optimize performance according to the situational circumstances.

Another key aspect is that the temporal information provided by the ERP measures also enables processes related to cue evaluation to be dissociated from the preparatory processes that the cue triggers. Specifically, our data indicate that early cue evaluation is particularly sensitive to possible reward availability, whereas cued task demands do not play a major role until late in the actual task-preparation process, as the target is about to occur. Furthermore, the finding that the late CNV amplitude, which has been consistently linked to task preparation, was maximal for high-difficulty reward trials speaks to an additional critical issue. As alluded to in the introduction, neuroeconomic experiments usually conceptualize high task demands as costs that discount the value of a reward (e.g., Croxson et al., 2009; McGuire and Botvinick, 2010). This should apply all the more in the present experiment, as reward probability was lower in high-difficulty than in low-difficulty trials. Even so, we found the largest CNV amplitude in high-difficulty reward trials. Had this been merely an effect of expected reward value, the low-difficulty reward trials should have triggered the largest CNV. An important difference from the earlier neuroeconomic experiments is that in the present study participants had to start preparing for the upcoming task in response to the cue, which in our opinion relies on a neural network that overlaps with reward-related processes (see also Stoppel et al., 2011).
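The expected-value argument above can be checked with simple arithmetic. The sketch assumes that value is reward probability times reward magnitude, optionally minus a subtractive effort cost; the subtractive form, the magnitude, the cost, and the probabilities are all hypothetical choices for illustration, not parameters from the study or from the cited neuroeconomic models.

```python
def net_value(p_reward, magnitude, effort_cost=0.0):
    """Expected reward value minus an optional, assumed subtractive effort cost."""
    return p_reward * magnitude - effort_cost


MAGNITUDE = 1.0  # hypothetical reward size in arbitrary units

# Hypothetical reward probabilities, lower for high difficulty as stated in the text.
value_low = net_value(p_reward=0.90, magnitude=MAGNITUDE)
value_high = net_value(p_reward=0.65, magnitude=MAGNITUDE)
value_high_costly = net_value(p_reward=0.65, magnitude=MAGNITUDE, effort_cost=0.10)

print(f"reward, low difficulty:                {value_low:.2f}")
print(f"reward, high difficulty:               {value_high:.2f}")
print(f"reward, high difficulty (with effort): {value_high_costly:.2f}")
```

Under any such value account, the low-difficulty reward condition comes out on top, and adding an effort cost only widens the gap, which is the ordering the observed late-CNV pattern does not follow.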

Subsequent to the preparation phase, early perceptual processing of the target was not affected by the reward or difficulty manipulation. This is consistent with earlier reports: Baines et al. (2011) and Hughes et al. (2012) likewise found no early reward effect on the target P1-N1 complex in their cueing paradigms. The earliest effects of the manipulations in the current study were observed 200 ms after target onset. In particular, the P2 amplitude was largest for low-difficulty reward trials, and the N2 and P3 components showed a main effect of reward, with a more positive-going wave for reward trials. These findings match the results of several recent ERP studies investigating reward, which suggest increased attention to, or attentional capture by, rewarding or affective stimuli (e.g., Baines et al., 2011; Hajcak et al., 2010; Hughes et al., 2012; Krebs et al., 2013). The amplitude of the P3 in the present study was also larger in the low-difficulty condition than in the high-difficulty condition, perhaps because reward expectancy was higher in low-difficulty trials (Goldstein et al., 2006; Gruber and Otten, 2010; Wu and Zhou, 2009). Similar results have been found in other discrimination tasks, which showed diminished visual or auditory evoked P3 amplitudes in difficult discrimination trials (Hoffman et al., 1985; Palmer et al., 1994; Polich, 1987; Senkowski and Herrmann, 2002). This has been related to decreased decision certainty (i.e., ‘equivocation’), since confidence in the decision is reduced when discriminations are more difficult (Palmer et al., 1994; Ruchkin and Sutton, 1978). Moreover, both the reward and the difficulty main effects might be partly explained by the relation of the target P3 to response execution (Doucet and Stelmack, 1999), with larger P3 amplitudes for faster responses. Hughes et al. (2012) also showed that the target-locked P3 amplitude was larger for easily detected than for difficult-to-detect target pictures in a rapid serial visual presentation task, and their results suggested that single-trial P3 amplitude reflected confidence in detecting a target. Hence, the P3 modulation probably reflects a combination of reward expectancy, confidence in correct responding, and facilitated response execution.

Targets were followed by a feedback display; because of limited trial numbers, we had to restrict our analysis to feedback following correct responses. The feedback elicited a broad centro-parietal component, which probably reflects a feedback-related P3. The response to the different kinds of positive feedback in the present experiment was sensitive to reward in general, as well as to task difficulty. The P3 component is generally known to be sensitive to expectancy (Courchesne et al., 1977; Johnson and Donchin, 1980; Núñez Castellar et al., 2010), and, more specifically with regard to feedback, the P3 amplitude has been observed to be larger for unpredicted than for predicted outcomes (Hajcak et al., 2005, 2007). Since correct feedback in the current experiment is less expected in high-difficulty trials than in low-difficulty trials, the main effect of task difficulty might reflect this subjective expectation. The current findings related to reward are also consistent with previous reports of larger P3 amplitudes following reward feedback than following no-reward feedback (Hajcak et al., 2007), which might indicate the higher motivational significance of reward feedback (see Sato et al., 2005). Finally, the response also showed an interaction pattern, in which the difference between low- and high-difficulty trials was larger for rewarded trials. This interaction seems to represent a combination of the monitoring of correct performance on the one hand and reward-outcome evaluation on the other.
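The expectancy account of the difficulty effect on the feedback P3 can be illustrated with one common measure of how unexpected an outcome is, its surprisal −log2 p. Both the choice of surprisal as the measure and the accuracy rates below are assumptions for illustration only; the study does not report this quantity.

```python
from math import log2


def surprisal_bits(p_outcome):
    """Shannon surprisal -log2(p) of an outcome with probability p, in bits."""
    if not 0.0 < p_outcome <= 1.0:
        raise ValueError("probability must lie in (0, 1]")
    return -log2(p_outcome)


# Hypothetical probabilities of a correct response (illustrative only).
P_CORRECT = {"low difficulty": 0.90, "high difficulty": 0.65}

for level, p in P_CORRECT.items():
    print(f"correct feedback, {level}: {surprisal_bits(p):.3f} bits")
```

Whenever accuracy is lower for high-difficulty trials, correct feedback on those trials carries more surprisal, which is the direction of the task-difficulty effect described above.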

To summarize, in the present study we investigated the time course of task preparation as a function of anticipated reward and anticipated attentional task demands. While preparing for the target, reward influenced neural processes more rapidly, with large effects in both the early and late stage of preparation. In contrast, it seems that processing resources were only later allocated in a strategic fashion that also incorporated anticipated task difficulty. These findings provide evidence that effects of voluntary attentional demands and reward can be temporally dissociated, not only during task execution but also during task preparation.

Acknowledgments

Funding

This work was supported by a post-doctoral fellowship from the Flemish Research Foundation (FWO; grant number FWO11/PDO/016) awarded to RMK; by a grant from the U.S. National Institute of Mental Health (grant number R01-MS060415) to MGW; and by the Ghent University Multidisciplinary Research Platform “The integrative neuroscience of behavioral control”.

Footnotes

Abbreviations: CNV, contingent negative variation; EEG, electroencephalography; ERP, event-related potential; fMRI, functional magnetic resonance imaging; ICA, independent component analysis; ISI, inter-stimulus interval; rANOVA, repeated-measures analysis of variance; ROI, region of interest; RT, response time; SPN, stimulus-preceding negativity.


Contributor Information

Hanne Schevernels, Email: hanne.schevernels@Ugent.be.

Ruth M. Krebs, Email: ruth.krebs@Ugent.be.

Patrick Santens, Email: patrick.santens@Ugent.be.

Marty G. Woldorff, Email: woldorff@duke.edu.

C. Nico Boehler, Email: nico.boehler@Ugent.be.

References

- Aarts E, Roelofs A, Franke B, Rijpkema M, Fernández G, Helmich RC, Cools R. Striatal dopamine mediates the interface between motivational and cognitive control in humans: evidence from genetic imaging. Neuropsychopharmacology. 2010;35:1943–1951. doi: 10.1038/npp.2010.68. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Adcock RA, Thangavel A, Whitfield-Gabrieli S, Knutson B, Gabrieli JDE. Reward-motivated learning: mesolimbic activation precedes memory formation. Neuron. 2006;50:507–517. doi: 10.1016/j.neuron.2006.03.036. [DOI] [PubMed] [Google Scholar]

- Amabile G, Fattapposta F, Pozzessere G, Albani G, Sanarelli L, Rizzo PA, Morocutti C. Parkinson disease: electrophysiological (CNV) analysis related to pharmacological treatment. Electroencephalography and clinical neurophysiology. 1986;64:521–524. doi: 10.1016/0013-4694(86)90189-6. [DOI] [PubMed] [Google Scholar]

- Baines S, Ruz M, Rao A, Denison R, Nobre AC. Modulation of neural activity by motivational and spatial biases. Neuropsychologia. 2011;49:2489–2497. doi: 10.1016/j.neuropsychologia.2011.04.029. [DOI] [PubMed] [Google Scholar]

- Birbaumer N, Elbert T, Canavan AGM, Rockstroh B. Slow potentials of the cerebral cortex and behavior. Physiological Reviews. 1990;70:1–41. doi: 10.1152/physrev.1990.70.1.1. [DOI] [PubMed] [Google Scholar]

- Boehler CN, Hopf JM, Krebs RM, Stoppel CM, Schoenfeld MA, Heinze HJ, Noesselt T. Task-load-dependent activation of dopaminergic midbrain areas in the absence of reward. The Journal of Neuroscience. 2011;31:4955–4961. doi: 10.1523/JNEUROSCI.4845-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Braver TS. The variable nature of cognitive control: A dual-mechanisms framework. Trends in Cognitive Sciences. 2012;16:106–113. doi: 10.1016/j.tics.2011.12.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Braver TS, Gray JR, Burgess GC. Explaining the many varieties of working memory variation: dual mechanisms of cognitive control. In: Conway A, Jarrold C, Kane M, Miyake A, Towse J, editors. Variation in Working Memory. Oxford University Press, Oxford Oxford University; 2007. pp. 76–106. [Google Scholar]

- Bromberg-Martin ES, Hikosaka O. Midbrain dopamine neurons signal preference for advance information about upcoming rewards. Neuron. 2009;63:119–126. doi: 10.1016/j.neuron.2009.06.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brown RG, Marsden CD. Internal versus external cues and the control of attention in Parkinson’s disease. Brain. 1988;111:323–345. doi: 10.1093/brain/111.2.323. [DOI] [PubMed] [Google Scholar]

- Brown RG, Marsden CD. Cognitive function in Parkinson’s disease: from description to theory. Trends in Neuroscience. 1990;13:21–29. doi: 10.1016/0166-2236(90)90058-i. [DOI] [PubMed] [Google Scholar]

- Broyd SJ, Richards HJ, Helps SK, Chronaki G, Bamford S, Sonuga-Barke EJS. An electrophysiological monetary incentive delay (e-MID) task: a way to decompose the different components of neural response to positive and negative monetary reinforcement. Journal of Neuroscience Methods. 2012;209:40–49. doi: 10.1016/j.jneumeth.2012.05.015. [DOI] [PubMed] [Google Scholar]

- Brunia CH, van Boxtel GJ. Wait and see. International journal of Psychophysiology. 2001;43:59–75. doi: 10.1016/s0167-8760(01)00179-9. [DOI] [PubMed] [Google Scholar]

- Brunia CH, Hackley SA, van Boxtel GJ, Kotani Y, Ohgami Y. Waiting to perceive: reward or punishment? Clinical Neurophysiology. 2011;122:858–868. doi: 10.1016/j.clinph.2010.12.039. [DOI] [PubMed] [Google Scholar]

- Cohen MX, Cavanagh JF, Slagter HA. Event-related potential activity in the basal ganglia differentiates rewards from nonrewards: temporospatial principal components analysis and source localization of the feedback negativity: commentary. Human brain mapping. 2011;32:2270–2271. doi: 10.1002/hbm.21358. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Connor WH, Lang PJ. Cortical slow-wave and cardiac responses in stimulus orientation and reaction time conditions. Journal of Experimental Psychology. 1969;82:310–320. doi: 10.1037/h0028181. [DOI] [PubMed] [Google Scholar]

- Courchesne E, Hillyard SA, Courchesne RY. P3 waves to the discrimination of targets in homogeneous and heterogeneous stimulus sequences. Psychophysiology. 1977;14:590–597. doi: 10.1111/j.1469-8986.1977.tb01206.x. [DOI] [PubMed] [Google Scholar]

- Croxson PL, Walton ME, O’Reilly JX, Behrens TEJ, Rushworth MFS. Effort-based cost-benefit valuation and the human brain. The Journal of Neuroscience. 2009;29:4531–4541. doi: 10.1523/JNEUROSCI.4515-08.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- D’Ardenne K, McClure SM, Nystrom LE, Cohen JD. BOLD responses reflecting dopaminergic signals in the human ventral tegmental area. Science. 2008;319:1264–1267. doi: 10.1126/science.1150605. [DOI] [PubMed] [Google Scholar]

- Della Libera C, Chelazzi L. Visual selective attention and the effects of monetary rewards. Psychological Science. 2006;17:222–227. doi: 10.1111/j.1467-9280.2006.01689.x. [DOI] [PubMed] [Google Scholar]

- Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. The Journal of Neuroscience methods. 2004;134:9–21. doi: 10.1016/j.jneumeth.2003.10.009. [DOI] [PubMed] [Google Scholar]

- Doucet C, Stelmack RM. The effect of response execution on P3 latency, reaction time, and movement time. Psychophysiology. 1999;36:351–363. doi: 10.1017/s0048577299980563. [DOI] [PubMed] [Google Scholar]

- Engelmann JB, Damaraju E, Padmala S, Pessoa L. Combined effects of attention and motivation on visual task performance: transient and sustained motivational effects. Frontiers in Human Neuroscience. 2009:3. doi: 10.3389/neuro.09.004.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Engelmann JB, Pessoa L. Motivation sharpens exogenous spatial attention. Emotion. 2007;7:668–674. doi: 10.1037/1528-3542.7.3.668. [DOI] [PubMed] [Google Scholar]

- Fan J, Kolster R, Ghajar J, Suh M, Knight RT, Sarkar R, McCandliss BD. Response anticipation and response conflict: an event-related potential and functional magnetic resonance imaging study. The Journal of Neuroscience. 2007;27:2272–2282. doi: 10.1523/JNEUROSCI.3470-06.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299:1898–1902. doi: 10.1126/science.1077349. [DOI] [PubMed] [Google Scholar]

- Fuentemilla L, Cucurell D, Marco-Pallarés J, Guitart-Masip M, Morís J, Rodríguez-Fornells A. Electrophysiological correlates of anticipating improbable but desired events. NeuroImage. 2013;78:135–144. doi: 10.1016/j.neuroimage.2013.03.062. [DOI] [PubMed] [Google Scholar]

- Gerschlager W, Alesch F, Cunnington R, Deecke L, Dirnberger G, Endl W, Lindinger G, Lang W. Bilateral subthalamic nucleus stimulation improves frontal cortex function in Parkinson’s disease. An electrophysiological study of the contingent negative variation. Brain. 1999;122:2365–2373. doi: 10.1093/brain/122.12.2365. [DOI] [PubMed] [Google Scholar]

- Glimcher PW. Understanding dopamine and reinforcement learning: the dopamine reward prediction error hypothesis. Proceedings of the National Academy of Sciences of the United States of America. 2011;108(Suppl 3):15647–15654. doi: 10.1073/pnas.1014269108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Goldstein RZ, Cottone LA, Jia Z, Maloney T, Volkow ND, Squires NK. The effect of graded monetary reward on cognitive event-related potentials and behavior in young healthy adults. International Journal of Psychophysiology. 2006;62:272–279. doi: 10.1016/j.ijpsycho.2006.05.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grent-’t-Jong T, Woldorff MG. Timing and sequence of brain activity in top-down control of visual-spatial attention. PLoS biology. 2007;5:e12. doi: 10.1371/journal.pbio.0050012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gruber MJ, Otten LJ. Voluntary control over prestimulus activity related to encoding. The Journal of Neuroscience. 2010;30:9793–9800. doi: 10.1523/JNEUROSCI.0915-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haagh SA, Brunia CH. Anticipatory response-relevant muscle activity, CNV amplitude and simple reaction time. Electroencephalography and clinical neurophysiology. 1985;61:30–39. doi: 10.1016/0013-4694(85)91070-3. [DOI] [PubMed] [Google Scholar]

- Hajcak G, Holroyd CB, Moser JS, Simons RF. Brain potentials associated with expected and unexpected good and bad outcomes. Psychophysiology. 2005;42:161–170. doi: 10.1111/j.1469-8986.2005.00278.x. [DOI] [PubMed] [Google Scholar]

- Hajcak G, MacNamara A, Olvet DM. Event-related potentials, emotion, and emotion regulation: an integrative review. Developmental neuropsychology. 2010;35:129–155. doi: 10.1080/87565640903526504. [DOI] [PubMed] [Google Scholar]

- Hajcak G, Moser JS, Holroyd CB, Simons RF. It’s worse than you thought: the feedback negativity and violations of reward prediction in gambling tasks. Psychophysiology. 2007;44:905–912. doi: 10.1111/j.1469-8986.2007.00567.x. [DOI] [PubMed] [Google Scholar]

- Hickey C, Chelazzi L, Theeuwes J. Reward changes salience in human vision via the anterior cingulate. The Journal of Neuroscience. 2010;30:11096–11103. doi: 10.1523/JNEUROSCI.1026-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hickey C, Van Zoest W. Reward creates oculomotor salience. Current Biology. 2012;22:R219–R220. doi: 10.1016/j.cub.2012.02.007. [DOI] [PubMed] [Google Scholar]

- Hoffman JE, Houck MR, MacMillan FW, Simons RF, Oatman LC. Event-related potentials elicited by automatic targets: a dual-task analysis. Journal of Experimental Psychology: Human Perception and Performance. 1985;11:50–61. doi: 10.1037//0096-1523.11.1.50. [DOI] [PubMed] [Google Scholar]

- Hughes G, Mathan S, Yeung N. EEG indices of reward motivation and target detectability in a rapid visual detection task. NeuroImage. 2012;64:590–600. doi: 10.1016/j.neuroimage.2012.09.003. [DOI] [PubMed] [Google Scholar]

- Johnson R, Donchin E. P300 and stimulus categorization: two plus one is not so different from one plus one. Psychophysiology. 1980;17:167–178. doi: 10.1111/j.1469-8986.1980.tb00131.x. [DOI] [PubMed] [Google Scholar]

- Jonkman LM, Lansbergen M, Stauder JEA. Developmental differences in behavioral and event-related brain responses associated with response preparation and inhibition in a go/nogo task. Psychophysiology. 2003;40:752–761. doi: 10.1111/1469-8986.00075. [DOI] [PubMed] [Google Scholar]

- Knutson B, Cooper JC. Functional magnetic resonance imaging of reward prediction. Current opinion in Neurology. 2005;18:411–417. doi: 10.1097/01.wco.0000173463.24758.f6. [DOI] [PubMed] [Google Scholar]

- Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. The Journal of Neuroscience. 2005;25:4806–4812. doi: 10.1523/JNEUROSCI.0642-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Krebs RM, Boehler CN, Appelbaum LG, Woldorff MG. Reward associations reduce behavioral interference by changing the temporal dynamics of conflict processing. PloS one. 2013;8:e53894. doi: 10.1371/journal.pone.0053894. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Krebs RM, Boehler CN, Egner T, Woldorff MG. The neural underpinnings of how reward associations can both guide and misguide attention. Journal of Neuroscience. 2011;31:9752–9759. doi: 10.1523/JNEUROSCI.0732-11.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Krebs RM, Boehler CN, Roberts KC, Song AW, Woldorff MG. The involvement of the dopaminergic midbrain and cortico-striatal-thalamic circuits in the integration of reward prospect and attentional task demands. Cerebral Cortex. 2012;22:607–615. doi: 10.1093/cercor/bhr134. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Krebs RM, Boehler CN, Woldorff MG. The influence of reward associations on conflict processing in the Stroop task. Cognition. 2010;117:341–347. doi: 10.1016/j.cognition.2010.08.018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Krebs RM, Schott BH, Schütze H, Düzel E. The novelty exploration bonus and its attentional modulation. Neuropsychologia. 2009;47:2272–2281. doi: 10.1016/j.neuropsychologia.2009.01.015. [DOI] [PubMed] [Google Scholar]

- Linssen AMW, Vuurman EFPM, Sambeth A, Nave S, Spooren W, Vargas G, Santarelli L, Riedel WJ. Contingent negative variation as a dopaminergic biomarker: evidence from dose-related effects of methylphenidate. Psychopharmacology. 2011;218:533–542. doi: 10.1007/s00213-011-2345-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Maunsell JHR. Neuronal representations of cognitive state: reward or attention? Trends in Cognitive Sciences. 2004;8:261–265. doi: 10.1016/j.tics.2004.04.003. [DOI] [PubMed] [Google Scholar]

- McGuire JT, Botvinick MM. Prefrontal cortex, cognitive control, and the registration of decision costs. Proceedings of the National Academy of Sciences of the United States of America. 2010;107:7922–7926. doi: 10.1073/pnas.0910662107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mirenowicz J, Schultz W. Importance of unpredictability for reward responses in primate dopamine neurons. Journal of Neurophysiology. 1994;72:1024–1027. doi: 10.1152/jn.1994.72.2.1024. [DOI] [PubMed] [Google Scholar]

- Nieoullon A. Dopamine and the regulation of cognition and attention. Progress in Neurobiology. 2002;67:53–83. doi: 10.1016/s0301-0082(02)00011-4. [DOI] [PubMed] [Google Scholar]

- Nieuwenhuis S, Forstmann BU, Wagenmakers EJ. Erroneous analyses of interactions in neuroscience: a problem of significance. Nature Neuroscience. 2011;14:1105–1107. doi: 10.1038/nn.2886. [DOI] [PubMed] [Google Scholar]

- Núñez Castellar E, Kühn S, Fias W, Notebaert W. Outcome expectancy and not accuracy determines posterror slowing: ERP support. Cognitive, affective & behavioral neuroscience. 2010;10:270–278. doi: 10.3758/CABN.10.2.270. [DOI] [PubMed] [Google Scholar]

- Padmala S, Pessoa L. Reward reduces conflict by enhancing attentional control and biasing visual cortical processing. Journal of Neuroscience. 2011;23:3419–3432. doi: 10.1162/jocn_a_00011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Palmer B, Nasman VT, Wilson GF. Task decision difficulty: effects on ERPs in a same-different letter classification task. Biological Psychology. 1994;38:199–214. doi: 10.1016/0301-0511(94)90039-6. [DOI] [PubMed] [Google Scholar]

- Pierson A, Ragot R, Ripoche A, Lesevre N. Electrophysiological changes elicited by auditory stimuli given a positive or negative value: a study comparing anhedonic with hedonic subjects. International journal of psychophysiology. 1987;5:107–123. doi: 10.1016/0167-8760(87)90015-8. [DOI] [PubMed] [Google Scholar]

- Polich J. Task difficulty, probability, and inter-stimulus interval as determinants of P300 from auditory stimuli. Electroencephalography and clinical neurophysiology. 1987;68:311–320. doi: 10.1016/0168-5597(87)90052-9. [DOI] [PubMed] [Google Scholar]

- Preuschoff K, Bossaerts P, Quartz SR. Neural differentiation of expected reward and risk in human subcortical structures. Neuron. 2006;51:381–390. doi: 10.1016/j.neuron.2006.06.024. [DOI] [PubMed] [Google Scholar]

- Rohrbaugh JW, Syndulko K, Lindsley DB. Brain wave components of the contingent negative variation in humans. Science. 1976;191:1055–1057. doi: 10.1126/science.1251217. [DOI] [PubMed] [Google Scholar]

- Ruchkin DS, Sutton S. Multidisciplinary Perspectives in Event-Related Brain Potential Research. U.S. Government Printing Office; Washington, DC: 1978. Equivocation and 300 amplitude; pp. 175–177. [Google Scholar]

- Salamone JD, Correa M. The mysterious motivational functions of mesolimbic dopamine. Neuron. 2012;76:470–485. doi: 10.1016/j.neuron.2012.10.021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Salamone JD, Correa M, Mingote SM, Weber SM. Beyond the reward hypothesis: alternative functions of nucleus accumbens dopamine. Current opinion in Pharmacology. 2005;5:34–41. doi: 10.1016/j.coph.2004.09.004. [DOI] [PubMed] [Google Scholar]

- Sato A, Yasuda A, Ohira H, Miyawaki K, Nishikawa M, Kumano H, Kuboki T. Effects of value and reward magnitude on feedback negativity and P300. NeuroReport. 2005;16:407–411. doi: 10.1097/00001756-200503150-00020. [DOI] [PubMed] [Google Scholar]

- Schott BH, Minuzzi L, Krebs RM, Elmenhorst D, Lang M, Winz OH, Seidenbecher CI, Coenen HH, Heinze Hans-Jochen, Zilles K, Düzel E, Bauer A. Mesolimbic functional magnetic resonance imaging activations during reward anticipation correlate with reward-related ventral striatal dopamine release. The Journal of Neuroscience. 2008;28:14311–14319. doi: 10.1523/JNEUROSCI.2058-08.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schultz W, Dayan P, Montague PR. A Neural Substrate of Prediction and Reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593. [DOI] [PubMed] [Google Scholar]

- Senkowski D, Herrmann CS. Effects of task difficulty on evoked gamma activity and ERPs in a visual discrimination task. Clinical Neurophysiology. 2002;113:1742–1753. doi: 10.1016/s1388-2457(02)00266-3. [DOI] [PubMed] [Google Scholar]

- Sobotka SS, Davidson RJ, Senulis JA. Anterior brain electrical asymmetries in response to reward and punishment. Electroencephalography and clinical neurophysiology. 1992;83:236–247. doi: 10.1016/0013-4694(92)90117-z. [DOI] [PubMed] [Google Scholar]

- Stoppel CM, Boehler CN, Strumpf H, Heinze HJ, Hopf JM, Schoenfeld MA. Neural processing of reward magnitude under varying attentional demands. Brain research. 2011;1383:218–229. doi: 10.1016/j.brainres.2011.01.095. [DOI] [PubMed] [Google Scholar]

- Stürmer B, Nigbur R, Schacht A, Sommer W. Reward and punishment effects on error processing and conflict control. Frontiers in Psychology. 2011;2:335. doi: 10.3389/fpsyg.2011.00335.

- Tecce JJ. Contingent negative variation (CNV) and psychological processes in man. Psychological Bulletin. 1972;77:73–108. doi: 10.1037/h0032177.

- van Boxtel GJ, Böcker KB. Cortical measures of anticipation. Journal of Psychophysiology. 2004;18:61–76.

- van Boxtel GJ, Brunia CH. Motor and non-motor aspects of slow brain potentials. Biological Psychology. 1994;38:37–51. doi: 10.1016/0301-0511(94)90048-5.

- Walter WG, Cooper R, Aldridge VJ, McCallum WC, Winter AL. Contingent negative variation: an electric sign of sensori-motor association and expectancy in the human brain. Nature. 1964;203:380–384. doi: 10.1038/203380a0.

- Wascher E, Verleger R, Jaskowski P, Wauschkuhn B. Preparation for action: an ERP study about two tasks provoking variability in response speed. Psychophysiology. 1996;33:262–272. doi: 10.1111/j.1469-8986.1996.tb00423.x.

- Wise RA, Rompre PP. Brain dopamine and reward. Annual Review of Psychology. 1989;40:191–225. doi: 10.1146/annurev.ps.40.020189.001203.

- Wittmann BC, Schiltz K, Boehler CN, Düzel E. Mesolimbic interaction of emotional valence and reward improves memory formation. Neuropsychologia. 2008;46:1000–1008. doi: 10.1016/j.neuropsychologia.2007.11.020.

- Wittmann BC, Schott BH, Guderian S, Frey JU, Heinze HJ, Düzel E. Reward-related fMRI activation of dopaminergic midbrain is associated with enhanced hippocampus-dependent long-term memory formation. Neuron. 2005;45:459–467. doi: 10.1016/j.neuron.2005.01.010.

- Wu Y, Zhou X. The P300 and reward valence, magnitude, and expectancy in outcome evaluation. Brain Research. 2009;1286:114–122. doi: 10.1016/j.brainres.2009.06.032.

- Yamaguchi S, Kobayashi S. Contributions of the dopaminergic system to voluntary and automatic orienting of visuospatial attention. The Journal of Neuroscience. 1998;18:1869–1878. doi: 10.1523/JNEUROSCI.18-05-01869.1998.

- Zaghloul KA, Blanco JA, Weidemann CT, McGill K, Jaggi JL, Baltuch GH, Kahana MJ. Human substantia nigra neurons encode unexpected financial rewards. Science. 2009;323:1496–1499. doi: 10.1126/science.1167342.