Abstract

While Regional Extension Centers and other national policy efforts to increase the adoption of electronic health records (EHR) have been implemented in the United States, the relationship between EHR adoption and quality of care remains poorly understood. We evaluated the early effects on quality of the Primary Care Information Project, which provides subsidized EHRs and technical assistance to primary care practices in underserved neighborhoods in New York City. We find that nine or more months of participation in the Primary Care Information Project is associated with improved quality, but only for certain quality measures and only for physicians receiving extensive technical assistance.

Introduction

The rate of adoption of electronic health records (EHR) for outpatient care in the United States has been slow, but is rapidly accelerating. Between 2009 and 2011, the proportion of outpatient physicians using a “basic” EHR – an EHR with the ability to generate problem lists, document medications, and view test results – increased from 22% to 35%.(1) Nonetheless, small practices, which constitute the majority of practices in the U.S.,(2) and practices that are owned by physicians have the lowest rates of EHR adoption.(1, 2) Further, some evidence suggests that practices with higher proportions of minority, Medicaid, and uninsured patients are less likely to use EHRs.(3)

In an effort to encourage EHR adoption, the American Recovery and Reinvestment Act of 2009 allocated $643 million for the creation of a Health Information Technology Extension Program.(4) This program provides ongoing technical assistance to practices through Regional Extension Centers, with a focus on small primary care practices. Through a combination of clinical decision support, tools to reduce medical errors (such as e-prescribing and clear and comprehensive display of test results), population management through registry generation, and improved communication with patients and other providers, EHRs have tremendous potential to improve quality of care.(5, 6)

Despite the enthusiasm for EHRs, and the recent acceleration in adoption, evidence that EHRs improve quality of care is mixed.(5-13) EHRs may have long-term benefits, but the transition from paper to electronic medical records can be disruptive, with a steep learning curve required to effectively use the features of the EHR to improve quality of care. While a recent review found that 92 percent of recent articles on health information technology – including electronic health records and accompanying technologies, such as clinical decision support – reported positive results from implementation,(13) other reviews have emphasized the lack of formal evaluation of health IT applications.(5, 6) Very little is known about the effects of the recently created Regional Extension Centers on quality.(14) Although smaller-scale versions of the Regional Extension Center model have been implemented, evaluations have focused on pre- and post-intervention improvement and have not convincingly demonstrated the effectiveness of this approach.(15-20)

This study uses a unique dataset based on multi-payer medical claims in New York State to evaluate whether the Primary Care Information Project – in its first years as a major electronic health record implementation and technical assistance program – improved quality of care for small practices located in underserved areas in New York City.

The Primary Care Information Project

In 2005, the New York City Department of Health and Mental Hygiene created the Primary Care Information Project (PCIP), which began assisting practices with EHR adoption in late 2007. PCIP subsidized two years of EHR software costs for eligible primary care providers (serving a minimum of 10% Medicaid or uninsured patients) in New York City. These providers were required to adopt the eClinicalWorks™ EHR, which included several functionalities co-developed by PCIP to improve prevention-oriented services. These functionalities include a clinical decision support system, an enhanced patient registry that allows the providers to search on specific patient characteristics, and e-prescribing. PCIP also provided technical assistance and coaching on quality improvement with a focus on preventive care, based on the NYC health policy agenda “Take Care New York”.(21-23) PCIP staff provided practices with on-site technical assistance visits after the implementation of EHR software. Technical assistance focused initially on helping practices to address barriers to effective EHR use and to troubleshoot EHR implementation issues. Subsequent technical assistance visits helped practices use the EHR to improve the population health of their panel, with an emphasis on adult patients with cardiovascular health problems. This included interfacing with the patient registry, using patient flow sheets, and generating patient order sets, custom alerts, and quality reports.

Since July of 2007, PCIP has enrolled over 3,300 physicians in over 600 practices, making it the largest community-based EHR implementation and extension program in the U.S.(21) In 2011, PCIP became one of the 60 Regional Extension Centers established nationally.(24)

Methods

We tested whether physicians in PCIP improved outpatient quality more than a set of matched comparison physicians in New York State who did not participate in PCIP. We also examined whether the effect of PCIP was greater for physicians who received more technical assistance from the program. This study encompasses the time period before, and up to two years after, EHR implementation for PCIP physicians.

Data

To generate measures of the quality of care, we used data from the New York Quality Alliance dataset for calendar years 2007-2010. The dataset includes outpatient quality of care measures based on Healthcare Effectiveness Data and Information Set specifications from 13 private insurers (including some managed Medicare and Medicaid plans) for physicians in New York City, Long Island, the Hudson Valley, the Capital Region (surrounding Albany), and Western New York State (Exhibit 1).(25) Together, participating plans make up approximately 40% of the privately insured market in New York State.(26) In addition to containing numerators and denominators for each quality measure for 6,756 physicians with at least 30 eligible patients for a given measure in a given year, the dataset also contains information on physicians’ location of practice and specialty.

Exhibit 1.

Characteristics of physicians participating and not participating in PCIP for all eligible physicians and the propensity score matched sample

| Characteristic | PCIP physicians | All comparison physicians | Matched comparison physicians |
|---|---|---|---|
| N | 360 | 4,645 | 360 |
| Location*Ψ (%) | | | |
| New York City | 100 | 43.7 | 90.8 |
| Long Island | 0 | 23.3 | 5.0 |
| Hudson Valley | 0 | 17.8 | 1.9 |
| Capital Region and Northern New York | 0 | 12.4 | 0.6 |
| Western New York | 0 | 2.8 | 1.7 |
| Percent poverty in zip code†* (mean, sd) | 20.1 (6.7) | 13.6 (8.0) | 20.0 (6.9) |
| Number of physicians in practice* (mean, sd) | 2.1 (2.7) | 5.4 (16.8) | 1.8 (1.9) |
| Specialty* (%) | | | |
| Internal medicine | 41.4 | 41.2 | 41.7 |
| Family practice | 15.8 | 21.4 | 14.7 |
| Pediatric medicine | 19.2 | 16.4 | 21.7 |
| Cardiology | 2.8 | 3.1 | 2.8 |
| Pulmonology | 3.6 | 1.8 | 2.2 |
| Gastroenterology | 3.1 | 2.0 | 3.9 |
| Other | 14.2 | 14.2 | 13.1 |
| Degree* (% with MD) | 94.4 | 91.0 | 92.5 |
| Practice ownership* (% with corporate parent) | 6.7 | 26.0 | 4.4 |
| Number of technical assistance visits¥ (mean, sd) | 3.1 (3.7) | - | - |
| Exposure to PCIP¥ (%) | | | |
| Less than 6 months | 37.8 | - | - |
| 6 to less than 12 months | 12.5 | - | - |
| 12 to less than 18 months | 7.8 | - | - |
| 18 to less than 24 months | 15.3 | - | - |
| 24 months or more | 26.7 | - | - |

SOURCE: Authors’ analysis

Note 1 (†): For zip code in which practice is located

Note 2 (¥): As of December 31, 2010

Note 3 (*): p<.05 for test of difference between PCIP physicians and all comparison physicians

Note 4 (Ψ): p<.05 for test of difference between PCIP physicians and matched comparison physicians

We used data from the New York City Department of Health and Mental Hygiene on PCIP physician go-live dates, technical assistance received after EHR implementation (from 2008 onwards), physicians’ location of practice, and type of practice (small practice, community health center, or hospital) for 3,376 physicians with valid National Provider Identifier numbers who went live on an EHR between July 2007 and December 2011.

Additional data on physician and practice characteristics for PCIP and non-PCIP physicians were derived from the 2011 IMS Health Healthcare Organization Services and Healthcare Relational Services datasets. These files contain physician and practice information, including physician affiliations with practices, for virtually all physicians and practices in the United States. We merged the quality data and PCIP member files with the IMS Health files by National Provider Identifier to include data on physician degree (MD or osteopath), the number of physicians in the practice, and whether a practice had a corporate parent. We also integrated data from the 2000 Decennial Census on the proportion of the population living in poverty (at the zip code level) and whether the practice zip code was located in an urban or rural area. The resulting file contained 5,005 unique physicians (360 from PCIP) and 57,558 physician-measure observations (3,924 from PCIP physicians) representing quality of care for a total of 4,874,518 eligible patient measures (including 271,762 eligible patient measures from PCIP physicians).

Creating a matched comparison group to assess the effect of PCIP on quality

Physicians in PCIP tended to work in smaller practices, were located in higher poverty areas, were less likely to have a corporate parent, and were exclusively located in New York City (Exhibit 1). As a result, PCIP physicians may be expected to have a different quality improvement trajectory than other physicians. To address this issue, we evaluated the effect of PCIP against a matched cohort of non-PCIP physicians. We used a one-to-one matching strategy using propensity scores, matching PCIP physicians to non-PCIP physicians on the number of physicians in their practice, whether the practice had a corporate parent (yes/no), physician degree (MD/DO), physician specialty (internal medicine, family practice, pediatric medicine, cardiology, pulmonology, and gastroenterology), and the proportion of residents living in poverty in the practice zip code. Because all PCIP physicians practiced in urban areas, we limited potential matches to comparison physicians who also practiced in urban areas.
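As an illustration of the one-to-one matching step, the following minimal sketch pairs each PCIP physician with the nearest unused comparison physician on an already-estimated propensity score. The greedy nearest-neighbor rule, the matching without replacement, the optional caliper, and all identifiers and scores shown are assumptions made for illustration; the text above does not specify the matching algorithm that was used.

```python
def match_one_to_one(treated, controls, caliper=None):
    """Greedy 1:1 nearest-neighbor matching on a propensity score.

    treated, controls: dicts mapping a (hypothetical) physician id to an
    estimated propensity score. Matching is without replacement.
    Returns a dict {treated_id: control_id}.
    """
    available = dict(controls)  # comparison physicians not yet used
    matches = {}
    # Process treated physicians in a fixed order so results are reproducible.
    ordered = sorted(treated.items(), key=lambda kv: kv[1], reverse=True)
    for t_id, t_score in ordered:
        if not available:
            break
        # Closest remaining comparison physician; ties broken by id.
        c_id = min(available, key=lambda c: (abs(available[c] - t_score), c))
        if caliper is not None and abs(available[c_id] - t_score) > caliper:
            continue  # no acceptable match within the caliper
        matches[t_id] = c_id
        del available[c_id]
    return matches


# Hypothetical scores, for illustration only.
pcip = {"p1": 0.80, "p2": 0.55, "p3": 0.30}
comparison = {"c1": 0.78, "c2": 0.50, "c3": 0.35, "c4": 0.10, "c5": 0.65}
pairs = match_one_to_one(pcip, comparison)
```

In practice the scores would come from a model of PCIP participation on the matching covariates listed above, and the pool of candidates would be restricted to urban comparison physicians before matching.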

Study Outcome

Our study outcome is outpatient quality of care, defined by the proportion of patients (numerator divided by denominator, or “score”) receiving the ten available process-of-care measures: appropriate treatment for children with upper respiratory infection, appropriate testing for children with pharyngitis, breast cancer screening for women, retinal exam for diabetic patients, HbA1c testing for diabetic patients, cholesterol testing for diabetic patients, urine testing for proteinuria in diabetic patients, cervical cancer screening for women, chlamydia screening for women, and colorectal cancer screening (Exhibit 2).
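The score definition can be sketched as a small function. This is an illustrative sketch only: the function name and the choice to return `None` below the threshold are assumptions, while the 30-patient minimum comes from the description of the dataset in the Data section.

```python
MIN_ELIGIBLE = 30  # minimum eligible patients per measure, per the Data section


def quality_score(numerator, denominator, min_eligible=MIN_ELIGIBLE):
    """Return the percentage of eligible patients receiving the indicated care.

    Returns None when the physician has too few eligible patients for the
    measure to be reported (an assumed convention for this sketch).
    """
    if not 0 <= numerator <= denominator:
        raise ValueError("numerator must lie between 0 and the denominator")
    if denominator < min_eligible:
        return None
    return 100.0 * numerator / denominator


score = quality_score(33, 44)        # 33 of 44 eligible patients screened
too_few = quality_score(10, 20)      # below the 30-patient threshold
```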

Exhibit 2.

Physician-level quality scores on quality measures and counts of physicians reporting for PCIP and matched comparison physicians, 2007 and 2010

| Measure | Number of observations, 2007-2010 | Mean Score (SD), 2007 | Mean Score (SD), 2010 |
|---|---|---|---|
| Appropriate treatment for children with upper respiratory infection | 539 | 91.4 (8.9) | 93.5 (10.3) |
| Appropriate testing for children with pharyngitis | 132 | 65.8 (34.0) | 85.5 (24.1) |
| Breast cancer screening for women† | 1,638 | 59.4 (11.3) | 57.4 (15.0) |
| Retinal exam for diabetic patients† | 590 | 44.1 (12.6) | 44.6 (11.2) |
| HbA1c testing for diabetic patients | 590 | 71.3 (20.7) | 85.3 (10.2) |
| Cholesterol testing for diabetic patients | 591 | 73.3 (20.8) | 85.6 (10.8) |
| Urine testing for diabetic patients† | 590 | 65.8 (13.9) | 69.7 (17.5) |
| Cervical cancer screening for women | 1,933 | 64.3 (11.4) | 77.3 (9.8) |
| Chlamydia screening for women† | 108 | 42.4 (20.2) | 65.0 (19.1) |
| Colorectal cancer screening† | 911 | - | 53.9 (15.2) |

SOURCE: Authors’ analysis

Note 1 (†): Denotes EHR sensitive measure

Note 2: Colorectal cancer screening was not available as a quality measure in 2007

Note 3: Differences between the 2007 and 2010 scores are significant at p<.05 for all measures with data in both years

Analysis

We perform a physician-measure-level analysis, in which quality for each measure reported for each physician is an observation in the data, allowing us to evaluate the effect of PCIP on overall quality. We specify a longitudinal fixed-effects model to estimate the effect of varying exposure times to PCIP (i.e. time since going live on the EHR) on quality while controlling for the specific measures reported by physicians. By including physician fixed-effects, we account for all time-invariant factors at the physician level (e.g., physician skill, motivation to improve quality, and patient mix), allowing us to test whether exposure to PCIP improves quality “within” physicians, over time. By controlling for the quality measures reported for each physician, our estimates are invariant to the specific measures reported by physicians.

Physicians in PCIP did not all start the program at the same time. Instead, there was rolling admission to PCIP for the entire study period. Consequently, at the end of any given calendar year (the interval for which quality measures are calculated), exposure to PCIP varied across the cohort of PCIP physicians. To address this, we estimated the continuous, nonlinear effect of each additional month of exposure to PCIP on quality while controlling for secular trends in quality for each study year. Using the model coefficients, we estimated the effects of 6 months, 12 months, 18 months, and 24 months of exposure to PCIP on quality of care.

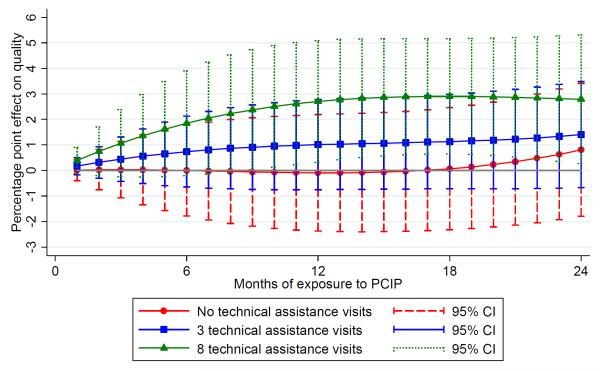

We extend our base specification by allowing the effect of PCIP to vary depending on the number of technical assistance visits that a physician received. This is performed by including in our model a non-linear interaction between the cumulative number of technical assistance visits that a physician received in a given year and the number of months the physician was exposed to PCIP. We then estimated the effect of 6 months, 12 months, 18 months, and 24 months of exposure to PCIP for physicians associated with practices that received zero technical assistance visits, those who received three technical assistance visits (the median), and those who received eight technical assistance visits (the 90th percentile).
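One way to write down a model consistent with this description is sketched below. It is an illustrative form only, not the estimated specification: every symbol is an assumption introduced here, and the functional forms of $f$ and $g$ are left unspecified because the text describes them only as nonlinear.

$$
Y_{ijt} = \alpha_i + \gamma_j + \delta_t + f(M_{it}) + \varepsilon_{ijt}
$$

$$
Y_{ijt} = \alpha_i + \gamma_j + \delta_t + f(M_{it}) + g(M_{it}, V_{it}) + \varepsilon_{ijt}
$$

Here $Y_{ijt}$ is the score of physician $i$ on measure $j$ in year $t$, $\alpha_i$ is a physician fixed effect, $\gamma_j$ is an indicator for the measure, $\delta_t$ captures the secular trend in year $t$, $M_{it}$ is months of exposure to PCIP at the end of year $t$ (zero for comparison physicians and for PCIP physicians before go-live), and $V_{it}$ is the cumulative number of technical assistance visits. The first equation corresponds to the base specification and the second to the extension with the exposure-by-visits interaction. Under this notation, the reported effect of $m$ months of exposure with $v$ visits would be $f(m) + g(m, v)$, measured relative to no exposure and no visits.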

We also identified quality measures for which previous research has shown a relationship between EHR implementation and quality,(7) and evaluated the effect of EHR implementation only for these “EHR sensitive” measures (breast cancer screening for women, retinal exam for diabetics, urine testing for diabetics, chlamydia screening for women, and colorectal screening). Appendix A provides details on the model estimation and shows the results from several sensitivity checks.

Limitations

We were only able to evaluate the effect of PCIP among the subset of PCIP physicians that matched to the New York Quality Alliance database and met the other study eligibility criteria: our analytic sample included 360 PCIP physicians out of a total of 1,151 small practice physicians in PCIP, 31.3% of participating physicians. PCIP physicians that did not match to the measures file may have been in practices with fewer patients overall, or had fewer patients who were members of health plans contributing to the measures dataset, and therefore did not meet the 30 patient threshold required for any measure to be included in the measures dataset. The inclusion of a greater number of PCIP physicians in our analysis would have allowed for greater statistical power to detect program effects and would have given greater confidence that the results were representative of the experience of all small practice physicians in PCIP, although additional analysis suggests that matched and non-matched PCIP physicians were similar (Appendix A).

We also lacked information on physicians’ payer mix, which would have allowed for a better match between PCIP and comparison physicians. Furthermore, we were limited to evaluating the effect of PCIP for the relatively small set of available measures. PCIP’s technical assistance emphasized improving the quality of care for adult patients with cardiovascular issues, and therefore would not be expected to have uniform effects across the study measures. Our analysis showed some sensitivity of the results to the measures included in the analysis; evaluating the effect of PCIP for a different set of measures may have led to different conclusions. Data used in these analyses were based on paid claims for patients assigned to providers. The difference in quality measurement using a claims-based data source may result in different patterns of quality trends for providers than data derived directly from the practice.(27)

Physicians in PCIP voluntarily joined the program, and the receipt of technical assistance was also voluntary, both of which potentially signal a greater motivation to improve practice processes and quality of care. It is therefore possible that PCIP physicians were, in ways we could not measure, more likely to improve quality than the physicians to whom they were compared. Sensitivity results using all comparison physicians, rather than just the matched sample, found a pattern of similar results, albeit with stronger evidence of the effect of PCIP exposure on quality (Appendix A). However, additional analysis – showing similar trends in quality for PCIP physicians before they joined the program and matched comparison physicians – indicates that the matched comparison physicians appear to be a more appropriate comparison group.

Finally, New York State has made major investments in health information technology through the HEAL New York Phase 10 program. As a result, some of the physicians included in the study who were not part of PCIP may have received support for implementing EHRs that was similar to the support received by physicians who participated in PCIP. Evaluating PCIP against a benchmark of physicians participating in similar programs would likely bias estimated program effects towards the null. Unfortunately, information on whether non-PCIP providers were involved in other implementation projects was not available in this study.

Results

Exhibit 1 shows that, compared to the population of comparison physicians, PCIP physicians were more likely to be located in New York City (100% versus 43.7%), to practice in areas with a higher proportion of residents living in poverty (20.1% versus 13.6%), and to work in smaller practices (2.1 physicians versus 5.4 physicians). They were somewhat less likely to have a specialty in family medicine (15.8% versus 21.4%) but somewhat more likely to have a specialty in pediatric medicine (19.2% versus 16.4%), and were much less likely to have a corporate parent (6.7% versus 26.0%). However, in the matched sample, PCIP and comparison physicians share similar characteristics, with region of practice being the only significant difference between the groups.

Exhibit 2 shows the quality scores and the number of physicians with reported quality for each quality measure for 2007 and 2010. Quality of care was highest for appropriate treatment for children with upper respiratory infection (mean score 91.4 in 2007) and lowest for chlamydia screening for women (mean score 42.4 in 2007). Between 2007 and 2010, the cervical cancer screening for women measure had the most physician-level observations (1,933), while the chlamydia screening for women measure had the fewest (108).

Exhibit 3 shows the results from the models estimating the effect of PCIP on quality of care. The column to the left indicates exposure to PCIP (6 months, 12 months, 18 months, and 24 months) corresponding with each estimate. Estimates are shown separately for all quality measures and for the EHR sensitive measures and are shown overall, and for three levels of technical assistance visits (zero visits, three visits, and eight visits). Estimates are interpreted as the percentage point change in quality of care associated with a given period of exposure to PCIP and a given number of technical assistance visits, compared to no exposure to PCIP and no technical assistance. All estimates control for the specific measures reported by PCIP and comparison physicians.

EXHIBIT 3.

Estimates of the effect of PCIP on quality for 6, 12, 18, and 24 months of exposure to PCIP across levels of technical assistance

| Exposure period | All quality measures, overall | All quality measures, no technical assistance visits | All quality measures, 3 visits | All quality measures, 8 visits | EHR sensitive quality measures, overall | EHR sensitive quality measures, no technical assistance visits | EHR sensitive quality measures, 3 visits | EHR sensitive quality measures, 8 visits |
|---|---|---|---|---|---|---|---|---|
| 6 months | −0.24 (0.53) | −0.30 (0.77) | −0.33 (0.54) | 0.04 (0.77) | 0.73 (0.66) | 0.00 (0.90) | 0.74 (0.71) | 1.85 (1.05) |
| 12 months | −0.25 (0.72) | −0.65 (1.01) | −0.40 (0.73) | 0.25 (0.91) | 1.24 (0.88) | −0.09 (1.16) | 1.01 (0.90) | 2.70* (1.22) |
| 18 months | −0.02 (0.81) | −0.86 (1.05) | −0.23 (0.82) | 0.61 (0.90) | 1.71 (0.95) | 0.07 (1.22) | 1.13 (0.94) | 2.90* (1.15) |
| 24 months | 0.42 (0.91) | −0.74 (1.15) | 0.14 (0.94) | 1.10 (1.07) | 2.31* (1.05) | 0.81 (1.33) | 1.41 (1.06) | 2.79* (1.29) |
| Observations | 7,622 | | | | 3,589 | | | |
| Number of total physicians | 720 | | | | 516 | | | |
| Number of PCIP physicians | 360 | | | | 258 | | | |

SOURCE: Authors’ analysis

Note 1 (*): p<.05

Note 2: 3 cumulative technical assistance visits is the median number of visits and 8 cumulative visits is the 90th percentile among practices that went live during the study period.

Note 3: Effects are interpreted as the incremental percentage point change in quality of care for a given exposure to PCIP

Note 4: Standard errors robust to groupwise heteroskedasticity at the level of the match shown in ()

Note 5: EHR sensitive measures include breast cancer screening for women, retinal exam for diabetics, urine testing for diabetics, chlamydia screening for women, and colorectal screening

Exhibit 3 shows that, for all quality measures, PCIP was not significantly associated with improvement in quality of care at 6 months (−0.24 percentage points, p > .10), 12 months (−0.25 percentage points, p > .10), 18 months (−0.02 percentage points, p > .10), or 24 months of exposure (+0.42 percentage points, p > .10). In addition, although effects were positive for practices receiving 8 technical assistance visits, no level of exposure to PCIP was significantly associated with an increase in performance for all quality measures for any level of technical assistance.

Exhibit 3 also shows results for EHR sensitive measures. While lower exposure periods to PCIP were not associated with quality improvements for EHR sensitive measures, 24 months of exposure to PCIP was significantly associated with quality improvement (+2.31 percentage points, p < .05). This effect was driven by physicians in practices that received extensive technical assistance: while 24 months of program exposure was not associated with quality improvement for physicians in practices that received zero technical assistance visits (+0.81 percentage points, p > .10) or three technical assistance visits (+1.41 percentage points, p > .10), 24 months of exposure was significantly associated with quality improvement for physicians in practices that received eight technical assistance visits (+2.79 percentage points, p < .05).
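The significance statements above can be checked roughly against the estimates and standard errors in Exhibit 3. The sketch below uses a two-sided test under a normal approximation, which is an assumption made here for illustration; the test actually applied to the cluster-robust standard errors may differ, so the p-values are approximate.

```python
import math


def two_sided_p(estimate, std_error):
    """Approximate two-sided p-value for estimate / std_error under a
    standard normal reference distribution (an assumption of this sketch)."""
    z = estimate / std_error
    return math.erfc(abs(z) / math.sqrt(2))


# 24-month estimates for EHR sensitive measures, copied from Exhibit 3.
ehr_sensitive_24_months = {
    "overall": (2.31, 1.05),
    "no visits": (0.81, 1.33),
    "3 visits": (1.41, 1.06),
    "8 visits": (2.79, 1.29),
}

p_values = {
    label: two_sided_p(est, se)
    for label, (est, se) in ehr_sensitive_24_months.items()
}

for label, p in p_values.items():
    print(f"{label}: p = {p:.3f}")
```

Under this approximation, the overall and eight-visit estimates fall below .05 and the zero- and three-visit estimates do not, which agrees with the asterisks in Exhibit 3.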

Exhibit 4 shows monthly estimates of the effect of exposure to PCIP on quality for the EHR sensitive measures, derived from the estimated models. Exposure to PCIP was not associated with quality improvement for physicians in practices receiving zero or three technical assistance visits. However, for those receiving at least eight technical assistance visits, 9 through 24 months of exposure to PCIP was significantly associated with greater quality improvement (p < .05).

EXHIBIT 4.

Estimates of the effect of exposure to the Primary Care Information Project on quality across levels of technical assistance for EHR sensitive measures

SOURCE: Authors’ analysis

Note 1: EHR sensitive measures include breast cancer screening for women, retinal exam for diabetics, urine testing for diabetics, chlamydia screening for women, and colorectal screening

Discussion

This study used a unique dataset based on medical claims from multiple payers in New York State to evaluate the effect of the Primary Care Information Project, a large electronic health record implementation and technical assistance program aimed at small primary care practices serving disadvantaged populations, on ten commonly used measures of outpatient quality of care. Within the first two years of the program, use of an EHR was not associated with an overall improvement in quality, even among physicians who received high levels of technical assistance from PCIP. However, for a limited set of quality measures that previous research has shown to be sensitive to EHR implementation, we found that use of the EHR was associated with significantly higher quality of care for physicians who received high levels of technical assistance (eight or more visits) and who had been using the EHR for at least nine months. Exposure to PCIP was not associated with quality improvement for physicians who received low or moderate levels of technical assistance. These findings suggest that the early effects of a major electronic health record implementation project, as measured by claims-based measures, were limited to a subset of quality measures, and limited to physicians in practices receiving extensive technical assistance from the program.

Our results are consistent with other research finding that EHRs alone do not consistently improve quality of care.(8) However, ours is one of the few studies evaluating the effect of EHR implementation on quality of care in a community, outpatient setting: most studies have been single institution studies, many of which were conducted within institutions recognized as health IT leaders.(5, 6, 13) Our study focuses on physicians who are a key target for regional extension centers – physicians in small practices who serve primarily disadvantaged patients.

Our results support three main conclusions. First, EHR implementation through the PCIP program was associated with an improvement in quality for a subset of quality measures that previous research has shown to be sensitive to EHR implementation (breast cancer screening for women, retinal exam for diabetics, urine testing for diabetics, chlamydia screening for women, and colorectal screening). EHR implementation and technical assistance, in the short term, may not help physicians improve quality across all clinical parameters.

Second, EHR implementation alone was not sufficient to improve quality of care. Only those physicians who received high levels of technical assistance concomitant with EHR implementation improved quality. Even relatively long periods of EHR use – up to two years – were not associated with quality improvement for physicians who received no technical assistance, or moderate levels of technical assistance. For the quality measures we assessed, this finding stands in contrast to widespread physician perceptions that EHRs improve quality of care, (28) and suggests that Regional Extension Centers have an important role to play in facilitating the use of EHRs to improve quality of care.

Third, even with high levels of technical assistance, it took close to one year of exposure to PCIP for effects on quality of care to be observed. This suggests that there is a learning curve for using the EHR effectively to improve quality, and that it is important for future studies to appropriately capture the effect of EHRs on quality by evaluating medium to longer term outcomes. EHR implementation is disruptive to physician practices; it is possible that it takes longer than two years for improvements in quality to appear.

Conclusion

Our study suggests that small primary care practices serving disadvantaged populations can use EHRs to improve the quality of care they provide. However, improvements were small, were only for a limited number of measures, and occurred after at least nine months of using the EHR in practices that received eight or more technical assistance visits. These findings suggest that small practices in disadvantaged areas may need considerable help to use EHRs to improve quality, and lend some support to the Regional Extension Center model of assisting practices in using EHRs.(29, 30) However, it should be noted that the practices in this study received assistance from PCIP, an experienced and well-funded organization that also provided funding to help practices obtain appropriate software. It will be important to compare the effectiveness of different Regional Extension Centers to each other, and to evaluate these effects over the long term.

Acknowledgements

The authors acknowledge Colleen McCullough and Samantha Deleon, of the New York City Department of Health and Mental Hygiene, for preparing data on the Primary Care Information Project for the study and Amanda Parsons, Jesse Singer, Mandy Smith Ryan, and Jason Wang, of the same department, for providing comments on the manuscript.

Funding. This research was funded by the Robert Wood Johnson Foundation under the solicitation “Improving Quality and Value in Health Care: Ideas from the Field.” Dr. Ryan is supported by an Agency for Healthcare Research and Quality Career Development Award (K01 HS018546). Dr. Bishop is also supported by a National Institute On Aging Career Development Award (K23AG043499) and as a Nanette Laitman Clinical Scholar in Public Health at Weill Cornell Medical College. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Endnotes

- 1.Decker SL, Jamoom EW, Sisk JE. Physicians In Nonprimary Care And Small Practices And Those Age 55 And Older Lag In Adopting Electronic Health Record Systems. Health affairs. 2012 Apr 25; doi: 10.1377/hlthaff.2011.1121. PubMed PMID: 22535502. Epub 2012/04/27. Eng. [DOI] [PubMed] [Google Scholar]

- 2.Hing E, Burt CW. Office-based medical practices: methods and estimates from the national ambulatory medical care survey. Advance data. 2007 Mar;12(383):1–15. PubMed PMID: 17370700. Epub 2007/03/21. eng. [PubMed] [Google Scholar]

- 3.Hing E, Burt CW. Are there patient disparities when electronic health records are adopted? Journal of health care for the poor and underserved. 2009 May;20(2):473–88. doi: 10.1353/hpu.0.0143. PubMed PMID: 19395843. Epub 2009/04/28. eng. [DOI] [PubMed] [Google Scholar]

- 4.Department of Health and Human Services . Health Information Technology Extension Program: Facts-At-A-Glance. Department of Health and Human Services; Washington, D.C.: [cited 2012 11/02/2012]. Available from: http://www.hhs.gov/recovery/programs/hitech/factsheet.html. [Google Scholar]

- 5. Chaudhry B, Wang J, Wu S, Maglione M, Mojica W, Roth E, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Annals of internal medicine. 2006 May 16;144(10):742–52. doi: 10.7326/0003-4819-144-10-200605160-00125. PubMed PMID: 16702590. Epub 2006/05/17. eng.

- 6. Goldzweig CL, Towfigh A, Maglione M, Shekelle PG. Costs and benefits of health information technology: new trends from the literature. Health affairs. 2009 Mar-Apr;28(2):w282–93. doi: 10.1377/hlthaff.28.2.w282. PubMed PMID: 19174390. Epub 2009/01/29. eng.

- 7. Baron RJ. Quality improvement with an electronic health record: achievable, but not automatic. Annals of internal medicine. 2007 Oct 16;147(8):549–52. doi: 10.7326/0003-4819-147-8-200710160-00007. PubMed PMID: 17938393. Epub 2007/10/17. eng.

- 8. DesRoches CM, Campbell EG, Vogeli C, Zheng J, Rao SR, Shields AE, et al. Electronic health records’ limited successes suggest more targeted uses. Health affairs. 2010 Apr;29(4):639–46. doi: 10.1377/hlthaff.2009.1086. PubMed PMID: 20368593. Epub 2010/04/07. eng.

- 9. Friedberg MW, Coltin KL, Safran DG, Dresser M, Zaslavsky AM, Schneider EC. Associations between structural capabilities of primary care practices and performance on selected quality measures. Annals of internal medicine. 2009 Oct 6;151(7):456–63. doi: 10.7326/0003-4819-151-7-200910060-00006. PubMed PMID: 19805769. Epub 2009/10/07. eng.

- 10. Keyhani S, Hebert PL, Ross JS, Federman A, Zhu CW, Siu AL. Electronic health record components and the quality of care. Medical care. 2008 Dec;46(12):1267–72. doi: 10.1097/MLR.0b013e31817e18ae. PubMed PMID: 19300317. Epub 2009/03/21. eng.

- 11. Kinn JW, Marek JC, O’Toole MF, Rowley SM, Bufalino VJ. Effectiveness of the electronic medical record in improving the management of hypertension. Journal of clinical hypertension. 2002 Nov-Dec;4(6):415–9. doi: 10.1111/j.1524-6175.2002.01248.x. PubMed PMID: 12461305. Epub 2002/12/04. eng.

- 12. Linder JA, Ma J, Bates DW, Middleton B, Stafford RS. Electronic health record use and the quality of ambulatory care in the United States. Archives of internal medicine. 2007 Jul 9;167(13):1400–5. doi: 10.1001/archinte.167.13.1400. PubMed PMID: 17620534. Epub 2007/07/11. eng.

- 13. Buntin MB, Burke MF, Hoaglin MC, Blumenthal D. The benefits of health information technology: a review of the recent literature shows predominantly positive results. Health affairs. 2011 Mar;30(3):464–71. doi: 10.1377/hlthaff.2011.0178. PubMed PMID: 21383365. Epub 2011/03/09. eng.

- 14. Torda P, Han ES, Scholle SH. Easing the adoption and use of electronic health records in small practices. Health affairs. 2010 Apr;29(4):668–75. doi: 10.1377/hlthaff.2010.0188. PubMed PMID: 20368597. Epub 2010/04/07. eng.

- 15. Grumbach K, Mold JW. A health care cooperative extension service: transforming primary care and community health. JAMA : the journal of the American Medical Association. 2009 Jun 24;301(24):2589–91. doi: 10.1001/jama.2009.923. PubMed PMID: 19549977. Epub 2009/06/25. eng.

- 16. Aspy CB, Enright M, Halstead L, Mold JW, Oklahoma Physicians Resource/Research N. Improving mammography screening using best practices and practice enhancement assistants: an Oklahoma Physicians Resource/Research Network (OKPRN) study. Journal of the American Board of Family Medicine : JABFM. 2008 Jul-Aug;21(4):326–33. doi: 10.3122/jabfm.2008.04.070060. PubMed PMID: 18612059. Epub 2008/07/10. eng.

- 17. Newton WP, Lefebvre A, Donahue KE, Bacon T, Dobson A. Infrastructure for large-scale quality-improvement projects: early lessons from North Carolina Improving Performance in Practice. The Journal of continuing education in the health professions. 2010 Spring;30(2):106–13. doi: 10.1002/chp.20066. PubMed PMID: 20564712. Epub 2010/06/22. eng.

- 18. Fontaine P, Zink T, Boyle RG, Kralewski J. Health information exchange: participation by Minnesota primary care practices. Archives of internal medicine. 2010 Apr 12;170(7):622–9. doi: 10.1001/archinternmed.2010.54. PubMed PMID: 20386006. Epub 2010/04/14. eng.

- 19. Bodenheimer T, Grumbach K, Berenson RA. A lifeline for primary care. The New England journal of medicine. 2009 Jun 25;360(26):2693–6. doi: 10.1056/NEJMp0902909. PubMed PMID: 19553643. Epub 2009/06/26. eng.

- 20. Kaufman A, Powell W, Alfero C, Pacheco M, Silverblatt H, Anastasoff J, et al. Health extension in New Mexico: an academic health center and the social determinants of disease. Annals of family medicine. 2010 Jan-Feb;8(1):73–81. doi: 10.1370/afm.1077. PubMed PMID: 20065282. Pubmed Central PMCID: 2807392. Epub 2010/01/13. eng.

- 21. Mostashari F, Tripathi M, Kendall M. A tale of two large community electronic health record extension projects. Health affairs. 2009 Mar-Apr;28(2):345–56. doi: 10.1377/hlthaff.28.2.345. PubMed PMID: 19275989. Epub 2009/03/12. eng.

- 22. New York City Department of Health and Mental Hygiene. The Primary Care Information Project. New York City Department of Health and Mental Hygiene; New York City: [cited 2010 July 6, 2010]. Available from: http://www.nyc.gov/html/doh/html/pcip/pcip-summary.shtml.

- 23. Cohen L, Desai E, Guerrero Z, Havusha A, Sliger F, Frieden TR. Take Care New York: Fourth Year Progress Report. New York City Department of Health and Mental Hygiene; New York City: 2008.

- 24. New York City Department of Health and Mental Hygiene. Primary Care Information Project Bulletin. New York City Department of Health and Mental Hygiene; New York City: [cited 2010 July 6, 2010]. 2010. Available from: http://www.nyc.gov/html/doh/downloads/pdf/pcip/march-2010-pcip-newsletter.pdf.

- 25. Participating plans included Aetna AHP, CDPHP, CIGNA, Elderplan, Group Health Incorporated HMO, Health Net, Health Now NY, HIP of New York, Hudson Health Plan, Independent Health, MVP Health Care, and Oxford Health Plans.

- 26. New York Quality Alliance. [cited 2010 July 6, 2010]. Available from: http://www.nyqa.org/

- 27. Tang PC, Ralston M, Arrigotti MF, Qureshi L, Graham J. Comparison of methodologies for calculating quality measures based on administrative data versus clinical data from an electronic health record system: implications for performance measures. Journal of the American Medical Informatics Association : JAMIA. 2007 Jan-Feb;14(1):10–5. doi: 10.1197/jamia.M2198. PubMed PMID: 17068349. Pubmed Central PMCID: 2215069. Epub 2006/10/28. eng.

- 28. Jamoom EW, Beatty P, Bercovitz A, Woodwell D, Palso K, Rechtsteiner E. Physician adoption of electronic health record systems: United States, 2011. National Center for Health Statistics; Hyattsville, MD: 2012.

- 29. De Leon SF, Shih SC. Tracking the delivery of prevention-oriented care among primary care providers who have adopted electronic health records. Journal of the American Medical Informatics Association : JAMIA. 2011 Dec;18(Suppl 1):i91–5. doi: 10.1136/amiajnl-2011-000219. PubMed PMID: 21856688. Pubmed Central PMCID: 3241164. Epub 2011/08/23. eng.

- 30. Shih SC, McCullough CM, Wang JJ, Singer J, Parsons AS. Health information systems in small practices. Improving the delivery of clinical preventive services. American journal of preventive medicine. 2011 Dec;41(6):603–9. doi: 10.1016/j.amepre.2011.07.024. PubMed PMID: 22099237. Epub 2011/11/22. eng.
