Abstract

The ability of today's robots to autonomously support humans in their daily activities is still limited. To improve this, predictive human-machine interfaces (HMIs) can be applied to better support future interaction between human and machine. To infer upcoming context-based behavior, relevant brain states of the human have to be detected. This is achieved by brain reading (BR), a passive approach for single trial EEG analysis that makes use of supervised machine learning (ML) methods. In this work we propose that BR is able to detect concrete states of the interacting human. To support this, we show that BR detects patterns in the electroencephalogram (EEG) that can be related to event-related activity in the EEG like the P300, which are indicators of concrete states or brain processes like target recognition processes. Further, we improve the robustness and applicability of BR in application-oriented scenarios by identifying and combining the most relevant training data for single trial classification and by applying classifier transfer. We show that training and testing, i.e., application of the classifier, can be carried out on different classes if the samples of both classes lack a relevant pattern. Classifier transfer is important for the usage of BR in application scenarios where only a small number of training examples is available. Finally, we demonstrate a dual BR application in an experimental setup that requires behavior similar to that performed during the teleoperation of a robotic arm. Here, target recognition processes and movement preparation processes are detected simultaneously. In summary, our findings contribute to the development of robust and stable predictive HMIs that enable the simultaneous support of different interaction behaviors.

Introduction

During the last decades different approaches were developed to support humans in their daily life and working environment or to restore sensory and motor functions with the help of intelligent and autonomous robotic systems that behave situationally and support humans according to the context [1]–[5]. However, autonomous systems do not yet come close to the cognitive capabilities of humans regarding their ability to react mostly correctly and appropriately to a new situation. Therefore, the application of robotic systems is to some degree restricted to certain situations and environments.

Some approaches solve restrictions of autonomous robotic behavior by using human-machine interfaces (HMIs). HMIs, for example, explicitly send commands to robots when autonomous behavior cannot handle a given situation, as shown by, e.g., Kaupp et al. [2] for teleoperation. However, explicitly sending a control command places increased cognitive demands on the interacting human. Between humans, implicit information is transferred besides explicit information during interaction. The interacting persons can use it to infer the general state of each other, like the emotional state, involvement in the interaction or communication, or the mental load. This implicit information serves to adapt behavior to interact better, e.g., more efficiently. Thus, a promising approach for improving the behavior of autonomous artificial systems is to adapt them with respect to the state of the interacting human. Such adaptation of technical systems is in a more general sense also known as biocybernetic adaptation [6]. It is usually used to, e.g., change the functionality of a system regarding fatigue or frustration levels of a user and can enable better control over complex systems [7]. For this aim (psycho-)physiological data from the user like galvanic skin response, blood pressure, gesture, eye gaze, facial expression, prosody, brain activity or combinations of those are applied [5], [6], [8], [9].

Establishing and Supporting Interaction by Brain-Computer Interfaces

The human EEG has been used for several decades to develop brain-computer interfaces (BCIs) with the goal of (re-)establishing explicit interaction and communication [10]–[15]. For this purpose, active and reactive BCIs enable the user to control a computer or machine via the nervous system and can replace classical HMIs for the explicit control of devices like keyboard, mouse or joystick. They were mainly developed to open up new ways of communication for disabled persons [10], [11], [16], for example, to control a speller by imagining hand movements [17]. Recently, active and reactive BCIs have also been used by healthy people [18], e.g., in BCI controlled computer games [19], [20]. Active and reactive BCIs have some main drawbacks in their application: The user has to concentrate on the task of controlling the device via his or her brain activity, hence the application of such BCIs typically requires a high amount of cognitive resources from the user. However, training can improve, even automate, the control of such BCIs and thus reduce the effort. Further, due to the direct link between brain and machine, misclassifications of the brain signals always have an impact on the application and can lead to faulty behavior [21] or inaccuracies. There are, however, promising approaches that attempt to automatically correct misclassifications in active BCIs. For example, [22], [23] have shown that misclassifications of brain activity can be compensated by autonomous interpretation of the situation by the cooperating robotic system.

To extend the usage of EEG activity for physiological computing [6], passive or implicit BCIs were developed [24], [25]. They have their roots in several earlier approaches that focus on user-state detection [25], [26]. For example, in [25] the detection of error potentials is used to correct errors that happen during a rotation task. The task is performed with an active human-computer interface (HCI) that is manipulated such that execution errors are introduced randomly. Since users of passive or implicit BCIs do not actively influence their brain activity, i.e., do not explicitly control a device by brain activity and do not actively produce brain activity, these BCIs seem to be an appropriate tool to improve human-machine interaction by implicitly gained information about the human's brain state. It was further proposed that passive BCIs can be integrated into more complex and natural control systems, like emergency braking assistance in cars, to improve their functionality. Haufe et al. 2011 [27] discuss that an emergency braking assistance system could be modified by predicting upcoming braking behavior based on EEG analysis. The given examples furthermore show that, compared to active or reactive BCIs, passive BCIs seem to be even more easily applicable in hybrid HMI or BCI approaches [28], [29], where at least two different kinds of BCIs or an HMI and a BCI as in [25] are combined.

Embedded Brain Reading in Robotic Applications

Our approach to improve interaction in robotic application scenarios was to implement embedded brain reading (eBR) [30]. It allows to integrate implicitly gained information about the human from his brain's activity into the control of HMIs to automatically adapt them for a better support of future interaction behavior. Since such HMIs are adapted by eBR with respect to inferred upcoming interaction behavior we call the resulting HMIs predictive HMIs. Since we make use of implicit information, our approach is similar to the approach of passive BCI, however we focus on applications in which upcoming interaction behavior can be supported instead of, e.g., correcting former false behavior. In eBR the detection of specific brain patterns by means of machine learning (ML) methods and the process of relating them to specific states of the user, e.g., his intentional state, is called brain reading (BR). BR was introduced as a method to gain information about hidden processes and states of the brain, i.e., the function of the mind [31]. BR can even be applied to detect different conscious states of the human, i.e., in his conscious perception [32]. However, more functional questions like the decoding of visual, auditory, perceptual or cognitive patterns are addressed as well [33]–[37]. For our purpose, we define BR as the passive decoding of brain activity, i.e., detection of certain brain patterns that are related to specific functional, cognitive or intentional (but not necessarily conscious) processes, which are evoked by internal or external events during human-machine interaction. BR takes place unnoticed by the user and requires no extra attentional or cognitive resources of the user it is applied to.

The application of eBR to adapt HMIs and the tasks of BR can be explained on the example of a robotic telecontrol scenario (see Fig. 1), where two HMIs are implemented for human-machine interaction. During teleoperation the operator has to understand information about the general situation or possible hazards, e.g., a person entering the operating area of the robot, a malfunction of the exoskeleton or robot, or requests for communication from outside, such as a second task. It is known that under such conditions of high workload attention to a second task can be impaired [7], [38]. This impairment can lead to failure in one of the tasks, most likely the subjectively less important one. Since manipulation of the exoskeleton requires a very high amount of the user's cognitive resources, it is very likely that he misses important information. It is well known that the event-related potential (ERP) P300 is evoked whenever the brain detects information that appears infrequently in the user's subjective perception. Several sub-components of the positive P300 are known, i.e., novelty P3, P3a and P3b [39]–[41]. The P3b component is evoked by infrequent task relevant stimuli and is therefore not only an indicator for attentional, but also for early cognitive processes, i.e., when target evaluation and recognition takes place [41]–[44]. The amplitude of the P300 does not only depend on the subjective impression of the frequency of occurrence of stimuli but also on the importance of a presented stimulus and whether a subject devotes high amounts of effort to the task [38]. A reduction of the amplitude of P300 can be found in case of ambiguous stimuli for which relevance and importance might not be clear. In case that a subject misses an important stimulus it is expected that no P300 is expressed [45]. 
Since in the teleoperation scenario a dual-task (controlling the robot by the exoskeleton and responding to important information) is performed, it can further be assumed that besides brain activity related to target recognition processes also other partly overlapping ERP components related to the retrieval of intended action from long-term memory, post-retrieval monitoring, and task coordination processes will be evoked by target stimuli and accompanied by further ERPs like the prospective positivity [46]–[48].

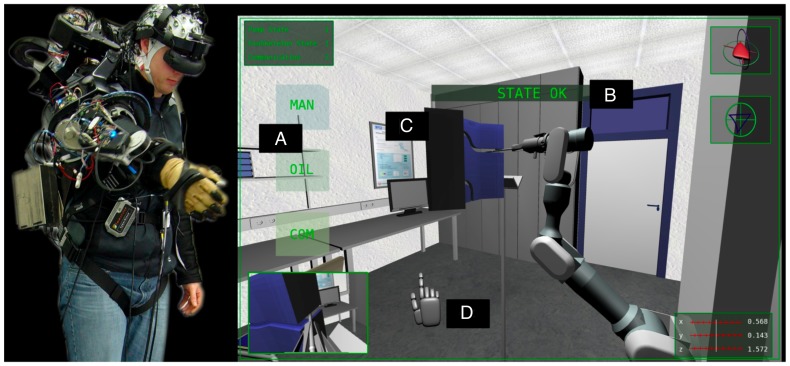

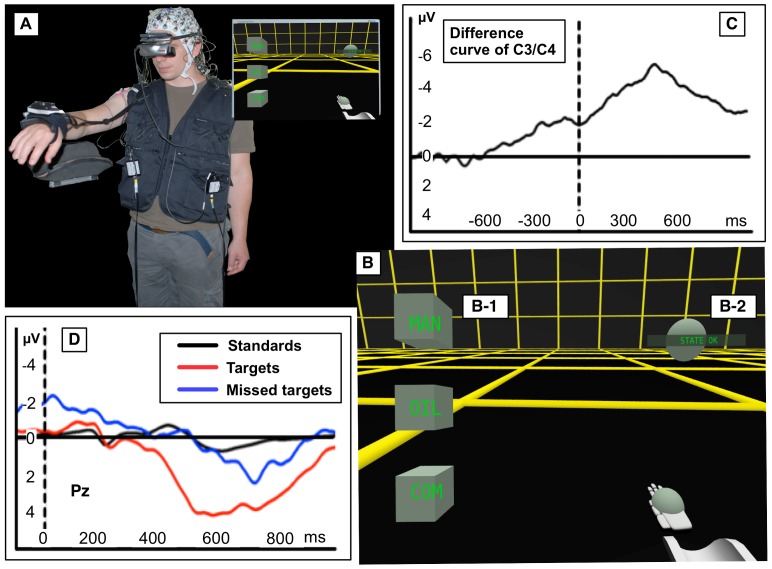

Figure 1. Experimental setup for the teleoperation scenario – a holistic feedback control of semi-autonomous robots.

In the teleoperation scenario an operator is wearing an exoskeleton and, with the support of a virtual scenario, is tele-manipulating a robotic arm. A: three kinds of virtual response cubes (different responses are required for different types of warnings); B: different kinds of stimuli: unimportant stimulus (STATE OK – no response required), warning (first target – response required), repeated and enhanced warning (second target – response required), third warning (response is critical, e.g., exoskeleton control is disabled); C: labyrinth that the robot has to be moved through; D: virtual hand.

Hence, in the teleoperation scenario we used ERP activity, i.e., positive parietal ERP activity, mainly the P300. Instead of having a second person to assist the operator we adapted the implemented operator monitoring system (OMS) by eBR to better assist the operator under both conditions, i.e., if she/he recognized an important warning or did not recognize it. The task of BR was to detect different brain patterns, i.e., patterns that were evoked by the recognition of important stimuli (that contain a P300) and patterns that were evoked by important stimuli that were not recognized, i.e., missed (containing no P300). This information was then used to infer whether or not the operator would respond and to adapt the repetition time for warnings appropriately by eBR. For example, if eBR infers that the operator will respond (in case BR detected brain patterns related to the recognition of important stimuli) the tolerated response time is extended. On the other hand, if eBR infers that the operator will not respond (in case BR did not detect brain patterns related to the recognition of important stimuli) the allowed response time is reduced or the important information is repeated immediately (see Video S1 and Fig. 2). Experiments conducted so far support our approach [49]. Subjects reported that an adapted OMS can reduce stress by avoiding to force fast responses and emphasizes important information by repeating them at a higher frequency in case the subject was distracted.

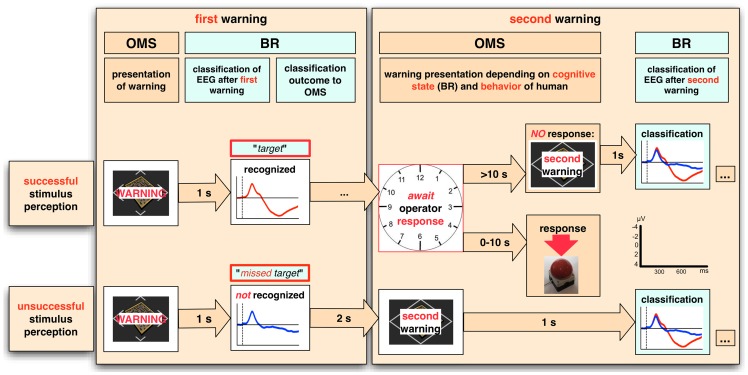

Figure 2. Adaptation of an operator monitoring system by BR.

The currently implemented message scheduling procedure which is controlled by the operator monitoring system (OMS) is shown. The OMS considers the cognitive state that is detected by BR and allows to infer the behavior of the human. The general procedure is described in the following: After a warning the operator's EEG is analyzed by BR. Detection of successes versus no success in the recognition of important information by BR allows to infer future behavior (response or no response) by eBR. As a consequence, the behavior of the OMS is adapted, i.e., the tolerated response time is extended or a second warning is presented right away by the OMS. In case the operator does not respond to the second warning, a third warning follows. Approximate time required for predictions made by BR and predefined response times are given in the arrows.
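The scheduling logic described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' OMS implementation: the names `OmsAction`, `adapt_oms` and `escalate` as well as both timing constants are hypothetical, since the actual response times are given only in Fig. 2.

```python
from dataclasses import dataclass

# Hypothetical timing values (assumptions); the real ones appear only in Fig. 2.
EXTENDED_RESPONSE_MS = 5000
IMMEDIATE_REPEAT_MS = 0


@dataclass(frozen=True)
class OmsAction:
    name: str     # what the OMS does next
    wait_ms: int  # how long it waits before presenting the next warning


def adapt_oms(recognition_detected: bool) -> OmsAction:
    """Map the BR result for a first warning to an OMS action."""
    if recognition_detected:
        # eBR infers that the operator will respond:
        # the tolerated response time is extended.
        return OmsAction("extend_response_time", EXTENDED_RESPONSE_MS)
    # eBR infers that the operator will not respond:
    # the second warning is presented right away.
    return OmsAction("repeat_warning", IMMEDIATE_REPEAT_MS)


def escalate(warning_level: int, responded: bool) -> int:
    """Return the next warning level (1 to 3); 0 means the warning is resolved."""
    if responded:
        return 0
    return min(warning_level + 1, 3)
```

For example, `adapt_oms(False)` selects the immediate repetition of the warning, and `escalate(2, False)` moves from the second to the third warning.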

A central part of the teleoperation scenario (see Fig. 1) is an exoskeleton developed by our group [50] to intuitively control different robotic arms or legs [51], [52]. The exoskeleton used for teleoperation serves both as a control device for a semi-autonomous robot and as an interface for the control of a virtual scenario (for visualization of the scenario see Video S2). The exoskeleton has two operating modes: (i) a position control mode (PC) where the exoskeleton supports the user, i.e., by allowing him to rest, and (ii) a free run mode (FM) where the operator can move freely and control the virtual scenario (see Fig. 3, adapted from [52]). The switch between these two modes is of particular interest for an adaptation by eBR, and such an adaptation has been applied successfully [53]. During rest the applied control mechanism of the exoskeleton cannot make predictions about upcoming behavior as it can during interaction [54]. To improve interaction it is relevant to know whether the operator wants to move again.

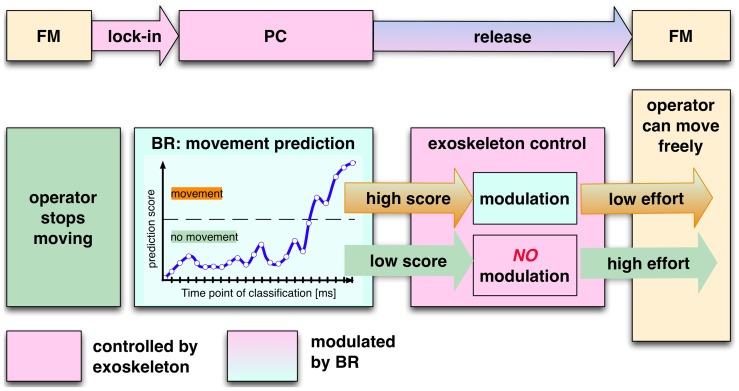

Figure 3. Adaptation of the exoskeleton's control by BR.

It is shown how BR adapts the exoskeleton's control. The exoskeleton supports the user while moving (free mode: FM). In case the user stops moving, the exoskeleton will lock in to support the arm at a chosen position (position control mode: PC). For release the user has to press against sensors that are integrated into the exoskeleton. To ease the release, BR detects movement intention. The movement prediction score is then used to modulate the exoskeleton's control by eBR: the higher the prediction score (i.e., the more certain the classifier is), the stronger the adaptation of the exoskeleton's control and the lower the effort for the user to transfer the exoskeleton from PC to FM mode. Pressure against the sensors is always required for release, which minimizes the risk of false lock-out in case of a false detection of movement intention by BR. Adapted from [52].

Movement intention can be predicted from the user's EEG. Kornhuber and Deecke [55] showed that a complex of ERPs precedes intended movements. Most prominent are the Bereitschaftspotential (BP) or Readiness Potential (RP) and the Lateralized Readiness Potential (LRP) [55], [56]. The RP can be recorded up to two seconds before the movement's onset and is pronounced at central electrode sites [57]. The LRP has, in case of arm and hand movements, its maximal amplitude contralateral to the side of movement above sensorimotor areas of the brain and will occur just before movement onset. By detecting brain patterns by BR that are related to movement preparation processes, the onset of movement can be inferred by eBR and used to adapt the interface, i.e., exoskeleton, for an easier lock-out from a rest situation (PC mode in Fig. 3). However, even if BR detects movement intention, the exoskeleton's mode is not directly changed. Any change from PC to a FM mode will only happen after the inferred movement onset is confirmed by the force sensors that are integrated in the exoskeleton (see Fig. 3 and Video S3). This prohibits faulty behavior of the exoskeleton but improves interaction by reducing the force that is required for lock-out in case the inferred behavior is indeed executed [52].
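The release mechanism described above can be illustrated with a minimal sketch. The linear mapping from prediction score to force threshold, the function names and all numeric values are assumptions made for illustration; the source states only that a higher score lowers the required effort and that sensor pressure is always required.

```python
def release_force_threshold(prediction_score: float,
                            base_force: float = 10.0,
                            max_reduction: float = 0.8) -> float:
    """Force needed to leave PC mode, given a BR prediction score in [0, 1].

    Assumed linear mapping: a higher score lowers the threshold, but because
    max_reduction < 1 the threshold never reaches zero.
    """
    score = min(max(prediction_score, 0.0), 1.0)  # clamp to [0, 1]
    return base_force * (1.0 - max_reduction * score)


def should_release(measured_force: float, prediction_score: float) -> bool:
    """Switch from PC to FM only if the force sensors confirm the movement."""
    return measured_force >= release_force_threshold(prediction_score)
```

With these hypothetical values, a fully confident prediction without any sensor pressure, `should_release(0.0, 1.0)`, still returns `False`, which mirrors the safety property that BR alone never changes the mode.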

Goals: Applying and Improving BR during Complex Interaction

Although we were able to show in the teleoperation scenario that our approach of adapting both HMIs by eBR works online and improves interaction [49], [53], it is not clear whether or not our approach of BR to relate brain patterns that were detected by means of machine learning methods to certain states of the human is appropriate for complex human-machine interaction scenarios. In the given application example the intentional state “movement intention” and the cognitive states “recognition of important stimuli and task coordination” are expected to be accompanied by ERPs, like the RP and LRP for “movement intention” and the P300 and prospective positivity for “recognition of important stimuli and task coordination” as explained above. To support that BR indeed allows to detect these states, correlation between brain patterns detected by ML and the above mentioned ERP activities must be shown. One has to point out that other brain activity besides the expected ERP activity will be learned by the classifier especially since applied ML methods can make use of all available signals from all electrodes. This may on one hand decrease classification performance since the classifier might learn unstable features that are for example present during training but not during testing and might reduce the reliability of inferred behavior by eBR since brain processes other than the assumed ones might evoke the brain patterns that were detected by BR. On the other hand, other brain activity than the here investigated one will surely contribute positively to the classification performance. Hence, our goal was not to prove that evoked brain activity not investigated here is not involved. Rather, the goal of this work was to support the application of BR in complex human-machine interaction scenarios. 
Therefore, we investigated whether predictions made by ML based on detected brain patterns can be related to known patterns in the EEG, here ERPs, that are well understood in their meaning with respect to the brain's functioning as well as their psychological effects. Since we wanted to perform the above explained investigations during complex interaction, the chosen experimental setups had to cover certain aspects of the teleoperation scenario. Two experimental scenarios were designed. The teleoperation scenario described above was not used since an investigation in this scenario with a large number of subjects is quite time-consuming and experiments could not easily be repeated and reproduced. Moreover, since two different applications for eBR were implemented in the teleoperation scenario, we investigated a dual BR approach in the second scenario.

We further systematically investigate experimentally the performance of BR with respect to training data. To improve performance of BR by choosing most appropriate training data (here the relevant training window as well as combinations of training windows) is important since BR as a passive approach cannot make use of direct feedback during training to optimize brain activity as it is common for the application of active and reactive BCIs and will hence not profit from effects of biofeedback [12], [58]. Even more critical than this is the fact that a complex application may not produce enough training data while performance of ML strongly depends on the amount and quality of the training samples. Our robotic application uses BR in situations which occur irregularly and are hard to reproduce for training. One way to deal with this issue is to substitute the underrepresented training class by a training class for which more and similar examples can be acquired. Such approaches are already applied with success. In [59] an overview is given when and how transfer learning can be applied in general. For the detection of brain patterns, classifier transfer was also proposed. Observation error related potentials (ErrPs) were detected in a task on which the applied classifier was not trained [60]. In this study a classifier for the same type of ErrP (observation ErrPs) was transferred between tasks. In [61] we showed that a classifier which is trained on one type of ErrP can classify another type of ErrP. Although the underlying kind of interaction (active versus passive interaction) is different, one can assume that similar brain processes are responsible for the detection of errors. In this work we want to investigate whether it is possible to transfer a classifier between classes used for training and testing that are similar with respect to the fact that the individual ERPs do not contain a specific component, i.e., a P300. 
Our hypothesis is that this is possible, if ERP analysis shows that the relevant component, i.e., the P300, is missing in both cases. Hence, for classifier transfer we propose that the classifier does not have to be trained and tested on examples that are evoked by the same brain processes (like error detection processes as explained above), but by brain processes, which might be different, but evoke brain patterns, which are similar in shape and characteristics, i.e., miss a prominent ERP component.

To summarize, in the following we will present results of two studies, which show that BR can be applied during complex human-machine interaction to detect patterns in the EEG in single trials with high accuracy. In Part “Labyrinth Oddball Scenario – Recognition of Important Stimuli and Task Coordination Processes” we investigate whether the cognitive states “recognition of important stimuli and task coordination” can be correlated to the results of ML analysis. For this goal the EEG was analyzed by averaged ERP analysis and single trial ML analysis. The applicability of classifier transfer between different classes is investigated in Sec. “Window of Interest and Transferability of Classifier”. Furthermore, we present results on improving the detection accuracy by choosing optimal training windows based on ERP and ML analysis, i.e., show how to optimally combine different training windows (see Sec. “Window of Interest and Transferability of Classifier” and Sec. “Combination of Training Windows for a Robust Detection of Movement Intention” in Part “Dual BR Scenario Armrest – Simultaneous Detection of Two States”). In Part “Dual BR Scenario Armrest – Simultaneous Detection of Two States” we further present results for detecting both the intentional state “movement intention” as well as the cognitive state “recognition of important stimuli and task coordination” within one experimental setup. Such a dual BR approach that enables the simultaneous detection of two different brain states is an important requirement to enable eBR to adapt two HMIs, i.e., the OMS and an exoskeleton, within one application, i.e., the above described teleoperation scenario. Furthermore, in Sec. “Performance of BR in the Detection of a Highly Underrepresented State” we replicated some results of the first study under more realistic conditions to confirm that our approach of classifier transfer works even in case of reduced numbers of samples of the relevant class. In Sec. “Conclusions” conclusions are drawn regarding the results gained in our studies with respect to the applicability of BR for self-controlled, predictive HMIs in robotics.

Labyrinth Oddball Scenario – Recognition of Important Stimuli and Task Coordination Processes

To support our hypotheses that ERP activities evoked by the presentation and processing of different stimuli contribute strongly to the separability of classes in ML analysis and that BR can hence be applied to detect the cognitive states of “recognition of important stimuli and task coordination”, the test scenario Labyrinth Oddball (Fig. 4) was developed. By means of this test scenario we further show that classifier transfer is possible between classes that contain examples of similar shape and characteristics, i.e., lack a P300. The scenario allows the investigation of the EEG activity of an operator who is controlling a device while reacting to incoming infrequent information at the same time. This mimics the situation in the described teleoperation scenario (see Fig. 1), where the operator performs a main task involving continuous motor activity (telecontrol of the robot), while monitoring and responding to important information that is given to him. In the teleoperation scenario, response time is expected to jitter in a wide range depending on the workload that is induced by the main task. This is expected to be similar in the Labyrinth Oddball setup (for visualization of the scenario see Video S4).

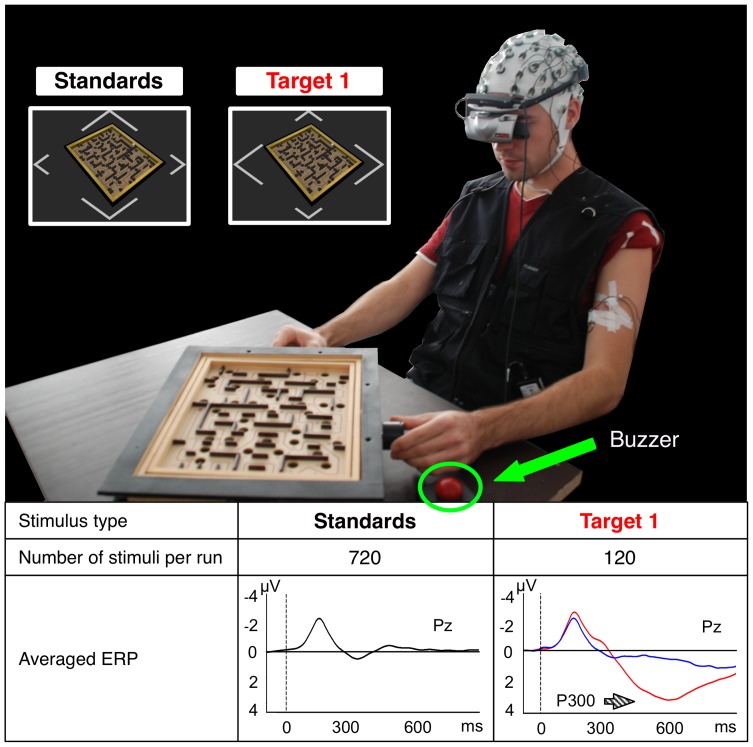

Figure 4. Experimental setup Labyrinth Oddball.

In the Labyrinth Oddball setup subjects perform a dual-task, i.e., they play a virtualized labyrinth game and react to less frequent first and second target stimuli by pressing a buzzer. A second target is presented in case that the first target was missed. Brain activity recorded after the different stimuli was averaged over all subjects, sessions, and runs (total number of trials after artifact removal: target 1 (red ERP curve, right side): […]; missed target 1 (blue ERP curve, right side): […]; standards (black ERP curve): […]).

The Labyrinth Oddball scenario can be described as follows: A subject plays a virtualized BRIO® labyrinth game wearing a head mounted display (HMD). This demanding task was chosen to put the subject into a situation of high workload while performing the second task, which is to react to infrequent warnings (first and second target stimuli, see “Target 1” in Fig. 4 for first targets, second targets were represented as a full form in the shape of a diamond matching the color and size of the first target) by pressing a buzzer. Subjects were asked to respond immediately and not to ignore any target stimulus. Target stimuli (infrequent, important information) were mixed up with standard stimuli (frequent, unimportant information that require no response, see “Standards” in Fig. 4; the corner with the longer sides points upwards instead of sidewards if compared with the first targets) in a ratio of about 1∶6. The inter-stimulus interval (ISI) was […] ms with a random jitter of […] ms. For more general details about this experimental setup see [44]. Since the manipulation task was very demanding, a rather long response time from […] ms to approximately […] ms (i.e., […] ms to […] ms due to jitter in inter stimulus interval) after target stimulus presentation was allowed during the recording of training data before a second warning was presented. In case there was no response within this period, the trial was labeled as missed target. On the second target a response time of […] ms to […] ms was allowed. In contrast to the scenario used in [44], visual presentation (shape and color) of standard stimuli that require no response and first target stimuli that require a response were kept very similar (see Fig. 4) in order to avoid differences in early visual processing of the stimuli. This assures that differences in the EEG recorded after the presentation of both stimuli types were mainly caused by processes of higher cognitive processing.

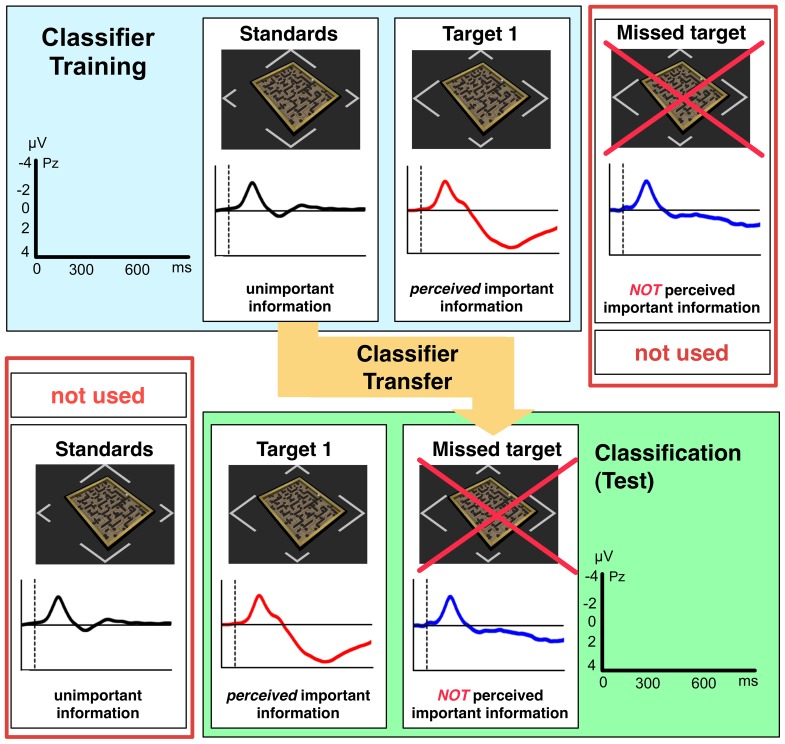

As discussed in Sec. “Introduction” classifier transfer is possible between two classes if the patterns of the samples of both classes (used for training and for test) are similar in shape and characteristics. For the detection of target recognition processes by BR we substituted our test class in ML analysis, i.e., infrequent samples evoked by situations in which the user missed the first targets (missed targets), with a training class of frequent samples (standards), i.e., EEG instances evoked by frequent unimportant information to which the user was not required to respond (Fig. 5). This approach was based on the assumption that ERP activity evoked by standards is very similar in shape and characteristic to ERP activity evoked by missed targets and that both differ from ERP activity evoked by targets, which represent the second training and test class. The expected similarity between EEG activity evoked by standards and missed targets is mainly the absence of a P300. Only perceived target stimuli will evoke a P300 (mainly P3b due to the task relevance of the target stimuli). Our hypothesis is that the P300 substantially contributes to the class separability in ML analysis. We further assume that the absence of target detection processes, either because of a failure of recognition or complete miss (for missed targets) or because it is not required (for standards), mainly contributes to the similarities between ERPs evoked by standards and missed targets.

Figure 5. Classifier Transfer.

Transfer of classifier between classes is visualized. Classifier transfer is applied between the class standard and missed targets. Hence, for training the class standard was used, in test the class missed targets was used instead.
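The idea of classifier transfer can be made concrete with a toy example. The sketch below is not the classifier or the data used in this work: it assumes a single hypothetical feature per trial (the mean amplitude in an assumed P300 window), synthetic Gaussian data, and a simple nearest-centroid rule. It is trained on standards versus targets and then tested on missed targets versus targets.

```python
import random
from statistics import fmean


def simulate(n, p300_amplitude, rng, noise=1.0):
    """Synthetic single-trial features (assumption: one amplitude value per trial)."""
    return [rng.gauss(p300_amplitude, noise) for _ in range(n)]


def train_threshold(no_p300, with_p300):
    """Nearest-centroid rule in 1-D: the threshold is the midpoint of the class means."""
    return (fmean(no_p300) + fmean(with_p300)) / 2.0


def predict(x, threshold):
    """Label a trial as 'target' if its feature exceeds the threshold."""
    return "target" if x > threshold else "no_p300"


rng = random.Random(0)

# Training: the frequent class 'standards' stands in for the rare 'missed targets'.
standards = simulate(600, 0.0, rng)       # no P300 expected
targets_train = simulate(100, 4.0, rng)   # P300 expected (hypothetical amplitude)
threshold = train_threshold(standards, targets_train)

# Test: the classifier is applied to 'missed targets', which it never saw.
missed_targets = simulate(20, 0.0, rng)   # no P300 expected
targets_test = simulate(100, 4.0, rng)

correct = sum(predict(x, threshold) == "no_p300" for x in missed_targets)
correct += sum(predict(x, threshold) == "target" for x in targets_test)
accuracy = correct / (len(missed_targets) + len(targets_test))
print(f"transfer accuracy: {accuracy:.2f}")
```

The transfer works in this toy setting only because standards and missed targets are drawn from the same distribution, which corresponds to the assumption that both classes lack a P300.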

Another implicit difference is that response behavior is only executed after target stimuli and not after standard and missed target stimuli. It is known that motor-related potentials that are evoked by the preparation and execution of response behavior can influence the amplitude of the P300 (i.e., P3b in [43]), which is expected to be evoked by targets but not by standards and missed targets. However, we can largely rule out a major impact of motor-related activity on P300 amplitude differences, and thereby a major influence of response preparation and execution on class separability and classifier transferability in our experiment, for at least three reasons. First, studies showed that the P300 latency is not correlated with reaction time [42]. Only when a very fast response is requested can a correlation be found between response preparation and P300, i.e., P3b (see [43] for discussion). In the Labyrinth Oddball scenario we expected motor response activity to be late and poorly time-locked to the stimulus onset, with a low correlation due to the dual task condition. Hence, related EEG activity is not expected to overlap largely. Second, possible differences related to motor activity are most prominent at frontal and central electrodes [57] and should not heavily influence ERPs at electrode Pz, where the highest amplitudes for P3b are expected. Third, subjects are constantly performing the labyrinth task during the experiment, and therefore motor-related activity (not corresponding to the oddball response) is evoked while processing all types of stimuli. Thus, motor activity is not only prominent after target stimuli. Furthermore, it could be shown that the execution of a button press in a simple oddball setting reduces the amplitude of the midline P3b [43]. If the motor response on targets weakened the amplitude of the P3b, ERP activity evoked by target stimuli would become more similar to ERP activity on missed targets than the latter is to standards, which is rather the opposite of the hypothesis we want to validate here.

To support our hypothesis, we conducted an ERP study in the Labyrinth Oddball scenario investigating differences in ERPs after the presentation of standard, target and missed target stimuli. First, the behavior of the subjects is analyzed to differentiate between EEG trials with correct, incorrect and missed behavior. Further, the reaction time for correct trials is calculated. In the average ERP analysis we focus on EEG activity occurring […] ms after stimulus onset at electrode locations Cz, Pz, and Oz, since the P3b component should be expressed at that time or later with maximal amplitude at electrode positions Cz and/or Pz in case of target recognition [41]. The relevance of the P300, i.e., P3b, for class separability and classifier transferability is investigated by the above-mentioned average ERP analysis and by a systematic machine learning (ML) analysis. By comparing the results of both analyses we investigate whether ERP activity recorded in the time range of the P3b is suited to make predictions on the transferability of a classifier. In the ML analysis we systematically train a classifier on different sub-windows to evaluate how well the transfer works for different windows. Following and depending on the outcome of the ERP average study we investigate which window and which window size is most important and what performance can be achieved after optimization of preprocessing and classification. A reduction of window size contributes to lower computational costs and is therefore desirable for online analysis.

Methods

Experimental Procedures and Data Acquisition

Six subjects (males; mean age […], standard deviation […]; right-handed, and normal or corrected-to-normal vision) took part in the experiments. Subjects were instructed to respond to all target stimuli even in case they were uncertain. By this procedure, we ensured that missed targets were indeed missed and not perceived as important and task-relevant stimuli. Subjects were in a competition to miss as few targets as possible while achieving good performance in the game. Recognizing and responding to all targets was rated higher than performing the senso-motor, i.e., labyrinth task. One subject had to be excluded in retrospect due to extensive eye blinks which made average ERP analysis impossible. The experiment was split into two sessions with at least one day rest in between. In each session, each subject performed […] runs with […] target 1 stimuli (important information) and about […] standard stimuli (unimportant information, shape of stimuli see Fig. 4). Stimuli were presented in random order.

While the subjects were performing the task, the EEG was recorded continuously ([…] electrodes, extended 10–20 system with reference at FCz) using a 64 channel actiCap system (Brain Products GmbH, Munich, Germany). Two electrodes of the 64 channel system were used to record the electromyogram (EMG) of muscles of the upper arm (M. biceps brachii) related to the buzzer press in order to monitor muscle activity. Impedance was kept below […] kΩ. EEG and EMG signals were sampled at […] kHz, amplified by two […] channel BrainAmp DC amplifiers (Brain Products GmbH, Munich, Germany) and filtered with a low cut-off of […] Hz and high cut-off of […] kHz.

Ethics Statement

The study has been conducted in accordance with the Declaration of Helsinki and approved with written consent by the ethics committee of the University of Bremen. Subjects have given informed and written consent to participate.

Behavior

For behavioral analysis we investigated the performance of the subjects in the oddball task. For this, we analyzed the subject's correct behavior and incorrect behavior (commission error, i.e., response on standard stimuli and omission error, i.e., missing response on target stimuli).

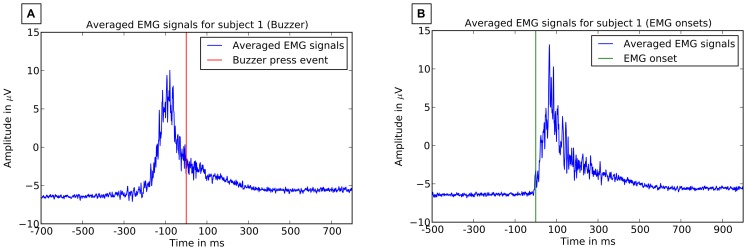

Further, we investigated the response times and jitter in response times based on buzzer events and EMG onsets (see Fig. 6 for averaged EMG activity based on EMG onset and buzzer event). The onsets in the EMG signal had to be labeled manually: due to poor signal quality and constant movement of the subjects, an automated onset detection as described in [62] was not possible. For the analysis of EMG onset the signals from the two unipolar EMG channels were subtracted from each other to calculate a bipolar signal. The raw bipolar signal was preprocessed using a variance-based filter with a window length of […] s [62]. The resulting signals were visually inspected and each onset was marked in the EEG data. The single response time was then measured as the interval between the target onset and the corresponding EMG onset. Single response times on the buzzer events were measured as the time between the onset of stimulus presentation and the onset of the buzzer press. Further, we calculated the median response time over all sets (3 sets × 2 sessions) for each subject, as well as the minimal and maximal response time. After that, the mean of the subjects' medians was calculated.
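The aggregation described above (per-subject median, minimum and maximum, followed by the mean of the subjects' medians) can be sketched as follows. This is a minimal illustration, not the authors' analysis script: the function name `summarize_response_times`, the dictionary layout and the example values are assumptions made for this sketch.

```python
from statistics import mean, median

def summarize_response_times(rt_by_subject):
    """Summarize single-trial response times (in ms) per subject.

    rt_by_subject maps a subject id to the list of that subject's
    response times, pooled over all sets and sessions (assumed layout).
    """
    per_subject = {}
    for subject, rts in rt_by_subject.items():
        per_subject[subject] = {
            "median": median(rts),
            "min": min(rts),
            "max": max(rts),
        }
    # Group-level value: mean of the subjects' medians.
    mean_of_medians = mean(s["median"] for s in per_subject.values())
    return per_subject, mean_of_medians

# Made-up response times for illustration only.
example = {"s1": [600, 700, 800], "s2": [900, 1000, 1100, 1200]}
per_subject, mean_of_medians = summarize_response_times(example)
```

Using the median per subject keeps single very slow responses from dominating the subject-level value before the group mean is taken.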

Figure 6. Averaged EMG activity.

Average EMG activity of subject […], averaged based on two different events, is displayed. A: Averaged activity based on buzzer press event is shown. B: Average activity based on EMG onset is shown.

Average ERP Analysis

To identify relevant ERP activity an average ERP analysis was performed. EEGs from runs […] and […] of both sessions were analyzed off-line with the BrainVision Analyser Software Version […] (Brain Products GmbH, Munich, Germany). Runs […] and […] were not used for analysis to reduce the amount of data and thus processing time for the ML analysis presented in Sec. “ML Analysis”. We chose the middle runs to minimize side effects due to training or exhaustion.

EEGs were re-referenced to an average reference (excluding electrodes Fp1, Fp2, F1, F2, PO9, PO10, FT7–FT10 due to artifacts and electrodes TP7 and TP8 which were used to record EMG activity) and filtered ([…] Hz low cutoff, […] Hz high cutoff). The low-pass filter was chosen with an untypically low cutoff frequency, since results of average ERP analysis should be compared with results of ML analysis. Although different pass bands are reported in P300 classification (see [63], [64]), a study about the important factors on P300 detection concluded that the main energy of this type of ERP is concentrated below […] Hz [64]. Our own investigations support this conclusion (see for example [65]). An ERP analysis of EEG data from a very similar experimental setting which considers a wider frequency range (higher low-pass cutoff) is currently under preparation. Preliminary results are published in [47]. Artifacts (e.g., eye movement, blinks, muscle artifacts, etc.) were rejected semi-manually (maximal amplitude difference in […] ms intervals was […] V, gradient […] V/ms, low activity was […] V over […] ms). EEGs were segmented into epochs from […] ms before to […] ms after stimulus onset. Epochs were averaged separately for each stimulus type. Only segments in which a stimulus of type target was followed by a response within the given response time contributed to mean ERP curves on the stimulus type target. Segments in which no response followed after a stimulus of type target were defined as missed target trials and contributed to generate mean ERP curves on the stimulus type missed target. Baseline correction was performed before averaging (pre-stimulus interval: […] to […] ms). In case of missed target events a second target (target 2) followed. In this study we did not evaluate ERP activity evoked by stimulus type target 2 and missed target 2.
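The labeling, baseline correction and averaging steps described above can be illustrated with a small single-channel sketch. It is not the BrainVision Analyser procedure itself; the function names, the list-based epoch layout and the toy values are assumptions chosen for illustration.

```python
def label_trial(stimulus, responded):
    """Assign the stimulus type used for averaging.

    A target followed by a response counts as 'target'; a target without
    a response counts as 'missed target'; everything else is 'standard'.
    """
    if stimulus == "target":
        return "target" if responded else "missed target"
    return "standard"

def baseline_correct(epoch, n_baseline):
    """Subtract the mean of the first n_baseline (pre-stimulus) samples."""
    baseline = sum(epoch[:n_baseline]) / n_baseline
    return [value - baseline for value in epoch]

def average_erp(epochs, n_baseline):
    """Baseline-correct each epoch, then average sample by sample."""
    corrected = [baseline_correct(epoch, n_baseline) for epoch in epochs]
    return [sum(samples) / len(corrected) for samples in zip(*corrected)]

# Two toy single-channel epochs; the first two samples are pre-stimulus.
toy_epochs = [[1, 1, 3, 5], [3, 3, 5, 7]]
toy_average = average_erp(toy_epochs, n_baseline=2)
```

Correcting the baseline before averaging removes slow offset differences between trials, so that the averaged curve reflects stimulus-locked activity only.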

Amplitude differences were analyzed using repeated measures ANOVA with the within-subjects factors stimulus type, electrode, and time window and between-subjects factor subject. To find the expected P300 effect, we compared amplitude differences between the three stimulus types (standards, targets, and missed targets). Additionally, the factor electrode (Cz, Pz and Oz) served to investigate spatial differences in the P300 effect. Time window was used as factor, since visual inspection of the averages of activity evoked by targets revealed multiple peaks in the time range of […]–[…] ms for each subject. Therefore, we divided the […]–[…] ms window into two separate windows ([…]–[…] ms and […]–[…] ms after the stimulus) to cover early and late parts of the broad peak (as seen in grand average in Fig. 4), accounting for multiple, possibly overlapping positive ERP components. To investigate subject-specificity of the effects, subject was used as a between-subjects factor. Where necessary, the Greenhouse–Geisser correction was applied and the corrected p-value is reported. For pairwise comparisons, the Bonferroni correction was applied.

ML Analysis

All ML evaluations have been performed using the open source signal processing and classification environment pySPACE [66]. Data processing was as follows: Windowing and preprocessing were performed directly on the raw data from the recording device. In order to avoid that preprocessing artifacts such as, e.g., filter border artifacts, influence classification performance, we performed the complete preprocessing (including decimation and filtering) on a larger window between […] and […] ms relative to the stimulus onset. We chose the following preprocessing based on the rationale issued above (see Sec. “Average ERP Analysis” and [64]): The data were baseline-corrected (with […] ms window prior to stimulus onset), decimated to […] Hz and subsequently lowpass filtered with a cut-off frequency of […] Hz.

As in the ERP analysis, run numbers […], […] and […] of both sessions were used for training and testing. In contrast to the average ERP analysis described above we included the early time window of […]–[…] ms in the ML analysis. This was done to control for the fact that early time windows may still contribute to the classification of the different classes (standards, targets, missed targets) even though we hypothesized that main differences are caused by the P300 effect (see Sec. “Relevant Averaged ERP Activity”).

It is important to note here that several dependencies have to be kept in mind when evaluating the results: First, performance depends on window size since a larger window contains more features and thus a higher dimensionality of the signal. Second, the classifier parameters depend on the underlying data (and dimensionality). However, the purpose of this investigation was to compare different windows (and sizes) concerning their quality for classification, so we assessed the results always with respect to window size and starting point of the window and performed statistical analysis only on windows of equal length.

Furthermore, the parameters of the classifier were set to a fixed, non-optimized value to avoid data-dependent effects: in the entire analysis, we used a support vector machine (SVM) as implemented in LIBSVM [67] (C-SVC with a linear kernel) with a fixed complexity of […] simulating a hard margin. Hence, we cut different windows by varying starting point ([…] ms–[…] ms) and window size ([…] ms–[…] ms) in steps of […] ms. Data used for training and testing were different, as outlined above: We trained on standards and targets of one experimental run and tested missed targets versus targets of another run within one session. All possible combinations of the above-mentioned runs within one session were tested. Classifier features were the preprocessed time-channel values, i.e., the amplitudes.
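The systematic variation of windows and the pairing of training and test runs can be sketched as below. This is a hedged illustration rather than the pySPACE configuration used in the study: the helper names (`window_grid`, `to_sample_range`, `transfer_pairs`), the grid values and the run labels are hypothetical, and treating "all possible combinations" as ordered pairs of different runs is an assumption.

```python
from itertools import permutations

def window_grid(start_min, start_max, size_min, size_max, step):
    """Yield (start_ms, end_ms) for every start point and window size on the grid."""
    for start in range(start_min, start_max + 1, step):
        for size in range(size_min, size_max + 1, step):
            yield (start, start + size)

def to_sample_range(window_ms, epoch_start_ms, sampling_rate_hz):
    """Convert a window in ms (relative to stimulus onset) to sample indices."""
    def to_index(t_ms):
        return round((t_ms - epoch_start_ms) * sampling_rate_hz / 1000)
    start_ms, end_ms = window_ms
    return to_index(start_ms), to_index(end_ms)

def transfer_pairs(runs):
    """Ordered (train_run, test_run) pairs of different runs within one session.

    Training uses standards vs. targets of train_run; testing uses
    missed targets vs. targets of test_run.
    """
    return list(permutations(runs, 2))

# Placeholder values for illustration only; they are not the paper's settings.
windows = list(window_grid(0, 100, 100, 200, 100))
pairs = transfer_pairs(["run_a", "run_b", "run_c"])
```

Each window from the grid would then be evaluated for every train/test pair, so that performance can be compared across windows of equal length.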

The corresponding classification performance was computed using the area under curve (AUC) [68] which is an indicator of general separability of the two classes in the data. AUC is the area under the receiver operating characteristics curve. This curve maps the different true positive rates (TPR) and false positive rates (1-TNR) obtained when the decision boundary is varied from […] to […]. The AUC is then computed as the integral of the resulting function. In this way, we investigated the linear separability of the data essentially independent of the applied classifier.
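As an illustration of this measure, the AUC can be computed directly from classifier scores without tracing the curve explicitly, because the area under the ROC curve equals the probability that a randomly drawn positive example receives a higher score than a randomly drawn negative one (ties counted as one half). The sketch below uses this equivalence; the function name `auc` and the example scores are assumptions, and it is not the implementation used in pySPACE.

```python
def auc(scores_pos, scores_neg):
    """Area under the ROC curve from raw classifier scores.

    Counts, over all positive/negative pairs, how often the positive
    example scores higher (ties count as 0.5).
    """
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))

# Made-up scores for illustration only.
example_auc = auc([0.9, 0.4], [0.5, 0.1])
```

Because only the ordering of the scores matters, the value does not depend on where a particular decision threshold is placed.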

For statistical inference, we chose three time windows from the aforementioned temporal segmentation: the two later time windows that had been chosen for ERP analysis ([…]–[…] ms and […]–[…] ms, see Sec. “Average ERP Analysis”) and the early time window of […]–[…] ms. This procedure relates the results of the classifier performance-based approach to the results of the ERP analysis. Classification performances for the different window sizes were statistically analyzed using repeated measures ANOVA with the within-subjects factors time window ([…]–[…] ms, […]–[…] ms, and […]–[…] ms) and subject. Corrections were applied where necessary. Classification performance after optimizing the classifier was analyzed using repeated measures ANOVA with subject as within-subjects factor. Where necessary, the Greenhouse–Geisser correction was applied and the corrected p-value is reported. For multiple comparisons, the Bonferroni correction was applied.

In a further analysis we investigated the possibility to improve classification performance by the combination of information from two windows. We combined the middle time window ([…] to […] ms) with both other time windows (early: […] to […] ms and late: […] to […] ms time window) separately.
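One simple way to combine information from two windows is to concatenate the time-channel amplitudes of both windows into a single feature vector. The text does not state how the combination was implemented, so the sketch below is an assumption; the function names, the channel-by-sample list layout and the toy values are likewise hypothetical.

```python
def window_features(epoch, start, stop):
    """Flatten the samples [start, stop) of every channel into one feature vector.

    epoch is a list of channels, each a list of amplitude samples.
    """
    return [value for channel in epoch for value in channel[start:stop]]

def combined_features(epoch, window_a, window_b):
    """Concatenate the features of two (start, stop) sample windows."""
    return window_features(epoch, *window_a) + window_features(epoch, *window_b)

# Toy epoch with two channels and four samples each.
toy_epoch = [[1, 2, 3, 4], [5, 6, 7, 8]]
toy_features = combined_features(toy_epoch, (0, 1), (2, 4))
```

With this kind of combination the dimensionality grows with the total length of both windows, which should be kept in mind when comparing against single-window results.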

To determine the classification performance that can be achieved under optimized conditions, we performed a final analysis with the goal of obtaining a better estimate of the applicability of our approach of classifier transfer between the classes standard and missed target. The processing window was chosen based on the results of the systematic ML analysis explained above. We optimized the SVM complexity parameter using a 5-fold cross validation in combination with a pattern search algorithm [69] to evaluate the overall performance in the application with an adjusted classifier.

In this subsequent investigation we used an optimized SVM on a chosen time window. Further, we computed the balanced accuracy (BA) as a performance measure for the chosen time window. The balanced accuracy [70] is the arithmetic mean of the true positive rate (TPR) and the true negative rate (TNR) and is calculated as

$$\mathrm{BA} = \frac{\mathrm{TPR} + \mathrm{TNR}}{2} \tag{1}$$

Both performance measures used (AUC and BA) are insensitive to unbalanced or even changing ratios of the two classes (positive class P and negative class N), which is most important in the application where we have an oddball-like situation with frequent and infrequent examples. For both metrics, a value of 0.5 means guessing and 1 means perfect classification.
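The insensitivity of the balanced accuracy to class ratios can be made concrete with a short sketch. The function name `balanced_accuracy`, the label convention (1 for the positive class, 0 for the negative class) and the toy data are assumptions for illustration.

```python
def balanced_accuracy(y_true, y_pred, positive=1):
    """Arithmetic mean of true positive rate and true negative rate."""
    tp = fn = tn = fp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive:
            if pred == positive:
                tp += 1
            else:
                fn += 1
        else:
            if pred == positive:
                fp += 1
            else:
                tn += 1
    tpr = tp / (tp + fn)
    tnr = tn / (tn + fp)
    return 0.5 * (tpr + tnr)

# Oddball-like toy data: one infrequent positive, nine frequent negatives.
y_true = [1] + [0] * 9
always_negative = [0] * 10
ba_trivial = balanced_accuracy(y_true, always_negative)
ba_perfect = balanced_accuracy(y_true, y_true)
```

A classifier that always predicts the frequent class reaches 90% plain accuracy on this toy data but only a balanced accuracy of 0.5, i.e., the guessing level.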

Results

Behavior

In total, […] omission errors ([…]) occurred; thus, […] missed targets were observed and […] target stimuli were found with correct responses. No commission errors (i.e., responses on standard stimuli) were found.

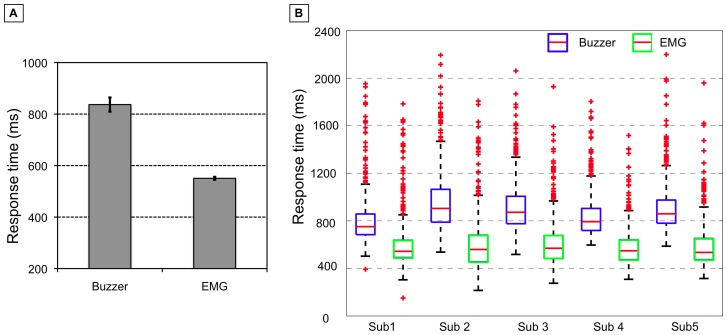

Figure 7 shows the median response time for each subject across two sessions. Based on the buzzer press event, responses occurred […] ms after the target stimuli (mean of subjects' medians). The median of minimal response time was […] ms and the median of maximal response time was […] ms. The difference between the minimal and maximal response time was between […] ms and […] ms (median: […] ms). EMG onsets began even earlier in time (mean of subjects' medians: […] ms). The median of minimal response time was […] ms and the median of maximal response time was […] ms. The difference between the minimal and maximal response time was between […] ms and […] ms (median: […] ms). No difference exists between median difference in response time based on the buzzer event (median: […] ms) and median difference of response time based on the EMG onset (median: […] ms).

Figure 7. Evaluation of response time.

The mean and median of response time for each subject across two sessions based on EMG and buzzer press events are displayed. A: Mean of response time. B: Median of response time.

Relevant Averaged ERP Activity

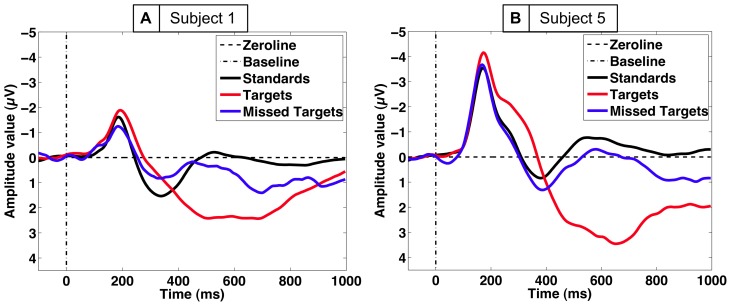

The grand average over all subjects of the standard, target and missed target ERP pattern in the centro-parietal electrode (Pz) is depicted in Fig. 4. Significant differences between standards and targets (i.e., P300 effect) were observed [[…], pairwise comparisons: standards vs. targets: […]]. The P300 effect was stronger at the electrodes Cz and Pz compared to the electrode Oz [P300 effect at Cz: […], P300 effect at Pz: […], P300 effect at Oz: […]]. The significant amplitude difference between the ERPs evoked by targets and missed targets stems from a higher positive amplitude on targets for both time windows [[…]]. This higher positivity elicited by targets was significant for four subjects [targets vs. missed targets: […] for four subjects, […] for one subject (subject 1), see Fig. 8]. Furthermore, no subject showed differences between ERPs evoked by missed targets and standards in the […]–[…] ms time range recorded over central electrodes [standards vs. missed targets: […] n.s.]. However, in the […]–[…] ms window, amplitude differences between missed targets and standards are more subject-specific [standards vs. missed targets: […] n.s. for subjects 4 and 5, […] for subjects 1, 2, and 3, see Fig. 8].

Figure 8. Averaged ERPs in the Labyrinth Oddball scenario.

Different averaged ERP patterns evoked by standards, targets, and missed targets are shown for two subjects. A: Subject […]: No significant difference in ERP amplitude between targets and missed targets but significant difference in ERP amplitude between standards and missed targets for the late window was found. B: Subject […]: A higher P300 effect on targets compared to both standards and missed targets and no significant difference in ERP amplitude between standards and missed targets for the late window was found.

To summarize, a P300 effect elicited by targets was observed for both time windows and in all subjects with a maximum amplitude at the central and parietal electrodes (Cz and Pz). The morphology of the ERP elicited by missed targets is, especially in the […]–[…] ms time window, similar to that of ERPs elicited by standards. This supports our hypothesis that EEG instances evoked by standard stimuli can potentially be used to substitute EEG instances evoked by missed targets during training. For the later time window results differed: only two subjects showed no differences between standards and missed targets.

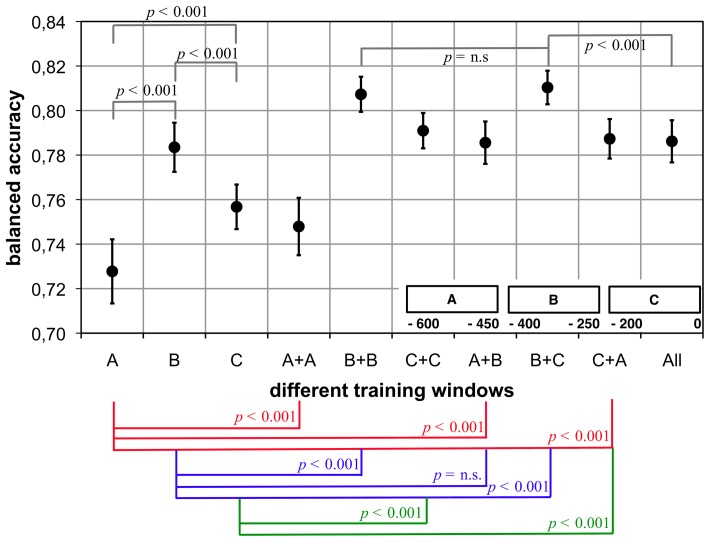

Window of Interest and Transferability of Classifier

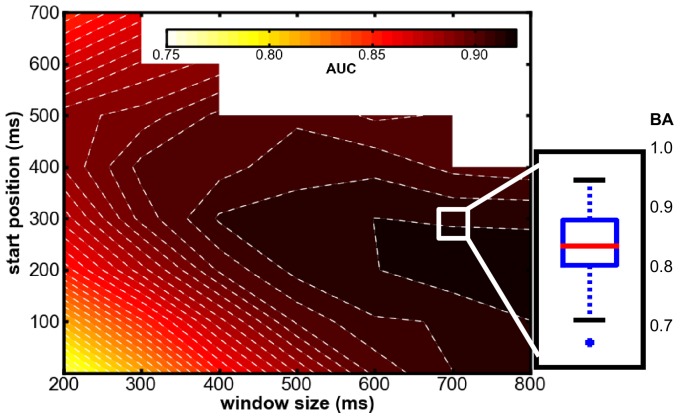

The results in Fig. 9 illustrate how the separability of the two classes missed targets versus targets varies when different time windows are used for classification. For small and early windows (before around […] ms) the performance is lowest but above random guessing. For small window sizes ([…]–[…] ms) the performance reached a maximum when used with windows starting after […] ms. With increasing window size performance also increases; this increase, however, is confounded with the increased dimensionality of the data (more dimensions imply more information for the classifier) and therefore has to be interpreted carefully.

Figure 9. Classification performance obtained in the Labyrinth Oddball scenario for different windows of EEG data.

The dependency between classification performance and window size as well as start point of window is displayed for the classification of missed targets versus recognized targets. The start position (y-axis) is given relative to stimulus onset. The inset on the right indicates the optimized performance using the window from […] to […] ms. The different windows are compared using the AUC, while the optimized performance is given as BA.

To investigate the amount of information in each time range, we compared performances on training data with fixed window sizes of […] ms as illustrated in Fig. 10. The statistical analysis of the AUC values shows that performance is clearly affected by the choice of the time window [main effect of time window: […]] and that classification of the middle window ([…] ms–[…] ms) and the late window ([…] ms–[…] ms) clearly yields higher performance compared to the early window ([…] ms–[…] ms) [early window: mean AUC of 0.82, middle window: mean AUC of 0.90, late window: mean AUC of 0.88, multiple comparisons: […]–[…] ms vs. […]–[…] ms: […], […]–[…] ms vs. […]–[…] ms: […], […]–[…] ms vs. […]–[…] ms: […]].

Figure 10. Classification performance for different time windows.

The mean classification performance is shown for each time window and each subject.

In a further analysis, we combined the middle time window ( to  ms), which shows the highest classification performance for most subjects (see Fig. 10), separately with each of the two other time windows (early:  to  ms; late:  to  ms). The main result was that combining the middle and late time windows yielded a higher classification performance than combining the middle and early time windows [main effect of combined time window:  , combination of the middle and early window: mean AUC of 0.89, combination of the middle and late window: mean AUC of 0.92, pairwise comparison: combination of the middle and early window vs. combination of the middle and late window:  ], again supporting our hypothesis that later cognitive activity is most important for the prediction of the success of cognitive processing.
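The combination of time windows can be illustrated with a minimal sketch. It assumes that each epoch is stored as a list of channels, that the first sample coincides with stimulus onset, and that the features are simply the concatenated raw samples of each window. The function names, the sampling rate, and the window bounds in the example are hypothetical and are not the values or the preprocessing used in this study.

```python
def window_to_samples(start_ms, end_ms, sampling_rate_hz):
    """Convert a window in ms after stimulus onset to a sample index range.

    Assumes sample 0 of the epoch coincides with stimulus onset.
    """
    start = int(round(start_ms * sampling_rate_hz / 1000.0))
    stop = int(round(end_ms * sampling_rate_hz / 1000.0))
    return start, stop


def window_features(epoch, window_ms, sampling_rate_hz):
    """Flatten the samples of all channels that fall inside one window."""
    start, stop = window_to_samples(window_ms[0], window_ms[1], sampling_rate_hz)
    return [value for channel in epoch for value in channel[start:stop]]


def combined_features(epoch, windows_ms, sampling_rate_hz):
    """Concatenate the feature vectors of several windows."""
    features = []
    for window_ms in windows_ms:
        features.extend(window_features(epoch, window_ms, sampling_rate_hz))
    return features


# Toy epoch: 2 channels, 10 samples at 10 Hz (one sample every 100 ms).
epoch = [list(range(10)), list(range(100, 110))]
# Hypothetical "middle" and "late" windows, in ms after stimulus onset.
features = combined_features(epoch, [(200, 400), (600, 800)], sampling_rate_hz=10)
print(features)
```

Note that combining two windows doubles the feature dimensionality in this sketch, which is one reason why combined windows and single windows should be compared with care.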

Given the results presented above we obtained the best results when starting the windows  ms after the stimulus was presented (depicted in Fig. 9). This supports our hypothesis that P300 related processes contribute substantially to class separability. Based on theses findings, we decided to use a processing window in the time range between

ms after the stimulus was presented (depicted in Fig. 9). This supports our hypothesis that P300 related processes contribute substantially to class separability. Based on theses findings, we decided to use a processing window in the time range between  ms and

ms and  ms. As described in the methods section, we now used an optimized preprocessing procedure and classifier for this window. On average, a BA of

ms. As described in the methods section, we now used an optimized preprocessing procedure and classifier for this window. On average, a BA of  (standard deviation:

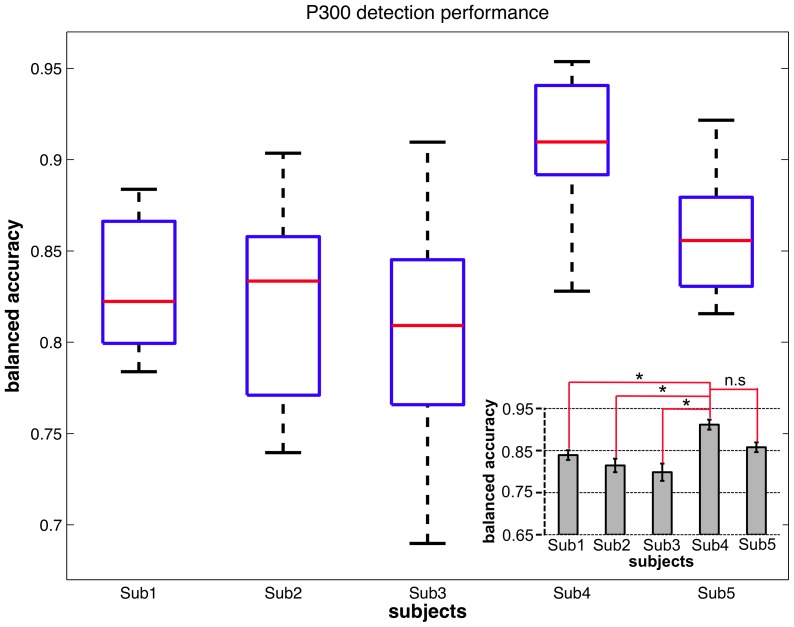

(standard deviation:  ) was obtained. While the measure of the AUC served for finding the interesting window ranges, this performance measure now reflects what the particular classifier is able to achieve. The distribution of the results is illustrated in the inset in Fig. 9 and the classification performance for each subject is depicted in Fig. 11. A significantly higher classification performance compared to all other subjects (except for subject 5) was shown for subject 4 [main effect of subject:

) was obtained. While the measure of the AUC served for finding the interesting window ranges, this performance measure now reflects what the particular classifier is able to achieve. The distribution of the results is illustrated in the inset in Fig. 9 and the classification performance for each subject is depicted in Fig. 11. A significantly higher classification performance compared to all other subjects (except for subject 5) was shown for subject 4 [main effect of subject:  , details see Fig. 11 lower right].

, details see Fig. 11 lower right].
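The difference between the two performance measures can be made concrete with a small sketch. It assumes the usual definitions: the AUC is computed from continuous classifier scores and is independent of a decision threshold, whereas the BA is the mean of the true positive rate and the true negative rate of the final decisions. The scores, labels, and threshold below are made up for illustration.

```python
def auc(scores, labels):
    """Area under the ROC curve via pairwise comparison (Mann-Whitney).

    labels: 1 for the positive class, 0 for the negative class.
    A tie between a positive and a negative score counts as half a win.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def balanced_accuracy(predictions, labels):
    """Mean of true positive rate and true negative rate."""
    tp = sum(1 for p, y in zip(predictions, labels) if y == 1 and p == 1)
    tn = sum(1 for p, y in zip(predictions, labels) if y == 0 and p == 0)
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    return 0.5 * (tp / n_pos + tn / n_neg)


# Made-up scores for 2 positive and 6 negative examples (unbalanced classes).
labels = [1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.4, 0.5, 0.3, 0.2, 0.2, 0.1, 0.1]
# Hypothetical decision threshold of the final classifier.
predictions = [1 if s >= 0.45 else 0 for s in scores]

auc_value = auc(scores, labels)
ba_value = balanced_accuracy(predictions, labels)
print(f"AUC = {auc_value:.3f}, BA = {ba_value:.3f}")
```

In this toy example the ranking of the scores is good (high AUC), while the chosen threshold misclassifies one of the two positive examples, so the BA is clearly lower. This is why the AUC is suited for comparing windows and the BA for reporting what a particular trained classifier achieves.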

Figure 11. Classification performance in the Labyrinth Oddball scenario.

For each subject for a window from  to  ms the evaluated classification performance and statistics are shown. The red lines in the main diagram mark the median values of obtained classification performances for each subject. The inserted diagram shows that highest classification performance was obtained for subject  and  (mean classification performance and standard error of mean (SEM) are depicted).

It is worth pointing out that, in contrast to all other subjects, the average ERP analysis for subjects 4 and 5 (who showed better classification performance) did not reveal any significant differences in the amplitude of averaged ERP forms evoked by standards and missed targets in either time window ( to  ms and  to  ms). Based on our hypotheses, such similarity between ERP forms evoked by standard and missed target stimuli and a clear absence of P300 and later ERP activity that may be related to task coordination would suggest a good outcome for classifier transfer and high performance, as was shown here. Hence, results of ERP analysis can under certain conditions be used to infer classification performance.

Discussion: Labyrinth Oddball Scenario

Results of ERP and ML analysis confirm that ERPs evoked by stimulus recognition and subsequent processes, e.g., change of task and preparation of response, are most important to detect the state of target recognition by BR. This is a basic prerequisite for eBR to infer response behavior of the operator. We showed that a classifier trained on the classes standards versus targets can be successfully transferred to classify the classes missed targets versus targets. Results of the ERP analysis in the middle time window, in which ERPs were found to be maximally expressed at central and parietal electrodes (Cz and Pz), were used to infer classification performance. Thus, it is likely that the signal that is maximally expressed at these electrodes contributes most to the differences and similarities of the overall signal for all three types of stimuli.

Our hypothesis that ERP activity evoked by unimportant standard stimuli is similar in shape and characteristic to ERP activity evoked by important stimuli that were not recognized as such (missed targets) was supported by the results. Further, our results indicate that in the middle time window this similarity is mainly caused by the absence of target recognition processes, since the P300 is either missing or massively reduced in amplitude. Certainly, processes later than the evaluation and classification of stimuli (evoking a P300) that are related to task set preparation or response preparation and execution will also be involved [47]. For example, for some of the subjects ERP activity evoked by unimportant standard stimuli and by missed target stimuli shows significant differences in the later time window (see Fig. 8, e.g., subject 1). These differences may be related to late task set preparation processes [46] or to late P300 activity that did not lead to a successful stimulus evaluation, as discussed in [42], and require further investigation. Although a prominent similarity between standards and missed targets is the absence of a response of the subject, our results show that response-related activity should not have a major influence on the transferability of the classifier, since the response time to individual target stimuli varies widely (see Sec. “Behavior”).

Results of ML analysis finally show that early stimulus processing in the time window  – ms was less important than EEG activity in the later time range ( ms) investigated here. However, early brain activity contributed as well. This might be caused by differences in attentional processes, which have to be investigated in future experiments and analyses.

To summarize, from our results we conclude that brain activity evoked by frequent, unimportant stimuli (standards) in the investigated low frequency range is highly similar to brain activity evoked by missed targets, which are important stimuli that were not successfully processed, i.e., not recognized as important stimuli or completely missed. Substituting infrequent examples of the class missed targets by frequent examples of the class standards during training is possible. This supports our hypothesis that transfer between classes is a feasible approach for applying BR in scenarios in which the amount of training data is too small even for methods that are designed to handle few training data [71]–[73] (for a brief discussion see [74]). Hence, the problem of few training examples in realistic scenarios can be solved by our approach of classifier transfer with a high classification performance, and performance can be improved further by choosing appropriate window combinations. The choice of window, the samples used for transfer, and the combinations of windows were first defined by knowledge about underlying brain activity gained from average ERP analysis and then confirmed by systematic ML analysis. Hence, it is shown that average ERP analysis can be a useful method to choose appropriate training data, especially if processes are involved that evoke pronounced patterns in the EEG like the P300.
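The idea of classifier transfer can be sketched on synthetic data. The example below is only an illustration under strong assumptions: each trial is reduced to two made-up features, the first of which stands in for a P300-like amplitude, and a simple nearest-class-mean classifier is used as a stand-in for the classifier of this study. All names, amplitudes, and trial counts are hypothetical.

```python
import random


def mean_vector(rows):
    """Feature-wise mean of a list of feature vectors."""
    return [sum(column) / len(rows) for column in zip(*rows)]


def train_nearest_mean(negatives, positives):
    """Stand-in classifier: store one mean vector per class."""
    return mean_vector(negatives), mean_vector(positives)


def predict(model, x):
    """Return 1 if x is closer to the positive class mean, else 0."""
    mean_neg, mean_pos = model
    dist_neg = sum((a - b) ** 2 for a, b in zip(x, mean_neg))
    dist_pos = sum((a - b) ** 2 for a, b in zip(x, mean_pos))
    return 1 if dist_pos < dist_neg else 0


def simulate(n_trials, p300_amplitude, rng):
    """Synthetic trials: [P300-like feature, pure noise feature]."""
    return [[rng.gauss(p300_amplitude, 1.0), rng.gauss(0.0, 1.0)]
            for _ in range(n_trials)]


rng = random.Random(0)
# Training classes: many standards (no P300) versus targets (P300 present).
standards = simulate(200, 0.0, rng)
targets_train = simulate(40, 4.0, rng)
# Test classes: few missed targets (assumed strongly reduced P300) versus targets.
missed_targets = simulate(10, 0.5, rng)
targets_test = simulate(40, 4.0, rng)

model = train_nearest_mean(standards, targets_train)

# Balanced accuracy on the transfer classes (missed targets are the negatives).
tnr = sum(predict(model, x) == 0 for x in missed_targets) / len(missed_targets)
tpr = sum(predict(model, x) == 1 for x in targets_test) / len(targets_test)
transfer_ba = 0.5 * (tpr + tnr)
print(f"balanced accuracy after transfer: {transfer_ba:.2f}")
```

The transfer works in this sketch only because the simulated missed targets lack the discriminative pattern in the same way the standards do, which mirrors the condition stated above for real EEG data.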

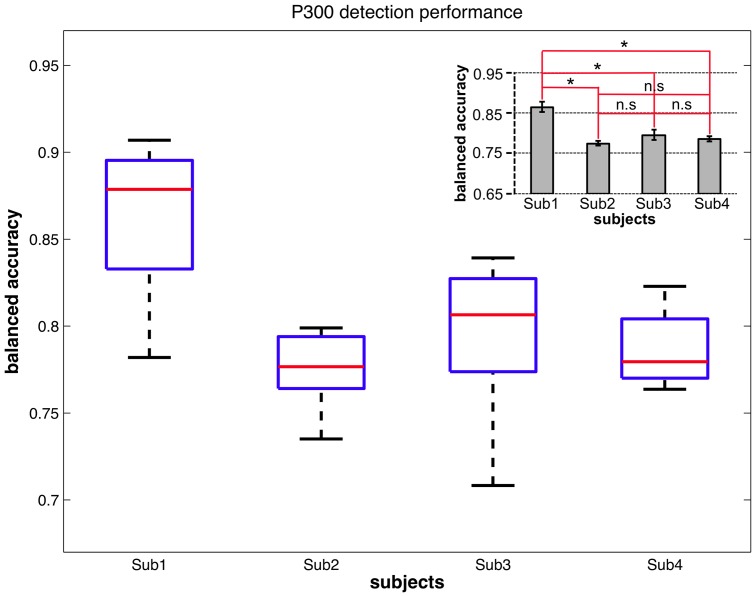

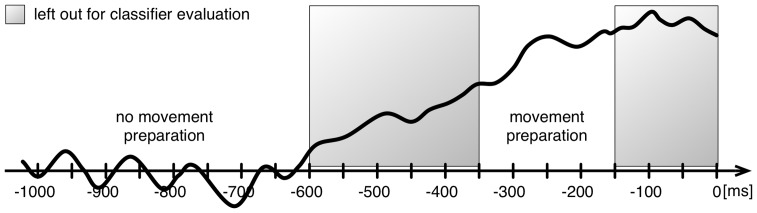

Dual BR Scenario Armrest – Simultaneous Detection of Two States

Since the BR system in the teleoperation scenario (see Fig. 1) should not only detect success in the recognition of important information but also movement intention to optimize the exoskeleton's control (see Sec. “Introduction”), a second test scenario, the Armrest setup, was developed to test a dual BR approach. Experiments were conducted to test whether a simultaneous classification of different brain states is possible by analyzing the EEG recorded in a complex scenario similar to the teleoperation scenario. The Armrest setup mimics a realistic dual-task situation that comes closer to the teleoperation scenario than the dual task performed in the Labyrinth Oddball scenario (see Part “Labyrinth Oddball Scenario – Recognition of Important Stimuli and Task Coordination Processes”). This is because in the Armrest setup the user is not always able to respond to information immediately (responses to target events were not allowed during the rest period, see below) but has to postpone the response. This restriction was most important to show that our approach still works under realistic conditions in which two motor tasks may influence each other, such that one task inhibits the execution of the other. Further, it is expected that trained operators in teleoperation scenarios have a low rate of missed targets. Hence, to investigate whether it is indeed possible to detect very few instances of missed targets with our approach, we designed a test scenario in which subjects would not miss too many target stimuli.

The Armrest setup can be described as follows: Participants of the experiments wore a head-mounted display (HMD) and stood in a dimly lit room while performing a task in a virtual environment. The task was to move the right arm from a rest position in order to reach a virtual target ball which was presented in the upper right corner marking a possible object which could be manipulated in a final application case (Fig. 12A and B). A hand-tracking system was used to detect the point in time when the hand left the armrest. Whenever subjects moved their arm  cm away from the rest position, a marker for movement onset was sent and stored together with the EEG (movement marker was set at time point “0”, see Fig. 12C). After entering the target ball (see Fig. 12B–2), the subject returned to the rest position. To support the rest state of the arm, an armrest was designed as part of our testbed. This armrest was integrated into the setup to imitate the strong support of the arm by the exoskeleton during the position control in the teleoperation scenario. The arm and hand of the participant had to stay in the rest position for at least 5 seconds. In case the subject left the rest position too early, the target ball would disappear. This served to avoid too rapid changes between rest and movement which was necessary to assure sufficiently long non-movement periods.
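The marker logic described above can be sketched as follows. This is a minimal illustration, assuming that the hand-tracking system delivers timestamped 3D positions in cm; the function name and the distance threshold used in the example are hypothetical, while the minimum rest duration of 5 seconds is taken from the description above.

```python
import math


def movement_onsets(timestamps_s, positions_cm, rest_position_cm,
                    threshold_cm, min_rest_s=5.0):
    """Find the time points at which the hand leaves the rest position.

    Returns a list of (onset_time, rest_long_enough) tuples. An onset is the
    first sample whose distance to the rest position exceeds threshold_cm.
    rest_long_enough is False if the preceding rest lasted less than
    min_rest_s, i.e., the subject left the rest position too early.
    """
    onsets = []
    in_rest = math.dist(positions_cm[0], rest_position_cm) <= threshold_cm
    rest_start = timestamps_s[0] if in_rest else None
    for t, position in zip(timestamps_s, positions_cm):
        distance = math.dist(position, rest_position_cm)
        if in_rest and distance > threshold_cm:
            onsets.append((t, (t - rest_start) >= min_rest_s))
            in_rest = False
        elif not in_rest and distance <= threshold_cm:
            in_rest = True
            rest_start = t
    return onsets


# Toy recording at 1 Hz; the hand moves only along the x-axis.
timestamps = list(range(12))
x_values = [0, 0, 0, 0, 0, 0, 10, 30, 0, 0, 0, 10]
positions = [(x, 0.0, 0.0) for x in x_values]

# threshold_cm=5.0 is a hypothetical value chosen for this example only.
markers = movement_onsets(timestamps, positions, (0.0, 0.0, 0.0), threshold_cm=5.0)
print(markers)
```

In the toy recording the first movement starts after 6 seconds of rest and is accepted, whereas the second one starts only 3 seconds after the hand returned and is flagged as too early.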