Abstract

Assessing the agency of potential actors in the visual world is a critically important aspect of social cognition. Adult observers are generally capable of distinguishing real faces from artificial faces (even allowing for recent advances in graphics technology and motion capture); even small deviations from real facial appearance can lead to profound effects on face recognition. Here, we examined how early components of visual event-related potentials (ERPs) are affected by the “life” in human faces and animal faces. We presented participants with real and artificial faces of humans and dogs, and analyzed the response properties of the P100 and the N170 as a function of stimulus appearance and task (species categorization vs. animacy categorization). The P100 exhibited sensitivity to face species and animacy. We found that the N170's differential responses to human faces vs. dog faces depended on the task participants performed. Also, the effect of species was only evident for real faces of humans and dogs, failing to obtain with artificial faces. These results suggest that face animacy does modulate early components of visual ERPs – the N170 is not merely a crude face detector, but reflects the tuning of the visual system to natural face appearance.

Keywords: Face perception, event-related potentials, social cognition

Introduction

The use of artificial faces in visual media has become increasingly prevalent in the past decade and observers now encounter highly detailed synthetic agents in a range of settings. The effective use of artificial faces in applied settings is complicated, however, by the human visual system's exquisite sensitivity to deviations from natural appearance. In general, adult observers’ response to faces depends upon a fine-tuned representation of typical appearance that incorporates the statistics of each observer's experience. Faces that are not usually represented in an observer's normal, everyday visual experience are not recognized as accurately as faces that are more typically experienced, leading to measurable decrements in performance when other-race (Malpass & Kravitz, 1969), other-age (Kuefner et al., 2008), or other-species faces (Dufour et al., 2004) are used as experimental stimuli. Artificial faces represent another category of faces that observers have less experience with than human faces, possibly leading to specific patterns of perceptual sensitivity or insensitivity. Indeed, even objectively small deviations from real appearance may nonetheless result in poor or inefficient recognition performance, or worse, lead to the perception of an artificial face as “uncanny” or disturbing (Mori, 1970). Errors in the accurate rendering of the appearance of the eyes, skin tone, or configuration (MacDorman et al., 2009) of the face can lead to artificial faces looking profoundly disturbing. Even when synthetic faces are highly realistic, observers are nonetheless good at distinguishing them from real faces (Farid & Bravo, 2011). Performance in animacy categorization tasks is robust to a range of image transformations (Farid & Bravo, 2011), suggesting that the differences between real and artificial faces are encoded across a wide range of visual features.
The appearance of the eyes stands out, however, as a key feature used to assign “life” to a face (Looser & Wheatley, 2011), though interactions between the appearance of the eyes and the rest of the face suggest that a broader representation of natural appearance likely supports animacy categorization (Balas & Horski, 2012).

The neural response to faces is also sensitive to their category membership, reflecting the behavioral variability in recognition accuracy as a function of experience with different groups of faces. The N170 component, which is a common electrophysiological index of face processing (Bentin et al., 1996; Eimer, 2000), responds differentially to faces that are representative of observers’ dominant experience and multiple examples of less frequently seen face categories. The perceived race of face stimuli, for example, typically modulates the amplitude of the N170 (Hermann et al., 2007; Caldara et al., 2004; Balas & Nelson, 2010). The perceived age of faces also influences the amplitude of the N170 (Wiese, 2012; Wiese et al., 2012). In both cases, the effect of belonging to a less frequently seen face category leads to a larger, sometimes later, peak (though see Balas & Nelson, 2010). This is consistent with the well-known effect of face inversion on the N170 (Rossion et al., 2000), suggesting more broadly that deviations from typical face appearance (as defined by an observer's experience) lead to systematic changes in the neural response. The manner in which own- and other-group faces differ from one another varies substantially across distinct types of “other” faces – other-race faces differ from own-race faces on a range of dimensions including 3D shape and pigmentation (Balas & Nelson, 2010), while other-age faces differ from own-age faces in terms of contrast (Porcheron, Mauger, & Russell, 2013) and spatial frequency content (Mark et al., 1980), for example. Changes in the N170 component thus do not appear to reflect specific low-level differences between individual faces or face categories, but more likely result from deviation in any direction from the fine-tuned estimate of facial appearance established over a lifetime of experience with faces. Moreover, the effect of category membership on the N170 response also varies as a function of task.
Asking observers to perform individuation vs. categorization tasks during face learning leads to measurable differences in the other-race effect observed at this component (Stahl et al., 2010), though online task performance does not necessarily bias the N170 amplitude (Tanaka & Pierce, 2009). The N170 therefore is influenced by the congruency of facial appearance with past experience, and in some circumstances, also by the cognitive and perceptual demands of different recognition tasks. All of these effects suggest that the N170 is not merely related to a coarse first stage of face processing where faces are simply discriminated from other object classes. The observed sensitivity to the various category and task manipulations described above suggests that the N170 is instead sensitive to a broader range of perceptual and cognitive factors (Thierry et al., 2007b) that may be less specific to face categories than previously thought (Dering, Martin & Thierry, 2009).

Despite the extreme sensitivity of the human visual system to the difference between real and artificial faces and the consistent modulation of the N170 response by experience-defined face categories, differential neural responses to real and artificial faces at this component have not to date been observed. To our knowledge, the only previous electrophysiological study of the N170's response to real and artificial faces was recently reported by Wheatley et al. (2011), who showed participants luminance-matched real human faces, artificial human faces (mannequins) and clocks. Their analysis of both the N170 and the Vertex Positive Potential (or VPP) demonstrated a robust difference between clocks and both types of face, but no significant differences between the two kinds of faces. Instead, differential responses to real and artificial faces obtained over an extended time window from 400ms-1000ms post-stimulus onset, suggesting that later perceptual and cognitive processes distinguished between faces based on perceived animacy. Wheatley et al. therefore posited a two-step model of face processing composed of an early stage that is solely responsible for detecting face-like structure, and a secondary stage that supports finer-grained distinctions between categories. To some extent, this is consistent with recent results describing robust effects of age and race category at later ERP components like the P200 and N250 (Stahl, Wiese, & Schweinberger, 2008; Wiese, Stahl, & Schweinberger, 2009; Tanaka & Pierce, 2009), but is potentially discrepant with regard to the previously described reports of N170 sensitivity to category membership. Besides this interesting theoretical question about the time-course of differential face processing based on categories defined by experience, there are other methodological questions raised by Wheatley et al.'s study.
For example, participants in their study viewed stimuli passively, so it was not possible to examine potential task effects. To what extent do animacy effects observed at early components depend upon perceptual and cognitive task demands? Also, the comparison between faces and clocks offers a means for comparing the effect of animacy to a baseline condition, but does not allow for comparison between different types of faces. How do animacy effects differ (if at all) within the category of faces? The visual system is particularly sensitive to variation in faces as a function of expertise, so the effects of real vs. synthetic appearance may depend critically on face category. We suggest, therefore, that there are several theoretically important open questions that remain regarding when and how the brain distinguishes between real and artificial faces.

In the current study, our goal was to examine how both the P100 and N170 components were modulated by the animacy category of face stimuli, the species category of faces, and the recognition task assigned to observers. We presented participants with grayscale images of human and dog faces, which were either real, living individuals, or artificial simulacra of category members (e.g. toy dogs and dolls). Our design thus permitted us to examine how the distinction between real and artificial faces might be manifested at the N170, and also how perceived animacy might influence differential responses as a function of species or task. We also chose to examine the effects of these factors on the P100 response, a marker of early visual processing that also exhibits sensitivity to faces compared to other objects (Hermann et al., 2005; Dering et al., 2011). While the N170 has traditionally been more widely investigated as a putative face-sensitive ERP component, the P100 also exhibits category-specific responses, as do related neural markers measured using MEG (Liu, Harris, & Kanwisher, 2002) and TMS (Pitcher et al., 2007). Considering both the P100 and the N170 is thus critical to characterizing how animacy may impact early visual ERPs. Finally, we also included an analysis of late components of the ERP waveform (400-1000ms) to allow comparison between our results and Wheatley et al.'s effects. We find that the P100 exhibits sensitivity both to the species of faces and to face animacy. Further, we find that animacy affects the extent to which the N170 responds differently to faces of different species. In both cases, real vs. artificial face appearance affects the neural response measured at early visual components.

Methods

Subjects

We recruited 20 right-handed participants (10 female) from the NDSU community. Participants ranged in age from 18 to 37 years old (average age, 23 years old) and reported normal or corrected-to-normal vision. Participants gave written informed consent prior to beginning the study and received either course credit or monetary compensation for their participation.

Stimuli

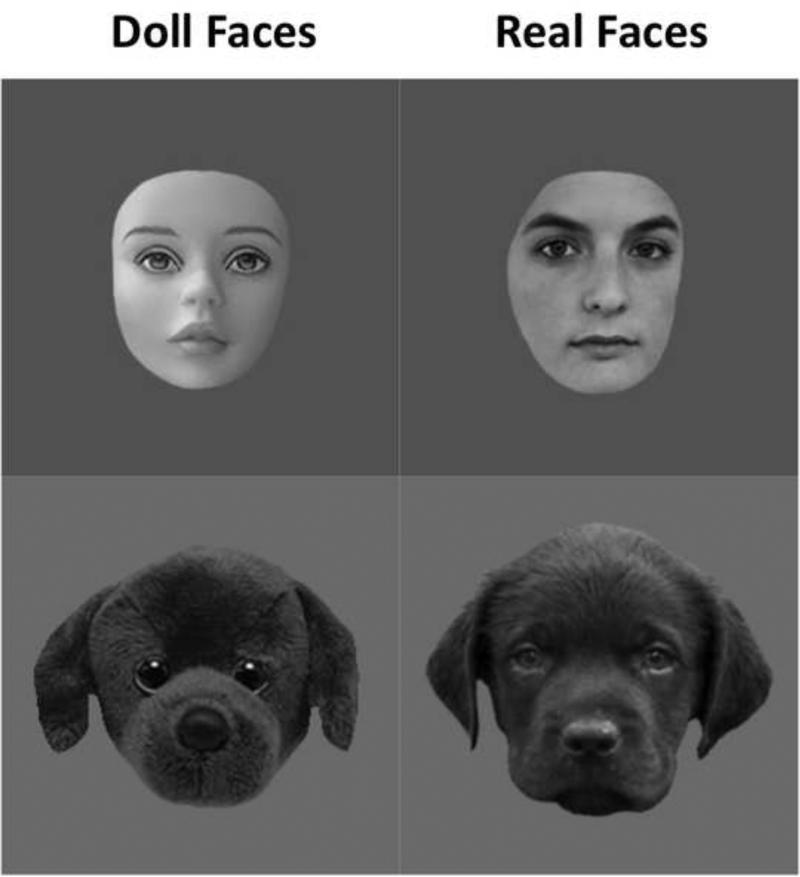

Participants viewed 16 unique images: Eight of these were pictures of human faces (4 real faces, 4 doll faces) and eight were pictures of dog faces (4 real faces, 4 doll faces). We selected doll faces such that each one was an approximate visual match for a unique real face (Figure 1), and the intensity histogram of each doll face was matched to the intensity histogram of the real face selected as its match. We did not, however, match all images for mean luminance. All images were 256×256 pixels in size and presented in grayscale to the participants.

Figure 1.

Examples of the real and artificial human and dog stimuli used in our study.

Procedure

Following application of the sensor net, participants sat in a sound-attenuated, darkened room approximately 50cm away from the display. We presented stimulus images to our participants using custom routines written in EPrime v2.0. On an individual trial, a single image was presented on a medium-gray background for 500ms. In separate blocks, participants were asked to indicate either the species category of each image (human or dog) or the animacy category of each image (real or artificial). Participants responded using a large button box and were asked to withhold responses until stimulus offset. Each image presentation was followed by an inter-stimulus interval of variable duration (drawn at random from a uniform distribution spanning 800-1500ms).

Participants viewed 60 images per condition (15 repetitions of each of the 4 unique faces per category) yielding a grand total of 240 stimulus images per block and 480 images for the entire experiment. Image order was fully randomized within blocks, and block order was balanced across participants.
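The trial structure described above can be illustrated with a short sketch. This is not the EPrime routine used in the study; it is a minimal Python illustration that assumes only the counts and timings stated in the text (4 unique faces per species × animacy cell, 15 repetitions each, 500ms presentations, and an inter-stimulus interval drawn uniformly from 800-1500ms). The function name `build_block`, the dictionary keys, and the exemplar indices are hypothetical.

```python
import random

SPECIES = ("human", "dog")
ANIMACY = ("real", "doll")
EXEMPLARS_PER_CELL = 4       # unique faces per species x animacy cell
REPETITIONS = 15             # presentations of each unique face per block
STIM_DURATION_MS = 500       # stimulus duration stated in the Procedure
ISI_RANGE_MS = (800, 1500)   # bounds of the uniform inter-stimulus interval


def build_block(task, rng):
    """Return a fully randomized trial list for one task block (hypothetical helper)."""
    trials = []
    for species in SPECIES:
        for animacy in ANIMACY:
            for exemplar in range(EXEMPLARS_PER_CELL):
                for _ in range(REPETITIONS):
                    trials.append({
                        "task": task,
                        "species": species,
                        "animacy": animacy,
                        "exemplar": exemplar,  # illustrative index, not a real file name
                        "duration_ms": STIM_DURATION_MS,
                    })
    rng.shuffle(trials)  # image order fully randomized within the block
    for trial in trials:
        trial["isi_ms"] = rng.uniform(*ISI_RANGE_MS)
    return trials


rng = random.Random(0)  # fixed seed only so the sketch is reproducible
species_block = build_block("species", rng)
animacy_block = build_block("animacy", rng)
total_trials = len(species_block) + len(animacy_block)
```

Under these assumptions each block contains 4 cells × 60 presentations, which reproduces the 240 trials per block and 480 trials overall reported above.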

ERP recording and analysis

We recorded event-related potentials (ERPs) with a 64-channel Hydrocel Geodesic Sensor Net v2.0 (Electrical Geodesics Inc., Eugene, OR). We recorded EEG continuously, referenced to a single vertex electrode (Cz). We amplified the raw signal with an EGI NetAmps 200 amplifier using a band-pass filter of 0.1-100 Hz and a sampling rate of 250 Hz. Prior to the start of each experimental session, we checked impedances online and began the experiment after establishing stable impedances below a threshold of 100 kΩ.

Following each experimental session, we processed each subject's continuous EEG data using NetStation v4.3.1 (Eugene, OR). We applied a 30-Hz lowpass filter and segmented individual trials using stimulus onset to define a 100ms pre-stimulus baseline period and a 900ms post-onset window, yielding a 1000ms segment for each trial. We baseline-corrected these segments by subtracting the average voltage measured in the baseline period from the entire segment and, following this, we applied automated routines for ocular artifact identification and rejection. We also manually rejected data from any individual sensors that had lost contact and rejected entire segments if there was evidence of wide-spread drift, eye movements or eye blinks, or more than 7 rejected sensors. We used spherical spline interpolation to replace the data from rejected sensors, and subsequent to this, we computed individual subject averages for each experimental condition. Finally we re-referenced the data from each subject to an average reference – the choice of reference has consequences for the size of the effects measured at various components, but the use of an average re-reference has been shown to be a useful choice for examining the N170 (Joyce & Rossion, 2005). We rejected the data from 4 subjects on the basis of too few usable segments within some experimental conditions (<40 of 60 trials), yielding 16 participants in the final sample. The subjects we discarded were removed from the analysis on the basis of either low numbers of usable trials in some conditions or because of widespread motion artifacts, which were likely the result of poorly fitting electrode nets. In the former case, unequal numbers of trials per condition can introduce artificial amplitude differences (Luck, 2005) between conditions simply because fewer trials contribute to the average in some cases. In both cases, these subjects did not provide reliable data and were thus not used in the following analyses.
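To make the segmentation, baseline correction, and average re-referencing steps concrete, the following is a minimal sketch in plain Python. It is not the NetStation pipeline used in the study, and it omits filtering, artifact rejection, and spline interpolation entirely. It assumes only the stated sampling rate (250 Hz) and segment boundaries (100ms before to 900ms after stimulus onset); all function names are hypothetical.

```python
SAMPLING_RATE_HZ = 250
PRE_MS, POST_MS = 100, 900   # baseline and post-onset durations from the text


def ms_to_samples(ms, rate_hz=SAMPLING_RATE_HZ):
    """Convert a duration in milliseconds to a whole number of samples."""
    return int(round(ms * rate_hz / 1000))


def extract_segment(signal, onset_index):
    """Cut one trial around stimulus onset and subtract the mean baseline voltage."""
    pre = ms_to_samples(PRE_MS)
    post = ms_to_samples(POST_MS)
    if onset_index - pre < 0 or onset_index + post > len(signal):
        raise ValueError("segment would run past the edge of the recording")
    segment = signal[onset_index - pre:onset_index + post]
    baseline = sum(segment[:pre]) / pre
    return [value - baseline for value in segment]


def average_reference(channels):
    """Subtract the across-channel mean from every channel at each time point."""
    n_channels = len(channels)
    means = [sum(column) / n_channels for column in zip(*channels)]
    return [[v - m for v, m in zip(channel, means)] for channel in channels]


# Toy single-channel recording: 5 microvolts before onset, 8 microvolts after.
toy_signal = [5.0] * 30 + [8.0] * 300
toy_segment = extract_segment(toy_signal, onset_index=30)
toy_rereferenced = average_reference([[1.0, 2.0], [3.0, 4.0]])
```

At 250 Hz the baseline spans 25 samples and the post-onset window 225 samples, so each segment contains 250 samples, matching the 1000ms segment length described above.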

We selected four electrodes per hemisphere as channels of interest for our analysis of these components, which were selected based on previous reports and visual identification of the channels where these components were maximal. These electrodes included T5 and T6 in the left and right hemispheres, respectively, and immediately adjacent electrodes. We selected a time window of 100-140ms post-stimulus onset for our analysis of the P100 and a time window of 150-190ms for our analysis of the N170. We selected these time windows to accommodate the variability observed across participants, ensuring that component peaks were not truncated. We described these components using the peak amplitude for each condition, averaged across the electrode group within each hemisphere. We also calculated the latency-to-peak for these components and verified the values obtained manually to be certain that the data was not corrupted by artifacts introduced by the imposed time window or subject to high levels of local noise in the averaged EEG signal. We also included an analysis of the mean amplitude of the ERP waveform between 400ms and 900ms, similar to the slow-wave analysis carried out by Wheatley et al. (2011). The extended time window for this analysis and the lack of easily discernible peaks (save for the offset potential observed around 600ms) precluded a meaningful analysis of latency. As described below, these descriptors were analyzed using a repeated-measures ANOVA to determine the impact of our experimental manipulations.
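The component measures described above (peak amplitude, latency-to-peak, and mean amplitude within a window) can be sketched as follows. This is an illustration under stated assumptions rather than the analysis code used in the study: it operates on a single averaged waveform sampled at 250 Hz with a 100ms pre-stimulus baseline, it keeps only samples that fall inside the requested window, and it does not address how values were combined across the electrode group. All function names are hypothetical.

```python
import math

SAMPLING_RATE_HZ = 250
BASELINE_MS = 100  # the segment starts 100 ms before stimulus onset


def window_indices(start_ms, end_ms):
    """Return first and last sample indices lying inside a post-stimulus window."""
    first = math.ceil((start_ms + BASELINE_MS) * SAMPLING_RATE_HZ / 1000)
    last = math.floor((end_ms + BASELINE_MS) * SAMPLING_RATE_HZ / 1000)
    return first, last


def peak_in_window(segment, start_ms, end_ms, polarity):
    """Return (peak amplitude, latency in ms); polarity > 0 seeks a positive peak."""
    first, last = window_indices(start_ms, end_ms)
    window = segment[first:last + 1]
    amplitude = max(window) if polarity > 0 else min(window)
    index = first + window.index(amplitude)
    latency_ms = index * 1000 / SAMPLING_RATE_HZ - BASELINE_MS
    return amplitude, latency_ms


def mean_amplitude(segment, start_ms, end_ms):
    """Return the mean voltage across all samples inside the window."""
    first, last = window_indices(start_ms, end_ms)
    window = segment[first:last + 1]  # slicing stops at the end of the segment
    return sum(window) / len(window)


# Toy waveform: a positive deflection at 120 ms and a negative one at 168 ms.
toy = [0.0] * 250
toy[55] = 5.0    # sample 55 corresponds to 120 ms post-onset
toy[67] = -4.0   # sample 67 corresponds to 168 ms post-onset
p100 = peak_in_window(toy, 100, 140, polarity=+1)
n170 = peak_in_window(toy, 150, 190, polarity=-1)
slow_wave = mean_amplitude(toy, 400, 900)
```

Because samples are 4ms apart at this sampling rate, window edges such as 150ms fall between samples; the sketch rounds inward, which is one reasonable choice among several.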

Results

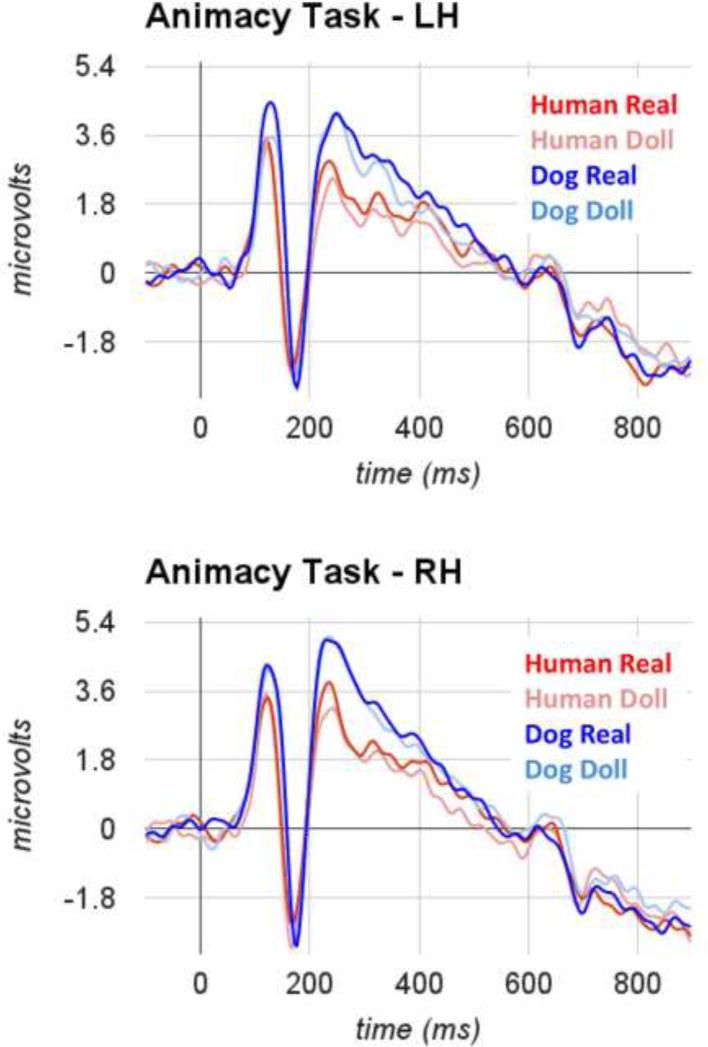

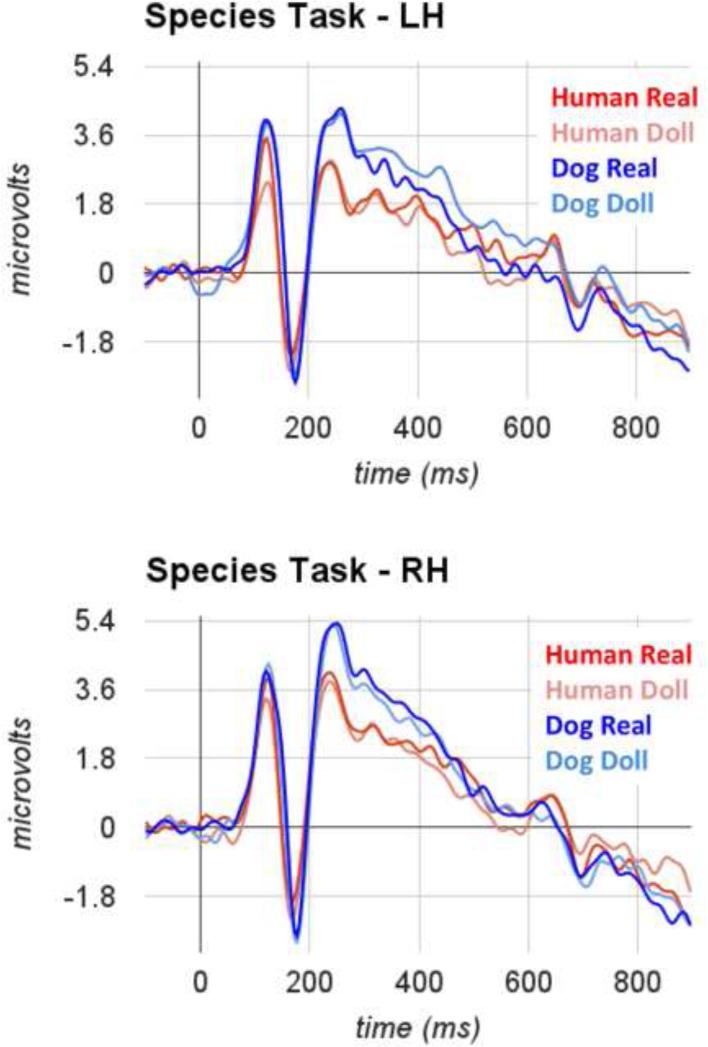

Each participant in our final sample exhibited a clear P100-N170 complex over occipito-temporal electrodes, with similar topography for the P100 and N170. In Figure 2a (animacy task) and Figure 2b (species task), we display average ERPs for each condition, averaged across the sensors within right and left hemisphere groups separately.

Figure 2a.

Grand average ERPs measured over occipito-temporal sensors for real and artificial faces of humans and dogs during completion of the animacy task.

Figure 2b.

Grand average ERPs measured over occipito-temporal sensors for real and artificial faces of humans and dogs during completion of the species task.

For all analyses of peak amplitudes and latencies, we submitted the values obtained across conditions for each subject to a 2×2×2×2 repeated-measures ANOVA. Task (species or animacy category judgment), Species category (Human or Dog), Animacy category (Real or Doll), and hemisphere (Right or Left) were included as within-subject factors.

P100 amplitude

We observed main effects of species category (F(1,15)=28.2, η2=0.65, p<0.001) and animacy category (F(1,15)=4.7, η2=0.24, p=0.048). These main effects were driven by a larger peak amplitude in response to dog faces (M=5.05, s.e.m.=0.55) than to human faces (M=4.23, s.e.m.=0.49) and a larger peak amplitude in response to artificial faces (M=4.83, s.e.m.=0.53) than to real faces (M=4.54, s.e.m.=0.52). The main effect of animacy category was qualified by an interaction between animacy category and hemisphere (F(1,15)=9.59, η2=0.39, p=0.007). Post-hoc tests revealed that this interaction was driven by a hemisphere-dependent difference between real and artificial faces such that a significant difference was evident only in the left hemisphere, but not the right. No other main effects or interactions reached significance.
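As a consistency check on the effect sizes above, the following sketch recomputes them from the reported F statistics. The text does not state which variant of η2 was used; the assumption here is partial eta squared, obtained from an F ratio as F·df_effect / (F·df_effect + df_error). The function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F value, reported effect size) for the P100 amplitude effects, all with df = (1, 15).
reported = {
    "species": (28.2, 0.65),
    "animacy": (4.7, 0.24),
    "animacy x hemisphere": (9.59, 0.39),
}

recomputed = {
    name: round(partial_eta_squared(f_value, 1, 15), 2)
    for name, (f_value, _) in reported.items()
}
matches = {name: recomputed[name] == reported[name][1] for name in reported}
```

Under this assumption the recomputed values agree with the reported ones to two decimal places, which suggests (but does not confirm) that the reported η2 values are partial eta squared.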

P100 Latency

The results of our analysis of P100 latency-to-peak values revealed effects that were largely similar to those obtained for peak amplitude of this component. We observed a main effect of species category (F(1,15)=10.50, η2=0.41, p=0.005), such that longer latencies were evident for dog faces (M=119 ms, s.e.m.=2 ms) than for human faces (M=116 ms, s.e.m.=1.9 ms). We also observed an interaction between animacy category and hemisphere (F(1,15)=12.43, η2=0.45, p=0.003), such that the effect of animacy category was most evident in the left hemisphere. No other main effects or interactions reached significance.

N170 amplitude

We observed no main effects of face species, face animacy, task, or hemisphere on the N170 peak amplitude. We did, however, find a significant interaction between task and species category (F(1,15)=8.18, η2=0.35, p=0.012) and an additional interaction between animacy category and species category (F(1,15)=4.25, η2=0.22, p=0.05). Post-hoc tests revealed that the interaction between species category and task was driven by a significant difference between peak amplitudes to human faces and dog faces during completion of the species categorization task (Mhuman=−3.30, Mdog=−3.94) that was not evident during completion of the animacy categorization task (Mhuman=−3.53, Mdog=−3.57). The interaction between species category and animacy category was driven by a significant difference between species categories for real face stimuli (Mhuman=−3.24, Mdog=−3.88) that was not evident when artificial faces were compared (Mhuman=−3.58, Mdog=−3.62). No other interactions reached significance.

N170 latency

The only effect we observed on the N170 latency-to-peak was a main effect of species (F(1,15)=6.10, η2=0.29, p=0.026). The effect was driven by significantly longer latencies to dog faces (M=169ms, s.e.m.=2ms) than to human faces (M=164ms, s.e.m.=2ms). No other main effects or interactions reached significance.

Slow-wave mean amplitude

Our analysis of the mean amplitude of the ERP waveform between 400ms and 900ms yielded no significant main effects and no interactions. Critically, the effect of animacy category reported by Wheatley et al. (2011) did not reach significance in our data (F(1,15)=0.55, p=0.47). Planned comparisons revealed that this effect was also not significant when we considered the data from the human images separately (MReal=−0.31; MDoll=−0.30).

Discussion

Our results offer new insights into how the tuning of face representations to the statistics of real face appearance affects face processing. Like Wheatley et al. (2011), we find little evidence for a robust effect of animacy on the N170 (and by extension, the VPP). However, we did observe two critical interactions that suggest that the differential response to faces of different species depends both on the task participants perform and the perceived animacy of the faces. The “life” in a face thus does not appear to have a direct impact on the N170 – within-species comparisons between real and artificial faces do not reveal substantial differences in the amplitude or latency of the component. Perceived animacy does, however, affect the extent to which the N170 responds differently to human and dog faces. This species effect obtains when observers view real faces, but not when artificial faces are considered.

Differential responses to human and animal faces have been observed before at the N170. The N170 response to non-human primate faces is typically larger and has a longer peak latency than the response to human faces, an effect that appears to be largely driven by the appearance of the eyes (Itier, Van Roon, & Alain, 2011). Considering animal faces more broadly, Rousselet et al. (2004) compared the N170 response to upright and inverted pictures of human faces, a wide variety of animal faces, and non-face objects, and observed both that the difference between upright human and animal faces was only marginally significant and that the inversion effect only obtained with human faces. The variability of animal faces (including birds, canines, etc.) in this study makes comparison with our results challenging, but we suggest that overall the evidence supports species-specific processing at the N170. Our results extend these findings by demonstrating two novel characteristics of the differential processing of faces according to species. First, species-specific processing depends on task. Rousselet et al.'s results (2004) demonstrated that target/non-target status did not modulate the N170 component in their task, but our results – which demonstrate task-dependent processing of human and animal faces – suggest that task relevance may matter. When participants are making animacy judgments, the N170 no longer responds differentially to faces from different species, possibly due to attentional factors. Second, species-specific processing depends on perceived animacy. Artificial faces did not elicit a species effect in our task, but real faces did. Animacy thus does not directly impact the N170, but efficient face processing at this stage is clearly affected by deviations from natural appearance, including our manipulation of face animacy. The “life” in a face does not appear to substantially impact the first stage of face detection as postulated by Wheatley et al. (2011), but other early processes subserving categorization by species are affected by artifice. We note also that we find no evidence in our data of a late-component response to the animacy of faces. This may have to do with the differences in task between our study and Wheatley et al.'s design, in which participants viewed the stimuli passively. Furthermore, it is possible that these late components are more closely related to later cognitive processing that is more robustly measured at anterior electrodes. We do not therefore conclude that the absence of such an effect in our study speaks to the absence of late-stage processing of animacy, but rather demonstrates that such effects may depend critically on task demands, stimulus factors, and recording site.

We also observed main effects of both species and animacy at the P100 component. Specifically, we observed larger peak amplitudes at this component in response to dog faces, and in response to artificial faces. Compared to previous reports that described larger P100 responses to faces compared to non-face objects (Thierry et al., 2007a; Dering et al., 2009; 2011), these data are somewhat curious, since we might consider dog faces and artificial faces to lie somewhere between faces and objects on a single continuum of “face-ness.” However, Dering et al. (2011) also demonstrated that the P100 is modulated by factors that increase how difficult faces are to process. If dog faces and artificial faces are therefore processed as faces, the higher amplitudes we observed in our task may reflect decreased processing fluency for these faces that are not consistent with the faces that our observers typically must distinguish between. The P100 is also sensitive to low-level image properties (Rossion et al., 2003; Nakashima et al., 2008), and while it is possible that some low-level differences between our stimulus categories could be partly responsible for the effects we observe at this component, the dissociation between the effects at the P100 and the N170 (both of which can be affected by low-level properties) suggests instead that a higher-level interpretation of the effects observed at the P100 is sensible. Whether this early differential response is due to category-specific differences in local part appearance (Seyama & Nagayama, 2007) or global differences in face configuration (Green et al., 2008) is an intriguing question for future study, which may reveal critical properties of the early neural representation of facial appearance. Overall, our data demonstrate that perceived animacy either directly modulates the response of early visual ERP components (the P100) or influences other category-specific responses (the N170).
The neural processing of species category is therefore tuned to natural appearance.

Our results also raise several important questions that can be addressed by further investigation of the role of natural vs. artificial appearance in face processing and recognition. Comparing the response to upright and inverted faces with these stimuli could be one useful way to try to understand what is happening at early stages of visual processing. Additional insights into the difference between the neural processing of real and artificial faces might be gained by carrying out a pattern classification analysis of ERP data. Using such a classification technique, Moulson et al. (2011) found that although the N170 responds with a relatively steep threshold to face-like stimuli that varied in facial resemblance, a pattern classification analysis revealed a gradient of “Face-ness” at occipito-temporal sites. Given previous reports of sensitivity to other face categories at late components including the P200, N250, and others, a multivariate classifier may reveal sensitivity to stimulus variables that cannot be easily seen when assessing a single component. Finally, there is the intriguing question of what aspects of artificial appearance the P100 and the N170 might be specifically sensitive to – our doll faces differed from real faces on multiple dimensions. By manipulating specific qualities of our face stimuli, we may be able to determine more about the tuning function that leads to the pattern of results we have observed here.

To conclude, our results demonstrate that the perceived animacy of a face impacts the neural response to faces of both own-species and other-species individuals. Thus, early stages of face processing must, to some extent, be sensitive to whether faces look “alive” or not. We suggest that understanding the processing of artificial faces by the human visual system may offer important insights into the nature of the boundaries that define the face categories observers establish based on their experience of the social world.

Highlights.

We compared ERP responses to real and inanimate human and dog faces.

Participants categorized animacy or species in our task.

A differential species response at the N170 depends on animacy.

The visual system is tuned to real face appearance.

Acknowledgements

This publication was made possible by ND EPSCoR NSF #EPS-0814442 and COBRE Grant P20 GM103505 from the National Institute of General Medical Sciences, a component of the National Institutes of Health (NIH). Its contents are the sole responsibility of the authors and do not necessarily represent the official views of NIGMS or NIH. Thanks to two anonymous reviewers for their comments, as well as to Alyson Saville for assistance with recruiting and data collection.

Contributor Information

Benjamin Balas, Department of Psychology North Dakota State University Fargo, ND.

Kami Koldewyn, Department of Brain and Cognitive Sciences Massachusetts Institute of Technology Cambridge, MA.

References

- 1.Balas B, Horski J. You can take the eyes out of the doll, but... Perception. 2012;41:361–364. doi: 10.1068/p7166. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Balas B, Nelson CA. The role of face shape and pigmentation in other-race face perception: An electrophysiological study. Neuropsychologia. 2010;48:498–506. doi: 10.1016/j.neuropsychologia.2009.10.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Caldara R, Rossion B, Bovet P, Hauert CA. Event-related potentials and time course of the ‘other-race’ face classification advantage. Cognitive Neuroscience and Neuropsychology. 2004;15:905–910. doi: 10.1097/00001756-200404090-00034. [DOI] [PubMed] [Google Scholar]

- 5.Dering B, Martin CD, Moro S, Pegna A, Thierry G. Face-sensitive processes one hundred milliseconds after picture onset. Frontiers in Human Neuroscience. 2011;5:93. doi: 10.3389/fnhum.2011.00093. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Dering B, Martin CD, Thierry G. Is the N170 peak of visual event-related potentials car-selective? Neuroreport. 2009;20:902–906. doi: 10.1097/WNR.0b013e328327201d. [DOI] [PubMed] [Google Scholar]

- 7. Dufour V, Coleman M, Campbell R, Petit O, Pascalis O. On the species-specificity of face recognition in human adults. Current Psychology of Cognition. 2004;22:315–333.

- 8. Eimer M. The face-specific N170 component reflects late stages in the structural encoding of faces. NeuroReport. 2000;11:2319–2324. doi: 10.1097/00001756-200007140-00050.

- 9. George N, Evans J, Fiori N, Davidoff J, Renault B. Brain events related to normal and moderately scrambled faces. Cognitive Brain Research. 1996;4:65–76. doi: 10.1016/0926-6410(95)00045-3.

- 10. Green RD, MacDorman KF, Ho C-C, Vasudevan SK. Sensitivity to the proportions of faces that vary in human likeness. Computers in Human Behavior. 2008;24(5):2456–2474.

- 11. Hermann MJ, Ehlis AC, Ellgring H, Fallgatter AJ. Early stages (P100) of face perception in humans as measured with event-related potentials (ERPs). Journal of Neural Transmission. 2005;112:1073–81. doi: 10.1007/s00702-004-0250-8.

- 12. Hermann MJ, Schreppel T, Jager D, Koehler S, Ehlis AC, Fallgatter AJ. The other-race effect for face perception: an event-related potential study. Journal of Neural Transmission. 2007;114:951–957. doi: 10.1007/s00702-007-0624-9.

- 13. Itier R, Van Roon P, Alain C. Species sensitivity of early face and eye processing. Neuroimage. 2011;54:705–713. doi: 10.1016/j.neuroimage.2010.07.031.

- 14. Joyce C, Rossion B. The face-sensitive N170 and VPP components manifest the same brain processes: The effect of reference electrode site. Clinical Neurophysiology. 2005;116:2613–2631. doi: 10.1016/j.clinph.2005.07.005.

- 15. Kuefner D, Macchi Cassia V, Picozzi M, Bricolo E. Do All Kids Look Alike? Evidence for an Other-Age Effect in Adults. Journal of Experimental Psychology: Human Perception and Performance. 2008;34:811–817. doi: 10.1037/0096-1523.34.4.811.

- 16. Liu J, Harris A, Kanwisher N. Stages of processing in face perception: An MEG study. Nature Neuroscience. 2002;5(9):910–6. doi: 10.1038/nn909.

- 17. Looser CE, Wheatley T. The Tipping Point of Animacy: How, When, and Where We Perceive Life in a Face. Psychological Science. 2010;21:1854–1862. doi: 10.1177/0956797610388044.

- 18. Luck SJ. An Introduction to the Event-Related Potential Technique. MIT Press; Cambridge, MA: 2005.

- 19. MacDorman K, Green R, Ho C, Koch C. Too real for comfort? Uncanny responses to computer generated faces. Computers in Human Behavior. 2009;25:695–710. doi: 10.1016/j.chb.2008.12.026.

- 20. Malpass RS, Kravitz J. Recognition of faces of own and other race. Journal of Personality and Social Psychology. 1969;13:330–334. doi: 10.1037/h0028434.

- 21. Mark LS, Pittenger JB, Hines H, Carello C, Shaw RE, et al. Wrinkling and head shape as coordinated sources of age-level information. Perception & Psychophysics. 1980;27:117–124.

- 22. Mori M. The uncanny valley. Energy. 1970;7:33–5.

- 23. Moulson M, Balas B, Nelson C, Sinha P. EEG correlates of categorical and graded face perception. Neuropsychologia. 2011;49:3847–3853. doi: 10.1016/j.neuropsychologia.2011.09.046.

- 24. Nakashima T, Kaneko K, Goto Y, Abe T, Mitsudo T, Ogata K, Makinouchi A, Tobimatsu S. Early ERP components differentially extract facial features: evidence for spatial frequency-and-contrast detectors. Neuroscience Research. 2008;62:225–235. doi: 10.1016/j.neures.2008.08.009.

- 25. Pitcher D, Walsh V, Yovel G, Duchaine B. TMS evidence for the involvement of the right occipital face area in early face processing. Current Biology. 2007;17(18):1568–1573. doi: 10.1016/j.cub.2007.07.063.

- 26. Porcheron A, Mauger E, Russell R. Aspects of Facial Contrast Decrease with Age and Are Cues for Age Perception. PLoS ONE. 2013;8(3):e57985. doi: 10.1371/journal.pone.0057985.

- 27. Rossion B, Gauthier I, Tarr MJ, Despland B, Bruyer R, Linotte S, Crommelinck M. The N170 occipito-temporal component is delayed and enhanced to inverted faces but not to inverted objects: an electrophysiological account of face-specific processes in the human brain. Neuroreport. 2000;11:69–74. doi: 10.1097/00001756-200001170-00014.

- 28. Rossion B, Joyce C, Cottrell GW, Tarr MJ. Early lateralization and orientation tuning for face, word, and object processing in the visual cortex. Neuroimage. 2003;20:1609–1624. doi: 10.1016/j.neuroimage.2003.07.010.

- 29. Rousselet GA, Macé MJ-M, Fabre-Thorpe M. Animal and human faces in natural scenes: How specific to human faces is the N170 ERP component? Journal of Vision. 2004;4:13–21. doi: 10.1167/4.1.2.

- 30. Seyama J, Nagayama RS. The uncanny valley: The effect of realism on the impression of artificial human faces. Presence: Teleoperators and Virtual Environments. 2007;16(4):337–351.

- 31. Stahl J, Wiese H, Schweinberger SR. Expertise and own-race bias in face processing: an event-related potential study. Neuroreport. 2008;19:583–587. doi: 10.1097/WNR.0b013e3282f97b4d.

- 32. Stahl J, Wiese H, Schweinberger SR. Learning task affects ERP correlates of the own-race bias, but not recognition memory performance. Neuropsychologia. 2010;48:2027–2040. doi: 10.1016/j.neuropsychologia.2010.03.024.

- 33. Tanaka JW, Pierce LJ. The neural plasticity of other-race face recognition. Cognitive, Affective, and Behavioral Neuroscience. 2009;9:122–131. doi: 10.3758/CABN.9.1.122.

- 34. Thierry G, Martin CD, Downing P, Pegna A. Controlling for interstimulus perceptual variance abolishes N170 face selectivity. Nature Neuroscience. 2007a;10:505–511. doi: 10.1038/nn1864.

- 35. Thierry G, Martin CD, Downing P, Pegna A. Is the N170 sensitive to the human face or several intertwined perceptual and conceptual factors? Nature Neuroscience. 2007b;10:802–803. doi: 10.1038/nn1864.

- 36. Wheatley T, Weinberg A, Looser C, Moran T, Hajcak G. Mind Perception: Real but Not Artificial Faces Sustain Neural Activity beyond the N170/VPP. PLoS ONE. 2011;6(3):e17960. doi: 10.1371/journal.pone.0017960.

- 37. Wiese H. The role of age and ethnic group in face recognition memory: ERP evidence from a combined own-age and own-race bias study. Biological Psychology. 2012;89:137–147. doi: 10.1016/j.biopsycho.2011.10.002.

- 38. Wiese H, Komes J, Schweinberger SR. Daily-life contact affects the own-age bias in elderly participants. Neuropsychologia. 2012;50:3496–3508. doi: 10.1016/j.neuropsychologia.2012.09.022.

- 39. Wiese H, Stahl J, Schweinberger SR. Configural processing of other-race faces is delayed but not decreased. Biological Psychology. 2009;81:103–109. doi: 10.1016/j.biopsycho.2009.03.002.