Abstract

During speech comprehension, bilinguals co-activate both of their languages, resulting in cross-linguistic interaction at various levels of processing. This interaction has important consequences for both the structure of the language system and the mechanisms by which the system processes spoken language. Using computational modeling, we can examine how cross-linguistic interaction affects language processing in a controlled, simulated environment. Here we present a connectionist model of bilingual language processing, the Bilingual Language Interaction Network for Comprehension of Speech (BLINCS), wherein interconnected levels of processing are created using dynamic, self-organizing maps. BLINCS can account for a variety of psycholinguistic phenomena, including cross-linguistic interaction at and across multiple levels of processing, cognate facilitation effects, and audio-visual integration during speech comprehension. The model also provides a way to separate two languages without requiring a global language-identification system. We conclude that BLINCS serves as a promising new model of bilingual spoken language comprehension.

Keywords: spoken language comprehension, modeling speech processing, connectionist models, self-organizing maps, language interaction

Modeling language processing in monolinguals and bilinguals

Knowing more than one language can have a substantial impact on the neurological or cognitive mechanisms that underlie speech comprehension. For example, as bilinguals recognize spoken words, they often access information from both of their languages simultaneously (FitzPatrick & Indefrey, 2010; Marchman, Fernald & Hurtado, 2010; Marian & Spivey, 2003a, b; Thierry & Wu, 2007). In addition, language-related factors that are known to affect monolingual processing, such as lexical frequency (Cleland, Gaskell, Quinlan & Tamminen, 2006) or neighborhood density (Vitevitch & Luce, 1998, 1999), can influence bilingual processing both within a single language and across languages (e.g., van Heuven, Dijkstra & Grainger, 1998). Bilinguals are further affected by features specific to multilingual experience, like age of second language acquisition, relative proficiency in the two languages, and language dominance (Bates, Devescovi & Wulfeck, 2001; Kroll & Stewart, 1994; Marian, 2008).

One way in which we may be able to better understand how two languages interact within a single system, as well as the consequences that dual-language input may have on language processing, is through computational modeling of the language system. Computational modeling of language processing allows for the creation of simulated, controlled environments, where specific factors can be manipulated in order to predict their effects on processing. Furthermore, models can serve as a critical tool for honing or refining a pre-existing theory about how the language system operates.

The development of computational models of bilingualism has benefited from the groundwork laid out by the monolingual language processing literature (for a review, see Chater & Christiansen, 2008; see also, Forster, 1976; Marslen-Wilson, 1987; McClelland & Elman, 1986; Morton, 1969; Norris, 1994; Norris & McQueen, 2008). Many early models of bilingual language processing were inspired by monolingual connectionist models. For example, the Bilingual Interactive Activation+ (BIA+) model (Dijkstra & van Heuven, 2002; see also Dijkstra & van Heuven, 1998; Grainger & Dijkstra, 1992) began as an extension of the monolingual Interactive Activation model developed by McClelland and Rumelhart (1981), and focused on the processing of visual/orthographic input in bilinguals.1 Similarly, the Bilingual Model of Lexical Access (BIMOLA; Grosjean, 1988, 1997) was inspired by the TRACE model of monolingual speech perception (McClelland & Elman, 1986). Recently, Li and Farkas (2002) developed the SOMBIP (Self-Organizing Model of Bilingual Processing), a distributed neural network model that uses unsupervised learning to capture bilingual lexical access, influenced by Miikkulainen’s (1993, 1997) self-organizing DISLEX model. Many of the features of the SOMBIP model were expanded by Zhao and Li (2007, 2010) to create the DevLex-II, a multi-layered, self-organizing model that captures bilingual lexical interaction and development. Importantly, these bilingual processing models do not simply add a second language to an existing architecture, but rather extend previous monolingual research in order to capture the dynamic interaction between a bilingual’s two languages.

Because the interaction of a bilingual’s two languages can be conceptualized in many ways, the differences between the various bilingual models serve to highlight some of the issues and concerns related to bilingual language processing. For example, while the BIA+ and SOMBIP assume an integrated lexicon, the BIMOLA separates the two languages at the lexical level. Differences in the architecture of the system invariably result in differences in how a bilingual’s two languages interact. For example, an integrated lexicon allows for lexical items across languages to directly influence one another, while separating the languages could suggest largely independent processing at the level of the lexicon.

The models also make distinct assumptions about how lexical items are categorized. The integration of two languages at the lexical level in the BIA+ necessitates the use of language tags to explicitly mark items as belonging to L1 or L2. In contrast, BIMOLA and SOMBIP do not explicitly mark language membership. BIMOLA relies on ‘global language’ information (often consisting of semantic and syntactic cues) to group words together, while the SOMBIP uses the phonotactic principles of the input itself. Issues of lexical organization and categorization are therefore critical for any model of bilingual language processing.

To explore how the lexicon may be organized or categorized in bilingual speech comprehension, the present paper introduces the Bilingual Language Interaction Network for Comprehension of Speech, or BLINCS, a novel model of bilingual spoken language processing which captures dynamic language processing in bilinguals. Localist, connectionist models like BIA+ and BIMOLA can provide insight into steady-state instances of the bilingual processing system, but often must be carefully, and manually, coded to capture the variability inherent to the bilingual system. This variability can be substantial, as bilingual language processing can be influenced not only by long-term features like age of acquisition or language proficiency, which are either fixed or tend to vary gradually, but also by short-term features like recent exposure, which can change rapidly (Bates et al., 2001; Kaushanskaya, Yoo & Marian, 2011; Kroll & Stewart, 1994). Including a learning mechanism, like the self-organizing feature of the SOMBIP, imbues a model with the ability to grow dynamically and to more easily capture the flexibility inherent to bilingual processing. Thus, BLINCS combines features of both distributed and localist models in an effort to accurately simulate the natural process of bilingual spoken language comprehension. Furthermore, the BLINCS model represents a dedicated, computational model of spoken language processing in bilinguals that considers cross-linguistic lexical activation as it unfolds over time. In the next section, we will discuss the structure of the Bilingual Language Interaction Network for Comprehension of Speech.

The architecture of the BLINCS model

The Bilingual Language Interaction Network for Comprehension of Speech (BLINCS; Figure 1) consists of an interconnected network of self-organizing maps, or SOMs. Self-organizing maps represent a type of unsupervised learning algorithm (Kohonen, 1995). As the SOM receives information, the input is mapped to the node with the smallest Euclidean distance from the input (the so-called best-match unit). The value of the selected node is then altered to become more similar to the input. Nearby nodes are also updated (to a lesser degree), so that the space around the selected node becomes more uniform. Thus, when the same input is presented again, it is likely to settle upon the same node. Furthermore, the adaptation of the surrounding nodes results in similar inputs (e.g., words) mapping together in the SOM space.
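The SOM update described above can be sketched in a few lines of Python. This is a generic Kohonen step under stated assumptions (a rectangular grid and a Gaussian neighborhood); the function name `som_step` and the toy 10 × 10 map are illustrative and are not taken from the BLINCS code.

```python
import numpy as np

def som_step(weights, grid, x, lr, radius):
    """One Kohonen update, modifying `weights` in place.

    weights: (n_nodes, dim) node vectors; grid: (n_nodes, 2) map coordinates.
    Returns the index of the best-match unit (BMU).
    """
    # BMU = node whose weight vector has the smallest Euclidean distance to x
    bmu = int(np.argmin(np.linalg.norm(weights - x, axis=1)))
    # Squared distance of every node from the BMU on the 2-D map
    grid_d2 = np.sum((grid - grid[bmu]) ** 2, axis=1)
    if radius > 0:
        h = np.exp(-grid_d2 / (2.0 * radius ** 2))  # Gaussian neighborhood
    else:
        h = (grid_d2 == 0).astype(float)            # radius 0: BMU only
    # The BMU moves toward x by lr; neighbors move by smaller amounts
    weights += lr * h[:, None] * (x - weights)
    return bmu

rows, cols = 10, 10
grid = np.array([(r, c) for r in range(rows) for c in range(cols)], dtype=float)
weights = np.random.default_rng(0).random((rows * cols, 3))
x = np.array([0.2, 0.8, 0.5])
first = som_step(weights, grid, x, lr=0.2, radius=2.0)
second = som_step(weights, grid, x, lr=0.2, radius=2.0)  # same input again
```

Because the BMU moves toward the input at least as much as any other node, presenting the same input again selects the same node (`first == second`), which is the stability property described above.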

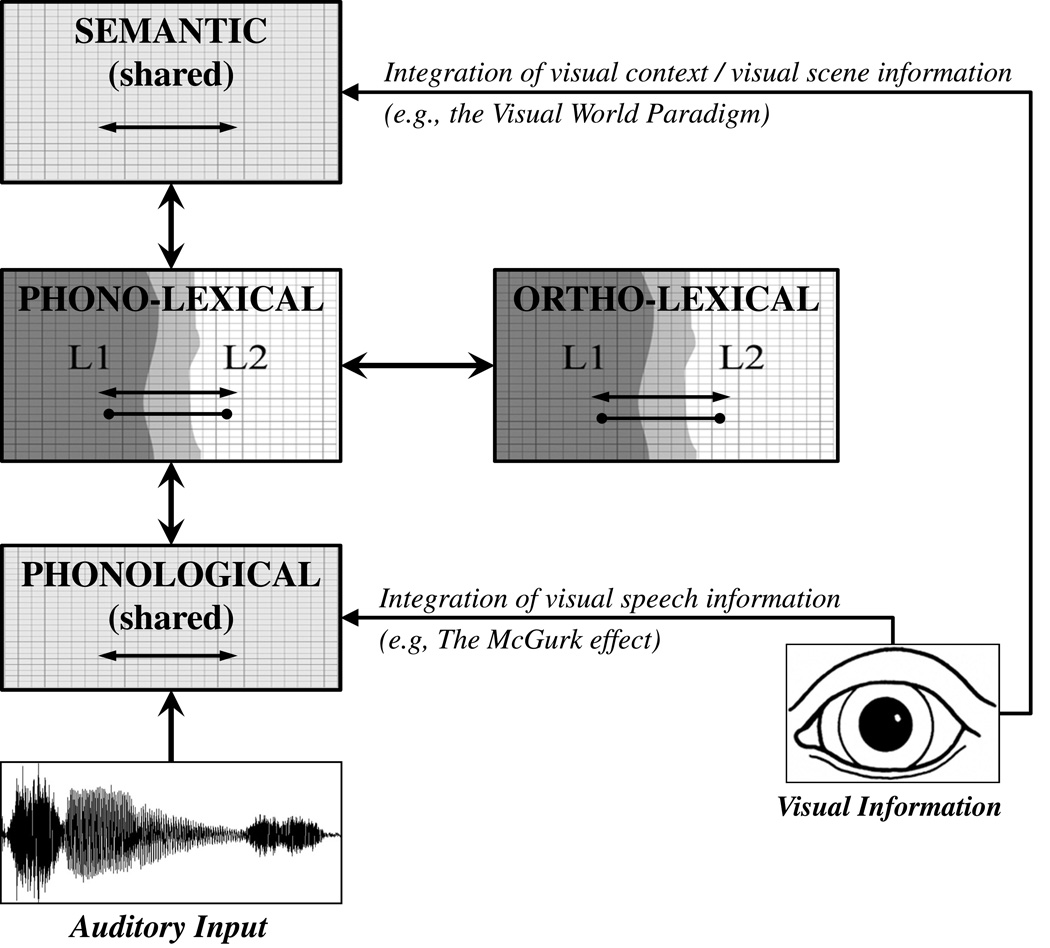

Figure 1.

The Bilingual Language Interaction Network for Comprehension of Speech (BLINCS) model. The model takes auditory information as its input, which can be integrated with visual information. There are bi-directional excitatory connections between and within each level of the model, and inhibitory connections at the phono-lexical and ortho-lexical levels. Each level is constructed with a self-organizing map.

The BLINCS model contains multiple interconnected levels of representation – phonological, phono-lexical, ortho-lexical and semantic – and each level in the model is individually constructed using the self-organizing map algorithm. Additionally, the model simulates the influence of visual information on language processes through connections to the phonological and semantic levels. As is characteristic of interactive models of processing, the various levels within the system interact bi-directionally, allowing for both feed-forward and feedback activation. Within levels, language-specific and language-shared representations occupy the same network space; communication (and competition) between languages is the product of both lateral links between translation equivalents and proximity on the map (i.e., items that map together are simultaneously active, but also inhibit one another). Between levels, bidirectional excitatory connections are computed via Hebbian learning, wherein the connection between two items is strengthened whenever they are active at the same time. Thus, when a lexeme and its semantic representation are presented to the model simultaneously (during model training), their weighted connection is strengthened. This degree of interconnectivity between and within levels of processing simulates a dynamic and highly interactive language system.

Next, we describe the BLINCS model in greater detail by focusing on how the model was trained using English and Spanish stimuli, the structure of the model after training, and how language activation occurs within the model, thus providing computational evidence for the viability of BLINCS as a model of bilingual spoken language comprehension.

The phonological level

The phonological level of the BLINCS model was constructed using a modified version of PatPho (Li & MacWhinney, 2002), which quantifies phonemic items by virtue of their underlying attributes (e.g., voicedness, place of articulation, etc.). In this system, each phoneme is represented as a three-element vector, with each element capturing a different aspect of the phoneme. Thus, a three-dimensional vector was created for each phoneme of the International Phonetic Alphabet (International Phonetic Association, 1999), with two notable additions. First, as with PatPho, the phoneme /H/ was added as a voiceless, glottal approximant (first sound in hospital). Second, a category of affricates was added to include the sounds /ʧ/ (first phoneme in church) and /ʤ/ (first phoneme in jail), which were defined as unvoiced/voiced (respectively), alveolar affricates. A full list of the phonological forms and the quantified three-dimensional vectors is available as online Supplementary Material.

The phono-lexical level

Phono-lexical items in the model were constructed by inserting the three-element phonological vectors into a multi-syllabic template. In the current model, each word is placed in a three-syllable template of the structure CCVVCC/CCVVCC/CCVVCC. Each C or V slot contains a three-element vector, resulting in a total length of 54 elements per word (three elements multiplied by 18 C or V slots). For example, the two-syllable word rabbit would be represented as [rCæVCC/bCIVtC/CCVVCC]; each empty C or V position contains a vector of zeros. Thus, information about the syllable structure of words is retained at the level of the lexicon.
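A minimal sketch of the three-syllable CCVVCC carrier follows, assuming one character per phoneme and a syllable given as (onset, nucleus, coda) strings of at most two phonemes each. The feature values in `PHONEMES` are hypothetical placeholders rather than the modified PatPho vectors, and `encode_word` is an illustrative helper.

```python
import numpy as np

# Hypothetical 3-element feature vectors (placeholders, NOT the PatPho values)
PHONEMES = {
    "r": [0.1, 0.6, 0.4], "æ": [0.9, 0.2, 0.7], "b": [0.3, 0.1, 0.9],
    "ɪ": [0.8, 0.5, 0.2], "t": [0.2, 0.4, 0.1],
}
SLOTS_PER_SYLLABLE = 6  # C C V V C C
N_SYLLABLES = 3
VEC_LEN = 3

def encode_word(syllables):
    """syllables: list of (onset, nucleus, coda) strings.
    Returns a 54-element vector; unused C/V slots stay all-zero."""
    out = np.zeros((N_SYLLABLES, SLOTS_PER_SYLLABLE, VEC_LEN))
    for s, (onset, nucleus, coda) in enumerate(syllables):
        # onset fills slots 0-1, nucleus slots 2-3, coda slots 4-5
        for offset, part in ((0, onset), (2, nucleus), (4, coda)):
            assert len(part) <= 2, "each C or V pair holds at most two phonemes"
            for k, ph in enumerate(part):
                out[s, offset + k] = PHONEMES[ph]
    return out.reshape(-1)

# rabbit -> [r C æ V C C / b C ɪ V t C / C C V V C C]
rabbit = encode_word([("r", "æ", ""), ("b", "ɪ", "t")])
```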

Embedding the phoneme vectors into syllabic phrases allows the model to draw connections between items that a simple ordered structure might not. For example, using an ordered structure, the English words tap and trap would be formed as [tæp …] and [træp …], respectively – the model would then compare each phoneme from one word to the phoneme that occurs in the same position in the second word. In other words, the /r/ in trap would be compared to the /æ/ in tap, and the model would fail to recognize the significant overlap between the two words. By embedding the items into syllable phrases, word input is instead formulated as [tCæVpC…] and [træVpC…], so the vowel in tap occupies the same slot as the vowel in trap, allowing the model to recognize their phonological similarity.
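The alignment argument can be checked symbolically. The sketch below uses hypothetical helpers (`linear_slots`, `syllabic_slots`, `shared_slots`) that count the slots in which tap and trap carry the same phoneme, first under a naive left-aligned layout and then under the syllabic template; it compares phoneme symbols rather than feature vectors.

```python
def linear_slots(phonemes, n=18):
    """Naive left-aligned layout: phoneme i goes in slot i; None = empty."""
    return list(phonemes) + [None] * (n - len(phonemes))

def syllabic_slots(syllables):
    """CCVVCC template for each of 3 syllables; None marks an empty slot."""
    slots = []
    for s in range(3):
        onset, nucleus, coda = syllables[s] if s < len(syllables) else ((), (), ())
        for part in (onset, nucleus, coda):
            slots += list(part) + [None] * (2 - len(part))
    return slots

def shared_slots(a, b):
    """Number of positions where both words have the same non-empty phoneme."""
    return sum(1 for x, y in zip(a, b) if x is not None and x == y)

tap_lin, trap_lin = linear_slots("tæp"), linear_slots("træp")
tap_syl = syllabic_slots([("t", "æ", "p")])
trap_syl = syllabic_slots([("tr", "æ", "p")])
print(shared_slots(tap_lin, trap_lin))  # 1: only /t/ lines up
print(shared_slots(tap_syl, trap_syl))  # 3: /t/, /æ/ and /p/ all line up
```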

For the present model, a list of 480 words ranging from one to three syllables was chosen, consisting of 240 English words and 240 Spanish words. The list contained 143 English–Spanish translation equivalents (totaling 286 words), 88 cognates, 34 false-cognates, and 72 single-language words (split evenly between English and Spanish). Each word was written with broad phonetic transcription in accordance with the IPA. The phonological information was then transformed into the modified PatPho vectors and concatenated into the three-syllable carrier. A list of words is available as online Supplementary Material.
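As a quick arithmetic check on the stimulus counts, assuming every category is counted in words (not pairs) and divided evenly between English and Spanish: the categories sum to 480 words, 240 per language, only if the translation-equivalent group contains 286 words, that is, 143 pairs.

```python
# Word counts per category; the even English/Spanish split is an assumption
counts = {"translation_equivalents": 286, "cognates": 88,
          "false_cognates": 34, "single_language": 72}
per_language = {k: v // 2 for k, v in counts.items()}

total = sum(counts.values())                      # 480
per_language_total = sum(per_language.values())   # 143 + 44 + 17 + 36 = 240
translation_pairs = counts["translation_equivalents"] // 2  # 143
```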

The ortho-lexical level

In addition to the phono-lexical level, we included a level that contained orthographic representations for the lexical items. Though our primary interest in developing the model was speech comprehension, research has indicated an interaction between phonological and orthographic systems (Bitan, Burman, Chou, Lu, Cone, Cao, Bigio & Booth, 2007; Kaushanskaya & Marian, 2007; Kramer & Donchin, 1987; Schwartz, Kroll & Diaz, 2007). Because orthographic information is known to be co-activated during phonological processing (Rastle, McCormick, Bayliss & Davis, 2011; Ziegler & Ferrand, 1998), and orthography has been shown to activate phonological representations (see Rastle & Brysbaert, 2006, for a review), BLINCS includes an orthographic system that interacts with the phono-lexical level during processing. Each letter in the English and Spanish alphabets (the 26 traditional characters, as well as the Spanish characters ñ, á, é, í, ó, and ú) was quantified using a method similar to that of Miikkulainen (1997). Each letter was typed in 12-point Times New Roman font in black on a white background measuring 50 × 50 pixels, where 1 represented a black pixel and 0 represented a white pixel. The proportion of black pixels in each of the four corners was then calculated for each image (i.e., number of black pixels/total number of pixels) and used to create a four-element vector for each letter. The letters were then concatenated into a 16-slot carrier of the form CCVVCCVVCCVVCCVV (resulting in a 64-element vector). For example, the Spanish word fotó “photo” and the English words carpet and telescope were coded as [f0o0t0ó000000000], [c0a0rpe0t0000000], and [t0e0l0e0sco0p0e0], respectively.
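The letter features and the 16-slot carrier can be sketched as follows. This is a minimal illustration under three assumptions: the four "corners" are the image quadrants, each proportion is taken over the pixels of its own quadrant, and a 4 × 4 toy bitmap stands in for a rendered 50 × 50 Times New Roman glyph. The letter vectors used for carpet are placeholders, and `letter_features` and `ortho_vector` are hypothetical names.

```python
import numpy as np

def letter_features(bitmap):
    """Proportion of black (1) pixels in each quadrant of a binary letter image:
    [top-left, top-right, bottom-left, bottom-right]."""
    img = np.asarray(bitmap, dtype=float)
    h, w = img.shape
    quadrants = (img[:h // 2, :w // 2], img[:h // 2, w // 2:],
                 img[h // 2:, :w // 2], img[h // 2:, w // 2:])
    return np.array([q.mean() for q in quadrants])

def ortho_vector(slotted, letter_vecs):
    """Concatenate 4-element letter vectors for a 16-slot CCVVCCVVCCVVCCVV
    carrier; '0' marks an empty slot and becomes four zeros."""
    assert len(slotted) == 16
    return np.concatenate([np.zeros(4) if ch == "0" else letter_vecs[ch]
                           for ch in slotted])

toy_L = [[1, 0, 0, 0],
         [1, 0, 0, 0],
         [1, 0, 0, 0],
         [1, 1, 1, 1]]
print(letter_features(toy_L))  # quadrant proportions 0.5, 0.0, 0.75, 0.5

rng = np.random.default_rng(0)
letter_vecs = {ch: rng.random(4) for ch in "carpet"}    # placeholder vectors
carpet = ortho_vector("c0a0rpe0t0000000", letter_vecs)  # 64 elements
```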

The semantic level

Semantic representations of the words were obtained using the Hyperspace Analogue to Language (HAL; Burgess & Lund, 1997; Lund & Burgess, 1996), which provides quantified measures of word co-occurrence from large text corpora. In essence, HAL captures lexical meaning through the frequency with which words co-occur with other words and is able to automatically derive semantic information from co-occurrence information. For the present model, the HAL tool from the S-Space Package (Jurgens & Stevens, 2010) was used to derive semantic representations for our lexical items from a portion of the UseNet Corpus (Shaoul & Westbury, 2011) totaling approximately 330 million words. Each semantic entry consisted of a 200-dimensional vector. Because evidence suggests that the semantic space is shared in bilinguals (Kroll & De Groot, 1997; Salamoura & Williams, 2007; Schoonbaert, Hartsuiker & Pickering, 2007), each Spanish word was assigned the semantic vector of its English translation equivalent; thus, the English word duck and its Spanish translation pato had the same semantic vector.
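A HAL-style co-occurrence count can be sketched as follows. This is a simplified illustration, not the S-Space implementation: it assumes a ramped window in which a word d positions before the target (within a window of size n) receives weight n − d + 1, builds each word's vector from its preceding-context row and following-context column, and omits the reduction to 200 dimensions. The last lines show a Spanish word reusing its English translation's vector; `hal_counts` and `hal_vector` are hypothetical names.

```python
from collections import defaultdict

def hal_counts(tokens, window=5):
    """counts[target][context] accumulates window - d + 1 for every context word
    that occurs d positions (1 <= d <= window) before target."""
    counts = defaultdict(lambda: defaultdict(float))
    for i, target in enumerate(tokens):
        for d in range(1, window + 1):
            if i - d < 0:
                break
            counts[target][tokens[i - d]] += window - d + 1
    return counts

def hal_vector(counts, word, vocab):
    """Row (words preceding `word`) concatenated with column (words following it)."""
    row = [counts[word][v] for v in vocab]
    col = [counts[v][word] for v in vocab]
    return row + col

vocab = ["a", "b", "c"]
counts = hal_counts(["a", "b", "c"], window=2)
print(hal_vector(counts, "b", vocab))  # [2.0, 0.0, 0.0, 0.0, 0.0, 2.0]

# Shared semantic space: the Spanish word reuses its English translation's vector
corpus = "the duck swam on the pond".split()
vocab2 = sorted(set(corpus))
semantic = {"duck": hal_vector(hal_counts(corpus), "duck", vocab2)}
semantic["pato"] = semantic["duck"]
```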

Visual information

Listening to spoken language in the real world naturally involves the integration of auditory and visual information. Visual information can influence the process of speech recognition at the level of perception, where visual and auditory input are integrated to impact phoneme perception (e.g., the McGurk effect; Gentilucci & Cattaneo, 2005; McGurk & MacDonald, 1976), or can provide context that shapes how the linguistic message is interpreted by limiting processing to objects in the visual scene (Knoeferle, Crocker, Scheepers & Pickering, 2005; Spivey, Tanenhaus, Eberhard & Sedivy, 2002). With this in mind, the BLINCS model was designed to accommodate the potential influence of visual information on language processing. Specifically, the BLINCS model was not designed to perform visual recognition (i.e., tracking of lip movements or recognition of specific images), but rather to focus on the effects that information gained by the visual system might have on language processing. Thus, direct connections from a visual input module to the phonological level were used to simulate the effect of additional identifying phonemic information from lip or mouth movements, as with the McGurk effect, by averaging the phonological values associated with the visually-represented phoneme and those corresponding to the spoken language input. Likewise, direct connections from a visual-input module to the semantic level served to simulate non-linguistic constraint effects, where the presence or absence of objects in a visual scene can affect language processing. More specifically, the model increases the resting activation of semantic representations for items that the visual-input module indicates are currently visible.
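The two visual routes can be sketched as two small functions: one averages the heard phoneme vector with the phoneme cued by lip or mouth movements, and the other raises the resting activation of semantic nodes for visible objects. The feature vectors and the `boost` amount are hypothetical placeholders, since the text does not give the size of the resting-activation increase, and the function names are illustrative.

```python
import numpy as np

def audiovisual_input(auditory, visual=None):
    """Average the heard phoneme vector with the phoneme cued by lip/mouth
    movements; with no visual cue the auditory vector is used unchanged."""
    auditory = np.asarray(auditory, dtype=float)
    if visual is None:
        return auditory
    return (auditory + np.asarray(visual, dtype=float)) / 2.0

def boost_visible(resting, visible, boost=0.05):
    """Raise the resting activation of semantic nodes for objects in view.
    `boost` is a hypothetical amount, not a value from the model."""
    boosted = dict(resting)
    for item in visible:
        boosted[item] = boosted.get(item, 0.0) + boost
    return boosted

heard, seen = [0.2, 0.4, 0.6], [0.4, 0.4, 0.2]  # placeholder feature vectors
mixed = audiovisual_input(heard, seen)          # 0.3, 0.4, 0.4
scene = boost_visible({"duck": 0.0, "lamb": 0.0}, ["duck"])
```

Averaging places the percept between the auditory and visual phonemes on the phonological map, which is how a McGurk-style fusion could fall on a third, intermediate node.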

Training the BLINCS model

The four levels (phonological, phono-lexical, ortho-lexical and semantic) and the Hebbian connections between phono-lexical/ortho-lexical maps and phono-lexical/semantic maps were trained concurrently over 1000 epochs. Training within each level was performed using the SOM algorithm. The learning rate (which determines the strength of learning) was initially set at 0.2 and decreased linearly to 0 from epochs 1 to 1000. The learning radius (which determines the nodes that are trained based on their radial proximity to the best-match-unit) was initially set at 10, decreased linearly to 4 in the first 100 epochs, and then decreased linearly to 0 from epochs 101 to 1000. Furthermore, the learning radius function was Gaussian in nature, so the learning-strength of a given node decreased relative to its distance from the best-match-unit. The items were presented in random order and an equal number of times. Spanish and English lexical items were intermixed during training, thereby approximating simultaneous acquisition of the two lexical systems. The phono-lexical, ortho-lexical, and semantic representations for each single word were presented concurrently; this allowed the model to strengthen the inter-level connections between the best-match-units at each level, thereby enhancing the links between a given word’s phono-lexical, ortho-lexical, and semantic representations during training. To train the inter-level connections, we applied a Hebbian learning algorithm, defined as:

$$\Delta w_{ij} = \lambda \, x_i \, x_j$$

where $x_i$ and $x_j$ represent the activation levels of the two nodes (e.g., phono-lexical and semantic representations for a single word), and $\lambda$ represents the learning rate, which was set at 0.2.
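The training schedule and the inter-level weight update can be sketched as below. The linear interpolation formulas are an assumption about how "decreased linearly" was implemented (the learning rate is exactly 0.2 at epoch 1 and 0 at epoch 1000; the radius is 10 at epoch 1, 4 at epochs 100 and 101, and 0 at epoch 1000). The Hebbian update is the standard product rule, taken here as Δw_ij = λ x_i x_j with λ = 0.2. All function names are illustrative.

```python
import numpy as np

N_EPOCHS = 1000

def learning_rate(epoch, start=0.2):
    """Linear decay from 0.2 at epoch 1 to 0 at epoch 1000."""
    return start * (N_EPOCHS - epoch) / (N_EPOCHS - 1)

def learning_radius(epoch):
    """10 -> 4 linearly over epochs 1-100, then 4 -> 0 over epochs 101-1000."""
    if epoch <= 100:
        return 10.0 - 6.0 * (epoch - 1) / 99
    return 4.0 - 4.0 * (epoch - 101) / (N_EPOCHS - 101)

def hebbian_update(w, x_i, x_j, lam=0.2):
    """Delta w_ij = lam * x_i * x_j for every node pair across two maps."""
    return w + lam * np.outer(x_i, x_j)

w = np.zeros((2, 3))  # 2 phono-lexical nodes x 3 semantic nodes
w = hebbian_update(w, [1.0, 0.0], [0.0, 0.5, 1.0])
# Only links from the active phono-lexical node grow: row 0 becomes 0, 0.1, 0.2
```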

In addition to the between-level connections, the Hebbian weights were used as a basis for drawing lateral links between translation equivalents. For example, the lexical items duck and pato mapped to the same semantic unit, so activating that unit activated both words at the phono-lexical level, along with the representation of “duck” at the semantic level. A network of lateral connections at the phono-lexical level was developed by using the Hebbian learning rule to increase the weights between pairs of lexical nodes that were accessed by the same semantic node.
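One way to derive such lateral links is sketched below, under stated assumptions: each semantic node's column of trained Hebbian weights is treated as the co-activation it sends down to the phono-lexical map, and the Hebbian rule is applied to every pair of lexical nodes it reaches, with self-connections removed. The function `lateral_links` and the toy weights are hypothetical.

```python
import numpy as np

def lateral_links(W_lex_sem, lam=0.2):
    """W_lex_sem: (n_lex, n_sem) Hebbian weights between the phono-lexical
    and semantic maps. Returns (n_lex, n_lex) lateral weights."""
    n_lex = W_lex_sem.shape[0]
    L = np.zeros((n_lex, n_lex))
    for s in range(W_lex_sem.shape[1]):
        a = W_lex_sem[:, s]          # lexical nodes reached by semantic node s
        L += lam * np.outer(a, a)    # Hebbian rule on co-activated lexical pairs
    np.fill_diagonal(L, 0.0)         # no self-connections
    return L

# Lexical nodes: duck, pato, lamb; semantic nodes: DUCK, LAMB
W = np.array([[1.0, 0.0],
              [1.0, 0.0],
              [0.0, 1.0]])
L = lateral_links(W)  # only duck <-> pato receive a lateral link
```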

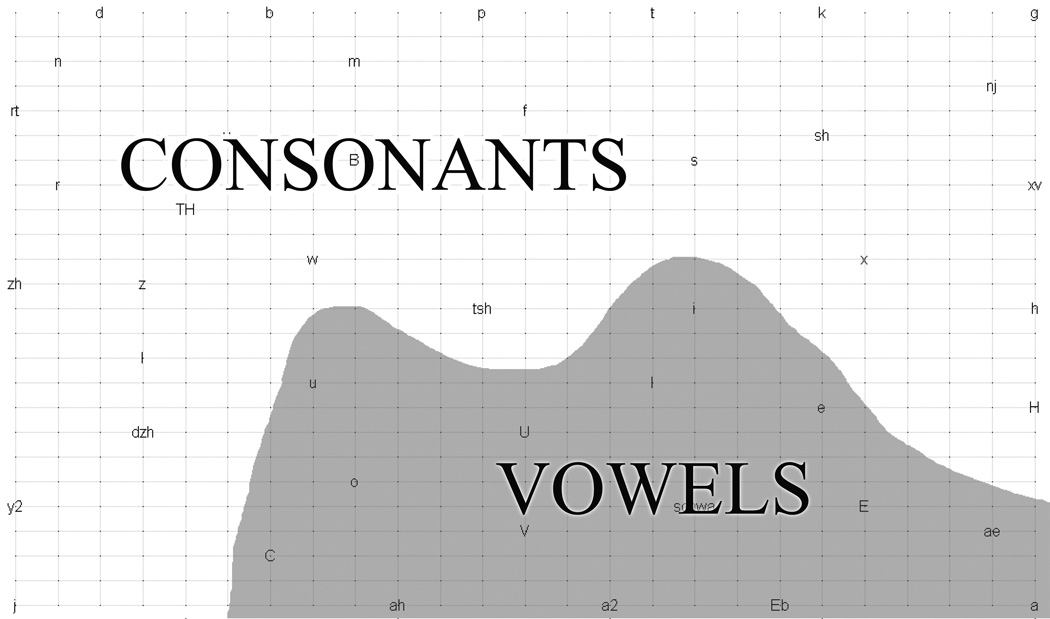

Training at each level was able to capture similarities between phonological, lexical, ortho-lexical, and semantic items. Figure 2 shows the phonological map after 1000 epochs of training. The most notable distinction on the map is the separation of vowels from consonants – a finding that echoes recent neurological evidence for distinct neural correlates of vowels and stop consonants (Obleser, Leaver, VanMeter & Rauschecker, 2011). Within these two major categories, distinctions based on phonemic class also emerge. For example, items such as /p/ and /t/ mapped together in the phonemic space based on their shared membership to the category of voiceless plosives, suggesting that they should co-activate based on their similarity.

Figure 2.

The Phonological SOM after 1000 epochs of training. Vowels and consonants are separated by virtue of their underlying phonological features (e.g., voicedness, manner, or place of articulation). White-shaded areas represent consonants, and gray-shaded areas represent vowels.

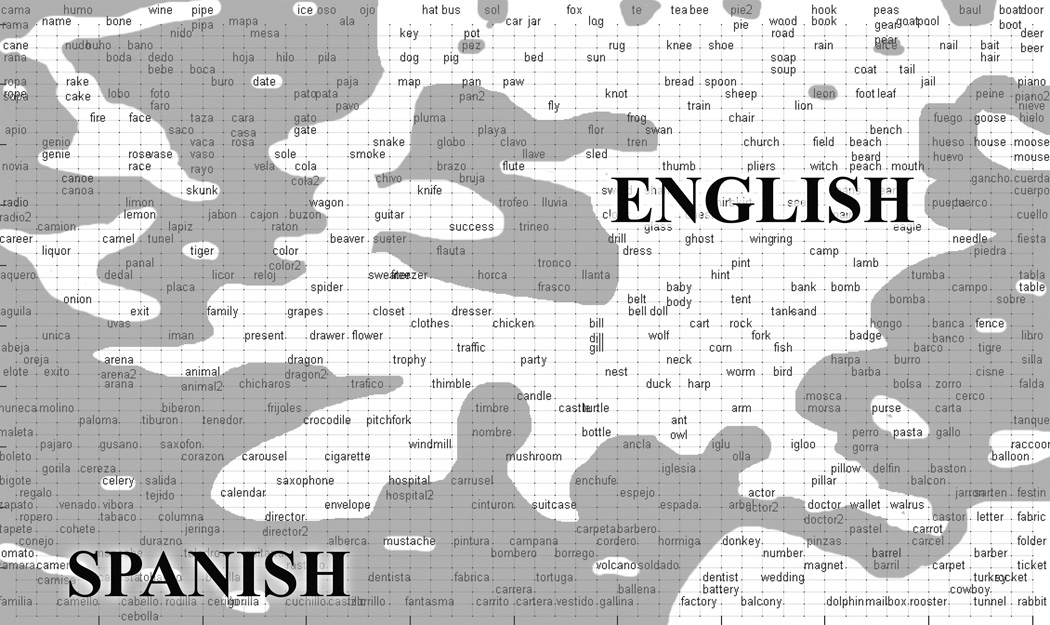

The phono-lexical SOM, shown in Figure 3, successfully captures similarities between lexical items driven by phonological overlap. For example, words like bone, boat, and road are mapped near one another. However, we are most interested in the way in which the map organizes the two lexicons into somewhat distinct space. We can see from the map that Spanish and English words are primarily separated based upon the phonotactic probabilities inherent to the input. There are some notable exceptions, primarily in the mapping of cognates and false cognates, which often map to the boundaries of the distinct language spaces within the SOM. For example, the cognate words tobacco (English) and tabaco (Spanish) are mapped near one another, but directly below the Spanish tabaco the map contains primarily Spanish words, and directly above the English tobacco is a primarily English neighborhood. The organization of the phono-lexical SOM represents a separated but integrated system, where the BLINCS model classifies words by language membership according to phonotactic rules, while also allowing words from both languages to interact within a single lexical space.

Figure 3.

The phono-lexical SOM after 1000 epochs of training. The model automatically separates English and Spanish in the two-dimensional vector space according to phonotactic principles. White-shaded areas represent English, and gray-shaded areas represent Spanish.

In order to capture the interaction between the phonological and orthographic representations of lexical items, each word on the phono-lexical SOM mapped directly to its written equivalent on the orthographic map (Figure 4) via trained Hebbian connections. Items in the ortho-lexical SOM are mapped together based on their spelling-similarity (e.g., hint and pint; cerco “fence” and carta “letter”). The ortho-lexical level allows for items that do not share phonology, but that share orthography, to be accessed or activated at the same time. For example, the English words beard and heart share a significant orthographic overlap (three of five letters exact, with a high degree of similarity between ‘b’ and ‘h’), but overlap very little in phonology. However, because these words do map closely in ortho-lexical space, orthographic information is able to influence lexical processing. As with the phono-lexical level, the ortho-lexical level also displays a separated but integrated structure.

Figure 4.

The ortho-lexical SOM after 1000 epochs of training. The model automatically separates English and Spanish in the two-dimensional vector space according to orthotactic principles. White-shaded areas represent English, and gray-shaded areas represent Spanish. (Because Spanish and English overlap orthographically more than they overlap phonologically, the ortho-lexical map is more integrated than the phono-lexical map, across languages.)

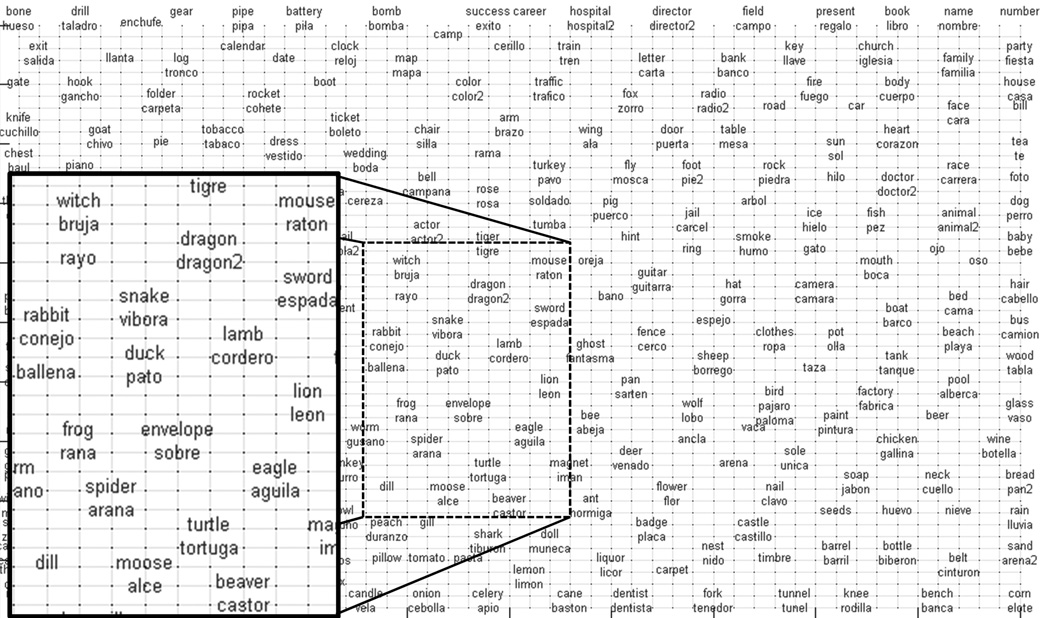

BLINCS also includes a level dedicated to semantic/conceptual information (Figure 5). Here, we see that semantic relationships between items are successfully represented in the SOM space. Words such as car and road, which are associated concepts, map near one another. Likewise, the SOM can capture category relationships; duck, lamb, and rabbit, all types of animals, map together, and musical instruments like saxophone and flute map together as well. In cases of translation equivalents, single nodes encapsulate the meaning for both lexical items, based on the notion of a shared semantic space across languages. Thus, single nodes in the semantic space can map to multiple nodes at the lexical level. Through these connections, words that do not share semantic similarity may be co-activated by virtue of phonological similarity. In addition, words that are phonologically distinct but are closely related in meaning may be co-activated via top–down connectivity from the semantic system.

Figure 5.

The semantic SOM after 1000 epochs of training. Translation equivalents are mapped to a single node, which reflects a semantic system that is shared across languages. The inset shows a subsection of the SOM onto which related concepts were mapped.

Activation in the BLINCS model

The goal in designing the BLINCS model was to capture patterns of lexical activation during speech comprehension. Therefore, our primary concern was with the overall activation of lexical items over time, both during and immediately after the presentation of spoken words. The BLINCS model takes, as its input, a word composed of concatenated phonological vectors (i.e., the same format as the lexical input used during training). The input is presented one phoneme vector at a time for a pre-designated number of cycles (e.g., five cycles per phoneme, or 90 cycles total for the 18-slot carrier). In each cycle, the model determines the best-match-unit for the input on the phonological map and activates that node and its neighbors. The input is not a pure phonological vector: a small amount of noise (between −0.01 and +0.01) is added to the phoneme vector, so that the model receives variable stimuli during comprehension. The model can therefore settle either on the node for the target phoneme or on a nearby best-match-unit, which still provides (reduced) activation to the “correct” target phoneme. Activation at this level can also be influenced by visual input, which takes the same form as the phonological input (i.e., a three-element vector) and represents seeing lip or mouth movements consistent with a specific phoneme, which may be inconsistent with the auditory input (e.g., the McGurk effect; McGurk & MacDonald, 1976). Thus, activation at the best-match-unit in the phonological level can be represented by (1/number of cycles × noise), optionally averaged with the three-element phonological vector provided by the visual-input module (which simulates the effect of additional phonemic cues from the visual modality); exponentially decreasing activation values are given to surrounding nodes based on their distance from the best-match-unit.
This activation is additive across cycles for a given phoneme, and the active node at the phonological level passes its activation to lexical items that contain that phoneme at a given time (e.g., /p/ at the first time point might activate pot, but not cop). A consequence of the input activating neighboring nodes is that providing the model with a word like pot will result in the activation of lexical items with similar phonemes (e.g., bottle) based on phonological proximity.
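Phoneme presentation on the phonological map can be sketched as a loop over cycles. This is a simplified reading, not the model's exact expression: noise is added to the input vector (assumed uniform in ±0.01), the BMU receives 1/number of cycles per cycle, other nodes receive that amount scaled by exp(−k × map distance) with a hypothetical decay constant k, and activation accumulates across cycles. The function name and the three-node toy map are illustrative.

```python
import numpy as np

def present_phoneme(vec, weights, grid, n_cycles=5, noise=0.01, k=1.0, rng=None):
    """Accumulate phonological-map activation over the cycles for one phoneme."""
    rng = np.random.default_rng() if rng is None else rng
    vec = np.asarray(vec, dtype=float)
    act = np.zeros(len(weights))
    for _ in range(n_cycles):
        noisy = vec + rng.uniform(-noise, noise, size=vec.shape)   # variable input
        bmu = int(np.argmin(np.linalg.norm(weights - noisy, axis=1)))
        dist = np.linalg.norm(grid - grid[bmu], axis=1)            # map distance
        act += (1.0 / n_cycles) * np.exp(-k * dist)                # additive
    return act

# Toy 1 x 3 phonological map; the input sits on the middle node
w3 = np.array([[0.0] * 3, [0.5] * 3, [1.0] * 3])
g3 = np.array([[0.0, 0.0], [0.0, 1.0], [0.0, 2.0]])
act = present_phoneme([0.5, 0.5, 0.5], w3, g3, rng=np.random.default_rng(0))
```

After five cycles the middle node has accumulated an activation of 1.0 and its two neighbors about 0.37 each, so phonemes that sit near the input on the map are partially active.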

During initial phoneme presentation, many candidates are likely to be active at the phono-lexical level. For example, given the phoneme /p/, the words pot, perro, and pasta (among others) are likely to be activated. As subsequent phonemes are presented, items that continue to match the input are more strongly activated, while those that no longer match the input gradually decay (at a rate of 10% per cycle). Phoneme activation, likewise, is not binary but decays gradually. In other words, for the word pot, when the model is considering the second phoneme, it is also receiving activation from the initial phoneme, which gradually decreases over time. This can be conceptualized as a type of phonological memory trace, where activation of lexical items depends not only on the phoneme currently being heard, but also on the phonemes that came before it.
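The cohort dynamics can be illustrated with a deliberately reduced sketch. It assumes one character per phoneme, position-specific matching, a hypothetical per-cycle gain of 0.2 for matching items, and the 10% per-cycle decay for non-matching items; it does not model the phonological trace, map neighbors, or the other levels. `cohort_activation` is a hypothetical name.

```python
def cohort_activation(heard, lexicon, cycles_per_phoneme=5, gain=0.2, decay=0.10):
    """heard: input phonemes; lexicon: word -> phoneme string.
    Each cycle, an item whose phoneme at the current position matches the input
    gains `gain`; an item that does not match loses 10% of its activation."""
    act = {word: 0.0 for word in lexicon}
    for pos, ph in enumerate(heard):
        for _ in range(cycles_per_phoneme):
            for word, phons in lexicon.items():
                if pos < len(phons) and phons[pos] == ph:
                    act[word] += gain
                else:
                    act[word] *= 1.0 - decay
    return act

lexicon = {"pot": "pot", "perro": "pero", "pasta": "pasta", "cop": "kop"}
act = cohort_activation("pot", lexicon)
ranking = sorted(act, key=act.get, reverse=True)
```

Here pot ends with 3.0, perro and pasta fall to 0.9^10 of their initial-phoneme activation once they stop matching, and cop receives nothing from the initial /p/ but some support from the shared vowel in the second position.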

By allowing for this phonological trace to remain active beyond the phoneme’s presentation, the model can better account for co-activation of rhyme-cohorts. If the model considered only the phoneme of presentation and its relationship to a specific slot in the syllable carrier at the lexical level, it would capture rhyme-similarities between words when the phonemes occur in the same syllable, like bear and pear, but would likely fail to co-activate cross-syllable rhyme cohorts, like bear and declare. By maintaining decreasing phonemic activation over time, the model should be able to account for rhyme-cohorts where the rhyme occurs in different syllables.

As phono-lexical representations become active, nearby items are also activated by virtue of their proximity to the target node, with the strength of their activation decreasing as a function of distance. However, phono-lexical items can inhibit nearby items, and the strength of this inhibition increases with the inhibiting node's activation; inhibition involves each active node decreasing the strength of nearby nodes by multiplying their activation by 1 minus its own activation (i.e., a node with activation 0.9 will reduce a nearby node with activation 0.1 to 0.01 by multiplying its activation by 1 − 0.9, or 0.1). In this way, the model can capture effects of neighborhood density, where lexical items in dense neighborhoods undergo greater competition and are activated less quickly (Luce & Pisoni, 1998; Vitevitch & Luce, 1998). In addition to simulating neighborhood density effects, BLINCS also accounts for lexical frequency at the phono-lexical level, where each item’s initial activation is determined by its lexical frequency, obtained from the SUBTLEXus (English items; Brysbaert & New, 2009) and SUBTLEXesp (Spanish items; Cuetos, Glez-Nosti, Barbón & Brysbaert, 2011) databases. Specifically, the frequency per-million values for each word were transformed to a scale from 0 to 0.1, and the resting value for each word at the phono-lexical level was determined by its scaled frequency.
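Both mechanisms fit in a short sketch. The inhibition step follows the multiplicative rule described above, reading all activations from a snapshot so that the update order does not matter. For the frequency scaling, a linear min-max mapping onto 0 to 0.1 is assumed; the text does not specify the transform, and a logarithmic scaling would be an equally plausible alternative. Function names are hypothetical.

```python
def inhibit(acts, neighbors):
    """Each node multiplies its map neighbors' activation by (1 - own activation).
    Reads from the unmodified input dict, so update order does not matter."""
    out = dict(acts)
    for node, a in acts.items():
        for nb in neighbors.get(node, ()):
            out[nb] *= 1.0 - a
    return out

def resting_levels(freq_per_million, ceiling=0.1):
    """Map frequency-per-million values onto [0, ceiling] (linear min-max
    scaling is an assumption, not the model's documented transform)."""
    lo, hi = min(freq_per_million.values()), max(freq_per_million.values())
    span = (hi - lo) or 1.0
    return {w: ceiling * (f - lo) / span for w, f in freq_per_million.items()}

acts = inhibit({"pot": 0.9, "bottle": 0.1}, {"pot": ["bottle"]})
print(acts["bottle"])  # about 0.01, matching the worked example in the text
```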

At each cycle, phono-lexical units transfer their activation to corresponding units in the ortho-lexical level and the semantic level, both of which activate neighboring units within their levels based on a predefined radius of four nodes, with activation decreasing as a function of distance. At this point (and prior to the beginning of the next cycle), proportional activation from the ortho-lexical and semantic levels feeds back to the phono-lexical level, allowing for items that are orthographically and semantically similar to the target words to become active. Thus, activation in the phono-lexical level is the sum of proportional activation from the phonological, ortho-lexical, and semantic levels. In this way, the BLINCS model is highly interactive – information is passed between distributed levels of processing during each cycle of the system.
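A single interactive cycle can be sketched with matrix products. The feedback proportion `fb` and the linear falloff inside the four-node radius are hypothetical choices, since the text states only that activation decreases with distance and that feedback is proportional. `spread` and `interactive_cycle` are illustrative names.

```python
import numpy as np

def spread(act, grid, radius=4.0):
    """Within-level spread: each node passes activation to nodes within
    `radius` map units, scaled down linearly with distance (assumed falloff)."""
    d = np.linalg.norm(grid[:, None, :] - grid[None, :, :], axis=2)
    kernel = np.where(d <= radius, 1.0 - d / (radius + 1.0), 0.0)
    return kernel @ act

def interactive_cycle(phon_in, W_ortho, W_sem, grid_o, grid_s, fb=0.3):
    """phon_in: phono-lexical activation driven by the phonological level.
    W_ortho (n_lex x n_orth) and W_sem (n_lex x n_sem): Hebbian weights.
    fb: hypothetical proportion of activation fed back to the phono-lexical level."""
    ortho = spread(W_ortho.T @ phon_in, grid_o)
    sem = spread(W_sem.T @ phon_in, grid_s)
    return phon_in + fb * (W_ortho @ ortho) + fb * (W_sem @ sem)

# Lexical items 0 and 1 share an orthographic node; 1 and 2 share a semantic node
W_ortho = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
W_sem = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])
far_apart = np.array([[0.0, 0.0], [10.0, 0.0]])  # no within-level spread
new = interactive_cycle(np.array([1.0, 0.0, 0.0]), W_ortho, W_sem, far_apart, far_apart)
```

In this toy case, item 1 receives activation (0.3) purely through orthographic feedback although it gets no phonological input, which is the kind of orthographically driven co-activation the paragraph describes.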

The model also allows for the phono-lexical level to feed information back to the phonological level – an active phono-lexical item can further boost its activation by providing supporting activation to its own phonemes. The primary motivation for including phono-lexical feedback comes from research indicating an effect of lexical knowledge on phoneme perception (McClelland, Mirman & Holt, 2006; Samuel, 1996, 2001; but see McQueen, Norris & Cutler, 2006; Vroomen, van Linden, de Gelder & Bertelson, 2007).

In the following section, we will explore lexical activation within the model using specific examples guided by the activation principles outlined above. Specifically, we will focus on (i) the model’s ability to simulate non-selective language activation, (ii) the influence that ortho-lexical and semantic information have on phono-lexical processing, and (iii) differential patterns of activation for cognates and false-cognates. In addition, we will examine how the integration of visual information affects language activation and explore a potential method for maintaining language separation during comprehension.

Language co-activation in the BLINCS model

When listening to speech, bilinguals display an impressive degree of cross-linguistic interaction. A growing body of evidence suggests that a bilingual’s two languages communicate and influence one another at the levels of phonological (Ju & Luce, 2004; Marian & Spivey, 2003a, b), orthographic (Kaushanskaya & Marian, 2007; Thierry & Wu, 2007), lexical (Finkbeiner, Forster, Nicol & Nakamura, 2004; Schoonbaert, Duyck, Brysbaert & Hartsuiker, 2009), syntactic (Hartsuiker, Pickering & Veltkamp, 2004; Loebell & Bock, 2003), and semantic processing (FitzPatrick & Indefrey, 2010). Therefore, one important goal for the BLINCS model was to accurately simulate the way in which bilinguals process speech by capturing this interactivity. We tested the model by providing it with sequential phonological information and then measuring the overall activation of all the items within the phono-lexical level as a function of phonological, orthographic and semantic activation. This allowed us to rank the items that were “most active” during the entire trial. The results provided strong support for the model’s ability to capture effects of cross-linguistic activation. To illustrate these effects, Table 1 shows several target words that were provided to the model, and words that consistently ranked in the top 15% of co-activated items (at least 80 occurrences in 100 model simulations; there was a degree of variability within this cohort due to the noise in the model).

Table 1.

Examples of co-activated words in BLINCS.

| Target word | Co-activated words |
|---|---|
| *tenedor* “fork” | *tiburón* “shark”, fork, tunnel, tent |
| road | rope, race, car, *ropa* “clothes” |
| pear | chair, pan, jail, *pez* “fish” |
| *arena* “sand” | arena, *ballena* “whale”, sand, *playa* “beach” |
| pie | *pato* “duck”, pan, *pie* “foot”, *vaso* “glass”, pear |
| *hielo* “ice” | yellow, *huevo* “egg”, ice, *sol* “sun” |

Note: Spanish words are italicized. Words were considered to be consistently co-activated if they occurred in the top 15% of most-active words a minimum of 80 times out of 100 model simulations when the target was presented.
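The consistency criterion used for Table 1 can be expressed as a short counting procedure. In this sketch `toy_simulate` is a hypothetical stand-in for one noisy run of the model (fixed base activations plus bounded uniform noise). We assume that the top 15% is computed over the whole phono-lexical level and that the target is excluded from its own cohort.

```python
import math
import random
from collections import Counter
from typing import Callable

Activations = dict[str, float]


def top_cohort(acts: Activations, target: str, fraction: float = 0.15) -> set[str]:
    """Words other than the target that rank in the top `fraction` of the lexicon."""
    k = max(1, math.ceil(fraction * len(acts)))
    ranked = sorted(acts, key=acts.get, reverse=True)[:k]
    return {w for w in ranked if w != target}


def consistent_coactivates(simulate: Callable[[str], Activations], target: str,
                           n_runs: int = 100, min_count: int = 80,
                           fraction: float = 0.15) -> set[str]:
    """Words that reach the top cohort in at least `min_count` of `n_runs` noisy runs."""
    counts: Counter = Counter()
    for _ in range(n_runs):
        counts.update(top_cohort(simulate(target), target, fraction))
    return {w for w, n in counts.items() if n >= min_count}


# Hypothetical stand-in for one noisy model run.
rng = random.Random(0)
BASE = {"tenedor": 1.0, "tent": 0.8, "tunnel": 0.6, "mailbox": 0.1,
        **{f"filler{i}": 0.2 for i in range(16)}}


def toy_simulate(target: str) -> Activations:
    return {w: a + rng.uniform(-0.05, 0.05) for w, a in BASE.items()}


print(sorted(consistent_coactivates(toy_simulate, "tenedor")))  # ['tent', 'tunnel']
```

With 20 toy words the top 15% is three items, so the target plus two competitors form each run's cohort.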

These examples highlight the interactivity inherent to the BLINCS model. For example, tenedor “fork” activated a within-language onset competitor, tiburón “shark”, as well as cross-language onset competitors, tunnel and tent. This is consistent with research indicating that during speech processing, multiple candidates are active early in the listening process for both monolinguals (Marslen-Wilson, 1987; Tanenhaus, Spivey-Knowlton, Eberhard & Sedivy, 1995) and bilinguals (Marian & Spivey, 2003a, b). The model is also capable of activating rhyme cohorts during listening. For instance, pear activates chair in English, and arena “sand” activates ballena “whale” in Spanish via input from the phonological level. However, activation in BLINCS is not driven entirely by the phonological input. Consider the co-activated word vaso “glass” that accompanies the target word pie. In this instance, vaso is only active because the target word, pie, activates the word pato “duck” via a shared onset, which in turn activates vaso due to their close proximity in the phono-lexical map (note that vaso is a near-rhyme to pato). Thus, activation of vaso from pie depends upon lateral connections within the phono-lexical map. Both cases, pear to chair and pie to vaso, illustrate the BLINCS model’s ability to capture rhyme effects (Allopenna, Magnuson & Tanenhaus, 1998), either through a direct phonological match to the input or via lateral connections between rhyming items. The co-activated item analyses also indicate the influence of semantic feedback during processing in the BLINCS model. Consider the target/co-activated pairs road/car and hielo “ice”/sol “sun”. These items were co-activated by virtue of their proximity in the semantic map, since roads and cars are often associated, and ice and sun are both related to weather.
In other words, the target word road activated the semantic representation of road, which increased the activation of nearby related concepts (e.g., car), and both semantic representations passed their activation values down to their corresponding phono-lexical representations, resulting in car co-activating during presentation of road. These effects of semantic knowledge on lexical processing in the model are supported by empirical work highlighting the influence of semantic relatedness on language comprehension (Huettig & Altmann, 2005; Yee & Sedivy, 2006).

The time-course of activation in the BLINCS model

While measuring overall activation (i.e., collapsed across time) in BLINCS is informative for exploring the process of speech comprehension, the model also allows one to trace the activation of lexical items as speech unfolds. For a sequential and incremental process like spoken comprehension, looking at the relative activation of words across time provides a more nuanced measure of language co-activation. In each example, the model is given the same target word for 10 trials. Those trials are then averaged together to obtain activation curves for the target item and for items that are phonologically, orthographically, or semantically related. Each subsequent graph contains the activation of nodes at the phono-lexical level, but reflects the integrated activation of phonological, orthographic, and semantic processing.
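The averaging step can be sketched as follows. This is a minimal illustration, assuming each trial is recorded as a list of per-cycle activations (of equal length) for one phono-lexical node; `mean_curve`, `peak_cycle`, and the three toy trials (rather than ten) are hypothetical.

```python
from statistics import fmean


def mean_curve(trials: list[list[float]]) -> list[float]:
    """Average one word's per-cycle activation over several trials of equal length."""
    if len({len(t) for t in trials}) != 1:
        raise ValueError("all trials must contain the same number of cycles")
    return [fmean(values) for values in zip(*trials)]


def peak_cycle(curve: list[float]) -> int:
    """Index of the cycle at which the averaged activation is highest."""
    return max(range(len(curve)), key=curve.__getitem__)


# Hypothetical activations of the competitor "tent" over 4 cycles in 3 trials.
tent_trials = [
    [0.00, 0.30, 0.50, 0.20],
    [0.00, 0.20, 0.60, 0.30],
    [0.00, 0.40, 0.40, 0.10],
]
tent_curve = mean_curve(tent_trials)
print(peak_cycle(tent_curve))  # 2
```

Comparing the peak height and peak cycle of averaged curves is one way to quantify contrasts such as tent reaching a higher peak than tortuga.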

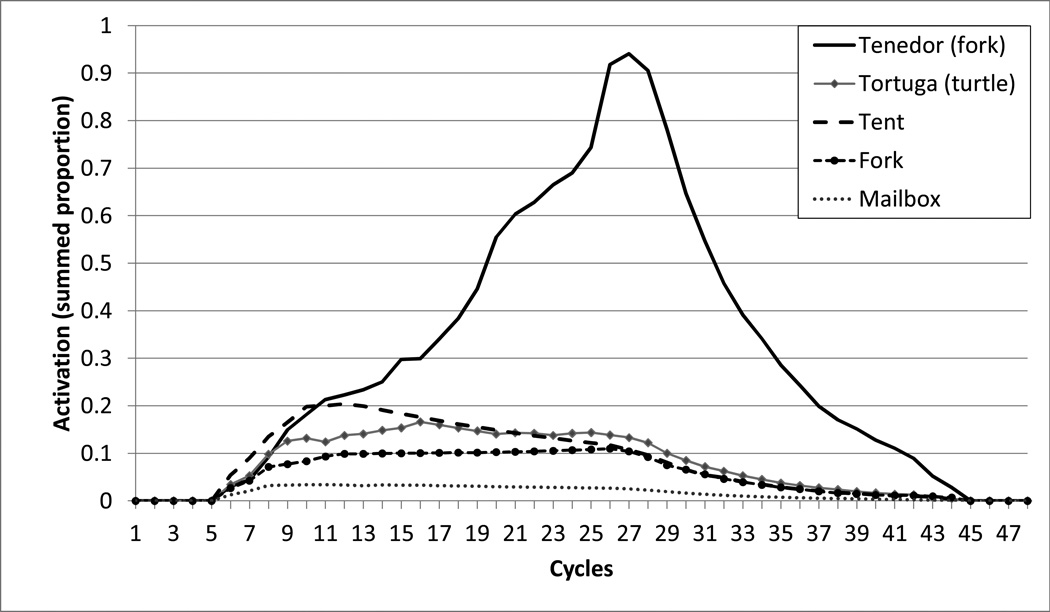

The first example (Figure 6) shows the activation curve of the target word tenedor “fork”, a within-language onset competitor, tortuga “turtle”, a cross-linguistic onset competitor, tent, the translation equivalent fork (English), and an unrelated item, mailbox. We can see an increase in activation for both within- and cross-language onset competitors until the point at which the model no longer considers those items to match the input, resulting in tent reaching a higher peak of activation than tortuga because it overlaps more with the target. Both items remain overall more active than the unrelated item, mailbox. The activation of the translation equivalent in this example is driven by feedback from the semantic level to the lexical level. First, as tenedor becomes active, it activates its semantic representation (as FitzPatrick & Indefrey, 2010, suggest, this process can begin with presentation of a word’s initial phoneme), which feeds back to the nodes for tenedor and its translation equivalent fork. Through this mechanism of feedback, the presentation of a word in one language can result in the rapid activation of its translation equivalent.

Figure 6.

Activation of the BLINCS model with the Spanish target word tenedor “fork”. The curves show simultaneous activation of a within-language competitor (tortuga “turtle”), a cross-language competitor (tent), and the English translation equivalent of the target, fork. In contrast, there was no activation of the unrelated word mailbox.

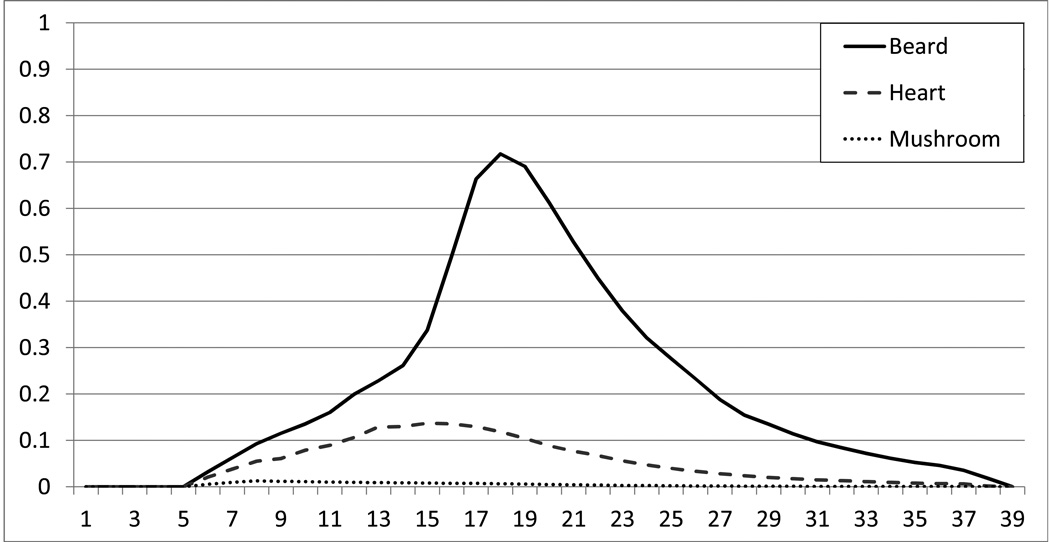

The orthographic relationships found in the proximal structure of the BLINCS model are further reflected in the activation patterns across time. Figure 7 shows an example of a model simulation with the target word beard. The activation curves again show increased activation of an item that does not share phonology with the target, but overlaps substantially in orthography (i.e., heart). The reason for this heightened activation is that beard and heart map closely in ortho-lexical space, so that when the phono-lexical representation of beard is activated, it spreads to its ortho-lexical form, subsequently activating nearby items that feed back to their phono-lexical representations. Through this pathway, ortho-lexical information is able to influence phono-lexical processing, consistent with previous research (Rastle et al., 2011; Ziegler & Ferrand, 1998).

Figure 7.

Activation of the BLINCS model with the target word beard. The curves show greater activation of an orthographic competitor, heart, relative to an unrelated item, mushroom.

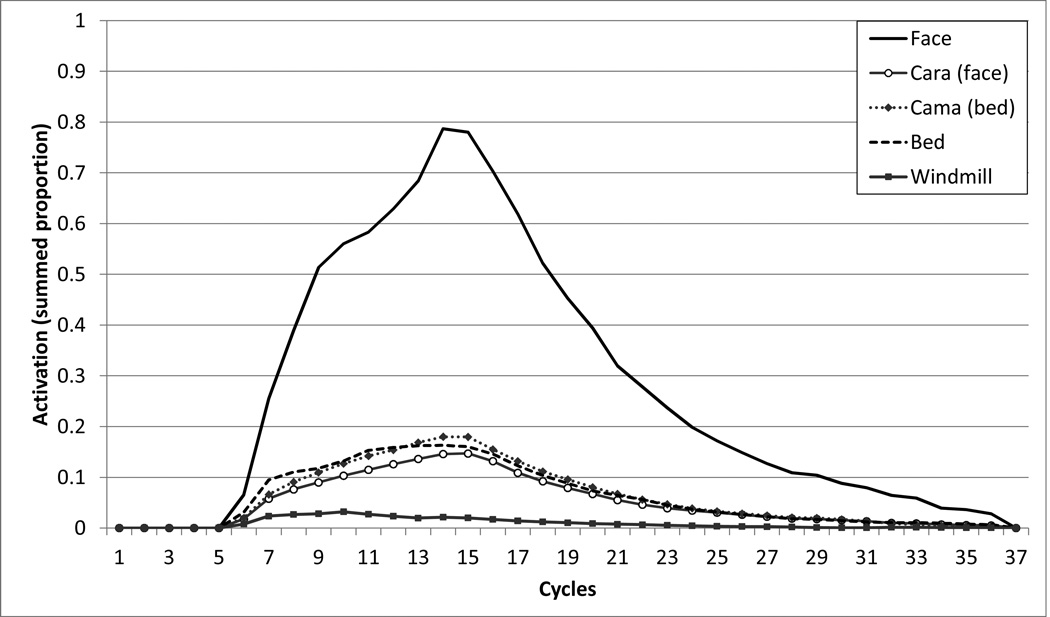

The BLINCS model also makes predictions regarding the extent to which semantic knowledge can drive activation of lexical items. Previous research using eye-tracking has suggested that semantic relatedness plays a role in language comprehension (Shook & Marian, 2012; Yee & Sedivy, 2006; Yee & Thompson-Schill, 2007). However, the extent to which semantic information can impact lexical processing is unclear. Yee and Sedivy found that when participants heard the word log, they looked more to a picture of a key, because log partially activated the word lock, which activated key due to their semantic relatedness. In other words, bottom–up phonological information activated multiple candidates (e.g., log and lock), which spread activation upward to their corresponding semantic representations. Then, at the semantic level, the representation for lock activated the representation of key via lateral connections. Feedback from the conceptual representation of key to its lexical counterpart is not necessary for participants to look more at the image of the key. While this result provides evidence for semantic processing of multiple candidates, and for lateral connections between semantically related items, the BLINCS model predicts that the semantically related information can cause the lexical forms of semantically-related items to become active as well. Consider Figure 8, which contains the activation curves for the target word, face, its translation equivalent, cara, an object that is phonologically related to the translation equivalent, cama “bed”, the translation equivalent of the phonologically related item, bed, and an unrelated item, windmill. 
The activation curves suggest that the phono-lexical representation of the English word bed, which is not directly related to face through semantics, orthography, or phonology, may nevertheless show greater activation than a word like windmill, given the target word face (see Li & Farkas, 2002, for a discussion of similar cross-language activation effects for semantically unrelated words). Thus, BLINCS makes a testable prediction about how strongly semantic knowledge can affect language co-activation in bilinguals, one that is consistent with monolingual research (Yee & Sedivy, 2006; Yee & Thompson-Schill, 2007).

Figure 8.

Activation of the BLINCS model with the target word face. The curves show greater activation of the Spanish translation equivalent, cara, a word that is phonologically related to the translation, cama “bed”, and the English translation of that phonological competitor, bed, relative to an unrelated word, windmill.

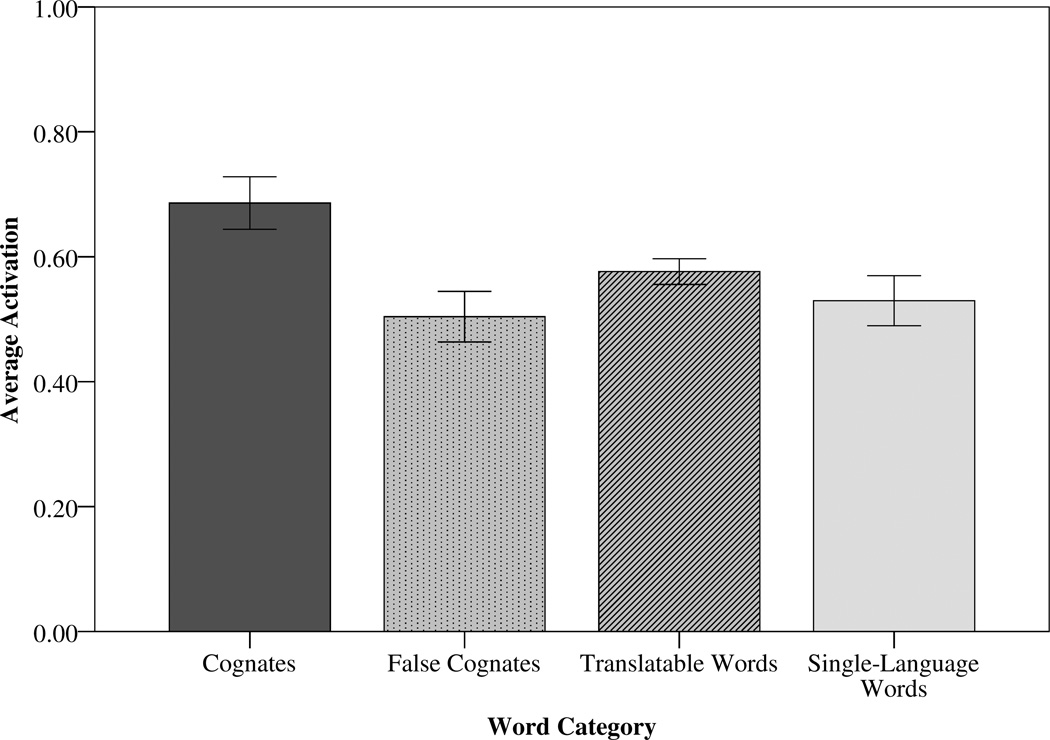

In addition to examining the sets of words that are co-activated during speech comprehension as a product of phonological, ortho-lexical, or semantic information, we were also interested in how the model activated different types of words. The BLINCS model often maps cognates and false-cognates close together in phono-lexical space, with cognates further benefitting from overlap at the semantic level. This proximity suggests an advantage for cognate activation, which is consistent with empirical findings that indicate faster or increased activation for cognates (Blumenfeld & Marian, 2007; Costa, Caramazza & Sebastián-Gallés, 2000; Dijkstra, Grainger & van Heuven, 1999). To determine how cognates are processed in BLINCS, we compared the overall activation of cognates (e.g., doctor (English) and doctor (Spanish)) to that of false-cognates (e.g., arena and arena “sand”), translatable words (e.g., pato “duck” and duck, and party and fiesta “party”), and words for which the model did not contain a translation (e.g., árbol “tree”). Figure 9 reveals that cognates show higher activation than false-cognates, translatable words, or single-language words. The graph also reveals a trend for false-cognates to have slightly lower overall activation than translatable or single-language words. This finding is consistent with priming studies indicating that false-cognates show no priming advantage relative to non-cognate words (Lalor & Kirsner, 2001; Sánchez-Casas & García-Albea, 2005), and with research showing that naming latencies are slower for false-cognates than for non-cognate words (Kroll, Dijkstra, Janssen & Schriefers, 2000), perhaps because increased competition at the lexical level reduces false-cognate activation.

Figure 9.

Activation of the BLINCS model with different types of target words. Cognate words showed greater activation than false-cognate words, translatable words (i.e., the model contained both Spanish and English translations of a single concept), and single-language words (i.e., the model contained only the Spanish or English word).

Integration of visual information in the BLINCS model

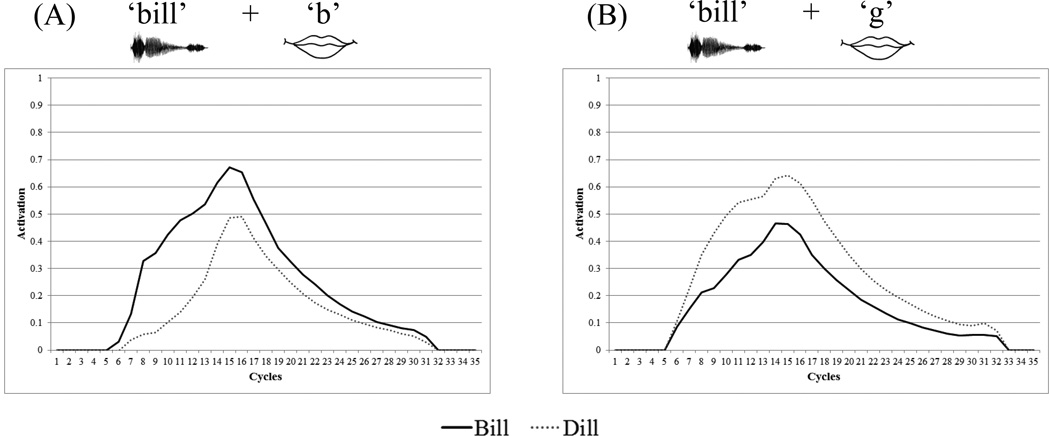

At the phonological level, visual information can influence the model’s selection of a target phoneme by providing visual articulatory information in the form of additional phonemic input. Along with the auditory input, a secondary input, meant to represent the end state of lip-movement recognition, is simultaneously integrated with the phonological vector. For example, the model may be given the word bill but simultaneously be provided with visual input that is consistent with the initial phoneme /g/, as in the word gill. When the model processes the word bill, the initial phoneme is therefore represented by the average of the two quantified vectors for /b/ and /g/, which results in activation of the phoneme /d/ as the initial phoneme. In this way, the visual information changes the percept, causing the model to select the phoneme that best fits the quantified integration of the two inputs (see Figure 10). Visual input at the phonological level can also aid perception: a classic study by Sumby and Pollack (1954) showed that corroborating visual information improves word recognition in noise. When a large amount of noise is added to the phonological level in the BLINCS model (by randomly shifting the values of three elements in the phonological vector), the system is less able to select a particular phoneme. The addition of corroborative visual input (e.g., visual information for /b/ during noisy presentation of bill) reduces the effect of the noise by making the phonological vector more similar to its originally intended target. This process is especially important for bilingual speakers, as evidence suggests that bilinguals may rely more on this sort of multisensory integration than monolinguals do (Kanekama & Downs, 2009; Marian, 2009; Navarra & Soto-Faraco, 2007).
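The averaging account of the McGurk effect, and the corrective role of congruent visual input, can be illustrated with toy feature vectors. The three-element coding below (voicing, place, manner) is hypothetical and far simpler than the phonological vectors used in BLINCS. Place is coded so that /d/ lies midway between /b/ and /g/, and the noise is a fixed shift of all three elements rather than a random one, so that the example is deterministic.

```python
import math

# Hypothetical 3-element coding (voicing, place, manner) for six stop consonants.
# Place runs from front (0.0) to back (1.0), so /d/ sits midway between /b/ and /g/.
PHONEMES = {
    "b": (1.0, 0.0, 0.0), "d": (1.0, 0.5, 0.0), "g": (1.0, 1.0, 0.0),
    "p": (0.0, 0.0, 0.0), "t": (0.0, 0.5, 0.0), "k": (0.0, 1.0, 0.0),
}


def integrate(auditory: tuple[float, ...], visual: tuple[float, ...]) -> tuple[float, ...]:
    """Average the auditory and visual phoneme vectors element by element."""
    return tuple((a + v) / 2 for a, v in zip(auditory, visual))


def nearest_phoneme(vector: tuple[float, ...]) -> str:
    """Select the phoneme whose vector is closest (Euclidean distance) to the input."""
    return min(PHONEMES, key=lambda p: math.dist(PHONEMES[p], vector))


# McGurk-style case: auditory /b/ with visual /g/ is perceived as /d/.
print(nearest_phoneme(integrate(PHONEMES["b"], PHONEMES["g"])))  # d

# Noise: a fixed, hypothetical shift of all three elements of auditory /b/.
noisy_b = tuple(x + s for x, s in zip(PHONEMES["b"], (0.4, 0.3, -0.3)))
print(nearest_phoneme(noisy_b))                            # d (misperceived)
print(nearest_phoneme(integrate(noisy_b, PHONEMES["b"])))  # b (restored by visual /b/)
```

Averaging with a congruent visual vector halves the distance between the noisy input and the intended phoneme, which is why corroborating visual input helps in noise under this scheme.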

Figure 10.

Activation of the words bill and dill in the BLINCS model. Panel A shows auditory presentation of the word bill when accompanied by consistent visual information (the initial phoneme /b/ as in bill). Panel B shows auditory presentation of the word bill when accompanied by inconsistent visual information (the initial phoneme /g/ as in gill). The curves show that in the inconsistent case (B), dill is activated more than bill, which reflects the integration of the auditory phoneme /b/ with the visual phoneme /g/ resulting in perception of the phoneme /d/, as in dill (i.e., the McGurk effect; McGurk & MacDonald, 1976).

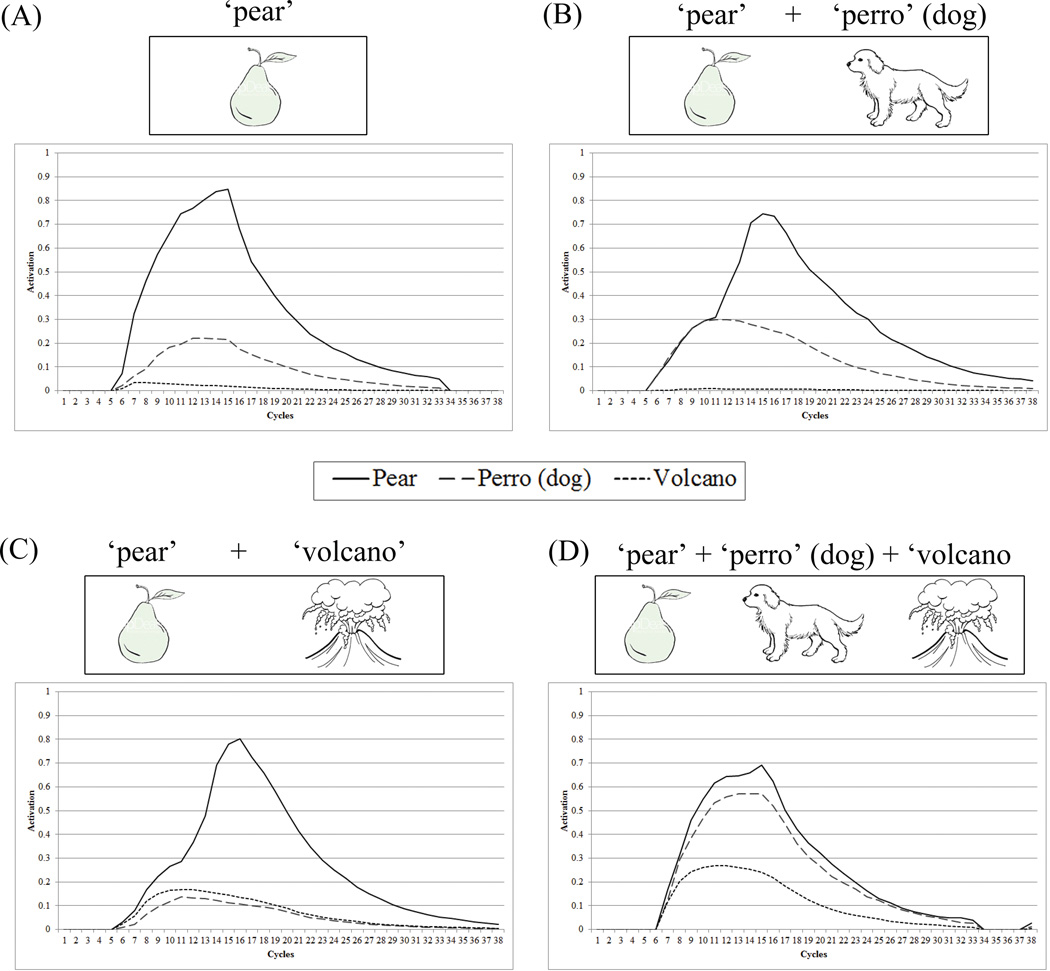

The BLINCS model also contains a mechanism for constraining the activation of words based on the presence of items in the visual scene. Compelling evidence for a relationship between linguistic and visual input during language processing comes from the visual world paradigm (Marian & Spivey, 2003a, b; Shook & Marian, 2012; Tanenhaus et al., 1995), which measures eye-movements as an index of language activation in visual contexts. For example, visual context can constrain listeners’ syntactic interpretation of an utterance (Knoeferle et al., 2005; Spivey et al., 2002), and may play an important role in cross-linguistic lexical activation (Bartolotti & Marian, 2012; Shook & Marian, 2012). Furthermore, given sufficient viewing time, visual scenes themselves appear capable of activating the labels for the items they contain (Huettig & McQueen, 2007; Mani & Plunkett, 2010; Meyer, Belke, Telling & Humphreys, 2007), suggesting that the visual context can boost activation of lexical items. The BLINCS model allows for the inclusion of visual activation in order to simulate the effects found in the visual world paradigm. BLINCS assumes that this visual activation is a product of connections between a visual recognition system and the semantic level; therefore, BLINCS can constrain lexical access by providing additional activation directly to nodes at the semantic level that correspond to items that the visual-input module indicates as currently visible. Here, the visual input module is meant to simulate the activation constraint born from presenting a limited set of visual stimuli during language processing and does not reflect the sensory or perceptual processes involved in visual recognition (i.e., shape or color recognition).

The model is given a list of “visually presented” objects as well as phonological input, and activation begins simultaneously at the stages of semantic access and phonological processing. Thus, as the phonological input enters the system and feeds upward to the phono-lexical level, feedback from the semantic level down to the phono-lexical level increases activation of the items that are present in the visual display. For example, Figure 11 shows that presenting the English word pear with an image of a pear activates both pear and perro “dog”, but not volcano (panel A), and that adding an image of a dog results in greater activation of perro “dog” than when only the pear is present (panel B). Finally, including an image of a phonologically unrelated item (a volcano, given the target word pear) results in activation of the lexical entry volcano (panel C). This mechanism can explain how linguistic activation may be constrained by objects in a visual scene, and how visual context can potentially access the lexical labels for those objects before, or without, linguistic input. The current framework also assumes that visually presented objects will likely activate lexical items in both languages: since the additional activation occurs at the semantic level, the semantic representation can feed back to both English and Spanish phono-lexical items. Further empirical and computational research will be necessary to determine the extent and the exact manner in which visual information affects bilingual language activation.
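The visual-context mechanism can be sketched as a fixed boost to the semantic nodes of visible objects, followed by semantic feedback to every word form linked to each concept. The toy lexicon, boost size, and feedback weight below are hypothetical; the Spanish forms pera and volcán are illustrative and not taken from the BLINCS lexicon.

```python
# Hypothetical toy lexicon: one shared concept per meaning, linked to an
# English and a Spanish phono-lexical form.
LEXICALIZATIONS = {
    "PEAR": ("pear", "pera"),
    "DOG": ("dog", "perro"),
    "VOLCANO": ("volcano", "volcán"),
}


def apply_visual_context(semantic: dict[str, float], visible: set[str],
                         boost: float = 0.2) -> dict[str, float]:
    """Add a fixed boost to the semantic nodes of objects in the visual display."""
    return {c: a + (boost if c in visible else 0.0) for c, a in semantic.items()}


def semantic_feedback(phono: dict[str, float], semantic: dict[str, float],
                      weight: float = 0.5) -> dict[str, float]:
    """Feed a proportion of each concept's activation back to both of its word forms."""
    out = dict(phono)
    for concept, forms in LEXICALIZATIONS.items():
        for form in forms:
            out[form] = out.get(form, 0.0) + weight * semantic.get(concept, 0.0)
    return out


# Hypothetical state after hearing the onset of "pear".
phono = {"pear": 0.8, "perro": 0.3}
semantic = {"PEAR": 0.6, "DOG": 0.1, "VOLCANO": 0.0}

pear_only = semantic_feedback(phono, apply_visual_context(semantic, {"PEAR"}))
pear_dog = semantic_feedback(phono, apply_visual_context(semantic, {"PEAR", "DOG"}))
print(round(pear_only["perro"], 2), round(pear_dog["perro"], 2))  # 0.35 0.45
```

Because the boost enters at the shared semantic level, it reaches the English and Spanish forms alike, in line with the assumption that visually presented objects activate lexical items in both languages.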

Figure 11.

Activation of the words pear, perro “dog”, and volcano in the BLINCS model during auditory presentation of the target word pear, accompanied by visual presentation of (A) pear alone, (B) pear and perro “dog”, (C) pear and volcano, or (D) pear, perro “dog”, and volcano.

Language identification and control

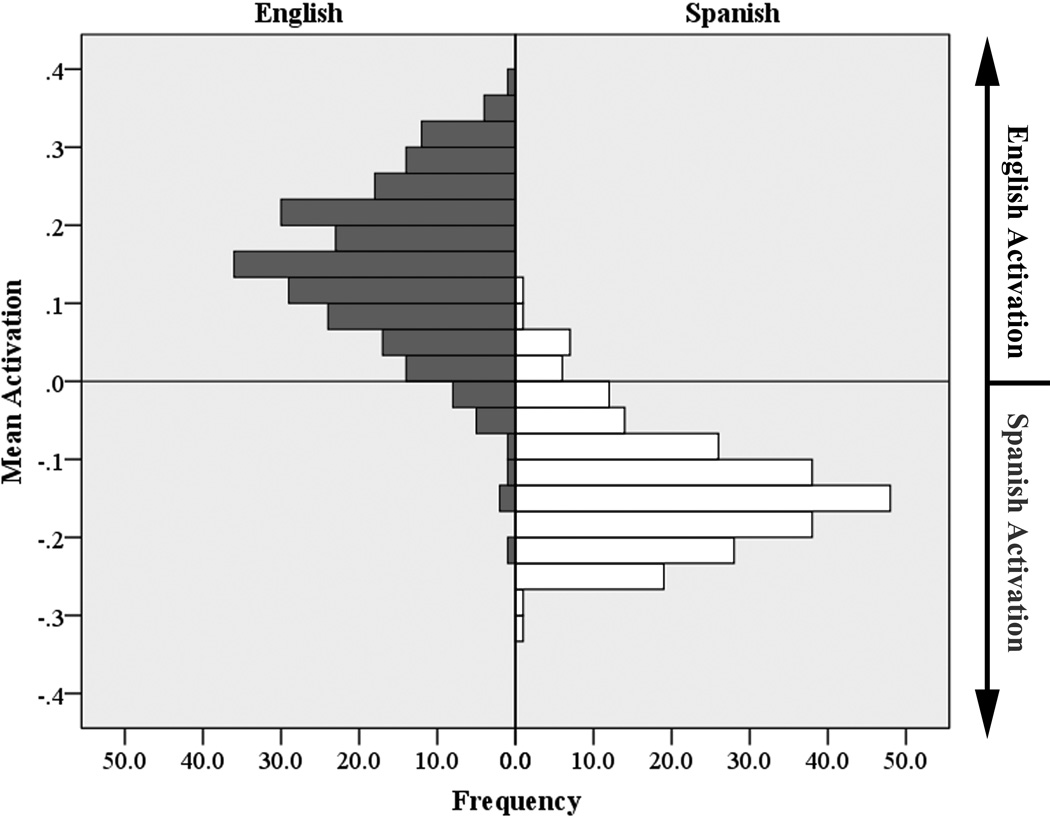

The structure of the BLINCS model, like that of the SOMBIP (Li & Farkas, 2002), provides a means of organizing the lexicons of Spanish and English without explicitly tagging or labeling the items during training. Specifically, the phono-lexical and ortho-lexical levels showed separation of the two languages within the structure of the self-organizing maps, such that same-language words tended to cluster together, with the notable exception of cognates and false-cognates. This separation has implications for the process of language selection and control in bilinguals. One crucial function that may involve the use of language tags is inhibitory control of one of a bilingual’s two languages during processing. According to Green’s (1998) Inhibitory Control model (IC model), the activation of an entire lexicon can be dampened during processing by an inhibitory mechanism, which identifies the language for suppression through the use of language tags. In contrast, Li (1998) suggests that the language system may develop so that lexical items that belong to the same language are grouped together by a learning mechanism (much like in the SOMBIP and the present BLINCS model), where the language system can draw associations between patterns of localized activation and the form of the input. In other words, if a given word consistently activates a set of other words, the language system may associate those items together, resulting in language-specific patterns of activation.

Although BLINCS is able to use language tags as a mechanism for language selection, the present implementation of the model can also capture associations between words within a single language and use these associations to guide language selection. We presented the model with each of the 480 words it had received during training and measured the overall activation of both English and Spanish words. By subtracting the average activation of Spanish words from that of English words and dividing by the average activation of all nodes, we calculated a language activation score. A positive language activation score indicates a system tilted towards English: given the target word, nodes representing English words were more active on average than nodes representing Spanish words. Figure 12 shows the histogram of language activation scores for each word, separated by target language. Statistical analyses on the model-generated data revealed a significant difference between English words (N = 240, M = 0.147, SD = 0.1) and Spanish words (N = 240, M = −0.135, SD = 0.08), t(478) = 34.13, p < .001. When English words are presented as targets, the model is more likely to activate other English words, and when Spanish words are presented, BLINCS is more likely to activate Spanish words. We also measured the top 10 co-activated words for each word in the model; when the model was given an English word, the average proportion of Spanish words in the cohort was 21.1% (SD = 0.19), and when given a Spanish word, the cohort was 69.5% (SD = 0.22) Spanish, t(478) = −24.8, p < .001. These levels of language separation differ from those found in natural language, where bilinguals recognize the language to which a word belongs with high accuracy. This difference is likely due to the fact that in natural language situations, listeners can draw upon previous experience and on both the linguistic and environmental context for clues about language membership.
Despite these differences, the model is successful in its ability to utilize localized activation patterns to determine which lexical items to suppress (e.g., a portion of the words with relatively low activation), resulting in a generalized pattern of specific-language inhibition (consistent with Li, 1998).
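The language activation score and the reported group comparison can be reproduced in a few lines. The score follows the definition given above (mean English activation minus mean Spanish activation, divided by the mean activation of all nodes). For the comparison we assume a pooled-variance Student's t test, which is consistent with the reported df of 478 (240 + 240 − 2) but is not stated explicitly. Recomputing from the rounded means and SDs gives roughly 34.1 rather than 34.13, a gap we attribute to that rounding.

```python
import math
from statistics import fmean


def language_activation_score(activations: dict[str, float],
                              language_of: dict[str, str]) -> float:
    """(mean English activation - mean Spanish activation) / mean activation of all nodes.

    Positive values indicate that English nodes were more active on average.
    """
    english = [a for w, a in activations.items() if language_of[w] == "en"]
    spanish = [a for w, a in activations.items() if language_of[w] == "es"]
    overall = fmean(activations.values())
    if overall == 0:
        raise ValueError("score is undefined when mean activation is zero")
    return (fmean(english) - fmean(spanish)) / overall


def pooled_t(m1: float, s1: float, n1: int,
             m2: float, s2: float, n2: int) -> tuple[float, int]:
    """Student's two-sample t statistic with pooled variance, and its degrees of freedom."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    t = (m1 - m2) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df


# Recompute the comparison from the summary statistics reported above.
t, df = pooled_t(0.147, 0.1, 240, -0.135, 0.08, 240)
print(df, round(t, 1))  # 478 34.1
```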

Figure 12.

Histogram of language activation scores for each word. Scores above zero reflect greater overall activation of English lexical items, while scores below zero reflect greater overall activation of Spanish lexical items. The mean score for English target words was 0.147 (SD = 0.1) and the mean score for Spanish target words was −0.135 (SD = 0.08), indicating that English and Spanish words activated items in their corresponding languages to a similar degree.

The language system may retain this localized activation from word to word, so that if a word in one language immediately follows a word from the other language, as with a code-switch, the second word will be more difficult to access. This is consistent with evidence for a processing cost associated with switching languages (Costa & Santesteban, 2004; Costa, Santesteban & Ivanova, 2006; Gollan & Ferreira, 2009; Meuter & Allport, 1999). This process could occur either through a mechanism of general inhibition, in which the lexical nodes of one language are suppressed, or because the lexical items of the language currently in use are more highly active on average.

One way to implement a general inhibitory mechanism is to mathematically reduce the relative activation of one language in order to promote activation or selection of the other. Individual items can be inhibited in an attempt to bring the language activation score to zero (equivalent English and Spanish activation). In this way, if one language is dominant relative to the other, more inhibition would be required to normalize the score when the dominant language is the one being suppressed, which would effectively simulate a switch-cost asymmetry such as the one found by Meuter and Allport (1999). Conversely, a balanced model may not show such an asymmetry, as the amount of suppression required would be equal across languages (see Costa & Santesteban, 2004).
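The asymmetry argument can be illustrated with a small sketch. It assumes, as a simplification, that the to-be-suppressed language is inhibited by one uniform proportional reduction applied to all of its nodes. The text does not specify how individual items are chosen or how strongly each is inhibited, so `inhibition_needed`, `suppress`, and the mean activations used below are hypothetical.

```python
def inhibition_needed(mean_suppressed: float, mean_other: float) -> float:
    """Proportional reduction that lowers the suppressed language's mean activation
    to the other language's mean, i.e., a language activation score of zero."""
    if mean_suppressed <= mean_other:
        return 0.0  # already balanced or tilted the other way: nothing to suppress
    return 1.0 - mean_other / mean_suppressed


def suppress(activations: dict[str, float], words: set[str], amount: float) -> dict[str, float]:
    """Scale every node in `words` down by the same proportion `amount`."""
    return {w: a * (1.0 - amount) if w in words else a for w, a in activations.items()}


# Hypothetical mean activations: a strongly tilted versus a nearly balanced system.
dominant = inhibition_needed(mean_suppressed=0.6, mean_other=0.3)
balanced = inhibition_needed(mean_suppressed=0.4, mean_other=0.35)
print(round(dominant, 3), round(balanced, 3))  # 0.5 0.125
```

Under this scheme, the more one language outweighs the other, the larger the reduction needed to reach a score of zero, which is the source of the predicted asymmetry.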

Alternatively, the model may not require a general suppression mechanism for lexical selection at this level. Rather than depending on suppression of lexical items, the language system may be represented by a threshold model, where lexical items race to reach a selection threshold. In BLINCS, a switch-cost might occur in the following way: Prior input to the model may result in localized activation of one language. When a new word from the unused language is presented to the model, that new word is forced to compete with items from the previously used language that are already active, resulting in delayed processing. A switch-cost asymmetry could arise as a consequence of the localized activation for each language, and reactive lateral inhibition at the level of the lexicon. Given sustained L1 input, activation in the model would be more localized (though not completely) to other L1 words, which would inhibit each other through lateral connections. Upon switching to L2, the L2 word would compete with L1 words, resulting in a switch-cost. In contrast, given sustained input in L2, relative activation in the model would be more balanced for L1 and L2 candidates than in the previous case where the target word was in L1. The co-activated L1 words would again reactively inhibit one another, so that upon an L2-to-L1 switch, the L1 word would compete with L2 candidates, and encounter a language system where its L1 neighbors are potentially primed for lateral inhibition, or are less able to facilitate the target (by virtue of proximity in the map) due to being previously inhibited.
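A minimal race-to-threshold sketch shows how residual activation alone can delay the target on a switch trial. It collapses the lexicon to one target node and one pooled competitor standing in for the previously used language; the update rule, rate, inhibition, decay, and threshold values are all hypothetical and are not taken from BLINCS. The sketch illustrates the switch-cost itself, not the asymmetry between L1 and L2.

```python
def cycles_to_threshold(residual_competitor: float, rate: float = 0.1,
                        inhibition: float = 0.2, decay: float = 0.9,
                        threshold: float = 0.8, max_cycles: int = 1000) -> int:
    """Cycles until the target reaches threshold while competing with residual activation.

    Each cycle (using the previous cycle's values):
      target     += rate * (1 - target) - inhibition * competitor
      competitor  = decay * competitor - inhibition * target
    Both activations are clamped to [0, 1].
    """
    target, competitor = 0.0, residual_competitor
    for cycle in range(1, max_cycles + 1):
        new_target = target + rate * (1.0 - target) - inhibition * competitor
        new_competitor = decay * competitor - inhibition * target
        target = min(1.0, max(0.0, new_target))
        competitor = min(1.0, max(0.0, new_competitor))
        if target >= threshold:
            return cycle
    raise RuntimeError("target never reached threshold")


no_switch = cycles_to_threshold(residual_competitor=0.0)  # 16 cycles
switch = cycles_to_threshold(residual_competitor=0.5)
print(no_switch, switch)
```

Because the competitor only ever subtracts from the target's growth, a word that follows input in the other language always needs at least as many cycles as one that does not, and here strictly more.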

A third possibility is that a language switch-cost is not necessarily inherent to the language system. Finkbeiner, Almeida, Janssen and Caramazza (2006) had bilinguals name pictures in their L1, preceded by a digit-naming trial (similar to Meuter & Allport, 1999) in either their L1 or L2. They found that bilinguals did not show a switch cost; they responded equally quickly on switch and non-switch trials (though a switch-cost was found when participants switched between L1 and L2 for naming digits). In addition, when participants are allowed to switch voluntarily, they do not show a switch-cost and sometimes even show facilitation of the response (Gollan & Ferreira, 2009). It is therefore possible that the switch-costs found in previous studies are not intrinsic to language processing, but reflect participants’ expectation of a language change. Under this scenario, the BLINCS model would show switch-costs only when primed to do so by a context- or task-dependent module apart from the language system (which would be compatible with either a suppression-based or an activation/threshold-based account of switch-costs). Future computational and empirical work will need to determine the exact nature of these effects.

Irrespective of the underlying processes responsible for language-specific activation or suppression, the BLINCS model is capable of distinguishing between languages (a necessary feat for both accounts) without the need for explicit tags or nodes by learning the characteristics of the input and using that information to define the layout of the language system.

Conclusions

In summary, the Bilingual Language Interaction Network for Comprehension of Speech – the BLINCS model – is a highly-interactive network of dynamic, self-organizing systems, aimed at capturing the natural phenomena associated with the processing of spoken language in bilinguals. The BLINCS model makes predictions about both the underlying architecture of the bilingual language system, as well as the way in which these structures interact when processing spoken information.

For example, the architecture of the model assumes a shared phonological system, where there is no clear delineation between Spanish and English phonemes. This is consistent with research suggesting that bilinguals have shared phonological representations (Roelofs, 2003; Roelofs & Verhoef, 2006). However, the organization of the phonological level can still lead to language-specific activation. Consider the phonemes /x/ and /ɣ/. Since these phonemes are present in Spanish but not English, when they are encountered, it is much more likely that Spanish words will be activated at the phono-lexical level than English words. Furthermore, these two phonemes map closely in phonological space and can activate one another, further reinforcing a bias towards Spanish word activation. This suggests that when pockets of language specificity are found in the phonological level, they are likely to involve phonemes that are not shared across languages. Therefore, two languages with highly distinct, non-overlapping phonological inventories might show more separation at the phonological level.

At the lexical level, BLINCS assumes that a bilingual’s two languages are separated but integrated. The model separates words at the phono-lexical level into language regions according to the phonotactic probabilities of the input. However, it does not separate the languages so strictly that they are unable to interact. Indeed, while there are distinct language “islands” within the map, cross-language items that overlap very highly in phonological form (e.g., cognates and false-cognates) tend to be placed at the boundaries between language regions, which may account for the facilitative advantages found for cognates. For example, cognates may be less susceptible to dampening effects of linguistic context (e.g., suppression of an unused language) by virtue of being able to receive facilitation from nearby items in their own language, and from cross-language items.

In the ortho-lexical level, we see a separate but integrated structure similar to that of the phono-lexical level, but with a higher degree of overlap than is seen in the phono-lexical SOM. Of course, the structure of the ortho-lexical level is influenced by the degree of difference between the two languages’ orthographies. For Spanish and English, whose orthographies are very similar, we may see greater integration of ortho-lexical forms than if we were to train the model on languages with more varied orthographies (e.g., Russian and English).

As in the BIA+ model (Dijkstra & van Heuven, 2002), BLINCS assumes a single semantic level with a shared set of conceptual representations across languages. This assumption is supported by empirical research suggesting that translation equivalents share semantic representations (Kroll & De Groot, 1997; Salamoura & Williams, 2007; Schoonbaert et al., 2007). However, conceptual representations across a bilingual’s two languages may not map one-to-one. Languages can carry cultural information that may influence conceptual feature representations. For example, Pavlenko and Driagina (2007, as reported in Pavlenko, 2009, p. 134) found that native Russian speakers differentiate between general anger (zlit’sia) and anger at a specific person (serdit’sia), whereas English-native learners of Russian do not; instead, the learners consistently use serdit’sia, effectively collapsing the two categories of anger. This finding suggests that L2 learners and native speakers of Russian may have somewhat distinct representations of anger, since the native speakers make a category distinction that the learners do not. Similar patterns have been observed for categories of concrete objects (Ameel, Storms, Malt & Sloman, 2005; Graham & Belnap, 1986). Moreover, even when conceptual representations are shared across languages, the strength of the connections between them may differ. Dong, Gui and MacWhinney (2005) found a stronger connection between the Mandarin words xin niang “bride” and hong se “red” than between their English translation equivalents, since red is a common color for wedding attire in China.
Taken together, evidence for both shared and language-specific conceptual representations suggests a highly dynamic semantic system. In the future, BLINCS could be used to investigate the degree to which conceptual representations are shared across languages and how this overlap affects processing.
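
The distinction between sharing a concept node and sharing the strength of its links can be sketched with two tables: a many-to-one mapping from words onto shared concept nodes, and language-specific association weights between concepts. The weights 0.6 and 0.1 are invented for illustration and are not estimates from Dong, Gui and MacWhinney (2005); the one-step `spread` function is a hypothetical simplification, not the way BLINCS itself necessarily implements semantic associations.

```python
# Shared conceptual layer: translation equivalents map onto the same concept node.
WORD_TO_CONCEPT = {"bride": "BRIDE", "xin niang": "BRIDE",
                   "red": "RED", "hong se": "RED"}
LANGUAGE = {"bride": "en", "red": "en", "xin niang": "zh", "hong se": "zh"}

# Hypothetical, language-specific association strengths between shared concepts
# (illustrative values, not estimates from Dong, Gui & MacWhinney, 2005).
ASSOCIATION = {
    "zh": {("BRIDE", "RED"): 0.6},
    "en": {("BRIDE", "RED"): 0.1},
}


def spread(word):
    """Activate the word's shared concept, then pass activation along the
    association links of the word's language (one step, no decay)."""
    concept = WORD_TO_CONCEPT[word]
    activation = {concept: 1.0}
    for (source, target), weight in ASSOCIATION[LANGUAGE[word]].items():
        if source == concept:
            activation[target] = activation.get(target, 0.0) + weight
    return activation


if __name__ == "__main__":
    print("xin niang ->", spread("xin niang"))
    print("bride     ->", spread("bride"))
```

Both words activate the same BRIDE node, but the Mandarin word passes more activation on to RED, which is the pattern the shared-but-differently-connected account predicts.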

BLINCS also models how language activation unfolds over time during bilingual speech comprehension. Simulations indicate that the model can account for, and make predictions about, (i) activation of onset competitors within and between languages, (ii) activation of rhyme competitors within and between languages, (iii) the impact of ortho-lexical information on phono-lexical processing, (iv) the interaction between semantic and phono-lexical representations, and (v) increased or faster activation of cognates and false cognates. BLINCS also allows input from the visual domain to influence these processes, and it provides a potential means of separating a bilingual’s two languages without explicit language tags or nodes. These effects arise from combining self-organizing maps, which capture the relationships between representations by placing them in physical space, with a connectionist activation framework, which captures how those representations interact within and across levels of processing. The simulations reported in the current paper are illustrative examples of the model’s performance and offer an initial overview of its ability to capture bilingual language processing. Future research will further test the viability of BLINCS by directly comparing model simulations with empirical data.
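
The time course behind points (i) and (ii) can be illustrated with a deliberately simplified sketch. Phonemes arrive one per time step over a shared inventory, each word node receives an aligned match score as bottom-up input, and activation follows a generic leaky integrator. This is not BLINCS's own activation equation: the mini-lexicon, the ASCII phoneme codes, the match score, and `rate` are all assumptions, and the sketch omits within-level competition and the ortho-lexical, semantic, and visual connections.

```python
# Hypothetical mini-lexicon over one shared phoneme inventory (ASCII stand-ins
# for IPA). Language labels only matter for reading the output.
LEXICON = {
    "candy": ("en", "k a n d i".split()),
    "candle": ("en", "k a n d l".split()),  # within-language onset competitor
    "handy": ("en", "h a n d i".split()),   # within-language rhyme competitor
    "canto": ("es", "k a n t o".split()),   # between-language onset competitor
    "dog": ("en", "d o g".split()),         # unrelated word
}


def bottom_up(word_phones, heard):
    """Aligned match score in [-1, 1]: (matches - mismatches) / word length."""
    pairs = list(zip(word_phones, heard))
    matches = sum(w == h for w, h in pairs)
    mismatches = len(pairs) - matches
    return (matches - mismatches) / len(word_phones)


def simulate(input_phones, settle_steps=3, rate=0.5):
    """One phoneme arrives per step; each word node leakily integrates its input.

    Returns a list of {word: activation} snapshots, one per time step.
    """
    activation = {word: 0.0 for word in LEXICON}
    history = []
    for t in range(1, len(input_phones) + settle_steps + 1):
        heard = input_phones[:t]  # slicing past the end returns the full input
        for word, (_, phones) in LEXICON.items():
            net = bottom_up(phones, heard)
            activation[word] += rate * (net - activation[word])
        history.append(dict(activation))
    return history


if __name__ == "__main__":
    for t, snapshot in enumerate(simulate("k a n d i".split()), start=1):
        print(t, {w: round(a, 2) for w, a in snapshot.items()})
```

In this toy run, candy, candle, and Spanish canto rise together while the shared onset is heard; canto falls back after /d/, candle after /i/, and the rhyme competitor handy gains activation only late, broadly echoing the onset-before-rhyme pattern reported by Allopenna, Magnuson and Tanenhaus (1998).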

In the current paper we have outlined BLINCS, a combined connectionist and distributed model of bilingual spoken language comprehension. Although BLINCS captures many phenomena related to bilingual language processing, we hope to expand and refine it in future implementations. For example, the model can easily be adapted to larger vocabularies, new pairs of spoken languages, or finer structural details (e.g., voice-onset time) simply by adjusting the amount or form of the input. Additionally, the effects of linguistic context (e.g., prior language activation), non-linguistic context (e.g., expectation, goal orientation), and more detailed visual information on language processing deserve exploration. Understanding these effects will help determine whether control mechanisms such as language-specific suppression (as proposed by the IC Model; Green, 1998) are necessary for language selection. Finally, recent work shows how self-organizing maps can be trained to simulate changes in linguistic experience or ability, such as the relative proficiency of the two languages or the age of second language acquisition (Miikkulainen & Kiran, 2009; Zhao & Li, 2010), or changes in bilingual processing due to aphasia (Grasemann, Kiran, Sandberg & Miikkulainen, 2011) or lesions (Li, Zhao & MacWhinney, 2007). Models like BLINCS could capture subtle changes in activation patterns as a function of individual differences in bilingual experience, and thereby enhance our understanding of both the structure and function of the bilingual language system.
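
Adjusting "the amount or form of the input" to mimic differences in experience can be sketched as an exposure schedule used to build a map's training stream. In the hypothetical `training_stream` below, `l2_share` stands in for relative L2 exposure or proficiency and `l2_onset` (a number of initial L1-only tokens) stands in for age of L2 acquisition. Both parameters, and the idea of passing the stream to a SOM trainer, are illustrative assumptions rather than the procedure used in BLINCS.

```python
import random


def training_stream(l1_words, l2_words, n_tokens, l2_share, l2_onset=0, seed=0):
    """Hypothetical exposure schedule for training a bilingual map.

    l2_share: probability that a token after l2_onset is drawn from the L2
              (a stand-in for relative exposure / proficiency).
    l2_onset: number of initial L1-only tokens (a stand-in for L2 age of acquisition).
    """
    rng = random.Random(seed)
    stream = []
    for i in range(n_tokens):
        use_l2 = i >= l2_onset and rng.random() < l2_share
        stream.append(rng.choice(l2_words if use_l2 else l1_words))
    return stream


if __name__ == "__main__":
    early = training_stream(["dog", "cat"], ["perro", "gato"], 20, l2_share=0.5)
    late = training_stream(["dog", "cat"], ["perro", "gato"], 20, l2_share=0.5, l2_onset=10)
    print("early L2:", early)
    print("late L2: ", late)
```

Training otherwise identical maps on streams that differ only in `l2_share` or `l2_onset` is one way to probe proficiency or age-of-acquisition effects, similar in spirit to the SOM studies cited above.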


Footnotes

The authors would like to thank the members of the Northwestern Bilingualism and Psycholinguistics Laboratory, as well as Dr. Ping Li and two anonymous reviewers for their helpful comments. This research was funded in part by grant R01HD059858 to the second author, and the John D. and Lucille H. Clarke Scholarship to the first author.

Dijkstra and van Heuven further extended the model to better capture the effect of semantic and phonological information on visual word recognition (SOPHIA, or Semantic, Orthographic, & Phonological Interactive Activation, described in Thomas & van Heuven, 2005).

References

- Allopenna P, Magnuson J, Tanenhaus M. Tracking the time course of spoken word recognition using eye movements: Evidence for continuous mapping models. Journal of Memory and Language. 1998;38:419–439.

- Ameel E, Storms G, Malt B, Sloman S. How bilinguals solve the naming problem. Journal of Memory and Language. 2005;52:309–329.

- Bartolotti J, Marian V. Language learning and control in monolinguals and bilinguals. Cognitive Science. 2012;36(6):1129–1147. doi: 10.1111/j.1551-6709.2012.01243.x.

- Bates E, Devescovi A, Wulfeck B. Psycholinguistics: A cross-language perspective. Annual Review of Psychology. 2001;52:369–396. doi: 10.1146/annurev.psych.52.1.369.

- Bitan T, Burman DD, Chou T-L, Lu D, Cone NE, Cao F, Bigio JD, Booth JR. The interaction between orthographic and phonological information in children: An fMRI study. Human Brain Mapping. 2007;28(9):880–891. doi: 10.1002/hbm.20313.

- Blumenfeld H, Marian V. Constraints on parallel activation in bilingual spoken language processing: Examining proficiency and lexical status using eye-tracking. Language and Cognitive Processes. 2007;22(5):633–660.

- Brysbaert M, New B. Moving beyond Kucera and Francis: A critical evaluation of current word frequency norms and the introduction of a new and improved word frequency measure for American English. Behavior Research Methods. 2009;41(4):977–990. doi: 10.3758/BRM.41.4.977.

- Burgess C, Lund K. Modelling parsing constraints with high-dimensional context space. Language and Cognitive Processes. 1997;12(2):177–210.

- Chater N, Christiansen MH. Computational models in psycholinguistics. In: Sun R, editor. Cambridge handbook of computational cognitive modeling. New York: Cambridge University Press; 2008. pp. 477–504.

- Cleland AA, Gaskell MG, Quinlan PT, Tamminen J. Frequency effects in spoken and visual word recognition: Evidence from dual-task methodologies. Journal of Experimental Psychology: Human Perception and Performance. 2006;32(1):104–119. doi: 10.1037/0096-1523.32.1.104.

- Costa A, Caramazza A, Sebastián-Gallés N. The cognate facilitation effect: Implications for models of lexical access. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2000;26(5):1283–1296. doi: 10.1037//0278-7393.26.5.1283.

- Costa A, Santesteban M. Lexical access in bilingual speech production: Evidence from language switching in highly proficient bilinguals and L2 learners. Journal of Memory and Language. 2004;50(4):491–511.

- Costa A, Santesteban M, Ivanova I. How do highly proficient bilinguals control their lexicalization process? Inhibitory and language-specific selection mechanisms are both functional. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2006;32:1057–1074. doi: 10.1037/0278-7393.32.5.1057.

- Cuetos F, Glez-Nosti M, Barbón A, Brysbaert M. SUBTLEX-ESP: Spanish word frequencies based on film subtitles. Psicológica. 2011;32:133–143.

- De Groot AMB, Kroll J, editors. Tutorials in bilingualism: Psycholinguistic perspectives. Mahwah, NJ: Lawrence Erlbaum; 1997.

- Dijkstra T, Grainger J, van Heuven WJB. Recognition of cognates and interlingual homographs: The neglected role of phonology. Journal of Memory and Language. 1999;41:496–518.

- Dijkstra T, van Heuven WJB. The BIA model and bilingual word recognition. In: Grainger J, Jacobs AM, editors. Localist connectionist approaches to human cognition. Mahwah, NJ: Lawrence Erlbaum; 1998. pp. 189–225.

- Dijkstra T, van Heuven WJB. The architecture of the bilingual word recognition system: From identification to decision. Bilingualism: Language and Cognition. 2002;5(3):175–197.

- Dong Y, Gui S, MacWhinney B. Shared and separate meanings in the bilingual mental lexicon. Bilingualism: Language and Cognition. 2005;8(3):221–238.

- Finkbeiner M, Almeida J, Janssen N, Caramazza A. Lexical selection in bilingual speech production does not involve language suppression. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2006;32(5):1075–1089. doi: 10.1037/0278-7393.32.5.1075.

- Finkbeiner M, Forster K, Nicol J, Nakamura K. The role of polysemy in masked semantic and translation priming. Journal of Memory and Language. 2004;51(1):1–22.

- FitzPatrick I, Indefrey P. Lexical competition in nonnative speech comprehension. Journal of Cognitive Neuroscience. 2010;22(6):1165–1178. doi: 10.1162/jocn.2009.21301.

- Forster KI. Accessing the mental lexicon. In: Wales RJ, Walker E, editors. New approaches to language mechanisms. Amsterdam: North-Holland; 1976. pp. 257–287.

- Gentilucci M, Cattaneo L. Automatic audiovisual integration in speech perception. Experimental Brain Research. 2005;167(1):66–75. doi: 10.1007/s00221-005-0008-z.

- Gollan TH, Ferreira VS. Should I stay or should I switch? A cost-benefit analysis of voluntary language switching in young and aging bilinguals. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2009;35(3):640–665. doi: 10.1037/a0014981.

- Graham R, Belnap K. The acquisition of lexical boundaries in English by native speakers of Spanish. International Review of Applied Linguistics. 1986;24:275–286.

- Grainger J, Dijkstra T. On the representation and use of language information in bilinguals. In: Harris RJ, editor. Cognitive processing in bilinguals. vol. 83. Amsterdam: Elsevier; 1992. pp. 207–220.

- Grasemann U, Kiran S, Sandberg C, Miikkulainen R. In: Laaksonen J, Honkela T, editors. Proceedings of WSOM11, 8th Workshop on Self-organizing Maps. Espoo: Springer Verlag; 2011. pp. 207–217.

- Green DW. Mental control of the bilingual lexico-semantic system. Bilingualism: Language and Cognition. 1998;1:67–82.

- Grosjean F. Exploring the recognition of guest words in bilingual speech. Language and Cognitive Processes. 1988;3:233–274.

- Grosjean F. Processing mixed languages: Issues, findings and models. In: De Groot AMB, Kroll J, editors. Tutorials in bilingualism: Psycholinguistic perspectives. Mahwah, NJ: Lawrence Erlbaum; 1997. pp. 225–254.

- Hartsuiker RJ, Pickering MJ, Veltkamp E. Is syntax separate or shared between languages? Cross-linguistic syntactic priming in Spanish–English bilinguals. Psychological Science. 2004;15(6):409–414. doi: 10.1111/j.0956-7976.2004.00693.x.

- Huettig F, Altmann GTM. Word meaning and the control of eye fixation: Semantic competitor effects and the visual world paradigm. Cognition. 2005;96:B23–B32. doi: 10.1016/j.cognition.2004.10.003.

- Huettig F, McQueen JM. The tug of war between phonological, semantic and shape information in language-mediated visual search. Journal of Memory and Language. 2007;57:460–482.

- International Phonetic Association. Handbook of the International Phonetic Association: A guide to the use of the International Phonetic Alphabet. Cambridge: Cambridge University Press; 1999.

- Ju M, Luce PA. Falling on sensitive ears. Psychological Science. 2004;15:314–318. doi: 10.1111/j.0956-7976.2004.00675.x.

- Jurgens D, Stevens K. The S-Space package: An open source package for word space models. In: Proceedings of the ACL 2010 System Demonstrations. Association for Computational Linguistics; 2010. pp. 30–35.

- Kanekama Y, Downs D. Effects of speechreading and signal-to-noise ratio on understanding American English by American and Indian adults. Journal of the Acoustical Society of America. 2009;126(4):2314.

- Kaushanskaya M, Marian V. Non-target language recognition and interference: Evidence from eye-tracking and picture naming. Language Learning. 2007;57(1):119–163.

- Kaushanskaya M, Yoo J, Marian V. The effect of second-language experience on native-language processing. Vigo International Journal of Applied Linguistics. 2011;8:54–77.

- Knoeferle P, Crocker MW, Scheepers C, Pickering MJ. The influence of the immediate visual context on incremental thematic role assignment: Evidence from eye-movements in depicted events. Cognition. 2005;95(1):95–127. doi: 10.1016/j.cognition.2004.03.002.

- Kohonen T. Self-organizing maps. Berlin; Heidelberg; New York: Springer; 1995.

- Kramer AF, Donchin E. Brain potentials as indices of orthographic and phonological interaction during word matching. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1987;13(1):76–86. doi: 10.1037//0278-7393.13.1.76.