Abstract

Humans and animals use landmarks during wayfinding to determine where they are in the world and to guide their way to their destination. To implement this strategy, known as landmark-based piloting, a navigator must be able to: (i) identify individual landmarks, (ii) use these landmarks to determine their current position and heading, (iii) access long-term knowledge about the spatial relationships between locations and (iv) use this knowledge to plan a route to their navigational goal. Here, we review neuroimaging, neuropsychological and neurophysiological data that link the first three of these abilities to specific neural systems in the human brain. This evidence suggests that the parahippocampal place area is critical for landmark recognition, the retrosplenial/medial parietal region is centrally involved in localization and orientation, and both medial temporal lobe and retrosplenial/medial parietal lobe regions support long-term spatial knowledge.

Keywords: spatial navigation, parahippocampal cortex, retrosplenial cortex, parietal lobe, hippocampus, functional magnetic resonance imaging

1. Introduction

Landmarks are entities that are useful for navigation because they are fixed in space. They can come in a variety of forms, including single discrete objects (such as a building or statue) and extended topographical features (such as a valley, ridge or the arrangement of buildings at an intersection). Humans and animals use landmarks during wayfinding to determine their position and heading. This strategy, known as landmark-based piloting, contrasts with path integration strategies in which self-motion cues are used to determine displacement relative to a known starting position [1]. Landmark-based piloting and path integration can work in concert during navigation, with path integration used to keep track of one's position and heading while exploring a new space and landmark-based piloting used to re-establish (or re-calibrate) these quantities when in a familiar environment. Landmarks can also be used as part of a stimulus–response strategy while following familiar routes, but here we focus on the use of landmarks as part of a spatial (i.e. locale) rather than an action (i.e. taxon) strategy [2].
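The contrast between the two strategies can be made concrete with a minimal sketch of path integration. The function name, the step format and the 2° turn bias below are illustrative assumptions, not quantities taken from the studies reviewed here. The sketch shows why an estimate built only from self-motion cues accumulates error, which a landmark-based position fix could then correct.

```python
import math

def integrate_path(start_xy, start_heading_deg, steps):
    """Dead-reckon position and heading from self-motion cues.

    steps: iterable of (turn_deg, distance) pairs. Each step turns first
    (positive = counter-clockwise), then moves forward by `distance`.
    """
    x, y = start_xy
    heading = start_heading_deg
    for turn_deg, distance in steps:
        heading = (heading + turn_deg) % 360.0
        x += distance * math.cos(math.radians(heading))
        y += distance * math.sin(math.radians(heading))
    return (x, y), heading

# A closed square path: with perfect self-motion cues the navigator
# ends up back at the starting position.
steps = [(0.0, 10.0), (90.0, 10.0), (90.0, 10.0), (90.0, 10.0)]
pos, heading = integrate_path((0.0, 0.0), 0.0, steps)

# Hypothetical 2 degree error on every turn: the estimate drifts away
# from the true position, and the drift would grow on longer paths.
biased_steps = [(turn + 2.0, dist) for turn, dist in steps]
drift_pos, _ = integrate_path((0.0, 0.0), 0.0, biased_steps)
drift = math.hypot(drift_pos[0], drift_pos[1])
```

In this scheme, landmark-based piloting would correspond to overwriting the drifting estimate with a position and heading derived from a recognized landmark.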

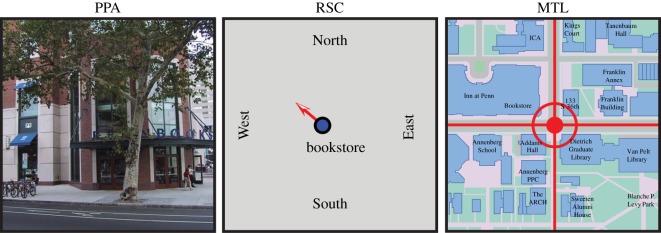

To perform landmark-based piloting, one needs four cognitive mechanisms, which support different components of the strategy. The first is a landmark recognition mechanism for identifying landmarks when they are within the sensory horizon. The second is a localization/orientation mechanism that uses sensory information to determine one's current position and heading. The third is a mechanism for encoding and retrieving long-term spatial knowledge about the locations of other points of interest that might act as navigational goals. The fourth is a route-planning mechanism that uses spatial knowledge to plan a route to one's destination. In colloquial terms, these mechanisms allow a navigator to answer four questions: (i) What am I looking at? (ii) Where am I and which direction am I facing? (iii) Where are other places? and (iv) How do I get to these places? Here, we outline evidence linking the first three of these mechanisms to specific brain regions in the parahippocampal cortex, retrosplenial/medial parietal region and medial temporal lobe (MTL; figure 1). We leave aside route planning for now, as less is known about the neural basis of this ability.

Figure 1.

Hypothesized roles of the parahippocampal place area (PPA), retrosplenial complex (RSC) and medial temporal lobe (MTL) in landmark-based piloting. In the proposed scheme, the PPA identifies landmarks, the RSC uses landmarks to determine the current location and facing/heading direction (and may also encode information about directions to other locations—not shown) and the MTL encodes a cognitive map that represents landmarks and goals in terms of their coordinates in allocentric space. (Online version in colour.)

2. Landmark recognition

The first step in landmark-based piloting is landmark recognition—that is, identification of landmarks when one is directly in their presence. In theory, the brain could have used a general-purpose object recognition system to solve this problem. However, it appears to rely instead on a specialized mechanism for landmark recognition, analogous in many ways to the specialized mechanism that is believed to support face recognition.

The primary neural locus of this mechanism is the parahippocampal place area (PPA)—a region in the collateral sulcus near the parahippocampal/lingual boundary that exhibits a strong functional magnetic resonance imaging (fMRI) response when subjects view environmental stimuli, such as buildings, streets, rooms and landscapes [3,4]. By contrast, the PPA only responds weakly when subjects view common everyday objects, such as vehicles, tools and appliances, and it does not respond at all when they view faces. Notably, the PPA exhibits this strong preference for environmental stimuli even when subjects simply view stimuli passively without performing any explicit navigational task. Consistent with these neuroimaging results, patients with damage to the PPA find it difficult to identify their surroundings based on analysis of the visual scene as a whole, although they can sometimes figure out where they are by focusing on small details, such as a mailbox or a door knocker [5,6]. That is, they can recognize objects but they cannot recognize the scenes within which the objects are contained.

An especially salient kind of landmark is the geometric arrangement (i.e. spatial layout) of the major surfaces of the local scene. Several lines of evidence indicate that the PPA might be concerned with processing this kind of information. The PPA responds strongly to images of empty rooms containing little more than bare walls, which contain no discrete objects but depict a three-dimensional space as defined by fixed background elements [3]. It also responds strongly to ‘scenes’ made out of Lego blocks that have a similar geometric organization [7] even when they are perceived haptically rather than visually [8]. Further, multi-voxel pattern analysis (MVPA) studies have found that the PPA distinguishes between scenes based on their geometric features, with distinct activity patterns elicited by open vistas (e.g. a highway stretching through an open desert) and closed-in scenes (e.g. a crowded city street) [9,10]. Finally, PPA response to scenes is greater when subjects judge the location of a target object relative to the fixed architectural elements in the scene than when they judge its location relative to a movable object or the viewer, thus demonstrating a role for the PPA in the processing of environment-centred spatial relationships [11].

These observations seem to fit well with behavioural data showing that humans and animals use geometric information preferentially (and sometimes exclusively) to reorient after disorientation [12,13]. However, results from two recent fMRI adaptation studies suggest that coding of geometry is not the whole story. The first study found that the PPA exhibits cross-adaptation between visual stimuli that have similar visual summary statistics [14]. The second study found that the PPA exhibits cross-adaptation between mirror images of the same scene, which contain the same visual features, but shown in different spatial arrangements relative to the viewer [15]. Thus, the first study shows sensitivity to non-spatial features, whereas the second study shows insensitivity to one kind of spatial information. Taken as a whole, the literature suggests the PPA encodes both spatial and non-spatial aspects of scenes and may use both kinds of features for scene recognition. Further, the relative insensitivity of the PPA to mirror reversal suggests that it may be more involved in identifying scenes based on visual features and/or intrinsic spatial geometry than in calculating one's egocentric orientation relative to that geometry.

What about landmarks that take the form of individual punctate objects (such as a statue or a mailbox)? Several findings suggest that the PPA may support recognition of these as well. It has long been known that the PPA responds strongly to buildings even when they are shown as discrete items cut out from their surroundings [16]. Recent studies have shown that the PPA also exhibits preferential response to certain kinds of common everyday objects when presented in the same way. For example, the PPA responds more strongly to large objects compared with small objects [17,18], distant objects compared with nearby objects [19], objects with strong contextual associations compared with objects with weak contextual associations [20] and objects that define the space around them compared with objects with weak spatial definition [21]. In all four cases, the PPA responds more strongly to objects that are more useful as landmarks than to objects that are less useful [22]. In addition, PPA response can be modulated by the navigational history of an object: objects initially encountered at navigational decision points, for example intersections, elicit more activity when they are subsequently observed in isolation compared with objects initially encountered at non-decision point locations [23]. These results demonstrate that the PPA responds not only to scenes, but also to non-scene objects with orientational value, either because of their inherent qualities (for example, size), or because they were previously encountered in a navigationally relevant location.

An important unanswered question is how the PPA response to an object changes as it becomes encoded as a landmark. The PPA may simply represent the appearances of entities that have known orientational value. However, it is unclear what purpose such coding would serve given that the primate visual system already contains a set of regions dedicated to recognizing objects in the lateral occipital cortex and fusiform region. Something must be gained by re-encoding the appearances of landmark objects in the PPA; for example, the landmarks might be represented in a different way that allows the visual aspects of the landmark to be linked to its navigationally relevant spatial features. One possibility is that the PPA affixes a local spatial coordinate frame onto the landmark object, which allows it to be subsumed into the larger constellation of unchanging stationary elements indicative of a location. In this conception, by coding an object as a landmark, the PPA treats it as a fixed background element—in effect, as a partial scene [22]. Alternatively, the PPA might strip away the perceptual and spatial features of a landmark to encode it as an abstract indicator of a ‘place’; this representation could then trigger the recovery of the appropriate spatial map in the hippocampus and other structures [24].

3. Localization and orientation

The second step in landmark-based piloting is the use of local landmarks to determine where one is and which direction one is facing (or heading) in large-scale space. That is, one not only has to identify the place one is looking at, but one also has to localize and orient oneself within a spatial framework that can potentially extend beyond the current horizon. Identification and localization/orientation are conceptually distinct operations: a tourist in Paris might be able to identify the Eiffel Tower and Arc de Triomphe without being able to use that information to figure out where they were in the city or which direction they were facing.

Several lines of evidence suggest that the PPA is not the critical locus for localization and orientation. Rather, this operation is primarily supported by a medial parietal region called the retrosplenial complex (RSC), which includes retrosplenial cortex and more posterior territory along the parietal–occipital sulcus [25]. Like the PPA, the RSC activates during passive viewing of scenes, but this response is strongly enhanced when the scenes are familiar locations, suggesting a role in linking the visual percept to long-term knowledge [26]. Evidence that this long-term knowledge is spatial comes from a study in which we scanned Penn students while they viewed images of familiar locations around campus [27]. On different trials, they reported (i) whether the image depicted a location on the east or west side of campus, (ii) whether the image depicted a view facing to the east or west and (iii) whether the location was familiar or not. Only the first two tasks involved the explicit retrieval of spatial information; RSC response was greater in these two tasks compared with the third. By contrast, PPA responded equally to all three conditions. These results suggest that RSC is centrally involved in determining where one is located or how one is oriented in the broader spatial environment, whereas the PPA is primarily concerned with analysing the local (i.e. immediately visible) scene or landmark [28,29].

Additional evidence for this division of labour between the PPA and RSC comes from neuropsychology [5]. In contrast to patients with PPA damage, who have trouble recognizing places and buildings, patients with RSC damage can identify these items without difficulty. However, they cannot then use these landmarks to orient themselves in large-scale space. For example, they can look at a building and name it without hesitation, but they cannot tell from this whether they are facing north, south, east or west, and they cannot point to any other location that is not immediately visible [30]. It is as if they can perceive the scenes around them normally but these scenes are ‘lost in space’—unmoored from their broader spatial context.

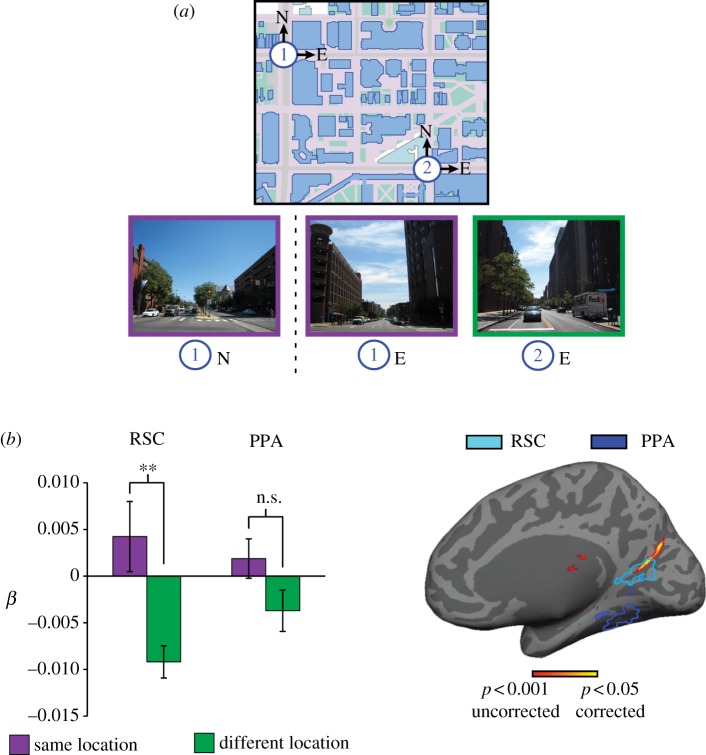

A recent study from our laboratory used MVPA to more directly demonstrate RSC involvement in localization (figure 2) [35]. The study was motivated by the observation that one can potentially experience many different views from the same location. Thus, to show that a region represents location per se, one needs to show that different views obtained at the same location elicit a common neural response, even if they have few or no common visual features. To show this, we scanned Penn students while they viewed images taken at different intersections around the Penn campus. At each intersection, we included views facing the four different compass directions, which meant that we had four views for each location that did not overlap. Although it was evident to Penn students that these four views were taken from the same location, it would have been virtually impossible for someone unfamiliar with the campus to determine this. We then looked for multi-voxel activity patterns that were consistent across the four different views for each location. We observed such location-specific activity patterns in a medial parietal region extending from RSC posteriorly into the precuneus and also in the left presubicular region of the MTL. In these regions, the response patterns elicited by different views were more similar for views acquired at the same intersection than for views acquired at different intersections. By contrast, PPA activity patterns distinguished between views, but did not group these view-specific patterns by location.

Figure 2.

fMRI evidence that RSC codes location in a manner that abstracts away from perceptual features. (a) Subjects were scanned while viewing images taken at different locations on a familiar college campus. (b) Multi-voxel activity patterns elicited by non-overlapping views taken at the same location (e.g. 1N and 1E) were more similar than patterns elicited by views taken at different intersections (e.g. 1N and 2E) in RSC; a similar trend in the PPA was not significant. Bars indicate the average pattern similarity between same-location (dark grey/purple) and different-location (light grey/green) view pairs, as measured by the beta weight on the corresponding categorical regressors in a multiple regression model that also included a regressor for low-level visual similarity between the views. Right shows the same pattern of results in a whole-brain searchlight analysis. Voxels whose surrounding neighbourhood exhibited greater pattern similarity for same-location views compared with different-location views are plotted on the inflated medial surface of the brain. The PPA and RSC were defined in each subject based on greater response to scenes than to objects in independent localizer scans; outlines on the inflated brain reflect the across-subject region-of-interest (ROI) intersection that most closely matched the average size of each ROI. Adapted from [35]. (Online version in colour.)
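The logic of the same-location versus different-location comparison can be sketched on simulated data. The example below is only an illustration: the voxel patterns are synthetic, the function names are hypothetical, and it substitutes a simple difference of mean correlations for the multiple regression (with a visual-similarity regressor) described in the caption.

```python
import random
from itertools import combinations

def pearson(a, b):
    """Pearson correlation between two equal-length response patterns."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5

def location_coding_index(patterns):
    """Mean same-location similarity minus mean different-location similarity.

    patterns: dict mapping (location, direction) -> list of voxel responses.
    """
    same, different = [], []
    for (key1, p1), (key2, p2) in combinations(patterns.items(), 2):
        r = pearson(p1, p2)
        (same if key1[0] == key2[0] else different).append(r)
    return sum(same) / len(same) - sum(different) / len(different)

# Synthetic region that codes location: every view taken at a location
# shares a location-specific signal, plus independent view-specific noise.
rng = random.Random(0)
n_voxels = 200
patterns = {}
for location in range(4):
    location_signal = [rng.gauss(0.0, 1.0) for _ in range(n_voxels)]
    for direction in "NESW":
        patterns[(location, direction)] = [
            s + rng.gauss(0.0, 1.0) for s in location_signal
        ]

index = location_coding_index(patterns)  # clearly positive for this region
```

A region whose patterns carried no shared location signal would be expected to yield an index near zero under the same analysis.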

These results indicate that RSC represents location per se, and not just the visual features or landmarks observable from those locations. This implicates the RSC in localization. What about orientation—determining one's facing (i.e. heading) direction? Because the Penn campus is built on a rectangular grid plan, the four views at each intersection faced the four compass directions (north, south, east and west). Indeed, the subjects' task was to report this facing direction on each trial. Thus, it was possible to look for neural coding of facing direction by seeing whether views facing the same direction at different intersections elicited activity patterns that were more similar than those elicited by views facing different directions at different intersections. We found evidence for such coding in the right presubiculum—one of the regions known to contain head direction (HD) cells in rodents [31]. There was also some weak evidence for direction coding in RSC but it did not maintain significance when visual similarity between the images was controlled for.

Stronger evidence for direction coding in RSC comes from an fMRI adaptation study in which subjects were trained on a virtual environment consisting of 10 intersecting corridors, five of which were oriented parallel to each other along one direction, and five of which were oriented parallel to each other along the orthogonal direction [32]. There was a visual landmark at the end of each corridor, so subjects could determine which direction they were facing. On each trial, subjects viewed two different corridors presented sequentially, which could either be parallel (same direction) or orthogonal (different direction). In the left RSC (BA31), response was reduced on same-direction trials compared with different-direction trials, indicating that the region coded direction, and thus showed adaptation when direction was repeated.

In summary, fMRI studies have demonstrated that RSC activates during localization and orientation, and they have also shown that RSC codes the outcomes of these operations (i.e. location and facing direction). However, it remains unclear how location and facing direction are represented at the neural level, and it is also unclear how RSC calculates these quantities from egocentric inputs. The simplest possibility is that RSC might contain both location cells (which respond in a location irrespective of direction, analogous to the behaviour of hippocampal place cells in an open field) and direction cells (which respond for a given facing direction irrespective of location, analogous to HD cells). Indeed, neurophysiological studies from the rodent have indicated that HD cells are found in retrosplenial cortex [33], corresponding to the most anterior portion of RSC, whereas neurophysiological studies from monkeys have reported place-like cells in a medial parietal region that may correspond to the more posterior portion of RSC [34]. However, this does not appear to be the whole story, because the majority of the cells in these regions are neither place cells nor HD cells, but show more complex firing patterns. Further, it is unclear how separate populations of place and HD cells would facilitate the computations that underlie localization and orientation, beyond representing the results of those computations.

Rather than representing location and direction information separately, we hypothesize that RSC neurons might encode combinations of these quantities. For example, some RSC neurons might fire when facing a specific direction within a specific local environment, but might be inactive in other environments. Other RSC neurons might represent one's heading relative to specific landmarks, both currently visible and over-the-horizon. These different kinds of cells could be interconnected in a way that facilitates spatial reasoning by incorporating long-term knowledge about angular relations in the world: for example, cells that represent facing direction within the local environment (‘I am at the intersection in front of the Penn Bookstore facing 45° relative to the axis of the intersection’) could connect to HD cells that represent facing direction within a larger environment (‘I must be facing to the northwest’) and to cells that represent facing direction relative to unseen landmarks and navigational goals (‘Van Pelt Library must be behind me’). A system wired in this manner would be able to recover heading direction—and perhaps also location—from local cues.
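The chain of inferences in this example amounts to simple angular arithmetic, sketched below. All numerical values are hypothetical: the bearing of the intersection axis (270°) and the stored allocentric bearing of the library (135°) are chosen only so that the sketch reproduces the verbal example, and the function names are illustrative rather than a claim about how RSC implements the computation. Bearings are in degrees clockwise from north.

```python
def wrap_deg(angle):
    """Wrap an angle in degrees to the range [0, 360)."""
    return angle % 360.0

def global_heading(local_heading_deg, axis_bearing_deg):
    """Combine heading relative to a local axis with that axis's compass bearing."""
    return wrap_deg(local_heading_deg + axis_bearing_deg)

def egocentric_bearing(goal_bearing_deg, heading_deg):
    """Signed angle from facing direction to goal, in (-180, 180].

    Positive values mean the goal lies clockwise (to the right).
    """
    diff = wrap_deg(goal_bearing_deg - heading_deg)
    return diff - 360.0 if diff > 180.0 else diff

def compass_label(bearing_deg):
    """Name of the nearest of eight compass directions."""
    labels = ["north", "northeast", "east", "southeast",
              "south", "southwest", "west", "northwest"]
    return labels[int(((bearing_deg + 22.5) % 360.0) // 45.0)]

def relative_label(ego_deg):
    """Coarse egocentric description of a signed bearing."""
    if abs(ego_deg) <= 45.0:
        return "ahead"
    if abs(ego_deg) >= 135.0:
        return "behind"
    return "right" if ego_deg > 0 else "left"

# Hypothetical stored knowledge (assumed values, not measured ones).
AXIS_BEARING = 270.0      # compass bearing of the intersection's axis
LIBRARY_BEARING = 135.0   # allocentric bearing from here to the library

heading = global_heading(45.0, AXIS_BEARING)       # local cue -> global heading
facing = compass_label(heading)                     # "northwest"
to_library = relative_label(egocentric_bearing(LIBRARY_BEARING, heading))  # "behind"
```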

4. Long-term spatial knowledge

The third step of landmark-based piloting is accessing knowledge about the spatial locations of other points of interest that can serve as navigational goals or waypoints to those goals. As indicated in the previous section, some of this long-term spatial knowledge might be encoded within RSC, which distinguishes between locations and may encode information about directional relationships between locations. However, another way to represent spatial information is in the form of a cognitive map, which is a representation of the Euclidean coordinates of landmarks and other navigational points of interest, analogous to a physical map [1,36]. Evidence from a variety of sources suggests that MTL regions may instantiate such a map.

This idea was originally proposed by O'Keefe & Nadel [2], based on the discovery of place cells in the rodent hippocampus, which fire when the animal is in a specific location. Experiments since then have indicated that the firing of these cells is somewhat complex, dependent not only on spatial location but also on non-spatial inputs (although spatial information appears to be primary, at least in the rodent [37]). By contrast, grid cells in medial entorhinal cortex (MEC), which provide inputs to the hippocampal place cells, exhibit firing patterns that are purely dependent on spatial coordinates [38]. Thus, the hippocampus and MEC may work together to represent a cognitive map, with MEC representing spatial coordinates per se, and hippocampus representing landmarks, goals and events that occur at specific locations. Place and grid cells have been found not only in the rodent but also in monkeys [39] and humans [40,41], although occasionally with somewhat different properties [42].

Indirect evidence for cognitive map coding in human MTL comes from structural imaging studies that have shown that the right posterior hippocampus increases in size when London taxi drivers learn the layout of London streets over a period of 2 years [43] and that the size of this region predicts the accuracy of cognitive maps in college students learning a new campus [44]. Furthermore, hippocampal size correlates with accuracy on a topographical memory test in which the spatial arrangements of landscapes must be recognized from multiple points of view [45]. This last finding is consistent with other data indicating that the hippocampus might encode viewpoint-independent maps of spatial scenes [46,47] that allow memories for objects and events to be precisely bound to specific locations [48].

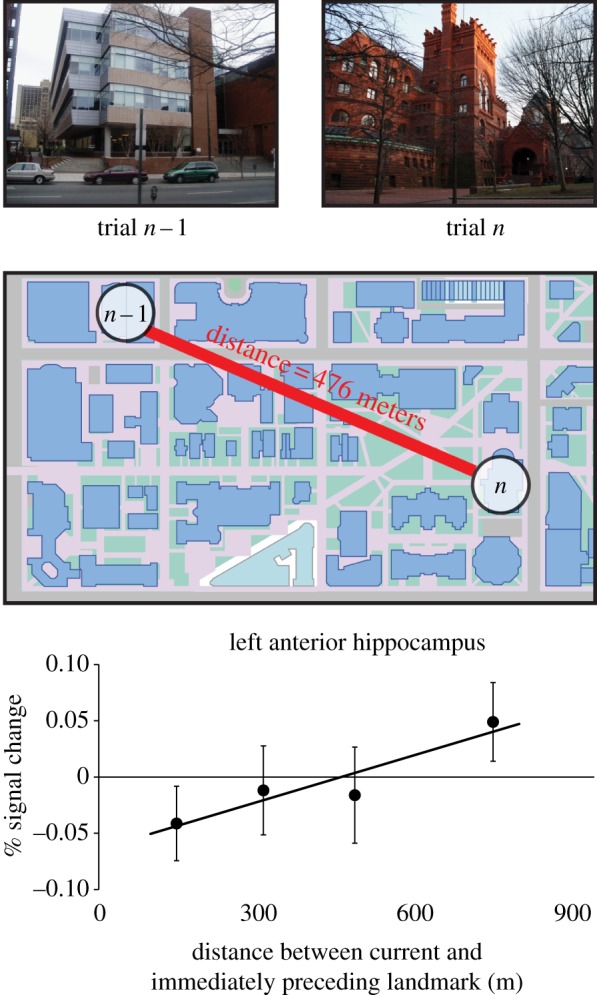

A recent fMRI study from our laboratory demonstrated that the hippocampus exhibits a key feature of a cognitive map: representation of real-world distance relationships [49]. To show this, we scanned Penn students while they viewed photographs of prominent landmarks (buildings and statues) from the Penn campus, presented one at a time while they performed a simple recognition task (figure 3). We then examined the fMRI response on each trial as a function of the real-world distance between the landmark shown on that trial and the landmark shown on the immediately preceding trial. Activity in the left anterior hippocampus scaled with real-world distance: it was greater when viewing a landmark that was far away from the landmark that had just been seen, but smaller when viewing a landmark that was close. This indicates that this part of the hippocampus has information about which landmarks are close together in the real world and which ones are further away from each other—the same kind of information that is encoded by a map.

Figure 3.

fMRI evidence that the human hippocampus encodes distances between real-world locations, thus exhibiting a key feature of a cognitive map. Subjects were scanned while viewing landmarks from a familiar college campus. Activity in the left anterior hippocampus scaled with the real-world distance between the landmark shown on each trial and the landmark shown on the immediately preceding trial. Adapted from [49]. (Online version in colour.)
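The form of this analysis can be illustrated with a small simulation. The landmark coordinates, the trial sequence, the simulated responses and the assumed slope are all invented for the sketch and are not data from [49]; the point is only to show how a trial-by-trial response can be related to the distance from the preceding landmark.

```python
import math
import random

# Hypothetical landmark coordinates in metres (not real campus data).
COORDS = {
    "landmark_A": (0.0, 0.0),
    "landmark_B": (100.0, 50.0),
    "landmark_C": (400.0, 0.0),
    "landmark_D": (250.0, 300.0),
    "landmark_E": (600.0, 400.0),
}

def successive_distances(sequence, coords):
    """Distance between each trial's landmark and the one shown just before it."""
    return [math.dist(coords[a], coords[b])
            for a, b in zip(sequence, sequence[1:])]

def ols_slope(x, y):
    """Slope of the least-squares line predicting y from x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sxx

rng = random.Random(1)
sequence = [rng.choice(sorted(COORDS)) for _ in range(300)]
distances = successive_distances(sequence, COORDS)  # one value per trial from trial 2 on

# Simulated region whose response grows with distance (assumed slope) plus noise.
TRUE_SLOPE = 0.002
responses = [0.5 + TRUE_SLOPE * d + rng.gauss(0.0, 0.2) for d in distances]

slope = ols_slope(distances, responses)  # recovers a positive distance effect
```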

What is the relationship between the long-term knowledge represented in MTL and the long-term knowledge represented in RSC? Earlier studies suggested a distinction based on the different phases of memory, such that the MTL might only be necessary for the initial encoding of spatial knowledge, whereas RSC might suffice for retrieval [50]. However, recent studies have suggested that both regions are involved in retrieval of long-term knowledge, but perhaps of different kinds. A striking demonstration of this comes from a former London taxi driver who suffered bilateral hippocampal damage as a result of limbic encephalitis, but whose RSC is likely intact. When asked to navigate between locations in a videogame version of London, he did so accurately if he could reach the goal on the main roads, but failed if he had to remember the smaller side streets [51]. This was interpreted as indicating that the hippocampus encodes a representation that is more detailed than that encoded by cortical regions, akin to the details that compose an episodic memory [52]. An alternative possibility is that the hippocampus encodes a representation that is more metric and precise, which may be required for navigating along side streets.

We hypothesize that the representations in MTL and RSC differ in two ways: (i) the coordinate system used to represent the space and (ii) the density/sparsity of the places encoded by this system. MTL may represent all locations in space in terms of a continuous Cartesian coordinate system that allows navigational vectors to be computed between any two locations on the map [53,54]. RSC, on the other hand, may only represent vectors between the most prominent or well-travelled locations, which would be encoded in terms of polar coordinates anchored to each location [55–59]. Whereas the MTL system may be especially suited for tasks that require novel inferences, for example devising shortcuts between locations, the RSC system may be particularly efficient for ‘everyday’ route planning between familiar locations, insofar as it allows vectors to be directly retrieved from memory without calculating them anew. A consequence of these differences in the nature of the representations is that the two systems might be optimized for different spatial scales: whereas small-scale spaces might be more likely to be represented in terms of continuous metric coordinates, large-scale spaces might be more likely to be encoded in terms of vectors connecting the smaller spaces [60], which could potentially explain why spatial memories for large-scale environments are notoriously rife with distortions [61].
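The hypothesized difference between the two representations can be sketched as two data structures. The place names, coordinates and stored vectors below are hypothetical, and the sketch is a schematic of the proposal rather than a model of either region: a dense Cartesian map supports computing a vector between any two places, whereas a sparse store of polar vectors supports only direct retrieval for familiar pairs.

```python
import math

# Dense map: every known place has Cartesian coordinates (metres, hypothetical).
CARTESIAN_MAP = {
    "home": (0.0, 0.0),
    "library": (300.0, 400.0),
    "cafe": (300.0, 0.0),
    "park": (-200.0, 150.0),
}

def vector_from_map(origin, goal, cmap=CARTESIAN_MAP):
    """Compute (distance, compass bearing) between any two mapped places.

    Bearing is in degrees clockwise from north, with north along +y.
    """
    (x0, y0), (x1, y1) = cmap[origin], cmap[goal]
    dx, dy = x1 - x0, y1 - y0
    bearing = math.degrees(math.atan2(dx, dy)) % 360.0
    return math.hypot(dx, dy), bearing

# Sparse store: polar vectors (distance, bearing) anchored to an origin,
# held only for well-travelled pairs (hypothetical values, as if learned
# from repeated travel rather than computed from the map).
POLAR_STORE = {
    "home": {"library": (500.0, 36.9), "cafe": (300.0, 90.0)},
}

def vector_from_store(origin, goal, store=POLAR_STORE):
    """Retrieve a stored vector directly, or None if the pair is unfamiliar."""
    return store.get(origin, {}).get(goal)

familiar = vector_from_store("home", "library")  # retrieved without calculation
shortcut = vector_from_store("cafe", "park")     # None: no stored vector
computed = vector_from_map("cafe", "park")       # novel vector from the dense map
```

The unfamiliar cafe-to-park pair illustrates the proposed division of labour: the sparse store returns nothing, whereas the dense map can still supply a novel vector of the kind needed for a shortcut.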

As the above discussion indicates, the assignment of cognitive mechanisms to regions does not preclude the possibility that a region can participate in more than one mechanism. In our view, RSC is involved both in localization/orientation and in retrieval of some varieties of long-term spatial knowledge. These two functions might reflect the use of the same underlying representation for two purposes: translating between egocentric input and allocentric output during localization/orientation, and then translating backwards from allocentric to egocentric during spatial knowledge retrieval [62].

5. Conclusion

The evidence that we describe above suggests that three components of landmark-based piloting—landmark recognition, localization/orientation and long-term spatial knowledge—are supported by neural mechanisms in the PPA, RSC and MTL. Key challenges for future research will include developing a more exact computational description of these cognitive mechanisms, achieving a better understanding of how they interact during navigation and elucidating the neural systems involved in route planning. We believe that neuroimaging studies using MVPA and adaptation techniques have potential to shed further light on these issues, thus refining the provisional account that we offer here.

Acknowledgements

We thank Steven Marchette and Joshua Julian for helpful comments.

Funding statement

This work was financially supported by the National Science Foundation (SBE-0541957) and the National Institutes of Health (R01-EY022350 to R.A.E. and F31-NS074729 to L.K.V.).

References

- 1.Gallistel CR. 1990. The organization of learning. Cambridge, MA: MIT Press. [Google Scholar]

- 2.O'Keefe J, Nadel L. 1978. The hippocampus as a cognitive map, xiv, p. 570 Oxford, UK: Oxford University Press. [Google Scholar]

- 3.Epstein R, Kanwisher N. 1998. A cortical representation of the local visual environment. Nature 392, 598–601. ( 10.1038/33402) [DOI] [PubMed] [Google Scholar]

- 4.Epstein RA. 2005. The cortical basis of visual scene processing. Vis. Cogn. 12, 954–978. ( 10.1080/13506280444000607) [DOI] [Google Scholar]

- 5.Aguirre GK, D'Esposito M. 1999. Topographical disorientation: a synthesis and taxonomy. Brain 122, 1613–1628. ( 10.1093/brain/122.9.1613) [DOI] [PubMed] [Google Scholar]

- 6.Habib M, Sirigu A. 1987. Pure topographical disorientation: a definition and anatomical basis. Cortex 23, 73–85. ( 10.1016/S0010-9452(87)80020-5) [DOI] [PubMed] [Google Scholar]

- 7.Epstein R, Harris A, Stanley D, Kanwisher N. 1999. The parahippocampal place area: recognition, navigation, or encoding? Neuron 23, 115–125. ( 10.1016/S0896-6273(00)80758-8) [DOI] [PubMed] [Google Scholar]

- 8.Wolbers T, Klatzky RL, Loomis JM, Wutte MG, Giudice NA. 2011. Modality-independent coding of spatial layout in the human brain. Curr. Biol. 21, 984–989. ( 10.1016/j.cub.2011.04.038) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Kravitz DJ, Peng CS, Baker CI. 2011. Real-world scene representations in high-level visual cortex: it's the spaces more than the places. J. Neurosci. 31, 7322–7333. ( 10.1523/JNEUROSCI.4588-10.2011) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Park S, Brady TF, Greene MR, Oliva A. 2011. Disentangling scene content from spatial boundary: complementary roles for the parahippocampal place area and lateral occipital complex in representing real-world scenes. J. Neurosci. 31, 1333–1340. ( 10.1523/JNEUROSCI.3885-10.2011) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Committeri G, Galati G, Paradis AL, Pizzamiglio L, Berthoz A, LeBihan D. 2004. Reference frames for spatial cognition: different brain areas are involved in viewer-, object-, and landmark-centered judgments about object location. J. Cogn. Neurosci. 16, 1517–1535. ( 10.1162/0898929042568550) [DOI] [PubMed] [Google Scholar]

- 12.Cheng K. 1986. A purely geometric module in the rats spatial representation. Cognition 23, 149–178. ( 10.1016/0010-0277(86)90041-7) [DOI] [PubMed] [Google Scholar]

- 13. Hermer L, Spelke ES. 1994. A geometric process for spatial reorientation in young children. Nature 370, 57–59. (doi:10.1038/370057a0)

- 14. Cant JS, Xu Y. 2012. Object ensemble processing in human anterior-medial ventral visual cortex. J. Neurosci. 32, 7685–7700. (doi:10.1523/JNEUROSCI.3325-11.2012)

- 15. Dilks DD, Julian JB, Kubilius J, Spelke ES, Kanwisher N. 2011. Mirror-image sensitivity and invariance in object and scene processing pathways. J. Neurosci. 31, 11 305–11 312. (doi:10.1523/JNEUROSCI.1935-11.2011)

- 16. Aguirre GK, Zarahn E, D'Esposito M. 1998. An area within human ventral cortex sensitive to ‘building’ stimuli: evidence and implications. Neuron 21, 373–383. (doi:10.1016/S0896-6273(00)80546-2)

- 17. Konkle T, Oliva A. 2012. A real-world size organization of object responses in occipitotemporal cortex. Neuron 74, 1114–1124. (doi:10.1016/j.neuron.2012.04.036)

- 18. Cate AD, Goodale MA, Kohler S. 2011. The role of apparent size in building- and object-specific regions of ventral visual cortex. Brain Res. 1388, 109–122. (doi:10.1016/j.brainres.2011.02.022)

- 19. Amit E, Mehoudar E, Trope Y, Yovel G. 2012. Do object-category selective regions in the ventral visual stream represent perceived distance information? Brain Cogn. 80, 201–213. (doi:10.1016/j.bandc.2012.06.006)

- 20. Bar M, Aminoff E. 2003. Cortical analysis of visual context. Neuron 38, 347–358. (doi:10.1016/S0896-6273(03)00167-3)

- 21. Mullally SL, Maguire EA. 2011. A new role for the parahippocampal cortex in representing space. J. Neurosci. 31, 7441–7449. (doi:10.1523/JNEUROSCI.0267-11.2011)

- 22. Troiani V, Stigliani A, Smith ME, Epstein RA. 2012. Multiple object properties drive scene-selective regions. Cereb. Cortex. (doi:10.1093/cercor/bhs364)

- 23. Janzen G, van Turennout M. 2004. Selective neural representation of objects relevant for navigation. Nat. Neurosci. 7, 673–677. (doi:10.1038/nn1257)

- 24. Brown TI, Ross RS, Keller JB, Hasselmo ME, Stern CE. 2010. Which way was I going? Contextual retrieval supports the disambiguation of well learned overlapping navigational routes. J. Neurosci. 30, 7414–7422. (doi:10.1523/JNEUROSCI.6021-09.2010)

- 25. Epstein RA. 2008. Parahippocampal and retrosplenial contributions to human spatial navigation. Trends Cogn. Sci. 12, 388–396. (doi:10.1016/j.tics.2008.07.004)

- 26. Vass LK, Epstein RA. 2013. Abstract representations of location and facing direction in the human brain. J. Neurosci. 33, 6133–6142. (doi:10.1523/JNEUROSCI.3873-12.2013)

- 27. Epstein RA, Higgins JS, Jablonski K, Feiler AM. 2007. Visual scene processing in familiar and unfamiliar environments. J. Neurophysiol. 97, 3670–3683. (doi:10.1152/jn.00003.2007)

- 28. Epstein RA, Parker WE, Feiler AM. 2007. Where am I now? Distinct roles for parahippocampal and retrosplenial cortices in place recognition. J. Neurosci. 27, 6141–6149. (doi:10.1523/JNEUROSCI.0799-07.2007)

- 29. Epstein RA, Higgins JS. 2007. Differential parahippocampal and retrosplenial involvement in three types of visual scene recognition. Cereb. Cortex 17, 1680–1693. (doi:10.1093/cercor/bhl079)

- 30. Park S, Chun MM. 2009. Different roles of the parahippocampal place area (PPA) and retrosplenial cortex (RSC) in panoramic scene perception. Neuroimage 47, 1747–1756. (doi:10.1016/j.neuroimage.2009.04.058)

- 31. Takahashi N, Kawamura M, Shiota J, Kasahata N, Hirayama K. 1997. Pure topographic disorientation due to right retrosplenial lesion. Neurology 49, 464–469. (doi:10.1212/WNL.49.2.464)

- 32. Taube JS. 1998. Head direction cells and the neurophysiological basis for a sense of direction. Prog. Neurobiol. 55, 225–256. (doi:10.1016/S0301-0082(98)00004-5)

- 33. Baumann O, Mattingley JB. 2010. Medial parietal cortex encodes perceived heading direction in humans. J. Neurosci. 30, 12 897–12 901. (doi:10.1523/JNEUROSCI.3077-10.2010)

- 34. Morgan LK, Macevoy SP, Aguirre GK, Epstein RA. 2011. Distances between real-world locations are represented in the human hippocampus. J. Neurosci. 31, 1238–1245. (doi:10.1523/JNEUROSCI.4667-10.2011)

- 35. Chen LL, Lin LH, Green EJ, Barnes CA, McNaughton BL. 1994. Head-direction cells in the rat posterior cortex. I. Anatomical distribution and behavioral modulation. Exp. Brain Res. 101, 8–23. (doi:10.1007/BF00243212)

- 36. Sato N, Sakata H, Tanaka YL, Taira M. 2006. Navigation-associated medial parietal neurons in monkeys. Proc. Natl Acad. Sci. USA 103, 17 001–17 006. (doi:10.1073/pnas.0604277103)

- 37. Shettleworth SJ. 1998. Cognition, evolution, and behavior, xv, p. 688 New York, NY: Oxford University Press.

- 38. Manns JR, Eichenbaum H. 2009. A cognitive map for object memory in the hippocampus. Learn. Mem. 16, 616–624. (doi:10.1101/lm.1484509)

- 39. Hafting T, Fyhn M, Molden S, Moser MB, Moser EI. 2005. Microstructure of a spatial map in the entorhinal cortex. Nature 436, 801–806. (doi:10.1038/nature03721)

- 40. Matsumura N, Nishijo H, Tamura R, Eifuku S, Endo S, Ono T. 1999. Spatial- and task-dependent neuronal responses during real and virtual translocation in the monkey hippocampal formation. J. Neurosci. 19, 2382–2393.

- 41. Ekstrom AD, Kahana MJ, Caplan JB, Fields TA, Isham EA, Newman EL, Fried I. 2003. Cellular networks underlying human spatial navigation. Nature 425, 184–188. (doi:10.1038/nature01964)

- 42. Doeller CF, Barry C, Burgess N. 2010. Evidence for grid cells in a human memory network. Nature 463, 657–661. (doi:10.1038/nature08704)

- 43. Killian NJ, Jutras MJ, Buffalo EA. 2012. A map of visual space in the primate entorhinal cortex. Nature 491, 761–764. (doi:10.1038/nature11587)

- 44. Woollett K, Maguire EA. 2011. Acquiring ‘the knowledge’ of London's layout drives structural brain changes. Curr. Biol. 21, 2109–2114. (doi:10.1016/j.cub.2011.11.018)

- 45. Schinazi VR, Nardi D, Newcombe NS, Shipley TF, Epstein RA. 2013. Hippocampal size predicts rapid learning of a cognitive map in humans. Hippocampus 23, 515–528. (doi:10.1002/hipo.22111)

- 46. Hartley T, Harlow R. 2012. An association between human hippocampal volume and topographical memory in healthy young adults. Front. Hum. Neurosci. 6 (doi:10.3389/fnhum.2012.00338)

- 47. King JA, Burgess N, Hartley T, Vargha-Khadem F, O'Keefe J. 2002. Human hippocampus and viewpoint dependence in spatial memory. Hippocampus 12, 811–820. (doi:10.1002/hipo.10070)

- 48. Hassabis D, Maguire EA. 2007. Deconstructing episodic memory with construction. Trends Cogn. Sci. 11, 299–306. (doi:10.1016/j.tics.2007.05.001)

- 49. Howard LR, Kumaran D, Olafsdottir HF, Spiers HJ. 2011. Double dissociation between hippocampal and parahippocampal responses to object-background context and scene novelty. J. Neurosci. 31, 5253–5261. (doi:10.1523/JNEUROSCI.6055-10.2011)

- 50. Teng E, Squire LR. 1999. Memory for places learned long ago is intact after hippocampal damage. Nature 400, 675–677. (doi:10.1038/23276)

- 51. Maguire EA, Nannery R, Spiers HJ. 2006. Navigation around London by a taxi driver with bilateral hippocampal lesions. Brain 129, 2894–2907. (doi:10.1093/brain/awl286)

- 52. Rosenbaum RS, Priselac S, Kohler S, Black SE, Gao F, Nadel L, Moscovitch M. 2000. Remote spatial memory in an amnesic person with extensive bilateral hippocampal lesions. Nat. Neurosci. 3, 1044–1048. (doi:10.1038/79867)

- 53. Kubie JL, Fenton AA. 2012. Linear look-ahead in conjunctive cells: an entorhinal mechanism for vector-based navigation. Front. Neural Circuits 6 (doi:10.3389/fncir.2012.00020)

- 54. Muller RU, Stead M, Pach J. 1996. The hippocampus as a cognitive graph. J. Gen. Physiol. 107, 663–694. (doi:10.1085/jgp.107.6.663)

- 55. Chrastil ER. 2013. Neural evidence supports a novel framework for spatial navigation. Psychon. Bull. Rev. 20, 208–227. (doi:10.3758/s13423-012-0351-6)

- 56. Schinazi VR, Epstein RA. 2010. Neural correlates of real-world route learning. Neuroimage 53, 725–735. (doi:10.1016/j.neuroimage.2010.06.065)

- 57. Trullier O, Wiener SI, Berthoz A, Meyer JA. 1997. Biologically based artificial navigation systems: review and prospects. Prog. Neurobiol. 51, 483–544. (doi:10.1016/S0301-0082(96)00060-3)

- 58. Kuipers B, Tecuci DG, Stankiewicz BJ. 2003. The skeleton in the cognitive map: a computational and empirical exploration. Environ. Behav. 35, 81–106. (doi:10.1177/0013916502238866)

- 59. Kubie JL, Fenton AA. 2009. Heading-vector navigation based on head-direction cells and path integration. Hippocampus 19, 456–479. (doi:10.1002/hipo.20532)

- 60. Meilinger T. 2008. The network of reference frames theory: a synthesis of graphs and cognitive maps. In Spatial cognition VI: learning, reasoning, and talking about space (eds Freksa C, Newcombe NS, Gärdenfors P, Wölfl S), pp. 344–360. Freiberg, Germany: Springer.

- 61. Lynch K. 1960. The image of the city. Cambridge, MA: Technology Press.

- 62. Byrne P, Becker S, Burgess N. 2007. Remembering the past and imagining the future: a neural model of spatial memory and imagery. Psychol. Rev. 114, 340–375. (doi:10.1037/0033-295X.114.2.340)