Abstract

The Irrelevant Sound Effect (ISE) is the finding that serial recall performance is impaired under complex auditory backgrounds such as speech as compared to white noise or silence (Colle & Welsh, 1976). Several findings have demonstrated that ISE occurs with nonspeech backgrounds and that the changing-state complexity of the background stimuli is critical to ISE (e.g., Jones & Macken, 1993). In a pair of experiments, we investigate whether speech-like qualities of the irrelevant background have an effect beyond their changing-state complexity. We do so by using two kinds of transformations of speech with identical changing-state complexity; one kind that preserved speech-like information (sinewave speech and fully-reversed sinewave speech) and others in which this information was distorted (two selectively-reversed sinewave speech conditions). Our results indicate that even when changing-state complexity is held constant, sinewave speech conditions in which speech-like interformant relationships are disrupted produce less ISE than those in which these relationships are preserved. This indicates that speech-like properties of the background are important beyond their changing-state complexity for ISE.

The Irrelevant Sound Effect (ISE) is the finding that accuracy in serial-recall tasks is impaired by the presence of complex background sounds. For instance, when subjects are asked to recall a series of visual items in the order of their presentation, their recall performance is worse under speech backgrounds compared to white noise or silent backgrounds (Colle & Welsh, 1976). The earliest explanation of ISE was the Phonological Loop Hypothesis based on the working memory model proposed by Baddeley and Hitch (1974). According to this model, working memory consists of the visuo-spatial sketchpad that is responsible for coding visual information, the phonological loop that is responsible for coding verbal information, and the central executive that controls these subsystems. By this account, presented visual items coded in the visuo-spatial sketchpad must be sub-vocally rehearsed to be transferred into the phonological loop. Accordingly, irrelevant speech (compared to, for instance, white noise) interferes with transfer because it has preferential access to the phonological loop. Thus, the presented visual items have to compete for access with the privileged irrelevant speech, leading to poorer recall (Baddeley, 2000). This explanation of ISE was originally proposed when it was assumed that ISE was specific to background speech. However, subsequent research has demonstrated that irrelevant non-speech backgrounds, such as instrumental music (Salamé & Baddeley, 1989), pure tones (Jones & Macken, 1993), and traffic noise (Hygge, Boman, & Enmarker, 2003), also impair serial recall.

Given these findings and the general acknowledgment that ISE is not restricted to speech backgrounds, other accounts of ISE have been used to explain the equipotentiality of speech and nonspeech in disrupting serial recall (Jones, Madden, & Miles, 1992; Neath, 2000). One such account is the changing-state hypothesis (Jones et al., 1992; Jones & Macken, 1993), which suggests that both non-speech and speech backgrounds are able to gain access to memory and disrupt serial recall by competing with target items for serial order cues. For instance, irrelevant backgrounds do not interfere in a non-serial memory task such as the recall of missing items (Beaman & Jones, 1997; but see LeCompte, 1996), suggesting that ISE is caused by the competition between two seriation processes. Importantly, because it is this competition for order information that interferes with serial recall, the critical property of irrelevant sound that is responsible for serial recall disruption is its number of changing states. Strong support for this claim comes from studies that demonstrate that both speech and nonspeech backgrounds with changing states (i.e., with high changing-state complexity) disrupt serial recall more than steady, unchanging backgrounds (Hughes, Tremblay, & Jones, 2005; Jones, Alford, Macken, Banbury, & Tremblay, 2000; Macken, Mosdell, & Jones, 1999). Thus, by this account, speech does not have a privileged status in the production of ISE (see Schlittmeier, Weißgerber, Kerber, Fastl, & Hellbrück, 2012, for a comparison of how speech and nonspeech differ with respect to their changing states).

To specifically investigate whether speech-specific properties of the background have a privileged role in ISE, Tremblay and colleagues compared the recall disruption produced by sinewave speech backgrounds to that produced by natural speech and silent backgrounds (Tremblay, Nicholls, Alford, & Jones, 2000). Sinewave speech preserves the spectro-temporal information of natural speech with a series of time-varying sinusoids that track formant centers (Remez, Rubin, Pisoni, & Carrell, 1981). An interesting property of sinewave speech is that some listeners hear it as speech while others hear it as non-speech (Remez et al., 1981). Tremblay et al. performed two critical comparisons. First, they compared the recall disruption produced by the more complex natural speech to that produced by the simpler sinewave speech and found that natural speech produced significantly more recall disruption than sinewave speech. In the context of the changing-state hypothesis, this demonstrated that the signal with higher changing-state complexity produces more recall disruption. Second, they compared the recall disruption produced by sinewave speech in participants who heard sinewave speech as speech to that in participants who did not. The authors found that both sinewave speech groups had identical levels of recall disruption irrespective of whether they were trained to hear sinewave speech as speech or not. In other words, when the signal (and thus its changing-state complexity) is held constant, the recall disruption is identical, irrespective of how listeners perceive the background. Tremblay et al. interpreted this pair of findings to mean that ISE could be completely explained by a background signal’s changing-state complexity and that there was no need to invoke its speech-specific properties.

In this study, we closely examine this conclusion. Specifically, we suggest that speech-specific properties of sinewave speech do not depend on listeners’ perceptions of the signal as speech or non-speech. Instead, these properties inhere in the relationship among speech formants. We hypothesize that this dynamic relationship among formants may have influenced subjects irrespective of their perception of sinewave speech. Therefore, before one can rule out the role of speech-specific properties of the background, it is important to examine the role of these speech-specific relations left intact in sinewave speech. We did so, in a pair of experiments, by examining the ISE produced by sinewave speech conditions that either maintained or disrupted the interformant relationships present in natural speech. Sinewave speech is ideal for this kind of systematic manipulation because it lacks the diffusion of acoustic energy that is present in natural speech, making the individual formants easy to isolate and manipulate.

Experiment 1

In Experiment 1, we disrupted speech-like interformant relationships that are usually preserved in typical sinewave speech precursors and examined the resulting ISE. Specifically, we investigated whether such backgrounds produce serial recall disruption that is different from that elicited by typical sinewave speech precursors. In this experiment, we studied four background conditions. In addition to two conditions from Tremblay et al., natural speech and sinewave speech, we included a white noise control and a two-formant-reversed sinewave speech background condition. We created this condition by temporally reversing the first two formants (F1, F2) of typical three-formant sinewave speech while leaving the third formant (F3) unchanged. This form of signal manipulation disrupts the dynamic relationship between formants without changing the signal’s changing-state complexity because it alters the arrangement of the components, but not the components themselves (Viswanathan, Magnuson, & Fowler, in prep; Viswanathan, 2009; samples of our stimuli used in Experiments 1 and 2 can be found at https://sites.google.com/site/joshdorsi/research/ISE/stimuli). By comparing recall accuracy in the two-formant-reversed sinewave speech and typical sinewave speech backgrounds, one can ascertain whether properties beyond signal complexity that are unique to speech are important to ISE.

Method

Participants

Thirty-three students from the State University of New York at New Paltz received course credit for their participation. All subjects were native English speakers and reported normal hearing and normal or corrected vision.

Materials

Both the serial recall consonant list (L, R, T, S, M, K, F) and words in the background stimuli (bowls, boy, day, dog, go, than, view) were taken from Tremblay et al. (2000). Each item on the to-be-remembered list was presented on a computer screen for 1000 ms, with a 500 ms interval between items. Four background conditions were used: natural speech, sinewave speech, two-formant-reversed sinewave speech, and white noise. Natural speech recordings of each of the seven background words were obtained from a twenty-two-year-old male speaker of American English. The produced words were matched for intensity. Sinewave speech analogues of each word were then synthesized by combining sinewave equivalents of the first three formants of each natural speech token. For the two-formant-reversed sinewave speech condition, the first two formants of the three-formant sinewave speech were temporally inverted before being combined with the unaltered third formant. White noise controls were created by synthesizing white noise segments corresponding to each word. All four conditions were matched in duration and intensity. For each condition, the seven words (or their respective analogues) were concatenated in one of four pre-specified random orders with an inter-item latency of 200 ms between words. During each trial, the concatenated 7-item loop was played twice in succession for a total of 1.6 s. The irrelevant sounds were presented at 70 dB SPL through Sennheiser HD 575 headphones. Samples of our stimuli for all conditions can be found here: https://sites.google.com/site/joshdorsi/research/ISE/stimuli
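The selective reversal described above can be sketched in a few lines. This is a minimal illustration, not the synthesis procedure actually used for the stimuli: the sample rate, the formant tracks, and the amplitudes are hypothetical placeholders, and the names `sine_from_track` and `sinewave_speech` are introduced here only for illustration. The sketch shows that reversal rearranges a sinusoidal component in time without altering the component itself.

```python
import numpy as np

SAMPLE_RATE = 16000  # Hz; assumed value, not reported in the text


def sine_from_track(freq_hz, amp, sample_rate=SAMPLE_RATE):
    """Synthesize one time-varying sinusoid from a per-sample frequency track.

    `amp` may be a scalar or a per-sample amplitude track.
    """
    # Integrate instantaneous frequency to obtain phase.
    phase = 2.0 * np.pi * np.cumsum(freq_hz) / sample_rate
    return amp * np.sin(phase)


def sinewave_speech(components, reverse=()):
    """Sum the components, temporally reversing those whose index is in `reverse`."""
    parts = [c[::-1] if i in reverse else c for i, c in enumerate(components)]
    return np.sum(parts, axis=0)


# Hypothetical formant tracks for a 300 ms token (placeholders, not measured values).
n_samples = int(0.3 * SAMPLE_RATE)
t = np.linspace(0.0, 1.0, n_samples)
tracks = [
    300 + 400 * t,                   # stand-in for F1 (rising)
    2200 - 900 * t,                  # stand-in for F2 (falling)
    2800 + 100 * np.sin(np.pi * t),  # stand-in for F3
]
amps = [1.0, 0.5, 0.25]  # assumed relative amplitudes
components = [sine_from_track(f, a) for f, a in zip(tracks, amps)]

typical = sinewave_speech(components)                           # F1, F2, F3 intact
two_formant_reversed = sinewave_speech(components, reverse=(0, 1))  # F1 and F2 reversed
```

Because each reversed component contains exactly the same samples as its forward version, only the temporal alignment between components differs across the two signals.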

Procedure

Participants were seated approximately 60 cm away from a 43 × 28 cm LCD computer screen. Directions were provided both verbally and through on-screen instructions at the start of each session. Participants were instructed that the goal of the task was to report each letter sequence in the correct order and to ignore sounds presented through the headphones. Each participant performed the serial recall task under the four background conditions described above. For every trial, the seven letters listed above were displayed in a randomly generated order on the screen, in the presence of one of four background sound conditions. Auditory background stimuli had the same onset as the first of the visual to-be-remembered letters and continued for the entire duration of the visual presentation. One second after the end of each presentation, a blinking cursor in the upper left corner of the screen prompted participants to type the items in the presented order. The background conditions were manipulated within subjects and varied for each trial in a random order across subjects. Each of the four backgrounds was played 16 times per session for a total of 64 serial recall trials per subject. Because Tremblay et al. (2000) found no difference in ISE whether sinewave speech was perceived as speech or non-speech, we did not include the speech and nonspeech training conditions (see Footnote 1).
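The trial structure described above can be laid out as a short sketch. It is illustrative only and is not the experiment software that was used: the function names are hypothetical, the 500 ms interval is assumed to be the gap between the offset of one item and the onset of the next, and the strict position-by-position scoring rule is an assumption, since the text does not spell out the scoring criterion.

```python
import random

LETTERS = ["L", "R", "T", "S", "M", "K", "F"]
BACKGROUNDS = [
    "natural speech",
    "sinewave speech",
    "two-formant-reversed sinewave speech",
    "white noise",
]
TRIALS_PER_BACKGROUND = 16
ITEM_MS = 1000  # on-screen duration of each letter
GAP_MS = 500    # interval between letters (assumed offset-to-onset)


def build_session(seed=None):
    """Return 64 trials: each background 16 times in random order,
    each trial with its own random ordering of the seven letters."""
    rng = random.Random(seed)
    backgrounds = BACKGROUNDS * TRIALS_PER_BACKGROUND
    rng.shuffle(backgrounds)
    return [
        {"background": bg, "letters": rng.sample(LETTERS, k=len(LETTERS))}
        for bg in backgrounds
    ]


def presentation_ms(n_items=len(LETTERS)):
    """Total duration of the visual list: n items plus the n - 1 gaps between them."""
    return n_items * ITEM_MS + (n_items - 1) * GAP_MS


def score_by_position(presented, typed):
    """Strict serial scoring (assumed criterion): an item counts as correct
    only if it is typed in the position in which it was presented."""
    return [i < len(typed) and typed[i] == item for i, item in enumerate(presented)]
```

Under these assumptions, `presentation_ms()` gives 7 × 1000 ms + 6 × 500 ms = 10000 ms for a seven-letter list, and averaging `score_by_position` over trials yields the per-position accuracies that enter a background × letter position analysis.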

Results and Discussion

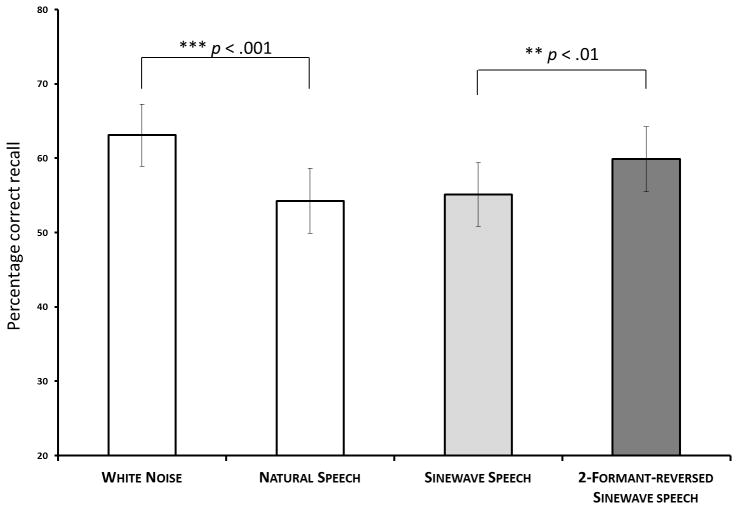

Figure 1 depicts the average serial recall accuracy in each of the four background conditions in Experiment 1. Natural speech produced the lowest recall accuracy (M = 54%), followed by sinewave speech (M = 55%), two-formant-reversed sinewave speech (M = 59%), and white noise (M = 63%). These data were submitted to a 4 (background) × 7 (letter position) within-subjects ANOVA. There was a significant main effect of background (F(3, 96) = 12.57, p < .001, ηp² = .28), indicating that recall accuracy was affected by the nature of the background. A main effect of letter position (F(6, 192) = 76.05, p < .001, ηp² = .70) indicated that recall accuracy decreased as serial position increased. There was also a weak but reliable interaction between background and letter position (F(18, 576) = 1.98, p < .01, ηp² = .06). This interaction was due to greater effects of the background in later serial positions compared to earlier ones. Planned comparisons were used to determine the locus of the effect of background. The first comparison, between natural speech and white noise backgrounds, revealed a reliable difference (F(1, 32) = 34.44, p < .001, ηp² = .52), indicating that our results replicated typical ISE findings. Central to this study was the comparison between the sinewave and two-formant-reversed sinewave speech conditions. These two conditions were identical in acoustic complexity, but only sinewave speech preserved the formant relationships of natural speech. This critical comparison revealed that sinewave speech produced more recall disruption than two-formant-reversed sinewave speech (F(1, 32) = 9.09, p < .01, ηp² = .22; see Figure 1), suggesting that speech-specific properties of the acoustic signal contribute to ISE. Finally, unlike Tremblay et al., we did not detect a difference between sinewave and natural speech conditions (F < 1).
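The effect sizes reported above can be checked against the F ratios, because for a single effect partial eta squared equals F × df_effect / (F × df_effect + df_error). The sketch below is a reader-side consistency check, not part of the original analysis; the function name and the layout of the `reported` list are illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error, reported partial eta squared), as given in the text
reported = [
    ("background", 12.57, 3, 96, 0.28),
    ("letter position", 76.05, 6, 192, 0.70),
    ("background x position", 1.98, 18, 576, 0.06),
    ("natural speech vs. white noise", 34.44, 1, 32, 0.52),
    ("sinewave vs. two-formant-reversed", 9.09, 1, 32, 0.22),
]

for label, f_value, df1, df2, eta_reported in reported:
    eta = partial_eta_squared(f_value, df1, df2)
    print(f"{label}: recomputed {eta:.3f}, reported {eta_reported:.2f}")
```

Each recomputed value agrees with the reported one to two decimal places.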

Figure 1.

A comparison of serial recall under four background conditions in Experiment 1. The error bars represent the standard error of the mean in each condition. The shaded bars represent the two sinewave conditions. The sinewave speech condition in which speech-specific formant relationships are disrupted (denoted by the bar with darker shading) produces significantly less serial recall disruption than the condition in which they are preserved (denoted by the bar with lighter shading).

However, an alternative explanation should also be considered. Surprenant, Neath, and Bireta (2007) showed that backward speech, but not fully-reversed sinewave speech, produces reliably different serial recall disruption compared to silent backgrounds. The finding with fully-reversed sinewave speech is troubling for our account of the findings for two reasons. First, despite the temporal reversal of the signal, fully-reversed sinewave speech preserves the purportedly critical interformant relations. Therefore, by our account, such a background should still have produced ISE. Second, the finding raises the possibility that our two-formant-reversed speech may have merely acted like fully-reversed sinewave speech, given that two out of three formants are identical in these conditions. That is, the reduction in ISE obtained in Experiment 1 in the two-formant-reversed condition may be due to the reversal of the majority of the signal rather than to the disruption of speech-specific relations.

Experiment 2

To resolve the question of whether the diminished ISE obtained in the two-formant-reversed sinewave speech condition was indeed due to the disruption of speech-like formant relationships, we investigated additional conditions. Following Surprenant et al. (2007), in addition to our control white noise background condition, we included fully-reversed sinewave speech and backward natural speech conditions to permit replication and comparison. To investigate the role of speech-like formant relations, we created a new one-formant-reversed condition in which only the first formant was reversed. This reversed a smaller portion of typical sinewave speech while also disrupting the relationship between the first two formants. If the speech-like quality of the signal, rather than the proportion of the signal reversed, is critical to ISE, then the one-formant-reversed sinewave condition should produce less disruption than the fully-reversed sinewave condition.

Method

Participants

Twenty-nine students from the State University of New York at New Paltz received course credit or five dollars for their participation. All subjects were native English speakers and reported normal hearing and normal or corrected vision.

Materials

The serial recall consonant list and the background stimuli from Experiment 1 were used, with modifications. The backward speech and the fully-reversed sinewave speech tokens were created by temporally reversing the natural speech and typical sinewave speech tokens from Experiment 1, respectively. The one-formant-reversed sinewave speech condition was created by temporally inverting the first formant before combining it with the unmodified second and third formants. As in Experiment 1, a white noise condition was used as a control and all backgrounds were matched in duration and intensity. For samples of our stimuli see: https://sites.google.com/site/joshdorsi/research/ISE/stimuli

Procedure

The procedure of Experiment 1 was used with the new background conditions.

Results and Discussion

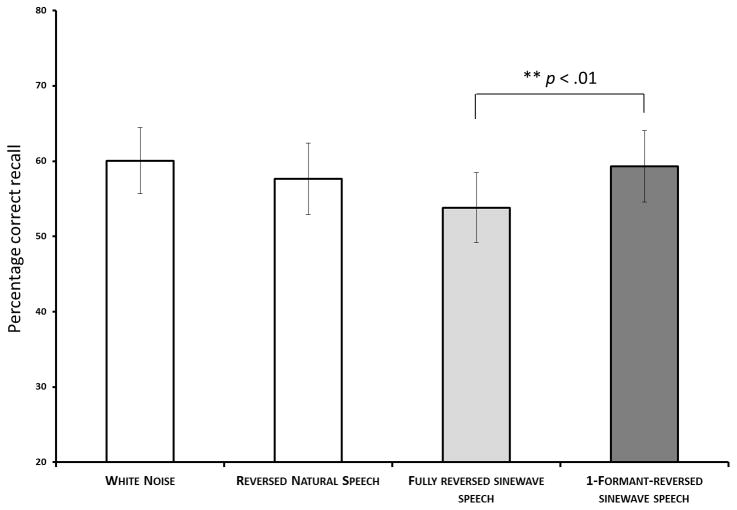

Figure 2 depicts the average serial recall accuracy for the four irrelevant sound conditions in Experiment 2. Recall accuracy was lowest in the fully-reversed sinewave speech condition (M = 53%), followed by reversed natural speech (M = 57%), one-formant-reversed sinewave speech (M = 59%), and white noise (M = 60%). These data were submitted to a 4 (background) × 7 (letter position) within-subjects ANOVA. There was again a significant main effect of background, F(3, 84) = 4.416, p < .05, ηp² = .136, and a main effect of letter position, F(6, 168) = 70.23, p < .001, ηp² = .715. Unlike Experiment 1, there was no interaction between background and serial position (F(18, 504) = 1.06, p = .38, ηp² = .03). The critical comparison of interest showed that recall disruption produced by the one-formant-reversed sinewave speech was significantly lower than that in the fully-reversed sinewave speech condition (F(1, 28) = 8.812, p = .006, ηp² = .24). Furthermore, as we expected, and contrary to Surprenant et al. (2007), we found that the fully-reversed sinewave condition produced robust ISE relative to the white noise control (F(1, 28) = 10.915, p = .003, ηp² = .280). This pair of results confirms that the reduction in recall disruption is due to the disruption of speech-specific properties of the background rather than to the reversal of the signal. Finally, while we found lower recall accuracy in the backward speech condition (M = 57%) than in the white noise condition (M = 60%), this difference was not statistically reliable (F(1, 28) = 1.754, p = .196, ηp² = .06). Given that past studies have demonstrated ISE with backward speech (LeCompte, Neely, & Wilson, 1997, Experiment 4; Surprenant et al., 2007), our failure to replicate this effect requires explanation. We suggest (see Footnote 2) that our use of a white noise control rather than the silent background used in both past studies may have raised the threshold for detecting the effect of reversed natural speech backgrounds. Given that the numerical direction of our effect is consistent with past studies, we suggest that our study simply failed to detect this effect.

Figure 2.

A comparison of serial recall under four background conditions in Experiment 2. Again, the sinewave speech condition in which speech-specific formant relationships are disrupted (denoted by the bar with darker shading) produces significantly less serial recall disruption than the condition in which they are preserved (denoted by the bar with lighter shading).

General Discussion

We investigated whether, in addition to its changing-state complexity, speech-specific properties of the background signal have a role in ISE. To answer this question, we used sinewave speech conditions of identical changing-state complexity that either preserved speech-like interformant relationships (typical sinewave speech in Experiment 1, fully-reversed sinewave speech in Experiment 2) or distorted them (two-formant-reversed sinewave speech in Experiment 1, one-formant-reversed sinewave speech in Experiment 2). In Experiment 1, we found that two-formant-reversed sinewave speech backgrounds produce significantly less recall disruption than typical sinewave speech precursors despite being equated in the number of changing states. Given that Surprenant et al. (2007) showed no ISE with fully-reversed sinewave speech, we sought to determine whether this effect was truly due to the disruption of speech-like formant relations or whether it was due to the simple act of reversing the majority of the signal. In Experiment 2, we compared recall accuracy in backward natural speech, fully-reversed sinewave speech, one-formant-reversed sinewave speech, and white noise conditions. Contrary to Surprenant et al. (2007) and consistent with our hypothesis, we found robust ISE with fully-reversed sinewave speech. That is, even though the signal was reversed, it produced strong ISE because its speech-like interformant relations were preserved. Furthermore, we again found that destroying these interformant relations in the one-formant-reversed sinewave condition led to reduced serial recall disruption compared to fully-reversed sinewave speech.

Importantly, even though all our sinewave speech conditions consisted of the same three sinewave tones, conditions in which these tones were arranged to preserve the speech-like formant relations (sinewave speech in Experiment 1, fully-reversed sinewave speech in Experiment 2) consistently produced more serial recall disruption than those in which the speech-like relations were disrupted (two-formant-reversed sinewave speech in Experiment 1, one-formant-reversed sinewave speech in Experiment 2). Our manipulation left lower-order acoustic properties such as average formant frequencies unchanged while manipulating higher-order properties (the interformant relations). Our results suggest that these higher-order speech-like properties of the background are critical to ISE beyond their changing-state complexity.
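The distinction between lower-order and higher-order properties can be made concrete with a toy example. The sketch below uses hypothetical linear formant tracks rather than measurements from our stimuli, and it treats the F2 − F1 spacing and the F1–F2 correlation as stand-ins for "interformant relations", which is an illustrative assumption rather than a definition drawn from the experiments.

```python
import numpy as np

# Hypothetical formant tracks over one token (Hz); placeholders, not measured values.
t = np.linspace(0.0, 1.0, 200)
f1 = 300 + 400 * t    # stand-in for F1: rises from 300 to 700 Hz
f2 = 2200 - 900 * t   # stand-in for F2: falls from 2200 to 1300 Hz

f1_reversed = f1[::-1]  # one-formant reversal: only F1 is flipped in time

# Lower-order property: the average formant frequency is untouched by reversal.
print(f1.mean(), f1_reversed.mean())

# Higher-order property: the moment-by-moment relation between F1 and F2 changes.
spacing_intact = f2 - f1
spacing_disrupted = f2 - f1_reversed
print(spacing_intact[0], spacing_disrupted[0])

# In the intact token the two formants move in opposite directions;
# after reversing F1 they move in the same direction.
r_intact = np.corrcoef(f1, f2)[0, 1]
r_disrupted = np.corrcoef(f1_reversed, f2)[0, 1]
print(r_intact, r_disrupted)
```

In this toy case the mean of F1 is identical before and after reversal, while the sign of the F1–F2 correlation flips, which is the sense in which the components are preserved but their arrangement is not.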

One possible alternative explanation for our results is that selective reversal may have caused participants to perceptually group the changing states of the different sinewave speech conditions differently. For example, Jones, Macken, and Murray (1993) found that tone glides interspersed with silence were more disruptive than the same tone glides with the silence masked by white noise bursts. The authors explained this finding by noting that the white noise masked the silent intervals, resulting in the perceptual resolution that the tone glide was continuous with noise overlaps. Thus, despite being more acoustically complex, the tone-with-noise condition was found to be less disruptive. This finding shows that acoustic complexity was overridden by perceptual grouping of the stimuli into fewer changing states. We suggest that this explanation may not completely explain our results for two reasons. First, Tremblay et al.’s findings demonstrate that, under identical acoustic complexity, perceptual resolution of sinewave speech, by itself, does not alter serial recall disruption. Second, in the context of our findings, this explanation would suggest that listeners somehow grouped the selectively-reversed sinewave conditions into fewer states than forward or backward sinewave speech. Given that listeners have considerably more experience perceptually attuning to speech-like signals, it appears that they would be at least as successful in similarly resolving forward or backward speech. However, this explanation requires further empirical evaluation.

In general, our findings have implications for all models of ISE. While it is clear that the number of changing states of a signal is an important determinant of ISE, it may not completely explain the extent of recall disruption in all signals. We suggest that understanding the information carried by the background signal (and the effect of disrupting this information) can complement our understanding of processes involved in ISE. For instance, in a recent study, Schlittmeier and colleagues modeled the effects of a range of different auditory stimuli on serial recall (Schlittmeier et al., 2012). The authors modeled effects of 70 different sources of irrelevant sound, including pure tones, animal calls, different forms of speech (multi-talker babble, motherese, foreign language speech, etc.), and traffic noise, on serial recall in an effort to predict the level of disruption based on changing-state complexity. The results of this study suggest a strong relationship between changing-state complexity and serial recall disruption, as assumed by the changing-state hypothesis. However, the authors also found that among the backgrounds considered, speech backgrounds produce the most disruption. The authors suggest that apart from the fact that speech backgrounds typically had high changing-state complexity, it is possible that they exert strong effects due to their ability to capture a listener’s attention. This suggestion is consistent with theories of ISE that propose a specific role for attention in ISE (Cowan, 1995; Neath, 2000; Page & Norris, 2003). Our findings, from the perspective of these models and in the context of Schlittmeier et al.’s findings, would indicate that speech preferentially engages subjects’ attention because of the presence of the outlined speech-specific properties. We suggest that our approach of using selective reversal of the acoustic signal to dissociate complexity and speech-specificity may be used to evaluate this hypothesis in combination with an explicit measurement or manipulation of a signal’s attention-engaging properties independent of the serial recall task.

In conclusion, we suggest that an exclusive focus on the changing-state complexity of the background signal, without consideration of the role of its speech-specific properties, is a current weakness of the changing-state hypothesis. In other words, our findings suggest that any explanation of ISE must account for the effects of speech-specific information, beyond complexity, present in the acoustic signal.

Acknowledgments

This research was supported by NIDCD grant R15DC011875-01 to NV. We thank Annie Olmstead for her helpful comments and suggestions.

Footnotes

1. In a previous study (Viswanathan et al., under review; Viswanathan, 2009) we found that none of our listeners spontaneously perceived selectively-reversed sinewave speech as speech.

2. We thank one of our reviewers for this suggestion.

Contributor Information

Navin Viswanathan, Email: viswanan@newpaltz.edu.

Josh Dorsi, Email: jdorsi56@gmail.com.

Stephanie George, Email: stephanie.george815@gmail.com.

References

- Baddeley AD. The phonological loop and the irrelevant speech effect: Some comments on Neath (2000). Psychonomic Bulletin & Review. 2000;7(3):544–549. doi: 10.3758/bf03214369.

- Baddeley AD, Hitch GJ. Working memory. In: Bower GH, editor. The psychology of learning and motivation. Vol. 8. New York: Academic Press; 1974. pp. 47–90.

- Beaman PC, Jones DM. Role of serial order in the irrelevant speech effect: tests of the changing-state hypothesis. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1997;23(2):459–471.

- Colle HA, Welsh A. Acoustic masking in primary memory. Journal of Verbal Learning and Verbal Behavior. 1976;15:17–32.

- Cowan N. Attention and memory: An integrated framework. New York: Oxford; 1995.

- Hughes RW, Tremblay S, Jones DM. Disruption by speech of serial short-term memory: The role of changing-state vowels. Psychonomic Bulletin & Review. 2005;12:886–90. doi: 10.3758/bf03196781.

- Hygge S, Boman E, Enmarker I. The effects of road traffic noise and meaningful irrelevant speech on different memory systems. Scandinavian Journal of Psychology. 2003;44:13–21. doi: 10.1111/1467-9450.00316.

- LeCompte DC, Neely CB, Wilson JR. Irrelevant speech and irrelevant tones: The relative importance of speech to the irrelevant speech effect. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1997;23(2):472–483. doi: 10.1037//0278-7393.23.2.472.

- Jones DM, Macken WJ. Irrelevant Tones Produce an Irrelevant Speech Effect: Implications for Phonological Coding in Working Memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1993;19(2):369–381.

- Jones DM, Madden C, Miles C. Privileged access by irrelevant speech to short-term memory: The role of changing state. The Quarterly Journal of Experimental Psychology. 1992;44A:645–669. doi: 10.1080/14640749208401304.

- Jones DM, Macken WJ, Murray AC. Disruption of visual short-term memory by changing-state auditory stimuli: the role of segmentation. Memory and Cognition. 1993;21(3):318–328. doi: 10.3758/bf03208264.

- Jones DM, Alford D, Macken WJ, Banbury SP, Tremblay S. Interference from degraded auditory stimuli: Linear effects of changing-state in the irrelevant sequence. Journal of the Acoustical Society of America. 2000;108(3):1082–1088. doi: 10.1121/1.1288412.

- Macken WJ, Mosdell N, Jones DM. Explaining the irrelevant-sound effect: Temporal distinctiveness or changing state? Journal of Experimental Psychology: Learning, Memory, and Cognition. 1999;25(3):810–814.

- Neath I. Modeling the effects of irrelevant speech on memory. Psychonomic Bulletin & Review. 2000;7:403–423. doi: 10.3758/bf03214356.

- Page MPA, Norris D. The irrelevant-sound effect: What needs modeling, and a tentative model. Quarterly Journal of Experimental Psychology. 2003;56:1289–1300. doi: 10.1080/02724980343000233.

- Remez RE, Rubin PE, Pisoni DB, Carrell TD. Speech perception without traditional speech cues. Science. 1981;212(4497):947–950. doi: 10.1126/science.7233191.

- Salamé P, Baddeley A. Effects of background music on phonological short-term memory. The Quarterly Journal of Experimental Psychology. 1989;41(1):107–122.

- Schlittmeier SJ, Weißgerber T, Kerber S, Fastl H, Hellbrück J. Algorithmic modeling of the irrelevant sound effect (ISE) by the hearing sensation fluctuation strength. Attention, Perception, and Psychophysics. 2012;74:194–203. doi: 10.3758/s13414-011-0230-7.

- Surprenant AM, Neath I, Bireta TJ. Changing state and the irrelevant sound effect. Canadian Acoustics. 2007;35:86–87.

- Tremblay S, Nicholls AP, Alford D, Jones DM. The irrelevant sound effect: does speech play a special role? Journal of Experimental Psychology: Learning, Memory, and Cognition. 2000;26(6):1750–1754. doi: 10.1037//0278-7393.26.6.1750.

- Viswanathan N. Unpublished doctoral dissertation. University of Connecticut; Storrs: 2009. The role of the listener’s state in speech perception.

- Viswanathan N, Magnuson JS, Fowler CA. Information for Coarticulation: Static Signal Properties or Formant Dynamics? (under review)