Abstract

Background:

The exponential growth in the use of the internet as a learning resource, coupled with the variable quality of many websites, has led to a need to identify suitable websites for teaching purposes.

Aim:

The aim of this study is to develop and to validate a tool, which evaluates the quality of undergraduate medical educational websites; and apply it to the field of pathology.

Methods:

A tool was devised through several steps of item generation, reduction, weightage, pilot testing, post-pilot modification of the tool and validating the tool. Tool validation included measurement of inter-observer reliability; and generation of criterion related, construct related and content related validity. The validated tool was subsequently tested by applying it to a population of pathology websites.

Results and Discussion:

Reliability testing showed high internal consistency reliability (Cronbach's alpha = 0.92) and high inter-observer reliability (Pearson's correlation r = 0.88, intraclass correlation coefficient = 0.85 and κ = 0.75). It showed high criterion related, construct related and content related validity. The tool showed moderately high concordance with the gold standard (κ = 0.61); 92.2% sensitivity, 67.8% specificity, 75.6% positive predictive value and 88.9% negative predictive value. The validated tool was applied to 278 websites; 29.9% were rated as recommended, 41.0% as recommended with caution and 29.1% as not recommended.

Conclusion:

A systematic tool was devised to evaluate the quality of websites for medical educational purposes. The tool was shown to yield reliable and valid inferences through its application to pathology websites.

Keywords: Computer assisted learning, e-learning, medical education, pathology, website evaluation

INTRODUCTION

The number of medical information websites is increasing. The quality of such websites is highly variable and difficult to assess, and they are published by a variety of bodies such as government institutions, consumer and scientific organizations, patients' associations, personal sites, health provider institutions, commercial sites, etc.[1,2,3]

Without tools and methodologies for evaluating their content information, the web's potential as a universe of knowledge could be lost.[4,5,6,7,8,9] Moreover, no clear guidelines are yet set for medical teaching websites.[10]

A need therefore exists for the development of an evaluation procedure that assists teachers to assess the value of such websites.

CONTEXT

There is an ever-growing number of medical educational websites and a growing number of students relying on them for undergraduate, postgraduate and continuing medical education. Therefore, the process of choosing what to rely on from the plethora of websites available cannot be left to chance or unsystematic browsing. A tool needs to be devised that helps medical teachers and students properly evaluate and choose high quality medical educational websites.

Skilled, methodical, organized human reviewing, selection and filtering based on well-defined quality appraisal criteria need to be the key ingredient in the recommended student web-based reading material in medical schools.

The tool is expected to be used by educators to evaluate websites for potential teaching purposes. In this context the tool will act as an “aide-mémoire” or a checklist of all factors that will contribute to the overall assessment of the degree of suitability of the website.

AIMS

To develop and validate a rating tool which evaluates the quality of undergraduate medical educational websites; and apply it to the field of Pathology.

To enable teachers to better evaluate online medical education materials and hence better selection of the most appropriate websites as learning resources for their students, particularly students of problem based learning curricula.

By promoting the application of agreed quality guidelines by all medical schools, the overall quality of medical educational websites will improve to meet the demanded quality and the web will ultimately become a reliable and integral part of the undergraduate medical education.

MATERIALS AND METHODS

The methodology of this research involved systematic review of the literature of available tools, tool development, tool validation process and tool application.

Tool validation is described in several levels, as follows:

Developing a draft tool (this encompasses criteria to be used in evaluating medical education websites).

Pilot testing of the tool.

Revising the tool according to pilot tests.

Validating the tool.

These are detailed as follows

Tool Development

Developing a Draft Tool

The Medical Educational Website Quality Evaluation Tool (MEWQET) was developed as described below using the principles and methodology of systematic review as outlined by Hamdy et al.[11]

Item Generation

A comprehensive literature review was carried out. Such a review served to clarify the nature and range of the content of the target construct. Existing tools and criteria for evaluating websites pertaining to education, medical education, general health related educational websites and website quality in general were obtained via searching peer reviewed medical journals websites and other websites as follows.

Review of the literature was carried out by using bibliographic databases, citation searches such as PubMed (http://www.ncbi.nlm.nih.gov/PubMed/) and ERIC (http://www.eric.ed.gov) and search engines such as Google (www.google.com), Yahoo (www.yahoo.com), Excite (www.excite.com), Lycos (www.lycos.com), and Web Crawler (www.webcrawler.com) (Only English language results were pursued).

Search was done using the following search strings: “Quality Rating Instruments AND medical education”, “(evaluation OR guidelines OR criteria) AND (website OR internet OR online OR www) AND medical education” and “(evaluation methods website quality)”, “(reliability OR validity) AND (evaluation method OR questionnaire OR tool)” and variations of the following: “quality,” “Internet,” “World Wide Web”, “rating,” “ranking,” “evaluate,” “award,” and “assess” and combinations thereof.

Additional resources were obtained by investigating references to the obtained articles, connections to relevant articles, author links and hyperlinks from the initial results.

Data Extraction

Criteria were extracted and compiled into groups.

Item Reduction

Initially, items were reduced by removing those which were repetitive or not relevant to medical education. Raw items were then generated and further modifications were carried out according to the researcher's experience of undergraduate medical education in general and pathology education in particular. Following the pilot testing, further item reduction was carried out whereby further redundant items were removed and item scaling was adjusted.

Item Scaling

Items were scaled either on a dichotomous basis or on a multilevel scale. The former is a yes/no answer; examples are items 1.1 and 1.4 of the tool. The latter is exemplified by items 1.2, 1.3, 1.5 and 1.6 [Appendix I]. Such items cannot be answered by a simple yes or no.

Item Weighting

This was carried out based on already weighted items of pre-validated tools in the literature. Items that were modified or devised were weighted according to information from pooled literature and the experience of the investigator. Summation was done as a subtotal of each category followed by the grand total. The grand total score was categorized as: Poor, weak, fair, good, very good and excellent.

Pilot Testing of the Tool

The tool was piloted using a sample (10%) of the population of websites upon which the tool is to be ultimately used.

Modification of the Tool

The preliminary tool was applied to those websites, and further item reduction and modifications of weightage were carried out according to the results of the pilot study.

The grand total score was categorized as recommended, recommended with caution and not recommended.

Validating the Tool

All pathology teaching websites were rated according to MEWQET by one pathologist (the main researcher, referred to as the first observer). A second pathologist (referred to as the second observer) was recruited to evaluate a random sample of the websites using the tool. A 30% randomly selected sample of websites was used.

Training the Second Observer to Use the MEWQET Tool

The second observer acted as the trainee and the main researcher as the trainer. The second observer was given one random website to rate independently. The main researcher and the second observer then discussed the MEWQET tool using this first website as an example, with a few clarifications. Subsequently, the second observer rated another five randomly selected websites. Another discussion session followed with further clarifications. The second observer then rated the remainder of the websites using the MEWQET tool. The concordance rate was calculated, and websites with discordant ratings were re-examined and discussed by both the first and second observer in order to reach a consensus.

Reliability Measures

The reliability of the tool was evaluated by comparing the first and second observer's scores using the MEWQET tool and measuring the internal consistency reliability (Cronbach's alpha), Pearson correlation and intraclass correlation coefficient.
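As a rough illustration of two of these score-based measures, the following sketch computes Pearson's r and Cronbach's alpha for two observers' total scores. The study itself used SPSS; the function names and the score lists here are hypothetical, and treating each observer as one "item" in Cronbach's alpha is an assumption about how the statistic was applied.

```python
from statistics import mean, variance


def pearson_r(x, y):
    """Pearson correlation between two equally long score lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def cronbach_alpha(columns):
    """Cronbach's alpha for k columns of scores (one column per rater or item)."""
    k = len(columns)
    totals = [sum(row) for row in zip(*columns)]   # per-website sum across columns
    item_variance = sum(variance(c) for c in columns)
    return k / (k - 1) * (1 - item_variance / variance(totals))


# Hypothetical total scores (0-100) for five websites, NOT data from the study.
first_observer = [70, 55, 40, 82, 61]
second_observer = [68, 58, 45, 80, 59]

print(f"Pearson r        = {pearson_r(first_observer, second_observer):.2f}")
print(f"Cronbach's alpha = {cronbach_alpha([first_observer, second_observer]):.2f}")
```

Both statistics approach 1 as the two observers' scores move together; neither penalizes a constant offset between observers, which is one reason the intraclass correlation coefficient is reported alongside them.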

Further reliability of the tool was evaluated by comparing the first and second observer's categories using the MEWQET tool. This was done using kappa statistics.
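For categorical ratings, Cohen's kappa corrects the observed agreement for the agreement expected by chance. A minimal sketch follows, assuming unweighted kappa for two raters (the text does not state whether a weighted variant was used); the function name and the example ratings are hypothetical.

```python
from collections import Counter


def cohen_kappa(ratings_a, ratings_b):
    """Unweighted Cohen's kappa for two raters' category labels."""
    n = len(ratings_a)
    # Proportion of websites on which both raters chose the same category.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement from each rater's marginal category frequencies.
    freq_a, freq_b = Counter(ratings_a), Counter(ratings_b)
    categories = set(freq_a) | set(freq_b)
    expected = sum(freq_a[c] * freq_b[c] for c in categories) / n ** 2
    return (observed - expected) / (1 - expected)


# Hypothetical categories for six websites, NOT data from the study.
# R = recommended, C = recommended with caution, N = not recommended.
observer_1 = ["R", "R", "C", "C", "N", "N"]
observer_2 = ["R", "C", "C", "C", "N", "N"]
print(f"kappa = {cohen_kappa(observer_1, observer_2):.2f}")
```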

Criterion Related Validity Measures

Testing the tool against a gold standard: Approximately 50% of the websites were randomly selected for review (140 websites) and were independently rated by two expert pathologists (more than 20 years of pathology teaching experience) using their general judgment rather than the tool. This is considered to be the gold standard as no other gold standard exists for this particular area under study. Gold standard one and gold standard two (referred to as GS1 and GS2 respectively) were blinded to the details of the study, the MEWQET tool, the nature of items within the tool and the methodology. Both gold standards ranked the websites as: “Recommended,” “recommended with caution” or “Not recommended” for educational purposes independently and then reached a consensus on the discordant cases. GS consensus results were compared with the outcome of the MEWQET tool to determine its sensitivity and specificity of identifying websites of good quality suitable for teaching purposes or otherwise and positive and negative predictive values.

Content Validity Measures

Comparing the MEWQET Tool with general website rating tools such as Google PageRank and Alexa Traffic Rank.

Two common general website ranking tools, Google PageRank and Alexa Traffic Rank, were accessed via www.google.com and www.alexa.com respectively. Their respective toolbars were used to automatically rank every website accessed for the study.

The rank of each was recorded and categorized as recommended, recommended with caution or Not recommended according to the following criteria:

Google Page Rank:

7-10: Recommended.

4-6: Recommended with caution.

0-3: Not recommended.

Alexa Traffic Rank:

≤10,000: Recommended.

10,001-100,000: Recommended with caution.

≥100,001: Not recommended.

Ranks of both Google Page Rank and Alexa traffic rank were compared with the tool consensus categories using kappa statistics.
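The mapping from rank to category described above can be sketched as two small functions. The function names are hypothetical, and placing an Alexa rank of exactly 10,000 in the top band is an assumption about the band edges.

```python
def categorize_pagerank(rank):
    """Map a Google PageRank value (0-10, higher is better) to a category."""
    if not 0 <= rank <= 10:
        raise ValueError("PageRank is expected to lie between 0 and 10")
    if rank >= 7:
        return "Recommended"
    if rank >= 4:
        return "Recommended with caution"
    return "Not recommended"


def categorize_alexa(rank):
    """Map an Alexa Traffic Rank (1 = most visited, lower is better) to a category."""
    if rank < 1:
        raise ValueError("Alexa Traffic Rank starts at 1")
    if rank <= 10_000:           # assumed upper edge of the top band
        return "Recommended"
    if rank <= 100_000:
        return "Recommended with caution"
    return "Not recommended"


print(categorize_pagerank(5))
print(categorize_alexa(250_000))
```

Note that the two scales run in opposite directions: a high PageRank and a low Alexa rank both indicate a popular site.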

Gold Standard Rating of the Tool

The tool was revealed to both gold standards after they finished their blinded rating. GS1 and GS2 rated each item and sub-item as: Highly important (HI), important (I) and not important (NI). The Weightage of each item and sub-item was also judged. In addition, the opinion of both gold standards was solicited verbally in a discussion session that followed the completion of the evaluation process.

Construct Validity Measures

The relationship of Gold standard consensus with the actual score of the tool: The scores of the tool were compared with the rating of the gold standard consensus. The mean score for each category was calculated and compared with the tool consensus score.

The relationship of Gold standard consensus with both Google PageRank and Alexa Traffic Rank: Ranks of both Google Page Rank and Alexa traffic rank were compared with the gold standard consensus categories using kappa statistics.

Further validation of the tool was sought by applying it to one of the well-known, robust websites amongst pathologists, namely http://www.pathologyoutlines.com.

Application of the MEWQET Tool

This was applied to pathology websites according to the following eligibility criteria.

Inclusion Criteria

All free of charge, English language websites for pathology education, teaching, online image banks and interactive tutorials.

Exclusion Criteria

Websites for other disciplines, websites for research or experimental pathology, websites of Journals or periodicals of Pathology, websites of databases and search engines, online manuals and textbooks or online dictionaries and glossaries are all excluded.

Sampling Method

All pathology education websites found on the web using Google (The most widely used search engine) and all links from official pathology related websites were used (virtually 100% sample). Search through www.google.com was done using the following search string: “Pathology and education.” The first 50 hits plus all related links were taken. Search ended on 6th June 2008.

Statistical Analysis Used

All statistics were carried out using SPSS software version 17.0.

RESULTS

Tool Development

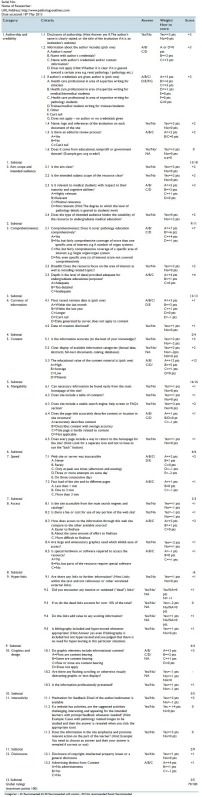

Items were generated as per the methodology described above and then compiled into groups. After compiling the items into groups, items were reduced by removing those which were repetitive or not relevant to medical education. Raw items were generated and this resulted in 19 items and a total of 124 sub-items with a maximum global score of 312 points. Some items were modified, some were split into two separate items, and some were merged into one item depending on their relative importance. This modification resulted in 12 major items and a total of 74 sub-items with a maximum global score of 127 points. This was categorized as poor, weak, fair, good, very good and excellent. Piloting was performed on a 10% random sample, which resulted in 30 websites. The preliminary tool was applied to those websites, and further item reduction and modifications of weightage were carried out, as well as the addition of a few important clarifications termed “hints”.

This resulted in the final version of the tool, which comprises 12 major items and 42 sub-items with a maximum global score of 100 points [Appendix I]. This was categorized arbitrarily as follows:

>65: Recommended.

65-50: Recommended with caution.

<50: Not recommended.

Categorization was changed from a six-tiered system to just three tiers for simplification, as the former was found cumbersome to apply during the pilot period and would have complicated comparisons. The final version of the tool was then used for the study [Appendix I: MEWQET].
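The three-tier cut-offs listed above can be expressed as a short function. This is a minimal sketch; the function name is hypothetical, and the only inputs taken from the text are the thresholds and the 100-point maximum.

```python
def categorize_mewqet(score):
    """Map a MEWQET global score (0-100) to its three-tier category."""
    if not 0 <= score <= 100:
        raise ValueError("the final tool has a maximum global score of 100")
    if score > 65:
        return "Recommended"
    if score >= 50:              # the 50-65 band, inclusive at both ends
        return "Recommended with caution"
    return "Not recommended"


for score in (79, 65, 50, 42):
    print(score, "->", categorize_mewqet(score))
```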

Validating the Tool

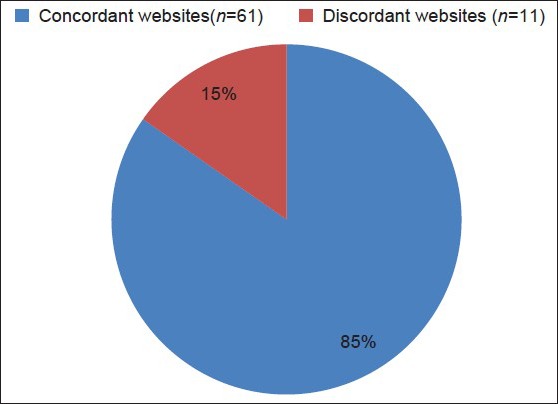

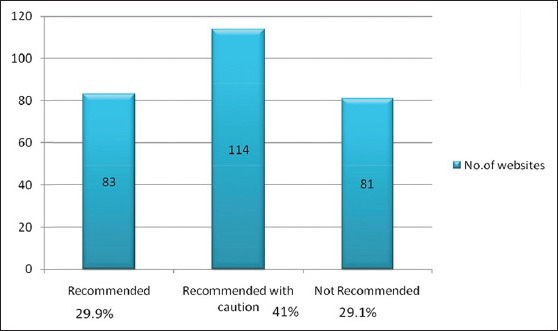

A total of 278 websites were evaluated and categorized by the main researcher (first observer) after the exclusion of 17 websites due to inaccessibility at the time of application of the tool. Following categorization by the second observer and comparison of the categories, 61 websites were found to be concordant and 11 discordant [Figure 1]. Discordant websites were reviewed together by the first and second observer and consensus was reached in all cases (100%).

Figure 1.

Concordance rate between first and second observer

Reliability Measures

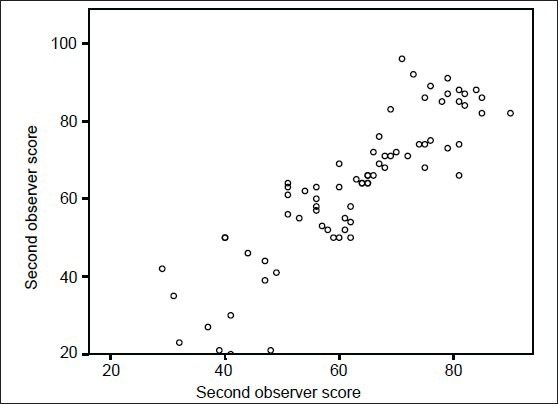

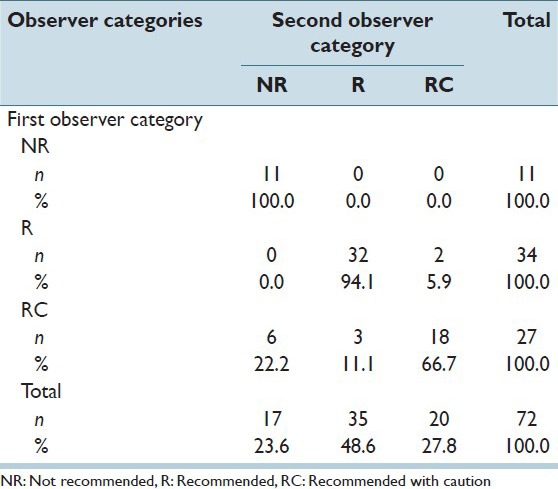

The tool showed internal consistency reliability (Cronbach's alpha) = 0.92, Pearson correlation r = 0.88, P < 0.05 [Figure 2] and intraclass correlation coefficient = 0.85 (95% confidence interval 0.776-0.906), P < 0.05. Further reliability of the tool was evaluated by comparing the first and second observer's categories using the MEWQET tool [Table 1]. This was done using kappa statistics: κ = 0.75, P < 0.05 (substantial agreement; level of agreement as indicated by Landis and Koch, 1977).
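For readers unfamiliar with the Landis and Koch (1977) scale, the verbal labels attached to kappa values can be sketched as a lookup. The function name is hypothetical; the bands are the conventional ones from that scale.

```python
def landis_koch_label(kappa):
    """Verbal strength-of-agreement label for a kappa value (Landis and Koch, 1977)."""
    if not -1 <= kappa <= 1:
        raise ValueError("kappa lies between -1 and 1")
    if kappa < 0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


# Kappa values reported in this study.
for k in (0.75, 0.61, 0.031, -0.023):
    print(k, "->", landis_koch_label(k))
```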

Figure 2.

Scatter plot of inter-observer agreement

Table 1.

Inter-observer agreement by category

Validity Measures

Criterion Related Validity Measures

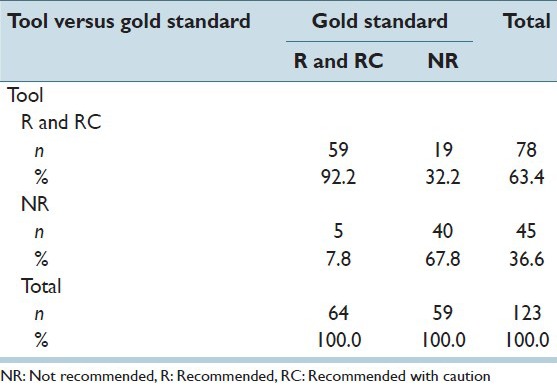

When the categories of tool scores were compared with the gold standard categories, the level of agreement was found to be substantial (κ =0.61) [Table 1] (level of agreement as indicated by Landis and Koch, 1977).

In order to determine the sensitivity, specificity, positive predictive value and negative predictive value, the recommended with caution group was combined with the recommended group and compared with the not recommended group. This showed that the tool has 92.2% sensitivity, 67.8% specificity, 75.6% positive predictive value and 88.9% negative predictive value [Table 2].
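The four measures follow directly from a 2×2 table in which a "positive" is a website rated recommended or recommended with caution. The sketch below shows the arithmetic; the function name is hypothetical, and the cell counts are hypothetical values chosen only so that the output matches the reported percentages to one decimal place. They are not taken from Table 2.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Sensitivity, specificity, PPV and NPV from the cells of a 2x2 table.

    tp: tool positive, gold standard positive
    fp: tool positive, gold standard negative
    fn: tool negative, gold standard positive
    tn: tool negative, gold standard negative
    """
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "positive predictive value": tp / (tp + fp),
        "negative predictive value": tn / (tn + fn),
    }


# Hypothetical counts for illustration only (see the note above).
metrics = diagnostic_metrics(tp=59, fp=19, fn=5, tn=40)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

Collapsing the three categories into two is what makes these measures computable; with the original three-tier ratings only agreement statistics such as kappa apply.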

Table 2.

Ratings of tool versus gold standard

Content Validity

Comparing the MEWQET Tool with general website rating tools such as Google PageRank and Alexa Traffic Rank: Comparing Google PageRank and Alexa Traffic Rank with the tool consensus categories using kappa statistics showed κ = 0.031 (P = 0.45) and κ = −0.023 (P = 0.57) respectively.

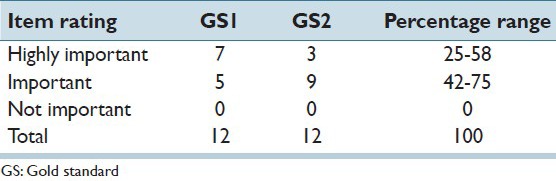

Gold standard rating of the tool: No item was rated as NI; 100% of items were rated as either HI or important. Between 91% and 93% of sub-items were rated as either HI or important [Tables 3 and 4].

Table 3.

Item ratings by gold standard

Table 4.

Sub-item rating by gold standard

In addition, both gold standards expressed the opinion that the items in the tool can act as an “aide-mémoire”: had they not been put in a checklist format, they could easily have been overlooked. This opinion was obtained verbally during a discussion session that followed the completion of the evaluation process.

Construct Validity Measures

The relationship of gold standard consensus with the actual score of the tool.

The rating of the gold standard consensus was compared with scores of the tool and the mean was calculated. The mean scores for each category were as follows: Recommended: 67.8, recommended with caution: 59.9 and not recommended: 49.6.

The Relationship of Gold Standard Consensus with Both Google Pagerank and Alexa Traffic Rank

To examine whether this tool performs better than Google or Alexa, the latter were each compared against the gold standard in the same way that the tool was compared with each of Google and Alexa.

Comparing both Google Page Rank and Alexa traffic rank with the Gold standard categories using kappa statistics showed kappa level of agreement, κ = −0.007 with a P value of 0.92 and 0.038 with a P value of 0.53 respectively.

Upon seeking further validation of the tool by applying it to one of the well known, robust websites amongst pathologists, namely http://www.pathologyoutlines.com, the following was found:

This website scored 79% and hence it is in the recommended category [Appendix II].

This gives further support to the validity of the MEWQET tool.

Pathologyoutlines.com got the maximum score in 34 out of 42 sub-items (80.9% of the total sub-items). The website did not get the highest score in only eight sub-items and these are described as follows.

In sub-item 1.3, the maximum score is 5 but the website only scored 3 because the target audience is physicians rather than medical students. In sub-item 1.6, it scored 0, while the maximum score is 3, as the domain of this website is a .com (denoting a commercial website, as opposed to .net or .org). In sub-item 2.3, which relates to its relevance to medical students with respect to their maturity and cognitive abilities, it scored 3 while the maximum score is 5. This is because the website is geared toward postgraduate professionals, with the information presented swiftly in a compact bulleted format. This may be perceived as “hard to understand” by medical students as it does not give the elaborative explanatory text that the undergraduate medical student might need. In sub-item 4.1, it scored 1 while the maximum score is 3. This is because some chapters of the website had last been updated more than a year earlier. In sub-item 10.2, the website scored -2 as it did contain flashing, scrolling, or otherwise visually distracting graphic and text displays. In sub-items 11.2 and 11.3 (interactivity), the website scored 0 in each, while the maximum scores are 3 and 4 respectively. This is because the website does not have activities that are challenging, interesting, and appealing for the intended learner with prompt feedback whenever needed, nor does it have any provision for relevant action on the part of the learner. Lastly, in sub-item 12.2, it scored 1 while the maximum score is 4, because it did have commercial advertisements.

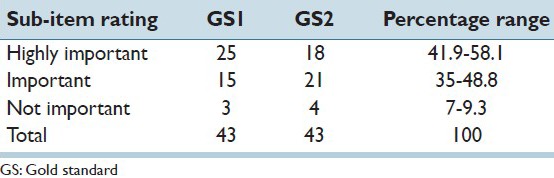

Application of the Tool

The search methodology described above yielded 414 websites [Figure 3].

Figure 3.

Website selection flowchart

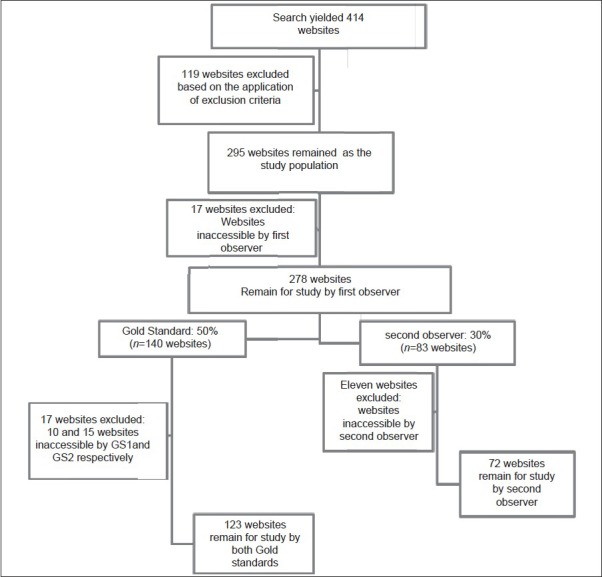

The quality of pathology websites for undergraduate education was measured using the validated MEWQET Tool. Out of a total of 278 websites, the tool identified 83 websites as recommended (29.9%), 114 websites as recommended with caution (41.0%) and 81 websites as not recommended (29.1%) [Figure 4]. In other words, the tool distinguished around two thirds of the websites as suitable and one third as not suitable. The full list of websites and their rating is outlined in Appendix III.

Figure 4.

Websites according to category

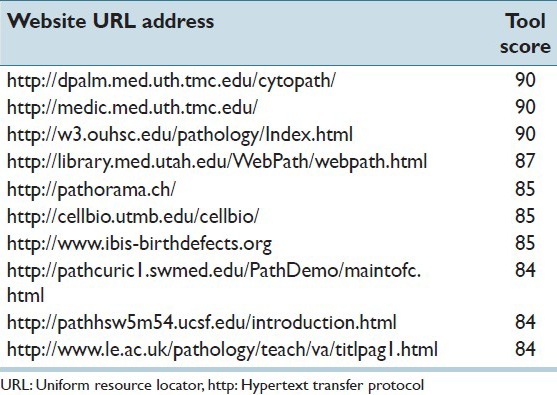

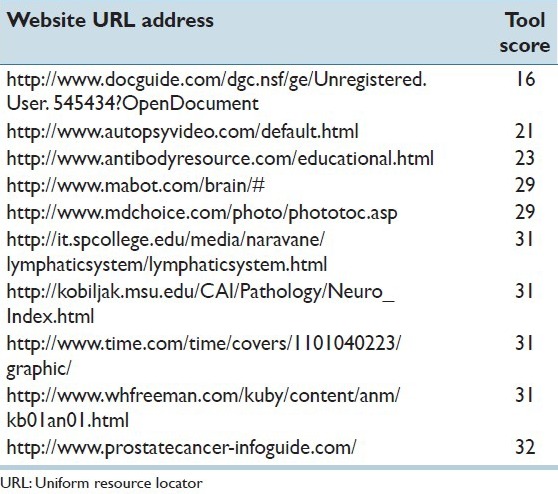

The websites were ranked according to the actual scores of the tool within each category. The top 10 recommended websites and the bottom 10 not recommended websites are displayed in Tables 5 and 6 respectively.

Table 5.

Top 10 “recommended” websites

Table 6.

Top 10 “not recommended” websites

DISCUSSION

Background

The exponential advances of online technologies have led to significant enhancement of medical education. The study of medicine depends on analysis and synthesis of a vast amount of information that includes highly visual and complex data. This is particularly true for a field like pathology, which is highly dependent on interpretation of complex visual images, opening the way for the internet to rapidly emerge as an attractive method for learning.[12,13,14]

With the growing popularity of the internet as a source of information in general and for educational purposes in particular, and with concern over the lack of proper scrutiny for quality, a need has arisen to devise instruments whereby such material is systematically evaluated for its quality and potential use by students.[15]

In contrast to the ever-growing websites and internet usage around the world, literature pertaining to evaluating the quality of web based materials has been scarce.[1,2]

Most of the website evaluation tools found in the literature pertain to evaluating health related websites with the consumer, lay person or patient in mind.[9,16,17,18,19,20] Other evaluation tools were devised by librarians concerned about the quality of information published on the web in general.[21,22,23] Tools pertaining to evaluating education related websites are also available[24,25] as well as those related to medical undergraduate education.[4,26]

Despite many attempts to devise new tools for examining the quality of health information on the internet, there does not appear to be any universally accepted reliable website rating tool that can be used. Of the attempts to devise education related evaluation tools, criteria lacked comprehensiveness and well described development procedures.[27,28,29]

Finding tools that have already been validated was challenging as a number of tools were devised and applied without proper scrutiny for their reliability or validity.[2,30,31,32] Studies have shown that finding reliable sources of information on the web can be confusing and difficult.[33,34]

Tool Development

The tool of this study was developed by pooling together “standards” or “items” from a variety of tools identified via extensive literature searches. Such tools encompassed those designed for health websites, purely educational websites, websites in general from a librarian's perspective, or medical educational websites.

Items of the Tool

The standards or items (Authorship and Credibility, Aim, Scope and Intended Audience, Comprehensiveness, Currency of Information, Content, Navigability, Speed, Access, Hyper-links, Graphics and Design, Interactivity and Disclosures) that were found to be essential or important amongst all the tools searched were included in this tool.

Agencies and institutions concerned with health consumer issues over the net, in their publications regarding evaluating online health related information, also considered aim, scope and intended audience, credibility, content, authority and reputation, relevance, coverage, accuracy, currency, accessibility, ethics, design and layout, disclosure, links, interactivity and ease of use in their guidelines.[9,16,17,18,20,35] This was further supported by Bernstam, Shelton, Walji and Meric-Bernstam (2005), who reviewed 80 instruments that are available to assess the quality of health information on the web and found the above to be common elements in such instruments.[8] Other investigators had similar findings.[1,2,5,34,36,37]

General and medical library resources considered authorship, accuracy, currency, coverage, design, referral to other resources, purpose, audience value of content and navigation as essential items in their online publication regarding evaluating internet resources.[21,22,23]

Tools assessing educational materials on the web were rated by several investigators, outlining authorship, accuracy, intended audience, clarity, aim, comprehensiveness, interactivity, navigability and scope as their evaluation checklists.[24,25,38] The importance of active learning, and hence of interactivity as one of the standards for educational websites, has also been emphasized.[10] Interactivity was also included in our tool. In addition, similar standards were considered in guidelines set for medical and health information sites[39] as well as medical educational website evaluations.[4,26]

Some studies evaluated online educational materials for practicing pathologists and those outlined accuracy, ease of navigation, relevance, updates and completeness as some of their standards.[40]

Lack of Existing Comprehensive Tool Pertaining to Pathology Education

Review of the literature did not reveal any study pertaining to pathology education that incorporated as comprehensive a review of earlier tools or as systematic an item generation, with item listing and extraction, as was carried out in this study. In this study, all earlier tools pertaining to evaluating health education, general librarian, education, practice of discipline and medical education were thoroughly reviewed; items were generated from these tools and then listed and categorized. This was followed by a process of eliminating repeated or redundant items, reducing the items to those most relevant to undergraduate medical education.

Item Weightage

Item weightage was not thoroughly discussed in the majority of studies encountered;[34] however, in the study by McInerney and Bird (2004), weightage was judged by the level of importance decided by website quality.[37]

In this study, items and sub-items were assigned weightage according to some of the literature that mentioned their item weightage or from the researcher's own experience regarding pathology education and the importance of the various items as it pertains to medical education. The Net Scoring® criteria to assess the quality of health internet information for example have grouped 49 criteria into eight groups. Each criterion has a weight: Essential criterion rated from 0 to 9, important criterion rated from 0 to 6 and minor criterion rated from 0 to 3. This was done according to the relevance of the criterion or item to the core item, which is the educational value of the resource. In this study, a similar approach was followed.[19]

This was further refined following the pilot stage of the study. This approach strengthened the methodology of the study as it followed the acceptable fundamentals of tool development technique. Such item weightage suffered from the inevitable disadvantage of relying on certain assumptions. This was overcome, however, by the systematic tool validation that followed.

Tool Validation

Very few tools have undergone rigorous validation. Of those that have, some show good validity and reliability,[1,8,9,34] while others show poor validation measures, including poor inter-rater agreement, in a wide range of tools.[2,30,31]

Inter-observer agreement was found to improve after a period of training of the second rater, or the rater who did not develop the tool. For example, McInerney and Bird (2004) found that Spearman's rho improved from 0.775 to 0.985 after such training.[37] In this study, training of the second rater was incorporated into the methodology of tool validation from the beginning.

The results of this study show high reliability, as suggested by statistically significant and quantitatively large values of kappa, the intraclass correlation coefficient, the Pearson correlation coefficient and Cronbach's alpha.

Analyzing the inter-observer agreement by category revealed that the not recommended category showed 100% agreement and the recommended category showed 94% agreement. No website rated as recommended by one observer was rated as not recommended by the other; disagreement in the recommended category was confined to the recommended with caution group. In the recommended with caution group, the discordance was spread between both the recommended and not recommended categories. In other words, there was no more than one category difference in the discordant group [Table 1].

According to Bland and Altman (1997), the minimum requirement for satisfactory reliability as measured by Cronbach's alpha is 0.70. In our study, Cronbach's alpha is 0.92, indicating the high reliability of our tool.[41]

Comparison of the tool consensus score with the gold standard consensus showed high sensitivity, moderately high specificity and high positive and negative predictive values of the tool. In other words, the tool proved to be able to pick out the most suitable websites for medical education.

The MEWQET tool showed higher sensitivity than specificity. This was expected as it was designed to pick out most of the suitable websites for medical education, though some of the websites selected may not be suitable. The high negative predictive value denotes an added advantage of the tool as it indicates that a high proportion of “not recommended” websites are correctly assigned as not recommended.

No similar comparison was found in the literature where sensitivity, specificity, positive and negative predictive values of tools was measured. This may be due to the fact that most tools reflected their results in a multiple tiered categorization systems such as (excellent, very good, good, poor) rather than our system where we managed to combine the two categories “recommended” and “recommended with caution” together and measure it against the third category “not recommended” in a binary fashion. This approach gave an added depth to the meaningfulness of the statistical results in that it focused on what matters most, which is: Will the tool be able to pick the most suitable or most recommended websites or not. In addition, no instrument used a gold standard in the manner that was carried out in this study.

Very few instruments used the gold standard approach as described in this study. Some used a “gold standard” for specific information on the web, such as information on the management of cough, or the reliability of information about miscarriage measured against set criteria established by the Royal College of Obstetricians and Gynaecologists. The gold standard in these contexts was an established guideline about a specific disorder, not a standard for a broad topic such as pathology education.[2,32]

Google PageRank and Alexa Traffic Rank are general rating tools designed to measure how popular a website is by tracking only the traffic to and from it. They were therefore not expected to correlate with the tool, which was applied to pathology educational websites; such specialized websites were not expected to rank favorably for popularity against the entire population of websites on the web. The results of this study showed no correlation between the tool and either Google or Alexa, which adds to the content validity of the tool. To support this further, the gold standard rating was compared with each of Google and Alexa in the same way as was done for the tool, and a similar negative correlation was found between the gold standard rating and both ranking systems. It is also known that general website ranking tools can be subject to manipulation, spoofing and spamdexing, which inflate the apparent popularity of websites.[42,43]

High content validity of the tool was further supported by the gold standard evaluation, in which all items were rated as either HI or important and no item was rated as NI. In addition, up to 93% of sub-items were rated as either HI or important, and the gold standard evaluators agreed with the weightage of all items. Both gold standard evaluators also stated verbally that the items in the tool can act as an “aide-mémoire”: had they not been put in a checklist format, they could easily be overlooked.

A positive correlation was found between the gold standard consensus and the actual score of the tool, while an inverse correlation was found between the gold standard consensus and both Google PageRank and Alexa Traffic Rank, thus supporting the high construct validity of the tool.

When Griffiths, Tang, Hawking and Christensen (2005) compared Google PageRank with a tool designed to find good web-based information about depression, poor correlation was found.[36] In addition, Zeng and Parmanto (2004) found no correlation between high-scoring health-related websites and popularity ranking by either Google PageRank or Alexa Traffic Rank.[44]

Application of the Tool

The MEWQET tool was then applied to a population of pathology websites. As expected, the results followed an approximately normal distribution: the largest share of websites (41.0%) was rated recommended with caution, while similar proportions were rated recommended (29.9%) and not recommended (29.1%).

In the investigator's experience, the websites rated as recommended by the tool are already known to many reputable medical schools as highly suitable to give to students for further reading or as a reference. Moreover, the website http://www.pathologyoutlines.com, which was chosen as an example of a reputable, trusted and robust website, was rated by the tool as “recommended.” This adds evidence to support the validity of the tool.

In Parikh et al.'s study of validated websites on cosmetic surgery, 89% of the websites studied failed to reach an acceptable standard, while Frasca et al., who evaluated anatomy sites on the internet in 1998 and again in 1999, found a significant increase in the quality of anatomy websites.[33,29] It is difficult to compare the present results with these studies, as each used a different tool with different validation methods.

Many studies that devise rating tools apply their standards or criteria directly without subjecting the tools to rigorous validation.[27,38] This study, however, demonstrated a thorough and systematic procedure for tool development and validation and offers a good example of developing an effective evaluation tool for medical educational websites.

It is of note that even though the tool was developed using pathology educational websites, it is applicable to any medical education website.

How to Use the MEWQET Tool

It is proposed that educators use the tool to vet websites, or sections/subsections of websites, for use by medical students to help fulfil specific learning objectives. Once a website is vetted, the educator should give its link as part of the reading material or post it on the intranet or virtual learning environment of the college. Students’ feedback about the website should be encouraged. This exercise should be done at the beginning of each learning module. This is supported by other studies in which students enjoyed the flexibility of online learning[45,46] but reported that the content was too much for the allotted time. Instructors are therefore expected to scrutinize all content material carefully before recommending it to students.[45,46]

If all medical schools promote the application of agreed quality guidelines, the overall quality of medical educational websites could improve to meet the required standard, and the Web might ultimately become a reliable and integral part of undergraduate medical education.

CONCLUSIONS

Many medical education websites of unknown quality are available on the web, as are many unvalidated website evaluation instruments.

It is crucial not to rely instantly on any material found on the internet for educational purposes, but instead to evaluate websites for medical education critically and systematically. The tool developed in this study fills a gap in this area. Further scrutiny is needed for this tool, and for similar tools that are available now or will become available, to ensure that the best websites are chosen for educational purposes.

The ultimate objective was to make website authors and owners aware that they should not publish on the net without sufficient scrutiny; otherwise, readers will simply stay away from their websites. The results of this study could therefore serve to increase the quality of materials published on the internet and, ultimately, the reliability of the internet as an educational resource.

Appendix I: Medical educational website quality evaluation tool (MEWQET)

Appendix II: Medical educational website quality evaluation tool (MEWQET)

Appendix III: MEWQET tool's scores and ratings of pathology websites

Footnotes

Available FREE in open access from: http://www.jpathinformatics.org/text.asp?2013/4/1/29/120729

REFERENCES

- 1.Kim P, Eng TR, Deering MJ, Maxfield A. Published criteria for evaluating health related web sites: Review. BMJ. 1999;318:647–9. doi: 10.1136/bmj.318.7184.647.

- 2.Breckons M, Jones R, Morris J, Richardson J. What do evaluation instruments tell us about the quality of complementary medicine information on the internet? J Med Internet Res. 2008;10:e3. doi: 10.2196/jmir.961.

- 3.Pujola JT. Ewebuation. Edinburgh working papers for applied linguistics. [Last cited on 2007 Nov 7]. Available from: http://www.eric.ed.gov .

- 4.Berry E, Parker-Jones C, Jones RG, Harkin PJ, Horsfall HO, Nicholls JA, et al. Systematic assessment of World Wide Web materials for medical education: Online, cooperative peer review. J Am Med Inform Assoc. 1998;5:382–9. doi: 10.1136/jamia.1998.0050382.

- 5.Eysenbach G, Powell J, Kuss O, Sa ER. Empirical studies assessing the quality of health information for consumers on the World Wide Web: A systematic review. JAMA. 2002;287:2691–700. doi: 10.1001/jama.287.20.2691.

- 6.Wilson P. How to find the good and avoid the bad or ugly: A short guide to tools for rating quality of health information on the internet. BMJ. 2002;324:598–602. doi: 10.1136/bmj.324.7337.598.

- 7.Eysenbach G, Diepgen TL. Towards quality management of medical information on the internet: Evaluation, labelling, and filtering of information. BMJ. 1998;317:1496–500. doi: 10.1136/bmj.317.7171.1496.

- 8.Bernstam EV, Shelton DM, Walji M, Meric-Bernstam F. Instruments to assess the quality of health information on the World Wide Web: What can our patients actually use? Int J Med Inform. 2005;74:13–9. doi: 10.1016/j.ijmedinf.2004.10.001.

- 9.Charnock D, Shepperd S, Needham G, Gann R. DISCERN: an instrument for judging the quality of written consumer health information on treatment choices. J Epidemiol Community Health. 1999;53:105–11. doi: 10.1136/jech.53.2.105.

- 10.Alur P, Fatima K, Joseph R. Medical teaching websites: Do they reflect the learning paradigm? Med Teach. 2002;24:422–4. doi: 10.1080/01421590220145815.

- 11.Hamdy H, Prasad K, Anderson MB, Scherpbier A, Williams R, Zwierstra R, et al. BEME systematic review: Predictive values of measurements obtained in medical schools and future performance in medical practice. Med Teach. 2006;28:103–16. doi: 10.1080/01421590600622723.

- 12.Marshall R, Cartwright N, Mattick K. Teaching and learning pathology: A critical review of the English literature. Med Educ. 2004;38:302–13. doi: 10.1111/j.1365-2923.2004.01775.x.

- 13.Klatt EC, Dennis SE. Web-based pathology education. Arch Pathol Lab Med. 1998;122:475–9.

- 14.Schmidt P. Digital learning programs-competition for the classical microscope? GMS Z Med Ausbild. 2013;30:Doc8. doi: 10.3205/zma000851.

- 15.Burd A, Chiu T, McNaught C. Screening Internet websites for educational potential in undergraduate medical education. Med Inform Internet Med. 2004;29:185–97. doi: 10.1080/14639230400005982.

- 16.BIOME website. [Last cited on 2007 Dec 16]. Available from: http://www.biome.ac.uk/guidelines/eval .

- 17.AHRQ website. [Last updated 2013 Sept 5; Last cited on 2007 Dec 16]. Available from: http://www.ahrq.gov .

- 18.MedCIRCLE website. [Last updated on 2013 Sep 5; Last cited on 2007 Dec 16]. Available from: http://www.medcircle.org .

- 19.Net Scoring® website. [Last updated on 2001 Jul 18; Last cited on 2007 Dec 16]. Available from: http://www.chu-rouen.fr/netscoring/netscoringeng.html .

- 20.Health on the net website. [Last cited on 2010 Feb 14]. Available from: http://www.healthonnet.org .

- 21.Jhu libraries website. [Last cited on 2007 Dec 16]. Available from: http://webapps.jhu.edu/jhuniverse/libraries .

- 22.Binghamton university libraries website. [Last cited on 2007 Dec 16]. Available from: http://library.binghamton.edu .

- 23.University of Idaho library website. [Last cited on 2007 Dec 16]. Available from: http://www.lib.uidaho.edu .

- 24.Robert MB, Dohun K, Lynne K. ERIC Digest. NY: ERIC clearinghouse on information and technology syracuse; [Last cited on 2007 Dec 16]. Evaluating online educational materials for use in instruction. Available from: http://www.eric.ed.gov .

- 25.Schrock K. The ABC's of web site evaluation. Kathyschrock website. [Last cited 2007 Nov 8]. Available from: http://kathyschrock.net/index.htm .

- 26.Cook DA, Dupras DM. A practical guide to developing effective web-based learning. J Gen Intern Med. 2004;19:698–707. doi: 10.1111/j.1525-1497.2004.30029.x.

- 27.Hsu CM, Yeh UC, Yen J. Development of design criteria and evaluation scale for web-based learning platforms. Int J Ind Ergon. 2009;39:90–5.

- 28.Yuh JL, Abbott AV, Stumpf SH, Ontai S. Pilot study of a rating instrument for medical education Web sites. Acad Med. 2000;75:290. doi: 10.1097/00001888-200003000-00023.

- 29.Frasca D, Malezieux R, Mertens P, Neidhardt JP, Voiglio EJ. Review and evaluation of anatomy sites on the Internet (updated 1999) Surg Radiol Anat. 2000;22:107–10. doi: 10.1007/s00276-000-0107-2.

- 30.Hanif F, Read JC, Goodacre JA, Chaudhry A, Gibbs P. The role of quality tools in assessing reliability of the internet for health information. Inform Health Soc Care. 2009;34:231–43. doi: 10.3109/17538150903359030.

- 31.Hsu WC, Bath PA. Development of a patient-oriented tool for evaluating the quality of breast cancer information on the internet. Stud Health Technol Inform. 2008;136:297–302.

- 32.Hardwick JC, MacKenzie FM. Information contained in miscarriage-related websites and the predictive value of website scoring systems. Eur J Obstet Gynecol Reprod Biol. 2003;106:60–3. doi: 10.1016/s0301-2115(02)00357-3.

- 33.Parikh AR, Kok K, Redfern B, Clarke A, Withey S, Butler PE. A portal to validated websites on cosmetic surgery: The design of an archetype. Ann Plast Surg. 2006;57:350–2. doi: 10.1097/01.sap.0000216244.81900.3b.

- 34.Ademiluyi G, Rees CE, Sheard CE. Evaluating the reliability and validity of three tools to assess the quality of health information on the Internet. Patient Educ Couns. 2003;50:151–5. doi: 10.1016/s0738-3991(02)00124-6.

- 35.Healthcare coalition website. [Last cited on 2007 Dec 16]. Available from: http://www.ihealthcoalition.org .

- 36.Griffiths KM, Tang TT, Hawking D, Christensen H. Automated assessment of the quality of depression websites. J Med Internet Res. 2005;7:e59. doi: 10.2196/jmir.7.5.e59.

- 37.McInerney C, Bird N. Assessing website quality in context: Retrieving information about genetically modified food on the web. Information research. [Last cited on 2007 Jan 12]. Available from: http://InformationR.net/ir/10-2/paper213.html .

- 38.Kleinpell R, Ely EW, Williams G, Liolios A, Ward N, Tisherman SA. Web-based resources for critical care education. Crit Care Med. 2011;39:541–53. doi: 10.1097/CCM.0b013e318206b5b5.

- 39.Winker MA, Flanagin A, Chi-Lum B, White J, Andrews K, Kennett RL, et al. Guidelines for medical and health information sites on the internet: Principles governing AMA web sites. American Medical Association. JAMA. 2000;283:1600–6. doi: 10.1001/jama.283.12.1600.

- 40.Bland JM, Altman DG. Cronbach's alpha. BMJ. 1997;314:572. doi: 10.1136/bmj.314.7080.572.

- 41.Google website. [Last cited on 2013 Aug 14]. Available from: http://www.google.com .

- 42.Wikipedia website. [Last updated 2013 Oct 6; Last cited 2010 Jan 17]. Available from: http://www.wikipedia.com .

- 43.Zeng X, Parmanto B. Web content accessibility of consumer health information web sites for people with disabilities: A cross sectional evaluation. J Med Internet Res. 2004;6:e19. doi: 10.2196/jmir.6.2.e19.

- 44.Premkumar K, Ross AG, Lowe J, Troy C, Bolster C, Reeder B. Technology-enhanced learning of community health in undergraduate medical education. Can J Public Health. 2010;101:165–70. doi: 10.1007/BF03404365.

- 45.Marker DR, Bansal AK, Juluru K, Magid D. Developing a radiology-based teaching approach for gross anatomy in the digital era. Acad Radiol. 2010;17:1057–65. doi: 10.1016/j.acra.2010.02.016.

- 46.Marintcheva B. Motivating students to learn biology vocabulary with wikipedia. J Microbiol Biol Educ. 2012;13:65–6. doi: 10.1128/jmbe.v13i1.357.