Abstract

Dirichlet process (DP) priors are a popular choice for semiparametric Bayesian random effect models. The fact that the DP prior implies a non-zero mean for the random effect distribution creates an identifiability problem that complicates the interpretation of, and inference for, the fixed effects that are paired with the random effects. Similarly, the interpretation of, and inference for, the variance components of the random effects also becomes a challenge. We propose an adjustment of conventional inference using a post-processing technique based on an analytic evaluation of the moments of the random moments of the DP. The adjustment for the moments of the DP can be conveniently incorporated into Markov chain Monte Carlo simulations at essentially no additional computational cost. We conduct simulation studies to evaluate the performance of the proposed inference procedure in both a linear mixed model and a logistic linear mixed effect model. We illustrate the method by applying it to a prostate specific antigen dataset. We provide an R function that allows one to implement the proposed adjustment in a post-processing step of posterior simulation output, without any change to the posterior simulation itself.

Key words and phrases: Bayesian nonparametric model, Dirichlet process, fixed effects, generalized linear mixed model, random moments, post-processing, random probability measure

1. Introduction

We propose an adjustment for inference in semiparametric Bayesian mixed effect models with a Dirichlet process (DP) prior on a random effect distribution G. The need for adjustment arises from two challenges. The first is a difficulty in the interpretation of fixed effects that are paired with random effects, due to an identifiability issue. We formally define the notion of paired fixed and random effects later. The second challenge is a similar issue related to the variance components of the random effects. We show that inference based on a conventional interpretation of the fixed effects and variance components is often poor. Using a parametrization with hierarchical centering (Gelfand, Sahu, and Carlin (1995)), we interpret the first two moments of G, denoted by μG and CovG, as the fixed effects paired with random effects and the variance components of the random effects, respectively. We derive easy-to-evaluate formulas for the posterior moments of μG and CovG, and propose to use them in a straightforward post-processing step for Markov chain Monte Carlo (MCMC) output. In an application to inference for PSA profiles, we show that the proposed adjustment can significantly change parameter estimates in a typical data analysis: posterior means for some fixed effects change between 11 and 32%; the corresponding posterior standard deviations (SDs) and credible interval (CI) lengths change by more than 200%; the changes in the posterior means, SDs, and lengths of CIs for the variance components are similarly large. We provide an R function for users to implement the proposed procedure.

Linear and generalized linear mixed models (LMMs & GLMMs) are an important and popular tool for analyzing correlated data. The random effects in such models are typically assumed normal, mainly for reasons of technical convenience. However, many applications require a more heterogeneous random effect distribution. For example, potentially relevant subject-specific covariates may not have been measured or are difficult to measure. Missing covariates can lead to a multimodal random effect distribution. In other applications, the distribution of the random effects may be skewed.

Estimation of the random effect distribution is important for predictive inference. Consider, for example, the joint modeling of a primary endpoint and a longitudinal covariate, where valid estimates of the random effects are crucial. Inappropriately assuming normality can lead to excessive shrinkage towards zero and result in poor prediction.

These concerns lead many investigators to use nonparametric alternatives to normal random effect distributions. The DP is a popular choice as a nonparametric prior for the random effect distribution in mixed effect models within the Bayesian framework. For example, Kleinman and Ibrahim (1998b, a) modeled the random effect distribution as

$$b_i \mid G \overset{iid}{\sim} G, \qquad G \sim DP(M, G_0), \tag{1.1}$$

where DP(M, G0) denotes a DP with a total mass parameter M and a base probability measure G0 (Ferguson (1973)). We refer to a fixed effect as paired with a random effect if the columns in the design matrices of fixed effects and random effects match; see the discussion after equation (2.1) for a formal definition. In short, if the sampling model for the j-th repeated observation on the i-th subject involves a linear predictor with fixed effects β, subject-specific random effects bi and known design vectors xij and zij, then we refer to a subvector βR of β as paired with bi if the corresponding subvector of xij matches zij, e.g., both contain an intercept. Posterior simulation in an LMM or GLMM based on model (1.1) for the random effects can be carried out using Gibbs sampling. A similar approach has been used by Bush and MacEachern (1996) in randomized block designs, and by many others. We argue that there is a difficulty in interpreting posterior inference for fixed effects that are paired with random effects in the above models, due to an identifiability issue. With a nonparametric random effect distribution, a difficulty also arises in the interpretation of the variance components of the random effects.

In related work, Newton, Czado, and Chappell (1996) proposed a centrally standardized Dirichlet process prior for the link function in a binary regression, under which each realization of the link function has a median of zero. The approach is restricted to univariate distributions.

We propose a modified DP model and a post-processing procedure to address these challenges. The model uses a DP prior for the sum of the random effects and their corresponding fixed effects, with a base measure centered at an unknown mean. The post-processing technique is based on an analytic evaluation of the moments of the random moments of a random probability measure with a DP prior. Several recent references have discussed the distribution of these random moments. For example, many authors have discussed the distribution of the mean of a DP random measure, including Hjort and Ongaro (2005) and Lijoi and Regazzini (2004). Epifani, Guglielmi, and Melilli (2006) studied the distribution of the random variance of a DP random measure. Gelfand and Mukhopadhyay (1995) and Gelfand and Kottas (2002) used Monte Carlo integration to evaluate marginal posterior expectations of linear and nonlinear functionals of a nonparametric distribution whose prior is a DP mixture. They approximate the conditional expectation of the functional by a sample of the functional based on the predictive distribution of the parameters of the kernel. In this paper, we instead provide closed-form formulas for the mean and covariance matrix of the (random) moments of a random measure with a DP prior. These expressions can be incorporated into MCMC simulations and used to adjust inference for both the fixed effects paired with the nonparametric random effects and the second moments of the random effect distribution. We conduct simulation studies to evaluate the performance of the proposed moment-adjustment procedure and illustrate the method by analyzing a prostate-specific antigen (PSA) dataset.

The remainder of this article is organized as follows. In Section 2 we discuss a difficulty with the naïve inference in the DP random effect model, propose a modification to the conventional DP prior, and briefly discuss the posterior propriety of the model. In Section 3 we propose adjusted inference for fixed effects paired with random effects, and for the variance components of the random effects. Specifically, in Section 3.1 we derive the posterior mean and variance-covariance matrix for fixed effects that are paired with random effects, using results on the moments of the random first and second moments of a DP random measure. In Section 3.2 we derive new closed-form results concerning the expectation of the random third and fourth moments of a DP. We use these results to report posterior summaries for the (random) covariance matrix of the random effects. In Section 4 we report results from simulation studies to show the performance of the proposed inference procedure in both an LMM and a logistic random effect model. In Section 5 we illustrate the method with inference for the PSA data. We provide concluding remarks in Section 6. Proofs are given in the Appendix.

2. A Hierarchically Centered Dirichlet Process Prior

For convenience, we use a nonparametric GLMM to illustrate our proposed method. However, unless indicated otherwise, all results remain applicable for any nonparametric hierarchical model that contains the DP model (1.1) or (2.3) as a submodel. For example, model (5.1) in our data example contains a nonlinear component.

Suppose that, conditional on the cluster-specific random effects bi (q × 1), the responses yij, i = 1, …, m, j = 1, …, ni, arise independently from an exponential family with mean E(yij | bi), a variance that is a function of this mean, and a known dispersion parameter ϕ. Consider the GLMM

$$g\{E(y_{ij} \mid b_i)\} = x_{ij}^T \beta + z_{ij}^T b_i, \tag{2.1}$$

where xij and zij are known design vectors, g(·) is a monotone differentiable link function with inverse h(·), and the bi are independent and identically distributed with E(bi) = 0. Let $y_i = (y_{i1}, \ldots, y_{in_i})^T$ and $y = (y_1^T, \ldots, y_m^T)^T$. Model (2.1) encompasses the general LMM as a special case. Without loss of generality we assume that the fixed effects are partitioned into (βF, βR) and, similarly, $x_{ij} = (x_{ij}^F, x_{ij}^R)$, with $x_{ij}^R = z_{ij}$. We refer to βR as fixed effects paired with the random effects bi. For example, in equation (4.1), (β0, β1) are fixed effects paired with the random intercept and slope. If we add an additional term β2wij on the right-hand side (RHS) of (4.1), then β2 is a fixed effect that is not paired with either random effect, i.e., βF = β2.

Consider the GLMM (2.1) with the DP prior model (1.1) for the random effects. The model includes the awkward feature that the unknown random effect distribution G has a non-zero mean almost surely. This makes inference on the fixed effects βR difficult to interpret. Let μG = ∫ bidG(bi) denote the random mean of G. We argue that, instead of reporting inference on βR, it is more appropriate to report inference on βpair ≡ βR + μG.
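To see concretely why βR and μG are confounded, one can draw G from a DP prior of the form (1.1) in the scalar case, G ~ DP(M, N(0, D)), and inspect its random mean. The sketch below is only an illustration: it uses a truncated stick-breaking (Sethuraman) representation of the DP instead of the Ferguson construction used in the Appendix, and the function name `dp_random_mean` and the truncation level `K` are hypothetical choices. Each individual draw of μG is non-zero, while across draws E(μG) = 0 and Var(μG) = D/(M + 1).

```python
import numpy as np

def dp_random_mean(M, D, K=500, rng=None):
    """Draw G ~ DP(M, N(0, D)) (scalar case) by truncated stick-breaking
    and return its random mean mu_G = sum_k w_k * theta_k."""
    rng = np.random.default_rng(rng)
    v = rng.beta(1.0, M, size=K)                      # stick-breaking fractions
    w = v * np.concatenate(([1.0], np.cumprod(1.0 - v)[:-1]))
    w[-1] = 1.0 - w[:-1].sum()                        # assign leftover mass to the last atom
    theta = rng.normal(0.0, np.sqrt(D), size=K)       # atoms drawn i.i.d. from G0 = N(0, D)
    return float(np.dot(w, theta))

rng = np.random.default_rng(1)
M, D = 1.0, 1.0
mu_draws = np.array([dp_random_mean(M, D, rng=rng) for _ in range(4000)])
print(mu_draws[:3])                       # individual random means are not zero
print(mu_draws.mean(), mu_draws.var())    # approx. 0 and approx. D / (M + 1) = 0.5
```

A non-zero μG is absorbed into the intercept or slope βR, which is why the proposal below reports βR + μG instead of βR.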

Following the above arguments, we propose to model the distribution of βR + bi as

$$\beta_R + b_i \mid G \overset{iid}{\sim} G, \qquad G \sim DP\{M, N(\beta_b, D)\}, \tag{2.2}$$

where βb is an unknown vector of mean parameters for the base probability measure. Because inference on βR and μG cannot be interpreted separately, we propose to remove the paired fixed effects βR from (2.1). As a result, the random effect vector in the revised model, again denoted by bi, corresponds to βR + bi in the original model. The prior model (2.2) now becomes

$$b_i \mid G \overset{iid}{\sim} G, \qquad G \sim DP\{M, N(\beta_b, D)\}. \tag{2.3}$$

The specification of (2.3) follows the notion of hierarchical centering (Gelfand, Sahu, and Carlin (1995)). We further use β ≡ βF and $x_{ij} \equiv x_{ij}^F$ to denote the remaining fixed effect vector and the corresponding design vector. Instead of inference on βR in the original model, we report inference on βpair = μG in the revised model. For later reference we state the revised centered GLMM as

$$g\{E(y_{ij} \mid b_i)\} = x_{ij}^T \beta + z_{ij}^T b_i. \tag{2.4}$$

This is the same as model (2.1), except that now xij only contains $x_{ij}^F$, and bi follows (2.3).

We complete the GLMM with commonly used (hyper-)priors on the remaining parameters: we assume a diffuse normal prior for each component of β and βb, a proper prior to be described below for D, and a diffuse inverse Gamma (IG) prior for the residual variance if the GLMM (2.4) reduces to an LMM. All these priors are assumed independent. For a proper prior for D, we consider both an inverse Wishart (IW) prior (or an IG prior if D reduces to a scalar) and a uniform shrinkage prior (USP) (Natarajan and Kass (2000)). For the latter, we define the USP as if the random effects were normally distributed. See Natarajan and Kass (2000) for details.

One can show that under a flat prior for (β, βb) and a proper prior for both D and M (including the case of M being a constant), the posterior is proper. In the case of an LMM with an improper prior for σ² proportional to 1/σ², the posterior is also proper. As a side note, one can also show that an improper prior for M leads to an improper posterior. These results justify the common use of a diffuse normal prior for the fixed effects and a diffuse IG prior for the residual variance, when applicable, provided that the prior for the covariance matrix in the DP base measure is proper. Posterior simulation of the random effects follows the usual posterior MCMC scheme for DP mixture models. The simulation can include the total mass parameter M if the model is augmented with a gamma prior for M. See, for example, Neal (2000) for a review. Posterior simulation of the remaining model parameters can follow Kleinman and Ibrahim (1998a, b).

3. Adjusted Inference for Fixed Effects and Variance Components of the Random Effects

3.1. Adjustment for fixed effects

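Let b = (b1, …, bm), let bm+1 be the random effect for a future subject, and let $G^\star = \big(M\,G_0 + \sum_{i=1}^{m} \delta_{b_i}\big)/(M+m)$ with $G_0 = N(\beta_b, D)$, where δbi denotes a point mass at bi. We further let $\mu_{G^\star} = E(b_{m+1} \mid b, \beta_b, D, M)$ and $\mathrm{Cov}_{G^\star} = \mathrm{Cov}(b_{m+1} \mid b, \beta_b, D, M)$ denote the mean and covariance matrix of G⋆.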

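Proposition 1. (i) $E(\mu_G \mid y) = E(\mu_{G^\star} \mid y)$; (ii) $\mathrm{Cov}(\mu_G \mid y) = E\{\mathrm{Cov}_{G^\star}/(M+m+1) \mid y\} + \mathrm{Cov}(\mu_{G^\star} \mid y)$.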

Proof. These are straightforward results of Theorems 3 and 4 of Ferguson (1973).

Proposition 1 suggests that the posterior mean and variance-covariance matrix of μG, equivalently βpair, can be computed based on the posterior samples of (b, βb, D, M). A CI for the i-th component of μG, denoted as μG,i, can then be constructed. Specifically, the construction can be based on a normal approximation of the posterior distribution of μG,i using the estimated posterior mean E(μG,i | y) and the estimated posterior variance, the (i, i)-th element of Cov(μG | y).
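In practice, Proposition 1 is applied draw by draw: for each saved MCMC iteration one computes μG⋆ and CovG⋆ from (b, βb, D, M) and then averages over iterations. The Python sketch below illustrates this under the normal base measure N(βb, D) of (2.3), using the fact that the second raw moment of G⋆ is {M(D + βbβbᵀ) + Σi bibiᵀ}/(M + m). It is not the authors' R function DPPP.R; the function names and the input format (a list of (b, βb, D, M) tuples, with b an m × q array) are hypothetical.

```python
import numpy as np

def gstar_moments(b, beta_b, D, M):
    """Mean and covariance of G* = {M N(beta_b, D) + sum_i delta_{b_i}} / (M + m)."""
    b = np.asarray(b, dtype=float)                    # (m, q) random effects
    beta_b = np.asarray(beta_b, dtype=float)          # (q,) base-measure mean
    D = np.asarray(D, dtype=float)                    # (q, q) base-measure covariance
    m = b.shape[0]
    mu = (M * beta_b + b.sum(axis=0)) / (M + m)
    # raw second moment: the base measure contributes D + beta_b beta_b^T
    second = (M * (D + np.outer(beta_b, beta_b)) + b.T @ b) / (M + m)
    return mu, second - np.outer(mu, mu)

def adjusted_mu_g(draws, z=1.96):
    """Posterior mean, covariance and normal-approximation CIs for mu_G
    (Proposition 1) from posterior draws of (b, beta_b, D, M)."""
    mus, within = [], []
    for b, beta_b, D, M in draws:
        mu, cov = gstar_moments(b, beta_b, D, M)
        mus.append(mu)
        within.append(cov / (M + len(b) + 1.0))       # Cov(mu_G | b, beta_b, D, M)
    mus = np.array(mus)
    mean = mus.mean(axis=0)                           # part (i)
    between = np.atleast_2d(np.cov(mus, rowvar=False, bias=True))
    cov = np.mean(within, axis=0) + between           # part (ii)
    sd = np.sqrt(np.diag(cov))
    return mean, cov, np.column_stack([mean - z * sd, mean + z * sd])

# Toy check with two identical draws (m = 2, q = 1): mu_G* = 1 and Cov_G* = 1.
draw = (np.array([[0.0], [2.0]]), np.array([1.0]), np.array([[1.0]]), 2.0)
mean, cov, ci = adjusted_mu_g([draw, draw])
print(mean, cov)   # [1.] [[0.2]]
```

Because the draws in the toy check are identical, the between-draw term vanishes and the posterior variance reduces to CovG⋆/(M + m + 1) = 1/5.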

Corollary 1. Suppose θ is a function of (β, b, βb, D) and has the same dimension as bi. Then

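$E(\theta + \mu_G \mid y) = E(\theta + \mu_{G^\star} \mid y)$;

$\mathrm{Cov}(\theta + \mu_G \mid y) = \mathrm{Cov}(\theta + \mu_{G^\star} \mid y) + E\{\mathrm{Cov}_{G^\star}/(M+m+1) \mid y\}$.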

Proof. E(θ + μG | y) and Cov(θ + μG | y) can be computed by first conditioning on (β, b, βb, D, M, y) and then marginalizing over (β, b, βb, D, M).

Corollary 1 is used to make inference for μg1 + dg and μg2 + dη in the analysis of the PSA data in Section 5.
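Corollary 1 only adds the per-draw value of θ to μG⋆ before the between-draw covariance is taken; the within-draw term E{CovG⋆/(M + m + 1) | y} is unchanged. A minimal sketch, assuming the per-draw μG⋆ and CovG⋆ have already been computed (for instance as in Proposition 1) and that θ is, for example, (dg, dη) from Section 5; the function name and array layout are hypothetical.

```python
import numpy as np

def corollary1(theta, mu_star, cov_star, M, m):
    """Posterior mean and covariance of theta + mu_G (Corollary 1).
    theta, mu_star: (S, q) per-draw values; cov_star: (S, q, q);
    M: scalar or (S,) total-mass draws; m: number of subjects."""
    shift = np.asarray(theta, float) + np.asarray(mu_star, float)   # theta + mu_G* per draw
    S = shift.shape[0]
    scale = 1.0 / (np.broadcast_to(np.asarray(M, float), (S,)) + m + 1.0)
    within = np.mean(np.asarray(cov_star, float) * scale[:, None, None], axis=0)
    between = np.atleast_2d(np.cov(shift, rowvar=False, bias=True))
    return shift.mean(axis=0), within + between

mean, cov = corollary1(theta=[[1.0], [3.0]], mu_star=[[0.0], [0.0]],
                       cov_star=[[[2.0]], [[2.0]]], M=1.0, m=2)
print(mean, cov)   # [2.] [[1.5]]
```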

3.2. Adjustment for variance components

In addition to the inference for the fixed effects βpair, the centered DP GLMM (2.4) and (2.3) also allows us to make inference on the random variance-covariance matrix CovG of G. In particular, we have the following proposition.

Proposition 2. (i) $E(\mathrm{Cov}_G \mid y) = E\{(M+m)\,\mathrm{Cov}_{G^\star}/(M+m+1) \mid y\}$.

Proof. This is another straightforward result of Theorems 3 and 4 of Ferguson (1973).
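Proposition 2(i) amounts to averaging a rescaled CovG⋆ over the saved draws: given b, the posterior of G is a DP with total mass M + m and base measure G⋆, and the expected covariance of a DP random measure is (M + m)/(M + m + 1) times the covariance of its base measure. A minimal sketch, with a hypothetical function name and the per-draw CovG⋆ assumed precomputed:

```python
import numpy as np

def posterior_mean_cov_g(cov_star, M, m):
    """E(Cov_G | y) per Proposition 2(i): average (M + m)/(M + m + 1) * Cov_G*
    over posterior draws. cov_star: (S, q, q) array; M: scalar or (S,) array."""
    cov_star = np.asarray(cov_star, dtype=float)
    M = np.broadcast_to(np.asarray(M, dtype=float), (cov_star.shape[0],))
    factor = (M + m) / (M + m + 1.0)          # expected shrinkage of a DP covariance
    return np.mean(cov_star * factor[:, None, None], axis=0)

print(posterior_mean_cov_g([[[4.0]], [[4.0]]], M=1.0, m=2))   # [[3.]]
```

With M = 5 and m = 286 as in Section 5, the factor is 291/292 ≈ 0.997.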

In order to derive the posterior second moments for CovG, we need two lemmas.

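Lemma 1. Let P ~ DP(M, α), where M > 0. Suppose Z1, Z2 and Z3 are random variables. If $\int |Z_1^{i_1} Z_2^{i_2} Z_3^{i_3}|\, d\alpha < \infty$ for all i1, i2, i3 ∈ {0, 1}, then

$$E\Big(\prod_{i=1}^{3} \int Z_i\, dP\Big) = \mu_1\mu_2\mu_3 + \frac{\sigma_{12}\mu_3 + \sigma_{13}\mu_2 + \sigma_{23}\mu_1}{M+1} + \frac{2\sigma_{123}}{(M+1)(M+2)}, \tag{3.1}$$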

where μi = ∫ Zidα, σij = ∫ (Zi − μi)(Zj − μj)dα, i, j = 1, 2, 3, i ≠ j, and σ123 = ∫ (Z1 − μ1)(Z2 − μ2)(Z3 − μ3)dα.

See the proof of Lemma 1 in Appendix A.1.
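Lemma 1 can be checked numerically in a case where the DP is easy to simulate exactly: if α has finite support {x1, …, xK} with probabilities w1, …, wK, then (P{x1}, …, P{xK}) ~ Dirichlet(Mw1, …, MwK). The sketch below compares a Monte Carlo estimate of E(∫Z1dP ∫Z2dP ∫Z3dP) with the closed form assumed here for (3.1), namely μ1μ2μ3 + (σ12μ3 + σ13μ2 + σ23μ1)/(M + 1) + 2σ123/{(M + 1)(M + 2)}. The support points, weights, choice of Z1, Z2, Z3 and the function name are arbitrary illustrations.

```python
import numpy as np

def lemma1_closed_form(Z, w, M):
    """Closed form assumed for (3.1): E(prod_i int Z_i dP), i = 1, 2, 3, with
    P ~ DP(M, alpha), alpha = sum_k w[k] delta_{x_k}, and Z[i, k] = Z_i(x_k)."""
    mu = Z @ w                                   # mu_i = int Z_i d(alpha)
    C = Z - mu[:, None]                          # centred values Z_i - mu_i

    def s(*idx):                                 # sigma_ij or sigma_123 under alpha
        return np.sum(w * np.prod(C[list(idx)], axis=0))

    pairs = s(0, 1) * mu[2] + s(0, 2) * mu[1] + s(1, 2) * mu[0]
    return mu.prod() + pairs / (M + 1) + 2 * s(0, 1, 2) / ((M + 1) * (M + 2))

rng = np.random.default_rng(0)
x = np.array([-1.0, 0.5, 2.0, 3.0])              # support points of alpha (illustrative)
w = np.array([0.1, 0.4, 0.3, 0.2])               # their alpha-probabilities
Z = np.vstack([x, x**2, np.exp(-x)])             # Z1, Z2, Z3 evaluated at the support
M = 2.0

# For finite-support alpha, the DP weights on the support are Dirichlet(M * w).
p = rng.dirichlet(M * w, size=400_000)
mc = np.mean(np.prod(p @ Z.T, axis=1))           # Monte Carlo E(prod_i int Z_i dP)
exact = lemma1_closed_form(Z, w, M)
print(mc, exact)                                 # should agree up to Monte Carlo error
```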

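Lemma 2. Let P, α be as in Lemma 1. Let Z1, Z2, Z3 and Z4 be random variables. If $\int |Z_1^{i_1} Z_2^{i_2} Z_3^{i_3} Z_4^{i_4}|\, d\alpha < \infty$ for all i1, i2, i3, i4 ∈ {0, 1}, then

$$E\Big(\prod_{i=1}^{4} \int Z_i\, dP\Big) = \mu_1\mu_2\mu_3\mu_4 + \frac{R_1}{M+1} + \frac{2R_2}{(M+1)(M+2)} + \frac{6\sigma_{1234} + M R_3}{(M+1)(M+2)(M+3)}, \tag{3.2}$$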

where R1 = σ12μ3μ4 + σ13μ2μ4 + σ14μ2μ3 + σ23μ1μ4 + σ24μ1μ3 + σ34μ1μ2, R2 = σ123μ4 + σ124μ3 + σ134μ2 + σ234μ1, R3 = σ12σ34 + σ13σ24 + σ14σ23, μi, σij and σijk are defined analogously to Lemma 1 (now for indices in {1, 2, 3, 4}), and σ1234 = ∫ (Z1 − μ1)(Z2 − μ2)(Z3 − μ3)(Z4 − μ4)dα.

See the proof of Lemma 2 in Appendix A.2.
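The same finite-support check applies to Lemma 2. The closed form coded below is the one assumed here for (3.2), namely μ1μ2μ3μ4 + R1/(M + 1) + 2R2/{(M + 1)(M + 2)} + (6σ1234 + M R3)/{(M + 1)(M + 2)(M + 3)}, with R1, R2 and R3 as defined above; the test functions Z1, …, Z4 and the function name are arbitrary illustrations.

```python
import numpy as np
from itertools import combinations

def lemma2_closed_form(Z, w, M):
    """Closed form assumed for (3.2): E(prod_i int Z_i dP), i = 1, ..., 4,
    for P ~ DP(M, alpha) with alpha = sum_k w[k] delta_{x_k}, Z[i, k] = Z_i(x_k)."""
    mu = Z @ w                                   # mu_i under alpha
    C = Z - mu[:, None]                          # centred values

    def s(*idx):                                 # sigma_ij, sigma_ijk, sigma_1234
        return np.sum(w * np.prod(C[list(idx)], axis=0))

    R1 = sum(s(i, j) * np.prod(np.delete(mu, [i, j]))
             for i, j in combinations(range(4), 2))
    R2 = sum(s(*t) * np.prod(np.delete(mu, list(t)))
             for t in combinations(range(4), 3))
    R3 = s(0, 1) * s(2, 3) + s(0, 2) * s(1, 3) + s(0, 3) * s(1, 2)
    a = (M + 1) * (M + 2)
    return (mu.prod() + R1 / (M + 1) + 2 * R2 / a
            + (6 * s(0, 1, 2, 3) + M * R3) / (a * (M + 3)))

rng = np.random.default_rng(0)
x = np.array([-1.0, 0.5, 2.0, 3.0])              # support points of alpha (illustrative)
w = np.array([0.1, 0.4, 0.3, 0.2])
Z = np.vstack([x, x**2, np.exp(-x), np.sin(x)])  # Z1, ..., Z4 at the support
M = 2.0

p = rng.dirichlet(M * w, size=400_000)           # exact DP weights on the support
mc = np.mean(np.prod(p @ Z.T, axis=1))
exact = lemma2_closed_form(Z, w, M)
print(mc, exact)                                 # should agree up to Monte Carlo error
```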

Let CovG,ij and CovG⋆,ij be the (i, j)-th component of CovG and CovG⋆ for i ≠ j, respectively. Let VarG⋆, i be the (i, i)-th component of CovG⋆. For notation in the next result, see Appendix A.3.

Proposition 2. (ii) Recall that [bm+1 | b, βb, D, M] = G⋆. If is the i-th component of bm+1, then

| (3.3) |

where

In particular,

| (3.4) |

where

See the proof of Proposition 2 (ii) in Appendix A.4.

Remark. Proposition 2 allows us to compute the posterior mean and variance-covariance matrix of CovG (it is easiest to write CovG as a stacked column vector of its lower-triangular elements). Because the posterior distribution of a variance is typically skewed, we construct a CI for VarG,i by matching its posterior mean and variance to those of a log-normal distribution, chosen for its positive support. As for μG,i, we use a normal approximation to construct a CI for CovG,ij with i ≠ j.
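The log-normal CI in the Remark needs only the posterior mean m and variance v of VarG,i: matching E(X) = exp(μ + s²/2) = m and Var(X) = {exp(s²) − 1}exp(2μ + s²) = v gives s² = log(1 + v/m²) and μ = log m − s²/2. A minimal sketch; the function names are hypothetical and z = 1.96 assumes a 95% interval.

```python
import math

def lognormal_match(mean, var):
    """Parameters (mu, s2) of the log-normal with the given mean and variance."""
    s2 = math.log1p(var / mean**2)
    return math.log(mean) - s2 / 2.0, s2

def lognormal_ci(mean, sd, z=1.96):
    """Moment-matched log-normal interval for a positive variance component."""
    mu, s2 = lognormal_match(mean, sd**2)
    s = math.sqrt(s2)
    return math.exp(mu - z * s), math.exp(mu + z * s)

lo, hi = lognormal_ci(1.17, 0.23)
print(round(lo, 2), round(hi, 2))   # 0.78 1.68
```

Plugging in the rounded center-adjusted summaries for σ11 in Table A.3 (posterior mean 1.17, SD .23) gives about (0.78, 1.68), in line with the reported interval.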

Propositions 1 and 2 hold under model (2.3) for the random effects bi. Therefore, as long as the posterior samples of (b, βb, D, M) can be obtained (e.g., through MCMC simulations), one can post-process the samples and report adjusted inference for μG and CovG, i.e., the “fixed effects” paired with bi, and the variance components of bi.

4. Simulation Studies

4.1. A linear mixed model

We conducted a simulation study to examine the performance of the proposed center-adjusted inference in an LMM with nonparametric random intercept and slope. We generated 200 datasets from the LMM

| (4.1) |

i.e., with β = (β0, β1)′. We used β0 = 1, β1 = 1, xij = j + 0.025i − 5, , and , where with , and with , and . Under this bivariate bimodal normal mixture of bi, we have and , with σ11 = σ22 = 2.37 and σ12 = −2.33.

We used the semiparametric LMM proposed in Section 2 for the analysis. In particular, we used the centered DP prior model (2.3) for bi. We assumed independent N(0, 10⁴) priors for βb0 and βb1, and an IG(10⁻², 10⁻²) prior for σ². Let I2 denote the 2 × 2 identity matrix. Recall that D denotes the variance-covariance matrix of the base measure G0. We assumed an IW(2, Ω) prior for D, parametrized such that E(D⁻¹) = 2Ω, with Ω = 10⁻²I2. The hyperparameters of the IW prior were chosen such that posterior inference was dominated by the data (cf. Bernardo and Smith (1994)). Posterior propriety follows from the result stated in Section 2. Posterior simulation followed Kleinman and Ibrahim (1998b) with an additional step for sampling βb. Inference for the fixed effects β ≡ (β0, β1)′ and the random effect covariance matrix Σ followed the moment-adjustment procedure proposed in Sections 3.1 and 3.2.

Table A.1 reports relative bias, MSE, CI length (CIL), and coverage probability (CP) for the estimates of the fixed effect intercept and slope and of the variance components, using both the traditional DP prior and the proposed centered DP prior, each with the IW prior and the USP for D. For the fixed effects and the random effect variance components, conventional posterior inference led to larger biases and MSEs; its coverage probabilities were either worse, with comparable or even longer CIs (e.g., β1, and σ11 under the USP), or only slightly better at the cost of doubled or even tripled CI lengths (e.g., β0). In contrast, the proposed center-adjusted inference procedure led to estimates of the fixed effects and variance components with small biases, and the 95% coverage probabilities for the fixed effects were close to the nominal value. The coverage probabilities for the variance components of the random effects under both procedures were high (.96–1.00) when the IW prior was used.

Table A.1.

Simulation results for center-adjusted vs conventional (i.e., non-centered and unadjusted) inference under a DP prior with M ~ G(2.5, .5) in model (4.1), based on 200 replicates. An inverse Wishart prior (IWP) or a USP was used for D in the DP base measure.

| Parameter | π(D) | Center-adjusted Bias | Center-adjusted MSE (SE) | Center-adjusted CIL | Center-adjusted CP | Conventional Bias | Conventional MSE (SE) | Conventional CIL | Conventional CP |
|---|---|---|---|---|---|---|---|---|---|
| β0 | IWP | .04 | .04 (.004) | .85 | .93 | .16 | .08 (.01) | 2.88 | .99 |
| | USP | .03 | .04 (.004) | .81 | .93 | .15 | .09 (.01) | 1.89 | .97 |
| β1 | IWP | −.04 | .04 (.004) | .84 | .93 | −.15 | .08 (.01) | 1.88 | .80 |
| | USP | −.03 | .04 (.004) | .80 | .95 | −.15 | .09 (.01) | 1.60 | .85 |
| σ² | IWP | .04 | .01 (.001) | .29 | .94 | .04 | .01 (.001) | .29 | .94 |
| | USP | .04 | .01 (.001) | .29 | .92 | .03 | .01 (.001) | .29 | .94 |
| σ11 | IWP | .01 | .10 (.01) | 1.62 | .99 | .15 | .33 (.03) | 2.44 | .99 |
| | USP | −.07 | .11 (.01) | 1.29 | .93 | −.20 | .31 (.02) | 1.46 | .45 |
| σ22 | IWP | .01 | .08 (.01) | 1.53 | 1.00 | .16 | .30 (.03) | 4.31 | .96 |
| | USP | −.06 | .09 (.01) | 1.19 | .96 | −.20 | .31 (.02) | 2.49 | .98 |
| σ12 | IWP | −.01 | .09 (.01) | 1.54 | .99 | −.15 | .30 (.03) | 4.16 | 1.00 |
| | USP | .06 | .09 (.01) | 1.20 | .94 | .20 | .31 (.02) | 2.44 | .99 |

In light of the documented difficulties with the use of an IG or IW prior for a random effect variance or covariance matrix (Natarajan and McCulloch (1998); Natarajan and Kass (2000); among others), we propose to extend the USP (Natarajan and Kass (2000)) to our semiparametric LMM and GLMM for the covariance matrix D in the DP base measure. Natarajan and Kass (2000) showed posterior propriety under mild conditions in their GLMMs with normal random effects; similar propriety results hold in our semiparametric GLMMs, as implied by the result stated in Section 2. Posterior MCMC simulation can include a Metropolis step for sampling D with an IW density as the proposal. The corresponding simulation results are also reported in Table A.1. The average CI lengths for the variance components were now considerably shorter than their IW counterparts, with coverage probabilities of 93–96%, close to the nominal value. Similar results were obtained when varying the prior for M or fixing M at different constants.

4.2. A logistic random effect model

We used the following logistic linear mixed effect model as our simulation truth for the sampling model:

| (4.2) |

where the xij were the same as in Section 4.1. We again investigated the performance of the proposed adjustments using both an IW prior and a USP for the covariance matrix D in the DP base measure. The assumptions on the random effect distribution and the priors for the remaining parameters were similar to those in Section 4.1. We fixed M = 5. When a USP was used, the posterior conditional sampling of D followed the same strategy as for the LMM in Section 4.1. The corresponding results are summarized in Table A.2. When the IW prior was used for D, the inference for the random effect covariance matrix remained poor and seriously biased even after the moment adjustments. In contrast, the USP led to good performance of the proposed inference for all model parameters, with minimal bias and coverage probabilities close to the nominal value.

Table A.2.

Simulation results for center-adjusted vs unadjusted inference under a DP prior with M = 5 in model (4.2), based on 200 replicates. An inverse Wishart prior (IWP) or a USP was used for D in the DP base measure.

| Parameter | π(D) | Center-adjusted Bias | Center-adjusted MSE (SE) | Center-adjusted CIL | Center-adjusted CP | Conventional Bias | Conventional MSE (SE) | Conventional CIL | Conventional CP |
|---|---|---|---|---|---|---|---|---|---|
| β0 | IWP | .03 | .06 (.01) | 1.01 | .97 | .04 | .13 (.02) | 2.68 | 1.00 |
| | USP | .07 | .05 (.01) | .91 | .94 | .24 | .14 (.01) | 2.32 | 1.00 |
| β1 | IWP | .03 | .07 (.01) | 1.16 | .97 | −.03 | .13 (.02) | 2.70 | 1.00 |
| | USP | −.06 | .06 (.01) | .96 | .93 | −.25 | .14 (.01) | 2.19 | 1.00 |
| σ11 | IWP | .22 | 1.26 (.19) | 4.77 | .99 | .51 | 3.83 (.66) | 10.11 | 1.00 |
| | USP | .02 | .57 (.09) | 3.31 | .97 | −.19 | .58 (.04) | 4.54 | .99 |
| σ22 | IWP | .34 | 2.04 (.31) | 6.26 | .99 | .50 | 3.80 (.61) | 10.34 | 1.00 |
| | USP | −.02 | .49 (.06) | 3.67 | .97 | −.29 | .77 (.04) | 4.18 | .97 |
| σ12 | IWP | −.27 | 1.32 (.19) | 5.26 | .99 | .50 | 3.33 (.54) | 9.80 | 1.00 |
| | USP | .03 | .39 (.05) | 3.13 | .92 | −.27 | .67 (.04) | 4.14 | .99 |

5. Application

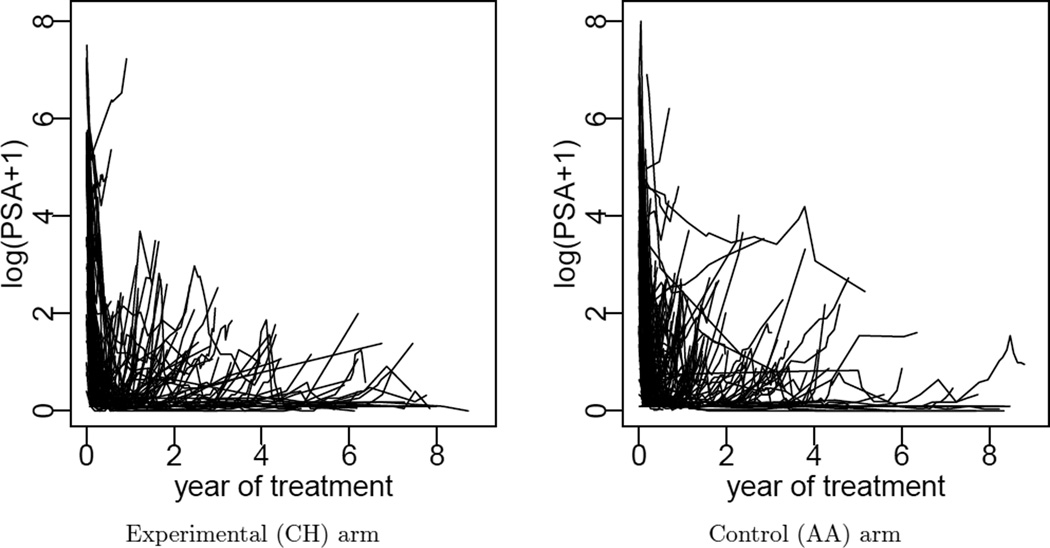

We applied the proposed method to data from a phase III clinical trial with prostate cancer patients, conducted at M. D. Anderson Cancer Center. The sample size was n = 286 patients. Patients were randomized to two treatment arms: a conventional androgen ablation (AA) therapy (149 patients) and the AA therapy plus three eight-week cycles of chemotherapy (CH) using ketoconazole and doxorubicin (KA) alternating with vinblastine and estramustine (VE) (137 patients). The outcome variable of interest is y = log(PSA + 1). PSA level is reported repeatedly over time starting at treatment initiation. The number of repeated measurements varies from 1 to 65 across patients. The investigators were interested in the PSA profiles after initiation of both treatments. Figure A.1 displays the observed PSA trajectories for all patients in each treatment arm. For a more detailed description of the data, see Zhang, Müller and Do (2010).

Figure A.1.

Observed PSA trajectories

We consider a model for the log-transformed PSA level as

| (5.1) |

where υ = 0 or 1 indicates treatment arm CH or AA, respectively; i (= 1, …, mυ) denotes the patient ID in arm υ; j (= 1, …, nυi) indicates the measurement number for subject i in arm υ; and sυij is the time since treatment initiation (in years) at the jth repeated observation for patient i in arm υ. The fixed effects dg and dη describe the effect of treatment on the PSA slope and on the size of the initial drop, respectively. We assume $(\theta_{1\upsilon i}, \theta_{2\upsilon i}) \mid G \overset{iid}{\sim} G$ with G ~ DP(M, N(β ≡ (β1, β2)′, D ≡ [dij])), and that θ0υi, (θ1υi, θ2υi) and ευij are mutually independent.

Equation (5.1) models the typical features of PSA profiles for prostate cancer patients after treatment initiation. In particular, PSA levels tend to drop sharply after treatment initiation, and there is an additive increasing trend over time (linear in the log-transformed PSA level). Both the initial drop and the trend may differ between treatments.

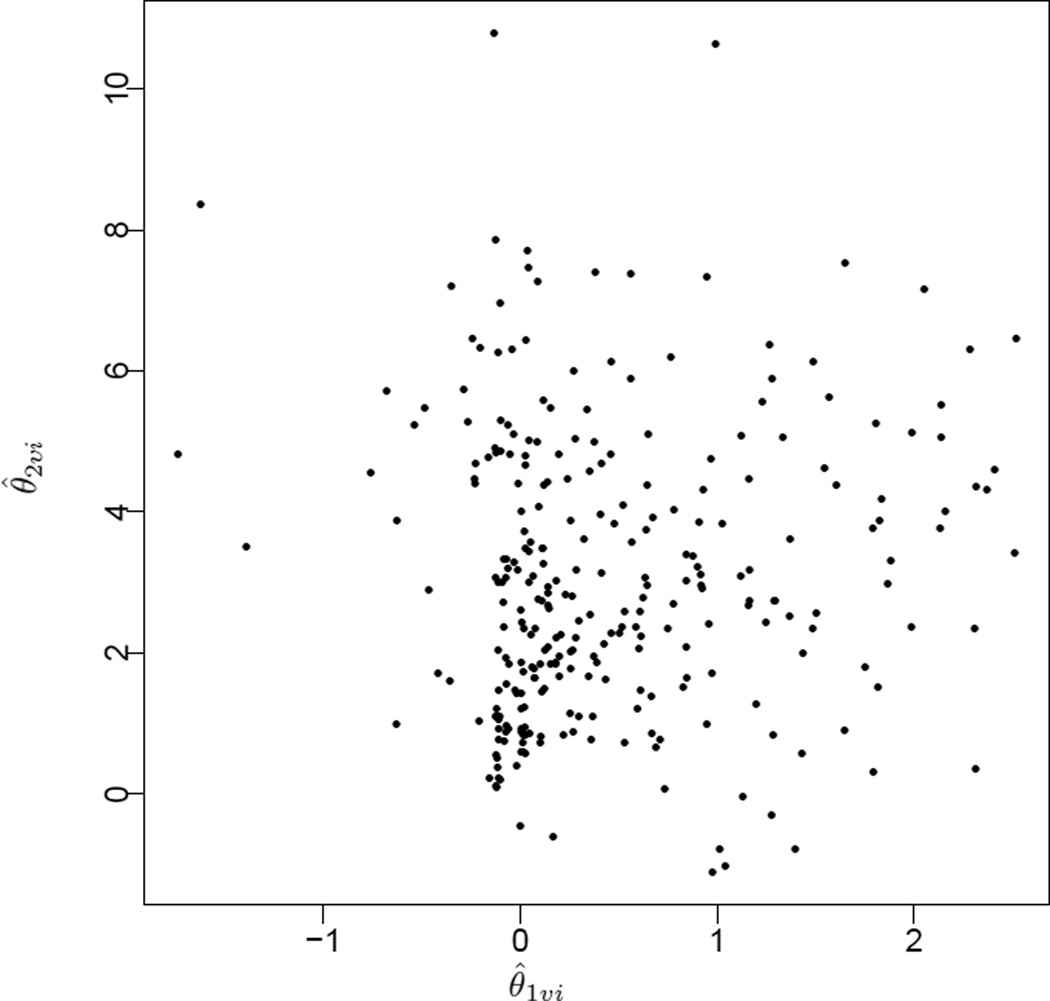

We use a parametric distribution for θ0υi mainly for simplicity, since neither the distribution of the θ0υi nor their estimates are of main scientific interest for the study. A scatterplot of the joint posterior means of (θ1υi, θ2υi) (Figure A.2) suggests clear skewness and a significant departure from normality (Verbeke and Lesaffre (1996)). This justifies the use of the centered DP prior model for the distribution of (θ1υi, θ2υi).

Figure A.2.

A scatterplot of the joint posterior means (θ̂1υi, θ̂2υi) assuming normally distributed (θ1υi, θ2υi) (with unknown means) in model (5.1)

The prior used for the parameters in model (5.1) was independent across parameters with p(μ0) = p(β1) = p(β2) = p(dg) = p(dη) = N(0, 10⁴), p(ϕ0) = p(ϕ1) = G(0.01, 0.001), , and p(D) = IW(2, 0.01 I2). Here I2 denotes a 2 × 2 identity matrix and the IW distribution is parametrized such that E(D⁻¹) = 0.02I2. We fixed M = 5.

We implemented posterior simulation using a Gibbs sampler, with additional Metropolis steps to update ϕ0 and ϕ1. After a burn-in of 5,000 iterations, 20,000 samples were obtained, with every 10th saved for posterior inference. Geweke's (1992) statistic suggested practical convergence of the Markov chains. We applied the adjustments for the moments of the DP in posterior inference. Specifically, we report inference on (μg1, μg2) ≡ (∫ θ1i dG1(θ1i), ∫ θ2i dG2(θ2i)) as inference on the slope of PSA and the initial drop for arm CH. Similarly, we report inference on (μg1 + dg, μg2 + dη) as inference on the corresponding parameters for arm AA. Denote the 2 × 2 random covariance matrix of (θ1υi, θ2υi) by CovG = [σij]. We report posterior summaries for σij as inference for the variance components.

The posterior mean of dη, i.e., the difference in the initial drop in PSA between the conventional AA and CH treatments, was −.15. The corresponding 95% CI was (−.32, .01), suggesting that the new CH treatment likely results in a larger initial drop. The difference in the rate of the drop, i.e., ϕ1 − ϕ0, had a posterior mean of −.41 and a 95% CI of (−1.00, .17). The difference in the increase in PSA, or dg, had a posterior mean of −.01 and a 95% CI of (−.03, −.0006). This significantly smaller rate of increase in PSA in the conventional AA arm (although the difference is small) might be related to its smaller initial drop.

For comparison, Table A.3 reports posterior inference with and without the proposed adjustment. We include inference on the rate of initial drop in PSA as part of the treatment effect; this is an example of inference that is not affected by the proposed adjustment. We also report inference for all fixed effects that are paired with nonparametric random effects, and for the variance components. The posterior mean of the average increase in PSA in each arm changed by approximately 10% between the adjusted and unadjusted inferences, and the posterior SD decreased roughly threefold under the adjustment. For the average initial drop in PSA, the posterior mean changed by about 30%, and the posterior SD decreased by more than a factor of three in both treatment arms. Even larger changes were seen in inference for the variance components σij. For example, the posterior mean of the covariance between the two random effects flipped sign under the proposed center-adjusted inference compared with the unadjusted inference. The positive covariance estimate under the adjustment is consistent with the scatterplot of the estimated random effects (θ̂1υi, θ̂2υi) under a normality assumption (Figure A.2).
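The percentage changes quoted here and in Section 1 can be reproduced from the rounded entries of Table A.3. The sketch below measures the change in the posterior mean relative to the adjusted value and compares posterior SDs as a ratio; that these are the intended definitions is an assumption.

```python
# Rounded (adjusted, unadjusted) posterior means and SDs from Table A.3.
table = {
    "increase, arm CH":     {"mean": (0.63, 0.70), "sd": (0.08, 0.25)},
    "increase, arm AA":     {"mean": (0.62, 0.69), "sd": (0.08, 0.25)},
    "initial drop, arm CH": {"mean": (3.32, 4.33), "sd": (0.14, 0.48)},
    "initial drop, arm AA": {"mean": (3.17, 4.18), "sd": (0.14, 0.48)},
}

results = {}
for name, row in table.items():
    (m_adj, m_unadj), (sd_adj, sd_unadj) = row["mean"], row["sd"]
    rel_change = (m_unadj - m_adj) / m_adj       # change in mean, relative to adjusted
    sd_ratio = sd_unadj / sd_adj                 # unadjusted SD / adjusted SD
    results[name] = (rel_change, sd_ratio)
    print(f"{name}: mean change {rel_change:.0%}, SD ratio {sd_ratio:.1f}")
```

The mean changes range from 11% to 32%, and every SD ratio exceeds 3, i.e., the unadjusted SDs are more than 200% larger.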

Table A.3.

Posterior summaries with and without the proposed adjustment for rate of initial drop in PSA, increase in PSA per year, initial drop in PSA, and variance components based on model (5.1) for the PSA data

| Parameter | Adjustment | Posterior Mean | Posterior SD | 95% CI |
|---|---|---|---|---|
| Rate of initial drop in PSA | | | | |
| Arm CH | | | | |
| ϕ0 | Cent-Adj/Unadj | 8.44 | .21 | (8.04, 8.87) |
| Arm AA | | | | |
| ϕ1 | Cent-Adj/Unadj | 8.03 | .20 | (7.63, 8.44) |
| Increase in PSA per year | | | | |
| Arm CH | | | | |
| μg1 | Cent-Adj | .63 | .08 | (.49, .78) |
| β1 | Unadj | .70 | .25 | (.24, 1.24) |
| Arm AA | | | | |
| μg1 + dg | Cent-Adj | .62 | .08 | (.47, .77) |
| β1 + dg | Unadj | .69 | .25 | (.21, 1.22) |
| Initial drop in PSA | | | | |
| Arm CH | | | | |
| μg2 | Cent-Adj | 3.32 | .14 | (3.04, 3.59) |
| β2 | Unadj | 4.33 | .48 | (3.37, 5.28) |
| Arm AA | | | | |
| μg2 + dη | Cent-Adj | 3.17 | .14 | (2.89, 3.44) |
| β2 + dη | Unadj | 4.18 | .48 | (3.23, 5.14) |
| Variance components | | | | |
| σ11 | Cent-Adj | 1.17 | .23 | (.78, 1.68) |
| | Unadj | 1.76 | .68 | (.89, 3.54) |
| σ22 | Cent-Adj | 4.76 | .51 | (3.84, 5.84) |
| | Unadj | 7.82 | 2.05 | (4.65, 12.56) |
| σ12 | Cent-Adj | .35 | .17 | (.01, .68) |
| | Unadj | −.22 | .60 | (−1.70, 1.14) |

Finally, we investigated the sensitivity of the proposed method to M by considering an alternative gamma prior for M, namely p(M) = G(.8, .4) (with mean 2 and variance 5). The results (not shown) followed the same pattern as reported in Table A.3.

6. Discussion

We have proposed a post-processing technique based on moment adjustment for inference on the fixed effects that are paired with random effects and on the variance components of the random effects in a Bayesian hierarchical model, where a hierarchically centered DP prior is assumed for the random effect distribution. The main results (Propositions 1 and 2) carry over fully to any nonparametric Bayesian hierarchical model in which the DP prior model (1.1) or (2.3) is assumed. In fact, they also apply when the DP base measure is a parametric distribution other than the normal, as long as the following are computable: 1) μG⋆ and CovG⋆, which are needed to evaluate the posterior mean and covariance matrix of μG and the posterior mean of CovG; and 2) the moments of G⋆ up to fourth order, which are needed to evaluate the posterior second moments of CovG. The only additional requirements for the proposed method to be applicable are that 1) posterior samples of the parameters in the DP prior model (1.1) or (2.3) are available, and 2) the random mean and/or covariance matrix of the random effects are of scientific interest. When only predictive inference for the outcome variable is of interest, adjustments for the fixed effects and variance components are not necessary. While the specific expressions for the proposed moment adjustments are lengthy, they are closed-form and easy to evaluate. Most importantly, we provide an R function (freely downloadable from http://odin.mdacc.tmc.edu/~yishengli/DPPP.R) that allows easy implementation by users.

We have demonstrated through simulations in DP GLMMs that the proposed center-adjusted inference effectively corrects the reported inference for the fixed effects and variance components. We also showed through a data example that the effect of a treatment on patient outcomes (such as the initial drop in the PSA level after treatment initiation and its subsequent yearly increase in prostate cancer patients) could be considerably misreported (e.g., overestimated and poorly inferred) without appropriate adjustments. A practically important feature of the proposed procedure is that it requires little new model structure and can be implemented at essentially no additional computational cost, since it needs only post-processing of the posterior samples of the model parameters.

We also found that, in DP GLMMs, the USP generally leads to more robust performance, whereas the IW prior may yield poor inference for the variance components of the random effects, an issue that becomes even more prominent when the data are binary.

Acknowledgment

Dr. Müller’s research was partially supported by the NIH/NCI grant R01 CA75981. Dr. Lin’s research was supported by the NIH/NCI grants R37 CA76404 and P01 CA134294. We thank the editor, associate editor, and an anonymous referee for their comments that helped improve the manuscript.

Appendix A

A.1. Proof of Lemma 1

Let (𝒳, 𝒜) be the space and σ-field of subsets on which the probability measure α is defined. By Theorem 2 of Ferguson (1973), a Dirichlet process P ~ DP(M, α) can alternatively be constructed as $P(A) = \sum_{j=1}^{\infty} P_j\,\delta_{V_j}(A)$ for any A ∈ 𝒜, where the Pj are correlated random variables defined in Ferguson (1973) satisfying Pj ≥ 0 and $\sum_{j=1}^{\infty} P_j = 1$ a.s., the Vj are i.i.d. random variables with values in 𝒳 and probability measure α, and {Pj} and {Vj} are independent. Here δx(A) = 1 if x ∈ A, and δx(A) = 0 otherwise. Then we have

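$$\int Z_1\,dP \int Z_2\,dP \int Z_3\,dP = \sum_{i=1}^{\infty}\sum_{j=1}^{\infty}\sum_{k=1}^{\infty} Z_1(V_i)\,Z_2(V_j)\,Z_3(V_k)\,P_i P_j P_k, \tag{A.1}$$

since all three series are absolutely convergent with probability one (see the proof of Theorem 3 of Ferguson (1973)). The infinite sum in (A.1) is bounded in absolute value by

$$\sum_{i=1}^{\infty}\sum_{j=1}^{\infty}\sum_{k=1}^{\infty} |Z_1(V_i)|\,|Z_2(V_j)|\,|Z_3(V_k)|\,P_i P_j P_k. \tag{A.2}$$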

If (A.2) is an integrable random variable, then the expectation of (A.1) can be taken inside the summation sign. Let

S(1, 1, 3) = ∑i≠k E[Z1(Vi)Z2(Vi)]E[Z3(Vk)]E(PiPiPk),

S(1, 2, 3) = ∑i≠j≠k E[Z1(Vi)]E[Z2(Vj)]E[Z3(Vk)]E(PiPjPk),

S(1, 1, 1) = ∑i E[Z1(Vi)Z2(Vi)Z3(Vi)]E(PiPiPi), etc. Then

A similar equation shows that (A.2) is integrable. By its definition (Ferguson (1973)), the distribution of the Pi depends on M but not on α. Hence, as in the proof of Theorem 4 of Ferguson (1973), we may choose 𝒳 to be the real line, α to give probability 2/3 to −1 and 1/3 to 2, and Z1(x) = Z2(x) = Z3(x) ≡ x. Thus μ1 = μ2 = μ3 = 0 and σ123 = 2. Hence

since P(2) ~ Beta(M/3, 2M/3). A similar calculation gives us , by assuming α to give 1/2 probability to each of −1 and 1, and Z1(x) = Z2(x) ≡ x and Z3 ≡ 1. The equality (3.1) is thus proved.
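The first special case can be checked numerically. With α putting mass 2/3 on −1 and 1/3 on 2 and Z1(x) = Z2(x) = Z3(x) ≡ x, each integral equals ∫x dP = 2P(2) − P(−1) = 3P(2) − 1, so the expectation of the product of the three integrals is E[{3P(2) − 1}³] with P(2) ~ Beta(M/3, 2M/3). The Python sketch below evaluates this exactly from the Beta raw moments E[Pᵏ] = ∏_{r<k}(a + r)/(a + b + r) and compares it with 4/[(M + 1)(M + 2)], a closed form we derived from the third central moment of the Beta distribution as a check (it is not a quotation of the display above). The function names are hypothetical.

```python
from fractions import Fraction
from math import comb

def beta_raw_moment(a, b, k):
    """E[P^k] for P ~ Beta(a, b), using prod_{r<k} (a + r) / (a + b + r)."""
    out = Fraction(1)
    for r in range(k):
        out *= (a + r) / (a + b + r)
    return out

def lemma1_example(M):
    """E[{3 P(2) - 1}^3] with P(2) ~ Beta(M/3, 2M/3), by binomial expansion."""
    M = Fraction(M)
    a, b = M / 3, 2 * M / 3
    return sum(comb(3, k) * Fraction(3) ** k * Fraction(-1) ** (3 - k)
               * beta_raw_moment(a, b, k) for k in range(4))

for M in (1, 2, 5):
    exact = lemma1_example(M)
    closed_form = Fraction(4, (M + 1) * (M + 2))
    print(M, exact, exact == closed_form)
# prints:
# 1 2/3 True
# 2 1/3 True
# 5 2/21 True
```

Exact rational arithmetic avoids any Monte Carlo error, so agreement for several M is a clean consistency check on the Beta reduction.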

A.2. Proof of Lemma 2

Define S(i, j, k, ℓ) analogously to S(i, j, k) in the proof of Lemma 1. By an argument similar to that in the proof of Lemma 1, we have

| (A.3) |

Assuming that α gives probability 1/2 to each of −1 and 1 and that Z1(x) = Z2(x) = Z3(x) = Z4(x) ≡ x, each integral equals ∫x dP = 2P(1) − 1, so the left-hand side (LHS) of (A.3) is $E[\{2P(1) - 1\}^4]$.

Since P(1) ~ Beta(M/2, M/2), we have

The above is based on the moment formula for the beta distribution. Hence, the LHS of (A.3) is 3/[(M + 1)(M + 3)]. On the other hand, the RHS of (A.3) is . Thus, we have

| (A.4) |

Similarly, if we assume that Z1(x) = Z2(x) = Z3(x) = Z4(x) ≡ x and that α assigns probability 2/3 to −1 and 1/3 to 2, (A.3) implies

| (A.5) |

since P(2) ~ Beta(M/3, 2M/3). Equations (A.4) and (A.5) imply

| (A.6) |

| (A.7) |

Further, assuming that α gives probability 2/3 to −1 and 1/3 to 2, that Z1(x) = Z2(x) = Z3(x) ≡ x, and that Z4(x) ≡ 1, an analogous calculation using (A.3) yields

| (A.8) |

Again, assuming that α gives probability 1/2 to each of −1 and 1, that Z1(x) = Z2(x) ≡ x, and that Z3(x) = Z4(x) ≡ 1, we obtain

| (A.9) |

Equation (3.2) is obtained by substituting (A.6), (A.7), (A.8), and (A.9) into (A.3).
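The value 3/[(M + 1)(M + 3)] used for the LHS of (A.3) can be checked the same way. With α putting mass 1/2 on each of −1 and 1 and all Zr(x) ≡ x, each integral equals 2P(1) − 1 with P(1) ~ Beta(M/2, M/2), so the LHS is E[{2P(1) − 1}⁴]. A short Python sketch with exact fractions (function names hypothetical):

```python
from fractions import Fraction
from math import comb

def beta_raw_moment(a, b, k):
    """E[P^k] for P ~ Beta(a, b), using prod_{r<k} (a + r) / (a + b + r)."""
    out = Fraction(1)
    for r in range(k):
        out *= (a + r) / (a + b + r)
    return out

def lemma2_example(M):
    """E[{2 P(1) - 1}^4] with P(1) ~ Beta(M/2, M/2), by binomial expansion."""
    a = Fraction(M) / 2
    return sum(comb(4, k) * Fraction(2) ** k * Fraction(-1) ** (4 - k)
               * beta_raw_moment(a, a, k) for k in range(5))

for M in (1, 2, 7):
    value = lemma2_example(M)
    print(M, value, value == Fraction(3, (M + 1) * (M + 3)))
# prints:
# 1 3/8 True
# 2 1/5 True
# 7 3/80 True
```

For M = 2 the Beta(1, 1) law is uniform, so 2P(1) − 1 is uniform on (−1, 1) and its fourth moment 1/5 can also be confirmed by hand.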

A.3. Notation used in defining L2 through L4, O2, and O3 in Proposition 2 (ii)

In L2:

In L3:

In L4:

In O2:

In O3:

A.4. Proof of Proposition 2 (ii)

Assume [b̃ | G] ~ G and [bm+1 | b, βb, D, M] ~ G*. Let b̃(i) and $b_{m+1}^{(i)}$ be the i-th components of b̃ and bm+1, respectively. Define I1 = E(CovG,i1j1 · CovG,i2j2 | b, βb, D, M), I2 = E(CovG,i1j1 | b, βb, D, M), and I3 = E(CovG,i2j2 | b, βb, D, M). Then, by the law of total covariance, Cov(CovG,i1j1, CovG,i2j2 | y) = E(I1 | y) − E(I2 | y)E(I3 | y). Based on Proposition 3 (i), I2 = (m + M)CovG*,i1j1/(m + M + 1) and I3 = (m + M)CovG*,i2j2/(m + M + 1).

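To calculate I1, we write I1 = J1 − J2 − J3 + J4, where, with all expectations conditional on (b, βb, D, M) and CovG,ij = ∫b̃(i)b̃(j) dG − ∫b̃(i) dG ∫b̃(j) dG,

$$J_1 = E\Big[\int \tilde b^{(i_1)}\tilde b^{(j_1)}\,dG \int \tilde b^{(i_2)}\tilde b^{(j_2)}\,dG\Big], \qquad J_2 = E\Big[\int \tilde b^{(i_1)}\tilde b^{(j_1)}\,dG \int \tilde b^{(i_2)}\,dG \int \tilde b^{(j_2)}\,dG\Big],$$

$$J_3 = E\Big[\int \tilde b^{(i_1)}\,dG \int \tilde b^{(j_1)}\,dG \int \tilde b^{(i_2)}\tilde b^{(j_2)}\,dG\Big], \qquad J_4 = E\Big[\int \tilde b^{(i_1)}\,dG \int \tilde b^{(j_1)}\,dG \int \tilde b^{(i_2)}\,dG \int \tilde b^{(j_2)}\,dG\Big].$$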

By Theorem 4 of Ferguson (1973),

To calculate J2, we apply Lemma 1 with Z1 = b̃(i1)b̃(j1), Z2 = b̃(i2), and Z3 = b̃(j2). Following the notation of Lemma 1, we have

Substituting the above expressions into (3.1) yields J2; J3 is computed similarly.

To calculate J4, we apply Lemma 2 with Z1 = b̃(i1), Z2 = b̃(j1), Z3 = b̃(i2), and Z4 = b̃(j2). Following the notation of Lemma 2, we then have

Substituting the above expressions into (3.2) yields J4. This gives I1, and hence Cov(CovG,i1j1, CovG,i2j2 | y).

Var(CovG,ij | y) is obtained by setting i1 = i2 = i and j1 = j2 = j in (3.3). This completes the proof.
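The first-moment part of the post-processing amounts to averaging conditional moments over MCMC draws. The Python sketch below assumes that, given (b, βb, D, M), G follows a DP with total mass m + M and base measure G*, so that E(μG | ·) = μG* and Cov(μG | ·) = CovG*/(m + M + 1) (the standard moments of a Dirichlet random mean); E(CovG | y) is E(I2 | y) with I2 = (m + M)CovG*/(m + M + 1) as quoted from Proposition 3 (i) in the proof above, and the draws are combined by the law of total covariance. The name post_process_moments and the array layout are hypothetical, and the second-moment terms J1 through J4 are not implemented because they require (3.1) through (3.3).

```python
import numpy as np

def post_process_moments(mu_star, cov_star, M, m):
    """First-moment post-processing across T MCMC draws (hypothetical helper).

    mu_star:  (T, q) array of mu_{G*} per draw
    cov_star: (T, q, q) array of Cov_{G*} per draw
    M:        (T,) array of DP total-mass draws; m: number of subjects
    """
    mu_star = np.asarray(mu_star, dtype=float)
    cov_star = np.asarray(cov_star, dtype=float)
    c = m + np.asarray(M, dtype=float)  # total mass of the assumed DP(m + M, G*) posterior
    # E(mu_G | y): average of E(mu_G | b, beta_b, D, M) = mu_{G*}.
    post_mean_mu = mu_star.mean(axis=0)
    # Cov(mu_G | y) = E[Cov_{G*} / (c + 1) | y] + Cov(mu_{G*} | y)  (law of total covariance).
    within = (cov_star / (c + 1)[:, None, None]).mean(axis=0)
    between = np.atleast_2d(np.cov(mu_star, rowvar=False, bias=True))
    post_cov_mu = within + between
    # E(Cov_G | y) = E(I2 | y), with I2 = (m + M) Cov_{G*} / (m + M + 1).
    post_mean_covG = ((c / (c + 1))[:, None, None] * cov_star).mean(axis=0)
    return post_mean_mu, post_cov_mu, post_mean_covG

# Usage with two made-up draws of a scalar random effect (q = 1), m = 2 subjects.
mean_mu, cov_mu, mean_covG = post_process_moments(
    mu_star=[[0.0], [2.0]], cov_star=[[[1.0]], [[3.0]]], M=[1.0, 1.0], m=2)
print(mean_mu, cov_mu, mean_covG)  # [1.] [[1.5]] [[1.5]]
```

The between-draw term uses bias=True so that it is the covariance of the empirical distribution of the draws, matching the plug-in form of the law of total covariance.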

Contributor Information

Yisheng Li, Email: ysli@mdanderson.org.

Peter Müller, Email: pmueller@mdanderson.org.

Xihong Lin, Email: xlin@hsph.harvard.edu.

References

- Bernardo JM, Smith AFM. Bayesian Theory. New York: John Wiley and Sons; 1994.

- Bush CA, MacEachern SN. A semiparametric Bayesian model for randomised block designs. Biometrika. 1996;83:275–285.

- Epifani I, Guglielmi A, Melilli E. A stochastic equation for the law of the random Dirichlet variance. Statistics and Probability Letters. 2006;76:495–502.

- Gelfand AE, Kottas A. A computational approach for full nonparametric Bayesian inference under Dirichlet process mixture models. Journal of Computational and Graphical Statistics. 2002;11:289–305.

- Gelfand AE, Mukhopadhyay S. On nonparametric Bayesian inference for the distribution of a random sample. The Canadian Journal of Statistics. 1995;23:411–420.

- Gelfand AE, Sahu SK, Carlin BP. Efficient parametrisations for normal linear mixed models. Biometrika. 1995;82:479–488.

- Geweke J. Evaluating the accuracy of sampling-based approaches to the calculation of posterior moments. In: Bernardo JM, Berger J, Dawid AP, Smith AFM, editors. Bayesian Statistics 4. Oxford, UK: Oxford University Press; 1992. pp. 169–193.

- Hjort NL, Ongaro A. Exact inference for random Dirichlet means. Statistical Inference for Stochastic Processes. 2005;8:227–254.

- Kleinman KP, Ibrahim JG. A semi-parametric Bayesian approach to generalized linear mixed models. Statistics in Medicine. 1998a;17:2579–2596. doi: 10.1002/(sici)1097-0258(19981130)17:22<2579::aid-sim948>3.0.co;2-p.

- Kleinman KP, Ibrahim JG. A semiparametric Bayesian approach to the random effects model. Biometrics. 1998b;54:921–938.

- Lijoi A, Regazzini E. Means of Dirichlet process and hypergeometric functions. Annals of Probability. 2004;32:1469–1495.

- Natarajan R, Kass RE. Reference Bayesian methods for generalized linear mixed models. Journal of the American Statistical Association. 2000;95:227–237.

- Natarajan R, McCulloch CE. Gibbs sampling with diffuse proper priors: a valid approach to data-driven inference? Journal of Computational and Graphical Statistics. 1998;7:267–277.

- Neal RM. Markov chain sampling methods for Dirichlet process mixture models. Journal of Computational and Graphical Statistics. 2000;9:249–265.

- Newton MA, Czado C, Chappell R. Bayesian inference for semiparametric binary regression. Journal of the American Statistical Association. 1996;91:142–153.

- Verbeke G, Lesaffre E. A linear mixed-effects model with heterogeneity in the random-effects population. Journal of the American Statistical Association. 1996;91:217–221.

- Zhang S, Müller P, Do K-A. A Bayesian semi-parametric survival model with longitudinal markers. Biometrics. 2010;66:435–443. doi: 10.1111/j.1541-0420.2009.01276.x.