Abstract

The right posterior superior temporal sulcus (pSTS) is a neural region involved in assessing the goals and intentions underlying the motion of social agents. Recent research has identified visual cues, such as chasing, that trigger animacy detection and intention attribution. When readily available in a visual display, these cues reliably activate the pSTS. Here, using functional magnetic resonance imaging, we examined whether attributing intentions to random motion would likewise engage the pSTS. Participants viewed displays of four moving circles and were instructed to search for chasing or mirror-correlated motion. On chasing trials, one circle chased another circle, invoking the percept of an intentional agent, whereas on correlated motion trials, one circle’s motion was mirror reflected by another. On the remaining trials, all circles moved randomly. As expected, when these cues were present in the displays, pSTS activation was greater when participants searched for chasing than when they searched for correlated motion. Critically, pSTS activation was also greater when participants searched for chasing than when they searched for mirror-correlated motion in displays that, in both search conditions, consisted of statistically identical random motion. We conclude that pSTS activity associated with intention attribution can be invoked by top–down processes in the absence of reliable visual cues for intentionality.

Keywords: fMRI, posterior superior temporal sulcus, intention attribution, biological motion, social perception

INTRODUCTION

Humans have sophisticated abilities to infer the dispositions and intentions of other individuals. Brothers (1990) posited that this distinct cognitive domain, termed social cognition, is likely instantiated in a specialized neural system, which includes the superior temporal sulcus, amygdala, fusiform gyrus and orbitofrontal cortex. One key component of social cognition is the ability to rapidly discriminate animate from inanimate motion. Research has shown that the posterior superior temporal sulcus (pSTS), in particular, is preferentially involved in processing biological motion such as hand, mouth and body movements, as well as eye gaze (reviewed in Allison et al., 2000). Moreover, the cues for biological motion that engage the pSTS can be abstract and impoverished—such as the movements of point lights depicting ambulation (Bonda et al., 1996; Beauchamp et al., 2003). Interestingly, the pSTS also responds preferentially to the movements of simple geometric shapes, such as triangles and squares, when those shapes interact in an apparently intentional manner (Castelli et al., 2000; Schultz et al., 2003).

Given the tendency of humans to anthropomorphize and attribute agency and intentions to inanimate objects (Heider and Simmel, 1944; also reviewed in Epley et al., 2007), a question can be raised about the dependency of pSTS activation upon visual cues for intentionality. Can the pSTS be activated by the attribution of intentions in the absence of such cues? Some prior research has demonstrated that pSTS activation is not driven solely by biological motion, but is also sensitive to the context in which that motion occurs. For example, a perceptually identical eye shift or arm reach elicits greater pSTS activity when the motion is made away from an obvious target (Pelphrey et al., 2003, 2004) or when the action of a human actor is incongruent with her preferences, as expressed through her facial expressions (Vander Wyk et al., 2009). The right pSTS is also more activated by unsuccessful compared to successful outcomes of goal-directed actions (Shultz et al., 2011). Taken together, these observations of higher pSTS activation when participants view motion that is incongruent with an assumed intention suggest that the pSTS is sensitive to the goals and intentions underlying observed biological motion.

These results also suggest that the interpretation of motion plays a role in the engagement of the pSTS. Indeed, several studies have investigated how attending to different aspects of the same motion influences neural activity (Blakemore et al., 2003; Wheatley et al., 2007; Tavares et al., 2008). One study showed that the visual background on which the ambiguous motion of a geometric form is presented can differentially engage the pSTS and other brain regions (Wheatley et al., 2007). For example, if the background context suggests that the motion is that of an animate agent, the pSTS is more highly engaged than when the background context suggests that the very same motion is that of an inanimate object. Also, using displays in which moving geometric shapes engaged in apparent interpersonal interactions, Tavares et al. (2008) showed that attending to the social meaning of biological motion, rather than to non-social aspects of the motion, increases pSTS activation.

In this study, we extended this reasoning to ask whether an instructional bias to attribute intentions to the random motion of geometric shapes would similarly engage the pSTS. That is, to what extent does pSTS activation depend upon visual cues for social agency? To address this question, we presented participants with displays composed of four colored moving circles. In some trials, participants were presented with displays of chasing, where one circle consistently followed another circle, and were instructed to detect the chasing. Chasing was used to invoke intention attribution as it has been shown to be a powerful cue for animacy and intentionality that can be readily conveyed using simple, well-controlled visual stimuli (Gao et al., 2009). However, chasing consists of correlated motion, which has also been found to activate the right pSTS (Schultz et al., 2005). Therefore, in control trials, participants were presented with displays of correlated motion, where one circle moved along a mirror-reflected trajectory of another circle, and were instructed to detect the correlated motion. Here, we expected that the pSTS would be preferentially engaged by chasing trials compared to the control correlated motion trials.

To address the dependency of pSTS activation on chasing cues, we included trials in which the motion of all circles was random and independent; that is, there was neither chasing nor correlated motion. Participants were never told that such displays of random motion would be included; rather, they were told that it would be difficult to detect chasing or correlated motion on some trials, but that they should nevertheless try their best to detect the relevant motion. We hypothesized that searching for chasing among the random motion displays would engage brain systems for intention attribution, and that this engagement would be evident as greater activation of the right pSTS when participants searched for chasing in random displays than when they searched for correlated motion in (statistically) identical random displays.

Finally, we were also interested in the extent to which pSTS activation to abstract and impoverished displays of biological motion could reflect intention attribution rather than being driven by visual cues of intentionality. Therefore, we also presented participants with point-light displays of moving human figures and hypothesized that the pSTS region involved in attributing intentions to random motion would intersect the pSTS region that was preferentially responsive to the biological motion of point-light figures.

METHODS

Subjects and stimuli

Fifteen right-handed healthy adults (eight females, mean age: 22.9 ± 2.7 years) participated in the study. They had normal or corrected-to-normal vision, were not color blind, and had no history of neurological or psychiatric illnesses. The protocol was approved by the Yale Human Investigations Committee and all participants’ consent was obtained according to the Declaration of Helsinki (1975/1983).

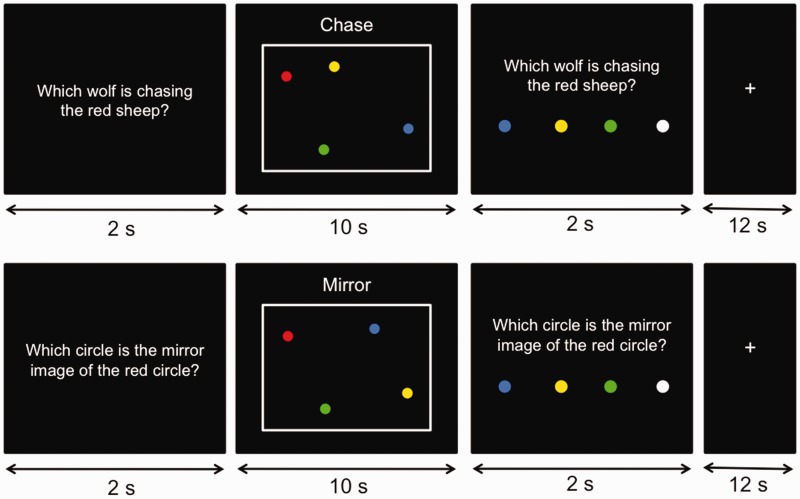

Stimuli consisted of three types of brief movie clips—chase motion, mirror motion and random motion—adapted from Gao et al. (2009). Each movie was 10 s long and showed four dynamic, colored (red, blue, green and yellow) circles moving across a black background (Figure 1). In all movies, the red circle and two other circles moved along independent trajectories in a haphazard manner, that is, randomly changing direction within a 120° window every 170 ms. In chase motion movies, the fourth circle consistently moved toward the red circle, also in a haphazard manner, but with a maximum deviation of 30° from the direct path between the fourth circle and the red circle. This type of motion has been shown to be a strong cue for chasing (Gao et al., 2009). In mirror motion movies, the fourth circle was a mirror reflection of the red circle’s location in each frame, with the center of the display as the point of reflection. Like chasing, mirror-reflected motion correlates the motions of two circles, but is not perceived as intentional. In random motion movies, the fourth circle also moved haphazardly, independent of the other three circles. Twenty-eight chase motion movies, 28 mirror motion movies and 56 random motion movies were used in this study. The color of the fourth circle was counterbalanced across all movies in each movie type. All movies were unique and no movies were repeated across trials. Sample movies can be viewed in the Supplementary Data.
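The three motion types can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the stimulus code of Gao et al. (2009): the display size, the distance moved per update (`STEP`), the clamping rule at the screen edges and the reading of the 120° window as ±60° around the current heading are all hypothetical choices. Only the 170 ms update interval, the 30° maximum chase deviation and the point reflection through the display center come from the text.

```python
import math
import random

# Hypothetical display parameters (not reported in the text).
WIDTH, HEIGHT = 800.0, 600.0
CENTER = (WIDTH / 2, HEIGHT / 2)
STEP = 10.0    # hypothetical distance moved per 170 ms update
UPDATES = 59   # 10 s movie / 170 ms per direction change, rounded


def wander(pos, heading, rng, window_deg=120.0):
    """Haphazard step: new heading drawn within +/-60 deg of the current one."""
    heading += math.radians(rng.uniform(-window_deg / 2, window_deg / 2))
    # Clamp to the screen (the real boundary rule is not described).
    x = min(max(pos[0] + STEP * math.cos(heading), 0.0), WIDTH)
    y = min(max(pos[1] + STEP * math.sin(heading), 0.0), HEIGHT)
    return (x, y), heading


def chase_step(pos, target, rng, max_dev_deg=30.0):
    """Step toward the target, deviating at most 30 deg from the direct path."""
    direct = math.atan2(target[1] - pos[1], target[0] - pos[0])
    heading = direct + math.radians(rng.uniform(-max_dev_deg, max_dev_deg))
    return (pos[0] + STEP * math.cos(heading), pos[1] + STEP * math.sin(heading))


def mirror_of(pos):
    """Point reflection of a position through the display center."""
    return (2 * CENTER[0] - pos[0], 2 * CENTER[1] - pos[1])


def simulate(kind, updates=UPDATES, seed=0):
    """Positions per update for [red, distractor, distractor, fourth circle]."""
    rng = random.Random(seed)
    pos = [(rng.uniform(0, WIDTH), rng.uniform(0, HEIGHT)) for _ in range(4)]
    headings = [rng.uniform(0, 2 * math.pi) for _ in range(4)]
    if kind == "mirror":
        pos[3] = mirror_of(pos[0])
    frames = [list(pos)]
    for _ in range(updates):
        for i in range(3):  # red and two other circles always move haphazardly
            pos[i], headings[i] = wander(pos[i], headings[i], rng)
        if kind == "chase":
            pos[3] = chase_step(pos[3], pos[0], rng)
        elif kind == "mirror":
            pos[3] = mirror_of(pos[0])
        elif kind == "random":
            pos[3], headings[3] = wander(pos[3], headings[3], rng)
        else:
            raise ValueError(f"unknown motion type: {kind}")
        frames.append(list(pos))
    return frames
```

Calling `simulate("chase")`, `simulate("mirror")` or `simulate("random")` returns one list of four (x, y) positions per direction update (not per screen refresh), with index 0 as the red circle and index 3 as the fourth circle.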

Fig. 1.

Illustration of the three types of movies used, each consisting of four dynamic, colored (red, blue, green and yellow) circles, adapted from Gao et al. (2009). In all movies, the red circle and two other circles moved independently of one another in a haphazard manner (depicted by the curved arrows). (A) In chase motion movies, the fourth circle (here, the yellow circle) consistently moved toward the red circle, with a maximum deviation of 30° from the direct path between the fourth circle and the red circle. (B) In mirror motion movies, the fourth circle (here, the yellow circle) moved as a mirror image of the red circle, with the center of the display as the point of reflection. (C) In random motion movies, all circles had independent trajectories.

Experimental design and procedure

Participants were presented with the movies of moving circles in four experimental conditions: chase detect, mirror detect, chase project and mirror project. In chase detect and chase project trials, participants were presented with chase motion and random motion movies, respectively. They were instructed to imagine that the red circle was a sheep, and that one of the other three circles was a wolf that was chasing the red sheep. Their task was to identify which of the blue, green or yellow circles was the wolf. They were told that in some trials, the chasing would be easy to detect, because the wolf would be chasing the sheep in a relatively direct manner. These were the chase detect trials, in which chase motion movies were used. To encourage participants to continue searching for chasing amidst random motion, participants were told that in some trials, the wolf would behave deceptively, making the wolf’s movements harder to track. These were the chase project trials, in which random motion movies were presented. Participants were not told beforehand that the four circles in these ‘harder’ trials were in fact moving independently of one another.

In mirror detect and mirror project trials, participants were presented with mirror motion and random motion movies, respectively. They were instructed to imagine that a mirror was placed in between the red circle and one of the other three circles. Their task was to identify which of the blue, green or yellow circles was the mirror image of the red circle. Care was taken to use the noun ‘mirror image’ instead of the verb ‘mirroring’ as the verb might suggest agency. They were told that in some trials, the mirror image would be easy to detect, because the mirror was in good condition and produced a perfect reflection. These were the mirror detect trials, in which mirror motion movies were presented. Participants were also told that there would be harder trials, in which the mirror would be cracked in many places, making the mirror image refracted and therefore more difficult to identify. These were the mirror project trials, in which random motion movies were presented. Again, participants were not told beforehand that the four circles in these ‘harder’ trials were in fact moving independently of one another.

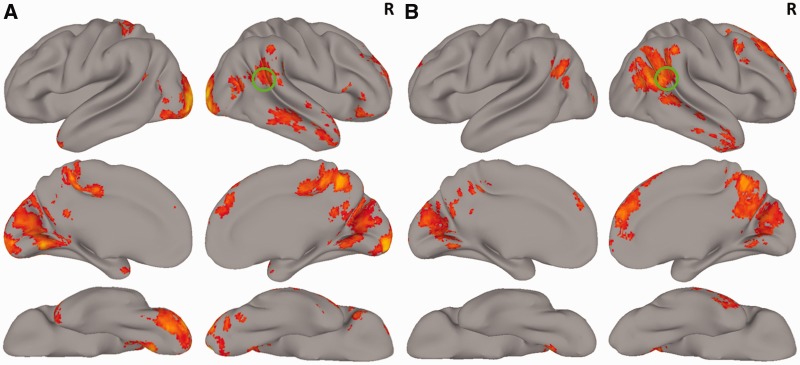

Trials were presented in a slow event-related design (see Figure 2 for the trial structure). Before the presentation of each movie, a question ‘Which wolf is chasing the red sheep?’ or ‘Which circle is the mirror image of the red circle?’ appeared on the screen for 2 s. The movie was then presented for 10 s. The word ‘chase’ or ‘mirror’ was displayed at the top of the screen for the duration of the movie to remind participants of the type of motion they were looking for. After the presentation of the movie, a response screen was displayed for 2 s. Participants responded using a four-button magnetic resonance imaging (MRI)-compatible response box that was provided in the scanner. In addition to the three possible answers (blue, yellow and green), they were also given the option of choosing a white circle, which indicated that they did not know the answer. Trials were separated by a 12 s fixation inter-trial interval.

Fig. 2.

Schematic illustration of one chase trial and one mirror trial. Participants were presented with a question for 2 s, followed by a 10 s movie of moving circles, and finally a response screen for 2 s. Trials were separated by a 12 s fixation inter-trial interval.

Each run of the task consisted of four trials per condition, for a total of 16 trials per run, and each run lasted 7 min 16 s. All participants completed at least six runs of the task; when time permitted, a seventh run was acquired to increase power, which was the case for six participants. In summary, nine participants completed a total of 24 trials per condition, whereas six participants completed a total of 28 trials per condition.
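The trial and run timing can be checked with a little arithmetic. The sketch uses only durations stated in the text (including the 2 s TR reported under image acquisition). Treating a run's scan time as exactly its reported 7 min 16 s, in order to count volumes, is our assumption, and the roughly 20 s by which the run length exceeds the summed trial durations is not itemized in the text.

```python
# Durations in seconds, as reported in the text.
QUESTION_S, MOVIE_S, RESPONSE_S, ITI_S = 2, 10, 2, 12
TRIALS_PER_CONDITION_PER_RUN = 4
N_CONDITIONS = 4      # chase detect, mirror detect, chase project, mirror project
TR_S = 2
RUN_S = 7 * 60 + 16   # 7 min 16 s

TRIAL_S = QUESTION_S + MOVIE_S + RESPONSE_S + ITI_S
TRIALS_PER_RUN = TRIALS_PER_CONDITION_PER_RUN * N_CONDITIONS
SCHEDULED_S = TRIALS_PER_RUN * TRIAL_S
UNITEMIZED_S = RUN_S - SCHEDULED_S    # time not accounted for by the trials
VOLUMES_PER_RUN = RUN_S // TR_S       # assumes scanning spans exactly the run


def trials_per_condition(n_runs):
    """Total trials per condition for a participant who completed n_runs."""
    return n_runs * TRIALS_PER_CONDITION_PER_RUN


print(TRIAL_S, TRIALS_PER_RUN, SCHEDULED_S, UNITEMIZED_S, VOLUMES_PER_RUN)
# 26 16 416 20 218
print(trials_per_condition(6), trials_per_condition(7))
# 24 28
```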

In addition to the experimental task, a biological motion localizer was also run following the acquisition of the experimental trials to independently identify voxels in the pSTS that were preferentially responsive to biological motion. Three participants did not receive the biological motion localizer due to technical problems that resulted in insufficient time to acquire the localizer data. Each run of the localizer consisted of six 12 s blocks of point-light displays of moving human figures (the biological motion condition) and six 12 s blocks of scrambled point lights (the scrambled motion condition), separated by 12 s fixation intervals. Participants were instructed to pay attention to the moving point-light displays, as well as to keep a mental count of static images of point lights that were randomly interspersed throughout the run. Participants completed two runs of the localizer task.

Image acquisition and preprocessing

Data were acquired using a 3 T Siemens TIM Trio scanner with a 12-channel head coil. Functional images were acquired using an echo-planar pulse sequence [time to repetition (TR) = 2000 ms, time to echo (TE) = 25 ms, flip angle = 90°, field of view (FOV) = 224 mm, matrix = 64 × 64, voxel size = 3.5 × 3.5 × 3.5 mm³, slice thickness = 3.5 mm, 37 slices, interleaved slice acquisition with no gap]. Two structural images were acquired for registration: T1 coplanar images were acquired using a T1 Flash sequence (TR = 300 ms, TE = 2.47 ms, flip angle = 60°, FOV = 224 mm, matrix = 256 × 256, slice thickness = 3.5 mm, 37 slices) and high-resolution images were acquired using a three-dimensional magnetization-prepared rapid acquisition gradient-echo sequence (TR = 2530 ms, TE = 2.77 ms, flip angle = 7°, FOV = 256 mm, matrix = 256 × 256, slice thickness = 1 mm, 176 slices).

Image preprocessing was performed using the FMRIB Software Library (FSL, http://www.fmrib.ox.ac.uk/fsl). Structural and functional images were skull-stripped using the Brain Extraction Tool. The first four volumes (8 s) of each functional dataset were discarded to allow for MR equilibration. Functional images then underwent slice-time correction for interleaved slice acquisition, motion correction (using MCFLIRT linear realignment), spatial smoothing with a 5 mm full width at half maximum (FWHM) Gaussian kernel and high-pass filtering with a 0.01 Hz cutoff to remove low-frequency drift. Finally, the functional images were registered to the coplanar images, which were in turn registered to the high-resolution structural images, which were then normalized to the Montreal Neurological Institute template (MNI152). The transformation matrices from each registration stage were combined into a single transformation matrix, which was applied to the functional images to normalize them directly into MNI space for the single-subject multi-run and group-level analyses.
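Two pieces of preprocessing arithmetic can be made concrete. First, combining the registration stages amounts to multiplying 4 × 4 affine matrices in reverse order of application, which lets the functional data be interpolated once rather than once per stage. Second, a Gaussian kernel's FWHM relates to its standard deviation by FWHM = 2√(2 ln 2)·σ ≈ 2.355σ, so the 5 mm kernel corresponds to σ ≈ 2.12 mm, or about 0.61 of a 3.5 mm voxel. The matrices below are hypothetical placeholders rather than FLIRT output, and the sketch ignores FSL's own coordinate conventions.

```python
import math

# Hypothetical 4 x 4 affines in homogeneous coordinates (not real FLIRT output).
FUNC_TO_COPLANAR = [[1, 0, 0, 2.0], [0, 1, 0, -1.0], [0, 0, 1, 0.5], [0, 0, 0, 1]]
COPLANAR_TO_HIGHRES = [[1.02, 0, 0, 0], [0, 0.98, 0, 0], [0, 0, 1.0, 3.0], [0, 0, 0, 1]]
HIGHRES_TO_MNI = [[0.9, 0, 0, -5.0], [0, 1.1, 0, 4.0], [0, 0, 1.05, -2.0], [0, 0, 0, 1]]


def matmul(a, b):
    """Product of two 4 x 4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def compose(*stages):
    """Combine affines listed in order of application (first stage first)."""
    total = [[float(i == j) for j in range(4)] for i in range(4)]
    for stage in stages:
        total = matmul(stage, total)  # later stages multiply on the left
    return total


def apply(m, point):
    """Map an (x, y, z) point through a 4 x 4 affine."""
    v = (*point, 1.0)
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))


def fwhm_to_sigma(fwhm):
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


FUNC_TO_MNI = compose(FUNC_TO_COPLANAR, COPLANAR_TO_HIGHRES, HIGHRES_TO_MNI)
sigma_mm = fwhm_to_sigma(5.0)   # about 2.12 mm
sigma_vox = sigma_mm / 3.5      # about 0.61 voxels
```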

fMRI data analysis

Whole-brain voxel-wise regression analyses were performed using FSL’s FMRI Expert Analysis Tool (FEAT). Explanatory variables (EVs) consisted of the chase detect, mirror detect, chase project and mirror project events. Time points associated with cues, responses and missed events, in which participants failed to make a response, were also included as variables of no interest to account for variance in the model. Each variable was modeled as a boxcar function, where a value of 1 was assigned to time points associated with the event and a value of 0 to time points not associated with the event, and then convolved with a double gamma function. Subject-level analyses combining multiple runs were conducted using a fixed effects model.
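The regressor construction described above (a 0/1 boxcar convolved with a double-gamma hemodynamic response, then sampled once per TR) can be sketched as follows. The double-gamma parameters here (peak shape 6, undershoot shape 16, undershoot ratio 1/6, unit scale) follow the widely used SPM-style canonical form and are an assumption; FEAT's own double-gamma kernel has a similar shape, but this is not its implementation. The fine time step `dt` and the 32 s kernel length are illustrative choices.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)


def double_gamma(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Positive response minus a scaled, later undershoot (assumed parameters)."""
    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, under_shape)


def boxcar(n, dt, onsets, duration):
    """1 during each event, 0 elsewhere, sampled every dt seconds."""
    x = [0.0] * n
    for onset in onsets:
        start = int(round(onset / dt))
        stop = int(round((onset + duration) / dt))
        for i in range(start, min(stop, n)):
            x[i] = 1.0
    return x


def convolve_hrf(x, dt, kernel_s=32.0):
    """Causal discrete convolution of x with the double-gamma kernel."""
    kernel = [double_gamma(k * dt) for k in range(int(kernel_s / dt))]
    out = [0.0] * len(x)
    for i in range(len(x)):
        acc = 0.0
        for k, h in enumerate(kernel):
            if i - k < 0:
                break
            acc += h * x[i - k]
        out[i] = acc * dt
    return out


def regressor(onsets, duration, run_s, tr=2.0, dt=0.1):
    """Convolved boxcar for one EV, sampled at each volume acquisition."""
    n = int(round(run_s / dt))
    conv = convolve_hrf(boxcar(n, dt, onsets, duration), dt)
    return conv[::int(round(tr / dt))]
```

For example, `regressor([10.0], 10.0, 60.0)` models a single 10 s movie starting 10 s into a 60 s run and returns 30 values, one per 2 s volume. They stay at zero until the onset, rise, peak shortly after the movie ends and then dip slightly below zero (the undershoot).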

Contrasts comparing parameter estimates obtained from the regression analyses were defined at the subject level to identify brain regions that showed condition-specific effects. To address our research question directly, we tested for regions that were more engaged during the search for chasing vs mirror motion when either was present in the display with the chase detect > mirror detect contrast. We then tested for regions that were more engaged during the search for chasing vs mirror motion when random motion was presented with the chase project > mirror project contrast. For completeness, we also identified regions showing a main effect of searching for chasing vs searching for mirror motion regardless of motion type with the (chase detect + chase project) > (mirror detect + mirror project) contrast, and regions showing a main effect of searching for chasing or mirror motion when random motion was presented compared to when either motion was present with the (chase project + mirror project) > (chase detect + mirror detect) contrast. The results of these main effects are reported in Supplementary Figure S1.
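The four contrasts correspond to simple weight vectors over the condition EVs. The sketch assumes the EV order chase detect, mirror detect, chase project, mirror project; the nuisance EVs (cues, responses and missed events) would receive zero weight and are omitted. The parameter estimates in the example are hypothetical.

```python
EVS = ("chase detect", "mirror detect", "chase project", "mirror project")

CONTRASTS = {
    "chase detect > mirror detect": (1, -1, 0, 0),
    "chase project > mirror project": (0, 0, 1, -1),
    "(chase detect + chase project) > (mirror detect + mirror project)": (1, -1, 1, -1),
    "(chase project + mirror project) > (chase detect + mirror detect)": (-1, -1, 1, 1),
}


def contrast_estimate(weights, betas):
    """Weighted sum of parameter estimates (one weight per EV, in EVS order)."""
    if len(weights) != len(betas):
        raise ValueError("one weight per EV is required")
    return sum(w * b for w, b in zip(weights, betas))


betas = (1.0, 0.5, 2.0, 1.5)  # hypothetical parameter estimates, in EVS order
estimates = {name: contrast_estimate(w, betas) for name, w in CONTRASTS.items()}
```

Each weight vector sums to zero, so every contrast compares conditions with one another rather than with the implicit fixation baseline.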

Group-level analyses were performed on the parameter estimates obtained from the contrasts calculated at the subject level using a mixed effects model, where the mixed effects variance comprised both the fixed effects (within-subject) and random effects (between-subject) variance. The random effects component of variance was estimated using FSL’s FMRIB Local Analysis of Mixed Effects (FLAME 1 + 2) procedure (Beckmann et al., 2003). For significance testing, voxels were first thresholded at Z > 2.3. Cluster correction using Gaussian random-field theory was then applied to the thresholded voxels to correct for multiple comparisons (Worsley et al., 1996). Clusters, defined as contiguous sets of voxels with Z > 2.3, that survived the correction at a cluster probability of P < 0.05 were considered significant activations.
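The cluster-forming step can be sketched as thresholding a Z map at 2.3 (a one-sided voxel-wise P of about 0.011) and grouping the suprathreshold voxels into contiguous clusters. The sketch assumes 26-connectivity (voxels sharing a face, edge or corner) and a sparse dictionary representation of the map. It does not implement the Gaussian random-field step, which assigns each cluster a corrected probability based on its size and the estimated smoothness of the data.

```python
import itertools
import math


def z_to_p_one_sided(z):
    """Upper-tail probability of a standard normal variate."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def clusters(zmap, threshold=2.3):
    """Group voxels with Z > threshold into 26-connected clusters.

    zmap maps (i, j, k) voxel indices to Z values; clusters are returned
    largest first, each as a sorted list of voxel indices.
    """
    supra = {v for v, z in zmap.items() if z > threshold}
    offsets = [d for d in itertools.product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]
    seen, found = set(), []
    for start in supra:
        if start in seen:
            continue
        seen.add(start)
        stack, members = [start], []
        while stack:  # depth-first flood fill from the seed voxel
            v = stack.pop()
            members.append(v)
            for d in offsets:
                nb = (v[0] + d[0], v[1] + d[1], v[2] + d[2])
                if nb in supra and nb not in seen:
                    seen.add(nb)
                    stack.append(nb)
        found.append(sorted(members))
    return sorted(found, key=len, reverse=True)
```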

Analogous preprocessing, subject- and group-level analyses were conducted on data from the biological motion localizer. Here, EVs consisted of the biological motion and scrambled motion blocks, where each variable was modeled as a boxcar function for the 12 s duration of the block, and then convolved with a double gamma function. Regions more responsive to biological motion vs scrambled motion were defined by the biological motion > scrambled motion contrast.

Using custom MATLAB (http://www.mathworks.com) scripts, regions that were commonly activated in the chase detect > mirror detect, chase project > mirror project and biological motion > scrambled motion contrasts were defined as voxels that reached significance at the cluster-corrected threshold (voxel-wise Z > 2.3, cluster probability P < 0.05) in all three contrasts.
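Voxel-wise intersection of the three cluster-corrected maps amounts to a logical AND of binary masks. The Python sketch below only stands in for the custom MATLAB scripts, which are not described further. Masks are represented as sets of voxel indices, the example masks are hypothetical, and the unweighted center of mass is one simple way such a region's location might be summarized, not necessarily the one used for the coordinates in Supplementary Table S3.

```python
def conjunction(*masks):
    """Voxels present in every significant-voxel mask (logical AND)."""
    if not masks:
        return set()
    common = set(masks[0])
    for mask in masks[1:]:
        common &= set(mask)
    return common


def center_of_mass(voxels):
    """Unweighted mean coordinate of a non-empty voxel set."""
    voxels = list(voxels)
    n = len(voxels)
    return tuple(sum(v[axis] for v in voxels) / n for axis in range(3))


# Hypothetical masks: voxels surviving cluster correction in each contrast.
chase_detect_mask = {(30, 20, 25), (31, 20, 25), (31, 21, 25)}
chase_project_mask = {(31, 20, 25), (31, 21, 25), (40, 10, 10)}
biomotion_mask = {(31, 20, 25), (31, 21, 25), (29, 19, 25)}
overlap = conjunction(chase_detect_mask, chase_project_mask, biomotion_mask)
```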

RESULTS

Behavioral performance

Participants performed comparably in the chase detect (90.52%) and mirror detect (85.87%) trials, with no significant difference in accuracy [t(14) = 1.11, P = 0.29], suggesting that any differences in activation between these two conditions are unlikely to result from differences in participants’ ability to perceive chasing or mirror motion. Likewise, participants did not differ significantly in how often they reported having identified a chasing circle (65.60%) and a mirror image (64.88%) in chase project and mirror project trials, respectively [t(14) = 0.15, P = 0.89]. This suggests that any differences in activation between the chase project and mirror project conditions are unlikely to result from differences in participants’ tendency to perceive chasing vs mirror motion when presented with random motion. There was no significant difference in the percentage of missed trials between the chase detect (1.79%) and mirror detect (3.13%) conditions [t(14) = 1.48, P = 0.16], nor between the chase project (1.87%) and mirror project (1.63%) conditions [t(14) = 0.51, P = 0.62].
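Each behavioral comparison is a paired-samples t-test across the 15 participants, so the statistic has n − 1 = 14 degrees of freedom. A minimal standard-library sketch of the statistic follows. The scores in the example are hypothetical, not the study's data, and a two-tailed P value would additionally require the t distribution (for example via `scipy.stats.ttest_rel`, which returns both the statistic and the P value).

```python
import math
import statistics


def paired_t(a, b):
    """Paired-samples t statistic and degrees of freedom for matched scores."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length sequences with at least two pairs")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return statistics.mean(diffs) / se, n - 1


# Hypothetical per-participant accuracies (%) in two conditions.
t, df = paired_t([90, 95, 85, 100], [88, 90, 86, 93])
```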

Imaging results

Regions more responsive when searching for chasing vs a mirror image when presented with chasing and mirror motion, respectively (chase detect > mirror detect)

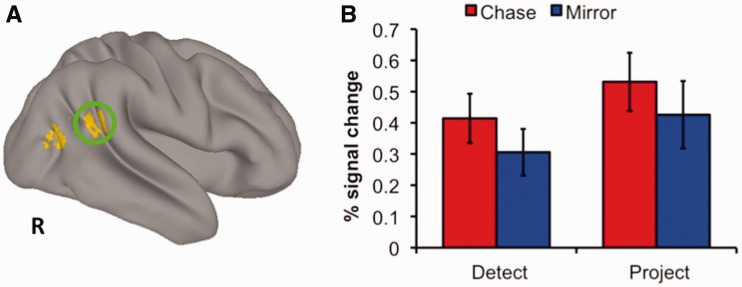

As expected, the chase detect > mirror detect contrast revealed activation in the right pSTS (peak voxel: 60, −42, 20; Z = 3.83). Activations were also seen in the left pSTS, right supramarginal gyrus, right superior and middle temporal gyri, postcentral gyrus, temporal pole, lateral occipital cortex, superior and inferior frontal gyri, dorsomedial prefrontal cortex, precuneus, posterior cingulate cortex, fusiform gyrus, cuneus, lingual gyrus, visual cortex (Figure 3A) and cerebellum (not shown). Coordinates for the local maxima from this contrast are reported in Supplementary Table S1. Results for the reverse contrast (mirror detect > chase detect) are reported in Supplementary Figure S2A.

Fig. 3.

(A) Activation map from the chase detect > mirror detect contrast, displayed on a 3D rendered brain using AFNI’s surface mapping tool (SUMA, http://afni.nimh.nih.gov/afni/suma). (B) Activation map from the chase project > mirror project contrast. In both images, the color bar ranges from Z = 2.3 (dark orange) to Z = 6.3 (bright yellow). The right pSTS is highlighted with a green circle.

Regions more responsive when searching for chasing vs a mirror image when presented with random motion (chase project > mirror project)

The chase project > mirror project contrast also yielded activations in the right pSTS (peak voxel in ascending limb: 54, −40, 26; Z = 4.45; peak voxel in posterior continuation: 56, −54, 16; Z = 4.45). Activations were also seen in the left pSTS, right angular and supramarginal gyri, right middle temporal gyrus, lateral occipital cortex, superior and middle frontal gyri, medial prefrontal cortex, precuneus, posterior cingulate cortex, cuneus and lingual gyrus (Figure 3B). Coordinates for the local maxima from this contrast are reported in Supplementary Table S2. Results for the reverse contrast (mirror project > chase project) are reported in Supplementary Figure S2B.

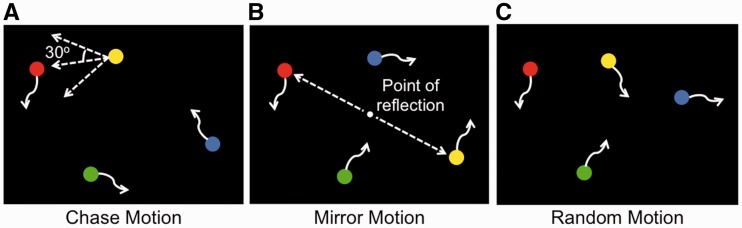

Regions commonly activated in the chase detect > mirror detect, chase project > mirror project and biological motion > scrambled motion contrasts

The intersection of activations reaching cluster-corrected threshold in all three contrasts consisted of voxels in the right pSTS, right lateral occipital cortex (Figure 4A) and left lateral occipital cortex (not shown). Coordinates for the center of mass of these regions are reported in Supplementary Table S3. Average percent signal changes were extracted from the pSTS region, defined by the intersection, for the chase detect, mirror detect, chase project and mirror project conditions and are illustrated in Figure 4B. No hypothesis was made about pSTS response to project vs detect trials due to differences in the visual characteristics of the two types of trials, namely that detect trials consisted of correlated motion, whereas project trials consisted of random motion. However, visual inspection of the percent signal changes suggested that pSTS activity was greater in project trials compared to detect trials. Indeed, paired-samples t-tests revealed that chase project trials yielded higher percent signal changes than chase detect trials [t(14) = 3.76, P = 0.002], as did mirror project trials over mirror detect trials [t(14) = 2.59, P = 0.02].
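The text does not specify how percent signal change was computed (for example, from scaled model parameter estimates or directly from the time courses). One simple definition, assumed here for illustration, expresses the mean signal during a condition relative to the mean fixation baseline and then averages across the voxels of the region. The signal values in the test are hypothetical.

```python
import statistics


def percent_signal_change(condition_signal, baseline_signal):
    """100 * (condition mean - baseline mean) / baseline mean for one voxel."""
    base = statistics.mean(baseline_signal)
    if base == 0:
        raise ValueError("baseline mean must be non-zero")
    return 100.0 * (statistics.mean(condition_signal) - base) / base


def roi_psc(voxel_condition, voxel_baseline):
    """Average percent signal change across ROI voxels (one signal list per voxel)."""
    return statistics.mean(
        percent_signal_change(c, b) for c, b in zip(voxel_condition, voxel_baseline)
    )
```

Per-participant values from a function like `roi_psc` would be the inputs to paired comparisons such as the project vs detect t-tests reported here.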

Fig. 4.

(A) Lateral view of the right hemisphere showing the intersection of voxels that reached significance at the cluster-corrected threshold in the chase detect > mirror detect, chase project > mirror project and biological motion > scrambled motion contrasts. The pSTS is highlighted in green. (B) Bar graphs plotting the average percent signal changes for the pSTS region highlighted in green from (A) for each of the four experimental conditions.

Post hoc region-of-interest analysis to account for brief, chance instances of chasing amidst random motion

One possible explanation for the greater pSTS activity in chase project than in mirror project trials is selective attention to brief instances of apparent chasing that occurred by chance in the random motion. To address this possibility, objective measures of chasing were calculated for each of the random motion movies used for the chase project and mirror project trials. The objective measure of chasing was defined, in arbitrary units, as the number of frames in which a circle moved toward the red circle with a maximum deviation of 30° from a direct path between that circle and the red circle, averaged across all three non-red circles in each movie. Within each condition, the movies were then sorted according to the amount of objective chasing in each movie and divided equally, using a median split, into those with the highest (high chase) and lowest (low chase) amounts of objective chasing.
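The objective chasing measure and the median split can be sketched as follows. The sketch assumes that positions are available frame by frame, with index 0 as the red circle, and that a circle counts as chasing on a frame when its displacement to the next frame lies within 30° of the direction from its current position to the red circle's current position. The exact reference positions, and how ties at the median are broken, are not specified in the text and are our assumptions.

```python
import math
import statistics


def angle_between(a, b):
    """Smallest absolute difference between two angles, in radians."""
    return abs((a - b + math.pi) % (2 * math.pi) - math.pi)


def chase_score(frames, max_dev_deg=30.0, red=0):
    """Frames of movement toward the red circle, averaged over non-red circles."""
    limit = math.radians(max_dev_deg)
    counts = []
    for c in range(len(frames[0])):
        if c == red:
            continue
        n = 0
        for prev, cur in zip(frames, frames[1:]):
            (px, py), (cx, cy) = prev[c], cur[c]
            if (cx, cy) == (px, py):
                continue  # a stationary circle has no heading
            heading = math.atan2(cy - py, cx - px)
            rx, ry = prev[red]
            direct = math.atan2(ry - py, rx - px)
            if angle_between(heading, direct) <= limit:
                n += 1
        counts.append(n)
    return statistics.mean(counts)


def median_split(scores):
    """Split movie ids into (low chase, high chase) halves by score."""
    order = sorted(scores, key=scores.get)  # ties keep their input order
    half = len(order) // 2
    return order[:half], order[half:]
```

With 28 random motion movies per condition, `median_split` returns 14 low-chase and 14 high-chase movie identifiers.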

A modified model was then created, where the chase project EV was replaced by chase project high chase and chase project low chase EVs, and the mirror project EV was replaced by the mirror project high chase and mirror project low chase EVs. As the trials for these new EVs were not evenly distributed across runs, the runs were concatenated for each participant and analyzed (with the new model) as a single run using AFNI’s (http://afni.nimh.nih.gov) 3dDeconvolve and 3dREMLfit tools. Average percent signal changes were extracted from the pSTS region, previously defined by the intersection, for chase project high chase, chase project low chase, mirror project high chase and mirror project low chase conditions.

A 2 (task: chase project, mirror project) × 2 (chase measure: high chase, low chase) repeated measures analysis of variance revealed a main effect of task [F(1, 14) = 29.27, P < 0.001], but not of chase measure [F(1, 14) = 0.96, P = 0.35], and no task × chase measure interaction [F(1, 14) = 0.41, P = 0.53]. This suggests that differential pSTS activation was likely driven by the task of searching for chasing vs the mirror image rather than by apparent visual cues of intentionality that occurred by chance during random motion.
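For a 2 × 2 fully within-subject design, each F(1, n − 1) equals the square of a one-sample t statistic computed on a per-participant contrast score: the task effect uses the chase project minus mirror project difference (averaged over chase measure), the chase-measure effect uses the high minus low chase difference (averaged over task), and the interaction uses the difference of differences. The sketch relies on that identity; its inputs would be per-participant percent signal changes, and the values in the example are hypothetical.

```python
import math
import statistics


def _f_from_scores(scores):
    """F(1, n - 1) as the squared one-sample t of per-participant contrast scores."""
    n = len(scores)
    t = statistics.mean(scores) / (statistics.stdev(scores) / math.sqrt(n))
    return t * t


def rm_anova_2x2(cp_high, cp_low, mp_high, mp_low):
    """Task (chase/mirror project) x chase measure (high/low) repeated measures F values."""
    cells = list(zip(cp_high, cp_low, mp_high, mp_low))
    task = [(a + b) / 2 - (c + d) / 2 for a, b, c, d in cells]
    measure = [(a + c) / 2 - (b + d) / 2 for a, b, c, d in cells]
    interaction = [(a - b) - (c - d) for a, b, c, d in cells]
    return {
        "task": _f_from_scores(task),
        "chase measure": _f_from_scores(measure),
        "interaction": _f_from_scores(interaction),
        "df": (1, len(cells) - 1),
    }


# Hypothetical percent signal changes for three participants.
result = rm_anova_2x2([4, 5, 6], [3, 5, 4], [2, 2, 3], [2, 3, 1])
# task F about 28, chase measure F about 0.84 (16/19), interaction F about 4
```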

DISCUSSION

This study investigated the extent to which activation in the pSTS can be driven by top–down attribution of intentions in the absence of reliable visual cues of intentionality. Participants were presented with displays of moving circles. In some trials, one circle chased another circle, depicting intentionality, and in other trials, one circle was the mirror image of another circle, depicting correlated motion. Participants were asked to identify the chasing circle or the mirror image. As expected, engaging in the chase detection task activated the right pSTS to a greater degree than engaging in the mirror detection task. This finding provides further support for the view that the pSTS does not merely respond to correlated motion but is preferentially engaged by intentionality.

Even when presented with circles that were in fact all moving along independent trajectories, participants still reported having identified a chasing circle or mirror image in ∼65% of the trials. As we hypothesized, the pSTS also showed greater activation when participants were searching for chasing vs the mirror image when presented with random motion. Crucially, this differential activity emerged even though the motion properties of the stimuli were statistically identical in both conditions. These results demonstrate that merely searching for intentionality in the absence of reliable visual cues of intentionality was sufficient to engage the pSTS.

Furthermore, the pSTS region identified by our experimental task intersected the pSTS region activated by a task commonly used to localize biological motion processing, namely viewing point-light displays of moving human figures and scrambled point-light displays. This suggests that pSTS activity to abstract displays of biological motion could reflect the viewer’s active attribution of intentions to those displays, rather than a response driven solely by visual cues of intentionality. Consistent with this, activity in the pSTS region defined by the intersection did not differentiate between high and low amounts of objective chasing when participants searched for chasing or the mirror image in random motion.

Interestingly, activity in the pSTS was not only greater when participants were searching for chasing amidst random motion vs chasing motion, but was also greater when searching for the mirror image amidst random motion vs mirror motion. One possible reason for the higher pSTS activity observed when searching for chasing during random motion trials is that participants may have switched their candidate ‘wolf’ from time to time because there was no obvious chasing. In a recent study, Gao et al. (2012) found that a display that conveyed rapidly changing intentions elicited greater pSTS activity than a display that conveyed only a single consistent intention. Therefore, attributing intentions to multiple circles at multiple times could have resulted in higher pSTS activity. A similar principle could apply to the detection of a mirror image, which was also shown to elicit pSTS activity when mirror motion was presented. This is not surprising considering that the pSTS also responds to correlated motion (Schultz et al., 2005). Participants could therefore have had to switch their candidate mirror image several times when presented with random motion, thus leading to higher pSTS activity. However, given that statistically identical displays of random motion were presented both when participants were searching for chasing and when they were searching for the mirror image, there would be no reason to expect participants to switch their candidate ‘wolf’ more often than they would switch their candidate mirror image. Yet, the pSTS was still more strongly engaged when searching for chasing vs the mirror image. This suggests that pSTS activity was driven by what participants were searching for and what they expected to see, and not by differences in visual elements of the displays.

A further explanation for the greater pSTS activity observed in response to random motion could be that participants simply had to make more attention shifts when chasing or mirror motion was not easily detected, as was the case when the circles moved randomly. Research has shown that the temporoparietal junction, adjacent to the pSTS, is also involved in shifting and reorienting spatial attention (reviewed in Corbetta and Shulman, 2002). However, this does not explain the greater pSTS activity observed when participants searched for chasing vs the mirror image, because the motion properties of the moving circles, as well as task demands, were similar in both conditions. This again suggests that the pSTS is preferentially engaged during social processing, in this case when attributing intentions.

Previous studies have demonstrated that pSTS activity is greater when participants attend to the social aspects of visual stimuli than when they attend to the non-social aspects (Wheatley et al., 2007; Tavares et al., 2008). Our study extends these findings by showing that the pSTS can be robustly driven by what the observer expects to see, here specifically intentionality, even when reliable visual cues for intentionality are absent. Our findings not only highlight the important role that the observer’s expectations play in pSTS activity but also demonstrate the extent to which pSTS activity can be modulated by such top–down influences. This raises the question of how else pSTS activity observed during passive viewing of biological motion might be interpreted, and what the lack of differential pSTS activity often observed in individuals with autism spectrum disorders (ASDs) (Castelli et al., 2002; Pelphrey et al., 2005; Koldewyn et al., 2011) might then reflect.

One possible interpretation is that the commonly observed pSTS response to passive viewing of biological motion stems from the participant’s tendency to seek intentions, and that the presence or absence of visual cues of intentionality, although not entirely responsible for pSTS activity, simply renders some stimuli more amenable to top–down intention attribution than others. Under this interpretation, the lower differentiation of the pSTS response to point-light displays (Koldewyn et al., 2011), Heider and Simmel animations (Castelli et al., 2002) and even static faces (Hadjikhani et al., 2007) found in individuals with ASDs could reflect a failure to instinctively seek out intentions in the stimuli, in which case the mere presence of visual cues of intentionality may not be sufficient to drive pSTS activity. Similarly, in studies where participants view actions with unexpected outcomes (Pelphrey et al., 2003, 2004; Vander Wyk et al., 2009), the higher pSTS activity observed in typically developing individuals could result from a greater degree of intention attribution when their expectations about the agent’s intentions are violated. In individuals with ASDs, the absence of this increase in pSTS activity to unexpected outcomes (Pelphrey et al., 2005, 2011) could mean that these individuals do not actively assess, or form expectations about, the agent’s intentions, such that no further intention attribution occurs when the outcomes are incongruent with the initially portrayed intention. We note that our study design does not provide conclusive evidence for this account; we seek only to demonstrate that such an interpretation is plausible, given the extent to which expectations and top–down influences affect pSTS activity.

CONCLUSION

The findings from this study show that the pSTS, a region involved in processing the goals and intentions of animate agents, can be driven by top–down attribution of intentions, even when visual evidence for intentionality is lacking. Moreover, this region was also activated during a biological motion perception task that is typically used to localize brain regions involved in biological motion processing. The ‘common’ activation of the pSTS by our task and by the biological motion perception task suggests that pSTS activity during the perception of biological motion could be driven by the viewer’s active attribution and interpretation of intentionality in the display, rather than by the mere presence of visual cues signaling intentionality.

SUPPLEMENTARY DATA

Supplementary data are available at SCAN online.

Conflicts of Interest

None declared.

Acknowledgments

We thank Rebecca Dyer, Miranda Farmer and Cora Mukerji for their help in data collection. This work was supported by the Yale University FAS Imaging Fund and by the National Institutes of Health (MH05286 to G.M.). T.G. is currently at the Department of Brain and Cognitive Sciences at MIT.

REFERENCES

- Allison T, Puce A, McCarthy G. Social perception from visual cues: role of the STS region. Trends in Cognitive Sciences. 2000;4(7):267–78. doi: 10.1016/s1364-6613(00)01501-1.

- Beauchamp MS, Lee KE, Haxby JV, Martin A. fMRI responses to video and point-light displays of moving humans and manipulable objects. Journal of Cognitive Neuroscience. 2003;15(7):991–1001. doi: 10.1162/089892903770007380.

- Beckmann CF, Jenkinson M, Smith SM. General multilevel linear modeling for group analysis in fMRI. NeuroImage. 2003;20(2):1052–63. doi: 10.1016/S1053-8119(03)00435-X.

- Blakemore SJ, Boyer P, Pachot-Clouard M, Meltzoff A, Segebarth C, Decety J. The detection of contingency and animacy from simple animations in the human brain. Cerebral Cortex. 2003;13(8):837–44. doi: 10.1093/cercor/13.8.837.

- Bonda E, Petrides M, Ostry D, Evans A. Specific involvement of human parietal systems and the amygdala in the perception of biological motion. Journal of Neuroscience. 1996;16(11):3737–44. doi: 10.1523/JNEUROSCI.16-11-03737.1996.

- Brothers L. The social brain: a project for integrating primate behavior and neurophysiology in a new domain. Concepts in Neuroscience. 1990;1:27–51.

- Castelli F, Frith C, Happé F, Frith U. Autism, Asperger syndrome and brain mechanisms for the attribution of mental states to animated shapes. Brain. 2002;125(8):1839–49. doi: 10.1093/brain/awf189.

- Castelli F, Happé F, Frith U, Frith C. Movement and mind: a functional imaging study of perception and interpretation of complex intentional movement patterns. NeuroImage. 2000;12(3):314–25. doi: 10.1006/nimg.2000.0612.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience. 2002;3(3):201–15. doi: 10.1038/nrn755.

- Epley N, Waytz A, Cacioppo JT. On seeing human: a three-factor theory of anthropomorphism. Psychological Review. 2007;114(4):864–86. doi: 10.1037/0033-295X.114.4.864.

- Gao T, Newman GE, Scholl BJ. The psychophysics of chasing: a case study in the perception of animacy. Cognitive Psychology. 2009;59(2):154–79. doi: 10.1016/j.cogpsych.2009.03.001.

- Gao T, Scholl BJ, McCarthy G. Dissociating the detection of intentionality from animacy in the right posterior superior temporal sulcus. Journal of Neuroscience. 2012;32:14276–87. doi: 10.1523/JNEUROSCI.0562-12.2012.

- Hadjikhani N, Joseph RM, Snyder J, Tager-Flusberg H. Abnormal activation of the social brain during face perception in autism. Human Brain Mapping. 2007;28(5):441–9. doi: 10.1002/hbm.20283.

- Heider F, Simmel M. An experimental study of apparent behavior. American Journal of Psychology. 1944;57(2):243–59.

- Koldewyn K, Whitney D, Rivera SM. Neural correlates of coherent and biological motion perception in autism. Developmental Science. 2011;14(5):1075–88. doi: 10.1111/j.1467-7687.2011.01058.x.

- Pelphrey KA, Morris JP, McCarthy G. Grasping the intentions of others: the perceived intentionality of an action influences activity in the superior temporal sulcus during social perception. Journal of Cognitive Neuroscience. 2004;16(10):1706–16. doi: 10.1162/0898929042947900.

- Pelphrey KA, Morris JP, McCarthy G. Neural basis of eye gaze processing deficits in autism. Brain. 2005;128(5):1038–48. doi: 10.1093/brain/awh404.

- Pelphrey KA, Shultz S, Hudac CM, Vander Wyk BC. Research review: constraining heterogeneity: the social brain and its development in autism spectrum disorder. Journal of Child Psychology and Psychiatry. 2011;52(6):631–44. doi: 10.1111/j.1469-7610.2010.02349.x.

- Pelphrey KA, Singerman JD, Allison T, McCarthy G. Brain activation evoked by perception of gaze shifts: the influence of context. Neuropsychologia. 2003;41(2):156–70. doi: 10.1016/s0028-3932(02)00146-x.

- Schultz J, Friston KJ, O’Doherty J, Wolpert DM, Frith CD. Activation in posterior superior temporal sulcus parallels parameter inducing the percept of animacy. Neuron. 2005;45(4):625–35. doi: 10.1016/j.neuron.2004.12.052.

- Schultz RT, Grelotti DJ, Klin A, et al. The role of the fusiform face area in social cognition: implications for the pathobiology of autism. Philosophical Transactions of the Royal Society of London B. 2003;358(1430):415–27. doi: 10.1098/rstb.2002.1208.

- Shultz S, Lee SM, Pelphrey K, McCarthy G. The posterior superior temporal sulcus is sensitive to the outcome of human and non-human goal-directed actions. Social Cognitive and Affective Neuroscience. 2011;6(5):602–11. doi: 10.1093/scan/nsq087.

- Tavares P, Lawrence AD, Barnard PJ. Paying attention to social meaning: an fMRI study. Cerebral Cortex. 2008;18(8):1876–85. doi: 10.1093/cercor/bhm212.

- Vander Wyk BC, Hudac CM, Carter EJ, Sobel DM, Pelphrey KA. Action understanding in the superior temporal sulcus region. Psychological Science. 2009;20(6):771–7. doi: 10.1111/j.1467-9280.2009.02359.x.

- Wheatley T, Milleville S, Martin A. Understanding animate agents: distinct roles for the social network and mirror system. Psychological Science. 2007;18(6):469–74. doi: 10.1111/j.1467-9280.2007.01923.x.

- Worsley KJ, Marrett S, Neelin P, Vandal AC, Friston KJ, Evans AC. A unified statistical approach for determining significant signals in images of cerebral activation. Human Brain Mapping. 1996;4(1):58–73. doi: 10.1002/(SICI)1097-0193(1996)4:1<58::AID-HBM4>3.0.CO;2-O.