Abstract

Consonant recognition in noise was measured at a fixed signal-to-noise ratio as a function of low-pass-cutoff frequency and noise location in older adults fit with bilateral hearing aids. To quantify age-related differences, spatial benefit was assessed in younger and older adults with normal hearing. Spatial benefit was similar for all groups, suggesting that older adults used interaural difference cues to improve speech recognition in noise equivalently to younger adults. Although amplification was sufficient to increase high-frequency audibility with spatial separation, hearing-aid benefit was minimal, suggesting that factors beyond simple audibility may be responsible for limited hearing-aid benefit.

Introduction

Older adults have difficulty understanding speech, especially in backgrounds of noise (Plomp 1978; CHABA 1988). Relative to younger adults with normal hearing, older adults with and without hearing loss have higher thresholds for speech in steady-state noise and derive less benefit from spatial separation of speech and noise sources (Dubno et al. 2002, 2008). The primary factor contributing to deficits in speech understanding is elevated auditory thresholds, which reduce speech audibility. Accordingly, amplification should restore important speech information and improve speech understanding, resulting in significant hearing-aid benefit. However, many older adults who could benefit from amplification are not satisfied hearing-aid users, especially in noisy listening conditions (Humes et al. 2002; Bertoli et al. 2010).

A widely debated issue concerning auditory function and its relation to hearing aids is the benefit and optimal degree of higher frequency amplification for individuals with high-frequency hearing loss. Some studies report improved speech recognition and sound quality judgments with increased speech audibility in the higher frequencies (e.g., Turner & Henry 2002; Hornsby & Ricketts 2003, 2006; Simpson et al. 2005; Plyler & Fleck 2006; Horwitz et al. 2008; Moore & Füllgrabe 2010; Moore et al. 2010). Other studies report that speech recognition remained constant or deteriorated as amplification was added in the higher frequencies (Ching et al. 1998; Hogan & Turner 1998; Turner & Cummings 1999; Vickers et al. 2001; Baer et al. 2002; Amos & Humes 2007). Thus, it is important to determine if significant benefit to speech recognition is derived from amplifying higher frequency speech, especially under realistic listening conditions with spatially separated sound sources. Amplification that does not provide added benefit to speech recognition or that has negative consequences, such as uncomfortable loudness, increased feedback or distortion, or upward or downward spread of masking, could result in reduced hearing-aid use.

Moore et al. (2010) assessed the effect of providing higher frequency amplification for speech and background co-located at either ± 60° or spatially separated with speech at +60° and background at −60° (or vice versa). Sentences and a two-talker background were recorded through KEMAR, processed to simulate a hearing aid based on CAMEQ2-HF recommended gain and sound field listening in a reverberant room, and presented through headphones. Consistent with the hypothesis that the benefit of an extended aided bandwidth in the separated conditions would be greatest at higher frequencies where the head-shadow effect is largest, spatial separation benefit for hearing-impaired listeners was greater aided than unaided, and increased as the cutoff frequency increased from 5.0 to 7.5 kHz. Although no further improvement was found with the addition of even higher frequencies, large individual differences in benefit were observed. In contrast, Füllgrabe et al. (2010) reported mixed results in the amount of benefit when extending the bandwidth in a simulated hearing aid. Higher frequency gain above 5.0 kHz provided benefit for hearing-impaired listeners as determined by subjective measures of audibility of high-frequency components of speech. However, scores for vowel-consonant-vowel (VCV) identification did not improve as the low-pass cutoff was increased.

In addition to interaural level differences (e.g., head shadow), interaural time differences contribute to improved speech recognition when speech and noise are spatially separated. While benefit from interaural level differences may be limited by high-frequency hearing loss and inadequate hearing-aid gain, the use of interaural timing information may be limited by age (Cranford et al. 1993; Strouse et al. 1998; Dubno et al. 2002, 2008). Therefore, comparison of results from younger and older subjects with normal hearing can be used to quantify age-related differences in the use of binaural cues without the confounding effects of hearing loss.

In a previous experiment with older adults with hearing loss, Ahlstrom et al. (2009) observed that, on average, thresholds for HINT sentences in babble improved significantly with hearing aids and with speech and babble spatially separated. Comparisons of observed HINT thresholds to thresholds predicted using an aided importance-weighted speech-audibility metric (aided audibility index) were used to determine the extent to which amplification improved audibility and to separate simple audibility effects from other effects that may influence speech recognition, hearing-aid benefit, and spatial benefit. Results revealed that hearing-aid benefit was significantly poorer than predicted. In contrast, spatial benefit was significantly greater than predicted and improved significantly as the low-pass cutoff frequency of speech and babble was increased from low- to mid-frequencies, but only with hearing aids. Neither spatial benefit nor hearing-aid benefit improved as cutoff frequency increased further to include higher frequencies. There were at least two explanations for these results: (1) listeners could not take advantage of the additional higher frequency speech information provided by amplification or (2) listeners were provided only limited hearing-aid gain and inadequate audibility at higher frequencies. This lack of audible speech across bandwidth for some subjects may have been a consequence of the relationship between NAL-NL1 prescribed hearing-aid gain and magnitude of high-frequency hearing loss. That is, as hearing loss increased, the amount of prescribed gain did not increase accordingly.

As a follow-up to Ahlstrom et al. (2009), the current study was designed to assess age-related differences and the effects of hearing loss on the benefit attributable to spatial separation of speech and noise. In addition to including younger adults with normal hearing, some changes in methods were made for this study. For several reasons, nonsense syllables were used instead of HINT sentences. First, assessing the relative importance of higher frequency regions of the speech spectrum was a main goal of the study. The cutoff frequency for equally intelligible bands (or “crossover frequency”) is higher for nonsense syllables than for sentences. Second, benefit from spatial separation of speech and noise most likely involves both perceptual and cognitive processes. Identifying a consonant in a nonsense syllable presented in a noisy background may involve fewer memory and attentional resources than repeating an entire HINT sentence. With the use of nonsense syllables, speech and noise were presented at fixed levels rather than using an adaptive procedure.

Consonant recognition was measured in steady-state speech-shaped noise as a function of low-pass cutoff frequency for younger and older adults with normal hearing and for older adults with hearing loss, with noise either co-located with the speech or with spatial separation. Older subjects with hearing loss were also tested with and without bilateral hearing aids. To address the limitation in hearing-aid gain observed in Ahlstrom et al. (2009), hearing aids were programmed to assure that audibility was at least partially restored across the entire bandwidth of speech in the ear away from the noise when speech and noise were spatially separated. This design can quantify effects of reduced audibility and determine the extent to which older adults can benefit from increased higher frequency speech audibility provided by amplification.

Although increasing speech intelligibility is the main objective of providing amplification, improved sound quality and preference for increased higher frequency speech information have been observed in adults with mild-to-moderate hearing loss using hearing aids (e.g., Ricketts et al. 2008). However, less information is available on perceived listening effort and listeners’ subjective rating of their understanding of speech when using hearing aids in difficult environments. Thus, subjective assessment of listening effort, mental demand, and performance may provide information beyond traditional speech-recognition scores to assess hearing-aid and spatial benefit. The NASA Task Load Index (Hart & Staveland 1988), a self-report measure, is widely used in the fields of human factors and cognitive psychology to characterize mental effort and task difficulty (Lee & Liu 2003). The questionnaire has been used more recently in studies designed to assess listening effort during challenging tasks (Zekveld et al. 2009; Harris et al. 2010; Mackersie & Cones 2011; Veneman et al. 2012). Here, a modified version of the NASA Task Load Index was administered to assess each listener’s subjective ratings of task workload.

Materials and Methods

Subjects

Fourteen younger adults (mean age: 23.4 yr.; range: 20–28 yr.) and 10 older adults (mean age: 67.7 yr.; range: 58–78 yr.) with normal hearing, and 12 older adults (mean age: 69.8 yr.; range: 57–81 yr.) with mild-to-moderate sloping sensorineural hearing loss participated in the study. Two of the subjects with hearing loss were current users of hearing aids, although neither was fit with signal processing algorithms similar to those used in the current study. For all subjects, differences between ears, averaged across frequency from 0.25 to 6.0 kHz, were ≤ 12 dB; for 31 of the 36 subjects, differences were ≤ 5 dB. Subjects were compensated on an hourly basis for their participation.

Apparatus and Stimuli

Thresholds for Narrowband Noises

Thresholds for narrowband noises in quiet were measured in the sound field and used primarily in Articulation Index (AI) predictions. Signals were digitally generated (TDT DD1) narrowband noises, 350 ms in duration (including 10-ms rise/fall ramps) with nominal filter slopes of 96 dB/oct or steeper, and were centered at 0.25, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, and 6.0 kHz; bandwidths were 72, 120, 170, 195, 220, 270, 320, and 480 Hz, respectively. For measurements of masked thresholds (hearing-impaired subjects only) the masker was a broadband noise shaped to match the one-third-octave band levels of the materials used to measure speech recognition (nonsense syllables) and was recorded onto a compact disc for playback. These thresholds were used primarily to estimate hearing-aid gain. Overall levels of the signals (and speech-shaped masker) were controlled individually using programmable and manual attenuators (TDT PA4). Stimuli were low-pass filtered at 7.1 kHz (Stanford Research Dual Channel Filter Model 650 and TDT PF1), passed through an amplifier (Crown D-75A), and delivered through the 0° loudspeaker. For hearing-impaired subjects, the speech-shaped masker was either delivered through the 0° (spatially coincident) or 90° (spatially separated) loudspeaker. For the 90° condition, the masker was presented from the loudspeaker nearest each subject’s poorer hearing ear, which was determined from AI-weighted average pure-tone thresholds measured in quiet under headphones. The rms level of the masker was fixed at 66 dB SPL, the masker level that was used for all speech measures.

Speech and Speech-shaped Masker

Speech signals were 57 consonant-vowel and 54 vowel-consonant syllables formed by combining the consonants /b,ʧ,d,f,g,k,l,m,n,ŋ,p,r,s,ʃ,t,θ,v,w,j,z/ with the vowels /α,i,u/ spoken by one male and one female talker without a carrier phrase (a total of 222 syllables). Descriptions of the speech stimuli are in Dubno and Schaefer (1992) and Dubno et al. (2003). The long-term rms level of the speech was fixed at 70 dB SPL, resulting in a +4 dB signal-to-noise ratio (SNR) re: the 66 dB SPL masker. For all subjects, routing of the speech and speech-shaped masker was similar to that previously described for the narrowband noises and the speech-shaped masker, except that the speech and masker were low-pass filtered at 1.7, 3.4, and 7.1 kHz (3-dB down points). Nonsense syllables were always presented at 0°. Masker location was either 0° or 90°. Speech and noise levels were adjusted prior to low-pass filtering; thus, levels within the pass-bands were equal for all conditions.

Sound Field Calibration

The sound field (Industrial Acoustics Company booth with inside dimensions: 3.05 m width × 2.845 m length × 1.98 m height) was pre-calibrated using the protocol described by Walker, Dillon and Byrne (1984). This procedure was used to verify that small changes in distance from the “test” position of the listener’s head to the loudspeaker did not result in large changes in level. Seven calibration points, along three axes relative to the loudspeaker, were measured for each of three loudspeakers. Each of these measurements was made with several narrowband noises. Once the “test” position was identified, the position of the three loudspeakers, as well as the position to be occupied by the subject, was marked for subsequent testing.

The level of the stimuli was measured at that point in the sound field to be occupied by the listener’s head (substitution method). This was done for each of the three loudspeakers individually. In addition, each loudspeaker’s frequency response was measured and found to be uniform (±3 dB) between 0.1 and 10.0 kHz.

Hearing Aids

All hearing-impaired subjects were fit bilaterally with digital, behind-the-ear hearing aids (Oticon EPOQ) with custom Receiver-In-The-Ear (RITE) molds. The earmolds were made with small vents (for real-ear probe measurements); during testing, the vents were plugged to reduce acoustic feedback and make target gains more easily achievable. Noise management, expansion, and directionality features were not engaged.

Each hearing aid was programmed based on a quasi-DSL v4.0 algorithm using the International Collegium of Rehabilitative Audiology (ICRA; Dreschler et al. 2001)-weighted composite steady-state noise provided by the Fonix 7000 Hearing Aid Test System (Frye Electronics). Hearing-aid gain was adjusted across frequency until 2-cc coupler and real-ear gain values were generally within ±5 dB of target values for an input level of 70 dB SPL (see Table 1). In addition, 2-cc coupler frequency-gain responses were measured using the ICRA noise, with input levels ranging from 70–85 dB SPL in 5-dB steps, to ensure that the hearing aids were not distorting or saturating at levels similar to peak levels of speech in the aided test conditions.

Table 1.

Average (±1 SE) absolute differences (in dB) between measured and target gain in the shadowed ear for an input of 70 dB SPL for 2-cc coupler gain and real-ear gain.

| Frequency (kHz) | 2-cc Coupler Gain (dB) | Real-Ear Gain (dB) |
|---|---|---|
| 0.5 | 1.9 (0.7) | 1.4 (0.7) |
| 1.0 | −1.1 (0.8) | 0.2 (1.2) |
| 2.0 | 4.9 (1.2) | 4.8 (1.5) |
| 3.0 | −4.2 (0.9) | −4.0 (1.1) |
| *4.0 | −2.0 (1.1) | −5.4 (1.0) |
| *6.0 | 0.1 (1.2) | −2.9 (0.9) |

Asterisks (*) refer to frequencies at which target gain values were not prescribed for all subjects

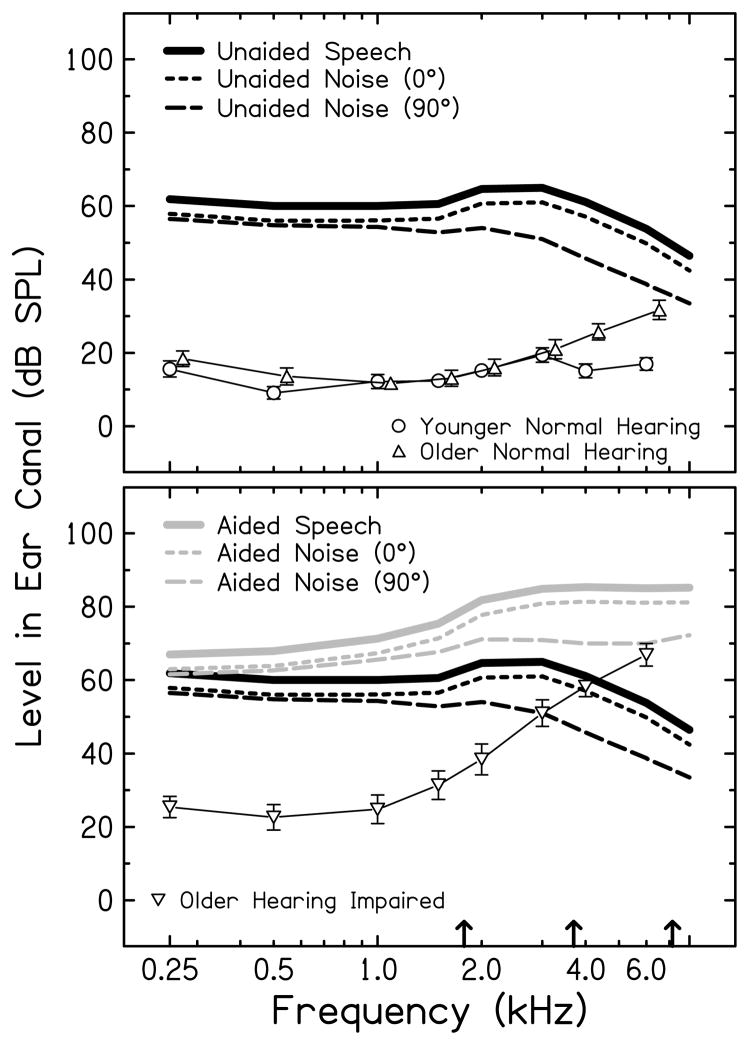

The DSL algorithm was chosen because the prescribed gain for each subject provided at least minimal high-frequency speech audibility in the far ear when speech and noise were spatially separated. Figure 1 shows the mean unaided speech and noise spectra (with noise at 0° and 90°) and quiet sound-field thresholds (described in more detail later) for younger and older subjects with normal hearing (top panel) and older subjects with hearing loss (bottom panel). Mean real-ear aided spectra (speech and noise) are shown in the bottom panel (hearing-impaired group only). Although individual subjects had varying amounts of gain, this figure shows that the average gain provided by the hearing aids was sufficient to make higher frequency speech information audible and to achieve some benefit of spatial separation.

Figure 1.

One-third-octave band spectrum of unaided speech (thick solid black line in each panel) and speech-shaped noise at 0° (dotted black line in each panel) and at 90° (dashed black line in each panel). Top panel: Mean (±1 SE) quiet thresholds for narrowband noises for younger adults with normal hearing (circles) and older adults with normal hearing (triangles). Bottom panel: Mean (±1 SE) quiet thresholds for narrowband noise for older adults with hearing loss (reverse triangles). Also shown is the one-third-octave band spectrum for the mean (±1 SE) aided speech (thick solid gray line) and speech-shaped noise at 0° (dotted gray line) and at 90° (dashed gray line). The arrows on the abscissa at 1.7, 3.4, and 7.1 kHz indicate the 3-dB down cut-off frequencies for the three low-pass filters.

Procedures

Thresholds for Narrowband Noises

Thresholds for narrowband noises (quiet and masked) in the sound field were measured using a single-interval (yes-no) maximum-likelihood psychophysical procedure (Green 1993; Leek et al. 2000). Each threshold was determined from 24 trials including four catch trials. Signal level was varied adaptively with a minimum step size of 0.5 dB. Threshold was defined as the “sweet point” (Green 1993), which was calculated based on the estimated m (the midpoint of the psychometric function) and α (the false alarm rate) after 24 trials. Narrowband-noise threshold was the average of two measurements. “Listen” and “vote” periods were displayed on a computer monitor placed above and behind the 0° loudspeaker. Participants responded by clicking one of two mouse buttons corresponding to the responses “yes, I heard the noise” and “no, I did not hear the noise.”

Thresholds were measured for each ear with the non-test ear plugged with an E-A-R® roll-down foam earplug (Aearo Company). After inspecting the ear canal with an otoscope, an appropriate earplug size was selected (small, medium, or large) and inserted deeply into the ear canal. Placement was verified by visual inspection and, after adjustment, attenuation was confirmed by the subject. For hearing-impaired subjects, masked thresholds were also measured both unaided and with each ear unilaterally aided. Spatial benefit for narrowband noises was computed by subtracting thresholds with the masker at 90° from thresholds with the masker at 0°; hearing-aid benefit was computed by subtracting aided thresholds from unaided thresholds.
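The two difference scores defined above can be illustrated with a short sketch. This is not the authors' analysis code; the function names and the example masked thresholds are hypothetical and were chosen only to show the sign convention, in which positive values indicate improvement because lower thresholds are better.

```python
def spatial_benefit_db(threshold_masker_0, threshold_masker_90):
    """Spatial benefit (dB): threshold with masker at 0 deg minus
    threshold with masker at 90 deg."""
    return threshold_masker_0 - threshold_masker_90


def hearing_aid_benefit_db(unaided_threshold, aided_threshold):
    """Hearing-aid benefit (dB): unaided threshold minus aided threshold."""
    return unaided_threshold - aided_threshold


# Hypothetical masked thresholds (dB SPL) for one narrowband noise.
unaided_0, unaided_90 = 58.0, 55.0
aided_0, aided_90 = 52.0, 46.0

unaided_spatial = spatial_benefit_db(unaided_0, unaided_90)    # 3.0 dB
aided_spatial = spatial_benefit_db(aided_0, aided_90)          # 6.0 dB
aid_benefit_at_90 = hearing_aid_benefit_db(unaided_90, aided_90)  # 9.0 dB

print(unaided_spatial, aided_spatial, aid_benefit_at_90)
```

In this made-up case, spatial benefit is larger aided than unaided, which is the pattern the benefit measures are designed to detect.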

Consonant Recognition in Speech-shaped Noise

For measuring consonant recognition, the set of possible consonants was presented on a computer monitor and subjects responded by clicking with a mouse. Noise-source location (0° vs. 90°) and low-pass filter cutoff were randomized. For hearing-impaired subjects, unaided conditions were measured first, followed by aided conditions. Spatial benefit for speech was defined as the difference in recognition scores for the two noise-source locations; hearing-aid benefit was defined as the difference in recognition scores between aided and unaided conditions.

Training and Practice

Subjects had no prior experience with the speech materials used in the experiment or with the listening tasks. During training, the examiner provided feedback until the subject understood the task and provided consistent results. For the simple task of measuring detection thresholds with narrowband noise, this was accomplished after four to five measurements for most participants. For speech recognition, subjects were familiarized with the testing procedure by listening to syllables with no noise background, followed by practice with the lowest and highest of three low-pass-filter cutoffs in both loudspeaker locations. During this practice period, hearing-impaired subjects were wearing the study hearing aids. After completion of the initial training period, no feedback was provided. Following approximately 1 hr. of practice with the various tasks, data collection began.

Unaided and Aided Articulation Index

Predicted scores for recognition of nonsense syllables in speech-shaped noise were determined from AI values (Souza & Turner 1999; Stelmachowicz et al. 2002) computed using procedures similar to ANSI (1969) including: (1) each subject’s quiet thresholds (transformed to individual in situ dB SPL) for narrowband noises measured at 0°; (2) the spectra and level of the nonsense syllables at 0°; (3) the spectra and level of the speech-shaped noise at 0° and 90°; and (4) the frequency importance function and AI-recognition transfer function developed for these nonsense syllables (Dirks et al. 1990). It is important to note that this simple audibility-based predictive model includes frequency-dependent level differences between ears but does not include effects of other interaural difference cues that may influence speech recognition thresholds. Thus, differences between predicted and observed scores may reveal a role of binaural difference cues (unaided and aided) for consonant recognition beyond the purely acoustic phenomenon of head diffraction.

To quantify each subject’s quiet thresholds, recall that narrowband-noise thresholds were measured at 0° in the sound field for each ear individually with the non-test ear plugged. In order to transform these thresholds into individual in situ dB SPL values to be used in AI calculations, the speech-shaped noise was presented from the 0° loudspeaker at 70 dB SPL. Using a probe microphone (Etymōtic Model ER-7C), the output from each ear canal was measured independently and the amount of ear-canal resonance at each narrow band frequency was determined. This level, added to the measured quiet threshold, resulted in thresholds defined in terms of SPL generated in each individual ear canal.

Speech and noise levels for eight frequency bands of variable width centered at audiometric frequencies were based on individual subjects’ probe microphone recordings of the speech-shaped noise. Ear-canal recordings for each ear separately were made with the speech-shaped noise presented at 0° and 90° in both unaided and aided conditions. The probe tube was placed in the ear canal at a depth of 27 mm past the intertragal notch, resulting in a location within ~5–7 mm of the eardrum of the average ear (Dirks, Ahlstrom & Eisenberg 1996). The level of the speech-shaped noise was fixed at 70 dB SPL during the recordings. The waveform recordings were analyzed using Adobe Audition (Version 1.5). An AI value was calculated for each ear of each subject, unaided and aided (hearing-impaired subjects only), for each low-pass filter and noise-source condition (0° vs. 90°). A 30-dB dynamic range (+12 dB speech peaks and −18 dB speech minima) was assumed, and, similar to Stelmachowicz et al. (2002), audibility was based on the better sensation level for each frequency band regardless of ear; no increase in audibility was assumed when the ears were symmetrical.
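The audibility logic described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the ANSI (1969) procedure or the authors' implementation: each band's audible fraction is taken as the portion of the 30-dB speech range (peaks at +12 dB re: band rms) that lies above the higher of the noise level and the in situ quiet threshold, the better ear is taken band by band, and the result is weighted by band importance. The function names, the two-band example, the band levels, and the equal importance weights are all hypothetical; the study used eight bands and the importance function of Dirks et al. (1990).

```python
DYNAMIC_RANGE_DB = 30.0  # assumed speech dynamic range
PEAK_OFFSET_DB = 12.0    # speech peaks re: band rms level


def band_audibility(speech_rms_db, noise_db, threshold_db):
    """Fraction (0 to 1) of the 30-dB speech range above the effective floor."""
    floor_db = max(noise_db, threshold_db)
    audible_db = (speech_rms_db + PEAK_OFFSET_DB) - floor_db
    return min(max(audible_db / DYNAMIC_RANGE_DB, 0.0), 1.0)


def articulation_index(left_ear, right_ear, importance):
    """Importance-weighted sum of better-ear band audibility.

    left_ear, right_ear: one (speech_rms_db, noise_db, threshold_db)
    tuple per band, all in ear-canal dB SPL.
    importance: band weights that sum to 1.
    """
    if not (len(left_ear) == len(right_ear) == len(importance)):
        raise ValueError("each input needs one entry per band")
    total = 0.0
    for left, right, weight in zip(left_ear, right_ear, importance):
        better = max(band_audibility(*left), band_audibility(*right))
        total += weight * better
    return total


# Hypothetical two-band example with noise on the left side, so the
# right (far) ear has the lower noise level in each band.
left = [(60.0, 57.0, 20.0), (50.0, 40.0, 70.0)]
right = [(60.0, 48.0, 20.0), (50.0, 35.0, 47.0)]
weights = [0.5, 0.5]

ai = articulation_index(left, right, weights)
print(round(ai, 2))
```

Taking the maximum per band, rather than summing across ears, reflects the statement that no increase in audibility was assumed when the ears were symmetrical.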

NASA Task Load Index

Perceived listening effort was assessed using a modified version of the NASA Task Load Index (Hart & Staveland 1988). Immediately following each measurement of consonant recognition in noise, subjects rated task workload using a 20-point scale for six subscales (mental demand, physical demand, temporal demand, performance, effort, and frustration). For all subscales except performance, a higher rating indicated a greater perceived workload.

Data Analyses

Outcome measures included the following: (1) unaided and aided narrowband noise thresholds and spatial benefit for hearing-impaired listeners; (2) observed consonant-recognition scores, spatial benefit, and hearing-aid benefit; (3) AI-predicted recognition scores, spatial benefit, and hearing-aid benefit; (4) unaided and aided consonant confusion matrices focusing on information transmission of individual consonants; and (5) unaided and aided self-reported ratings of task workload. Unaided results were obtained for all subjects; aided results and hearing-aid benefit were obtained for hearing-impaired subjects only. Prior to statistical analysis, recognition scores were transformed to rationalized arcsine units (rau) to stabilize the variance across conditions (Studebaker 1985).
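For readers unfamiliar with the rationalized arcsine transform, the sketch below implements the formula published by Studebaker (1985). The function name is hypothetical, and scoring each condition out of 222 items is an assumption made only for the example; the number of items per score is not stated here.

```python
import math


def rau(num_correct, num_items):
    """Rationalized arcsine units (Studebaker 1985) for a score of
    num_correct out of num_items."""
    if not 0 <= num_correct <= num_items:
        raise ValueError("num_correct must lie between 0 and num_items")
    theta = (math.asin(math.sqrt(num_correct / (num_items + 1)))
             + math.asin(math.sqrt((num_correct + 1) / (num_items + 1))))
    # Linear rescaling so that mid-range values track percent correct.
    return (146.0 / math.pi) * theta - 23.0


# Assumed example: 111 of 222 syllables correct (50%) maps to 50 rau.
mid_score = rau(111, 222)

# Benefit is a simple difference of transformed scores, for example
# noise at 90 deg minus noise at 0 deg (hypothetical counts).
spatial_benefit_rau = rau(150, 222) - rau(130, 222)

print(round(mid_score, 1), round(spatial_benefit_rau, 1))
```

The transform is nearly linear in percent correct over the middle of the range and stretches scores near 0% and 100%, which is why it stabilizes variance across conditions with very different mean scores.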

Results and Discussion

Masked Thresholds for Narrowband Noises for Older Adults with Hearing Loss

Masked thresholds for narrowband noises measured in the speech-shaped masker were used to estimate hearing-aid gain. Figure 2 (left panel) displays mean unaided and aided masked thresholds for the narrowband noises at 0° and the masker at 0° (filled and open circles, unaided and aided, respectively) or 90° (filled and open triangles, unaided and aided, respectively). The overall pattern of the masked thresholds (i.e., higher thresholds for lower frequencies, declining at higher frequencies) was determined by the spectrum of the speech-shaped masker. Effects of frequency, spatial benefit, and hearing-aid benefit were assessed by repeated measures ANOVA. Thresholds were significantly lower (better) with the masker at 90° than with the masker at 0° [F(1, 11) = 57.92, p < 0.0001]. With no amplification, this improvement was seen at low frequencies only, with virtually no difference in masked thresholds in the higher frequencies. This was expected due to the magnitude of hearing loss in the higher frequencies, which negates any head-shadow benefit (see Figure 1). Aided thresholds were significantly lower than unaided thresholds [F(1, 11) = 42.848, p < 0.0001], with a significant interaction of aid and frequency [F(7, 77) = 31.542, p < 0.0001]; this result is consistent with more improvement in aided thresholds for higher than lower frequencies due to greater higher frequency gain. Figure 2 (right panel) shows mean spatial benefit for unaided and aided conditions (filled and open circles, respectively). ANOVA results revealed a significant effect of amplification such that greater spatial benefit was found with hearing aids than without [F(1, 11) = 19.281, p < 0.05]. Post hoc testing revealed that, with hearing aids, listeners had greater spatial benefit at frequencies ≥ 1.5 kHz than at lower frequencies [F(1, 11) = 18.388, p < 0.05]. Thus, on average, hearing-aid gain in the higher frequencies was sufficient to improve audibility across a wide bandwidth, especially in the far ear with better SNRs (i.e., head shadow).

Figure 2.

Left: Mean (±1 SE) masked thresholds for narrowband noises measured in the speech-shaped masker as a function of frequency for older adults with hearing loss in unaided (filled) and aided (open) conditions. Narrowband noise was always at 0°. The speech-shaped masker was at 0° (circles) or at 90° (triangles). Right: Mean spatial benefit in dB (±1 SE) as a function of frequency for older adults with hearing loss. Each point is the mean threshold with noise at 0° minus thresholds with noise at 90°.

Consonant Recognition in Noise

Consonant Recognition for Younger and Older Adults with Normal Hearing

Figure 3 shows mean observed recognition scores (filled) and scores predicted by the AI (open) for the two groups of subjects as a function of low-pass cutoff frequency for speech-shaped noise at 0° (top panels A) and at 90° (bottom panels A). Observed consonant recognition improved significantly for both groups as cutoff frequency increased and additional high frequency speech information was available [F(2,44) = 1913.548, p < 0.0001]. Furthermore, mean observed consonant recognition was significantly higher for both groups when speech and noise were spatially separated as compared to co-located [F(1,22) = 183.624, p < 0.0001]. A significant main effect of group [F(1,22) = 12.696, p < 0.05] revealed that scores were poorer for older than younger adults. Averaged across cutoff frequency and noise location, mean observed recognition scores were 65.9 rau and 61.8 rau for younger and older subjects with normal hearing, respectively.

Figure 3.

A: Mean (±1 SE) observed (filled) and predicted (open) consonant recognition scores with noise at 0° (top) and noise at 90° (bottom) as a function of low-pass cutoff frequency, for younger adults with normal hearing (left) and older adults with normal hearing (right). B: Mean (±1 SE) observed (filled bars) and predicted (hatched bars) spatial benefit as a function of low-pass cutoff frequency for younger and older participants.

Similar to observed scores, mean predicted scores improved significantly with increasing cutoff frequency [F(2,44) = 24457.041, p < 0.0001] and were significantly better when speech and noise were spatially separated [F(1,22) = 92.470, p < 0.0001]. Averaged across cutoff frequency and noise location, mean predicted recognition scores were 60.2 rau and 60.1 rau for the younger and older subjects with normal hearing, respectively. Thus, average scores were better than predicted for both groups.

Spatial Benefit for Younger and Older Adults with Normal Hearing

In the bottom two panels of Figure 3 (B), mean observed and predicted spatial benefit is shown as a function of low-pass cutoff frequency for the younger (left panel) and older (right panel) subjects. Differences in spatial benefit between groups were not significant [F(1,22) = 2.534, p > 0.05; F(1,22) = 0.098, p > 0.05, observed and predicted, respectively]. Observed spatial benefit ranged from 7.0 to 13.0 rau, depending on cutoff frequency and group. With predicted values ranging from 5.1 to 9.7 rau, spatial benefit was better than predicted (significantly better for older adults; t(29) = 2.8, p = 0.01). This was expected for both groups because AI predictions are based primarily on audibility (including head-shadow effects) and do not take into account other binaural advantages.

Averaged across group, observed spatial benefit increased significantly as a function of cutoff frequency [F(2,44) = 8.912, p < 0.05]. Post hoc analysis revealed that spatial benefit increased significantly as cutoff frequency increased from 1.7 to 3.4 kHz [F(2,22) = 7.886, p < 0.05]; no change in spatial benefit was observed as cutoff increased further from 3.4 to 7.1 kHz. Similarly, an increase in spatial benefit was predicted only as mid-to-higher frequency speech information was added (p < 0.001). Because head shadow effects increase with increasing frequency, it was anticipated that spatial benefit would be larger as high-frequency information was added (i.e., from 3.4 to 7.1 kHz). The lack of significant differences in predicted spatial benefit may partially be attributable to the slope of the performance-intensity function for these materials and to the AI-recognition transfer function that relates predicted scores to speech audibility. Specifically, lower AI values observed at the low-to-mid cutoff frequencies correspond to a steeper range of the transfer function. However, with a shallower slope of the transfer function at the highest cutoff frequency, the addition of the high-frequency band resulted in a smaller increase in predicted scores. Similarly, the already-high observed recognition scores for these normal-hearing subjects may have made it difficult to achieve further improvement with additional higher frequency speech information.
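The transfer-function argument above can be made concrete with a small numerical sketch. The saturating function used here, and its parameter values, are hypothetical stand-ins chosen only because they are steep at low AI and shallow at high AI; they are not the AI-recognition transfer function of Dirks et al. (1990), and the AI values are illustrative rather than measured.

```python
def predicted_score(ai, p=1.0, q=0.4):
    """Hypothetical saturating transfer function: percent correct from AI.

    p and q are illustrative shape parameters, not fitted values.
    """
    return 100.0 * (1.0 - 10.0 ** (-ai * p / q))


# The same AI increment (0.2) applied in two regions of the function.
gain_low = predicted_score(0.4) - predicted_score(0.2)   # steep region
gain_high = predicted_score(0.8) - predicted_score(0.6)  # shallow region

print(round(gain_low, 1), round(gain_high, 1))
```

With any transfer function of this general shape, an equal increase in audibility yields a much smaller increase in predicted score once AI is already high, which is one way that adding the highest frequency band could produce little change in predicted spatial benefit.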

In summary, consonant recognition in noise improved with the availability of interaural difference cues and the addition of mid-to-higher frequency speech information for younger and older subjects with normal hearing. However, consonant recognition in noise was poorer for older than younger adults. Larger-than-predicted spatial benefit for both groups suggested that older adults were able to take advantage of interaural difference cues to the same extent as younger adults.

Consonant Recognition for Older Adults with Hearing Loss

Figure 4A shows observed (filled) and predicted (open) consonant recognition for older adults with hearing loss as a function of low-pass cutoff frequency. On average, unaided scores ranged from 34.5 to 61.0 rau and from 40.6 to 67.5 rau, with noise at 0° and 90°, respectively; aided scores ranged from 33.3 to 62.0 rau and from 41.6 to 69.6 rau in the same conditions. As shown by comparing open and filled symbols, predicted scores were generally higher than observed scores, especially with hearing aids. Effects of cutoff frequency, noise location, and hearing aid were assessed by repeated measures ANOVA. Observed and predicted unaided and aided scores were significantly higher with speech and noise separated than co-located [F(1,11) = 98.255, p < 0.0001; F(1,11) = 71.315, p = 0.0001, observed and predicted, respectively] and with the addition of high-frequency speech information [F(2,22) = 298.056, p < 0.0001; F(2,22) = 774.548, p < 0.0001, observed and predicted, respectively]. Post hoc testing revealed that, averaged across unaided and aided conditions, observed and predicted scores improved as cutoff frequency increased from 1.7 to 3.4 kHz [F(1,11) = 302.770, p < 0.0001; p < 0.0001, observed and predicted, respectively] with additional improvement as cutoff frequency increased further [F(1,11) = 78.263, p < 0.0001; p < 0.0001, observed and predicted, respectively]. Although consonant recognition in the spatially separated condition was slightly better aided than unaided, overall, observed scores did not improve significantly with hearing aids [F(1,11) = 0.023, p = 0.884]. In contrast, averaged across noise location, predicted scores improved significantly with hearing aids [F(1,11) = 20.332, p < 0.01], suggesting that, overall, hearing-aid gain was sufficient to improve audibility.

Figure 4.

A: Mean (±1 SE) observed (filled) and predicted (open) consonant recognition scores with noise at 0° (top) and noise at 90° (bottom) as a function of low-pass cutoff frequency, for older adults with hearing loss unaided (left) and aided (right). B: Mean (±1 SE) observed (filled) and predicted (hatched) spatial benefit as a function of low-pass cutoff frequency for unaided and aided conditions. C: Mean (±1 SE) observed (filled bars) and predicted (hatched bars) hearing-aid benefit as a function of low-pass cutoff frequency with noise at 0° and 90°.

Unaided Spatial Benefit

As seen in Figure 4B (left panel), unaided spatial benefit (filled bars) was fairly constant across cutoff frequency, ranging from 6.1 to 6.6 rau; predicted values (hatched bars) ranged from 6.0 to 7.0 rau. These results were generally comparable to the mean unaided spatial benefit observed in older adults with normal hearing. However, averaged across cutoff frequencies, older adults with hearing loss had significantly less spatial benefit than older adults with normal hearing [F(1,20) = 9.784, p < 0.01]. To assess effects of hearing loss on spatial benefit, correlations between average high-frequency pure-tone thresholds (2.0, 3.0, 4.0, and 6.0 kHz) and spatial benefit for each cutoff frequency were computed, including results for all older adults. Significant negative correlations were found, such that less spatial benefit was observed with increasing high-frequency hearing loss (3.4 kHz: r = −0.56, p < 0.05; 7.1 kHz: r = −0.60, p < 0.05). This was not surprising due to the frequency dependence of head shadow effects and high-frequency hearing loss. Thus, hearing loss (but not age) influenced the amount of improvement in speech recognition when spatially separating speech and noise.

Aided Spatial Benefit

Aided spatial benefit (Figure 4B, right panel) ranged from 7.6 to 9.3 rau; predicted values ranged from 6.0 to 7.5 rau. Thus, aided spatial benefit was generally larger than predicted. These results suggest that, on average, older adults with hearing loss were able to take advantage of interaural level and time difference cues to improve consonant recognition in noise while using bilateral hearing aids.

It was hypothesized that amplification and the improved SNR in the far ear would result in an overall improvement in audibility of higher frequency speech information. Thus, observed and predicted spatial benefit should be larger aided than unaided. However, no significant interaction was found between observed or predicted spatial benefit and hearing aids, although there was a non-significant trend (p = 0.07) for observed spatial benefit to be greater aided than unaided. (For a few subjects, ear canal recordings of aided speech and noise revealed that quiet thresholds at 6.0 kHz limited audibility in the spatially separated low-pass 7.1 kHz condition.) Averaged across cutoff frequency, spatial benefit was 6.2 rau unaided and 8.4 rau aided; results were similar for predicted spatial benefit, 6.5 rau unaided and 7.0 rau aided. In contrast to unaided spatial benefit, no significant correlations between average high-frequency pure-tone thresholds and aided spatial benefit were observed.

Hearing-aid Benefit

Figure 4C shows mean hearing-aid benefit as a function of low-pass cutoff frequency. Scores for the co-located and separated noise conditions are in the left and right panels, respectively. Hearing-aid benefit ranged from −3.8 rau to 2.0 rau, depending on cutoff frequency and noise source location; predicted hearing-aid benefit ranged from 0.1 to 10.5 rau. A significant interaction between hearing-aid benefit and cutoff frequency [F(2,22) = 3.570, p < 0.05] showed that, averaged across noise location, consonant recognition with hearing aids significantly improved as cutoff frequency increased from 3.4 to 7.1 kHz [F(1,11) = 7.123, p < 0.05]. This result is most likely due to the decrease in hearing-aid benefit seen with the addition of mid frequencies. In contrast, hearing-aid benefit was predicted to improve with each increase in low-pass cutoff frequency, with a large significant predicted increase as higher frequencies were added from 3.4 to 7.1 kHz (p < 0.0001). Taken together, these results revealed that, on average, bilateral amplification did not lead to improved consonant recognition in noise. With significant benefit of amplification predicted based on improved speech audibility, and poorer-than-predicted aided scores and benefit, these subjects did not take full advantage of the increase in audible speech information provided by amplification.
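As a worked check of the benefit measures, the sketch below recomputes spatial benefit and hearing-aid benefit from the mean observed scores reported earlier for this group. It assumes, as the text implies, that spatial benefit is the score with noise at 90° minus the score with noise at 0°, and that hearing-aid benefit is the aided score minus the unaided score. It also assumes that the low and high ends of each reported score range correspond to the 1.7- and 7.1-kHz cutoffs; the dictionary layout and function names are illustrative only.

```python
# Mean observed scores (rau) for older adults with hearing loss, from the text.
# Assumption: the low/high end of each reported range is the 1.7/7.1-kHz cutoff.
scores = {
    ("unaided", 0): {1.7: 34.5, 7.1: 61.0},
    ("unaided", 90): {1.7: 40.6, 7.1: 67.5},
    ("aided", 0): {1.7: 33.3, 7.1: 62.0},
    ("aided", 90): {1.7: 41.6, 7.1: 69.6},
}


def spatial_benefit(condition: str, cutoff_khz: float) -> float:
    """Score with noise at 90 degrees minus score with noise at 0 degrees (rau)."""
    separated = scores[(condition, 90)][cutoff_khz]
    colocated = scores[(condition, 0)][cutoff_khz]
    return round(separated - colocated, 1)


def hearing_aid_benefit(azimuth_deg: int, cutoff_khz: float) -> float:
    """Aided score minus unaided score at one noise location (rau)."""
    aided = scores[("aided", azimuth_deg)][cutoff_khz]
    unaided = scores[("unaided", azimuth_deg)][cutoff_khz]
    return round(aided - unaided, 1)


for cutoff in (1.7, 7.1):
    print(cutoff, "kHz spatial benefit:",
          spatial_benefit("unaided", cutoff), "rau unaided,",
          spatial_benefit("aided", cutoff), "rau aided")
    print(cutoff, "kHz hearing-aid benefit:",
          hearing_aid_benefit(0, cutoff), "rau at 0 deg,",
          hearing_aid_benefit(90, cutoff), "rau at 90 deg")
```

Because these differences are taken from rounded group means, they can differ by about 0.1 rau from the benefit values reported in the text, which were presumably computed from unrounded scores.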

Individual Results for Aided Listening

The availability for each subject of ear-canal recordings, quiet thresholds, and AI values provided a means to assess predicted scores and observed-predicted differences for individual subjects. These results are available for consonant-recognition scores, spatial benefit, and hearing-aid benefit and can be compared to subject-related characteristics, such as magnitude of high-frequency hearing loss. To clarify subjects’ ability to benefit from amplification, correlations between aided consonant recognition with noise at 0° and average high-frequency thresholds at 2.0, 3.0, 4.0, and 6.0 kHz in quiet were computed. For observed scores with speech and noise low-pass filtered at 7.1 kHz, a negative correlation was found (r = −0.63, p < 0.05), such that individuals with more high-frequency hearing loss had poorer aided consonant recognition. In contrast, predicted scores remained constant with increasing thresholds (r = −0.06, p > 0.05), demonstrating that audible high-frequency speech information was provided by amplification.
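The correlation analysis described above can be sketched as follows. The subject data are hypothetical and serve only to show the computation: thresholds at 2.0, 3.0, 4.0, and 6.0 kHz are averaged for each subject, and a Pearson correlation is computed between that average and the aided score. The function names are illustrative and do not reflect the authors' analysis software.

```python
import math


def high_freq_average(thresholds_db_hl: dict) -> float:
    """Mean pure-tone threshold (dB HL) at 2.0, 3.0, 4.0, and 6.0 kHz."""
    freqs_khz = (2.0, 3.0, 4.0, 6.0)
    return sum(thresholds_db_hl[f] for f in freqs_khz) / len(freqs_khz)


def pearson_r(x: list, y: list) -> float:
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical subjects: thresholds (dB HL) by frequency (kHz) and aided score (rau).
subjects = [
    ({2.0: 30, 3.0: 40, 4.0: 50, 6.0: 55}, 68.0),
    ({2.0: 40, 3.0: 50, 4.0: 55, 6.0: 60}, 64.0),
    ({2.0: 45, 3.0: 55, 4.0: 65, 6.0: 70}, 59.0),
    ({2.0: 55, 3.0: 60, 4.0: 70, 6.0: 75}, 61.0),
    ({2.0: 60, 3.0: 65, 4.0: 75, 6.0: 80}, 52.0),
]

avg_thresholds = [high_freq_average(t) for t, _ in subjects]
aided_scores = [score for _, score in subjects]
r = pearson_r(avg_thresholds, aided_scores)
print(f"r = {r:.2f}")
```

A negative `r` in data of this kind corresponds to the pattern reported for observed scores: poorer aided recognition with greater high-frequency hearing loss.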

Figure 5 shows differences between observed and predicted aided recognition (0°) plotted against average higher frequency thresholds. Nearly all aided scores were poorer than predicted and, as high-frequency hearing loss increased, scores were increasingly poorer than predicted. Similar patterns for observed and predicted scores were found for aided consonant recognition as a function of average high-frequency gain (2.0–6.0 kHz). That is, observed aided recognition decreased as the amount of high-frequency gain increased whereas predicted aided recognition remained constant as a function of gain. Taken together, these results confirm the conclusion from the average scores that hearing aids provided at least partial audibility across the speech bandwidth, but that older adults did not take advantage of the additional high-frequency speech information provided by amplification to improve speech recognition.

Figure 5.

Differences between observed and predicted aided consonant recognition (7.1 kHz) plotted against average high-frequency thresholds (2.0, 3.0, 4.0 kHz) measured in quiet (dB HL). The Pearson correlation coefficient and linear regression function are included in the panel.

Speech Feature Transmission Analysis

To further investigate the effects of amplification on consonant recognition, consonant confusion matrices were analyzed, focusing on transmission of feature information for individual consonants. Correct and incorrect responses to each syllable were pooled across subjects to create a single consonant confusion matrix for each unaided and aided condition in both noise-source locations. These matrices were then submitted to the Feature Information Transfer (FIX) program¹ to compute proportion of transmitted information (Miller & Nicely 1955). This transformation provided an estimate of susceptibility of individual consonants to the effects of cutoff frequency, noise location, and amplification. Figure 6 presents information transmitted as a function of low-pass cutoff frequency for unaided and aided consonants (filled and open symbols in each panel, respectively) with the noise co-located (circles) and spatially separated (triangles). The consonants in the top row represent the plosive feature and the consonants in the bottom row represent the frication/continuance feature (accounting for 60% of the total number of consonants). Information transmitted improved for most consonants as high-frequency speech information was added with increases in low-pass cutoff frequency. Information transmitted also improved with spatial separation, especially for plosives. Although hearing-aid benefit was negligible according to overall scores, several consonants showed substantial aided benefit. Most notably, the consonants with frication/continuance (bottom row) showed the largest improvement with amplification, with consonants /ʃ/ and /tʃ/ showing the largest aided improvement with spatial separation.
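For readers unfamiliar with the measure, the following is a minimal sketch of relative information transmitted in the sense of Miller & Nicely (1955): the mutual information between stimulus and response categories divided by the stimulus entropy. It does not reproduce the FIX program; the function names, the feature labels, and the confusion counts are hypothetical and for illustration only.

```python
import math
from collections import defaultdict


def collapse_by_feature(confusions: dict, feature_of: dict) -> dict:
    """Pool (stimulus, response) counts by the feature value of each consonant."""
    pooled = defaultdict(float)
    for (stim, resp), count in confusions.items():
        pooled[(feature_of[stim], feature_of[resp])] += count
    return dict(pooled)


def relative_information_transmitted(counts: dict) -> float:
    """Mutual information T(x;y) divided by stimulus entropy H(x), in [0, 1]."""
    total = sum(counts.values())
    if total <= 0:
        raise ValueError("confusion matrix is empty")
    p_stim = defaultdict(float)
    p_resp = defaultdict(float)
    for (stim, resp), count in counts.items():
        p_stim[stim] += count / total
        p_resp[resp] += count / total
    transmitted = sum(
        (count / total) * math.log2((count / total) / (p_stim[stim] * p_resp[resp]))
        for (stim, resp), count in counts.items()
        if count > 0
    )
    stimulus_entropy = -sum(p * math.log2(p) for p in p_stim.values() if p > 0)
    if stimulus_entropy == 0:
        raise ValueError("only one stimulus category; entropy is zero")
    return transmitted / stimulus_entropy


# Hypothetical pooled counts: keys are (stimulus, response) consonant pairs.
confusions = {
    ("p", "p"): 40, ("p", "b"): 8, ("p", "s"): 2,
    ("b", "p"): 10, ("b", "b"): 38, ("b", "s"): 2,
    ("s", "p"): 3, ("s", "b"): 4, ("s", "s"): 43,
}
# Hypothetical feature labels for a plosive versus non-plosive contrast.
plosive = {"p": "plosive", "b": "plosive", "s": "non-plosive"}

feature_counts = collapse_by_feature(confusions, plosive)
print(f"relative information transmitted: "
      f"{relative_information_transmitted(feature_counts):.2f}")
```

A value of 1 indicates that the feature was always received correctly, and a value of 0 indicates that responses carried no information about the feature of the stimulus.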

Figure 6.

Proportion information transmitted for individual consonants, representing plosiveness (top row) and frication/continuance (bottom row), as a function of low-pass cutoff frequency with noise at 0° (circles) and 90° (triangles), unaided (filled) and aided (open).

High-frequency speech cues contain essential linguistic information and are fundamental for discriminating various consonants (Boothroyd & Medwetsky 1992; Stelmachowicz et al. 2001; Stecker et al. 2006). The current results suggest that older adults with hearing loss derived benefit from higher frequency amplification for specific consonant elements. These results are similar to the findings of Füllgrabe et al. (2010), in which hearing-impaired subjects detected the presence of word-final /s/ and /z/ to a greater degree when listening to aided speech with an extended bandwidth (7.5 kHz). Together with the aided improvement in sound field masked thresholds (Figure 2), significant predicted hearing-aid and spatial benefit, and the lack of an association between predicted scores and high-frequency hearing loss, these results support the assumption that higher-frequency amplification provided audible speech information, especially for high-frequency components of speech.

Self-report Ratings of Task Workload

Although hearing-aid benefit as assessed by consonant-recognition scores was minimal, it was of interest to determine the extent to which perceived task workload varied with amplification and spatial separation of speech and noise. Task workload ratings for unaided listening by the three subject groups were used as baseline. In Figure 7, one of the six task workload scales (Perceived Effort, “How hard did you have to work to accomplish your level of performance?”) is plotted as a function of low-pass cutoff frequency. As seen in the left panels, perceived effort for unaided listening generally decreased with increasing speech bandwidth². Thus, with the addition of mid-to-higher frequency speech information and spatial separation of speech and noise, task demands were generally perceived as less effortful, generally consistent with measured recognition scores. One notable trend was that the younger adults with normal hearing rated speech recognition in the more challenging conditions as more effortful than their older counterparts. It is possible that younger adults who are accustomed to listening to speech with highly redundant information found the tasks to be especially demanding when listening to filtered speech. A similar result was reported by Veneman et al. (2012) in which younger adults with normal hearing rated speech tasks significantly more mentally demanding than older adults when testing occurred during their “off-peak” measurement time of day.

Figure 7.

Left: Mean (±1 SE) perceived effort from one subscale of the NASA Task Load Index for consonant recognition as a function of low-pass cutoff frequency with noise at 0° (top) and 90° (bottom). Mean results are shown for younger adults with normal hearing (squares), older adults with normal hearing (triangles), and older adults with hearing loss (circles). Right: Same as left panels but for older hearing-impaired subjects unaided (filled) and with bilateral hearing aids (open).

For older adults with hearing loss (Figure 7, right panels; unaided data re-plotted from left panel), perceived effort was reduced with aided listening, but only with spatial separation and at the lower cutoff frequency (i.e., 1.7 kHz). Thus, even without significant changes in measured consonant recognition, older adults with hearing loss reported less effortful listening with hearing aids under some conditions. Similar results (not shown) were found with perceived mental demand and frustration. Comparable results have been reported in other studies (Hafter & Schlauch 1992; Bentler et al. 2008). For example, minimal objective improvement in speech recognition has been observed with noise reduction algorithms incorporated in many hearing aids, although listeners report a preference for this type of processing. Results from dual-task paradigms examining objective listening effort have also shown reduced effort for aided compared to unaided conditions (Sarampalis et al. 2009; Hornsby 2013). Thus, even when amplification does not markedly improve speech recognition, hearing aids may reduce cognitive load and reduce listening effort (Picou et al. 2013; Hornsby 2013).

General Discussion

Unaided Spatial Benefit and Effects of Age

It has been hypothesized that age-related changes in binaural processing could result in reduced benefit from spatial separation for older adults even after accounting for differences in hearing sensitivity (Divenyi & Haupt 1997; Divenyi et al. 2005; Murphy et al. 2006). There is a body of work that supports this hypothesis; however, there are also contradictory findings.

For example, Gelfand et al. (1988) reported that thresholds for sentences in babble significantly improved with spatial separation, and the magnitude of spatial benefit was independent of age. More recently, these results were replicated in two large-scale studies designed to compare performance on the Listening in Spatialized Noise-Sentences Test (LiSN-S; Cameron et al. 2011; Glyde et al. 2012) for subjects aged 7–89 years. Speech thresholds were measured under headphones in a simulated environment that included pitch and spatial cues. Subject age was not a significant predictor of spatial benefit, suggesting that age-related changes were not the primary determinant of declining binaural processing ability in older adults. These results are consistent with those in the current study where no age-related differences in spatial benefit were observed among younger and older adults with normal hearing. Further, for both groups, observed spatial benefit was greater than predicted suggesting that binaural cues in addition to interaural level differences played a role in improved consonant recognition with spatial separation.

In contrast, Dubno et al. (2002) reported significantly less spatial benefit for older than younger adults with normal hearing and observed that spatial benefit was significantly smaller than predicted for older adults; deviations from predicted benefit increased with increasing age, and binaural listening benefit was negligible (Dubno et al. 2008). Similar results were reported by Marrone et al. (2008) in which younger and older adults with normal and impaired hearing repeated key words with maskers co-located with the target or symmetrically spatially separated. Although amount of hearing loss was the primary factor contributing to reduced spatial benefit, subject age had a small, but significant, negative effect on spatial benefit measured in a reverberant room condition. Consequently, there remains a lack of consensus regarding the role of aging in the use of binaural difference cues that underlie the spatial separation benefit for speech recognition in noise. Differences among the various studies may relate to differences in age ranges, absolute thresholds or other nonauditory characteristics of older participants, sample size and variance, or procedures. Further research is necessary to assess these and other aspects of binaural advantages for speech for older adults.

Aided Spatial Benefit

In the current study, there was a trend for aided spatial benefit to be larger than unaided, and larger than predicted, although no change in benefit was observed as higher frequency speech information was added. This suggests that older adults generally benefited from binaural cues to a greater extent with than without bilateral hearing aids. In the comparable study by Moore et al. (2010), aided spatial benefit was also examined in a group of middle-aged to older adults with hearing loss. Although there were large individual differences among the hearing-impaired subjects, speech recognition significantly improved in the spatially separated conditions with the provision of high-frequency amplification (i.e., 5.0–7.5 kHz). Aided spatial benefit was greater than unaided. These results are generally comparable to those in our previous study (Ahlstrom et al. 2009). In that study, despite effectively no predicted spatial benefit, observed spatial benefit increased significantly with hearing aids. Thus, bilateral amplification improved speech recognition in noise and increased the availability of some mid-to-high frequency interaural difference cues. Differences in the level of significance between studies may be due to differences in speech materials, use of a fixed SNR rather than an adaptive procedure, and a smaller sample size of subjects with hearing loss in the present study.

In contrast, Glyde et al. (2012) observed a consistent association between degree of hearing loss and spatial-processing ability in a heterogeneous group of listeners, despite provision of spectral shaping (NAL-RP) to provide sufficient audibility. Using the LiSN-S test, spatial-processing ability decreased as hearing loss increased and was not explained by age in most conditions. The authors suggest that despite being fit with appropriate amplification, subjects with even mild hearing loss may have more difficulty understanding speech in a complex listening environment than subjects with normal hearing. In the current study, hearing loss contributed to unaided spatial benefit but not aided spatial benefit.

Hearing-Aid Benefit

Although consonant recognition was significantly better with the addition of higher frequency speech information, hearing-aid benefit was relatively small. As discussed earlier, sufficient amplification was provided to improve audibility of high-frequency speech. Thus, the lack of significant hearing-aid benefit observed in the current study may reflect factors beyond simple audibility. Even after accounting for hearing sensitivity, the effects of peripheral suprathreshold processing may still be evident by a reduced dynamic range, poorer frequency selectivity, increased susceptibility to masking, and a loss of temporal resolution (e.g., Glasberg & Moore 1986; Moore 1996; Fitzgibbons & Gordon-Salant 1996). To assess the role of increased masking, associations among masked thresholds and aided scores were examined for older adults with hearing loss, but no significant correlations were observed. Thus, for this subject group, increased masking did not appear to contribute to reduced benefit of hearing aids for speech recognition in noise.

Higher-level deficits in central-auditory pathways and/or declines in cognitive abilities may also contribute to reduced hearing-aid benefit. Several studies have examined the relationship between hearing-aid benefit and various aspects of cognition (e.g., Gatehouse et al. 2003, 2006a, b; Humes 2002, 2007; Lunner & Sundewall-Thorén 2007). In most, the impact of audibility was minimized and results suggested that central and/or cognitive factors play a role in hearing-aid benefit. For example, Humes (2007) reviewed a series of studies in which different approaches were used to restore at least partial audibility for older adults with hearing loss. Associations between speech recognition in quiet and noise using nonsense syllables, monosyllabic words, and sentences revealed that once audibility was restored, age and various cognitive factors (e.g., IQ and digit span) accounted for ~30–50% of the variance in speech recognition for older adults.

Finally, it is also possible that the limited hearing-aid benefit observed here may be a consequence of the use of unfamiliar amplification. That is, these older adults who were primarily new hearing aid users did not have the opportunity to acclimatize to aided listening by using the hearing aids for extended periods of time. Improved hearing-aid benefit in quiet and noisy backgrounds has also been reported following aided speech perception training (e.g., Sweetow & Palmer 2005; Burk & Humes 2008).

In contrast to results observed here, Moore and colleagues (Moore et al. 2010; Moore & Füllgrabe 2010; Füllgrabe et al. 2010) observed that, in general, aided speech recognition was significantly higher than unaided, and benefit increased with a simulated amplification system designed to restore partial audibility for frequencies between 6.0 and 10.0 kHz. Although improvements in speech recognition varied across the studies, subjective ratings of loudness and sound quality indicated that listeners were satisfied with the addition of higher frequencies. Similar results were reported by Ricketts et al. (2008), whereby many participants with mild-to-moderate hearing loss consistently preferred speech and music samples processed with a wider than narrower bandwidth. In the current study, self-report of task workload suggested a reduction in perceived effort for older adults with hearing loss listening to aided lower frequency speech with spatial separation. Thus, even when providing audible high-frequency speech information does not result in significant improvements in speech recognition, some individuals with hearing loss may prefer additional high-frequency gain in laboratory and real-world listening (Cox et al. 2012). Although previous results suggest that providing gain above 4.0 kHz does not help and may hinder speech recognition in noise for individuals with high-frequency hearing loss (e.g., Hogan & Turner 1998; Ching et al. 1998), the optimal degree of high-frequency amplification remains unclear. Given that hearing aids are capable of providing useful gain above 6.0 kHz, further research using more realistic listening conditions and with hearing aids configured with more clinically relevant parameters is needed to determine the benefit of higher frequencies for speech recognition in noise.

Summary

For all subjects, consonant recognition was better when speech and noise were spatially separated than when co-located, due to the acoustic advantage provided by head shadow and the presence of other interaural difference cues.

For older adults with normal hearing, spatial benefit was larger than predicted and similar to spatial benefit for younger adults. Thus, younger and older adults with normal hearing benefited from binaural processing to a greater extent than predicted based on simple audibility.

For older adults with hearing loss, consonant recognition was poorer than predicted, but improved with the addition of higher frequency speech information.

Hearing-aid benefit was poorer than predicted. On average, predicted scores and results from a feature-based information transfer analysis confirmed that amplification provided some audibility across a wide bandwidth of speech and that speech audibility increased with spatial separation. This suggests that the lack of significant hearing-aid benefit may have resulted from age-related peripheral, central-auditory, and/or cognitive changes, rather than limited audibility.

For older adults with hearing loss, spatial benefit was better than predicted. Thus, these subjects were able to take advantage of binaural cues to improve consonant recognition in noise. Although improvements were small, older adults benefited from binaural cues to a greater degree with bilateral hearing aids than without amplification.

Perceived listening effort was less aided than unaided, especially when speech and noise were spatially separated. Despite the lack of hearing-aid benefit as measured by speech recognition scores, listening to speech in noise was perceived to be less effortful with the use of bilateral hearing aids.

Acknowledgments

This work was supported (in part) by research grants P50 DC00422 and R01 DC00184 from NIH/NIDCD and the South Carolina Clinical & Translational Research (SCTR) Institute, with an academic home at the Medical University of South Carolina, through NIH Grant Number UL1 RR029882. This investigation was conducted in a facility constructed with support from Research Facilities Improvement Program Grant Number C06 RR14516 from the National Center for Research Resources, National Institutes of Health. The authors thank Oticon USA for providing hearing aids. Helpful contributions from Fu-Shing Lee, Xin Wang, Mary Ashley Mercer, and Sarah Hall are gratefully acknowledged.

The study received external support and editorial control was retained by the authors.

Footnotes

1. The Feature Information Transfer (FIX) program was obtained from the University College London website: http://www.phon.ucl.ac.uk/resource/software.html

2. Due to the subjective nature of the questionnaire and the small sample size (scores were calculated for individual scales), the degree of statistical power was not sufficient to conduct statistical analyses.

All authors concur with their names being included and with the order in which the names are listed.

No name has been omitted of any person who contributed substantially to this work.

Human subjects were used in this research and their participation was approved by the MUSC Institutional Review Board.

There are no conflicts of interest to report.

References

- Ahlstrom JB, Horwitz AR, Dubno JR. Spatial benefit of bilateral hearing aids. Ear Hear. 2009;30:203–218. doi: 10.1097/AUD.0b013e31819769c1.

- American National Standards Institute. Methods for the Calculation of the Articulation Index. New York: ANSI; 1969. (ANSI S3.5–1969)

- Amos NE, Humes LE. Contribution of high frequencies to speech recognition in quiet and noise in listeners with varying degrees of high-frequency sensorineural hearing loss. J Speech Lang Hear Res. 2007;50:819–834. doi: 10.1044/1092-4388(2007/057).

- Baer T, Moore BCJ, Kluk K. Effects of low pass filtering on the intelligibility of speech in noise for people with and without dead regions at high frequencies. J Acoust Soc Am. 2002;112:1133–1143. doi: 10.1121/1.1498853.

- Bentler R, Wu YH, Kettel J, et al. Digital noise reduction: Outcomes from laboratory and field studies. Int J Audiol. 2008;47:447–460. doi: 10.1080/14992020802033091.

- Bertoli S, Bodmer D, Probst R. Survey on hearing aid outcome in Switzerland: Associations with type of fitting (bilateral/unilateral), level of hearing aid signal processing, and hearing loss. Int J Audiol. 2010;49:333–346. doi: 10.3109/14992020903473431.

- Boothroyd A, Medwetsky L. Spectral distribution of /s/ and the frequency response of hearing aids. Ear Hear. 1992;13:150–157. doi: 10.1097/00003446-199206000-00003.

- Burk M, Humes LE. Effects of long-term training on aided speech-recognition performance in noise in older adults. J Speech Lang Hear Res. 2008;51:759–771. doi: 10.1044/1092-4388(2008/054).

- Cameron S, Glyde H, Dillon H. Listening in Spatialized Noise–Sentences test: Normative and retest reliability data for adolescents and adults up to 60 years of age. J Am Acad Audiol. 2011;22:697–709. doi: 10.3766/jaaa.22.10.7.

- Committee on Hearing, Bioacoustics, and Biomechanics. Speech understanding and aging. J Acoust Soc Am. 1988;83:859–895.

- Ching TY, Dillon H, Byrne D. Speech recognition of hearing-impaired listeners: Predictions from audibility and the limited role of high-frequency amplification. J Acoust Soc Am. 1998;103:1128–1140. doi: 10.1121/1.421224.

- Cox RM, Johnson JA, Alexander GC. Implications of high-frequency cochlear dead regions for fitting hearing aids to adults with mild to moderately severe hearing loss. Ear Hear. 2012;33:573–587. doi: 10.1097/AUD.0b013e31824d8ef3.

- Cranford JL, Andres MA, Piatz KK, et al. Influences of age and hearing loss on the precedence effect in sound localization. J Speech Lang Hear Res. 1993;36:437–441. doi: 10.1044/jshr.3602.437.

- Dirks DD, Ahlstrom JB, Eisenberg LS. Comparison of probe insertion methods on estimate of ear canal SPL. J Am Acad Audiol. 1996;7:31–38.

- Dirks DD, Dubno JR, Ahlstrom JB, et al. Articulation index importance and transfer functions for several speech materials. ASHA. 1990;32:91.

- Dreschler WA, Verschuure H, Ludvigsen C, et al. International Collegium for Rehabilitative Audiology (ICRA) noises: Artificial noise signals with speech-like spectral and temporal properties for hearing instrument assessment. Audiol. 2001;40:148–157.

- Divenyi PL, Haupt KM. Audiological correlates of speech understanding deficits in elderly listeners with mild-to-moderate hearing loss. I. Age and lateral asymmetry effects. Ear Hear. 1997;18:42–61. doi: 10.1097/00003446-199702000-00005.

- Divenyi PL, Stark PB, Haupt KM. Decline of speech understanding and auditory thresholds in the elderly. J Acoust Soc Am. 2005;118:1089–1100. doi: 10.1121/1.1953207.

- Dubno JR, Schaefer AB. Comparison of frequency selectivity and consonant recognition among hearing-impaired and masked normal-hearing listeners. J Acoust Soc Am. 1992;91:2110–2121. doi: 10.1121/1.403697.

- Dubno JR, Ahlstrom JB, Horwitz AR. Spectral contributions to the benefit from spatial separation of speech and noise. J Speech Lang Hear Res. 2002;45:1297–1310. doi: 10.1044/1092-4388(2002/104). [DOI] [PubMed] [Google Scholar]

- Dubno JR, Horwitz AR, Ahlstrom JB. Recovery from prior stimulation: Masking of speech by interrupted noise for younger and older adults with normal hearing. J Acoust Soc Am. 2003;113:2084–2094. doi: 10.1121/1.1555611. [DOI] [PubMed] [Google Scholar]

- Dubno JR, Ahlstrom JB, Horwitz AR. Binaural advantage for younger and older adults with normal hearing. J Speech Lang Hear Res. 2008;51:539–556. doi: 10.1044/1092-4388(2008/039). [DOI] [PubMed] [Google Scholar]

- Fitzgibbons PJ, Gordon-Salant S. Auditory temporal processing in elderly listeners. J Am Acad Audiol. 1996;7:183–189. [PubMed] [Google Scholar]

- Füllgrabe C, Baer T, Stone MA, et al. Preliminary evaluation of a method for fitting hearing aids with extended bandwidth. Int J Audiol. 2010;49:741–753. doi: 10.3109/14992027.2010.495084. [DOI] [PubMed] [Google Scholar]

- Gatehouse S, Naylor G, Elberling C. Benefits from hearing aids in relation to the interaction between the user and the environment. Int J Audiol. 2003;42:S77–S85. doi: 10.3109/14992020309074627. [DOI] [PubMed] [Google Scholar]

- Gatehouse S, Naylor G, Elberling C. Linear and nonlinear hearing aid fittings - 1. Patterns of benefit. Int J Audiol. 2006a;45:130–152. doi: 10.1080/14992020500429518. [DOI] [PubMed] [Google Scholar]

- Gatehouse S, Naylor G, Elberling C. Linear and nonlinear hearing aid fittings - 2. Patterns of candidature. Int J Audiol. 2006b;45:153–171. doi: 10.1080/14992020500429484. [DOI] [PubMed] [Google Scholar]

- Gelfand SA, Ross L, Miller S. Sentence recognition in noise from one versus two sources: Effects of aging and hearing loss. J Acoust Soc Am. 1988;83:248–257. doi: 10.1121/1.396426. [DOI] [PubMed] [Google Scholar]

- Glasberg BR, Moore BCJ. Auditory filter shapes in subjects with unilateral and bilateral cochlear impairments. J Acoust Soc Am. 1986;79:1020–1033. doi: 10.1121/1.393374. [DOI] [PubMed] [Google Scholar]

- Glyde H, Cameron S, Dillon H, et al. The effects of hearing impairment and aging on spatial processing. Ear Hear. 2012;34:15–28. doi: 10.1097/AUD.0b013e3182617f94. [DOI] [PubMed] [Google Scholar]

- Green DM. A maximum-likelihood method for estimating thresholds in a yes-no task. J Acoust Soc Am. 1993;93:2096–2105. doi: 10.1121/1.406696. [DOI] [PubMed] [Google Scholar]

- Hafter ER, Schlauch RS. Cognitive factors and selection of auditory listening bands. In: Dancer A, Henderson D, Salvi RJ, Hammernik RP, editors. Noise-induced Hearing Loss. Philadelphia: B.C. Decker; 1992. pp. 303–310. [Google Scholar]

- Harris KC, Eckert MA, Ahlstrom JB, et al. Age-related differences in gap detection: Effects of task difficulty and cognitive ability. Hear Res. 2010;264:21–29. doi: 10.1016/j.heares.2009.09.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hart SG, Staveland LE. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In: Hancock PA, Meshkati N, editors. Human Mental Workload. Amsterdam: Elsevier and North-Holland; 1988. pp. 239–250. [Google Scholar]

- Hogan C, Turner C. High-frequency audibility: Benefits for hearing-impaired listeners. J Acoust Soc Am. 1998;104:432–441. doi: 10.1121/1.423247. [DOI] [PubMed] [Google Scholar]

- Hornsby BW. The effects of hearing aid use on listening effort and mental fatigue associated with sustained speech processing demands. Ear Hear. 2013 Feb 19; doi: 10.1097/AUD.0b013e31828003d8. Epub ahead of print. [DOI] [PubMed] [Google Scholar]

- Hornsby BW, Ricketts TA. The effects of hearing loss on the contribution of high- and low-frequency speech information to speech understanding. J Acoust Soc Am. 2003;113:1706–1717. doi: 10.1121/1.1553458. [DOI] [PubMed] [Google Scholar]

- Hornsby BW, Ricketts TA. The effects of hearing loss on the contribution of high- and low-frequency speech information to speech understanding. II. Sloping hearing loss. J Acoust Soc Am. 2006;119:1752–1763. doi: 10.1121/1.2161432. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Horwitz AR, Ahlstrom JB, Dubno JR. Factors affecting the benefits of high-frequency amplification. J Speech Lang Hear Res. 2008;51:798–813. doi: 10.1044/1092-4388(2008/057). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Humes LE. Factors underlying the speech-recognition performance of elderly hearing-aid wearers. J Acoust Soc Am. 2002;112:1112–1132. doi: 10.1121/1.1499132. [DOI] [PubMed] [Google Scholar]

- Humes LE, Wilson DL, Humes L, et al. A comparison of two measures of hearing aid satisfaction in a group of elderly hearing aid wearers. Ear Hear. 2002;23:422–427. doi: 10.1097/00003446-200210000-00004. [DOI] [PubMed] [Google Scholar]

- Humes LE. The contributions of audibility and cognitive factors to the benefit provided by amplified speech to older adults. J Am Acad Audiol. 2007;18:590–603. doi: 10.3766/jaaa.18.7.6. [DOI] [PubMed] [Google Scholar]

- Lee YH, Liu BS. Inflight workload assessment: comparison of subjective and physiological measurements. Aviat Space Environ Med. 2003;74:1078–1084. [PubMed] [Google Scholar]

- Leek MR, Dubno JR, He N-j, et al. Experience with a yes-no single-interval maximum-likelihood procedure. J Acoust Soc Am. 2000;107:2674–2684. doi: 10.1121/1.428653. [DOI] [PubMed] [Google Scholar]

- Lunner T, Sundewall-Thorén E. Interactions between cognition, compression, and listening conditions: Effects on speech-in-noise performance in a two-channel hearing aid. J Am Acad Audiol. 2007;18:604–617. doi: 10.3766/jaaa.18.7.7. [DOI] [PubMed] [Google Scholar]

- Mackersie CL, Cones H. Subjective and psychophysiological indices of listening effort in a competing-talker task. J Am Acad Audiol. 2011;22:113–122. doi: 10.3766/jaaa.22.2.6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marrone N, Mason CR, Kidd G Jr. The effects of hearing loss and age on the benefit of spatial separation between multiple talkers in reverberant rooms. J Acoust Soc Am. 2008;124:3064–3075. doi: 10.1121/1.2980441. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Miller GA, Nicely PE. Analysis of perceptual confusions among some English consonants. J Acoust Soc Am. 1955;27:338–352. [Google Scholar]

- Moore BCJ. Perceptual consequences of cochlear hearing loss and their implications for the design of hearing aids. Ear Hear. 1996;17:133–161. doi: 10.1097/00003446-199604000-00007. [DOI] [PubMed] [Google Scholar]

- Moore BCJ, Füllgrabe C. Evaluation of the CAMEQ2-HF method for fitting hearing aids with multichannel amplitude compression. Ear Hear. 2010;31:657–666. doi: 10.1097/AUD.0b013e3181e1cd0d. [DOI] [PubMed] [Google Scholar]

- Moore BCJ, Füllgrabe C, Stone MA. Effect of spatial separation, extended bandwidth, and compression speed on intelligibility in a competing-speech task. J Acoust Soc Am. 2010;128:360–371. doi: 10.1121/1.3436533. [DOI] [PubMed] [Google Scholar]

- Murphy DR, Daneman M, Schneider BA. Why do older adults have difficulty following conversations? Psychol Aging. 2006;21:49–61. doi: 10.1037/0882-7974.21.1.49. [DOI] [PubMed] [Google Scholar]

- Pavlovic C. Speech spectrum considerations and speech intelligibility predictions in hearing aid evaluations. J Speech Hear Dis. 1989;54:3–8. doi: 10.1044/jshd.5401.03. [DOI] [PubMed] [Google Scholar]

- Pichora-Fuller MK, Schneider BA. Masking-level differences in the elderly: a comparison of antiphasic and time-delay dichotic conditions. J Speech Hear Res. 1991;34:1410–1422. doi: 10.1044/jshr.3406.1410. [DOI] [PubMed] [Google Scholar]

- Picou EM, Ricketts TA, Hornsby BWY. How hearing aids, background noise, and visual cues influence objective listening effort. Ear Hear. 2013 Feb 20; doi: 10.1097/AUD.0b013e31827f0431. Epub ahead of print. [DOI] [PubMed] [Google Scholar]

- Plomp R. Auditory handicap of hearing impairment and the limited benefit of hearing aids. J Acoust Soc Am. 1978;63:533–549. doi: 10.1121/1.381753. [DOI] [PubMed] [Google Scholar]

- Plyler PN, Fleck EL. The effects of high-frequency amplification on the objective and subjective performance of hearing instrument users with varying degrees of high-frequency hearing loss. J Speech Lang Hear Res. 2006;49:616–627. doi: 10.1044/1092-4388(2006/044). [DOI] [PubMed] [Google Scholar]

- Ricketts TA, Dittberner AB, Johnson EJ. High-frequency amplification and sound quality in listeners with normal through moderate hearing loss. J Speech Lang Hear Res. 2008;51:160–172. doi: 10.1044/1092-4388(2008/012). [DOI] [PubMed] [Google Scholar]

- Sarampalis A, Kalluri S, Edwards B, et al. Objective measures of listening effort: Effects of background noise and noise reduction. J Speech Lang Hear Res. 2009;52:1230–1240. doi: 10.1044/1092-4388(2009/08-0111). [DOI] [PubMed] [Google Scholar]

- Simpson A, McDermott HJ, Dowell RC. Benefits of audibility for listeners with severe high-frequency hearing loss. Hear Res. 2005;210:45–52. doi: 10.1016/j.heares.2005.07.001. [DOI] [PubMed] [Google Scholar]

- Souza P, Turner CW. Quantifying the contribution of audibility to recognition of compression-amplified speech. Ear Hear. 1999;20:12–20. doi: 10.1097/00003446-199902000-00002. [DOI] [PubMed] [Google Scholar]

- Stecker GC, Bowman BA, Yund EW, et al. Perceptual training improves syllable identification in new and experienced hearing aid users. J Rehab Res Dev. 2006;43:537–552. doi: 10.1682/jrrd.2005.11.0171. [DOI] [PubMed] [Google Scholar]

- Stelmachowicz PG, Pittman AL, Hoover BM, et al. Effect of stimulus bandwidth on the perception of /s/ in normal- and hearing-impaired children and adults. J Acoust Soc Am. 2001;110:2183–2190. doi: 10.1121/1.1400757. [DOI] [PubMed] [Google Scholar]

- Stelmachowicz PG, Pittman AL, Hoover BM, et al. Aided perception of /s/ and /z/ by hearing-impaired children. Ear Hear. 2002;23:316–324. doi: 10.1097/00003446-200208000-00007. [DOI] [PubMed] [Google Scholar]

- Strouse A, Ashmead DH, Ohde RN, et al. Temporal processing in the aging auditory system. J Acoust Soc Am. 1998;104:2385–2399. doi: 10.1121/1.423748. [DOI] [PubMed] [Google Scholar]

- Studebaker GA. A ‘rationalized’ arcsine transform. J Speech Hear Res. 1985;28:455–462. doi: 10.1044/jshr.2803.455. [DOI] [PubMed] [Google Scholar]

- Sweetow R, Palmer CV. Efficacy of individual auditory training in adults: A systematic review of the evidence. J Am Acad Audiol. 2005;16:494–504. doi: 10.3766/jaaa.16.7.9. [DOI] [PubMed] [Google Scholar]

- Turner CW, Cummings KJ. Speech audibility for listeners with high-frequency hearing loss. Am J Audiol. 1999;8:47–56. doi: 10.1044/1059-0889(1999/002). [DOI] [PubMed] [Google Scholar]

- Turner CW, Henry BA. Benefits of amplification for speech recognition in background noise. J Acoust Soc Am. 2002;112:1675–1680. doi: 10.1121/1.1506158. [DOI] [PubMed] [Google Scholar]

- Veneman CE, Gordon-Salant S, Matthews LJ, et al. Age and measurement time-of-day effects on speech recognition in noise. Ear Hear. 2012;34:288–299. doi: 10.1097/AUD.0b013e31826d0b81. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Vickers DA, Moore BCJ, Baer T. Effects of low-pass filtering on the intelligibility of speech in quiet for people with and without dead regions at high frequencies. J Acoust Soc Am. 2001;110:1164–1175. doi: 10.1121/1.1381534. [DOI] [PubMed] [Google Scholar]

- Walker G, Dillon H, Byrne D. Sound field audiometry: Recommended stimuli and procedures. Ear Hear. 1984;5:13–21. doi: 10.1097/00003446-198401000-00005. [DOI] [PubMed] [Google Scholar]

- Zekveld AA, Kramer SE, Kessens JM, et al. User evaluation of a communication system that automatically generates captions to improve telephone communication. Trends Amp. 2009;13:44–68. doi: 10.1177/1084713808330207. [DOI] [PMC free article] [PubMed] [Google Scholar]