Abstract

Infants segment words from fluent speech during the same period when they are learning phonetic categories, yet accounts of phonetic category acquisition typically ignore information about the words in which sounds appear. We use a Bayesian model to illustrate how feedback from segmented words might constrain phonetic category learning by providing information about which sounds occur together in words. Simulations demonstrate that word-level information can successfully disambiguate overlapping English vowel categories. Learning patterns in the model are shown to parallel human behavior from artificial language learning tasks. These findings point to a central role for the developing lexicon in phonetic category acquisition and provide a framework for incorporating top-down constraints into models of category learning.

Keywords: language acquisition, phonetic category learning, Bayesian inference

One of the first challenges for language learners is deciding which speech sound distinctions are and are not relevant in their native language. Learning to group perceptual stimuli into categories is a complex task. Categories often overlap, and boundaries are not always clearly defined. This is especially apparent when one looks at sound categories that occur in natural language. Phonetic categories, particularly vowel categories, show substantial acoustic overlap (Figure 2a).1 Even a single speaker’s productions of a specific category in a specific context are variable. Phonetic categories contain even more variability across ranges of speakers and contexts. The high degree of overlap suggests that infants learning language sometimes need to attend carefully to slight differences in pronunciation between different categories while simultaneously ignoring large degrees of within-category variability.

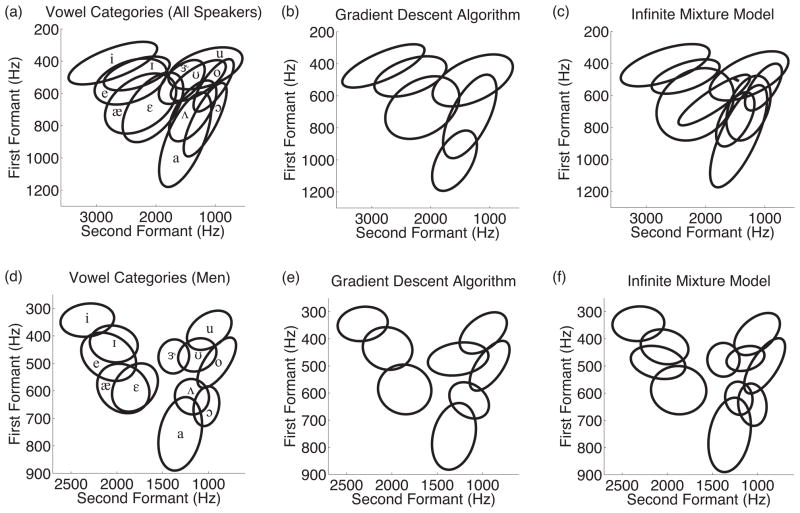

Figure 2.

Results from Simulation 1. Ellipses delimit the area containing 90% of vowel tokens for (a) the vowel categories for all speakers from Hillenbrand et al. (1995) that were used to generate the first corpus, and for the resulting categories found by (b) the gradient descent algorithm and (c) the infinite mixture model; and for (d) the vowel categories for men only from Hillenbrand et al. (1995) that were used to generate the second corpus, and for the resulting categories found by (e) the gradient descent algorithm and (f) the infinite mixture model.

Infants nevertheless appear to learn about the sound categories of their native language quite early. Babies initially discriminate sound contrasts whether or not they are functionally useful in the native language, but this ability declines for most non-native consonant contrasts between six and twelve months of age (Werker & Tees, 1984). During the same period, infants’ ability to discriminate perceptually difficult consonant contrasts in their native language is enhanced (Narayan, Werker, & Beddor, 2010). Vowel perception begins to reflect the learner’s native language as early as six months (Kuhl, Williams, Lacerda, Stevens, & Lindblom, 1992). These perceptual changes are generally interpreted as evidence for infants’ developing knowledge of native phonetic categories, implying that young learners have a remarkable ability to acquire speech sound categories amidst high acoustic overlap.

Identifying the mechanisms that support infants’ early language learning abilities has been a central focus of research in language acquisition. Statistical learning theories propose that infants acquire each layer of structure by observing statistical dependencies in their input. Infants show robust sensitivity to statistical patterns. They extract phonological and phonotactic regularities that govern sound sequences (Seidl, Cristiá, Bernard, & Onishi, 2009; White, Peperkamp, Kirk, & Morgan, 2008), use transitional probabilities to segment fluent speech into word-sized units (Pelucchi, Hay, & Saffran, 2009; Saffran, Aslin, & Newport, 1996), and notice adjacent and non-adjacent dependencies between words in grammar learning tasks (Gómez, 2002; Gómez & Gerken, 1999). Learners are also sensitive to statistical structure in non-linguistic stimuli such as visual shapes (Fiser & Aslin, 2002) and auditory tones (Saffran, Johnson, Aslin, & Newport, 1999), suggesting that statistical learning is a domain general strategy for discovering structure in the world.

Distributional learning has been proposed as a statistical learning mechanism for phonetic category acquisition (Maye & Gerken, 2000; Maye, Werker, & Gerken, 2002). Learners are hypothesized to obtain information about which sounds are contrastive in their native language from the distributions of sounds they hear. Learners hearing a bimodal distribution of sounds along a particular acoustic dimension can infer that the language contains two categories along that dimension; conversely, a unimodal distribution provides evidence for a single phonetic category. Distributional learning is consistent with empirical evidence showing that infants attend to distributional cues at the age when they are first learning phonetic categories (Maye et al., 2002). Computational modeling results also suggest that a distributional learning strategy can be successful at recovering phonetic categories that have sufficient separation in acoustic space (McMurray, Aslin, & Toscano, 2009; Vallabha, McClelland, Pons, Werker, & Amano, 2007). However, distributional learning is less effective when categories have a high degree of overlap. Overlapping categories pose a problem because the distribution of sounds in two overlapping categories can appear unimodal (Figure 1), misleading a learner into believing there are too few categories.

Figure 1.

The problem of overlapping categories. (a) Distribution of sounds in two overlapping categories. The points were sampled from the Gaussian distributions representing the /ɪ/ and /e/ categories based on men’s productions. (b) These sounds appear as a unimodal distribution when unlabeled, creating a difficult problem for a distributional learner.
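The way overlap can hide a category boundary can be checked numerically. The following is a minimal sketch rather than part of the simulations reported in this article: it assumes a single acoustic dimension, two equally frequent categories with a shared standard deviation, and arbitrary made-up values instead of measured formants. The function name `count_modes` and its parameters are hypothetical. Under these assumptions, the mixture density has two peaks only when the category means are more than two standard deviations apart.

```python
import numpy as np

def gaussian_pdf(x, mean, sd):
    """Density of a one-dimensional Gaussian evaluated at x."""
    return np.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * np.sqrt(2.0 * np.pi))

def count_modes(mean_a, mean_b, sd, weight_a=0.5, grid_size=20001):
    """Count local maxima of a two-category mixture density on a fine grid.

    Illustrative assumption: both categories share the same standard deviation.
    """
    lo = min(mean_a, mean_b) - 5 * sd
    hi = max(mean_a, mean_b) + 5 * sd
    x = np.linspace(lo, hi, grid_size)
    density = (weight_a * gaussian_pdf(x, mean_a, sd)
               + (1 - weight_a) * gaussian_pdf(x, mean_b, sd))
    interior = density[1:-1]
    # A grid point is a peak if it exceeds its left neighbor and is
    # at least as high as its right neighbor.
    is_peak = (interior > density[:-2]) & (interior >= density[2:])
    return int(np.sum(is_peak))

# Means one standard deviation apart: the unlabeled distribution has one peak.
overlapping = count_modes(0.0, 1.0, sd=1.0)
# Means four standard deviations apart: the two categories are visible as two peaks.
separated = count_modes(0.0, 4.0, sd=1.0)
```

A learner who relies only on the number of peaks in the unlabeled distribution would posit a single category in the first case, which is the situation depicted in Figure 1.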

In this article, we show that learners can overcome the problem of overlapping categories by using feedback from higher levels of structure to constrain category acquisition. Specifically, we show that using feedback from a developing lexicon can improve phonetic category acquisition. Interactive learning of words and sounds is beneficial when sounds occur in distinct lexical contexts. The blue and red categories from Figure 1 overlap acoustically when considered in isolation, but an interactive learner can notice that, for example, the blue sounds occur in the word milk and the red sounds occur in the word game. These lexical contexts are easily distinguishable on the basis of acoustic information and can be used as disambiguating cues to sound category membership. This type of interactive learning does not require meanings or referents to be available to the learner; it requires only that learners use acoustic information to categorize word tokens. Thus, information from lexical contexts has the potential to contribute to early development, even before infants have learned the meanings of many words. Our theoretical framework is similar to that proposed by Swingley (2009), but here we provide a formal account of this interactive learning hypothesis. Our analysis is framed at Marr’s (1982) computational level, examining the statistical solution to the sound category learning problem in a structured environment where sounds are organized into words. We quantitatively investigate the potential benefit of interactive learning by building a computational model that learns to categorize sounds and words simultaneously and show that word-level information provides an informative cue that can help learners acquire phonetic categories.

Although our focus in this article is on linguistic categories, the modeling that we develop here may well have broader application. Distributional learning, for example, can be thought of as a domain general strategy for recovering underlying structure. Learning mechanisms that rely on probability density estimation, in which categories are defined by their probability of producing different stimuli, are popular in research on categorization (Ashby & Alfonso-Reese, 1995). The specific models that have been proposed as accounts of phonetic category learning (e.g., Gaussian mixture models, de Boer & Kuhl, 2003; Vallabha et al., 2007; McMurray et al., 2009; Toscano & McMurray, 2010; Dillon, Dunbar, & Idsardi, 2013) have also been proposed as accounts of category learning more generally (Anderson, 1990; Rosseel, 2002; Sanborn, Griffiths, & Navarro, 2010). While studies of category learning have tended to focus on the acquisition of categories in isolation from their context, earlier work on the effects of prior knowledge on category learning (e.g., Pazzani, 1991; Heit & Bott, 2000; Wattenmaker, Dewey, Murphy, & Medin, 1986; Murphy & Allopenna, 1994) and more recent work on the consequences of learning multiple categories simultaneously (Gureckis & Goldstone, 2008; Canini, Shashkov, & Griffiths, 2010; Canini & Griffiths, 2011) suggests that our conclusions about the importance of using information from multiple levels of structure may have implications beyond just language acquisition.

In the following, we first introduce the idea of modeling category learning as density estimation and show how distributional learning can be viewed in this framework. We then show through an initial simulation that distributional learning can be challenging when categories have a high degree of overlap. Our next section explores how constraints from higher-level structure might supplement distributional learning, formalizing a lexical-distributional model that learns word- and sound-level information simultaneously. Three simulations quantify the benefit of interactive learning by comparing performance of our lexical-distributional model directly to that of distributional models. We conclude by showing that qualitative behavior of our lexical-distributional model mirrors patterns from experiments on sound category learning, suggesting that people behave as interactive learners, and by discussing the plausibility of the interactive learning approach for language acquisition and for category learning more generally.

Distributional learning

Rational analyses of category learning (e.g., Anderson, 1990; Ashby & Alfonso-Reese, 1995) reduce the psychological problem of learning a new category to the statistical problem of density estimation: Learning a category requires estimating a probability distribution over the items that belong to the category. A learner can use the resulting distributions to quickly decide which category a new item belongs to, with categorization being a simple matter of probabilistic inference. This perspective provides a novel interpretation of traditional models of categorization such as prototype and exemplar models (Ashby & Alfonso-Reese, 1995) and provides a productive link between ideas from statistics and theories of human category learning (Griffiths, Sanborn, Canini, Navarro, & Tenenbaum, 2011).

Distributional learning accounts of early language acquisition (Maye et al., 2002) likewise propose that phonetic category acquisition can be viewed as a density estimation problem. That is, adult-like discrimination and processing abilities are assumed to reflect knowledge of the distributions associated with native language phonetic categories. Distributional learning specifies one way in which this knowledge might be acquired: Learners observe sounds in their input that cluster in perceptual space and hypothesize categories to coincide with the locations of those clusters. They can use the clusters they observe to estimate the probability distribution associated with each category. This gives them a way of simultaneously learning which categories are in their language and which sounds are associated with each category.

Distributional learning is supported by experimental evidence that infants are sensitive to distributions of sounds at six and eight months. Maye et al. (2002) familiarized infants with stop consonants ranging from unaspirated [t] to [d]. Although these sounds occur as variants of different phonemes in English, they are not used contrastively, and always appear in different phonological environments. Adults have previously been shown to have difficulty distinguishing these sounds in laboratory settings, whereas young infants are sensitive to the distinction (Pegg & Werker, 1997). Maye et al. investigated infants’ ability to use statistical information to constrain how they interpret these sounds. During familiarization, infants heard either a bimodal distribution of sounds, mimicking the distribution that might be associated with two phonetic categories, or a unimodal distribution, mimicking the distribution that might be associated with a single phonetic category. Infants who heard the sounds embedded in a bimodal distribution exhibited better discrimination of the endpoint stimuli at test than infants who heard the sounds embedded in a unimodal distribution, suggesting that participants’ sensitivity to this contrast had changed to reflect the distributions of sounds that they heard. Bimodal distributions can also facilitate discrimination of a difficult voicing continuum (Maye, Weiss, & Aslin, 2008) and of a place of articulation continuum (Yoshida, Pons, Maye, & Werker, 2010) in infants. Adults retain sensitivity to distributional information in consonants (Maye & Gerken, 2000) and vowels (Gulian, Escudero, & Boersma, 2007), though sensitivity to distributional cues appears to decrease as phonetic category acquisition progresses (Yoshida et al., 2010).

The period around six to eight months when infants show sensitivity to distributional information corresponds closely to the period of time when infants lose sensitivity to non-native contrasts (Werker & Tees, 1984). This suggests that learners can make use of distributional information during the time when they are acquiring phonetic categories, and it is intuitively plausible that finding clusters of sounds would be a useful strategy for acquiring phonetic categories. Computational modeling allows us to look more carefully at the predicted outcome of distributional learning to determine whether infants’ sensitivity would be predicted to facilitate phonetic category acquisition. If computational models can recover the sound categories of a natural language through a purely distributional learning strategy, then this would lend credence to the possibility that infants can do the same. The remainder of this section provides an overview of computational models that have been used to investigate the utility of distributional learning for phonetic category acquisition.

Mixture models

Models of phonetic category acquisition have implemented distributional learning by assuming that learners need to find the set of categories that describe the distribution of sounds in acoustic space, where each category is represented by a Gaussian (i.e., normal) distribution (de Boer & Kuhl, 2003; Dillon et al., 2013; McMurray et al., 2009; Toscano & McMurray, 2010; Vallabha et al., 2007). In this framework, phonetic category learning consists of jointly inferring the mean, covariance, and frequency of each Gaussian category as well as the category label of each sound. This inference process has been implemented through a type of model known as a Gaussian mixture model, which has also appeared in the general literature on category learning (Anderson, 1990; Rosseel, 2002; Sanborn et al., 2010). By comparing the outcome of learning in these models to the true set of phonetic categories in a language, we can gain insight into the plausibility of distributional learning as a mechanism for phonetic category acquisition.

Mixture models assume that there are several categories and that each of the observed data points was generated from one of these categories. In phonetic category acquisition, the categories are phonetic categories and the data points represent speech sounds. Mixture models typically assume that there is a fixed number of categories C; here we refer to each category by a number c ranging from 1 to C. Each category is associated with a probability distribution p(x|c) which defines the probability of generating a stimulus value x from category c. The probability distribution p(x|c) in mixture models can take a variety of forms, but here we focus on the case in which p(x|c) is a Gaussian distribution, so that recovering p(x|c) is equivalent to recovering a mean μc and a covariance matrix Σc. The observed data points are referred to as xi. Each data point is assumed to be associated with a label zi, ranging between 1 and C, that indicates which category it belongs to. In an unsupervised learning setting such as language acquisition, the labels zi are unobserved. Learners need to recover the probability distribution p(x|c) associated with each category as well as the label zi associated with each data point.

Inferring a probability distribution p(x|c) is straightforward when a learner knows which stimuli belong to the category (i.e., when zi is known). If p(x|c) is a Gaussian distribution, the parameter estimates for μ and Σ that maximize the probability of the data are given by the empirical mean and covariance

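$$\hat{\mu}_c = \frac{1}{n} \sum_{i:\, z_i = c} x_i, \qquad \hat{\Sigma}_c = \frac{1}{n} \sum_{i:\, z_i = c} (x_i - \hat{\mu}_c)(x_i - \hat{\mu}_c)^{T} \tag{1}$$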

where n denotes the number of observed data points xi for which zi = c. These equations give optimal estimates for category parameters when a learner has no prior knowledge about what the category mean and covariance should be, but it is also straightforward to incorporate prior beliefs about these parameters in a Bayesian framework using a type of prior distribution known as a normal inverse Wishart distribution (see Gelman, Carlin, Stern, & Rubin, 1995, for details).
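As a small illustration of Equation 1, the sketch below computes the empirical mean and covariance of the points labeled with a given category. It is only an illustration under stated assumptions: the function name `estimate_category`, the array layout (one row per sound, one column per acoustic dimension), and the toy data are hypothetical, and the covariance is normalized by n (the maximum likelihood estimate) rather than by n - 1.

```python
import numpy as np

def estimate_category(points, labels, category):
    """Maximum likelihood mean and covariance for one labeled category.

    points: array of shape (num_points, num_dims), one row per sound.
    labels: array of shape (num_points,), the category label z_i of each sound.
    """
    members = points[labels == category]
    n = members.shape[0]
    mean = members.sum(axis=0) / n
    centered = members - mean
    # Normalize by n, matching the maximum likelihood estimate.
    cov = centered.T @ centered / n
    return mean, cov

# Toy data: four points in category 0 and one point in category 1.
points = np.array([[0.0, 0.0], [2.0, 0.0], [0.0, 2.0], [2.0, 2.0], [10.0, 10.0]])
labels = np.array([0, 0, 0, 0, 1])
mean_0, cov_0 = estimate_category(points, labels, category=0)
```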

Conversely, if the probability density function p(x|c) and frequency p(c) associated with each category are known, it is straightforward to infer zi, assigning a novel unlabeled data point to a category. This amounts to using Bayes’ rule,

$$p(c \mid x) = \frac{p(x \mid c)\, p(c)}{\sum_{c'} p(x \mid c')\, p(c')} \tag{2}$$

to compute the posterior probability of category membership, where x is the unlabeled stimulus, c denotes a particular category, and the sum in the denominator ranges over the set of all possible categories.
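The categorization step can be sketched in a few lines. The example below is a hedged illustration of Bayes’ rule with Gaussian categories; the function names, the two toy categories, and their parameters are assumptions introduced for illustration and are not values from any corpus.

```python
import numpy as np

def gaussian_density(x, mean, cov):
    """Multivariate Gaussian density p(x | c) for one category."""
    d = mean.shape[0]
    diff = x - mean
    quad = diff @ np.linalg.solve(cov, diff)
    norm = np.sqrt((2.0 * np.pi) ** d * np.linalg.det(cov))
    return np.exp(-0.5 * quad) / norm

def posterior_over_categories(x, means, covs, priors):
    """Posterior p(c | x): likelihood times prior, normalized over categories."""
    joint = np.array([prior * gaussian_density(x, mean, cov)
                      for mean, cov, prior in zip(means, covs, priors)])
    return joint / joint.sum()

# Two hypothetical categories with identity covariance and equal frequency.
means = [np.array([0.0, 0.0]), np.array([2.0, 0.0])]
covs = [np.eye(2), np.eye(2)]
priors = [0.5, 0.5]

# A sound halfway between the two means is equally likely to belong to either.
midpoint_posterior = posterior_over_categories(np.array([1.0, 0.0]), means, covs, priors)
# A sound at the first mean is assigned mostly to the first category.
at_mean_posterior = posterior_over_categories(np.array([0.0, 0.0]), means, covs, priors)
```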

The problem faced by language learners acquiring phonetic categories is difficult because neither category assignments zi for individual stimuli, nor probability density functions p(x|c) associated with phonetic categories, are known in advance. This produces a type of chicken-and-egg learning problem that is common to many problems in language acquisition. Algorithms such as Expectation Maximization (EM) (Dempster, Laird, & Rubin, 1977) provide a principled solution to these types of problems by searching for the parameters and category labels that maximize the probability of the data. In phonetic category acquisition, learners using the EM algorithm would begin with an initial hypothesis about the category density functions, then iterate back and forth between inferring category assignments for each sound they have heard according to Equation 2 and inferring probability density functions for each category according to Equation 1.
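The alternation between these two steps can be sketched as follows. This is a minimal, generic version of EM for a Gaussian mixture, not the implementation used in any of the studies cited here. It assumes soft (probabilistic) category assignments, a caller-supplied initial guess for the category means, initial covariances equal to the overall data covariance, and a small ridge term added for numerical stability. All function and parameter names are hypothetical.

```python
import numpy as np

def log_gaussian(points, mean, cov):
    """Log density of each row of points under one Gaussian category."""
    d = points.shape[1]
    diff = points - mean
    quad = np.sum(diff * np.linalg.solve(cov, diff.T).T, axis=1)
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (quad + logdet + d * np.log(2.0 * np.pi))

def em_gaussian_mixture(points, init_means, num_iters=50, ridge=1e-6):
    """Fit a Gaussian mixture by alternating assignment and estimation steps."""
    n, d = points.shape
    means = np.array(init_means, dtype=float)
    num_categories = means.shape[0]
    base_cov = np.atleast_2d(np.cov(points.T)) + ridge * np.eye(d)
    covs = np.array([base_cov.copy() for _ in range(num_categories)])
    weights = np.full(num_categories, 1.0 / num_categories)
    for _ in range(num_iters):
        # E-step: posterior category membership for every sound (Bayes' rule).
        log_joint = np.stack(
            [np.log(weights[c]) + log_gaussian(points, means[c], covs[c])
             for c in range(num_categories)], axis=1)
        log_joint -= log_joint.max(axis=1, keepdims=True)
        resp = np.exp(log_joint)
        resp /= resp.sum(axis=1, keepdims=True)
        # M-step: weighted versions of the empirical mean and covariance.
        counts = resp.sum(axis=0)
        weights = counts / n
        for c in range(num_categories):
            means[c] = resp[:, c] @ points / counts[c]
            diff = points - means[c]
            covs[c] = (resp[:, c, None] * diff).T @ diff / counts[c] + ridge * np.eye(d)
    return weights, means, covs, resp

# Toy data: two well-separated synthetic clusters (not real speech data).
rng = np.random.default_rng(1)
toy_points = np.vstack([rng.normal(0.0, 0.5, size=(200, 2)),
                        rng.normal(5.0, 0.5, size=(200, 2))])
weights, means, covs, resp = em_gaussian_mixture(toy_points, [[1.0, 1.0], [4.0, 4.0]])
```

Note that the number of categories is fixed by the number of initial means, and that the result can depend on the initial guess.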

The EM algorithm has been used to test distributional models on English vowel categories. de Boer and Kuhl (2003) fit Gaussian mixture models to actual formant values in mothers’ spontaneous productions of the /a/, /i/, and /u/ phonemes from the words sock, sheep, and shoe. They compared model performance from infant- and adult-directed speech and found better performance when the models were trained on infant-directed speech, as measured by the accuracy of the inferred category centers. This benefit of infant-directed speech as training data was attributed to the increased separation between categories that is typical of infant-directed speech (Kuhl et al., 1997; but see McMurray, Kovack-Lesh, Goodwin, & McEchron, submitted). However, note that the /i/, /u/, and /a/ vowel categories used by de Boer and Kuhl (2003) are precisely those vowel categories with maximal separation in acoustic space, and children acquiring a full set of phonetic categories would face a more difficult problem. We return to the issue of category separation below.

Inferring the number of categories

The EM algorithm requires the number of categories to be specified in advance. However, it is unlikely that human learners know in advance how many phonetic categories they will be learning, because this number varies across languages. McMurray et al. (2009) and Vallabha et al. (2007) proposed an online sequential learning algorithm similar to EM that provides a way around this limitation. The algorithm resembles EM in that it iterates between estimation of category parameters and assignment of a sound to a particular category. During each iteration the model observes a single speech sound and assigns it to a category. It then updates the mean, covariance, and frequency parameters of each category on the basis of that sound (see Vallabha et al., 2007, for a detailed description of these updates, which proceed by a method of gradient descent). Automatic inference of the number of categories is achieved by eliminating categories whose frequency drops below a predefined threshold. The model begins with a high number of phonetic categories and prunes those that are not needed.

Nonparametric Bayesian models provide a second option for flexibly learning the number of categories. A type of nonparametric Bayesian model known as the Dirichlet process (Ferguson, 1973) has been used to model category learning in language and other domains (Anderson, 1990; Goldwater, Griffiths, & Johnson, 2009, 2011; M. Johnson, Griffiths, & Goldwater, 2007; Sanborn et al., 2010). Dirichlet processes provide a modeling framework similar to the mixture models described above, but they differ from traditional mixture models in that they provide a mechanism for inferring an unbounded number of categories. Because of this, Dirichlet process models are often referred to as infinite mixture models (IMM). They infer the correct number of categories by considering a potentially infinite number of categories but encoding a prior bias toward fewer categories. This bias in the prior distribution encourages the model to use only those categories that are necessary to explain the data. Here we implement distributional learning using the infinite Gaussian mixture model (Rasmussen, 2000), which assumes that the probability density function p(x|c) associated with each category is Gaussian. We use Gibbs sampling (Geman & Geman, 1984), a form of Markov chain Monte Carlo, as an inference algorithm for this model. The details of the model and inference algorithm are given in Appendix A.
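The prior bias toward fewer categories can be made concrete through the Chinese restaurant process, a standard way of describing the distribution over category assignments implied by a Dirichlet process. The sketch below illustrates only this prior, not the Gibbs sampler described in Appendix A. The function names and the parameter name `alpha` (standing in for the concentration parameter) are assumptions introduced for illustration.

```python
import numpy as np

def crp_probabilities(counts, alpha):
    """Prior probability that the next sound joins each existing category,
    followed by the probability that it starts a new category."""
    counts = np.asarray(counts, dtype=float)
    total = counts.sum() + alpha
    return np.append(counts / total, alpha / total)

def sample_crp_labels(num_points, alpha, seed=0):
    """Draw category labels for num_points sounds from the prior alone."""
    rng = np.random.default_rng(seed)
    counts = []
    labels = []
    for _ in range(num_points):
        probs = crp_probabilities(counts, alpha)
        choice = int(rng.choice(len(probs), p=probs))
        if choice == len(counts):
            counts.append(1)      # open a new category
        else:
            counts[choice] += 1   # join an existing category
        labels.append(choice)
    return np.array(labels), counts

# With categories of size 3 and 1 and alpha = 1, the next sound joins them
# with probability 3/5 and 1/5, and starts a new category with probability 1/5.
example_probs = crp_probabilities([3, 1], alpha=1.0)
labels, counts = sample_crp_labels(200, alpha=1.0)
```

Existing categories attract new sounds in proportion to their size, and the probability of opening a new category shrinks as more sounds are observed, so smaller values of the concentration parameter yield fewer categories a priori.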

The gradient descent models and the IMM each provide a way of inferring the number of categories present in the data, and each can be evaluated on its ability to recover the correct number of categories. Previous work has examined this ability in both types of models. Using the gradient descent method, McMurray et al. (2009) focused on a voicing contrast in consonants. They generated training data for the models by sampling sounds from Gaussian distributions that mimicked the voice onset time (VOT) distributions of voiced and voiceless stops, then showed that their learning algorithm recovered these two categories correctly. Vallabha et al. (2007) performed similar experiments using vowels. They generated training data that mimicked the distributions associated with single speakers producing /i/, /ɪ/, /e/, and /ε/ in English or /i/, /iː/, /e/, and /eː/ in Japanese. The most frequent learning outcome for models trained on these data was to recover four categories in each case. Models trained on English input data recovered categories that were distinguished along all three relevant dimensions (F1, F2, and duration), whereas models trained on Japanese input data recovered categories that were distinguished primarily by F1 and duration. For both consonants and vowels, then, the gradient descent algorithm has yielded initial success in inferring the correct number of categories.

Dillon et al. (2013) examined the performance of the IMM in acquiring a three-category vowel system from Inuktitut. They considered the possibility that the model might acquire categories at either the phonemic level (3 categories) or the phonetic level (6 categories). Simulations showed that given different sets of parameters, the model could acquire either three or six categories, supporting a successful outcome of distributional learning. However, the authors also identified several ways in which the models’ solutions were insufficiently accurate to provide input for learning higher levels of linguistic structure.

Despite the success of these models, it is not yet clear whether distributional learning can accommodate more realistic input data. Phonetic categories, particularly vowel categories, can show a high degree of overlap (e.g., Peterson & Barney, 1952; Hillenbrand, Getty, Clark, & Wheeler, 1995), whereas the input data to these computational models contained only limited category overlap. Categories involved in the voicing contrast from McMurray et al. (2009) are well separated. The vowel contrasts used by Vallabha et al. (2007) were composed of neighboring categories that presumably had some degree of overlap, but even here, each model was trained on data from a single speaker. The training data therefore had lower within-category variability than one would expect to find in real language input, and this presumably led to a lower degree of overlap. The data used by Dillon et al. (2013) contained higher amounts of category overlap, but in this case the authors identified several shortcomings in the distributional model’s performance. Because their paper used the IMM, and used training data that did not conform to their Gaussian assumptions, it is difficult to compare their results directly to those obtained through the gradient descent algorithm on data generated from Gaussians. Our initial simulation tests both types of distributional learning models directly on a single dataset in which the categories have a high degree of overlap, comparing this to performance on a dataset in which categories have a lower degree of overlap.

Simulation 1: The problem of overlapping categories

Overlap between categories can potentially make the learning problem more difficult because the distribution of sounds from two categories can appear unimodal, misleading a distributional learner into assigning the sounds to one category. To explore this challenge, we test the ability of distributional learning models to recover the vowel categories from Hillenbrand et al. (1995). These categories exhibit high acoustic variability and therefore provide a challenging test case for distributional models.

Our simulations use two distributional learning models: the gradient descent algorithm from Vallabha et al. (2007) and the IMM. Each model provides a unique set of advantages. The gradient descent model has been used previously to investigate phonetic category acquisition, and its use here facilitates comparison with this previous work. Its algorithm is sequential and is thus arguably more psychologically plausible than the Gibbs sampling algorithm used with the IMM (but see Sanborn et al., 2010, for a sequential algorithm that can be used with the IMM). However, the drawback of using gradient descent is that the model cannot find a set of globally optimal category parameters, and instead converges to a locally optimal solution. The Gibbs sampling algorithm used with the IMM has some potential to overcome the problem of local optima. Furthermore, there is a straightforward way to extend the IMM to incorporate multiple layers of structure (Teh, Jordan, Beal, & Blei, 2006), and we take advantage of this flexibility to create the interactive lexical-distributional learning model introduced in the next section. Using the IMM as a distributional learning model thus allows for a direct comparison between the distributional and lexical-distributional learning strategies.

Throughout this article, we evaluate models on their ability to recover the correct number of categories, a measure that has become standard for evaluating success in unsupervised models of phonetic category learning (e.g., Dillon et al., 2013; McMurray et al., 2009; Vallabha et al., 2007). In addition, to assess the quality of these categories, we evaluate the models’ ability to identify which sounds from the corpus are in each category. Our analyses look at the categories recovered by each model, rather than at the models’ ability to use those categories in specific psycholinguistic tasks. Our assumption is that a learning strategy that supports robust category learning would also support use of those categories, either implicitly or explicitly, in psycholinguistic tasks.

Methods

Corpus preparation

Phoneme and word frequencies were obtained from the CHILDES parental frequency count (MacWhinney, 2000; Li & Shirai, 2000). We converted all words in the frequency data to their corresponding phonemic representations using the CMU pronouncing dictionary. If the dictionary contained multiple phonemic forms for a word, the first was used. Stress markings were removed, diphthongs /aʊ/, /aɪ/, and /ɔɪ/ were converted to sequences of two phonemes, and /ɝ/ was treated as a single phoneme rather than a sequence of two phonemes. Any words whose orthographic representation in CHILDES contained symbols other than letters of the alphabet, hyphen, and apostrophe were excluded. In addition, words not found in the CMU pronouncing dictionary were excluded. This resulted in the exclusion of 7,911 types, representing 28,447 tokens (approximately 1% of tokens), and left us with a phonematized word list of 15,825 orthographic word types, representing 2,548,494 tokens. This phonematized word list was used to compute empirical probabilities for each vowel (Table 1) for constructing the corpora in Simulations 1 and 2 and to directly sample word tokens for constructing the corpora in Simulations 3 and 4.
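The preparation steps above can be sketched roughly as follows. This is a hypothetical reconstruction, not the script used to build the corpus. It assumes that pronunciations are given as space-separated ARPAbet symbols with stress digits, that the dictionary is keyed by uppercase word forms, and that each word maps to a list of pronunciations in dictionary order. The particular two-phoneme expansions chosen for the diphthongs are placeholders, since the text does not specify which pairs were used.

```python
import re

# Placeholder expansions (assumption): the source states only that
# /aʊ/, /aɪ/, and /ɔɪ/ were converted to sequences of two phonemes.
DIPHTHONG_SPLITS = {"AW": ["AA", "UH"], "AY": ["AA", "IH"], "OY": ["AO", "IH"]}

# Only letters, hyphens, and apostrophes are allowed in the orthographic form.
VALID_WORD = re.compile(r"^[A-Za-z'-]+$")

def phonemize(pronunciation):
    """Strip stress digits and split diphthongs; ER stays a single phoneme."""
    phonemes = []
    for symbol in pronunciation.split():
        base = symbol.rstrip("012")
        phonemes.extend(DIPHTHONG_SPLITS.get(base, [base]))
    return phonemes

def build_word_list(frequencies, dictionary):
    """Map each usable word to (phonemic form, token count).

    frequencies: dict from orthographic word to token count.
    dictionary: dict from uppercase word to a list of pronunciation strings.
    """
    word_list = {}
    for word, count in frequencies.items():
        if not VALID_WORD.match(word):
            continue                      # excluded: contains other symbols
        pronunciations = dictionary.get(word.upper())
        if not pronunciations:
            continue                      # excluded: not in the dictionary
        word_list[word] = (phonemize(pronunciations[0]), count)
    return word_list

# Toy inputs (invented for illustration).
toy_frequencies = {"milk": 5, "b4": 2, "zzyx": 1}
toy_dictionary = {"MILK": ["M IH1 L K"]}
toy_word_list = build_word_list(toy_frequencies, toy_dictionary)
```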

Table 1.

Normalized empirical probabilities of each vowel computed from the phonematized CHILDES parental frequency count.

| Vowel | Empirical probability in word tokens | Empirical probability in word types |
|---|---|---|
| /æ/ | 0.080 | 0.068 |
| /ɑ/ | 0.125 | 0.105 |
| /ɔ/ | 0.038 | 0.035 |
| /ε/ | 0.067 | 0.075 |
| /e/ | 0.039 | 0.048 |
| /ɝ/ | 0.035 | 0.083 |
| /ɪ/ | 0.177 | 0.169 |
| /i/ | 0.077 | 0.099 |
| /o/ | 0.061 | 0.041 |
| /ʊ/ | 0.041 | 0.019 |
| /ʌ/ | 0.176 | 0.229 |
| /u/ | 0.083 | 0.030 |

We obtained phonetic category parameters from production data collected by Hillenbrand et al. (1995). Production data by men, women, and children were used to compute empirical estimates of category means and covariances in the two-dimensional space given by the first two formant values using Equation 1. This gave us a set of phonetic categories with high variability and therefore high overlap among neighboring categories. To obtain parameters for a set of categories with lower overlap, we estimated means and covariances based only on productions by men. Note that schwa was absent both from the production data from Hillenbrand et al. (1995) and from the CMU pronouncing dictionary. Thus, schwa was not included as a vowel in any of our simulations.

Vowel tokens in each corpus were sampled from these Gaussian distributions. The same Gaussian parameters were used to sample each token of a phonetic category that appeared in a corpus; the acoustic values in the corpora thus did not reflect any contextual (e.g., coarticulatory) effects, and conformed to the Gaussian assumptions of all the models tested.

For Simulation 1, token frequencies from Table 1 were used to sample the labels zi for two corpora of 20,000 vowels each. To produce each acoustic value xi, a set of formant values was sampled from the Gaussian distribution associated with category zi. The first corpus used phonetic category distributions computed from all speakers’ productions, and the second corpus used phonetic category distributions computed from men’s productions only. This created two corpora consisting of 20,000 F1-F2 pairs, one with high within-category variability and one with lower within-category variability. The label for each sound zi was not provided to the models as training data, but was used for model evaluation.
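
The sampling procedure for a Simulation 1 corpus can be sketched as follows. This is a minimal illustration under stated assumptions: the two categories and their means, covariances, and probabilities are hypothetical placeholders rather than the Hillenbrand et al. (1995) estimates, and the function names are introduced here.

```python
import math
import random

# Hypothetical category parameters (F1, F2 in Hz); the values used in the
# simulations come from the Hillenbrand et al. (1995) productions.
CATEGORIES = {
    "i": {"prob": 0.5, "mean": (300.0, 2300.0),
          "cov": ((2500.0, 0.0), (0.0, 40000.0))},
    "a": {"prob": 0.5, "mean": (750.0, 1300.0),
          "cov": ((6400.0, 1000.0), (1000.0, 22500.0))},
}


def sample_gaussian_2d(mean, cov, rng):
    """Draw one point from a 2-D Gaussian via the Cholesky factor of cov."""
    (a, b), (_, d) = cov
    l11 = math.sqrt(a)
    l21 = b / l11
    l22 = math.sqrt(d - l21 * l21)
    u1, u2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    return (mean[0] + l11 * u1, mean[1] + l21 * u1 + l22 * u2)


def sample_corpus(categories, n_tokens, seed=0):
    """Return a list of (label z_i, acoustic value x_i) pairs."""
    rng = random.Random(seed)
    labels = list(categories)
    weights = [categories[c]["prob"] for c in labels]
    corpus = []
    for z in rng.choices(labels, weights=weights, k=n_tokens):
        params = categories[z]
        x = sample_gaussian_2d(params["mean"], params["cov"], rng)
        corpus.append((z, x))
    return corpus


corpus = sample_corpus(CATEGORIES, n_tokens=20000)
acoustic_values = [x for _, x in corpus]   # what a model sees during training
true_labels = [z for z, _ in corpus]       # held out for evaluation only
```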

Simulation parameters

Parameters used for the gradient descent algorithm were based on those from Vallabha et al. (2007). Like McMurray et al. (2009), however, we found that the initial category variance parameter Cr affected performance of this algorithm. Here we present results using Cr = 0.02, which we found to yield quantitatively and qualitatively better results than the value of 0.2 used by Vallabha et al. (2007). Other parameters, including the number of sweeps and the learning rate parameter, were identical to those used by Vallabha et al. Note that although we used 50,000 sweeps, the training data consisted of only 20,000 points; thus, training points were reused over the course of learning.

Parameters in the IMM include the strength of bias toward fewer phonetic categories and the model’s prior beliefs about phonetic category means and covariances. The bias toward fewer phonetic categories is controlled by the concentration parameter αC, with smaller values corresponding to stronger biases. We explored a range of values for this parameter and found little effect on model performance; these simulations use a value of αC = 10. The prior distribution over phonetic category parameters GC is a normal inverse Wishart distribution that is controlled by three parameters: m0, S0, and ν0. These parameters can be thought of as reflecting the mean, sum of squared deviations from the mean, and number of data points in a small amount of pseudodata that the learner imagines having assigned to each new category. Parameters were set to , and ν0 = 1.001. They therefore encoded a bias toward the center of vowel space that was made as weak as possible2 so that it could be overshadowed by real data.
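
The sketch below illustrates how a concentration parameter governs the bias toward fewer categories. It assumes that the prior over category assignments takes the form of a Chinese restaurant process, a common construction for infinite mixture models. That assumption and the function name `expected_num_categories` are introduced here for illustration and are not taken from the model specification.

```python
def expected_num_categories(alpha, n_points):
    """Expected number of categories under a Chinese restaurant process prior.

    The i-th item (0-indexed) starts a new category with probability
    alpha / (alpha + i), so the expectation is the sum of those terms.
    """
    return sum(alpha / (alpha + i) for i in range(n_points))


# Smaller alpha means fewer expected categories a priori (a stronger bias).
for alpha in (0.1, 1.0, 10.0):
    print(alpha, round(expected_num_categories(alpha, 20000), 1))
```

These are prior expectations only; the number of categories in the posterior also depends on how well each candidate category explains the acoustic data.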

Evaluation

Model performance was evaluated quantitatively by measuring the number of categories recovered by each model and computing two pairwise measures of performance, the F-score and variation of information (VI), which are described in detail in Appendix C. The F-score is a pairwise performance measure that is the harmonic mean of pairwise precision and recall, which are often referred to as accuracy and completeness in the psychology literature. It measures the extent to which pairs of points are correctly categorized together, and ranges between zero and one, with higher numbers corresponding to better performance. VI is a symmetric measure that evaluates the information theoretic difference between the true clustering and the clustering found by the model. It is a positive number, with lower numbers corresponding to better performance. Both performance measures require category assignments for each sound in the corpus. For the IMM we used the category assignments from the final iteration of the Gibbs sampling algorithm; these should correspond to a sample from the posterior distribution on category assignments. The gradient descent learning algorithm does not directly yield a set of category assignments, but we obtained assignments by sampling from the posterior distribution over categories, p(c|x) (Equation 2), for each sound.
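
For concreteness, the two pairwise measures can be sketched as below, using the standard definitions of pairwise precision and recall and of variation of information. The exact formulation in Appendix C may differ in detail; in particular, the base-2 logarithm is an assumption made here.

```python
import math
from collections import Counter


def pairwise_f_score(true_labels, pred_labels):
    """Harmonic mean of pairwise precision and recall."""
    def same_cluster_pairs(counts):
        return sum(n * (n - 1) // 2 for n in counts.values())

    # Pairs placed together in both the true and the predicted clustering.
    both = same_cluster_pairs(Counter(zip(true_labels, pred_labels)))
    pred_pairs = same_cluster_pairs(Counter(pred_labels))
    true_pairs = same_cluster_pairs(Counter(true_labels))
    if both == 0:
        return 0.0
    precision, recall = both / pred_pairs, both / true_pairs
    return 2 * precision * recall / (precision + recall)


def variation_of_information(true_labels, pred_labels):
    """VI = H(true | pred) + H(pred | true); base 2 is an assumption."""
    n = len(true_labels)
    joint = Counter(zip(true_labels, pred_labels))
    true_counts, pred_counts = Counter(true_labels), Counter(pred_labels)
    vi = 0.0
    for (t, p), n_tp in joint.items():
        # -p(t, p) * [log p(t | p) + log p(p | t)]
        vi -= (n_tp / n) * (math.log2(n_tp / pred_counts[p])
                            + math.log2(n_tp / true_counts[t]))
    return vi


true = ["a", "a", "b", "b"]
pred = [0, 0, 0, 1]                      # one "b" token merged into "a"
f = pairwise_f_score(true, pred)         # precision 1/3, recall 1/2
vi = variation_of_information(true, pred)
```

Both functions depend only on which tokens are grouped together, so the arbitrary names that a model gives its categories do not affect the scores.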

Results and discussion

Results from each model are shown in Table 2 and illustrated in Figure 2. Whereas twelve categories were used to generate the corpus, the gradient descent model from Vallabha et al. (2007) recovered only six categories from the corpus with high category overlap and eight categories from the corpus with lower category overlap. The IMM recovered ten and eleven categories from these two corpora, respectively. However, the higher number of categories found by the IMM did not lead to better performance on the quantitative measures in either case. This is likely due to the fact that the extra category divisions found by the IMM did not match precisely with the true category divisions. For example, the long diagonal category in Figure 2c does not correspond to a true category, and even in Figure 2f, the division between the /ɪ/ and /e/ categories is incorrect. Neither model was able to recover the twelve categories used to generate the data. This was true of both corpora, but the problem was more pronounced in the corpus with high acoustic overlap between categories.

Table 2.

Phonetic categorization scores from the infinite mixture model (IMM) and gradient descent algorithm (GD) in Simulation 1.

| | All Speakers: IMM | All Speakers: GD | Men Only: IMM | Men Only: GD |
|---|---|---|---|---|
| Number of categories | 10 | 6 | 11 | 8 |
| F-score | 0.453 | 0.480 | 0.699 | 0.727 |
| Variation of information | 3.195 | 2.677 | 1.678 | 1.440 |

Note: The true number of phonetic categories is 12.

These results highlight the potential problem posed by overlapping categories. Often, tests of distributional learning are conducted on corpora in which vowels have an artificially low degree of overlap. de Boer and Kuhl (2003) selected three vowels with a large degree of separation, and Vallabha et al. (2007) removed speaker variability from the training data. Our simulations suggest that this lower degree of overlap between categories may have been critical to the models’ success. This corroborates the findings of Dillon et al. (2013) and suggests that more realistic data can potentially pose a problem for the types of distributional learning models that simply look for clusters of sounds in the acoustic input.

Our results from this simulation should be interpreted with caution, as it is not clear to what extent we have over- or underestimated the difficulty of the learning problem. Some degree of overlap can be overcome by using additional dimensions such as duration (Vallabha et al., 2007) and formant trajectories (Hillenbrand et al., 1995), and augmenting the data with information from these extra dimensions has the potential to improve performance in both models. Learning might also be supported by the increased separation between category means found in infant-directed speech (Kuhl et al., 1997), though it is not yet clear whether this advantage persists when one considers the increased within-category variability of infant-directed speech, especially for contrasts that do not involve the point vowels /a/, /i/, and /u/ (Cristia & Seidl, in press; McMurray et al., submitted). Cristia and Seidl, for example, suggest that some vowel contrasts may be hypoarticulated, that is, less distinct in infant-directed speech than in adult-directed speech. However, it is possible the data used in this simulation were simply too impoverished to support acquisition of a full vowel system in either models or humans. Because our training data were sampled from Gaussian distributions, the IMM is also likely to show better performance if trained on a larger corpus, though this would not necessarily be the case for non-Gaussian data. On the other hand, additional variability beyond what is reflected in Figure 2a is likely to arise through contextual variation, such as coarticulation with neighboring sounds, making the learning problem more difficult than is evident from the Hillenbrand et al. data. On the basis of our results, we wish to merely suggest the possibility that distributional learning may not be as robust as is often assumed. It is therefore important to consider possible supplementary learning mechanisms that could lead to more robust acquisition of phonetic categories. 
We propose one alternative strategy that children might use for learning phonetic categories, following Swingley (2009): if children are able to learn information about words and sounds simultaneously, they can use word-level information to supplement distributional learning.

Incorporating lexical constraints

Young infants show evidence of segmenting word-sized units at the same time that they are acquiring phonetic categories. Eight-month-olds track transitional probabilities of the speech they hear, discriminating words from non-words and part-words based purely on this statistical information (Saffran et al., 1996). Older infants can learn to map these segmented words onto referents (Graf Estes, Evans, Alibali, & Saffran, 2007), suggesting that infants use their sensitivity to transitional probabilities to begin learning potential wordforms for their developing lexicon. Studies using more naturalistic stimuli have demonstrated that infants can use stress and other cues to segment words from sentences and map these segmented words onto words they hear in isolation. Six-month-old infants can use familiar words such as Mommy to segment neighboring monosyllabic words from fluent sentences (Bortfeld, Morgan, Golinkoff, & Rathbun, 2005), and a more general ability to segment monosyllabic and bisyllabic words develops over the next several months (Jusczyk & Aslin, 1995; Jusczyk, Houston, & Newsome, 1999), during the same time that discrimination of non-native sound contrasts declines.

Segmentation tasks with naturalistic stimuli require infants not only to attend to segmentation cues, but also to ignore the within-category variability that distinguishes different word tokens. Infants need to recognize that the words heard in isolation are instances of the same words that they heard in fluent speech. There can be substantial acoustic differences among these different word tokens. Thus, infants as young as six months, who presumably have not yet finished acquiring native language phonetic categories, appear to be performing some sort of rudimentary categorization of the words they segment from fluent speech. Although young infants may not know meanings of these segmented words, they seem to be categorizing the word tokens on the basis of acoustic properties. This suggests a learning trajectory in which infants simultaneously learn to categorize both speech sounds and words, potentially allowing the two learning processes to interact.

Interaction between sound and word learning is not present in distributional learning theories. Distributional learning treats each sound in the corpus as being independent of its neighbors, ignoring higher level structure. The independence assumption has been present in both empirical and computational work. In experiments, infants have heard only isolated syllables during familiarization. This type of familiarization forces infants to treat those syllables as isolated units. Models of distributional learning similarly assume that infants consider only isolated sounds. In fact, distributional learning is precisely the type of statistical solution to the category learning problem that a learner should use if sounds were generated independently of their neighbors.

Here we demonstrate the importance of higher level structure by considering the optimal solution to the phonetic category learning problem when one assumes that sounds are instead organized into words. Throughout the remainder of this article, we will distinguish between words, acoustic tokens in the corpus, and lexical items, categories (word types) that represent groupings of acoustic tokens. Just like speech sounds are categorized into phonetic categories, we will assume that words are categorized into lexical items. Given this distinction, we can now use our Bayesian framework to define a lexical-distributional model that acquires phonetic categories and lexical items. Our model differs from distributional models in the hypothesis space it assigns to learners. A distributional model’s hypotheses consist of sets of phonetic categories, and learners are assumed to optimize the phonetic category inventory directly to best explain the sounds that appear in the corpus. In contrast, the lexical-distributional model’s hypotheses are combinations of sets of phonetic categories and sets of lexical items. Under this model learners optimize their lexicon to best explain the word tokens in the corpus, while simultaneously optimizing their phonetic category inventory to best explain the lexical items that they think generated the corpus. This allows the lexical-distributional model to incorporate feedback from the developing lexicon in phonetic category learning.

Our lexical-distributional learning model uses the same phonetic category structure from the IMM, allowing a potentially infinite number of Gaussian phonetic categories but incorporating a bias toward fewer categories. The model additionally includes a lexicon in which lexical items are composed of sequences of phonetic categories. Parallel to the phonetic category inventory, the lexicon contains a potentially infinite number of lexical items but incorporates a bias toward fewer lexical items. Word tokens in a corpus are assumed to be produced by selecting a lexical item from the lexicon and then producing an acoustic value from each phonetic category contained in that lexical item. We make the simplifying assumption that each phonetic category corresponds to the same acoustic distribution regardless of context, and thus assume that there is no phonological or coarticulatory variation. We consider in the General Discussion how such variation could be accommodated in an interactive model. We additionally assume that there are no phonotactic constraints, so that phonetic categories are selected independently from the phonetic category inventory regardless of their position in a word. Given these assumptions and a corpus of word tokens, the model needs to simultaneously recover the set of lexical items and the set of phonetic categories that generated the corpus. The model and inference algorithm are described in detail in Appendix B.

Our model is aimed at identifying the learning outcome that one would expect of a learner that makes combined use of sound and word information in a statistically sensible way. Because our framework allows us to implement joint sound and word learning, using this framework provides important data on the utility of an interactive learning strategy, and these data can be used to inform future research into the mechanisms that might support interactive learning. In this work we do not address questions of implementation and algorithm, but we consider in the General Discussion how such questions might be addressed in the future.

We present three simulations that examine the extent to which a lexical-distributional learning strategy can help a learner acquire the categories of a natural language, examining learning performance on corpora composed of acoustic values that are characteristic of English vowel categories. Simulation 2 illustrates the model’s basic behavior using artificial lexicons in which lexical items consist only of vowels. Simulation 3 tests performance on a lexicon of English words from child-directed speech, examining the extent to which words in a natural language contain information that can separate overlapping vowel categories. Simulation 4 extends the results from Simulation 3 to a corpus in which speaker variability is reduced. Together, these simulations test the extent to which making more realistic assumptions about the way in which language data are generated can improve the phonetic category learning outcome.

Simulation 2: Lexical-distributional learning of English vowels

Simulation 2 examines whether lexical-distributional learning confers an advantage over distributional learning in recovering the English vowel categories from Hillenbrand et al. (1995). Our aim is to reveal a general advantage conferred by the use of higher-level structure. This advantage is likely to be strongest when the higher-level structure to be learned matches the learner’s assumptions about that structure. In consideration of this, our corpora for this simulation were based on lexicons generated from the model’s prior distribution over lexical items, but where the phonetic categories contained in those lexical items were vowels whose distributions corresponded to Hillenbrand et al.’s data. We tested ten corpora, each generated from a different artificial lexicon. Each corpus consists of a sequence of 5,000 word tokens, with word boundaries marked, in which vowel tokens are represented by acoustic values based on data from Hillenbrand et al. (1995).

The corpora used for Simulation 2 were similar to those used for Simulation 1, but they differed in two important ways. First, the vowel tokens in these corpora were organized into sequences corresponding to word tokens. Because of this, the corpora for Simulation 2 incorporated the type of structural information that is useful to the lexical-distributional model but is ignored by the distributional model. Word boundaries were assumed to be known, so that the model did not have to solve the segmentation problem. Categorizing word tokens into lexical items is nevertheless a non-trivial problem, as the model needs to decide on the basis of acoustic values whether two words with the same number of phonemes are the same or different. In lexical categorization we compare our lexical-distributional model to a baseline model that uses no distributional information from vowels. This baseline model classifies word tokens together if they have the same number of phonemes and thus produces a lower bound on the word categorization behavior of the lexical-distributional model.

Second, although vowels in the artificial lexicon were drawn from vowel type frequencies in the English lexicon, this did not translate into equivalent token frequencies in the corpus because word frequencies in the artificial lexicon did not match English word frequencies. We ensured that these altered token frequency distributions did not substantially reduce the difficulty of the learning problem by testing the two distributional models on these corpora.

Methods

Corpus preparation

Ten training corpora were constructed. For each corpus, a different set of lexical items consisting only of vowels was drawn from the model’s prior distribution GL. Phonetic categories in these artificial lexicons were drawn according to the type frequency distribution of vowels in the English lexicon (Table 1), but otherwise contained no phonotactic constraints. This yielded lexical items such as /ʌ/, /ɪ ɑ/, /ε/, /ʌʌɝ/, or /ʊεɝ/, where the actual phonemic sequences contained in the lexicon varied across the ten training corpora. Lexical frequencies were drawn according to the lexical-distributional model’s prior distribution. The distribution GL used a geometric distribution over word lengths with parameter . This parameter was different from that used for inference but was chosen to help generate a lexicon that contained enough information about all twelve vowel categories; using the generating parameter for inference produced qualitatively similar results. This lexicon was used to sample a corpus of 5,000 word tokens. When generating these training data, we ensured that each vowel appeared at least twice in the lexicon and at least 50 times in the corpus by discarding and resampling any corpora that did not meet these specifications. The corpora each had 5,000 word tokens and the number of vowel tokens ranged from 8,622 to 19,395 (mean corpus size was 13,489 vowel tokens). The upper end of this range was comparable to the 20,000 vowel tokens used in Simulation 1, whereas the lower end was much smaller, providing potentially a substantial challenge for models of category learning.
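
The lexicon and token sampling steps can be sketched as follows. This sketch makes several assumptions that should be read as illustrative only: lexical frequencies are generated with a Chinese restaurant process with αL = 10, the geometric parameter is a placeholder (0.5) rather than the value used in the simulations, only three of the twelve vowels are listed, and the resampling check on vowel counts is omitted.

```python
import random

# Type probabilities for three of the twelve vowels (Table 1); random.choices
# renormalizes the weights. A full run would list all twelve vowels.
VOWEL_TYPE_PROBS = {"ɪ": 0.169, "ʌ": 0.229, "ɑ": 0.105}
GEOMETRIC_PARAM = 0.5   # hypothetical placeholder, not the paper's value
ALPHA_L = 10.0          # lexical concentration parameter


def sample_lexical_item(rng):
    """Draw a vowel-only lexical item: geometric length, independent vowels."""
    length = 1
    while rng.random() > GEOMETRIC_PARAM:   # extend with probability 1 - g
        length += 1
    vowels = list(VOWEL_TYPE_PROBS)
    weights = list(VOWEL_TYPE_PROBS.values())
    return tuple(rng.choices(vowels, weights=weights, k=length))


def sample_word_tokens(n_tokens, seed=0):
    """Generate word tokens with a Chinese restaurant process over items."""
    rng = random.Random(seed)
    lexicon, counts, tokens = [], [], []
    for n in range(n_tokens):
        if rng.random() < ALPHA_L / (ALPHA_L + n):
            lexicon.append(sample_lexical_item(rng))   # create a new item
            counts.append(0)
            index = len(lexicon) - 1
        else:
            # Reuse an existing item in proportion to its current count.
            index = rng.choices(range(len(lexicon)), weights=counts, k=1)[0]
        counts[index] += 1
        tokens.append(index)
    return lexicon, tokens


lexicon, tokens = sample_word_tokens(5000)
```

Each token index would then be expanded into acoustic values by sampling one F1-F2 pair per vowel from the corresponding Gaussian category. Because items are drawn independently from the prior, two distinct lexical items can share the same phonemic form.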

Simulation parameters

The prior distribution over phonetic category parameters GC in the IMM and the lexical-distributional model was identical to that used in Simulation 1 for the IMM, with the bias toward fewer phonetic categories set to αC = 10. Parameters for the gradient descent algorithm were also identical to those used in Simulation 1, with an initial category variance of Cr = 0.02.

The lexical-distributional model contains an additional parameter αL that controls the strength of the bias toward fewer lexical items. Smaller values of the parameter correspond to stronger biases. The distribution over word frequencies in the corpus was generated from our model with αL = 10, and we simply used the same value during inference. The prior distribution over lexical items in the lexical-distributional model further includes a geometric parameter g controlling the lengths of lexical items. This parameter did not appear to have a large qualitative effect on results; for the simulations presented here, it was set to a value of .

Evaluation

Phonetic categorization performance was evaluated in the same way as in Simulation 1. For the lexical-distributional model and the IMM, we used category assignments from the final iteration of Gibbs sampling, which should correspond to a sample from the posterior distribution over category assignments. For the gradient descent algorithm, each sound was assigned probabilistically to one of the categories based on the learned parameters using Equation 2.

Lexical categorization in the lexical-distributional and baseline models was evaluated using these same performance measures (F-score and VI; see Appendix C). However, because the lexical-distributional model in principle allows different lexical items to have identical phonemic forms, we computed both measures twice, in two different ways, for this model. We first counted lexical items with identical phonemic forms as separate, penalizing models for treating words as homophones rather than a single lexical item. We then re-computed the same measures after merging any lexical items that had identical phonemic forms. In the true clustering, all items with the same true phonemic form were treated as a single lexical item, reflecting the gold standard for a form-based learner. Thus, the model was not penalized under either measure for merging homophones into a single category, but was penalized in the first measure for splitting tokens of a single lexical item into two categories.
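
The two scoring schemes differ only in the labels assigned to word tokens before the F-score and VI are computed. The sketch below illustrates the relabeling step; the data structures (`token_items`, `item_forms`) and the example values are hypothetical and introduced only for illustration.

```python
def merge_identical_forms(token_items, item_forms):
    """Relabel each word token by the phonemic form of its lexical item.

    token_items: lexical item id assigned to each word token.
    item_forms:  dict mapping lexical item id -> tuple of phonetic category ids.
    Tokens whose items share a form receive the same label.
    """
    return [item_forms[item] for item in token_items]


# Hypothetical model output: items 0 and 2 have the same learned form.
item_forms = {0: (3, 7), 1: (5,), 2: (3, 7)}
token_items = [0, 1, 2, 0]

separate_labels = token_items   # first scoring: items with equal forms kept apart
merged_labels = merge_identical_forms(token_items, item_forms)   # second scoring
```

Either label list can then be compared against the true lexical items with the same clustering measures.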

Results and discussion

The three models were tested on corpora generated from ten different artificial lexicons. The lexical-distributional model recovered an average of 11.9 categories, successfully disambiguating neighboring categories in most cases. In 7 of the 10 runs, the model correctly recovered exactly 12 categories. Two corpora failed to provide sufficient disambiguating information in the lexicon, and in each of these simulations the model recovered only 11 of the 12 categories, mistakenly merging two categories. On the final corpus the sample we obtained from the model’s posterior distribution contained 13 categories. This thirteenth category was spurious, as only two of 12,621 sounds in the corpus were assigned to it. Although the sample we chose to analyze, from the final iteration of Gibbs sampling, contained this thirteenth category, most posterior samples in the Markov chain contained exactly twelve categories. In contrast to the lexical-distributional model, the distributional models mistakenly merged several pairs of neighboring vowel categories, recovering fewer categories than the lexical-distributional model in each of the ten corpora. The IMM recovered an average of 8 of the 12 categories, and the gradient descent algorithm recovered an average of 5.5 of the 12 categories. Neither distributional model recovered all 12 categories in any of the 10 corpora. The lexical-distributional model also outperformed the distributional models along our two quantitative measures. F-scores were higher for the lexical-distributional model than for the distributional models, and VI scores were closer to zero for the lexical-distributional model (Table 3).

Table 3.

Phonetic categorization scores for the lexical-distributional model (L-D), infinite mixture model (IMM), and gradient descent algorithm (GD) in Simulation 2, averaged across all ten corpora.

| | L-D | IMM | GD |
|---|---|---|---|
| Number of categories | 11.9 | 8 | 5.5 |
| F-score | 0.919 | 0.519 | 0.545 |
| Variation of information | 0.671 | 2.762 | 2.426 |

Note: The true number of phonetic categories is 12.

We used a one-way ANOVA to look for statistically significant differences among the models along each measure of phonetic categorization. There were highly significant differences in number of categories (F(2, 27) = 79.35, p < 0.0001), F-score (F(2, 27) = 116.37, p < 0.0001), and VI (F(2, 27) = 149.69, p < 0.0001). Pairwise comparisons showed that the lexical-distributional model outperformed the IMM in the number of categories (t(18) = 11.21, p < 0.0001), F-score (t(18) = 15.80, p < 0.0001), and VI (t(18) = 16.92, p < 0.0001) and outperformed the gradient descent algorithm in the number of categories (t(18) = 11.60, p < 0.0001), F-score (t(18) = 13.10, p < 0.0001), and VI (t(18) = 13.15, p < 0.0001). Between the two distributional models, it was less clear which exhibited better performance. The IMM outperformed the gradient descent algorithm in number of categories recovered (t(18) = 4.16, p = 0.0006), but the gradient descent algorithm achieved a better score on VI (t(18) = 2.54, p = 0.02). The distributional models were statistically indistinguishable from each other in the F-scores they achieved (t(18) = 0.78, p = 0.4).

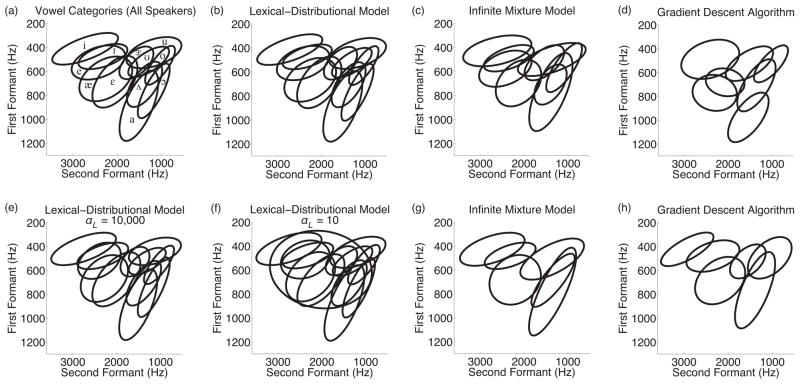

In word categorization, the lexical-distributional model also outperformed the baseline model as measured by F-score and VI (Table 4). These differences were significant under both measures (F-score: t(18) = 9.93, p < 0.0001, VI: t(18) = 7.99, p < 0.0001).3 This indicates that interactive learning improved performance in both the sound and word domains. Figure 3b-d illustrates a representative set of results from Corpus 1.

Table 4.

Lexical categorization scores for the lexical-distributional model (L-D) and baseline model in Simulation 2, averaged across all ten corpora.

| | L-D | baseline |
|---|---|---|
| F-score | 0.799/0.854 | 0.523 |
| Variation of information | 1.263/0.921 | 1.853 |

Note: The first number evaluates performance by treating each cluster as separate, regardless of phonological form, and the second number treats all clusters with identical phonological forms as constituting a single lexical item. The mean number of lexical items recovered is not shown, as the target number of lexical items differed across the ten corpora.

Figure 3.

Results of Simulations 2 and 3. Ellipses delimit the area corresponding to 90% of vowel tokens for Gaussian categories (a) computed from men’s, women’s, and children’s production data in Hillenbrand et al. (1995), recovered in Simulation 2 by (b) the lexical-distributional model, (c) the infinite mixture model, and (d) the gradient descent algorithm, and recovered in Simulation 3 by (e) the lexical-distributional model with αL = 10,000, (f) the lexical-distributional model with αL = 10, (g) the infinite mixture model, and (h) the gradient descent algorithm.

These results demonstrate that in a language in which phonetic categories have substantial overlap, an interactive system can learn more robustly than a purely distributional learner from the same number of data points. Positing the presence of a lexicon helps the ideal learner separate overlapping vowel categories, even when phonological forms contained in the lexicon are not given to the learner in advance.

However, there was some variability in performance, even for the lexical-distributional model. For the majority of the corpora, lexical structure was sufficient for the lexical-distributional model to recover all twelve categories. In two corpora, however, lexical structure successfully disambiguated 11 of the 12 categories, but was insufficient to distinguish the last two categories. Thus, the performance of a lexical-distributional learner depended to some extent on the specific structure available in the lexicon. Lexical items and lexical frequencies in all of these corpora were drawn from the model’s prior distribution. A question therefore remains as to whether a natural language lexicon contains enough disambiguating information to separate overlapping vowel categories. Simulation 3 tests this directly using a corpus of lexical items from English child-directed speech.

Simulation 3: Information contained in the English lexicon

Simulation 3 tests the model’s ability to recover English vowel categories when trained on English words. We test this using a corpus of words from child-directed speech drawn from the CHILDES parental frequency count (MacWhinney, 2000; Li & Shirai, 2000). Because the corpus is created to mirror English child-directed speech, vowel frequencies in both word types and word tokens match those found in English, and the frequency distribution over words also matches the distribution over words that a child might hear. If the lexical-distributional model outperforms the distributional models on this corpus, it would suggest that input to English-learning children contains sufficient word-level information to allow an interactive learner to recover a full set of vowel categories.

The drawback of using real English lexical items is that they necessarily contain consonants, and it is not straightforward to represent consonants in terms of a small number of continuous acoustic parameters. We sidestep this problem in our simulations by representing consonants categorically. We therefore assume that consonants have been perceived and categorized perfectly by the learner. While not entirely realistic, this assumption allows us to explore vowel learning behavior in a realistic English lexicon. We modify our baseline model for lexical categorization to take this into account. Our new baseline model categorizes words together if they have the same length and the same consonant frame. As before, in phonetic categorization the lexical-distributional model is compared with two distributional models, the IMM and the gradient descent algorithm from Vallabha et al. (2007).
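
The baseline categorization rule can be sketched as follows. The token representation is an assumption made for illustration: consonants are categorical strings and vowels are (F1, F2) pairs, with hypothetical formant values.

```python
from collections import defaultdict


def consonant_frame(phonemes):
    """Keep consonant identities; replace each vowel slot with a placeholder."""
    return tuple(p if isinstance(p, str) else "V" for p in phonemes)


def baseline_clusters(word_tokens):
    """Group token indices by consonant frame, which also fixes word length."""
    clusters = defaultdict(list)
    for index, phonemes in enumerate(word_tokens):
        clusters[consonant_frame(phonemes)].append(index)
    return dict(clusters)


# Hypothetical word tokens: consonant labels plus (F1, F2) vowel values in Hz.
tokens = [
    ("K", (690.0, 1850.0), "T"),
    ("K", (640.0, 1350.0), "T"),
    ("S", (430.0, 2050.0), "T"),
    ("K", (710.0, 1900.0), "T"),
]
clusters = baseline_clusters(tokens)
```

When words contain only vowels, every token of a given length has the same frame, so the same rule reduces to grouping by number of phonemes.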

Methods

Corpus preparation

The corpus for Simulation 3 was constructed from the phonematized version of the CHILDES parental frequency count that we used to compute vowel frequencies for Simulations 1 and 2. However, we sampled entire words, rather than individual vowels, in constructing our corpus for Simulation 3. Five thousand word tokens were sampled randomly with replacement based on their token frequencies. This yielded a corpus with 6,409 vowel tokens and 8,917 consonant tokens. A set of formant values for each vowel token was sampled from the Gaussian distributions that were computed from the Hillenbrand et al. data. Consonant tokens in the corpus were represented categorically.
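
Sampling whole words in proportion to their token frequencies can be sketched as below. The three-word list, its counts, and the ARPAbet-style symbols are hypothetical stand-ins for the phonemized CHILDES word list.

```python
import random

# Hypothetical excerpt of a phonemized frequency list: (word, phonemes, count).
WORD_LIST = [
    ("the", ("DH", "AH"), 50),
    ("kitty", ("K", "IH", "T", "IY"), 5),
    ("see", ("S", "IY"), 20),
]
VOWELS = {"AH", "IH", "IY"}


def sample_word_tokens(word_list, n_tokens, seed=0):
    """Sample whole words with replacement, weighted by token frequency."""
    rng = random.Random(seed)
    weights = [count for _, _, count in word_list]
    return rng.choices(word_list, weights=weights, k=n_tokens)


sampled = sample_word_tokens(WORD_LIST, n_tokens=5000)
n_vowels = sum(p in VOWELS for _, phonemes, _ in sampled for p in phonemes)
n_consonants = sum(p not in VOWELS for _, phonemes, _ in sampled for p in phonemes)
```

Because words rather than vowels are the sampling unit, the number of vowel tokens in the resulting corpus is not fixed in advance.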

Simulation parameters

Parameters were identical to those used in Simulation 2, except that a range of lexical concentration parameters αL was tested to characterize the influence of this parameter on model performance when using the true frequency distribution of words in the English lexicon.

The prior distribution over lexical items in the lexical-distributional model again used a geometric parameter controlling the lengths of lexical items, but additionally included a parameter to encode the relative frequencies of consonants and vowels. Each phoneme slot in a lexical item was assumed to be designated as a consonant with probability 0.62 (otherwise it was a vowel). This probability was chosen to be approximately equal to the proportion of consonants in the lexicon. For the purposes of likelihood computation, consonants were assumed to be generated from a Dirichlet process with concentration parameter αC=10.

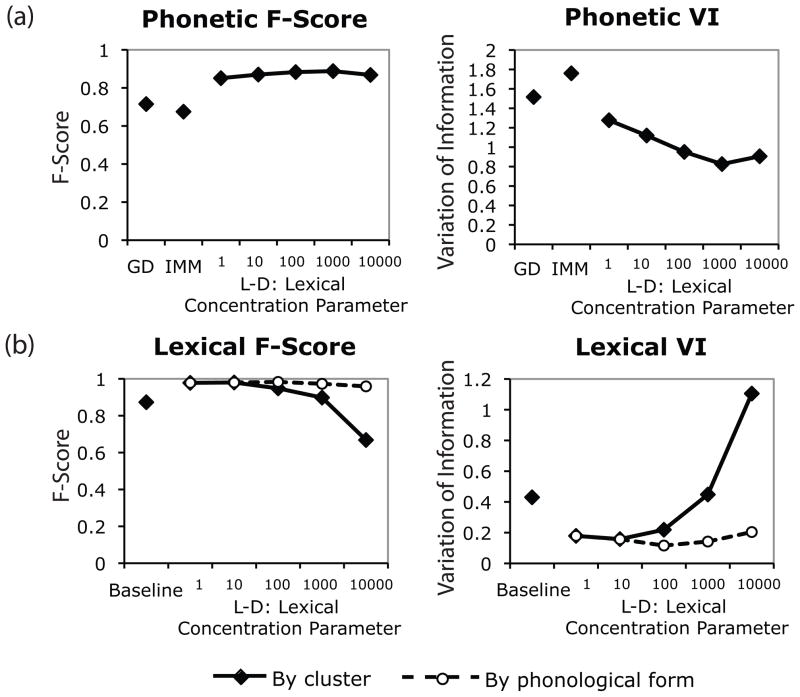

Results and discussion

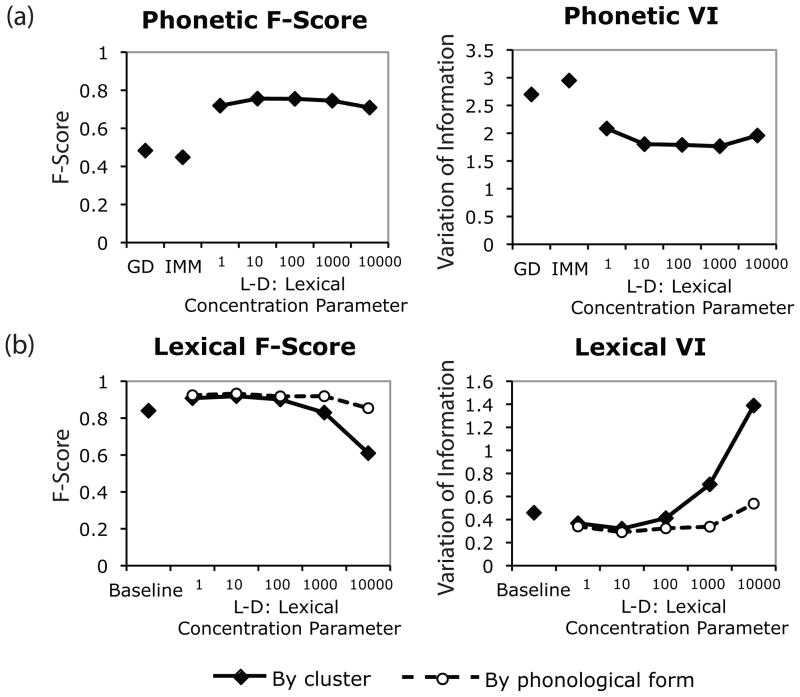

The number of categories recovered by each model is shown in Table 5. In each case, the distributional models merged several sets of overlapping categories. Performance of the lexical-distributional model varied depending on the value of the lexical concentration parameter. With a weak bias toward a smaller lexicon, the model recovered the correct set of twelve categories, but with a stronger bias the model hypothesized more than twelve categories.4 These extra categories had more acoustic variability than the actual categories in the corpus, encompassing more than one vowel category. Examples of each of these types of behavior are illustrated in Figure 3e–h. Numerical phonetic categorization performance was consistently higher in the lexical-distributional model than in the distributional models (Figure 4a), indicating that even for the models that hypothesized extra categories, information from words improved vowel categorization performance.

Table 5.

Phonetic categorization scores for the lexical-distributional model (L-D), infinite mixture model (IMM), and gradient descent algorithm (GD) in Simulation 3.

| | L-D, αL = 1 | L-D, αL = 10 | L-D, αL = 100 | L-D, αL = 1000 | L-D, αL = 10000 | IMM | GD |
|---|---|---|---|---|---|---|---|
| Number of categories | 14 | 13 | 13 | 12 | 12 | 6 | 6 |
| F-score | 0.719 | 0.756 | 0.755 | 0.745 | 0.709 | 0.448 | 0.483 |
| Variation of information | 2.085 | 1.803 | 1.790 | 1.765 | 1.959 | 2.949 | 2.699 |

Note: The true number of phonetic categories is 12 for each corpus.

Figure 4.

Results of Simulation 3. (a) F-score and variation of information measuring phonetic categorization performance by the gradient descent algorithm (GD), infinite mixture model (IMM), and lexical-distributional model (L-D). (b) F-score and variation of information measuring lexical categorization performance by the baseline model and lexical-distributional model. Solid lines treat each cluster in the lexicon as its own lexical item, whereas dotted lines treat all clusters with the same phonemic form as a single lexical item.
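For readers unfamiliar with the two evaluation measures, the following sketch computes a pairwise F-score and the variation of information between a true and a recovered clustering. It assumes the pairwise definition of precision and recall and uses natural logarithms; both choices are assumptions made for illustration and may differ from the exact conventions used to produce the reported scores.

```python
import math
from collections import Counter


def _same_cluster_pairs(counts):
    """Number of token pairs that fall in the same cell of a partition."""
    return sum(c * (c - 1) // 2 for c in counts.values())


def pairwise_f_score(true, pred):
    """Harmonic mean of pairwise precision and recall."""
    both = _same_cluster_pairs(Counter(zip(true, pred)))
    if both == 0:
        return 0.0
    precision = both / _same_cluster_pairs(Counter(pred))
    recall = both / _same_cluster_pairs(Counter(true))
    return 2 * precision * recall / (precision + recall)


def variation_of_information(true, pred):
    """VI = H(true | pred) + H(pred | true), in nats; lower is better."""
    n = len(true)
    joint = Counter(zip(true, pred))
    n_true, n_pred = Counter(true), Counter(pred)
    vi = 0.0
    for (t, p), c in joint.items():
        vi -= (c / n) * (math.log(c / n_true[t]) + math.log(c / n_pred[p]))
    return vi


true_labels = [0, 0, 1, 1]
merged = [0, 0, 0, 0]  # a learner that merges the two categories
print(pairwise_f_score(true_labels, merged))
print(variation_of_information(true_labels, merged))
```

A learner that merges two categories keeps perfect pairwise recall but loses precision, which is the pattern shown by the distributional models when they collapse overlapping vowel categories.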

The number of lexical items recovered by each model is shown in Table 6. Each lexical-distributional model recovered more lexical items than the baseline model, indicating that the model used distributional information to separate distinct lexical items that had the same consonant frame. As expected, stronger biases toward a smaller lexicon resulted in the recovery of a smaller lexicon. With a strong bias, the model recovered fewer lexical items than were used to generate the corpus, merging items that should have been separated. With a weak bias, the model recovered more lexical items than were used to generate the corpus, separating items that should have been assigned to a single category. This separation of items that should be categorized together decreases quantitative lexical categorization performance, but performance improves when different clusters with the same phonemic form are treated as a single lexical item (Figure 4b).

Table 6.

Lexical categorization scores for the lexical-distributional model (L-D) and baseline model in Simulation 3.

| | L-D, αL = 1 | L-D, αL = 10 | L-D, αL = 100 | L-D, αL = 1000 | L-D, αL = 10000 | Baseline |
|---|---|---|---|---|---|---|
| Number of categories | 900/899 | 926/920 | 958/934 | 1164/989 | 1602/1086 | 852 |
| F-score | 0.908/0.924 | 0.919/0.933 | 0.901/0.918 | 0.830/0.919 | 0.610/0.854 | 0.840 |
| Variation of information | 0.368/0.340 | 0.321/0.290 | 0.412/0.324 | 0.705/0.338 | 1.389/0.538 | 0.459 |

Note: The first number treats each cluster as separate, regardless of phonological form, and the second number treats all clusters with identical phonological forms as belonging to a single lexical item. The true number of lexical items is 1019.
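The difference between the two scoring schemes can be sketched as a relabeling step applied before evaluation. The data structures below (a mapping from cluster identifiers to phonemic forms, and a list of per-token cluster assignments) are hypothetical representations chosen for illustration.

```python
def collapse_by_form(token_clusters, cluster_forms):
    """Relabel each token by the phonemic form of its cluster.

    Clusters that share a phonemic form then count as one lexical item.
    """
    return [cluster_forms[c] for c in token_clusters]


# Hypothetical learned lexicon: clusters 0 and 1 have the same phonemic form.
cluster_forms = {
    0: ("K", "AE", "T"),
    1: ("K", "AE", "T"),
    2: ("D", "AO", "G"),
}
token_clusters = [0, 1, 2, 0, 1]

collapsed = collapse_by_form(token_clusters, cluster_forms)
n_separate = len(set(token_clusters))  # each cluster is its own lexical item
n_collapsed = len(set(collapsed))      # identical forms are merged
print(n_separate, n_collapsed)
```

Scoring the collapsed labels rather than the raw cluster labels removes the penalty for splitting one word across several clusters, while leaving the penalty for merging distinct words intact.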

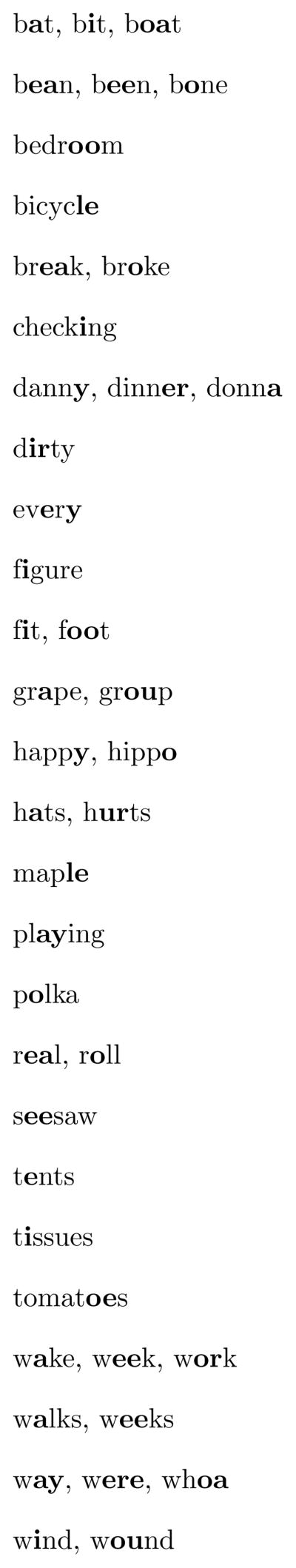

The merger of lexical items in models with a strong lexical bias is related to the extra categories hypothesized by these models. These merged lexical items consist largely of minimal pairs, words in which all but one phoneme are identical, that are assigned by the model to a single lexical item. The category shown in Figure 3f is used in several merged lexical items, such as glad-glued, last-lost, pin-pan-pen, snack-snake, and work-walk-woke-week. This extra category captures the fact that the distribution of acoustic values in these merged lexical items does not fit any of the existing twelve vowel categories, but instead has a broader distribution. Intuitively, these merged lexical items occur because there are many more minimal pairs in English than one would expect if there were no phonotactic constraints on phoneme sequences. This mismatch between the observed and expected numbers of minimal pairs becomes more statistically reliable as corpus size increases, and thus simply adding more training data does not provide a solution to this problem. We consider this issue in more detail in our General Discussion.
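The definition of a minimal pair used here is straightforward to operationalize. The sketch below enumerates minimal pairs in a toy lexicon; the phoneme transcriptions are illustrative ARPAbet-style assumptions rather than entries from the corpus.

```python
from itertools import combinations


def is_minimal_pair(a, b):
    """True if two phoneme sequences have equal length and differ in exactly one slot."""
    return len(a) == len(b) and sum(x != y for x, y in zip(a, b)) == 1


def minimal_pairs(lexicon):
    """List all pairs of words in the lexicon that form a minimal pair."""
    return [
        (w1, w2)
        for (w1, p1), (w2, p2) in combinations(lexicon.items(), 2)
        if is_minimal_pair(p1, p2)
    ]


toy_lexicon = {
    "pin": ("P", "IH", "N"),
    "pan": ("P", "AE", "N"),
    "pen": ("P", "EH", "N"),
    "cat": ("K", "AE", "T"),
}
pairs = minimal_pairs(toy_lexicon)
print(pairs)
```

Comparing such a count against the number expected when phonemes are combined independently is one way to quantify the mismatch described above.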

In summary, the lexical-distributional model consistently outperformed the distributional models in phonetic categorization performance, indicating that words in the English lexicon contain information that can improve phonetic category learning. With a strong bias toward a smaller lexicon, the model showed high lexical categorization performance but hypothesized extra phonetic categories to account for the high acoustic variability that resulted from erroneously merging minimally different words. With a weaker bias, the model’s lexical categorization performance decreased because lexical items were split into multiple categories, but this allowed the model to find the correct set of categories.

The hard-coding of consonants in this simulation would ideally be relaxed in a more realistic model. However, this hard-coding of consonants is unlikely to have been critical to model success, as our model was able to recover the correct set of vowel categories in the majority of cases in Simulation 2, where no consonant information was present. In addition, follow-up work by Elsner, Goldwater, and Eisenstein (2012) has shown successful learning in a similar model in which consonants can be perceived as mispronunciations of other consonants.

Simulation 4: Reduced speaker variability

Simulations 2 and 3 used corpora in which acoustic values reflected a large degree of variability, encompassing productions by men, women, and children. Speaker normalization is a difficult problem, but one that infants appear to solve quite early (Kuhl, 1979; but see Houston & Jusczyk, 2000). It may therefore be possible for infants to filter out some of this within-category variability when learning about phonetic categories. Overlap between categories may also be reduced if learners use additional dimensions such as duration (Vallabha et al., 2007) or formant trajectories (Hillenbrand et al., 1995). Simulation 4 demonstrates that even when the degree of overlap between categories is reduced, a lexical-distributional learning strategy can enhance learning performance beyond what can be achieved through distributional learning.

We reduced within-category variability by creating our corpus from formant values that were based only on men’s productions. The training data were otherwise parallel to Simulation 3.

Methods

Corpus preparation

Five thousand word tokens were sampled from the same frequency counts used in Simulation 3. The corpus for Simulation 4 contained 6,408 vowel tokens and 8,968 consonant tokens.

Production data by men only from Hillenbrand et al. (1995) were used to compute empirical estimates of category means and covariances in the two-dimensional space given by the first two formant values. Vowel tokens in the corpus were sampled from these Gaussian distributions.
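Estimating the category parameters and sampling from them can be sketched in a few lines. The formant values below are made-up placeholders rather than measurements from Hillenbrand et al. (1995), and the use of the unbiased covariance estimator (NumPy's default) is an assumption.

```python
import numpy as np


def fit_gaussian(formants):
    """Empirical mean and covariance of (F1, F2) pairs for one vowel category."""
    formants = np.asarray(formants, dtype=float)
    mean = formants.mean(axis=0)
    cov = np.cov(formants, rowvar=False)  # rows are tokens, columns are F1 and F2
    return mean, cov


# Placeholder (F1, F2) values in Hz for a single category.
productions = [
    [300.0, 2200.0],
    [320.0, 2300.0],
    [280.0, 2250.0],
    [310.0, 2150.0],
]
mean, cov = fit_gaussian(productions)

rng = np.random.default_rng(0)
vowel_tokens = rng.multivariate_normal(mean, cov, size=3)  # sampled corpus tokens
print(mean)
print(cov)
print(vowel_tokens)
```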

Simulation parameters

Simulation parameters were identical to those used in Simulation 3.

Results and discussion

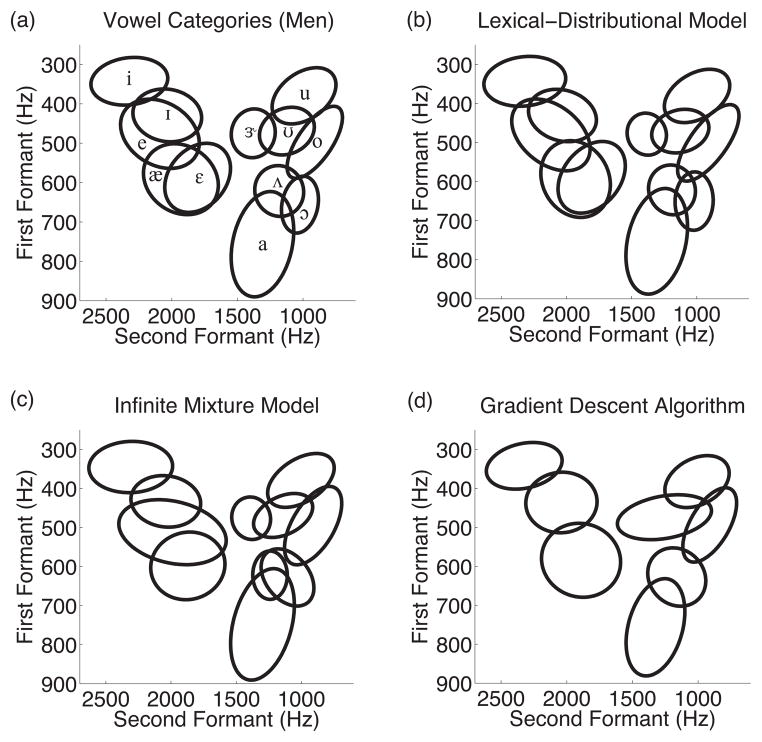

As in Simulation 1, the distributional models benefited from the reduced speaker variability. However, using word-level information provided an additional boost in performance (Figure 5). Quantitative phonetic categorization performance was consistently better in the lexical-distributional model than in the distributional models (Figure 6a). Whereas the distributional models underestimated the number of phonetic categories, the lexical-distributional model recovered the correct number of categories with a weak prior bias toward a smaller lexicon (Table 7). Lexical categorization performance showed a similar pattern to that obtained in Simulation 3 (Table 8). Extra phonetic categories found by models with a strong prior bias toward a small lexicon were again related to merged lexical items found by those models; the complete contents of one such category are listed in Figure 7. These results replicate the main results from Simulation 3 in a corpus that excludes a large amount of speaker variability.

Figure 5.

Results of Simulation 4. Ellipses delimit the area corresponding to 90% of vowel tokens for Gaussian categories (a) computed from men’s production data in Hillenbrand et al. (1995) and recovered in Simulation 4 by (b) the lexical-distributional model with αL = 10,000, (c) the infinite mixture model, and (d) the gradient descent algorithm.
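For a two-dimensional Gaussian category, an ellipse covering 90% of the probability mass can be derived in closed form. The sketch below is one way to compute such an ellipse, under the assumption that the plotted ellipses correspond to Gaussian coverage regions; the example covariance matrix is a placeholder.

```python
import math
import numpy as np


def coverage_ellipse(cov, coverage=0.90):
    """Semi-axis lengths and orientation of a 2-D Gaussian coverage ellipse.

    For two dimensions, the squared Mahalanobis radius that encloses a
    fraction `coverage` of the mass is -2 * ln(1 - coverage), the
    chi-square quantile with two degrees of freedom.
    """
    r2 = -2.0 * math.log(1.0 - coverage)
    eigvals, eigvecs = np.linalg.eigh(np.asarray(cov, dtype=float))  # ascending order
    semi_axes = np.sqrt(r2 * eigvals)
    major = eigvecs[:, -1]  # direction of largest variance
    angle_deg = math.degrees(math.atan2(major[1], major[0]))
    return semi_axes, angle_deg


# Placeholder covariance in (F1, F2) space, in Hz squared.
semi_axes, angle_deg = coverage_ellipse([[2500.0, 0.0], [0.0, 40000.0]])
print(semi_axes, angle_deg)
```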

Figure 6.

Results of Simulation 4. (a) F-score and variation of information measuring phonetic categorization performance by the gradient descent algorithm (GD), infinite mixture model (IMM), and lexical-distributional model (L-D). (b) F-score and variation of information measuring lexical categorization performance by the baseline model and lexical-distributional model. Solid lines treat each cluster in the lexicon as its own lexical item, whereas dotted lines treat all clusters with the same phonemic form as a single lexical item.

Table 7.

Phonetic categorization scores for the lexical-distributional model (L-D), infinite mixture model (IMM), and gradient descent algorithm (GD) in Simulation 4.

| | L-D, αL = 1 | L-D, αL = 10 | L-D, αL = 100 | L-D, αL = 1000 | L-D, αL = 10000 | IMM | GD |
|---|---|---|---|---|---|---|---|
| Number of categories | 17 | 16 | 14 | 13 | 12 | 11 | 8 |
| F-score | 0.851 | 0.870 | 0.883 | 0.888 | 0.868 | 0.675 | 0.715 |
| Variation of information | 1.277 | 1.120 | 0.951 | 0.826 | 0.906 | 1.760 | 1.516 |

Note: The true number of phonetic categories is 12.

Table 8.

Lexical categorization scores for the lexical-distributional model (L-D) and baseline model in Simulation 4.

| | L-D, αL = 1 | L-D, αL = 10 | L-D, αL = 100 | L-D, αL = 1000 | L-D, αL = 10000 | Baseline |
|---|---|---|---|---|---|---|
| Number of categories | 901/901 | 933/931 | 978/957 | 1117/1002 | 1502/1057 | 840 |
| F-score | 0.978/0.978 | 0.980/0.980 | 0.948/0.983 | 0.898/0.973 | 0.668/0.959 | 0.873 |
| Variation of information | 0.179/0.179 | 0.158/0.157 | 0.219/0.116 | 0.448/0.142 | 1.105/0.204 | 0.430 |

Note: The first number treats each cluster as separate, regardless of phonological form, and the second number treats all clusters with identical phonological forms as belonging to a single lexical item. The true number of lexical items is 1019.

Figure 7.

Contents of one of the super-categories found by a model with a strong bias toward a smaller lexicon (αL = 10). The sounds identified as belonging to the super-category are highlighted in bold. Multiple orthographic forms are listed next to each other if tokens of that lexical item correspond to more than one word. Many of these lexical items are minimal pairs that the model mistakenly categorizes together.

General discussion

In this paper we investigated how higher-level lexical knowledge can contribute to lower-level phonetic category learning by creating a lexical-distributional model of simultaneous phonetic category and word learning. Under this model, learners are not assumed to have knowledge of a lexicon a priori, but are assumed to begin learning a lexicon at the same time they are learning to categorize individual speech sounds, allowing them to take into account the distribution of sounds in words. Across several simulations, phonetic categorization performance was shown to be consistently better in a lexical-distributional model than in distributional models. These results provide support for the hypothesis that the words infants segment from fluent speech can provide useful constraints to guide their acquisition of phonetic categories, as well as for the more general idea that complex systems incorporating multiple levels of structure are not best acquired by focusing on each level in turn, but rather by considering multiple levels simultaneously.

Here we situate the idea of interactive learning in a broader context. We first examine the limitations of our modeling framework and the extent to which those limitations affect the conclusions we can draw. We then consider the role of minimal pairs and discuss ways in which the model’s behavior in dealing with minimal pairs can explain human behavior from artificial language learning experiments. Finally, we discuss the implications of our findings for theories of sound and word learning and for category learning more generally.

Model assumptions

Our lexical-distributional model was built to illustrate how feedback from a developing word-form lexicon can improve the robustness of phonetic category acquisition. A hierarchical nonparametric Bayesian framework was chosen for implementing this interactive model because it allows simultaneous learning of multiple layers of structure, with information from each layer affecting learning in the other layer in a principled way. However, there were several simplifications that we used when creating our corpus that restrict the extent to which we can draw conclusions from these results. One simplification, the lack of phonotactics, actually led to decreased learning performance, and we address this issue in the next section. Here we examine in detail the role of three other simplifying assumptions: the use of only two acoustic dimensions, the reliance on Gaussian distributions of sounds, and the omission of contextually conditioned variability.