Abstract

Background

Competency-based education allows public health departments to better develop a workforce aimed at conducting evidence-based cancer control.

Methods

A two-phased competency development process was conducted that systematically obtained input from practitioners in health departments and trainers in academe and community agencies (n = 60).

Results

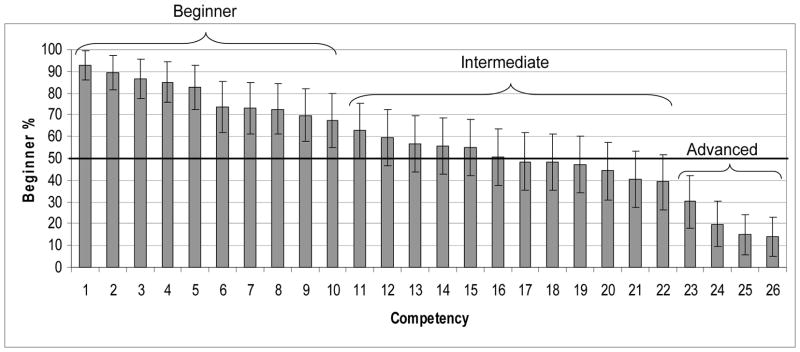

Among the 26 competencies developed, 10 were rated at the beginner level, 12 were intermediate, and 4 were advanced. Community-level input competencies were seen as beginner level, whereas policy-related competencies were rated as advanced.

Conclusion

While adaptation to various audiences is needed, these competencies provide a foundation on which to build practitioner-focused training programs.

INTRODUCTION

The need for a stronger commitment to evidence-based interventions in cancer control is highlighted in the US National Cancer Institute’s (NCI) Strategic Plan for Leading the Nation,1 where 7 of 8 strategic objectives include a call for more widespread adoption of scientifically-proven interventions. Two concepts are fundamental to achieving an evidence-based approach to cancer control. First, we need scientific information on the programs and policies that are most likely to be effective in controlling cancer (i.e., evidence-based decision making).2–4 An array of effective interventions is now available from numerous sources including the Guide to Community Preventive Services,5 the Guide to Clinical Preventive Services,6 and Cancer Control P.L.A.N.E.T.7 Second, dissemination of effective cancer control interventions must occur more effectively at state and local levels.8 State and local public health departments are in key positions to control cancer because of their ability to assess a public health problem, develop an appropriate program or policy, and assure that programs and policies are effectively delivered and implemented.9, 10

Within state and local agencies, an adequately trained workforce is essential to success in cancer control.11 However, there appears to be a widening gap between the skills necessary to reach cancer control goals and the actual skill set of the public health workforce.12 Competency-based education is rapidly becoming a norm in all levels of education in the United States,13–15 and it applies well to training cancer control practitioners (defined in the Footnotes). Formally, a competency is defined as a cluster of related knowledge, attitudes, and skills that affects the major part of one’s job and can be measured against well-accepted standards and improved through training.16 Competency sets are used to guide both curriculum development and credentialing processes.15, 17–19

In this paper, we present findings from a two-phased competency development process in which we systematically obtained input from practitioners in state and local health departments and trainers in academe and community agencies. Our overall goal was to create a set of competencies for evidence-based cancer control to guide curriculum development for practitioner-focused training efforts.

METHODS

Competency development

In phase one, an initial, general list of competencies on evidence-based decision making was assembled from numerous sources including on-going training courses in evidence-based public health,20, 21 findings from a recent project disseminating the US Community Guide,22, 23 the NCI Using What Works trainings,24 and competencies for training in public health.25, 26 We relied on several guiding principles for competency development:

- Making decisions based on the best available scientific evidence (both quantitative and qualitative research);

- Using data and information systems systematically;

- Applying behavioral science theory and program planning frameworks;

- Conducting sound evaluation;

- Engaging the community in assessment and decision making; and

- Disseminating what is learned to key stakeholders and decision-makers.

A draft list of 56 competencies was compiled. The project team evaluated the competencies in five iterative rounds of review: identifying redundancies and missing elements, and seeking comprehensiveness. General competencies were adapted to make them more specific for our cancer control topic (i.e., obesity and cancer prevention). This review process resulted in a list of 26 competencies (Table 1), which were further tested in a card sorting process.

Table 1.

Competencies in evidence-based cancer control, 2007

| Title | Domain (a) | Level (b) | Competency |
|---|---|---|---|
| 1. Community input | C | B | Understand the importance of obtaining community input before planning and implementing evidence-based interventions to prevent obesity. |
| 2. Knowledge of relationship between obesity and cancer | E | B | Understand the relationship between obesity and various forms of cancer. |
| 3. Community assessment | C | B | Understand how to define the obesity issue according to the needs and assets of the population/community of interest. |
| 4. Partnerships at multi-levels | P/C | B | Understand the importance of identifying and developing partnerships in order to address obesity and cancer with evidence-based strategies at multi-levels. |
| 5. Developing a concise statement of the issue | EBP | B | Understand the importance of developing a concise statement of the obesity and cancer issue in order to build support for it. |
| 6. Grant writing need | T/T | B | Recognize the importance of grant writing skills including the steps involved in the application process. |
| 7. Literature searching | EBP | B | Understand the process for searching the scientific literature and summarizing search-derived information on obesity prevention. |
| 8. Leadership and evidence | L | B | Recognize the importance of strong leadership from public health professionals regarding the need and importance of evidence-based public health interventions to prevent obesity. |
| 9. Role of behavioral science theory | T/T | B | Understand the role of behavioral science theory in designing, implementing, and evaluating obesity-related interventions. |
| 10. Leadership at all levels | L | B | Understand the importance of commitment from all levels of public health leadership to increase the use of evidence-based obesity-related interventions. |
| 11. Evaluation in ‘plain English’ | EV | I | Recognize the importance of translating the impacts of obesity programs or policies in language that can be understood by communities, practice sectors and policy makers. |
| 12. Leadership and change | L | I | Recognize the importance of effective leadership from public health professionals when making decisions in the midst of ever changing environments. |
| 13. Translating evidence-based interventions | EBP | I | Recognize the importance of translating evidence-based interventions to prevent obesity to unique ‘real world’ settings. |
| 14. Quantifying the issue | T/T | I | Understand the importance of descriptive epidemiology (concepts of person, place, time) in quantifying obesity and other public health issues. |
| 15. Developing an action plan for program or policy | EBP | I | Understand the importance of developing an obesity plan of action which describes how the goals and objectives will be achieved, what resources are required, and how responsibility of achieving objectives will be assigned. |
| 16. Prioritizing health issues | EBP | I | Understand how to choose and implement appropriate criteria and processes for prioritizing program and policy options for obesity prevention. |
| 17. Qualitative evaluation | EV | I | Recognize the value of qualitative evaluation approaches including the steps involved in conducting qualitative evaluations. |
| 18. Collaborative partnerships | P/C | I | Understand the importance of collaborative partnerships between researchers and practitioners when designing, implementing, and evaluating evidence-based programs and policies for obesity prevention. |
| 19. Non-traditional partnerships | P/C | I | Understand the importance of traditional obesity prevention partnerships as well as those that have been considered non-traditional such as those with planners, department of transportation, and others. |
| 20. Systematic reviews | T/T | I | Understand the rationale, uses, and usefulness of systematic reviews that document effective obesity interventions. |
| 21. Quantitative evaluation | EV | I | Recognize the importance of quantitative evaluation approaches including the concepts of measurement validity and reliability. |
| 22. Grant writing skills | T/T | I | Demonstrate the ability to create an obesity-related grant including an outline of the steps involved in the application process. |
| 23. Role of economic evaluation | T/T | A | Recognize the importance of using economic data and strategies to evaluate costs and outcomes when making public health decisions related to obesity and cancer prevention. |
| 24. Creating policy briefs | P | A | Understand the importance of writing concise policy briefs to address obesity and cancer using evidence-based interventions. |
| 25. Evaluation designs | EV | A | Comprehend the various designs useful in obesity program evaluation with a particular focus on quasi-experimental (non-randomized) designs. |
| 26. Transmitting evidence-based research to policy makers | P | A | Understand the importance of coming up with creative ways of transmitting what we know works (evidence-based interventions for obesity prevention) to policy makers in order to gain interest, political support and funding. |

(a) C = community-level planning; E = etiology; P/C = partnerships & collaboration; EBP = evidence-based process; T/T = theory & analytic tools; L = leadership; EV = evaluation; P = policy.

(b) B = beginner; I = intermediate; A = advanced.

Card sorting

In the second phase of the project, we administered a card sorting exercise among practitioners and trainers in cancer control. Card sorting is a technique that explores how people group and prioritize items,27–29 allowing us to develop a curriculum focusing on key competencies.

Participants for card sorting were drawn from multiple sources: practitioners were selected from two mid-sized state health departments and a county health department; trainers were identified from an ongoing evidence-based public health course,20, 21, 30 the partnership program of NCI’s Cancer Information Service,31 and the CDC- and NCI-funded Cancer Prevention and Control Research Network.32 Within each group, a list of possible participants was enumerated based on experience in the delivery of cancer and other chronic disease programs and expertise in training of practitioners.

There were four steps in the card sorting process. We first defined a competency as “a complex combination of knowledge, skills and abilities; demonstrated by individuals and necessary for them to perform their job functions at a high level.” Each competency was typed on a separate card. We then set out a scenario in which the respondent read each competency card and decided if the knowledge/attitude/skill should be designated as ‘Beginner’ (basic), or ‘Advanced’ (higher level) training (i.e., level of difficulty). Each person ended up with a ‘Beginner’ and an ‘Advanced’ stack of cards. Within each of the two stacks, respondents were then asked to categorize each competency as a low, medium, or high priority (i.e., perceived priority). We also provided blank cards so respondents could write out any competencies that they deemed missing from our list. Card sorting was conducted from July through November 2007 in both a group and individual format to accommodate people’s schedules. Group card sorting (n = 32) took 45–50 minutes per group and individual card sorting (n = 28) took 20–55 minutes per respondent. After several rounds of recruitment, 100% cooperation was obtained from our target audience (n = 60).
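The tallying implied by this procedure can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `CardRating` record, the `summarize` helper, and the sample ratings are all hypothetical and are not taken from the study data or the authors' software.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class CardRating:
    """One respondent's sort of one competency card (hypothetical record layout)."""
    respondent_id: int
    competency_id: int
    level: str     # "beginner" or "advanced"
    priority: str  # "low", "medium", or "high"


def summarize(ratings):
    """Return {competency_id: (percent_advanced, percent_high_priority)}."""
    totals, advanced, high = Counter(), Counter(), Counter()
    for r in ratings:
        totals[r.competency_id] += 1
        if r.level == "advanced":
            advanced[r.competency_id] += 1
        if r.priority == "high":
            high[r.competency_id] += 1
    return {
        cid: (100.0 * advanced[cid] / n, 100.0 * high[cid] / n)
        for cid, n in totals.items()
    }


# Invented ratings for two competency cards from four respondents.
ratings = [
    CardRating(1, 1, "beginner", "high"),
    CardRating(2, 1, "beginner", "high"),
    CardRating(3, 1, "advanced", "medium"),
    CardRating(4, 1, "beginner", "high"),
    CardRating(1, 26, "advanced", "high"),
    CardRating(2, 26, "advanced", "low"),
    CardRating(3, 26, "advanced", "medium"),
    CardRating(4, 26, "beginner", "high"),
]

summary = summarize(ratings)
for cid in sorted(summary):
    pct_adv, pct_high = summary[cid]
    print(f"competency {cid}: {pct_adv:.1f}% advanced, {pct_high:.1f}% high priority")
```

Each competency ends up with two scores, a percentage rated advanced and a percentage rated high priority, which are the quantities a per-competency summary would need.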

Analyses

Descriptive analyses were conducted to summarize all variables. Bivariate relationships were examined using chi-square or ANOVA, depending on the type of data. Summary data were displayed graphically in bar graphs to compare: beginner versus advanced categories, low versus medium versus high priorities, and ratings by trainers versus trainees. For each proportion, a 95% confidence interval was calculated.33 When the percentage rated beginner (versus advanced) did not differ statistically from 50%, the competency was designated as intermediate. Scatter plots were created using the two scores for each competency: the percentage advanced score and the percentage high priority score. For the scatter plots, lines were drawn at the 33.3% and 66.7% advanced score (X axis) to indicate beginner, intermediate and advanced categories. Lines were also drawn at the 33.3% and 66.7% high priority score (Y axis) to indicate low, medium and high priority.
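A minimal Python sketch of these two classification rules follows. It assumes a normal-approximation (Wald) 95% confidence interval, which the text does not specify, and it reads "statistically the same as 50%" as the interval containing 50%. The function names and the counts in the usage lines are hypothetical.

```python
import math


def wald_interval(successes, n, z=1.96):
    """Normal-approximation confidence interval for a proportion (assumed method)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    half_width = z * math.sqrt(p * (1.0 - p) / n)
    return max(0.0, p - half_width), min(1.0, p + half_width)


def difficulty_from_ci(n_beginner, n_total):
    """Intermediate if the CI for the beginner proportion contains 50%."""
    lower, upper = wald_interval(n_beginner, n_total)
    if lower <= 0.5 <= upper:
        return "intermediate"
    return "beginner" if lower > 0.5 else "advanced"


def band(percent, labels, cuts=(33.3, 66.7)):
    """Place a percentage into one of three bands using the scatter-plot cut lines."""
    if percent < cuts[0]:
        return labels[0]
    if percent < cuts[1]:
        return labels[1]
    return labels[2]


# Invented counts out of 60 respondents, for illustration only.
print(difficulty_from_ci(56, 60))  # large majority rated the card beginner
print(difficulty_from_ci(33, 60))  # close to an even split
print(difficulty_from_ci(8, 60))   # large majority rated the card advanced

# Scatter-plot bands for one hypothetical competency.
print(band(20.0, ("beginner", "intermediate", "advanced")))  # X axis: % advanced
print(band(80.0, ("low", "medium", "high")))                 # Y axis: % high priority
```

A different interval method (for example, an exact binomial interval) could move borderline competencies between categories, so the choice of interval here should be read as an assumption rather than the study's procedure.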

RESULTS

Participants in the card sorting included a range of job titles, with most respondents serving as program managers/administrators or health educators (Table 2). Respondents were equally distributed across three intervals for agency longevity. Most participants had graduate education, with 68% holding a master’s degree in public health or another discipline. Among respondents, 63% were potential trainees and 37% had experience as trainers. Eighty-five percent of respondents worked in obesity and/or cancer prevention. The groups of trainees and trainers differed on two characteristics: job title and highest degree held.

Table 2.

Participants in card sorting for evidence-based cancer control, 2007

| Characteristic | All respondents n (%) | Trainees n (%) | Trainers n (%) | p-value (a) |
|---|---|---|---|---|
| Job Title | | | | |
| Program manager/administrator | 26 (43.3) | 17 (44.7) | 9 (40.9) | 0.028 |
| Health educator | 16 (26.7) | 14 (36.8) | 2 (9.1) | |
| Epidemiologist | 2 (3.3) | 0 (0) | 2 (9.1) | |
| Multiple job titles (b) | 8 (13.3) | 4 (10.5) | 4 (18.2) | |
| Other job titles (c) | 8 (13.3) | 3 (7.9) | 5 (22.7) | |
| Years working in agency/organization | | | | |
| <5 years | 19 (32.2) | 10 (27.0) | 9 (40.9) | 0.626 |
| 5 to <10 years | 19 (32.2) | 13 (35.1) | 6 (27.3) | |
| 10+ years | 21 (35.6) | 14 (37.8) | 7 (31.8) | |
| Highest degree held | | | | |
| Bachelors | 11 (18.3) | 8 (21.0) | 3 (13.6) | 0.002 |
| Masters (other than public health) | 30 (50) | 23 (60.5) | 7 (31.8) | |
| Masters (public health) | 11 (18.3) | 5 (13.2) | 6 (27.3) | |
| Doctorate | 6 (10) | 0 (0) | 6 (27.3) | |
| Other | 2 (3.4) | 2 (5.3) | 0 (0) | |
| Role in training | | | | |
| Trainee | 38 (63.3) | -- | -- | -- |
| Trainer | 22 (36.7) | | | |
| Working in the field of either obesity or cancer? | | | | |
| Yes | 51 (85) | 31 (81.6) | 20 (90.9) | 0.281 |
| No | 9 (15) | 7 (18.4) | 2 (9.1) | |

(a) p-value for chi-square testing difference between trainees and trainers.

(b) Multiple job titles included: program manager/administrator and program planner; program manager/administrator and division/bureau head; program manager/administrator and health educator; health educator and local project coordinator.

(c) Other job titles included: program coordinator; project coordinator; outreach program manager; academic project director; partnership program planner.

Among the competencies, 10 were rated at the beginner level, 12 were intermediate, and 4 were advanced (Figure 1). There was considerable variation in level of difficulty ratings across competencies. For example, the competency on community input (#1) was rated as beginner by 93% of respondents, whereas the competency on transmitting evidence-based research to policy makers (#26) was rated as beginner by only 14% of respondents. The 26 competencies covered 8 domains (Table 1). Several patterns emerged across domain and difficulty level. Community-level input competencies were seen as beginner level. Competencies in evaluation and evidence-based processes were likely to be rated as intermediate. The two policy-related competencies were rated as advanced.

Figure 1.

Rating of competencies by level, 2007

Bivariate relationships were examined between the variables in Table 2 and ratings for level of difficulty (beginner, intermediate, advanced) and perceived priority (low, medium, high). There were few significant differences across the categories in Table 1. For difficulty level ratings, those with fewer than 5 years of experience rated competencies as more advanced (p = 0.022). Those who worked in obesity or cancer tended to rate competencies at a more advanced level (p = 0.62).

The data also varied by level of difficulty (beginner, intermediate, advanced) and perceived priority (low, medium, high) (Figure 2). Four competencies (circled in Figure 2) had difficulty levels rated significantly differently by trainers versus trainees; these were: translating evidence-based interventions (#13), qualitative evaluation (#17), non-traditional partnerships (#19), and systematic reviews (#20). Among these four competencies, only non-traditional partnerships was more likely to be rated as an advanced skill among trainers than among trainees whereas the other three were deemed more advanced among trainees.

Figure 2.

Competencies according to ratings for level and priority, 2007

Circled competencies are those with the widest discrepancy between trainers and trainees.

1: Community input

2: Knowledge of relationship between obesity and cancer

3: Community assessment

4: Partnerships at multi-levels

5: Developing a concise statement of the issue

6: Grant writing need

7: Literature searching

8: Leadership and evidence

9: Role of behavioral science theory

10: Leadership at all levels

11: Evaluation in ‘plain English’

12: Leadership and change

13: Translating evidence-based interventions

14: Quantifying the issue

15: Developing an action plan for program or policy

16: Prioritizing health issues

17: Qualitative evaluation

18: Collaborative partnerships

19: Non-traditional partnerships

20: Systematic reviews

21: Quantitative evaluation

22: Grant writing skills

23: Role of economic evaluation

24: Creating policy briefs

25: Evaluation designs

26: Transmitting evidence-based research to policy makers

Using open-ended methods, a few additional competencies were identified by two or more respondents. These involved two concepts: 1) the need to explain the importance of evidence-based approaches and related definitions and 2) the importance of building trust and respect when conducting community-based interventions. Several other issues were identified that related more to implementation of the curriculum than to its content (e.g., the importance of understanding and using principles of adult learning).

DISCUSSION

As cancer control has become a key component of day-to-day public health practice over the past two decades,11, 34–36 the need for practitioners knowledgeable in evidence-based approaches has grown.3 Our study identified and prioritized a set of 26 competencies that are likely to be important in improving the delivery of cancer control interventions. By including ratings for level of difficulty (beginner to advanced) and perceived priority (low to high), this competency set provides a foundation for practitioner training programs.

Both researchers35, 37 and practitioners11, 38 have identified cancer control training as a high priority. Yet, implementing competency-based training requires focused and sustained efforts. Weed and Husten37 summarized the training-related issues according to two questions: “Who should be trained?” and “What should be learned?” The answer to the “who” question for a public health agency is likely to include persons from key disciplines (e.g., epidemiology, health promotion, public information, administration) who are involved in delivering cancer control programs or who encourage others to be trained. Most people working in cancer control within public health settings do not have formal training in public health.30, 39 The “what” question can be largely answered by the competencies that we identified and rated. A third question, “how,” is an additional consideration that includes attention to mode of delivery (e.g., in-person, web-based trainings).

There are several implications from our study that should be taken into account in development and delivery of training:

In conducting trainings that span beginning to advanced levels, it is helpful to match training approaches and objectives to the level of difficulty. Beginning competencies may be largely cognitive; those at the intermediate level may apply existing tools and build basic skills; advanced competencies may teach in-depth skills that seek to make a person an expert in a certain area.

For most competencies, there was close agreement between trainers and trainees for ratings on level of difficulty and perceived priority. When there was disagreement between trainers and trainees, trainees were more likely to rate a competency as advanced. Trainers should not assume that what seems “basic” to them is also “basic” to trainees. A skill assessment before delivery of training should help in targeting curricula to the correct level.

It is increasingly clear that policy intervention holds great promise for cancer control.40, 41 From our study, policy-related competencies were the most advanced and may therefore require focused attention to adequately train the workforce. It may be important to identify some “basic” policy skills that in turn help a person build up to the more advanced policy competencies. This may call on training programs to move beyond typical public health training to include skills such as advocacy, policy analysis, health communication, and negotiation.

While both competencies for community-level planning (nos. 1 and 3) were deemed as beginner level, they also were considered highest priority. This suggests that these skills are essential for moving to intermediate and advanced levels.

Although not directly addressed in our study, implementation of training to address these competencies should take into account principles of adult learning. These issues were recently articulated by Bryan et al.42 and include the need: 1) to know why the audience is learning; 2) to tap into an underlying motivation to learn by the need to solve problems; 3) to respect and build upon previous experience; 4) for learning approaches that match with background and diversity; and 5) to actively involve the audience in the learning process.

While our study provides useful information, it also has limitations. Our findings are based on self-reported data and were obtained from a convenience sample of trainees and trainers. It is possible that a larger, more geographically-dispersed and professionally-diverse sample would yield different results. For example, training programs may need to be developed specifically for minority researchers and practitioners to adequately address health disparities.43, 44 In addition to individuals employed in state and local health departments, other agencies such as the American Cancer Society and the NCI’s Cancer Information Service play important roles in cancer control. It will be important to track the implementation of training programs in multiple venues. The scenario in our study focused on obesity and cancer. This approach should be validated in a range of cancer control (e.g., screening) and other public health topics (e.g., diabetes, infectious diseases). It is likely that the level of maturity for a public health topic will influence competency needs.

There are several logical next steps for efforts to build the workforce of cancer control practitioners. To better understand the “how” behind these competencies, qualitative, in-depth interviews with potential trainees should help in defining the optimal training approach, mode of delivery, and venue. Numerous training programs3, 24 and analytic tools5, 7, 45–47 for evidence-based public health practice are already available. It would be helpful to map our competencies against what is already available. A general gap in the literature is the lack of published evaluations on training programs.30 More consistent and systematic evaluation should be conducted as new programs are developed.

In summary, our development process identified a manageable set of cancer control competencies, rated by level of difficulty and perceived priority. While adaptation to various audiences is needed, this group of competencies provides a foundation on which to build practitioner-focused training programs.

Acknowledgments

This work was supported by the National Cancer Institute (no. 5R25CA113433-02). We are grateful for assistance from participants from the following: Missouri Department of Health and Senior Services; Cancer Information Service; Cancer Prevention and Control Research Network; selected Evidence-Based Public Health trainers; Illinois Department of Public Health and St. Louis County Health Department. We thank Cherrie Bartlett, Belinda Heimericks, Debbie Pfeiffer, Jeff Sunderlin, and Ann Hynes for their help in identifying participants and coordinating meetings. We also are grateful to Amy Gaier and Emily Bullard for assistance in project planning.

Footnotes

In this paper, cancer control practitioners are people who direct and implement population-based intervention programs in agencies or in community-based coalitions.

References

- 1. National Cancer Institute. To Eliminate the Suffering and Death Due to Cancer. Vol. 2006 Bethesda, MD: National Cancer Institute, US Department of Health and Human Services; Jan, 2006. The NCI Strategic Plan for Leading the Nation.

- 2. Curry S, Byers T, Hewitt M, editors. Fulfilling the Potential of Cancer Prevention and Early Detection. Washington, DC: National Academies Press; 2003.

- 3. Brownson RC, Baker EA, Leet TL, Gillespie KN. Evidence-Based Public Health. New York: Oxford University Press; 2003.

- 4. Black BL, Cowens-Alvarado R, Gershman S, Weir HK. Using data to motivate action: the need for high quality, an effective presentation, and an action context for decision-making. Cancer Causes Control. 2005 Oct;16(Suppl 1):15–25. doi: 10.1007/s10552-005-0457-5.

- 5. Zaza S, Briss PA, Harris KW, editors. The Guide to Community Preventive Services: What Works to Promote Health? New York: Oxford University Press; 2005.

- 6. Agency for Healthcare Research and Quality. Periodic Updates. 3. Agency for Healthcare Research and Quality; [Accessed October 11, 2005]. 2005. Guide to Clinical Preventive Services. Available at: http://www.ahrq.gov/clinic/gcpspu.htm.

- 7. Cancer Control PLANET. Links resources to comprehensive cancer control. The National Cancer Institute; The Centers for Disease Control and Prevention; The American Cancer Society; The Substance Abuse and Mental Health Services; The Agency for Healthcare Research and Quality; [Accessed March 17, 2007]. 2005. Cancer Control PLANET. Available at: http://cancercontrolplanet.cancer.gov/index.html.

- 8. Kerner J, Rimer B, Emmons K. Introduction to the special section on dissemination: dissemination research and research dissemination: how can we close the gap? Health Psychol. 2005 Sep;24(5):443–446. doi: 10.1037/0278-6133.24.5.443.

- 9. IOM. The Future of Public Health. Washington, DC: National Academy Press; 1988. Committee for the Study of the Future of Public Health.

- 10. Association of State and Territorial Directors of Health Promotion and Public Health Education and the Centers for Disease Control and Prevention. Policy and Environmental Change: New Directions for Public Health. Atlanta, GA: ASTDHPPHE and CDC; 2001.

- 11. Meissner HI, Bergner L, Marconi KM. Developing cancer control capacity in state and local public health agencies. Public Health Rep. 1992 Jan-Feb;107(1):15–23.

- 12. Allegrante JP, Moon RW, Auld ME, Gebbie KM. Continuing-education needs of the currently employed public health education workforce. Am J Public Health. 2001 Aug;91(8):1230–1234. doi: 10.2105/ajph.91.8.1230.

- 13. Campbell CR, Lomperis AM, Gillespie KN, Arrington B. Competency-based healthcare management education: the Saint Louis University experience. J Health Adm Educ. 2006 Spring;23(2):135–168.

- 14. Institute of Medicine. Who Will Keep the Public Healthy? Educating Public Health Professionals for the 21st Century. Washington, DC: National Academies Press; 2003.

- 15. O’Donnell JF. Competencies are all the rage in education. J Cancer Educ. 2004 Summer;19(2):74–75. doi: 10.1207/s15430154jce1902_2.

- 16. Parry S. Just what is a competency (and why should we care?) Training. 1998;35:58–64.

- 17. O’Donnell JF. A most important competency: professionalism. What is it? J Cancer Educ. 2004 Winter;19(4):202–203. doi: 10.1207/s15430154jce1904_2.

- 18. Thacker S, Brownson R. Practicing epidemiology: How competent are we? Public Health Rep. 2008;123(Suppl 1):4–5. doi: 10.1177/00333549081230S102.

- 19. Scharff DP, Rabin BA, Cook RA, Wray RJ, Brownson RC. Bridging research and practice through competency-based public health education. J Public Health Manag Pract. 2008 Mar-Apr;14(2):131–137. doi: 10.1097/01.PHH.0000311890.91003.6e.

- 20. Brownson RC, Diem G, Grabauskas V, et al. Training practitioners in evidence-based chronic disease prevention for global health. Promot Educ. 2007;14(3):159–163.

- 21. O’Neall MA, Brownson RC. Teaching evidence-based public health to public health practitioners. Ann Epidemiol. 2005 Aug;15(7):540–544. doi: 10.1016/j.annepidem.2004.09.001.

- 22. Brownson RC, Ballew P, Brown KL, et al. The effect of disseminating evidence-based interventions that promote physical activity to health departments. Am J Public Health. 2007 Oct;97(10):1900–1907. doi: 10.2105/AJPH.2006.090399.

- 23. Brownson RC, Ballew P, Dieffenderfer B, et al. Evidence-based interventions to promote physical activity: what contributes to dissemination by state health departments. Am J Prev Med. 2007 Jul;33(1 Suppl):S66–73. doi: 10.1016/j.amepre.2007.03.011.

- 24. National Cancer Institute. Using What Works: Adapting Evidence-Based Programs to Fit Your Needs. National Cancer Institute; [Accessed December 29, 2007]. 2007. Available at: http://cancercontrol.cancer.gov/use_what_works/start.htm.

- 25. Core Competencies for Public Health Professionals. Washington, DC: Public Health Foundation; 2001. Council on Linkages between Academia and Public Health Practice.

- 26. National Association of Chronic Disease Directors. [Accessed February 2008]; Competencies for Chronic Disease Practice. Available at: http://www.chronicdisease.org/files/public/complete_draft_Competencies_for_Chronic_Disease_Practice.pdf.

- 27. Gaffney G. What is card sorting? [Accessed June 15, 2007]; Information & Design. 2007. Available at: http://www.infodesign.com.au/usabilityresources/design/cardsorting.asp.

- 28. Maurer D, Warfel T. Card sorting; a definitive guide. [Accessed June 15, 2007]; Boxes and Arrows. 2007. Available at: http://www.boxesandarrows.com/view/card_sorting_a_definitive_guide.

- 29. Robertson J. Information design using card sorting. [Accessed June 15, 2007]; Step Two Designs. 2007. Available at: http://www.steptwo.com.au/papers/cardsorting/index.html.

- 30. Dreisinger M, Leet T, Baker E, Gillespie K, Haas B, Brownson R. Improving the public health workforce: evaluation of a training course to enhance evidence-based decision-making. J Public Health Manag Pract. 2008;14:138–143. doi: 10.1097/01.PHH.0000311891.73078.50.

- 31. National Cancer Institute. Fact Sheet. National Cancer Institute, Cancer Information Service; [Accessed May 3, 2008]. 2008. Available at: http://www.cancer.gov/cancertopics/factsheet/Information/CIS.

- 32. Harris JR, Brown PK, Coughlin S, et al. The cancer prevention and control research network. Prev Chronic Dis. 2005 Jan;2(1):A21.

- 33. Armitage P, Berry G. Statistical methods in medical research. 2. Oxford; Boston: Blackwell Scientific; 1987.

- 34. Alciati MH, Glanz K. Using data to plan public health programs: experience from state cancer prevention and control programs. Public Health Rep. 1996 Mar-Apr;111(2):165–172.

- 35. Brownson RC, Bright FS. Chronic disease control in public health practice: looking back and moving forward. Public Health Rep. 2004 May-Jun;119(3):230–238. doi: 10.1016/j.phr.2004.04.001.

- 36. Steckler A, Goodman RM, Alciati MH. The impact of the National Cancer Institute’s Data-based Intervention Research program on state health agencies. Health Educ Res. 1997 Jun;12(2):199–211. doi: 10.1093/her/12.2.199.

- 37. Weed D, Husten C. Training in cancer prevention and control. In: Greenwald P, Kramer B, Weed D, editors. Cancer Prevention and Control. New York: Marcel Dekker; 1995. pp. 707–717.

- 38. Association of State and Territorial Chronic Disease Program Directors. Reducing the Burden of Chronic Disease: Needs of the States. Washington, DC: Public Health Foundation; 1991.

- 39. Maylahn C, Bohn C, Hammer M, Waltz E. Strengthening epidemiologic competencies among local health professionals in New York: teaching evidence-based public health. Public Health Rep. 2008;123(Suppl 1):35–43. doi: 10.1177/00333549081230S110.

- 40. Brownson RC, Haire-Joshu D, Luke DA. Shaping the context of health: a review of environmental and policy approaches in the prevention of chronic diseases. Annu Rev Public Health. 2006;27:341–370. doi: 10.1146/annurev.publhealth.27.021405.102137.

- 41. Colditz GA, Samplin-Salgado M, Ryan CT, et al. Harvard report on cancer prevention, volume 5: fulfilling the potential for cancer prevention: policy approaches. Cancer Causes Control. 2002 Apr;13(3):199–212. doi: 10.1023/a:1015040702565.

- 42. Bryan R, Kreuter M, Brownson R. Integrating adult learning principles into training for public health practice. Health Promot Pract. 2008. doi: 10.1177/1524839907308117. (in press)

- 43. Scharff D, Kreuter M. Training and workforce diversity as keys to eliminating health disparities. Health Promotion Practice. 2000;1:288–291.

- 44. Yancey AK, Kagawa-Singer M, Ratliff P, et al. Progress in the pipeline: replication of the minority training program in cancer control research. J Cancer Educ. 2006 Winter;21(4):230–236. doi: 10.1080/08858190701347820.

- 45. CDC. Workplans: A Program Management Tool Education and Training Packet, 2000: CDC Cancer Prevention and Control Program. 2000.

- 46. Texas Cancer Council. Texas Cancer Control Toolkit. Austin, TX: 2007.

- 47. University of Kansas. The Community Tool Box. University of Kansas; Work Group for Community Health and Development. Available at: http://ctb.ku.edu/en/Default.htm.