Abstract

Maximum entropy models are the least structured probability distributions that exactly reproduce a chosen set of statistics measured in an interacting network. Here we use this principle to construct probabilistic models which describe the correlated spiking activity of populations of up to 120 neurons in the salamander retina as it responds to natural movies. Already in groups as small as 10 neurons, interactions between spikes can no longer be regarded as small perturbations in an otherwise independent system; for 40 or more neurons pairwise interactions need to be supplemented by a global interaction that controls the distribution of synchrony in the population. Here we show that such “K-pairwise” models—being systematic extensions of the previously used pairwise Ising models—provide an excellent account of the data. We explore the properties of the neural vocabulary by: 1) estimating its entropy, which constrains the population's capacity to represent visual information; 2) classifying activity patterns into a small set of metastable collective modes; 3) showing that the neural codeword ensembles are extremely inhomogenous; 4) demonstrating that the state of individual neurons is highly predictable from the rest of the population, allowing the capacity for error correction.

Author Summary

Sensory neurons encode information about the world into sequences of spiking and silence. Multi-electrode array recordings have enabled us to move from single units to measuring the responses of many neurons simultaneously, and thus to ask questions about how populations of neurons as a whole represent their input signals. Here we build on previous work that has shown that in the salamander retina, pairs of retinal ganglion cells are only weakly correlated, yet the population spiking activity exhibits large departures from a model where the neurons would be independent. We analyze data from more than a hundred salamander retinal ganglion cells and characterize their collective response using maximum entropy models of statistical physics. With these models in hand, we can put bounds on the amount of information encoded by the neural population, constructively demonstrate that the code has error correcting redundancy, and advance two hypotheses about the neural code: that collective states of the network could carry stimulus information, and that the distribution of neural activity patterns has very nontrivial statistical properties, possibly related to critical systems in statistical physics.

Introduction

Physicists have long hoped that the functional behavior of large, highly interconnected neural networks could be described by statistical mechanics [1]–[3]. The goal of this effort has been not to simulate the details of particular networks, but to understand how interesting functions can emerge, collectively, from large populations of neurons. The hope, inspired by our quantitative understanding of collective behavior in systems near thermal equilibrium, is that such emergent phenomena will have some degree of universality, and hence that one can make progress without knowing all of the microscopic details of each system. A classic example of work in this spirit is the Hopfield model of associative or content–addressable memory [1], which is able to recover the correct memory from any of its subparts of sufficient size. Because the computational substrate of neural states in these models are binary “spins,” and the memories are realized as locally stable states of the network dynamics, methods of statistical physics could be brought to bear on theoretically challenging issues such as the storage capacity of the network or its reliability in the presence of noise [2], [3]. On the other hand, precisely because of these abstractions, it has not always been clear how to bring the predictions of the models into contact with experiment.

Recently it has been suggested that the analogy between statistical physics models and neural networks can be turned into a precise mapping, and connected to experimental data, using the maximum entropy framework [4]. In a sense, the maximum entropy approach is the opposite of what we usually do in making models or theories. The conventional approach is to hypothesize some dynamics for the network we are studying, and then calculate the consequences of these assumptions; inevitably, the assumptions we make will be wrong in detail. In the maximum entropy method, however, we are trying to strip away all our assumptions, and find models of the system that have as little structure as possible while still reproducing some set of experimental observations.

The starting point of the maximum entropy method for neural networks is that the network could, if we don't know anything about its function, wander at random among all possible states. We then take measured, average properties of the network activity as constraints, and each constraint defines some minimal level of structure. Thus, in a completely random system neurons would generate action potentials (spikes) or remain silent with equal probability, but once we measure the mean spike rate for each neuron we know that there must be some departure from such complete randomness. Similarly, absent any data beyond the mean spike rates, the maximum entropy model of the network is one in which each neuron spikes independently of all the others, but once we measure the correlations in spiking between pairs of neurons, an additional layer of structure is required to account for these data. The central idea of the maximum entropy method is that, for each experimental observation that we want to reproduce, we add only the minimum amount of structure required.

An important feature of the maximum entropy approach is that the mathematical form of a maximum entropy model is exactly equivalent to a problem in statistical mechanics. That is, the maximum entropy construction defines an “effective energy” for every possible state of the network, and the probability that the system will be found in a particular state is given by the Boltzmann distribution in this energy landscape. Further, the energy function is built out of terms that are related to the experimental observables that we are trying to reproduce. Thus, for example, if we try to reproduce the correlations among spiking in pairs of neurons, the energy function will have terms describing effective interactions among pairs of neurons. As explained in more detail below, these connections are not analogies or metaphors, but precise mathematical equivalencies.

Minimally structured models are attractive, both because of the connection to statistical mechanics and because they represent the absence of modeling assumptions about data beyond the choice of experimental constraints. Of course, these features do not guarantee that such models will provide an accurate description of a real system. They do, however, give us a framework for starting with simple models and systematically increasing their complexity without worrying that the choice of model class itself has excluded the “correct” model or biased our results. Interest in maximum entropy approaches to networks of real neurons was triggered by the observation that, for groups of up to 10 ganglion cells in the vertebrate retina, maximum entropy models based on the mean spike probabilities of individual neurons and correlations between pairs of cells indeed generate successful predictions for the probabilities of all the combinatorial patterns of spiking and silence in the network as it responds to naturalistic sensory inputs [4]. In particular, the maximum entropy approach made clear that genuinely collective behavior in the network can be consistent with relatively weak correlations among pairs of neurons, so long as these correlations are widespread, shared among most pairs of cells in the system. This approach has now been used to analyze the activity in a variety of neural systems [5]–[15], the statistics of natural visual scenes [16]–[18], the structure and activity of biochemical and genetic networks [19], [20], the statistics of amino acid substitutions in protein families [21]–[27], the rules of spelling in English words [28], the directional ordering in flocks of birds [29], and configurations of groups of mice in naturalistic habitats [30].

One of the lessons of statistical mechanics is that systems with many degrees of freedom can behave in qualitatively different ways from systems with just a few degrees of freedom. If we can study only a handful of neurons (e.g., N∼10 as in Ref [4]), we can try to extrapolate based on the hypothesis that the group of neurons that we analyze is typical of a larger population. These extrapolations can be made more convincing by looking at a population of N = 40 neurons, and within such larger groups one can also try to test more explicitly whether the hypothesis of homogeneity or typicality is reliable [6], [9]. All these analyses suggest that, in the salamander retina, the roughly 200 interconnected neurons that represent a small patch of the visual world should exhibit dramatically collective behavior. In particular, the states of these large networks should cluster around local minima of the energy landscape, much as for the attractors in the Hopfield model of associative memory [1]. Further, this collective behavior means that responses will be substantially redundant, with the behavior of one neuron largely predictable from the state of other neurons in the network; stated more positively, this collective response allows for pattern completion and error correction. Finally, the collective behavior suggested by these extrapolations is a very special one, in which the probability of particular network states, or equivalently the degree to which we should be surprised by the occurrence of any particular state, has an anomalously large dynamic range [31]. If correct, these predictions would have a substantial impact on how we think about coding in the retina, and about neural network function more generally. Correspondingly, there is some controversy about all these issues [32]–[35].

Here we return to the salamander retina, in experiments that exploit a new generation of multi–electrode arrays and associated spike–sorting algorithms [36]. As schematized in Figure 1, these methods make it possible to record from  ganglion cells in the relevant densely interconnected patch, while projecting natural movies onto the retina. Access to these large populations poses new problems for the inference of maximum entropy models, both in principle and in practice. What we find is that, with extensions of algorithms developed previously [37], it is possible to infer maximum entropy models for more than one hundred neurons, and that with nearly two hours of data there are no signs of “overfitting” (cf. [15]). We have built models that match the mean probability of spiking for individual neurons, the correlations between spiking in pairs of neurons, and the distribution of summed activity in the network (i.e., the probability that K out of the N neurons spike in the same small window of time [38]–[40]). We will see that models which satisfy all these experimental constraints provide a strikingly accurate description of the states taken on by the network as a whole, that these states are collective, and that the collective behavior predicted by our models has implications for how the retina encodes visual information.

ganglion cells in the relevant densely interconnected patch, while projecting natural movies onto the retina. Access to these large populations poses new problems for the inference of maximum entropy models, both in principle and in practice. What we find is that, with extensions of algorithms developed previously [37], it is possible to infer maximum entropy models for more than one hundred neurons, and that with nearly two hours of data there are no signs of “overfitting” (cf. [15]). We have built models that match the mean probability of spiking for individual neurons, the correlations between spiking in pairs of neurons, and the distribution of summed activity in the network (i.e., the probability that K out of the N neurons spike in the same small window of time [38]–[40]). We will see that models which satisfy all these experimental constraints provide a strikingly accurate description of the states taken on by the network as a whole, that these states are collective, and that the collective behavior predicted by our models has implications for how the retina encodes visual information.

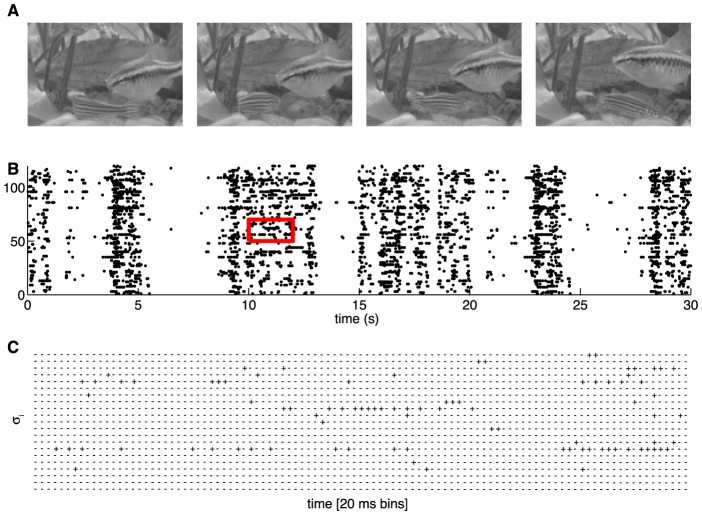

Figure 1. A schematic of the experiment.

(A) Four frames from the natural movie stimulus showing swimming fish and water plants. (B) The responses of a set of 120 neurons to a single stimulus repeat, black dots designate spikes. (C) The raster for a zoomed-in region designated by a red square in (B), showing the responses discretized into Δτ = 20 ms time bins, where  represents a silence (absence of spike) of neuron i, and

represents a silence (absence of spike) of neuron i, and  represents a spike.

represents a spike.

Maximum entropy

The idea of maximizing entropy has its origin in thermodynamics and statistical mechanics. The idea that we can use this principle to build models of systems that are not in thermal equilibrium is more recent, but still more than fifty years old [41]; in the past few years, there has been a new surge of interest in the formal aspects of maximum entropy constructions for (out-of-equilibrium) spike rasters (see, e.g., [42]). Here we provide a description of this approach which we hope makes the ideas accessible to a broad audience.

We imagine a neural system exposed to a stationary stimulus ensemble, in which simultaneous recordings from N neurons can be made. In small windows of time, as we see in Figure 1, a single neuron i either does ( ) or does not (

) or does not ( ) generate an action potential or spike [43]; the state of the entire network in that time bin is therefore described by a “binary word”

) generate an action potential or spike [43]; the state of the entire network in that time bin is therefore described by a “binary word”  . As the system responds to its inputs, it visits each of these states with some probability

. As the system responds to its inputs, it visits each of these states with some probability  . Even before we ask what the different states mean, for example as codewords in a representation of the sensory world, specifying this distribution requires us to determine the probability of each of

. Even before we ask what the different states mean, for example as codewords in a representation of the sensory world, specifying this distribution requires us to determine the probability of each of  possible states. Once N increases beyond ∼20, brute force sampling from data is no longer a general strategy for “measuring” the underlying distribution.

possible states. Once N increases beyond ∼20, brute force sampling from data is no longer a general strategy for “measuring” the underlying distribution.

Even when there are many, many possible states of the network, experiments of reasonable size can be sufficient to estimate the averages or expectation values of various functions of the state of the system,  , where the averages are taken across data collected over the course of the experiment. The goal of the maximum entropy construction is to search for the probability distribution

, where the averages are taken across data collected over the course of the experiment. The goal of the maximum entropy construction is to search for the probability distribution  that matches these experimental measurements but otherwise is as unstructured as possible. Minimizing structure means maximizing entropy [41], and for any set of moments or statistics that we want to match, the form of the maximum entropy distribution can be found analytically:

that matches these experimental measurements but otherwise is as unstructured as possible. Minimizing structure means maximizing entropy [41], and for any set of moments or statistics that we want to match, the form of the maximum entropy distribution can be found analytically:

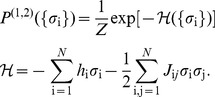

| (1) |

| (2) |

| (3) |

where  is the effective “energy” function or the Hamiltonian of the system, and the partition function

is the effective “energy” function or the Hamiltonian of the system, and the partition function  ensures that the distribution is normalized. The couplings

ensures that the distribution is normalized. The couplings  must be set such that the expectation values of all constraint functions

must be set such that the expectation values of all constraint functions  ,

,  , over the distribution P match those measured in the experiment:

, over the distribution P match those measured in the experiment:

| (4) |

These equations might be hard to solve, but they are guaranteed to have exactly one solution for the couplings  given any set of measured expectation values [44].

given any set of measured expectation values [44].

Why should we study the neural vocabulary,  , at all? In much previous work on neural coding, the focus has been on constructing models for a “codebook” which can predict the response of the neurons to arbitrary stimuli,

, at all? In much previous work on neural coding, the focus has been on constructing models for a “codebook” which can predict the response of the neurons to arbitrary stimuli,  [14], [45], or on building a “dictionary” that describes the stimuli consistent with particular patterns of activity,

[14], [45], or on building a “dictionary” that describes the stimuli consistent with particular patterns of activity,  [43]. In a natural setting, stimuli are drawn from a space of very high dimensionality, so constructing these “encoding” and “decoding” mappings between the stimuli and responses is very challenging and often involves making strong assumptions about how stimuli drive neural spiking (e.g. through linear filtering of the stimulus) [45]–[48]. While the maximum entropy framework itself can be extended to build stimulus-dependent maximum entropy models for

[43]. In a natural setting, stimuli are drawn from a space of very high dimensionality, so constructing these “encoding” and “decoding” mappings between the stimuli and responses is very challenging and often involves making strong assumptions about how stimuli drive neural spiking (e.g. through linear filtering of the stimulus) [45]–[48]. While the maximum entropy framework itself can be extended to build stimulus-dependent maximum entropy models for  and study detailed encoding and decoding mappings [14], [49]–[51], we choose to focus here directly on the total distribution of responses,

and study detailed encoding and decoding mappings [14], [49]–[51], we choose to focus here directly on the total distribution of responses,  , thus taking a very different approach.

, thus taking a very different approach.

Already when we study the smallest possible network, i.e., a pair of interacting neurons, the usual approach is to measure the correlation between spikes generated in the two cells, and to dissect this correlation into contributions which are intrinsic to the network and those which are ascribed to common, stimulus driven inputs. The idea of decomposing correlations dates back to a time when it was hoped that correlations among spikes could be used to map the synaptic connections between neurons [52]. In fact, in a highly interconnected system, the dominant source of correlations between two neurons—even if they are entirely intrinsic to the network—will always be through the multitude of indirect paths involving other neurons [53]. Regardless of the source of these correlations, however, the question of whether they are driven by the stimulus or are intrinsic to the network is unlikely a question that the brain could answer. We, as external observers, can repeat the stimulus exactly, and search for correlations conditional on the stimulus, but this is not accessible to the organism, unless the brain could build a “noise model” of spontaneous activity of the retina in the absence of any stimuli and this model also generalized to stimulus-driven activity. The brain has access only to the output of the retina: the patterns of activity which are drawn from the distribution  , rather than activity conditional on the stimulus, so the neural mechanism by which the correlations could be split into signal and noise components is unclear. If the responses

, rather than activity conditional on the stimulus, so the neural mechanism by which the correlations could be split into signal and noise components is unclear. If the responses  are codewords for the visual stimulus, then the entropy of this distribution sets the capacity of the code to carry information. Word by word,

are codewords for the visual stimulus, then the entropy of this distribution sets the capacity of the code to carry information. Word by word,  determines how surprised the brain should be by each particular pattern of response, including the possibility that the response was corrupted by noise in the retinal circuit and thus should be corrected or ignored [54]. In a very real sense, what the brain “sees” are sequences of states drawn from

determines how surprised the brain should be by each particular pattern of response, including the possibility that the response was corrupted by noise in the retinal circuit and thus should be corrected or ignored [54]. In a very real sense, what the brain “sees” are sequences of states drawn from  . In the same spirit that many groups have studied the statistical structures of natural scenes [55]–[60], we would like to understand the statistical structure of the codewords that represent these scenes.

. In the same spirit that many groups have studied the statistical structures of natural scenes [55]–[60], we would like to understand the statistical structure of the codewords that represent these scenes.

The maximum entropy method is not a model for network activity. Rather it is a framework for building models, and to implement this framework we have to choose which functions of the network state  we think are interesting. The hope is that while there are

we think are interesting. The hope is that while there are  states of the system as a whole, there is a much smaller number of measurements,

states of the system as a whole, there is a much smaller number of measurements,  , with

, with  and

and  , which will be sufficient to capture the essential structure of the collective behavior in the system. We emphasize that this is a hypothesis, and must be tested. How should we choose the functions

, which will be sufficient to capture the essential structure of the collective behavior in the system. We emphasize that this is a hypothesis, and must be tested. How should we choose the functions  ? In this work we consider three classes of possibilities:

? In this work we consider three classes of possibilities:

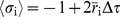

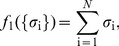

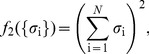

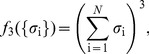

- We expect that networks have very different behaviors depending on the overall probability that neurons generate spikes as opposed to remaining silent. Thus, our first choice of functions to constrain in our models is the set of mean spike probabilities or firing rates, which is equivalent to constraining

, for each neuron i. These constraints contribute a term to the energy function

, for each neuron i. These constraints contribute a term to the energy function

Note that

(5)  , where

, where  is the mean spike rate of neuron i, and Δτ is the size of the time slices that we use in our analysis, as in Figure 1. Maximum entropy models that constrain only the firing rates of all the neurons (i.e.

is the mean spike rate of neuron i, and Δτ is the size of the time slices that we use in our analysis, as in Figure 1. Maximum entropy models that constrain only the firing rates of all the neurons (i.e.  ) are called “independent models”; we denote their distribution functions by

) are called “independent models”; we denote their distribution functions by  .

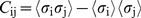

. - As a second constraint we take the correlations between neurons, two by two. This corresponds to measuring

for every pair of cells ij. These constraints contribute a term to the energy function

(6)

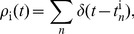

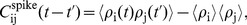

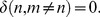

It is more conventional to think about correlations between two neurons in terms of their spike trains. If we define

(7)

where neuron i spikes at times

(8)  , then the spike–spike correlation function is [43]

, then the spike–spike correlation function is [43]

and we also have the average spike rates

(9)  . The correlations among the discrete spike/silence variables

. The correlations among the discrete spike/silence variables  then can be written as

then can be written as

Maximum entropy models that constrain average firing rates and correlations (i.e.

(10)  ) are called “pairwise models”; we denote their distribution functions by

) are called “pairwise models”; we denote their distribution functions by

.

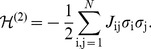

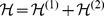

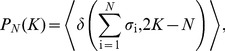

. - Firing rates and pairwise correlations focus on the properties of particular neurons. As an alternative, we can consider quantities that refer to the network as a whole, independent of the identity of the individual neurons. A simple example is the “distribution of synchrony” (also called “population firing rate”), that is, the probability

that K out of the N neurons spike in the same small slice of time. We can count the number of neurons that spike by summing all of the

that K out of the N neurons spike in the same small slice of time. We can count the number of neurons that spike by summing all of the  , remembering that we have

, remembering that we have  for spikes and

for spikes and  for silences. Then

for silences. Then

where

(11)

(12)

If we know the distribution

(13)  , then we know all its moments, and hence we can think of the functions

, then we know all its moments, and hence we can think of the functions  that we are constraining as being

that we are constraining as being

(14)

(15)

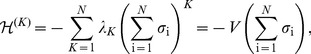

and so on. Because there are only N neurons, there are only N+1 possible values of K, and hence only N unique moments. Constraining all of these moments contributes a term to the energy function

(16)

where V is an effective potential [39], [40]. Maximum entropy models that constrain average firing rates, correlations, and the distribution of synchrony (i.e.

(17)  ) are called “K-pairwise models”; we denote their distribution functions by

) are called “K-pairwise models”; we denote their distribution functions by  .

.

It is important that the mapping between maximum entropy models and a Boltzmann distribution with some effective energy function is not an analogy, but rather a mathematical equivalence. In using the maximum entropy approach we are not assuming that the system of interest is in some thermal equilibrium state (note that there is no explicit temperature in Eq (1)), nor are we assuming that there is some mysterious force which drives the system to a state of maximum entropy. We are also not assuming that the temporal dynamics of the network is described by Newton's laws or Brownian motion on the energy landscape. What we are doing is making models that are consistent with certain measured quantities, but otherwise have as little structure as possible. As noted above, this is the opposite of what we usually do in building models or theories—rather than trying to impose some hypothesized structure on the world, we are trying to remove all structures that are not explicitly contained within the chosen set of experimental constraints.

The mapping to a Boltzmann distribution is not an analogy, but if we take the energy function more literally we are making use of analogies. Thus, the term  that emerges from constraining the mean spike probabilities of every neuron is analogous to a magnetic field being applied to each spin, where spin “up” (

that emerges from constraining the mean spike probabilities of every neuron is analogous to a magnetic field being applied to each spin, where spin “up” ( ) marks a spike and spin “down” (

) marks a spike and spin “down” ( ) denotes silence. Similarly, the term

) denotes silence. Similarly, the term  that emerges from constraining the pairwise correlations among neurons corresponds to a “spin–spin” interaction which tends to favor neurons firing together (

that emerges from constraining the pairwise correlations among neurons corresponds to a “spin–spin” interaction which tends to favor neurons firing together ( ) or not (

) or not ( ). Finally, the constraint on the overall distribution of activity generates a term

). Finally, the constraint on the overall distribution of activity generates a term  which we can interpret as resulting from the interaction between all the spins/neurons in the system and one other, hidden degree of freedom, such as an inhibitory interneuron. These analogies can be useful, but need not be taken literally.

which we can interpret as resulting from the interaction between all the spins/neurons in the system and one other, hidden degree of freedom, such as an inhibitory interneuron. These analogies can be useful, but need not be taken literally.

Results

Can we learn the model?

We have applied the maximum entropy framework to the analysis of one large experimental data set on the responses of ganglion cells in the salamander retina to a repeated, naturalistic movie. These data are collected using a new generation of multi–electrode arrays that allow us to record from a large fraction of the neurons in a 450×450 µm patch, which contains a total of ∼200 ganglion cells [36], as in Figure 1. In the present data set, we have selected 160 neurons that pass standard tests for the stability of spike waveforms, the lack of refractory period violations, and the stability of firing across the duration of the experiment (see Methods and Ref [36]). The visual stimulus is a greyscale movie of swimming fish and swaying water plants in a tank; the analyzed chunk of movie is 19 s long, and the recording was stable through 297 repeats, for a total of more than 1.5 hrs of data. As has been found in previous experiments in the retinas of multiple species [4], [61]–[64], we found that correlations among neurons are most prominent on the ∼20 ms time scale, and so we chose to discretize the spike train into Δτ = 20 ms bins.

Maximum entropy models have a simple form [Eq (1)] that connects precisely with statistical physics. But to complete the construction of a maximum entropy model, we need to impose the condition that averages in the maximum entropy distribution match the experimental measurements, as in Eq (4). This amounts to finding all the coupling constants  in Eq (2). This is, in general, a hard problem. We need not only to solve this problem, but also to convince ourselves that our solution is meaningful, and that it does not reflect overfitting to the limited set of data at our disposal. A detailed account of the numerical solution to this inverse problem is given in Methods: Learning maximum entropy models from data.

in Eq (2). This is, in general, a hard problem. We need not only to solve this problem, but also to convince ourselves that our solution is meaningful, and that it does not reflect overfitting to the limited set of data at our disposal. A detailed account of the numerical solution to this inverse problem is given in Methods: Learning maximum entropy models from data.

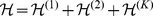

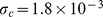

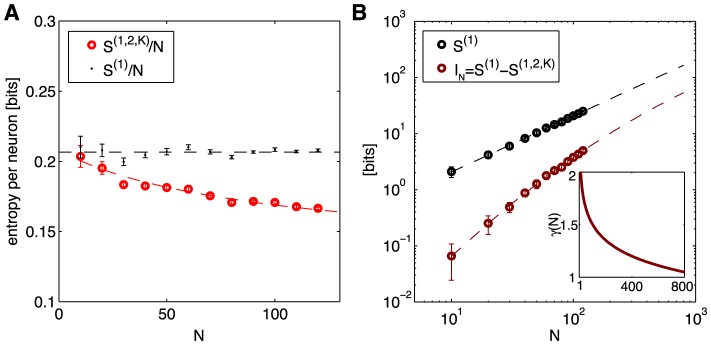

In Figure 2 we show an example of N = 100 neurons from a small patch of the salamander retina, responding to naturalistic movies. We notice that correlations are weak, but widespread, as in previous experiments on smaller groups of neurons [4], [6], [9], [65], [66]. Because the data set is very large, the threshold for reliable detection of correlations is very low; if we shuffle the data completely by permuting time and repeat indices independently for each neuron, the standard deviation of correlation coefficients,

|

(18) |

is  , as shown in Figure 2C, vastly smaller than the typical correlations that we observe (median

, as shown in Figure 2C, vastly smaller than the typical correlations that we observe (median  , 90% of values between

, 90% of values between  and

and  ). More subtly, this means that only ∼6.3% percent of the correlation coefficients are within error bars of zero, and there is no sign that there is a large excess fraction of pairs that have truly zero correlation—the distribution of correlations across the population seems continuous. Note that, as customary, we report normalized correlation coefficients (

). More subtly, this means that only ∼6.3% percent of the correlation coefficients are within error bars of zero, and there is no sign that there is a large excess fraction of pairs that have truly zero correlation—the distribution of correlations across the population seems continuous. Note that, as customary, we report normalized correlation coefficients ( , between −1 and 1), while maximum entropy formally constrains an equivalent set of unnormalized second order moments,

, between −1 and 1), while maximum entropy formally constrains an equivalent set of unnormalized second order moments,  [Eq (6)].

[Eq (6)].

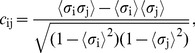

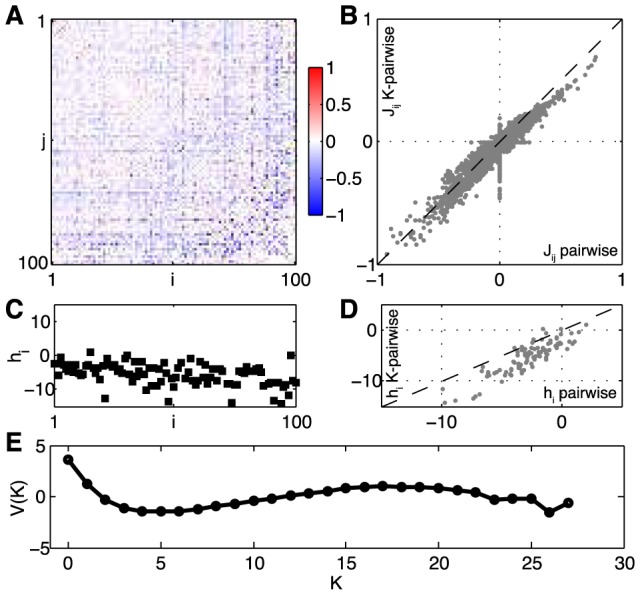

Figure 2. Learning the pairwise maximum entropy model for a 100 neuron subset.

A subgroup of 100 neurons from our set of 160 has been sorted by the firing rate. At left, the statistics of the neural activity: (A) correlations  , (B) firing rates (equivalent to

, (B) firing rates (equivalent to  ), and (C) the distribution of correlation coefficients

), and (C) the distribution of correlation coefficients  . The red distribution is the distribution of differences between two halves of the experiment, and the small red error bar marks the standard deviation of correlation coefficients in fully shuffled data (1.8×10−3). At right, the parameters of a pairwise maximum entropy model [

. The red distribution is the distribution of differences between two halves of the experiment, and the small red error bar marks the standard deviation of correlation coefficients in fully shuffled data (1.8×10−3). At right, the parameters of a pairwise maximum entropy model [ from Eq (19)] that reproduces these data: (D) coupling constants

from Eq (19)] that reproduces these data: (D) coupling constants  , (E) fields

, (E) fields  , and (F) the distribution of couplings in this group of neurons.

, and (F) the distribution of couplings in this group of neurons.

We began by constructing maximum entropy models that match the mean spike rates and pairwise correlations, i.e. “pairwise models,” whose distribution is, from Eqs (5, 7),

|

(19) |

When we reconstruct the coupling constants of the maximum entropy model, we see that the “interactions”  among neurons are widespread, and almost symmetrically divided between positive and negative values; for more details see Methods: Learning maximum entropy models from data. Figure 3 shows that the model we construct really does satisfy the constraints, so that the differences, for example, between the measured and predicted correlations among pairs of neurons are within the experimental errors in the measurements.

among neurons are widespread, and almost symmetrically divided between positive and negative values; for more details see Methods: Learning maximum entropy models from data. Figure 3 shows that the model we construct really does satisfy the constraints, so that the differences, for example, between the measured and predicted correlations among pairs of neurons are within the experimental errors in the measurements.

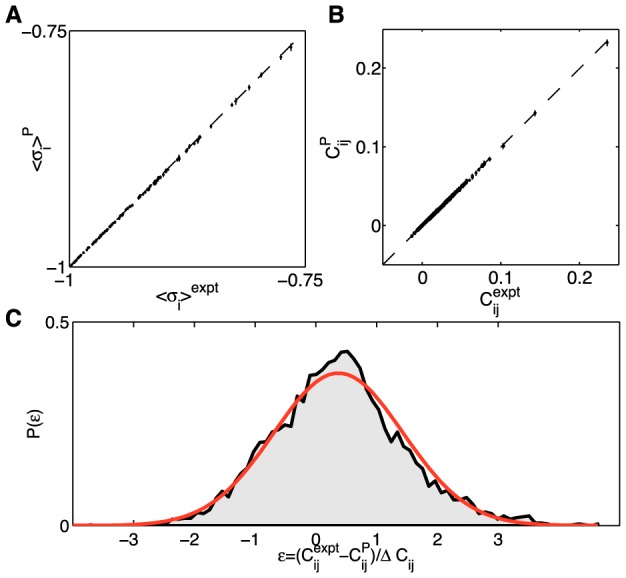

Figure 3. Reconstruction precision for a 100 neuron subset.

Given the reconstructed Hamiltonian of the pairwise model, we used an independent Metropolis Monte Carlo (MC) sampler to assess how well the constrained model statistics (mean firing rates (A), covariances (B), plotted on y-axes) match the measured statistics (corresponding x-axes). Error bars on data computed by bootstrapping; error bars on MC estimates obtained by repeated MC runs generating a number of samples that is equal to the original data size. (C) The distribution of the difference between true and model values for  covariance matrix elements, normalized by the estimated error bar in the data; red overlay is a Gaussian with zero mean and unit variance. The distribution has nearly Gaussian shape with a width of ≈1.1, showing that the learning algorithm reconstructs the covariance statistics to within measurement precision.

covariance matrix elements, normalized by the estimated error bar in the data; red overlay is a Gaussian with zero mean and unit variance. The distribution has nearly Gaussian shape with a width of ≈1.1, showing that the learning algorithm reconstructs the covariance statistics to within measurement precision.

With N = 100 neurons, measuring the mean spike probabilities and all the pairwise correlations means that we estimate  separate quantities. This is a large number, and it is not clear that we are safe in taking all these measurements at face value. It is possible, for example, that with a finite data set the errors in the different elements of the correlation matrix

separate quantities. This is a large number, and it is not clear that we are safe in taking all these measurements at face value. It is possible, for example, that with a finite data set the errors in the different elements of the correlation matrix  are sufficiently strongly correlated that we don't really know the matrix as a whole with high precision, even though the individual elements are measured very accurately. This is a question about overfitting: is it possible that the parameters

are sufficiently strongly correlated that we don't really know the matrix as a whole with high precision, even though the individual elements are measured very accurately. This is a question about overfitting: is it possible that the parameters  are being finely tuned to match even the statistical errors in our data?

are being finely tuned to match even the statistical errors in our data?

To test for overfitting (Figure 4), we exploit the fact that the stimuli consist of a short movie repeated many times. We can choose a random 90% of these repeats from which to learn the parameters of the maximum entropy model, and then check that the probability of the data in the other 10% of the experiment is predicted to be the same, within errors. We see in Figure 4 that this is true, and that it remains true as we expand from N = 10 neurons (for which we surely have enough data) out to N = 120, where we might have started to worry. Taken together, Figures 2, 3, and 4 suggest strongly that our data and algorithms are sufficient to construct maximum entropy models, reliably, for networks of more than one hundred neurons.

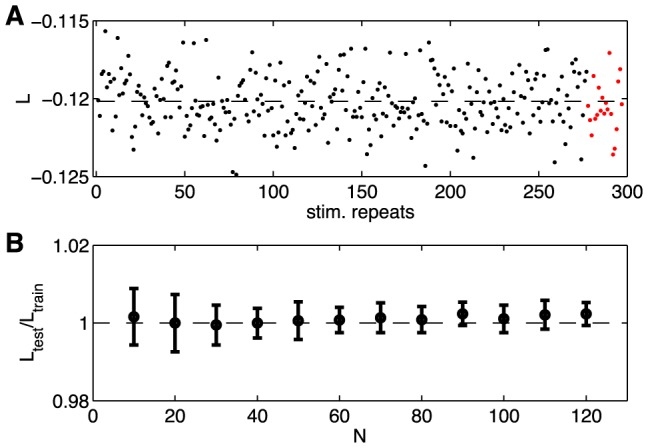

Figure 4. A test for overfitting.

(A) The per-neuron average log-probability of data (log-likelihood,  ) under the pairwise model of Eq (19), computed on the training repeats (black dots) and on the testing repeats (red dots), for the same group of N = 100 neurons shown in Figure 1 and 2. Here the repeats have been reordered so that the training repeats precede testing repeats; in fact, the choice of test repeats is random. (B) The ratio of the log-likelihoods on test vs training data, shown as a function of the network size N. Error bars are the standard deviation across 30 subgroups at each value of N.

) under the pairwise model of Eq (19), computed on the training repeats (black dots) and on the testing repeats (red dots), for the same group of N = 100 neurons shown in Figure 1 and 2. Here the repeats have been reordered so that the training repeats precede testing repeats; in fact, the choice of test repeats is random. (B) The ratio of the log-likelihoods on test vs training data, shown as a function of the network size N. Error bars are the standard deviation across 30 subgroups at each value of N.

Do the models work?

How well do our maximum entropy models describe the behavior of large networks of neurons? The models predict the probability of occurrence for all possible combinations of spiking and silence in the network, and it seems natural to use this huge predictive power to test the models. In small networks, this is a useful approach. Indeed, much of the interest in the maximum entropy approach derives from the success of models based on mean spike rates and pairwise correlations, as in Eq (19), in reproducing the probability distribution over states in networks of size  [4], [5]. With N = 10, there are

[4], [5]. With N = 10, there are  possible combinations of spiking and silence, and reasonable experiments are sufficiently long to estimate the probabilities of all of these individual states. But with N = 100, there are

possible combinations of spiking and silence, and reasonable experiments are sufficiently long to estimate the probabilities of all of these individual states. But with N = 100, there are  possible states, and so it is not possible to “just measure” all the probabilities. Thus, we need another strategy for testing our models.

possible states, and so it is not possible to “just measure” all the probabilities. Thus, we need another strategy for testing our models.

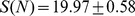

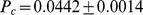

Striking (and model–independent) evidence for nontrivial collective behavior in these networks is obtained by asking for the probability that K out of the N neurons generate a spike in the same small window of time, as shown in Figure 5. This distribution,  , should become Gaussian at large N if the neurons are independent, or nearly so, and we have noted that the correlations between pairs of cells are weak. Thus

, should become Gaussian at large N if the neurons are independent, or nearly so, and we have noted that the correlations between pairs of cells are weak. Thus  is very well approximated by an independent model, with fractional errors on the order of the correlation coefficients, typically less than ∼10%. But, even in groups of N = 10 cells, there are substantial departures from the predictions of an independent model (Figure 5A). In groups of N = 40 cells, we see K = 10 cells spiking synchronously with probability ∼104 times larger than expected from an independent model (Figure 5B), and the departure from independence is even larger at N = 100 (Figure 5C) [12], [15].

is very well approximated by an independent model, with fractional errors on the order of the correlation coefficients, typically less than ∼10%. But, even in groups of N = 10 cells, there are substantial departures from the predictions of an independent model (Figure 5A). In groups of N = 40 cells, we see K = 10 cells spiking synchronously with probability ∼104 times larger than expected from an independent model (Figure 5B), and the departure from independence is even larger at N = 100 (Figure 5C) [12], [15].

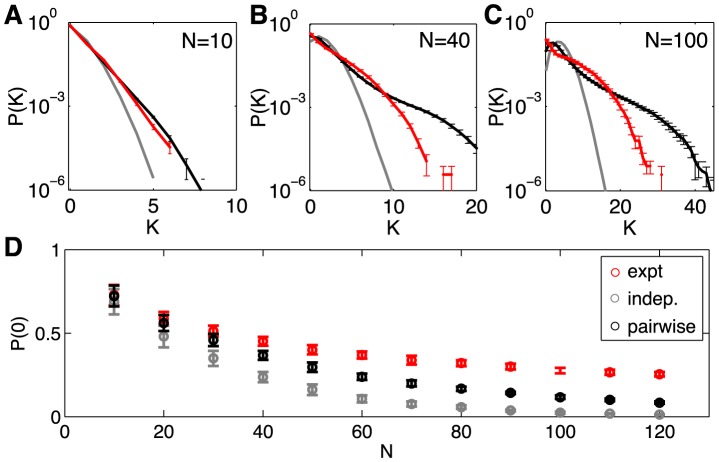

Figure 5. Predicted vs measured probability of K simultaneous spikes (spike synchrony).

(A–C)  for subnetworks of size

for subnetworks of size  ; error bars are s.d. across random halves of the duration of the experiment. For N = 10 we already see large deviations from an independent model, but these are captured by the pairwise model. At N = 40 (B), the pairwise models miss the tail of the distribution, where

; error bars are s.d. across random halves of the duration of the experiment. For N = 10 we already see large deviations from an independent model, but these are captured by the pairwise model. At N = 40 (B), the pairwise models miss the tail of the distribution, where  . At N = 100 (C), the deviations between the pairwise model and the data are more substantial. (D) The probability of silence in the network, as a function of population size; error bars are s.d. across 30 subgroups of a given size N. Throughout, red shows the data, grey the independent model, and black the pairwise model.

. At N = 100 (C), the deviations between the pairwise model and the data are more substantial. (D) The probability of silence in the network, as a function of population size; error bars are s.d. across 30 subgroups of a given size N. Throughout, red shows the data, grey the independent model, and black the pairwise model.

Maximum entropy models that match the mean spike rate and pairwise correlations in a network make an unambiguous, quantitative prediction for  , with no adjustable parameters. In smaller groups of neurons, certainly for N = 10, this prediction is quite accurate, and accounts for most of the difference between the data and the expectations from an independent model, as shown in Figure 5. But even at N = 40 we see small deviations between the data and the predictions of the pairwise model. Because the silent state is highly probable, we can measure

, with no adjustable parameters. In smaller groups of neurons, certainly for N = 10, this prediction is quite accurate, and accounts for most of the difference between the data and the expectations from an independent model, as shown in Figure 5. But even at N = 40 we see small deviations between the data and the predictions of the pairwise model. Because the silent state is highly probable, we can measure  very accurately, and the pairwise models make errors of nearly a factor of three at N = 100, and independent models are off by a factor of about twenty. The pairwise model errors in

very accurately, and the pairwise models make errors of nearly a factor of three at N = 100, and independent models are off by a factor of about twenty. The pairwise model errors in  are negligible when compared to the many orders of magnitude differences from an independent model, but they are highly significant. The pattern of errors also is important, since in the real networks silence persists as being highly probable even at N = 120—with indications that this surprising trend might continue towards larger N

[39] —and the pairwise model doesn't quite capture this.

are negligible when compared to the many orders of magnitude differences from an independent model, but they are highly significant. The pattern of errors also is important, since in the real networks silence persists as being highly probable even at N = 120—with indications that this surprising trend might continue towards larger N

[39] —and the pairwise model doesn't quite capture this.

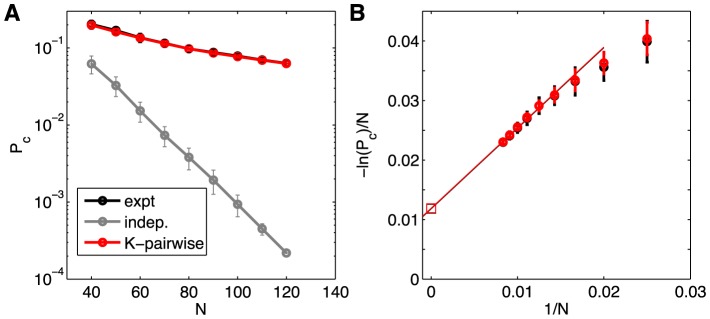

If a model based on pairwise correlations doesn't quite account for the data, it is tempting to try and include correlations among triplets of neurons. But at N = 100 there are  of these triplets, so a model that includes these correlations is much more complex than one that stops with pairs. An alternative is to use

of these triplets, so a model that includes these correlations is much more complex than one that stops with pairs. An alternative is to use  itself as a constraint on our models, as explained above in relation to Eq (17). This defines the “K-pairwise model,”

itself as a constraint on our models, as explained above in relation to Eq (17). This defines the “K-pairwise model,”

|

(20) |

where the “potential” V is chosen to match the observed distribution  . As noted above, we can think of this potential as providing a global regulation of the network activity, such as might be implemented by inhibitory interneurons with (near) global connectivity. Whatever the mechanistic interpretation of this model, it is important that it is not much more complex than the pairwise model: matching

. As noted above, we can think of this potential as providing a global regulation of the network activity, such as might be implemented by inhibitory interneurons with (near) global connectivity. Whatever the mechanistic interpretation of this model, it is important that it is not much more complex than the pairwise model: matching  adds only ∼N parameters to our model, while the pairwise model already has

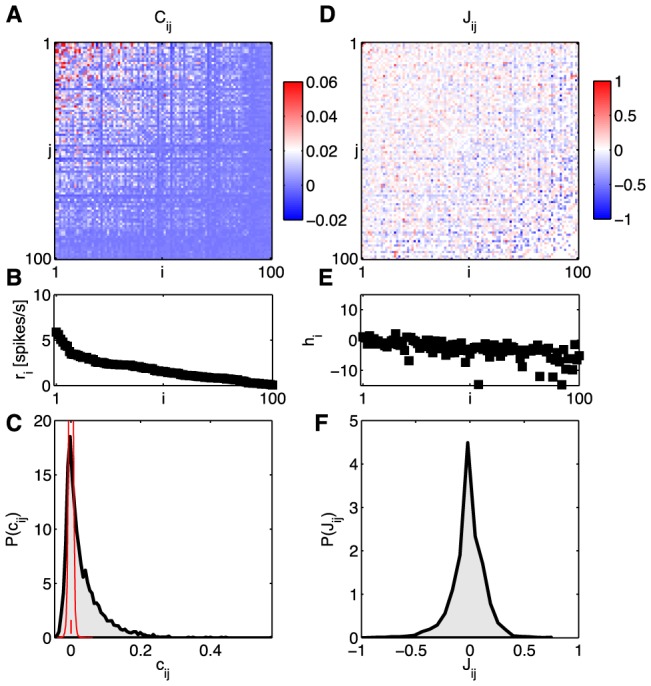

adds only ∼N parameters to our model, while the pairwise model already has  parameters. All of the tests given in the previous section can be redone in this case, and again we find that we can learn the K-pairwise models from the available data with no signs of overfitting. Figure 6 shows the parameters of the K-pairwise model for the same group of N = 100 neurons shown in Figure 2. Notice that the pairwise interaction terms

parameters. All of the tests given in the previous section can be redone in this case, and again we find that we can learn the K-pairwise models from the available data with no signs of overfitting. Figure 6 shows the parameters of the K-pairwise model for the same group of N = 100 neurons shown in Figure 2. Notice that the pairwise interaction terms  remain roughly the same; the local fields

remain roughly the same; the local fields  are also similar but have a shift towards more negative values.

are also similar but have a shift towards more negative values.

Figure 6. K-pairwise model for a the same group of N = 100 cells shown in Figure 1.

The neurons are again sorted in the order of decreasing firing rates. (A) Pairwise interactions,  , and the comparison with the interactions of the pairwise model, (B). (C) Single-neuron fields,

, and the comparison with the interactions of the pairwise model, (B). (C) Single-neuron fields,  , and the comparison with the fields of the pairwise model, (D). (E) The global potential, V(K), where K is the number of synchronous spikes. See Methods: Parametrization of the K-pairwise model for details.

, and the comparison with the fields of the pairwise model, (D). (E) The global potential, V(K), where K is the number of synchronous spikes. See Methods: Parametrization of the K-pairwise model for details.

Since we didn't make explicit use of the triplet correlations in constructing the K-pairwise model, we can test the model by predicting these correlations. In Figure 7A we show

| (21) |

as computed from the real data and from the models, for a single group of N = 100 neurons. We see that pairwise models capture the rankings of the different triplets, so that more strongly correlated triplets are predicted to be more strongly correlated, but these models miss quantitatively, overestimating the positive correlations and failing to predict significantly negative correlations. These errors are largely corrected in the K-pairwise model, despite the fact that adding a constraint on  doesn't add any information about the identity of the neurons in the different triplets. Specifically, Figure 7A shows that the biases of the pairwise model in the prediction of three-point correlations have been largely removed (with some residual deviations at large absolute values of the three-point correlation) by adding the K-spike constraint; on the other hand, the variance of predictions across bins containing three-point correlations of approximately the same magnitude did not decrease substantially. It is also interesting that this improvement in our predictions (as well as that in Figure 8 below) occurs even though the numerical value of the effective potential

doesn't add any information about the identity of the neurons in the different triplets. Specifically, Figure 7A shows that the biases of the pairwise model in the prediction of three-point correlations have been largely removed (with some residual deviations at large absolute values of the three-point correlation) by adding the K-spike constraint; on the other hand, the variance of predictions across bins containing three-point correlations of approximately the same magnitude did not decrease substantially. It is also interesting that this improvement in our predictions (as well as that in Figure 8 below) occurs even though the numerical value of the effective potential  is quite small, as shown in Figure 6E (quantitatively, in an example group of N = 100 neurons, the variance in energy associated with the V(K) potential accounts roughly for only 5% of the total variance in energy). Fixing the distribution of global activity thus seems to capture something about the network that individual spike probabilities and pairwise correlations have missed.

is quite small, as shown in Figure 6E (quantitatively, in an example group of N = 100 neurons, the variance in energy associated with the V(K) potential accounts roughly for only 5% of the total variance in energy). Fixing the distribution of global activity thus seems to capture something about the network that individual spike probabilities and pairwise correlations have missed.

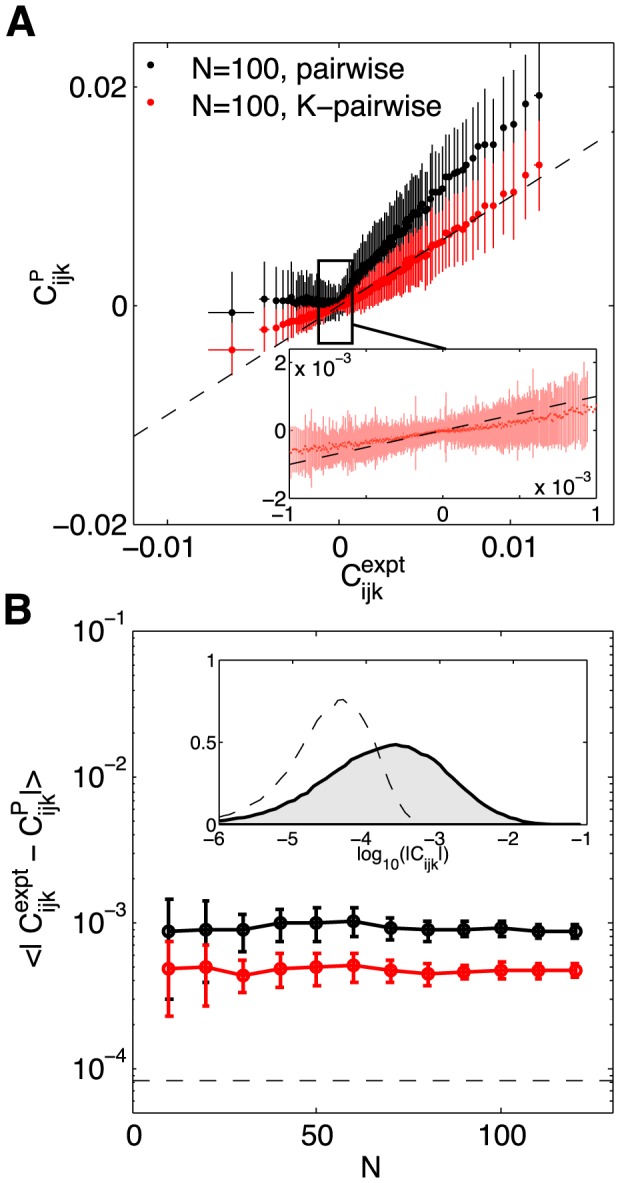

Figure 7. Predicted vs real connected three–point correlations,  from Eq (21).

from Eq (21).

(A) Measured  (x-axis) vs predicted by the model (y-axis), shown for an example 100 neuron subnetwork. The ∼1.6×105 triplets are binned into 1000 equally populated bins; error bars in x are s.d. across the bin. The corresponding values for the predictions are grouped together, yielding the mean and the s.d. of the prediction (y-axis). Inset shows a zoom-in of the central region, for the K-pairwise model. (B) Error in predicted three-point correlation functions as a function of subnetwork size N. Shown are mean absolute deviations of the model prediction from the data, for pairwise (black) and K-pairwise (red) models; error bars are s.d. across 30 subnetworks at each N, and the dashed line shows the mean absolute difference between two halves of the experiment. Inset shows the distribution of three–point correlations (grey filled region) and the distribution of differences between two halves of the experiment (dashed line); note the logarithmic scale.

(x-axis) vs predicted by the model (y-axis), shown for an example 100 neuron subnetwork. The ∼1.6×105 triplets are binned into 1000 equally populated bins; error bars in x are s.d. across the bin. The corresponding values for the predictions are grouped together, yielding the mean and the s.d. of the prediction (y-axis). Inset shows a zoom-in of the central region, for the K-pairwise model. (B) Error in predicted three-point correlation functions as a function of subnetwork size N. Shown are mean absolute deviations of the model prediction from the data, for pairwise (black) and K-pairwise (red) models; error bars are s.d. across 30 subnetworks at each N, and the dashed line shows the mean absolute difference between two halves of the experiment. Inset shows the distribution of three–point correlations (grey filled region) and the distribution of differences between two halves of the experiment (dashed line); note the logarithmic scale.

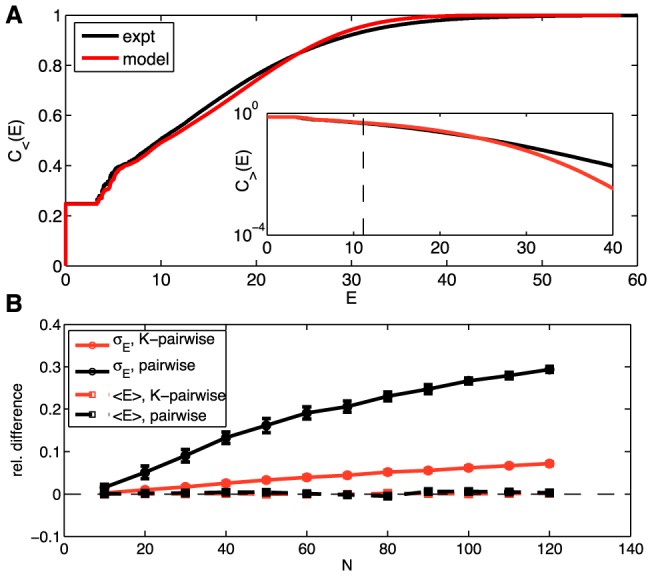

Figure 8. Predicted vs real distributions of energy, E.

(A) The cumulative distribution of energies,  from Eq (22), for the K-pairwise models (red) and the data (black), in a population of 120 neurons. Inset shows the high energy tails of the distribution,

from Eq (22), for the K-pairwise models (red) and the data (black), in a population of 120 neurons. Inset shows the high energy tails of the distribution,  from Eq (24); dashed line denotes the energy that corresponds to the probability of seeing the pattern once in an experiment. See Figure S5 for an analogous plot for the pairwise model. (B) Relative difference in the first two moments (mean,

from Eq (24); dashed line denotes the energy that corresponds to the probability of seeing the pattern once in an experiment. See Figure S5 for an analogous plot for the pairwise model. (B) Relative difference in the first two moments (mean,  , dashed; standard deviation,

, dashed; standard deviation,  , solid) of the distribution of energies evaluated over real data and a sample from the corresponding model (black = pairwise; red = K-pairwise). Error bars are s.d. over 30 subnetworks at a given size N.

, solid) of the distribution of energies evaluated over real data and a sample from the corresponding model (black = pairwise; red = K-pairwise). Error bars are s.d. over 30 subnetworks at a given size N.

An interesting effect is shown in Figure 7B, where we look at the average absolute deviation between predicted and measured  , as a function of the group size N. With increasing N the ratio between the total number of (predicted) three-point correlations and (fitted) model parameters is increasing (from ≈2 at N = 10 to ≈40 for N = 120), leading us to believe that predictions will grow progressively worse. Nevertheless, the average error in three-point prediction stays constant with network size, for both pairwise and K-pairwise models. An attractive explanation is that, as N increases, the models encompass larger and larger fractions of the interacting neural patch and thus decrease the effects of “hidden” units, neurons that are present but not included in the model; such unobserved units, even if they only interacted with other units in a pairwise fashion, could introduce effective higher-order interactions between observed units, thereby causing three-point correlation predictions to deviate from those of the pairwise model [67]. The accuracy of the K-pairwise predictions is not quite as good as the errors in our measurements (dashed line in Figure 7B), but still very good, improving by a factor of ∼2 relative to the pairwise model to well below 10−3.

, as a function of the group size N. With increasing N the ratio between the total number of (predicted) three-point correlations and (fitted) model parameters is increasing (from ≈2 at N = 10 to ≈40 for N = 120), leading us to believe that predictions will grow progressively worse. Nevertheless, the average error in three-point prediction stays constant with network size, for both pairwise and K-pairwise models. An attractive explanation is that, as N increases, the models encompass larger and larger fractions of the interacting neural patch and thus decrease the effects of “hidden” units, neurons that are present but not included in the model; such unobserved units, even if they only interacted with other units in a pairwise fashion, could introduce effective higher-order interactions between observed units, thereby causing three-point correlation predictions to deviate from those of the pairwise model [67]. The accuracy of the K-pairwise predictions is not quite as good as the errors in our measurements (dashed line in Figure 7B), but still very good, improving by a factor of ∼2 relative to the pairwise model to well below 10−3.

Maximum entropy models assign an effective energy to every possible combination of spiking and silence in the network,  from Eq (20). Learning the model means specifying all the parameters in this expression, so that the mapping from states to energies is completely determined. The energy determines the probability of the state, and while we can't estimate the probabilities of all possible states, we can ask whether the distribution of energies that we see in the data agrees with the predictions of the model. Thus, if we have a set of states drawn out of a distribution

from Eq (20). Learning the model means specifying all the parameters in this expression, so that the mapping from states to energies is completely determined. The energy determines the probability of the state, and while we can't estimate the probabilities of all possible states, we can ask whether the distribution of energies that we see in the data agrees with the predictions of the model. Thus, if we have a set of states drawn out of a distribution  , we can count the number of states that have energies lower than E,

, we can count the number of states that have energies lower than E,

| (22) |

where  is the Heaviside step function,

is the Heaviside step function,

| (23) |

Similarly, we can count the number of states that have energy larger than E,

| (24) |

Now we can take the distribution  to be the distribution of states that we actually see in the experiment, or we can take it to be the distribution predicted by the model, and if the model is accurate we should find that the cumulative distributions are similar in these two cases. Results are shown in Figure 8A (analogous results for the pairwise model are shown in Figure S5). Figure 8B focuses on the agreement between the first two moments of the distribution of energies, i.e., the mean

to be the distribution of states that we actually see in the experiment, or we can take it to be the distribution predicted by the model, and if the model is accurate we should find that the cumulative distributions are similar in these two cases. Results are shown in Figure 8A (analogous results for the pairwise model are shown in Figure S5). Figure 8B focuses on the agreement between the first two moments of the distribution of energies, i.e., the mean  and variance

and variance  , as a function of the network size N, showing that the K-pairwise model is significantly better at matching the variance of the energies relative to the pairwise model.

, as a function of the network size N, showing that the K-pairwise model is significantly better at matching the variance of the energies relative to the pairwise model.

We see that the distributions of energies in the data and the model are very similar. There is an excellent match in the “low energy” (high probability) region, and then as we look at the high energy tail ( ) we see that theory and experiment match out to probabilities of better than

) we see that theory and experiment match out to probabilities of better than  . Thus the distribution of energies, which is an essential construct of the model, seems to match the data across >90% of the states that we see.

. Thus the distribution of energies, which is an essential construct of the model, seems to match the data across >90% of the states that we see.

The successful prediction of the cumulative distribution  is especially striking because it extends to E∼25. At these energies, the probability of any single state is predicted to be

is especially striking because it extends to E∼25. At these energies, the probability of any single state is predicted to be  , which means that these states should occur roughly once per fifty years (!). This seems ridiculous—what are such rare states doing in our analysis, much less as part of the claim that theory and experiment are in quantitative agreement? The key is that there are many, many of these rare states—so many, in fact, that the theory is predicting that ∼10% of the all the states we observe will be (at least) this rare: individually surprising events are, as a group, quite common. In fact, of the

, which means that these states should occur roughly once per fifty years (!). This seems ridiculous—what are such rare states doing in our analysis, much less as part of the claim that theory and experiment are in quantitative agreement? The key is that there are many, many of these rare states—so many, in fact, that the theory is predicting that ∼10% of the all the states we observe will be (at least) this rare: individually surprising events are, as a group, quite common. In fact, of the  combinations of spiking and silence (

combinations of spiking and silence ( distinct ones) that we see in subnetworks of N = 120 neurons,

distinct ones) that we see in subnetworks of N = 120 neurons,  of these occur only once, which means we really don't know anything about their probability of occurrence. We can't say that the probability of any one of these rare states is being predicted correctly by the model, since we can't measure it, but we can say that the distribution of (log) probabilities—that is, the distribution of energies—across the set of observed states is correct, down to the ∼10% level. The model thus is predicting things far beyond what can be inferred directly from the frequencies with which common patterns are observed to occur in realistic experiments.

of these occur only once, which means we really don't know anything about their probability of occurrence. We can't say that the probability of any one of these rare states is being predicted correctly by the model, since we can't measure it, but we can say that the distribution of (log) probabilities—that is, the distribution of energies—across the set of observed states is correct, down to the ∼10% level. The model thus is predicting things far beyond what can be inferred directly from the frequencies with which common patterns are observed to occur in realistic experiments.

Finally, the structure of the models we are considering is that the state of each neuron—an Ising spin—experiences an “effective field” from all the other spins, determining the probability of spiking vs. silence. This effective field consists of an intrinsic bias for each neuron, plus the effects of interactions with all the other neurons:

|

(25) |

If the model is correct, then the probability of spiking is simply related to the effective field,

| (26) |

To test this relationship, we can choose one neuron, compute the effective field from the states of all the other neurons, at every moment in time, then collect all those moments when  is in some narrow range, and see how often the neuron spikes. We can then repeat this for every neuron, in turn. If the model is correct, spiking probability should depend on the effective field according to Eq (26). We emphasize that there are no new parameters to be fit, but rather a parameter–free relationship to be tested. The results are shown in Figure 9. We see that, throughout the range of fields that are well sampled in the experiment, there is good agreement between the data and Eq (26). As we go into the tails of the distribution, we see some deviations, but error bars also are (much) larger.

is in some narrow range, and see how often the neuron spikes. We can then repeat this for every neuron, in turn. If the model is correct, spiking probability should depend on the effective field according to Eq (26). We emphasize that there are no new parameters to be fit, but rather a parameter–free relationship to be tested. The results are shown in Figure 9. We see that, throughout the range of fields that are well sampled in the experiment, there is good agreement between the data and Eq (26). As we go into the tails of the distribution, we see some deviations, but error bars also are (much) larger.

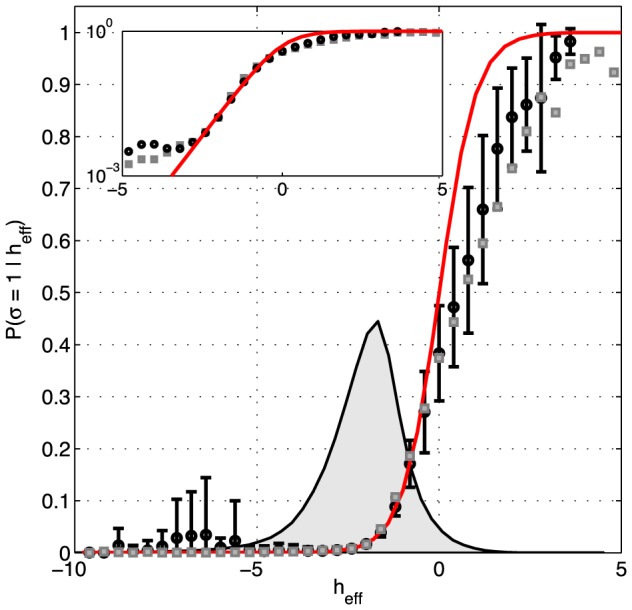

Figure 9. Effective field and spiking probabilities in a network of N = 120 neurons.

Given any configuration of  neurons, the K-pairwise model predicts the probability of firing of the N-th neuron by Eqs (25,26); the effective field

neurons, the K-pairwise model predicts the probability of firing of the N-th neuron by Eqs (25,26); the effective field  is fully determined by the parameters of the maximum entropy model and the state of the network. For each activity pattern in recorded data we computed the effective field, and binned these values (shown on x-axis). For every bin we estimated from data the probability that the N-th neuron spiked (black circles; error bars are s.d. across 120 cells). This is compared with a parameter-free prediction (red line) from Eq (26). For comparison, gray squares show the analogous analysis for the pairwise model (error bars omitted for clarity, comparable to K-pairwise models). Inset: same curves shown on the logarithmic plot emphasizing the low range of effective fields. The gray shaded region shows the distribution of the values of

is fully determined by the parameters of the maximum entropy model and the state of the network. For each activity pattern in recorded data we computed the effective field, and binned these values (shown on x-axis). For every bin we estimated from data the probability that the N-th neuron spiked (black circles; error bars are s.d. across 120 cells). This is compared with a parameter-free prediction (red line) from Eq (26). For comparison, gray squares show the analogous analysis for the pairwise model (error bars omitted for clarity, comparable to K-pairwise models). Inset: same curves shown on the logarithmic plot emphasizing the low range of effective fields. The gray shaded region shows the distribution of the values of  over all 120 neurons and all patterns in the data.

over all 120 neurons and all patterns in the data.

What do the models teach us?

We have seen that it is possible to construct maximum entropy models which match the mean spike probabilities of each cell, the pairwise correlations, and the distribution of summed activity in the network, and that our data are sufficient to insure that all the parameters of these models are well determined, even when we consider groups of N = 100 neurons or more. Figures 7 through 9 indicate that these models give a fairly accurate description of the distribution of states—the myriad combinations of spiking and silence—taken on by the network as a whole. In effect we have constructed a statistical mechanics for these networks, not by analogy or metaphor but in quantitative detail. We now have to ask what we can learn about neural function from this description.

Basins of attraction

In the Hopfield model, dynamics of the neural network corresponds to motion on an energy surface. Simple learning rules can sculpt the energy surface to generate multiple local minima, or attractors, into which the system can settle. These local minima can represent stored memories, or the solutions to various computational problems [68], [69]. If we imagine monitoring a Hopfield network over a long time, the distribution of states that it visits will be dominated by the local minima of the energy function. Thus, even if we can't take the details of the dynamical model seriously, it still should be true that the energy landscape determines the probability distribution over states in a Boltzmann–like fashion, with multiple energy minima translating into multiple peaks of the distribution.

In our maximum entropy models, we find a range of  values encompassing both signs (Figures 2D and F), as in spin glasses [70]. The presence of such competing interactions generates “frustration,” where (for example) triplets of neurons cannot find a combination of spiking and silence that simultaneously minimizes all the terms in the energy function [4]. In the simplest model of spin glasses, these frustration effects, distributed throughout the system, give rise to a very complex energy landscape, with a proliferation of local minima [70]. Our models are not precisely Hopfield models, nor are they instances of the standard (more random) spin glass models. Nonetheless, by looking at the pairwise

values encompassing both signs (Figures 2D and F), as in spin glasses [70]. The presence of such competing interactions generates “frustration,” where (for example) triplets of neurons cannot find a combination of spiking and silence that simultaneously minimizes all the terms in the energy function [4]. In the simplest model of spin glasses, these frustration effects, distributed throughout the system, give rise to a very complex energy landscape, with a proliferation of local minima [70]. Our models are not precisely Hopfield models, nor are they instances of the standard (more random) spin glass models. Nonetheless, by looking at the pairwise  terms in the energy function of our models, 48±2% of all interacting triplets of neurons are frustrated across different subnetworks of various sizes (N≥40), and it is reasonable to expect that we will find many local minima in the energy function of the network.

terms in the energy function of our models, 48±2% of all interacting triplets of neurons are frustrated across different subnetworks of various sizes (N≥40), and it is reasonable to expect that we will find many local minima in the energy function of the network.

To search for local minima of the energy landscape, we take every combination of spiking and silence observed in the data and move “downhill” on the function  from Eq (20) (see Methods: Exploring the energy landscape). When we can no longer move downhill, we have identified a locally stable pattern of activity, or a “metastable state,”

from Eq (20) (see Methods: Exploring the energy landscape). When we can no longer move downhill, we have identified a locally stable pattern of activity, or a “metastable state,”  , such that a flip of any single spin—switching the state of any one neuron from spiking to silent, or vice versa—increases the energy or decreases the predicted probability of the new state. This procedure also partitions the space of all

, such that a flip of any single spin—switching the state of any one neuron from spiking to silent, or vice versa—increases the energy or decreases the predicted probability of the new state. This procedure also partitions the space of all  possible patterns into domains, or basins of attraction, centered on the metastable states, and compresses the microscopic description of the retinal state to a number α identifying the basin to which that state belongs.

possible patterns into domains, or basins of attraction, centered on the metastable states, and compresses the microscopic description of the retinal state to a number α identifying the basin to which that state belongs.

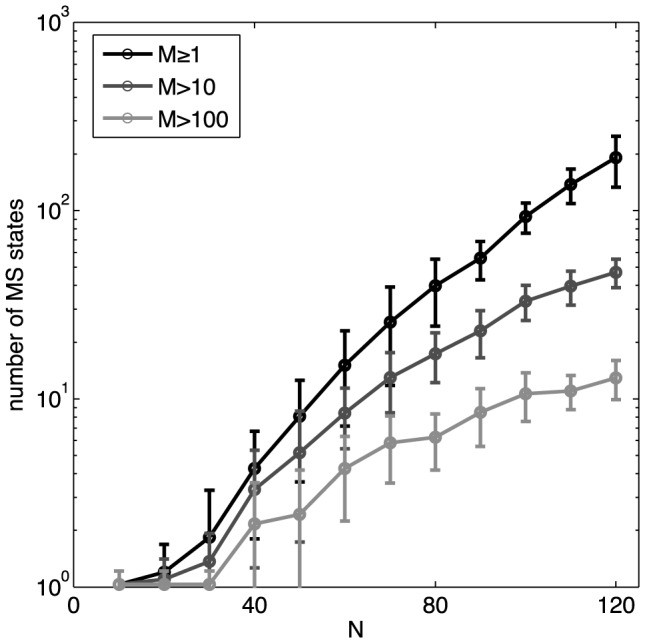

Figure 10 shows how the number of metastable states that we identify in the data grows with the size N of the network. At very small N, the only stable configuration is the all-silent state, but for N>30 the metastable states start to proliferate. Indeed, we see no sign that the number of metastable states is saturating, and the growth is certainly faster than linear in the number of neurons. Moreover, the total numbers of possible metastable states in the models' energy landscapes could be substantially higher than shown, because we only count those states that are accessible by descending from patterns observed in the experiment. It thus is possible that these real networks exceed the “capacity” of model networks [2], [3].

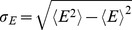

Figure 10. The number of identified metastable patterns.

Every recorded pattern is assigned to its basin of attraction by descending on the energy landscape. The number of distinct basins is shown as a function of the network size, N, for K-pairwise models (black line). Gray lines show the subsets of those basins that are encountered multiple times in the recording (more than 10 times, dark gray; more than 100 times, light gray). Error bars are s.d. over 30 subnetworks at every N. Note the logarithmic scale for the number of MS states.

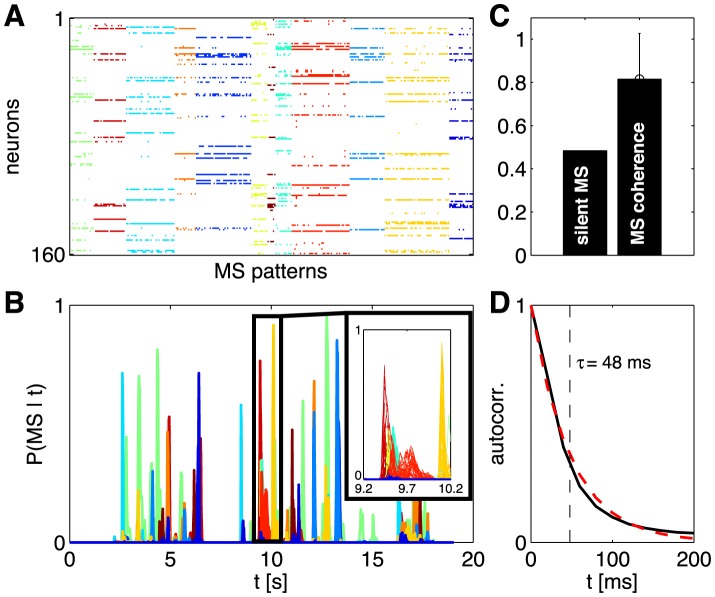

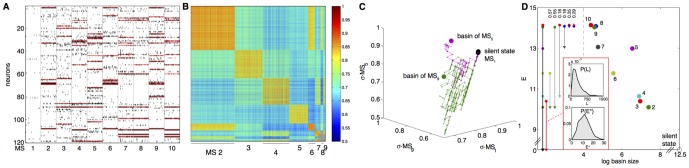

Figure 11A provides a more detailed view of the most prominent metastable states, and the “energy valleys” that surround them. The structure of the energy valleys can be thought of as clustering the patterns of neural activity, although in contrast to the usual formulation of clustering we don't need to make an arbitrary choice of metric for similarity among patterns. Nonetheless, we can measure the overlap  between all pairs of patterns

between all pairs of patterns  and

and  that we see in the experiment,

that we see in the experiment,

| (27) |

and we find that patterns which fall into the same valley are much more correlated with one another than they are with patterns that fall into other valleys (Figure 11B). If we start at one of the metastable states and take a random “uphill” walk in the energy landscape (Methods: Exploring the energy landscape), we eventually reach a transition state where there is a downhill path into other metastable states, and a selection of these trajectories is shown in Figure 11C. Importantly, the transition states are at energies quite high relative to the metastable states (Figure 11D), so the peaks of the probability distribution are well resolved from one another. In many cases it takes a large number of steps to find the transition state, so that the metastable states are substantially separated in Hamming distance.

Figure 11. Energy landscape in a N = 120 neuron K-pairwise model.

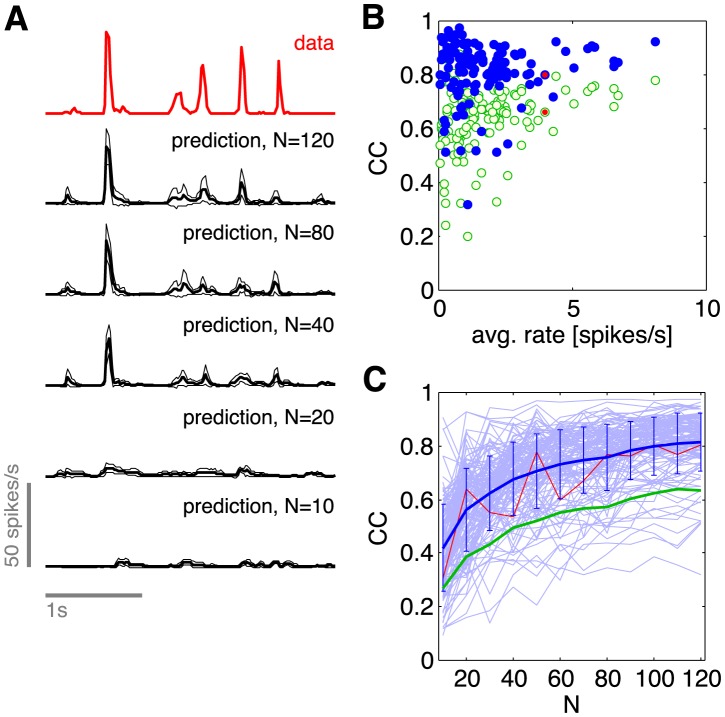

(A) The 10 most frequently occurring metastable (MS) states (active neurons for each in red), and 50 randomly chosen activity patterns for each MS state (black dots represent spikes). MS 1 is the all-silent basin. (B) The overlaps,  , between all pairs of identified patterns belonging to basins 2,…,10 (MS 1 left out due to its large size). Patterns within the same basin are much more similar between themselves than to patterns belonging to other basins. (C) The structure of the energy landscape explored with Monte Carlo. Starting in the all-silent state, single spin-flip steps are taken until the configuration crosses the energy barrier into another basin. Here, two such paths are depicted (green, ultimately landing in the basin of MS 9; purple, landing in basin of MS 5) as projections into 3D space of scalar products (overlaps) with the MS 1, 5, and 9. (D) The detailed structure of the energy landscape. 10 MS patterns from (A) are shown in the energy (y-axis) vs log basin size (x-axis) diagram (silent state at lower right corner). At left, transitions frequently observed in MC simulations starting in each of the 10 MS states, as in (C). The most frequent transitions are decays to the silent state. Other frequent transitions (and their probabilities) shown using vertical arrows between respective states. Typical transition statistics (for MS 3 decaying into the silent state) shown in the inset: the distribution of spin-flip attempts needed, P(L), and the distribution of energy barriers,